Abstract

PURPOSE The purpose of this study was to evaluate patient outcomes in the National Demonstration Project (NDP) of practices’ transition to patient-centered medical homes (PCMHs).

METHODS In 2006, a total of 36 family practices were randomized to facilitated or self-directed intervention groups. Progress toward the PCMH was measured by independent assessments of how many of 39 predominantly technological NDP model components the practices adopted. We evaluated 2 types of patient outcomes with repeated cross-sectional surveys and medical record audits at baseline, 9 months, and 26 months: patient-rated outcomes and condition-specific quality of care outcomes. Patient-rated outcomes included core primary care attributes, patient empowerment, general health status, and satisfaction with the service relationship. Condition-specific outcomes were measures of the quality of care from the Ambulatory Care Quality Alliance (ACQA) Starter Set and measures of delivery of clinical preventive services and chronic disease care.

RESULTS Practices adopted substantial numbers of NDP components over 26 months. Facilitated practices adopted more new components on average than self-directed practices (10.7 components vs 7.7 components, P = .005). ACQA scores improved over time in both groups (by 8.3% in the facilitated group and by 9.1% in the self-directed group, P < .0001) as did chronic care scores (by 5.2% in the facilitated group and by 5.0% in the self-directed group, P = .002), with no significant differences between groups. There were no improvements in patient-rated outcomes. Adoption of PCMH components was associated with improved access (standardized beta [Sβ] = 0.32, P = .04) and better prevention scores (Sβ = 0.42, P = .001), ACQA scores (Sβ = 0.45, P = .007), and chronic care scores (Sβ = 0.25, P = .08).

CONCLUSIONS After slightly more than 2 years, implementation of PCMH components, whether by facilitation or practice self-direction, was associated with small improvements in condition-specific quality of care but not patient experience. PCMH models that call for practice change without altering the broader delivery system may not achieve their intended results, at least in the short term.

Keywords: Primary health care; family practice; organizational innovation; patient-centered medical home; National Demonstration Project; patient-centered care; outcomes assessment, patient; practice-based research

INTRODUCTION

An emerging model to guide primary care practice improvement in the United States is the patient-centered medical home (PCMH). The PCMH is operationalized as a set of best practices for primary care delivery, but ideally also includes supportive changes in the larger system, including payment reform.1 In 2007, the major primary care organizations released a statement of guiding principles for the PCMH, including ready access to care, patient-centeredness, teamwork, population management, and care coordination.1,2 Subsequently, the National Committee for Quality Assurance created a set of practice attributes to be used for a program whereby practices can achieve recognition as PCMHs.3

Dozens of demonstration projects evaluating the PCMH are now under way across the United States, although few have sought to implement the entire set of best practices.4 Early reports from these projects are encouraging. In particular, coordination of care linked to primary care practices is substantially reducing overall costs while increasing the quality of care for patients with severe chronic illness.5,6 In addition, better outcomes at lower cost were noted after a large integrated delivery system reduced its primary care clinicians’ panel size, lengthened visits, and embedded care management in its electronic medical record (EMR).7 The cost savings in several of these projects exceed the added investment in primary care services.6,7

As these demonstration projects are poised to be disseminated more widely, important questions about the PCMH remain unanswered, including how to best define and measure the PCMH, whether certain measures of medical home attainment correlate more closely with improved outcomes, and how practices should develop into medical homes.

Although the rationale for the PCMH is drawn mainly from studies of single attributes of primary care, such as continuous relationships, early evidence suggests that more global measures of PCMH attainment are also associated with outcomes. In separate studies, 2 different PCMH measures were associated with fewer hospitalizations and emergency department visits8 and less disparity in access.9 Whether the PCMH is associated with other important outcomes, such as patient enablement, improved overall health status, and receipt of appropriate preventive and chronic disease care, is unknown.

In the current study, we evaluated patient outcomes from the National Demonstration Project (NDP), a 2-year project funded by the American Academy of Family Physicians. The NDP was designed to help primary care practices adopt a defined set of PCMH components that emerged from the Future of Family Medicine project.10 The NDP model included elements of access, care management, information technology, quality improvement, team care, practice management, specific clinical services, and integration with other entities in the health care system and community.11

To guide our evaluation, we framed 2 overall questions for analysis in the NDP. The first question was whether adoption of NDP model components and patient outcomes would be superior in practices that worked with a practice facilitator relative to those adopting them through a self-directed process. This question bears on future strategies for promoting adoption of the PCMH. The second question was whether adoption of NDP model components would improve patient outcomes, regardless of group assignment. Answering this question is important because there will be many different implementations of the PCMH, and it will be important to understand the relative effectiveness of different versions. In answering both questions, we felt that it would be important to evaluate 2 types of outcomes: first, a set of patient-rated outcomes that are considered fundamental pillars of primary care (eg, easy access to first-contact care, comprehensive care, coordination of care, and personal relationship over time) and second, quality of care for common conditions.

METHODS

Study Design

The study was a clinical trial with randomization at the practice level and observations at both the practice level and the patient level. Data on preventive service delivery, chronic care, and patient experiences were collected in the 2 study groups (facilitated and self-directed practices) at baseline, 9 months, and 26 months. The protocol for this study was approved by the institutional review board (IRB) of the American Academy of Family Physicians in Leawood, Kansas, and the IRBs of each of the participating institutions.

Sample and Intervention

The NDP was launched in June 2006 by the American Academy of Family Physicians to implement a new model of care consistent with the PCMH.10 The methods, sample, details of the intervention, practice change processes, and an emergent theory of practice change are described elsewhere in this supplement.12–18 In brief, 36 family medicine practices from across the United States were selected from 337 applicants. Practices were randomized into either a facilitated group or a self-directed group. Facilitated practices received ongoing assistance from a change facilitator; consultations from a panel of experts in practice economics, health information technology, and quality improvement; discounted software technology; training in the NDP model; and support by telephone and e-mail. They also were involved in 4 learning sessions and regular group conference calls. Self-directed practices were given access to Web-based practice improvement tools and services, but did not receive facilitator assistance.

Collection of Patient Outcome Data and Implementation Data

Methods of data collection are described in detail elsewhere.13 Practices provided confidential lists of consecutive patients seen after 3 index dates: baseline (July 3, 2006), 9 months (April 1, 2007), and 26 months (August 1, 2008). To minimize the Hawthorne effect, these dates were disclosed to the practices only after the patient visit windows had passed. Trained research nurses collected information on rates of delivery of preventive services and measures of chronic disease care either on site or by remote access of the practices’ EMRs. Using a specified protocol, 60 consecutive patients were selected for medical record audit in each practice at each index date.13 To collect patient ratings of the practices, a consecutive sample of 120 patients at each practice at each index date received a questionnaire on demographics, primary care attributes, satisfaction with the practice, health status, and patient-centered outcomes.

PCMH Measure

One of the authors (E.E.S.) collected information on the implementation of NDP model components by visiting each practice for a 2- to 3-day evaluation and assessing the presence of specific components with telephone interviews with key informants in each practice. The NDP model PCMH measure was the proportion of the 39 measurable NDP model components that were implemented at baseline and at 26 months, as listed in Table 1. This measure emphasized the technological components of PCMH implementation.

Table 1.

The 39 Components of the NDP Model Assessed, by Domain

| Domain | Components |
|---|---|
| Access to care and information (6 components) | Same-day appointments; Laboratory results highly accessible; Online patient services; e-Visits; Group visits; After-hours access coverage |
| Care management (4 components) | Population management; Wellness promotion; Disease prevention; Patient engagement/education |
| Practice services (5 components) | Comprehensive acute and chronic care; Prevention screening; Surgical procedures; Ancillary therapeutic/support; Ancillary diagnostic services |
| Continuity of care (5 components) | Community-based services; Hospital care; Behavioral health care; Maternity care; Case management |
| Practice management (5 components) | Disciplined financial management; Cost-benefit decision making; Revenue enhancement; Personnel/HR management; Optimized office design |
| Quality and safety (5 components) | Medication management; Patient satisfaction feedback; Clinical outcomes analysis; Quality improvement; Practice-based team care |
| Health information technology (5 components) | Electronic medical record; Electronic prescribing; Population management/registry; Practice Web site; Patient portal |
| Practice-based care (4 components) | Provider leadership; Shared mission and vision; Effective communication; Task designation by skill set |

NDP=National Demonstration Project; HR=human resources.
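As an illustration of the arithmetic behind the PCMH measure, the following minimal Python sketch computes the proportion of the 39 components that a practice has in place. The domain sizes come from Table 1; the function name `pcmh_proportion` and its input check are illustrative assumptions and are not part of the NDP evaluation protocol.

```python
# Sketch of the NDP model PCMH measure: the share of the 39 measurable
# components that a practice has in place. Domain sizes are taken from Table 1.
DOMAIN_SIZES = {
    "Access to care and information": 6,
    "Care management": 4,
    "Practice services": 5,
    "Continuity of care": 5,
    "Practice management": 5,
    "Quality and safety": 5,
    "Health information technology": 5,
    "Practice-based care": 4,
}
TOTAL_COMPONENTS = sum(DOMAIN_SIZES.values())  # 39 components in total


def pcmh_proportion(components_in_place: float) -> float:
    """Return the proportion of NDP model components implemented.

    Hypothetical helper: accepts a count (or a group mean count) of
    components and divides by the total number of components.
    """
    if not 0 <= components_in_place <= TOTAL_COMPONENTS:
        raise ValueError("component count must lie between 0 and 39")
    return components_in_place / TOTAL_COMPONENTS
```

Applied to the baseline group means reported in the Results (17.0 and 20.1 components), the sketch gives roughly 44% and 52% of all components.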

Patient Outcome Measures

We evaluated 2 categories of patient outcome measures: patient-rated outcomes and measures of the quality of care for specific conditions. Details for these measures are given in Table 2.

Table 2.

Description of Patient-Rated and Condition-Specific Outcomes

| Measure and Scale | Description |

|---|---|

| Patient-rated outcomesa | |

| Access to first-contact care (ACES; range, 0–1.0) | Help as soon as needed for an illness or injury; appointment for a checkup or routine care as soon as needed; answer to medical question the same day when calling during regular office hours; help or advice needed when calling after regular office hours |

| Coordination of care (CPCI; range, 0–1.0) | Keeps track of all my health care; follows up on a problem I’ve had, either at the next visit or by mail, e-mail, or telephone; follows up on my visit to other health care professionals; helps me interpret my laboratory tests, x-rays, or visits to other doctors; communicates with other health professionals I see |

| Comprehensive care (CPCI; range, 0–1.0) | Handles emergencies; care of almost any medical problem I may have; go for help with a personal or medical problem; go for care for an ongoing medical problem such as high blood pressure; go for a checkup to prevent illness |

| Personal relationship over time (CPCI; range, 0–1.0) | Knows a lot about my family medical history; have been through a lot together; understands what is important to me regarding my health; knows my medical history very well; takes my beliefs and wishes into account in caring for me; knows whether or not I exercise, eat right, smoke, or drink alcohol; knows me well as a person (such as hobbies, job, etc) |

| Global practice experience (range, 0–1.0) (all or none) | Strongly agree with: “I receive the care I want and need when and how I want and need it,” and strongly agree with: “I am delighted with this practice.” |

| Self-reported health status (range, 1–5) | In general how would you rate your overall health status? (excellent, very good, good, fair, poor) |

| Patient empowerment (range, 0–2.0) | Patient enablement (PEI; range, 0–1.0): In relation to your most recent visit, are you: able to cope with life; able to understand your illness; able to cope with your illness; able to keep yourself healthy; confident about your health; able to help yourself? (response options for each: much better, better, same or less, N/A) |

| | Consultation and relational empathy measure (CARE; range, 0–1.0): For your last doctor’s visit, how was the doctor at: making you feel at ease; letting you tell your “story”; really listening; being interested in you as a whole person; fully understanding your concerns; showing care and compassion; being positive; explaining things clearly; helping you take control; and making a plan of action with you? (response options for each: excellent, very good, good, fair, poor, N/A) |

| Satisfaction with service relationship (range, 0–3.0) | Physician satisfaction (ACES-SF; range, 0–2.0): rating of personal physician (0 = worst, 10 = best); recommend personal physician to family and friends (5 = definitely yes, 1=definitely not) |

| | Cultural responsiveness (ACGME; range, 0–1.0): the practice looks down on me and the way I live my life; the practice treats me with respect and dignity; the practice would provide better care if I were of a different race (for each, 5 = strongly agree, 1 = strongly disagree) |

| Condition-specific outcomesb | |

| ACQA Starter Set measure (16 measures) | 7 prevention measures: breast cancer screening, colon cancer screening, cervical cancer screening, tobacco use history, advice for smoking cessation, seasonal influenza vaccination, and pneumonia vaccination |

| | 2 coronary artery disease measures: prescription of lipid-lowering medications, prescription of aspirin prophylaxis |

| | 6 diabetes measures: HbA1c measurement, HbA1c under control, blood pressure at target, lipid measurement, LDL cholesterol at target, retinal examination up to date |

| | 1 acute care measure: appropriate use of antibiotics in children for upper respiratory tract infections |

| Prevention score (percentage of eligible patients meeting recommendations) | US Preventive Services Task Force recommendations by age and sex as of July 2006 |

| Chronic disease care score (range, 0–1.0) | Percentage of patients having a diagnosis of diabetes, hypertension, coronary artery disease, and hyperlipidemia receiving recommended treatments and assessments |

| | 8 diabetes measures: LDL cholesterol measured in previous year, LDL cholesterol <100 mg/dL, retinal examination by eye professional in previous year, HbA1c measured in the previous year, HbA1c <9%, last blood pressure <130/80 mm Hg, foot examination in the previous year, aspirin prophylaxis |

| | 2 hypertension measures: blood pressure at target (<140/90 mm Hg if nondiabetic, <130/80 mm Hg if diabetic), on aspirin prophylaxis |

| | 3 coronary artery disease measures: blood pressure at target, aspirin prophylaxis, on lipid-lowering therapy |

| | 4 hyperlipidemia measures: on lipid-lowering therapy, LDL at target (<100 mg/dL for diabetic patients and <130 mg/dL for nondiabetic patients), blood pressure at target, on aspirin prophylaxis |

ACES = Ambulatory Care Experiences Survey; ACES-SF = Ambulatory Care Experiences Survey–Short Form; ACGME = Accreditation Council for Graduate Medical Education; ACQA = Ambulatory Care Quality Alliance; CARE = consultation and relational empathy; CPCI = Components of Primary Care Index; HbA1c = hemoglobin A1c; LDL = low-density lipoprotein; N/A = not applicable; PEI = Patient Enablement Index.

a Data collected by self-administered patient questionnaires.

b Data collected by medical record audits.

Patient-Rated Outcomes

We assessed patient-rated outcomes from patients’ responses on a questionnaire (the patient outcomes survey). These outcomes included primary care attributes, which were drawn from previously described measures of access, comprehensiveness, coordination, and continuous relationships.19–24 Global practice experience was a new measure developed for this study using the all-or-none composite quality score based on Institute of Medicine criteria.25,26 Patient empowerment combined a patient enablement index (PEI)27–30 and a measure of the consultation process, namely, consultation and relational empathy (CARE).28–30 Satisfaction with the service relationship combined 2 items pertaining to satisfaction with one’s physician from the Ambulatory Care Experiences Survey (ACES) short form and 3 items from a cultural responsiveness survey from the Accreditation Council for Graduate Medical Education (ACGME).23,31,32 General health status was measured with a single item.33

Condition-Specific Quality of Care Outcomes

We obtained 3 condition-specific quality scores from a medical record audit. The Ambulatory Care Quality Alliance (ACQA) Starter Set measure includes 16 of 26 measures proposed by this consensus group, addressing both prevention and chronic disease.34 We calculated an overall prevention score by assessing receipt of age- and sex-specific interventions recommended by the US Preventive Services Task Force in July 2006.35 We calculated a chronic disease score by examining the percentage of patients with identified target conditions receiving recommended quality measures for coronary artery disease, hypertension, diabetes, and hyperlipidemia. An overall percentage composite quality score was calculated for each of the condition-specific quality scores using the methodology of Reeves et al.26 We also attempted to measure quality of depression care and of acute care for upper respiratory tract infections, but the samples within practices were too small to reliably calculate estimates for these items.
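The 2 composite types named in this section can be sketched as follows. This is a minimal illustration, assuming each audited record lists which measures the patient was eligible for and whether each was met; the record layout, measure names, and function names are hypothetical and are not taken from the study protocol or from Reeves et al. The overall percentage pools all eligible care events, whereas the all-or-none score credits a patient only if every eligible measure was met.

```python
from typing import Dict, List, Optional

# Each record maps a measure name to True (met), False (not met),
# or None (patient not eligible). All data below are hypothetical.
Record = Dict[str, Optional[bool]]

EXAMPLE_RECORDS: List[Record] = [
    {"hba1c_measured": True, "ldl_measured": False, "retinal_exam": None},
    {"hba1c_measured": True, "ldl_measured": True, "retinal_exam": True},
    {"hba1c_measured": False, "ldl_measured": None, "retinal_exam": None},
]


def overall_percentage(records: List[Record]) -> float:
    """Percentage of all eligible care events that met criteria."""
    eligible = 0
    met = 0
    for record in records:
        for result in record.values():
            if result is None:  # not eligible: excluded from the denominator
                continue
            eligible += 1
            met += int(result)
    if eligible == 0:
        raise ValueError("no eligible care events")
    return 100.0 * met / eligible


def all_or_none(records: List[Record]) -> float:
    """Percentage of patients who met every measure they were eligible for."""
    scored = 0
    passed = 0
    for record in records:
        results = [r for r in record.values() if r is not None]
        if not results:  # patient eligible for nothing: not scored
            continue
        scored += 1
        passed += int(all(results))
    if scored == 0:
        raise ValueError("no patients with eligible measures")
    return 100.0 * passed / scored
```

In the hypothetical records, 4 of 6 eligible care events were met, but only 1 of 3 patients met every eligible measure, which shows why an all-or-none score is usually the stricter of the two.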

Analyses

Our overall analytic strategy was to assess the clinical and statistical significance of changes in patient-rated and condition-specific outcomes in the NDP from baseline to the 26-month end point. Because the serial waves of patient surveys and medical record audits included different patient samples over time, repeated-measures analysis at the patient level was not appropriate; the unit of analysis was therefore the clinic. For analysis of outcomes, we used patient data aggregated by clinic.

To compare the effect of facilitated and self-directed interventions at the clinic level, we used a full factorial repeated-measures analysis of variance (ANOVA) model where group assignment, time (baseline vs 26-month end point), and the interaction effect of group and time were used as predictors of the outcomes. Time was the within-practice factor and group was the between-practice factor.
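With only 2 time points and 2 groups, the group-by-time interaction in a model of this kind is equivalent to a pooled-variance 2-sample t test on each practice's change score, with F equal to the square of t. The sketch below illustrates that equivalence using the Python standard library. The change scores are hypothetical, the function name is an assumption, and the code is not the software or procedure used in the study; the P value is omitted because it would require the F distribution.

```python
from statistics import mean, variance
from typing import Sequence, Tuple


def interaction_f_from_changes(
    changes_a: Sequence[float], changes_b: Sequence[float]
) -> Tuple[float, int]:
    """Return (F, error df) for the group-by-time interaction.

    Valid for a 2-group, 2-time-point design: the interaction F test
    equals the squared pooled-variance t statistic comparing the
    practice-level change scores of the 2 groups.
    """
    n_a, n_b = len(changes_a), len(changes_b)
    df_error = n_a + n_b - 2
    pooled_var = (
        (n_a - 1) * variance(changes_a) + (n_b - 1) * variance(changes_b)
    ) / df_error
    std_error = (pooled_var * (1.0 / n_a + 1.0 / n_b)) ** 0.5
    t_stat = (mean(changes_a) - mean(changes_b)) / std_error
    return t_stat ** 2, df_error


# Hypothetical change scores (components added per practice), not study data.
facilitated_changes = [12, 9, 11, 10]
self_directed_changes = [8, 7, 9, 6]
f_value, df_error = interaction_f_from_changes(
    facilitated_changes, self_directed_changes
)
```

Working at the level of practice change scores also matches the study's choice of the clinic as the unit of analysis.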

To evaluate whether adoption of NDP model components was associated with patient outcomes, we used a set of generalized linear repeated-measures models, 1 for each patient-rated or condition-specific outcome. In each model, the outcome at 26 months was the dependent variable, the change in the NDP model components was included as a covariate, and the outcome measure at baseline was controlled for. We evaluated the direction and statistical significance of the relationship between NDP model components and the outcomes.

Note that power in these practice-level analyses was low. Depending on the specific model, power ranged from .30 to .57 for main effects and was even lower to detect interaction effects. Because of this limitation in power, we considered a difference having a P value of less than .15 to be a trend.
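The trend rule described above can be written as a small classification step. In this sketch the .15 threshold comes from the text, while the .05 threshold for statistical significance is an assumption based on convention, since the text does not state it. The P values are the group-by-time results for the condition-specific outcomes in Table 4; the function and variable names are illustrative.

```python
TREND_ALPHA = 0.15         # stated in the text as the threshold for a trend
SIGNIFICANCE_ALPHA = 0.05  # assumption: conventional threshold, not stated


def classify_p(p_value: float) -> str:
    """Label a P value as significant, a trend, or not significant."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("P value must lie between 0 and 1")
    if p_value < SIGNIFICANCE_ALPHA:
        return "significant"
    if p_value < TREND_ALPHA:
        return "trend"
    return "not significant"


# Group-by-time P values for the condition-specific outcomes (Table 4).
GROUP_BY_TIME_P = {"ACQA": 0.85, "Prevention": 0.09, "Chronic care": 0.92}
labels = {name: classify_p(p) for name, p in GROUP_BY_TIME_P.items()}
```

Under these thresholds only the prevention score interaction is labeled a trend. A P value of exactly .15 is not, because the rule is a strict inequality.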

RESULTS

Sample Characteristics and Response Rates

A total of 36 practices enrolled in the NDP and 31 completed the study—16 in the facilitated group and 15 in the self-directed group. One facilitated practice withdrew because the larger system IRB could not approve participation in the study, and the other facilitated practice closed during the NDP because of financial pressures. One self-directed practice felt that the NDP data collection requirements were too burdensome in the context of other practice priorities, and 2 other self-directed practices closed during the NDP (1 when the rural hospital across the street closed and 1 when the larger health system decided to close the practice for health system priorities beyond the practice).

A total of 1,067 patients from practices completing the NDP returned questionnaires at baseline (29% response rate), 882 patients did so at 9 months (24% response rate), and 760 did so at 26 months (21% response rate). The medical record audits included 1,964 patients at baseline (99.9% review rate) and 1,861 at the 26-month assessment (100% review rate). Analyses reported here include only the 29 practices with both baseline and 26-month data. One facilitated practice completed the study but was unable to provide baseline medical record data because its EMR data were lost during the NDP. One self-directed practice was unable to provide a patient roster needed for the last wave of patient surveys because of competing priorities.

The characteristics of patients completing questionnaires and patients whose medical records were reviewed are shown in Table 3. Respondents from the facilitated practices were older than those from self-directed practices. The proportions of women, minorities, and those with higher educational attainment were similar across groups. A higher proportion of patients in the self-directed practices had been using the practice for 10 years or less.

Table 3.

Characteristics of Patients Completing Questionnaires and Patients Whose Records Were Reviewed, by Group and Time Point

| | Baseline | | 26 Months | |

|---|---|---|---|---|

| Characteristic | Facilitated | Self-Directed | Facilitated | Self-Directed |

| Patient questionnaires | n=568 | n=499 | n=377 | n=383 |

| Age, mean (SD), y | 55 (20.7) | 49 (21.2) | 56 (20.7) | 52 (18.5) |

| Women, No. (%) | 374 (67) | 334 (68) | 264 (71) | 263 (71) |

| Race, No. (%) | ||||

| White/Caucasian | 525 (95) | 449 (95) | 348 (95) | 345 (95) |

| Black/African American | 17 (3) | 7 (2) | 10 (3) | 7 (2) |

| Other | 11 (2) | 19 (4) | 7 (2) | 12 (3) |

| Ethnicity, No. (%) | ||||

| Hispanic or Latino | 13 (3) | 17 (4) | 9 (3) | 13 (4) |

| Highest educational grade completed, No. (%) | ||||

| Less than high school graduation | 67 (12) | 65 (14) | 44 (12) | 34 (9) |

| High school graduate or GED | 129 (24) | 119 (25) | 102 (28) | 77 (21) |

| Some college or 2-year degree | 166 (31) | 142 (30) | 122 (33) | 118 (33) |

| 4-year college graduate | 83 (15) | 71 (15) | 50 (14) | 60 (17) |

| More than 4 years of college | 96 (18) | 81 (17) | 50 (14) | 72 (20) |

| Employment status, No. (%) | ||||

| Employed | 240 (45) | 220 (48) | 137 (37) | 172 (47) |

| Unemployed | 6 (1) | 4 (1) | 6 (2) | 7 (2) |

| In school | 35 (7) | 39 (8) | 20 (5) | 15 (4) |

| Disabled | 25 (5) | 22 (5) | 17 (5) | 14 (4) |

| Looking after home | 39 (7) | 51 (11) | 28 (8) | 44 (12) |

| Retired | 158 (30) | 102 (22) | 139 (38) | 96 (26) |

| Other | 29 (6) | 24 (5) | 21 (6) | 16 (4) |

| With practice ≤10 years, No. (%) | 395 (72) | 389 (80) | 251 (67) | 304 (80) |

| Medical record audit | n = 960 | n = 1,004 | n = 963 | n = 898 |

| Age, mean (SD), y | 50 (58) | 44 (23) | 45 (23) | 41 (23) |

| Women, No. (%) | 555 (58) | 623 (62) | 580 (61) | 551 (61) |

GED=general equivalency diploma.

Patient Outcomes in Facilitated vs Self-Directed Practices

At baseline, facilitated practices had an average of 17.0 NDP model components in place (44% of all components) and self-directed practices had an average of 20.1 components in place (52% of all components) (P = .02). Both facilitated and self-directed practices added NDP model components during the 26-month follow-up, but facilitated practices added more, 10.7 components vs 7.7 in the self-directed group (ANOVA, F test for group-by-time interaction: P = .005).

Table 4 shows a comparison of facilitated and self-directed practices’ patient-rated and condition-specific outcomes. In terms of condition-specific quality of care, we observed absolute improvements in ACQA scores over 26 months of 9.1% in the self-directed group and 8.3% in the facilitated group (ANOVA, F test for time [within-group] effect: P < .001). The group difference was not statistically significant (group-by-time interaction: P = .85). Absolute improvements in chronic care scores over 26 months were smaller, 5.0% in the self-directed group vs 5.2% in the facilitated group (ANOVA, F test for within-group effect: P = .002) and did not differ between groups (group-by-time interaction: P = .92). Absolute improvements in prevention scores were not statistically significant, but there was a trend for the group-by-time interaction favoring the facilitated group (P = .09).

Table 4.

Patient Outcomes by Group and Time Point

| | Patient-Rated Outcomes | | | | | | | | Condition-Specific Outcomesa | | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Group and Time Point, and P Values | Access to Care | Care Coordination | Comprehensive Care | Personal Relationship Over Time | Global Practice Experienceb | Service Relationship Satisfaction | Patient Empowerment | Self-Rated Health Status | ACQA,c% | Prevention,d% | Chronic Care,e % |

| Facilitated (n=15) | |||||||||||

| Baseline | .88 | .76 | .82 | .76 | .28 | .91 | .67 | .66 | 39.8 | 36.8 | 53.4 |

| 9 months | .88 | .76 | .82 | .77 | .29 | .91 | .68 | .69 | 46.0 | 37.0 | 52.4 |

| 26 months | .86 | .75 | .81 | .76 | .26 | .90 | .69 | .68 | 48.1 | 41.1 | 58.7 |

| Changef | −.02 | −.01 | −.01 | −.00 | −.02 | −.01 | +.02 | +.02 | +8.3 | +4.3 | +5.2 |

| Self-directed (n=14) | |||||||||||

| Baseline | .87 | .75 | .84 | .76 | .32 | .91 | .67 | .68 | 35.9 | 40.5 | 42.3 |

| 9 months | .86 | .73 | .82 | .74 | .32 | .89 | .67 | .68 | 39.7 | 39.2 | 46.6 |

| 26 months | .86 | .73 | .81 | .75 | .33 | .90 | .69 | .70 | 45.0 | 39.8 | 47.3 |

| Changef | −.01 | −.02 | −.03 | −.01 | +.01 | −.01 | +.02 | +.02 | +9.1 | −0.7 | +5.0 |

| P values | |||||||||||

| Within groupg | .11 | .11 | .06 | .38 | .92 | .28 | .19 | .15 | <.001 | .25 | .002 |

| Between grouph | .64 | .38 | .70 | .62 | .34 | .54 | .96 | .42 | .20 | .68 | .003 |

| Group by timei | .71 | .46 | .25 | .86 | .31 | .83 | .93 | .80 | .85 | .09 | .92 |

ACQA = Ambulatory Care Quality Alliance; ANOVA = analysis of variance.

Notes: Values in bold meet the study’s definition for a trend (P <.15). Analysis was performed using a generalized full factorial analysis of variance (ANOVA).

a Composite score, represents overall percentage of all eligible reviewed care events that met criteria.

b Composite score, all or none.

c For the 16 measures assessed.

d Percentage of age- and sex-specific recommendations of the US Preventive Services Task Force (July 2006) that were met.

e Percentage of recommended measures met for diabetes mellitus, hypertension, hyperlipidemia, and coronary artery disease.

f Change from baseline to 26-month time point.

g Indicates whether there is significant change over time regardless of group.

h Indicates whether there are significant group differences regardless of time.

i Indicates whether there is significant differential change over time between the groups.

In contrast, there were no significant improvements in patient-rated outcomes, including ratings of the 4 pillars of primary care (easy access to first-contact care, comprehensive care, coordination of care, and personal relationship over time), global practice experience, patient empowerment, and self-rated health status. There were trends for very small decreases in coordination of care (P = .11), comprehensive care (P = .06), and access to first-contact care (P = .11) in both groups.

Adoption of the NDP Model Components and Changes in Patient Outcomes

At baseline, practices had an average of 46% of the NDP model components in place (range, 20%–70%). Baseline scores for a patient-rated composite of the 4 pillars of primary care (easy access to first-contact care, coordination of care, comprehensive care, and personal relationship over time) plus global practice experience averaged 3.5 on a 5-point scale across practices (range, 3.1–4.2). The percentage of model components and the composite score of patient-rated primary care were not significantly correlated at baseline (Pearson correlation = −0.08).

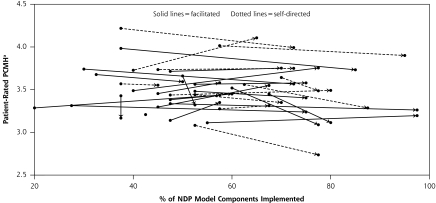

Over the course of the NDP, practices increased their proportion of NDP model components adopted by an average of 24% (range, 0%–50%), while the patient-rated primary care attributes score decreased by an average of 0.05 (95% confidence interval, −0.13 to 0.02), or about one-fifth of a standard deviation. Changes in NDP model components and patient-rated primary care attributes over time were only weakly correlated (Pearson correlation = 0.11, P = .55). The covariation of practices’ progress in adopting the model components and patient-rated primary care attributes is displayed in Figure 1. Adoption of model components during the NDP was associated with improved access (standardized beta [Sβ] = 0.32, P = .04) and with better prevention scores (Sβ = 0.42, P = .001), ACQA scores (Sβ = 0.45, P = .007), and chronic disease scores (Sβ = 0.25, P = .08). Adoption of NDP model components was not associated with patient-rated outcomes other than access, including health status, satisfaction with the service relationship, patient empowerment, coordination of care, comprehensiveness of care, personal relationship over time, or global practice experience.

Figure 1.

Composite score of patient-rated attributes vs NDP model components implemented at baseline and 26 months.

NDP=National Demonstration Project; arrow origin=baseline; arrow termination=26 months.

Notes: Each line represents 1 practice. The scale for the composite score for patient-rated primary care attributes ranged from 1 to 5, with higher scores indicating a higher level of attributes.

a Nutting PA, Crabtree BF, Stewart EE, et al. Effect of facilitation on practice outcomes in the National Demonstration Project model of the patient-centered medical home. Ann Fam Med. 2010;8(Suppl 1):s33–s44.16

DISCUSSION

Main Findings

Practices in both the facilitated and self-directed groups were able to adopt multiple components of the NDP model of the PCMH over 26 months. Practices that received intensive coaching from a facilitator adopted more model components. Adopting these predominantly technological elements of the PCMH appeared to have a price, however, as average patient ratings of the practices’ core primary care attributes slipped slightly, regardless of group assignment.

Overall, only the condition-specific quality of care measures improved, and only modestly, over 26 months. Yet important variations were evident within this overall result. Practices that adopted more model components achieved better quality of care scores for chronic disease management, ACQA measures, and prevention services. That NDP model components and patient-rated outcomes were poorly correlated suggests that there may be trade-offs, at least in the short term, in implementing these components.

Strengths and Limitations

The study had a number of notable strengths. Enrolled practices were diverse in size and geographic location, although most were small, nonacademic, and independent practices, similar in practice organization to those that still constitute the bulk of the US primary care workforce.36 Patient-level outcomes included a broad and deep array of measures including ratings of the primary care experience, health care quality (medical record measures), patient empowerment, health status, and well-validated measures of primary care’s core attributes, as well as an Institute of Medicine–defined summary score (global practice experience).25 We were able to obtain nearly complete data from the medical record audit for the condition-specific outcomes studied. Adoption of NDP model components was measured by a combination of direct observation and key informant reports. This multimethod process evaluation of practice transformation also enabled a quantitative assessment of practices’ progress in implementing model components, as reported elsewhere in this supplement.16

The study is subject to a number of important limitations, however. Practices chosen for the NDP from a large number of applicants likely represent a selected group of highly motivated practices participating in a high-profile demonstration project. Improvements observed during the NDP may therefore demonstrate what can be achieved when prior ambition and commitment are high. Although few practices dropped out, the small number of practices initially enrolled limited the statistical power to detect small differences in outcomes, including the time and group comparisons noted in the Methods section. It should also be emphasized that the study lacked a true control group, as the self-directed group received a low level of support; thus, inferences about whether attaining the PCMH as defined in the NDP model improves outcomes are based on the overall sample rather than the comparison of facilitated and self-directed practices. These inferences should also be interpreted in the context of a 26-month observation interval. Given the extensive changes asked of the practices, they may have needed more time for ongoing cycles of executing and adapting to change.
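The power limitation can be made concrete with a rough calculation. The Python sketch below uses a normal approximation for a two-sided comparison of two group means. The function name `two_group_power`, the equal split of 18 practices per group, and the treatment of each practice as a single independent observation are all assumptions made for illustration; the sketch does not reproduce the study's actual power analysis.

```python
from math import sqrt
from statistics import NormalDist


def two_group_power(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample z test.

    effect_size is the standardized mean difference (Cohen's d);
    n_per_group is the number of independent units in each group.
    """
    z = NormalDist()  # standard normal distribution
    z_crit = z.inv_cdf(1 - alpha / 2)
    shift = effect_size * sqrt(n_per_group / 2)
    # Probability of rejecting in either tail under the alternative.
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


if __name__ == "__main__":
    # Assumed design: 36 practices split evenly into 2 groups.
    for d in (0.2, 0.5, 0.8, 1.0):
        print(f"d = {d}: power ~ {two_group_power(d, 18):.2f}")
```

Under these assumptions, power is low for small standardized differences and becomes adequate only for large ones, which is consistent with the caution expressed above.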

Limitations in patient-level data included the low response rates to the patient questionnaires. Although selection biases are possible, they were likely to be similar in the samples compared over time. How much selection bias the low response rates might have caused is difficult to assess because IRB stipulations related to the Health Insurance Portability and Accountability Act (HIPAA) precluded characterizing nonresponders. And although targets for medical record audits were met, the medical record samples did not include enough patients with depression or upper respiratory tract infection to calculate reliable quality of care measurements for these conditions. Finally, the practices chosen for the NDP included few low-income and minority patients, limiting the study’s generalizability for those populations.

Implications

Answering the 2 research questions posed in the Introduction (whether adoption of NDP model components and patient outcomes are superior with facilitation, and whether adoption improves patient outcomes) is important for deriving insights for the PCMH’s future development. One robust finding was the dissociation between implementation of the NDP model’s predominantly technological components and improvements in patient-rated primary care attributes. In other words, some practices improved their patient experiences while also implementing the NDP components, whereas the majority did not, as shown in Figure 1. As shown in the article by Nutting et al16 in this supplement, adaptive reserve, a measure of participatory leadership and learning organization,37 improves a practice’s ability to implement the NDP model of the PCMH.

Slippage in patient-rated primary care attributes after the NDP began suggests that technological improvements may come at a price. The intense efforts needed to phase in new technology may have temporarily distracted attention from interpersonal aspects of care. For example, attending to an EMR in an examination room may interfere with the process of delivering patient-centered care,38 or the rapid-fire implementation of many model components may exhaust practice members’ energy for improving patient experience.39 It is also possible that practice change is difficult for patients, particularly in practices that have long-standing relationships and established functional routines for meeting patient needs. Because of this potential tradeoff, future PCMH evaluations will need to consider both disease-focused and patient-centered outcomes.40

Given that both facilitated and self-directed groups were successful at adopting NDP model components, why did outcomes change so modestly in the NDP? One potential explanation is that it takes time and additional work to turn a new process into an effective function.41 An example will illustrate the distinction. A disease registry is a process in which all patients with a specified disease are listed and tracked. Its function, however, is to improve outcomes for that disease, a goal that will not be accomplished simply by creating the registry. After the registry is created, practices must pay active attention to what the registry is telling them about their performance and must then make iterative changes designed to improve results. A hypothesis we are exploring in our qualitative evaluation15,16 is whether a facilitator helps turn a new process into an effective function, through follow-up work to ensure that the process is used effectively or by increasing the practice’s global effectiveness at adopting change.

A final set of implications emerges from comparing NDP results with those of other early demonstration projects.42 In one respect, the NDP was the most ambitious of these projects, attempting to implement nearly all of the PCMH attributes (except for payment reform) that were subsequently set forth in the joint statement by major primary care organizations,1 whereas other demonstration projects have focused more narrowly on a limited set of attributes, such as improving coordination of care,6,43,44 patient-clinician relationships,7 information technology for decision support,7,43 or connection to community resources.6 One hypothesis, therefore, to account for the findings of the NDP is that the other projects’ more limited implementation plans (especially those guided by progress on a set of clinical outcomes) may lead to better results than more global PCMH implementation plans. But it would be misleading to compare the NDP’s results with those achieved elsewhere without acknowledging other projects’ strong focus on redesigning delivery systems to support the core functions of primary care. Through strategies such as investing in additional clinicians to allow smaller patient panels and longer visits,7 hiring shared case managers, launching community-wide quality improvement initiatives, and aligning local payers around strategic payment reforms,6 these other projects critically altered the external determinants of practices’ success or failure. Without these essential reforms of the delivery system, practices’ own attempts to deliver on the promise of a medical home are unlikely to succeed. We therefore caution those interpreting the NDP results that we have evaluated only a single specific model for PCMH implementation and for a relatively short time.

CONCLUSIONS

Developing practices into PCMHs is a complex endeavor that requires substantial time, energy, and attention to potential trade-offs. In the NDP, 2 years of effort yielded substantial adoption of PCMH components but only a modest impact on quality of care and no improvement in patient-rated outcomes. Given how much was asked of the practices, 2 years may not have been enough time to pursue the iterative cycles of learning and testing improvements that are necessary to realize substantial gains in the patient experience.

Any interpretation of NDP findings must bear in mind what its strategy for change did and did not include. The change strategy focused on practices, asking them to implement an ambitious array of best practices from the NDP model of the PCMH. Judged on this basis, practices were successful in adopting a large number of new model components. What the change strategy did not include, however, were interventions to alter the delivery system beyond individual practices.45 Without fundamental transformation of the health care landscape that promotes coordination, close ties to community resources, payment reform, and other support for the PCMH, practices going it alone will face a daunting uphill climb.

Acknowledgments

The NDP was designed and implemented by TransforMED, LLC, a wholly-owned subsidiary of the AAFP. We are indebted to the participants in the NDP and to TransforMED for their tireless work. The authors also want to recognize the efforts of Luzmaria Jaén and Bridget Hendrix, who provided considerable support with medical record abstraction, data entry, and coordination of survey collection.

Conflicts of interest: The authors’ funding partially supports their time devoted to the evaluation, but they have no financial stake in the outcome. The authors’ agreement with the funders gives them complete independence in conducting the evaluation and allows them to publish the findings without prior review by the funders. The authors have full access to and control of study data. The funders had no role in writing or submitting the manuscript.

Disclaimer: Drs Stange, Nutting, and Ferrer, who are editors of the Annals, were not involved in the editorial evaluation of or decision to publish this article.

Funding support: The independent evaluation of the National Demonstration Project (NDP) practices was supported by the American Academy of Family Physicians (AAFP) and The Commonwealth Fund. The Commonwealth Fund is a national, private foundation based in New York City that supports independent research on health care issues and makes grants to improve health care practice and policy.

Publication of the journal supplement is supported by the American Academy of Family Physicians Foundation, the Society of Teachers of Family Medicine Foundation, the American Board of Family Medicine Foundation, and The Commonwealth Fund.

Dr Stange’s time was supported in part by a Clinical Research Professorship from the American Cancer Society.

Disclaimer: The views presented here are those of the authors and not necessarily those of The Commonwealth Fund, its directors, officers, or staff.

REFERENCES

- 1.American Academy of Family Physicians (AAFP), American Academy of Pediatrics (AAP), American College of Physicians (ACP), American Osteopathic Association (AOA). Joint Principles of the Patient-Centered Medical Home. February 2007. http://www.aafp.org/pcmh/principles.pdf. Accessed Jun 11, 2009.

- 2.Patient-Centered Primary Care Collaborative. http://www.pcpcc.net/. Accessed Jun 25, 2009.

- 3.National Committee for Quality Assurance. Physician Practice Connections—Patient-Centered Medical Home. http://www.ncqa.org/tabid/631/Default.aspx. Accessed Jun 11, 2009.

- 4.Iglehart JK. No place like home—testing a new model of care delivery. N Engl J Med. 2008;359(12):1200–1202.

- 5.Boult C, Reider L, Frey K, et al. Early effects of “Guided Care” on the quality of health care for multimorbid older persons: a cluster-randomized controlled trial. J Gerontol A Biol Sci Med Sci. 2008;63(3):321–327.

- 6.Steiner BD, Denham AC, Ashkin E, Newton WP, Wroth T, Dobson LA Jr. Community care of North Carolina: improving care through community health networks. Ann Fam Med. 2008;6(4):361–367.

- 7.Reid RJ, Fishman PA, Yu O, et al. Patient-centered medical home demonstration: a prospective, quasi-experimental, before and after evaluation. Am J Manag Care. 2009;15(9):e71–e87.

- 8.Cooley WC, McAllister JW, Sherrieb K, Kuhlthau K. Improved outcomes associated with medical home implementation in pediatric primary care. Pediatrics. 2009;124(1):358–364.

- 9.Beal AC, Doty SE, Shea KK, Davis K. Closing the Divide: How Medical Homes Promote Equity in Health Care: Results from the Commonwealth Fund 2006 Health Care Quality Survey. New York, NY: The Commonwealth Fund; 2007.

- 10.Martin JC, Avant RF, Bowman MA, et al; Future of Family Medicine Project Leadership Committee. The future of family medicine: a collaborative project of the family medicine community. Ann Fam Med. 2004;2(Suppl 1):S3–S32.

- 11.TransforMED. National Demonstration Project. http://www.transformed.com/ndp.cfm. 2006. Accessed Jun 11, 2009.

- 12.Crabtree BF, Nutting PA, Miller WL, Stange KC, Stewart EE, Jaén CR. Summary of the National Demonstration Project and recommendations for the patient-centered medical home. Ann Fam Med. 2010;8(Suppl 1):s80–s90.

- 13.Jaén CR, Crabtree BF, Palmer R, et al. Methods for evaluating practice change toward a patient-centered medical home. Ann Fam Med. 2010;8(Suppl 1):s9–s20.

- 14.Miller WL, Crabtree BF, Nutting PA, Stange KC, Jaén CR. Primary care practice development: a relationship-centered approach. Ann Fam Med. 2010;8(Suppl 1):s68–s79.

- 15.Nutting PA, Crabtree BF, Miller WL, Stewart EE, Stange KC, Jaén CR. Journey to the patient-centered medical home: a qualitative analysis of the experiences of practices in the National Demonstration Project. Ann Fam Med. 2010;8(Suppl 1):s45–s56.

- 16.Nutting PA, Crabtree BF, Stewart EE, et al. Effect of facilitation on practice outcomes in the National Demonstration Project model of the patient-centered medical home. Ann Fam Med. 2010;8(Suppl 1):s33–s44.

- 17.Stange KC, Miller WL, Nutting PA, Crabtree BF, Stewart EE, Jaén CR. Context for understanding the National Demonstration Project and the patient-centered medical home. Ann Fam Med. 2010;8(Suppl 1):s2–s8.

- 18.Stewart EE, Nutting PA, Crabtree BF, Stange KC, Miller WL, Jaén CR. Implementing the patient-centered medical home: observation and description of the National Demonstration Project. Ann Fam Med. 2010;8(Suppl 1):s21–s32.

- 19.Flocke S. Primary care instrument [letter to editor]. J Fam Pract. 1998;46:12.

- 20.Flocke SA. Measuring attributes of primary care: development of a new instrument. J Fam Pract. 1997;45(1):64–74.

- 21.Flocke SA, Stange KC, Zyzanski SJ. The association of attributes of primary care with the delivery of clinical preventive services. Med Care. 1998;36(8 Suppl):AS21–AS30.

- 22.Flocke SA, Miller WL, Crabtree BF. Relationships between physician practice style, patient satisfaction, and attributes of primary care. J Fam Pract. 2002;51(10):835–840.

- 23.Safran DG. Defining the future of primary care: what can we learn from patients? Ann Intern Med. 2003;138(3):248–255.

- 24.Safran DG, Karp M, Coltin K, et al. Measuring patients’ experiences with individual primary care physicians. Results of a statewide demonstration project. J Gen Intern Med. 2006;21(1):13–21.

- 25.Institute of Medicine. Committee on Quality of Health Care in America. Crossing the Quality Chasm: A New Health System For the 21st Century. Washington, DC: National Academy Press; 2001.

- 26.Reeves D, Campbell SM, Adams J, Shekelle PG, Kontopantelis E, Roland MO. Combining multiple indicators of clinical quality: an evaluation of different analytic approaches. Med Care. 2007;45(6):489–496.

- 27.Howie JG, Heaney DJ, Maxwell M, Walker JJ. A comparison of a Patient Enablement Instrument (PEI) against two established satisfaction scales as an outcome measure of primary care consultations. Fam Pract. 1998;15(2):165–171.

- 28.Howie JG, Heaney D, Maxwell M. Quality, core values and the general practice consultation: issues of definition, measurement and delivery. Fam Pract. 2004;21(4):458–468.

- 29.Mercer SW, Maxwell M, Heaney D, Watt GC. The consultation and relational empathy (CARE) measure: development and preliminary validation and reliability of an empathy-based consultation process measure. Fam Pract. 2004;21(6):699–705.

- 30.Mercer SW, McConnachie A, Maxwell M, Heaney D, Watt GC. Relevance and practical use of the Consultation and Relational Empathy (CARE) measure in general practice. Fam Pract. 2005;22(3):328–334.

- 31.Like RC. Clinical Cultural Competence Questionnaire. Pre-Training Version. 2010. http://www2.umdnj.edu/fmedweb/chfcd/Cultural_competency_questionnaire%20_pre-training.pdf. Accessed May 6, 2010.

- 32.Safran DG, Kosinski M, Tarlov AR, et al. The Primary Care Assessment Survey: tests of data quality and measurement performance. Med Care. 1998;36(5):728–739.

- 33.Krause NM, Jay GM. What do global self-rated health items measure? Med Care. 1994;32(9):930–942.

- 34.Agency for Healthcare Research and Quality. Ambulatory Care Quality Alliance. Recommended Starter Set: Clinical Performance Measures for Ambulatory Care. http://www.ahrq.gov/qual/aqastart.htm. 2005. Accessed Jun 11, 2009.

- 35.U.S. Preventive Services Task Force. The Guide to Clinical Preventive Services 2006: Recommendations From the U.S. Preventive Services Task Force. Washington, DC: Lippincott Williams & Wilkins; 2006.

- 36.Hing E, Burt CW. Characteristics of office-based physicians and their medical practices: United States, 2005–2006. Vital Health Stat. 2008;13(166):1–34.

- 37.Crabtree BF, Miller WL, McDaniel RR, Stange KC, Nutting PA, Jaén CR. A survivor’s guide for primary care physicians. J Fam Pract. 2009;58(8):E1–E7.

- 38.Han YY, Carcillo JA, Venkataraman ST, et al. Unexpected increased mortality after implementation of a commercially sold computerized physician order entry system. Pediatrics. 2005;116(6):1506–1512.

- 39.Nutting PA, Miller WL, Crabtree BF, Jaén CR, Stewart EE, Stange KC. Initial lessons from the first national demonstration project on practice transformation to a patient-centered medical home. Ann Fam Med. 2009;7(3):254–260.

- 40.Berenson RA, Hammons T, Gans DN, et al. A house is not a home: keeping patients at the center of practice redesign. Health Aff (Millwood). 2008;27(5):1219–1230.

- 41.Ferrer RL, Hambidge SJ, Maly RC. The essential role of generalists in health care systems. Ann Intern Med. 2005;142(8):691–699.

- 42.The White House. Advanced Primary Care Models. 2010. 8-10-2009. http://www.whitehouse.gov/video/Health-Care-Stakeholder-Discussion-Advanced-Models-of-Primary-Care. Accessed May 6, 2010.

- 43.Paulus RA, Davis K, Steele GD. Continuous innovation in health care: implications of the Geisinger experience. Health Aff (Millwood). 2008;27(5):1235–1245.

- 44.Leff B, Reider L, Frick KD, et al. Guided care and the cost of complex healthcare: a preliminary report. Am J Manag Care. 2009;15(8):555–559.

- 45.Fisher ES. Building a medical neighborhood for the medical home. N Engl J Med. 2008;359(12):1202–1205.