Abstract

Prior work using a matching task between images that were complementary in spatial frequency and orientation information suggested that the representation of faces, but not objects, retains low-level spatial frequency (SF) information (Biederman & Kalocsai, 1997). In two experiments, we reexamine the claim that faces are uniquely sensitive to changes in SF. In contrast to prior work, we used a design allowing the computation of sensitivity and response criterion for each category, and in one experiment, equalized low-level image properties across object categories. In both experiments, we find that observers are sensitive to SF changes for upright and inverted faces and nonface objects. Differential response biases across categories contributed to a larger sensitivity for faces, but even sensitivity showed a larger effect for faces, especially when faces were upright and in a front-facing view. However, when objects were inverted, or upright but shown in a three-quarter view, the matching of objects and faces was equally sensitive to SF changes. Accordingly, face perception does not appear to be uniquely affected by changes in SF content.

Keywords: face perception, spatial frequency

Introduction

Broadly speaking, two categories of information are thought to be more critical for face than object perception: information about the configural relations between parts and the specific spatial frequency (SF) information present in images. Generally, studies report quantitative differences between face and object perception on measures designed to index how observers rely on these sources of information. For instance, a disadvantage for processing upside-down faces (a face inversion effect, see Rossion & Gauthier, 2002 for review) has been used as an indirect measure of sensitivity to configural relations. But inversion typically also affects the perception of objects, just less so than it affects face perception (Rossion & Gauthier, 2002). Such evidence may not be strong enough to support the claim that face perception relies on one or several processes that are not available to object perception (McKone, Kanwisher & Duchaine, 2007). Typically, such claims are made on the basis of qualitative differences between faces and non-face objects. In this work, we revisit prior claims that face perception differs qualitatively from that of objects in terms of its sensitivity to SF information (Biederman & Kalocsai, 1997).

There could be a process unique to face perception even if behavioral measures generally find only a quantitative difference between faces and objects. This would be the case if face perception also relies to some degree on part-based processes that are shared with generic object processing. Ideally, however, some tasks could be designed to be sensitive only to the process hypothesized to be face-specific, so that a qualitative behavioral difference can be documented. One measure that was suggested to reveal such a qualitative difference is the alignment effect in the composite task (Robbins & McKone, 2007; Young, Hellawell & Hay, 1987). In this task, participants are asked to selectively attend to one part of a face made of the top and bottom halves of different faces, with these two halves aligned or misaligned. When the parts are aligned, participants have difficulty ignoring the irrelevant part of the composite.¹ However, a recent study showed that observers trained to individuate objects from a novel category also demonstrated an alignment effect in a composite task (Wong, Palmeri & Gauthier, in press). While some hallmarks of face processing can be obtained only in expert observers, other effects once thought to be unique to faces have been obtained with objects in novice observers. This is the case with the whole-part advantage: the finding that face parts studied in the context of a whole face are better recognized than in isolation (Tanaka & Farah, 1993). While the effect was originally obtained for faces and not houses, later studies reported a significant, albeit smaller, whole-part advantage in novice viewers with dogs, cars, and novel objects called Greebles (e.g., Tanaka & Gauthier, 1997).

The present study is an investigation of one of the rare behavioral effects so far only observed for faces. We call this effect the “Complementation Effect” (CE), and it indexes the sensitivity of face perception to manipulations of SFs. Although this has been relatively less studied than other effects, face perception is reported to be highly sensitive to SF filtering (Fiser, Subramaniam & Biederman, 2001; Goffaux, Gauthier & Rossion, 2002) and to other types of manipulations of image format, such as contrast reversal (Gaspar, Bennett & Sekuler, 2008; Hayes, 1988; Subramaniam & Biederman, 1997) and the use of line drawings (e.g., Bruce et al., 1992). These manipulations have a more limited impact on object recognition (Biederman, 1987; Biederman & Ju, 1988; Liu et al., 2000; Nederhouser et al., 2007), suggesting that face and object perception may rely on different mechanisms and/or representations. Specifically, Biederman and Kalocsai (1997) explored the SF sensitivity of face perception. Complementary images were created by dividing the SF-by-orientation space into an 8 × 8 matrix and filtering out every odd diagonal of cells to form one version of an image and every even diagonal of cells to form the second image. These two versions of the same image are complementary in the sense that they do not overlap in any specific combination of SF and orientation (see Figure 1). As might be expected, participants demonstrated a CE for faces, whereby they were poorer verifying and matching complementary faces relative to identical faces in both a name verification priming task and a same-different sequential matching task. But, perhaps more surprisingly, no CE was observed in either paradigm for common objects or chairs. 
Because the naming task was inherently confounded by task demands and the level of categorization (i.e., objects were named at the basic-level while famous faces were named at the subordinate-level), we are focusing here on understanding the face-object discrepancy observed via the sequential matching paradigm. Biederman and Kalocsai argue that this difference arises because the visual system represents faces and objects in distinct ways. They propose that non-face objects are stored as qualitative constructions of volumetric structural units (geons) that can be recovered from images based on non-accidental properties found in an edge description of the object, devoid of the original SF image information (Biederman, 1987). In contrast, face representations are thought to preserve the specific information from V1-type cell outputs, accounting for why face perception is highly sensitive to SF manipulations.

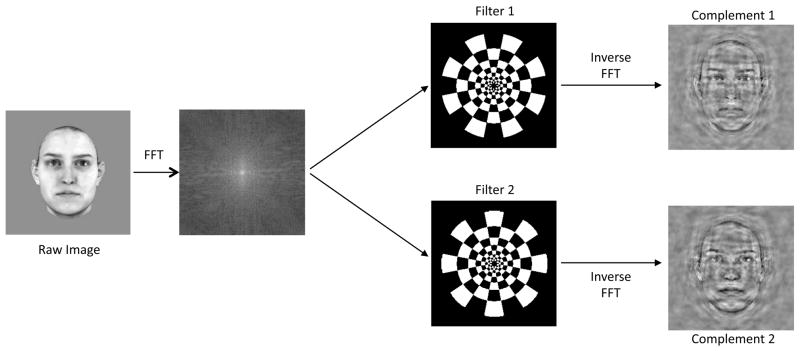

Figure 1.

Spatial Frequency (SF) and Orientation filtering. Two complementary images were created by filtering a single input image in the Fourier domain into an 8 by 8 radial matrix of SF-orientation information. Two separate filters were applied to preserve alternating combinations of the SF-orientation content of the original image. Thus, when returned to the spatial domain via inverse FFT, the complementary pair of images share no overlapping combinations of SF and orientation information.

Given that the CE was originally obtained for faces but not for non-face objects in novice observers (Biederman & Kalocsai, 1997), another study asked whether this effect may increase with perceptual expertise. Yue, Tjan, and Biederman (2006) trained participants with novel objects called blobs. All participants – those trained with blobs and those with no pre-testing exposure – showed robust CEs for faces and none for blobs. In addition, using fMRI these authors found that relative to an identical pair of images, a complementary pair of faces, but not blobs, reduced fMRI adaptation in the fusiform face area. The results of Biederman and Kalocsai (1997) and Yue et al. (2006) suggest that the CE is unique to faces. This is consistent with other work finding that the matching of objects such as chairs shows little sensitivity to manipulations of the overlap in SF content (Collin et al., 2004).

In the following experiments we revisit the question of whether the CE is unique to faces, guided by four main motivations. First, Biederman & Kalocsai (1997) and Yue et al. (2006) measured the CE by comparing accuracy in identical vs. complementary trials, when face or object identity was the same. The trials in which item identity (and thus the correct response) was different were not used because they could not be assigned to either condition (identical or complementary). Therefore, it is possible that observers applied different response criteria to face and non-face conditions tested in Biederman & Kalocsai and Yue et al.’s same-different matching tasks.

Indeed, important differences in response biases between conditions, even when trials are not presented in different blocks, have been observed in other face processing studies and, when not accounted for, can lead to misleading conclusions (e.g., Wenger & Ingvalson, 2002; Cheung, Richler, Palmeri & Gauthier, in press). Therefore, to verify that the interaction between category and complementation is not due to differential response bias, we blocked trials by complementation condition so that two sets of different trials would be associated with identical vs. complementary conditions, allowing computation of discriminability and response bias. Second, in Experiment 1 we used an inversion manipulation to explore whether the CE can be attributed to configural processing, typically associated with face perception. While stimulus inversion is not a direct manipulation of configural processing, it is generally accepted that inversion affects the processing of configural information (Thompson, 1980; Searcy & Bartlett, 1996; Tanaka & Sengco, 1997). Third, prior comparisons of the CE between faces and objects made no attempt to match the SF content of the original images across categories. It is possible that face perception is most sensitive to complementation because faces contain more information in a particular region of the SF space than control objects. Therefore, in Experiment 1 we matched images of faces, cars and chairs in terms of low-level image properties before applying the complementation filters. Finally, in Experiment 2 we investigated whether the symmetry of facial images used in prior work and in our Experiment 1 plays a role in the CE. It is possible that the radial symmetry of the filters is particularly disruptive to the encoding of symmetrical objects such as front-facing images, because the filter would create symmetrical changes that may be especially likely to be interpreted as structural information (rather than alterations due to filtering).

Experiment 1

Methods

Participants

Thirty-four individuals (11 male, mean age 20 years) participated for a small honorarium or course credit. All participants had normal or corrected-to-normal visual acuity. The experiment was approved by the Institutional Review Board at Vanderbilt University, and all participants provided written informed consent.

Stimuli and Material

Stimuli were digitized, eight-bit greyscale images of 15 faces with hair cropped (from the Max-Planck Institute for Biological Cybernetics in Tuebingen, Germany), 15 cars (all profile views obtained from www.tirerack.com), as well as 15 chairs (obtained from C. Collin, and used in Collin et al., 2004). They were presented on a 21-inch CRT monitor (refresh rate = 100 Hz) using a Macintosh G4 computer running Matlab with the Psychophysics Toolbox extension (Brainard, 1997; Pelli, 1997).

First, stimulus area was matched across object categories, such that the smallest rectangle containing each object occupied approximately 33% of the square window in which it was centered. The square window was presented in two sizes, with smaller sizes at 57 by 57 pixels and spanning approximately 1.98 × 1.98 degrees of visual angle, and larger sizes at 113 by 113 pixels and spanning approximately 3.95 × 3.95 degrees of visual angle.
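As a rough check on the reported sizes, the visual angle subtended by a stimulus of physical size s at viewing distance d is 2·atan(s / 2d). The sketch below assumes the 58 cm viewing distance given in the Methods and a hypothetical pixel pitch (`PIXEL_PITCH_CM`), which the text does not report; the value was chosen only so that the computed angles fall close to the stated ones.

```python
import math

def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) subtended by a stimulus of size_cm at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

# Hypothetical pixel pitch in cm per pixel: NOT reported in the paper.
PIXEL_PITCH_CM = 0.0352
# Viewing distance stated in the Methods.
VIEWING_DISTANCE_CM = 58

small_deg = visual_angle_deg(57 * PIXEL_PITCH_CM, VIEWING_DISTANCE_CM)
large_deg = visual_angle_deg(113 * PIXEL_PITCH_CM, VIEWING_DISTANCE_CM)
print(f"57 px: {small_deg:.2f} deg, 113 px: {large_deg:.2f} deg")
```

Under this assumed pitch the two window sizes come out near the reported 2 and 4 degrees; a different monitor resolution would change the pitch but not the formula.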

Before filtering SF-orientation information, we equated a number of low-level image properties (i.e., luminance distributions and Fourier amplitude at each SF) using functions from the SHINE (Spectrum, Histogram, and Intensity Normalization and Equalization) program written with Matlab (see supplemental online information). First, the luminance histograms of the foregrounds and the backgrounds of all source images were collected, averaged separately across the set, and applied to each stimulus. Second, we obtained the average Fourier amplitude spectrum across the set and equated the rotational average amplitude for each SF across all stimuli. The equalization steps were performed iteratively 25 times to reach a high degree of simultaneous equalization of luminance histograms and Fourier amplitudes.
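The luminance step described above (collect histograms, average them across the set, apply the average to each stimulus) can be illustrated with a rank-based sketch. This is not the SHINE code: it is a simplified illustration that assumes equal-sized, flattened images, ignores the separate treatment of foreground and background, and omits the Fourier amplitude step and the 25 iterations. The function name `match_to_average_histogram` is hypothetical.

```python
def match_to_average_histogram(images):
    """Give every image the same luminance histogram: the across-set average.

    images: list of equal-length lists of pixel luminances (flattened images).
    Returns new lists; pixel rank order within each image is preserved.
    """
    n = len(images[0])
    if any(len(img) != n for img in images):
        raise ValueError("all images must have the same number of pixels")
    sorted_imgs = [sorted(img) for img in images]
    # Target histogram: mean of the k-th darkest pixel across all images.
    target = [sum(s[k] for s in sorted_imgs) / len(images) for k in range(n)]
    matched = []
    for img in images:
        # Pixel indices ordered from darkest to brightest (stable for ties).
        order = sorted(range(n), key=lambda i: img[i])
        out = [0.0] * n
        for rank, idx in enumerate(order):
            out[idx] = target[rank]
        matched.append(out)
    return matched

# Toy example with two 4-pixel "images".
toy = [[10, 200, 30, 120], [90, 60, 250, 0]]
matched = match_to_average_histogram(toy)
```

After matching, both toy images contain exactly the same set of luminance values, while the darkest pixel of each image is still its darkest pixel.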

After images were SHINEd, they were filtered with a method identical to that used by Biederman and Kalocsai (1997) and Yue, Tjan, and Biederman (2006). The original images were Fourier transformed and filtered using two complementary filters (Figure 1). Each filter eliminated the highest (above 181 cycles/image) and lowest (below 12 cycles/image, corresponding to approximately 7.5 cycles per face width (c/fw)) SFs. Note that prior work suggests that face recognition relies primarily on a middle band of SFs (approximately 8–16 c/fw – see Collin et al., 2004 for recent review). The surviving area of the Fourier domain was divided into an 8-by-8 matrix with 8 orientations (increasing in successive steps of 22.5 degrees) by 8 SFs (covering four octaves in steps of 0.5 octaves). This manipulation created a complementary pair of images: 32 of the 64 SF-orientation combinations, alternating in a radial checkerboard pattern in the Fourier domain, were assigned to one image, and the remaining 32 combinations were assigned to the complementary member of the pair. As such, both complementary members of a pair contained all 8 SFs and all 8 orientations but in unique combinations. Thus, the two complementary images shared no common information about the objects in the Fourier domain. After images were filtered in the Fourier domain, they were converted back to images in the spatial domain via the inverse FFT. The final processed images (see Figure 2) were presented at a viewing distance of 58 cm.
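The checkerboard assignment of SF-orientation cells described above can be sketched as follows. This is only an illustration of the cell bookkeeping, assuming that cells are indexed by an SF band (0 to 7) and an orientation band (0 to 7) and that alternation follows the parity of the two indices; it does not build the actual Fourier-domain filters, and the function name is hypothetical.

```python
N_SF_BANDS = 8    # 8 SF bands, as described in the text
N_ORI_BANDS = 8   # 8 orientation bands in steps of 22.5 degrees

def complementary_cells(n_sf=N_SF_BANDS, n_ori=N_ORI_BANDS):
    """Split the SF-by-orientation matrix into two checkerboard halves.

    Assumption: a cell goes to image A when (sf index + orientation index)
    is even, and to image B otherwise.
    """
    image_a, image_b = set(), set()
    for sf in range(n_sf):
        for ori in range(n_ori):
            if (sf + ori) % 2 == 0:
                image_a.add((sf, ori))
            else:
                image_b.add((sf, ori))
    return image_a, image_b

cells_a, cells_b = complementary_cells()
print(len(cells_a), len(cells_b), cells_a.isdisjoint(cells_b))
```

Because the number of orientation bands is even, the alternation also holds across the wrap-around from the last orientation band back to the first, so each member of the pair keeps every SF band and every orientation while sharing no cell with its complement.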

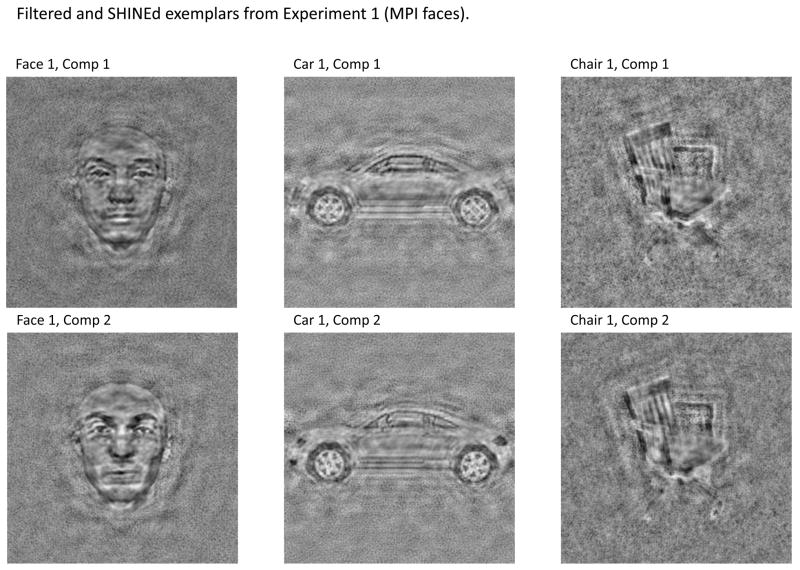

Figure 2.

Example images from Experiment 1. Displayed are pairs of filtered and SHINEd exemplars from each object category (face, car, chair).

Procedure

We used a 3×2×2 within-subjects design, with factors being Category (face, car or chair identity), Complementation (identical or complementary) and Orientation (upright or inverted). Trials were blocked according to SF composition (identical or complementary) in order to conduct signal detection analysis, and the visual angle of the image always varied from study to probe, either 2 or 4 degrees of visual angle. There were six blocks (three identical, three complementary) of 192 trials (64 face trials, 64 car trials and 64 chair trials), where image category and orientation varied randomly within a block. Block order was randomized across subjects. Participants were given 12 practice trials and offered a break every 64 trials.

On each trial, participants judged whether a pair of sequentially presented images (either two faces, two cars, or two chairs) was of the same identity. Relative to the study image, the probe image could be (a) the same identity and the same SF (i.e., the exact image), (b) the same identity and a complementary SF, or (c) a different exemplar altogether. The manipulation of interest was therefore SF overlap or complementarity – as opposed to the SF content per se – of two sequentially flashed images. Participants were instructed to make their judgments based on identity alone, regardless of differences in image size or SF content (described to subjects as “blurriness”). As in Yue et al. (2006), image size always differed from study to probe (2 to 4 deg or 4 to 2 deg), so that no part of the effect could be attributed to image matching.

Each trial began with a 500ms fixation cross, followed by a target stimulus (face, car or chair) in the center of the screen for 200ms. After a 300ms inter-stimulus-interval a probe stimulus of the same category appeared for 200ms. Participants had to make a same/different judgment regarding the identity of the target and probe within 1800ms.
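For readers reproducing the trial timeline, the durations above map onto whole screen refreshes at the 100 Hz refresh rate reported for the monitor. The sketch below assumes that stimulus timing is locked to frames, which the text does not state; the names `TRIAL_EVENTS_MS` and `ms_to_frames` are illustrative.

```python
REFRESH_HZ = 100  # CRT refresh rate reported in the Methods

# Event durations in milliseconds, in presentation order.
TRIAL_EVENTS_MS = [("fixation", 500), ("target", 200), ("isi", 300), ("probe", 200)]

def ms_to_frames(duration_ms, refresh_hz=REFRESH_HZ):
    """Convert a duration to a whole number of refresh frames.

    Raises ValueError if the duration is not a whole number of frames.
    """
    frames = duration_ms * refresh_hz / 1000
    if abs(frames - round(frames)) > 1e-9:
        raise ValueError(f"{duration_ms} ms is not a whole number of frames")
    return round(frames)

frames_per_event = {name: ms_to_frames(ms) for name, ms in TRIAL_EVENTS_MS}
print(frames_per_event)
```

At 100 Hz each frame lasts 10 ms, so every duration in the procedure corresponds to an integer frame count.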

Following the matching task with filtered images, all participants completed a test of car expertise to quantify their skill at car identification (Curby, Glazek & Gauthier, in press; Gauthier, Curby & Epstein, 2005; Gauthier et al., 2000; Grill-Spector, Knouf & Kanwisher, 2004; Rossion et al., 2007; Xu, 2005). We did not explicitly recruit car experts, but the data were acquired to explore whether natural variation in car expertise may account for a potential difference between cars and chairs. In this task, participants made same/different judgments on car images (at the level of make and model, regardless of year) and bird images (at the level of species). For each of 112 car trials and 112 bird trials, the first stimulus appeared for 1000ms, followed by a 500ms mask. A second stimulus then appeared and remained visible until a same/different response was made or 5000ms elapsed. A separate sensitivity score was calculated for cars (Car d′) and birds (Bird d′). The difference between these measures (Car d′ – Bird d′) yields a Car Expertise Index for each participant. Expertise data were not obtained for two individuals who did not take the expertise test because of personal time constraints. The remaining participants spanned a limited range of car performance, comparable to the range we observed for birds (Car d′ range = 0.31 to 2.25; Bird d′ range = 0.55 to 1.55; Car Expertise Index range = −0.52 to 1.42). Because we made no effort to recruit car experts, this range was limited relative to prior work focusing on car expertise.

Results

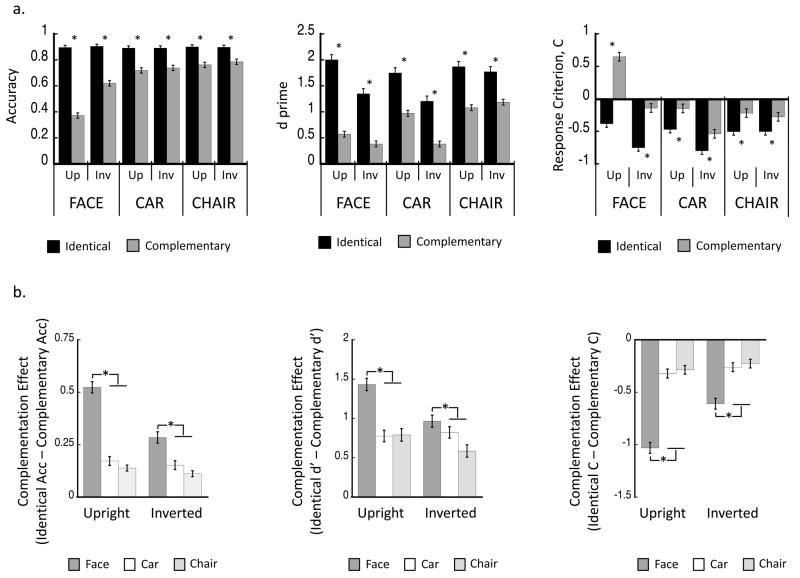

Figure 3a presents the mean results in each condition for three dependent variables. First, we consider accuracy on same trials only to provide an index that is comparable to what was used in prior studies (Biederman & Kalocsai, 1997; Yue et al., 2006). Second, we look at discriminability (d′) and response criterion (C), incorporating both the same and the different trials into our design. Paired t-tests revealed a significant CE in every condition using either accuracy, d′, or response criterion (all ps < .0001).
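For readers unfamiliar with these measures, the sketch below shows one common way to compute d′ and C from response counts. It assumes the standard equal-variance Gaussian (yes/no) model, treats a "same" response on a same trial as a hit and a "same" response on a different trial as a false alarm, and applies a log-linear correction to avoid infinite z-scores. None of these choices is specified in the text, so they are assumptions, and the function name is hypothetical.

```python
from statistics import NormalDist

_z = NormalDist().inv_cdf  # inverse of the standard normal CDF

def sdt_measures(hits, misses, false_alarms, correct_rejections):
    """Return (d_prime, criterion) from trial counts.

    Assumptions: equal-variance Gaussian model; log-linear correction
    (add 0.5 to each count, 1 to each total) to handle rates of 0 or 1.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    d_prime = _z(hit_rate) - _z(fa_rate)
    # Negative C = bias toward responding "same" under the hit definition above.
    criterion = -0.5 * (_z(hit_rate) + _z(fa_rate))
    return d_prime, criterion

# Hypothetical counts for one participant in one condition.
d_prime, criterion = sdt_measures(hits=90, misses=10, false_alarms=50, correct_rejections=50)
print(f"d' = {d_prime:.2f}, C = {criterion:.2f}")
```

Accuracy on same trials alone corresponds to the hit rate, so two conditions with equal d′ can still differ in same-trial accuracy if observers adopt different criteria, which is why the different trials are needed.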

Figure 3.

Experiment 1 results (N=34). (a) Mean values for same-different matching of identical and complementary faces, cars, and chairs presented either upright or inverted are represented using three dependent measures: accuracy for same trials, sensitivity (d′), and response criterion (C). For each orientation of all stimulus categories using both accuracy and d′ measures, subjects were significantly better matching Identical relative to Complementary pairs of images. Error bars represent the standard error of the mean. (b) The CE (Identical – Complementary) associated with accuracy, d′ and C measurements is plotted for upright and inverted faces, cars, and chairs. Error bars represent standard error of the mean.

Data were analyzed using a 3×2×2 ANOVA with within-subject factors being Category (face, car, or chair), Complementation (identical or complementary), and Orientation (upright or inverted).

Considering first Accuracy, all main effects and interactions were significant (all ps < .0001). As expected, performance was better for identical pair trials than complementary pair trials (F(2,33)=203.28), and for upright than inverted trials (F(2,33)=52.80). When investigating the main effect of Category type (F(2,33)=31.46) via Bonferroni post-hoc tests (per-comparison alpha level = 0.017), we found that performance was better for chairs and cars relative to faces, and equivalent for chairs and cars.

To investigate the three-way interaction between Category, Complementation and Orientation (F(2,66)=36.67), we conducted a 2-way ANOVA directly on participants’ mean complementation scores for each condition (Identical - Complementary, see Figure 3b). Again, both main effects (those of Category and Orientation) and the interaction were significant (all ps ≤ .0001). Of particular interest is the Category × Orientation interaction (F(2,66)=36.67), which we followed up with post hoc tests (Bonferroni, using a per-comparison alpha = .0056). Only faces saw their CE reduced by inversion. In addition, upright faces showed a larger CE than both upright cars and chairs (with no difference between cars and chairs themselves), and this pattern persisted for inverted stimuli. Note, however, that there was a significant CE in all conditions (all ps ≤ .0001).
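The per-comparison alpha levels reported here are consistent with a Bonferroni correction of a familywise alpha of .05. The sketch below assumes 3 comparisons for the Category main effect and 9 for the follow-up of the Category × Orientation interaction; these counts are inferred from the reported values and are not stated in the text.

```python
def bonferroni_alpha(family_alpha, n_comparisons):
    """Per-comparison alpha under a Bonferroni correction."""
    if n_comparisons < 1:
        raise ValueError("n_comparisons must be at least 1")
    return family_alpha / n_comparisons

FAMILY_ALPHA = 0.05  # assumed familywise alpha

alpha_category = bonferroni_alpha(FAMILY_ALPHA, 3)      # assumed 3 pairwise comparisons
alpha_interaction = bonferroni_alpha(FAMILY_ALPHA, 9)   # assumed 9 comparisons
print(round(alpha_category, 3), round(alpha_interaction, 4))
```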

How do these results compare with a signal detection analysis that uses both same and different trials to separate discriminability from response criterion? When analyzing d′ in a 3×2×2 ANOVA, again, all main effects and interactions were significant (all ps < .01). We emphasize here only those effects that depart qualitatively from the analysis on accuracy. When looking at the main effect of Category on sensitivity (F(2,33)=29.54, p<.0001) via a Bonferroni post-hoc comparison test (per-comparison alpha level = .017), we found that performance was better for chairs relative to faces and cars, and equivalent for face and car trials.

To investigate the three-way interaction between Category, Complementation, and Orientation (F(2,33)=4.58), we conducted a 2-way ANOVA directly on participants’ mean complementation scores for each condition (Identical - Complementary, see Figure 3b). Again, both main effects and interaction were significant (Category (p<.0001), Orientation (p<.01), Category × Orientation (p=.01)). Of particular interest is the Category × Orientation interaction (F(2,66)=4.58). Post hoc tests (Bonferroni, alpha=.0056) showed only faces saw their CE reduced by inversion. In addition, while for upright stimuli faces showed a larger CE than both cars and chairs (no difference between non-face categories), for inverted stimuli the face CE was not significantly larger than that for cars or chairs.

It is easy to appreciate from Figure 3 that response criterion contributed to exaggerate the CE in accuracy for upright faces. A 3×2×2 ANOVA shows all main effects and interactions to be significant (all ps < .0001). To investigate the 3-way interaction (F(2,66)=13.245) we performed a 2×2 ANOVA directly on CEs (Identical - Complementary). All main effects and interactions were significant (all ps < .0001). Of interest is the interaction between Category and Orientation (F(2,66)=13.245), which we followed up with post-hoc tests (Bonferroni, alpha=.0056). It revealed that only for faces was the CE in response criterion influenced by inversion. In addition, for both upright and inverted stimuli, there was a larger CE for faces relative to either cars or chairs (which were statistically equivalent).

For brevity, analyses on response times are not reported here, but the mean values for all conditions are shown in Appendix A. They were generally consistent with those for d′ and did not suggest any speed-accuracy tradeoffs.

Discussion

In Experiment 1, we observed a CE in all conditions (faces, cars and chairs, both upright and inverted), even when analyzing only accuracy on same trials. As such, with stimuli equated in a number of low-level properties (luminance histograms and Fourier amplitudes), we failed to replicate prior results in which no CE was observed for objects (Biederman & Kalocsai, 1997; Yue et al., 2006). We did, however, observe as in these studies that the CE for faces was larger than that for objects. Importantly, this difference was exaggerated by the use of accuracy for same trials as a dependent variable, as revealed through an important difference in participants’ response criterion between faces and objects. While observers were generally biased to respond “same” in most conditions of this experiment, they showed a unique bias to say “different” for complementary images of upright faces. This bias is independent of the ability of observers to judge whether the complementary images are the same or not. Indeed, the same response bias is not observed in other conditions where performance is either better (e.g., complementary chairs) or poorer (e.g., complementary inverted cars or faces) than for upright faces.

Given this important effect of response bias, it is more appropriate to compare the CE for faces and objects using sensitivity (d′). Using d′, we still find the largest CE for upright faces, compared not only to other object categories but also to inverted faces, suggesting that the larger CE for faces is not fully accounted for by low level differences that may persist between faces and objects despite the application of SHINE. That is, while we have eliminated many of these differences across categories using SHINE, it remains possible that this procedure affected information that was more diagnostic for the discrimination of faces than other objects. The inversion effect suggests that the magnitude of the face CE is influenced by configural processing, well-known to be more available for upright than inverted faces (Thompson, 1980; Searcy & Bartlett, 1996; Tanaka & Sengco, 1997). When only inverted stimuli are considered, the face CE is no longer larger than the car CE. Note that cars showed an overall inversion effect comparable to that found with faces (when only cars and faces are included in an ANOVA using d′, the Category × Orientation effect is not significant, p = .11). Cars are highly familiar and mono-oriented objects, and car inversion effects with car novices have been observed in other tasks (Gauthier et al., 2000). Despite this, inversion for cars did not interact with the magnitude of the CE.

Experiment 2

In Experiment 2, we address the following issues. First, because we found a CE for objects even using accuracy as a dependent measure, which was not the case in Biederman & Kalocsai (1997) and Yue et al. (2006), we wanted to ensure that the SHINE procedure was not the cause of the CE for objects. We therefore filtered images of faces and chairs (the category that was maximally different from faces in Experiment 1) without using SHINE, as in the previous studies. Second, while prior work used actual face photographs, Experiment 1 employed images from a face database wherein each face represents a linear combination, or morphing, of a set of prototypical faces created from cylindrical 3D laser scanning (Blanz & Vetter, 1999; Troje & Bulthoff, 1996). To test for the generalization of our effects, we therefore used a different set of faces: regular face photographs from the CVL database. Finally, we wanted to investigate the possible role of image symmetry in the CE. In Experiment 1, faces were shown in a symmetrical front-facing view, whereas cars were displayed in profile and chairs rotated towards the right about 45°. It is possible that these differences contributed to the larger CE for upright faces, because the radially symmetrical SF-orientation filter applied would have produced symmetrical changes on the faces that may have been especially likely to be interpreted as changes in the shape of the faces. Therefore, in Experiment 2 we measured the CE for 0° and 45° views of both faces and chairs.

Methods

Participants

Thirty individuals (15 male, mean age 19 years) participated for a small honorarium or course credit. All participants had normal or corrected-to-normal visual acuity. The experiment was approved by the Institutional Review Board at Vanderbilt University, and all participants provided written informed consent.

Stimuli and Material

Stimuli were images (30 faces with hair cropped from the Computer Vision Laboratory at the University of Ljubljana in Ljubljana, Slovenia, and 30 chairs obtained from C. Collin and used in Collin et al., 2004). For each object category, half of the images were front-on image views (0° rotation) while the other half were quarter views (45° rotation; see Figure 4). All images were filtered into complementary pairs as in Experiment 1, and then transformed to two different sizes, either 113 by 113 pixels (~3.95 × 3.95 degrees of visual angle) or 226 by 226 pixels (~7.85 × 7.85 degrees of visual angle). All images were presented at a viewing distance of 58 cm.

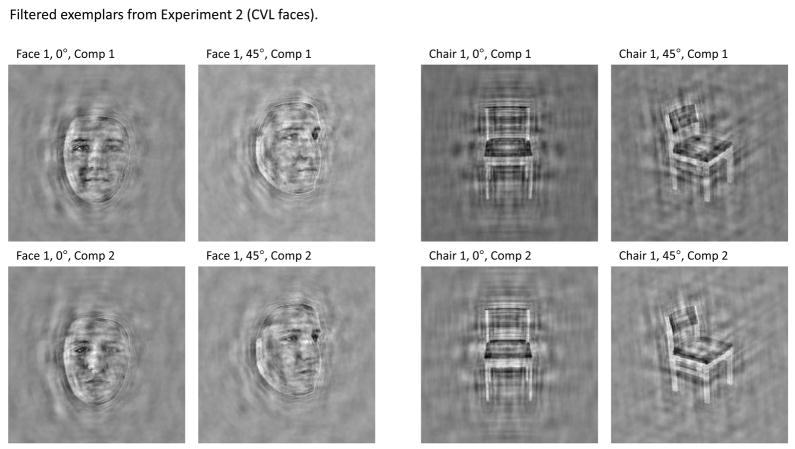

Figure 4.

Example images from Experiment 2. Displayed are pairs of filtered images from each target category (0°- and 45°-view faces and chairs).

Procedure

This experiment employed a 2×2×2 repeated-measures design with the following factors: Category (face or chair), SF-orientation content (identical or complementary), and Viewpoint (0° view or 45° view). The viewpoint of the stimuli varied randomly across trials, though both stimuli within a trial were always shown in the same view. As before, image size differed from study to probe to reduce image matching, and trials were blocked according to SF content (identical or complementary) to allow for the computation of sensitivity and response bias.

Participants completed eight blocks of 128 trials each: four blocks of identical SF-orientation pairs and four blocks of complementary SF-orientation pairs. Stimulus category and viewpoint varied randomly within a block, allowing 256 trials for each combination (i.e., 0° faces, 0° chairs, 45° faces, 45° chairs). Block order was randomized across subjects. Each subject began with 15 practice trials, and breaks were offered every 64 trials. Participants determined whether the probe stimulus was the same or different identity from the target stimulus.
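The trial counts above can be verified with simple arithmetic. The sketch below assumes that the four Category × Viewpoint combinations are equally represented and that each combination is split evenly between identical and complementary blocks; the text gives the per-combination total but not the balance within blocks.

```python
N_BLOCKS = 8
TRIALS_PER_BLOCK = 128
CATEGORIES = ("face", "chair")
VIEWPOINTS = (0, 45)
COMPLEMENTATION = ("identical", "complementary")  # four blocks each

total_trials = N_BLOCKS * TRIALS_PER_BLOCK
# Assumption: equal numbers of trials per Category x Viewpoint combination.
trials_per_combination = total_trials // (len(CATEGORIES) * len(VIEWPOINTS))
# Assumption: each combination is split evenly across the two block types.
trials_per_cell = trials_per_combination // len(COMPLEMENTATION)

print(total_trials, trials_per_combination, trials_per_cell)
```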

Results

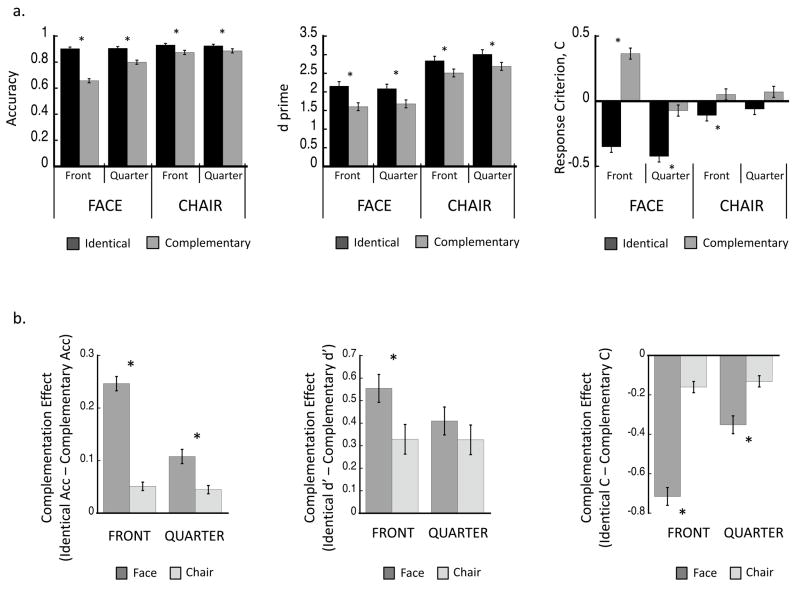

Figure 5 presents accuracy on same trials, discriminability (d′) and response criterion (C). Paired t-tests revealed a significant CE in every condition using accuracy, d′, and C measures (all ps <.02). For each dependent variable, the results were further analyzed using a 2×2×2 ANOVA with factors (all within-subjects) being Category (face, chair), SF-orientation manipulation (identical, complementary), and Viewpoint (0°, 45°).

Figure 5.

Experiment 2 results (N=30). (a) Mean values for same-different matching of identical and complementary faces and chairs at either 0° rotation or 45° rotation are represented using three dependent measures: accuracy for same trials, sensitivity (d′), and response criterion (C). Error bars represent standard error of the mean. (b) The CE (Identical – Complementary) associated with accuracy, d′ and C measurements is plotted for 0° and 45° views of faces and chairs. Error bars represent standard error of the mean.

For Accuracy, all main effects and interactions were significant (all ps < .0002). Performance was better overall for chairs than faces (Facc(1,29)=32.14), for 45° than 0° views (Facc(1,29)=53.17), and for identical than complementary pairs (Facc(1,29)=182.74).

To investigate the 3-way interaction (Facc(1,29)=33.94), we conducted 2×2 ANOVAs directly on CE scores (Identical – Complementary, see Figure 5b). Both main effects and the interaction were significant (all ps < .0001). We were especially interested in the Category × Viewpoint interaction (Facc(1,29)=33.94), which we followed with Bonferroni post-hoc tests (per-comparison alpha = .0125). The CE for faces was greater than for chairs regardless of viewpoint. In addition, while face stimuli showed a reduced CE when rotated 45°, chair stimuli showed comparable CEs for 45° and 0° views.

When analyzing d′ in a 2×2×2 ANOVA, the main effects of Category (Fd′(1,29)=234.22, p<.0001) and Complementation (Fd′(1,29)=55.12, p < .0001) remained significant. Viewpoint showed only a marginally significant effect (Fd′(1,29)=3.68, p = .06). The only other significant effect was an interaction between Category and Complementation (Fd′(1,29)=5.41, p = .03). Post-hoc tests (Bonferroni, per-comparison alpha = .0125) revealed that with both sets of stimuli (identical and complementary) subjects were better with chairs than faces, although there was a significant CE for both categories.

To explore the effect of image symmetry using d′, we also conducted a two-way ANOVA (Category × Viewpoint) on the CE for this measure. Neither the effect of viewpoint (Fd′(1,29)<1, n.s.) nor the interaction effect (Fd′(1,29)<1, n.s.) was significant using d′. It is interesting to note, therefore, that viewpoint has less of an impact with d′ than with accuracy.

Considering response criterion (C) in a 2×2×2 ANOVA, all main effects and interactions were significant (ps < .001) except the main effect of category (FC(1,29)=2.80, p=.10). As in Experiment 1, participants demonstrated a particularly large bias to say “different” for complementary pairs of 0° view face images. To investigate the significant 3-way interaction, we computed a 2×2 ANOVA directly on CE (Identical – Complementary). The interaction between Category and Viewpoint (FC(1,29)=12.96, p = .001) reflected the fact that there was, according to post-hoc tests (Bonferroni, per-comparison alpha = .0125), a significant effect of viewpoint for faces but not for chairs (as is easily appreciated from Figure 5b). Response times for Experiment 2 are reported in Appendix A.

Discussion

In summary, Experiment 2 is consistent with Experiment 1, replicating and extending several findings. We find CEs for both faces and objects, and when accuracy is used as the measure, differences between faces and objects are amplified by a differential response bias. Moreover, viewpoint reduced the response bias difference between identical and complementary trials for faces but not for chairs.

General Discussion

Using the same SF-orientation filtering mechanism as employed here, Biederman and Kalocsai (1997) concluded that face recognition, but not object recognition, is sensitive to changes in the SF content of the image. On this basis they suggested that faces and objects are stored in qualitatively unique ways in the brain: faces are represented as a collection of outputs of V1-type cells preserving their original SF- and orientation-selective information, while objects are represented as structural units composed of lines and edges derived from harmonics that transcend scale and are devoid of SF information.

In contrast, our results indicate that significant CEs can also be obtained with chairs and cars (regardless of whether they are processed to match the low-level properties of faces). The CE is obtained with upright and inverted objects, regardless of viewpoint. We suggest, therefore, that the CE is not unique to faces.

Why was the CE not obtained with objects in prior work? While it is always difficult to interpret null effects, we should note that our results are generally consistent with those of Biederman & Kalocsai (1997) and Yue et al. (2006) in that we found the CE to be larger for faces than objects. It is possible that by chance the prior work used certain parameters (such as object viewpoint and analyses with hit rates only) that produced more sensitivity to the CE for faces than objects. Importantly, our findings suggest that the magnitude of the CE is influenced by several factors that are unlikely to be caused by a qualitative difference in underlying representations across categories. On the one hand, there is an important difference in response bias between faces and objects, which inflates the size of the CE for faces. We do not have a theoretical account of this difference, but we note that such a differential bias between faces and objects has been observed in other tasks (Wenger & Ingvalson, 2002; Cheung et al., in press). An anonymous reviewer of this paper suggested that the differential bias effect for faces may be based on our blocking by complementarity, so that we could unambiguously attribute different trials to the identical or the complementary condition, and that a similar bias difference may not have been found in Biederman & Kalocsai (1997) and Yue et al. (2006), who only blocked trials by category. To test this account, we re-analyzed data from an experiment in a different paper (Williams & Gauthier, submitted). In that study (N=39), which used the same filters as the present work, there were upright and inverted face and car trials blocked by category and randomized by complementarity as in prior work. To assign the “different” trials to complementary or identical conditions, we simply followed the same rule as for the “same” trials: namely, whether the filter was identical or complementary.
This re-analysis replicated the large difference in response bias between faces and cars even when trials were randomized as in prior work (Mean C for upright identical faces =; upright complementary faces =; upright identical cars =; upright complementary cars =; Interaction between category and complementarity = GIVE F for interaction HERE). This allows us to reject the possibility that the differential bias effects for upright faces obtained in our experiments can be explained by blocking by complementarity.
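The assignment rule used in this re-analysis can be sketched as follows: every trial, whether "same" or "different", is labeled identical or complementary according to the relation between the two filters, and C is then computed per category and filter condition. The trial record fields, the function name, and the toy data are hypothetical, and the log-linear correction is an assumption; none of these are taken from the re-analyzed study.

```python
from collections import defaultdict
from statistics import NormalDist


def criterion_by_condition(trials):
    """Compute C for each (category, filter condition) pair.

    Each trial is a dict with hypothetical keys: 'category' (str),
    'filters_match' (bool), 'same_identity' (bool), 'said_same' (bool).
    """
    counts = defaultdict(lambda: {"hit": 0, "miss": 0, "fa": 0, "cr": 0})
    for t in trials:
        # Same rule for "same" and "different" trials: the filter relation
        # alone decides whether the trial is identical or complementary.
        filt = "identical" if t["filters_match"] else "complementary"
        if t["same_identity"]:
            outcome = "hit" if t["said_same"] else "miss"
        else:
            outcome = "fa" if t["said_same"] else "cr"
        counts[(t["category"], filt)][outcome] += 1

    z = NormalDist().inv_cdf
    result = {}
    for condition, c in counts.items():
        # Log-linear correction (assumed) avoids rates of exactly 0 or 1.
        hit_rate = (c["hit"] + 0.5) / (c["hit"] + c["miss"] + 1)
        fa_rate = (c["fa"] + 0.5) / (c["fa"] + c["cr"] + 1)
        result[condition] = -0.5 * (z(hit_rate) + z(fa_rate))
    return result


def make_trials(category, filters_match, hit, miss, fa, cr):
    """Build toy trial records from outcome counts (illustration only)."""
    spec = [(True, True, hit), (True, False, miss), (False, True, fa), (False, False, cr)]
    return [
        {"category": category, "filters_match": filters_match,
         "same_identity": same, "said_same": said}
        for same, said, n in spec for _ in range(n)
    ]


# Toy data, not values from any experiment.
toy = make_trials("face", True, 8, 2, 2, 8) + make_trials("face", False, 5, 5, 1, 9)
bias = criterion_by_condition(toy)
print(bias)
```

Because complementarity is read off the filters on each trial, this labeling does not require trials to be blocked by complementarity.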

Another factor that could help explain the discrepancy with prior work is that in the present study, we found that both inversion and rotation in depth reduced the CE for faces. The objects manipulated in prior studies may not have been shown in a symmetrical view as were the faces (e.g., the blobs used in Yue et al., 2006). Therefore, differences in viewpoint or symmetry between faces and objects, coupled with the presence of response bias, may have contributed to reducing the CE for objects in prior work. Clearly, other differences could have been influential as well; for example, the CEs we observed in Experiment 2 were considerably smaller than those from Experiment 1, an effect that was not predicted but that could result from any of several confounded differences in methods (use of SHINE, different stimulus sets, different image sizes).

Nonetheless, beyond the general cost of complementation, our results are consistent with the idea that the matching of front views of faces is more affected by manipulations of the spatial information than is object matching. In contrast to Collin et al. (2004), who found no inversion effect on the sensitivity to SF overlap for faces, we found that inversion reduced the CE for faces but not for cars or chairs in both our experiments. This suggests that the magnitude of the CE may depend on configural processing. Indeed, although inversion affects more than configural processing, an effect of inversion limited to face perception is more suggestive that configural processing is involved. In recent work, inversion effects observed for faces and not for objects have been replicated in expert observers (Curby, Glazek & Gauthier, in press), and configural processing has also been found to increase with expertise (Gauthier & Tarr, 2002; Wong, Palmeri & Gauthier, in press). Therefore, an inversion effect for faces and not for objects is consistent, albeit indirectly, with a role of expertise in the CE. We found that rotation in depth also reduced the CE for faces, and this manipulation has been found not to interact with configural processing (McKone, 2008). The CE could be affected by inversion for the same core reason as it is reduced by rotation in depth, or the two effects could have distinct underlying causes. Future work should address these questions and, in particular, test the role of expertise in the CE more directly, because expertise effects should be particularly reduced for very unfamiliar inverted views.

In summary, we found that the CE can be obtained with non-face objects. Using a measure of discriminability to reduce the role of important response biases, we found that the CE was larger in magnitude for faces than for objects, and most pronounced for upright front views of faces. With inversion, or rotation in depth, the effect of complementation was no longer face-specific.

Supplementary Material

Appendix A. Response times (ms) for correct trials in Experiments 1 and 2

Experiment 1: Response time (ms) for same trials.

| Filter condition | Face, upright | Face, inverted | Car, upright | Car, inverted | Chair, upright | Chair, inverted |
|---|---|---|---|---|---|---|
| Identical | 468 | 504 | 485 | 505 | 464 | 492 |
| Complementary | 578 | 600 | 576 | 589 | 559 | 592 |

Experiment 2: Response time (ms) for same trials.

| Filter condition | Face, 0° view | Face, 45° view | Chair, 0° view | Chair, 45° view |
|---|---|---|---|---|
| Identical | 523 | 492 | 480 | 466 |
| Complementary | 617 | 552 | 512 | 510 |

Footnotes

See Gauthier & Bukach (2007) and McKone & Robbins (2007) for a debate regarding different experimental designs to measure configural and holistic processing using composite stimuli.

References

- Biederman I. Recognition-by-components: A theory of human image understanding. Psychological Review. 1987;94:115–147. doi: 10.1037/0033-295X.94.2.115.

- Biederman I, Ju G. Surface versus edge-based determinants of visual recognition. Cognitive Psychology. 1988;20:38–64. doi: 10.1016/0010-0285(88)90024-2.

- Biederman I, Kalocsai P. Neurocomputational bases of object and face recognition. Philosophical Transactions of the Royal Society of London, Series B Biological Sciences. 1997;352:1203–1219. doi: 10.1098/rstb.1997.0103.

- Blanz V, Vetter T. A Morphable Model for the Synthesis of 3D Faces. Presented at Proceedings of the 26th annual conference on Computer graphics and interactive techniques. 1999.

- Bruce V, Hanna E, Dench N, Healey P, Burton M. The importance of “mass” in line drawings of faces. Applied Cognitive Psychology. 1992;6:619–628.

- Cheung O, Richler J, Palmeri T, Gauthier I. Revisiting the role of spatial frequencies in the holistic processing of faces. Journal of Experimental Psychology: Human Perception and Performance. doi: 10.1037/a0011752. (in press)

- Collin CA, Liu CH, Troje NF, McMullen PA, Chaudhuri A. Face recognition is affected by similarity in spatial frequency range to a greater degree than within-category object recognition. Journal of Experimental Psychology: Human Perception and Performance. 2004;30:975–987. doi: 10.1037/0096-1523.30.5.975.

- Curby K, Glazek K, Gauthier I. Perceptual expertise increases visual short term memory capacity. Journal of Experimental Psychology: Human Perception and Performance. doi: 10.1037/0096-1523.35.1.94. (in press)

- Fiser J, Subramaniam S, Biederman I. Size tuning in the absence of spatial frequency tuning in object recognition. Vision Research. 2001;41:1931–1950. doi: 10.1016/s0042-6989(01)00062-1.

- Gaspar CM, Bennett PJ, Sekuler AB. The effects of face inversion and contrast-reversal on efficiency and internal noise. Vision Research. 2008;48:1084–1095. doi: 10.1016/j.visres.2007.12.014.

- Gauthier I, Curby KM, Epstein R. Activity of spatial frequency channels in the fusiform face-selective area relates to expertise in car recognition. Cognitive and Affective Behavioral Neuroscience. 2005;5:222–234. doi: 10.3758/cabn.5.2.222.

- Gauthier I, Tarr MJ. Unraveling mechanisms for expert object recognition: Bridging brain activity and behavior. Journal of Experimental Psychology: Human Perception and Performance. 2002;28:431–446. doi: 10.1037//0096-1523.28.2.431.

- Gauthier I, Tarr MJ, Moylan J, Skudlarski P, Gore JC, Anderson AW. The fusiform “face area” is part of a network that processes faces at the individual level. Journal of Cognitive Neuroscience. 2000;12:495–504. doi: 10.1162/089892900562165.

- Grill-Spector K, Knouf N, Kanwisher N. The fusiform face area subserves face perception, not generic within category identification. Nature Neuroscience. 2004;7:555–562. doi: 10.1038/nn1224.

- Goffaux V, Gauthier I, Rossion B. Spatial scale contribution to early visual differences between face and object processing. Cognitive Brain Research. 2002;16:416–424. doi: 10.1016/s0926-6410(03)00056-9.

- Hayes A. Identification of two-tone images; some implications for high- and low-spatial-frequency processes in human vision. Perception. 1988;17:429–436. doi: 10.1068/p170429.

- Liu CH, Collin CA, Rainville SJM, Chaudhuri A. The effects of spatial frequency overlap on face recognition. Journal of Experimental Psychology: Human Perception and Performance. 2000;29:729–743. doi: 10.1037//0096-1523.26.3.956.

- McKone E. Configural processing and face viewpoint. Journal of Experimental Psychology: Human Perception and Performance. 2008;34:310–327. doi: 10.1037/0096-1523.34.2.310.

- McKone E, Kanwisher N, Duchaine BC. Can generic expertise explain special processing for faces? TRENDS in Cognitive Sciences. 2007;11:8–15. doi: 10.1016/j.tics.2006.11.002.

- Nederhouser M, Yue X, Mangini MC, Biederman I. The deleterious effect of contrast reversal on recognition is unique to faces, not objects. Vision Research. 2007;47:2134–2142. doi: 10.1016/j.visres.2007.04.007.

- Robbins R, McKone E. Can holistic processing be learned for inverted faces? Cognition. 2003;88:79–107. doi: 10.1016/s0010-0277(03)00020-9.

- Rossion B, Collins D, Goffaux V, Curran T. Long-term expertise with artificial objects increases visual competition with early face categorization processes. Journal of Cognitive Neuroscience. 2007;19:543–555. doi: 10.1162/jocn.2007.19.3.543.

- Rossion B, Gauthier I. How does the brain process upright and inverted faces? Behavioral and Cognitive Neuroscience Reviews. Developmental Psychobiology. 2002;1:63–75. doi: 10.1177/1534582302001001004.

- Searcy JM, Bartlett JC. Inversion and processing of component and spatial-relational information in faces. Journal of Experimental Psychology: Human Perception and Performance. 1996;22:904–914. doi: 10.1037//0096-1523.22.4.904.

- Subramaniam S, Biederman I. Does contrast reversal affect object identification? Investigative Ophthalmology & Visual Science. 1997;38:998.

- Tanaka JW, Farah MJ. Parts and wholes in face recognition. Quarterly Journal Experimental Psychology. 1993;46:225–245. doi: 10.1080/14640749308401045.

- Tanaka JW, Gauthier I. Expertise in object and face recognition. In: Goldstone, Medin, Schyns, editors. Psychology of Learning and Motivation Series, Special Volume: Perceptual Mechanisms Learning. Vol. 36. San Diego, CA: Academic Press; 1997. pp. 83–125.

- Tanaka JW, Sengco JA. Features and their configuration in face recognition. Memory & Cognition. 1997;25:583–592. doi: 10.3758/bf03211301.

- Thompson P. Margaret Thatcher: a new illusion. Perception. 1980;9:483–484. doi: 10.1068/p090483.

- Troje N, Bülthoff HH. Face recognition under varying poses: The role of texture and shape. Vision Research. 1996;36:1761–1771. doi: 10.1016/0042-6989(95)00230-8.

- Wenger MJ, Ingvalson EM. A decisional component of holistic encoding. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2002;28:872–892.

- Wong AC-N, Palmeri TJ, Gauthier I. Conditions for face-like expertise with objects: Becoming a Ziggerin expert – but which type? Journal of Psychological Sciences. doi: 10.1111/j.1467-9280.2009.02430.x. (in press)

- Xu Y. Revisiting the role of the fusiform face area in visual expertise. Cerebral Cortex. 2005;15:1234–1242. doi: 10.1093/cercor/bhi006.

- Young AW, Hellawell D, Hay DC. Configural information in face perception. Perception. 1987;16:747–759. doi: 10.1068/p160747.

- Yue X, Tjan BS, Biederman I. What makes faces special? Vision Research. 2006;46:3802–3811. doi: 10.1016/j.visres.2006.06.017.
