Abstract

Fear and disgust expressions are not arbitrary social cues. Expressing fear maximizes sensory exposure (e.g., increases visual and nasal input), whereas expressing disgust reduces sensory exposure (e.g., decreases visual and nasal input).1 These emotional expressions have recently been found to have a similar effect on sensory exposure at the level of the central nervous system (attention) in people perceiving them.2 At an attentional level, sensory exposure is increased when perceiving fear and reduced when perceiving disgust. These findings suggest that response preparations are transmitted by expressers to perceivers. However, the processes involved in the transmission of such emotional action tendencies remain unclear. We suggest that emotional contagion, as described by grounded cognition theories, could be a simple, ecological and straightforward explanation of this effect. Contagion through embodied simulation of others’ emotional states, by means of simple, efficient and very fast facial mimicry, may represent the underlying process.

Key words: fear, disgust, attention, embodiment, emotion, grounded cognition

One of the acknowledged key functions of facial expressions in primates is to communicate the expresser’s internal affective state to peers quickly and efficiently. In other words, facial expressions offer specific evocative information to the perceiver3 and allow for important regulation strategies between the expresser and the perceiver.4 However, beyond the idea that facial expressions represent important social cues, recent physiological data indicate that facial expressions may also modify sensory acquisition. At a physiological level, a recent study1 has shown that fear and disgust are not arbitrary social signals. In expressers, fear was found to maximize sensory exposure (e.g., increases visual input) while disgust was found to reduce sensory exposure (e.g., decreases visual input); the same function seems to be present for anger and surprise as well.5

A similar relationship between emotional expressions and attention also occurs at the cognitive (i.e., attentional) level when people perceive emotional expressions.6,7 Using the attentional blink (AB) paradigm, we have also shown that expressed fear and disgust produce, in perceivers’ attentional system, a similar process of closure (induced by disgust) versus exposure (induced by fear) to external perceptual cues (see Figure 1 for an example of the type of trial used).2 The AB refers to the negative effect of the first target (T1) on the identification of the second target (T2) within a period of 200–500 ms following T1. It was recently suggested that this effect involves two processes, described in the ‘boost and bounce’ theory.8 First, the detection of T1 results in a transient attentional enhancement (boost), which can also enhance the processing of the distractor(s) presented immediately after T1 but before T2. The distractor(s) then elicit attentional suppression (bounce), which impairs the processing of the subsequent T2.
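The timing involved can be made concrete with a small sketch. It assumes the 53 ms per-item duration reported for the RSVP stream in the study by Vermeulen et al.2 and treats the 200–500 ms AB window as inclusive; the function names and the `max_lag` cut-off are hypothetical and serve only as an illustration, not as the study's own procedure.

```python
# Illustrative sketch only: relates RSVP item positions (lags) to SOAs.
# ITEM_DURATION_MS is the per-item duration reported for the RSVP stream;
# the function names and max_lag are assumptions made for this example.
ITEM_DURATION_MS = 53


def lag_for_soa(soa_ms, item_ms=ITEM_DURATION_MS):
    """Return the number of item positions separating T1 and T2.

    Rounding absorbs the small mismatch between a nominal SOA (e.g. 213 ms)
    and an exact multiple of the nominal item duration (4 * 53 = 212 ms).
    """
    return round(soa_ms / item_ms)


def lags_in_blink_window(lo_ms=200, hi_ms=500, item_ms=ITEM_DURATION_MS, max_lag=12):
    """List the lags whose nominal SOA falls inside the AB window."""
    return [k for k in range(1, max_lag + 1) if lo_ms <= k * item_ms <= hi_ms]


if __name__ == "__main__":
    print(lag_for_soa(213))
    print(lags_in_blink_window())
```

Under these assumptions, an SOA of 213 ms places T2 four positions after T1, at the early edge of the window in which the blink is expected.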

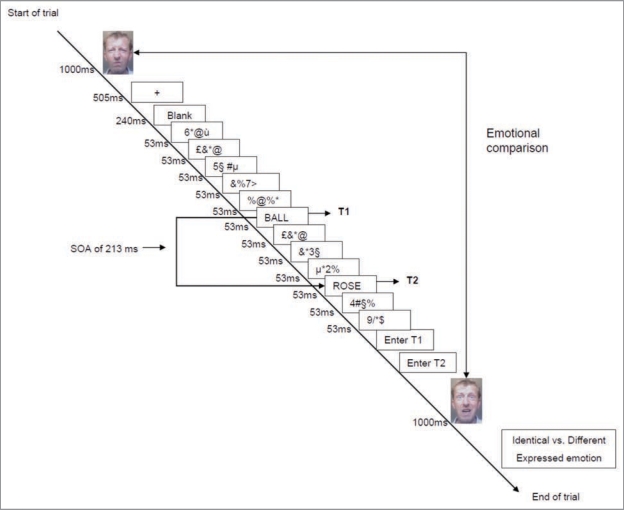

Figure 1.

Schematic overview of a typical trial with a stimulus onset asynchrony (SOA) of 213 milliseconds in the study by Vermeulen et al.2 Each trial started with the presentation of a facial expression of fear or disgust, directly followed by the rapid serial visual presentation (RSVP). Stimuli were presented one at a time in the centre of the screen for 53 ms each. After entering the target words (T1 and T2) they had seen, participants had to decide whether a second face expressed the same emotion as the first face or a different one.

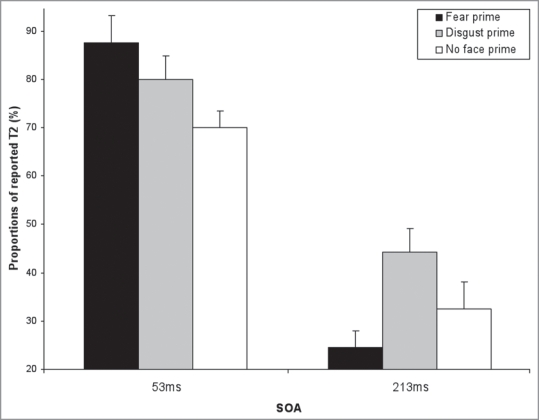

In our study,2 we found that processing fear faces impairs the detection of T2 to a greater extent than does the processing of disgust faces. When compared (Fig. 2) with an analogous experimental design9 without a facial expression prime, these findings seem to reflect both an increased blink due to perceived fear and a decreased blink due to perceived disgust.

Figure 2.

The influence of the emotion prime on second target detection (T2) depends on the stimulus onset asynchrony (SOA). In the study by Vermeulen et al.,2 the report of T2 targets was significantly better (p < 0.001) when preceded by a disgust face than when preceded by a fear face. Compared to a “No face prime” condition from another study,9 at an SOA of 213 ms, T2 report appears to decrease after fear faces, whereas it seems to increase after disgust faces.
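As an illustration of how T2 report can be summarized per prime condition, the sketch below scores T2 only on trials where T1 was reported correctly, a common convention in AB research. Whether the studies discussed here scored T2 in exactly this way is an assumption, and the trial tuples are toy placeholders, not data from any study.

```python
from collections import defaultdict


def t2_given_t1(trials):
    """Proportion of correct T2 reports among trials with a correct T1.

    `trials` is an iterable of (prime, t1_correct, t2_correct) tuples.
    This record layout is hypothetical and chosen only for the example.
    """
    hits = defaultdict(int)
    counts = defaultdict(int)
    for prime, t1_correct, t2_correct in trials:
        if not t1_correct:
            continue  # T2 is only scored when T1 was reported correctly
        counts[prime] += 1
        hits[prime] += int(t2_correct)
    return {prime: hits[prime] / counts[prime] for prime in counts}


if __name__ == "__main__":
    # Toy placeholder trials for illustration; NOT results from any study.
    toy_trials = [
        ("fear", True, True), ("fear", True, False),
        ("fear", False, True), ("fear", True, False),
        ("disgust", True, True), ("disgust", True, True),
        ("disgust", True, False), ("disgust", False, False),
    ]
    print(t2_given_t1(toy_trials))
```

A larger blink after a given prime would show up as a lower conditional T2 proportion for that prime at the SOA of interest.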

The fact that perceiving certain facial emotions has similar effects (i.e., consequences) to expressing those facial emotions suggests that expressing and perceiving facial expressions of emotion may engage similar processes. The underlying question, then, is how the transmission (i.e., contagiousness) of these emotional properties from expressers to perceivers can modify information intake in perceivers.

Towards a Grounded Attentional Contagion Account in Perceivers

An interesting question that follows from our findings thus concerns the cognitive and emotional processes involved in the modification of perceivers’ behavioural efficiency. How can perceivers be cognitively influenced by an observed facial expression of emotion? In other words, how can a perceiver replicate the sensory and cognitive consequences displayed by an expresser? Facial expressions are known to spontaneously evoke (i.e., as a mirror) emotional responses in the perceiver, as shown by studies on facial mimicry. For instance, Dimberg and colleagues showed that angry expressions evoked similar facial expressions in the receiver10 and that these reactions can be present even when facial expressions are presented too quickly to allow conscious perception.11 Collectively, the literature in psychological science and cognitive neuroscience suggests that emotional expressions can “automatically” evoke prepared responses4 or action tendencies12 in others, and this information is likely to influence the subsequent behavioural responses of the perceiver. As such, besides their role as social cues4 or as a biomechanical (sensory) interface,1,5 facial expressions can also play another role in affective life, which is to serve as the grounding (i.e., a cognitive support) for the processing of emotional information.13 Indeed, research from the embodied or grounded cognition literature has demonstrated that individuals use simulations to represent knowledge.14–16 These simulations can occur in different sensory modalities9,17,18 and in affective systems as well.13,19–21 For instance, a series of studies has found that participants expressed emotion on their faces when trying to represent discrete emotional contents from words.
As an example, when participants had to indicate whether the words slug or vomit were related to an emotion, they expressed disgust on their faces, as measured by the contraction of the levator labialis (used to wrinkle the nose).19 Taken together, these findings suggest that facial expressions also constitute a cognitive support used to reflect on, or to access, the affective meaning of a given emotion, and that this processing often involves the display of a facial expression of emotion.13,19 As the grounded cognition models suggest, the same structures are active inside our brain during the first- and the third-person experience of emotions.13,22–24 The activation of this “shared manifold space”,22 which is related to the automatic activation of grounded simulation routines, allows for the creation of a bridge between others and ourselves.24 In other words, the comprehension of others’ facial expressions depends on the re-activation of the neural structures normally devoted to our own personal experience of emotions.24

From this standpoint, it can be suggested that when humans perceive facial expressions of emotion they automatically behave as if they were actually experiencing and expressing that particular emotion. As such, perceivers are likely to act on the environment as expressers do. Since the specific forms of facial expressions may have originated to maximize beneficial adaptation in expressers,1,5 perceivers will similarly maximize their adaptation if they can mimic the expressers’ communicated internal state. As we found in our attentional blink study,2 such mimicry would quickly prepare perceivers to interact with environmental changes (i.e., attentional modulation) and probably to behave in the most adaptive way. Like a domino effect, facial adaptation in expressers will be transmitted to perceivers through mimicry and simulation processes. Adapted response tendencies are thus primed in perceivers following the mere perception of expressers’ internal affective states. Such an interpretation would lend added value to modal (i.e., grounded or embodied) models of cognition as compared with amodal models.24,25 Classic “amodal” models posit that information is encoded in an abstract form that is functionally independent from the sensory systems that processed it.25 As such, a parallel effect of facial expression in expressers and perceivers might not be predicted a priori by amodal models. The added value of modal representations of knowledge consists of a rapid and very efficient adaptive response (i.e., through mimicry and simulation) in perceivers as soon as a facial expression is processed, even if that expression is not consciously perceived.11 This might explain the specific role of basic perceptual information during emotional processing.26,27

To determine whether this effect could be due to emotional contagion from expressers to perceivers, further studies that manipulate or measure facial responses in perceivers are needed. For instance, as was recently done experimentally,19,21 blocking facial reactions in perceivers might be expected to decrease the emotional modulation of attention.2 Moreover, electromyographic measures of facial muscles such as the levator labialis (for disgusted emotional expressions) or the medial frontalis (for fearful expressions) may be employed to confirm the role of facial simulation19 in the appearance of the emotional modulation of the attentional blink. An implication of grounded cognition in this process would suggest that embodied emotion not only serves as the basis (i.e., an off-line cognitive support) for representing and understanding emotions13 but also has a role in allowing the transmission of internal states required for the survival of the species.

Footnotes

Previously published online: www.landesbioscience.com/journals/cib/article/10922

References

- 1. Susskind JM, Lee DH, Cusi A, Feiman R, Grabski W, Anderson AK. Expressing fear enhances sensory acquisition. Nature Neurosci. 2008;11:843–850. doi: 10.1038/nn.2138.

- 2. Vermeulen N, Godefroid J, Mermillod M. Emotional modulation of attention: fear increases but disgust reduces the attentional blink. PLoS One. 2009;4:7924. doi: 10.1371/journal.pone.0007924.

- 3. Schyns PG, Petro LS, Smith ML. Transmission of facial expressions of emotion co-evolved with their efficient decoding in the brain: behavioral and brain evidence. PLoS One. 2009;4:5625. doi: 10.1371/journal.pone.0005625.

- 4. Keltner D, Ekman P, Gonzaga GC, Beer J. Facial expression of emotion. In: Davidson RJ, Scherer KR, Goldsmith HH, editors. Handbook of affective sciences. New York: Oxford University Press; 2003. pp. 415–432.

- 5. Susskind JM, Anderson AK. Facial expression form and function. Commun Integr Biol. 2008;1:148–149. doi: 10.4161/cib.1.2.6999.

- 6. Anderson AK, Christoff K, Panitz D, De Rosa E, Gabrieli JDE. Neural correlates of the automatic processing of threat facial signals. J Neurosci. 2003;23:5627–5633. doi: 10.1523/JNEUROSCI.23-13-05627.2003.

- 7. Phelps EA, Ling S, Carrasco M. Emotion facilitates perception and potentiates the perceptual benefits of attention. Psychol Sci. 2006;17:292–299. doi: 10.1111/j.1467-9280.2006.01701.x.

- 8. Olivers CNL, Meeter M. A boost and bounce theory of temporal attention. Psychol Rev. 2008;115:836–863. doi: 10.1037/a0013395.

- 9. Vermeulen N, Mermillod M, Godefroid J, Corneille O. Unintended embodiment of concepts into percepts: Sensory activation boosts attention for same-modality concepts in the attentional blink paradigm. Cognition. 2009;112:467–472. doi: 10.1016/j.cognition.2009.06.003.

- 10. Dimberg U. Facial Electromyography and Emotional-Reactions. Psychophysiology. 1990;27:481–494. doi: 10.1111/j.1469-8986.1990.tb01962.x.

- 11. Dimberg U, Thunberg M, Elmehed K. Unconscious facial reactions to emotional facial expressions. Psychol Sci. 2000;11:86–89. doi: 10.1111/1467-9280.00221.

- 12. Frijda NH. The emotions. Cambridge: Cambridge University Press; 1986.

- 13. Niedenthal PM. Embodying emotion. Science. 2007;316:1002–1005. doi: 10.1126/science.1136930.

- 14. Barsalou LW. Perceptual symbol systems. Behav Brain Sci. 1999;22:577–660. doi: 10.1017/s0140525x99002149.

- 15. Barsalou LW. Grounded cognition. Ann Rev Psychol. 2008;59:617–645. doi: 10.1146/annurev.psych.59.103006.093639.

- 16. Barsalou LW, Simmons WK, Barbey AK, Wilson CD. Grounding conceptual knowledge in modality-specific systems. Trends Cogn Sci. 2003;7:84–91. doi: 10.1016/s1364-6613(02)00029-3.

- 17. van Dantzig S, Pecher D, Zeelenberg R, Barsalou LW. Perceptual processing affects conceptual processing. Cogn Sci. 2008;32:579–590. doi: 10.1080/03640210802035365.

- 18. Vermeulen N, Corneille O, Niedenthal PM. Sensory load incurs conceptual processing costs. Cognition. 2008;109:287–294. doi: 10.1016/j.cognition.2008.09.004.

- 19. Niedenthal PM, Mondillon L, Winkielman P, Vermeulen N. Embodiment of emotion concepts. J Pers Soc Psychol. 2009;96:1120–1136. doi: 10.1037/a0015574.

- 20. Vermeulen N, Niedenthal PM, Luminet O. Switching between sensory and affective systems incurs processing costs. Cogn Sci. 2007;31:183–192. doi: 10.1080/03640210709336990.

- 21. Oberman LM, Winkielman P, Ramachandran VS. Face to face: Blocking facial mimicry can selectively impair recognition of emotional expressions. Soc Neurosci. 2007;2:167–178. doi: 10.1080/17470910701391943.

- 22. Gallese V. The roots of empathy: the shared manifold hypothesis and the neural basis of intersubjectivity. Psychopathology. 2003;36:171–180. doi: 10.1159/000072786.

- 23. Gallese V. The manifold nature of interpersonal relations: the quest for a common mechanism. Philos Trans R Soc Lond B Biol Sci. 2003;358:517–528. doi: 10.1098/rstb.2002.1234.

- 24. Gallese V, Keysers C, Rizzolatti G. A unifying view of the basis of social cognition. Trends Cogn Sci. 2004;8:396–403. doi: 10.1016/j.tics.2004.07.002.

- 25. Fodor JA. The language of thought. Cambridge, Mass., USA: Harvard University Press; 1975.

- 26. Mermillod M, Vermeulen N, Lundqvist D, Niedenthal PM. Neural computation as a tool to differentiate perceptual from emotional processes: The case of anger superiority effect. Cognition. 2009;110:346–357. doi: 10.1016/j.cognition.2008.11.009.

- 27. Mermillod M, Vuilleumier P, Peyrin C, Alleysson D, Marendaz C. The importance of low spatial frequency information for recognising fearful facial expressions. Connection Science. 2009;21:75–83.