Abstract

The aim of this functional magnetic resonance imaging study is to identify neuroanatomical substrates underlying phonological processing of segmental (consonant, rhyme) and suprasegmental (tone) units. An auditory verbal recognition paradigm was employed in which native speakers of Mandarin Chinese were required to match a phonological unit that occurs in a list of three syllables to the corresponding unit of a following probe. Results show that hemispheric asymmetries arise depending on the type of phonological unit. In direct contrasts between phonological units, tones, relative to consonants and rhymes, yield increased activation in frontoparietal areas of the right hemisphere. This finding indicates that the cortical circuitry subserving lexical tones differs from that of consonants or rhymes.

Keywords: auditory, fMRI, language, prosody, lexical tone, Mandarin, selective attention, verbal working memory

Tone languages give us a unique window on the neurobiology of suprasegmental vs. segmental processing because of the phonemic status of pitch variations at the level of the syllable or morpheme. For instance, in Mandarin Chinese, consonants and rhymes differ in duration and the order in which their information unfolds over the course of a syllable. Both are units of segmental information. Rhymes and tones, on the other hand, are coterminous in duration and order in the syllable, but rhymes are segmental, tones suprasegmental [1].

Functional magnetic resonance imaging (fMRI) suggests that perception of speech prosody, including lexical tone, is mediated primarily by the right hemisphere (RH), but is lateralized to the left hemisphere (LH) for post-perceptual processing depending on its linguistic status in a particular language [2–5]. Differential patterns of cortical activation, however, are not driven by language experience alone. They may also be driven by differences in acoustic features associated with specific types of phonological units. For instance, hemispheric specialization for consonants has been shown to be dissociable from that for vowels during phonetic discrimination [6]. In Mandarin speech production, tones elicit more RH activity than vowels [7]. This is especially remarkable since tones are primarily realized on the vowels or rhymes with which they are associated. In Mandarin, early event-related brain potentials reveal RH dominance regardless of linguistic function (tone, intonation) [8], but opposite patterns of hemispheric dominance for tones (RH) vs. consonants (LH) [9]. In Japanese, near infrared spectroscopy (NIRS) shows stronger left-dominant responses for vowels and stronger right-dominant responses for prosodic contrasts in sentence type [10]. Using fMRI, it has been demonstrated in Mandarin that selective attention to a target tone of a syllable relative to the whole syllable recruits a left dorsal frontoparietal network [11], and that distracters between the target tone and its probe may induce articulatory encoding with engagement of a fronto-cerebellar network including a left dorsal frontal region [12]. Whether hemispheric specialization of tones is dissociable from that of rhymes is an empirical question.

The aim of this functional magnetic resonance imaging (fMRI) study is to identify the neuroanatomical substrates subserving phonological processing by providing pair-wise contrasts between suprasegmental (tone, T) and segmental (consonant, C; rhyme, R) phonological units concurrently. An auditory recognition paradigm is used in which subjects are asked to match a phonological unit within a three-syllable list to the corresponding unit of a following probe syllable. Two types of matching sequences are distinguished by either fixing or randomly varying the position of syllables containing the target units in a three-syllable list. Matching judgments for random sequences, compared to fixed, are expected to increase the neural activity for encoding of phonological units and their associated working memory. We further expect to elicit differential patterns of hemispheric asymmetry as a function of the type of phonological unit – segmental vs. suprasegmental.

Methods

Subjects

Twelve adult native speakers of Mandarin (6 male; 6 female) from mainland China, aged 23–32 years, participated in this study. All subjects were strongly right-handed (laterality quotient: M = 94%, SD = 9) [13] and exhibited normal hearing sensitivity. All subjects gave informed consent in compliance with a protocol approved by the Institutional Review Board of Indiana University Purdue University Indianapolis and Clarian Health.

Stimuli

A stimulus list of three Mandarin monosyllables followed by a probe monosyllable made up a sequence for each trial. All syllables (maximum duration = 450 ms) were produced by a native Chinese male speaker. A matching sequence contained a target syllable in the stimulus list sharing a phonological unit (consonant, C; rhyme, R; or tone, T) in common with the probe. In a non-matching sequence, none of the three syllables in the stimulus list contained a phonological unit in common with the following probe.

Two types of matching sequences were constructed based on the positions of the target syllables (Table 1). A fixed matching sequence located the target syllable in the last position of the stimulus list. A corresponding random matching sequence was derived from a fixed matching sequence by moving the target syllable to a random position (first, second, or last) of the stimulus list. Two types of non-matching sequences were constructed in the same way (see Text, SDC 1, for listeners’ guide to samples; see Audio, SDC 2, to listen to samples). For each phonological unit (consonant, rhyme, tone), there were 16 fixed matching sequences and 16 corresponding random matching sequences (see Table, SDC 3, for complete list of sequences). Within each sequence, no adjacent syllables formed a disyllabic word. Occurrences of different consonants, rhymes, and tones were balanced across sequences.

Table 1.

Sample auditory stimuli for consonant, rhyme, and tone matching tasks

| Unit | Position | List P1 | List P2 | List P3 | Probe | Matching |
|---|---|---|---|---|---|---|
| Consonant | Random | bian4 | wang1 | she2 | ba3 | yes |
| | | cen2 | zhai4 | feng3 | zhe1 | yes |
| | | liu1 | zuo2 | song4 | kui3 | no |
| Consonant | Fixed | she2 | wang1 | bian4 | ba3 | yes |
| | | cen2 | feng3 | zhai4 | zhe1 | yes |
| | | liu1 | song4 | zuo2 | kui3 | no |
| Rhyme | Random | xi1 | tuan2 | lou3 | pi4 | yes |
| | | zhuo1 | pin4 | da3 | ma2 | yes |
| | | zou1 | se4 | nian2 | kuang3 | no |
| Rhyme | Fixed | tuan2 | lou3 | xi1 | pi4 | yes |
| | | zhuo1 | pin4 | da3 | ma2 | yes |
| | | se4 | nian2 | zou1 | kuang3 | no |
| Tone | Random | bao1 | hun4 | mu2 | zhi1 | yes |
| | | kai3 | tu2 | huang4 | mei2 | yes |
| | | hei1 | liu2 | gong3 | can4 | no |
| Tone | Fixed | hun4 | mu2 | bao1 | zhi1 | yes |
| | | kai3 | huang4 | tu2 | mei2 | yes |
| | | hei1 | gong3 | liu2 | can4 | no |

Note. Chinese syllables are written in Pinyin transcription. Phonological units (consonant, rhyme, tone) in the probe are marked in bold; a target unit from the syllable list is also marked in bold when it matches the corresponding unit in the probe. P1, P2, and P3 represent the first, second, and third positions, respectively, in the syllable list.
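The matching logic behind the sample sequences in Table 1 can be illustrated with a short sketch. It is not the authors' stimulus-construction procedure: it assumes that a Pinyin syllable can be split orthographically into initial consonant, rhyme, and tone digit, which is only an approximation of the phonological units (the written rhyme does not always correspond to a single phonetic rhyme). The names `split_syllable` and `is_matching` are hypothetical.

```python
# Orthographic sketch only: Pinyin spelling stands in for phonological units.
# Two-letter initials come first so that "zh" is not parsed as "z".
INITIALS = ("zh", "ch", "sh", "b", "p", "m", "f", "d", "t", "n", "l",
            "g", "k", "h", "j", "q", "x", "r", "z", "c", "s", "y", "w")
UNIT_INDEX = {"consonant": 0, "rhyme": 1, "tone": 2}


def split_syllable(syllable):
    """Split e.g. 'zhai4' into ('zh', 'ai', 4)."""
    body, tone = syllable[:-1], int(syllable[-1])
    for initial in INITIALS:
        if body.startswith(initial):
            return initial, body[len(initial):], tone
    return "", body, tone  # syllable written without an initial


def is_matching(unit, syllable_list, probe, fixed=False):
    """Return True if a list syllable shares `unit` with the probe.

    With fixed=True only the last list position is inspected, mirroring
    the fixed-position instruction; otherwise all three are inspected.
    """
    i = UNIT_INDEX[unit]
    candidates = syllable_list[-1:] if fixed else syllable_list
    target = split_syllable(probe)[i]
    return any(split_syllable(s)[i] == target for s in candidates)


# Sample rows from Table 1
print(is_matching("consonant", ["bian4", "wang1", "she2"], "ba3"))
print(is_matching("consonant", ["she2", "wang1", "bian4"], "ba3", fixed=True))
print(is_matching("tone", ["hei1", "liu2", "gong3"], "can4"))
```

Applied to the random consonant sequence with `fixed=True`, the same function returns no match, because the target syllable is not in the last position; this is the difference in search demands that the random vs. fixed comparison is designed to capture.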

Task procedure

There were three paired experimental tasks designed to contrast random (r) vs. fixed (f) target positions on the three phonological units: Cr vs. Cf, Rr vs. Rf, and Tr vs. Tf. Tasks with fixed target positions served as the control (baseline) for tasks with random target positions. In the Cr task, for example, subjects were instructed to judge whether any of the three syllables in the list had a consonant matching that of the probe. In the Cf task, subjects were instructed to judge whether the consonant of the last syllable matched that of the probe, ignoring the first and second syllables of the list. They responded by pressing the left mouse button. Instructions were delivered in Mandarin via headphones immediately preceding each task block: e.g., Cr, “consonant - random position”; Rf, “rhyme - fixed position”. Prior to imaging, subjects were trained to a high level of accuracy (≥ 85%) on all tasks using different stimuli from those presented during functional imaging.

Three functional imaging scans were conducted, each focusing on a single phonological unit (C, R, T). In each 7.5 min scan, a pair of tasks (e.g., Cr & Cf) was presented in blocked format (36 s) in an alternating boxcar design with 18 s rest periods separating the task blocks. A block design paradigm was chosen to enhance statistical power of detection. There were eight task blocks in a scan, four per task (e.g., four Cr and four Cf blocks). Each block contained eight 4.5 s trials, four matching and four non-matching sequences, presented in random order. In each of the three scans, there were 32 trials for the fixed task and 32 for the random task, for a total of 64 trials. The cumulative total of trials across the three scans was 192: (matching + non-matching = 8 trials) × (4 blocks) × (2 positions) × (3 units). The order of imaging scans for phonological units (C, R, T) and task blocks (random, fixed) within each scan was counterbalanced across subjects.
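The block and trial counts above can be checked with a brief sketch. It assumes that each scan begins and ends with a rest period (nine rest periods in all) and that the random task comes first; the first assumption is not stated in the text but reproduces the reported 7.5 min scan duration, and the second is arbitrary because task order was counterbalanced. The function name `block_schedule` is hypothetical.

```python
BLOCK_S, REST_S = 36.0, 18.0   # task block and rest durations (s)
TRIAL_S = 4.5                  # trial duration (s)
TRIALS_PER_BLOCK = 8           # four matching + four non-matching
BLOCKS_PER_TASK = 4
TASKS_PER_SCAN = 2             # random and fixed target position
N_SCANS = 3                    # consonant, rhyme, tone


def block_schedule(first_task="random"):
    """Return ([(task, onset_s), ...], scan_end_s) for one scan.

    Assumes the scan opens with a rest period and that every task block
    is followed by one (an assumption, not stated in the text).
    """
    order = ("random", "fixed") if first_task == "random" else ("fixed", "random")
    schedule = []
    t = REST_S
    for b in range(BLOCKS_PER_TASK * TASKS_PER_SCAN):
        schedule.append((order[b % 2], t))
        t += BLOCK_S + REST_S
    return schedule, t


schedule, scan_end = block_schedule()
trials_per_task = TRIALS_PER_BLOCK * BLOCKS_PER_TASK
total_trials = trials_per_task * TASKS_PER_SCAN * N_SCANS

print(schedule[:2])                      # onsets of the first two task blocks
print(scan_end / 60.0, "min per scan")   # 7.5 under the stated assumption
print(trials_per_task, total_trials)     # 32 per task, 192 overall
```

Eight 4.5 s trials fill a 36 s block exactly, so no within-block padding is needed under this layout.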

The timing architecture of a trial consisted of the syllable list + probe (2300 ms, on average) and the response interval (2200 ms). Stimulus onset asynchrony within the syllable list was 500 ms. A silent interval of 350 ms was inserted between the syllable list and its probe. Each sequence of four syllables (list + probe) fell within the span for short term memory and attention [14,15].

Image acquisition

Imaging was performed on a 1.5T Signa GE LX Horizon scanner (Waukesha, WI) equipped with a birdcage transmit-receive radiofrequency head coil. Functional volumes were acquired using a blood oxygenation level dependent (BOLD) contrast-sensitive gradient-echo echo-planar imaging pulse sequence (repetition time, 2.25 s; echo time, 50 ms; flip angle, 90°; acquisition matrix, 64 × 64; field of view, 24 cm × 24 cm; sixteen contiguous axial slices, each 7.5 mm thick). Prior to functional imaging, whole-brain high-resolution anatomic images were acquired in 124 contiguous axial slices using a 3D spoiled gradient-recalled acquisition in the steady state sequence for purposes of anatomic localization and transformation to a standard stereotactic system.
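A few derived quantities follow directly from these acquisition parameters and from the scan and block durations given under Task procedure. The sketch below is simple arithmetic rather than anything reported by the authors; in particular, the number of volumes per scan assumes that acquisition spans exactly the 7.5 min scan with no discarded dummy volumes.

```python
TR_S = 2.25            # repetition time (s)
FOV_MM = 240.0         # 24 cm field of view
MATRIX = 64            # 64 x 64 acquisition matrix
N_SLICES = 16
SLICE_MM = 7.5         # contiguous slices, so no inter-slice gap
SCAN_S = 7.5 * 60.0    # scan duration from the task procedure
BLOCK_S, REST_S = 36.0, 18.0

in_plane_mm = FOV_MM / MATRIX            # in-plane voxel edge
coverage_mm = N_SLICES * SLICE_MM        # superior-inferior slab thickness
volumes_per_scan = SCAN_S / TR_S         # assumes no discarded volumes
volumes_per_block = BLOCK_S / TR_S
volumes_per_rest = REST_S / TR_S

print(f"voxel: {in_plane_mm} x {in_plane_mm} x {SLICE_MM} mm")
print(f"slab coverage: {coverage_mm} mm")
print(f"volumes: {volumes_per_scan} per scan, "
      f"{volumes_per_block} per block, {volumes_per_rest} per rest")
```

Because block and rest durations are whole multiples of the repetition time, block boundaries coincide with volume boundaries under this reading.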

Image analysis

Image analysis was conducted using the SPM5 software package (Wellcome Department of Imaging Neuroscience, University College, London, UK). For each subject, functional image volumes were corrected for slice acquisition timing differences and rigid-body realigned to the initial volume of the first functional scan. Each subject’s high-resolution anatomical images were co-registered to the mean image of all three functional scans and segmented into tissue components. During segmentation, spatial parameters were applied to transform the functional volumes into the Montreal Neurological Institute (MNI) space, and then resampled to 2 mm (isotropic) voxels and smoothed by a 6 mm full-width at half-maximum (FWHM) Gaussian kernel.

Subjects’ responses to various stimuli were modeled using SPM’s canonical hemodynamic response function and its time and dispersion derivatives to account for variations in response onsets and durations. The model also included six movement parameter regressors obtained during realignment allowing for residual movement-induced effects. The effects of serial correlations in fMRI time series were taken into account using a first order autoregressive model, while a high-pass filter with a cut-off of 1/128 Hz was applied to each voxel’s time series to remove low frequency noise.
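The way a task regressor of this kind is formed can be sketched in a few lines. This is an illustration, not SPM's implementation: it samples the response function only once per repetition time, omits the time and dispersion derivatives, the movement regressors, and the filtering, and assumes a rest-first, random-first block layout. The double-gamma shape used here (peak shape 6, undershoot shape 16, undershoot ratio 1/6) is a commonly cited parameterization of the canonical response and should be treated as an assumption.

```python
import math

TR_S = 2.25
N_VOLUMES = 200                 # 7.5 min / 2.25 s, assuming no discarded volumes
BLOCK_S = 36.0


def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def canonical_hrf(t):
    """Double-gamma response: early peak minus a scaled late undershoot."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def boxcar(onsets_s):
    """1.0 for volumes acquired inside a task block, else 0.0."""
    return [1.0 if any(on <= v * TR_S < on + BLOCK_S for on in onsets_s) else 0.0
            for v in range(N_VOLUMES)]


def convolve(signal, kernel):
    """Causal discrete convolution truncated to the signal length."""
    out = [0.0] * len(signal)
    for i, s in enumerate(signal):
        if s == 0.0:
            continue
        for j, k in enumerate(kernel):
            if i + j < len(out):
                out[i + j] += s * k
    return out


# Response kernel sampled every TR over roughly 32 s
hrf = [canonical_hrf(v * TR_S) for v in range(int(32 / TR_S) + 1)]

# Assumed onsets (s): rest first, then alternating random and fixed blocks
onsets_random = [18.0 + 108.0 * k for k in range(4)]
onsets_fixed = [72.0 + 108.0 * k for k in range(4)]

regressor_random = convolve(boxcar(onsets_random), hrf)
regressor_fixed = convolve(boxcar(onsets_fixed), hrf)
print(len(regressor_random), round(max(regressor_random), 3))
```

The two regressors would form the task columns of one scan's design matrix; the remaining columns described above would be added alongside them.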

For C, R, and T scans, respectively, summary contrast images representing average activation differences between random and fixed matching sequences were calculated across blocks (Cr>Cf, Rr>Rf, Tr>Tf). Comparisons of Cr, Rr, or Tr with rest were performed for calibration purposes. Contrasts with the rest blocks also facilitated better capture of the hemodynamic response for each task block of interest. For direct comparisons of phonological units (C vs. R; C vs. T; R vs. T), three summary contrast images representing average activation differences between phonological units ([Cr>Cf] - [Rr>Rf]; [Cr>Cf] - [Tr>Tf]; [Rr>Rf] - [Tr>Tf]) were similarly calculated across blocks within and between related functional scans.
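The single and double subtractions can be written as weight vectors over the six task conditions. The sketch below assumes one parameter estimate per condition per subject, ordered Cr, Cf, Rr, Rf, Tr, Tf; the helper names and the numeric estimates are hypothetical and serve only to show the arithmetic.

```python
CONDITIONS = ("Cr", "Cf", "Rr", "Rf", "Tr", "Tf")


def contrast(**weights):
    """Weight vector over CONDITIONS; unnamed conditions get weight 0."""
    return [weights.get(c, 0) for c in CONDITIONS]


def subtract(a, b):
    """Element-wise difference of two weight vectors."""
    return [x - y for x, y in zip(a, b)]


def apply(weights, estimates):
    """Weighted sum of per-condition estimates."""
    return sum(w * estimates[c] for w, c in zip(weights, CONDITIONS))


# Single subtractions: random > fixed within each phonological unit
c_rf = contrast(Cr=1, Cf=-1)
r_rf = contrast(Rr=1, Rf=-1)
t_rf = contrast(Tr=1, Tf=-1)

# Double subtractions: tone relative to consonant and to rhyme
t_over_c = subtract(t_rf, c_rf)   # [Tr-Tf] - [Cr-Cf]
t_over_r = subtract(t_rf, r_rf)   # [Tr-Tf] - [Rr-Rf]

# Hypothetical estimates for one voxel in one subject (invented values)
estimates = {"Cr": 1.0, "Cf": 0.5, "Rr": 1.1, "Rf": 0.6, "Tr": 1.8, "Tf": 0.7}

print(t_over_c, round(apply(t_over_c, estimates), 3))
print(t_over_r, round(apply(t_over_r, estimates), 3))
```

Each vector sums to zero, so the fixed-position baseline within a unit cancels before units are compared with one another.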

Statistical inferences for each phonological unit relative to rest were made using a Gaussian field theory derived cluster level significance (pcluster < 0.05), corrected for multiple comparisons in a search volume comprising all voxels within SPM’s gray matter template after smoothing with a 6 mm FWHM Gaussian kernel. The voxel-wise height threshold for comparisons between random and fixed conditions within phonological units was set at pvoxel < 0.001 (uncorrected), while comparisons between phonological units were conducted using pvoxel < 0.005 (uncorrected).
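For orientation, the two uncorrected voxel-wise thresholds can be converted to equivalent standard normal scores. The sketch assumes a one-tailed test and a standard normal reference distribution; it does not reproduce the cluster-level correction, which depends on the smoothness and search volume of the data.

```python
from statistics import NormalDist


def z_for_one_tailed_p(p):
    """Standard normal score whose upper-tail probability equals p."""
    return NormalDist().inv_cdf(1.0 - p)


for label, p in (("within-unit, random vs. fixed", 0.001),
                 ("between-unit", 0.005)):
    print(f"{label}: p < {p} corresponds to Z > {z_for_one_tailed_p(p):.2f}")
```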

Results

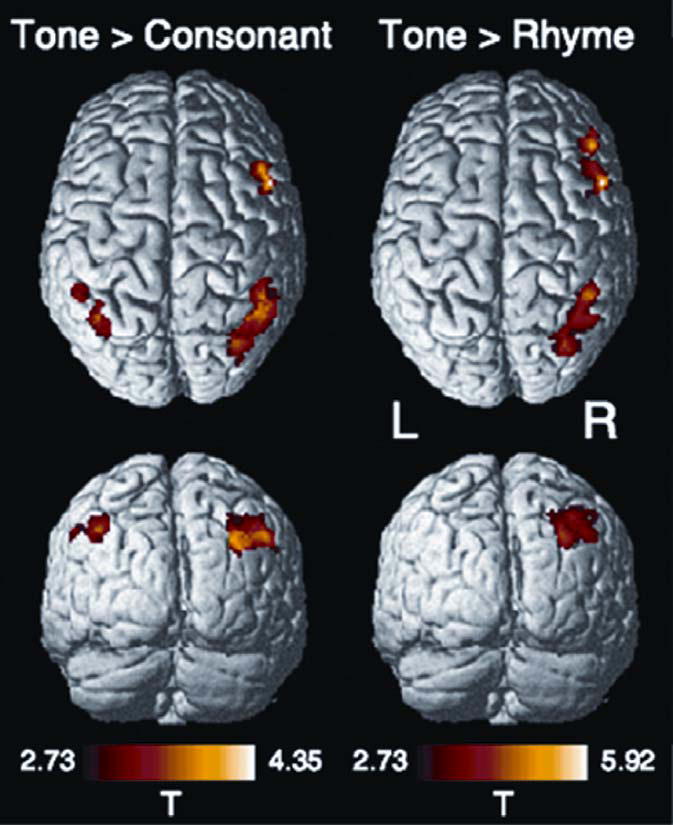

A comparison of random vs. fixed matching positions yielded numerous common areas of increased activity (Table 2), regardless of phonological unit, in frontal and parietal areas bilaterally, as well as in the anterior insula, frontal operculum, and anterior cingulate gyrus (see Figure, SDC 4, for activation maps). A direct comparison of phonological units revealed significant fronto-parietal activations predominantly in the RH for T relative to C or R (Fig. 1; see Table, SDC 5, for summary of significant clusters of activation). In the case of T vs. C, activity was centered in dorsal aspects of the inferior frontal gyrus (IFG) in the RH near the junction of the inferior frontal/precentral sulci. Activations were observed more extensively in the right inferior parietal lobule. In the case of T vs. R, there were two activation foci in the right frontal lobe, one localized predominantly in the pars opercularis, with the other centered more anteriorly in the inferior frontal sulcus. The peak focus of activation in the right inferior parietal lobule was centered dorsally near the intraparietal sulcus.

Table 2.

Summary of significant clusters of activation for single subtraction comparisons between random and fixed conditions per phonological unit

| Side | Brain region | BA | kE | MNI x (mm) | MNI y (mm) | MNI z (mm) | Z |
|---|---|---|---|---|---|---|---|
| Consonant Random [Cr] > Consonant Fixed [Cf] | | | | | | | |
| L | Inferior frontal gyrus | 44 | 1071 | −36 | 8 | 24 | 5.05 |
| L | Inferior parietal lobule | 40 | 411 | −32 | −56 | 34 | 4.93 |
| R | Anterior cingulate gyrus | 32 | 275 | 4 | 20 | 44 | 3.72 |
| R | Insula, frontal operculum | 13/47 | 267 | 34 | 30 | 6 | 4.26 |
| R | Middle frontal gyrus | 6 | 199 | 32 | −2 | 44 | 4.59 |
| R | Inferior parietal lobule | 40 | 133 | 30 | −56 | 40 | 4.07 |
| Rhyme Random [Rr] > Rhyme Fixed [Rf] | | | | | | | |
| L | Inferior frontal gyrus | 44 | 1828 | −36 | 22 | 26 | 4.77 |
| L | Inferior parietal lobule | 40 | 823 | −26 | −66 | 44 | 5.39 |
| L | Anterior cingulate gyrus | 32 | 660 | −4 | 22 | 42 | 4.69 |
| R | Insula, frontal operculum | 13/47 | 289 | 32 | 26 | −4 | 4.56 |
| M | Cerebellar vermis | | 201 | 10 | −78 | −28 | 4.14 |
| R | Inferior parietal lobule | 40 | 152 | 36 | −54 | 40 | 4.16 |
| R | Middle frontal gyrus | 6 | 98 | 28 | −4 | 38 | 3.79 |
| Tone Random [Tr] > Tone Fixed [Tf] | | | | | | | |
| L | Inferior/middle frontal gyrus | 44/9 | 2702 | −44 | 8 | 30 | 5.50 |
| L | Inferior parietal lobule | 40 | 1561 | −28 | −64 | 38 | 5.02 |
| M | Cerebellar vermis | | 236 | −2 | −78 | −30 | 4.98 |
| R | Insula, frontal operculum | 13/47 | 2233 | 30 | 30 | −4 | 5.48 |
| R | Inferior parietal lobule | 40 | 1533 | 40 | −60 | 48 | 5.45 |
| R | Middle frontal gyrus | 6 | 238 | 36 | −4 | 48 | 4.34 |
| M | Anterior cingulate gyrus | 32 | 887 | 0 | 18 | 50 | 5.17 |

Note. kE refers to cluster extent. Coordinates (x, y, z) of peak activation are expressed in millimeters in the MNI space. Z refers to peak Z-score value within a cluster. Statistical significance was inferred at the cluster level, pcluster < 0.05, after correcting for multiple comparisons within gray matter voxels in the whole brain. Voxel-wise height threshold, p = 0.001. L, left hemisphere; R, right hemisphere; M, medial; BA, Brodmann area.

Figure 1.

Rendered statistical maps showing significant differences in BOLD response of tone over consonant ([Tr-Tf] > [Cr-Cf]; left column) and tone over rhyme ([Tr-Tf] > [Rr-Rf]; right column). Only voxels found within significant clusters (p < 0.05, corrected for multiple comparisons) are shown. The color scale depicts the range of t-statistic values. Voxel-wise display threshold, p = 0.005, uncorrected; Z > 3.72. L = left; R = right.

Two-way (position × unit) mixed model ANOVAs of reaction time and response accuracy revealed that regardless of phonological unit, reaction time and % correct were larger in the random- than in the fixed-matching task (see Figure, SDC 6, for display of reaction time and % correct by unit and task). Post hoc multiple comparisons (αBonferroni = 0.05) further revealed that response accuracy for both R and T was higher than C across tasks, whereas no difference was observed between R and T.

Discussion

Using an auditory immediate recognition paradigm [16], it is demonstrated that hemispheric asymmetries arise as a function of the type of phonological unit. In direct contrasts of phonological units, T, as compared to C or R, shows increased activation in frontoparietal areas of the RH. This rightward asymmetry of a suprasegmental unit (T) whose primary acoustic correlate is voice fundamental frequency, as compared to segmental units (C, R), is congruent with the well-established role of the RH in mediating speech prosody [3,17].

Neural substrates of phonological processing

Direct contrasts between segmental and suprasegmental units ([Tr-Tf] > [Cr-Cf]; [Tr-Tf] > [Rr-Rf]) reveal rightward asymmetry of the frontoparietal network for tones, as compared to consonants and rhymes. Because the task paradigm is identical across units for random-matching as well as fixed-matching conditions, all three units (C, R, T) recruit key structures of a frontoparietal network consistent with the extant literature on verbal short-term memory. Using direct contrasts, we are able to observe neural activity specific to tonal encoding and its separate memory processes, as compared to segmental.

However, the limited temporal resolution of fMRI does not permit us to tease apart specific processes associated with verbal working memory, i.e., encoding, storage, retrieval, comparison, matching, decision making, etc. All of these processes, perceptual as well as post-perceptual, unfold rapidly within hundreds of milliseconds [18]. For instance, the rightward asymmetry of the frontoparietal network for tones, relative to consonants and rhymes, may be attributable to the well-established role of the RH in mediating pitch. This would be consistent with the view that hemispheric asymmetries arise from low-level features of sounds [19,20]. On the other hand, the observed rightward asymmetry for tones in the frontal lobe is also consistent with the view that dorsolateral prefrontal cortex carries out temporal integration of information when making stimulus comparisons in short-term memory, and that it actively organizes sequences of responses based on explicit retrieval of information from posterior cortical association systems [21]. Although we are unable to fractionate temporal stages of phonological processing in this study, these data point to a fruitful line of research using magnetoencephalography (MEG) to reveal differences in spatiotemporal dynamics associated with suprasegmental and segmental information.

Effects of task performance on brain activation patterns

It is unlikely that differences in task performance can account for the differential patterns of activation in T vs. C or R in right frontoparietal cortex. Chinese subjects’ reaction times are homogeneous irrespective of phonological unit. Reaction time is presumed to reflect decision-making processes, and appears to be positively correlated with increased activity in inferior frontal regions [22]. Yet we find no differences in reaction time among C, R, and T. Response accuracy, however, is higher for rhymes and tones than for consonants. This disparity is likely due to the relative degree of change over time in acoustic properties of rhymes and tones (slowly changing voice fundamental frequency and higher harmonics) versus onset consonants (rapidly changing bursts and formant transitions). Indeed, the perceptual trace of rapidly changing cues has been shown to decay faster in working memory [23]. But the RH advantage for T over R cannot be accounted for by differences in decay rates. Instead, our findings argue for a view of working memory that emerges from the integrated action of neural processes subserving acoustic/auditory features associated with specific types of phonological units, i.e., suprasegmental vs. segmental.

Conclusions

This study demonstrates that neural circuitry subserving phonological processing is differentially engaged depending upon whether the unit is segmental or suprasegmental. The rightward asymmetry in frontoparietal regions for tones, relative to consonants and rhymes, is consistent with the idea of differential hemispheric specialization on the basis of both attentional demands and perceptual cues.

Acknowledgments

Sources of support: NIH research grants R01 DC04584 (JTG); R01 EB03990 (TMT); NIH postdoctoral traineeship (XL)

References

1. Yip M. Tone. New York: Cambridge University Press; 2003.
2. Gandour JT, Tong Y, Wong D, Talavage T, Dzemidzic M, Xu Y, et al. Hemispheric roles in the perception of speech prosody. Neuroimage. 2004;23:344–357. doi: 10.1016/j.neuroimage.2004.06.004.
3. Wildgruber D, Ackermann H, Kreifelts B, Ethofer T. Cerebral processing of linguistic and emotional prosody: fMRI studies. Prog Brain Res. 2006;156:249–268. doi: 10.1016/S0079-6123(06)56013-3.
4. Wong PC. Hemispheric specialization of linguistic pitch patterns. Brain Res Bull. 2002;59:83–95. doi: 10.1016/s0361-9230(02)00860-2.
5. Zatorre RJ, Gandour JT. Neural specializations for speech and pitch: moving beyond the dichotomies. Philos Trans R Soc Lond B Biol Sci. 2008;363:1087–1104. doi: 10.1098/rstb.2007.2161.
6. Jancke L, Wustenberg T, Scheich H, Heinze HJ. Phonetic perception and the temporal cortex. Neuroimage. 2002;15:733–746. doi: 10.1006/nimg.2001.1027.
7. Liu L, Peng D, Ding G, Jin Z, Zhang L, Li K, et al. Dissociation in the neural basis underlying Chinese tone and vowel production. Neuroimage. 2006;29:515–523. doi: 10.1016/j.neuroimage.2005.07.046.
8. Ren GQ, Yang Y, Li X. Early cortical processing of linguistic pitch patterns as revealed by the mismatch negativity. Neuroscience. 2009;162:87–95. doi: 10.1016/j.neuroscience.2009.04.021.
9. Luo H, Ni JT, Li ZH, Li XO, Zhang DR, Zeng FG, et al. Opposite patterns of hemisphere dominance for early auditory processing of lexical tones and consonants. Proc Natl Acad Sci U S A. 2006;103:19558–19563. doi: 10.1073/pnas.0607065104.
10. Minagawa-Kawai Y, Naoi N, Kikuchi N, Yamamoto J, Nakamura K, Kojima S. Cerebral laterality for phonemic and prosodic cue decoding in children with autism. Neuroreport. 2009;20:1219–1224. doi: 10.1097/WNR.0b013e32832fa65f.
11. Li X, Gandour J, Talavage T, Wong D, Dzemidzic M, Lowe M, et al. Selective attention to lexical tones recruits left dorsal frontoparietal network. Neuroreport. 2003;14:2263–2266. doi: 10.1097/00001756-200312020-00025.
12. Li X, Wong D, Gandour J, Dzemidzic M, Tong Y, Talavage T, et al. Neural network for encoding immediate memory in phonological processing. Neuroreport. 2004;15:2459–2462. doi: 10.1097/00001756-200411150-00005.
13. Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.
14. Cowan N. The magical number 4 in short-term memory: A reconsideration of mental storage capacity. Behav Brain Sci. 2000;24:87–185. doi: 10.1017/s0140525x01003922.
15. Zhang G, Simon HA. STM capacity for Chinese words and idioms: Chunking and acoustical loop hypotheses. Mem Cognit. 1985;13:193–201. doi: 10.3758/bf03197681.
16. Sternberg S. Memory-scanning: mental processes revealed by reaction-time experiments. Am Sci. 1969;57:421–457.
17. Friederici AD, Alter K. Lateralization of auditory language functions: a dynamic dual pathway model. Brain Lang. 2004;89:267–276. doi: 10.1016/S0093-934X(03)00351-1.
18. Halgren E, Boujon C, Clarke J, Wang C, Chauvel P. Rapid distributed fronto-parieto-occipital processing stages during working memory in humans. Cereb Cortex. 2002;12:710–728. doi: 10.1093/cercor/12.7.710.
19. Poeppel D. The analysis of speech in different temporal integration windows: Cerebral lateralization as ‘asymmetric sampling in time’. Speech Communication. 2003;41:245–255.
20. Zatorre RJ, Belin P. Spectral and temporal processing in human auditory cortex. Cereb Cortex. 2001;11:946–953. doi: 10.1093/cercor/11.10.946.
21. Goldman-Rakic PS. The prefrontal landscape: implications of functional architecture for understanding human mentation and the central executive. In: Roberts A, Robbins T, Weiskrantz L, editors. The prefrontal cortex: Executive and cognitive functions. New York: Oxford University Press; 1998. pp. 88–102.
22. Binder JR, Liebenthal E, Possing ET, Medler DA, Ward BD. Neural correlates of sensory and decision processes in auditory object identification. Nat Neurosci. 2004;7:295–301. doi: 10.1038/nn1198.
23. Mirman D, Holt LL, McClelland JL. Categorization and discrimination of nonspeech sounds: differences between steady-state and rapidly-changing acoustic cues. J Acoust Soc Am. 2004;116:1198–1207. doi: 10.1121/1.1766020.
