Abstract

Sensory information can interact to impact perception and behavior. Foods are appreciated according to their appearance, smell, taste and texture. Athletes and dancers combine visual, auditory, and somatosensory information to coordinate their movements. Under laboratory settings, detection and discrimination are likewise facilitated by multisensory signals. Research over the past several decades has shown that the requisite anatomy exists to support interactions between sensory systems in regions canonically designated as exclusively unisensory in their function and, more recently, that neural response interactions occur within these same regions, including even primary cortices and thalamic nuclei, at early post-stimulus latencies. Here, we review evidence concerning direct links between early, low-level neural response interactions and behavioral measures of multisensory integration.

Keywords: multisensory, behavior, brain imaging, neurophysiology, crossmodal

Introduction

There are myriad everyday situations where information from the different senses provides either redundant or complementary information to facilitate perception and behavior. One example of such multisensory interactions is speech perception in noisy environments, where facial information improves comprehension (e.g., Sumby and Pollack, 1954). Other examples include the detection and localization of stimuli either in naturalistic or laboratory settings, where performance is often facilitated by multisensory stimuli (reviewed in Stein and Meredith, 1993; see also Murray et al., 2005; Zampini et al., 2007; Tajadura-Jimenez et al., 2009 for examples using auditory-somatosensory stimuli in humans). The facilitation of reaction times to multisensory stimuli is one instantiation of a redundant signals effect (Raab, 1962). On the one hand, this effect could be explained by truly independent processing of each sensory modality, such that the faster of the two mediates response execution (typically, a button-press or eye movement) on any given trial. When there are two sources of information, performance is facilitated because the probability of either of the two sources leading to a fast response is higher than either source alone – a purely statistical phenomenon referred to as probability summation. Under this framework, no neural response interactions are required. However, notable examples exist for neural response interactions, even when probability summation fully accounts for behavioral gains (e.g., Murray et al., 2001; Sperdin et al., 2009). On the other hand, the facilitation can exceed expectations based on probability summation (Miller, 1982), in which case neural response interactions, at some stage prior to response initiation, need to be invoked. One corollary of this facilitation is the importance of identifying those neural response interactions that are (causally) linked to behavioral improvements.
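The test of probability summation described above can be sketched in code. Miller's (1982) inequality states that, if the two modalities are processed independently, the cumulative probability of a response by time t in the multisensory condition cannot exceed the sum of the two unisensory cumulative probabilities. The following is a minimal illustration under stated assumptions: the function names and the reaction times are hypothetical, and the inequality is evaluated at fixed time points rather than at the reaction time quantiles often used in practice.

```python
from bisect import bisect_right
from fractions import Fraction


def ecdf(sorted_rts, t):
    """Empirical probability that a reaction time is <= t (exact fraction)."""
    return Fraction(bisect_right(sorted_rts, t), len(sorted_rts))


def race_model_violations(rt_a, rt_b, rt_multi, time_points):
    """Return the time points where the multisensory CDF exceeds the
    probability-summation bound: P(RT<=t | A) + P(RT<=t | B), capped at 1."""
    a, b, m = sorted(rt_a), sorted(rt_b), sorted(rt_multi)
    violations = []
    for t in time_points:
        bound = min(Fraction(1), ecdf(a, t) + ecdf(b, t))
        if ecdf(m, t) > bound:
            violations.append(t)
    return violations


# Hypothetical reaction times in ms (illustrative only, not data from any study)
rt_auditory = [310, 325, 340, 355, 370, 390]
rt_somato = [315, 330, 345, 360, 380, 400]
rt_multi = [250, 260, 275, 300, 320, 345]

violated_at = race_model_violations(
    rt_auditory, rt_somato, rt_multi, range(240, 401, 20)
)
print(violated_at)
```

Time points returned by `race_model_violations` are those at which the facilitation exceeds what probability summation allows, so that neural response interactions need to be invoked. An empty list means that the behavioral gain is compatible with independent processing.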

A Paradigm Shift for Models of Multisensory Processing

Research over the past decade or so has led to a significant paradigm shift in the manner in which neuroscientists conceive of the neural underpinnings of multisensory interactions on the one hand, and more generally the organization of the various sensory systems on the other hand. The traditional view held that the sensory systems were largely segregated at low levels and early latencies of processing (e.g., Jones and Powell, 1970), with interactions and integration only occurring within higher-order brain regions, and at relatively late stages of processing. Consequently, any multisensory effects observed within low-level brain regions were presumed to be the product of feedback modulations from such higher-order structures. Instead, low-level and early multisensory effects have now been documented using anatomic, physiological, and brain imaging methods (reviewed in Wallace et al., 2004; Schroeder and Foxe, 2005; Ghazanfar and Schroeder, 2006; Kayser and Logothetis, 2007; Senkowski et al., 2008; Stein and Stanford, 2008). This new framework has in turn spurred interest in determining the precise circumstances when multisensory interactions and their behavioral consequences will and will not occur.

The Case of Auditory-Somatosensory Interactions

The case of auditory-somatosensory neural response interactions, wherein the response to the multisensory stimulus does not equal the summed responses from the constituent unisensory conditions, is illustrative of this abovementioned paradigm shift. Humans and non-human primates exhibit non-linear neural response interactions within the initial post-stimulus processing stages (Foxe et al., 2000; Schroeder et al., 2001; Murray et al., 2005; Lakatos et al., 2007). These interactions manifest at early latencies within secondary (also termed belt) regions of auditory cortex adjacent to primary (also termed core) auditory cortices (Schroeder et al., 2001, 2003; Fu et al., 2003; Kayser et al., 2005; Murray et al., 2005; Gonzalez Andino et al., 2005a; see also Cappe and Barone, 2005; Hackett et al., 2007a,b; Smiley et al., 2007; Cappe et al., 2009; for corresponding anatomic data). These early and low-level effects are seen despite paradigmatic variations in terms of passive stimulus presentation versus performance of a simple stimulus detection task (in the case of studies in humans) or even the use of anesthetics (in the case of studies in non-human primates).

The robustness of auditory-somatosensory interactions is also supported, albeit indirectly, by the cumulative psychophysical findings in humans. Facilitative effects on reaction time speed have now been observed not only when the stimuli are presented to the same location in space, but also when the stimuli are spatially misaligned. This is the case for left-right (Murray et al., 2005), front-back (Zampini et al., 2007), as well as near-far (Tajadura-Jimenez et al., 2009), spatial disparities (see also Gillmeister and Eimer, 2007; Yau et al., 2009). Such findings have been used to generate hypotheses concerning the spatial representation of auditory and somatosensory information within regions, and at latencies when the initial response interactions are observed. The rationale is predicated on the so-called “spatial rule” of multisensory interactions, which stipulates that the receptive field organization of a neuron (or neural population) is a determining feature of multisensory interactions and their quality (Stein and Meredith, 1993). Based on this principle and the above findings, it has been hypothesized that the initial auditory-somatosensory neural response interactions are occurring within brain regions whose neuronal population consists of large (potentially 360°) auditory spatial representations and unilateral somatosensory (i.e., hand) representations. Some support for this hypothesis is found in electrophysiological studies in humans (Murray et al., 2005) and monkeys (Fu et al., 2003) that varied the spatial position of the stimuli. For example, Murray et al. (2005) performed source estimations of neural response interactions and showed effects within the left caudal auditory cortices when the somatosensory stimulus was to the right hand, irrespective of whether the sound was within the left or right hemispace (and vice versa).

These kinds of results suggest that spatial information is not a determining factor in whether facilitative effects at a population level will be observed (though see Lakatos et al., 2007 for data concerning oscillatory activity within primary auditory cortex in response to contralateral and ipsilateral somatosensory input). We would hasten to note, however, that effects would not be expected to circumvent constraints enforced by a given neuron's receptive field properties or spatial tuning (e.g., Murray and Spierer, 2009, for a discussion of such issues). To address this more directly, we recently introduced a new paradigm combining a stimulus detection task, like that described above, with intermittent probes about the spatial location of stimuli on the preceding trial. In this way, we could assess whether the task-relevance of spatial information is sufficient for limiting facilitative effects to spatially aligned conditions (Sperdin et al., 2010). This was not the case. Rather, performance on stimulus detection was facilitated to an equal extent – both when stimuli were spatially aligned and misaligned. Still, other findings indicate that the particular body surface stimulated (Tajadura-Jimenez et al., 2009; see also Fu et al., 2003), and acoustic features of the sounds (Yau et al., 2009; see also commentary by Foxe, 2009) may play determinant roles in the pattern of behavioral (and neurophysiological) effects one observes.

More generally, and in part because of the large consistency in the above effects, auditory-somatosensory interactions represent a situation in which one might reasonably conclude that early effects within low-level cortices are relatively automatic, and unaffected by cognitive factors (Kayser et al., 2005). By extension, one hypothesis is that these interactions are not causally linked to behavioral outcome, as they appear to be robust – irrespective of whether or not anesthetics are used, and irrespective of variations in task demands. Closer inspection of some of the details of the extant studies, however, reveals several challenges with using the majority of the above studies to generate hypotheses regarding links between early and low-level neural response interactions and behavior. In the case of studies in monkeys, none included the performance of a task. However, electrophysiological recordings in Schroeder et al. (2001) and Lakatos et al. (2007), and hemodynamic imaging (Kayser et al., 2005), have been performed in awake and fixating animals. It would not be surprising if studies in the coming years begin introducing behavioral tasks into recording setups in animals (e.g., Komura et al., 2005; Hirokawa et al., 2008 for such types of studies in rats). In the case of studies of auditory-somatosensory interactions in humans, only the study by Murray et al. (2005) included a behavioral task – in particular a simple detection paradigm. Moreover, this study introduced some advances in electrical neuroimaging analyses of scalp-recorded electroencephalographic data and event-related potentials (Michel et al., 2004, 2009; Murray et al., 2008, 2009) to the domain of multisensory research.
These analyses, along with application of source estimations, have the promise of facilitating the comparison of findings from humans with those from animal models (e.g., Gonzalez Andino et al., 2005a), as well as those from EEG (or MEG) with those from fMRI (e.g., compare localization in Foxe et al., 2002 with that in Murray et al., 2005). Of particular benefit is that these kinds of analyses circumvent two major statistical pitfalls of traditional voltage waveform analyses by using reference-independent measurements and by taking advantage of the added information from multi-channel recordings. In addition, and of high relevance for the multisensory researcher, these kinds of analyses permit the differentiation of two major families of neurophysiological mechanisms of multisensory interactions depicted in Figure 1. On the one hand, electrical neuroimaging analyses can differentiate whether an effect at a given latency follows from modulations in response strength vs response topography. The former would be consistent with a gain modulation, whereas the latter would necessarily follow from changes in the underlying configuration of intracranial sources according to Helmholtz's principles (Lehmann, 1987). That is, these analyses can statistically determine if and when there are generator configurations uniquely active under multisensory conditions. On the other hand, electrical neuroimaging analyses – in large part because they are reference-independent – also allow for the differentiation of supra-additive and sub-additive interactions. This is particularly useful because the directionality of effects observed at individual voltage waveforms will vary with the choice of the reference (as will the presence and latency of statistical effects).

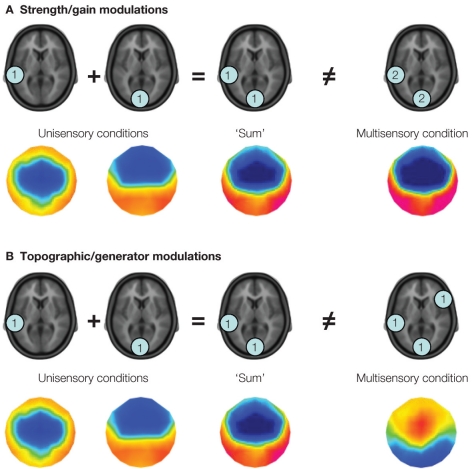

Figure 1.

Two potential varieties of multisensory interactions assessable by applying a linear model to the analysis of event-related potentials. The linear model involves comparing the summed responses from unisensory conditions with the response to the multisensory stimulus. In this figure, the level of activity (arbitrary units) within fictive brain regions is illustrated within the blue discs. In panel (A) modulations in response strength are illustrated, wherein the same set of brain regions is observed in response to the summed unisensory and multisensory conditions, albeit with greater magnitude in the latter case. This is illustrative of a supra-additive gain modulation. The colored topographic maps illustrate what one might observe in the ERP data. In panel (B) modulations in the configuration of brain regions active under multisensory stimulus conditions are illustrated, such that brain regions otherwise inactive under unisensory conditions are observed. In terms of event-related potential analyses, this latter mechanism would manifest as a modulation in the topography of the electric field at the scalp, which is illustrated in the voltage maps below. It should be noted that these two mechanisms, i.e., gain and generator modulations, can co-occur.
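The distinction between strength and topography modulations can be illustrated with two reference-independent measures commonly used in electrical neuroimaging: global field power (the spatial standard deviation of the average-referenced map) and global dissimilarity (the root mean square difference between two strength-normalized maps). The sketch below assumes a single time point and four fictive electrodes; all voltage values and function names are hypothetical, and the statistical testing across subjects that an actual analysis requires is omitted.

```python
import math


def average_reference(voltages):
    """Re-reference a scalp map to the average of all electrodes."""
    mean = sum(voltages) / len(voltages)
    return [v - mean for v in voltages]


def global_field_power(voltages):
    """Spatial standard deviation of the map: an index of response strength."""
    ref = average_reference(voltages)
    return math.sqrt(sum(v * v for v in ref) / len(ref))


def global_dissimilarity(map_u, map_v):
    """Difference between two maps after scaling each to unit strength:
    0 for identical topographies, 2 for inverted topographies."""
    gfp_u, gfp_v = global_field_power(map_u), global_field_power(map_v)
    u, v = average_reference(map_u), average_reference(map_v)
    return math.sqrt(
        sum((a / gfp_u - b / gfp_v) ** 2 for a, b in zip(u, v)) / len(u)
    )


# Hypothetical voltage maps (microvolts) at one latency
auditory = [1.0, -0.5, 0.2, -0.7]
somatosensory = [0.5, -0.2, 0.1, -0.4]
summed = [a + s for a, s in zip(auditory, somatosensory)]

pair_gain = [1.5 * v for v in summed]      # panel A: same topography, stronger
pair_generators = [0.3, 1.2, -1.0, -0.5]   # panel B: different topography

print(global_field_power(pair_gain) / global_field_power(summed))
print(global_dissimilarity(pair_gain, summed))
print(global_dissimilarity(pair_generators, summed))
```

In this toy case the gain-modulated response differs from the summed unisensory responses in strength only (dissimilarity near zero), whereas the second response differs in topography, which would imply a change in the configuration of underlying generators.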

Early, Low-Level Auditory-Somatosensory Interactions are Linked to Fast Stimulus Detection

In Sperdin et al. (2009) we showed that early non-linear neural response interactions within low-level auditory cortices influence subsequent reaction time speed. To reach this conclusion, we sorted ERPs to auditory, somatosensory, and combined auditory-somatosensory multisensory stimuli according to a median split of reaction times during a detection task with these stimuli (see Figure 2). In this way we could separate both behavioral and electrophysiological responses according to whether the reaction time on a given trial was relatively fast or slow. At a behavioral level, only trials leading to fast reaction times produced facilitation in excess of predictions based on probability summation (and, therefore, necessitating invocation of neural response interactions). By contrast, the facilitation in the case of multisensory trials leading to slow reaction times was fully explained by probability summation.

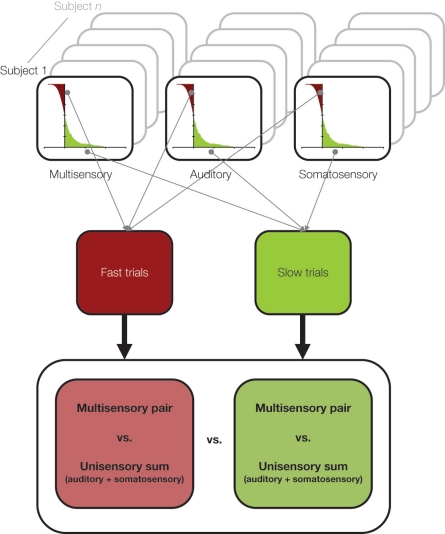

Figure 2.

A schematic of the median split analysis approach applied in Sperdin et al. (2009). For each subject and stimulus condition, trials were sorted according to RT speed. Those with RTs faster than the median were considered “fast” and those slower than the median were considered “slow”. Event-related potentials were likewise separately averaged according to RT speed, and compared using the linear model schematized in Figure 1. Data were analyzed using a multi-factorial within-subjects design.
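The median split schematized above can be sketched as follows for one subject and one stimulus condition. This is an illustration only: the trial structure (a reaction time paired with a short list of voltage samples), the function names, and the choice to discard trials falling exactly on the median are assumptions made here, not details taken from the original analysis.

```python
from statistics import median


def median_split(trials):
    """Split (rt_ms, epoch) trials into fast and slow halves.
    Assumption: trials with an RT exactly equal to the median are dropped."""
    med = median(rt for rt, _ in trials)
    fast = [trial for trial in trials if trial[0] < med]
    slow = [trial for trial in trials if trial[0] > med]
    return fast, slow


def average_epochs(trials):
    """Average the epochs sample by sample to obtain an ERP."""
    epochs = [epoch for _, epoch in trials]
    return [sum(samples) / len(epochs) for samples in zip(*epochs)]


# Hypothetical trials: (reaction time in ms, voltage samples in microvolts)
trials = [
    (300, [1.0, 2.0]),
    (250, [3.0, 4.0]),
    (400, [0.0, 0.0]),
    (350, [2.0, 2.0]),
]

fast_trials, slow_trials = median_split(trials)
erp_fast = average_epochs(fast_trials)
erp_slow = average_epochs(slow_trials)
print(erp_fast, erp_slow)
```

The resulting fast and slow ERPs for each condition would then enter the comparison between the multisensory response and the summed unisensory responses.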

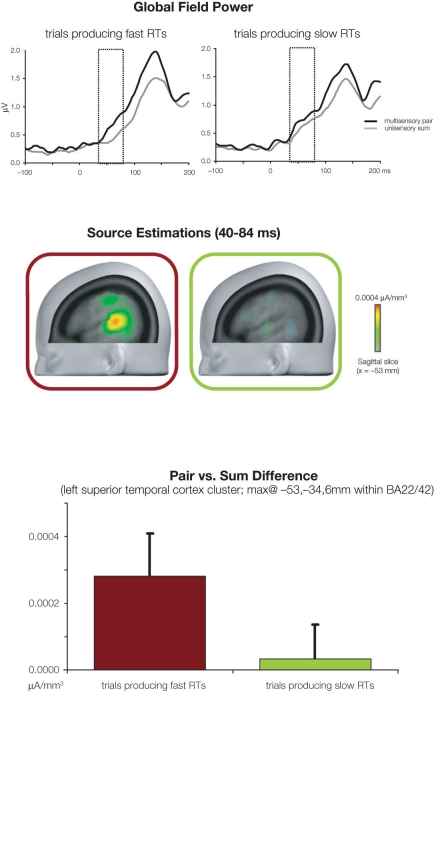

At a neurophysiological level, we observed non-linear neural response interactions over two time periods within the initial 200 ms post-stimulus onset (Figure 3). Over the 40–84 ms post-stimulus period, supra-additive modulations in response strength were observed when reaction times were ultimately fast (and by extension when an explanation of the reaction time facilitation based on probability summation did not suffice). No non-linear interactions were observed over this time period when reaction times were ultimately slow (and by extension when probability summation fully accounted for the reaction time facilitation). These early non-linear interactions, in the case of trials leading to fast reaction times, were moreover localized to posterior regions of the superior temporal cortex that have been repeatedly documented as an auditory-somatosensory convergence and integration zone (Foxe et al., 2000, 2002; Schroeder et al., 2001, 2003; Fu et al., 2003; Cappe and Barone, 2005; Gonzalez Andino et al., 2005a; Kayser et al., 2005; Murray et al., 2005; Hackett et al., 2007a,b; Smiley et al., 2007; Cappe et al., 2009). Over the 86–128 ms post-stimulus period, supra-additive modulations in response strength were observed independent of the ultimate speed of reaction times (and of whether probability summation accounted for the reaction time facilitation). That is, the presence or absence of violation of probability summation at a behavioral level was not a determining factor in whether non-linear neural response interactions were observed. Rather, early non-linear interactions were limited to trials leading to fast reaction times and also to violation of probability summation. Whether both of these psychophysical features (i.e., reaction time speed and violation of probability summation) are the outcome of early non-linear interactions awaits further investigation.

Figure 3.

Evidence for the impact of early non-linear and supra-additive neural response interactions on RT speed. The top panels illustrate global field power waveforms in response to multisensory stimulus pairs, and the summed unisensory responses for trials producing fast and slow RTs (left and right panels, respectively). While non-linear neural response interactions began at 40 ms post-stimulus for trials producing fast RTs, such was only the case from 86 ms onwards for trials producing slow RTs. The middle portion illustrates the difference in source estimations over the 40–84 ms post-stimulus period between responses to multisensory stimulus pairs and summed unisensory responses for trials producing fast and slow RTs (red and green framed images, respectively). The sagittal slice is shown at x = −53 mm using the Talairach and Tournoux (1988) coordinate system. The bottom panel illustrates the mean scalar value of differential activity within a cluster of 25 solution points within the left superior temporal cortex. There were significantly greater non-linear multisensory neural response interactions in the case of trials producing fast RTs.

Another important finding regarding the underlying mechanism of auditory-somatosensory interactions is that there was no evidence in our analyses for modulations in response topography and, therefore, no evidence that auditory-somatosensory multisensory interactions recruit distinct configurations of brain regions. Rather, our results are consistent with a mechanism based on changes in the gain of responses within brain regions already active under unisensory conditions (see also Gonzalez Andino et al., 2005a; Murray et al., 2005). The specific pattern leading to early supra-additive non-linear interactions in the case of fast trials is also noteworthy (cf. Figures 2 and 3 in Sperdin et al., 2009). Responses to the multisensory condition did not significantly differ as a function of reaction time speed. Rather, responses to unisensory stimuli were significantly weaker when reaction times were ultimately fast. At a mechanistic level, this pattern would suggest that changes in unisensory processing may be at the root of whether performance is fast or slow.

It is likewise of note that it was the earlier period of non-linear interactions that modulated with later reaction time speed (i.e., that at 40–84 ms), as opposed to the later period that did not exhibit such a modulation (i.e., that at 86–128 ms). This pattern suggests that interactions that are behaviorally relevant during the time course of post-stimulus brain responses may be dissociable from those that are not. The electrophysiological effect at 40–84 ms appears to be linked to the relative (i.e., faster vs slower for a given participant) rather than absolute reaction time. In other words, electrophysiological effects were consistently observed over the 40–84 ms period despite the fact that what was labeled as fast reaction times varied across participants (i.e., individual means for “fast” multisensory trials ranged from 218–521 ms; mean ± sem = 309 ± 34 ms). Further research applying single-trial analysis methods (e.g., De Lucia et al., 2007, 2010; Murray et al., 2009) will be required to shed further light on this aspect of our results.

Toward Identifying Mechanisms for Behaviorally-Relevant Multisensory Interactions

Advances in animal recordings

While Sperdin et al. (2009) showed that early non-linear neural response interactions measured in ERPs from humans differ as a function of reaction time speed, they do not in and of themselves identify the underlying mechanism. In many regards, it can be argued that the converse situation (i.e., exquisite mechanistic information in the absence of links to performance or perception) exists in the overwhelming majority of studies of multisensory processing in animals, because often no task is required or recordings are conducted in anesthetized preparations. Such being said, some data do exist. For example, one of the earliest (to our knowledge) multisensory neurophysiological experiments in awake and behaving monkeys suggested that sensory-related responses within pre-central neurons were not related (at least in some obvious way) to reaction time (Lamarre et al., 1983). By contrast, the latency of movement-related activity corresponded to the animal's reaction times. However, a more detailed analysis of responses from a subset of six neurons recorded by Lamarre et al. (1983) indicated that the facilitation of reaction times following multisensory (auditory-visual) stimulation was not due to the speeding up of activity within motor cortices (Miller et al., 2001). Any facilitation of neural responses – they contended – was likely occurring at early processing stages that were not recorded in their study. In agreement with this prediction, Wang et al. (2008) indeed observed facilitation of neural response latencies within macaque primary visual cortex in response to multisensory vs visual stimuli. This effect was task-dependent, such that facilitation was only observed during an active discrimination task requiring saccadic eye movements and was absent when the monkey was passively viewing.
Similarly, recordings from the superior colliculus of the anesthetized cat show there to be an initial response enhancement expressed as shortened response latencies and stronger response magnitude under multisensory conditions (Rowland and Stein, 2007, 2008; Rowland et al., 2007). However, any direct link to behavior is obfuscated by the use of an anesthetized preparation. More germane, this sampling of studies highlights the added information provided by the analysis of dynamic information within firing rates, and the potential ability to link neural activity (whether firing rates, post-synaptic potentials, or other varieties) to performance/perception when experiments are conducted in awake and behaving preparations.

Transcranial magnetic stimulation (TMS) as a tool for identifying behaviorally-relevant multisensory interactions

Most recently, our own group (in collaboration with that of Gregor Thut and Vincenzo Romei) has focused on using single-pulse transcranial magnetic stimulation (TMS) in combination with psychophysics to identify causal links between neurophysiological and behavioral metrics of multisensory interactions. In one study, we showed that single-pulse TMS applied to the occipital pole affects simple reaction times to visual and auditory stimuli in equally large but opposite ways (Romei et al., 2007). That is, TMS significantly slowed reaction times to visual stimuli and significantly facilitated reaction times to auditory stimuli (there were no reliable effects on reaction times to multisensory stimuli). Moreover, these effects were temporally delimited, occurring over the 60–90 ms period, and the beneficial interaction effect of combined unisensory auditory and TMS-induced visual cortex stimulation matched and was correlated with the RT-facilitation after external multisensory AV stimulation without TMS. This pattern suggests that multisensory interactions occur between the stimulus-evoked auditory and TMS-induced visual cortex activities. Further evidence for such was provided by a follow-up experiment showing that auditory input enhances excitability within the visual cortex itself (using phosphene-induction via TMS as a measure) over a similarly early time period (75–120 ms) (see also Ramos-Estebanez et al., 2007).

We recently extended this latter finding by showing that auditory-driven changes in visual excitability depend on the quality of the sound, such that structured sounds (as opposed to white noise versions) signaling approach/looming resulted in the highest excitability enhancement, and, furthermore, occurred at pre-perceptual levels (i.e., at latencies and stimulus durations too short for reliable psychophysical discrimination of the sound types) (Romei et al., 2009). The collective findings provide indications of the behavioral relevance of early auditory inputs into low-level visual cortices. Identifying the precise neurophysiological mechanism will undoubtedly benefit from simultaneous EEG-TMS acquisitions currently underway in our laboratories (for recent methodological reviews see, e.g., Ilmoniemi and Kičić, 2010; Miniussi and Thut, 2010; Thut and Pascual-Leone, 2010). This kind of approach would likewise prove informative in fully unraveling the spatio-temporal dynamics of the behavioral relevance of specific auditory-somatosensory interactions.

Attention and arousal

Variations in multisensory interactions that in turn impact reaction time might simply follow from fluctuations in participants’ level of attention, such that fast trials were the result of high levels of attention and vice versa. Both spatial attention (Talsma and Woldorff, 2005) and selective attention (Talsma et al., 2007) can indeed modulate auditory-visual multisensory integration. In these studies, attention resulted in larger and/or supra-additive effects within the initial 200 ms post-stimulus presentation. In Sperdin et al. (2009), however, subjects were instructed to attend to both sensory modalities (i.e., audition and touch) and received no instructions regarding the spatial position of the stimuli. Rather, they performed a simple detection task irrespective of the spatial position of the stimuli (see also Sperdin et al., 2010, for an examination of when spatial attention was engaged). Therefore, it is unlikely that our participants were modulating their spatial or selective attention in a systematic manner – though we cannot unequivocally rule such out. Nonetheless, that our behavioral results show a redundant signals effect would not be predicted if the participants had selectively attended (systematically) to one or the other sensory modality. In terms of spatial attention, all eight stimulus conditions (i.e., four unisensory and four multisensory; see Murray et al., 2005 for details) were equally probable within a block of trials, and the fact that all spatial combinations resulted in multisensory facilitation of RTs (detailed in Murray et al., 2005) would suggest that participants indeed attended to both left and right hemispaces simultaneously. Finally, examination of the distribution of trials producing fast and slow RTs showed there to be an even distribution throughout the duration of the experiment. This would argue against a systematic effect of attention, arousal or fatigue.

Neural oscillations

Another possible mechanism is based on studies implicating a role of oscillatory activity in multisensory interactions. Lakatos et al. (2007) demonstrated that somatosensory inputs into supragranular layers of primary auditory cortices can serve to reset the phase of ongoing oscillatory activity that in turn modulates the responsiveness to auditory stimuli across the cortical layers. The phase of the reset oscillations was linked to whether the auditory (and by extension multisensory) response was enhanced or suppressed (see also Lakatos et al., 2008, for evidence of the role of delta phase in reaction times to visual stimuli, and more recently Lakatos et al., 2009 for evidence for the role of attention in these reset phenomena). Response amplification occurred under multisensory conditions when somatosensory inputs into primary auditory cortex led to an optimal phase of ongoing theta (∼7 Hz) and gamma (∼35 Hz) band activity. It will be important to determine if and how these kinds of effects might manifest elsewhere (e.g., in regions of the superior temporal cortex where auditory-somatosensory interactions were observed in Sperdin et al., 2009) and might impact task performance. Nonetheless, that responses to multisensory stimuli were amplified could provide not only a mechanism for enhanced attention and perception (cf. Lakatos et al., 2007, 2008, 2009; Schroeder et al., 2008; Schroeder and Lakatos, 2009), but also faster performance with such stimuli (i.e., because higher amplitude and steeper sloping responses will meet thresholds earlier; cf. Martuzzi et al., 2007, for an example of a study examining response dynamics in humans during an auditory-visual detection task). In the case of the present study, trials producing fast reaction times may be those where ongoing oscillations (in primary auditory cortex or elsewhere) are reset such that their phase is optimal for response enhancement.
Along these lines, the impact of pre-stimulus oscillatory activity on stimulus-related responses has been demonstrated in humans, such that pre-stimulus alpha activity can predict the accuracy of perception (Romei et al., 2008; see also Silvanto and Pascual-Leone, 2008; Busch et al., 2009; Mathewson et al., 2009; Britz and Michel, 2010). Such being said, no pre-stimulus effects were observed in our analyses, even when pre-stimulus baseline correction was not performed. However, we – of course – cannot entirely exclude the possibility of pre-stimulus effects that are not phase-locked to stimulus onset. It may be possible to resolve the contributions of specific frequencies within specific brain regions to multisensory interactions and behavior by applying single-trial time-frequency analyses subsequent to source estimations (e.g., Gonzalez Andino et al., 2005a,b; see also Van Zaen et al., 2010 for methods for adaptive frequency tracking).

A role for post-stimulus oscillatory activity has also been advocated as a mechanism. Oscillatory activity within the beta frequency range (13–30 Hz) has been proposed to contribute to reaction time speed. After applying a Morlet wavelet transform to EEG data acquired during the completion of an auditory-visual simple detection task, Senkowski et al. (2006) observed a negative correlation between evoked beta power over the 50–170 ms time period and reaction time speed (when measured collectively across unisensory and multisensory conditions). Greater beta power was thus linked to faster reaction times, independent of stimulus condition, though separate analyses in this study also indicate that beta power was significantly stronger for multisensory conditions. Consequently, it is challenging to resolve whether any correlation was driven by the variability across stimulus conditions (i.e., data from the same participant from the different stimulus conditions were treated as independent measurements in the correlation analysis). Others have obtained the opposite result. Pogosyan et al. (2009) observed that voluntary reaction times were slowed after the entrainment of motor cortical activity at 20 Hz using transcranial alternating-current stimulation. Clearly, additional research is required to resolve these discrepant findings and to draw closer links between alterations in oscillatory activity and multisensory interactions specifically, as opposed to general influences on reaction time speed irrespective of stimulation conditions.
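For readers unfamiliar with the wavelet approach mentioned above, the following sketch shows how power at a single frequency can be estimated by convolving a signal with a complex Morlet wavelet. It is a generic illustration and not the pipeline of any study cited here: the function name, the seven-cycle wavelet width, the three-standard-deviation truncation, the amplitude normalization, and the synthetic 20 Hz test signal are all assumptions chosen for this example.

```python
import cmath
import math


def morlet_power(signal, sfreq, freq, n_cycles=7):
    """Time course of power at `freq` (Hz) from a complex Morlet wavelet.
    Assumptions: Gaussian width of n_cycles / (2*pi*freq) seconds, wavelet
    truncated at +/- 3 standard deviations, amplitude-normalized."""
    sigma = n_cycles / (2 * math.pi * freq)
    half = int(3 * sigma * sfreq)
    wavelet = []
    for k in range(-half, half + 1):
        t = k / sfreq
        gauss = math.exp(-(t ** 2) / (2 * sigma ** 2))
        wavelet.append(cmath.exp(2j * math.pi * freq * t) * gauss)
    norm = sum(abs(w) for w in wavelet)

    power = []
    for n in range(len(signal)):
        acc = 0j
        for j, w in enumerate(wavelet):
            idx = n + j - half
            if 0 <= idx < len(signal):  # samples beyond the edges count as zero
                acc += signal[idx] * w.conjugate()
        power.append(abs(acc / norm) ** 2)
    return power


# Synthetic test signal: 1 s of a unit-amplitude 20 Hz (beta band) sinusoid
sfreq = 500
signal = [math.sin(2 * math.pi * 20 * n / sfreq) for n in range(sfreq)]

beta_power = morlet_power(signal, sfreq, freq=20)
alpha_power = morlet_power(signal, sfreq, freq=10)
mid = len(signal) // 2
print(beta_power[mid], alpha_power[mid])
```

With this normalization, a unit-amplitude sinusoid at the wavelet's center frequency yields a power of about 0.25 away from the edges of the signal. Power averaged over a post-stimulus window could then be related to reaction times, keeping in mind the caveat raised above about pooling conditions within participants.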

While we did not specifically examine oscillatory activity in our work (though this is the focus of ongoing analyses), it may be the case that modulations in synchronous neural activity are in turn driving the differences we observed in terms of response strength. That is, stronger responses may be the consequence of more synchronous activity, whereas weaker responses may be the consequence of less synchronous activity. One possibility, then, is that enhanced synchrony leads to faster processing (e.g., by meeting a threshold level more quickly) either in the brain region(s) exhibiting enhanced synchrony or in a downstream target region. This variety of mechanism would also be consistent with our observation of weaker responses to multisensory vs unisensory conditions on trials when reaction times were fast. If a mechanism based on fluctuations in neural synchrony is indeed at the root of whether reaction times are ultimately fast, then an immediately ensuing question is what causes or mediates such fluctuations. Another possibility, which is not exclusive of the above, is that distinct anatomic pathways are involved in trials producing faster vs slower reaction times. There is now evidence, at least in non-human primates, for both cortico-cortical (Cappe and Barone, 2005; Hackett et al., 2007a; Smiley et al., 2007) and cortico-thalamo-cortical (Hackett et al., 2007b; Cappe et al., 2009) pathways whereby auditory and somatosensory information can converge and interact. Whether there is any variation in the routing of responses that would in turn result in faster or slower reaction times is unknown in the case of auditory-somatosensory interactions, but there is evidence suggesting that such variation occurs during a simple visual detection task (Saron et al., 2003; Martuzzi et al., 2006). While we cannot unequivocally rule out this latter possibility as a contributing mechanism, our analyses (both of the surface-recorded ERPs and source estimations thereof) would suggest that our effects derive from modulations in the strength of activity of a common network of brain regions (though the sensitivity of ERPs to activity in sub-cortical structures is limited).

Outlook

There is a growing body of evidence highlighting the behavioral and perceptual relevance of early and low-level multisensory phenomena. The early stages of such research are bound to focus more on demonstrating such links, rather than on identifying their precise neurophysiological mechanisms. However, such findings are also likely a harbinger of future advances in the analysis of multisensory datasets, whether because of improvements in signal analysis in humans (e.g., Gonzalez Andino et al., 2005a; Murray et al., 2005, 2008; Sperdin et al., 2009) or in animals (e.g., Rowland and Stein, 2008), or because of the improved feasibility of conducting experiments in awake and behaving animals (e.g., Wang et al., 2008; Cappe et al., 2010). Nonetheless, it will be essential, in our view, to go beyond the demonstration of correlates of behavioral outcome. Instead, causal evidence will be needed to draw firmer conclusions about underlying mechanisms. One promising direction is the combination of different brain imaging methods in humans to yield greater mechanistic information. The urgency for this line of research also comes from accumulating evidence showing that multisensory interactions play a central role during both development (e.g., Lickliter and Bahrick, 2000; Neil et al., 2006; Wallace et al., 2006; Polley et al., 2008) and aging (e.g., Laurienti et al., 2006; Hugenschmidt et al., 2009), as well as in neurodegenerative and neuropsychiatric illnesses (e.g., Ross et al., 2007; Blau et al., 2009; see also comment by Wallace, 2009). Consequently, there is growing interest in applying multisensory phenomena as a potential diagnostic and rehabilitation tool. In conclusion, the field of multisensory research now seems well-poised to conjoin its neurophysiological and psychophysical findings.

Conflict of Interest Statement

This research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work has been supported by the Swiss National Science Foundation (grant #3100AO-118419 to Micah M. Murray) and the Leenaards Foundation (2005 Prize for the Promotion of Scientific Research to Micah M. Murray). We thank John Foxe for his support during the acquisition and analysis of the original dataset.

Key Concepts

- Multisensory interactions

Operationally, we use this term to refer to any instance where information from one sensory modality affects the processing and/or response to that from another sensory modality.

- Redundant signals effect

The improvement in stimulus detection and discrimination performance following presentation of multiple stimuli.

- Probability summation

The increased likelihood of improved performance by presenting multiple, independent stimuli – each competing to mediate motor responses. By analogy, the likelihood of rolling at least one ‘6’ on a die increases the more often it is rolled. Facilitation exceeding probability summation is a hallmark of neural response interactions (though the converse need not be true).
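The arithmetic behind this definition can be sketched as follows. The function names and the example probabilities are assumptions made for illustration. The first function reproduces the die analogy, the second gives the prediction for two statistically independent channels, and the third gives the upper bound that any race between separately processed signals must respect (the inequality of Miller, 1982); cumulative response probabilities above that bound are what is meant by facilitation exceeding probability summation.

```python
def p_at_least_one_six(n_rolls):
    """Probability of rolling at least one '6' in n_rolls rolls of a fair die."""
    return 1.0 - (5.0 / 6.0) ** n_rolls


def probability_summation(p_a, p_b):
    """Probability that at least one of two independent channels has
    produced a response by time t, given each channel's own probability."""
    return p_a + p_b - p_a * p_b


def race_model_bound(p_a, p_b):
    """Upper bound on the cumulative response probability at time t under
    any race model (Miller, 1982): P(pair) <= P(a) + P(b), capped at 1."""
    return min(1.0, p_a + p_b)


def exceeds_probability_summation(p_pair, p_a, p_b):
    """True if the multisensory cumulative probability violates the bound."""
    return p_pair > race_model_bound(p_a, p_b)


# Die analogy: the chance of at least one '6' grows with the number of rolls.
for n in (1, 2, 3):
    print(n, round(p_at_least_one_six(n), 3))

# Hypothetical cumulative probabilities of having responded by some latency t.
p_auditory, p_somatosensory, p_pair = 0.30, 0.25, 0.60
print(probability_summation(p_auditory, p_somatosensory))
print(exceeds_probability_summation(p_pair, p_auditory, p_somatosensory))
```

In practice the comparison is made at each latency of the cumulative reaction time distributions rather than at a single point.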

- Neural response interactions

To assess multisensory integration, responses to multisensory stimulus pairs are typically contrasted with the summed responses to the constituent unisensory stimuli. Differences are indicative of synergistic responses under multisensory conditions.
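A minimal sketch of this contrast, assuming each response is available as a list of voltages sampled at the same time points at a single electrode. The function name and the values are hypothetical.

```python
def interaction_waveform(pair, uni_a, uni_b):
    """Point-by-point difference between the response to the multisensory
    pair and the sum of the responses to its unisensory constituents."""
    if not (len(pair) == len(uni_a) == len(uni_b)):
        raise ValueError("all waveforms must have the same number of samples")
    return [p - (a + b) for p, a, b in zip(pair, uni_a, uni_b)]


# Invented voltages (in microvolts) at four successive time points.
auditory = [0.0, 1.0, 2.0, 1.0]
somatosensory = [0.0, 0.5, 1.0, 0.5]
multisensory = [0.0, 1.5, 2.0, 1.5]

# Non-zero samples mark time points where the pair response departs
# from the simple sum of the unisensory responses.
print(interaction_waveform(multisensory, auditory, somatosensory))
```

Whether a non-zero difference is reliable would of course need to be assessed statistically across participants or trials, which this sketch does not attempt.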

- Spatial rule

This principle of multisensory interactions, originally described in the seminal works of Stein and Meredith (1993), stipulates that facilitative effects depend on external stimuli falling within the excitatory zone of a neuron's receptive field for each sensory modality. This zone need not be synonymous with the external position of the stimuli across the senses.

- Source estimations

This refers to solutions to the bio-electromagnetic inverse problem, which is the reconstruction of intracranial sources based on surface recordings. A fuller treatment can be found in Michel et al. (2004) or Grave de Peralta Menendez et al. (2004).

- Electrical neuroimaging analyses

This is a set of analyses of EEG data involving reference-independent measures (as opposed to individual ERP waveforms) of the entire electric field recorded at the scalp, and the estimation of intracranial sources (e.g., Michel et al., 2004; Murray et al., 2008).

- Event-related potential

This is the time-locked average of EEG epochs to an external or internal event. The term was first introduced by Vaughan Jr. (1969).
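As a minimal sketch, assuming the EEG has already been cut into epochs of equal length aligned to the event of interest, the average can be computed sample by sample. The function name and the values are hypothetical.

```python
def event_related_potential(epochs):
    """Average equal-length, event-locked EEG epochs sample by sample."""
    if not epochs:
        raise ValueError("at least one epoch is required")
    n_samples = len(epochs[0])
    if any(len(epoch) != n_samples for epoch in epochs):
        raise ValueError("all epochs must have the same number of samples")
    return [sum(samples) / len(epochs) for samples in zip(*epochs)]


# Two invented epochs: the same time-locked deflection plus fluctuations
# that are not time-locked and therefore cancel in the average.
epochs = [
    [0.5, 1.5, -0.5],
    [-0.5, 0.5, 0.5],
]
print(event_related_potential(epochs))
```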

- Response strength vs response topography

Strength refers to the spatial standard deviation across the electric field at the scalp, quantified as the root mean square of the voltage measurements across the electrode montage. Topography refers to the shape of the electric field with changes quantified as the root mean square of the difference between two strength-normalized measurements across electrodes.
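These two measures can be sketched as below. In the electrical neuroimaging literature (e.g., Murray et al., 2008) they are commonly called global field power and global dissimilarity; the function names here are our own, and the sketch assumes a single map of voltages, one value per electrode, which is re-referenced to the average before either measure is computed (the root mean square equals the spatial standard deviation only under that reference).

```python
from math import sqrt


def average_reference(voltages):
    """Subtract the mean across electrodes from every electrode."""
    mean = sum(voltages) / len(voltages)
    return [v - mean for v in voltages]


def global_field_power(voltages):
    """Response strength: spatial standard deviation of the map, i.e. the
    root mean square of the average-referenced voltages."""
    referenced = average_reference(voltages)
    return sqrt(sum(v * v for v in referenced) / len(referenced))


def global_dissimilarity(map_1, map_2):
    """Topographic difference: root mean square of the difference between
    two strength-normalized, average-referenced maps (range 0 to 2)."""
    if len(map_1) != len(map_2):
        raise ValueError("maps must cover the same electrodes")
    gfp_1, gfp_2 = global_field_power(map_1), global_field_power(map_2)
    if gfp_1 == 0 or gfp_2 == 0:
        raise ValueError("a flat map has no defined topography")
    norm_1 = [v / gfp_1 for v in average_reference(map_1)]
    norm_2 = [v / gfp_2 for v in average_reference(map_2)]
    return sqrt(sum((a - b) ** 2 for a, b in zip(norm_1, norm_2)) / len(norm_1))


# Invented four-electrode maps (microvolts).
base = [1.0, -1.0, 2.0, -2.0]
stronger = [3.0 * v for v in base]   # same shape, three times the strength
inverted = [-v for v in base]        # same strength, opposite polarity

print(global_field_power(base), global_field_power(stronger))
print(global_dissimilarity(base, stronger))
print(global_dissimilarity(base, inverted))
```

The example maps are chosen to show that the two measures are orthogonal: scaling a map changes its strength but leaves the dissimilarity at (approximately) zero, whereas inverting it leaves the strength unchanged and yields the maximal dissimilarity of 2.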

- Supra-additive and sub-additive interactions

These terms refer to instances where the response to multisensory stimuli is greater or less than, respectively, the summed responses to the constituent unisensory conditions. In the case of electrical neuroimaging analyses of event-related potentials, we restrict the use of these terms to instances of modulations in response strength rather than response topography.

Biography

Micah M. Murray is Associate Director of the Electroencephalography Brain Mapping Core of the Center for Biomedical Imaging and a Senior Research Scientist within the Neuropsychology and Neurorehabilitation Service and Radiology Service at the Centre Hospitalier Universitaire Vaudois and University of Lausanne, Switzerland. His research focuses on brain dynamics of sensory and cognitive processes in humans, combining tools from psychophysics and neuropsychology, electroencephalography, functional magnetic resonance imaging, and transcranial magnetic stimulation.

References

- Blau V., van Atteveldt N., Ekkebus M., Goebel R., Blomert L. (2009). Reduced neural integration of letters and speech sounds links phonological and reading deficits in adult dyslexia. Curr. Biol. 19, 503–508 10.1016/j.cub.2009.01.065

- Britz J., Michel C. M. (2010). Errors can be related to pre-stimulus differences in ERP topography and their concomitant sources. Neuroimage 49, 2774–2782 10.1016/j.neuroimage.2009.10.033

- Busch N. A., Dubois J., VanRullen R. (2009). The phase of ongoing EEG oscillations predicts visual perception. J. Neurosci. 29, 7869–7876 10.1523/JNEUROSCI.0113-09.2009

- Cappe C., Barone P. (2005). Heteromodal connections supporting multisensory integration at low levels of cortical processing in the monkey. Eur. J. Neurosci. 22, 2886–2902 10.1111/j.1460-9568.2005.04462.x

- Cappe C., Morel A., Barone P., Rouiller E. M. (2009). The thalamocortical projection systems in primate: an anatomical support for multisensory and sensorimotor interplay. Cereb. Cortex 19, 2025–2037 10.1093/cercor/bhn228

- Cappe C., Murray M. M., Barone P., Rouiller E. M. (2010). Multisensory facilitation of behavior in monkeys: effects of stimulus intensity. J. Cogn. Neurosci., published online 4 January.

- De Lucia M., Michel C. M., Clarke S., Murray M. M. (2007). Single-subject EEG analysis based on topographic information. Int. J. Bioelectromagnetism. 9, 168–171

- De Lucia M., Michel C. M., Murray M. M. (2010). Comparing ICA-based and single-trial topographic ERP analyses. Brain Topogr. [in press.]

- Foxe J. J. (2009). Multisensory integration: frequency tuning of audio-tactile integration. Curr. Biol. 19, 373–375 10.1016/j.cub.2009.03.029

- Foxe J. J., Morocz I. A., Murray M. M., Higgins B. A., Javitt D. C., Schroeder C. E. (2000). Multisensory auditory-somatosensory interactions in early cortical processing revealed by high-density electrical mapping. Brain Res. Cogn. Brain Res. 10, 77–83 10.1016/S0926-6410(00)00024-0

- Foxe J. J., Wylie G. R., Martinez A., Schroeder C. E., Javitt D. C., Guilfoyle D., Ritter W., Murray M. M. (2002). Auditory-somatosensory multisensory processing in auditory association cortex: an fMRI study. J. Neurophysiol. 88, 540–543

- Fu K. M., Johnston T. A., Shah A. S., Arnold L., Smiley J., Hackett T. A., Garraghty P. E., Schroeder C. E. (2003). Auditory cortical neurons respond to somatosensory stimulation. J. Neurosci. 23, 7510–7515

- Ghazanfar A. A., Schroeder C. E. (2006). Is neocortex essentially multisensory? Trends Cogn. Sci. (Regul. Ed.) 10, 278–285

- Gillmeister H., Eimer M. (2007). Tactile enhancement of auditory detection and perceived loudness. Brain Res. 1160, 58–68 10.1016/j.brainres.2007.03.041

- Gonzalez Andino S. L., Murray M. M., Foxe J. J., de Peralta Menendez R. G. (2005a). How single-trial electrical neuroimaging contributes to multisensory research. Exp. Brain Res. 166, 298–304 10.1007/s00221-005-2371-1

- Gonzalez Andino S. L., Michel C. M., Thut G., Landis T., Grave de Peralta R. (2005b). Prediction of response speed by anticipatory high-frequency (gamma band) oscillations in the human brain. Hum. Brain Mapp. 24, 50–58 10.1002/hbm.20056

- Grave de Peralta Menendez R., Murray M. M., Michel C. M., Martuzzi R., Gonzalez Andino S. L. (2004). Electrical neuroimaging based on biophysical constraints. Neuroimage 21, 527–539 10.1016/j.neuroimage.2003.09.051

- Hackett T. A., Smiley J. F., Ulbert I., Karmos G., Lakatos P., de la Mothe L. A., Schroeder C. E. (2007a). Sources of somatosensory input to the caudal belt areas of auditory cortex. Perception 36, 1419–1430 10.1068/p5841

- Hackett T. A., De La Mothe L. A., Ulbert I., Karmos G., Smiley J., Schroeder C. E. (2007b). Multisensory convergence in auditory cortex, II. Thalamocortical connections of the caudal superior temporal plane. J. Comp. Neurol. 502, 924–952 10.1002/cne.21326

- Hirokawa J., Bosch M., Sakata S., Sakurai Y., Yamamori T. (2008). Functional role of the secondary visual cortex in multisensory facilitation in rats. Neuroscience 153, 1402–1417 10.1016/j.neuroscience.2008.01.011

- Hugenschmidt C. E., Mozolic J. L., Tan H., Kraft R. A., Laurienti P. J. (2009). Age-related increase in cross-sensory noise in resting and steady-state cerebral perfusion. Brain Topogr. 21, 241–251 10.1007/s10548-009-0098-1

- Ilmoniemi R. J., Kičič D. (2010). Methodology for combined TMS and EEG. Brain Topogr. 22, 233–248 10.1007/s10548-009-0123-4

- Jones E. G., Powell T. P. (1970). An anatomical study of converging sensory pathways within the cerebral cortex of the monkey. Brain 93, 793–820 10.1093/brain/93.4.793

- Kayser C., Logothetis N. K. (2007). Do early sensory cortices integrate cross-modal information? Brain Struct. Funct. 212, 121–132 10.1007/s00429-007-0154-0

- Kayser C., Petkov C. I., Augath M., Logothetis N. K. (2005). Integration of touch and sound in auditory cortex. Neuron 48, 373–384 10.1016/j.neuron.2005.09.018

- Komura Y., Tamura R., Uwano T., Nishijo H., Ono T. (2005). Auditory thalamus integrates visual inputs into behavioral gains. Nat. Neurosci. 8, 1203–1209 10.1038/nn1528

- Lakatos P., Chen C. M., O'Connell M. N., Mills A., Schroeder C. E. (2007). Neuronal oscillations and multisensory interaction in primary auditory cortex. Neuron 53, 279–292 10.1016/j.neuron.2006.12.011

- Lakatos P., Karmos G., Mehta A. D., Ulbert I., Schroeder C. E. (2008). Entrainment of neuronal oscillations as a mechanism of attentional selection. Science 320, 110–113 10.1126/science.1154735

- Lakatos P., O'Connell M. N., Barczak A., Mills A., Javitt D. C., Schroeder C. E. (2009). The leading sense: supramodal control of neurophysiological context by attention. Neuron 64, 419–430 10.1016/j.neuron.2009.10.014

- Lamarre Y., Busby L., Spidalieri G. (1983). Fast ballistic arm movements triggered by visual, auditory, and somesthetic stimuli in the monkey. I. Activity of precentral cortical neurons. J. Neurophysiol. 50, 1343–1358

- Laurienti P. J., Burdette J. H., Maldjian J. A., Wallace M. T. (2006). Enhanced multisensory integration in older adults. Neurobiol. Aging 27, 1155–1163 10.1016/j.neurobiolaging.2005.05.024

- Lehmann D. (1987). Principles of spatial analysis. In Handbook of electroencephalography and clinical neurophysiology, Vol. 1, Methods of analysis of brain electrical and magnetic signals, Gevins A. S., Remond A., eds (Amsterdam, Elsevier), pp. 309–405

- Lickliter R., Bahrick L. E. (2000). The development of infant intersensory perception: advantages of a comparative convergent-operations approach. Psychol. Bull. 126, 260–280 10.1037/0033-2909.126.2.260

- Martuzzi R., Murray M. M., Maeder P. P., Fornari E., Thiran J. -P., Clarke S., Michel C. M., Meuli R. A. (2006). Visuo-motor pathways in humans revealed by event-related fMRI. Exp. Brain Res. 170, 472–487 10.1007/s00221-005-0232-6

- Martuzzi R., Murray M. M., Michel C. M., Thiran J. -P., Maeder P. P., Clarke S., Meuli R. A. (2007). Multisensory interactions within human primary cortices revealed by BOLD dynamics. Cereb. Cortex 17, 1672–1679 10.1093/cercor/bhl077

- Mathewson K. E., Gratton G., Fabiani M., Beck D. M., Ro T. (2009). To see or not to see: prestimulus alpha phase predicts visual awareness. J. Neurosci. 29, 2725–2732 10.1523/JNEUROSCI.3963-08.2009

- Michel C. M., Koenig T., Brandeis D., Gianotti L. R. R., Wackermann J. (2009). Electrical Neuroimaging. Cambridge, UK, Cambridge University Press 10.1017/CBO9780511596889

- Michel C. M., Murray M. M., Lantz G., Gonzalez S., Spinelli L., Grave de Peralta R. (2004). EEG source imaging. Clin. Neurophysiol. 115, 2195–2222 10.1016/j.clinph.2004.06.001

- Miller J. (1982). Divided attention: evidence for coactivation with redundant signals. Cogn. Psychol. 14, 247–279 10.1016/0010-0285(82)90010-X

- Miller J., Ulrich R., Lamarre Y. (2001). Locus of the redundant-signals effect in bimodal divided attention: a neurophysiological analysis. Percept. Psychophys. 63, 555–562

- Miniussi C., Thut G. (2010). Combining TMS and EEG offers new prospects in cognitive neuroscience. Brain Topogr. 22, 249–256 10.1007/s10548-009-0083-8

- Murray M. M., Brunet D., Michel C. M. (2008). Topographic ERP analyses: a step-by-step tutorial review. Brain Topogr. 20, 249–264 10.1007/s10548-008-0054-5

- Murray M. M., De Lucia M., Brunet D., Michel C. M. (2009). Principles of topographic analyses for electrical neuroimaging. In Brain Signal Analysis, Handy T. C., ed. (Cambridge, MIT Press), pp. 21–54

- Murray M. M., Foxe J. J., Higgins B. A., Javitt D. C., Schroeder C. E. (2001). Visuo-spatial neural response interactions in early cortical processing during a simple reaction time task: a high-density electrical mapping study. Neuropsychologia 39, 828–844 10.1016/S0028-3932(01)00004-5

- Murray M. M., Molholm S., Michel C. M., Heslenfeld D. J., Ritter W., Javitt D. C., Schroeder C. E., Foxe J. J. (2005). Grabbing your ear: rapid auditory-somatosensory multisensory interactions in low-level sensory cortices are not constrained by stimulus alignment. Cereb. Cortex 15, 963–974 10.1093/cercor/bhh197

- Murray M. M., Spierer L. (2009). Auditory spatio-temporal brain dynamics and their consequences for multisensory interactions in humans. Hear. Res. 258, 121–133 10.1016/j.heares.2009.04.022

- Neil P. A., Chee-Ruiter C., Scheier C., Lewkowicz D. J., Shimojo S. (2006). Development of multisensory spatial integration and perception in humans. Dev. Sci. 9, 454–464 10.1111/j.1467-7687.2006.00512.x

- Pogosyan A., Gaynor L. D., Eusebio A., Brown P. (2009). Boosting cortical activity at Beta-band frequencies slows movement in humans. Curr. Biol. 19, 1637–1641 10.1016/j.cub.2009.07.074

- Polley D. B., Hillock A. R., Spankovich C., Popescu M. V., Royal D. W., Wallace M. T. (2008). Development and plasticity of intra- and intersensory information processing. J. Am. Acad. Audiol. 19, 780–798 10.3766/jaaa.19.10.6

- Raab D. H. (1962). Statistical facilitation of simple reaction times. Trans. N Y Acad. Sci. 24, 574–590

- Ramos-Estebanez C., Merabet L. B., Machii K., Fregni F., Thut G., Wagner T. A., Romei V., Amedi A., Pascual-Leone A. (2007). Visual phosphene perception modulated by subthreshold cross modal sensory stimulation. J. Neurosci. 27, 4178–4181 10.1523/JNEUROSCI.5468-06.2007

- Romei V., Murray M. M., Cappe C., Thut G. (2009). Preperceptual and stimulus-selective enhancement of low-level human visual cortex excitability by sounds. Curr. Biol. 19, 1799–1805 10.1016/j.cub.2009.09.027

- Romei V., Murray M. M., Merabet L. B., Thut G. (2007). Occipital transcranial magnetic stimulation has opposing effects on visual and auditory stimulus detection: implications for multisensory interactions. J. Neurosci. 27, 11465–11472 10.1523/JNEUROSCI.2827-07.2007

- Romei V., Rihs T., Brodbeck V., Thut G. (2008). Resting electroencephalogram alpha-power over posterior sites indexes baseline visual cortex excitability. Neuroreport 19, 203–208 10.1097/WNR.0b013e3282f454c4

- Ross L. A., Saint-Amour D., Leavitt V. M., Molholm S., Javitt D. C., Foxe J. J. (2007). Impaired multisensory processing in schizophrenia: deficits in the visual enhancement of speech comprehension under noisy environmental conditions. Schizophr. Res. 97, 173–183 10.1016/j.schres.2007.08.008

- Rowland B. A., Quessy S., Stanford T. R., Stein B. E. (2007). Multisensory integration shortens physiological response latencies. J. Neurosci. 27, 5879–5884 10.1523/JNEUROSCI.4986-06.2007

- Rowland B. A., Stein B. E. (2007). Multisensory integration produces an initial response enhancement. Front. Integr. Neurosci. 1:4. 10.3389/neuro.07.004.2007

- Rowland B. A., Stein B. E. (2008). Temporal profiles of response enhancement in multisensory integration. Front. Neurosci. 2, 218–224 10.3389/neuro.01.033.2008

- Saron C. D., Foxe J. J., Simpson G. V., Vaughan H. G., Jr. (2003). Interhemispheric visuomotor activation: spatiotemporal electrophysiology related to reaction time. In The Parallel Brain, Zaidel E., Iacoboni M., eds (Cambridge, MA, MIT Press), pp. 171–219

- Schroeder C. E., Foxe J. (2005). Multisensory contributions to low-level, ‘unisensory’ processing. Curr. Opin. Neurobiol. 15, 454–458 10.1016/j.conb.2005.06.008

- Schroeder C. E., Lakatos P. (2009). Low-frequency neuronal oscillations as instruments of sensory selection. Trends Neurosci. 32, 9–18 10.1016/j.tins.2008.09.012

- Schroeder C. E., Lakatos P., Kajikawa Y., Partan S., Puce A. (2008). Neuronal oscillations and visual amplification of speech. Trends Cogn. Sci. 12, 106–113 10.1016/j.tics.2008.01.002

- Schroeder C. E., Lindsley R. W., Specht C., Marcovici A., Smiley J. F., Javitt D. C. (2001). Somatosensory input to auditory association cortex in the macaque monkey. J. Neurophysiol. 85, 1322–1327

- Schroeder C. E., Smiley J., Fu K. G., McGinnis T., O'Connell M. N., Hackett T. A. (2003). Anatomical mechanisms and functional implications of multisensory convergence in early cortical processing. Int. J. Psychophysiol. 50, 5–17 10.1016/S0167-8760(03)00120-X

- Senkowski D., Molholm S., Gomez-Ramirez M., Foxe J. J. (2006). Oscillatory beta activity predicts response speed during a multisensory audiovisual reaction time task: a high-density electrical mapping study. Cereb. Cortex 16, 1556–1565 10.1093/cercor/bhj091

- Senkowski D., Schneider T. R., Foxe J. J., Engel A. K. (2008). Crossmodal binding through neural coherence: implications for multisensory processing. Trends Neurosci. 31, 401–409 10.1016/j.tins.2008.05.002

- Silvanto J., Pascual-Leone A. (2008). State-dependency of transcranial magnetic stimulation. Brain Topogr. 21, 1–10 10.1007/s10548-008-0067-0

- Smiley J. F., Hackett T. A., Ulbert I., Karmos G., Lakatos P., Javitt D. C., Schroeder C. E. (2007). Multisensory convergence in auditory cortex, I. Cortical connections of the caudal superior temporal plane in macaque monkeys. J. Comp. Neurol. 502, 894–923 10.1002/cne.21325

- Sperdin H. F., Cappe C., Foxe J. J., Murray M. M. (2009). Early, low-level auditory-somatosensory multisensory interactions impact reaction time speed. Front. Integr. Neurosci. 3:2. 10.3389/neuro.07.002.2009

- Sperdin H. F., Cappe C., Murray M. M. (2010). Auditory-somatosensory multisensory interactions in humans and the role of spatial attention. Neuropsychologia, in revision.

- Stein B. E., Meredith M. A. (1993). The Merging of the Senses. Cambridge, Massachusetts, MIT Press

- Stein B. E., Stanford T. R. (2008). Multisensory integration: current issues from the perspective of the single neuron. Nat. Rev. Neurosci. 9, 255–266 10.1038/nrn2331

- Sumby W. H., Pollack I. (1954). Visual contribution to speech intelligibility in noise. J. Acoust. Soc. Am. 26, 212–215 10.1121/1.1907309

- Talsma D., Doty T. J., Woldorff M. G. (2007). Selective attention and audiovisual integration: is attending to both modalities a prerequisite for early integration? Cereb. Cortex 17, 679–690 10.1093/cercor/bhk016

- Talsma D., Woldorff M. G. (2005). Selective attention and multisensory integration: multiple phases of effects on the evoked brain activity. J. Cogn. Neurosci. 17, 1098–1114 10.1162/0898929054475172

- Tajadura-Jimenez A., Kitagawa N., Valjamae A., Zampini M., Murray M. M., Spence C. (2009). Auditory-somatosensory multisensory interactions are spatially modulated by stimulated body surface and acoustic spectra. Neuropsychologia 47, 195–203 10.1016/j.neuropsychologia.2008.07.025

- Talairach J., Tournoux P. (1988). Co-planar Stereotaxic Atlas of the Human Brain. New York, Thieme

- Thut G., Pascual-Leone A. (2010). A review of combined TMS-EEG studies to characterize lasting effects of repetitive TMS and assess their usefulness in cognitive and clinical neuroscience. Brain Topogr. 22, 219–232 10.1007/s10548-009-0115-4

- Van Zaen J., Uldry L., Duchêne C., Prudat Y., Meuli R. A., Murray M. M., Vesin J. M. (2010). Adaptive tracking of EEG oscillations. J. Neurosci. Methods 186, 97–106 10.1016/j.jneumeth.2009.10.018

- Vaughan H. G., Jr. (1969). The relationship of brain activity to scalp recordings of event-related potentials. In Averaged Evoked Potentials; Methods, Results, Evaluations, Donchin E., Lindsley D. B. eds [Washington, D.C., National Aeronautics and Space Administration (NASA No. SP 191)], pp. 45–94

- Wallace M. T. (2009). Dyslexia: bridging the gap between hearing and reading. Curr. Biol. 19, R260–R262 10.1016/j.cub.2009.01.025

- Wallace M. T., Carriere B. N., Perrault T. J., Jr., Vaughan J. W., Stein B. E. (2006). The development of cortical multisensory integration. J. Neurosci. 26, 11844–11849 10.1523/JNEUROSCI.3295-06.2006

- Wallace M. T., Ramachandran R., Stein B. E. (2004). A revised view of sensory cortical parcellation. Proc. Natl. Acad. Sci. U.S.A. 101, 2167–2172 10.1073/pnas.0305697101

- Wang Y., Celebrini S., Trotter Y., Barone P. (2008). Visuo-auditory interactions in the primary visual cortex of the behaving monkey: electrophysiological evidence. BMC Neurosci. 9, 79. 10.1186/1471-2202-9-79

- Yau J. M., Olenczak J. B., Dammann J. F., Bensmaia S. J. (2009). Temporal frequency channels are linked across audition and touch. Curr. Biol. 19, 561–566 10.1016/j.cub.2009.02.013

- Zampini M., Torresan D., Spence C., Murray M. M. (2007). Auditory-somatosensory multisensory interactions in front and rear space. Neuropsychologia 45, 1869–1877 10.1016/j.neuropsychologia.2006.12.004