Abstract

Objective

The primary goal of the present study was to determine how cochlear implant melody recognition was affected by the frequency range of the melodies, the harmonicity of these melodies, and the number of activated electrodes. The secondary goal was to investigate whether melody recognition and speech recognition were differentially affected by the limitations imposed by cochlear implant processing.

Design

Four experiments were conducted. In the first experiment, eleven cochlear implant users used their clinical processors to recognize melodies composed of complex harmonic tones whose fundamental frequencies fell in the low (104-262 Hz), middle (207-523 Hz), or high (414-1046 Hz) range. In the second experiment, melody recognition with pure tones was compared to melody recognition with complex harmonic tones in 4 subjects. In the third experiment, melody recognition was measured as a function of the number of activated electrodes in 5 subjects. In the fourth experiment, vowel and consonant recognition were measured as a function of the number of activated electrodes in the same 5 subjects who participated in the third experiment.

Results

Frequency range significantly affected cochlear implant melody recognition, with higher frequency ranges producing better performance. Pure tones produced significantly better performance than complex harmonic tones. Increasing the number of activated electrodes did not affect performance with low- and middle-frequency melodies but improved performance with high-frequency melodies. Large individual variability was observed in melody recognition, but its source appeared to differ from that of the large variability observed in speech recognition.

Conclusion

Contemporary cochlear implants do not adequately encode either temporal pitch or place pitch cues. Melody recognition and speech recognition require different signal processing strategies in future cochlear implants.

Cochlear implants electrically stimulate the auditory nerve to restore hearing in people with severe-to-profound hearing loss. Although great strides have been made in speech recognition for cochlear implant users, their music appreciation, particularly melody recognition, remains poor. With the exception of a recent study (Nimmons, Kang, Drennan, Longnion, Ruffin, Worman et al., 2007) showing that one implant user (L4) scored 81% in a 12-item closed-set melody recognition task, all studies have found poor performance (12-40% correct) in melody recognition tasks without rhythmic cues. In an extreme example, a “star” cochlear implant listener may hold a successful phone conversation yet be unable to tell apart two simple nursery melodies (e.g., Gfeller, Turner, Mehr, Woodworth, Fearn, Knutson et al., 2002; Kong, Cruz, Jones, & Zeng, 2004; Spahr & Dorman, 2004).

Both acoustic and perceptual differences between speech and music contribute to this somewhat surprising dichotomy. In general, spectral and temporal envelope cues contribute to speech intelligibility (e.g., Remez, Rubin, Pisoni, & Carrell, 1981; Shannon, Zeng, Kamath, Wygonski, & Ekelid, 1995), whereas spectral and temporal fine-structure cues contribute to melody recognition (e.g., Smith, Delgutte, & Oxenham, 2002). Harmonicity, the property of having spectral components at integer multiples of the fundamental frequency, is a particularly important fine-structure cue. A harmonic sound usually evokes a salient pitch in normal-hearing listeners, but this pitch appears to be inaccessible to cochlear-implant listeners.

Current cochlear implants all use similar signal processing. The algorithm first filters a sound into 12-24 narrow-band signals and extracts the temporal envelope from either all of these signals or only those with the highest levels. It then compresses each envelope, uses it to amplitude-modulate a fixed-rate carrier, and finally stimulates the electrode corresponding to the narrow-band signal's center frequency (McDermott, McKay, & Vandali, 1992; Vandali, Whitford, Plant, & Clark, 2000; Wilson, Finley, Lawson, Wolford, Eddington, & Rabinowitz, 1991). This algorithm provides a reasonably good representation of spectral and temporal envelope cues but cannot encode spectral and temporal fine-structure cues accurately. For instance, formants can be approximated by relative changes in current levels across the intra-cochlear electrode array, whereas harmonic fine structure is not explicitly extracted; even if it were extracted, there is no guarantee that it would be encoded properly, because of mismatched frequency-to-place maps in cochlear implants (e.g., Boex, Baud, Cosendai, Sigrist, Kos, & Pelizzone, 2006). Similarly, temporal envelopes can be accurately represented by amplitude modulation of a relatively high-rate carrier, but temporal fine structure is lost in cochlear implants (e.g., Wilson, Finley, Lawson, & Zerbi, 1997).
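The four processing stages just described (band-pass filtering, envelope extraction, compression, and modulation of a fixed-rate carrier) can be illustrated with a short pure-Python sketch. It is a minimal, hypothetical model, not any manufacturer's algorithm: the second-order band-pass filters, the 400-Hz one-pole envelope smoother, the compression constant `k`, the 900-pulse-per-second rate, and all function names are assumptions, and the interleaving of pulses across electrodes used by real strategies is not modeled. The optional `n_max` argument mimics strategies that stimulate only the channels with the highest levels.

```python
import math


def bandpass(x, fs, f_lo, f_hi):
    """Second-order band-pass filter (RBJ audio-EQ cookbook, 0 dB peak gain)."""
    f0 = math.sqrt(f_lo * f_hi)              # geometric center frequency
    q = f0 / (f_hi - f_lo)                   # quality factor from bandwidth
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    b0, b2 = alpha, -alpha                   # b1 is zero for this design
    a0, a1, a2 = 1 + alpha, -2 * math.cos(w0), 1 - alpha
    y, x1, x2, y1, y2 = [], 0.0, 0.0, 0.0, 0.0
    for xn in x:
        yn = (b0 * xn + b2 * x2 - a1 * y1 - a2 * y2) / a0
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y


def envelope(x, fs, cutoff_hz=400.0):
    """Full-wave rectification followed by a one-pole low-pass smoother."""
    a = math.exp(-2 * math.pi * cutoff_hz / fs)
    env, out = 0.0, []
    for xn in x:
        env = (1 - a) * abs(xn) + a * env
        out.append(env)
    return out


def compress(e, k=500.0):
    """Hypothetical logarithmic map from an envelope value in [0, 1] to [0, 1]."""
    e = min(max(e, 0.0), 1.0)
    return math.log1p(k * e) / math.log1p(k)


def cis_like(x, fs, bands, pulse_rate=900.0, n_max=None, cutoff_hz=400.0):
    """Return one list of pulse levels per channel (channel i -> electrode i).

    The compressed envelope is read once per pulse period, i.e. it
    amplitude-modulates a fixed-rate pulse carrier. If n_max is given, only
    the n_max channels with the largest envelopes are stimulated per frame.
    """
    envs = [envelope(bandpass(x, fs, lo, hi), fs, cutoff_hz) for lo, hi in bands]
    step = fs / pulse_rate                    # samples per pulse period
    n_frames = int(len(x) / step)
    pulses = [[] for _ in bands]
    for i in range(n_frames):
        n = int(i * step)
        levels = [env[n] for env in envs]
        ranked = sorted(range(len(bands)), key=lambda c: levels[c], reverse=True)
        keep = set(ranked if n_max is None else ranked[:n_max])
        for c in range(len(bands)):
            pulses[c].append(compress(levels[c]) if c in keep else 0.0)
    return pulses


# Usage: a 100-ms, 1-kHz pure tone through a hypothetical 4-channel processor.
fs = 16000
tone = [0.5 * math.sin(2 * math.pi * 1000 * n / fs) for n in range(fs // 10)]
bands = [(200, 500), (500, 900), (900, 1400), (1400, 2400)]
levels = cis_like(tone, fs, bands)
mean_levels = [sum(ch) / len(ch) for ch in levels]
print([round(m, 3) for m in mean_levels])
```

The 1-kHz tone produces its largest pulse levels on the third channel (900-1400 Hz), so most of its energy is delivered to a single place of stimulation; any periodicity information survives only through the smoothed envelope, not through the carrier rate.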

Recent studies examining the relative contributions of spectral and temporal cues have focused on speech, with little or no attention paid to music perception (Geurts & Wouters, 2001; Green, Faulkner, & Rosen, 2002, 2004; Laneau, Wouters, & Moonen, 2004; Nie, Zeng, & Barco, 2005; Qin & Oxenham, 2005; Xu, Tsai, & Pfingst, 2002; Zeng, Nie, Stickney, Kong, Vongphoe, Bhargave et al., 2005). These studies suggest that current cochlear implants convey only a weak perception of voice pitch. Here, we evaluated the relative contributions of spectral and temporal cues to melody recognition in cochlear implant listeners. First, we systematically measured recognition of melodies in three frequency ranges: low (104-262 Hz), middle (207-523 Hz), and high (414-1046 Hz). The low melody range was similar to the range of voice fundamental frequencies, whereas the high range was similar to the range of first formant frequencies (Hillenbrand, Getty, Clark, & Wheeler, 1995). Because a 200-400 Hz low-pass cutoff frequency is typically used to extract the temporal envelope, pitch information in the low and middle melody ranges would likely be conveyed by the temporal envelope cue, whereas pitch information in the high range would likely be conveyed by the electrode place cue in modern multi-electrode cochlear implants. Exp. 1 will explicitly test this hypothesis.
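To make this hypothesis concrete, the sketch below evaluates how much of each range's F0 periodicity would survive envelope smoothing. It assumes, purely for illustration, a 4th-order Butterworth low-pass envelope filter with a 400-Hz cutoff (the upper end of the cutoffs mentioned above); real processors differ in filter type and order, and the function name is hypothetical.

```python
import math


def butterworth_gain(f_hz, cutoff_hz=400.0, order=4):
    """Magnitude response |H(f)| of an analog Butterworth low-pass filter."""
    return 1.0 / math.sqrt(1.0 + (f_hz / cutoff_hz) ** (2 * order))


# Lowest and highest F0s of the three melody ranges used in this study.
RANGES_HZ = {"low": (104, 262), "middle": (207, 523), "high": (414, 1046)}

for name, (lo, hi) in RANGES_HZ.items():
    print(f"{name:>6}: gain at {lo} Hz = {butterworth_gain(lo):.3f}, "
          f"at {hi} Hz = {butterworth_gain(hi):.3f}")
```

With these assumed settings, F0s across the low range pass almost unattenuated (gain above 0.98), the top of the middle range keeps roughly one third of its amplitude, and the top of the high range (1046 Hz) is reduced to about 2%, which is why place rather than temporal cues would be expected to carry the high-range melodies.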

Second, we compared melody recognition between pure tones and complex harmonic tones over the same three frequency ranges. A pure tone is likely to stimulate one electrode, or at most two electrodes if the frequency of a note happens to fall between two adjacent filters. A pure tone should therefore provide a clearer pitch contour, based on a single excitation site, than a complex harmonic tone does. Even if the harmonics of a complex tone can be resolved by the cochlear implant filters, they are unlikely to maintain the tonotopic relationship required for harmonic pitch perception because of the mismatched frequency-to-place map (e.g., Boex, Baud, Cosendai, Sigrist, Kos, & Pelizzone, 2006; Oxenham, Bernstein, & Penagos, 2004). Exp. 2 will test the hypothesis that melodies consisting of pure tones are easier to recognize than those consisting of complex harmonic tones, particularly in the low-frequency range where the cochlear implant filters' frequency resolution is high.
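The contrast between one excitation site and many can be illustrated with the 20-electrode frequency map listed for subject C1 in Table 2. The sketch is an idealization: it assumes rectangular, non-overlapping analysis bands (real filters overlap, which is why a tone near a band edge can excite two electrodes) and, for the complex tone, harmonics up to 4,000 Hz, the upper end of the stimulus spectrum described for Exp. 1. The function names are hypothetical.

```python
# C1's 20-electrode map from Table 2: electrode numbers and band edges (Hz).
ELECTRODES = [22, 21, 20, 19, 18, 17, 16, 15, 13, 12,
              11, 10, 9, 8, 7, 6, 4, 3, 2, 1]
EDGES_HZ = [187, 312, 437, 562, 687, 812, 937, 1062, 1187, 1437, 1687,
            1937, 2312, 2687, 3187, 3687, 4312, 5062, 5937, 6937, 7937]


def electrode_for(freq_hz):
    """Electrode whose idealized band [lo, hi) contains freq_hz, else None."""
    for e, lo, hi in zip(ELECTRODES, EDGES_HZ[:-1], EDGES_HZ[1:]):
        if lo <= freq_hz < hi:
            return e
    return None  # outside the processed frequency range


def electrodes_hit(freqs_hz):
    """Distinct electrodes receiving at least one component, apical first."""
    hits = {electrode_for(f) for f in freqs_hz}
    hits.discard(None)
    return sorted(hits, reverse=True)


def harmonics(f0_hz, f_max_hz=4000.0):
    """Harmonic frequencies of f0_hz up to and including f_max_hz."""
    return [k * f0_hz for k in range(1, int(f_max_hz // f0_hz) + 1)]


note_f0 = 262.0  # top note of the low melody range
print("pure tone:", electrodes_hit([note_f0]))
print("harmonic complex:", electrodes_hit(harmonics(note_f0)))
```

For a 262-Hz note, the pure tone falls on electrode 22 alone, whereas its 15 harmonics below 4,000 Hz are spread over 12 electrodes, with electrodes 9, 8, and 7 each receiving two harmonics.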

Third, we tested melody recognition while varying the number of activated electrodes. Previous studies found that speech recognition improved as the number of electrodes increased but unexpectedly reached a plateau at 7-10 electrodes (Fishman, Shannon, & Slattery, 1997; Friesen, Shannon, Baskent, & Wang, 2001; Garnham, O'Driscoll, Ramsden, & Saeed, 2002). Because pitch perception of complex harmonic tones and speech recognition rely on different acoustic cues, one might predict different patterns of results for melody recognition and speech recognition as a function of the number of electrodes. To test this hypothesis explicitly, Exp. 3 measured melody recognition and Exp. 4 measured speech recognition for comparison, both as a function of the number of electrodes.

Experiment 1: Melody recognition as a function of frequency range

Previous studies of cochlear implant melody recognition used a similar middle-frequency range of 200-600 Hz and found generally poor performance across different subject populations and devices (Gfeller & Lansing, 1991; Gfeller, Turner, Mehr, Woodworth, Fearn, Knutson et al., 2002; Kong, Cruz, Jones, & Zeng, 2004; McDermott, 2004; Nimmons, Kang, Drennan, Longnion, Ruffin, Worman et al., 2007; Spahr & Dorman, 2004). To determine the contribution of the stimulus to melody recognition independently of the contributions of the device and the subject, we measured within-subject performance across a wide range of melody frequencies in a sample of 11 CI users.

Methods

Subjects

Eleven cochlear implant users (C1-C11) were recruited for this experiment based on availability. All grew up in the United States and were familiar with the melodies used in the present study. None had received formal music training, either before or as part of this study. Some had participated in earlier research in our laboratory. All were native English speakers and had relatively good phoneme recognition in quiet (48-91% correct for consonants and 31-82% for vowels; C9 was not tested). Table 1 lists age at the time of testing, etiology, age of onset, duration of deafness, duration of implant use, implant device, speech processing strategy, number of electrodes, and other relevant information. The average age of the participants at the time of the experiment was 56 years, and the average duration of implant use was 3 years. Depending on the device and on electrode availability, the number of activated electrodes was 12, 14, 16, or 20.

Table 1.

Background information for the 11 cochlear implant subjects in the study. Age, onset age, duration of deafness, and duration of cochlear implant use are all expressed in years. Cons and Vowel are percent correct scores for consonant and vowel recognition in quiet. NT stands for “Not Tested”.

| Subject | Age | Etiology | Onset age | Onset type | Duration of deafness | Duration of CI use | Device-strategy | # electrodes | Cons (%) | Vowel (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| C1 | 70 | Tumor and virus | 63 | Sudden | 1 | 7 | N24-ACE | 20 | 84 | 41 |
| C2 | 72 | Unknown | 56 | Progressive | 11 | 4 | N24-ACE | 16 | 54 | 51 |
| C3 | 79 | Unknown | 65 | Progressive | 12 | 2 | N24-CIS | 12 | 58 | 49 |
| C4 | 42 | Otosclerosis | 36 | Progressive | 3 | 3 | N24-SPEAK | 20 | 78 | 64 |
| C5 | 51 | Unknown | 45 | Progressive | 2 | 4 | CII-CIS | 16 | 86 | 68 |
| C6 | 69 | Nerve damage | 50 | Sudden | 16 | 3 | CII-CIS | 14 | 74 | 70 |
| C7 | 26 | Genetic | Birth | Congenital | 19 | 7 | Med-El-CIS | 12 | 88 | 67 |
| C8 | 41 | High fever | 6 | Sudden | 31 | 4 | Med-El-CIS | 12 | 48 | 31 |
| C9 | 29 | Genetic | Birth | Congenital | 27 | 2 | CII-CIS | 16 | NT | NT |
| C10 | 61 | Genetic | 40 | Progressive | 7 | 1 | N24-ACE | 20 | 91 | 82 |
| C11 | 77 | Blood clot | 38 | Sudden | 38 | 1 | N24-ACE | 20 | 76 | 64 |
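The summary figures quoted in the Subjects paragraph can be checked against Table 1. The sketch transcribes only the columns it needs, with `None` standing for NT; the variable names are illustrative.

```python
from statistics import mean

# (subject, age, duration of CI use, consonant %, vowel %) from Table 1;
# None marks "NT" (not tested).
TABLE_1 = [
    ("C1", 70, 7, 84, 41), ("C2", 72, 4, 54, 51), ("C3", 79, 2, 58, 49),
    ("C4", 42, 3, 78, 64), ("C5", 51, 4, 86, 68), ("C6", 69, 3, 74, 70),
    ("C7", 26, 7, 88, 67), ("C8", 41, 4, 48, 31), ("C9", 29, 2, None, None),
    ("C10", 61, 1, 91, 82), ("C11", 77, 1, 76, 64),
]

ages = [row[1] for row in TABLE_1]
ci_years = [row[2] for row in TABLE_1]
cons = [row[3] for row in TABLE_1 if row[3] is not None]
vowels = [row[4] for row in TABLE_1 if row[4] is not None]

print(f"mean age {mean(ages):.1f} y, mean CI use {mean(ci_years):.2f} y")
print(f"consonants {min(cons)}-{max(cons)}%, vowels {min(vowels)}-{max(vowels)}%")
```

This gives a mean age of 56.1 years, a mean implant use of 3.45 years (reported as 3 in the text), and phoneme ranges of 48-91% for consonants and 31-82% for vowels across the 10 tested subjects.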

Stimuli

Twelve isochronous melodies were used: Old MacDonald Had a Farm; Twinkle, Twinkle, Little Star; London Bridge is Falling Down; Mary Had a Little Lamb; This Old Man; Yankee Doodle; She'll be Coming 'Round the Mountain; Happy Birthday; Lullaby and Goodnight; Take Me Out to the Ball Game; Auld Lang Syne; and Star Spangled Banner. The fundamental frequencies of these melodies fell in three frequency ranges: low (104-262 Hz), middle (207-523 Hz), and high (414-1046 Hz). The middle-range melodies had been used in a previous study conducted in the same laboratory (Kong, Stickney, & Zeng, 2005); the low-range melodies were 1 octave lower and the high-range melodies 1 octave higher. The melodies were created using a software synthesizer (ReBirth RB-338, version 2.0.1). Each melody had 12-14 notes and contained no rhythmic cues. Every note had the same duration (350 msec), with a silent period of 150 msec between notes. The harmonic spectrum contained no apparent resonance frequencies and had a slope of roughly -20 dB per octave from 200 to 4,000 Hz.
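The stimulus arithmetic in this paragraph can be made explicit: shifting by one octave halves or doubles every F0, each range spans about 16 semitones, and a 12-14 note melody lasts 5.85-6.85 s. The harmonic-level function only approximates the synthesizer's timbre, assuming a straight -20 dB/octave tilt referenced to 200 Hz; the function names are hypothetical.

```python
import math

MIDDLE_RANGE_HZ = (207, 523)   # middle-range F0 limits given in the text


def shift_octaves(range_hz, octaves):
    """Shift an F0 range by whole octaves (each octave doubles or halves F0),
    rounded to the nearest Hz."""
    factor = 2.0 ** octaves
    return tuple(round(f * factor) for f in range_hz)


def semitone_span(range_hz):
    """Width of a frequency range in equal-tempered semitones."""
    lo, hi = range_hz
    return 12 * math.log2(hi / lo)


def harmonic_levels_db(f0, slope_db_per_octave=-20.0, f_ref=200.0, f_max=4000.0):
    """(frequency, level in dB re: f_ref) for each harmonic of f0 up to f_max,
    assuming a straight spectral tilt; levels below f_ref extrapolate the line."""
    n_harmonics = int(f_max // f0)
    return [(k * f0, slope_db_per_octave * math.log2(k * f0 / f_ref))
            for k in range(1, n_harmonics + 1)]


def melody_duration_ms(n_notes, note_ms=350, gap_ms=150):
    """Total duration of an isochronous melody with silent gaps between notes."""
    return n_notes * note_ms + (n_notes - 1) * gap_ms


print(shift_octaves(MIDDLE_RANGE_HZ, -1), shift_octaves(MIDDLE_RANGE_HZ, 1))
print(f"{semitone_span(MIDDLE_RANGE_HZ):.1f} semitones per range")
print(melody_duration_ms(12), melody_duration_ms(14))
```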

Procedure

A 12-item closed-set, forced-choice procedure was used. All subjects used their clinical maps in this experiment. The melodies were presented via direct connection from the computer to the speech processor. The volume was adjusted to a level each subject judged comfortable. Melodies in the same frequency range were presented as a block, and the order of the blocks was randomized. Within each block, each melody was played 3 times in random order, for a total of 36 trials per block. Before formal testing, the subjects had a short practice session (10-15 minutes) to become familiar with both the graphical user interface and the stimuli. Feedback on accuracy was given during practice but not during testing.
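The block structure can be sketched as follows; the seedable `random.Random` instance and the function name are illustrative choices, not the software used in the study. Because each trial offers 12 alternatives, guessing yields 1/12, or about 8.3%, correct.

```python
import random

MELODIES = [
    "Old MacDonald Had a Farm", "Twinkle, Twinkle, Little Star",
    "London Bridge is Falling Down", "Mary Had a Little Lamb", "This Old Man",
    "Yankee Doodle", "She'll be Coming 'Round the Mountain", "Happy Birthday",
    "Lullaby and Goodnight", "Take Me Out to the Ball Game", "Auld Lang Syne",
    "Star Spangled Banner",
]
RANGES = ["low", "middle", "high"]


def build_session(melodies, ranges, reps=3, seed=None):
    """Return one shuffled block of (range, melody) trials per frequency range,
    with the order of the blocks itself randomized."""
    rng = random.Random(seed)
    block_order = list(ranges)
    rng.shuffle(block_order)
    session = []
    for r in block_order:
        block = [(r, m) for m in melodies for _ in range(reps)]
        rng.shuffle(block)
        session.append(block)
    return session


session = build_session(MELODIES, RANGES, seed=1)
chance = 1 / len(MELODIES)
print([len(block) for block in session], f"chance = {100 * chance:.1f}%")
```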

Results

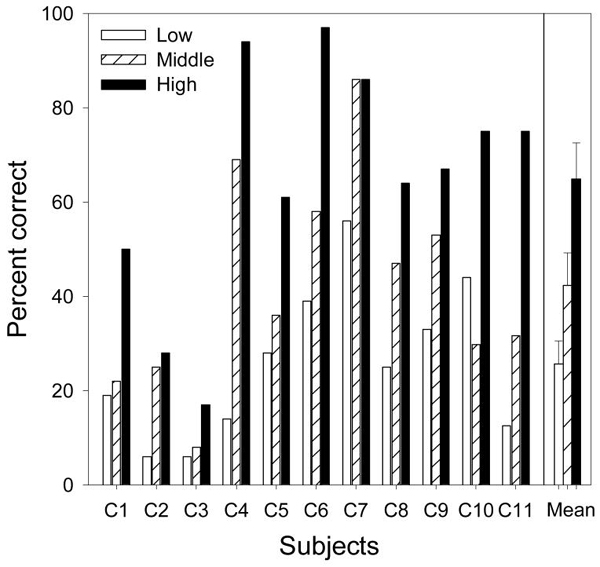

Figure 1 shows percent correct scores for melody recognition as a function of melody frequency range (low range=unfilled bars; middle range=hatched bars; high range=filled bars) for 11 cochlear implant users. The mean score in each frequency range, with one standard error of the mean, is shown at the rightmost position on the x-axis. Note the monotonic increase in performance as the melody frequency range increases. A repeated-measures ANOVA showed that melody frequency range significantly affected cochlear implant melody recognition [F(2,20) = 43.0, p<0.001]. On average, the percent correct score increased significantly from 25.7% for low-frequency melodies to 42.3% for middle-frequency melodies and to 64.9% for high-frequency melodies (post-hoc, two-tailed, paired t-test, p<0.025).

Figure 1.

Melody recognition scores in the low, middle, and high frequency ranges for 11 cochlear implant users, as well as their mean scores. Error bars represent one standard error of the mean.
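For readers who want to see where F(2,20) in the Exp. 1 results comes from, the following is a minimal one-way repeated-measures ANOVA in pure Python. With n = 11 subjects and k = 3 frequency ranges, the degrees of freedom are k - 1 = 2 for the effect and (k - 1)(n - 1) = 20 for the error term. This sketch applies no sphericity correction and is not necessarily the procedure or software the authors used; the function name is hypothetical.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data[s][c] is the score of subject s in condition c (complete data only).
    Returns (F, df_condition, df_error); no sphericity correction is applied.
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[c] for row in data) / n for c in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj      # condition x subject residual
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    F = (ss_cond / df_cond) / (ss_error / df_error)
    return F, df_cond, df_error


# Small hand-checkable example: 2 subjects x 3 conditions.
print(rm_anova_oneway([[1, 2, 3], [2, 4, 3]]))   # (3.0, 2, 2)
```

Removing the between-subject sum of squares before forming the error term is what distinguishes this design from an ordinary one-way ANOVA, and it is why large individual differences (as in Fig. 1) need not mask a consistent within-subject effect.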

Note also the large individual variability in Fig. 1. Individual scores ranged from chance-level performance (6%, for C2 and C3; chance is 8.3% in this 12-alternative task) to 56% (C7) for the low-range melodies, from 8% (C3) to 86% (C7) for the middle-range melodies, and from 17% (C3) to nearly perfect performance (97%, C6) for the high-range melodies.
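Whether a score is distinguishable from guessing can be checked with a one-tailed binomial test. The sketch assumes the 36 trials per frequency range described in the Procedure and independent trials with a guessing probability of 1/12; it is illustrative, not the analysis reported here, and the function names are hypothetical.

```python
from math import comb


def p_at_least(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))


def min_correct_above_chance(n, p, alpha=0.05):
    """Smallest k whose one-tailed P(X >= k) under pure guessing is below alpha."""
    for k in range(n + 1):
        if p_at_least(k, n, p) < alpha:
            return k
    return None


n_trials, p_guess = 36, 1 / 12
k = min_correct_above_chance(n_trials, p_guess)
print(k, f"{100 * k / n_trials:.1f}%")   # 7 19.4%
```

Under these assumptions, 2 or 3 correct responses out of 36 (6% or 8%) are consistent with guessing, while about 7 correct (19.4%) would be needed to exceed chance at p < 0.05.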

Experiment 2: Melody recognition with pure-tone stimuli

Because Exp. 1 used complex harmonic tones, their pitch could theoretically be conveyed by multiple mechanisms, including the salient pitch of the resolved lower harmonics and the less salient pitch of the unresolved harmonics (Oxenham, Bernstein, & Penagos, 2004; Ritsma, 1967; Schouten, Ritsma, & Cardozo, 1962). The variable but generally poor melody recognition in Exp. 1 could therefore result from poor temporal representation, poor place representation, or both. Exp. 2 used pure tones as stimuli in an attempt to improve melody recognition. The working hypothesis was that a pure tone would accurately represent temporal pitch at least up to the roughly 400-Hz envelope cutoff frequency (Kiefer, von Ilberg, Rupprecht, Hubner-Egner, & Knecht, 2000; Loizou, Stickney, Mishra, & Assmann, 2003; Vandali, 2001). In addition, a pure tone would represent place pitch more accurately than a complex harmonic tone, because the former activates 1 or 2 electrodes whereas the latter activates more.

Four of the 11 cochlear implant subjects participated in this experiment (C1, C2, C8, and C9). The same 3 sets of 12 melodies were used, but the notes were pure tones instead of the complex harmonic tones of Exp. 1. Otherwise, the procedure was identical to that of Exp. 1.

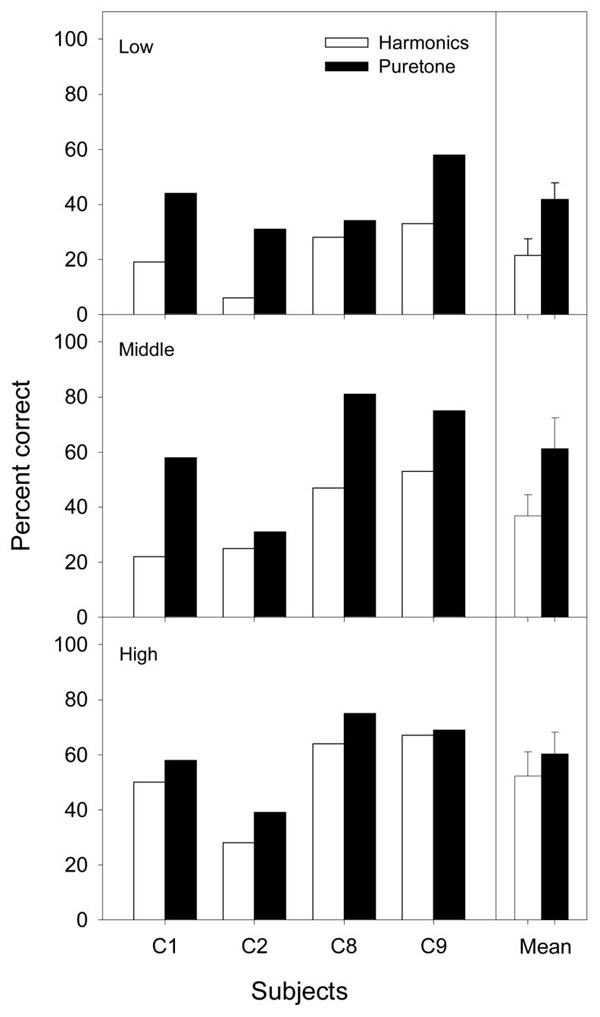

Results

Figure 2 compares the percentage of melodies identified correctly for complex harmonic tones (unfilled bars, from Figure 1) and pure tones (filled bars) in the low (top panel), middle (middle panel), and high (bottom panel) frequency ranges. Individual subjects' data are arranged along the x-axis, and the rightmost position of each panel shows the mean score and one standard error of the mean. Overall, pure-tone melodies produced significantly better performance than complex harmonic tones [F(1,3) = 84.2, p < 0.05]. Frequency range also significantly affected the amount of improvement [F(2,6) = 15.5, p<0.05]: the improvement was 20.3 percentage points in the low-frequency range, 24.5 percentage points in the middle-frequency range, and 8.0 percentage points in the high-frequency range. These results are consistent with the working hypothesis that better representation of both temporal and place pitch produced better melody recognition with pure tones than with complex harmonic tones, particularly for low- and middle-range melodies. The results also indicate that current cochlear implants cannot process complex pitch effectively.

Figure 2.

Melody recognition scores for 4 cochlear implant users in the low, middle, and high frequency ranges with melodies presented as either complex harmonic tones or pure tones. The rightmost part of the figure shows mean melody recognition scores for complex harmonic tones and pure tones in each melody range. Error bars represent one standard error of the mean.

Experiment 3: Melody recognition as a function of number of electrodes

Another way to evaluate the relative contributions of temporal and spectral cues to cochlear implant performance is to systematically reduce the number of activated electrodes. This method has been used effectively to study speech recognition (Fishman, Shannon, & Slattery, 1997; Friesen, Shannon, Baskent, & Wang, 2001; Garnham, O'Driscoll, Ramsden, & Saeed, 2002) but has not been applied to music perception. Because speech recognition and melody recognition rely on very different cues (Smith, Delgutte, & Oxenham, 2002), it is of interest whether melody recognition shows a different pattern of results than speech recognition as the number of electrodes is reduced.

Methods

Subjects

Only Nucleus users participated in the next two experiments because of limited subject availability and access to the Nucleus device mapping interface. These Nucleus subjects were C1, C2, C3, C10, and C11.

Stimuli

The same 12 melodies consisting of complex harmonic tones were used in this experiment. Again, the melodies were presented in the low, middle, and high frequency ranges.

Procedure

This experiment followed the same protocol as previous cochlear implant speech studies (e.g., Fishman, Shannon, & Slattery, 1997). The intent of this protocol was to study the effects of processing parameters, rather than the effect of learning, on melody recognition. Experimental CIS strategies with different numbers of electrodes were implemented on a laboratory-owned speech processor. For subjects C1, C10, and C11, six experimental maps were created, with 1, 2, 4, 7, 10, or 20 activated electrodes. Because C2 had only 16 functioning electrodes, this subject's 6 maps had 1, 2, 4, 7, 10, or 16 electrodes. C3 had only 4 maps, with 1, 4, 7, or 12 electrodes. The test order of the experimental maps was randomized for each subject.

Table 2 shows the frequency ranges assigned to the activated electrodes in the 6 maps for subject C1; subjects C10 and C11 had similar maps. Because subjects C2 and C3 had only 16 and 12 usable electrodes, respectively, in their original maps, the specific electrodes activated in their experimental maps differed from those of C1, C10, and C11. The electrodes were chosen to be evenly spaced and as far apart as possible; for example, subject C2 had electrodes 11 and 19 activated in the 2-electrode map. All subjects used the MP1+2 stimulation mode. Threshold (T) and comfortable loudness (C) levels were determined individually for each electrode. Because the per-channel stimulation rate increased as the number of activated electrodes was reduced, T and C levels were reassessed for each new map to ensure that it produced sensations between threshold and comfortable loudness. All subjects practiced with a body-worn loaner processor until they felt comfortable taking the melody test.

Table 2.

Maps for subject C1 showing the frequency band (in Hz) assigned to each activated electrode when 20, 10, 7, 4, 2, or 1 electrode(s) are activated.

| # activated electrodes | Electrode: frequency band (Hz) |
|---|---|
| 20 | 22: 187-312; 21: 312-437; 20: 437-562; 19: 562-687; 18: 687-812; 17: 812-937; 16: 937-1062; 15: 1062-1187; 13: 1187-1437; 12: 1437-1687; 11: 1687-1937; 10: 1937-2312; 9: 2312-2687; 8: 2687-3187; 7: 3187-3687; 6: 3687-4312; 4: 4312-5062; 3: 5062-5937; 2: 5937-6937; 1: 6937-7937 |
| 10 | 21: 187-437; 19: 437-687; 17: 687-1062; 15: 1062-1437; 12: 1437-1937; 10: 1937-2562; 8: 2562-3437; 6: 3437-4562; 3: 4562-6062; 1: 6062-7937 |
| 7 | 21: 187-562; 18: 562-1062; 15: 1062-1562; 11: 1562-2312; 8: 2312-3437; 4: 3437-5187; 1: 5187-7937 |
| 4 | 20: 187-1062; 15: 1062-2062; 9: 2062-4062; 3: 4062-7937 |
| 2 | 16: 187-1062; 6: 1062-7937 |
| 1 | 10: 187-7937 |
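One simple rule for choosing electrodes that are "evenly spaced and as far apart as possible" is to split the active array into equal groups and take the middle electrode of each. The sketch below is hypothetical: it reproduces the 10- and 4-electrode selections in Table 2 but not the 7-, 2-, and 1-electrode ones (for two electrodes it picks 17 and 6 rather than 16 and 6), so it is not the rule the authors used. It also does not recompute frequency bands, which in Table 2 are not simple merges of the 20-electrode bands.

```python
# Active electrodes in C1's 20-electrode map (Table 2), apical to basal.
FULL_MAP = [22, 21, 20, 19, 18, 17, 16, 15, 13, 12,
            11, 10, 9, 8, 7, 6, 4, 3, 2, 1]


def centred_subset(electrodes, n):
    """Hypothetical rule: split the array into n equal groups and take the
    middle electrode of each (integer arithmetic avoids rounding surprises)."""
    m = len(electrodes)
    return [electrodes[(2 * i + 1) * m // (2 * n)] for i in range(n)]


for n in (10, 7, 4, 2, 1):
    print(n, centred_subset(FULL_MAP, n))
```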

Results

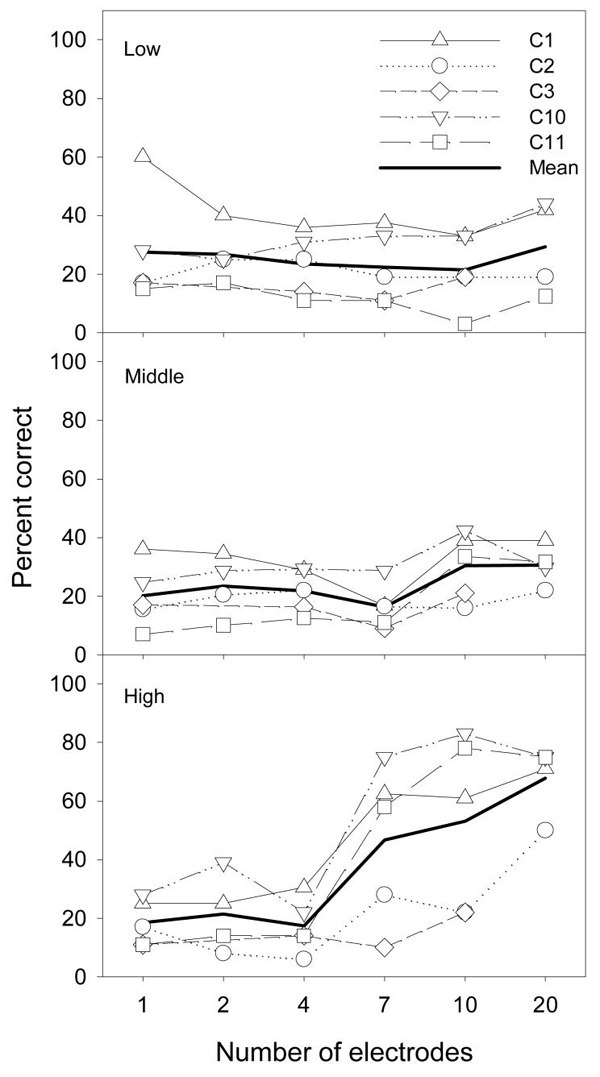

Figure 3 shows percent correct scores for melody recognition as a function of the number of electrodes in the low (top panel), middle (middle panel), and high (bottom panel) frequency ranges. Open symbols represent individual data, and the thick line represents the mean. For the statistical analysis, subject C2's 16-electrode data were treated as the 20-electrode condition, and subject C3's data were excluded because two conditions (2 and 10 electrodes) were missing.

Figure 3.

Melody recognition for 5 cochlear implant users in the low, middle, and high frequency ranges with 1, 2, 4, 7, 10, or 20 activated electrodes. The thick lines represent the mean scores.

Overall, the number of activated electrodes was a significant factor [F(5,15) = 15.2, p < 0.05], but the frequency range of the melodies was not [F(2,6) = 4.3, p > 0.05]. Because there was a significant interaction between the two factors [F(10,30) = 24.8, p < 0.05], the effect of the number of electrodes was analyzed separately for each frequency range. On average, the implant subjects performed equally poorly (20-30% correct) regardless of the number of electrodes in both the low-frequency range [F(5,15) = 0.2, p > 0.05] and the middle-frequency range [F(5,15) = 2.9, p > 0.05]. Note the relatively large individual variability, particularly for C1, who scored 60% correct in the low frequency range with only 1 electrode. In contrast, in the high-frequency range, melody recognition scores increased from around 20% with 1-4 electrodes to 40-65% with 7-20 electrodes [F(5,15) = 34.0, p < 0.05]. These results suggest that current cochlear-implant users have access to pitch cues only under limited conditions: they have limited access to the temporal pitch cue when the melody range is relatively low, and to the place pitch cue when the melody range is high (400-1000 Hz).

Experiment 4: Comparison with speech recognition

To compare speech and music perception directly, Exp. 4 measured phoneme recognition in the same cochlear-implant subjects tested in Exp. 3 and compared it with their melody recognition.

Methods

Subjects

The same 5 cochlear-implant users who participated in Exp. 3 participated in this experiment (C1, C2, C3, C10, and C11).

Stimuli

Vowel recognition was tested using 12 /hVd/ syllables: heed, hod, hud, hid, hawed, heard, head, hood, hayed, had, who'd, and hoed (Hillenbrand, Getty, Clark, & Wheeler, 1995), produced by 10 talkers (5 male and 5 female).

Consonant recognition was tested using 20 /aCa/ syllables: aba, aga, ama, asa, ava, acha, aja, ana, asha, awa, ada, aka, apa, ata, aya, afa, ala, ara, atha, and aza (Shannon, Jensvold, Padilla, Robert, & Wang, 1999), produced by 8 talkers (5 male and 3 female).

Procedure

Stimuli were presented via direct connection from a computer sound card to a loaner speech processor. As in Exp. 3, the loaner processor held both the clinical map and the experimental maps with different numbers of activated electrodes.

Before each of the vowel and consonant tests, the subjects practiced as long as needed to feel comfortable with the interface and the stimuli. The tests were given in a sound-attenuated room. For each test, the volume was adjusted to a level the subject judged comfortable, using both the computer's and the loaner processor's volume controls. Each token from each talker was presented 3 times in random order. Overall percent correct scores were reported as the outcome measure.
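The size of each phoneme test follows from the design: tokens x talkers x 3 repetitions, assuming every talker produced every token, as the Procedure implies. The scoring helper is a hypothetical illustration of the overall percent-correct measure.

```python
VOWELS = ["heed", "hod", "hud", "hid", "hawed", "heard",
          "head", "hood", "hayed", "had", "who'd", "hoed"]
CONSONANTS = ["aba", "aga", "ama", "asa", "ava", "acha", "aja", "ana", "asha", "awa",
              "ada", "aka", "apa", "ata", "aya", "afa", "ala", "ara", "atha", "aza"]


def n_trials(n_tokens, n_talkers, reps=3):
    """Trials in one test if every talker's token is presented reps times."""
    return n_tokens * n_talkers * reps


def percent_correct(responses):
    """Overall percent correct from (presented, reported) pairs."""
    return 100.0 * sum(p == r for p, r in responses) / len(responses)


print(n_trials(len(VOWELS), 10), n_trials(len(CONSONANTS), 8))
print(f"chance: {100 / len(VOWELS):.1f}% vowels, {100 / len(CONSONANTS):.1f}% consonants")
```

This gives 360 vowel trials and 480 consonant trials, with chance levels of 8.3% and 5%, matching the chance levels quoted in the Results.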

Results

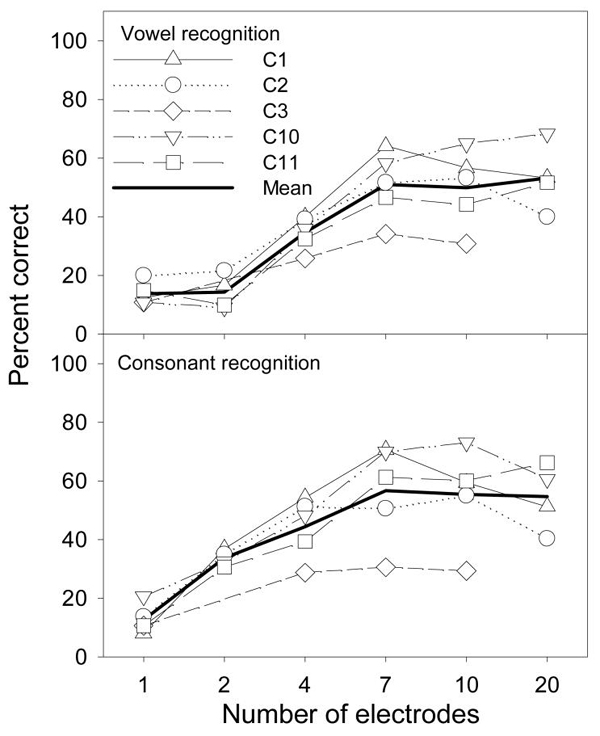

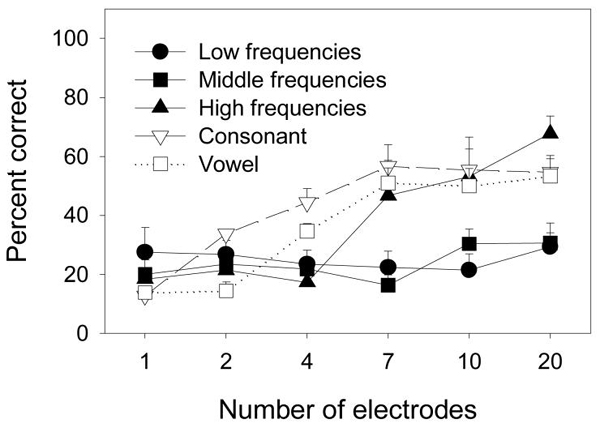

Figure 4 shows vowel (top panel) and consonant (bottom panel) recognition scores as a function of the number of activated electrodes. Open symbols represent individual data, and the thick line represents the mean. Chance performance is 8% for vowel recognition and 5% for consonant recognition. Although vowel and consonant scores showed the same overall trend, they differed significantly [F(1,3) = 18.4, p < 0.05]. Post-hoc tests showed significant differences in the 2-electrode condition (vowel=14% and consonant=34%) and the 4-electrode condition (vowel=35% and consonant=44%). In contrast to the flat pattern observed for low- and middle-range melody recognition, both vowel and consonant recognition increased monotonically with the number of electrodes [F(5,15) = 52.7, p < 0.05]. Consistent with previous results (e.g., Fishman, Shannon, & Slattery, 1997), both vowel and consonant recognition reached an asymptotic level of performance (~50%) at 7 electrodes.

Figure 4.

Vowel and consonant recognition scores for 5 cochlear implant users with 1, 2, 4, 7, 10, or 20 activated electrodes. The thick lines represent the mean scores.

Figure 5 contrasts mean phoneme recognition scores (open symbols) with mean melody scores (filled symbols), obtained from the same subjects. There were clear similarities and differences between melody and speech data. High-frequency melody recognition resembled the monotonically increasing function for vowel and consonant recognition, whereas low- and middle-frequency melody recognition functions were flat. ANOVA showed no statistically significant difference between high-frequency melody recognition and either consonant recognition [F(1,3) = 1.1, p > 0.05] or vowel recognition [F(1,3) = 0.2, p > 0.05].

Figure 5.

Mean melody recognition scores for cochlear implant users in the low, middle, and high frequency ranges using various numbers of electrodes (filled symbols). Mean vowel and consonant scores for the same users are depicted as open symbols. Error bars represent one standard error of the mean.

On the other hand, ANOVA revealed a statistically significant difference between low-frequency melody recognition and consonant recognition [F(1,3) = 11.6, p < 0.05] as well as between middle-frequency melody recognition and consonant recognition [F(1,3) = 64.1, p < 0.05]. There was a statistically significant difference between middle-frequency melody recognition and vowel recognition [F(1,3) = 30.6, p < 0.05], but there was no statistically significant difference between low-frequency melody recognition and vowel recognition [F(1,3) = 5.6, p = 0.09]. If only 1-, 4-, 7-, and 10-electrode conditions were considered, which would allow for C3's data to be included, then a significant difference would be observed between low-frequency melody recognition and vowel recognition [F(1,4) = 11.3, p < 0.05].

Discussion

Melody recognition and speech recognition

To further examine the relationship between melody and speech recognition, two-tailed bivariate Pearson correlations were computed between melody recognition in each of the three ranges (Fig. 1) and both consonant and vowel recognition scores (the last two columns of Table 1). Not surprisingly, melody recognition was significantly correlated between the low and middle ranges (r=0.64, p<0.05), between the low and high ranges (r=0.62, p<0.05), and between the middle and high ranges (r=0.80, p<0.01). Similarly, consonant and vowel recognition were significantly correlated (r=0.72, p<0.05). However, melody recognition in none of the three ranges was significantly correlated with phoneme recognition (p>0.05). This lack of correlation supports the hypothesis that melody recognition and speech recognition involve two different processes (e.g., Smith, Delgutte, & Oxenham, 2002; Zatorre, Belin, & Penhune, 2002).
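The reported correlations can be converted to t statistics with df = n - 2 to see why they reach significance. The sketch assumes n = 11 for the melody-melody correlations and n = 10 for correlations involving phoneme scores (C9 was not tested); for reference, the two-tailed 5% critical values of t are 2.262 for 9 df and 2.306 for 8 df. The function names are hypothetical.

```python
import math


def pearson_r(x, y):
    """Sample Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing H0: rho = 0."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


for label, r, n in [("low vs middle", 0.64, 11), ("middle vs high", 0.80, 11),
                    ("consonant vs vowel", 0.72, 10)]:
    print(f"{label}: t({n - 2}) = {r_to_t(r, n):.2f}")
```

For example, r = 0.64 with 11 subjects gives t(9) of about 2.50, and r = 0.80 gives t(9) = 4.00, above the two-tailed 1% critical value of 3.250.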

Individual variability

Although overall performance was low, relatively large individual variability was still observed in cochlear-implant melody recognition (see Fig. 1). Some of this variability may be due to device limitations. For example, subject C4, the only user of the SPEAK strategy, which typically has a pulse rate of 250 Hz and is therefore unlikely to convey useful temporal pitch cues even for the low-range melodies, showed the largest difference in performance across frequency ranges of any subject. To further probe possible causes and predictors of melody recognition, correlational analyses were performed between subject variables (see Table 1) and melody recognition scores (Fig. 1). Except for a significant negative correlation (r=-0.79, p<0.01) between age and middle-range melody recognition, no significant correlation was found between any of the three subject variables (age, duration of deafness, and duration of implant use) and any of the three melody-range scores. Although the present sample is relatively small, the results suggest that at present we neither understand the causes of the large individual variability nor can predict it.
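The argument about SPEAK's 250-Hz rate can be made quantitative. A pulse train can sample an envelope periodicity only up to half its rate (the Nyquist limit), and stricter criteria of several pulses per F0 cycle are sometimes applied; the 4-pulses-per-cycle value below is an assumed illustration, as are the function names.

```python
import math


def max_coded_f0(pulse_rate_pps, pulses_per_cycle=2.0):
    """Highest envelope periodicity a fixed-rate pulse train can sample,
    given a minimum number of pulses per F0 cycle (2 = Nyquist limit)."""
    return pulse_rate_pps / pulses_per_cycle


def fraction_below(range_hz, limit_hz):
    """Fraction of a frequency range, on a log (octave) scale, below limit_hz."""
    lo, hi = range_hz
    if limit_hz <= lo:
        return 0.0
    if limit_hz >= hi:
        return 1.0
    return math.log2(limit_hz / lo) / math.log2(hi / lo)


LOW_RANGE_HZ = (104, 262)
for ppc in (2.0, 4.0):
    limit = max_coded_f0(250.0, ppc)
    print(f"{ppc:.0f} pulses/cycle: limit {limit:.1f} Hz, "
          f"{100 * fraction_below(LOW_RANGE_HZ, limit):.0f}% of the low range")
```

At 250 pulses per second, even the Nyquist limit (125 Hz) covers only about the bottom fifth of the low melody range on an octave scale, and a 4-pulse criterion (62.5 Hz) covers none of it.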

Melody recognition and frequency resolution

Because psychophysical data have shown that cochlear-implant users can discriminate temporal pitch up to at least 300 Hz (e.g., Pijl, 1995; Zeng, 2002), it may seem surprising that the low-range melodies produced the poorest recognition. The reason for this discrepancy is that the psychophysical data are obtained by varying the stimulation rate on a single electrode, whereas the melody recognition data are collected through the implant user's speech processor (CIS, SPEAK, or ACE), which does not explicitly extract and encode the fundamental frequency. This discrepancy reveals the limitation of current cochlear implants in extracting and encoding low-frequency temporal information (e.g., Geurts & Wouters, 2001; Green, Faulkner, & Rosen, 2002, 2004; Qin & Oxenham, 2005; Xu, Tsai, & Pfingst, 2002; Zeng, Nie, Stickney, Kong, Vongphoe, Bhargave et al., 2005).

Better performance in the high frequency range, as shown in the present study, is likely a result of the greater frequency resolution of the frequency-to-electrode map in that range. Current implants typically use a Greenwood-like map, which provides proportionally more channels in the middle- to high-frequency range than in the low-frequency range, at least on a logarithmic scale (Greenwood, 1990). For example, to divide a broad bandwidth from 100 to 8000 Hz into 20 narrow frequency bands, a Greenwood map would allocate 2 channels to the 200-400 Hz range but 4-5 channels to the 500-1000 Hz range (see Table 3). Indeed, psychophysical evidence has shown lower pitch difference limens when more filters are allocated to the F0 range (Geurts & Wouters, 2001) and better melody recognition with semitone-spaced filters for mid-frequency melodies (Kasturi & Loizou, 2007).
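The channel counts quoted here can be checked against the 20-electrode map of subject C1 in Table 2, used as a stand-in for a Greenwood-like allocation (Table 3 itself is not reproduced). The sketch counts the analysis bands that overlap a frequency interval; applying it to the melody F0 ranges shows how few places are available to carry a low-range melody. It assumes idealized non-overlapping bands and considers F0 only, not the harmonics; the function name is hypothetical.

```python
# Band edges (Hz) of C1's 20-electrode map in Table 2.
EDGES_HZ = [187, 312, 437, 562, 687, 812, 937, 1062, 1187, 1437, 1687,
            1937, 2312, 2687, 3187, 3687, 4312, 5062, 5937, 6937, 7937]


def channels_overlapping(lo_hz, hi_hz, edges=EDGES_HZ):
    """Number of analysis bands that overlap the interval [lo_hz, hi_hz]."""
    return sum(1 for a, b in zip(edges[:-1], edges[1:]) if a < hi_hz and b > lo_hz)


INTERVALS = {"200-400 Hz": (200, 400), "500-1000 Hz": (500, 1000),
             "low melodies": (104, 262), "middle melodies": (207, 523),
             "high melodies": (414, 1046)}
for label, (lo, hi) in INTERVALS.items():
    print(f"{label}: {channels_overlapping(lo, hi)} channel(s)")
```

The map yields 2 bands for 200-400 Hz and 5 for 500-1000 Hz, and 1, 3, and 6 bands for the low, middle, and high melody ranges, respectively.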

However, caution is needed when generalizing from increased frequency resolution to functional improvement (e.g., Carroll & Zeng, 2007). If the devices cover roughly equal frequency ranges, so that frequency resolution is proportional to the number of electrodes, one would expect users of the 22-electrode Nucleus device to perform better than users of the 16-electrode Clarion or 12-electrode Med-El devices. The present sample was too small for a meaningful statistical comparison, but no such trend was observed (see Fig. 1). A study with a larger sample, comparing 15 Clarion and 15 Nucleus users, also found no difference in melody recognition (Spahr & Dorman, 2004). Channel interactions may be one factor limiting the extent to which spectral resolution can be improved by increasing the number of stimulating electrodes.
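Under the equal-range assumption, resolution scales simply with electrode count. The arithmetic below assumes, purely for illustration, that every device spreads its electrodes logarithmically over the same hypothetical 250 to 8000 Hz range (five octaves). Real devices use different ranges and spacings.

```python
import math

def semitones_per_channel(f_lo, f_hi, n_channels):
    """Average bandwidth per channel, in semitones, for log-spaced channels."""
    return 12.0 * math.log2(f_hi / f_lo) / n_channels

# Hypothetical common range of 250-8000 Hz for all three electrode counts.
for device, n in [("12-electrode", 12), ("16-electrode", 16), ("22-electrode", 22)]:
    print(f"{device}: {semitones_per_channel(250.0, 8000.0, n):.2f} semitones per channel")
```

Under this idealization the 12-, 16-, and 22-electrode cases give 5.00, 3.75, and about 2.73 semitones per channel. Even with 22 electrodes, a channel spans more than two semitones on average.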

Pure tones versus complex harmonic tones

The present study showed significantly better melody recognition with pure tones than with complex harmonic tones. This difference indicates that current implants do not adequately encode complex pitch. With pure tones, typically only one electrode is stimulated per note, whereas with complex harmonic tones several electrodes are likely to be stimulated per note. If the lower harmonics were resolved and mapped to their appropriate places in the cochlea, one would not expect such a difference between pure tones and harmonic stimuli, because in normal hearing the pitch of a complex tone is dominated by its resolved lower harmonics represented at their correct tonotopic places (Dai, 2000; Oxenham, Bernstein, & Penagos, 2004; Ritsma, 1967). Existing evidence suggests that electric hearing has not yet restored pure-tone-like frequency resolution and selectivity, let alone the sensation of harmonic pitch (Zeng, 2004).
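The contrast between one electrode per pure-tone note and several per harmonic note can be illustrated by assigning each harmonic to a filterbank band. The sketch assumes a hypothetical 20-band filterbank, logarithmically spaced from 100 to 8000 Hz. It treats a harmonic as having "a band to itself" when no other harmonic shares that band. Both choices are simplifications. A harmonic that is alone in its band is not thereby delivered to its correct tonotopic place.

```python
from collections import Counter

def log_edges(f_lo, f_hi, n_bands):
    """Band edges for n_bands logarithmically spaced bands from f_lo to f_hi."""
    ratio = (f_hi / f_lo) ** (1.0 / n_bands)
    return [f_lo * ratio ** i for i in range(n_bands + 1)]

def channel_of(freq, edges):
    """Index of the band containing freq, or None if outside the filterbank."""
    for i in range(len(edges) - 1):
        if edges[i] <= freq < edges[i + 1]:
            return i
    return None

def harmonic_channels(f0, edges, n_harmonics=10):
    """Band index of each harmonic, plus the harmonics alone in their band."""
    bands = [channel_of(h * f0, edges) for h in range(1, n_harmonics + 1)]
    counts = Counter(b for b in bands if b is not None)
    alone = [h for h, b in enumerate(bands, start=1) if b is not None and counts[b] == 1]
    return bands, alone

edges = log_edges(100.0, 8000.0, 20)   # hypothetical 20-band filterbank
pure_band = channel_of(200.0, edges)   # a 200 Hz pure tone falls in one band
bands, alone = harmonic_channels(200.0, edges)
print("pure tone band:", pure_band)
print("harmonic bands:", bands)
print("harmonics alone in their band:", alone)
```

For a 200 Hz note in this hypothetical filterbank, the pure tone falls in a single band, whereas its first ten harmonics occupy eight bands. Harmonics 1 to 6 each have a band to themselves, while harmonics 7 and 8, and harmonics 9 and 10, share bands.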

Encoding melody in cochlear implants

Although music is seldom made up of pure tones in real-life listening situations, the better performance with pure tones than with harmonic tones suggests that, as an intermediate step, pure-tone-like stimulation could be used to improve the encoding of melody in cochlear implants. There are two reasons for taking this intermediate step. First, restoring harmonic sensation, particularly the salient pitch of resolved harmonics, is likely to remain difficult in cochlear implants for the foreseeable future. Second, pure-tone encoding could be implemented relatively easily to address a shortcoming of current commercial cochlear implants, which encode pitch information only implicitly, in the temporal envelope.

Explicit pitch coding can be implemented by varying the carrier rate on one or more electrodes (e.g., Fearn & Wolfe, 2000; Pijl, 1995; Zeng, 2002) or by varying the modulation frequency of a fixed-rate carrier (e.g., Geurts & Wouters, 2001; Laneau, Wouters, & Moonen, 2006; McKay, McDermott, & Clark, 1994). Such strategies can improve melody recognition or melody-related performance, but often at the expense of speech recognition (e.g., Green, Faulkner, Rosen, & Macherey, 2005).

An alternative approach is to use place cues to improve melody or voice pitch recognition. Wouters and colleagues have suggested allocating more electrodes to the fundamental frequency range than current devices do (Geurts & Wouters, 2004; Laneau, Wouters, & Moonen, 2004). Because current devices have a limited number of electrodes, this requires a trade-off in channel density between low and high frequencies. Other ways of increasing the number of effective channels would require new electrode designs and technology (Hillman, Badi, Normann, Kertesz, & Shelton, 2003) or the use of virtual channels (Donaldson, Kreft, & Litvak, 2005; Townshend, Cotter, Van Compernolle, & White, 1987). The extent to which actual cochlear implant users benefit from these enriched signals or alternative processing schemes will likely depend on their residual auditory capacities.
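Virtual channels are commonly created by current steering, that is, by dividing current between two adjacent electrodes. The sketch below is an idealized illustration, not the procedure of the cited studies. It splits a current with a weight alpha and assumes, as a simplification, that the perceived place moves linearly from one electrode to the next as alpha goes from 0 to 1. The current units and step counts are hypothetical.

```python
def steer_current(total_current, alpha):
    """Split total_current between two adjacent electrodes; alpha in [0, 1]
    is the fraction sent to the more basal electrode (hypothetical units)."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return (1.0 - alpha) * total_current, alpha * total_current

def nominal_place(electrode_index, alpha):
    """Idealized virtual-channel position between electrode_index and the
    next electrode, assuming (hypothetically) a linear shift with alpha."""
    return electrode_index + alpha

def virtual_site_count(n_electrodes, steps_per_gap):
    """Distinct stimulation sites when each gap between adjacent electrodes
    is divided into steps_per_gap equal steps (endpoints counted once)."""
    return (n_electrodes - 1) * steps_per_gap + 1

print(steer_current(100.0, 0.25))   # current split across two electrodes
print(nominal_place(3, 0.5))        # halfway between electrodes 3 and 4
print(virtual_site_count(16, 4))    # idealized site count for 16 electrodes
```

With 16 electrodes and 4 steering steps per gap, for example, the idealized count rises from 16 physical sites to 61. How many of these sites a given user can actually discriminate remains an empirical question.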

Summary

Cochlear-implant melody recognition is best with high-frequency melodies (414-1046 Hz) rather than low- or middle-frequency melodies (104-262 and 207-523 Hz, respectively), and with pure tones rather than complex harmonic tones. The number of electrodes had no effect on low- and middle-frequency melody recognition but affected high-frequency melody recognition in a way similar to phoneme recognition. As with speech recognition, cochlear-implant melody recognition exhibited large individual variability, but the source of this variability appears to differ from the source of the variability in speech recognition.

Acknowledgments

We would like to thank all of the cochlear-implant users who devoted their time to this study. We also thank Rachel Cruz for her help in programming the cochlear-implant users' maps and three anonymous reviewers for their helpful comments. Sonya Singh received a research fellowship from the UCI Undergraduate Research Opportunity Program and is currently a medical student at UCI. Ying-Yee Kong is currently affiliated with the Department of Speech-Language Pathology and Audiology at Northeastern University, Boston. This work was supported by a grant from the National Institutes of Health (2R01 DC002267).

References

- Boex C, Baud L, Cosendai G, Sigrist A, Kos MI, Pelizzone M. Acoustic to electric pitch comparisons in cochlear implant subjects with residual hearing. J Assoc Res Otolaryngol. 2006;7(2):110–124. doi: 10.1007/s10162-005-0027-2.

- Carroll J, Zeng FG. Fundamental frequency discrimination and speech perception in noise in cochlear implant simulations. Hear Res. 2007;231(1-2):42–53. doi: 10.1016/j.heares.2007.05.004.

- Dai H. On the relative influence of individual harmonics on pitch judgment. J Acoust Soc Am. 2000;107(2):953–959. doi: 10.1121/1.428276.

- Donaldson GS, Kreft HA, Litvak L. Place-pitch discrimination of single- versus dual-electrode stimuli by cochlear implant users (L). J Acoust Soc Am. 2005;118(2):623–626. doi: 10.1121/1.1937362.

- Fearn R, Wolfe J. Relative importance of rate and place: experiments using pitch scaling techniques with cochlear implants recipients. Ann Otol Rhinol Laryngol Suppl. 2000;185:51–53. doi: 10.1177/0003489400109s1221.

- Fishman KE, Shannon RV, Slattery WH. Speech recognition as a function of the number of electrodes used in the SPEAK cochlear implant speech processor. J Speech Lang Hear Res. 1997;40(5):1201–1215. doi: 10.1044/jslhr.4005.1201.

- Friesen LM, Shannon RV, Baskent D, Wang X. Speech recognition in noise as a function of the number of spectral channels: comparison of acoustic hearing and cochlear implants. J Acoust Soc Am. 2001;110(2):1150–1163. doi: 10.1121/1.1381538.

- Garnham C, O'Driscoll M, Ramsden, Saeed S. Speech understanding in noise with a Med-El COMBI 40+ cochlear implant using reduced channel sets. Ear Hear. 2002;23(6):540–552. doi: 10.1097/00003446-200212000-00005.

- Geurts L, Wouters J. Coding of the fundamental frequency in continuous interleaved sampling processors for cochlear implants. J Acoust Soc Am. 2001;109(2):713–726. doi: 10.1121/1.1340650.

- Geurts L, Wouters J. Better place-coding of the fundamental frequency in cochlear implants. J Acoust Soc Am. 2004;115(2):844–852. doi: 10.1121/1.1642623.

- Gfeller K, Lansing CR. Melodic, rhythmic, and timbral perception of adult cochlear implant users. J Speech Hear Res. 1991;34(4):916–920. doi: 10.1044/jshr.3404.916.

- Gfeller K, Turner C, Mehr M, Woodworth G, Fearn R, Knutson J, et al. Recognition of familiar melodies by adult cochlear implant recipients and normal-hearing adults. Cochlear Implants Int. 2002;3(1):29–53. doi: 10.1179/cim.2002.3.1.29.

- Green T, Faulkner A, Rosen S. Spectral and temporal cues to pitch in noise-excited vocoder simulations of continuous-interleaved-sampling cochlear implants. J Acoust Soc Am. 2002;112(5 Pt 1):2155–2164. doi: 10.1121/1.1506688.

- Green T, Faulkner A, Rosen S. Enhancing temporal cues to voice pitch in continuous interleaved sampling cochlear implants. J Acoust Soc Am. 2004;116(4 Pt 1):2298–2310. doi: 10.1121/1.1785611.

- Green T, Faulkner A, Rosen S, Macherey O. Enhancement of temporal periodicity cues in cochlear implants: effects on prosodic perception and vowel identification. J Acoust Soc Am. 2005;118(1):375–385. doi: 10.1121/1.1925827.

- Greenwood DD. A cochlear frequency-position function for several species--29 years later. J Acoust Soc Am. 1990;87(6):2592–2605. doi: 10.1121/1.399052.

- Hillenbrand J, Getty LA, Clark MJ, Wheeler K. Acoustic characteristics of American English vowels. J Acoust Soc Am. 1995;97(5 Pt 1):3099–3111. doi: 10.1121/1.411872.

- Hillman T, Badi AN, Normann RA, Kertesz T, Shelton C. Cochlear nerve stimulation with a 3-dimensional penetrating electrode array. Otol Neurotol. 2003;24(5):764–768. doi: 10.1097/00129492-200309000-00013.

- Kasturi K, Loizou PC. Effect of filter spacing on melody recognition: acoustic and electric hearing. J Acoust Soc Am. 2007;122(2):EL29–34. doi: 10.1121/1.2749078.

- Kiefer J, von Ilberg C, Rupprecht V, Hubner-Egner J, Knecht R. Optimized speech understanding with the continuous interleaved sampling speech coding strategy in patients with cochlear implants: effect of variations in stimulation rate and number of channels. Ann Otol Rhinol Laryngol. 2000;109(11):1009–1020. doi: 10.1177/000348940010901105.

- Kong YY, Cruz R, Jones JA, Zeng FG. Music perception with temporal cues in acoustic and electric hearing. Ear Hear. 2004;25(2):173–185. doi: 10.1097/01.aud.0000120365.97792.2f.

- Kong YY, Stickney GS, Zeng FG. Speech and melody recognition in binaurally combined acoustic and electric hearing. J Acoust Soc Am. 2005;117(3 Pt 1):1351–1361. doi: 10.1121/1.1857526.

- Laneau J, Wouters J, Moonen M. Relative contributions of temporal and place pitch cues to fundamental frequency discrimination in cochlear implantees. J Acoust Soc Am. 2004;116(6):3606–3619. doi: 10.1121/1.1823311.

- Laneau J, Wouters J, Moonen M. Improved music perception with explicit pitch coding in cochlear implants. Audiol Neurootol. 2006;11(1):38–52. doi: 10.1159/000088853.

- Loizou PC, Stickney G, Mishra L, Assmann P. Comparison of speech processing strategies used in the Clarion implant processor. Ear Hear. 2003;24(1):12–19. doi: 10.1097/01.AUD.0000052900.42380.50.

- McDermott HJ. Music perception with cochlear implants: a review. Trends Amplif. 2004;8(2):49–82. doi: 10.1177/108471380400800203.

- McDermott HJ, McKay CM, Vandali AE. A new portable sound processor for the University of Melbourne/Nucleus Limited multielectrode cochlear implant. J Acoust Soc Am. 1992;91(6):3367–3371. doi: 10.1121/1.402826.

- McKay CM, McDermott HJ, Clark GM. Pitch percepts associated with amplitude-modulated current pulse trains in cochlear implantees. J Acoust Soc Am. 1994;96(5 Pt 1):2664–2673. doi: 10.1121/1.411377.

- Nie K, Zeng FG, Barco A. Spectral and temporal cues in cochlear implant speech perception. Ear Hear. 2005 (submitted). doi: 10.1097/01.aud.0000202312.31837.25.

- Nimmons GL, Kang RS, Drennan WR, Longnion J, Ruffin C, Worman T, et al. Clinical assessment of music perception in cochlear implant listeners. Otol Neurotol. 2007 (published ahead of print). doi: 10.1097/mao.0b013e31812f7244.

- Oxenham AJ, Bernstein JG, Penagos H. Correct tonotopic representation is necessary for complex pitch perception. Proc Natl Acad Sci U S A. 2004;101(5):1421–1425. doi: 10.1073/pnas.0306958101.

- Pijl S. Musical pitch perception with pulsatile stimulation of single electrodes in patients implanted with the Nucleus cochlear implant. Ann Otol Rhinol Laryngol Suppl. 1995;166:224–227.

- Qin MK, Oxenham AJ. Effects of envelope-vocoder processing on F0 discrimination and concurrent-vowel identification. Ear Hear. 2005;26(5):451–460. doi: 10.1097/01.aud.0000179689.79868.06.

- Remez RE, Rubin PE, Pisoni DB, Carrell TD. Speech perception without traditional speech cues. Science. 1981;212(4497):947–949. doi: 10.1126/science.7233191.

- Ritsma RJ. Frequencies dominant in the perception of the pitch of complex sounds. J Acoust Soc Am. 1967;42(1):191–198. doi: 10.1121/1.1910550.

- Schouten JF, Ritsma RJ, Cardozo BL. Pitch of the residue. J Acoust Soc Am. 1962;34(8, Pt 2):1418–1424.

- Shannon RV, Jensvold A, Padilla M, Robert ME, Wang X. Consonant recordings for speech testing. J Acoust Soc Am. 1999;106(6):L71–74. doi: 10.1121/1.428150.

- Shannon RV, Zeng FG, Kamath V, Wygonski J, Ekelid M. Speech recognition with primarily temporal cues. Science. 1995;270(5234):303–304. doi: 10.1126/science.270.5234.303.

- Smith ZM, Delgutte B, Oxenham AJ. Chimaeric sounds reveal dichotomies in auditory perception. Nature. 2002;416(6876):87–90. doi: 10.1038/416087a.

- Spahr AJ, Dorman MF. Performance of subjects fit with the Advanced Bionics CII and Nucleus 3G cochlear implant devices. Arch Otolaryngol Head Neck Surg. 2004;130(5):624–628. doi: 10.1001/archotol.130.5.624.

- Townshend B, Cotter N, Van Compernolle D, White RL. Pitch perception by cochlear implant subjects. J Acoust Soc Am. 1987;82(1):106–115. doi: 10.1121/1.395554.

- Vandali AE. Emphasis of short-duration acoustic speech cues for cochlear implant users. J Acoust Soc Am. 2001;109(5 Pt 1):2049–2061. doi: 10.1121/1.1358300.

- Vandali AE, Whitford LA, Plant KL, Clark GM. Speech perception as a function of electrical stimulation rate: using the Nucleus 24 cochlear implant system. Ear Hear. 2000;21(6):608–624. doi: 10.1097/00003446-200012000-00008.

- Wilson BS, Finley CC, Lawson DT, Wolford RD, Eddington DK, Rabinowitz WM. Better speech recognition with cochlear implants. Nature. 1991;352(6332):236–238. doi: 10.1038/352236a0.

- Wilson BS, Finley CC, Lawson DT, Zerbi M. Temporal representations with cochlear implants. Am J Otol. 1997;18(6 Suppl):S30–34.

- Xu L, Tsai Y, Pfingst BE. Features of stimulation affecting tonal-speech perception: implications for cochlear prostheses. J Acoust Soc Am. 2002;112(1):247–258. doi: 10.1121/1.1487843.

- Zatorre RJ, Belin P, Penhune VB. Structure and function of auditory cortex: music and speech. Trends Cogn Sci. 2002;6(1):37–46. doi: 10.1016/s1364-6613(00)01816-7.

- Zeng FG. Temporal pitch in electric hearing. Hear Res. 2002;174(1-2):101–106. doi: 10.1016/s0378-5955(02)00644-5.

- Zeng FG. Auditory prostheses: past, present, and future. In: Zeng FG, Popper AN, Fay RR, editors. Cochlear Implants: Auditory Prostheses and Electric Hearing. Vol. 20. New York: Springer-Verlag; 2004. pp. 1–13.

- Zeng FG, Nie K, Stickney GS, Kong YY, Vongphoe M, Bhargave A, et al. Speech recognition with amplitude and frequency modulations. Proc Natl Acad Sci U S A. 2005;102(7):2293–2298. doi: 10.1073/pnas.0406460102.