Abstract

Rationale and Objectives

The U.S. Mammography Quality Standards Act (MQSA) mandates medical audits to track breast cancer outcomes data associated with interpretive performance. The objectives of our study were to assess the content and style of audits and to examine radiologists' use of, attitudes toward, and perceived value of mandated medical audits.

Materials and Methods

Radiologists (n=364) at mammography registries in seven U.S. states contributing data to the Breast Cancer Surveillance Consortium (BCSC) were invited to participate. Using a self-administered survey, we examined radiologists' demographic characteristics, clinical experience, and use of, attitudes toward, and perceived value of audit reports. Information on the content and style of the BCSC audits provided to radiologists and facilities was obtained from site investigators. Radiologists' characteristics were analyzed according to whether or not they self-reported receiving regular mammography audit reports. Latent class analysis was used to classify radiologists' individual perceptions of audit reports into overall probabilities of having “favorable,” “less favorable,” “neutral,” or “unfavorable” attitudes toward audit reports.

Results

Seventy-one percent (257 of 364) of radiologists completed the survey; two radiologists did not answer the audit survey question, leaving 255 in the final study cohort. Most survey respondents received regular audits (91%), paid close attention to their audit numbers (83%), found the reports valuable (87%), and felt that audit reports prompted them to improve interpretive performance (75%). Variability was noted in the style, target audience, and frequency of reports provided by the BCSC registries. One in four radiologists reported that if Congress mandates more intensive auditing requirements but does not provide funding to support this regulation, they may stop interpreting mammograms.

Conclusion

Radiologists working in breast imaging generally had favorable opinions of the congressionally mandated audit reports; however, almost one in ten radiologists reported that they did not receive audits.

Keywords: mammography, quality assurance, medical audit

Introduction

In 1992, the U.S. Congress enacted the Mammography Quality Standards Act (MQSA), which established the first national quality standards for mammography facilities in the United States. MQSA was initiated in response to concerns from the public and the medical community about extensive variability in the quality of mammography among facilities1,2. The goal of MQSA is to provide all women living in the U.S. with equal access to quality mammography, regardless of their geographic location. Under MQSA, mammography facilities must have a U.S. Food and Drug Administration (FDA)-approved accreditation body review their radiological equipment, personnel qualifications, and quality assurance processes every three years to ensure that baseline quality standards are met1, 3.

Mammography outcome audits are one of the quality assurance requirements for mammography facilities under MQSA. The basic elements of the MQSA medical audit include: 1) a method to collect follow-up data for positive mammograms (defined as mammograms with final BI-RADS® assessment categories of “Suspicious” or “Highly suggestive of malignancy”); 2) a system to collect pathology results (benign versus malignant) for all biopsies performed among mammograms interpreted as “Suspicious” or “Highly suggestive of malignancy”; 3) methods to correlate pathology and mammography results; and 4) review of known false negatives (examinations assessed as “negative,” “benign,” or “probably benign” that became known to the facility as positive for cancer within 12 months of the mammography examination).4,5 In addition, at least once every 12 months, facilities are required to designate an interpreting physician to review the medical outcomes data and notify other interpreting physicians that their individual results are available for review. FDA regulations do not specifically require that individual radiologists review their outcomes5. Approaches to implementing these elements are left to the facility's discretion.1, 4, 5
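To make the audit definitions above concrete, the following Python sketch labels a single exam as a true positive, false positive, false negative, or true negative. It is illustrative only: the `Exam` record, the `classify` function, and the approximation of "12 months" as 365 days are hypothetical choices, not part of MQSA or of any BCSC system. Category 0 is left unclassified because the definitions quoted here do not cover it, and applying the same 12-month window to positive exams is an additional assumption.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical coding of BI-RADS final assessment categories, following the
# MQSA audit definitions quoted in the text:
#   4 "Suspicious" and 5 "Highly suggestive of malignancy" are positive;
#   1 "negative", 2 "benign" and 3 "probably benign" are negative.
POSITIVE = {4, 5}
NEGATIVE = {1, 2, 3}
FOLLOW_UP_DAYS = 365  # "within 12 months", approximated as 365 days (assumption)


@dataclass
class Exam:
    birads: int                    # final assessment category, 0-5
    days_to_cancer: Optional[int]  # days from exam to a cancer diagnosis; None if none known


def classify(exam: Exam) -> Optional[str]:
    """Label an exam 'TP', 'FP', 'FN' or 'TN'.

    Returns None for categories the quoted definitions do not cover (e.g. 0).
    Applying the same 12-month window to positive exams is an assumption.
    """
    cancer = exam.days_to_cancer is not None and 0 <= exam.days_to_cancer <= FOLLOW_UP_DAYS
    if exam.birads in POSITIVE:
        return "TP" if cancer else "FP"
    if exam.birads in NEGATIVE:
        return "FN" if cancer else "TN"
    return None


exams = [Exam(4, 30), Exam(5, None), Exam(2, 200), Exam(1, None), Exam(0, None)]
print([classify(e) for e in exams])  # ['TP', 'FP', 'FN', 'TN', None]

# Known false negatives are the cases the MQSA audit asks facilities to review.
false_negatives = [e for e in exams if classify(e) == "FN"]
```

Counting these labels for each radiologist yields the raw numbers from which audit measures such as those summarized in Table 1 can be computed.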

To the best of our knowledge, only one previous qualitative study of 25 U.S. radiologists has explored radiologists' perceptions and use of their mammography audit data6. We had the opportunity to examine the attitudes and use of medical audits among radiologists working in Breast Cancer Surveillance Consortium (BCSC) mammography facilities. Over the last decade mammography facilities in seven U.S. states have submitted data to the BCSC7. The BCSC registries in turn provide data back to the facilities that could fulfill the minimum MQSA medical outcomes audit requirement. BCSC registries have the unique ability to provide substantially more audit data than the minimum MQSA requirements. While the BCSC registries have produced many scientific papers8, the content of the individual registry audit reports has not been reviewed and little is known about radiologists' attitudes and use of the audit data.

Our study goals were to examine whether radiologists report seeing their mammography audit results, and to describe the radiologists' attitudes about the audit and the perceived value of their reports. We also describe the style and content of performance audit data provided by the BCSC to participating facilities.

Materials and Methods

Institutional Review Board (IRB) and Informed Consent Process

The study was approved by the IRB of the University of Washington and all seven BCSC sites.

Radiologist Survey

We mailed a self-administered survey between January 2006 and September 2007 to all radiologists who were actively interpreting screening and diagnostic mammograms in 2005-2006 at seven geographically distinct BCSC sites. Details of BCSC practices and survey development have been reported previously7, 9-11. Seventy-one percent (257 of 364) of radiologists returned the survey with informed consent. A copy of the radiologist survey is available online12.

Survey questions included radiologist age, years of practice, affiliation with an academic medical center, completion of a breast imaging fellowship, estimated annual volume of screening and diagnostic mammograms, and percentage of practice time spent in breast imaging. The survey also included questions on mammography audits, including whether radiologists received audit reports showing their performance, the year they began to receive the reports, and how many times per year they received them. We excluded two radiologists who had missing data on the audit questions, leaving 255 radiologists for analysis. Respondents who reported receiving regular mammography audit reports were then asked to rate the following six statements about audit reports on a 5-point Likert scale (strongly disagree, disagree, neutral, agree, strongly agree):

I trust the accuracy of the reports.

I pay close attention to my audit numbers.

Gathering the audit report data is valuable to my practice.

Audit reports prompt me to review cancers missed on mammography.

Audit reports prompt me to improve interpretive performance.

If congress mandates more intensive auditing requirements but does not provide funding to support this regulation, I may stop interpreting mammograms.

BCSC Audit Performance Reports

In addition to the radiologist survey, we collected information on the content and format of reports that BCSC registries provided to facilities between 2003 and 2005. We used the 2003-2005 BCSC reports, as these were the reports that radiologists were most likely to have viewed prior to receipt of the radiologist survey. Data on audit content and format were obtained from the BCSC registry data managers and verified by principal investigators at each BCSC registry.

The results of the content analyses and details of the audit performance reports provided by the seven BCSC sites are presented in Table 1. All reports provided summary data at the facility level, and all but two sites also reported results for individual radiologists. The reports ranged in length from one to 12 pages of core data, with two sites providing additional pages of pathology data. Three sites used graphics in addition to numeric displays. Reports were distributed anywhere from quarterly to annually. All sites included information on the percentage of screening mammography exams assessed as BI-RADS category 0 (recommendation for further imaging) and categories 4/5 (recommendation for biopsy/surgical consultation). The other performance data varied among the sites. However, radiologists who were inclined to do so, and who knew how, could derive many common measures of performance from the information provided in the reports. Three of the seven sites included results of biopsies performed. Cancer outcome data, such as stage and the percentage of cancers that were minimal or node negative, were not available at every site.
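As an illustration of how such measures can be derived from report counts, the Python sketch below computes a recall rate, a cancer detection rate, and the three positive predictive values listed in Table 1. The `AuditCounts` fields, the helper names, and the example counts are hypothetical. The definitions (positive screens taken as BI-RADS 0, 4, or 5; PPV1 as cancers among positive screens; PPV2 as cancers among exams with a biopsy recommendation; PPV3 as cancers among biopsies performed) are assumptions based on common BI-RADS audit conventions, not taken from the BCSC reports themselves.

```python
from dataclasses import dataclass


@dataclass
class AuditCounts:
    """Hypothetical per-radiologist screening counts of the kind an audit report might list."""
    screens: int              # screening mammograms interpreted
    positive_screens: int     # screens assessed as positive (assumed: BI-RADS 0, 4, or 5)
    cancers_positive: int     # cancers diagnosed after a positive screen (true positives)
    biopsy_recommended: int   # screens with a biopsy recommendation (BI-RADS 4 or 5)
    cancers_recommended: int  # cancers among screens with a biopsy recommendation
    biopsies_performed: int   # biopsies actually performed
    cancers_biopsied: int     # cancers among biopsies performed


def _rate(numerator: int, denominator: int, scale: float) -> float:
    """Scaled ratio; NaN when the denominator is zero (measure undefined)."""
    return scale * numerator / denominator if denominator else float("nan")


def performance(c: AuditCounts) -> dict[str, float]:
    return {
        "recall_rate_pct": _rate(c.positive_screens, c.screens, 100),
        "cdr_per_1000": _rate(c.cancers_positive, c.screens, 1000),
        "ppv1_pct": _rate(c.cancers_positive, c.positive_screens, 100),
        "ppv2_pct": _rate(c.cancers_recommended, c.biopsy_recommended, 100),
        "ppv3_pct": _rate(c.cancers_biopsied, c.biopsies_performed, 100),
    }


# Illustrative counts only; not BCSC data.
example = AuditCounts(screens=2000, positive_screens=200, cancers_positive=8,
                      biopsy_recommended=30, cancers_recommended=7,
                      biopsies_performed=25, cancers_biopsied=7)
print({k: round(v, 1) for k, v in performance(example).items()})
# {'recall_rate_pct': 10.0, 'cdr_per_1000': 4.0, 'ppv1_pct': 4.0, 'ppv2_pct': 23.3, 'ppv3_pct': 28.0}
```

Returning NaN rather than raising keeps a report row printable when, for example, a low-volume radiologist had no biopsies performed in the reporting period.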

Table 1. Summary of Breast Cancer Surveillance Consortium (BCSC) Performance Reports, by site*.

| | Site 1 | Site 2 | Site 3 | Site 4 | Site 5 | Site 6 | Site 7 |
|---|---|---|---|---|---|---|---|
| Target audience for distribution: | | | | | | | |
| Individual radiologists | Yes | No | No | Yes | Yes | Yes | Yes |
| Mammography facility | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Format: | | | | | | | |
| Number of pages | 1 | 3† | 12 | 6† | 3 | 6 | 5 |
| Report uses figures | No | No | No | Yes | No | Yes | Yes |
| Frequency of reports | Annual | Annual | Annual | Quarterly & Annual | Annual‡ | Annual | Annual |
| BI-RADS data reported: | | | | | | | |
| Percent or number of screening mammograms with a recommendation for further imaging (BI-RADS category 0) | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Percent or number of screening mammograms with a recommendation for biopsy/surgical consult (BI-RADS categories 4/5) | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| Other performance data reported§ ‖: | | | | | | | |
| False negative exams | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| True positive exams | Yes | Yes | Yes | Yes | Yes | Yes | *** |
| False positive exams | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| PPV1 | Yes | *** | Yes | *** | Yes | Yes | Yes |
| PPV2 | Yes | No | Yes | *** | Yes | Yes | Yes |
| PPV3 | No | No | Yes | *** | *** | No | Yes |
| Abnormal interpretation (recall) rate | *** | *** | Yes | Yes | Yes | Yes | Yes |
| Data on breast biopsy: | | | | | | | |
| Biopsy results for individual women | No | Yes | No | Yes | Yes | No | Yes |
| Cancer outcome data: | | | | | | | |
| Cancer detection rate per 1,000 screening exams | *** | *** | Yes | *** | Yes | *** | Yes |
| Cancer staging | No | Yes | Yes | No | No | No | No |
| % of cancers found that are minimal disease (invasive <10 mm or DCIS) | No | *** | Yes | No | Yes | Yes | No |
| % of cancers found that are node negative | No | *** | No | No | Yes | No | Yes |

*Sites 1 and 2 did not compile performance reports after 2003 and 2005, respectively; thus, all data presented here for these sites represent performance reports sent in 2003 or 2005. Summary of changes to reports since 2005: Site 3 added a column to each facility-specific report that provides comparative statistics for all mammography facilities in its local registry; Site 4 added a table that provides biopsy yield for the most recent data (2005 reports for Site 4 were based on data with a 365-day lag); Site 7 added data on cancer staging (minimal cancers and % of invasive node negative cancers).

†Additional pathology reports are provided for cancer cases.

‡Facilities can receive monthly reports on request; these reports list only positive mammograms and biopsy results for both positive and negative mammograms.

§Yes = data are reported; No = data are not reported; *** = if the radiologist is inclined to calculate the performance measure, the data needed for the calculation are available in the report.

‖Performance definitions are based on the American College of Radiology Breast Imaging Reporting and Data System (BI-RADS)37.

Statistical Analysis

We describe the characteristics of radiologists stratified according to whether or not they self-reported receiving regular mammography audit reports. Among radiologists who reported receiving audit reports, we calculated the distribution of their Likert-scale responses to the questions on attitudes toward and perceived value of medical audit reports. We used chi-squared tests to compare the characteristics of radiologists who agreed or strongly agreed with each audit survey statement with those of radiologists who were neutral, disagreed, or strongly disagreed.
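The comparison described above can be sketched in Python as follows: Likert responses are collapsed into agree (agree or strongly agree) versus not, cross-tabulated for two groups of radiologists, and tested with Pearson's chi-squared statistic. The helper names and the example counts are hypothetical, and the sketch omits any continuity correction because the paper does not state whether one was used.

```python
import math

AGREE = {"agree", "strongly agree"}


def agrees(response: str) -> bool:
    """Collapse a 5-point Likert response: True for agree/strongly agree,
    False for neutral, disagree, or strongly disagree."""
    return response.strip().lower() in AGREE


def to_table(group_a: list[str], group_b: list[str]) -> list[list[int]]:
    """Cross-tabulate raw Likert responses from two groups into [agree, not agree] counts."""
    table = []
    for responses in (group_a, group_b):
        yes = sum(agrees(r) for r in responses)
        table.append([yes, len(responses) - yes])
    return table


def chi_square_2x2(table: list[list[int]]) -> tuple[float, float]:
    """Pearson chi-squared test (no continuity correction) for a 2x2 table.

    Rows: two radiologist groups (e.g. fellowship trained or not).
    Columns: [agree/strongly agree, neutral/disagree/strongly disagree].
    Returns (statistic, p_value) with 1 degree of freedom.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            stat += (table[i][j] - expected) ** 2 / expected
    # With 1 df, P(X >= stat) = erfc(sqrt(stat / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))


stat, p = chi_square_2x2([[30, 20], [20, 30]])  # hypothetical counts
print(round(stat, 2), round(p, 4))  # 4.0 0.0455
```

Note that `scipy.stats.chi2_contingency` applies Yates' continuity correction to 2x2 tables by default (`correction=True`), so its p-values will be somewhat larger than this sketch's. Characteristics with more than two categories, such as age group, need a 2 x k table with k - 1 degrees of freedom, which this sketch does not handle; when expected cell counts are small, Fisher's exact test is a common alternative.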

We used latent class analysis13 to describe the overall attitude of radiologists toward audit reports. A latent class is a characteristic that is not directly measured in the study but can be inferred from other measured characteristics. Our aim was to identify classes representing groups of radiologists' overall perceptions of audit reports (which were not directly measured) based on their responses to the six individual attitude statements. We identified four latent classes describing overall attitudes toward audit reports: favorable, less favorable, neutral, and unfavorable. For example, using latent class analysis, we estimated the probability that a randomly selected radiologist in the “favorable” class “agrees” that audit reports are valuable to their practice. In this way, we assessed how specific aspects of audit reports related to radiologists' overall perceptions. Statistical analyses were performed using SAS 9.1 (SAS Institute, Cary, NC). The latent class analyses were performed using the PROC LCA add-on for SAS, which can be downloaded from the Methodology Center web site (http://methodology.psu.edu/index.php/downloads/proclcalta)14, 15.
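For readers unfamiliar with latent class analysis, the following Python sketch shows the standard expectation-maximization (EM) fit of a latent class model for categorical survey items. It is not the PROC LCA implementation used in the study: the function name `fit_lca`, the three-category coding (agree, neutral, disagree, as in Appendix 1), the single random start, and the fixed iteration count are all illustrative assumptions.

```python
import math
import random


def fit_lca(data, n_classes, n_categories, n_iter=200, seed=0):
    """Maximum-likelihood latent class model fitted by EM (single random start).

    data: list of response tuples, each item coded 0..n_categories-1
          (here assumed 0 = agree, 1 = neutral, 2 = disagree).
    Returns (prevalences, rho, loglik_history), where rho[k][j][r] is the
    probability that a member of class k gives response r to item j.
    """
    rng = random.Random(seed)
    n_items = len(data[0])
    prev = [1.0 / n_classes] * n_classes
    rho = []
    for _ in range(n_classes):  # random, roughly uniform starting values
        items = []
        for _ in range(n_items):
            w = [rng.uniform(0.5, 1.5) for _ in range(n_categories)]
            items.append([x / sum(w) for x in w])
        rho.append(items)

    history = []
    for _ in range(n_iter):
        # E-step: posterior class probabilities, assuming items are
        # independent within a class (local independence).
        posts, loglik = [], 0.0
        for y in data:
            joint = [prev[k] * math.prod(rho[k][j][y[j]] for j in range(n_items))
                     for k in range(n_classes)]
            total = sum(joint)
            loglik += math.log(total)
            posts.append([p / total for p in joint])
        history.append(loglik)

        # M-step: posterior-weighted proportions give new prevalences and rho.
        for k in range(n_classes):
            weight = sum(p[k] for p in posts)
            prev[k] = weight / len(data)
            if weight == 0.0:  # class captured no respondents; keep old rho
                continue
            for j in range(n_items):
                counts = [0.0] * n_categories
                for y, p in zip(data, posts):
                    counts[y[j]] += p[k]
                rho[k][j] = [c / weight for c in counts]
    return prev, rho, history
```

The log-likelihood history should never decrease, which is a useful sanity check on any EM implementation. In practice, analysts typically run many random starts and compare models with different numbers of classes (for example, by information criteria) before settling on a solution such as the four classes reported here; neither step is shown.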

A significance level of p < 0.05 was used to determine statistical significance.

Results

The 255 respondent radiologists interpreted mammograms at 337 mammography facilities in the United States during the study period. The majority (n=233, 91%) reported that they received regular audit reports. Radiologists were more likely to report receiving audit reports if they had interpreted mammograms for >10 years or reported interpreting >2000 screening mammograms per year (data not shown). Of the 233 radiologists receiving regular audit data, 155 (67%) reported receiving them once per year and 57 (24%) more than once per year; the remaining 21 (9%) reported seeing their audit reports but did not answer the question about frequency.

The 22 radiologists who reported not receiving audit reports were distributed across three BCSC registries (data not shown). Most of the 22 radiologists (N=16, 73%) worked at mammography facilities served by the two BCSC registries that provide audit reports only to facilities, rather than directly to the radiologists.

Among the larger cohort of radiologists (n=255), including those who reported receiving and those who reported not receiving the reports, 26% agreed or strongly agreed with the statement that they might stop interpreting mammograms if Congress mandated more intensive auditing requirements but did not provide funding to support the regulation. Radiologists who received audits were more likely to disagree with this statement.

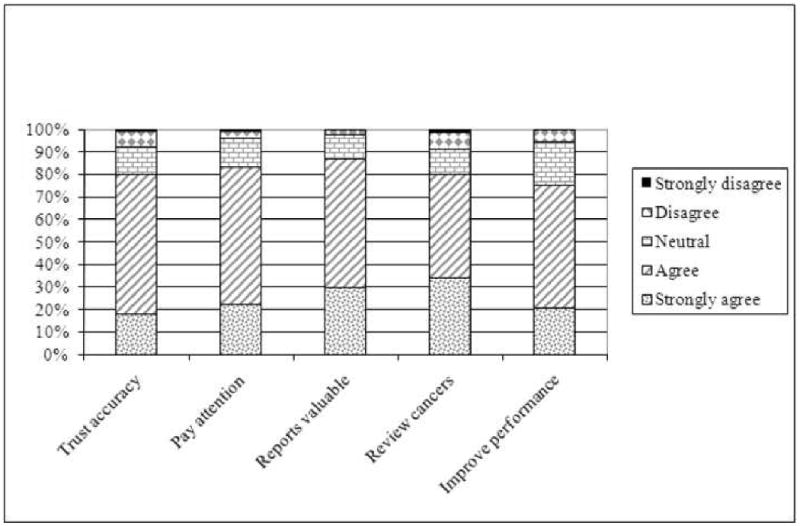

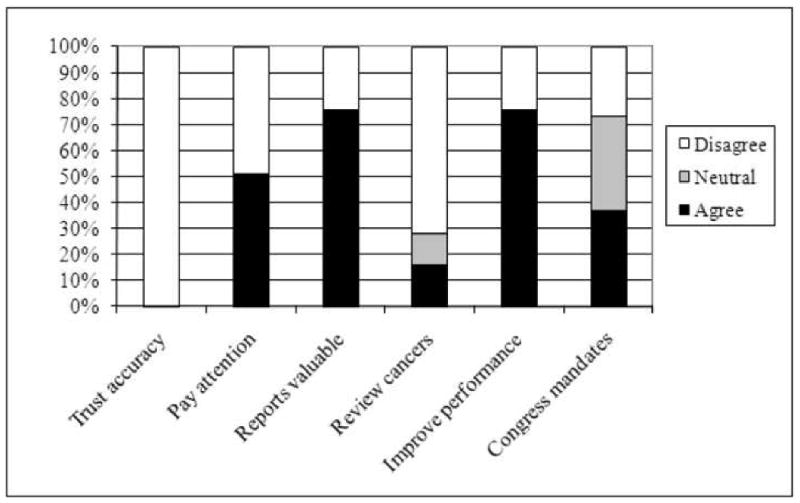

Among the radiologists who reported receiving the reports (N=233), Figure 1 shows attitudes toward audit reports for five of the survey questions; most radiologists agreed or strongly agreed with each statement. Between 74% and 86% of respondents agreed or strongly agreed that they trusted the accuracy of audit reports, paid attention to them, considered the reports valuable, and were prompted by the reports to review missed cancers and to improve their interpretive performance.

Figure 1.

Radiologists' attitudes toward mammography audit reports among radiologists who self-reported receiving audit reports (N=233)

Table 2 shows, by radiologist characteristic, the percentage of radiologists receiving audit reports who agreed or strongly agreed with the survey questions about audit reports. The only statistically significant differences between radiologists who did and did not agree or strongly agree were for the survey question “If congress mandates more intensive auditing requirements but does not provide funding to support this regulation, I may stop interpreting mammograms.” Radiologists who reported that they might leave the field of mammography if Congress mandated additional requirements were more likely to be younger, to have fewer years of experience interpreting mammograms, and to self-report a lower total volume of mammograms interpreted in the preceding year. Trends were noted among radiologist characteristics in the responses to the other survey questions, but the differences were not statistically significant. For example, radiologists who were less positive about audits were more likely to be fellowship trained and to report annual volumes in the lowest categories.

Table 2.

Among radiologists who receive audit reports (n=233), the percent who agree or strongly agree with survey questions regarding attitudes about mammography audit reports, by radiologist characteristic.* †

| Radiologist characteristic | Category | n (%) | Would leave mammography if intensive requirements mandated without funding | Trust accuracy | Pay attention | Reports are valuable | Prompts them to review cancers | Improves their performance |
|---|---|---|---|---|---|---|---|---|
| N | | 233 (100%) | 61 | 187 | 193 | 200 | 184 | 172 |
| Demographics | | | | | | | | |
| Age group | 30-34 | 4 (1.7) | 75.0 | 100.0 | 75.0 | 75.0 | 50.0 | 75.0 |
| | 35-44 | 58 (24.9) | 39.7 | 81.0 | 91.4 | 89.7 | 75.9 | 80.7 |
| | 45-54 | 78 (33.5) | 19.5 | 75.6 | 81.8 | 83.1 | 80.5 | 75.6 |
| | 55+ | 93 (39.9) | 26.4 | 82.6 | 79.6 | 88.2 | 82.8 | 29.4 |
| Gender | Male | 168 (72.1) | 31.3 | 81.4 | 85.6 | 88.0 | 79.0 | 74.3 |
| | Female | 65 (27.9) | 20.3 | 76.9 | 76.9 | 83.1 | 81.5 | 76.6 |
| Clinical experience | | | | | | | | |
| Years interpreting mammograms | <10 | 47 (20.3) | 42.6 | 85.1 | 87.2 | 80.9 | 72.3 | 70.2 |
| | 10-19 | 84 (36.2) | 22.6 | 78.6 | 86.9 | 89.3 | 79.8 | 81.9 |
| | 20+ | 101 (43.5) | 26.5 | 79.0 | 78.0 | 88.0 | 83.0 | 72.0 |
| Fellowship | No | 214 (91.6) | 27.1 | 81.2 | 93.6 | 87.3 | 80.3 | 75.0 |
| | Yes | 19 (8.2) | 10.5 | 68.4 | 79.0 | 79.0 | 73.7 | 73.7 |
| Academic affiliation | No affiliation | 189 (82.2) | 30.7 | 80.3 | 81.9 | 85.1 | 79.8 | 72.9 |
| | Adjunct or primary | 41 (17.8) | 19.5 | 80.5 | 90.2 | 95.1 | 80.5 | 85.0 |
| Hours spent in breast imaging | ≤20 | 134 (60.1) | 31.8 | 80.6 | 84.3 | 87.2 | 78.2 | 75.9 |
| | 21-40 | 49 (22.0) | 14.3 | 77.1 | 81.3 | 83.7 | 81.6 | 72.9 |
| | 40+ | 10 (17.9) | 33.3 | 82.5 | 82.5 | 87.5 | 80.0 | 75.0 |
| Current clinical practice | | | | | | | | |
| % of workload that is screening mammography | 1-10 | 81 (38.4) | 31.7 | 80.3 | 84.0 | 85.0 | 80.0 | 76.3 |
| | 11-24 | 91 (43.1) | 27.5 | 85.7 | 86.8 | 89.0 | 79.1 | 75.8 |
| | ≥25 | 39 (18.5) | 18.4 | 71.1 | 76.3 | 84.6 | 82.1 | 68.4 |
| Self-reported volume of screening mammograms in preceding year | <480 | 8 (3.6) | 57.1 | 75.0 | 75.0 | 75.0 | 50.0 | 62.5 |
| | 480-999 | 31 (13.9) | 36.7 | 80.7 | 80.7 | 93.3 | 80.0 | 74.2 |
| | 1000-1999 | 68 (30.5) | 26.9 | 86.6 | 85.1 | 85.3 | 75.0 | 74.6 |
| | 2000-4999 | 87 (39.0) | 26.4 | 80.5 | 83.9 | 88.5 | 81.6 | 75.6 |
| | ≥5000 | 29 (13.0) | 24.1 | 65.5 | 79.3 | 82.8 | 86.2 | 75.9 |
| Self-reported total volume (screening and diagnostic) in preceding year | <480 | 3 (1.4) | 66.7 | 66.7 | 66.7 | 66.7 | 66.7 | 33.3 |
| | 480-999 | 18 (8.2) | 58.8 | 83.3 | 77.8 | 83.3 | 72.2 | 66.7 |
| | 1000-1999 | 68 (31.1) | 24.2 | 86.6 | 86.6 | 89.6 | 79.1 | 77.6 |
| | 2000-4999 | 88 (40.2) | 27.3 | 80.7 | 84.1 | 87.5 | 81.8 | 73.6 |
| | ≥5000 | 42 (19.2) | 21.4 | 73.8 | 81.0 | 85.7 | 81.0 | 78.6 |

*The number of radiologists not agreeing with individual survey questions: Trust accuracy (n=46), Pay attention (n=39), Reports valuable (n=31), Prompts to review cancers (n=47), Improves performance (n=58), Congress mandates (n=173).

†For the full survey questions, see text.

Bold numbers denote a statistically significant difference (p<0.05, chi-squared test) compared with radiologists who did not agree with the individual survey question.

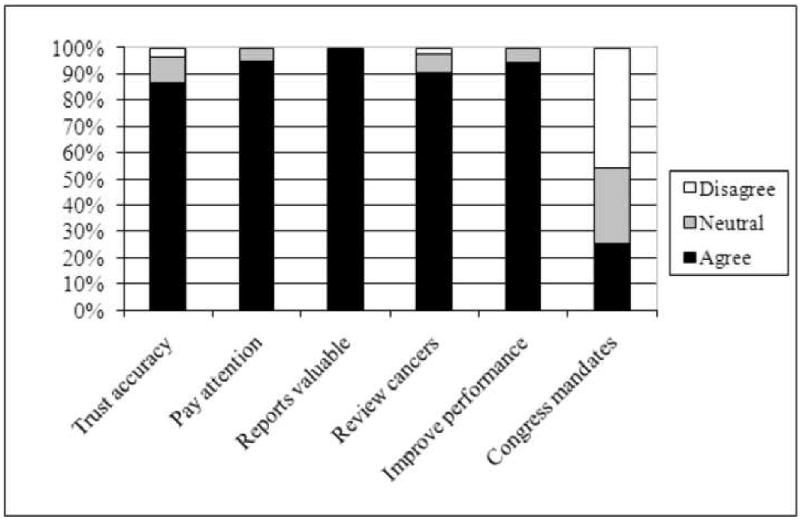

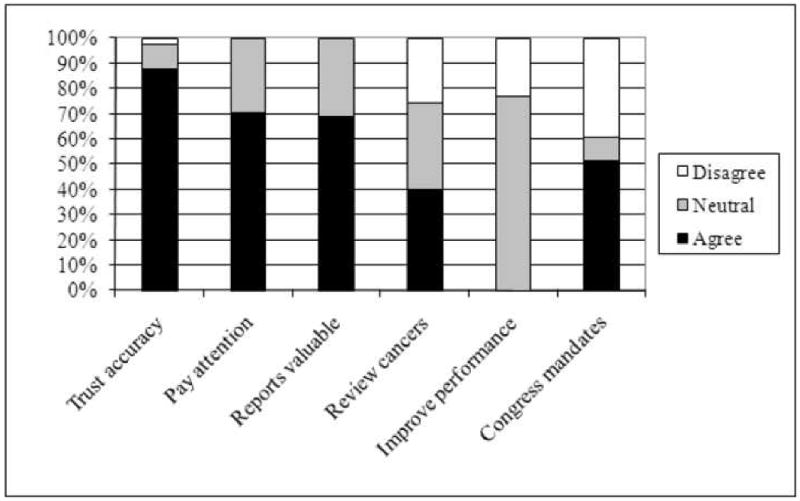

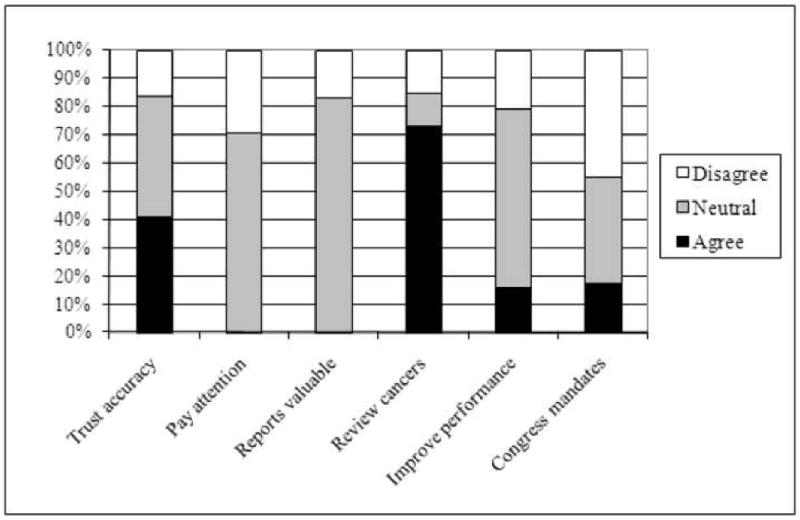

The results of the latent class analyses are shown in Figures 2a-2d and Appendix 1. Most radiologists who received audits (Fig. 2a, 75%) had favorable attitudes toward audit reports: they trusted the accuracy of the reports, paid attention to them, found them valuable, used them to review cancers, and reported that audits improved their performance. A smaller proportion (Fig. 2b, 13%) were less favorable; this group trusted the accuracy of the reports but was less positive about whether the reports improve performance. A small proportion were neutral about performance reports (Fig. 2c, 8%), although this group agreed that the reports prompted them to review cancers. A very small proportion (Fig. 2d, 3%) were unfavorable toward the reports, a class defined chiefly by distrust of the reports' accuracy.

Figures 2a-d.

Summary of latent class analyses for four groups of radiologists based on their overall attitudes toward mammography audits (n=233, radiologists who reported receiving audits).

Figure 2a. Radiologists with favorable perceptions of mammography audit reports (75% probability for radiologists in this cohort).

Figure 2b. Radiologists with less favorable perceptions of mammography audit reports (13% probability for radiologists in this cohort).

Figure 2c. Radiologists with neutral perceptions of mammography audit reports (8% probability for radiologists in this cohort).

Figure 2d. Radiologists with unfavorable perceptions of mammography audit reports (3% probability for radiologists in this cohort).

Discussion

The findings from our study suggest that most radiologists participating in the BCSC receive mammography audit reports, review them, consider them valuable, and are prompted by the reports to review missed cancers. The audit reports seen by radiologists in this national consortium varied in content and style and in the use of figures and graphs.

In our highly litigious society, collecting sensitive performance data raises fears of malpractice suits and of the potential misuse of statistics16, 17. However, most states have quality assurance laws that protect these data from legal discovery18. Care must be taken not only in producing and de-identifying sensitive data, but also in interpreting and distributing audit data. While BCSC registries can pool information across facilities to account for radiologists who work at multiple facilities, many facilities in the U.S. cannot do this. In addition, the audit reports do not adjust for possible differences in patient characteristics, which can affect the performance of mammography19. Few radiologists interpret enough mammograms of women with cancer to obtain a precise estimate of their own cancer detection rate or sensitivity. MQSA requires that radiologists read at least 960 mammograms over a two-year period20 (an average of 480 per year); however, the cancer detection rate in the United States is only about 4 per 1,000 screening mammograms in an average-risk population21. Thus, a radiologist who reads 500 studies per year may see only one or two cancers annually.
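The arithmetic behind this point can be sketched in a few lines of Python. The sketch assumes, purely for illustration, that cancers among a radiologist's screening exams arise as a Poisson count at the rate of about 4 per 1,000 cited above; the function names are hypothetical.

```python
import math


def expected_cancers(annual_volume: int, rate_per_1000: float = 4.0) -> float:
    """Expected screen-detected cancers per year at the given detection rate."""
    return annual_volume * rate_per_1000 / 1000


def poisson_pmf(k: int, lam: float) -> float:
    """Probability of observing exactly k cancers when lam are expected."""
    return math.exp(-lam) * lam ** k / math.factorial(k)


lam = expected_cancers(500)       # 500 screens per year -> 2.0 expected cancers
p_none = poisson_pmf(0, lam)      # chance of a year with no detected cancer
rel_se = 1 / math.sqrt(lam)       # relative standard error of a Poisson count
print(lam, round(p_none, 3), round(rel_se, 2))  # 2.0 0.135 0.71

# The MQSA minimum of 960 exams over two years averages 480 per year.
print(round(expected_cancers(480), 2))  # 1.92
```

Under these assumptions, roughly one year in seven would pass with no detected cancer at all, and a single year's cancer count carries a relative standard error of about 70%, which is why an individual radiologist's cancer detection rate or sensitivity is so imprecise at these volumes.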

Standardization of audit data and presentation style has the potential to benefit patients through enhanced quality assurance, and to benefit radiologists who work in multiple facilities22,23,24. Standardization would also facilitate quality assurance research; without a standardized audit report, quality improvement efforts and evaluation research will remain limited in scope. The BI-RADS manual25 offers sample forms to simplify audit data collection and calculation. The American College of Radiology also recently introduced the National Mammography Database26, to which U.S. mammography facilities may choose to upload their mammography data periodically and then receive semiannual audit reports with comparisons to national data and to demographically similar facilities. These reports will not be linked to tumor registries for complete cancer ascertainment; thus, they will not include data on sensitivity or specificity. The BCSC has recently launched a web site where individual radiologists from some BCSC sites can compare their outcome audits with regional and national data. In the future, radiologists who do not practice at a BCSC facility will be able to add their aggregated data to the web site and compare their performance with national benchmarks.

In addition to fulfilling MQSA requirements, mammography audits have the potential to improve interpretive performance and patient outcomes27. Their value may lie in giving practicing radiologists information about their performance relative to that of their peers and to national benchmarks. This matters because several studies of physicians' perceptions indicate that physicians believe they perform better than they actually do28, 29. If used correctly, audits can show what the radiology facility and individual radiologist are doing well and uncover deficiencies in performance, so that radiologists can consider changes to their interpretive practices30 and then determine whether those changes lead to actual improvements.

Several studies have tested multi-component interventions that combined audit reports with educational sessions on interpreting screening mammograms31-33. Unfortunately, the specific contribution of the audit reports, as opposed to the other components of these interventions, is unknown. In a qualitative study of 25 BCSC radiologists6, many participants thought customizable, web-based reports would be useful.

Our study has a number of strengths. First, it addresses a practical question: mandating the collection of comprehensive audit data, as recommended in the 2005 Institute of Medicine report34, can be very labor intensive, so it is important to know whether radiologists actually use the audit data and find the information valuable. Second, our response rate of 71% is considerably higher than that of most physician surveys35. Third, this study included a diverse group of community-based radiologists who interpret mammograms for women living in seven geographic regions of the United States. Thus, our findings are likely more generalizable than those of a survey restricted to academic radiologists or breast imaging specialists.

Though there were strengths in this study, there were also limitations. First, survey respondents and non-respondents may have differed in their attitudes toward audit reports, which would bias our results. However, our high response rate is reassuring, and in previous analyses we found that the interpretive performance of survey responders and non-responders is similar11, 36. We also noted no difference in characteristics between radiologists who did and did not report receiving regular audit reports. A second limitation is that the survey data were based on subjective self-report; we did not verify whether reported use of audit data reflected actual review and use, or which radiologists received audit data beyond what the BCSC registries supply. The wording of some survey questions, such as question 6, which asked whether participants would stop interpreting mammograms if Congress mandated more intensive auditing requirements without additional funding, may be particularly prone to biased responses. Third, the audit reports received by BCSC radiologists are likely more detailed than those received by non-BCSC radiologists because of the BCSC's prospective data collection methods, linkage with cancer registries, and follow-up of all negative exams. Finally, we were not able to assess the important question of whether use of audit data is associated with improved performance.

Audit reports are one possible means of improving radiologists' interpretive performance, since they can facilitate the review of previous false-negative diagnoses and shed light on the interpretive performance of individual radiologists. Our research indicates that radiologists find mammography audit reports from the BCSC useful and are prompted by them to review cases with a breast cancer diagnosis and to improve their performance. Future studies should test whether receiving audit reports affects interpretive performance, and should examine which aspects of the reports radiologists find most useful and which could be simplified or enhanced to increase their use and benefit.

Acknowledgments

Funding sources: This work was supported by the National Cancer Institute [1R01 CA107623, 1K05 CA104699; Breast Cancer Surveillance Consortium (BCSC): U01CA63740, U01CA86076, U01CA86082, U01CA63736, U01CA70013, U01CA69976, U01CA63731, U01CA70040], the Agency for Healthcare Research and Quality (1R01 CA107623), the Breast Cancer Stamp Fund, and the American Cancer Society, made possible by a generous donation from the Longaberger Company's Horizon of Hope Campaign (SIRGS-07-271-01, SIRGS-07-272-01, SIRGS-07-273-01, SIRGS-07-274-01, SIRGS-07-275-01, SIRGS-06-281-01).

The authors had full responsibility in the design of the study, the collection of the data, the analysis and interpretation of the data, the decision to submit the manuscript for publication, and the writing of the manuscript. We thank the participating women, mammography facilities, and radiologists for the data they have provided for this study. A list of the BCSC investigators and procedures for requesting BCSC data for research purposes are provided at: http://breastscreening.cancer.gov/.

Appendix 1

Item response probabilities within each latent class and latent class prevalences for 233 radiologists who were surveyed about their perceptions and use of MQSA-mandated medical outcomes audits.

| Survey item* | Favorable: Agree | Favorable: Neutral | Favorable: Disagree | Less favorable: Agree | Less favorable: Neutral | Less favorable: Disagree | Neutral: Agree | Neutral: Neutral | Neutral: Disagree | Unfavorable: Agree | Unfavorable: Neutral | Unfavorable: Disagree |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Trust accuracy of audits | 0.87 | 0.10 | 0.03 | 0.88 | 0.10 | 0.03 | 0.41 | 0.43 | 0.17 | 0.00 | 0.00 | 1.00 |
| Pay attention to audits | 0.95 | 0.05 | 0.00 | 0.70 | 0.30 | 0.00 | 0.00 | 0.71 | 0.29 | 0.51 | 0.00 | 0.49 |
| Reports are valuable | 0.99 | 0.01 | 0.00 | 0.69 | 0.31 | 0.00 | 0.00 | 0.83 | 0.17 | 0.76 | 0.00 | 0.24 |
| Review cancers because of audits | 0.91 | 0.07 | 0.02 | 0.40 | 0.34 | 0.26 | 0.73 | 0.12 | 0.16 | 0.16 | 0.12 | 0.72 |
| Audits prompt to improve performance | 0.94 | 0.06 | 0.00 | 0.00 | 0.77 | 0.23 | 0.16 | 0.63 | 0.21 | 0.76 | 0.00 | 0.24 |
| If congress mandates additional requirements I may stop interpreting mammograms | 0.25 | 0.29 | 0.46 | 0.51 | 0.10 | 0.39 | 0.17 | 0.38 | 0.45 | 0.37 | 0.36 | 0.27 |
| Class prevalence | 0.75 | | | 0.13 | | | 0.08 | | | 0.03 | | |

*See the Materials and Methods section for the complete survey questions.
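Because a latent class model assumes that responses are independent within a class, the estimates above can be combined with Bayes' rule to estimate which class a given response pattern most likely belongs to. The Python sketch below does this with the published (rounded) values; the function name `posterior` and the collapse of the 5-point scale into agree, neutral, and disagree are illustrative assumptions. Because several published probabilities round to 0.00, some patterns receive zero probability in every class, and the sketch reports that rather than dividing by zero.

```python
import math

CATEGORY = {"agree": 0, "neutral": 1, "disagree": 2}

# (Agree, Neutral, Disagree) probabilities copied from Appendix 1, items in order:
# trust accuracy, pay attention, reports valuable, review cancers,
# improve performance, may stop if Congress mandates more requirements.
RHO = {
    "Favorable": [(0.87, 0.10, 0.03), (0.95, 0.05, 0.00), (0.99, 0.01, 0.00),
                  (0.91, 0.07, 0.02), (0.94, 0.06, 0.00), (0.25, 0.29, 0.46)],
    "Less favorable": [(0.88, 0.10, 0.03), (0.70, 0.30, 0.00), (0.69, 0.31, 0.00),
                       (0.40, 0.34, 0.26), (0.00, 0.77, 0.23), (0.51, 0.10, 0.39)],
    "Neutral": [(0.41, 0.43, 0.17), (0.00, 0.71, 0.29), (0.00, 0.83, 0.17),
                (0.73, 0.12, 0.16), (0.16, 0.63, 0.21), (0.17, 0.38, 0.45)],
    "Unfavorable": [(0.00, 0.00, 1.00), (0.51, 0.00, 0.49), (0.76, 0.00, 0.24),
                    (0.16, 0.12, 0.72), (0.76, 0.00, 0.24), (0.37, 0.36, 0.27)],
}
PREVALENCE = {"Favorable": 0.75, "Less favorable": 0.13, "Neutral": 0.08, "Unfavorable": 0.03}


def posterior(responses: list[str]) -> dict[str, float]:
    """Posterior class probabilities for one radiologist's six responses (Bayes' rule)."""
    joint = {
        k: PREVALENCE[k] * math.prod(RHO[k][j][CATEGORY[r]] for j, r in enumerate(responses))
        for k in RHO
    }
    total = sum(joint.values())
    if total == 0:
        raise ValueError("pattern has zero probability under the rounded published estimates")
    return {k: v / total for k, v in joint.items()}


print({k: round(v, 3) for k, v in posterior(["agree"] * 6).items()})
# {'Favorable': 1.0, 'Less favorable': 0.0, 'Neutral': 0.0, 'Unfavorable': 0.0}
```

With unrounded estimates, every class would give each pattern some nonzero probability, so posteriors of exactly 1.0 or 0.0 here are artifacts of the two-decimal rounding in the appendix.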

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

1. U.S. Food & Drug Administration. Mammography Quality Standards Act Regulations. [March 12, 2009]; http://www.fda.gov/cdrh/mammography/frmamcom2.html.

2. Birdwell RL, Wilcox PA. The mammography quality standards act: benefits and burdens. Breast Dis. 2001;13:97–107. doi: 10.3233/bd-2001-13112.

3. U.S. Food & Drug Administration. Written Statement for the Record. [March 12, 2009]; http://www.fda.gov/ola/2002/mqsa0228.html.

4. Monsees BS. The Mammography Quality Standards Act. An overview of the regulations and guidance. Radiol Clin North Am. 2000 Jul;38(4):759–772. doi: 10.1016/s0033-8389(05)70199-8.

5. U.S. Food & Drug Administration. Medical Outcomes Audit General Requirement. [May 4, 2009]; http://www.fda.gov/CDRH/mammography/robohelp/med_outcomes_audit_gen_req.htm.

6. Aiello Bowles EJ, Geller BM. Best ways to provide feedback to radiologists on mammography performance. AJR Am J Roentgenol. 2009 Jul;193(1):157–164. doi: 10.2214/AJR.08.2051.

7. Breast Cancer Surveillance Consortium (NCI). http://breastscreening.cancer.gov/

8. Breast Cancer Surveillance Consortium (NCI). [October 2009]; http://breastscreening.cancer.gov/publications/

9. Ballard-Barbash R, Taplin SH, Yankaskas BC, Ernster VL, Rosenberg RD, Carney PA, Barlow WE, Geller BM, Kerlikowske K, Edwards BK, Lynch CF, Urban N, Chrvala CA, Key CR, Poplack SP, Worden JK, Kessler LG. Breast Cancer Surveillance Consortium: A national mammography screening and outcomes database. AJR Am J Roentgenol. 1997;169:1001–1008. doi: 10.2214/ajr.169.4.9308451.

10. Carney PA, Miglioretti DL, Yankaskas BC, et al. Individual and combined effects of age, breast density, and hormone replacement therapy use on the accuracy of screening mammography. Ann Intern Med. 2003 Feb 4;138(3):168–175. doi: 10.7326/0003-4819-138-3-200302040-00008.

11. Elmore JG. Variability in Interpretive Performance of Screening Mammography and Radiologists' Characteristics Associated with Accuracy. Radiology. 2009. In press. doi: 10.1148/radiol.2533082308.

12. Factors Affecting Variability of Radiologists (FAVOR) Research Group. National Survey of Mammography Practices. [September, 2009]; Available at: http://breastscreening.cancer.gov/collaborations/favor_ii_mammography_practice_survey.pdf.

13. Bandeen-Roche K, M D, Zeger SL, Rathouz PJ. Latent Variable Regression for Multiple Discrete Outcomes. Journal of the American Statistical Association. 1997;92:1375–1386.

14. Lanza S, Collins LM, Lemmon D, Schafer JL. PROC LCA: A SAS procedure for latent class analysis. Structural Equation Modeling. 2007;14(4):671–694. doi: 10.1080/10705510701575602.

15. Lanza ST, L D, Schafer JL, Collins LM. PROC LCA & PROC LTA User's Guide Version 1.1.5 beta. University Park: The Methodology Center, Pennsylvania State University; 2008.

16. Whiteman T. Mammography malpractice litigation and the impact of MQSA. Administrative Radiology. 1995:29–31.

17. Dick J. Predictors of Radiologists' Perceived Risk of Malpractice Lawsuits in Breast Imaging. AJR Am J Roentgenol. 2009;192:327–333. doi: 10.2214/AJR.07.3346.

18. Carney PA, Geller BM, Moffett H, et al. Current medicolegal and confidentiality issues in large, multicenter research programs. Am J Epidemiol. 2000 Aug 15;152(4):371–378. doi: 10.1093/aje/152.4.371.

19. Miglioretti DL, Smith-Bindman R, Abraham L, et al. Radiologist characteristics associated with interpretive performance of diagnostic mammography. J Natl Cancer Inst. 2007 Dec 19;99(24):1854–1863. doi: 10.1093/jnci/djm238.

20. U.S. Food and Drug Administration (FDA). The Mammography Quality Standards Act of 1992, Pub. L. No. 102-539. 1992.

21. Jiang Y, Miglioretti DL, Metz CE, Schmidt RA. Breast cancer detection rate: designing imaging trials to demonstrate improvements. Radiology. 2007 May;243(2):360–367. doi: 10.1148/radiol.2432060253.

22. Clark RA, King PS, Worden JK. Mammography registry: considerations and options. Radiology. 1989 Apr;171(1):91–93. doi: 10.1148/radiology.171.1.2928551.

23. Ballard-Barbash R, Taplin SH, Yankaskas BC, et al. Breast Cancer Surveillance Consortium: a national mammography screening and outcomes database. AJR Am J Roentgenol. 1997 Oct;169(4):1001–1008. doi: 10.2214/ajr.169.4.9308451.

24. Sickles EA. Auditing your breast imaging practice: an evidence-based approach. Semin Roentgenol. 2007 Oct;42(4):211–217. doi: 10.1053/j.ro.2007.06.003.

25. D'Orsi CJ, B L, Berg WA, et al. Breast Imaging Reporting and Data System: ACR BI-RADS-Mammography. 4th ed. Reston, VA: American College of Radiology; 2003.

26. American College of Radiology. National Mammography Database (NMD). [September 2009]; Available at: https://nrdr.acr.org/portal/NMD/Main/page.aspx.

27. Linver MN, Osuch JR, Brenner RJ, Smith RA. The mammography audit: a primer for the mammography quality standards act (MQSA). AJR Am J Roentgenol. 1995 Jul;165(1):19–25. doi: 10.2214/ajr.165.1.7785586.

28. Silvey AB, Warrick LH. Linking quality assurance to performance improvement to produce a high reliability organization. Int J Radiat Oncol Biol Phys. 2008;71(1 Suppl):S195–199. doi: 10.1016/j.ijrobp.2007.05.093.

29. Benson HR. Benchmarking in healthcare: evaluating data and transforming it into action. Radiol Manage. 1996 Jan-Feb;18(1):40–46.

30. Sickles EA. Quality assurance: How to audit your own mammography practice. Radiologic Clinics of North America. 1992;30(1):265–275.

31. Adcock KA. Initiative to Improve Mammogram Interpretation. Permanente Journal. 2004;8:12–18. doi: 10.7812/tpp/04.969.

32. Perry NM. Interpretive Skills in the National Health Service Breast Screening Programme: performance indicators and remedial measures. Seminars in Breast Disease. 2003 Sep;6(3):108–113.

33. van der Horst F, Hendriks J, Rijken H, Holland R. Breast cancer screening in the Netherlands: Audit and training of radiologists. Seminars in Breast Disease. 2003;6(3):114–122.

34. Institute of Medicine. Improving Breast Imaging Quality Standards. Washington, D.C.: The National Academies Press; 2005.

35. Asch DA, Jedrziewski MK, Christakis NA. Response rates to mail surveys published in medical journals. J Clin Epidemiol. 1997 Oct;50(10):1129–1136. doi: 10.1016/s0895-4356(97)00126-1.

36. Miglioretti DL, G C, Carney PA, Onega T, Buist DSM, Sickles EA, Kerlikowske K, Rosenberg RD, Yankaskas BC, Geller BM, Elmore JG. When radiologists perform best: The learning curve in screening mammography interpretation. Radiology. 2009. In press. doi: 10.1148/radiol.2533090070.

37. American College of Radiology. Breast imaging reporting and data system (BI-RADS). Reston, VA: American College of Radiology; 2003.