Abstract

There is little consensus about the characteristics and number of body representations in the brain. In the present paper, we examine the main problems that are encountered when trying to dissociate multiple body representations in healthy individuals with the use of bodily illusions. Traditionally, task-dependent bodily illusion effects have been taken as evidence for dissociable underlying body representations. Although this reasoning holds well when the dissociation is made between different types of tasks that are closely linked to different body representations, it becomes problematic when found within the same response task (i.e., within the same type of representation). Hence, this experimental approach to investigating body representations runs the risk of identifying as many different body representations as there are significantly different experimental outputs. Here, we discuss and illustrate a different approach to this pluralism by shifting the focus towards investigating task-dependency of illusion outputs in combination with the type of multisensory input. Finally, we present two examples of behavioural bodily illusion experiments and apply Bayesian model selection to illustrate how this different approach of dissociating and classifying multiple body representations can be applied.

Keywords: Bayesian model selection, Body representations, Multimodal integration, Rubber hand illusion

Introduction

There has been little consensus on the nature and number of body representations. The main focus of this paper is neither to settle the debate in favour of one view over the other, nor to count how many body representations there are. Instead, the present paper tries to combine the different conceptual and experimental approaches to this topic into a more holistic view. In part one, we discuss two specific problems that have been encountered while dissociating multiple body representations in healthy individuals with the use of bodily illusions. In part two, we propose a different approach that might overcome these two main problems. In the last part, we discuss two example datasets of bodily illusion experiments, which serve as a technical illustration. We re-evaluate and reanalyze previously published rubber hand illusion data, which provide a good example of the two main problems. So instead of asking how many body representations can be dissociated purely based on experimental output, we identify different tentative models of the possible weighting of the multisensory input in the example bodily illusion experiment, and test these against each other directly using Bayesian model selection. This provides a possible way to avoid the problems recently encountered when dissociating multiple body representations in healthy individuals with the use of bodily illusions.

Challenges in the study of body representation

The way the body is mentally represented has been investigated by many different fields of research. For example, neuropsychologists have investigated patients with impairments in mentally representing and/or acting with the body, philosophers have explored the phenomenology of our bodily experiences and our conscious bodily self, experimental psychologists have studied multimodal integration with bodily illusions, and neuroscientists have tried to find the neural correlates of our mental body representation. However, there is no consensus on the number, definitions and/or characteristics of body representations. There are currently two main psychological and philosophical models of body representations: (a) a dual model of body representation distinguishing the body image and the body schema (Gallagher and Cole 1995; Rossetti et al. 1995; Paillard 1999; Dijkerman and De Haan 2007) or short-term and long-term body representations (O’Shaughnessy 1995; Carruthers 2008), and (b) a triadic model of body representation that makes a more fine-grained distinction between a visuo-spatial body map and body semantics within the body image, in addition to the body schema (Schwoebel and Coslett 2005; Buxbaum and Coslett 2001).

The first main problem that current models of body representations encounter when tested experimentally is conceptual. The distinctions between body representations are often made on a single dimension, such as availability to consciousness (Gallagher 2005), temporal dynamicity (O’Shaughnessy 1995), or functional role (Paillard 1999). Depending on the criterion, different distinctions are possible, leading to widespread confusion (de Vignemont 2007). Even more importantly, there are more dimensions on which body representations can be dissociated than the three highlighted above, such as the relative importance of different bodily sensory input signals, the spatial frame of reference, etc. For example, the body schema most probably includes short-term information (e.g., body posture) as well as long-term information (e.g., the size of the limbs), both self-specific information (e.g., strength) and human-specific information (e.g., degrees of freedom of the joints). By contrast, when investigating the body image one needs to try to group together the heterogeneous concepts of body percept, body concept and body affect in the dual model (Gallagher and Cole 1995). Although the triadic model does attempt to split the body image up into two components (the structural description and semantic knowledge), how and where do we implement the body affect? Should we postulate a fourth type of body representation?

The second problem that one encounters is the nature of the evidence that current models of body representations rely upon. Neuropsychology has been the main starting point for investigating mental body representations, and Head and Holmes (1911–1912) were among the first to describe several patients with dissociable deficits concerning the representation, localization and sensation of the body. However, because there is disagreement on the number and definitions of body representations, there is also disagreement on the classification of bodily disorders. For example, personal neglect is interpreted both as a deficit of body schema (Coslett 1998) and as a deficit of body image (Gallagher 2005), whereas Kinsbourne (1995) argued that it is due to an attentional impairment, and not to a representational impairment. The problem here is that most classifications of body representations rely primarily on a very heterogeneous group of neuropsychological disorders that can be divided or classified on a number of different levels (for an extended discussion, see de Vignemont 2009).

Attempts to classify multiple body representations in healthy individuals also run into several problems. The general approach holds that the experimenter induces a sensory conflict which often results in some form of bodily illusion. This sensory conflict can be evoked within a unimodal information source (for example, illusions due to tendon vibration—described below in more detail (Kammers et al. 2006; Lackner 1988)) or between multisensory sources (for example the rubber hand illusion (Botvinick and Cohen 1998) and mirror illusion (Holmes et al. 2006)). If the response to the bodily illusion is sensitive to the context or to the type of task, then this is often taken as evidence for the involvement of distinct types of body representations. In other words, when significantly different responses to the same sensory conflict/bodily illusion can be identified, these distinct responses are taken to be subserved by dissociable body representations. An example of an illusion which induces unimodal conflict is the kinaesthetic tendon vibration illusion. Vibration of a tendon induces an illusory displacement of a static limb by influencing the afferent muscle spindles (de Vignemont et al. 2005; Kammers et al. 2006; Lackner and Taublieb 1983). Lackner and Taublieb (1983) showed that consciously perceived limb position depends not only on afferent and efferent information about individual limbs in isolation, but also on the spatial configuration of the entire body. More recently, it was shown that a perceptual matching task (to test the body image) was significantly more affected by this vibrotactile illusion than an action reaching response (to test the body schema) towards the perceived location of the index finger of the vibrated arm (Kammers et al. 2006). 
This shows that the weighting of the information from the vibrated muscle might depend on the kind of output that is required, i.e., the type of task, which was taken as evidence for dissociable underlying body representations.

The kind of body representation that underlies a specific type of task is controversial as well. There is no consensus on how each body representation can best be experimentally tested (whether in healthy individuals or patients). For example, matching of a body part’s illusory orientation can be taken as a perceptual response, which would be a way to investigate the body image in the dual model. By contrast, it would most likely be a measurement of the body schema in the triadic model since it involves active muscle movement. Furthermore, for the triadic model, semantics should be included in the task to tap into the body image. This diversity illustrates the main problem when trying to dissociate and classify multiple body representations in healthy individuals: the risk of identifying as many body representations as there are tentative classifications or significantly different experimental outputs.

A last and important example of this plurality is the range of body representations that can be identified with the rubber hand illusion (RHI). The RHI is evoked when the participant watches a rubber hand being stroked, while their own unseen hand is stroked in synchrony. This results in feeling ownership over the rubber hand and induces a relocation of the perceived location of one’s unseen own hand towards the location of the rubber hand (Botvinick and Cohen 1998). Feeling of ownership over the rubber hand is often measured with a standard questionnaire (Botvinick and Cohen 1998). A psychometric approach using a more extensive questionnaire showed that the illusion induces different components of embodiment after synchronous versus asynchronous stroking indicating that both stimulations might induce different bodily experiences (Longo et al. 2008).

Asynchronous stroking is often applied as a standard control, which not only shows reduced feeling of ownership, but also shows a smaller relocation of the participant’s own unseen hand towards the seen rubber hand compared to synchronous stroking (Botvinick and Cohen 1998; Tsakiris and Haggard 2005). Interestingly, the proprioceptive drift has even been found without any tactile feedback (Holmes et al. 2006). Using the mirror illusion (where the rubber hand is replaced by the mirror image of the participant’s own hand), Holmes et al. (2006) showed that the absence of tactile feedback and asynchronous feedback affect the perceived relocation of the hand differently: asynchronous feedback (tapping of the finger) in the mirror illusion significantly decreases the proprioceptive drift compared to no tactile feedback. For the RHI it remains somewhat unclear whether the synchronous stroking increases the proprioceptive drift or whether the asynchronous stroking decreases the proprioceptive drift. Nevertheless, the difference between the two is taken as a measure of embodiment of the (location of the) rubber hand.

The illusion-induced discrepancy in perceived hand location is often measured with a perceptual localization task (Botvinick and Cohen 1998; Tsakiris and Haggard 2005). Relocation of the perceived location of the participant’s own hand has now been shown to depend on the task. Although perceptual location judgments of the participant’s own hand were illusion-sensitive, ballistic actions with as well as towards the illuded hand proved robust against the illusion (Kammers et al. 2009a, b). We interpreted this task dependency as evidence for different dissociable underlying body representations, namely the body schema for action and the body image for perception. This was in line with the dual model of body representations. However, this distinction was primarily based on the illusion sensitivity of the body image versus the illusion robustness of the body schema. The interpretation of the body representation used for action became more complicated when we later showed that the robustness of motor responses against bodily illusions seems to be dependent on the exact type of motor task, as well as on the induction method of the illusion. More specifically, when the rubber hand illusion was induced not just on the index finger but on the whole grasping configuration of the hand (i.e., stroking on the index finger and thumb), the kinematic parameters of a grasping movement were affected by the RHI (Kammers et al. 2009).

Consequently, the main concern that one might have with the current approach, in healthy individuals especially, is its focus of interest. The dual and the triadic model are mainly interested in the final output of bodily information processing, and this is where they disagree. This focus on body representations per se is at the expense of the investigation of the building up of those body representations. Let us not assume the existence of multiple types of body representations in healthy individuals, on the basis of a heterogeneous group of syndromes, and try to avoid the pitfall of simply enumerating different representations on the basis of dissociable output, without also looking at the type of input and the interplay between the two. Therefore, instead of introducing yet another dissociation within the body representation(s) models, here we present a different view, focusing on the principles governing the construction of the body representations that are dependent on both available input and output.

A new approach

Two main problems in dissociating multiple body representations in healthy individuals with the use of bodily illusions are: (1) a disproportionate focus on output (i.e., task dependency) and (2) a failure to bring consensus to the current discussion between different body representation models. As an alternative, we suggest: (i) looking not only at the output but also at the type of input and the interplay between the two and (ii) identifying different models before conducting an experiment and then testing them directly and objectively against each other. The latter can be done on different levels, for example input, output or the different theoretical models on the number of body representations. Here, we propose Bayesian model selection as a method to objectively test different models against each other simultaneously. The Bayes factor is a statistical measure that can be used to calculate the posterior probability of a model, that is, the probability that the model is correct given the data and the other models under evaluation. (For an introduction to Bayesian data analysis, see for instance Gelman et al. (2004); Kass and Raftery (1995) provide a thorough overview of the properties of the Bayes factor as a model selection criterion.)

Why use Bayesian model selection as a tool to overcome the problems identified here? Application of the Bayes factor for this purpose has certain advantages in comparison to conventional null hypothesis testing. First, instead of having to compare each model of interest with the null model (or null hypothesis), the Bayes factor allows us to directly compare several models against each other. Second, this comparison of models does not result in the usual loss of power due to multiple comparisons, because all relationships between the parameters in a model are evaluated simultaneously. Third, the Bayes factor has a naturally incorporated “Occam’s razor”, which means that when two models explain the data equally well, the Bayes factor prefers the simpler model. These benefits are especially interesting for the problem of the indefinite number of body representations identified here. In this setting, the null model would be that there is no constraint of any body representation. This could mean either that there is only a single body representation or that no body representation underlies the different responses at all. The other models would be, for example, the Dual model and Triadic model as well as perhaps a Quartic model (Sirigu et al. 1991). The experimental design should include at least as many different response types as there are possible body representations in the most complex model. In this case, four different tasks would be needed to tap into the four possible body representations, for example a semantic task, a ballistic motor task, a purely perceptual localization task and a matching task. Next, data can be collected and the models can be tested in one single experiment, to evaluate which of these models best explains the data. In other words, this would answer the question whether we need two, three or four body representations to explain different effects of a bodily illusion on different types of tasks.
This can potentially lead to more consensus within the body representation literature and to less isolated experiments. Next, we will provide a detailed and more technical example of the application of Bayesian model selection for this purpose.

Two rubber hand illusion experiments as a technical illustration

To illustrate the more technical application of this approach, we discuss two RHI experiments in detail. The RHI depends on the temporal correlation between visual and tactile stimulation (i.e., stroking), in which the discrepancy in location is overcome by “visual capture” of the tactile sensation, resulting in a feeling of ownership over the rubber hand and an illusory shift in the perceived location of the subject’s own hand towards the location of the rubber hand. The standard control condition involves asynchronous stimulation of the rubber hand and the subject’s own hand (Botvinick and Cohen 1998). An asynchronous stimulation, compared to synchronous stimulation, results in less feeling of ownership over the rubber hand and a smaller relocation of the subject's own occluded hand towards the visible rubber hand. First, we will discuss an imaginary dataset based on the standard way of investigating the effect of a bodily illusion on only a single type of response. Example 1 does therefore not address the issue of relating multiple responses to multiple body representations, but provides a simple example demonstrating that the proposed approach can be administered on different levels to investigate bodily illusions and body representations. Second, in Example 2 we discuss a more complicated, previously published RHI design, showing how the approach can deal with the conceptual implications of different perceived locations within one type of response (Kammers et al. 2009a).

In both examples we transformed different possible ways of integrating the RHI-induced conflict between multisensory information sources into inequality and equality constrained models. Subsequently, a Bayesian model selection criterion, i.e., the Bayes factor, will be used to investigate which tentative model (or models) of sensory integration can best describe the different perceived body locations.

Bayesian model selection: Example 1

In this first example we use an imaginary RHI dataset based on what has been frequently reported (e.g., Botvinick and Cohen 1998; Tsakiris and Haggard 2005). Five imaginary participants gave a perceptual judgment of the perceived location of their stimulated limb after either synchronous stroking (illusion condition) or asynchronous stroking (control condition). In this simplified version of an actual RHI experiment we investigate the effect of synchronicity of tactile stimulation. More precisely, we look at the mean response errors after synchronous versus asynchronous stroking to investigate the effect of the RHI (Table 1).

Table 1. Illustrative data set of five participants

| Participant | x1 (response error in cm after synchronous stroking) | x2 (response error in cm after asynchronous stroking) |
|---|---|---|
| 1 | 5.0 | 1.0 |
| 2 | 2.0 | 1.0 |
| 3 | 1.0 | 2.0 |
| 4 | 4.0 | 0.0 |
| 5 | 3.0 | 3.0 |
| Mean | 3.0 | 1.4 |

This relocation error provides insight into the underlying relative weighting of visual and proprioceptive information. It is already known that accurate limb localization is based in general on the multimodal combination of visual and proprioceptive information (Desmurget et al. 1995; Graziano 1999; Graziano and Botvinick 2002). Several models have been proposed to account for this difference in multisensory weighting depending on the task demands (Deneve and Pouget 2004; Ernst and Banks 2002; Scheidt et al. 2005; van Beers et al. 1998, 1999, 2002). Although these models differ in the way multisensory information is integrated, they all agree that the objective of this integration/weighting is to reduce uncertainty and create an accurate (consistent) localization of the limb. A wide range of studies suggest that the relative weights that are given to both information sources seem to depend on a range of factors. For instance, the weighting seems to alter between different spatial directions (van Beers et al. 2002), which remains true even during illusory induced reaching errors (Snijders et al. 2007). Furthermore, different locations of the hand with respect to the body (Rossetti et al. 1994), and even illumination conditions of the hand and the visual background (Mon-Williams et al. 1997) can modify the relative weight given to vision and proprioception. Additionally, when looking at movements, the relative weighting seems to differ for the trajectory of the action versus the end point of a movement (Scheidt et al. 2005).

Model specification

We denote by μ the mean of the induced relocation of the illuded hand. In other words, this number represents the mean error between the perceived and the veridical location of the subject’s own hand in centimeters. From this number the relative weight of vision and proprioception can be derived. Complete visual dominance would result in a μ equal to the distance between the rubber hand and the subject’s own hand. By contrast, complete proprioceptive dominance would result in a μ of zero. In that case there would be no error between the perceived and the veridical location of the subject’s own hand.
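
As a minimal sketch of this derivation, suppose the perceived hand location is a linear weighted average of the seen rubber hand location and the felt hand location. This linear form is a simplifying assumption made here for illustration, not a claim taken from the cited integration models. Under it, the visual weight equals μ divided by the distance between the two hands. The function name `visual_weight` and the 15 cm distance are hypothetical.

```python
def visual_weight(mu_cm: float, distance_cm: float) -> float:
    """Relative weight of vision under an assumed linear cue-combination model.

    perceived = w_vision * rubber_hand + (1 - w_vision) * own_hand,
    so the mean relocation mu equals w_vision * distance.
    """
    if distance_cm <= 0:
        raise ValueError("distance_cm must be positive")
    return mu_cm / distance_cm


# Hypothetical distance between the rubber hand and the subject's own hand.
DISTANCE_CM = 15.0

# mu = 0 is complete proprioceptive dominance; mu = distance is complete visual dominance.
for mu in (0.0, 3.0, DISTANCE_CM):
    w_v = visual_weight(mu, DISTANCE_CM)
    print(f"mu = {mu:4.1f} cm -> w_vision = {w_v:.2f}, w_proprioception = {1 - w_v:.2f}")
```

The two extreme cases in the loop correspond to the two dominance scenarios described above; intermediate values of μ give intermediate weights.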

In this first example we test two very simple models. We consider the inequality constrained model M1: μ1 > μ2, which states that there is an illusory relocation after synchronous stroking: the error towards the location of the rubber hand is larger after synchronous stroking than after asynchronous stroking. In terms of multisensory integration this means that visual information about the rubber hand is weighted more strongly after synchronous stroking than after asynchronous stroking or, conversely, that proprioception is weighted more strongly after asynchronous stroking than after synchronous stroking.

This model will be tested against the unconstrained model M0: μ1, μ2, which does not make any assumptions about the weighting of vision and proprioception, that is, μ1 and μ2 can have any combination of values.

Bayes factor

The Bayes factor, which is denoted by Bji, is a model selection criterion that provides the amount of evidence in the data in favour of model Mj against model Mi. If B10 > 1, then model M1 receives more evidence from the data than model M0. For example, if B10 = 3.0, there is three times more evidence in the data in favour of model M1 in comparison to model M0. Note that this is equivalent to B01 = 1/3.0 ≈ 0.33.
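
For reference, the Bayes factor is conventionally defined as the ratio of the marginal likelihoods of the two models (see Kass and Raftery 1995). The symbol $m_j(X)$ for the marginal likelihood of model $M_j$ is notation introduced here for illustration only:

```latex
B_{ji} = \frac{m_j(X)}{m_i(X)},
\qquad
B_{ij} = \frac{1}{B_{ji}},
\qquad
\text{e.g. } B_{10} = 3.0 \;\Rightarrow\; B_{01} = \frac{1}{3.0} \approx 0.33 .
```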

When selecting between the inequality constrained model (M1: μ1 > μ2) and the unconstrained model (M0: μ1, μ2) based on the hypothetical data in Table 1, the Bayes factor can be calculated using the encompassing prior approach discussed by Klugkist et al. (2005). This methodology was generalized to address the multivariate normal model by Mulder et al. (2009).

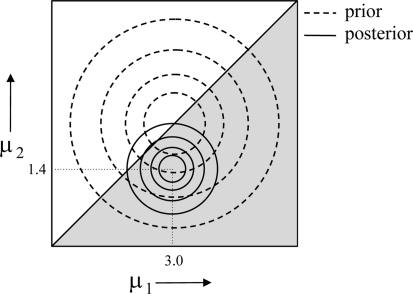

First, a prior distribution must be specified for the model parameters (μ1, μ2) under the unconstrained model M0. This prior is also referred to as the encompassing prior. The prior distribution represents the knowledge we have about the model parameters before observing the data. We assume vague (noninformative), independent, and identically distributed priors for μ1 and μ2 so that the posterior distribution is dominated by the data. Figure 1 displays a contour plot of this prior (dashed lines).

Fig. 1. Sketch of contour plots of prior and posterior densities based on the data of Table 1. The complete square can be interpreted as the unconstrained space of M0 and the grey area can be interpreted as the inequality constrained space of M1. The proportion of the prior satisfying μ1 > μ2 is 0.5. The proportion of the posterior satisfying μ1 > μ2 is 0.97. Note that the prior distribution is broad and vague, whilst the posterior distribution is narrower and centred on the means in the empirical data

When updating our knowledge about (μ1, μ2) using the data in Table 1, we obtain the posterior distribution of (μ1, μ2), which represents our knowledge about (μ1, μ2) after observing the data. For this data set, the posterior would be located around the sample means (3, 1.4) as is displayed in Fig. 1.

Note that the posterior variances of μ1 and μ2 are smaller than the prior variances as can be seen by the smaller radius of the contours of the posterior in Fig. 1. This is a consequence of the posterior containing more information about μ1 and μ2 than the prior.

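According to the encompassing prior approach, the Bayes factor B10 of model M1 versus model M0 is the proportion of the posterior that satisfies the constraint μ1 > μ2 divided by the proportion of the encompassing prior that satisfies it. With the proportions shown in Fig. 1, this gives:

$$B_{10} = \frac{\text{proportion of the posterior satisfying } \mu_1 > \mu_2}{\text{proportion of the prior satisfying } \mu_1 > \mu_2} = \frac{0.97}{0.5} = 1.94$$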

Hence, model M1 is almost two times better than model M0 at explaining the observed data. Therefore, the model that assumes a larger error towards the rubber hand after synchronous stroking (μ1 > μ2) should be preferred over the unconstrained model (μ1, μ2 unconstrained) given the data in Table 1. In terms of multisensory integration this means that visual information is relatively more strongly weighted after synchronous stroking compared to asynchronous stroking.
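
The following sketch illustrates the encompassing prior computation on the data in Table 1. It rests on assumptions that are made here and are not confirmed by the text: the posteriors of μ1 and μ2 are treated as independent normal distributions centred on the sample means with variance s²/n, and the symmetric vague prior places half of its mass on μ1 > μ2. Under these assumptions the posterior proportion comes out close to the 0.97 reported in Fig. 1. The function names are illustrative.

```python
from math import erf, sqrt
from statistics import mean, variance

# Table 1: response errors (cm) after synchronous (x1) and asynchronous (x2) stroking.
x1 = [5.0, 2.0, 1.0, 4.0, 3.0]
x2 = [1.0, 1.0, 2.0, 0.0, 3.0]


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


def posterior_proportion(a, b) -> float:
    """P(mu_a > mu_b | data) under the assumed independent normal posteriors."""
    diff = mean(a) - mean(b)
    # Standard error of the difference, using sample variances (assumption).
    se = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return normal_cdf(diff / se)


# Symmetric encompassing prior: half of its mass satisfies mu1 > mu2.
PRIOR_PROPORTION = 0.5

post = posterior_proportion(x1, x2)
bayes_factor_10 = post / PRIOR_PROPORTION

print(f"posterior proportion satisfying mu1 > mu2: {post:.3f}")
print(f"B10 = {bayes_factor_10:.2f}")
```

A sampling-based version would draw from the prior and the posterior and count the proportion of draws satisfying the constraint; the closed-form normal approximation is used here only to keep the sketch short and deterministic.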

The Bayes factor can be used to calculate posterior model probabilities, denoted by p(Mi|X), which reflect the probability that model Mi is correct given the data X and the other models under evaluation. In this example, assuming equal prior model probabilities and noting that B00 = 1, the posterior model probability of M1 is calculated according to:

$$p(M_1 \mid X) = \frac{B_{10}}{B_{00} + B_{10}} = \frac{1.94}{1 + 1.94} = 0.66$$

Similarly, the posterior model probability of the unconstrained model is p(M0|X) = 0.34.
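
Posterior model probabilities follow from the Bayes factors once prior model probabilities are fixed. The sketch below assumes equal prior probabilities for all models under comparison (an assumption made here) and expresses every Bayes factor relative to the unconstrained model M0, so that B00 = 1. The helper name `posterior_model_probabilities` is illustrative.

```python
def posterior_model_probabilities(bayes_factors_vs_m0):
    """Convert Bayes factors against M0 into posterior model probabilities.

    Assumes equal prior model probabilities, so that
    p(M_i | X) = B_i0 / sum_j B_j0.
    """
    total = sum(bayes_factors_vs_m0.values())
    return {model: bf / total for model, bf in bayes_factors_vs_m0.items()}


# B00 = 1 by definition; B10 = 0.97 / 0.5 uses the posterior and prior
# proportions reported in Fig. 1.
probs = posterior_model_probabilities({"M0": 1.0, "M1": 0.97 / 0.5})

for model, p in probs.items():
    print(f"p({model} | X) = {p:.2f}")
```

Because the function accepts any number of models, the same conversion applies when more than two models are compared at once.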

Bayesian model selection: Example 2

In this second example we use an existing dataset (Kammers et al. 2009a) that exemplifies the main pitfall of the premise that all significantly different types of output are subserved by dissociable body representations. In this experiment, we applied the RHI paradigm and measured its effect on different types of responses to investigate possible dissociable body representations. The subject’s own occluded right index finger and the visible index finger of the rubber hand were stroked either synchronously (illusion condition) or asynchronously (control condition). After this stimulation period, one of five perceptual localization responses was collected.

The perceptual response was a matching judgment in which the subject verbally indicated when the experimenter’s left index finger on top of the framework mirrored the perceived location of the subject’s own right index finger inside the framework. Perceptual response 1 was asked immediately after the RHI induction.

The perceptual responses 2 through 5 were all conducted after two action responses. In these cases there was first the stimulation period, next two pointing responses and finally a perceptual response. A pointing response could be conducted either with the illuded hand towards the location of the tip of the index finger of the non-illuded hand, or vice versa. All pointing movements were done inside the framework out of view. The pointing hand landed on a Plexiglas pane placed above the target hand so no cues about pointing accuracy were provided.

Perceptual response 2 was given after the subject pointed twice with the non-illuded hand towards the perceived location of the index finger of the illuded hand. Perceptual response 3 was conducted after a pointing movement first with the illuded hand towards the non-illuded hand and next with the non-illuded hand towards the illuded hand. Perceptual response 4 was identical to perceptual response 3, except that the order of the two previous pointing movements was reversed. Finally, perceptual response 5 was preceded by two pointing movements with the illuded hand.

Our conventional line of reasoning holds that if the perceived location of the illuded hand measured with response X significantly differs from the perceived location of the illuded hand with response Y, then X and Y must be based on different underlying body representations (Kammers et al. 2006). This line of reasoning works relatively well if we administer qualitatively different tasks, such as actions (body schema) versus perceptual localization tasks (body image). However, this reasoning introduces the risk of becoming redundant when we find significantly different perceived locations for response X1, X2, X3 etc., like we do for the perceptual responses in this experiment (Kammers et al. 2009a). Strictly speaking this could be interpreted as evidence for three different body images. Therefore, in this case, investigating the underlying multisensory integration processes in more detail might be more informative than simply proposing numerous dissociable body representations/images. Here, we investigate whether the difference in magnitude between these perceptual judgments can be explained by differences in the weighting of information depending on both the availability and quantity of more up to date proprioceptive information when visual information is no longer directly available. In this way, dissociable perceived locations for the different perceptual responses do not necessarily need to be explained by distinct multiple underlying body representations.

Model specification

We identified the following two important aspects which might have affected the relative weighting of visual and proprioceptive information: (1) the available (multi)sensory information and (2) the precision of each mode of information (for example, vision has proven to be more precise than proprioception in certain cases).

In the present example, new proprioceptive information about the location of the illuded hand is only available for Perceptual responses 3, 4, and 5. The amount of information was the same for responses 3 and 4, but doubled for response 5. For perceptual response 2 there is no new proprioceptive information of the illuded hand and the visual information is older than during perceptual response 1 which may or may not affect its relative weight.

Subsequently, we identified three different possible tentative weighting models, which might explain the plurality of dissociable perceived locations of the same limb for the same type of task (perceptual matching as a means to measure the body image) in this experiment.

M1 (equality model). The location of the illuded hand is the result of a specific relative weighting between vision and proprioception that is equal across all conditions. In other words, it is unaffected by the presence or amount of new proprioceptive information, which would thus result in the same localization error for all perceptual responses.

M2 (availability model). The location of the illuded hand is unaffected by the amount of new proprioceptive information. However, when new proprioceptive information is provided the relative weight of visual information is reduced. This would result in similar localization errors for perceptual responses 3, 4, and 5, which would be smaller than the relocation errors found for perceptual responses 1 and 2.

M3 (quantitative model). The location of the illuded hand is influenced by the presence as well as the quantity of more up to date proprioceptive information. In other words, the perceived location of the hand is not only influenced by movement of the illuded hand but also by the number of movements that are made before the perceptual response is given. This would result in a smaller relocation error for perceptual response 5 than for perceptual responses 3 and 4.

We translate these hypotheses into constrained statistical models. To that end, we first compute the strength of the RHI (illusion minus control condition) for each perceptual judgment, which yields five measurements per subject.

Bayes factor

Response errors for 14 subjects in all 5 conditions are displayed in Table 2.

Table 2.

Overview of the real dataset, showing the RHI-dependent location error in centimeters (cm) for each perceptual response (data previously published in Kammers et al. 2009a)

Column headers give the perceptual response number, followed by the proprioceptive update (preceding pointing movements).

| Subject | 1. none | 2. none (twice left) | 3. partial (left then right) | 4. partial (right then left) | 5. maximal (twice right) |
|---|---|---|---|---|---|
| 1 | 5.46 | 5.67 | 3.33 | 2.67 | 1.33 |
| 2 | 6.75 | 5.67 | 2.00 | 3.00 | 0.00 |
| 3 | 5.54 | 4.67 | 4.67 | 2.67 | 0.00 |
| 4 | 6.83 | 6.67 | 3.67 | 4.00 | 3.67 |
| 5 | 5.38 | 4.33 | 3.67 | 3.33 | 3.33 |
| 6 | 4.92 | 4.00 | 4.00 | 3.67 | 2.00 |
| 7 | 6.25 | 6.00 | 3.33 | 3.33 | 2.67 |
| 8 | 5.83 | 4.67 | 2.00 | 3.00 | 2.33 |
| 9 | 5.92 | 7.17 | 4.00 | 4.00 | 0.67 |
| 10 | 6.13 | 5.67 | 3.00 | 1.33 | 2.00 |
| 11 | 7.75 | 5.67 | 3.67 | 4.33 | 4.00 |
| 12 | 5.83 | 7.00 | 2.67 | 3.67 | 3.50 |
| 13 | 5.75 | 5.33 | 3.00 | 3.33 | 0.33 |
| 14 | 4.75 | 4.17 | 3.67 | 3.00 | 1.00 |
| Mean | 5.9 | 5.5 | 3.3 | 3.2 | 1.9 |
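As a purely descriptive illustration, the per-response means in the last row of Table 2 can be recomputed from the individual values, together with the sample covariance matrix of the five measurements. The sketch below uses only the Python standard library; the variable and function names are our own, and the unbiased sample covariance is a descriptive summary, not the Bayesian estimation procedure used for the model comparison.

```python
from statistics import mean

# RHI-dependent location errors (cm) copied from Table 2:
# one row per subject, one column per perceptual response (1 to 5).
errors = [
    [5.46, 5.67, 3.33, 2.67, 1.33],
    [6.75, 5.67, 2.00, 3.00, 0.00],
    [5.54, 4.67, 4.67, 2.67, 0.00],
    [6.83, 6.67, 3.67, 4.00, 3.67],
    [5.38, 4.33, 3.67, 3.33, 3.33],
    [4.92, 4.00, 4.00, 3.67, 2.00],
    [6.25, 6.00, 3.33, 3.33, 2.67],
    [5.83, 4.67, 2.00, 3.00, 2.33],
    [5.92, 7.17, 4.00, 4.00, 0.67],
    [6.13, 5.67, 3.00, 1.33, 2.00],
    [7.75, 5.67, 3.67, 4.33, 4.00],
    [5.83, 7.00, 2.67, 3.67, 3.50],
    [5.75, 5.33, 3.00, 3.33, 0.33],
    [4.75, 4.17, 3.67, 3.00, 1.00],
]


def column(data, j):
    """Return all subjects' values for perceptual response j (0-based)."""
    return [row[j] for row in data]


def sample_covariance(xs, ys):
    """Unbiased sample covariance (divides by n - 1)."""
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)


n_responses = len(errors[0])

# Sample mean of each perceptual response across the 14 subjects.
means = [mean(column(errors, j)) for j in range(n_responses)]

# 5 x 5 sample covariance matrix of the perceptual responses.
cov = [
    [sample_covariance(column(errors, i), column(errors, j))
     for j in range(n_responses)]
    for i in range(n_responses)
]

print("means:", [round(m, 1) for m in means])
for row in cov:
    print([round(c, 2) for c in row])
```

The rounded means reproduce the "Mean" row of Table 2; the diagonal of `cov` holds the variances and the off-diagonal entries the covariances between responses.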

These five measurements were modeled with a multivariate normal distribution N(μ, Σ) where μ is a vector of length 5 containing the means of the 5 measurements, i.e. (μ1, μ2, μ3, μ4, μ5), and Σ is the corresponding covariance matrix, which contains the variances and covariances of the five measurements. The three theories stated above can be translated into models with inequality and equality constraints between the measurement means according to:

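$$
\begin{aligned}
M_1 &: \mu_1 = \mu_2 = \mu_3 = \mu_4 = \mu_5 \\
M_2 &: (\mu_1, \mu_2) > \mu_3 = \mu_4 = \mu_5 \\
M_3 &: (\mu_1, \mu_2) > \mu_3 = \mu_4 > \mu_5
\end{aligned}
$$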

Please note here that model M 1 is equivalent to the null hypothesis, i.e., the perceived position of the subject’s own hand is based on a specific relative weighting between vision and proprioception that is equal across all conditions. Next, we calculate the Bayes factor of each model versus the other models. This can be done using the methodology described by Mulder et al. (2009). Finally, the posterior model probability of each model can be calculated using the Bayes factors according to:

$$
p(M_j \mid X) = \frac{B_{j1}}{B_{11} + B_{21} + B_{31}} \qquad (1)
$$

for j = 1, 2, 3, where B 11 = 1 because model M 1 is equally good as itself. As was mentioned earlier, the outcome reflects the probability that model M j is the correct model among the three models given the data.
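The normalization described above can be sketched in a few lines of Python. The sketch assumes equal prior probabilities for the three models, in which case each posterior model probability is the model's Bayes factor against M 1 divided by the sum of the Bayes factors against M 1. The function name and dictionary layout are our own; the Bayes factor values are those reported in Table 3.

```python
def posterior_model_probabilities(bayes_factors_vs_reference):
    """Turn Bayes factors B_j1 (each model against the same reference model)
    into posterior model probabilities.

    Assumes equal prior probabilities for all models, so the posterior
    probabilities are the Bayes factors normalized to sum to one.
    """
    total = sum(bayes_factors_vs_reference.values())
    return {model: b / total for model, b in bayes_factors_vs_reference.items()}


# Bayes factors against M1 as reported in Table 3; B11 = 1 by definition.
bf_vs_m1 = {"M1": 1.0, "M2": 50.0, "M3": 500.0}

posteriors = posterior_model_probabilities(bf_vs_m1)
for model, p in posteriors.items():
    print(f"p({model}|X) = {p:.3f}")
```

With these inputs the probabilities round to 0.002, 0.091, and 0.907, matching the values reported in Table 4.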

The Bayes factors between each pair of the three models are displayed in Table 3. From these results it can be concluded that the Quantitative model M 3 is the best of the three models, because there is decisive evidence in favor of model M 3 against model M 1 (B 31 = 500) as well as strong evidence in favor of model M 3 against model M 2 (B 32 = 10).

Table 3.

Bayes factors between the constrained models M 1, M 2, and M 3

| Bayes factor | Value |
|---|---|
| B 21 | 50 |
| B 31 | 500 |
| B 32 | 10 |
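Because each Bayes factor is a ratio of the marginal likelihoods of two models, the three values in Table 3 are not independent: B 32 should equal B 31 divided by B 21. The short check below is a sketch with variable names of our own choosing that confirms the reported values are mutually consistent.

```python
import math

# Bayes factors as reported in Table 3.
b21 = 50.0   # M2 against M1
b31 = 500.0  # M3 against M1
b32 = 10.0   # M3 against M2

# B31 = m3 / m1 and B21 = m2 / m1 (m_j: marginal likelihood of model j),
# so the Bayes factor of M3 against M2 is implied by the other two.
implied_b32 = b31 / b21

consistent = math.isclose(implied_b32, b32)
print(f"implied B32 = {implied_b32:g}, reported B32 = {b32:g}, consistent: {consistent}")
```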

The posterior model probabilities are calculated using Eq. 1 and are presented in Table 4. The Quantitative model M 3 is thus the most plausible of the three models given the data, with a posterior model probability of 0.91. This result implies that the perceived location of the subject’s own index finger depends on the relative weighting between (memorized) visual information and proprioceptive information, whereby the relative weights depend on the availability as well as the quantity of new proprioceptive information. As the subject moves the limb, additional proprioceptive information about the limb’s location becomes available, and the relative weight assigned to the visual information about the limb’s location diminishes.

Table 4.

Posterior model probabilities

| Model | Posterior model probability |
|---|---|
| p(M 1 \| X) | 0.002 |
| p(M 2 \| X) | 0.091 |
| p(M 3 \| X) | 0.907 |

Approached in this way, Example 2 shows that different sensed locations within a single perceptual task can be explained by differential weighting of information. This shifts the focus of interpretation back onto the interplay between the nature of the available sensory input and the specific output demands, providing more information about how the body representation is built up. This seems more informative and meaningful than classifying the task dependency of the RHI only in terms of different body representation categories, which themselves differ between body representation models in the first place.

Conclusion: the weight of representing the body

In the present paper, we address a problem that has recently arisen: the potentially indefinite number of body representations in healthy individuals when based on bodily illusion task-dependency alone. We propose a shift in focus by looking into how the sensory conflict induced during a bodily illusion is resolved depending on how different sensory weighting criteria are applied. Furthermore, we suggest identifying different models (either on the level of multisensory information or on the level of different theoretical body representation models) and testing them against each other simultaneously with Bayesian model selection in a single experiment. This way, we try to create more consensus and clarity within the body representations literature in healthy individuals. We illustrate the technical application of this approach in two RHI examples.

The advantage of this approach is twofold. First, the lack of unity between body representation models can now be overcome by testing these models directly against each other. The Bayes factor does not reveal which of the models is “the truth”, but it can tell which of the models under investigation receives the most support from the data. Second, the risk of infinite multiplication can be avoided by creating models that focus on the input together with the output, and by testing several different experimental manipulations against each other at the same time. Investigating how the body is represented, rather than in how many ways, might lead to more consensus and fewer isolated experiments.

Acknowledgments

The authors would like to thank Prof. Dr. H. Hoijtink for his valuable comments on an earlier version of the manuscript. This work was supported by the ANR grant No. JCJC06 133960 to FV, and a VIDI grant No. 452-03-325 to CD. Additional support was provided to MK by a Medical Research Council/Economic and Social Research Council (MRC/ESRC) fellowship (G0800056/86947).

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

References

- Botvinick M, Cohen J. Rubber hands ‘feel’ touch that eyes see. Nature. 1998;391:756. doi: 10.1038/35784.
- Buxbaum LJ, Coslett HB. Specialised structural descriptions for human body parts: evidence from autotopagnosia. Cogn Neuropsychol. 2001;18:289–306. doi: 10.1080/02643290042000071.
- Carruthers G. Types of body representation and the sense of embodiment. Conscious Cogn. 2008;17:1302–1316. doi: 10.1016/j.concog.2008.02.001.
- Coslett HB. Evidence for a disturbance of the body schema in neglect. Brain Cogn. 1998;37:527–544. doi: 10.1006/brcg.1998.1011.
- de Vignemont F. How many representations of the body? Behav Brain Sci. 2007;30:204–205. doi: 10.1017/S0140525X07001434.
- de Vignemont F. Body schema and body image: pros and cons. 2009 (in press).
- de Vignemont F, Ehrsson HH, Haggard P. Bodily illusions modulate tactile perception. Curr Biol. 2005;15:1286–1290. doi: 10.1016/j.cub.2005.06.067.
- Deneve S, Pouget A. Bayesian multisensory integration and cross-modal spatial links. J Physiol Paris. 2004;98:249–258. doi: 10.1016/j.jphysparis.2004.03.011.
- Desmurget M, Rossetti Y, Prablanc C, Stelmach GE, Jeannerod M. Representation of hand position prior to movement and motor variability. Can J Physiol Pharmacol. 1995;73:262–272. doi: 10.1139/y95-037.
- Dijkerman HC, De Haan EHF. Somatosensory processing subserving perception and action. Behav Brain Sci. 2007;30:189–201. doi: 10.1017/S0140525X07001392.
- Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415:429–433. doi: 10.1038/415429a.
- Gallagher S. How the body shapes the mind. New York: Oxford University Press; 2005.
- Gallagher S, Cole J. Body schema and body image in a deafferented subject. J Mind Behav. 1995;16:369–390.
- Gelman A, Carlin JB, Stern HS, Rubin DB. Bayesian data analysis. 2nd ed. London: Chapman and Hall; 2004.
- Graziano MS. Where is my arm? The relative role of vision and proprioception in the neuronal representation of limb position. Proc Natl Acad Sci USA. 1999;96:10418–10421. doi: 10.1073/pnas.96.18.10418.
- Graziano MSA, Botvinick MM. How the brain represents the body: insights from neurophysiology and psychology. In: Prinz W, Hommel B, editors. Common mechanisms in perception and action: attention and performance XIX. Oxford: Oxford University Press; 2002. pp. 136–157.
- Head H, Holmes HG. Sensory disturbances from cerebral lesions. Brain. 1911–1912;34:102–254.
- Holmes NP, Snijders HJ, Spence C. Reaching with alien limbs: visual exposure to prosthetic hands in a mirror biases proprioception without accompanying illusions of ownership. Percept Psychophys. 2006;68:685–701. doi: 10.3758/bf03208768.
- Kammers MPM, van der Ham IJ, Dijkerman HC. Dissociating body representations in healthy individuals: differential effects of a kinaesthetic illusion on perception and action. Neuropsychologia. 2006;44:2430–2436. doi: 10.1016/j.neuropsychologia.2006.04.009.
- Kammers MPM, de Vignemont F, Verhagen L, Dijkerman HC. The rubber hand illusion in action. Neuropsychologia. 2009;47:204–211. doi: 10.1016/j.neuropsychologia.2008.07.028.
- Kammers MPM, Verhagen L, Dijkerman HC, Hogendoorn H, de Vignemont F, Schutter D. Is this hand for real? Attenuation of the rubber hand illusion by transcranial magnetic stimulation over the inferior parietal lobule. J Cogn Neurosci. 2009;21:1311–1320. doi: 10.1162/jocn.2009.21095.
- Kammers MPM, Kootker JA, Hogendoorn H, Dijkerman HC. How many motoric body representations can we grasp? 2009 (in press).
- Kass RE, Raftery AE. Bayes factors. J Am Stat Assoc. 1995;90:773–795. doi: 10.2307/2291091.
- Kinsbourne M. The intralaminar thalamic nuclei: subjectivity pumps or attention–action coordinators? Conscious Cogn. 1995;4:167–171. doi: 10.1006/ccog.1995.1023.
- Klugkist I, Laudy O, Hoijtink H. Inequality constrained analysis of variance: a Bayesian approach. Psychol Methods. 2005;10:477–493. doi: 10.1037/1082-989X.10.4.477.
- Lackner JR. Some proprioceptive influences on the perceptual representation of body shape and orientation. Brain. 1988;111:281–297. doi: 10.1093/brain/111.2.281.
- Lackner JR, Taublieb AB. Reciprocal interactions between the position sense representations of the two forearms. J Neurosci. 1983;3:2280–2285. doi: 10.1523/JNEUROSCI.03-11-02280.1983.
- Longo MR, Schüür F, Kammers MPM, Tsakiris M, Haggard P. What is embodiment? A psychometric approach. Cognition. 2008;107:978–998. doi: 10.1016/j.cognition.2007.12.004.
- Mon-Williams M, Wann JP, Jenkinson M, Rushton K. Synaesthesia in the normal limb. Proc R Soc B: Biol Sci. 1997;264(1384):1007–1010.
- Mulder J, Hoijtink H, Klugkist I. Equality and inequality constrained multivariate linear models: objective model selection using constrained posterior priors. 2009 (in press).
- O’Shaughnessy B. Proprioception and the body image. In: Bermudez JL, Marcel A, Eilan N, editors. The body and the self. Cambridge: MIT Press; 1995. pp. 175–205.
- Paillard J. Body schema and body image: a double dissociation in deafferented patients. In: Gantchev GN, Mori S, Massion J, editors. Motor control, today and tomorrow. Sofia: Academic Publishing House “Prof. M. Drinov”; 1999. pp. 197–214.
- Rossetti Y, Meckler C, Prablanc C. Is there an optimal arm posture? Deterioration of finger localization precision and comfort sensation in extreme arm-joint postures. Exp Brain Res. 1994;99:131–136. doi: 10.1007/BF00241417.
- Rossetti Y, Rode G, Boisson D. Implicit processing of somaesthetic information: a dissociation between where and how? Neuroreport. 1995;6:506–510. doi: 10.1097/00001756-199502000-00025.
- Scheidt RA, Conditt MA, Secco EL, Mussa-Ivaldi FA. Interaction of visual and proprioceptive feedback during adaptation of human reaching movements. J Neurophysiol. 2005;93:3200–3213. doi: 10.1152/jn.00947.2004.
- Schwoebel J, Coslett HB. Evidence for multiple, distinct representations of the human body. J Cogn Neurosci. 2005;17:543–553. doi: 10.1162/0898929053467587.
- Sirigu A, Grafman J, Bressler K, Sunderland T. Multiple representations contribute to body knowledge processing. Evidence from a case of autotopagnosia. Brain. 1991;114:629–642. doi: 10.1093/brain/114.1.629.
- Snijders HJ, Holmes NP, Spence C. Direction-dependent integration of vision and proprioception in reaching under the influence of the mirror illusion. Neuropsychologia. 2007;45:496–505. doi: 10.1016/j.neuropsychologia.2006.01.003.
- Tsakiris M, Haggard P. The rubber hand illusion revisited: visuotactile integration and self-attribution. J Exp Psychol Hum Percept Perform. 2005;31:80–91. doi: 10.1037/0096-1523.31.1.80.
- van Beers RJ, Sittig AC, Denier van der Gon JJ. The precision of proprioceptive position sense. Exp Brain Res. 1998;122:367–377. doi: 10.1007/s002210050525.
- van Beers RJ, Sittig AC, Denier van der Gon JJ. Integration of proprioceptive and visual position-information: an experimentally supported model. J Neurophysiol. 1999;81:1355–1364. doi: 10.1152/jn.1999.81.3.1355.
- van Beers RJ, Wolpert DM, Haggard P. When feeling is more important than seeing in sensorimotor adaptation. Curr Biol. 2002;12:834–837. doi: 10.1016/S0960-9822(02)00836-9.