Abstract

A principle of efficient language production based on information theoretic considerations is proposed: Uniform Information Density predicts that language production is affected by a preference to distribute information uniformly across the linguistic signal. This prediction is tested against data from syntactic reduction. A single multilevel logit model analysis of naturally distributed data from a corpus of spontaneous speech is used to assess the effect of information density on complementizer that-mentioning, while simultaneously evaluating the predictions of several influential alternative accounts: availability, ambiguity avoidance, and dependency processing accounts. Information density emerges as an important predictor of speakers’ preferences during production. As information is defined in terms of probabilities, it follows that production is probability-sensitive, in that speakers’ preferences are affected by the contextual probability of syntactic structures. The merits of a corpus-based approach to the study of language production are discussed as well.

Keywords: Efficient language production, Rational cognition, Syntactic production, Syntactic reduction, Complementizer that-mentioning

1. Introduction

The extent to which language and language use are organized to be efficient has attracted researchers from various disciplines for at least close to a century (Aylett & Turk, 2004; Chomsky, 2005; Fenk-Oczlon, 2001; Genzel & Charniak, 2002; Givón, 1979; Hawkins, 2004; Landau, 1969; Manin, 2006; van Son, Beinum, Koopmans-van, & Pols, 1998; Zipf, 1935, 1949). Probably one of the earliest observations related to efficient language production is the link between word frequency and word form (Schuchardt, 1885; Zipf, 1929, 1935). The observation that frequent words generally have shorter linguistic forms (Zipf, 1935) was an important piece of evidence that led Zipf to propose his famous Principle of Least Effort, according to which human behavior is affected by a preference to minimize “the person’s average rate of work-expenditure over time” (Zipf, 1949, p. 6). In this context, it is intuitively efficient for more frequent words to have shorter phonological forms. More recent evidence suggests that word length (in phonemes or syllables) is even more strongly correlated with words’ average predictability in context than with their frequency (Piantadosi, Tily, & Gibson, 2009; see also Manin, 2006). This inverse relation between contextual probability and linguistic form is expected given information theoretic considerations about efficient communication (Shannon, 1948, for more detail see below): the more probable (expected) a word is in its context, the less information it carries (the more redundant it is) in that context. The observed link between probability and phonological form can then be restated in terms of information: on average, words that add more (new) information to their context have longer phonological forms. Intriguingly, this link between information, redundancy, and probability on the one hand and linguistic form on the other hand is not limited to the mental lexicon, but seems to extend to lexical production. Several studies over recent years have found that more predictable instances of the same word are on average produced with shorter duration and with less phonological and phonetic detail (Aylett & Turk, 2004, 2006; Bell et al., 2003, Bell, Brenier, Gregory, Girand, & Jurafsky, 2009; Pluymaekers, Ernestus, & Baayen, 2005; van Son et al., 1998; van Son & van Santen, 2005 among others).

In short, the distribution of phonological forms in the mental lexicon as well as evidence from phonetic and phonological reduction during online production suggest that language strikes an efficient balance between the information conveyed by linguistic units and the amount of signal associated with them (cf. Aylett & Turk, 2004). This raises an intriguing possibility. Human language production could be organized to be efficient at all levels of linguistic processing in that speakers prefer to trade off redundancy and reduction. Put differently, speakers may be managing the amount of information per amount of linguistic signal (henceforth information density), so as to avoid peaks and troughs in information density. If so, it should be possible to observe effects of this trade-off on speakers’ preferences at choice points during utterance planning.

Choice points that would theoretically allow speakers to manage information density are ubiquitous even beyond phonetic and phonological planning. To name just a few: during morphosyntactic production, speakers of many languages can sometimes choose between full or contracted forms (e.g. in auxiliary contraction, as in he is vs. he’s, Frank & Jaeger, 2008); during syntactic production, speakers sometimes have a choice between full and reduced constituents (e.g. in optional that-mentioning, as in This is the friend (that) I told you about, (Ferreira & Dell, 2000; Race & MacDonald, 2003); optional to-mentioning, as in It helps you (to) focus where your money goes, Rohdenburg, 2004); speakers often can even elide entire constituents (e.g. optional argument and adjunct omission, as in I already ate (dinner), Brown & Dell, 1987; Resnik, 1996); and at the earliest stages of production planning, speakers can choose to distribute their intended message over one or more clauses (e.g. Ok, next move the triangle over there vs. Ok, next take the triangle and move it over there, Brown & Dell, 1987; Gómez Gallo, Jaeger, & Smyth, 2008; Levelt & Maassen, 1981). Some of these choice points are arguably available during any sentence and similar choice points are available in other languages. If language production is organized to be efficient in that speakers prefer to distribute information uniformly across the linguistic signal, the form with less linguistic signal should be less preferred whenever the reducible unit encodes a lot of information.

Unfortunately, the effect of information density on production beyond the lexical level has remained almost entirely unexplored (but see Genzel & Charniak, 2002; Resnik, 1996; discussed below). This is despite a very rich tradition of research on speakers’ preferences during syntactic production (e.g. work on accessibility effects, e.g. Bock & Warren, 1985; Ferreira, 1994; Ferreira & Dell, 2000; Prat-Sala & Branigan, 2000; dependency length minimization, e.g. Elsness, 1984; Hawkins, 1994, 2001, 2004; syntactic priming, e.g. Bock, 1986; Pickering & Ferreira, 2008).

In this article, I explore the hypothesis that language production at all levels of linguistic representation is organized to be communicatively efficient. I present and discuss the hypothesis of Uniform Information Density (developed in collaboration with Roger Levy; see Jaeger, 2006a; Levy & Jaeger, 2007). The hypothesis of Uniform Information Density links speakers’ preferences at choice points during incremental language production to information theoretic theorems about efficient communication through a noisy channel with a limited bandwidth (Shannon, 1948). I test the prediction of this hypothesis that syntactic production reflects a preference to distribute information uniformly across the speech signal.

Successful transfer of information through a noisy channel with a limited bandwidth is maximized by transmitting information uniformly close to the channel’s capacity (Genzel & Charniak, 2002). Information is defined information theoretically in terms of probabilities. The Shannon information of a word, I(word), is the logarithm-transformed inverse of its probability, . Since in natural language the probability of a word depends on the context it occurs in, the definition of Shannon information captures that a word’s information, too, is context dependent. Intuitively (and simplifying for now), efficient communication balances the risk of transmitting too much information per time (or per signal), which increases the chance of information loss or miscommunication, against the desire to convey as much information as possible with as little signal as possible. If human language use is communication through a noisy channel, linguistic communication would be optimal if (a) on average each word adds the same amount of information to what we already know and (b) the rate of information transfer is close to the channel capacity.1 It seems unlikely that all aspects of language are organized so as to achieve optimal communication, given that language is subject to many other constraints (e.g. languages must be learnable). Still, it is possible that language production is efficient, in that speakers aim to communicate efficiently within the bounds defined by grammar. If so, speakers should (a) aim for a relatively uniform distribution of information across the signal wherever possible without (b) continuously under- or overutilizing the channel. The hypothesis of Uniform Information Density, which is tested in this paper, focuses on the first prediction (see also Aylett & Turk, 2004; Genzel & Charniak, 2002; Jaeger, 2006a; Levy & Jaeger, 2007).

Uniform Information Density (UID)

Within the bounds defined by grammar, speakers prefer utterances that distribute information uniformly across the signal (information density). Where speakers have a choice between several variants to encode their message, they prefer the variant with more uniform information density (ceteris paribus).

Two aspects of the definition deserve immediate clarification. For the purpose of this article, ‘information density’ corresponds roughly to information per time. It is, however, important to keep in mind that the relevant notion of information density of the acoustic signal may also depend on articulatory detail (cf. earlier versions of UID in Jaeger (2006a) and Levy & Jaeger (2007), which did not take this into consideration). Second, the term ‘choice’ does not imply conscious decision making. It is simply used to refer to the existence of several different ways to encode the intended message into a linguistic utterance.

Given the definition of information, UID assumes that speakers have access to probability distributions over linguistic units (segments, words, syntactic structures, etc.). This distinguishes UID from most existing production accounts, which make different architectural assumptions and do not predict information density to affect speakers’ preferences (e.g. availability accounts, Ferreira, 1996; Ferreira & Dell, 2000; Levelt & Maassen, 1981; alignment accounts, Bock & Warren, 1985; Ferreira, 1994; dependency processing accounts Hawkins, 1994, 2004). Among the accounts that share UID’s architectural assumption that speakers employ probability distributions during production are connectionist accounts (Dell, Chang, & Griffin, 1999; Chang, Dell, & Bock, 2006) and work on probability-sensitive production (e.g. Aylett & Turk, 2004; Bell et al., 2003, 2009; Gahl & Garnsey, 2004; Resnik, 1996; Stallings, MacDonald, & O’Seaghdha, 1998).

Previous findings from the phonetic and phonological reduction of words in spontaneous speech lend initial support to the hypothesis of Uniform Information Density (see references above). To investigate the effect of information density on production beyond the lexical level, I investigate a case of syntactic reduction, optional that-mentioning in English complement clauses. When speakers of English produce an utterance with a complement clause, they have the option of mentioning the complementizer, as in (1a), or omitting the complementizer, as in (1b) (example taken from the Switchboard corpus, Godfrey, Holliman, & McDaniel, 1992):

-

(1)

I know [that the expectation for them was, uh, to have sex …].

I know [ the expectation for them was, uh, to have sex …].

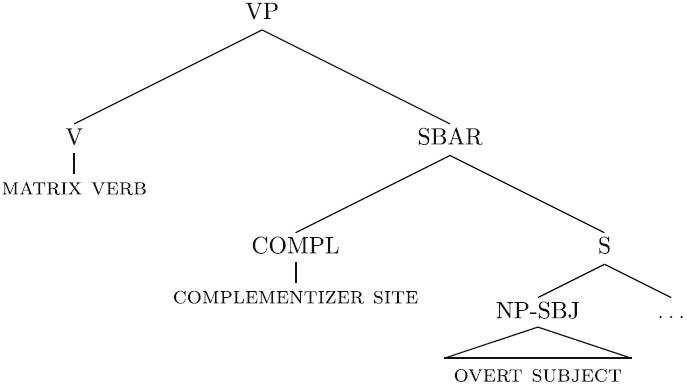

UID predicts that the production system is set up in such a way that information density directly or indirectly affects speakers’ preferences during production. That is, as speakers incrementally encode their intended message, their preferences at choice points should be affected by the relative information density of different continuations compatible with the intended meaning. Hence, UID does not predict that every word provides the same amount of information, but rather that, where grammar permits, speakers aim to distribute information more uniformly without exceeding the channel’s capacity. Fig. 1 serves to illustrate this prediction for that-mentioning in complement clauses. The hypothetical distribution of information for the same complement clause with and without the complementizer that is shown. Intuitively, mentioning the complementizer distributes the information at the onset of the complement clause over more words (this prediction will be spelled out below). If the information density at the onset of the complement clause is so high that it would otherwise exceed the channel capacity, as in Fig. 1a, speakers are predicted to prefer the full complement clause with that, thereby lowering information density. If, however, the information density at the complement clause onset is low, as in Fig. 1b, speakers are predicted to prefer the reduced variant, which avoids unnecessary redundancy.

Fig. 1.

Illustration of the development of information density over time (here simply information per word) for two alternative ways to encode the same message with a complement clause. Dashed lines indicate the variants without the complementizer that. Dotted lines indicate the variants with the complementizer that. A purely hypothetical channel capacity is indicated by the solid horizontal line (the value of 4 bits/word is arbitrarily chosen). (a) An example with high a priori information density at the complement clause onset. (b) An example with low information density.

The goals of this article are twofold. The first goal is to establish UID as a computational account of efficient sentence production. I provide evidence from that-mentioning that syntactic production is sensitive to information density and, more generally, that syntactic production is probability-sensitive. I summarize further evidence supporting UID and discuss the relation between UID and existing algorithmic accounts of sentence production, such as availability-based production (e.g. Bock & Warren, 1985; Ferreira & Dell, 2000; Levelt & Maassen, 1981) and ambiguity avoidance accounts (e.g. Bolinger, 1972; Clark & Fox Tree, 2002).

The data in this article are sampled from a corpus of spontaneous speech. The use of such naturally distributed data avoids a serious problem inherent to the use of balanced designs in psycholinguistic experiments that, I argue, has so far been underestimated. There is considerable evidence that listeners and speakers are sensitive to probability distributions (for comprehension, Hale, 2001; Jurafsky, 1996; Kamide, Altmann, & Haywood, 2003; Levy, 2008; MacDonald, 1994; McDonald & Shillcock, 2003; Staub & Clifton, 2006; Trueswell, 1996; for production, Bell et al., 2003, 2009; Gahl & Garnsey, 2004; Stallings et al., 1998, as well as the work presented here) and that they adapt to changes in these distributions (e.g. Saffran, Johnson, Aslin, & Newport, 1999; Wells, Christiansen, Race, Acheson, & MacDonald, 2009). There is even evidence that such adaptation can take place after relatively little exposure (e.g. Clayards, Tanenhaus, Aslin, & Jacobs, 2008). Consider also that one of the most widely used experimental paradigms in work on production, syntactic priming (Bock, 1986; Pickering & Ferreira, 2008), trades on recent exposure changing speakers’ behavior. Hence, it seems paramount to develop methods that facilitate well-controlled investigations of language production without exposing speakers to unusual distributions (such as balanced and hence uniform distributions, as opposed to, for example power law distributions, cf. Zipf, 1935, 1949). The corpus-based approach taken here constitutes such a method. Modern statistical regression models are used to deal with the unbalanced data that inevitably result from natural distributions. Such corpus-based studies are still rare in work on language production and there is skepticism about the use of corpus studies as tests of psycholinguistic hypotheses. The second goal of this article is to illustrate that a corpus-based approach is not only feasible, but a desirable methodological addition to research on the cognitive psychology of language production (see also Baayen, Feldman, & Schreuder, 2006; Bresnan, Cueni, Nikitina, & Baayen, 2007; Jaeger, 2006a, submitted for publication; Roland, Elman, & Ferreira, 2005).

2. Testing Uniform Information Density against syntactic reduction in spontaneous speech

UID predicts that speakers aim to transmit information uniformly close to, but not exceeding, the channel capacity. Mentioning the complementizer that at the onset of a complement clause distributes the same amount of information over one more word, thereby lowering information density. Hence, everything else being the same, speakers should be more likely to produce full complement clauses (CCs with that) than reduced CCs (without that), the higher the information of the CC onset in its context. This prediction, which is not shared by alternative accounts of syntactic production (at least not in their current form), is tested against data from spontaneous speech. For the purpose of the illustration, consider the CC onset to be the first word in CCs without that or the first two words in CCs with that. The overall Shannon information of the CC onset then consists of the information contained in the syntactic transition to a complement clause (the information that there is a complement clause) given the preceding context, and the information contained in the first words in the CC given that there is a CC and given the preceding context. In other words, I(CC onset|context) =I(CC|context) + I(onset|context, CC) = −log p(CC|context) + −log p(onset|context, CC). Since reliable estimates for the entire CC onset’s information are considerably harder to obtain than estimates of the first component in the above equation (there necessarily are much fewer observations per type), the current study focuses exclusively on the first component, I(CC|context) = −log p(CC|context). More specifically, the simplest possible estimate of this quantity is used, where the information of the CC onset is only conditioned on the matrix verb. That is, I(CC|context) is estimated as −log p(CC|matrix verb lemma). This estimate will be lower the more probable it is that a complement clause follows the matrix verb (e.g. think vs. confirm in Fig. 1). In the current study, it is hence a verb’s subcategorization frequency that is used to estimate the information density at the CC onset (see Appendix A for details).

Most experiments on sentence production test one or two hypotheses at a time, typically using a small set of homogeneous stimuli with lexical and structural properties that are extremely rare in spontaneous language use. Here, I take a different approach. A large number of complement clauses is extracted from a corpus of American English speech (the Switchboard corpus Godfrey et al., 1992) and one single multilevel logit analysis simultaneously tests the predictions of UID while controlling for various alternative accounts of syntactic production, such as availability accounts (Ferreira, 1996; Ferreira & Dell, 2000; Race & MacDonald, 2003), ambiguity avoidance accounts (Bolinger, 1972; Hawkins, 2004; Temperley, 2003), and dependency processing accounts (Elsness, 1984; Hawkins, 2001, 2004), while controlling for syntactic persistence (Bock, 1986; Ferreira, 2003), social effects (Adamson, 1992; Fries, 1940), and effects of grammaticalization (Thompson & Mulac, 1991b; Torres Cacoullos & Walker, 2009) on that-mentioning. With such statistical control, it is possible to benefit from the advantages of corpus-based work on spontaneous language production while minimizing the disadvantages. I will return to the trade-offs of corpus-based research in the general discussion.

Next, I describe the database and the statistical procedure employed in this study. Following that, I describe the controls included in the multilevel logit model analysis.

2.1. Database

The data comes from the Penn Treebank (release 3, Marcus, Santorini, Marcinkiewicz, & Taylor, 1999) subset of the Switchboard corpus of telephone dialogues (Godfrey et al., 1992). The corpus consists of approximately 800,000 words in 642 conversations between two speakers each (approximately gender-balanced) on a variety of topics. The version of the corpus used here is the Paraphrase Stanford-Edinburgh LINK Switchboard Corpus (Bresnan et al., 2002; Calhoun, Nissim, Steedman, & Brenier, 2005). Crucially, to the current study, the corpus was syntactically annotated and manually checked as part of the Penn Treebank project (Marcus et al., 1999). The syntactic annotation makes it possible to extract not only full complement clauses (with that) but also reduced complement clauses (without that) with high reliability. For an excellent overview of the annotation available for the Paraphrase corpus, see Calhoun (2006). Jean Carletta and colleagues kindly provided us with a version of the corpus in a format compatible with the syntactic search software TGrep2 (Rohde, 2005).

The TGrep2 pattern used to extract all and only CCs from the corpus is given in Appendix B. The pattern returned 7369 complement clauses. Manual inspection of the cases in the database (see Appendix B for more detail) resulted in the removal of 144 cases (2%). This error rate is considerably smaller than in earlier corpus studies using automatically parsed corpora (Roland et al., 2005, see also Roland, Dick, & Elman, 2007). Next, 71 cases (1% of total) were excluded because the matrix verb was incompatible with complementizer omission (see Appendix B). Another 138 cases (1.9% of total) were excluded because the matrix verb lemma did not occur at least 100 times in the corpus. This considerably improves the quality of information density estimates in the database. All remaining cases were automatically annotated for the control variables described in the next section using the TGrep2 Database Tools (Jaeger, 2006b; for more details, see Jaeger, 2006a, chap. 2). An additional 303 cases (4.1% of total) had to be excluded because of missing values for the control variables. The results presented below do not depend on any of these exclusions.

The remaining 6716 CCs come from 346 speakers. Of these, 1173 (17.5%) have a complementizer, while 5543 (82.5%) do not. Similarly low complementizer rates have been reported in previous work (Tagliamonte & Smith, 2005; Torres Cacoullos & Walker, 2009). The low overall rate of that-mentioning is primarily due to a few verbs with a low that-bias that make up the majority of the cases in the database. Cases with the matrix verbs think and guess rarely occur with a complementizer. These verbs make up 66% of the database. The remaining 27 verb lemmas, however, have much higher complementizer rates. This is illustrated in Table 1 (see also Table A.1 in Appendix A).

Table 1.

The four most frequent verbs in the database and observed proportions of that.

| Verb lemma | Percent of database (%) | that-bias in database (%) |

|---|---|---|

| think | 52 | 11 |

| guess | 14 | 1 |

| know | 8 | 32 |

| say | 8 | 27 |

| Remaining 27 verbs | 17 | 47 |

2.2. Statistical procedure: multilevel logit regression

A multilevel logit model, a type of generalized linear mixed model (Breslow & Clayton, 1993; Lindstrom & Bates, 1990; for an overview, see Agresti, 2002, chap. 12), is used to test the partial effect of information density while controlling for other variables known to correlate with that-mentioning. The analyzed categorical outcome (dependent variable) is the presence of that over its absence. The model contains 25 parameters for controls described in detail below and one parameter to analyze the effect of information density. Together these are the so-called fixed effects. Additionally, the analysis includes a random speaker intercept, which can be thought of as the individual adjustment to each speaker’s rate of that-mentioning. The abbreviated model equation is given in (1), where β1 to β25 are the control parameters, βInfoDensity is the parameter associated with the information density predictor, and ui is the random speaker intercept assumed to be normally distributed with variance

| (1) |

The model was fit using Laplace Approximation as implemented in the lmer( ) function of the lme4 package (Bates, Maechler, & Dai, 2008) in the statistical software R (R Development Core Team, 2008). Introductions to multilevel logit models in R are available in Gelman and Hill (2006, chap. 14) and, specifically for language researchers, Baayen (2008, chap. 7) and Jaeger (2008). Harrell (2001) provides an excellent overview of regression strategies with reference to R.

If guidelines of model evaluation are followed, multilevel models can be used to reliably analyze even highly unbalanced and clustered data like those typically present in corpus studies. For example, in the current data set, a few speakers contribute most of the data while many speakers contribute only a few (mean number of cases per speaker = 19.3, median = 14, mode = 14, range = 1–99, SD = 16.4). This is illustrated in the histogram in Fig. 2. Such clusters, if unaccounted for, can lead to spurious statistical results. The random speaker intercept in (1) addresses this issue.

Fig. 2.

Histogram of CCs per speaker in the database. Wider bars show bins of width 10, thinner bars show bins of width 2.

Additionally, estimated standard errors of fitted parameters become unreliable if the associated predictor is highly collinear with other predictors. The results presented here are not affected by collinearity. If not mentioned otherwise, correlations between fixed effects were very low (r < 0.2). Where necessary, this was achieved by means of residualization of correlated predictors (see below).

The approach taken here thus differs from previous large-scale studies of complementizer mentioning (Roland et al., 2005; Torres Cacoullos & Walker, 2009). Roland and colleagues’ model contains 186 parameters, many of which are correlated with each other. Torres Cacoullos and Walker (2009), too, do not control for collinearity between predictors in their model. While it is still possible to assess whether a predictor has an effect (e.g. via model comparison, as in Roland et al., 2005), it is difficult to reliably assess effect directions for collinear predictors. The goal of the approach taken here is to move closer to the direct tests of theories of sentence production within an integrated model, while simultaneously assessing the independent effects of multiple hypothesized mechanisms. This has the advantage that it results in interpretable parameter effects, thereby not only testing whether a predictor affects that-mentioning, but whether it does so in the predicted direction (without requiring post hoc tests, cf. Roland et al., 2005).

2.3. Controls

Syntactic reduction has been attributed to a variety of factors and mechanisms (Adamson, 1992; Bolinger, 1972; Dor, 2005; Elsness, 1984; Ferreira & Dell, 2000; Ferreira, 2003; Finegan & Biber, 2001; Fox & Thompson, 2007; Hawkins, 2001, 2004; Race & MacDonald, 2003; Temperley, 2003; Thompson & Mulac, 1991a; Yaguchi, 2001). To put the hypothesis of Uniform Information Density to as stringent an empirical test as possible, it is necessary to control for other effects known to affect complementizer that-mentioning. I briefly summarize the three arguably most influential processing accounts of that-mentioning.

Ferreira and Dell (2000) propose an account of syntactic reduction that is exclusively driven by production pressures. The general idea is that speakers insert optional words, such as relativizers or complementizers, when they would not be able to continue production fluently otherwise. That is, speakers are assumed to utter optional words if the material that has to be uttered next is not readily available. This idea of availability-based production is based on the Principle of Immediate Mention:

“Production proceeds more efficiently if syntactic structures are used that permit quickly selected lemmas to be mentioned as soon as possible.”

(Ferreira & Dell, 2000, 289 ff.)

The principle of immediate mention predicts that the accessibility of material at the complement clause onset determines whether speakers produce the that. Evidence for this prediction comes from production experiments (Ferreira & Dell, 2000; Ferreira & Hudson, 2005) and corpus studies (Elsness, 1984; Roland et al., 2005; Tagliamonte & Smith, 2005; Torres Cacoullos & Walker, 2009; see also Jaeger & Wasow (2006), Race & MacDonald (2003), Tagliamonte, Smith, & Lawrence (2005), Temperley (2003) for similar evidence from relativizer omission).

An alternative account of effects associated with the complement clause onset is that speakers insert that to avoid temporary ambiguity (Bolinger, 1972; Hawkins, 2004, see also Temperley (2003) for relativizer omission) as in the following example, where the complement clause subject you could lead to temporary ambiguity, if the speaker does not insert that before it:

-

(2)

Well, I know (that) you need to go.

The third account focuses on the effect that mentioning that has on the time it takes to process all dependencies between elements in the complement clause and elements in the matrix clause (cf. Domain Minimization and Maximize Online Processing, Hawkins, 2001, 2004). On the one hand, mentioning that slightly increases the complexity of the complement clause. On the other hand, mentioning that can shorten some of the dependencies (e.g. because it clearly marks the beginning of a complement clause). Dependency processing accounts have received support from studies showing that increased distance between the matrix verb (e.g. know in (2)) and the complement clause onset correlates with higher preference for complementizer that (Elsness, 1984; Hawkins, 2001; Roland et al., 2005; see also Quirk (1957), Fox & Thompson (2007), Race & MacDonald (2003) for relativizer mentioning).

Table 2 summarizes the control predictors included in the analysis to account for availability-based production, ambiguity avoidance, and dependency processing, as well as additional controls that previous studies found to affect that-mentioning. Next, I describe these control predictors in detail.

Table 2.

Predictors in the analysis. The name and description of each input variable are listed. The last column describes the predictor type (‘cat’, categorical; ‘cont’, continuous) along with the number of parameters associated with it

| Predictor | Description | Type (βs) (1) |

|---|---|---|

| INTERCEPT | ||

| Dependency length and position of CC | ||

| POSITION(MATRIX VERB) | CC position in the sentence | cont(3) |

| LENGTH(MATRIX VERB-TO-CC) | Distance of CC from matrix verb | cont(1) |

| LENGTH(CC ONSET) | Length of CC onset | cont(1) |

| LENGTH(CC REMAINDER) | Length of remainder of CC | cont(1) |

| Overt production difficulty at CC onset | ||

| SPEECH RATE | Log and squared log speech rate | cont(2) |

| PAUSE | Pause immediately preceding CC | cat(1) |

| DISFLUENCY | Normalized disfluency rate at CC onset | cont(1) |

| Lexical retrieval at CC onset | ||

| CC SUBJECT | Type of CC subject | cat(3) |

| SUBJECT IDENTITY | Matrix and CC subject are identical | cat(1) |

| FREQUENCY(CC SUBJECT HEAD) | Log frequency CC subject head lemma | cont(1) |

| WORD FORM SIMILARITY | Potential for double that sequence | cat(1) |

| Lexical retrieval before CC onset | ||

| FREQUENCY(MATRIX VERB) | Log frequency of verb lemma | cont(1) |

| Ambiguity avoidance at CC onset | ||

| AMBIGUOUS CC ONSET | CC onset ambiguous without that | cat(1) |

| Grammaticalization | ||

| MATRIX SUBJECT | Type of matrix subject | cat(3) |

| Additional controls | ||

| SYNT. PERSISTENCE | Prime (if any) w/ or w/o that | cat(2) |

| MALE SPEAKER | Speaker is male | cat(1) |

| Total number of control parameters in model plus intercept | 25 | |

2.3.1. Dependency length and position

Various measures of domain complexity preceding and within the CC have been found to be correlated with that-mentioning (e.g. Elsness, 1984; Ferreira & Dell, 2000; Hawkins, 2001, 2004; Roland et al., 2005; Tagliamonte et al., 2005; Thompson & Mulac, 1991a). Four such measures are included in the analysis.

Length(matrix verb-to-CC)

Given the results from previous studies, non-adjacent CCs are expected to strongly prefer that (Elsness, 1984; Hawkins, 2001; Rohdenburg, 1998). Principles like Hawkins’ Domain Minimization and Maximize Online Processing (Hawkins, 2001, 2004) predict an increasing preference for that the more material intervenes between the matrix verb and the CC onset. So, the number of words between the matrix verb and the CC onset was included as a continuous predictor. For example, in (3a) there zero intervening words, whereas in (3b) there are two intervening word. There were 222 cases with intervening material between the verb and the CC (mean number of intervening words for those cases = 2.0).

-

(3)

My boss thinks [I’m absolutely crazy].

I agree with you [that, that a person’s heart can be changed].

Length(CC onset)

Dependency processing accounts also predict that the length of the CC subject correlates positively with speakers’ preference for that. Here, the number of fluent words up to and including the CC subject was included as a continuous predictor. For example, the CC onset in (3a) contains one word, whereas the CC onset in (3b) contains four words (a person’s heart; clitics were counted as separate words). Only fluent words were counted to avoid collinearity with the measures of disfluency introduced below. The complementizer – if present – was also not counted to avoid a trivial correlation with that-mentioning.

Length(CC remainder)

The number of words in the CC following its subject was also included as a continuous predictor (e.g. three words in both (3a) and (3b)). Dependency processing accounts only predict an effect of this variable if other dependencies hold between material preceding the CC and material following it. This is unlikely to be a frequent event, and even for cases with such dependencies, the effect would be predicted to be very weak.

Position(matrix verb)

It is possible that production difficulty differs systematically depending on where speakers are in the process of planning and pronouncing a sentence. Unfortunately, very little is known about relative production difficulty during incremental sentence production (in sharp contrast to sentence comprehension). To capture any potentially non-linear effects of the position of the complement clause in the overall sentence, a restricted cubic spline over the number of words preceding the matrix verb (two words in (3a) and one word in (3b)) was included in the model (using rcs( ), package Design, Harrell, 2007). Restricted cubic splines provide a convenient way to model non-linear effects of predictors (for a concise summary, see Harrell (2001, pp. 16–24); for an introduction to rcs( ) for the analysis of language data, see Baayen (2008, chap. 6.2.1)). An effect of matrix verb position might also be predicted by grammaticalization accounts of that-mentioning, which consider certain uses of CC-embedding verbs to have become grammaticalized without that (Thompson & Mulac, 1991b; discussed below). Three parameters (4 knots) were used for the restrictive cubic spline, allowing for a moderate degree of non-linearity (consistent with the results, as also confirmed by further tests allowing for higher degrees of non-linearity). Following Harrell (2001), knots were placed at the 5th, 35th, 65th, and 95th quantiles, corresponding to 2, 3, 4, or 16 words preceding the complement clause.

2.3.2. Overt production difficulty at CC onset

Speakers are more likely to produce optional that when they are experiencing production difficulty (Ferreira & Firato, 2002; Jaeger, 2005). Overt signs of production difficulty provide a window into underlying production difficulty that may go beyond what the controls introduced above capture. Speech rate and pause information were extracted from the time-aligned orthographic transcripts of Switchboard (Deshmukh, Ganapathiraju, Gleeson, Hamaker, & Picone, 1998).

Log speech rate and squared log speech rate

Both the log-transformed and the square of the logtransformed speech rate at the CC onset were included because both have been shown to affect the phonetic reduction of words (Bell et al., 2003). Speech rates in the data set are roughly normally distributed with a heavy right tail (mean = 4.8 syllables/second, SD = 1.4). To reduce collinearity between these two factors, the log-transformed speech rate was centered before it was squared. Since the two measures were still correlated, the LOG SPEECH RATE was regressed out of SQUARED LOG SPEECH RATE and the residuals were included in the analysis of that-mentioning to assess the effect of SQ LOG SPEECH RATE.

Pause

The presence or absence of a suspension of speech at the CC onset was included as a binary control predictor. About 10% (680) of all cases contain a pause at the CC onset. Pause lengths range from 10 ms to almost 13 s (mean = 453 ms, SD = 626).

Disfluency

The number of disfluencies at the CC onset up to and including the CC subject was also included as a control. Disfluencies included restarts (e.g. “It was a, a, a, guy”), editing terms (e.g. you know, I mean), and filled pauses (e.g. uh, um). While these categories arguably differ in many respects, they all are correlates of production difficulty. For example, the CC onset (4) contains three disfluencies (two restarts and one filled pause).

-

(4)

They said [the, that, um, that he was horrible at batting with men on base].

To reduce collinearity between this measure and the length of the CC onset (longer phrases on average have more disfluencies), the disfluency count was regressed against LENGTH(CC ONSET) and the residuals of that regression were included in the main analysis to assess disfluency effects. The number of disfluencies intervening between the matrix verb and CC was not included as control because there were too few cases with disfluencies.

2.3.3. Lexical retrieval at CC onset

CC subject

The accessibility of the CC subject was coded based on its referential expression (Gundel, Hedberg, & Zacharski, 1993). Previous work has found that pronouns, especially the local pronoun I, correlate with lower that rates than other pronouns and lexical NPs (Elsness, 1984; Ferreira & Dell, 2000; Roland et al., 2005). CC SUBJECT was coded as four ordered levels: I(899 cases) > it(1294) > - other pronouns, including demonstrative pronouns (2874) > other types of NPs (1649). For example, the CC subject in (3a) is I, the CC subject in (3b) was coded as ‘other type of NP’, and the CC subject in (4) was coded as ‘other pronoun’.

The effect of CC subject accessibility on that-mentioning has been attributed to an availability-based strategy of speakers to insert that when following material is not readily available for pronunciation (Ferreira & Dell, 2000). In the Switchboard corpus, the word it is frequently used non-referentially (as in “…it rains…”) or to refer to a highly salient issue under discussion. Because both of these uses are likely to make it easy to retrieve and hence highly available, it is expected to pattern with the local pronoun I. Three Helmert-contrasts over the four levels of CC SUBJECT were included in the model, comparing each of the three lowest levels against all higher levels (it vs. I; other pronouns vs. I and it; etc.).

Subject identity

Both Elsness (1984) and Ferreira and Dell (2000) found that co-referentiality of the matrix and CC subject correlated with a lower that rate. While recent experiments (Ferreira & Hudson, 2005) and corpus studies (Torres Cacoullos & Walker, 2009) have not replicated this effect, SUBJECT IDENTITY encoding whether the matrix and CC subjects were string identical was included in the analysis. Since this variable is highly correlated both with the type of matrix subject (see below) and the type of CC subject, the centered binary SUBJECT IDENTITY variable was first regressed against the referential form of the CC subject (CC SUBJECT) and the referential form of the matrix subject (MATRIX SUBJECT). The residuals of this regression were entered into the main analysis. SUBJECT IDENTITY hence tests the effect of subject identity beyond the properties of the matrix and CC subjects.

Frequency(CC subject head)

In addition to these form-based predictors, the model included the log-transformed frequency of the CC subject’s head lemma (e.g. the frequency of I for (3a) or the frequency of heart for (3b) above). A lemma-based rather than word form-based estimate was chosen to reduce data sparsity (by combining the counts from all word forms associated with a lemma). Using word form frequency does not change any of the results reported below. Since most CC subject phrases were pronouns, their head is also the only word in that NP. In 85% of all cases in the database, the subject head is also the only word in the CC subject.

Since more frequent words are produced faster (Jescheniak & Levelt, 1994), availability accounts of that-mentioning predict that the less frequent the CC subject’s head is, the more likely speakers are to produce that. This has indeed been observed in previous studies (Roland et al., 2005).

The frequency of the CC subject is, however, highly correlated with the form of its referential expression (CC SUBJECT, Spearman rank correlation r = −0.80). Hence, the effect of CC SUBJECT was regressed out of the log-transformed frequency and the residuals of that regression were entered into the analysis of that-mentioning.

Word form similarity

Finally, a binary predictor was included to encode whether the first word in the CC (excluding the complementizer that) was the demonstrative determiner or pronoun that (as in, e.g. “I believe (that) that paint is what I need.”). There is preliminary evidence that speakers are about half as likely to produce complementizer that if the next word is demonstrative that (Jaeger, in press; Walter & Jaeger, 2008). Walter and Jaeger attribute this effect to a bias against adjacent similar word forms (the Obligatory Contour Principle Leben, 1973), presumably due to interference effect. A bias against adjacent similar or identical linguistic elements has been observed at many levels of linguistic representation (for a recent overview, see Walter, 2007). Similarity-based interference has also been observed in language comprehension (Gordon, Hendrik, & Johnson, 2001; Lewis, 1996) and production (e.g. in agreement errors, Badecker & Lewis, 2007; or phonological priming, Bock, 1987).

About 10% of the CCs in the data (696 cases) start with demonstrative that and hence could potentially exhibit an OCP effect. Since by far most of the instances of the demonstrative that (96.7%) are the demonstrative pronoun that (rather than the demonstrative determiner), which means that WORD FORM SIMILARITY is correlated with SUBJECT FORM ⩵ other pronoun (Spearman rank correlation r = 0.37), it is necessary to dissociate the two effects.

The WORD FORM SIMILARITY variable was regressed against an indicator variable which coded whether the CC subject was a pronoun. The residuals of that linear regression were included in the analysis as the WORD FORM SIMILARITY predictor.

2.3.4. Lexical retrieval immediately preceding CC

Frequency(matrix verb)

The matrix verb directly precedes the CC in 93.5% of all cases. Increased processing load associated with the production of less frequent word forms (Jescheniak & Levelt, 1994; Baayen et al., 2006) may spill over from the matrix verb to the CC onset. In that case, the availability- based account of that-mentioning predicts higher rates of that-mentioning following less frequent matrix verbs. Evidence for this hypothesis comes from previous corpus studies (Elsness, 1984; Garnsey, Pearlmutter, Meyers, & Lotocky, 1997; Roland et al., 2005). The log-transformed frequency of the matrix verb lemma was included in the analysis to account for this effect. The frequency of the matrix verb rather than the word that immediately preceded the CC was chosen to avoid collinearity with the effect for LENGTH(MATRIX VERB-TO-CC).

2.3.5. Ambiguity avoidance at CC onset

Ambiguous CC onset

Without a complementizer some CC onsets can lead to temporary syntactic ambiguity. If the matrix verb is compatible with subcategorization frames other than complement clauses and if the CC onset is not unambiguously case-marked (e.g. I and we vs. you or the man) and if the matrix onset preceding the complement clause does not unambiguously select a verb sense that requires a complement clause, the CC onset without the complementizer could temporarily be interpreted as an argument to the matrix verb. Consider, for example, (5) where many of them could also temporarily be interpreted as the direct object to the matrix verb know. Such temporary ambiguities can lead to considerably increased processing times (due to ‘garden-pathing’; e.g. Garnsey et al., 1997; Trueswell, Tanenhaus, & Kello, 1993).

-

(5)

…and I know [many of them are doing it].

This raises the question as to whether speakers avoid such temporary ambiguities. Indeed, some studies on that-mentioning in complement clauses (Bolinger, 1972; Hawkins, 2004) and relative clauses (Bolinger, 1972; Temperley, 2003) have provided evidence that producers mention that to avoid temporary ambiguities. However, these studies were generally conducted on small databases of written (often edited) texts and they lacked controls for most of the other factors affecting syntactic reduction. More recent and more controlled studies have failed to find any effect of ambiguity avoidance on that-mentioning (Ferreira & Dell, 2000; Roland et al., 2005). Most previous studies did not use spontaneous speech data (but see Roland et al., 2005, 2007) and none of the corpus studies used data that was manually annotated for potential ambiguity.

Here, a CC onset was coded as potentially ambiguous if the onset of the utterance up to and including the CC subject (but without the complementizer, if it was present) could potentially lead to a garden path. First, all cases with case-marked CC subjects or with a matrix verb that never or rarely takes a CC complement (think, suppose, wish, figure, hope, and agree) were automatically marked as unambiguous. This left 2973 cases that were manually annotated. This annotation was conducted by an undergraduate research assistant at the University of Rochester, after initial training by the author on 100 cases. The annotation marked 1012 CCs as potentially containing a temporary ambiguity (only about a third of the cases that would have been judged ambiguous based on only case-marking and verb subcategorization constraints!).

2.3.6. Grammaticalization of epistemic markers

Matrix subject

Several studies have found that that-mentioning is also strongly correlated with the form of the matrix subject. This effect has variously been attributed to decreased processing load for co-referentiality between the matrix and CC subject (Ferreira & Dell, 2000) or the use of grammaticalized discourse formulae (Thompson & Mulac, 1991b). Here, potential effects of co-referentiality are already modeled by the SUBJECT IDENTITY predictor introduced above.

According to grammaticalization accounts (Thompson & Mulac, 1991b), that-mentioning is not an alternation between two meaning-equivalent variants (Thompson & Mulac, 1991b, pp. 313–314). Rather, there are epistemic uses of complement clause embedding verbs, which occur without that, and real complement clauses, which are assumed to occur with that. For example, in (6) (taken from Thompson & Mulac (1991a, p. 313)) I think does not necessarily introduce a complement clause, but rather expresses a “degree of speaker commitment [to the proposition conveyed in the remainder of the clause]” (Thompson & Mulac, 1991a).

-

(6)

I think exercise is really beneficial, for anybody.

The strongest version of the grammaticalization hypothesis, according to which there is no alternation, is incompatible even with the data presented in Thompson and Mulac (1991a). After all epistemic phrases are excluded from their data, there still is considerable variation in that-mentioning that needs to be accounted for. Previous work (Thompson & Mulac, 1991a; Torres Cacoullos & Walker, 2009) does, however, support weaker grammaticalization accounts. Grammaticalization could be gradient or it could be one of several factors contributing to the overall pattern of that-mentioning. Indeed, there is evidence that certain highly frequent matrix clause onsets, such as I think and I guess, are associated with much lower rates of that-mentioning than other instances of complement clause embedding verbs (Thompson & Mulac, 1991a; Torres Cacoullos & Walker, 2009). According to Thompson and Mulac (1991b), speakers are most likely to express a degree of commitment when talking about themselves, but do so also when talking about their addressee(s) (Thompson & Mulac, 1991b, p. 243). Hence 1st person matrix subject I should correlate with the lowest rates of that-mentioning, followed by 2nd person matrix subjects. All other types of matrix subjects should correlate with considerably higher rates of that-mentioning. Thompson and Mulac (1991a), 321 present evidence for such a three-way distinction between matrix subjects. Other studies have, however, found that only matrix subject I differed significantly from other types of matrix subjects (Torres Cacoullos & Walker, 2009).

Potential effects of the matrix subject form are modeled as a treatment-coded predictor with three levels: I (5355 cases) vs. you (351) vs. other personal pronoun (515 cases) vs. other NP (495 cases, including zero subjects). Each level was contrasted against I, in such a way that the coefficient corresponds to the distance between the two group means.

2.3.7. Additional controls

Syntactic persistence

Optional that-mentioning is also affected by syntactic persistence, that is whether the most recent CC was produced with or without a complementizer that (Ferreira, 2003; Jaeger & Snider, 2008). SYNTACTIC PERSISTENCE was coded as categorical predictor with three levels: no preceding prime (616 cases), preceding prime with that (1239), preceding prime without that (4861). This coding does not distinguish between primes by the speaker and primes by their interlocutor. Helmert contrasts were used to model the effect of syntactic persistence, comparing that-mentioning for ‘no prime’ against that-mentioning for ‘primes without that’, and the effect of both of these levels against the effect of ‘primes with that’.

Speaker gender

Previous work found that social variables correlate with syntactic reduction (Adamson, 1992; Fries, 1940). Here speaker gender is included in the analysis since there is some evidence that female speakers are more likely to produce relativizers in non-subject-extracted relative clauses (Staum & Jaeger, 2005; although we did not find such an effect for complementizer that-mentioning). This would be consistent with the observation that female speakers are more likely to choose more formal registers and formal registers are correlated with less reduction (Finegan & Biber, 2001).

2.4. Model evaluation

Before results are reported, it is important to evaluate the fitted model. This involves testing for signs of overfitting, collinearity, and outliers (see Baayen, 2008, Section 6.2.3-4; Jaeger & Kuperman, 2009; Jaeger, submitted for publication). Plotting mean predicted probabilities of that vs. observed proportions in Fig. 3 shows an acceptable fit of the model. Unsurprisingly, the fit is best for bins with low observed proportions of that, which contain most of the data. Further simulations over the estimated parameters (using sim( ), package arm, Gelman et al., 2008) showed no signs of overfitting: all estimated coefficients were stable. There were no signs of collinearity in the model (fixed effect correlations rs < 0.2).

Fig. 3.

Mean predicted probabilities vs. observed proportions of that. The data are divided into 20 quantiles, each containing at least 335 data points. The data rug and the kernel density plot at the top of the plot visualizes the distribution of the predicted values. The R2 of the predicted probabilities vs. observed proportions is given (this is not to be misunderstood as a measure of model quality).

The model accounts for a significant amount of information in the variation of that-mentioning. While several pseudo R2 measures have also been proposed for logit models as measures of overall model quality, they lack the intuitive and reliable interpretation of the R2 available for linear models. One of the most commonly used pseudo R2 measures is the Nagelkerke R2. The Nagelkerke R2 assesses the quality of a model with regard to a baseline model (the model with only the intercept). While this measure is usually employed for ordinary logit models, its definition extends to multilevel logit models. For the current model, Nagelkerke R2 = 0.34 compared against an ordinary logit model with only the intercept as baseline.2

2.5. Effect of information density

As predicted by UID, there was a clearly significant effect of information density: the higher the information of the CC onset, the more likely speakers are to produce the word that (β = 0.47; z = 16.9; p < 0.0001). In other words, speakers are less likely to produce a complementizer that, the lower the information density of the CC onset. This effect holds even while other variables that previous studies found to be correlated with that-mentioning are controlled for. Fixed effect correlations of information density with other predictors were negligible (all r < 0.13), so that collinearity is not a concern for the assessment of the effect. Fig. 4 illustrates the effect on that-mentioning.

Fig. 4.

Effect of information density at the complement clause onset on that-mentioning along with 95% CIs (shaded area, which is hard to see because the CIs are very narrow around the predicted mean effect). (a) The effect on the log-odds of complementizer that (the space in which the analysis was conducted). (b) The effect transformed back into probability space. Hexagons indicate the distribution of information density against predicted log-odds (a) and probabilities (b) of that, considering all predictors in the model. Fill color indicates the number of cases in the database that fall within the hexagon.

As a matter of fact, information density is the strongest predictor in the model in terms of its contribution to the model’s likelihood . The χ2-test serves as a measure of how much the model is improved by the inclusion of the predictor.3 The partial Nagelkerke R2 associated with information density is 0.05. This suggests that at least 15% of the model quality (in terms of the Nagelkerke R2) can be attributed to information density.

This improvement in model quality more than matches that of all four fluency parameters combined . To put the effect in relation to two theories of sentence production that have received considerable attention in psycholinguistic work on syntactic variation, availability-based sentence production (Bock, 1986; Ferreira & Dell, 2000; Levelt & Maassen, 1981; Prat-Sala & Branigan, 2000, among others) and dependency processing accounts (Hawkins, 1994, 2004): the effect associated with the only parameter fitted for information density outranks the effect of all three parameters associated with dependency length effects in the model (LENGTH(MATRIX VERB-TO-CC), LENGTH(CC ONSET), LENGTH(CC REMAINDER); . The effect of information density also is much larger than the combined effect of accessibility related parameters in the model (CC SUBJECT, FREQUENCY( CC SUBJECT), SUBJECT IDENTITY; . That is, information density emerges as the single most important predictor of complementizer that-mentioning.

It is well-known that embedding verbs differ considerably with regard to their that-bias (the associated rate of that-mentioning, Garnsey et al., 1997; Roland et al., 2005, 2007). In the current database, that-biases range from 1% for guess to 75% for worry (see Table A.1 in Appendix A). On the one hand, UID differs from availability, ambiguity avoidance, and dependency processing accounts in that it potentially offers an account for these otherwise idiosyncratic differences between verbs. Note for example, that the verb guess, which has the lowest that-bias, also has one of the highest CC-biases (62.3% of all instances of guess in the corpus take a complement clause). In comparison, the verb worry, which has the highest that-bias, has a more than 20-times lower CC-bias (3.2%). Since the estimate of information density at the CC-onset used in this paper is only based on verbs’ subcategorization frequency, these observations are in line with the predictions of UID.

However, embedding verbs differ along dimension besides subcategorization frequency. It is possible that the analysis presented above fails to account for differences between embedding verbs that affect that-mentioning. Hence it is conceivable that the observed correlation between information density and that-mentioning is due to differences between embedding verbs that are not modeled in the analysis. To test this hypothesis, a random intercept for verb lemmas was added to the model and the analysis was repeated. Indeed, the estimated variance of the random verb lemma intercept is large in line with Roland et al. (2007). The random verb lemma intercept improves the model considerably ; Nagelkerke R2 = 0.38, cf. 0.34 for a model without the random verb lemma effect). It is worth noting, that, unlike for the other predictors in the model, the random verb lemma intercept is not motivated by a specific processing account. Also, unlike for the fixed predictor of information density, inclusion of a random effect does not make any assumption as to how verbs align with their that-bias. Instead, the random effect simply models arbitrary difference in that-bias between verb lemmas. Nevertheless, the information density effect remains significant in the expected direction . That is, while future work is necessary to determine exactly how much of the variance in that-mentioning associated with lexical differences can be attributed to UID, the observed effect of information density cannot be reduced to arbitrary properties of embedding verbs (see also Appendix C for further evidence that the observed effect is not due to a few verbs, including meta-analyses of production experiments reported in Ferreira and Dell (2000)).

2.6. Results and discussion of control predictors

Next, I discuss the effects of the control parameters grouped by the accounts of syntactic production that they bear on. In an effort to make the results more accessible, I present and discuss findings grouped by the accounts of sentence production on which they bear on. I first discuss availability, ambiguity avoidance, and dependency processing accounts (in that order). Then I discuss to what extent there is evidence that the observed patterns in that-mentioning are due to grammaticalization (Thompson & Mulac, 1991b) and syntactic persistence (Bock, 1986).

While the discussion of these controls does not directly bear on the primary effect of interest in this article, the tests provided go beyond the confirmation of previous research in several cases. For example, previous work has not investigated the relation between fluency and syntactic reduction, although, as I argue below, the evidence from fluency bears on and refines the hypothesis of availability- based production. Similarly, previous work has largely ignored the role of phonological similarity based interference during syntactic planning (but see Bock, 1987; Walter & Jaeger, 2008; Jaeger, in press). Additionally, the current analysis tests previous hypotheses while controlling for a large number of competing accounts. Indeed, not all previous effects replicate.

Table 3 summarizes the parameter estimates β for all fixed effects in the model, as well as the estimate of their standard error SE(β), the associated Wald’s z-score, and the significance level. The estimated variance of the random speaker intercept is . The partial Nagelkerke R2 associated with the random speaker effect is 0.01.

Table 3.

Result summary: coefficient estimates β, standard errors SE(β), associated Wald’s z-score (= β/SE(β)) and significance level p for all predictors in the analysis

| Predictor | Coef. β | SE(β) | z | p |

|---|---|---|---|---|

| Intercept | 0.12 | (0.38) | 0.3 | >0.7 |

| POSITION(MATRIX VERB) | 0.95 | (0.14) | 6.6 | <0.0001 |

| (1st restricted comp.) | −27.94 | (5.33) | −5.2 | <0.0001 |

| (2nd restricted comp.) | 55.43 | (10.80) | −5.1 | <0.0001 |

| LENGTH(MATRIX VERB-TO-CC) | 0.17 | (0.065) | 2.5 | =0.01 |

| LENGTH(CC ONSET) | 0.18 | (0.014) | 12.8 | <0.0001 |

| LENGTH(CC REMAINDER) | 0.03 | (0.006) | 4.4 | <0.0001 |

| LOG SPEECH RATE | −0.70 | (0.13) | −5.5 | <0.0001 |

| SQ LOG SPEECH RATE | −0.36 | (0.19) | −1.9 | <0.06 |

| PAUSE | 1.11 | (0.11) | 10.2 | <0.0001 |

| DISFLUENCY | 0.39 | (0.12) | 3.2 | <0.002 |

| CC SUBJECT =it vs. I | 0.04 | (0.08) | 0.5 | >0.6 |

| =other pro vs. prev. levels | 0.05 | (0.03) | 1.6 | <0.11 |

| =other NP vs. prev. levels | 0.11 | (0.02) | 4.9 | <0.0001 |

| FREQUENCY(CC SUBJECT HEAD) | −0.02 | (0.03) | −0.7 | >0.5 |

| SUBJECT IDENTITY | −0.32 | (0.17) | −1.9 | <0.052 |

| WORD FORM SIMILARITY | −0.31 | (0.17) | −1.8 | <0.08 |

| FREQUENCY(MATRIX VERB) | −0.23 | (0.03) | −7.7 | <0.0001 |

| AMBIGUOUS CC ONSET | −0.12 | (0.12) | −1.0 | >0.2 |

| MATRIX SUBJECT =you | 0.48 | (0.15) | 3.1 | <0.002 |

| =other PRO | 0.60 | (0.13) | 4.8 | <0.0001 |

| =other NP | 0.85 | (0.13) | 6.7 | <0.0001 |

| PERSISTENCE =no vs. prime w/o that | 0.02 | (0.07) | 0.3 | >0.7 |

| =prime w/ that vs. prev. levels | 0.06 | (0.04) | 1.6 | <0.11 |

| MALE SPEAKER | −0.15 | (0.11) | −1.3 | >0.19 |

| Information density | 0.47 | (0.03) | 16.9 | <0.0001 |

2.6.1. Availability-based production

Support for the availability account of syntactic reduction (Ferreira & Dell, 2000) comes from the fluency controls, the controls for accessibility effects of the CC subject, and the other controls for lexical retrieval effects at and immediately preceding the CC onset.

Fluency

Probably the strongest evidence that the availability of material at the CC onset affects that-mentioning (Ferreira & Dell, 2000) comes from the clearly significant effect of fluency predictors. Lower SPEECH RATE, presence of a PRECEDING PAUSE, and INITIAL DISFLUENCY correlate with higher complementizer rates. As previous work has found for phonetic reduction (Bell et al., 2003), speech rate has a highly significant effect on that-mentioning (log-transformed speech rate: β = −0.70; z = −5.5; p < 0.0001; residual squared log-transformed speech rate: β = −0.36; z = −1.9; p < 0.06). The effect of CC initial disfluencies, such as filled pauses or restarts, is also highly significant (β = 0.39; z = 3.2; p < 0.002): the odds of complementizer that are about 1.5(= e0.39) times higher for CCs with a disfluency than for CCs with fluent onsets. Pauses, too, strongly correlate with higher that rates (β = 1.11; z = 10.2; p < 0.0001; fixed effect correlation with log speech rate r = 0.23). Interestingly, inserting that does not seem to help speakers (or at least not sufficiently) to overcome the production difficulty that seems to cause that-insertion (Jaeger, 2005; see also Ferreira & Firato, 2002).

Subject identity, referential form, and frequency of CC subject

As predicted by availability accounts, co-referentiality between the matrix and CC subject correlates with lower that rates although the effect does not quite achieve significance (β = −0.32; z = 1.9; p < 0.052), thereby providing support, albeit weak support, for Ferreira and Dell (2000) over studies that failed to replicate the co-referentiality effect (Ferreira & Hudson, 2005; Torres Cacoullos & Walker, 2009).

Of the form-based contrasts (CC SUBJECT), lexical and pronoun CC subjects differ significantly (β = 0.11; z = 4.9; p < 0.0001; fixed effect correlations with other levels of CC SUBJECT: 0.004 < rs < 0.28). As expected, there is no sign that the local pronoun I and it differ in that rates (p > 0.6). The contrast between other pronouns vs. I and it is predicted to be significant by availability accounts, but does not quite reach significance (β = 0.05; z = 1.6; p = 0.11). Neither does FREQUENCY(CC SUBJECT HEAD), the residual effect of the CC subject’s head frequency beyond CC SUBJECT, reach significance (p > 0.5).

As mentioned above, the frequency and form-based effects are highly collinear, and splitting the accessibility effect between the two variables hurts both of them. Without the form-based predictors, CC subject frequency is a highly significant predictor correlating (as expected) negatively with that mentioning (β = −0.07; z = −4.0; p < 0.0001). Furthermore, CC subject frequency remains a significant predictor (β = −0.18; z = −2.5; p < 0.012), if only CCs with pronoun subjects are considered, suggesting that the frequency effect extends beyond the contrast between pronominal and lexical CC subjects. Excluding CC subject frequency does not change the significance of any of the form-based parameters. In sum, there is a clear accessibility effect related to the CC subject, and it is best modeled by the frequency of the subject’s head (BIC for model without form-based predictors: 4837; BIC for model without frequency predictor: 4849).4

Interference during lexical retrieval

There is also evidence for an interference effect during lexical retrieval due to similarity. If the CC subject is the demonstrative pronoun that or if it starts with the demonstrative determiner that, speakers are about 1.4 times less likely to mention that complementizer that than otherwise, although the effect fails to reach significance (β = −0.31; z = −1.8; p < 0.08). The relative weakness of the effect compared to previous work (Jaeger, in press; Walter & Jaeger, 2008) is likely due to the additional controls included in the analysis presented here.

‘Spillover’

The observed highly significant negative correlation between the lemma frequency of the matrix verb, FREQUENCY(MATRIX VERB), and that-mentioning (β = −0.23; z = −7.7; p < 0.0001) replicates earlier corpus-based results (Elsness, 1984; Garnsey et al., 1997; Roland et al., 2005). Interestingly, the only previous experiment investigating matrix verb frequency found no effect (Ferreira & Dell, 2000, Experiment 3). If confirmed further, the effect is compatible with availability accounts under the assumption that production resources are limited so that high processing load can ‘spill over’ into the planning of upcoming material.

2.6.2. Ambiguity avoidance

As discussed above, complementizer mentioning has been attributed to ambiguity avoidance (Bolinger, 1972; Hawkins, 2004; see also Temperley, 2003 for relativizer mentioning). However, several other studies have failed to replicate these effects (Ferreira & Dell, 2000; Roland et al., 2005). The results presented here are the first obtained from spontaneous speech and while controlling for a variety of other processing effects. The main analysis did not reveal evidence for ambiguity avoidance. CC onsets that were annotated as potentially ambiguous were not significantly more likely to occur with complementizer that (p > 0.2). But is possible that the vast majority of the ‘potentially ambiguous’ cases may lead to negligible or even no garden path effects in context. If speakers only avoid sufficiently severe ambiguity (i.e. cases that actually lead to increased comprehension difficulty), this would explain lack of an ambiguity avoidance effect in the main analysis. Two post hoc tests were conducted to assess this hypothesis.

First, consider that garden path effects have been shown to be weaker, the less probable the ‘garden path’ parse is (e.g. Garnsey et al., 1997; Trueswell et al., 1993). This observation suggests a modification of previous ambiguity avoidance accounts: maybe speakers only avoid temporary ambiguity if the preceding context biases comprehenders towards the unintended parse (for a similar argument, see Wasow & Arnold, 2003). In other words, speakers should be more likely to insert complementizer that, the more likely comprehenders are to choose the wrong parse after observing the first word(s) in the complement clause. This would explain the null effect of AMBIGUOUS CC ONSET since our ambiguity annotation was rather inclusive. That is, cases were annotated as potentially ambiguous, even if there were rather unlikely to lead to a garden path given the subcategorization bias of the verb. Consider the following example, where this does not have to start a CC, as in “Which one would you take?” - “I guess this one”.

-

(7)

I guess [this doesn’t really have to do with taxes …]

To test whether speakers only avoid potential ambiguity if comprehenders are likely to be garden pathed, an interaction term between AMBIGUOUS CC ONSET and the log-transformed probability of a complement clause given the matrix verb was added to the model (except for the sign, this is the same as the information density estimate). This interaction failed to reach significance (p > 0.5), providing no evidence that speakers avoid ambiguity.

But could it be that speakers only avoid potential comprehension difficulty if it is both likely and long-lasting? That is, do speakers only avoid long stretches of potentially ambiguous material (and only if comprehenders are likely to be garden pathed)? To test this hypothesis, all 1012 potentially ambiguous cases in the database were annotated for their disambiguation point. Table 4 summarizes the distribution of potentially ambiguous CC onsets with regard to their disambiguation point.

Table 4.

Distribution of disambiguation points for potentially ambiguous CC onsets.

| Disambiguation point – word: | 1 | 2 | 3 | 4 | 5–9 | >9 |

|---|---|---|---|---|---|---|

| Number of instances | 773 | 86 | 61 | 48 | 35 | 9 |

To test whether speakers only avoid relatively long-lasting ambiguity, the original predictor AMBIGUOUS CC ONSET in the model was replaced by the length of the potential ambiguity (in words). Unambiguous cases were coded as having an ambiguity length of 0. Both the (centered) ambiguity length predictor and its interaction with the probability of a complement clause given the matrix verb were entered into the model. The main effect of ambiguity length was not found to be significant (p > 0.4), but its interaction with CC predictability is approaching significance in the expected direction (β = −0 14, z = −1.6, p = 0.1):.For unexpected CCs with otherwise late disambiguation points, speakers are more likely to insert that. Including the interaction in the model does lead to a marginal improvement of the model’s log-likelihood .

In conclusion, there may be a weak effect on ambiguity avoidance on complementizer that-mentioning, but the strong effect found in some earlier studies (e.g. Hawkins, 2004) seems to vanish with the additional controls included in the current study. Given that multiple post hoc tests were performed, the marginal effect found above has to be taken with extreme caution. One reason for this weak or non-existent effect of ambiguity avoidance may be that by far most of the potentially ambiguous CC onsets in the database are almost immediately disambiguated (cf. Table 4). In other words, there are very few ambiguities that would be likely to lead to long-lasting garden path effects (for similar evidence from that-mentioning in relative clauses, see Jaeger, 2006a, Section 6.3.1). Syntactic reduction may simply be the wrong place to look for ambiguity avoidance.

Finally, it’s crucial to point out that a lack of ambiguity avoidance would not argue against all types of audience design. I return to this issue in Section 3, where I discuss how the hypothesis of Uniform Information Density relates to audience design.

2.6.3. Dependency processing

Several effects provide evidence for dependency processing accounts (Elsness, 1984; Hawkins, 2001, 2004). Complementizer mentioning correlates positively with the number of words intervening between the matrix verb and the CC onset (β = 0.17, z = 2.5, p = 0.01) and the number of words at the CC onset up to and including the CC subject (β = 0.18, z = 12.8,p < 0.0001 ), as predicted in Hawkins (2001, 2004) and replicating previous work (Elsness, 1984; Hawkins, 2001; Roland et al., 2005; Thompson & Mulac, 1991b; Torres Cacoullos & Walker, 2009). It is also worth noting that the coefficients for these two effects are of similar size, which is consistent with the specific account of complementizer omission outlined in (Hawkins, 2001). These effects hold beyond the availability effects discussed above (collinearity between levels of CC SUBJECT and LENGTHCC ONSET was minimal, −0.16 < rs < 004).

Interestingly, the number of words in the CC following its CC subject also correlates positively with that-mentioning β = 003, z = 44, p < 0.0001. As discussed above, Domain Minimization and Maximize Online Processing (Hawkins, 2004) would predict no effect or an effect with a much smaller coefficient than for the other two length measures. The latter is indeed the case. The significant effect of the CC complexity beyond the CC subject may be surprising given experimental evidence that language production seems to be rather radically incremental (e.g. Brown-Schmidt & Konopka, 2008; Griffin, 2003; Wheeldon & Lahiri, 1997). There is, however, considerable evidence that speakers must have at least heuristic weight or complexity estimates of material that is not yet phonologically encoded (e.g. Bock & Cutting, 1992; Clark & Wasow, 1998; Gómez Gallo et al., 2008; Wasow, 1997). Indeed, the empirical distribution of complementizers by overall CC length, shown in Fig. 5, reveals a strong effect of CC complexity up to the 7th to 9th word, after which no trend is observed. This was confirmed by refitting the model with a restricted cubic spline modeling over CC length. The predicted effect of CC length is displayed in Fig. 6.

Fig. 5.

Observed proportions of that by CC length in words (limited to CCs up to 25 words); jittered points are bottom and top of each cell represent individual cases; error bars indicate 95% confidence intervals. Note that CCs of length 1 are observed (though infrequent, 0.01%) because speakers were interrupted or for other reasons did not complete all CCs.

Fig. 6.

Non-linear effect of the complement clause length on log-odds of that-mentioning. Hexagons indicate the distribution of the predictor against predicted log-odds of that, considering all predictors in the model. Fill color indicates the number of cases in the database that fall within the hexagon.

It thus would seem that speakers have access to at least a heuristic complexity estimate of the CC onset up to the first 7–9 words and that this affects speakers’ decision to produce that (see Jaeger (2006a) for similar evidence from relativizer mentioning).

2.6.4. Grammaticalization of epistemic phrases

Previous findings provide clear support for (weak) grammaticalization accounts, according to which the distribution of complementizer that is partially driven by certain types of matrix clause onsets, such as I guess, that have become grammaticalized as epistemic markers. These epistemic markers are assumed to occur without the complementizer that (Thompson & Mulac, 1991b). Three findings in the current study are relevant to the evaluation of such weak grammaticalization accounts.

First, verbs hypothesized by Thompson and Mulac (1991b) to be frequently used as epistemic markers, such as think and guess, are associated with low rates of that-mentioning (see Table A.1 in Appendix A). Lexical differences between verbs account for a considerable amount of variation in that-mentioning, as evidenced by the highly significant model improvement associated with the addition of a random verb lemma effect. This is compatible with grammaticalization accounts, but could also be due to other lexical properties not controlled in the analysis.

Second, the distance of the matrix verb from the onset of the sentence had a highly significant nonlinear effect on that-mentioning , as illustrated in Fig. 7. Note that the coefficient-based significances of the individual components of the spline given in Table 3 are basically meaningless due to (expected) high fixed effect correlations (rs > 0.9). The total predicted effect of POSITION(MATRIX VERB) depicted in Fig. 7, however, is statistically unbiased (only the standard error estimates of collinear predictors are biased; coefficient estimates remain unbiased).

Fig. 7.

Non-linear effect of the position of the matrix verb on log-odds of that-mentioning. Hexagons indicate the distribution of the predictor against predicted log-odds of that, considering all predictors in the model. Fill color indicates the number of cases in the database that fall within the hexagon.

The effect of the matrix verb’s position is mostly driven by a contrast between sentence-initial and other verbs, where the odds of that are more than six times lower for sentence-initial CCs compared to CCs that occur more than 10 words into a turn. Most of the effect is due to the contrast between the first four words and all other cases, but there are gradient effects beyond the first four words. It is therefore likely that the positional effect is largely due to epistemic uses of embedding verbs, which often occur sentence-initially (Thompson & Mulac, 1991a; Thompson & Mulac, 1991b). This possibility is explored in ongoing work.