Abstract

Background

Autism is a neurodevelopmental disorder characterized by a specific triad of symptoms such as abnormalities in social interaction, abnormalities in communication and restricted activities and interests. While verbal autistic subjects may present a correct mastery of the formal aspects of speech, they have difficulties in prosody (music of speech), leading to communication disorders. Few behavioural studies have revealed a prosodic impairment in children with autism, and among the few fMRI studies aiming at assessing the neural network involved in language, none has specifically studied prosodic speech. The aim of the present study was to characterize specific prosodic components such as linguistic prosody (intonation, rhythm and emphasis) and emotional prosody and to correlate them with the neural network underlying them.

Methodology/Principal Findings

We used a behavioural test (Profiling Elements of the Prosodic System, PEPS) and fMRI to characterize prosodic deficits and investigate the neural network underlying prosodic processing. Results revealed the existence of a link between perceptive and productive prosodic deficits for some prosodic components (rhythm, emphasis and affect) in HFA and also revealed that the neural network involved in prosodic speech perception exhibits abnormal activation in the left SMG as compared to controls (activation positively correlated with intonation and emphasis) and an absence of deactivation patterns in regions involved in the default mode.

Conclusions/Significance

These prosodic impairments could result not only from abnormal activation patterns but also from an inability to adequately inhibit the default network, two mechanisms that should both be considered when accounting for decreased task performance in High Functioning Autism.

Introduction

Autism is a neurodevelopmental disorder characterized by a specific triad of symptoms: abnormalities in social interaction, abnormalities in communication, and restricted activities and interests. Communication disorders are considered to be core features of Autism Spectrum Disorders [1]. While verbal autistic subjects may present a correct mastery of the formal aspects of speech, they have difficulties in pragmatics [2], [3]. Pragmatics can be seen as the linguistic conditions of appropriate use of sentences in context: the knowledge of basic speech act types, such as assertions, questions and commands; the knowledge of all the systems of rules governing “things done with words”, such as congratulations and proclamations; and the knowledge of what is to be included in talk-in-interaction pragmatics, such as the organization of turn-taking [4]. Pragmatics is essentially conveyed by speech prosody, i.e., the musical dimension of speech, which is carried by variations of the fundamental frequency (F0) and whose perceptual correlate is pitch. Prosody includes modifications in pitch, duration and amplitude at the word and sentence levels. Clinical observations have reported that young children with autism present either a lack of interest in motherese [5], [6] or a marked preference for a synthetic voice resembling motherese [7], which argues for a dysfunction in natural speech processing at an early stage of development. A number of studies have reported that autistic subjects, whether children or adults, present prosodic impairment [8]–[12]. Prosodic deficits of every kind thus pepper autistic speech production: flat or exaggerated intonation, resulting in inappropriate intonation, and abnormalities in rhythm and/or pitch variations. These productive prosodic dysfunctions appear to persist with age although the formal aspects of speech tend to improve [13]–[16].
Nonetheless, this impairment in prosodic production may stem from a more complex dysfunction concerning prosodic perception, which would be in line with the hypothesis that an abnormality of sensory integration processing would be the core of autism [17].

One test, the Prosody-Voice Screening Profile (PVSP), aims at assessing the speaker's prosody and voice in conversational speech [15]. The only available test assessing both perceptive and productive prosodic difficulties in English is the Profiling Elements of the Prosodic System (PEPS-C) developed by Peppé and McCann [13]. Different studies using the PEPS-C have revealed that subjects with Language-Delayed High-Functioning Autism (LD-HFA) present prosodic deficits, distributed about equally across receptive and expressive prosodic tasks [17], and that the prosodic ability of children with LD-HFA is lower than that of typically developing children of the same age, in both production and perception, and somewhat independent of other language skills [6]. These results suggest a perceptive deficit, although whether this perceptive deficit is the cause or the consequence of the productive deficit remains to be investigated.

Atypical low-level perceptual processing has been revealed in the auditory domain [18]. Several studies have reported enhanced simple low-level processing for pitch discrimination and chord disembedding (spectral processing) [19], [20], whereas other studies have reported a deficit in tasks combining spectrally and temporally dynamic, complex material with complex operations (speech) [21], [22]. These findings have been related to the weak central coherence theory, which predicts that processing information globally may hamper perceptual functions in autism [23]. However, the processing of speech complexity by subjects with autism presents a dichotomous picture: some studies have revealed enhanced perceptual pitch processing of speech in autism [20]–[24], whereas other studies have put forward a temporal processing impairment in speech [22] and a loss of the enhanced ability of pitch discrimination in speech [21].

However, while prosodic impairment in autism is beginning to be well documented from a behavioural point of view, little is known about the neural substrate underlying the integration of prosody. In typically developing subjects, auditory prosodic processing has revealed the involvement of the frontal, parietal and temporal cortices bilaterally, that is to say the bilateral ventral pathway, the left dorsal pathway and its right counterpart, thus replicating imaging studies in adults [25].

Concerning autism and the basis of prosody, i.e., vocal sounds, an fMRI study of adults with autism and age-matched controls during passive listening to vocal and non-vocal sounds has revealed that the autism group failed to activate bilateral superior temporal sulcus areas, which are considered to be voice-selective areas [26], in response to vocal sounds [27]. Concerning prosody in particular, three studies using cortical evoked potentials in Asperger syndrome have demonstrated deficient encoding of speech and have related this deficit to poor receptive prosody. Kujala and collaborators [28] have reported that adults with Asperger syndrome present a deficit in the processing of pitch variations which would be linked to hypoactivity of the right cerebral hemisphere. Another study has revealed atypical neural responses to affective prosody in children with Asperger syndrome and their fathers, especially over the right cerebral hemisphere, and that this impairment can already be seen in low-level information processes [29]. The most recent study, using Mismatch Negativity, has observed an enhanced response in individuals with Asperger syndrome in a constant-feature discrimination for both pitch and vowel stimuli, whereas no effect has been revealed when the condition involves deciphering phonemes with pitch variations [21]. The authors have concluded that children with autism lose their advantage in phoneme discrimination when the context of the stimuli is speech-like and requires abstracting invariant speech features from varying input, whereas the discrimination of pitch per se is enhanced in autism as compared to controls. A recent study has revealed that children with autism present aberrant, non-direction-specific pitch tracking which could be related to a deficient brainstem encoding of pitch, leading to the hypothesis that abnormalities in pitch processing may stem from an early subcortical processing impairment, which may account for cortical abnormalities [30].
Nevertheless, although no fMRI study has examined the neural correlates of prosodic speech in autism, three fMRI studies have investigated pragmatics in children and adults with autism. They reported increased activation in the right inferior frontal gyrus (IFG) for subjects with autism as compared to controls when making inferences from discourse [31] or when comprehending pragmatic language [32], which may reflect the higher task demands that subjects with autism faced when interpreting discourse in context. More interestingly, Wang and collaborators [33] have investigated the neural basis of irony comprehension in children and adolescents with High Functioning Autism by differentiating the role of prosody and the role of context. Across all conditions, children with autism presented more activation in prefrontal and temporal regions than control children. More specifically, when only contextual cues were present the right IFG was more activated, whereas when only prosodic cues were available greater activity was observed in the left Superior Temporal Sulcus and the right temporal pole in children with autism versus children with typical development. The authors have suggested that the greater involvement of the temporal regions may reflect a greater burden for children with autism than for control children when task demands require reliance on prosodic information alone. Altogether, these studies have revealed that subjects with autism present variations in the involvement of the right cerebral cortex as compared to controls when processing pragmatics, affective prosody or pitch variations in speech.

Along with studies of activation patterns, several studies have identified a deficit in the default mode network [34]. When comparing psychiatric patients, such as subjects with autism [34], [35], with controls, fMRI studies have revealed differences in the decrease of activity in the default mode network. In typical functioning, when subjects perform a cognitive task, activity in task-related areas increases and default mode activity decreases [36]. Recently, it has been shown that the degree of anticorrelation between activation and deactivation networks is correlated with performance on cognitive tasks [37]. An impaired balance between task-dependent activated networks and task-independent deactivated networks could therefore be suggested.

Taken together, these data suggest the existence of neural abnormalities underlying language impairment and, more particularly, prosodic impairment. It can thus be hypothesized that an abnormal integration of prosody in speech (requiring both spectral and temporal processing) could be at the centre of these deficits. However, the question arises whether this deficit in prosody results from abnormal functioning of the neural network, with hypo- or hyperactivation of right cortical areas, and/or from an altered balance between activated and deactivated networks.

The goal of the present study was therefore to characterize the neural network elicited by the integration of 90-s-long connected speech stimuli rich in prosodic information in High Functioning Autism (HFA) using functional Magnetic Resonance Imaging (fMRI). Since speech unfolds over time, long connected speech stimuli appear to favor a better integration of pitch modulations, as they contain many more F0 modulations than isolated words or sentences do. The perceptive and productive prosodic abilities were investigated using the PEPS-C so as to assess the prosodic deficits in the HFA group. Results revealed the existence of a link between perceptive and productive prosodic deficits in autism and demonstrate for the first time that the neural network involved in prosodic speech perception exhibits abnormal activation and deactivation.

Materials and Methods

Participants

Eight male adults with HFA (mean age 23.38, ±2.10, mean Verbal Intelligence Quotient 89, ±7.89) matched with 8 male controls (mean age 23.05, ±2.02, mean VIQ 128.33, ±4.58) participated in the study after having given their informed written consent in accordance with the guidelines approved by the Ethics Committee of the Bordeaux Medical University. HFA participants were recruited by the Autism Resource Center of Charles Perrens Hospital of Bordeaux and were diagnosed with HFA according to the DSM-IV-R criteria [1] and the ADI-R. They all presented delay in speech onset. Controls were recruited from the community at the University of Bordeaux 2.

No participants had hearing disorders. They had no prior experience of either behavioural or fMRI tasks and were not familiar with the stimulus materials.

Behavioural study: French adaptation of the English PEPS-C

The PEPS-C [13] was adapted to the French language and culture (Hesling et al, in preparation). The French PEPS was implemented with E-prime software (Psychology Software Tools, Pittsburgh, PA), is computerized and lasts 30 minutes.

The procedure aims at evaluating prosodic skills according to a psycholinguistic model [27]. Tasks are at 2 levels: (i) communicative function tasks in which prosody plays an important role (requiring top-down processing, involving meaning) and (ii) form tasks (requiring bottom-up processing, where no meaning is involved). The communicative function tasks are assessed in both receptive and expressive modes whereas the form tasks are assessed in receptive mode in the French version because of the age of the participants since they found the expressive form tasks, i.e., imitation of humming sounds, embarrassing.

Four communicative functions were transposed so as to assess both perception and production skills in French. For each perceptive (input) task, subjects are presented with two images on a computer screen and are required to click on the correct image. For the productive (output) task, one image is presented and they have to produce what they see.

The turn-end task, which involves intonation, aims at assessing the ability to distinguish between a question with rising pitch and a statement with falling pitch. For example, for the input task subjects are required to listen to single words (food items) and decide whether they sound like questions, i.e., if the person on the computer was “asking them if they want some”, or whether they sound like statements. For the output task subjects are required to produce this distinction.

The chunking task assesses the ability to disambiguate syntactically ambiguous sentences by the use of rhythm and silence. For example, for the input task subjects are required to listen to word groups such as “vingt-quatre, douze” (twenty-four, twelve) versus “vingt, quatre, douze” (twenty, four, twelve) and decide whether they sound like 2 or 3 figures by clicking on the correct image. For the output task one image (for example “thirty-one, twelve”) is presented and they have to produce the distinction.

The focus task aims at assessing the ability to identify the emphasized word by the use of stress. For example, for the input task, subjects are required to listen to sentences and to identify which item is missing from sentences such as “je voulais du PAIN et des pommes” (I wanted BREAD and apples). For the output task subjects are required to produce this distinction.

The affect task assesses the ability to decode the affective state of the speaker, as conveyed by variations in intonation and voice, and to produce such an affective state. For example, for the input task subjects are required to listen to one word and decide if the voice likes or does not like the food item by clicking on the correct smiley. For the output task, one smiley is presented with one food item and they have to produce the affect symbolized by the smiley.

Auditory discrimination abilities are also assessed by 2 form tasks, making it possible to assess whether the subject has the underlying skills required to complete the communicative function tasks. The two form tasks are divided into short item tasks (1 or 2 syllables) and long item tasks (6 or 7 syllables). Short items represent intonation whereas long items represent rhythm. The stimuli are laryngograph signals, which sound rather like humming, taken from the recordings of a selection of the four input communicative function tasks.

Each task, whether one of the 8 receptive and expressive communicative function tasks or one of the 2 receptive form tasks, includes 18 items with binary responses, making it possible to calculate a score. This raw score out of 18 is then converted to a percentage. The choice of 18 items is justified by the need for a reasonable range of non-chance scores given that the response is binary, as was done in the PEPS-C [13].
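As a rough illustration of the scoring described above, the sketch below converts a raw score out of 18 into a percentage and computes the smallest raw score that is unlikely to be reached by pure guessing on binary items. The function names, the one-sided binomial criterion and the 0.05 level are assumptions made for this example; they are not taken from the PEPS-C procedure.

```python
from math import comb

N_ITEMS = 18  # items per task, as stated in the text


def percent_score(raw_correct, n_items=N_ITEMS):
    """Convert a raw score (number of correct items) into a percentage."""
    if not 0 <= raw_correct <= n_items:
        raise ValueError("raw score must lie between 0 and n_items")
    return 100.0 * raw_correct / n_items


def prob_at_least(k, n_items=N_ITEMS, p_guess=0.5):
    """Probability of k or more correct answers when guessing on binary items."""
    return sum(
        comb(n_items, i) * p_guess**i * (1 - p_guess) ** (n_items - i)
        for i in range(k, n_items + 1)
    )


def min_non_chance_score(n_items=N_ITEMS, alpha=0.05):
    """Smallest raw score whose one-sided guessing probability is below alpha.

    The alpha level is an assumption of this sketch, not a value from the paper.
    """
    for k in range(n_items + 1):
        if prob_at_least(k, n_items) < alpha:
            return k
    return None  # no score meets the criterion (possible for very short tasks)
```

Under these assumptions, a score of at least 13 out of 18 (about 72%) would be needed to exceed chance level, which leaves six distinct above-chance scores per task.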

Data were analysed using a Mann-Whitney-U test to assess any differences between the groups for each task. Spearman's correlation test was also done to assess the strength of association between perception and production abilities for each communicative function task in each group. Owing to the heterogeneity of the VIQ in the autistic group, a Spearman's correlation test was done to check if the VIQ interfered with the score of each communicative task.
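The two rank-based statistics named above can be sketched in a few lines. This is a minimal illustration using only the Python standard library, not the SPSS procedure used in the study; it returns the statistics only (no p-values), and the function names are hypothetical.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks of x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0 or sy == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return cov / (sx * sy)


def mann_whitney_u(group_a, group_b):
    """Mann-Whitney U statistic (the smaller of the two U values)."""
    ranks = average_ranks(list(group_a) + list(group_b))
    n_a, n_b = len(group_a), len(group_b)
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    return min(u_a, u_b)
```

With two groups whose scores do not overlap at all, `mann_whitney_u` returns 0, the most extreme value; a rho near 1 between input and output scores indicates that participants keep nearly the same rank order in perception and production.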

fMRI protocol

A 90-s-long prosodic connected speech stimulus, a French story for children that includes intonation, rhythm, focus and affect prosodic aspects (i.e., the 4 function tasks), was recorded by a trained native speaker in a soundproof room at 16 bits and a 44.1 kHz sampling rate. The 90-s-long recording was digitally cut at sentence boundaries to obtain 3 fragments used as 30-s-long activation periods. To avoid any disturbing noise at the cuts, a fade-in was applied to the first 5 ms of each fragment and a fade-out to the last 5 ms.

The fMRI protocol consisted of 3 thirty-second-long stimuli interleaved with 4 fifteen-second-long rest periods, the total length of the procedure being 2 minutes and 30 seconds (3 × 30 s + 4 × 15 s = 150 s). Participants were asked to listen to the stimuli while remaining motionless and to keep their eyes closed. The speech stimuli were presented binaurally through headphones specifically designed for use in the scanner (MR Confon, Magdeburg, Germany).
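The block structure can be checked with a short sketch. Summing the stated block durations gives 3 × 30 s + 4 × 15 s = 150 s, which corresponds to 50 volumes at the 3 s repetition time reported in the acquisition section. The assumption that the run starts and ends with a rest period follows from having 4 rest periods around 3 stimuli; the names below are illustrative.

```python
STIM_S = 30   # duration of each speech block (s), from the text
REST_S = 15   # duration of each rest block (s), from the text
N_STIM = 3    # number of speech blocks, from the text
TR_S = 3      # repetition time (s), TR = 3000 ms in the acquisition section


def block_schedule(n_stim=N_STIM, stim_s=STIM_S, rest_s=REST_S):
    """Return (label, onset_s, offset_s) tuples, assuming rest-first ordering."""
    blocks = []
    t = 0
    for _ in range(n_stim):
        blocks.append(("rest", t, t + rest_s))
        t += rest_s
        blocks.append(("speech", t, t + stim_s))
        t += stim_s
    blocks.append(("rest", t, t + rest_s))  # closing rest period
    return blocks


schedule = block_schedule()
total_s = schedule[-1][2]                     # end of the last block
n_scans = total_s // TR_S                     # volumes needed to cover the run
speech_onsets = [on for label, on, _ in schedule if label == "speech"]
```

Here `total_s` is 150, `n_scans` is 50 and the speech blocks start at 15, 60 and 105 s.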

fMRI acquisition

The MRI data were collected at 1.5 Tesla using an Intera Philips system (Philips Medical System, Best, Netherlands) equipped with an eight-element phased-array head coil. For each subject, a series of 50 functional scans were acquired using a T2*-weighted single shot echo-planar sequence (FOV = 256×256, Matrix = 128×128, TR/TE = 3000/60 ms, Flip angle = 90°, SENSE factor = 2). Each scan included 25 slices (no gap, thickness 4mm) parallel to AC-PC (Anterior Commissure-Posterior Commissure). Three dummy scans were used to reach steady-state magnetization. A high-resolution T1-weighted anatomic scan was also acquired to obtain a morphological reference (25 slices parallel to AC-PC with a resolution of 1×1×4 mm3, no gap).

fMRI debriefing

After the scanning session, both groups of participants completed a 10-item questionnaire to verify that they had understood the text. Although the autistic group is heterogeneous in VIQ, each subject with autism answered the 10 questions correctly, and no significant difference between the two groups was revealed (Student t-test, p<0.887).

Whole brain analyses

All data were analyzed using SPM5 (Statistical Parametric Mapping, Wellcome Department of Imaging Neuroscience, London, UK), MATLAB 7.1 (The Mathworks Inc., Natick, MA, USA) and SPSS 16.0 (SPSS, Chicago).

For each individual subject, the dynamic scans were adjusted for slice timing differences, realigned to the first scan to correct for head movement, normalized to the standard Montreal Neurological Institute (MNI) space and spatially filtered by applying an 8 mm3 Gaussian kernel. High-pass filtering (cut-off 128 s) was performed to remove low-frequency artefacts. Then, a general linear model was used to model the data [38]. The functional time series were modeled by a boxcar function convolved with a canonical hemodynamic response function. After estimation of the model parameters, a linear contrast (prosodic speech vs. rest) was built and entered in a second-level random-effects model. Since the heterogeneity of the VIQ in the autistic group could act as a confound, the model was adjusted for VIQ in each group.
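To make the modeling step concrete, the sketch below builds a boxcar for the three speech blocks and convolves it with a double-gamma hemodynamic response. It is only an approximation under stated assumptions: the block onsets assume the run starts with rest, the double-gamma shape parameters (6 and 16, undershoot ratio 1/6) are commonly used defaults rather than values reported here, and the response is sampled directly at the TR instead of on a finer time grid as SPM does.

```python
from math import exp, gamma

TR_S = 3.0     # repetition time (s), from the acquisition section
N_SCANS = 50   # number of functional scans, from the acquisition section
# Assumed speech block (onset, offset) times in seconds, rest-first ordering.
SPEECH_BLOCKS = [(15.0, 45.0), (60.0, 90.0), (105.0, 135.0)]


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density, zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * exp(-t / scale) / (gamma(shape) * scale**shape)


def double_gamma_hrf(tr=TR_S, length_s=32.0):
    """Double-gamma response sampled every TR and normalized to unit sum."""
    n = int(length_s / tr) + 1
    h = [gamma_pdf(i * tr, 6.0) - gamma_pdf(i * tr, 16.0) / 6.0 for i in range(n)]
    total = sum(h)
    return [v / total for v in h]


def boxcar(n_scans=N_SCANS, tr=TR_S, blocks=SPEECH_BLOCKS):
    """1.0 for scans acquired during a speech block, 0.0 otherwise."""
    return [
        1.0 if any(on <= i * tr < off for on, off in blocks) else 0.0
        for i in range(n_scans)
    ]


def convolve_causal(signal, kernel):
    """Discrete convolution truncated to the length of the signal."""
    out = [0.0] * len(signal)
    for i in range(len(signal)):
        for j, k in enumerate(kernel):
            if i - j >= 0:
                out[i] += signal[i - j] * k
    return out


regressor = convolve_causal(boxcar(), double_gamma_hrf())
```

The resulting `regressor` is the predicted BOLD time course for the speech condition; in a general linear model it would form one column of the design matrix alongside a constant term.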

Activation

A one-sample t-test was conducted to reveal activated brain areas in each group. A conjunction analysis was performed to determine areas commonly activated in the HFA and control groups [39]. A two-sample t-test was then run to determine the differences in activation between groups (HFA vs. Controls) for the prosodic listening condition. All data were intensity-thresholded at p<0.01 and cluster size-thresholded at p<0.05, FDR-corrected for multiple comparisons. Anatomical localization was performed using the AAL atlas [40].

Deactivation

A one-sample t-test was conducted to reveal deactivated brain areas in each group. A conjunction analysis was performed to determine areas commonly deactivated in the HFA and control groups [39]. A two-sample t-test was then run to determine the differences in deactivation between groups (HFA vs. Controls) for the prosodic listening condition. All data were intensity-thresholded at p<0.01 and cluster size-thresholded at p<0.05, FDR-corrected for multiple comparisons. Anatomical localization was performed using the AAL atlas [40].

ROIs analyses

Activation

An ROI analysis was conducted to examine the relation between task performance and activated brain areas extracted from the HFA>controls results. Each ROI was defined as an 8 mm-diameter sphere centered on the coordinates of the peak activated voxel of each activated brain cluster.
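The spherical ROI definition can be illustrated as follows. The sketch lists the voxel centres (in mm) that fall inside an 8 mm-diameter sphere around a peak coordinate. The 2 mm isotropic voxel grid is an assumption, since the voxel size after normalization is not stated, and the function name is hypothetical; the study itself used a dedicated toolbox for this step.

```python
def sphere_voxels(center_mm, diameter_mm=8.0, voxel_mm=2.0):
    """Voxel centres (mm) within a sphere around center_mm.

    Assumes the peak lies on the voxel grid and that voxels are isotropic.
    """
    radius = diameter_mm / 2.0
    cx, cy, cz = center_mm
    steps = int(radius // voxel_mm)          # grid steps that can fit in the radius
    offsets = [s * voxel_mm for s in range(-steps, steps + 1)]
    return [
        (cx + dx, cy + dy, cz + dz)
        for dx in offsets
        for dy in offsets
        for dz in offsets
        if dx * dx + dy * dy + dz * dz <= radius * radius
    ]


# Peak of the left SMG cluster reported for the HFA>controls contrast.
roi = sphere_voxels((-44.0, -52.0, 22.0))
```

With these assumptions the ROI contains 33 voxels; the mean signal over these voxels would then be used for the percent signal change estimate.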

A percent BOLD signal change for each ROI was estimated for both groups using MarsBaR [41]. Then, a Spearman correlation test was done to assess the strength of association between the BOLD signal and the scores of the input tasks.

Deactivation

An ROI analysis was conducted to examine the relation between task performance and deactivated areas extracted from the one-sample t-test results. Each ROI was defined as an 8 mm-diameter sphere centered on the coordinates of the peak deactivated voxel of each deactivated brain cluster. A percent BOLD signal change for each ROI was estimated for both groups using MarsBaR [41]. Then, a Spearman correlation test was done to assess the strength of association between the BOLD signal in deactivated areas and the scores of the input tasks.

Results

Behavioural results

French PEPS

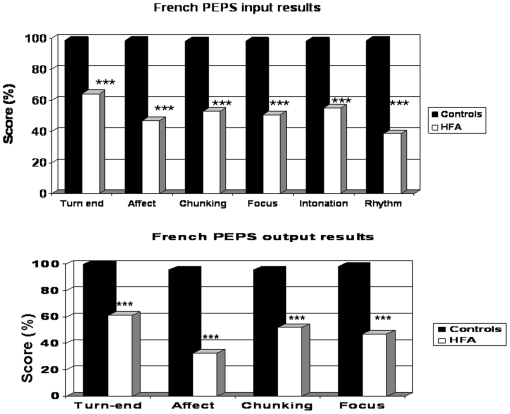

Controls performed all the perceptive and productive tasks at near-ceiling level, though the test cannot be considered saturated since a score of 100% was obtained for only 2 subtests (expressive Turn-end and expressive Chunking). The HFA group's results were significantly lower than those of controls for all the input and output tasks (p<0.001) (Figure 1).

Figure 1. French PEPS input results.

No significant correlation was found between VIQ and any of the communicative tasks using Spearman correlation (Table 1).

Table 1. Bivariate correlations between VIQ and receptive and expressive scores for the French PEPS tasks in the HFA and control groups.

| PEPS tasks | VIQ HFA (Rho) | VIQ controls (Rho) |
|---|---|---|
| Function tasks | | |
| Turn-end input | 0.026 | 0.036 |
| Chunking input | 0.021 | 0.021 |
| Focus input | 0.185 | 0.028 |
| Affect input | 0.018 | 0.018 |
| Turn-end output | 0.122 | 0.022 |
| Chunking output | 0.109 | 0.109 |
| Focus output | 0.108 | 0.108 |
| Affect output | 0.073 | 0.019 |
| Form tasks | | |
| Short Items input | 0.112 | 0.023 |
| Long Items input | 0.031 | 0.015 |

Rho: Spearman's correlation coefficient.

Statistical analyses using Spearman correlation revealed that 3 of the 4 communicative tasks (Chunking task, p<0.001; Focus task, p<0.01; Affect task, p<0.01), the exception being the Turn-end task, presented a significant positive correlation between perception and production scores for the HFA group (Table 2). No significant correlation was found for the control group.

Table 2. Bivariate correlations between receptive and expressive scores for the French PEPS tasks in the HFA and control groups.

| PEPS tasks | HFA (Rho) | Controls (Rho) |
|---|---|---|
| Turn-end | 0.156 | 0.149 |
| Chunking | 0.991** | 0.140 |
| Focus | 0.869* | 0.149 |
| Affect | 0.869* | 0.140 |

Rho: Spearman's correlation coefficient.

**: significant at .01 level, *: significant at .05 level.

Whole-brain analyses

For all the fMRI analyses, the model was adjusted with VIQ.

Activation: One sample t test

The bilateral STS and the left cerebellum were activated in both groups whereas the right thalamus was only activated in the autistic group (Table 3).

Table 3. Brain activation in HFA and in controls.

| Brain areas | K | Tmax | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|
| HFA | | | | | |
| Left MTG | 3891 | 10.09 | −48 | −24 | 4 |
| Left cerebellum | 232 | 8.03 | −24 | −70 | −34 |
| Right MTG | 3558 | 17.01 | 42 | −32 | 2 |
| Right thalamus | 301 | 6.01 | 0 | −6 | 14 |
| Controls | | | | | |
| Left MTG | 3136 | 19.45 | −48 | −30 | −4 |
| Left cerebellum | 414 | 27.38 | −10 | −82 | −34 |
| Right MTG | 4002 | 15.90 | 52 | −18 | −22 |

Note: MTG refers to Middle Temporal Gyrus. One-sample t-test, thresholded at p<0.01, cluster-size threshold at p<0.05 FDR-corrected for multiple comparisons; K refers to the cluster size in voxels. The T maxima and MNI coordinates are for the peak activated voxel in each cluster.

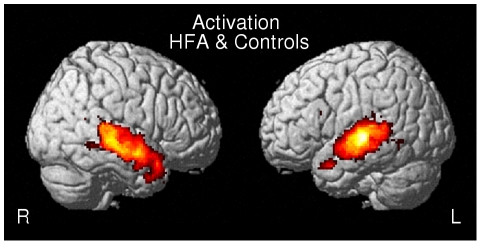

Activation: Conjunction analysis

Common activated areas between HFA and controls were observed bilaterally in the middle temporal gyrus (MTG, BA 21), and in the right temporal lobe (MTG, BA 21, ITG, BA38) (Figure 2, Table 4).

Figure 2. Conjunction map of activation between HFA and controls.

Table 4. Brain areas commonly activated in HFA and controls.

| Brain areas | K | Tmax | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|
| Left MTG | 950 | 8.45 | −56 | −26 | 0 |
| Right MTG | 585 | 6.78 | 56 | −6 | −14 |
| Right ITG | 50 | 5.13 | 46 | 14 | −22 |

Note: MTG refers to Middle Temporal Gyrus, ITG to Inferior Temporal Gyrus. Conjunction analysis, thresholded at p<0.01, cluster-size threshold at p<0.05 FDR-corrected for multiple comparisons; K refers to the cluster size in voxels. The T maxima and MNI coordinates are for the peak activated voxel in each cluster.

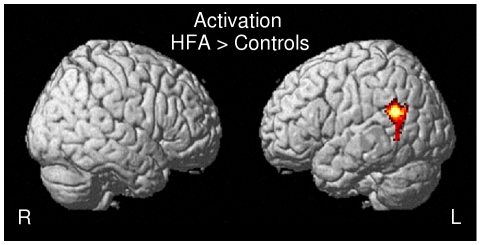

Activation: Two sample t-tests HFA vs. Controls

The HFA group revealed significantly greater activation in the left Supra Marginal Gyrus (SMG) as compared to the control group, whereas no brain area was more activated in the reverse contrast, i.e., Controls>HFA (Figure 3, Table 5).

Figure 3. Two sample t tests of activation, HFA>controls.

Table 5. HFA >Controls: brain activation.

| Brain areas | K | Tmax | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|
| Left SMG | 386 | 4.38 | −44 | −52 | 22 |

Note: SMG refers to Supra Marginal Gyrus. Two sample t-test, thresholded at p<0.01, cluster-sized threshold at p<0.05 FDR-corrected for multiple comparisons, K referring to the cluster size in voxels. The T maxima and MNI coordinates are for the peak activated voxel in each cluster.

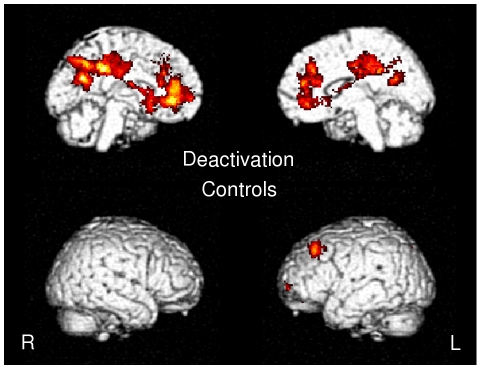

Deactivation: One sample t test

The left precuneus, the right anterior cingulate cortex and the left medial prefrontal cortex deactivated during the prosodic stimulus in the control group, whereas no brain areas were deactivated in the HFA group (Figure 4, Table 6).

Figure 4. Map of deactivation in controls.

Table 6. Deactivation in controls.

| Brain areas | K | Tmax | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|
| Left Prec | 2759 | 6.56 | −10 | −60 | 24 |
| Left MFG | 567 | 5.60 | −32 | 30 | 44 |
| Right ACC | 2739 | 5.77 | 4 | 26 | 18 |

Note: Prec refers to Precuneus, MFG to Middle Frontal Gyrus, ACC to Anterior Cingulate Cortex. One-sample t-test, thresholded at p<0.01, cluster-size threshold at p<0.05 FDR-corrected for multiple comparisons; K refers to the cluster size in voxels. The T maxima and MNI coordinates are for the peak deactivated voxel in each cluster.

Deactivation: Conjunction analysis

There were no common deactivated areas between the HFA and the control groups.

Deactivation: Two sample t-tests: Controls vs. HFA

The control group revealed significantly greater deactivation in the left precuneus, the left medial prefrontal cortex and the left middle temporal gyrus as compared to the HFA group, whereas no brain area was more deactivated in the reverse contrast, i.e., HFA>Controls (Table 7).

Table 7. Controls >HFA: brain deactivation.

| Brain areas | K | Tmax | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|
| Left Prec | 477 | 5.34 | −12 | −44 | 32 |
| Left MFG | 456 | 5.11 | −30 | 28 | 42 |
| Left MTG | 387 | 4.38 | −44 | −52 | 22 |

Note: Prec refers to Precuneus, MFG to Middle Frontal Gyrus, MTG to Middle Temporal Gyrus. Two-sample t-test, thresholded at p<0.01, cluster-size threshold at p<0.05 FDR-corrected for multiple comparisons; K refers to the cluster size in voxels. The T maxima and MNI coordinates are for the peak deactivated voxel in each cluster.

Correlations between ROIs analyses and French PEPS

Activation

Statistical analyses using Spearman correlation revealed that activity in the left SMG presented a significant positive correlation with the scores of 2 communicative tasks, i.e., the turn-end task (p<0.01) and the focus task (p<0.05), for the natural speech condition in the HFA group (Table 8). No other correlation between cerebral activity and PEPS subtests was found in either group.

Table 8. Bivariate correlations between receptive scores of the French PEPS tasks and the left SMG in the HFA group.

| PEPS tasks | HFA (Rho) |
|---|---|
| Turn-end | 0.883** |
| Chunking | 0.153 |
| Focus | 0.778* |
| Affect | 0.234 |

Rho: Spearman's correlation coefficient.

**: significant at .01 level, *: significant at .05 level.

Deactivation

Statistical analyses using Spearman correlation revealed that for the control group the left medial prefrontal cortex presented a significant negative correlation coefficient with the score of 3 communicative tasks, i.e., the chunking task (p<0.05), the focus task (p<0.05) and the affect task (p<0.01). The left precuneus presented a significant negative correlation coefficient with the score of 2 communicative tasks, i.e., the turn-end task (p<0.05) and the affect task (p<0.05). The right anterior cingulate cortex presented a significant negative correlation coefficient with the score of 3 communicative tasks, i.e., the chunking task (p<0.05), the focus task (p<0.05) and the affect task (p<0.05).

None of these 3 regions presented a significant correlation with the score of the communicative tasks for the HFA group (Table 9).

Table 9. Bivariate correlations between receptive scores of the French PEPS tasks and deactivated areas in both groups.

| PEPS tasks | LMPC (Rho) | Lprecu (Rho) | RACC (Rho) |
|---|---|---|---|
| Controls | | | |
| Turn-end | −0.245 | −0.525* | −0.307 |
| Chunking | −0.419* | −0.291 | −0.421* |
| Focus | −0.507* | −0.333 | −0.599* |
| Affect | −0.682** | −0.416* | −0.567* |
| HFA | | | |
| Turn-end | −0.231 | −0.172 | −0.048 |
| Chunking | −0.152 | −0.129 | −0.146 |
| Focus | −0.079 | −0.084 | −0.148 |
| Affect | −0.157 | −0.018 | −0.018 |

LMPC: left medial prefrontal cortex, Lprecu: left precuneus and RACC: right anterior cingulate cortex.

Rho: Spearman's correlation coefficient.

**: significant at .01 level.

*: significant at .05 level.

Discussion

This experiment revealed the existence of a link between perceptive and productive prosodic deficits in autism and demonstrated, for the first time, that the neural network involved in prosodic speech perception exhibits abnormal activation and deactivation. The French adaptation of the English PEPS-C confirmed that subjects with autism present difficulties not only in the production but also in the perception of speech prosody. Moreover, the magnitudes of the perception and production deficits were found to be linked in the HFA group. The fMRI results revealed that the comprehension of prosodic connected speech is supported by a different cerebral network in HFA than in controls, with greater involvement of the left SMG in the HFA group. Moreover, whereas controls deactivated brain regions pertaining to the default mode, such as the left precuneus, the left middle frontal gyrus and the right anterior cingulate, while processing prosodic connected speech, the HFA group failed to deactivate these brain areas. These results support the existence of a prosodic perceptive impairment in autism.

The French PEPS made it possible to assess significant prosodic differences between the control group and the HFA group, the latter showing poorer prosodic abilities in both production and perception tasks. This is in accordance with the results obtained in English with the PEPS-C [9]–[17]. More particularly, the Turn-end task, which consists in differentiating between a question and a statement, involves intonation, i.e., pitch variations. Given the significantly lower score obtained by the HFA group, it could be suggested that HFA subjects have difficulties in decoding and producing those pitch variations in speech. However, these results contradict those of Jarvinen-Pasley and collaborators [24], who reported an enhanced ability in auditory pitch processing in speech in autism as compared with controls. One point may account for this discrepancy: in their study, Jarvinen-Pasley and collaborators asked subjects to listen to sentences with 4 different pitch contours and then to match them with a drawing representing the contour. In that paradigm, subjects can leave semantics aside and concentrate on pitch variations. In our study, as subjects had to match the word they heard with the image, they had to integrate both the signifier (the acoustic representation of the word, i.e., the word they listened to) and the signified (the concept, i.e., the image representing the word) [42]. Although both paradigms require high-level processing, it may be hypothesized that Jarvinen-Pasley's paradigm relies more on low-level processing whereas the paradigm in the present study relies more on high-level processing, which could explain these apparent discrepancies. The Chunking task, which involves disambiguating lexically ambiguous sentences mainly on the basis of pauses and silences, was also poorly performed.
The Focus task, which consists in emphasizing one word in a sentence, was also more difficult for the HFA group, suggesting a problem with stress. As the Affect task requires the 3 acoustic correlates of prosody, namely pitch variations, duration (pauses and silences) and intensity, this task was unsurprisingly less well performed by the HFA group than by the control group. Results obtained in the 4 communicative function tasks may be accounted for by results from the 2 form tasks. These form tasks make it possible to assess whether the subject has the underlying skills required to complete the communicative function tasks. In the present study, the HFA group performed significantly more poorly than controls on these 2 form tasks. More particularly, the short items discrimination task, which represents the ability to process intonation, i.e., pitch variations, was poorly performed by the HFA group, which can be linked to their poor performance in the Turn-end task. The long items discrimination task, which represents the ability to process rhythm, was also poorly performed by the HFA group, which can be linked to their poor performance in the Chunking task. However, as these 2 form tasks do not involve semantic processing, enhanced processing in the HFA group as compared to controls could have been expected. One possible explanation is that, as these tasks require both spectral and temporal information processing, subjects with autism encounter difficulties with temporal information processing, as supported by studies revealing abnormal temporal processing of auditory stimuli in speech [18]–[22]. In summary, both perceptive and productive prosodic skills appear to be impaired in the HFA group. Moreover, the magnitudes of the perceptive and productive deficits were found to be linked for the chunking, focus and affect tasks in the HFA group.
This suggests that perception and production deficits are strongly connected, and it can be hypothesized that production depends on perception abilities, as suggested by studies on deaf subjects or on second language learning [43].

Data from the fMRI study contribute to understanding this impairment since the cerebral network underlying the processing of prosodic connected speech differs between the 2 groups. In controls, the bilateral temporal lobes were found to be activated, which is in accordance with previous data showing that auditory sentence comprehension is associated with involvement of both left and right STG [44]–[47]. However, some studies on auditory prosodic speech perception have revealed right [48]–[52] and/or left [53]–[56] Inferior Frontal Gyrus (IFG) activation, which was not observed in the present study at the chosen threshold, as was also the case in other studies [57], [58]. One possible explanation is that the content of the stimulus, though prosodic, was not emotional enough to make subjects rehearse the stimulus.

While no brain area was more strongly recruited in the control group than in the HFA group, the reverse contrast, i.e., HFA>controls, revealed greater activation in the left SMG. The left SMG has been shown to be connected with a part of the inferior frontal gyrus (pars triangularis, F3td) through the arcuate fasciculus [59]. The left SMG is viewed as the starting point of the working memory loop for phonology, which then projects frontally [60]. As such, the left SMG can be considered as the phonological store area and would then be a part of the phonological loop postulated by Baddeley [61]. It can thus be suggested that, in the natural condition, autistic subjects rely more than controls on working memory processes and on processes translating auditory into articulatory representations [62]. Correlations between the left SMG and the Turn-end and Focus tasks in the HFA group revealed that the more this brain structure was activated, the more accurately the HFA subjects performed the tasks. Controls, in the case of natural speech integration, did not present more activation in the left SMG than the HFA group, though their scores on the task were nearly at ceiling. It can thus be hypothesized that the HFA group recruits the left SMG as a compensatory mechanism. This is supported by the idea that prosody could be so troublesome for them that they concentrate more on phoneme discrimination, which is part of literal speech decoding, either to avoid paying attention to prosodic features or to be able to understand the story. A further explanation for this left SMG activation could stem from a right hypoactivation in the HFA group, which is in line with previous cortical evoked potential studies reporting a right hypoactivation in autism [28], [29].
In fact, even if the present results did not reveal any differences in the right STS between controls and the HFA group at the chosen threshold, a more permissive threshold revealed that the right STS was more activated in controls than in the HFA group, which would support the hypoactivation hypothesis. Another complementary explanation comes from the deactivation results. fMRI studies comparing autistic patients with controls [34], [35] have revealed differences in the decrease of activity in the default network, although these differences were not correlated with task performance. In line with this, in the present study, the control group exhibited deactivation in this default mode network while processing prosodic connected speech, suggesting that listening to the story leads to inhibition of this network, which is engaged in self-reflective thought [63]. The underlying mechanism of this inhibition seems to be a facilitation of task-specific activations through the suppression of task-irrelevant cortical regions, enabling the subject to focus attention on the relevant process. This hypothesis is supported by the correlations between the PEPS scores and the 3 deactivated seed regions (the left precuneus, the right anterior cingulate cortex and the left medial prefrontal cortex). In fact, it can be hypothesized that the more these brain regions deactivate, the better the score, which may reflect the degree to which subjects express the balance between task-dependent and task-independent networks. In this respect, the inability of the HFA group to deactivate the default mode network evidenced here could account, at least in part, for a less efficient processing of the relevant information, i.e., the prosodic dimension of speech, in autistic patients.
The question arises whether this deactivation failure results from an abnormal functional interaction between task-dependent and task-independent networks or from a dysfunction of the default mode network itself. Even if the first hypothesis cannot be excluded, several functional imaging studies in autism have revealed abnormalities in middle anterior and posterior regions involved in the default mode network during a variety of tasks, either socioemotional [33]–[64] or non-socioemotional [65], [66].

This preliminary study also has several limitations that need to be taken into account when interpreting the findings. The results are based on a relatively small sample of subjects and there is heterogeneity in VIQ in the HFA group, which limits the generalization of the results and makes replication an important step. Even if these limits must be considered, three main points can be raised to counter them: (i) VIQ scores did not correlate with any of the communicative tasks, (ii) each subject properly answered the 10-item questionnaire, making it possible to state that they understood the text, and (iii) VIQ was used as a covariate in all the imaging measures.

In conclusion, this study confirms the existence of perceptive prosodic deficits in autism and demonstrates for the first time that the neural network involved in prosodic speech perception exhibits abnormal activation and deactivation. Future studies should further specify the respective roles of task-dependent and task-independent networks and assess the direction of the link between perception and production in autism.

Acknowledgments

The authors thank Drs Ray Cooke and Anne-Marie Carassou for editing the English version of the manuscript. They thank Martine Bordessoules for the scanning of the subjects.

They also thank Willy Mayo, Kattalin Etchegoyhen and Cédric Galéra.

Footnotes

Competing Interests: The authors have declared that no competing interests exist.

Funding: This study was supported by the Fondation de France Recherche Autisme 2006 012484. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Edition t., editor. APA. DSM IV Diagnostic and Statistical Manual. Washington, D.C.: American Psychiatric Association; 1994.

- 2. Paul R, Augustyn A, Klin A, Volkmar FR. Perception and production of prosody by speakers with autism spectrum disorders. J Autism Dev Disord. 2005;35:205–220. doi: 10.1007/s10803-004-1999-1.

- 3. Baron-Cohen S, Ring H, Moriarty J, Shmitz P, Costa D, et al. Recognition of mental state terms: a clinical study of autism, and a functional neuroimaging study of normal adults. British Journal of Psychiatry. 1994;165:640–649. doi: 10.1192/bjp.165.5.640.

- 4. Klin A. Young autistic children's listening preferences in regard to speech: a possible characterization of the symptom of social withdrawal. J Autism Dev Disord. 1991;21:29–42. doi: 10.1007/BF02206995.

- 5. Klin A. Listening preferences in regard to speech in four children with developmental disabilities. J Child Psychol Psychiatry. 1992;33:763–769. doi: 10.1111/j.1469-7610.1992.tb00911.x.

- 6. Kuhl PK, C-C S, Padden D, Dawson G. Links between social and linguistic processing of speech in preschool children with autism: Behavioral and electrophysiological evidence. Developmental Science. 2005;8:1–12. doi: 10.1111/j.1467-7687.2004.00384.x.

- 7. Burack JA, Charman T, Yirmiya N, Zelazo RP, editors. The development of autism: Perspectives from theory and research. Mahwah, NJ: Lawrence Erlbaum Associates; 2001.

- 8. Shriberg LD, Paul R, McSweeny JL, Klin AM, Cohen DJ, et al. Speech and prosody characteristics of adolescents and adults with high-functioning autism and Asperger syndrome. J Speech Lang Hear Res. 2001;44:1097–1115. doi: 10.1044/1092-4388(2001/087).

- 9. Peppé S, McCann J, Gibbon F, O'Hare A, Rutherford M. Assessing prosodic and pragmatic ability in children with high-functioning autism. Journal of Pragmatics. 2006;38:1776–1791.

- 10. Tager-Flusberg H. On the nature of linguistic functioning in early infantile autism. J Autism Dev Disord. 1981;11:45–56. doi: 10.1007/BF01531340.

- 11. Baltaxe CSJ. In: Prosodic development in normal and autistic children. Schopler E, editor. New York: Plenum; 1985. pp. 95–125.

- 12. Fay WS, Schuller. Emerging language in autistic children. Baltimore: University Park Press; 1980.

- 13. Peppe S, McCann J. Assessing intonation and prosody in children with atypical language development: the PEPS-C test and the revised version. Clin Linguist Phon. 2003;17:345–354. doi: 10.1080/0269920031000079994.

- 14. Paul R, Shriberg LD, McSweeny J, Cicchetti D, Klin A, et al. Brief report: relations between prosodic performance and communication and socialization ratings in high functioning speakers with autism spectrum disorders. J Autism Dev Disord. 2005;35:861–869. doi: 10.1007/s10803-005-0031-8.

- 15. Shriberg LD, Kwaitkowski J, Rasmussen C. Prosody-Voice Screening Profile (PVSP): Scoring forms and training materials. 1990. Communication Skill Builders. Tucson.

- 16. Young EC, Morris D, Hyman SL, Benneto L. The use of 2 language tests to identify pragmatic language problems in children with autism spectrum disorders. Language, Speech, and Hearing Services in Schools. 2005;36:62–72. doi: 10.1044/0161-1461(2005/006).

- 17. Peppe S, McCann J, Gibbon F, O'Hare A, Rutherford M. Receptive and expressive prosodic ability in children with high-functioning autism. J Speech Lang Hear Res. 2007;50:1015–1028. doi: 10.1044/1092-4388(2007/071).

- 18. Samson F, Jernel B, Belin P, Ciocca V. Can spectro-temporal complexity explain the autistic pattern of performance on auditory tasks? J Autism Dev Disord. 2006;36:65–76. doi: 10.1007/s10803-005-0043-4.

- 19. Bonnel A, Peretz I, Trudel M, Gallun E, Bonnel AM. Enhanced pitch sensitivity in individuals with autism: a signal detection analysis. J Cogn Neurosci. 2003;15:226–235. doi: 10.1162/089892903321208169.

- 20. Heaton P, Williams K, Cummins O, Happé F. Autism and pitch processing splinter skills: a group and sub-group analysis. Autism. 2008;12:21–37. doi: 10.1177/1362361307085270.

- 21. Lepisto T, Kajander M, Vanhala R, Alku P, Huotilainen M, et al. The perception of invariant speech features in children with autism. Biol Psychol. 2008;77:25–31. doi: 10.1016/j.biopsycho.2007.08.010.

- 22. Groen W, Huurne N, Swinkels S, van der Gaag R, Buitelaar J, et al. Intact Spectral but Abnormal Temporal Processing of Auditory Stimuli in Autism. Journal of Autism and Developmental Disorders. 2009;39:742–750. doi: 10.1007/s10803-008-0682-3.

- 23. Happe F, Frith U. The weak coherence account: detail-focused cognitive style in autism spectrum disorders. J Autism Dev Disord. 2006;36:5–25. doi: 10.1007/s10803-005-0039-0.

- 24. Jarvinen-Pasley A, Ramus F, Happé F, Heaton P. Enhanced perception of speech in autism. Developmental Science. 2008;11:109–121. doi: 10.1111/j.1467-7687.2007.00644.x.

- 25. Plante E, Schmithorst V. Prosodic processing by children: An fMRI study. Brain & Language. 2006;97:332–342. doi: 10.1016/j.bandl.2005.12.004.

- 26. Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B. Voice-selective areas in human auditory cortex. Nature. 2000;403:309–312. doi: 10.1038/35002078.

- 27. Gervais H, Belin P, Boddaert N, Leboyer M, Coez A, et al. Abnormal cortical voice processing in autism. Nat Neurosci. 2004;7:801–802. doi: 10.1038/nn1291.

- 28. Kujala T, Lepisto T, Nieminen-von Wendt T, Naatanen P, Naatanen R. Neurophysiological evidence for cortical discrimination impairment of prosody in Asperger syndrome. Neurosci Lett. 2005;383:260–265. doi: 10.1016/j.neulet.2005.04.048.

- 29. Korpilahti P, Jansson-Verkasalo E, Mattila ML, Kuusikko S, Suominen K, et al. Processing of affective speech prosody is impaired in Asperger syndrome. J Autism Dev Disord. 2007;37:1539–1549. doi: 10.1007/s10803-006-0271-2.

- 30. Russo NM, Trommer B, Nicol T, Zecker S, Bradlow A, et al. Deficient brainstem encoding of pitch in children with Autism Spectrum Disorders. Clin Neurophysiol. 2008;119:1720–1731. doi: 10.1016/j.clinph.2008.01.108.

- 31. Mason RA, Kana RK, Minshew N, Just MA. Theory of mind disruption and recruitment of the right hemisphere during narrative comprehension in autism. Neuropsychologia. 2008;46:269–280. doi: 10.1016/j.neuropsychologia.2007.07.018.

- 32. Tesink C, Buitelaar JK, Petersson K, Gaag R, Kan C, et al. Neural correlates of pragmatic language comprehension in autism disorders. Brain. 2009;132:1941–1952. doi: 10.1093/brain/awp103.

- 33. Wang AT, Lee SS, Sigman M, Dapretto M. Neural basis of irony comprehension in children with autism: the role of prosody and context. Brain. 2006;129:932–943. doi: 10.1093/brain/awl032.

- 34. Kennedy DP, Redcay E, Courchesne E. Failing to deactivate: resting functional abnormalities in autism. Proc Natl Acad Sci U S A. 2006;103:8275–8280. doi: 10.1073/pnas.0600674103.

- 35. Kennedy DP, Courchesne E. The intrinsic functional organization of the brain is altered in autism. Neuroimage. 2008;39:1877–1885. doi: 10.1016/j.neuroimage.2007.10.052.

- 36. Grady C, Kovacevic N, Strother S, Afshin B, Wojtowicz M, et al. A Multivariate Analysis of Age-Related Differences in Default Mode and Task-Positive Networks across Multiple Cognitive Domain. Cerebral Cortex. 2010;20:1432–1447. doi: 10.1093/cercor/bhp207.

- 37. Kelly A, Biswal B, Castellanos F, Milham M. Competition between functional brain networks mediates behavioral variability. NeuroImage. 2008;39:527–537. doi: 10.1016/j.neuroimage.2007.08.008.

- 38. Friston KJ, Ashburner J, Poline JB, Frith CD, Heather JD, et al. Spatial registration and normalization of images. Human Brain Mapping. 1995;2:165–189.

- 39. Nichols T, Brett M, Andersson J, Wager T, Poline JB. Valid conjunction inference with the minimum statistic. Neuroimage. 2005;25:653–660. doi: 10.1016/j.neuroimage.2004.12.005.

- 40. Tzourio-Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, et al. Automated anatomical labelling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. Neuroimage. 2002;15:273–289. doi: 10.1006/nimg.2001.0978.

- 41. Brett M, Anton JL, Valabregue R, Poline JB. Region of interest analysis using an SPM toolbox (abstract). 2002. Paper presented at the 8th international conference of Functional Mapping of the Human Brain, Sendai, Japan.

- 42. Saussure F. 1976. Cours de linguistique générale, Payot, Paris.

- 43. Guberina P. The verbo-tonal method for rehabilitating people with communication problems. New York: World Rehabilitation Fund, International Exchange of Information in Rehabilitation; 1981.

- 44. Kuperberg GR, Bullmore ET, Brammer MJ, Rabe-Hesketh S, Wright IC, et al. Common and distinct neural substrates for pragmatic, semantic, and syntactic processing of spoken sentences: an fMRI study. Journal of Cognitive Neuroscience. 2000;12:321–341. doi: 10.1162/089892900562138.

- 45. Meyer M, Friederici A, Lohmann G, von Cramon D. fMRI reveals brain regions mediating slow prosodic modulations in spoken sentences. Human Brain Mapping. 2002;17:73–88. doi: 10.1002/hbm.10042.

- 46. Muller RA, Behen ME, Muzik O, Mangner TJ, Chugani HT. Receptive and expressive language activations for sentences: a PET study. Neuroreport. 1997;8:3767–3770. doi: 10.1097/00001756-199712010-00022.

- 47. Schlosser MJ, Fulbright RK, Gore JC, Mc Carthy G. Functional MRI studies of auditory comprehension. Human Brain Mapping. 1998;6:1–13. doi: 10.1002/(SICI)1097-0193(1998)6:1<1::AID-HBM1>3.0.CO;2-7.

- 48. Breitenstein C, Van Lancker D, Kempler D, Daum I, Waters CH. The contribution of working memory to the perception of emotional prosody in Parkinson's disease. Brain & Language. 1998;65:243–246.

- 49. George MS, Parekh P, Rosinsky N. Understanding emotional prosody activates right hemisphere regions. Archives of Neurology. 1996;53:665–670. doi: 10.1001/archneur.1996.00550070103017.

- 50. Hesling I, Clement S, Bordessoules M, Allard M. Cerebral mechanisms of prosodic integration: evidence from connected speech. NeuroImage. 2005;24:937–947. doi: 10.1016/j.neuroimage.2004.11.003.

- 51. Imaizumi S, Mori K, Kiritani S, Kawashima R, Sugiura M, et al. Vocal identification of speaker and emotion activates different brain regions. NeuroReport. 1997;8:2809–2812. doi: 10.1097/00001756-199708180-00031.

- 52. Ross ED, Thompson RD, Yenkosky J. Lateralization of affective prosody in brain and the callosal integration of hemispheric language functions. Brain and Language. 1997;56:27–54. doi: 10.1006/brln.1997.1731.

- 53. Buchanan L, Kiss I, Burgess C. Word and non-word reading in a deep dyslexic: Phonological information enhances performance. Brain and Cognition. 2000;43:65–68.

- 54. Démonet JF, Chollet F, Ramsay S, Cardebat D, Nespoulous JL, et al. The anatomy of phonological and semantic processing in normal subjects. Brain. 1992;115:1753–1768. doi: 10.1093/brain/115.6.1753.

- 55. Zatorre RJ, Evans AC, Meyer E, Gjedde A. Lateralization of phonetic and pitch discrimination in speech processing. Science. 1992;256:846–849. doi: 10.1126/science.1589767.

- 56. Zatorre RJ, Halpern AR, Perry DW, Meyer E, Evans AC. Hearing in the mind's ear: A PET investigation of musical imagery and perception. Journal of Cognitive Neuroscience. 1996;8:29–46. doi: 10.1162/jocn.1996.8.1.29.

- 57. Perani D, Paulesu E, Sebastian-Galles N, Dupoux E, Dehaene S, et al. The bilingual brain: Proficiency and age of acquisition of the second language. Brain. 1998;121:1841–1852. doi: 10.1093/brain/121.10.1841.

- 58. Dehaene S, Dupoux E, Mehler J, Cohen L, Paulesu E, et al. Anatomical variability in the cortical representation of first and second language. Neuroreport. 1997;8:3809–3815. doi: 10.1097/00001756-199712010-00030.

- 59. Catani M, Jones DK, Ffytche DH. Perisylvian language networks of the human brain. Annals of Neurology. 2005;57:8–16. doi: 10.1002/ana.20319.

- 60. Vigneau M, Beaucousin V, Hervé PY, Duffau H, Crivello F, et al. Meta-analysing left hemisphere language areas: Phonology, semantics, and sentence processing. Neuroimage. 2006;30:1414–1432. doi: 10.1016/j.neuroimage.2005.11.002.

- 61. Baddeley AD, Emslie H, Nimmo-Smith I. Speed and Capacity of Language Processing (SCOLP) Test. Bury St Edmunds (UK): Thames Valley Test Company; 1992.

- 62. Hickok G, Poeppel D. Towards a functional neuroanatomy of speech perception. Trends Cogn Sci. 2000;4:131–138. doi: 10.1016/s1364-6613(00)01463-7.

- 63. Raichle ME, MacLeod AM, Snyder AZ, Powers WJ, Gusnard DA, et al. A default mode of brain function. Proc Natl Acad Sci U S A. 2001;98:676–682. doi: 10.1073/pnas.98.2.676.

- 64. Pierce K, Haist F, Sedaghat F, Courchesne E. The brain response to personally familiar faces in autism: findings of fusiform activity and beyond. Brain. 2004;127:2703–2716. doi: 10.1093/brain/awh289.

- 65. Luna B, Minshew NJ, Garver KE, Lazar NA, Thulborn KR, et al. Neocortical system abnormalities in autism: an fMRI study of spatial working memory. Neurology. 2002;59:834–840. doi: 10.1212/wnl.59.6.834.

- 66. Ring HA, Baron-Cohen S, Wheelwright S, Williams SC, Brammer M, et al. Cerebral correlates of preserved cognitive skills in autism: a functional MRI study of embedded figures task performance. Brain. 1999;122(Pt 7):1305–1315. doi: 10.1093/brain/122.7.1305.