Abstract

Although the right fusiform face area (FFA) is often linked to holistic processing, new data suggest this region also encodes part-based face representations. We examined this question by assessing the metric of neural similarity for faces using a continuous carryover functional MRI (fMRI) design. Using faces varying along dimensions of eye and mouth identity, we tested whether these axes are coded independently by separate part-tuned neural populations or conjointly by a single population of holistically tuned neurons. Consistent with prior results, we found a subadditive adaptation response in the right FFA, as predicted for holistic processing. However, when holistic processing was disrupted by misaligning the halves of the face, the right FFA continued to show significant adaptation, but in an additive pattern indicative of part-based neural tuning. Thus this region seems to contain neural populations capable of representing both individual parts and their integration into a face gestalt. A third experiment, which varied the asymmetry of changes in the eye and mouth identity dimensions, also showed part-based tuning in the right FFA. In contrast to the right FFA, the left FFA consistently showed a part-based pattern of neural tuning across all experiments. Together, these data support the existence of both part-based and holistic neural tuning within the right FFA, further suggesting that such tuning is surprisingly flexible and dynamic.

INTRODUCTION

Few visual stimuli are as ecologically important for human observers as the human face. Faces contain a variety of cues to emotional state, social status, and identity, which human observers can extract with seemingly little effort. However, as a stimulus class, faces pose a difficult challenge for the visual system, because they are highly complex and relatively homogeneous.

One highly influential account of face perception argues that faces differ from other visual stimuli in how they are processed, undergoing relatively little decomposition into component parts (Farah et al. 1998). Instead, they are encoded via a holistic or integrative mechanism, as a template or gestalt. Evidence for holistic processing comes from behavioral findings such as the composite face effect (Young et al. 1987), in which irrelevant features from one half of a face interfere with recognition of the other half. Together with other findings such as the face inversion effect (Yin 1969) and the whole-over-part superiority effect (Tanaka and Farah 1993), these results have been taken as evidence of a dedicated holistic processing mechanism for upright faces.

Despite this general consensus, many questions about holistic processing remain. For example, some authors have proposed that the holistic/gestalt encoding described above is one of several "configural" mental processes associated with face perception, including processes that represent faces in terms of the spacing between features rather than the features themselves (Maurer et al. 2002; Rossion 2008). However, methodological confounds within this literature have led other researchers to question this account (McKone and Yovel 2009).

Instead, an explicit formulation of holistic processing may be borrowed from the general object recognition literature. Research on the metric structure of similarity spaces has long distinguished two types of stimulus coding: integral and separable (Shepherd 1964). Operationally (Garner 1974), an integral stimulus space can be defined as a system in which changes on one dimension interfere with perception of the other dimension (e.g., hue and saturation variation in colors). In a separable stimulus space, in contrast, the dimensions are perceived independently (e.g., color and shape of objects). In general, the perception of faces has been found to have integral characteristics, although evidence for a slower, separable process that individuates features has also been found (Bartlett et al. 2003).

Recent neuroimaging research has explicitly linked behavioral measures of integral versus separable perception to particular forms of neural coding. Specifically, for two given stimulus dimensions, integral stimulus perception would be associated with conjointly tuned neurons, whereas separable perception implies the existence of neural populations that are independently tuned for the two stimulus dimensions (Drucker et al. 2009). These two types of neural tuning may be distinguished through measurement of neural habituation (the reduction in neural response to stimuli that repeat prior stimulus features). Essentially, independent neural populations are expected to produce an additive recovery from adaptation to “combined” stimulus changes, whereas a conjointly tuned neural population will produce a recovery that is less than the sum of the recoveries observed for “pure” stimulus changes along each dimension (Drucker et al. 2009; Engel 2005). This neural measure is therefore reflective of the behavioral measure of metric similarity.

Given these results, can we likewise apply a conjoint versus independent metric to the holistic processing of faces? Recent research on the representation of "face space" has applied similar ideas to questions such as the geometry of face space (Loffler et al. 2005) and the representation of gender and ethnicity (Ng et al. 2006). The latter study, for example, directly examined conjoint tuning using psychophysical and functional MRI (fMRI) adaptation for faces that varied in both gender and ethnicity versus along a single dimension. Other fMR adaptation studies (Jiang et al. 2006; Rothstein et al. 2005) have shown a monotonic relationship between the dissimilarity of a pair of faces presented in succession and the magnitude of recovery of the neural response within the fusiform face area (FFA) (Kanwisher et al. 1997; McCarthy et al. 1997). These results indicate the presence of a neural population with broad tuning to facial identity. Whether these neurons are tuned to face parts or to whole faces, however, remains unresolved.

In this study, we examined whether neural tuning for faces is best quantified by a conjoint or independent metric using fMR adaptation. Although hallmarks of holistic processing have been reported for several “face-selective” brain areas, activity within the right FFA in particular has been shown to be consistent with the face inversion effect (Yovel and Kanwisher 2004, 2005) and composite face effect (Schiltz and Rossion 2006). Therefore the right FFA seems to play a special role in the holistic processing of faces.

It is unclear, however, whether this holistically responsive region is composed solely of neurons tuned to represent entire faces. Single-unit recordings from analogous regions of monkey inferotemporal (IT) cortex have found neurons sensitive to individual features or combinations of features, without the high nonlinearity expected of holistic processing (Freiwald et al. 2009; Perrett et al. 1982). Likewise, intracranial recordings in humans show preferential responses to face parts at sites both lateral and medial to those responding to whole faces in ventral IT cortex (McCarthy et al. 1999). Thus it is possible that both parts and wholes are represented within face-selective areas such as the FFA.

Consistent with this idea, recent work from our own laboratory using fMRI has shown that faces manipulated to elicit holistic or part-based processing produce similar activation of the FFA (Harris and Aguirre 2008). However, within the right FFA, the response was also modulated by familiarity, which is known to increase holistic processing (Buttle and Raymond 2003; Young et al. 1985). Therefore, although the right FFA is of special importance for holistic face processing, both part and whole representations seem to exist within this area.

In the experiments presented here, we test this idea in greater depth by probing the metric of neural similarity within the FFA using fMRI. Specifically, we measured neural adaptation to stimulus changes along a single dimension (e.g., eye appearance) and to changes along two dimensions (e.g., eyes and mouth) using a continuous carry-over approach (Aguirre 2007). If independent neural populations represent the eyes and mouth, we would expect recovery from adaptation for combined changes to the eyes and mouth to be simply the sum of the recovery seen for each feature change in isolation. Conversely, if neural populations represent eyes and mouth changes conjointly, we would predict a subadditive response to combined stimulus changes (Drucker et al. 2009).

This test is most efficiently conducted within the context of a continuous carry-over design (Aguirre 2007). Unlike standard fMR adaptation studies, which use paired presentations of adaptor and test, carry-over designs present stimuli in an unbroken, counterbalanced sequence. Counterbalancing also allows the "direct" effects of stimuli (e.g., a larger response to one stimulus than to another) to be distinguished from carry-over effects (the effect of the preceding stimulus on the response to the current stimulus).

Although the methodological aspects of the continuous carry-over approach have been discussed previously (Aguirre 2007; Drucker et al. 2009), it is worth reiterating several aspects of the design here. First, like all fMR adaptation techniques, this method rests on the assumptions that BOLD responses monotonically reflect neural population activity and that the underlying neural responses vary smoothly with changes along a stimulus dimension. Thus this method cannot probe extremely sparse neural representations, such as a “grandmother cell” coding of individual face identity. However, given that adaptation effects in the FFA are known to vary monotonically with stimulus similarity (Jiang et al. 2006), this latter stipulation is unlikely to be an issue for our experiment. Other assumptions of the continuous carry-over technique, such as an equivalent mean response to each face identity, are directly verified as part of the experimental analysis.

Therefore in this study, we used a continuous carry-over design to probe for independent versus conjoint neural tuning to faces varying along dimensions of eye and mouth identity. Using four faces changing along eye, mouth, or both eye and mouth axes (Fig. 1A), we measured neural adaptation to a continuous stream of stimuli. By comparing the recovery from neural adaptation for “Pure” changes along a single axis (eyes only or mouth only) to that for “Composite” changes along both eye and mouth axes, we can infer whether neural populations in the FFA represent faces in terms of parts or wholes.

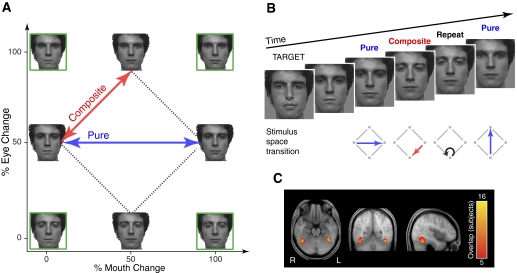

Fig. 1.

Methods for the experiments. A: 4 endpoint faces (green boxes) varying along eye and mouth dimensions were morphed to create the experimental stimuli, which have equal 50% differences in both eye and mouth dimensions. Signal recovery in functional MRI (fMRI) was measured for stimulus transitions either along a single axis (e.g., mouth only), so-called “pure” transitions (blue), or “composite” changes along both eye and mouth axes (red). Because the original stimulus images used in the experiment were not approved for publication, example faces are shown in the figure. B: sample fMRI sequence. The measure of interest was the differential adaptation of the fMRI response for Pure vs. Composite stimulus transitions from the previous face. Subjects were not explicitly informed of the stimulus space arrangement or the transitions of interest and were instructed to monitor for the appearance of a target face. C: analyses focused on the fusiform face area (FFA) in left and right hemispheres, functionally defined in each subject from an independent localizer scan. These maps reflect the overlap of FFA across subjects (n = 16), shown atop the average of the registered anatomical images across subjects.

METHODS

Experiment 1: testing for conjoint tuning for holistically perceived faces

SUBJECTS.

Sixteen subjects between the ages of 18 and 35 yr with normal or contact-corrected vision were recruited from local universities. An additional four subjects were excluded because of excessive head motion or failure to show main effects of adaptation (described below). In this and all subsequent experiments, informed consent was obtained from all subjects, and procedures followed institutional guidelines and the Declaration of Helsinki.

STIMULI.

Two neutral faces (266 × 360 pixels, subtending 5.7 × 7.6° of visual angle) from the NimStim stimulus set (Tottenham et al. 2009) were used to make four endpoint faces varying along eye and mouth dimensions (Fig. 1A, green boxes). Morphs between these endpoint faces were combined with the base features to create the final experimental stimuli varying by eye and mouth features (Fig. 1A, dashed lines). Stimulus transitions consisted of either Pure (Fig. 1A, blue lines) changes along a single axis (e.g., mouth only) or Composite changes along both eye and mouth axes (Fig. 1A, red lines).

The stimuli were designed so that the summed composite stimulus changes (50% eye + 50% mouth) would be matched in low-level perceptual similarity to a full Pure stimulus change (100% eye or 100% mouth). We tested for this property in two ways. First, a perceptual discriminability task run separately in six different subjects found no differences between the Pure and Composite transitions in accuracy [Pure = 93.1 ± 2% (SE), Composite = 95.0 ± 1.2%] or reaction time (Pure = 1.27 ± 0.22 s, Composite = 1.26 ± 0.22 s). Second, the similarity of the face images was assessed using a computational model of V1 cortex, consisting of Gabor filters of varying scales and orientations (Renninger and Malik 2004). The computed dissimilarity between pairs of stimuli that differed in a single feature (Pure: 0.017) was essentially the same as that computed for pairs of stimuli that differed in both eyes and mouth (Composite: 0.016).
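The V1-model comparison can be illustrated with a small Gabor filter bank. The sketch below is not the Renninger and Malik (2004) implementation: the wavelengths, number of orientations, rectification, and normalized-distance formula are assumptions chosen for illustration, so it will not reproduce the 0.017 and 0.016 values above. It only shows the general recipe of filtering both images, rectifying the responses, and taking a distance between the response vectors.

```python
import numpy as np

def gabor_kernel(size, wavelength, theta, sigma):
    """Even-phase (cosine) Gabor kernel of shape (size, size)."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    envelope = np.exp(-(x ** 2 + y ** 2) / (2 * sigma ** 2))
    kernel = envelope * np.cos(2 * np.pi * x_rot / wavelength)
    return kernel - kernel.mean()  # remove DC so uniform regions give no response

def filter_bank_responses(image, wavelengths=(4, 8, 16), n_orient=4):
    """Rectified responses of an assumed Gabor bank, concatenated into one vector."""
    image_fft = np.fft.fft2(image)
    feats = []
    for lam in wavelengths:
        size = int(2 * lam) | 1  # odd kernel width that grows with wavelength
        for k in range(n_orient):
            kern = gabor_kernel(size, lam, np.pi * k / n_orient, sigma=0.5 * lam)
            # Circular convolution via FFT; the kernel is zero-padded to the image size.
            # The resulting spatial offset is identical for both images being compared.
            resp = np.real(np.fft.ifft2(image_fft * np.fft.fft2(kern, s=image.shape)))
            feats.append(np.abs(resp).ravel())  # full-wave rectification
    return np.concatenate(feats)

def v1_dissimilarity(img_a, img_b):
    """Distance between response vectors, scaled to lie in [0, 1]."""
    ra, rb = filter_bank_responses(img_a), filter_bank_responses(img_b)
    return np.linalg.norm(ra - rb) / (np.linalg.norm(ra) + np.linalg.norm(rb))

# Hypothetical usage with random grayscale arrays standing in for face images.
rng = np.random.default_rng(0)
face_a = rng.random((64, 64))
face_b = face_a.copy()
face_b[40:50, 20:44] = rng.random((10, 24))  # alter a "mouth" region only
print(v1_dissimilarity(face_a, face_a), v1_dissimilarity(face_a, face_b))
```

Scaling by the summed response norms keeps the measure between 0 (identical images) and 1, which makes values comparable across image sets of different contrast; other normalizations would work equally well for a Pure versus Composite comparison.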

PROCEDURE.

The experiment consisted of five runs, during which subjects saw a steady stream of stimuli consisting of the four faces from the artificial face space described above, as well as a "target" face from outside the space and blank screens. The order of these six types of stimuli (Eye1, Eye2, Mouth1, Mouth2, Target, and Blank) was determined by a first-order counterbalanced, optimized, n = 6, type 1, index 1 sequence (a sequence that presents the stimuli in counterbalanced, permuted blocks; Aguirre 2007). Five scans of 156 TRs (repetition time) each (∼8 min) were acquired for each subject, with two stimuli presented per 3-s TR. To establish steady-state hemodynamic responses at the start of each run, the final five TRs (10 trials) of each run were repeated as the first five TRs of the following run; these repeated TRs were trimmed before analysis (Aguirre 2007). Thus there were 151 TRs (302 trials) per scan, for a total of 755 TRs (1,510 trials).
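In a type 1, index 1 sequence, every stimulus precedes every stimulus (itself included) exactly once, so n stimulus types yield n² + 1 trials and n² transitions. One way to construct such a sequence is to linearize an order-2 de Bruijn cycle. The sketch below does this for the six stimulus types named above; it is not the optimized sequence used in the experiment (Aguirre 2007 describes selecting among candidate sequences), and the mapping from symbols to labels is arbitrary.

```python
from collections import Counter

def de_bruijn(k, n):
    """Standard de Bruijn cycle B(k, n) over symbols 0..k-1 (Lyndon-word construction)."""
    a = [0] * (k * n)
    sequence = []

    def db(t, p):
        if t > n:
            if n % p == 0:
                sequence.extend(a[1:p + 1])
        else:
            a[t] = a[t - p]
            db(t + 1, p)
            for j in range(a[t - p] + 1, k):
                a[t] = j
                db(t + 1, t)

    db(1, 1)
    return sequence

def type1_index1_sequence(labels):
    """Linear sequence in which every ordered pair of labels (including repeats) occurs once."""
    cycle = de_bruijn(len(labels), 2)
    linear = cycle + cycle[:1]  # close the cycle: n**2 + 1 items, n**2 transitions
    return [labels[i] for i in linear]

labels = ["Eye1", "Eye2", "Mouth1", "Mouth2", "Target", "Blank"]
seq = type1_index1_sequence(labels)
pair_counts = Counter(zip(seq, seq[1:]))
print(len(seq), len(pair_counts), set(pair_counts.values()))
```

Counting ordered pairs with `Counter(zip(seq, seq[1:]))` is a quick way to verify first-order counterbalancing of any candidate sequence, whatever method produced it.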

In each trial, a stimulus was displayed for 1,350 ms followed by a blank screen for 150 ms. Whereas the internal features of the face were kept constant throughout the experiment to test the coding of eye and mouth stimulus dimensions, the external outline (hair, ears, jaw) of the face was varied across scans to reduce low-level habituation. During each scan, subjects were instructed to respond by button-press to the appearance of the target face; postexperimental analysis verified that all subjects achieved an acceptable level of accuracy (mean d′ = 3.03, SD = 0.89, range = 1.66–6.02). Target trials constituted one sixth of the total trials and were modeled separately, as were trials that followed targets. Subject responses were recorded using a fiber-optic button box (//www.curdes.com/newforp.htm). Null trials, consisting of a blank, gray screen, constituted one sixth of the total trials and had a duration (3,000 ms) twice that of other trials (appendix A of Aguirre 2007).
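Target-detection sensitivity (d′) is the difference between the z-transformed hit and false-alarm rates. A minimal sketch, assuming a simple log-linear correction (adding 0.5 to each count) so that a perfect hit rate or a zero false-alarm rate does not produce an infinite z-score; the text does not state which correction, if any, was applied. The trial counts in the usage line are hypothetical.

```python
from statistics import NormalDist

def d_prime(hits, misses, false_alarms, correct_rejections):
    """Signal-detection sensitivity: z(hit rate) - z(false-alarm rate)."""
    # Assumed log-linear correction: add 0.5 to each count and 1 to each total,
    # which keeps both rates strictly between 0 and 1.
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)

# Hypothetical scan: 50 target trials and 250 non-target trials.
print(round(d_prime(hits=46, misses=4, false_alarms=6, correct_rejections=244), 2))
```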

MRI SCANNING.

Scanning was performed on a 3-T Siemens Trio using an eight-channel surface array coil. Echoplanar BOLD fMRI data were collected at a TR of 3 s, with 3 × 3 × 3 mm isotropic voxels covering the entire brain. Head motion was minimized with foam padding. A high-resolution anatomical image (3D MPRAGE) with 1 × 1 × 1-mm voxels was also acquired for each subject. Visual stimuli were presented using an Epson 8100 3-LCD projector with Buhl long-throw lenses for rear-projection onto Mylar screens, which subjects viewed through a mirror mounted on the head coil.

MRI PREPROCESSING AND ANALYSIS.

BOLD fMRI data were processed using the VoxBo software package (http://www.voxbo.org/). After image reconstruction, the data were sinc interpolated in time to correct for slice acquisition timing, motion corrected, transformed to a standard spatial frame (MNI, using SPM2; http://www.fil.ion.ucl.ac.uk/spm), and spatially smoothed with a three-dimensional (3D) Gaussian kernel of 2 voxels full width at half maximum (FWHM). Within-subject statistical models were created using the modified general linear model (Worsley and Friston 1995). Experimental conditions were modeled as delta functions and convolved with an average hemodynamic response function (Aguirre et al. 1998). Nuisance covariates included effects of scan, global signals, and spikes in the data caused by sudden head movement.
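The regressor construction described above (delta functions convolved with a hemodynamic response, then fit by a linear model) can be sketched as follows. Several pieces are assumptions: a double-gamma HRF stands in for the empirically averaged response of Aguirre et al. (1998), ordinary least squares stands in for the modified general linear model and its treatment of temporal autocorrelation, and every trial is assumed to last 1.5 s (two per 3-s TR), ignoring the longer null trials. Trials coded −1 receive no regressor and therefore act as the implicit reference.

```python
import numpy as np
from math import gamma

def double_gamma_hrf(dt, duration=32.0):
    """Assumed double-gamma HRF (peak near 5 s, undershoot near 15 s), sampled every dt s."""
    t = np.arange(0.0, duration, dt)

    def gamma_pdf(x, shape):  # unit-scale gamma density
        return x ** (shape - 1) * np.exp(-x) / gamma(shape)

    h = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return h / h.sum()

def design_matrix(condition_per_trial, n_conditions, trial_dur=1.5, tr=3.0):
    """Delta-function regressors convolved with the HRF, sampled once per TR, plus an intercept."""
    n_trials = len(condition_per_trial)
    deltas = np.zeros((n_trials, n_conditions))
    for i, c in enumerate(condition_per_trial):
        if c >= 0:  # -1 marks reference trials (e.g., blanks): no regressor
            deltas[i, c] = 1.0
    hrf = double_gamma_hrf(trial_dur)
    conv = np.column_stack(
        [np.convolve(deltas[:, j], hrf)[:n_trials] for j in range(n_conditions)]
    )
    step = int(round(tr / trial_dur))  # trials per acquired volume
    X = conv[::step]
    return np.column_stack([X, np.ones(len(X))])

# Hypothetical scan: 302 trials (151 TRs), three conditions plus reference trials.
rng = np.random.default_rng(1)
conditions = rng.integers(-1, 3, size=302)
X = design_matrix(conditions, n_conditions=3)
true_beta = np.array([0.6, 0.5, 0.2, 1.0])  # last entry is the intercept
y = X @ true_beta + rng.normal(0.0, 0.05, size=len(X))
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
print(X.shape, np.round(beta_hat, 2))
```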

In contrast with traditional paired adaptation methods (Henson 2003), in the continuous carry-over approach, each stimulus serves as both adaptor and test for the other stimuli in the sequence. The relationship of each stimulus to the preceding stimulus forms the basis for the covariates of interest (Aguirre 2007). By design, there were six possible transitions between stimuli: either changes along a single axis (eye or mouth) or changes along both eye and mouth axes. Covariates therefore included six conditions based on stimulus change transitions: two Pure changes along eye or mouth (e.g., E0M50 ↔ E100M50 or E50M0 ↔ E50M100) and four Composite changes along both eye and mouth axes (E0M50 ↔ E50M0, E50M0 ↔ E100M50, E100M50 ↔ E50M100, E50M100 ↔ E0M50). Modeling also included covariates for repeats, target trials, and trials immediately after targets. Blank trials and trials after blank screens served as the reference condition.
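The covariate coding can be made concrete by classifying each trial according to the stimulus that preceded it. The sketch below encodes the four stimuli by the eye and mouth morph percentages implied by their labels and reproduces the count stated above: of the six possible changes, two are Pure and four are Composite. The label names (Repeat, Target, AfterTarget, Reference) are illustrative; in the analysis described here each of the six transitions received its own covariate, which corresponds to keying on the unordered stimulus pair rather than only on its Pure/Composite class.

```python
from collections import Counter
from itertools import combinations

# (eye %, mouth %) morph coordinates read off the stimulus labels.
STIMULI = {"E0M50": (0, 50), "E100M50": (100, 50), "E50M0": (50, 0), "E50M100": (50, 100)}

def transition_class(prev, cur):
    """Pure = change along one feature axis only; Composite = change along both."""
    (e1, m1), (e2, m2) = STIMULI[prev], STIMULI[cur]
    eye_changed, mouth_changed = e1 != e2, m1 != m2
    if not eye_changed and not mouth_changed:
        return "Repeat"
    return "Composite" if eye_changed and mouth_changed else "Pure"

def covariate_for_trial(prev, cur):
    """Label for the current trial given the preceding one (hypothetical label names)."""
    if cur == "Blank" or prev == "Blank":
        return "Reference"  # blanks and trials after blanks form the reference condition
    if cur == "Target":
        return "Target"
    if prev == "Target":
        return "AfterTarget"
    return transition_class(prev, cur)

print(Counter(transition_class(a, b) for a, b in combinations(STIMULI, 2)))
```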

The direct effect of stimulus identity on BOLD signal amplitude was modeled using a covariate for each stimulus identity (E0M50, E100M50, E50M0, E50M100) and the target stimulus. Blank trials and trials after blank screens again served as the reference condition.

Regions of interest (ROIs) were defined individually for each subject using a separate blocked localizer experiment with face, scene, object, and scrambled noise conditions. Left and right regions anatomically corresponding to the FFA were manually selected within the ventral occipitotemporal region using the contrast of faces versus scenes, thresholded at t ≥ 3.5. Across 16 subjects, the average size of the FFA ROIs was 102 ± 37 voxels (3 × 3 × 3 mm each). Area V1 regions of interest were defined anatomically from the topology of each subject's cortical surface (Hinds et al. 2008) using the FreeSurfer software package (http://surfer.nmr.mgh.harvard.edu/). Beta values extracted from these functionally and anatomically defined ROIs were used for statistical analyses.

Experiment 2: testing for independent tuning for misaligned face halves

SUBJECTS.

Sixteen subjects between the ages of 18 and 35 yr with normal or contact-corrected vision were recruited from local universities. Seven of the subjects in experiment 2 also participated in experiment 1 in a separate session several months apart. An additional two subjects were excluded because of excessive head motion and self-reported sleepiness or discomfort. Unlike experiment 1, failure to show a main effect of adaptation was not used as a criterion for subject exclusion, because previous work failed to find adaptation effects for misaligned composite faces (Schiltz and Rossion 2006). An additional motivation is that we wished to retain the ability to test for adaptation to identical stimulus presentation. This was needed to provide a positive control for the expected negative finding of no difference in adaptation between pure and composite stimulus changes for misaligned faces. By including subjects regardless of their “main effect” of adaptation, we would still be able to test for the response to identical stimulus presentation, which would otherwise be biased by the exclusion criteria used in experiment 1.

STIMULI.

Stimuli were identical to those used in experiment 1, with the sole change that top and bottom halves of the faces were misaligned (Fig. 3A). The misaligned stimuli subtended 8.4 × 7.6° of visual angle.

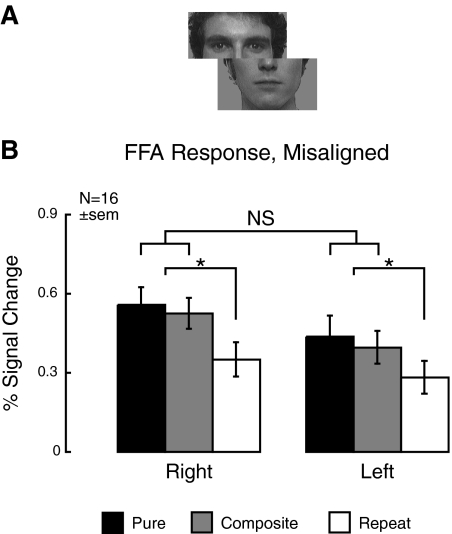

Fig. 3.

Measured neural tuning for experiment 2 (misaligned faces). To assess whether the right FFA can also display significant neural tuning for parts, we replicated the 1st experiment with horizontally misaligned faces, known to disrupt holistic processing. A: sample stimulus. All other experimental procedures and analyses remained the same as experiment 1. B: the right FFA continued to show significant fMRI signal recovery for Pure and Composite stimulus transitions relative to identical repetitions, but there was no significant difference between Pure and Composite transitions. Together, these data suggest that the right FFA contains neural populations tuned to parts and wholes.

PROCEDURE.

Experimental procedure, scanning parameters, and fMRI analysis were identical to experiment 1. Performance on the target detection task during scanning was comparable to experiment 1 (mean d′ = 2.71, SD = 0.44, range = 1.90–3.18).

Experiment 3: a positive test for part-based tuning

SUBJECTS.

Five subjects between the ages of 18 and 35 yr with normal or contact-corrected vision who participated in experiment 1 also participated in this experiment in a separate scanning session conducted 5 days (1 subject) to >1 mo (4 subjects) after experiment 1.

STIMULI.

Stimuli were generated in a similar fashion to those in experiment 1, with the major difference that they were selected to have an asymmetric geometry within the stimulus space (Fig. 4A). Whereas the original stimuli were equidistant from one another along either the eye or mouth axis, the asymmetric stimulus space had a major axis consisting of an 87% change in one feature and a minor axis with a 50% change in the other feature. Subjects were tested with all four versions of this asymmetric stimulus space (k1–k4). As expected from the stimulus space arrangement, a Gabor-filter model of V1 applied to the stimuli yielded larger distances for Composite changes along the major axis than for Pure changes (0.021 vs. 0.017). However, a perceptual discriminability task run separately in six different subjects found no differences between the Pure and Composite transitions in accuracy (Pure = 95.0 ± 1.1%, Composite = 97.3 ± 1%) or reaction time (Pure = 1.16 ± 0.07 s, Composite = 1.16 ± 0.07 s), indicating no large behavioral disparities in the processing of these stimuli. Subjects were never explicitly exposed to the arrangement of the faces within the stimulus space.

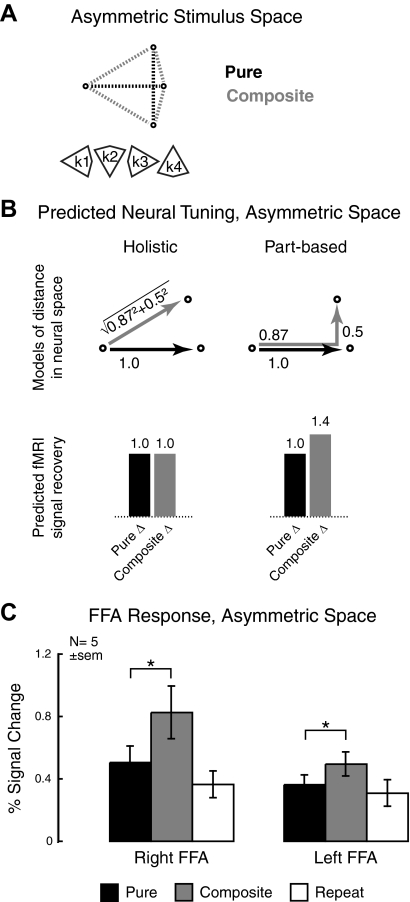

Fig. 4.

Predicted and measured neural tuning for experiment 3 (asymmetric space). This experiment was designed to provide a positive measure of part-based neural tuning as a control for the previous 2 experiments. A: the asymmetric stimulus space has an 87% change in 1 feature along the major axis and a 50% change in the other feature on the minor axis. Because of this directionality, there are 4 possible versions of the asymmetric space (k1–k4); subjects were exposed to all 4 versions. B: the values of the asymmetric stimulus space were chosen to produce opposite predictions to those for the original face stimulus set. Left: if tuning is holistic, responses to Pure and Composite stimulus transitions will be equivalent in this space. Right: because of the asymmetric arrangement of the space, additive part-based tuning would result in greater recovery for Composite stimulus transitions. C: measured fMRI signal recovery for the asymmetric space. Although the left FFA response remains part-based, the right FFA response unexpectedly also displays part-based neural tuning.

PROCEDURE.

Experimental procedure, scanning parameters, and fMRI analysis were similar to experiment 1, with the one difference that modeling of the composite stimulus transition was further subdivided along the long and short arms of the space. By design, only the comparison of the long arm of Composite changes versus the Pure feature change axis was informative as to the nature of the stimulus space representation (Fig. 4B). Therefore for this stimulus space, Composite stimulus transitions only along the long arm were of interest. Behavioral performance on the target detection task was similar to that in the previous experiments (mean d′ = 2.52, SD = 0.58, range = 1.92–3.22).
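The logic of Fig. 4B can be checked with a Minkowski distance, where an exponent of r = 2 gives the Euclidean (holistic) metric and r = 1 the additive city-block (part-based) metric. The sketch assumes that a Pure transition is a full (100%) change in one feature and that the long Composite arm combines an 87% change in one feature with a 50% change in the other; this is one geometry consistent with the predictions stated in the Fig. 4 caption, not a specification taken from the stimulus files. Because √(0.87² + 0.50²) ≈ 1.00, the Euclidean metric predicts equal recovery for Pure and long-arm Composite transitions, whereas the city-block metric predicts about 37% more recovery for the Composite arm, assuming recovery scales linearly with distance.

```python
def minkowski(d_eye, d_mouth, r):
    """Minkowski distance for feature changes given as fractions of a full morph."""
    return (abs(d_eye) ** r + abs(d_mouth) ** r) ** (1.0 / r)

PURE = (1.00, 0.00)      # assumed: full change in a single feature
LONG_ARM = (0.87, 0.50)  # assumed: 87% change in one feature plus 50% in the other

def predicted_distances(r):
    """(Pure, long-arm Composite) distances under exponent r."""
    return minkowski(*PURE, r), minkowski(*LONG_ARM, r)

for label, r in (("holistic, Euclidean (r=2)", 2), ("part-based, city-block (r=1)", 1)):
    pure, composite = predicted_distances(r)
    print(f"{label}: Pure = {pure:.3f}, Composite long arm = {composite:.3f}")
```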

RESULTS

Experiment 1: testing for conjoint tuning for holistically perceived faces

As described above, fMRI adaptation may be used to test whether stimulus changes are represented by the independent activity of separate neural populations or conjoint coding within a single group of neurons (Drucker et al. 2009). In our first experiment, we applied this general principle to the specific case of whole versus part-based coding of faces.

To do so, we created faces varying along two axes of eye and mouth identity (Fig. 1A). These faces were presented to subjects in a continuous, counterbalanced stream during fMRI scanning while subjects monitored for the appearance of an unrelated target face (Fig. 1B). Thus the paradigm allows measurement of automatic, task-unrelated neural tuning to the dimensions of eye and mouth identity. (Note that subjects were never exposed to the original eye and mouth identities or explicitly informed of the stimulus space geometry.)

Of key interest for our analysis were the responses of left and right FFA (Fig. 1C) to Pure stimulus transitions along a single axis (e.g., mouth or eyes only; Fig. 1A, blue line) versus Composite transitions involving change to both eye and mouth identity (Fig. 1A, red line). Depending on whether these stimulus dimensions are coded conjointly or independently, we would predict different patterns of fMRI signal recovery.

Specifically, neural populations tuned to individual face parts or to whole faces will show different degrees of adaptation for different stimulus transitions (analogous to behavioral studies of integral and separable perception; Shepherd 1964). Separate populations of neurons that represent different face parts independently will treat half-step composite changes additively, producing equal recovery from adaptation for Pure and Composite transitions (Fig. 2A, right). In contrast, if neurons are tuned to the entire face (conjoint or holistic coding), half-step composite changes will combine subadditively, resulting in greater recovery from adaptation for Pure changes (Fig. 2A, left). The model in Fig. 2A illustrates the limiting case of perfectly "integral" perception, in which neural dissimilarity follows a Euclidean metric (Shepherd 1964). In practice, a significantly subadditive recovery from adaptation is sufficient to reject the hypothesis of a separable (part-based) neural representation (appendix A of Drucker et al. 2009).
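The predictions in Fig. 2A follow from placing the four stimuli at their morph coordinates and measuring distances under the two metrics. A minimal sketch, assuming recovery from adaptation is proportional to the distance between successive stimuli (a simplification of the monotonic relationship the method relies on): under the city-block metric, Pure and Composite transitions both have length 1 (in units of a full morph), whereas under the Euclidean metric a Composite transition has length √0.5 ≈ 0.71, the subadditive pattern.

```python
from itertools import combinations

# Morph coordinates (eye, mouth) as fractions of a full morph.
STIM = {"E0M50": (0.0, 0.5), "E100M50": (1.0, 0.5), "E50M0": (0.5, 0.0), "E50M100": (0.5, 1.0)}

def distance(p, q, r):
    """Minkowski distance: r=1 city block (independent parts), r=2 Euclidean (conjoint)."""
    return sum(abs(a - b) ** r for a, b in zip(p, q)) ** (1.0 / r)

def mean_predicted_recovery(r):
    """Mean distance for Pure and for Composite transitions, assuming recovery ~ distance."""
    pure, composite = [], []
    for a, b in combinations(STIM, 2):
        p, q = STIM[a], STIM[b]
        is_pure = p[0] == q[0] or p[1] == q[1]  # only one coordinate differs
        (pure if is_pure else composite).append(distance(p, q, r))
    return sum(pure) / len(pure), sum(composite) / len(composite)

for r, label in ((2, "conjoint / Euclidean"), (1, "independent / city block")):
    pure, comp = mean_predicted_recovery(r)
    print(f"{label}: Pure = {pure:.3f}, Composite = {comp:.3f}")
```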

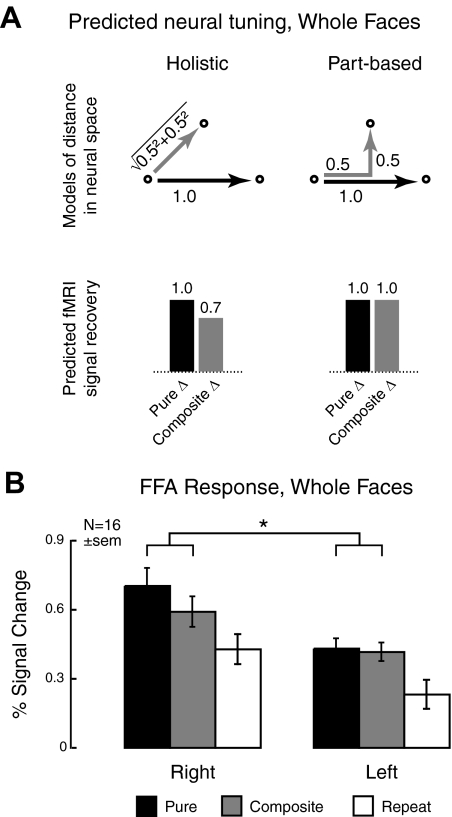

Fig. 2.

Predicted and measured neural tuning for experiment 1 (whole faces). A: because of the manner in which the stimulus spaces are constructed, different response patterns are predicted for Euclidean vs. "city block" metrics of distance, associated, respectively, with holistic and part-based processing. Left: neural tuning to the entire face will result in a subadditive, Euclidean distance between composite faces, with greater fMRI signal recovery for Pure (blue) relative to Composite (red) stimulus transitions. Right: conversely, tuning for independent parts is associated with additive part changes, resulting in equal recovery for Pure and Composite stimulus transitions. B: measured fMRI responses show that, although tuning in the left FFA is part-based, tuning in the right FFA is holistic. Error bars in this and all subsequent graphs reflect SE.

Measured recovery of the fMRI signal in the right and left FFA is shown in Fig. 2B. Comparing Pure and Composite stimulus transitions, we see different patterns of adaptation in the two hemispheres. There is no difference between Pure and Composite changes in the left FFA, whereas the right FFA displays greater recovery from adaptation for Pure stimuli. A two-way repeated-measures ANOVA with hemisphere (right/left) and stimulus transition (pure/composite) as factors confirmed these results, with a significant interaction of hemisphere × stimulus transition [F(1,16) = 4.8, P = 0.045]. Consistent with this result, a post hoc t-test found a significant difference between Pure and Composite transitions in right [t(15) = 2.7, P = 0.015] but not left [t(15) = 0.48, P = 0.6] FFA. Main effects of hemisphere [F(1,16) = 15.9, P = 0.001] and stimulus transition [F(1,16) = 4.8, P = 0.045] were also significant.
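For a 2 × 2 within-subject design such as hemisphere × stimulus transition, the interaction F test is equivalent to a one-sample t test on each subject's difference of differences, (right Pure − right Composite) − (left Pure − left Composite), with F = t² on 1 and n − 1 degrees of freedom for n subjects. The sketch below checks this identity on simulated data; the numbers are random, not the measured responses, and the sum-of-squares formulas are the textbook ones rather than the software used for the reported statistics.

```python
import numpy as np

def interaction_F(Y):
    """2 x 2 repeated-measures interaction F. Y has shape (subjects, hemisphere, transition)."""
    n = Y.shape[0]
    g = Y.mean()
    m_ab = Y.mean(axis=0)        # cell means, (2, 2)
    m_a = Y.mean(axis=(0, 2))    # hemisphere means, (2,)
    m_b = Y.mean(axis=(0, 1))    # transition means, (2,)
    m_s = Y.mean(axis=(1, 2))    # subject means, (n,)
    m_sa = Y.mean(axis=2)        # subject x hemisphere, (n, 2)
    m_sb = Y.mean(axis=1)        # subject x transition, (n, 2)
    ab = m_ab - m_a[:, None] - m_b[None, :] + g
    ss_ab = n * np.sum(ab ** 2)  # interaction sum of squares, 1 df
    resid = (Y - m_sa[:, :, None] - m_sb[:, None, :] - m_ab[None]
             + m_s[:, None, None] + m_a[None, :, None] + m_b[None, None, :] - g)
    ss_err = np.sum(resid ** 2)  # subject x interaction error term, n - 1 df
    return ss_ab / (ss_err / (n - 1))

def interaction_t(Y):
    """One-sample t on the per-subject difference of differences."""
    c = (Y[:, 0, 0] - Y[:, 0, 1]) - (Y[:, 1, 0] - Y[:, 1, 1])
    return c.mean() / (c.std(ddof=1) / np.sqrt(len(c)))

# Simulated data: 16 subjects; axis 1 = (right, left), axis 2 = (Pure, Composite).
rng = np.random.default_rng(2)
Y = rng.normal(0.5, 0.2, size=(16, 2, 2))
print(interaction_F(Y), interaction_t(Y) ** 2)
```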

Thus the pattern of fMRI signal recovery shows different neural tuning in the left and right hemispheres. The left FFA shows no difference in adaptation to Pure versus Composite transitions—the predicted pattern for part-based neural tuning. This result agrees with studies of featural or part-based processing in the left hemisphere (Parkin and Williamson 1987). The right FFA, in contrast, displays greater recovery for Pure stimulus transitions and thus subadditive recovery from adaptation to Composite face part transitions, which is indicative of holistic processing. Again, these results are consistent with prior work, which has particularly implicated this region in holistic coding of faces (Harris and Aguirre 2008; Schiltz and Rossion 2006; Yovel and Kanwisher 2004, 2005).

The recovery from adaptation associated with Pure stimulus transitions was greater for the eye change than for the mouth change [0.65 vs. 0.48% signal change; F(1,15) = 6.7, P = 0.02] in both left and right hemispheres (interaction of effect with hemisphere: F < 1). This is consistent with prior work showing the greater perceptual salience of the eyes (Langton et al. 2000). Importantly, however, this greater salience of the eye dimension does not affect our inferences regarding conjoint versus independent neural tuning. Simulations have shown that unequal perceptual salience cannot produce spurious subadditivity in neural responses (Drucker et al. 2009). This insensitivity to unequal salience of the perceptual axes may be appreciated through a geometric intuition: when one axis is longer than the other, the space defined by the four stimuli is "stretched" from a square to a rhombus, but composite stimulus changes (the sides of the rhombus) still have a Euclidean length under conjoint coding and an additive length under independent coding. Consistent with this, the BOLD signal response to the four different composite transitions was quite similar [combining the left and right FFA, mean percent signal change (±SE): E0M50 ↔ E50M0 = 0.58 (0.06), E50M0 ↔ E100M50 = 0.38 (0.08), E100M50 ↔ E50M100 = 0.55 (0.05), E50M100 ↔ E0M50 = 0.51 (0.06)], with minimal departure from a rhomboid stimulus-space geometry.
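The geometric argument can be checked numerically. A minimal sketch, assuming recovery scales linearly with distance and placing the stimuli at (±a, 0) and (0, ±b), so that unequal eye and mouth salience corresponds to a ≠ b: Pure transitions are the two diagonals and Composite transitions are the four equal sides of the rhombus. Under the additive (L1) metric the mean Pure distance equals the Composite distance for any a and b, while under the Euclidean (L2) metric the Composite distance stays shorter, so a salience imbalance alone should not mimic subadditivity. The ratios looped over are hypothetical; 1.35 is roughly the 0.65/0.48 ratio of eye to mouth recovery reported above.

```python
def pure_vs_composite(a, b, r):
    """Stimuli at (+/-a, 0) and (0, +/-b); returns (mean Pure, Composite) Minkowski distances."""
    def dist(dx, dy):
        return (abs(dx) ** r + abs(dy) ** r) ** (1.0 / r)
    pure_mean = (dist(2 * a, 0) + dist(0, 2 * b)) / 2  # the two diagonals
    composite = dist(a, b)  # every side of the rhombus has this length
    return pure_mean, composite

for ratio in (1.0, 1.35, 2.0):  # hypothetical eye/mouth salience ratios
    for r, label in ((1, "independent, L1"), (2, "conjoint, L2")):
        p, c = pure_vs_composite(ratio, 1.0, r)
        print(f"ratio {ratio:.2f}, {label}: Pure = {p:.3f}, Composite = {c:.3f}")
```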

The measurement of adaptation that was used here can be complicated by differences in the magnitude of the response that are independent of presentation history (direct effects; Aguirre 2007; Drucker et al. 2009). Therefore we tested for differences in the average response to each of the four face stimuli independent of adaptation effects. No differences were found overall or in either hemisphere (all F < 1), confirming this assumption of the test for independent versus conjoint neural coding. There was a highly significant main effect of hemisphere [F(1,16) = 14.6, P = 0.002], reflecting a lower response in the left hemisphere FFA across all facial identities (percent signal change: right, 0.84; left, 0.58).

Given this overall difference in response between the left and right hemispheres (evident in Fig. 2B), the lack of differential Pure versus Composite adaptation in the left FFA could reflect a weaker overall response to the stimuli rather than a qualitative difference in neural tuning. However, this explanation seems unlikely. Comparing Pure changes with identical repetitions (the condition with the strongest adaptation), we see similar ranges of percent signal change for the right (0.7 – 0.43 = 0.28%) and left (0.43 – 0.23 = 0.2%) hemispheres. Thus, although the response is generally weaker in the left FFA than in the right, this disparity does not seem to account for the differential pattern of adaptation reported here.

Is this pattern of differential activation specific to the FFA? Because we ultimately wish to make inferences about the neural basis of face perception, it is important to ensure that our results are regionally specific and are not replicated in areas without tuning to faces. To examine this question, we also measured the adaptation effect in positively activated voxels within V1, corresponding to the foveal retinotopic representation. Across the three adaptation conditions and two hemispheres, we found no significant effects (all F < 1). Comparing the direct effects of identity in V1 likewise showed no significant effects (all F < 1). Based on these data, it is unlikely that the qualitative differences in neural tuning measured in the FFA reflect a general pattern of adaptation shared with non-face-selective visual areas.

This first experiment therefore confirms that measuring the metric of neural adaptation to combined and isolated stimulus changes can replicate past findings of part-based and holistic processing in the left and right fusiform face areas, respectively. In two additional experiments, we used this method to probe whether the right FFA represents both parts and wholes, as suggested by our previous work (Harris and Aguirre 2008). Our second experiment examines the effect of misaligning the top and bottom halves of the face, a manipulation previously shown to reduce holistic processing (Young et al. 1987).

Experiment 2: testing for independent tuning for misaligned face halves

In this experiment, we tested whether the right FFA represents faces in an exclusively holistic manner. Although a number of studies have found holistic processing in this area (Schiltz and Rossion 2006; Yovel and Kanwisher 2005), recent data suggest that it may also carry information about face parts (Harris and Aguirre 2008). Therefore we examined how the neural tuning of the right FFA is affected by the disruption of holistic processing.

To do so, we used a manipulation previously shown to reduce holistic processing: misalignment of the top and bottom halves of the face (Fig. 3A). Previously used to show reduced interference in the composite face effect (Young et al. 1987), misalignment of face halves has also been used in an fMRI adaptation paradigm by Schiltz and Rossion (2006). Their failure to find significant recovery from adaptation for misaligned faces within the right FFA has been taken as support for the role of this region in holistic processing of faces.

In this experiment, we used misaligned faces in the same continuous carry-over design used in experiment 1. Given the differential predictions for fMRI signal recovery following Pure versus Composite changes (Fig. 2A), we can ask whether misalignment affects the neural tuning of the right FFA. Specifically, if the right FFA is a dedicated holistic processor, we would expect no significant recovery from adaptation for either Pure or Composite stimulus transitions, in line with prior results (Schiltz and Rossion 2006). If, on the other hand, the right FFA represents both parts and wholes, we would predict a shift in neural tuning from holistic to part-based, with equal recovery from adaptation for Pure and Composite stimulus transitions.

In fact, subjects showed clear recovery from adaptation for both Pure and Composite stimulus transitions (Fig. 3B). A repeated-measures ANOVA with hemisphere (right/left) and stimulus transition (Pure/Composite/Repeat) as factors showed a significant main effect of stimulus transition [F(2,15) = 12.5, P = 0.0001]. Simple effects analysis found significant differences for both Pure versus Repeat [F(1,15) = 17.1, P = 0.001] and Composite versus Repeat [F(1,15) = 20.6, P = 0.0004]. In contrast, a two-way ANOVA with hemisphere and Pure/Composite stimulus transition as factors showed neither a significant effect of stimulus transition nor a significant interaction of stimulus transition and hemisphere (both F < 1). The absence of a difference in response between the Pure and Composite stimulus transitions supports part-based tuning (i.e., neural populations independently tuned to eye appearance and mouth appearance).

Within the right hemisphere, this pattern of results differs from that seen for the aligned faces in experiment 1. Indeed, an ANOVA with hemisphere and stimulus transition as within-subject factors and face alignment (aligned/misaligned) as a between-subjects factor showed a significant three-way interaction of hemisphere, stimulus transition, and alignment [F(1,23) = 4.2, P = 0.05; df adjusted for 7 subjects common to both experiments]. These data therefore suggest either that the right FFA contains both independently and conjointly tuned neural populations for the stimulus dimensions of eye and mouth identity, or that neural populations within the right FFA can dynamically alter their tuning to encompass individual face parts or whole faces.

The data from experiment 2 also strengthen the finding of holistic processing in the right FFA in experiment 1 by eliminating an alternative explanation based on low-level image differences. The physical (pixel-wise) differences between the stimulus transitions were identical in the two experiments, so the switch from conjoint to independent neural tuning between experiments cannot be attributed to them. Instead, this switch accompanied the stimulus manipulation (splitting the face) and is therefore plausibly related to a switch from holistic to part-based processing.
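Why misalignment leaves pixel-wise differences unchanged can be illustrated with a toy example. The sketch assumes that misalignment is a pure horizontal shift of the bottom half, applied identically to both faces of a transition, and that pixel-wise difference is a summed absolute difference; the exact measure used in the study is not specified here, and the images and helper names (`misalign`, `summed_abs_diff`) are hypothetical.

```python
def misalign(image, shift, pad=0):
    """Shift the bottom half of a face image rightward by `shift` columns.

    Every row is widened by `shift` columns of `pad`, so no pixels are cropped.
    `image` is a list of equal-length rows of grayscale values.
    """
    half = len(image) // 2
    out = []
    for r, row in enumerate(image):
        if r < half:
            out.append(list(row) + [pad] * shift)  # top half stays in place
        else:
            out.append([pad] * shift + list(row))  # bottom half moves right
    return out


def summed_abs_diff(a, b):
    """Pixel-wise difference between two same-sized images (sum of |a - b|)."""
    return sum(abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


# Two tiny hypothetical "faces" (4 rows x 3 columns) differing in a few pixels.
face_a = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [1, 1, 1]]
face_b = [[1, 2, 4], [4, 6, 6], [7, 8, 8], [0, 1, 1]]

aligned = summed_abs_diff(face_a, face_b)
misaligned = summed_abs_diff(misalign(face_a, 2), misalign(face_b, 2))
print(aligned, misaligned)
```

Because the padded columns are identical in both images, they add nothing to the sum; a per-pixel mean, by contrast, would also depend on the size of the padded canvas.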

A potential limitation to this inference is the reliance, in both experiments 1 and 2, on a null result to support part-based neural tuning. The three-way interaction of hemisphere, stimulus transition, and alignment does suggest that this result is meaningful. Additionally, the presence of recovery from adaptation compared with repeat trials suggests that sufficient sensitivity is present. Nonetheless, we wished to show part-based neural tuning via a positive, rather than negative, finding. We addressed this issue in a third experiment, in which the geometry of the stimulus space was altered such that a positive effect would be predicted for part-based neural tuning in the FFA.

Experiment 3: a positive test for part-based tuning

In this experiment, we sought to extend the previous findings by showing part-based neural tuning via a positive, rather than null, result. To do so, we used an asymmetric version of the stimulus space developed in experiment 1 (Fig. 4A). Whereas the original stimulus set varied by 50% along the eye and/or mouth dimensions that comprised the artificial stimulus space, the asymmetric stimuli instead varied by 87% along one axis (major axis) and 50% on the other (minor axis). Subjects saw four different asymmetric stimulus sets (k1–k4), each with the major axis shifted in a different direction. As in experiments 1 and 2, subjects were never explicitly informed of the geometric arrangement of the stimuli in the space.

Why perform this manipulation of stimulus space symmetry? As shown in Fig. 4B, a key feature of the asymmetric stimulus set is that its predictions differ from those of experiment 1. The choice of an 87% difference along the major axis implies that holistic processing would produce equal fMRI signal recovery for Pure and Composite stimulus transitions [Euclidean distance: √(0.87² + 0.5²) ≈ 1; Fig. 4B, left]. In contrast, if neural tuning is part-based, the additive responses to the eye and mouth dimensions would produce a greater fMRI signal for Composite than for Pure stimulus transitions (0.87 + 0.5 ≈ 1.4; Fig. 4B, right). This asymmetric stimulus space thus allows us to potentially reject the hypothesis of holistic processing on the basis of a positive result.
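The predictions for the asymmetric space follow from the same two distance rules. This sketch assumes, as the comparison in the text implies, that a Pure transition spans one unit along a single axis, while a Composite transition changes by 0.87 along the major axis and 0.5 along the minor axis; the helper name `predicted_distance` is hypothetical.

```python
import math


def predicted_distance(d_major, d_minor, metric):
    """Predicted neural distance for a transition under one coding scheme."""
    if metric == "euclidean":  # conjoint (holistic) coding
        return math.hypot(d_major, d_minor)
    if metric == "additive":   # independent (part-based) coding
        return d_major + d_minor
    raise ValueError(f"unknown metric: {metric}")


PURE = (1.0, 0.0)        # assumption: one full unit along a single axis
COMPOSITE = (0.87, 0.5)  # major-axis and minor-axis changes from the text

for metric in ("euclidean", "additive"):
    pure = predicted_distance(*PURE, metric)
    comp = predicted_distance(*COMPOSITE, metric)
    print(f"{metric}: Pure={pure:.2f}, Composite={comp:.2f}")
```

Running it prints a Composite distance of 1.00 under the Euclidean rule and 1.37 under the additive rule (the values rounded to 1 and 1.4 in the text), while Pure stays at 1.00 under both, so only part-based coding predicts Composite > Pure.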

Results from five subjects who had participated in experiment 1 are shown in Fig. 4C. The left FFA shows significantly greater recovery for Composite relative to Pure stimulus transitions [t(4) = 3.3, P = 0.03, 2-tailed], again indicating that this region represents faces in a part-based manner. Surprisingly, however, the right FFA also shows a larger response to Composite versus Pure stimulus transitions [t(4) = 4.5, P = 0.01, 2-tailed], consistent with part-based neural tuning. This pattern differs from that seen in the same subjects in experiment 1, where the right FFA had greater signal recovery for Pure relative to Composite stimulus transitions, in line with holistic representation. A repeated-measures ANOVA confirmed that this interaction between experiment and stimulus transition was significant [F(1,4) = 24.5, P = 0.008].

Some caution is necessary in interpreting this result, given both the small number of subjects (n = 5, compared with n = 16 in the previous 2 experiments) and the prior participation of these subjects in experiment 1. However, the finding of highly significant main and interaction effects, even with only 4 df, supports the idea that these results reflect meaningful changes in neural tuning rather than a false positive. Why are the results of experiment 3 so significant compared with those of the preceding experiments? One possibility is that the larger differences between stimuli relative to experiment 1 (pixel-wise difference for Composite changes: 0.021 vs. 0.016) produced a larger effect size and, consequently, significant results with a smaller sample.

A second concern, that participation in experiment 1 may have biased the results of experiment 3, is also unlikely to fully explain these results. In experiment 1, the right FFA response in the same five subjects was overwhelmingly holistic, with significantly greater recovery for Pure relative to Composite transitions [t(4) = 5.2, P = 0.007]. Findings such as the whole-over-part superiority effect (Tanaka and Farah 1993) show that prior learning of a holistic context hinders part-based processing. Hence it is unclear why one would predict a bias in the opposite direction, toward part-based processing, in experiment 3. Likewise, the subjects' greater familiarity with the stimulus space does not explain the direction of the effect, because familiarity has been shown to increase holistic processing (Buttle and Raymond 2003). These results are therefore unlikely to reflect bias or familiarization within subjects. Indeed, by reducing the variance of effect estimates, the use of repeated measures within subjects increases the statistical power of our experiment.

Thus, using an asymmetric stimulus space, we obtained a positive result consistent with part-based, or independent, neural tuning. In the left FFA, this finding agrees both with the previous two experiments, each of which showed left FFA responses in line with predictions for part-based processing, and with previous work implicating this region in part-based representation of faces (Yovel and Kanwisher 2004).

However, we found a similar pattern in the right FFA, at odds both with existing studies (Schiltz and Rossion 2006; Yovel and Kanwisher 2005) and with the pattern of fMRI signal recovery seen in the same subjects in experiment 1. Combined with the results of experiment 2, these data instead support the idea that the right FFA contains neural populations tuned both to face parts and to whole faces. When holistic processing is reduced, as for unfamiliar faces (Harris and Aguirre 2008), or disrupted, for example by misalignment (experiment 2), part-based representations may contribute more heavily to encoding and recognition (Cabeza and Kato 2000; Lobmaier and Mast 2007).

DISCUSSION

Despite the influence of the holistic hypothesis on theories of face perception, there is some evidence that face parts may also be represented independently, or in nonholistic linear combinations, within face-selective neural populations. Most of this evidence comes from intracranial recordings in monkeys (Freiwald et al. 2009; Perrett et al. 1982) and humans (McCarthy et al. 1999), but we have recently found compatible results with fMRI (Harris and Aguirre 2008). In particular, although the right FFA contributes heavily to holistic processing, this region nonetheless seems to contain part-based representations as well.

In these experiments, we further investigated this question using a continuous carry-over design (Aguirre 2007), which provides an efficient means of characterizing neural tuning as independent or conjoint (Drucker et al. 2009). This distinction, often applied in the realm of object recognition, maps readily onto the part-based/holistic dichotomy frequently discussed in the face perception literature.

We examined fMRI signal recovery for a set of faces varying along constructed dimensions of eye and mouth appearance (Fig. 1A). The neural distance metrics for part-based and holistic processing differ: independent part representations predict additive responses, whereas conjoint coding predicts subadditive (e.g., Euclidean) responses. Consequently, we can predict different patterns of neural recovery depending on whether the FFA contains part-based or holistically tuned neural populations.
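Additive and Euclidean distances are two members of the Minkowski family, d = (Σ|Δᵢ|ᵖ)^(1/p), with p = 1 (additive) and p = 2 (Euclidean); any p > 1 is subadditive. The sketch below uses this family to show the Composite/Pure contrast for the original diamond-shaped space, assuming for illustration that recovery from adaptation is proportional to distance. The intermediate exponent p = 1.5 and the helper names are hypothetical; the text contrasts only the additive and Euclidean cases.

```python
def minkowski(deltas, p):
    """Minkowski distance: p = 1 is additive (city-block); p = 2 is Euclidean."""
    return sum(abs(d) ** p for d in deltas) ** (1.0 / p)


PURE = (1.0, 0.0)       # e.g., E0M50 -> E100M50: 100% eye change, mouth unchanged
COMPOSITE = (0.5, 0.5)  # e.g., E0M50 -> E50M0: 50% change in both eyes and mouth


def composite_to_pure_ratio(p):
    """Predicted Composite/Pure recovery ratio if recovery tracks distance."""
    return minkowski(COMPOSITE, p) / minkowski(PURE, p)


for p in (1, 1.5, 2):
    print(f"p = {p}: Composite/Pure = {composite_to_pure_ratio(p):.3f}")
```

The ratio is 1.000 for additive coding and falls to 0.794 at p = 1.5 and 0.707 for Euclidean coding, which is why Pure > Composite recovery is the signature of conjoint (holistic) tuning in this space, while equal recovery indicates part-based tuning.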

Using this method with our set of faces varying in eye and mouth identity, we found that, whereas the left FFA seems to represent the eye and mouth dimensions of the stimuli independently, the two axes are processed conjointly within the right FFA. Thus the right FFA seems to represent faces holistically, in keeping with previous reports (Schiltz and Rossion 2006; Yovel and Kanwisher 2004, 2005) and our own prior work (Harris and Aguirre 2008).

However, in our previous study, we found a similar right FFA response to face stimuli whether they could be processed holistically or only in terms of their parts (Harris and Aguirre 2008), suggesting that this region represents parts and wholes. To explore this question further, we used the same procedure as in the first experiment, but disrupted subjects' holistic processing of the stimuli by misaligning the top and bottom halves of the face (Fig. 3A). Another study using this manipulation reported no significant recovery from adaptation for misaligned faces in the right FFA (Schiltz and Rossion 2006), leading the authors to conclude that this region processes faces holistically.

Our experimental results instead show significant recovery from adaptation for both Pure and Composite stimulus transitions (Fig. 3B). Furthermore, differences in signal recovery between these transitions were not significant, consistent with part-based neural tuning. Thus these data lend additional support to the idea that the right FFA contains neural populations tuned both to whole faces and their individual parts.

However, in this design, this inference depends critically on a negative result: the finding of no statistical difference between the Pure and Composite stimulus transitions. We devised another stimulus space for which the predicted pattern of adaptation would be reversed, with part-based neural tuning producing significantly greater fMRI signal recovery for the Composite versus Pure stimulus transition. This prediction was confirmed in both the left FFA, which had shown part-based processing in the preceding two experiments, and also, somewhat surprisingly, in the right.

This finding is all the more remarkable given that subjects were never explicitly informed of the eye and mouth identity dimensions or their arrangement in the stimulus space. Indeed, during fMRI scanning, subjects performed an unrelated target detection task while viewing these faces. Nonetheless, within the same subjects, the right FFA displayed holistic neural tuning in the first experiment but part-based coding for the asymmetric stimulus space.

Together with previous work on familiarity and expertise, which has shown shifts from part-based to holistic processing (Baker et al. 2002; Buttle and Raymond 2003; Gauthier et al. 2003; Harris and Aguirre 2008), these data underscore the flexibility of neural representation. Although the exact mechanism is unclear, the switch from holistic to part-based encoding for these stimuli may reflect the more general ability of the visual system to extract the central tendency of stimulus distributions over time (Leopold et al. 2001; Posner and Keele 1968; Webster et al. 2004) and space (Clifford et al. 2007; Haberman and Whitney 2009). In this case, asymmetric distribution of stimuli in terms of eye or mouth identity may serve as a cue to bias processing toward individual feature representations. The occurrence of such effects in the absence of explicit instructions, task demands, or, indeed, self-reported differentiation of the stimulus space geometry suggests that such effects need not require explicit attention.

Rather than reflecting a shift between cortical sites, this modulation occurred within a single region of the right fusiform gyrus. While supporting the special involvement of this area in holistic processing of faces (Schiltz and Rossion 2006; Yovel and Kanwisher 2005), these results argue against the idea of exclusive and invariant neural representation of face wholes. Instead, different neural representations seem to be flexibly engaged within this one cortical region.

Our findings join recent electrophysiological data, which suggest that face-selective cells in macaque IT cortex code information about both face parts and their combination into whole faces (Freiwald et al. 2009; Sugase et al. 1999). Although these different representations could occur across different neural populations, it is also possible that this information is carried within single neurons, perhaps via dynamic changes in receptive field size (Wörgötter and Eysel 2000) or varying signals at different latencies. Supporting the latter idea, Sugase et al. (1999) reported that single neurons within the inferotemporal (IT) cortex are capable of conveying different scales of facial information at different latencies, with global information about category (face vs. shape) preceding fine distinctions based on identity and expression.

Alternatively, Freiwald et al. (2009) report that individual neurons are tuned to the axes of a parametric face space rather than to holistic exemplars; holistic processing instead affects neural firing by increasing the gain of these feature tuning curves. Along with the work of Sugase et al. (1999), these findings are clearly relevant to our own results, suggesting means by which the neural tuning to parts and wholes observed in the right FFA may be related. However, because some of the most prominent feature axes in Freiwald et al. (2009) correspond to configural or layout cues (e.g., aspect ratio, inter-eye distance), which were not manipulated in this study, we must remain cautious in drawing direct parallels between these results.
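To make the notion of gain modulation concrete, the sketch below assumes a simple ramp-shaped tuning curve along one feature axis; the shape, baseline, slope, and gain value of 1.5 are all hypothetical choices for illustration, not values from Freiwald et al. (2009). Multiplying the curve by a gain raises the response wherever it is nonzero but leaves the preferred end of the axis and the shape of the curve unchanged, which is what distinguishes a gain change from a change in tuning.

```python
def ramp_tuning(x, baseline=0.5, slope=1.0):
    """Hypothetical ramp-shaped tuning to one feature axis, x in [-1, 1].

    Firing rate rises linearly toward one extreme of the axis and is
    clipped at zero, since rates cannot be negative.
    """
    return max(0.0, baseline + slope * x)


def response(x, whole_face, gain=1.5):
    """Whole-face context multiplies the tuning curve by a (hypothetical) gain."""
    rate = ramp_tuning(x)
    return gain * rate if whole_face else rate


axis = [-1.0, -0.5, 0.0, 0.5, 1.0]
part_alone = [response(x, whole_face=False) for x in axis]
in_whole_face = [response(x, whole_face=True) for x in axis]

for x, a, b in zip(axis, part_alone, in_whole_face):
    print(f"x = {x:+.1f}: part alone {a:.2f}, in whole face {b:.2f}")
```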

A related limitation of our study is that we sampled only a small portion of “face space.” The choice of stimuli was constrained both by the experimental question and by the limits of subjects' sensitivity. For the natural stimulus dimensions used here, pilot data showed that subjects had difficulty discriminating smaller changes in face parts, whereas larger changes at the endpoints are limited by the anatomical constraints of realistic faces. Future experiments could work around these issues by using cartoon-like feature dimensions that allow greater variation between stimuli and along stimulus dimensions, as in Freiwald et al. (2009) or Loffler et al. (2005).

In our previous studies of conjoint and independent neural tuning with fMRI adaptation, a stimulus space composed of 16 separate samples was used (Drucker et al. 2009). A consequence of using a reduced number of samples from the space is that the test for conjoint neural tuning may potentially be biased by nonlinearities in the transform of neural activity to the BOLD fMRI signal (Drucker et al. 2009). This concern is minimized in this study, however, because the measured neural tuning was found to vary in the predicted manner in the same cortical region with a change in the stimulus properties (the right FFA in experiments 1 and 2) and between homologous cortical regions that differ in hemisphere (the right and left FFA in experiment 1). Because neurovascular coupling would not be expected to differ under these circumstances, a failure of linearity could not explain these results. Additional assumptions of the model include a monotonic relationship between changes in stimulus similarity and recovery from adaptation (supported by previous fMRI studies of face similarity; Jiang et al. 2006; Rotshtein et al. 2005) and near-equivalence of the amplitude of neural response to the different stimuli from the space (confirmed in our data).

In conclusion, we used fMRI to examine neural tuning for face parts and wholes. Consistent with previous work implicating the left hemisphere in part-based processing (Parkin and Williamson 1987), the left FFA response reflected part-based neural tuning across all experiments. In the right FFA, by contrast, holistic tuning was seen for whole faces, but this region also showed part-based coding when holistic processing was disrupted by misalignment or reduced by using an asymmetric stimulus space. The change in neural tuning measured with fMRI for the asymmetric stimulus space suggests that such tuning is markedly flexible and may be dynamically modulated by changing sensory information over time.

GRANTS

This research was supported by a Burroughs-Wellcome Career Development Award and National Institute of Mental Health Grant K08 MH-72926-01.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

REFERENCES

- Aguirre GK. Continuous carry-over designs for fMRI. Neuroimage 35: 1480–1494, 2007.

- Aguirre GK, Zarahn E, D'Esposito M. The variability of human, BOLD hemodynamic responses. Neuroimage 8: 360–369, 1998.

- Baker CI, Behrmann M, Olson CR. Impact of learning on representation of parts and wholes in monkey inferotemporal cortex. Nat Neurosci 5: 1210–1216, 2002.

- Bartlett JC, Searcy J. Inversion and configuration of faces. Cogn Psychol 25: 281–316, 1993.

- Bartlett JC, Searcy JH, Abdi H. What are the routes to face recognition? In: Perception of Faces, Objects, and Scenes: Analytic and Holistic Processes (Advances in Visual Cognition), edited by Peterson MA, Rhodes G. Oxford, UK: Oxford, 2003, 29–34.

- Buttle H, Raymond JE. High familiarity enhances visual change detection for face stimuli. Percept Psychophys 65: 1296–1306, 2003.

- Cabeza R, Kato T. Features are also important: contributions of featural and configural processing to face recognition. Psychol Sci 11: 429–433, 2000.

- Clifford CWG, Webster MA, Stanley GB, Stocker AA, Kohn A, Sharpee TO, Schwarz O. Visual adaptation: neural, psychological and computational aspects. Vision Res 47: 3125–3131, 2007.

- Drucker DM, Kerr WT, Aguirre GK. Distinguishing conjoint and independent neural tuning for stimulus features with fMRI adaptation. J Neurophysiol 101: 3310–3324, 2009.

- Engel SA. Adaptation of oriented and unoriented color-selective neurons in human visual areas. Neuron 45: 613–623, 2005.

- Farah MJ, Wilson KD, Drain M, Tanaka JN. What is “special” about face perception? Psychol Rev 105: 482–498, 1998.

- Freiwald WA, Tsao DY, Livingstone MS. A face feature space in the macaque temporal lobe. Nat Neurosci 12: 1187–1196, 2009.

- Garner WR. The Processing of Information and Structure. Oxford, UK: Lawrence Erlbaum, 1974.

- Gauthier I, Curran T, Curby KM, Collins D. Perceptual interference supports a non-modular account of face processing. Nat Neurosci 6: 428–432, 2003.

- Haberman J, Whitney D. Seeing the mean: ensemble coding for sets of faces. J Exp Psychol Hum Percept Perform 35: 718–734, 2009.

- Harris A, Aguirre GK. The representation of parts and wholes in face-selective cortex. J Cogn Neurosci 20: 863–878, 2008.

- Henson RNA. Neuroimaging studies of priming. Prog Neurobiol 70: 53–81, 2003.

- Hinds OP, Rajendran N, Polimeni JR, Augustinack JC, Wiggins G, Wald LL, Diana Rosas H, Potthast A, Schwartz EL, Fischl B. Accurate prediction of V1 location from cortical folds in a surface coordinate system. Neuroimage 39: 1585–1599, 2008.

- Jiang X, Rosen E, Zeffiro T, VanMeter J, Blanz V, Riesenhuber M. Evaluation of a shape-based model of human face discrimination using fMRI and behavioral techniques. Neuron 50: 159–172, 2006.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci 17: 4302–4311, 1997.

- Langton SRH, Watt RJ, Bruce V. Do the eyes have it? Cues to the direction of social attention. Trends Cogn Sci 4: 50–59, 2000.

- Leopold DA, O'Toole AJ, Vetter T, Blanz V. Prototype-referenced shape encoding revealed by high-level aftereffects. Nat Neurosci 4: 89–94, 2001.

- Lobmaier JS, Mast FW. Perception of novel faces: the parts have it! Perception 36: 1660–1673, 2007.

- Loffler G, Yourganov G, Wilkinson F, Wilson HR. fMRI evidence for the neural representation of faces. Nat Neurosci 8: 1386–1390, 2005.

- Maurer D, Le Grand R, Mondloch CJ. The many faces of configural processing. Trends Cogn Sci 6: 255–260, 2002.

- McCarthy G, Puce A, Belger A, Allison T. Electrophysiological studies of human face perception. II. Response properties of face-specific potentials generated in occipitotemporal cortex. Cereb Cortex 9: 431–444, 1999.

- McCarthy G, Puce A, Gore JC, Allison T. Face-specific processing in the human fusiform gyrus. J Cogn Neurosci 9: 605–610, 1997.

- McKone E, Yovel G. Why does picture-plane inversion sometimes dissociate perception of features and spacing in faces, and sometimes not? Toward a new theory of holistic processing. Psychon Bull Rev 16: 778–797, 2009.

- Ng M, Ciaramitaro VM, Anstis S, Boynton GM, Fine I. Selectivity for the configural cues that identify the gender, ethnicity, and identity of faces in human cortex. Proc Natl Acad Sci USA 103: 19552–19557, 2006.

- Parkin A, Williamson P. Cerebral lateralisation at different stages of facial processing. Cortex 23: 99–110, 1987.

- Perrett DI, Rolls ET, Caan W. Visual neurons responsive to faces in the monkey temporal cortex. Exp Brain Res 47: 329–342, 1982.

- Posner MI, Keele SW. On the genesis of abstract ideas. J Exp Psychol 77: 353–363, 1968.

- Renninger LW, Malik J. When is scene identification just texture recognition? Vision Res 44: 2301–2311, 2004.

- Rossion B. Picture-plane inversion leads to qualitative changes of face perception. Acta Psychol (Amst) 128: 274–289, 2008.

- Rotshtein P, Henson RNA, Treves A, Driver J, Dolan RJ. Morphing Marilyn into Maggie dissociates physical and identity face representations in the brain. Nat Neurosci 8: 107–113, 2005.

- Schiltz C, Rossion B. Faces are represented holistically in the human occipito-temporal cortex. Neuroimage 32: 1385–1394, 2006.

- Searcy JH, Bartlett JC. Inversion and processing of component and spatial-relational information in faces. J Exp Psychol Hum Percept Perform 22: 904–915, 1996.

- Shepard RN. Attention and the metric structure of the stimulus space. J Math Psychol 1: 54–87, 1964.

- Sugase Y, Yamane S, Ueno S, Kawano K. Global and fine information coded by single neurons in the temporal visual cortex. Nature 400: 869–873, 1999.

- Tanaka JW, Farah MJ. Parts and wholes in face recognition. Q J Exp Psychol 46: 225–245, 1993.

- Tottenham N, Tanaka J, Leon AC, McCarry T, Nurse M, Hare TA, Marcus DJ, Westerlund A, Casey BJ, Nelson CA. The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Res 168: 242–249, 2009.

- Webster MA, Kaping D, Mizokami Y, Duhamel P. Adaptation to natural facial categories. Nature 428: 557–561, 2004.

- Wörgötter F, Eysel UT. Context, state and the receptive fields of striatal cortical cells. Trends Neurosci 23: 497–503, 2000.

- Worsley KJ, Friston KJ. Analysis of fMRI time-series revisited—again. Neuroimage 2: 173–181, 1995.

- Yin RK. Looking at upside-down faces. J Exp Psychol 81: 141–145, 1969.

- Young AW, Hay DC, McWeeny KH, Flude BM, Ellis AW. Matching familiar and unfamiliar faces on internal and external features. Perception 14: 737–746, 1985.

- Young AW, Hellawell D, Hay DC. Configurational information in face perception. Perception 16: 747–759, 1987.

- Yovel G, Kanwisher N. Face perception: domain specific, not process specific. Neuron 44: 889–898, 2004.

- Yovel G, Kanwisher N. The neural basis of the behavioral face-inversion effect. Curr Biol 15: 2256–2262, 2005.