Abstract

There is no shortage of evidence to suggest that faces constitute a special category in human perception. Surprisingly little consensus exists, however, regarding the interpretation of these results. The question persists: what makes faces special? We address this issue via one hallmark of face perception – its striking sensitivity to low-level image format – and present evidence in favor of an expertise account of the specialization of face perception. In accordance with earlier work (Biederman & Kalocsai, 1997), we find that manipulating one image into two versions that are complementary in spatial frequency (SF) and orientation information disproportionately impairs face matching relative to object matching. Here, we demonstrate that this characteristic of face processing is also found for cars, with its magnitude predicted by the observers’ level of expertise with cars. We argue that the bar needs to be raised for what constitutes proper evidence that face perception is special in a manner that is not related to our expertise in this domain.

Keywords: perceptual expertise, spatial frequency, face perception

Introduction

Face perception is argued to be “special” in part on the basis of behavioral effects that distinguish it from the perception of objects. For instance, face perception suffers more than object perception when images are turned upside-down (the inversion effect; Yin, 1969), and selective attention to half of a face is harder when the face halves are aligned than when they are misaligned, a composite effect (Carey & Diamond, 1994; Young et al., 1987) that is not observed for non-face objects. Such phenomena are generally not disputed and are often taken to indicate that faces are processed in a more holistic manner than non-face objects, relying less on part decomposition. The interpretation of these findings, however, is a source of contention. One account invokes a process of specialization due to experience individuating faces (Carey, Diamond & Woods, 1980; Curby & Gauthier, 2007; Diamond & Carey, 1986; Gauthier, Curran, Curby & Collins, 2003; Gauthier & Tarr, 1997; 2002; Rossion, Kung & Tarr, 2004). According to this theory, expertise with individuating objects from non-face categories would result in similar behavioral hallmarks. A competing account suggests that these effects reflect processes that are unique to face perception, due either to innate constraints or to preferential exposure early in life (Kanwisher, 2000; Kanwisher, McDermott & Chun, 1997; McKone, Kanwisher & Duchaine, 2007). Resolving this debate is important for the study of perception and memory. If face perception is truly unique, it is reasonable to seek qualitatively different models to account for face and object recognition. In contrast, if hallmarks of face perception arise as a function of our expertise with objects, then more effort should be devoted to the design of computational models that can account for the continuum from novice to expert perception.

Why is there yet no resolution to this question? Although there are scores of studies contrasting face perception with novice object perception and highlighting the special character of face processing (e.g., Biederman, 1987; Tanaka & Farah, 1993; Tanaka & Sengco, 1997; Yin, 1969; Young, Hellawell & Hay, 1987), there are fewer studies directly addressing the role of perceptual expertise. Most of this latter set conclude that face-like behaviors can be obtained with both real-world and lab-trained objects of expertise (e.g., Diamond & Carey, 1986; Gauthier et al., 2003; Gauthier, Skudlarski, Gore & Anderson, 2000a; Gauthier & Tarr, 2002; Rossion et al., 2004; Tanaka & Curran, 2001; Xu, 2005), while a few report no effect of expertise (e.g., Nederhouser, Yue, Mangini & Biederman, 2007; Robbins & McKone, 2007; Yue, Tjan & Biederman, 2006). Nonetheless, a recent review argued that many of the published expertise effects are small or inconclusive and that the holistic processing characteristic of face perception is not the result of expertise (McKone et al., 2007). Several conclusions drawn in this review have since been challenged empirically. For example, a study contrasting performance for faces and cars in a short-term memory paradigm revealed a robust inversion effect for cars, comparable to that observed for faces, but only in car experts (Curby, Glazek & Gauthier, 2009). Another study (Wong, Palmeri & Gauthier, 2009) revealed that recently acquired expertise with novel objects results in a composite effect. Both inversion and composite effects have been used as measures of holistic processing and/or the related construct of configural processing (Carey & Diamond, 1994; Farah et al., 1995; Tanaka & Farah, 1993; Yin, 1969). These results therefore reinforce prior claims that holistic and configural processing are domain-general strategies adopted by perceptual experts.

It may be reasonable to assume that other effects indexing holistic and configural processing will likewise be explained by expertise. This matters, so that we do not unnecessarily re-open the debate every time the same processes are operationalized in a new task. However, there could still be measures that capture other aspects of face perception, even ones related to configural and/or holistic processing, that are truly independent of expertise. There is evidence for one such hallmark of face processing that so far defies an expertise account: its marked sensitivity to manipulations of the spatial frequency (SF) content of images (Biederman & Kalocsai, 1997; Collin, Liu, Troje, McMullen & Chaudhuri, 2004; Williams, Willenbockel & Gauthier, 2009; Yue et al., 2006).

Face perception is highly sensitive to SF filtering (Fiser, Subramaniam & Biederman, 2001; Goffaux, Gauthier & Rossion, 2003) and to other types of manipulations of image format such as contrast reversal (Gaspar, Bennett & Sekuler, 2008; Hayes, 1988; Subramaniam & Biederman, 1997) or the use of line drawings (e.g., Bruce, Hanna, Dench, Healey & Burton, 1992). In contrast, such manipulations hardly affect object recognition (Biederman, 1987; Biederman & Ju, 1988; Liu, Collin, Rainville & Chaudhuri, 2000; Nederhouser et al., 2007). This led Biederman and Kalocsai (1997) to suggest that faces and objects are represented differently in the visual system. They proposed that non-face objects are encoded as structural descriptions of parts that can be recovered from images based on non-accidental properties found in an edge description of the object (Biederman, 1987). Face representations, on the other hand, would preserve SF and orientation information from V1-type cell outputs (although with translation and scale invariance), accounting for why face perception is highly sensitive to spatial manipulations.

In a test of this hypothesis, complementary images were created by dividing the SF-by-orientation space of the raw image into an 8 × 8 radial matrix and filtering out every odd diagonal of cells to form one version of the image and every even diagonal of cells to form the second version (Biederman & Kalocsai, 1997). These two versions of the same image are complementary because they do not overlap in any specific combination of SF and orientation (Fig 1). Participants were poorer at matching complementary faces than identical faces, while matching of chairs was not affected by this manipulation (see Collin et al., 2004, for a similar result in a different task). This SF complementation effect for faces was replicated in a recent study, although a robust SF complementation effect was also observed for cars, chairs, and inverted faces, albeit significantly smaller than that observed with upright faces (Williams et al., 2009).

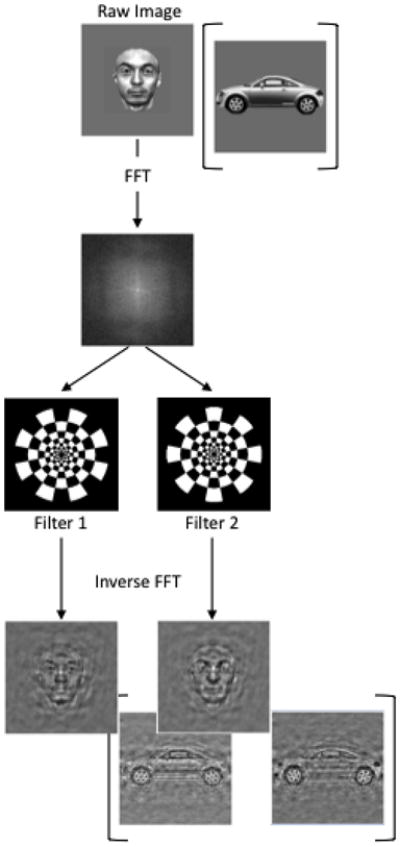

Figure 1.

Spatial Frequency (SF) and Orientation filtering. First, the Fast Fourier Transform (FFT) is applied to a raw image (either face or car). Two complementary filters (8 × 8 radial matrices) are then applied to the Fourier-transformed image to preserve alternating combinations of the SF-orientation content of the raw image. The information preserved with each filter is represented by the white checkers. Finally, when returned to the spatial domain via the inverse FFT, the resulting complementary pair of images shares no overlapping combinations of SF and orientation information.

One study addressed whether the large SF complementation effect for upright faces may be due to perceptual expertise (Yue et al., 2006) by manipulating experience with novel objects called blobs. Regardless of their training experience with blobs, participants showed robust effects of complementation for faces but not for blobs. A number of limitations in that study motivated us to reexamine this question. First, training with blobs has never been shown to result in any face-like behavioral effects. In fact, the only other study with this training protocol and these stimuli failed to find face-like sensitivity to contrast reversal in blob experts (Nederhouser et al., 2007). This is difficult to interpret, given the many studies using laboratory-trained experts (Gauthier et al., 1997; Gauthier, Williams, Tarr & Tanaka, 1998; Gauthier, Tarr, Anderson & Gore, 1999; Nishimura & Maurer, 2008; Rossion, Gauthier, Goffaux, Tarr & Crommelinck, 2002; Wong et al., 2009) and real-world experts (Busey & Vanderkolk, 2004; Gauthier et al., 2000a; 2000b; 2003; Gauthier & Curby, 2005; Xu, 2005) that have produced behavioral and neural face-like effects with a wide range of stimuli. Second, in the blob studies (Nederhouser et al., 2007; Yue et al., 2006), participants were tested with transfer blobs that were structurally different from the trained blobs, possibly preventing generalization of learned expertise (see Bukach, Gauthier & Tarr, 2006, for a discussion of this issue). Finally, blobs have limited texture and minimal high SF information relative to faces, factors that could have reduced the effects of SF filtering.

We sought to explore the role of expertise in the SF complementation effect by testing participants with a range of expertise with cars. This approach has important advantages. First, expertise resulting from years of experience with a category is more likely to yield a large effect size than that following a few hours of laboratory training. Second, we use a proven method to quantify perceptual expertise with cars, validated by its prediction of other face-like effects, both neurally (Gauthier et al., 2000b; 2003; Rossion et al., 2002a; Xu, 2005) and behaviorally (Gauthier et al., 2003; Curby et al., 2009). This method indexes performance in a car and bird matching task, where performance with birds in a group of participants who are not bird experts serves as a control for individual differences related to motivation and unrelated to car expertise, the variable of interest. Accordingly, a “Car Expertise Index” is defined as the difference in discriminability of cars and birds: Car d′ – Bird d′.

In two experiments, we compared the SF complementation effect for faces and cars by asking participants to judge whether pairs of sequentially presented images showed the same item. We manipulated whether the images were identical or complementary. Experiment 1 adopted an approach identical to that used in prior work (Biederman & Kalocsai, 1997; Yue et al., 2006). Specifically, identical and complementary stimulus pairs were randomly intermixed and, because different trials cannot be assigned to a condition (i.e., different exemplars are neither identical nor complementary in SF content), analyses focused exclusively on accuracy for same trials. In Experiment 2, we blocked identical and complementary trials so that signal detection analysis could be used to exclude differences in response biases, which can affect faces and objects differentially in this task (Williams et al., 2009). Experiment 2 also presented faces and cars both upright and upside-down. If expertise with cars results in holistic processing, and if holistic processing is particularly susceptible to SF manipulations (Goffaux, Hault, Michel, Vuong & Rossion, 2005; Goffaux & Rossion, 2006; but see Cheung, Richler, Palmeri & Gauthier, 2008), we would expect increased SF sensitivity with increased expertise.

Methods

Participants

Experiment 1

Thirty-nine individuals (15 male, mean age 22 years) volunteered.

Experiment 2

Forty-three individuals (18 male, mean age 21 years) who had not participated in Experiment 1 volunteered.

All participants had normal or corrected-to-normal visual acuity. All received a small honorarium or course credit and provided written informed consent. The study was approved by the Institutional Review Board at Vanderbilt University.

Stimuli

Experiment 1

Stimuli were digitized, eight-bit greyscale images of 72 faces with hair cropped (obtained from the Max-Planck Institute for Biological Cybernetics in Tuebingen, Germany) and 72 cars (obtained from www.tirerack.com). All images were filtered with a method used in prior work (Biederman & Kalocsai, 1997; Yue et al., 2006; Williams et al., 2009): the original images were subjected to a Fast Fourier Transform (FFT) and filtered by two complementary filters (Figure 1). Each filter eliminated the highest (above 181 cycles/image) and lowest (below 12 cycles/image, corresponding to approximately 7.5 cycles per face width (c/fw)) spatial frequencies. The remaining area of the Fourier domain was divided into an 8-by-8 matrix of 8 orientations (in successive steps of 22.5 degrees) by 8 SFs (covering four octaves in steps of 0.5 octaves). This manipulation created a complementary pair of images from each original: alternating cells among the 64 frequency-orientation combinations, arranged as a radial checkerboard in the Fourier domain, were assigned to one image (32 cells), and the remaining 32 combinations were assigned to the complementary member of the pair. As such, both complementary members of a pair contained all 8 SFs and all 8 orientations, but in unique combinations, and shared no common information in the Fourier domain. Filtered images were then converted back to the spatial domain via the inverse FFT. The final stimuli were resized to two formats, subtending either 2° or 4° of visual angle.
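To make the filtering procedure concrete, the sketch below (Python with NumPy) builds a pair of complementary Fourier-domain masks for a square image and applies them. It is an illustration, not the authors' code, and several details are our assumptions rather than specifications from the text: a 256 × 256 random placeholder image, orientation bins starting at 0°, SF band edges spaced geometrically between 12 and 181 cycles/image (about 0.49-octave steps rather than exactly 0.5), and exclusion of the unpaired Nyquist row and column. Each frequency and its conjugate partner are forced into the same cell so that each filtered image stays real-valued.

```python
import numpy as np


def complementary_masks(size, n_orient=8, n_sf=8, f_low=12.0, f_high=181.0):
    """Return two boolean masks (A, B) over the np.fft.fft2 layout of a
    size x size image, forming a radial SF-by-orientation checkerboard."""
    f = np.fft.fftfreq(size) * size          # frequencies in cycles/image
    fx, fy = np.meshgrid(f, f)               # fx varies along columns, fy along rows
    radius = np.hypot(fx, fy)

    # Map (fx, fy) and its conjugate (-fx, -fy) to the same half-plane
    # representative so both receive the same orientation label.
    flip = (fy < 0) | ((fy == 0) & (fx < 0))
    sx = np.where(flip, -fx, fx)
    sy = np.where(flip, -fy, fy)
    theta = np.arctan2(sy, sx)               # orientation in [0, pi)

    # Geometrically spaced SF band edges (roughly half-octave steps by default).
    sf_edges = np.geomspace(f_low, f_high, n_sf + 1)
    sf_idx = np.digitize(radius, sf_edges) - 1   # -1 below f_low, n_sf at/above f_high
    ori_idx = np.clip((theta // (np.pi / n_orient)).astype(int), 0, n_orient - 1)

    # Keep only the SF range of interest; drop the unpaired Nyquist row/column.
    in_band = (sf_idx >= 0) & (sf_idx < n_sf)
    in_band &= (np.abs(fx) < size / 2) & (np.abs(fy) < size / 2)

    checker = (sf_idx + ori_idx) % 2 == 0    # alternating cells of the 8 x 8 matrix
    return in_band & checker, in_band & ~checker


def filter_image(image, mask):
    """Keep only the Fourier components selected by mask; return to pixel space."""
    spectrum = np.fft.fft2(np.asarray(image, dtype=float))
    return np.real(np.fft.ifft2(spectrum * mask))


# Example: a complementary pair from one placeholder 256 x 256 image.
rng = np.random.default_rng(0)
image = rng.random((256, 256))
mask_a, mask_b = complementary_masks(256)
version_a = filter_image(image, mask_a)
version_b = filter_image(image, mask_b)
```

Because the two masks select disjoint sets of Fourier components, the two filtered images are orthogonal (their pixelwise dot product is zero up to rounding), which offers one quick check that a pair shares no SF-orientation combination.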

Experiment 2

The same images as in Experiment 1 were used, in their upright and inverted (flipped in the vertical, up-down direction) versions.

Matching Task

Experiment 1

We used a 2 × 2 within-participant factorial design, manipulating category (face, car) and SF-orientation content (identical, complementary). A total of 1152 trials were arranged into 6 blocks by category: 3 face blocks and 3 car blocks of 192 trials each. Block order was randomized across subjects, and breaks were offered every 64 trials. Participants began with eight practice trials selected randomly from all possible face and car trials. On each trial, participants judged whether a pair of sequentially presented images (either two faces or two cars) was of the same identity. Relative to the study image, the probe image could be (a) the same identity and the same SF (i.e., the exact image), (b) the same identity and a complementary SF, or (c) a different exemplar altogether, though also filtered to contain only alternating SF-orientation components. Participants were instructed to make their judgments based on identity alone, regardless of differences in image size or SF content (described to subjects as “blurriness”). Each trial began with a 500ms fixation cross, followed by a target stimulus (face or car) in the center of the screen for 200ms. After a 300ms inter-stimulus-interval a probe stimulus appeared for 200ms. Participants had to make a same/different judgment on this image within 1800ms. All images were presented at the center of the screen and image size was selected randomly for each stimulus (either 2° visual angle or 4° visual angle) to prevent image matching (Yue et al., 2006).

Experiment 2

We used a 2 × 2 × 2 repeated-measures design, manipulating (a) category (face or car), (b) SF-orientation content (identical or complementary), and (c) orientation (upright or inverted). The procedure differed from Experiment 1 in three ways. First, the orientation of stimuli varied randomly across trials (both stimuli within a trial were always of the same orientation). Second, image size always differed from study to probe (2° to 4° or 4° to 2°), thereby eliminating cases where study and probe could randomly occur at the same size, so no part of the effect could be attributed to image matching. Third, trials were blocked according to SF content (identical or complementary) rather than stimulus category (face or car); hence, different trials could be assigned to either the identical or complementary condition, allowing for the computation of discriminability (d′) and response criterion (C) (provided as supporting online information).

A total of 1152 trials were grouped into 6 blocks: 3 blocks of identical SF-orientation pairs and 3 blocks of complementary SF-orientation pairs, where each block contained 192 trials. Stimulus category and orientation varied randomly within a block, allowing 48 trials per block (or 288 trials total) for each condition (i.e., upright faces, upright cars, inverted faces, and inverted cars). Block order was randomized across subjects. Each subject began with 12 practice trials, and breaks were offered every 64 trials.
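The block structure of Experiment 2 can be sketched as a trial-list generator (Python, standard library only). The counts follow the text: 6 blocks (3 identical, 3 complementary) in random order, each with 4 category × orientation cells of 48 trials, shuffled within the block, and a study-to-probe size change on every trial. The function and field names are hypothetical, and the proportion of same versus different trials is not specified in the text, so the sketch leaves it out.

```python
import random
from collections import Counter

CATEGORIES = ("face", "car")
ORIENTATIONS = ("upright", "inverted")
SIZES_DEG = (2, 4)


def build_experiment2_blocks(seed=None, blocks_per_sf=3, trials_per_cell=48):
    """Return a list of blocks, each a shuffled list of trial dictionaries."""
    rng = random.Random(seed)
    block_types = ["identical"] * blocks_per_sf + ["complementary"] * blocks_per_sf
    rng.shuffle(block_types)                  # block order randomized per participant
    blocks = []
    for sf_content in block_types:
        trials = []
        for category in CATEGORIES:
            for orientation in ORIENTATIONS:
                for _ in range(trials_per_cell):
                    study = rng.choice(SIZES_DEG)
                    probe = SIZES_DEG[1] if study == SIZES_DEG[0] else SIZES_DEG[0]
                    trials.append({
                        "sf_content": sf_content,
                        "category": category,
                        "orientation": orientation,  # shared by study and probe
                        "study_deg": study,
                        "probe_deg": probe,          # always the other size
                    })
        rng.shuffle(trials)                   # category and orientation intermixed
        blocks.append(trials)
    return blocks


blocks = build_experiment2_blocks(seed=1)
all_trials = [t for block in blocks for t in block]
print(len(blocks), len(blocks[0]), len(all_trials))   # 6 192 1152
# Each category x orientation cell occurs 288 times across the session.
print(Counter((t["category"], t["orientation"]) for t in all_trials))
```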

Expertise Test

Following the matching task with filtered images, participants in both experiments completed a test of car expertise to quantify their skill at matching cars (Curby et al., 2009; Gauthier et al., 2000a; 2005; Grill-Spector, Knouf & Kanwisher, 2004; Rossion et al., 2004; Xu, 2005). Participants made same/different judgments on car images (at the level of make and model, regardless of year) and on bird images (at the level of species). There were 112 trials for each object category. On each trial, the first stimulus appeared for 1000ms, followed by a 500ms mask. A second stimulus then appeared and remained visible until a same/different response was made or 5000ms elapsed.

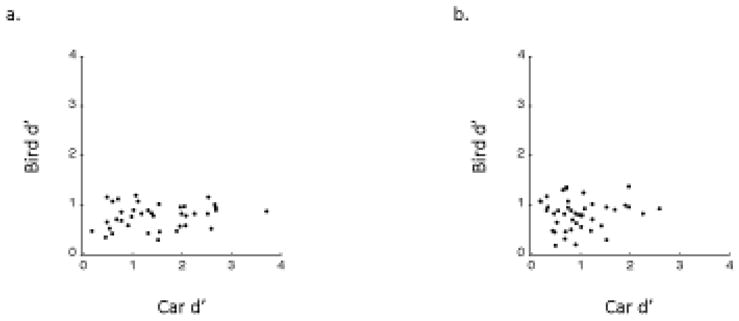

A separate sensitivity score was calculated for cars (Car d′) and birds (Bird d′). The difference between these measures (Car d′ – Bird d′) yields a Car Expertise Index for each participant. Performance with birds provides a baseline for individual differences in motivation or attention that would not be due to experience with cars. Figure 2 shows the distribution of car d′ and bird d′ scores for each experiment. As we did not screen participants for experience with birds, we also report the results for a subset of our sample, excluding participants whose performance with birds may suggest a moderate level of experience with birds (those with Bird d′ > 1: n=8 out of 39 in Experiment 1; n=10 out of 43 in Experiment 2).
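Both the Car d′ and Bird d′ scores here and the d′ and C values of Experiment 2 rest on standard signal detection formulas: d′ = z(H) − z(F) and C = −[z(H) + z(F)]/2. A minimal sketch in Python (standard library only), assuming that "same" is treated as the signal response (hits on same trials, false alarms on different trials) and that a log-linear correction (adding 0.5 to each count) guards against rates of 0 or 1; the text does not specify either choice, and the counts below are hypothetical.

```python
from statistics import NormalDist


def sdt_measures(hits, misses, false_alarms, correct_rejections):
    """Return (d_prime, criterion_c) from raw same/different counts.

    Assumes "same" is the signal response: hits are "same" responses on
    same-identity trials, false alarms are "same" responses on
    different-identity trials. Adds 0.5 to each count (log-linear
    correction) so that rates of 0 or 1 do not give infinite z-scores.
    """
    z = NormalDist().inv_cdf                        # inverse standard-normal CDF
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    d_prime = z(hit_rate) - z(fa_rate)              # sensitivity
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))   # bias; negative = more "same" responses
    return d_prime, criterion


# Hypothetical counts for one participant (not data from this study).
car_d, _ = sdt_measures(80, 32, 35, 77)
bird_d, _ = sdt_measures(60, 52, 50, 62)
car_expertise_index = car_d - bird_d              # Car d' - Bird d'
```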

Figure 2.

Distribution of car d′ and bird d′ values. (a) Experiment 1 (N=39). Scatterplot showing the correlation between car d′ (SD= 0.83) and bird d′ (SD= 0.24) in Experiment 1: r=0.18, p=n.s. (b) Experiment 2 (N=43). Scatterplot showing the correlation between car d′ (SD= 0.56) and bird d′ (SD= 0.31) in Experiment 2: r=0.08, p=n.s.

Results

Experiment 1

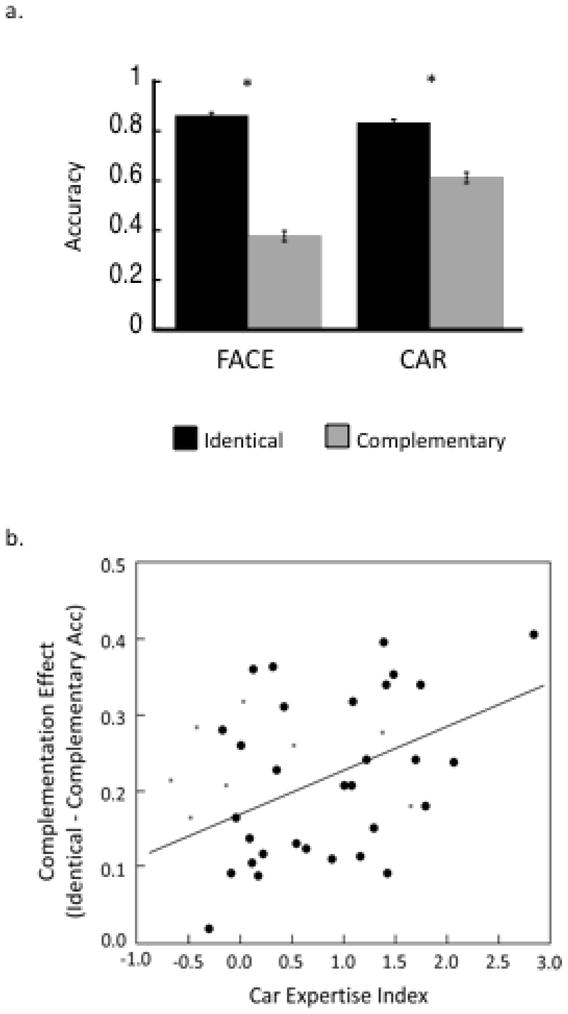

We replicated the larger complementation effect for faces than for cars using accuracy (i.e., hit rates on same trials) (Biederman & Kalocsai, 1997; Yue et al., 2006; Collin et al., 2004) (Fig 3a). A 2 × 2 ANOVA on accuracy for same trials revealed better performance for cars than faces (F(1,38) = 47.32, p < .0001), better performance on identical than complementary trials (F(1,38) = 423.81, p < .0001), and an interaction between Category and SF content (F(1,38) = 179.76, p < .0001). Bonferroni post hoc tests (per-comparison alpha (αPC) = .0125) showed that the superior performance for cars was driven by complementary trials (p < .0001), with a non-significant difference between cars and faces on identical trials (p = .26). Although the SF complementation effect (accuracy on identical pairs > accuracy on complementary pairs) was significant for both cars and faces, an ANOVA computed directly on SF complementation values (identical – complementary) revealed a larger effect of complementation for face matching than for car matching (F(1,38) = 179.76, p < .0001).
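The complementation score and the Category × SF content interaction can be sketched as follows (Python with NumPy; the array layout, with category 0 as faces and category 1 as cars, and the function names are hypothetical). In a 2 × 2 within-participant design, the interaction F(1, n − 1) equals the squared paired t statistic on the difference between the two categories' complementation scores, which is also the test given by an ANOVA on complementation values with Category as its only factor; this is why the same F value appears twice above. The Bonferroni per-comparison alpha is simply the family alpha divided by the number of comparisons.

```python
import numpy as np


def complementation_scores(acc):
    """acc: array of shape (n_participants, 2, 2) with axes
    (participant, category, SF content); SF content order is
    (identical, complementary). Returns identical - complementary."""
    acc = np.asarray(acc, dtype=float)
    return acc[:, :, 0] - acc[:, :, 1]


def interaction_f(acc):
    """F(1, n - 1) for Category x SF content in a 2 x 2 within design,
    computed as the squared paired t on the difference in complementation
    scores between the two categories."""
    scores = complementation_scores(acc)
    diff = scores[:, 0] - scores[:, 1]       # category 0 minus category 1
    n = diff.size
    t = diff.mean() / (diff.std(ddof=1) / np.sqrt(n))
    return t ** 2, (1, n - 1)


def bonferroni_alpha(family_alpha, n_comparisons):
    """Per-comparison alpha under a Bonferroni correction."""
    return family_alpha / n_comparisons


print(bonferroni_alpha(0.05, 4))   # 0.0125
print(bonferroni_alpha(0.05, 8))   # 0.00625
```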

Figure 3.

Experiment 1 results (N=39). (a) Mean accuracy values for the same-different matching of identical and complementary faces and cars. Error bars represent the standard error of the mean. (b) Correlation plot showing the relationship between the Complementation Effect (accuracy on Identical trials – accuracy on Complementary trials) in the upright car condition and the Car Expertise Index (Car d′ – Bird d′). Grey squares represent the subset of the population with Bird d′ scores greater than 1 (n=8 out of 39). The linear regression is calculated considering the remaining participants (n=31), and shows a significant positive correlation (r=.42, p<.05).

We also compared the profile of results when the image size was the same within a matching trial (2° to 2° or 4° to 4°) versus when it was different (2° to 4° or 4° to 2°). First, we re-computed the ANOVA on accuracy for same-identity trials with an additional factor, Size, with two levels: same and different. The effect of complementation did not differ between same- and different-size trials (F(1,38) = 0.935, n.s.). In addition, we computed separate ANOVAs for same- and different-size trials and observed no qualitative differences across conditions: same-size trials (Category: F(1,38) = 100.06, p < .001; SF content: F(1,38) = 380.52, p < .001; Category × SF content: F(1,38) = 137.76, p < .001) and different-size trials (Category: F(1,38) = 9.28, p < .01; SF content: F(1,38) = 286.95, p < .001; Category × SF content: F(1,38) = 128.95, p < .001). These results suggest that low-level image-based matching cannot explain the observed complementation effect.

Moreover, by correlating the magnitude of each individual’s complementation effect for cars and faces with his or her Car Expertise Index, we show that car expertise is associated with the magnitude of the SF complementation effect for cars but does not predict the same effect for faces (Table 1, Fig 3b). This expertise effect is of comparable magnitude whether or not we use the bird baseline to quantify individual differences in expertise (i.e., Car Expertise Index versus Car d′, respectively). The correlation grows when we restrict the range of performance on the bird matching task by removing participants whose performance suggests a moderate level of bird expertise, despite the resulting smaller sample size. Interestingly, this does not depend on the use of the bird baseline in our Expertise Index: the improvement exists even when we use Car d′ to quantify expertise but exclude the participants with high bird scores. This is inconsistent with the idea that the car expertise of participants with elevated Bird d′ could be underestimated when we compute the Expertise Index (Williams et al., 2009). Instead, some participants with relatively high bird-matching scores may use a qualitatively different strategy than most when matching any visually similar objects, thereby obtaining car-matching scores that reflect an advantage that is not due to experience.
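The correlational analysis summarized in Table 1 can be sketched as below (Python with NumPy). Inputs are per-participant Car d′, Bird d′, and complementation effects; the function computes Pearson correlations with both expertise measures, for all participants and for the subset whose Bird d′ does not exceed a cutoff of 1. Retaining a participant with a Bird d′ of exactly 1 is our assumption, and the function name and dictionary keys are hypothetical.

```python
import numpy as np


def expertise_correlations(car_d, bird_d, comp_effect, bird_cutoff=1.0):
    """Pearson r between the complementation effect and two expertise
    measures (Car d' alone, and the Car Expertise Index = Car d' - Bird d'),
    for all participants and for those with Bird d' <= bird_cutoff."""
    car_d = np.asarray(car_d, dtype=float)
    bird_d = np.asarray(bird_d, dtype=float)
    comp_effect = np.asarray(comp_effect, dtype=float)
    expertise_index = car_d - bird_d         # Car Expertise Index
    keep = bird_d <= bird_cutoff             # drop possible bird experts

    def pearson(x, y):
        return float(np.corrcoef(x, y)[0, 1])

    return {
        "all": {
            "car_d": pearson(car_d, comp_effect),
            "index": pearson(expertise_index, comp_effect),
        },
        "bird_d_le_cutoff": {
            "car_d": pearson(car_d[keep], comp_effect[keep]),
            "index": pearson(expertise_index[keep], comp_effect[keep]),
            "n": int(keep.sum()),
        },
    }
```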

Table 1.

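Correlations (r) between the SF complementation effect and each measure of car expertise (Car d′ alone, or the Car Expertise Index, Car − Bird Δd′), for participants with Bird d′ < 1 and for all participants.

| Condition | Car d′ (Bird d′ < 1) | Car − Bird Δd′ (Bird d′ < 1) | Car d′ (all participants) | Car − Bird Δd′ (all participants) |
|---|---|---|---|---|
| Expt. 1 – Cars Upright | .41 * | .42 * | .35 * | .32 # |
| Expt. 1 – Faces Upright | .26 | .26 | .21 | .20 |
| Expt. 2 – Cars Upright | .36 * | .35 * | .19 | .14 |
| Expt. 2 – Cars Inverted | .20 | .21 | .03 | −.05 |
| Expt. 2 – Faces Upright | −.06 | .04 | −.14 | −.09 |
| Expt. 2 – Faces Inverted | −.15 | −.12 | −.11 | −.18 |

# p = .05; * p < .05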

Experiment 2

We sought to replicate the results from Experiment 1 with two key changes. First, the SF complementation effect was measured using d′ computed from all trials rather than accuracy on same trials (Cheung et al., 2008). This allows us to control for potential response biases that individuals may have towards certain trial conditions and/or object categories. Second, we manipulated stimulus orientation to investigate the boundary conditions of the expertise effect. As before, we also consider whether removing participants with high bird-matching scores increases the expertise effect.

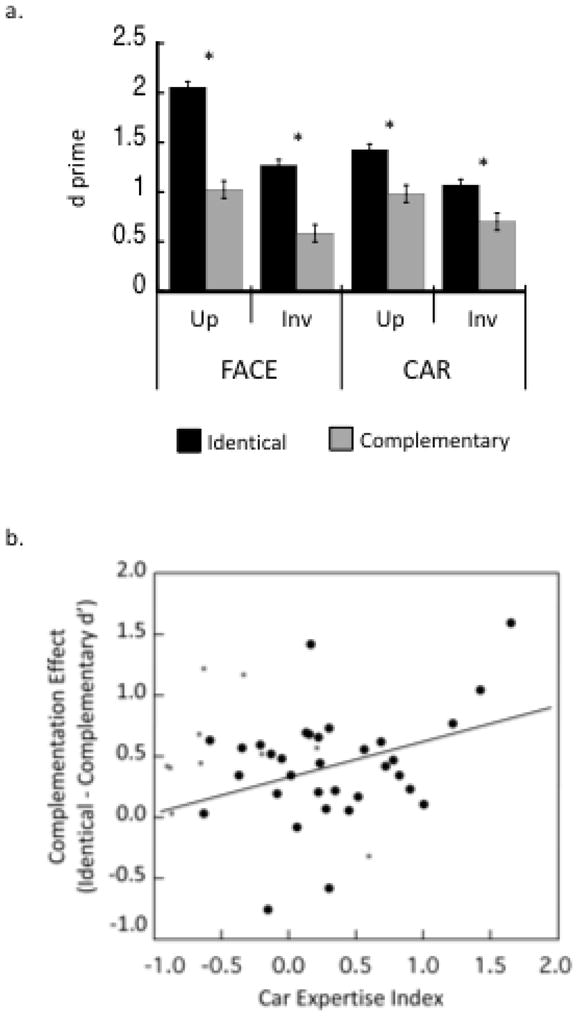

A 2 × 2 × 2 ANOVA on d′ (within-subject factors: Category (face or car), SF content (identical or complementary), and Orientation (upright or inverted)) showed that faces led to better matching performance than cars (F(1,42) = 14.08, p = .0005), identical pairs were easier to match than complementary pairs (F(1,42) = 182.88, p < .0001), and performance on upright trials was greater than on inverted trials (F(1,42) = 205.30, p < .0001) (Fig. 4a). Following up on the Category × SF content interaction (F(1,42) = 69.35, p < .0001) and the Category × Orientation interaction (F(1,42) = 18.37, p = .008) using Bonferroni post hoc tests (αPC = .0125), we found that the face advantage could be attributed to identical trials (rather than complementary trials) and to upright trials (rather than inverted trials). We further observed a three-way interaction between Category, SF content, and Orientation (F(1,42) = 6.97, p = .012), which we explored with post hoc tests (αPC = .00625). The effect of SF complementation was significant in all four conditions (upright and inverted faces and cars), and performance with faces was better than with cars only on upright identical trials.

Figure 4.

Experiment 2 results (N=43). (a) Mean d′ values for the same-different matching of identical and complementary faces and cars in their upright and inverted orientations. Error bars represent the standard error of the mean. (b) Correlation plot showing the relationship between the Complementation Effect (d′ on Identical trials – d′ on Complementary trials) in the upright car condition and the Car Expertise Index (Car d′ – Bird d′). Grey squares represent the subset of the population with Bird d′ scores greater than 1 (n=10 out of 43). The linear regression calculated for the remaining participants (n=33) shows a significant positive correlation (r=.35, p<.05).

A 2 × 2 ANOVA computed on SF complementation scores (identical – complementary) confirmed the greater sensitivity of faces relative to cars (F(1,42) = 74.68, p < .0001) and of upright relative to inverted images (F(1,42) = 7.19, p = .01). We explored the interaction (F(1,42) = 8.06, p = .007) with post hoc tests (αPC = .00625), finding a larger effect of SF complementation for upright faces than in each of the other three conditions (inverted faces, upright cars, and inverted cars). Except for the comparison between upright and inverted cars, the complementation effect differed significantly in all pairwise Category × Orientation comparisons.

We again assessed the effect of expertise on the magnitude of the complementation effect. As in Experiment 1, correlations with the complementation effect are virtually identical whether we define car expertise using Car d′ by itself or the Car Expertise Index, where Bird d′ is subtracted from Car d′ (Table 1, Figure 4b). We also replicate the finding of a larger influence of car expertise on the complementation effect for upright cars when we exclude participants with high bird scores (d′ greater than 1, n=10 out of 43). With a sample of participants varying in car expertise (.31 – 2.59) but limited in their performance with birds (.18 – 1), car expertise correlates with the magnitude of the complementation effect for upright cars (Fig. 4b), but not for inverted cars or for faces in either orientation. In both experiments, tests using externally studentized residuals, on datasets that either included or excluded participants with high bird scores, revealed no significant outliers.
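Externally studentized (deleted) residuals for the regression of the complementation effect on expertise can be sketched as follows (Python with NumPy). Each participant's residual is scaled by an error estimate from a fit that leaves that participant out, so a single extreme point cannot mask itself. The text does not state the threshold used; one common choice, offered here only as an assumption, compares each value with a Bonferroni-corrected critical t on n − 3 degrees of freedom (for one predictor plus intercept), which requires a t quantile function such as `scipy.stats.t.ppf` that this sketch does not include.

```python
import numpy as np


def externally_studentized_residuals(x, y):
    """Deleted (externally) studentized residuals for the simple linear
    regression y = b0 + b1 * x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    X = np.column_stack([np.ones_like(x), x])    # intercept + predictor
    n, p = X.shape
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    xtx_inv = np.linalg.inv(X.T @ X)
    leverage = np.einsum("ij,jk,ik->i", X, xtx_inv, X)   # diagonal of the hat matrix
    sse = resid @ resid
    # Residual variance re-estimated with each observation left out in turn.
    s2_deleted = (sse - resid ** 2 / (1 - leverage)) / (n - p - 1)
    return resid / np.sqrt(s2_deleted * (1 - leverage))
```

The closed form avoids refitting the regression n times; it gives the same values as explicitly dropping each participant and standardizing that participant's prediction error.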

Discussion

We found that the level of expertise with cars can predict the magnitude of the SF complementation effect. This represents a surprising perceptual deficit in car experts, especially since they would have known the names for most of the cars and would therefore have had access to a verbal code in addition to visual short-term memory. Despite the advantages associated with expertise, however, the perception of objects of expertise was more sensitive to the specific SF content in the image. Our results suggest that the large effect of complementation for upright faces results from our expertise with this category.

This result stands in contrast to prior conclusions (Yue et al., 2006), though several explanations exist for why this earlier study was less sensitive to an expertise effect. In particular, the previous study relied on lab-trained participants with relatively weaker expertise than real-world experts and did not quantify the expertise of individual participants. Indeed, even in our real-world experts, the correlations between expertise and the SF complementation effect were not large. This is not surprising, because prior work suggests that the magnitude of the complementation effect is also influenced by factors independent of expertise, such as the symmetry of the images (Yue et al., 2006).

Why are experts more sensitive to SF content than novices? We introduced the complementation effect within its original framework (Biederman & Kalocsai, 1997), in which the initial null result in the complementation paradigm with non-face objects led to the claim that only face representations include SF and orientation information. Since then, however, it has been shown that even novices with objects like cars or chairs (even inverted cars and chairs) can display significant SF complementation effects (Williams et al., 2009), suggesting that the difference between face and object representations in their sensitivity to SF information may not be qualitative. While it is not surprising that identical images of the same object are more easily matched than complementary images that differ considerably, it is less intuitive that matching complementary images is even more difficult for experts. However, other paradigms measuring selective attention demonstrate that experts find it more difficult than novices to ignore a part of the image that they are told is task-irrelevant (Gauthier & Curby, 2005; Gauthier & Tarr, 1997; Gauthier et al., 1998; 2003; Hole, 1994; Tanaka & Farah, 1993; Young et al., 1987). Observers matching our filtered stimuli are trying to ignore differences caused by the filter and to match on the basis of the true underlying shape. As in other paradigms, experts find it particularly difficult to ignore irrelevant information.

Such a failure of selective attention could occur at a perceptual locus (similar to what was originally proposed for the SF complementation effect). For instance, expert representations may be more Gabor-like (Biederman & Kalocsai, 1997) or holistic (Tanaka & Farah, 1993) than novice representations, and image transformations, such as our filters, may be particularly hard to ignore in the encoding of these representations. But the same effect could also have a more decisional locus if, for instance, experts have developed through experience an ingrained assumption that no part of two objects differs noticeably without the two objects actually being different. This question concerning the locus of holistic processing and similar effects has only recently been addressed directly, and each account has its proponents (perceptual: Farah, Wilson, Drain & Tanaka, 1998; McKone et al., 2007; Robbins & McKone, 2007; decisional: Richler, Gauthier, Wenger & Palmeri, 2008; Wenger & Ingvalson, 2002).

While awaiting resolution on this particular issue, we can offer the following explanation of our results: to an expert visual system trained to make fine discriminations, two complementary images represent inputs that are highly likely to signify two similar but distinct individuals. While we instruct our participants to ignore the transformation imposed by the complementary filters, experts appear to instinctively attend to or process, and consequently be influenced by, differences between images that would normally suggest distinct object identities.

Conclusion

This study offers evidence that the SF complementation effect increases as a function of expertise with a category and, thus, may be especially large for faces because of our expertise in this domain.

How does the evidence stand on whether face perception differs qualitatively from object perception? Several hallmarks of face perception have been found, at least in some studies, to depend on perceptual expertise. These include the inversion effect (Curby et al., 2009; Diamond & Carey, 1986), holistic processing (Gauthier & Tarr, 1997; 2002; Gauthier et al., 1998; 2000a), configural processing (Busey & Vanderkolk, 2005), improved performance in categorizing individuals (Gauthier et al., 2000a; 2000b; Tanaka & Taylor, 2001) and, as demonstrated here, sensitivity to SF information. In contrast, evidence that face perception nonetheless relies on face-specific mechanisms comes from two kinds of studies. Some show (i) larger effects for faces than for objects of expertise. Others show (ii) null effects of expertise on certain hallmarks of face perception. The present work concerns an effect once thought to be unique to faces and later shown to be larger for faces than for objects. Earlier tests of expertise had also rejected a role for experience in this effect. The work therefore offers an opportunity to consider, and reject, both of these arguments.

First, expertise shows a significant linear relationship with many behavioral and neural hallmarks of face processing. Given this, evidence that an effect is larger for faces than for other objects cannot, on its own, give strong support to the modularity of face perception. The reason is simple: comparing the magnitude of an effect for faces vs. objects is meaningless without a way to match the strength of expertise in another domain to that for faces. Consider the mean complementation effect for faces in this study: a difference of approximately 40% in Experiment 1 and 1 Δd′ in Experiment 2. This falls near the upper limit obtained by our best car experts (Figs. 3b and 4b). To argue that expertise can explain the magnitude of the face effect, one therefore needs only to assume that our participants' average face expertise is at least comparable to the car expertise of our best car experts. This appears plausible given the time most of us devote to face perception over a lifetime. Unfortunately, many claims for the special nature of face perception rest on the interpretation of such quantitative differences (e.g., Bruce et al., 1991; Farah et al., 1998; Haig, 1984; Hosie, Ellis & Haig, 1988; Yin, 1969).
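To make a magnitude such as "1 Δd′" concrete, the sketch below computes a complementation effect as the drop in sensitivity (d′) from identical to complementary pairs. It assumes the standard equal-variance signal-detection formula, d′ = z(H) − z(F), where H is the hit rate and F is the false-alarm rate. The function names and rates are hypothetical illustration values, not data from the experiments. The study's exact scoring (for example, any correction for hit or false-alarm rates of 0 or 1) may differ.

```python
from statistics import NormalDist

_z = NormalDist().inv_cdf  # inverse CDF of the standard normal distribution

def d_prime(hit_rate: float, fa_rate: float) -> float:
    """Equal-variance signal-detection sensitivity, d' = z(H) - z(F).
    Rates of exactly 0 or 1 make inv_cdf raise an error; a correction
    (e.g. the log-linear rule) would be needed and is not applied here."""
    return _z(hit_rate) - _z(fa_rate)

def complementation_effect(identical, complementary):
    """Delta d' = d'(identical pairs) - d'(complementary pairs).
    Each argument is a (hit_rate, false_alarm_rate) tuple. A positive
    value means complementary filtering impaired matching."""
    return d_prime(*identical) - d_prime(*complementary)

# Hypothetical rates, for illustration only:
effect = complementation_effect(identical=(0.90, 0.10), complementary=(0.75, 0.20))
print(round(effect, 2))  # 1.05
```

Expressing the effect as a difference in d′ rather than in raw accuracy separates sensitivity from any overall bias toward responding "same" or "different".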

Second, when evaluating the expertise account, our findings caution against over-interpreting null effects, because such effects depend on specific operational definitions of expertise. Beyond the typical concerns raised in the framework of null hypothesis significance testing, an important issue is that the power of a theoretical construct (experience) is assessed with specific measures of expertise. Here, we used a measure of expertise that predicts other hallmarks of face perception in three kinds of studies: behavioral (Curby & Gauthier, 2007; Curby et al., 2009), functional MRI (Gauthier et al., 1999; 2000b; Tarr & Gauthier, 2000), and electrophysiological (Gauthier et al., 2003; Rossion et al., 2002b; Tanaka & Curran, 2001; Tanaka & Taylor, 2001). Few alternatives to this method of quantifying expertise have been tested. Studies that do not use this approach often fall back on the less statistically powerful contrast between a group of experts and a group of novices, defined by self-report or some other subjective criterion. However, expertise may be a matter of degree regardless of domain. In fact, growing evidence points to a broad distribution of face recognition abilities in the general population (e.g., Russell, Duchaine & Nakayama, 2009). Compared to other fields dealing with individual differences, work on expertise is still in its infancy, and measures of expertise are clearly imperfect. For instance, car expertise effects were more pronounced when we removed participants with high bird-matching scores, even when only car d′ was used as a predictor. This suggests that quantifying expertise in a given domain would likely benefit from sampling performance across more than two domains. On the one hand, better performance for cars and birds relative to many other domains could reflect expertise in both domains. On the other hand, an observer who performs very well with cars and birds, but just as well with several other domains, is unlikely to qualify as a genuine expert. He or she may instead score high on a general factor relevant to visual perception (similar to “g” for intelligence). Underestimating these measurement challenges can weaken expertise effects and even lead to null effects. Critically, however, these problems are limitations of our measurements of expertise, not of the underlying expertise account of face specialization.
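The sketch below illustrates the suggestion to sample performance across more than two domains. It expresses an observer's car performance relative to that observer's own performance across several other categories. This is a hypothetical index, not the measure used in this study, which (as the passage above implies) draws on car and bird matching. It standardizes the target-category d′ against the mean and standard deviation of the observer's d′ for the other categories, so that someone who is equally good at everything receives a score near zero. The function name, category choices, and values are illustrative assumptions.

```python
from statistics import mean, stdev

def relative_expertise(target_dprime: float, other_dprimes: list[float]) -> float:
    """Hypothetical index: how far an observer's d' for the target category
    (e.g. cars) lies above that observer's own mean d' across several other
    categories, in units of the spread of those other scores."""
    if len(other_dprimes) < 2:
        raise ValueError("need at least two comparison categories to estimate spread")
    spread = stdev(other_dprimes)
    if spread == 0:
        raise ValueError("comparison scores have zero spread")
    return (target_dprime - mean(other_dprimes)) / spread

# Two made-up observers with identical car d' (values for illustration only):
specialist = relative_expertise(2.5, [1.0, 1.2, 0.8])  # good with cars only
generalist = relative_expertise(2.5, [2.4, 2.6, 2.5])  # equally good everywhere
print(f"specialist: {specialist:.1f}, generalist: {generalist:.1f}")
```

Under this kind of index, the "generalist" described in the text would no longer be counted as a car expert, although any real implementation would need to settle which comparison categories to sample and how many.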

It is important to consider the cost of wrongly assuming that faces are special. Such a conclusion discourages the search for models that can account for both novice and expert performance in any domain. It creates subfields of researchers less likely to influence each other’s work. The suggestion that face perception differs qualitatively from that of other objects for reasons that have nothing to do with experience is a strong claim that requires strong evidence. Any domain-specific model of face perception needs to account for why expertise can predict some putatively face-specific effects (e.g., recruitment of the fusiform gyrus, holistic processing, shift of the entry level, SF complementation effect). If it cannot, it should at least present evidence of a new hallmark of face processing that cannot be explained by expertise under conditions where expertise can still predict these other effects. Therefore, we leave open the possibility that face perception is special in some as yet undetermined way, but propose that the criteria for accepting this possibility be raised substantially relative to current standards.

Supplementary Material

Acknowledgments

This work was supported by the Temporal Dynamics of Learning Center (NSF Science of Learning Center SBE-0542013) and by a grant from the James S. McDonnell Foundation to the Perceptual Expertise Network.

References

- Biederman I. Recognition by components: A theory of human image understanding. Psychological Review. 1987;94:115–147. doi: 10.1037/0033-295X.94.2.115.

- Biederman I, Ju G. Surface vs. edge-based determinants of visual recognition. Cognitive Psychology. 1988;20:38–64. doi: 10.1016/0010-0285(88)90024-2.

- Biederman I, Kalocsai P. Neurocomputational bases of object and face recognition. Philosophical Transactions of the Royal Society of London, Series B Biological Sciences. 1997;352:1203–1219. doi: 10.1098/rstb.1997.0103.

- Bruce V, Doyle T, Dench N, Burton M. Remembering facial configurations. Cognition. 1991;38:109–144. doi: 10.1016/0010-0277(91)90049-a.

- Bukach CM, Gauthier I, Tarr MJ. Beyond faces and modularity: the power of an expertise framework. Trends in Cognitive Sciences. 2006;10:159–166. doi: 10.1016/j.tics.2006.02.004.

- Busey TA, Vanderkolk JR. Behavioral and electrophysiological evidence for configural processing in fingerprint experts. Vision Research. 2005;45:431–448. doi: 10.1016/j.visres.2004.08.021.

- Carey S, Diamond R, Woods B. Development of face perception: A maturational component? Developmental Psychology. 1980;16:257–269.

- Cheung O, Richler JJ, Palmeri TJ, Gauthier I. Revisiting the role of spatial frequencies in the holistic processing of faces. Journal of Experimental Psychology: Human Perception and Performance. 2008;34:1327–1336. doi: 10.1037/a0011752.

- Collin CA, Liu CH, Troje NF, McMullen PA, Chaudhuri A. Face recognition is affected by similarity in spatial frequency range to a greater degree than within category object recognition. Journal of Experimental Psychology: Human Perception and Performance. 2004;30:975–987. doi: 10.1037/0096-1523.30.5.975.

- Curby KM, Gauthier I. A visual short-term memory advantage for faces. Psychonomic Bulletin & Review. 2007;14:620–628. doi: 10.3758/bf03196811.

- Curby KM, Glazek K, Gauthier I. Perceptual expertise increases visual short-term memory capacity. Journal of Experimental Psychology: Human Perception and Performance. 2009;35:94–107. doi: 10.1037/0096-1523.35.1.94.

- Diamond R, Carey S. Why faces are and are not special: an effect of expertise. Journal of Experimental Psychology: General. 1986;115:107–117. doi: 10.1037//0096-3445.115.2.107.

- Farah MJ, Wilson KD, Drain HM, Tanaka JW. What is “special” about face perception? Psychological Review. 1998;105:482–498. doi: 10.1037/0033-295x.105.3.482.

- Fiser J, Subramaniam S, Biederman I. Size tuning in the absence of spatial frequency tuning in object recognition. Vision Research. 2001;41:1931–1950. doi: 10.1016/s0042-6989(01)00062-1.

- Gaspar CM, Bennett PJ, Sekuler AB. The effects of face inversion and contrast-reversal on efficiency and internal noise. Vision Research. 2008;48:1084–1095. doi: 10.1016/j.visres.2007.12.014.

- Gauthier I, Anderson AW, Tarr MJ, Skudlarski P, Gore JC. Levels of categorization in visual recognition studied using functional magnetic resonance imaging. Current Biology. 1997;7:645–651. doi: 10.1016/s0960-9822(06)00291-0.

- Gauthier I, Curby KM. A perceptual traffic-jam on highway N170: Interference between face and car expertise. Current Directions in Psychological Science. 2005;14:30–33.

- Gauthier I, Curby KM, Skudlarski P, Epstein R. Activity of spatial frequency channels in the fusiform face-selective area relates to expertise in car recognition. Cognitive, Affective, & Behavioral Neuroscience. 2005;5:222–234. doi: 10.3758/cabn.5.2.222.

- Gauthier I, Curran T, Curby KM, Collins D. Perceptual interference supports a non-modular account of face processing. Nature Neuroscience. 2003;6:428–432. doi: 10.1038/nn1029.

- Gauthier I, Skudlarski P, Gore JC, Anderson AW. Expertise for cars and birds recruits brain areas involved in face recognition. Nature Neuroscience. 2000a;3:191–197. doi: 10.1038/72140.

- Gauthier I, Tarr MJ. Becoming a “Greeble” expert: Exploring mechanisms for recognition. Vision Research. 1997;37:1673–1682. doi: 10.1016/s0042-6989(96)00286-6.

- Gauthier I, Tarr MJ. Unraveling mechanisms for expert object recognition: Bridging brain activity and behavior. Journal of Experimental Psychology: Human Perception and Performance. 2002;28:431–446. doi: 10.1037//0096-1523.28.2.431.

- Gauthier I, Tarr MJ, Anderson A, Gore J. Activation of the middle fusiform “face area” increases with experience in recognizing novel objects. Nature Neuroscience. 1999;2:568–573. doi: 10.1038/9224.

- Gauthier I, Tarr MJ, Moylan J, Skudlarski P, Gore JC, Anderson AW. The fusiform “face area” is part of a network that processes faces at the individual level. Journal of Cognitive Neuroscience. 2000b;12:495–504. doi: 10.1162/089892900562165.

- Gauthier I, Williams P, Tarr MJ, Tanaka J. Training “Greeble” experts: A framework for studying expert object recognition processes. Vision Research. 1998;38:2401–2428. doi: 10.1016/s0042-6989(97)00442-2.

- Goffaux V, Gauthier I, Rossion B. Spatial scale contribution to early visual differences between face and object processing. Cognitive Brain Research. 2003;16:416–424. doi: 10.1016/s0926-6410(03)00056-9.

- Goffaux V, Hault B, Michel C, Vuong QC, Rossion B. The respective role of low and high spatial frequencies in supporting configural and featural processing of faces. Perception. 2005;34:77–86. doi: 10.1068/p5370.

- Goffaux V, Rossion B. Faces are “spatial”: holistic face perception is supported by low spatial frequencies. Journal of Experimental Psychology: Human Perception and Performance. 2006;32:1023–1039. doi: 10.1037/0096-1523.32.4.1023.

- Grill-Spector K, Knouf N, Kanwisher N. The FFA subserves face perception, not generic within category identification. Nature Neuroscience. 2004;7:555–562. doi: 10.1038/nn1224.

- Haig ND. The effect of feature displacement on face recognition. Perception. 1984;13:505–512. doi: 10.1068/p130505.

- Hayes A. Identification of two-tone images: Some implications for high- and low-spatial-frequency processes in human vision. Perception. 1988;17:429–436. doi: 10.1068/p170429.

- Hole GJ. Configurational factors in the perception of unfamiliar faces. Perception. 1994;23:65–74. doi: 10.1068/p230065.

- Hosie JA, Ellis HD, Haig ND. The effect of feature displacement on the perception of well-known faces. Perception. 1988;17:461–474. doi: 10.1068/p170461.

- Kanwisher N. Domain specificity in face perception. Nature Neuroscience. 2000;3:759–763. doi: 10.1038/77664.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for face perception. The Journal of Neuroscience. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Liu CH, Collin CA, Rainville SJM, Chaudhuri A. The effects of frequency overlap on face recognition. Journal of Experimental Psychology: Human Perception and Performance. 2000;26:956–979. doi: 10.1037//0096-1523.26.3.956.

- McKone E, Kanwisher N, Duchaine BC. Can generic expertise explain special processing for faces? Trends in Cognitive Sciences. 2007;11:8–15. doi: 10.1016/j.tics.2006.11.002.

- Nederhouser M, Yue X, Mangini MC, Biederman I. The effect of contrast reversal on recognition is unique to faces, not objects. Vision Research. 2007;47:2134–2142. doi: 10.1016/j.visres.2007.04.007.

- Nishimura M, Maurer D. The effect of categorization on sensitivity to second-order relations in novel objects. Perception. 2008;37:584–601. doi: 10.1068/p5740.

- Richler JJ, Gauthier I, Wenger MJ, Palmeri TJ. Holistic processing of faces: Perceptual and decisional components. Journal of Experimental Psychology: Learning, Memory and Cognition. 2008;34:328–342. doi: 10.1037/0278-7393.34.2.328.

- Robbins R, McKone E. No face-like processing for objects-of-expertise in three behavioral tasks. Cognition. 2007;103:34–79. doi: 10.1016/j.cognition.2006.02.008.

- Rossion B, Curran T, Gauthier I. A defense of the subordinate-level account for the N170 component. Cognition. 2002a;85:189–196. doi: 10.1016/s0010-0277(02)00101-4.

- Rossion B, Gauthier I, Goffaux V, Tarr MJ, Crommelinck M. Expertise training with novel objects leads to left lateralized face-like electrophysiological responses. Psychological Science. 2002b;13:250–257. doi: 10.1111/1467-9280.00446.

- Rossion B, Kung CC, Tarr MJ. Visual expertise with nonface objects leads to competition with the early perceptual processing of faces in human occipitotemporal cortex. Proceedings of the National Academy of Sciences. 2004;101:14521–14526. doi: 10.1073/pnas.0405613101.

- Russell R, Duchaine B, Nakayama K. Super-recognizers: People with extraordinary face recognition ability. Psychonomic Bulletin and Review. 2009;16:252–257. doi: 10.3758/PBR.16.2.252.

- Subramaniam S, Biederman I. Does contrast reversal affect object identification? Investigative Ophthalmology and Visual Science. 1997;38:998.

- Tanaka JW, Curran T. A neural basis for expert object recognition. Psychological Science. 2001;12:43–47. doi: 10.1111/1467-9280.00308.

- Tanaka JW, Farah MJ. Parts and wholes in face recognition. Quarterly Journal of Experimental Psychology. 1993;46:225–245. doi: 10.1080/14640749308401045.

- Tanaka JW, Sengco JA. Features and their configuration in face recognition. Memory & Cognition. 1997;25:583–592. doi: 10.3758/bf03211301.

- Tanaka JW, Taylor M. Object categories and expertise: Is the basic level in the eye of the beholder? Cognitive Psychology. 2001;23:457–482.

- Tarr MJ, Gauthier I. FFA: a flexible fusiform area for subordinate-level visual processing automatized by expertise. Nature Neuroscience. 2000;3:764–769. doi: 10.1038/77666.

- Wenger MJ, Ingvalson EM. A decisional component of holistic encoding. Journal of Experimental Psychology: Learning, Memory and Cognition. 2002;28:872–892.

- Williams NR, Willenbockel V, Gauthier I. Sensitivity to spatial frequency content is not specific to face perception. Vision Research. 2009;49:2353–2362. doi: 10.1016/j.visres.2009.06.019.

- Wong ACN, Palmeri TJ, Gauthier I. Conditions for face-like expertise with objects: Becoming a Ziggerin expert – but which type? Psychological Science. 2009;20:1108–1117. doi: 10.1111/j.1467-9280.2009.02430.x.

- Xu Y. Revisiting the role of the fusiform face area in visual expertise. Cerebral Cortex. 2005;15:1234–1242. doi: 10.1093/cercor/bhi006.

- Yin RK. Looking at upside-down faces. Journal of Experimental Psychology. 1969;81:141–145.

- Young AW, Hellawell D, Hay D. Configural information in face perception. Perception. 1987;16:747–759. doi: 10.1068/p160747.

- Yue X, Tjan BS, Biederman I. What makes faces special? Vision Research. 2006;46:3802–3811. doi: 10.1016/j.visres.2006.06.017.
