Abstract

Algorithmically and energetically efficient computational architectures that operate in real time are essential for clinically useful neural prosthetic devices. Such devices decode raw neural data to obtain direct control signals for external devices. They can also perform data compression and vastly reduce the bandwidth and consequently power expended in wireless transmission of raw data from implantable brain-machine interfaces. We describe a biomimetic algorithm and micropower analog circuit architecture for decoding neural cell ensemble signals. The decoding algorithm implements a continuous-time artificial neural network, using a bank of adaptive linear filters with kernels that emulate synaptic dynamics. The filters transform neural signal inputs into control-parameter outputs, and can be tuned automatically in an on-line learning process. We provide experimental validation of our system using neural data from thalamic head-direction cells in an awake behaving rat.

Index Terms: Brain-machine interface, Neural decoding, Biomimetic, Adaptive algorithms, Analog, Low-power

I. INTRODUCTION

Brain-machine interfaces have proven capable of decoding neuronal population activity in real time to derive instantaneous control signals for prosthetics and other devices. All of the decoding systems demonstrated to date have operated by analyzing digitized neural data [1]–[7]. Clinically viable neural prosthetics are an eagerly anticipated advance in the field of rehabilitation medicine, and development of brain-machine interfaces that wirelessly transmit neural data to external devices will represent an important step toward clinical viability. The general model for such devices has two components: a brain-implanted unit directly connected to a multielectrode array collecting raw neural data, and a unit outside the body for data processing, decoding, and control. Data transmission between the two units is wireless. A 100-channel, 12-bit-precise digitization of raw neural waveforms sampled at 30 kHz generates 36 Mb s⁻¹ of data; the power costs of digitization, wireless communication, and population signal decoding all scale with this high data rate. Consequences of this scaling, as seen for example in cochlear-implant systems, include unwanted heat dissipation in the brain, decreased longevity of batteries, and increased size of the implanted unit. Recent designs for system components have addressed these issues in several ways. However, almost no work has been done in the area of power-efficient neural decoding.

In this work we describe an approach to neural decoding using low-power analog preprocessing methods that can handle large quantities of high-bandwidth analog data, processing neural input signals in a slow-and-parallel fashion to generate low-bandwidth control outputs.

Multiple approaches to neural signal decoding have been demonstrated by a number of research groups employing highly programmable, discrete-time, digital algorithms, implemented in software or microprocessors located outside the brain. We are unaware of any work on continuous-time analog decoders or analog circuit architectures for neural decoding. The neural signal decoder we present here is designed to complement and integrate with existing approaches. Optimized for implementation in micropower analog circuitry, it sacrifices some algorithmic programmability to reduce the power consumption and physical size of the neural decoder, facilitating use as a component of a unit implanted within the brain. Trading off the flexibility of a general-purpose digital system for the efficiency of a special-purpose analog system may be undesirable in some neural prosthetic devices. Therefore, our proposed decoder is meant to be used not as a substitute for digital signal processors but rather as an adjunct to digital hardware, in ways that combine the efficiency of embedded analog preprocessing options with the flexibility of a general-purpose external digital processor.

For clinical neural prosthetic devices, the necessity of highly sophisticated decoding algorithms remains an open question, since both animal [3], [4], [8], [9] and human [5] users of even first-generation neural prosthetic systems have proven capable of rapidly adapting to the particular rules governing the control of their brain-machine interfaces. In the present work we focus on an architecture to implement a simple, continuous-time analog linear (convolutional) decoding algorithm. The approach we present here can be generalized to implement analog-circuit architectures of general Bayesian algorithms; examples of related systems include analog probabilistic decoding circuit architectures used in speech recognition and error correcting codes [10], [11]. Such architectures can be extended through our mathematical approach to design circuit architectures for Bayesian decoding.

II. A BIOMIMETIC ADAPTIVE ALGORITHM FOR DECODING NEURAL CELL ENSEMBLE SIGNALS

In convolutional decoding of neural cell ensemble signals, the decoding operation takes the form

$$\vec{M}(t) = W(t) \circ \vec{N}(t) \tag{1}$$

$$M_i(t) = \sum_{j=1}^{n} W_{ij}(t) \circ N_j(t), \qquad i \in \{1, \ldots, m\} \tag{2}$$

where $\vec{N}(t)$ is an n-dimensional vector containing the neural signal (n input channels of neuronal firing rates, analog signal values, or local field potentials, for example) at time t; $\vec{M}(t)$ is a corresponding m-dimensional vector containing the decoder output signal (which in the examples presented here corresponds to motor control parameters, but could correspond as well to limb or joint kinematic parameters or to characteristics or states of nonmotor cognitive processes); W is a matrix of convolution kernels $W_{ij}(t)$ (formally analogous to a matrix of dynamic synaptic weights), each of which depends on a set of p modifiable parameters, $W_{ij}^{(p_k)}$, $k \in \{1, \ldots, p\}$; and $\circ$ indicates convolution. Accurate decoding requires first choosing an appropriate functional form for the kernels and then optimizing the kernel parameters to achieve maximal decoding accuracy. Since the optimization process is generalizable to any choice of kernels that are differentiable functions of the tuning parameters, we discuss the general process first. We then explain our biophysical motivations for selecting particular functional forms for the decoding kernels; appropriately chosen kernels enable the neural decoder to emulate the real-time encoding and decoding processes performed by biological neurons.
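As a rough illustration of Equations 1 and 2, the convolutional decoding operation can be approximated in discrete time. The sketch below is not the analog implementation described in this paper: the sampling step `dt`, the function names `convolve_causal` and `decode`, and the toy kernels are assumptions introduced only for this example.

```python
def convolve_causal(kernel, signal, dt):
    """Rectangular-sum approximation of (kernel o signal)(t) for a causal kernel."""
    out = []
    for t in range(len(signal)):
        acc = 0.0
        # Only past and present inputs contribute to the output at step t.
        for s in range(min(t + 1, len(kernel))):
            acc += kernel[s] * signal[t - s] * dt
        out.append(acc)
    return out


def decode(W, N, dt):
    """Compute M_i(t) = sum_j (W_ij o N_j)(t).

    W[i][j] is a sampled kernel (list of floats) and N[j] is a sampled
    input channel; all channels are assumed to have the same length.
    """
    m, n, steps = len(W), len(N), len(N[0])
    M = [[0.0] * steps for _ in range(m)]
    for i in range(m):
        for j in range(n):
            y = convolve_causal(W[i][j], N[j], dt)
            for t in range(steps):
                M[i][t] += y[t]
    return M


# Toy example: n = 2 inputs, m = 1 output, kernels are scaled unit impulses.
dt = 0.01
impulse = [1.0 / dt]  # discrete stand-in for a Dirac delta
W = [[[2.0 * v for v in impulse], [-1.0 * v for v in impulse]]]
N = [[1.0, 2.0, 3.0], [0.5, 0.5, 0.5]]
M = decode(W, N, dt)  # each output sample equals 2 * N[0][t] - N[1][t]
```

With impulse kernels the decoder reduces to an instantaneous weighted sum of the inputs; longer kernels make each output sample depend on the recent input history.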

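Our algorithm for optimizing the decoding kernels uses a gradient-descent approach to minimize decoding error in a least-squares sense during a learning phase of decoder operation. During this phase the correct output $\hat{\vec{M}}(t)$, and hence the decoder error $\vec{e}(t) = \vec{M}(t) - \hat{\vec{M}}(t)$, is available to the decoder for feedback-based learning. We design the optimization algorithm to evolve W(t) in a manner that reduces the squared decoder error on a timescale set by the parameter τ, where the squared error is defined as

$$
\begin{aligned}
E(W(t), \tau) &= \int_{t-\tau}^{t} \left| \vec{e}(u) \right|^{2} du && (3) \\
&= \sum_{i=1}^{m} \int_{t-\tau}^{t} \left| e_i(u) \right|^{2} du, && (4)
\end{aligned}
$$

and the independence of each of the m terms in Equation 4 is due to the independence of the m sets of np parameters $W_{ij}^{(p_k)}$, $j \in \{1, \ldots, n\}$, $k \in \{1, \ldots, p\}$, associated with generating each component $M_i(t)$ of the output. Our strategy for optimizing the matrix of decoder kernels is to modify each of the kernel parameters continuously and in parallel, on a timescale set by τ, in proportion to the negative gradient of E(W(t), τ) with respect to that parameter:

$$
\begin{aligned}
-\nabla_{W_{ij}^{(p_k)}} E(W(t), \tau) &= -\frac{\partial E(W(t), \tau)}{\partial W_{ij}^{(p_k)}} && (5) \\
&= -2 \int_{t-\tau}^{t} e_i(u) \, \frac{\partial e_i(u)}{\partial W_{ij}^{(p_k)}} \, du && (6) \\
&= -2 \int_{t-\tau}^{t} e_i(u) \left( \frac{\partial W_{ij}(u)}{\partial W_{ij}^{(p_k)}} \circ N_j(u) \right) du. && (7)
\end{aligned}
$$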

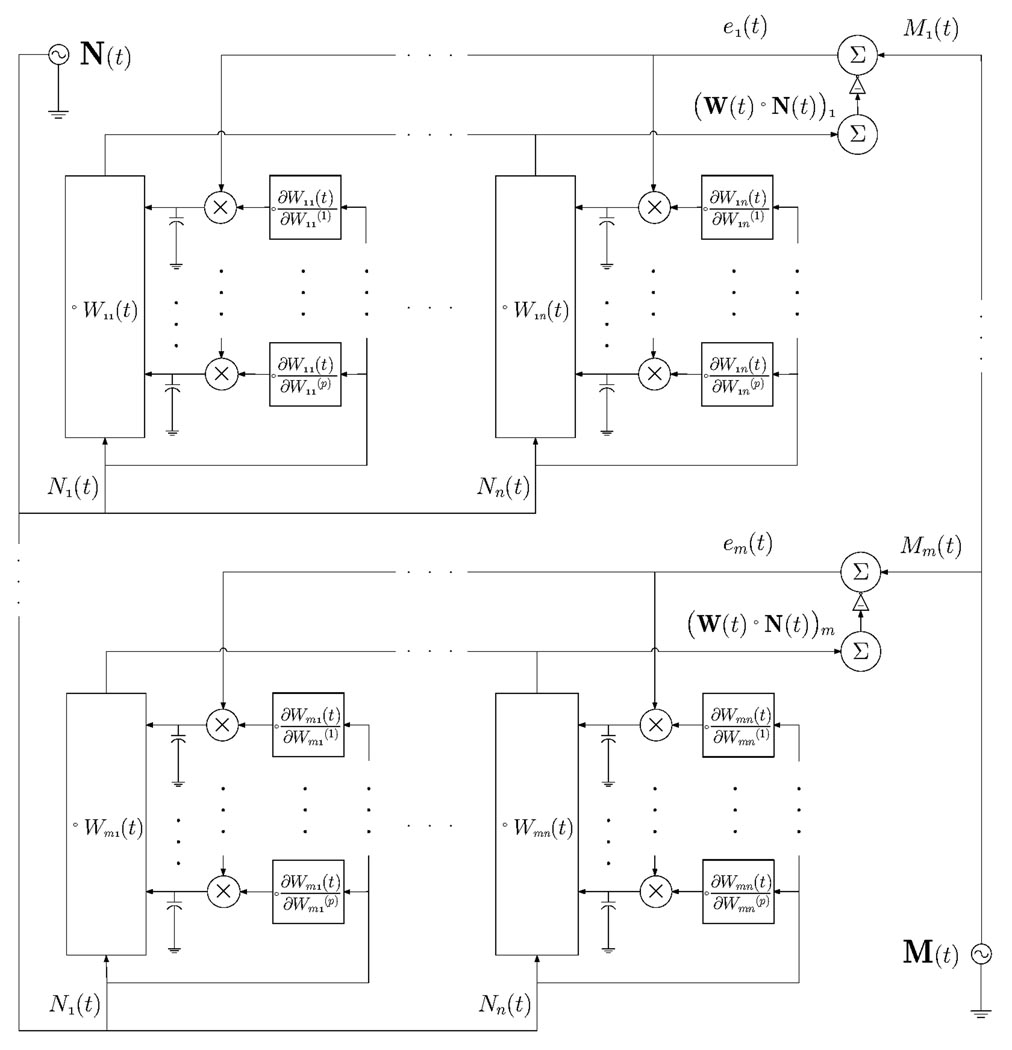

The learning algorithm refines W in a continuous-time fashion, using $-\vec{\nabla}E(t)$ as an error feedback signal to modify W(t), and incrementing each of the parameters $W_{ij}^{(p_k)}(t)$ in continuous time by a term proportional to $-\nabla_{W_{ij}^{(p_k)}} E(W(t), \tau)$ (the proportionality constant, ε, must be large enough to ensure quick learning but small enough to ensure learning stability). If W(t) is viewed as an array of linear filters operating on the neural input signal, the quantity used to increment each filter parameter can be described as the product, averaged over a time interval of length τ, of the error in the filter output and a secondarily filtered version of the filter input. The error term is identical for the parameters of all filters contributing to a given component of the output, $M_i(t)$. The secondarily filtered version of the input is generated by a secondary convolution kernel, $\partial W_{ij}(u) / \partial W_{ij}^{(p_k)}$, which depends on the functional form of each primary filter kernel and in general differs for each filter parameter. Figure 1 shows a block diagram for an analog circuit architecture that implements our decoding and optimization algorithm.

Fig. 1.

Block diagram of a computational architecture for linear convolutional decoding and learning.
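The parameter update described above (the windowed product of the output error and a secondarily filtered input, scaled by ε) can be sketched in discrete time. The fragment below is a minimal illustration and not the analog circuit of Figure 1: the function name `parameter_increment`, the sampling step `dt`, and the rectangular-sum approximation of the integral are assumptions introduced here.

```python
def parameter_increment(errors, filtered_inputs, eps, dt):
    """Approximate eps * (-2 * integral of e_i(u) * s(u) du) over one window.

    errors          -- samples of the output error e_i(u) on [t - tau, t]
    filtered_inputs -- samples s(u) of the input N_j after the secondary kernel
    eps             -- learning-rate constant (hypothetical, user-chosen value)
    dt              -- sampling step of this discrete-time sketch (an assumption)
    """
    if len(errors) != len(filtered_inputs):
        raise ValueError("error and filtered-input windows must have equal length")
    # Gradient of the windowed squared error with respect to one parameter.
    gradient = 2.0 * sum(e * s for e, s in zip(errors, filtered_inputs)) * dt
    # Step against the gradient.
    return -eps * gradient


# Example: the output overshoots (positive error) while the filtered input is
# positive, so the parameter is nudged downward.
window_errors = [0.2, 0.1, 0.3]
window_inputs = [1.0, 1.0, 0.5]
delta = parameter_increment(window_errors, window_inputs, eps=0.5, dt=0.01)
```

Because the same error samples serve every parameter that feeds a given output component, only the secondarily filtered input differs from one parameter to the next.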

Many functional forms for the convolution kernels are both theoretically possible and practical to implement using low-power analog circuitry. Our approach has been to emulate biological neural systems by choosing a biophysically inspired kernel whose impulse response approximates the postsynaptic currents biological neurons integrate when encoding and decoding neural signals in vivo [12]. Combining our decoding architecture with the choice of a first-order low-pass decoder kernel enables our low-power neural decoder to implement a biomimetic, continuous-time artificial neural network. Numerical experiments have also indicated that decoding using such biomimetic kernels can yield results comparable to those obtained using optimal linear decoders [13]. But in contrast with our on-line optimization scheme, optimal linear decoders are computed off-line after all training data have been collected. We have found that this simple choice of kernel offers effective performance in practice, and so we confine the present analysis to that kernel.

Two-parameter first-order low-pass filter kernels account for trajectory continuity by exponentially weighting the history of neural inputs:

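$$W_{ij}(t) = \frac{A_{ij}}{\tau_{ij}} \, e^{-t/\tau_{ij}} \tag{8}$$

where the two tunable kernel parameters are $W_{ij}^{(p_1)} = A_{ij}$, the low-pass filter gain, and $W_{ij}^{(p_2)} = \tau_{ij}$, the decay time over which past inputs $\vec{N}(t')$, $t' < t$, influence the present output estimate $\vec{M}(t) = W \circ \vec{N}(t)$. The filters used to tune the low-pass filter kernel parameters can be implemented using simple and compact analog circuitry. The gain parameters are tuned using low-pass filter kernels of the form

$$\frac{\partial W_{ij}(t)}{\partial A_{ij}} = \frac{1}{\tau_{ij}} \, e^{-t/\tau_{ij}} \tag{9}$$

while the time-constant parameters are tuned using band-pass filter kernels:

$$\frac{\partial W_{ij}(t)}{\partial \tau_{ij}} = \frac{A_{ij}}{\tau_{ij}^{2}} \left( \frac{t}{\tau_{ij}} - 1 \right) e^{-t/\tau_{ij}} \tag{10}$$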
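The low-pass and band-pass labels can be checked in the Laplace domain. The short derivation below is a sketch that assumes the causal, normalized kernel $W_{ij}(t) = (A_{ij}/\tau_{ij})\,e^{-t/\tau_{ij}}$ for $t \ge 0$ with $A_{ij} > 0$; the transfer-function symbol $H_{ij}(s)$ and the use of $\mathrm{i}$ for the imaginary unit are introduced here for illustration only.

```latex
\begin{aligned}
H_{ij}(s) &= \int_{0}^{\infty} \frac{A_{ij}}{\tau_{ij}} \, e^{-t/\tau_{ij}} \, e^{-st} \, dt
           = \frac{A_{ij}}{1 + \tau_{ij} s}, \\
\frac{\partial H_{ij}}{\partial A_{ij}} &= \frac{1}{1 + \tau_{ij} s}, \\
\frac{\partial H_{ij}}{\partial \tau_{ij}} &= -\frac{A_{ij} \, s}{(1 + \tau_{ij} s)^{2}}, \\
\left| \frac{\partial H_{ij}}{\partial \tau_{ij}} (\mathrm{i}\omega) \right|
          &= \frac{A_{ij} \, \omega}{1 + \tau_{ij}^{2} \omega^{2}}.
\end{aligned}
```

The gain sensitivity is a first-order low-pass response with unity gain at zero frequency. The time-constant sensitivity vanishes at $\omega = 0$, decays as $1/\omega$ at high frequencies, and peaks at $\omega = 1/\tau_{ij}$ with magnitude $A_{ij}/(2\tau_{ij})$, which is the band-pass behavior named in the text.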

When decoding discontinuous trajectories, such as sequences of discrete decisions, we can set the $\tau_{ij}$ to zero, yielding

$$W_{ij}(t) = A_{ij} \, \delta(t) \tag{11}$$

Such a decoding system, in which each kernel is a zeroth-order filter characterized by a single tunable constant, performs instantaneous linear decoding, which has successfully been used by others to decode neuronal population signals in the context of neural prosthetics [5], [14]. With kernels of this form, W(t) is analogous to matrices of synaptic weights encountered in artificial neural networks, and our optimization algorithm resembles a ‘delta-rule’ learning procedure [15].
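For the instantaneous case, the learning procedure reduces to a familiar delta-rule update of a weight matrix. The sketch below is a discrete-time illustration under stated assumptions, not the circuit described in this paper: the function name `train_delta_rule`, the step `dt`, the learning constant `eps`, the number of passes, and the toy data are all hypothetical.

```python
def train_delta_rule(inputs, targets, eps, dt, passes=200):
    """Tune gains A[i][j] of an instantaneous decoder M_i = sum_j A[i][j] * N_j.

    inputs[t]  -- list of n input values N_j at sample t
    targets[t] -- list of m correct output values at sample t
    """
    n, m = len(inputs[0]), len(targets[0])
    A = [[0.0] * n for _ in range(m)]
    for _ in range(passes):
        for x, target in zip(inputs, targets):
            for i in range(m):
                output = sum(A[i][j] * x[j] for j in range(n))
                error = output - target[i]  # decoder output minus correct output
                for j in range(n):
                    # Step against the gradient of the squared error, 2 * e_i * N_j.
                    A[i][j] -= eps * 2.0 * error * x[j] * dt
    return A


# Toy data generated from known gains, so learning can be checked against them.
true_A = [[0.5, -1.0], [2.0, 0.25]]
inputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]
targets = [[sum(a * x for a, x in zip(row, sample)) for row in true_A]
           for sample in inputs]
A = train_delta_rule(inputs, targets, eps=5.0, dt=0.01)
```

In this noiseless toy problem the learned gains approach `true_A`. If `eps * dt` is made too large relative to the input power, the updates diverge, which mirrors the stability constraint on ε noted in Section II.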

III. RESULTS

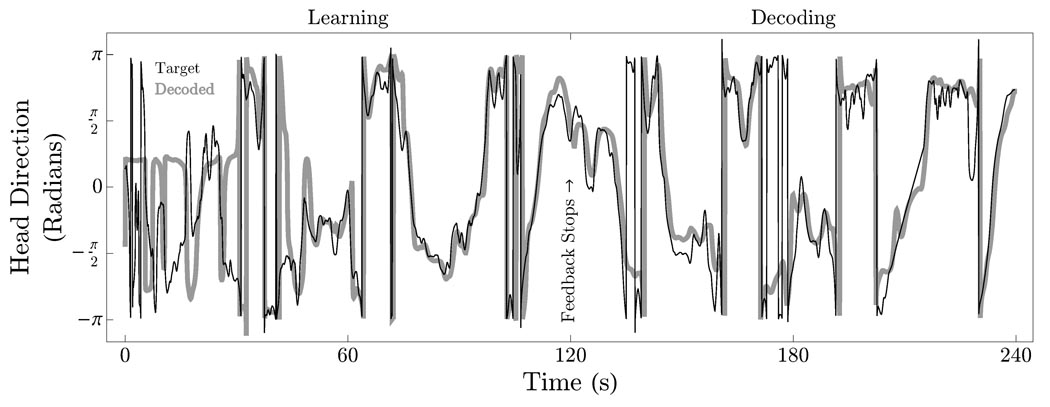

Head direction was decoded from the activity of n = 6 isolated thalamic neurons according to the method described in [16]. The adaptive filter parameters were implemented as micropower analog circuits and simulated in SPICE; they were optimized through gradient descent over training intervals of length T during which the decoder error, $e_i(t) = M_i(t) - \hat{M}_i(t)$ (where $\vec{M}(t) = (\cos(\theta(t)), \sin(\theta(t)))$ and θ denotes the head direction angle), was made available to the adaptive filter in the feedback configuration described in Section II for $t \in [0, T]$. Following these training intervals, feedback was discontinued and the performance of the decoder was assessed by comparing the decoder output $\vec{M}(t)$ with the correct output $\hat{\vec{M}}(t)$ for t > T.

Figure 2 compares the output of the decoder to the measured head direction over a 240 s interval. The filter parameters were trained over the interval $t \in [0, T]$ with T = 120 s. The figure shows $\vec{M}(t)$ (gray) tracking $\hat{\vec{M}}(t)$ (black) with increasing accuracy as training progresses, illustrating that while initial predictions are poor, they improve with feedback over the course of the training interval. Feedback is discontinued at t = 120 s. Qualitatively, the plots on the interval $t \in [120, 240]$ s illustrate that the output of the neural decoder reproduces the shape of the correct waveform, predicting head direction on the basis of neuronal spike rates.

Fig. 2.

Continuous decoding of head direction from neuronal spiking activity.
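Because head direction is represented here by a cosine and sine pair, a single angle can be recovered from the two decoder outputs when one is needed. The text does not state whether or how this conversion was performed, so the fragment below is only one possible sketch; the function names `head_direction` and `angular_error` are hypothetical.

```python
import math


def head_direction(m_cos, m_sin):
    """Angle in radians, in [0, 2*pi), implied by a (cosine, sine) output pair."""
    return math.atan2(m_sin, m_cos) % (2.0 * math.pi)


def angular_error(theta_decoded, theta_true):
    """Signed difference between two angles, wrapped into [-pi, pi)."""
    return (theta_decoded - theta_true + math.pi) % (2.0 * math.pi) - math.pi


# The pair need not lie exactly on the unit circle: atan2 uses only its direction.
theta = head_direction(0.0, 0.8)
wrapped = angular_error(0.1, 2.0 * math.pi - 0.1)
```

Wrapping the difference keeps an error measured across the 0 and 2π boundary small, which a plain subtraction of angles would not.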

IV. DISCUSSION

Simulations using basic circuit building-blocks for the modules shown in Figure 1 indicate that a single decoding module (corresponding to an adaptive kernel $W_{ij}$ and associated optimization circuitry, as diagrammed in Figure 1) should consume approximately 54 nW from a 1 V supply in 0.18 µm CMOS technology and require less than 3000 µm². Low power consumption is achieved through the use of subthreshold bias currents for transistors in the analog filters and other components. Analog preprocessing of raw neural input waveforms is accomplished by dual thresholding to detect action potentials on each input channel and then smoothing the resulting spike trains to generate mean firing rate input signals. SPICE simulations indicate that each analog preprocessing module should consume approximately 241 nW from a 1 V supply in 0.18 µm CMOS technology. A full-scale system with n = 100 neuronal inputs (comprising $\vec{N}(t)$) and m = 3 control parameters (comprising $\vec{M}(t)$) would require m × n = 300 decoding modules and consume less than 17 µW in the decoder and less than 25 µW in the preprocessing stages.
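The system-level figures follow from the per-module estimates by simple multiplication. The short calculation below reproduces that arithmetic; the variable names are illustrative, and the area figure is only an upper bound on the decoding modules, since the text gives an area estimate ("less than 3000 µm²") for those modules alone.

```python
n_inputs = 100                   # neuronal input channels
m_outputs = 3                    # control parameters
decoding_module_nw = 54.0        # approximate power per decoding module, in nW
preprocessing_module_nw = 241.0  # approximate power per preprocessing module, in nW
module_area_um2 = 3000.0         # upper bound on area per decoding module

decoding_modules = m_outputs * n_inputs
decoder_uw = decoding_modules * decoding_module_nw / 1000.0
preprocessing_uw = n_inputs * preprocessing_module_nw / 1000.0
total_uw = decoder_uw + preprocessing_uw
decoder_area_mm2 = decoding_modules * module_area_um2 / 1e6

print(f"decoding modules:  {decoding_modules}")
print(f"decoder power:     {decoder_uw:.1f} uW")
print(f"preprocessing:     {preprocessing_uw:.1f} uW")
print(f"combined:          {total_uw:.1f} uW")
print(f"decoder area:      under {decoder_area_mm2:.1f} mm^2")
```

The resulting decoder and preprocessing totals fall within the "less than 17 µW" and "less than 25 µW" bounds quoted above.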

Direct and power-efficient analysis and decoding of analog neural data within the implanted unit of a brain-machine interface could also facilitate extremely high data compression ratios. For example, the 36 Mb s⁻¹ required to transmit raw neural data from 100 channels could be compressed more than 100,000-fold to 300 b s⁻¹ of 3-channel motor-output information updated with 10-bit precision at 10 Hz. Such dramatic compression brings concomitant reductions in the power required for communication and digitization of neural data. Ultra-low-power analog preprocessing prior to digitization of neural signals could thus be beneficial in some applications.
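The compression figure can be verified directly from the channel counts, bit depths, and update rates given in the text. The variable names below are illustrative only.

```python
# Raw data: 100 channels, 12 bits per sample, 30 kHz sampling.
raw_bps = 100 * 12 * 30_000

# Decoded output: 3 channels, 10 bits per update, 10 Hz update rate.
decoded_bps = 3 * 10 * 10

compression_ratio = raw_bps / decoded_bps

print(f"raw data rate:     {raw_bps / 1e6:.0f} Mb/s")
print(f"decoded data rate: {decoded_bps} b/s")
print(f"compression ratio: {compression_ratio:,.0f}-fold")
```

The ratio works out to 120,000, consistent with the "more than 100,000-fold" figure.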

V. CONCLUSIONS

The algorithm and architecture presented here offer a practical approach to computationally efficient neural signal decoding, independent of the hardware used for their implementation. While the system is suitable for analog or digital implementation, we suggest that a micropower analog implementation trades some algorithmic programmability for reductions in power consumption that could facilitate implantation of a neural decoder within the brain. In particular, circuit simulations of our analog architecture indicate that a 100-channel, 3-motor-output neural decoder can be built with a total power budget of approximately 43 µW. Our work could also enable a 100,000-fold reduction in the bandwidth needed for wireless transmission of neural data, thereby reducing to nanowatt levels the power potentially required for wireless data telemetry from a brain implant. Our work suggests that highly power-efficient and area-efficient analog neural decoders that operate in real time can be useful components of brain-implantable neural prostheses, with potential applications in neural rehabilitation and experimental neuroscience. Through front-end preprocessing to perform neural decoding and data compression, algorithms and architectures such as those presented here can complement digital signal processing and wireless data transmission systems, offering significant increases in power and area efficiency at little cost.

ACKNOWLEDGMENTS

This work was funded in part by National Institutes of Health grants R01-NS056140 and R01-EY15545, the McGovern Institute Neurotechnology Program at MIT, and National Eye Institute grant R01-EY13337. Rapoport received support from a CIMIT–MIT Medical Engineering Fellowship.

Contributor Information

Benjamin I. Rapoport, Department of Electrical Engineering and Computer Science, Massachusetts Institute of Technology (MIT), Cambridge, Massachusetts 02139 USA; Harvard–MIT Division of Health Sciences and Technology, Cambridge, Massachusetts 02139 and Harvard Medical School, Boston, Massachusetts 02115.

Woradorn Wattanapanitch, Department of Electrical Engineering and Computer Science, Massachusetts Institute of Technology (MIT), Cambridge, Massachusetts 02139 USA.

Hector L. Penagos, MIT Department of Brain and Cognitive Sciences and the Harvard–MIT Division of Health Sciences and Technology.

Sam Musallam, Department of Electrical and Computer Engineering, McGill University, Montreal, Quebec, Canada.

Richard A. Andersen, Division of Biology, California Institute of Technology, Pasadena, California 91125.

Rahul Sarpeshkar, Email: rahuls@mit.edu.

REFERENCES

- 1. Chapin JK, Moxon KA, Markowitz RS, Nicolelis ML. Real-time control of a robot arm using simultaneously recorded neurons in the motor cortex. Nature Neuroscience. 1999;2:664–670. doi: 10.1038/10223.

- 2. Wessberg J, Stambaugh CR, Kralik JD, Beck PD, Laubach M, Chapin JK, Kim J, Biggs SJ, Srinivasan MA, Nicolelis MAL. Real-time prediction of hand trajectory by ensembles of cortical neurons in primates. Nature. 2000 November;408:361–365. doi: 10.1038/35042582.

- 3. Taylor DM, Tillery SIH, Schwartz AB. Direct cortical control of 3D neuroprosthetic devices. Science. 2002 June;296:1829–1832. doi: 10.1126/science.1070291.

- 4. Musallam S, Corneil BD, Greger B, Scherberger H, Andersen RA. Cognitive control signals for neural prosthetics. Science. 2004 July;305:258–262. doi: 10.1126/science.1097938.

- 5. Hochberg LR, Serruya MD, Friehs GM, Mukand JA, Saleh M, Caplan AH, Branner A, Chen D, Penn RD, Donoghue JP. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature. 2006 July;442:164–171. doi: 10.1038/nature04970.

- 6. Santhanam G, Ryu SI, Yu BM, Afshar A, Shenoy KV. A high-performance brain-computer interface. Nature. 2006 July;442:195–198. doi: 10.1038/nature04968.

- 7. Jackson A, Mavoori J, Fetz EE. Long-term motor cortex plasticity induced by an electronic neural implant. Nature. 2006 November;444:55–60. doi: 10.1038/nature05226.

- 8. Carmena JM, Lebedev MA, Crist RE, O'Doherty JE, Santucci DM, Dimitrov DF, Patil PG, Henriquez CS, Nicolelis MAL. Learning to control a brain-machine interface for reaching and grasping by primates. Public Library of Science Biology. 2003 October;1(2):1–16. doi: 10.1371/journal.pbio.0000042.

- 9. Velliste M, Perel S, Spalding MC, Whitford AS, Schwartz AB. Cortical control of a prosthetic arm for self-feeding. Nature. 2008 June;453(7198):1098–1101. doi: 10.1038/nature06996.

- 10. Lazzaro J, Wawrzynek J, Lippmann RP. A micropower analog circuit implementation of hidden Markov model state decoding. IEEE Journal of Solid-State Circuits. 1997 August;32(8):1200–1209.

- 11. Loeliger H-A, Tarköy F, Lustenberger F, Helfenstein M. Decoding in analog VLSI. IEEE Communications Magazine. 1999 April:99–101.

- 12. Arenz A, Silver RA, Schaefer AT, Margrie TW. The contribution of single synapses to sensory representation in vivo. Science. 2008 August;321:977–980. doi: 10.1126/science.1158391.

- 13. Eliasmith C, Anderson CH. Neural Engineering. MIT Press; 2003. chapter 4; pp. 112–115.

- 14. Wessberg J, Nicolelis MAL. Optimizing a linear algorithm for real-time robotic control using chronic cortical ensemble recordings in monkeys. Journal of Cognitive Neuroscience. 2004;16(6):1022–1035. doi: 10.1162/0898929041502652.

- 15. Haykin S. Neural Networks: A Comprehensive Foundation. Upper Saddle River, New Jersey: Prentice Hall; 1999.

- 16. Barbieri R, Frank LM, Quirk MC, Wilson MA, Brown EN. A time-dependent analysis of spatial information encoding in the rat hippocampus. Neurocomputing. 2000;32–33:629–635.