Abstract

Purpose

A method to register endoscopic and laparoscopic ultrasound (US) images in real time with pre-operative computed tomography (CT) data sets has been developed with the goal of improving diagnosis, biopsy guidance, and surgical interventions in the abdomen.

Methods

The technique, which has the potential to operate in real time, is based on a new phase correlation technique: LEPART, which specifies the location of a plane in the CT data which best corresponds to the US image. Validation of the method was carried out using an US phantom with cyst regions and with retrospective analysis of data sets from animal model experiments.

Results

The phantom validation study shows that local translation displacements can be recovered for each US frame with a root mean squared error of 1.56 ± 0.78 mm in less than 5 sec, using non-optimized algorithm implementations.

Conclusion

A new method for multimodality (preoperative CT and intraoperative US endoscopic images) registration to guide endoscopic interventions was developed and found to be efficient using clinically realistic datasets. The algorithm is inherently capable of being implemented in a parallel computing system so that full real time operation appears likely.

Keywords: Multi-modality registration, Phase correlation, Ultrasound-guided endoscopic, Ultrasound-guided laparoscopy, CT

Introduction

Minimally invasive procedures, such as endoscopic or laparoscopic interventions, have increased in volume, partially due to the availability of real time local imaging techniques that increase the operator’s confidence. Ultrasound is often used since it offers a flexible, easy to integrate, and real time imaging source that penetrates into tissue, thereby complementing standard optical (video) imaging. Further benefits may accrue from incorporating pre-procedure data; the gap between novices and expert operators can be reduced when using such “Image Registered” systems [15]. These systems are based on the use of preoperative volumetric CT (or MRI) images, loosely registered with the real time ultrasound image (Fig. 1). The operator can navigate more effectively and better understand the content of the real time ultrasound image by comparing it to a synthetic CT image formed in the plane of the preoperative volume corresponding to the US plane. Using a calibrated electromagnetic tracker, the registration error of this reference image is less than 5mm [2], which is adequate to guide many surgical procedures.

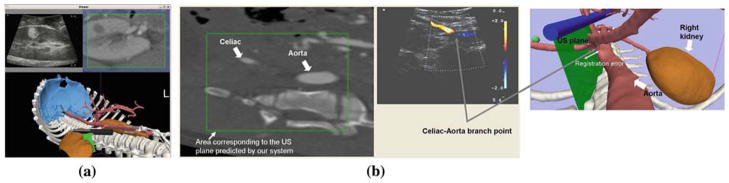

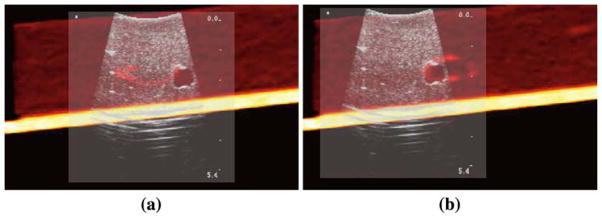

Fig. 1.

Image Registered system. The system uses an initial rigid body registration to find the global transformation between the preoperative CT scans and the OR coordinate system. a Laparoscopic examination of a kidney tumor. The tumor is shown both in the US image and the reformatted preoperative CT. b Scan of the branch point between the celiac and the aorta. The image shows the out-of-plane misalignment incurred by the system

A more ambitious goal is to improve the registration by doing a continuous real-time local correction based on incremental shifts between the ultrasound image and a subvolume of the CT data reformatted in the coordinate frame of the ultrasound. This approach neglects the possibilities of non-uniform warping or movement of tissue, but merely accommodates local displacements between the data from the two modalities. However, the image matching is restricted to a local region, so improvement is likely.

For practical utility we require that the registration occurs quickly: ideally with no lag, but within a few seconds at worst. Also, if the registration correction is rapid, organ shifts due to breathing (and other motions) may easily be accommodated. We note that our registration problem involves fitting a 2D slice to a 3D volume. Although other groups are successfully studying the registration of 3D ultrasound to 3D preoperative volumes [7–9], clinical laparoscopic and endoscopic procedures are carried out with 2D US probes; this is not likely to change in the near future. Other authors have successfully applied different registration strategies using a collection of tracked 2D transabdominal ultrasounds and CT [18,20]. Still, these works require a set of US images before the registration can be accomplished and the techniques are very demanding computationally.

We present here a method that is designed to register US images as they become available during the course of the intervention. We propose a new registration method based on phase correlation (PC). The direct application of PC to our problem is complicated by the different signal characteristics between the preoperative CT (or MRI) source, and the US source. Also, a successful approach must accommodate missing information in the intra-operative US images due to shadowing and phantom reflections caused by non-orthogonal incident angles between the US wave and the imaged object surface.

Background

The increasing use and quality of radiologic techniques (CT, MRI, SPECT, PET) for screening and diagnosis will stimulate the demand for minimally invasive biopsy and intervention. While these procedures might optimally be conducted inside the imaging device itself, practical considerations, including convenience and cost, will continue to limit “in-scanner“ approaches. Thus endoscopic and (to some extent) laparoscopic imaging are likely to be more broadly applied to the follow-up diagnosis of disease and the staging of care. Also, optical biopsy techniques, which can use smaller probes, may be increasingly utilized.

One approach to improving the guidance of instruments in real time is the use of intra-procedure ultrasound. While ultrasonic laparoscopic and endoscopic devices are widely available, they have not been maximally adopted by gastroenterologists and surgeons due to the long learning curve and lack of confidence in ultrasound interpretation, which manifests as difficulty in navigation and targeting for the probe to reach the radiologically determined target. We have therefore developed Image Registration techniques [2,15] which show the position and orientation of the ultrasound probe in anatomic context and display of re-formatted CT (or MR) reference images to improve the accuracy and confidence of physician operators (see Fig. 1b). The Image Registration approach is based on two assumptions:

The operator may function most effectively when presented with a simplified representation of the anatomy showing only key structures to guide probe positioning, and

Real time display of the position and orientation in this anatomic context may be more efficient than the use of more complex 3D plane displays of the radiologic information.

That is, Image Registration techniques may have added value because the instrument position and orientation is displayed accessibly and understood easily and intuitively.

Experimental studies in porcine models have shown that both laparoscopic ultrasound (LUS) and endoscopic ultrasound (EUS) users are significantly more effective at identifying multiple anatomic targets in a timed trial using the Image Registration system [16]. Kinematics measurements, which record and analyze position, orientation, and motion of instruments, have been shown to correlate well with the expertise of the user [14]. Experiments comparing LUS and EUS user performance with and without Image Registration (IR) show that the IR system permits novice users to perform like experts in some tasks, and even appears to improve the performance of experts in limited tests [15]. Users overwhelmingly preferred the Image Registration approach for both endoscopy and laparoscopy.

Functionally, our system requires four capabilities:

construction of a useful model from the pre-procedure 3D data (Segmentation),

alignment and fitting of the model to the real time anatomy (Registration),

determining the position of the instruments (Tracking), and

presenting the information to guide the procedure (Display).

A typical screen-shot of the display of our system is shown in Fig. 1a. Table 1 shows key attributes for these sub-system functions to make a successful Image Registration system.

Table 1.

Main attributes for a imaged registered system for laparoscopic and endoscopic interventions

| Subsystem | Key attributes |

|---|---|

| Segmentation | Semi-automatic, rapid |

| Registration | Rapid set up, accurate, complaint with patient and organ motion |

| EM tracking | Noise-free, unobtrusive, easily implemented under sterile conditions |

| Display | Intuitively understood, high quality, real-time (lag free) |

The method presented in this paper addresses the improvement of registration for small shifts from the initial registration. The most relevant of these motions is the out-of-plane mis-registration between the US image and the reformatted CT image. While in-plane shifts can more easily be recovered by the operator without a huge mental burden, out-of-plane misalignments may be critical for clinical interpretation. The integration of such a registration technique has to be done in the main tracking loop, so continuous updates can be made available as new US images provide more information about the mis-registration that the system is incurring.

Traditional phase correlation

Phase correlation is a well-known image registration method that exploits the Fourier shift theorem. Even though PC is limited to estimating translational shifts between two images, this approach is robust to frequency-dependent noise and limited image overlap areas. Furthermore, it can be calculated rapidly by means of the fast Fourier transform (FFT). This method can also achieve sub-pixel accuracy by using spectral techniques [12] or by means of least squares fitting of a Dirichlet function [3].

Let fCT (x) and fUS (x) be two n-D images defined in ℝn, where n = 2 or 3. In our case, fCT and fUS will be the CT and US image respectively. For now we will also assume that both images have the same dimensionality. Let f̂CT (u) =  {fCT} = |f̂CT|ejΦCT and f̂US (u) =

{fCT} = |f̂CT|ejΦCT and f̂US (u) =  {fUS} = f̂US = |f̂|ejΦUS be the corresponding Fourier transform of the images, where u is the spatial frequency coordinate. Let us assume that the CT image is shifted in the spatial domain fCT(x − x0) where x0 is a translation. The normalized cross power spectrum, i.e the PC function, is defined as

{fUS} = f̂US = |f̂|ejΦUS be the corresponding Fourier transform of the images, where u is the spatial frequency coordinate. Let us assume that the CT image is shifted in the spatial domain fCT(x − x0) where x0 is a translation. The normalized cross power spectrum, i.e the PC function, is defined as

| (1) |

where * denotes the complex conjugate and uT x is the inner product between u and x0. As we can see, the PC, Ψ, between the US image and the translated CT image is given by a complex exponential of a linear term incorporating the translation. However the phase discrepancy between the images, Φd (u) = ΦCT(u) − ΦUS(u), appears in the PC function as a nuisance term. Therefore, the PC function in the spatial domain, Ψ(x), is not merely a Dirac delta centered at x0 but additional terms appear complicating the estimation process.

The phase discrepancy term, Φd (u), arises from several sources: (1) Noise in both images. (2) Deformations between the images that cannot be accounted by a simple translation. (3) Differences between the phase signatures of the images, which are particularly evident when the images come from different imaging modalities. Since noise is not correlated with the signal, it is therefore easier to manage [12]. However, phase discrepancy due to high order deformations and multimodality imaging exhibits structured components that may yield a concentration of the phase spectrum energy in a harmonic different from that which corresponds to the desired translation.

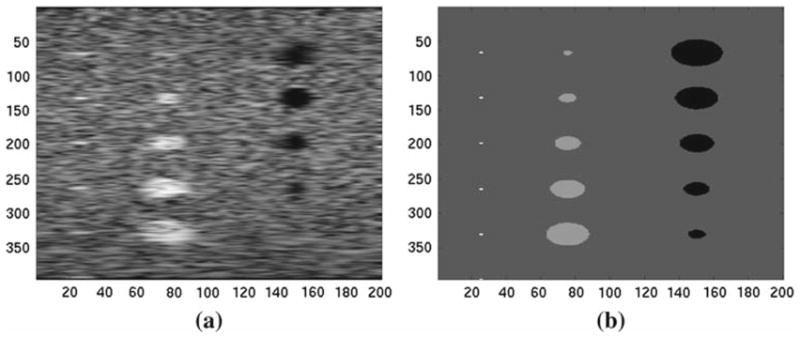

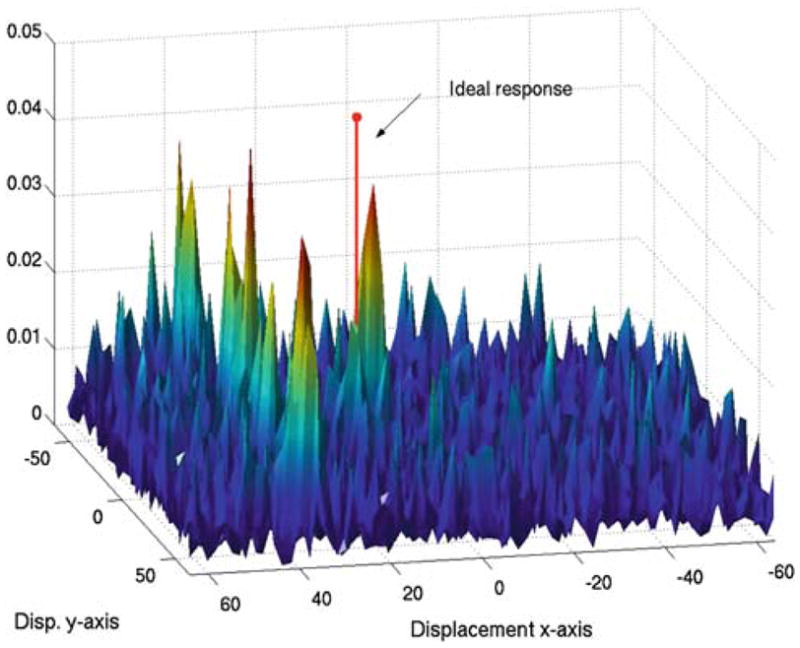

Since we seek to register images from different modalities, phase discrepancy makes PC, as defined in Eq. (1), impractical. To illustrate this, we simulated an US image of a cyst phantom using the simulator field II [4] (hereon we will use this example to illustrate our method). The simulation is based on a scattering map (Fig. 2b). This represents the power of the scattering sites distributed in the medium and is thus a realization of the underlying tissue density (and therefore closer to the CT image properties). Let us assume that the scattering map is translated x0 = [15, 10] in pixel units with respect to the ultrasound image. Figure 3 shows the PC function in the spatial domain when traditional PC is applied to the translated scattering map and the simulated ultrasound image. Instead of a Dirac delta function at x0, the PC function exhibits multiple local maxima and the global maximum is not located at the corresponding displacement location as desired.

Fig. 2.

Simulated ultrasound image (a) and the corresponding scattering map (b). These images are used to illustrate the phase discrepancy associated to the use of different modalities. The scattering map is a density map of the scatters element that forms the US signal. In our example, the scattering map can be associated with a CT image, or at least it has phase properties that are closer to a CT image of the same phantom

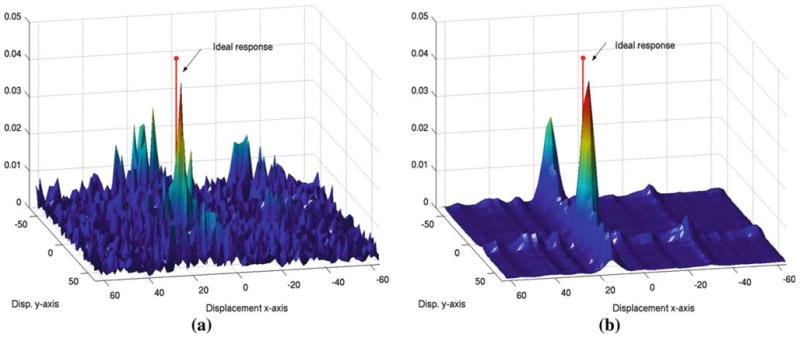

Fig. 3.

Traditional phase correlation, ΨfCTfUS, between images a and b in Fig. 2 when a displacement x0 = [15, 10] is applied

In summary, traditional PC methods cannot be directly applied to multimodality 2D-to-3D registration due to:

Different phase spectra content in the signals to registered: CT or MRI and US

Different signal dimensionality: we have to perform a 2D to 3D registration where the US data is given in a 2D plane with a given known orientation and the CT data is given in a 3D grid that has an initial positioning with respect to the 2D plane based on a initial registration process when the patient is place in the OR table.

Method

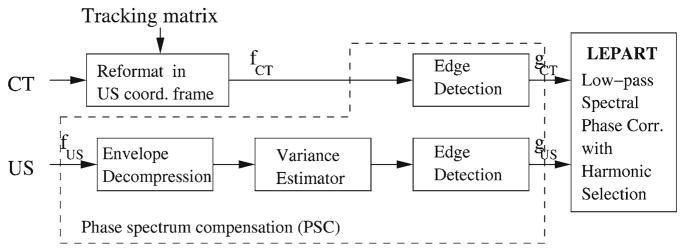

Here we present a new PC-based method which offers a robust approach to multimodality registration, specially for the registration of US to CT. Figure 4 outlines our approach, which we now discuss in detail:

Fig. 4.

Approach to multimodality registration based on phase correlation

Phase spectrum compensation

The first step of our method is to produce phase compensation between the CT and the US signal by preprocessing the signals from both modalities. This step is aimed at suppressing the phase discrepancy term, Φd (u).

Ultrasound preprocessing

Our approach is similar to the one followed by other authors [7,8] where the registration is achieved using edge information. The ultrasound signal is generated by a scattering process which causes characteristic speckle noise. Although the speckle carries useful information about the object medium, this information has a phase component that is not present in the CT image; therefore reducing the speckle component can improve the registration process.

Before performing the edge detection, the US signal is processed in the following manner:

The RF ultrasound signal is usually compressed to accommodate the dynamic range of the display device. This compression has been suggested to be a logarithm mapping [5], fUSc = D log(fUS) + C. To recover the RF signal we compute the compression parameter D using the method proposed by Kaplan and Ma [5] based on second order moment of the log-compressed Rayleigh distribution.

-

After that, we estimate the scattering power map, σUS(x), from the uncompressed ultrasound image using the known statistic of the radio frequency (RF) envelope magnitude. Under ideal conditions and assuming that the medium contains a high number of randomly distributed scatterers, the ultrasound signal follows a Rayleigh distribution [17]. The Rayleigh distribution is specified by one parameter, the variance or power of the scatters, that can be estimated in a Maximum-Likelihood sense as

(2) where

is a squared neighborhood around location x and N is the number of samples in the neighborhood. Recovering this variance yields an estimation of the scattering map for that ultrasound image.

is a squared neighborhood around location x and N is the number of samples in the neighborhood. Recovering this variance yields an estimation of the scattering map for that ultrasound image.

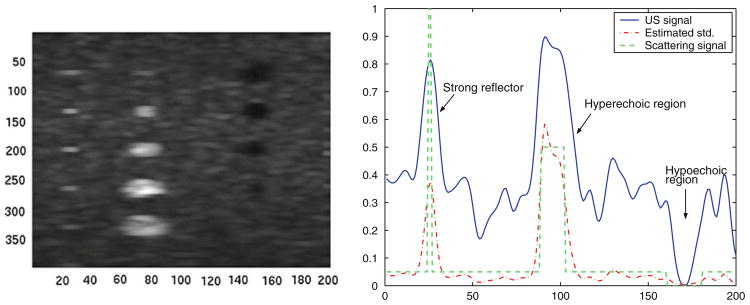

An edge detection based on the magnitude of the gradient of the estimated scattering signal, σUS, is then applied. To illustrate this process, Fig. 5 shows the estimated σUS for a horizontal scan-line corresponding to the cyst phantom shown in Fig. 2a. We observe that the estimated signal closely approximates the scattering map. The edge detection is performed over the estimated scattering map. The edge image is smoothed with a Gaussian kernel to remove high frequency components corresponding to spurious edges.

Fig. 5.

Estimated scattering map, σUS(x), after decompression and variance estimation. Left Scattering map image corresponding to the ultrasound image shown in Fig. 2a. Right Horizontal line comparing the US signal, the estimated scattering map by the proposed variance estimator and the real scattering map

CT preprocessing

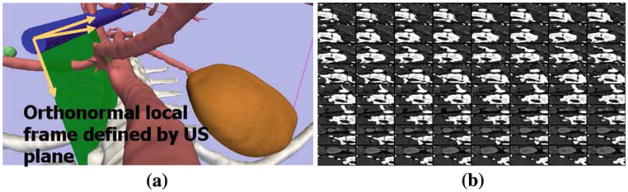

The CT is reformatted in the coordinate system of the ultrasound B-scan plane using the tracking information [2]. Figure 6a shows an schematic view of the frame attached to the US probe, as defined by the tracking information, that is used to locally reformat the CT volume. Figure 6b shows the corresponding reformatted CT as an overlay. Each image tile corresponds to one z-slice in the local CT volume. A field-of-view spanning a large enough region is usually defined based on the expected maximum displacement that can be recovered.

Fig. 6.

CT volume reformatted in the local frame defined by the US probe. a shows an illustration of the US B-scan plane and the local frame attached to the plane that is used to reformat the CT volume. b shows a tiled view of the reformatted CT images corresponding to the US plane shows in a

Since boundaries between tissues with different CT densities may show an acoustic impedance, we define acoustic interfaces as areas with a gradient magnitude in the CT. Following on with our illustrative example, Fig. 7a shows the result of applying the traditional PC method after the phase spectra has been compensated. In this case, the global maximum is close to the ideal solution. Let us denote gCT and gUS as the resulting signals after the phase spectrum compensation (PSC) process for the CT and the US images respectively.

Fig. 7.

Phase correlation after phase spectrum compensation (a) and after spectral projected phase correlation (b). Note how spectral projection reduces the amount of noise in the phase correlation image; however, two local maxima still remains

LEPART: Low-pass spEctral Phase correlAtion with HaRmonic selecTion

Although the previous preprocessing introduces a normalization of the signal phase spectra, additional terms remain that preclude a robust estimation of the translation using the traditional PC method. To overcome this problem, we have developed a new method based on PC named LEPART. This method has two major components: (1) low dimensional spectral projection of the PC function into a rank-1 approximation and (2) harmonic selection.

Spectral projection

This stage follows the method proposed by Hoge [12] for accurate PC. In 2D, the PC matrix is ideally a rank-1 matrix; therefore the estimation of the displacement can be done by finding the best rank-1 matrix that approximates the estimated correlation matrix from the data. This can be achieved by means of singular value decomposition (SVD). SVD is a spectral technique that projects a matrix onto a set of r orthogonal eigenvectors that span the closest linear r-dimensional subspace to that matrix. In this case, finding the largest singular eigenvectors (one per spatial dimension) yields the best rank-1 approximation to the correlation method. This method has been also extended to deal with multiple dimensions [13] by means of the higher-order SVD [1]. Before applying the higher-order SVD, the PC is masked with a rectangular window to remove high frequency components that introduce noise into the projection and spectral distortion due to aliasing and border effects (as well as reducing the number of data points to be analyzed through the higher-order SVD). The windowed PC is defined as

| (3) |

where ΠBc (ui) is the rectangular function along dimension ui with width 2Bc. Then, the windowed PC is decomposed using the higher-order SVD

| (4) |

where qi (ui) is a complex eigenvector that spans the projection of Φw(u) along the dimensions i. The projected PC for our example is shown in Fig. 7b. It is interesting to note that a clear global maximum exists, although other extrema appear. The translation for each dimension can be independently worked out with sub-pixel resolution by fitting a line to the unwrapped phase of each 1D signal qi (ui) in a least squares sense. This is one of the main advantages of the spectral projection method. Following with our example, Fig. 8a shows the unwrapped phase of the eigenvectors corresponding to the axis x and y.

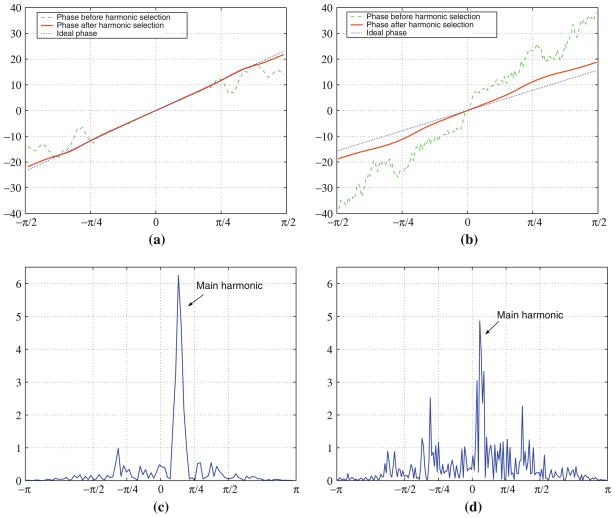

Fig. 8.

Harmonic selection for the projected phase correlation. a and b shows the phase of the filtered eigenvectors and , respectively. c and d shows the Fourier transform of qx (ux) and qy (uy), respectively

Harmonic selection

As shown in Figs. 7b and 8a, the unwrapped phase of qi (ui) can be in some instances highly non-linear. This non-linear behavior is due to the presence of several harmonics in the projected phase that correspond to multiple potential fittings. Harmonics can appear due to remaining structured components of the phase discrepancy. To increase the robustness, we have therefore implemented a harmonic selection process.

The harmonic selection stage works as follows. The optimal harmonic, , is computed by finding the principal harmonic of qi (ui) by means of its Fourier transform

| (5) |

where υi is the frequency coordinate of qi and H (υi) is a Hamming window that is designed to give more importance to low frequency harmonics, therefore smaller rather than larger displacements. Once the harmonic frequency has been found, the filtered eigenvector is computed by applying a band pass filtered centered at .

| (6) |

where Bhc is the bandwidth of the bandpass filter.

The result of applying the harmonic selection to our examples is shown in Fig. 8. The Fourier magnitude spectra of qx (ux) and qy (uy) is shown in Fig. 8c, d, respectively. The filtered eigenvectors are shown in Fig. 8a, b for the displacement in x and y respectively. It is noticeable that the phase term is quite linear after the harmonic selection and comparably close to the linear phase induced by the applied translation.

Linear least squares fitting

As mentioned above, the last step in LEPART is a least squares fitting of a line to the unwrapped phase of for each axis. The slope of the fitting corresponds to the estimated translation term x̂0 under consideration.

2D/3D registration

The approach outlined so far assumes that the images to be registered have the same dimensionality. In our problem this is not the case: the ultrasound image is defined in a 2D plane and the CT image is a 3D volume reformatted in the coordinates defined by the ultrasound plane.

The ultrasound B-scan plane can be rewritten as a 3-dimensional function by means of the discrete delta function as

| (7) |

where (x1, x2, x3) is the 3D discrete coordinate system of the reformatted CT. Then, the 3D Discrete Fourier transform of Eq. (7) is given by

| (8) |

where δ is the discrete delta function [11], * is the convolution operator and L is the number of slices along the u3 axis. In summary, the 3D Fourier representation of the 2D ultrasound plane is, no more no less, the replication the 2D spectrum along the new axis. Then, the LEPART method is applied in 3D, where the 3D Fourier representation of the US signal is constructed by replicating its 2D spectrum. The spectrum replication comes at no additional computational cost.

In summary, LEPART is a PC method for the registration of 2D ultrasound to 3D CT. After the PSC stage, the ultrasound edge image, gUS(x1, x2), is transformed to the Fourier domain and the spectrum is replicated according to Eq. (8). The CT edge volume, gCT(x1, x2, x3), is reformatted in the local coordinate frame given by the US and is also transformed to the Fourier domain. Then, LEPART is applied as described in “LEPART: Low-pass spEctral Phase correlAtion with HaRmonic selecTion”.

Results

Phantom study

The adequacy and accuracy of the proposed method for endoscopic and laparoscopic surgery has been validated by means of an abdominal ultrasound phantom (Fig. 9). The phantom consists of a ultrasound pad with several hypo and hyper-echoic regions. The phantom was scanned in a Siemens Sensation Somaton 64 Slice CT scanner with 0.6 mm isotropic voxel size. A sequence of ultrasound images have been acquired using a laparoscopic US probe (BK-Medical). The images were taken under controlled conditions so registration error was minimal. Additional, manually extracted features in both the CT and US were used to increase the registration accuracy between both modalities. This was considered our gold standard for validation. We selected 5 ultrasound slices where different tumors were visible. The CT was reformatted in a 0.3 mm isotropic grid and 20 mm × 5 mm × 20 mm margins were added in x, y and z direction respectively for each US image. We drew 500 realization for each location of uniformly random shifts in the range −5 to 5 mm. The parameters used in our algorithm were: Bc = π/8, Bhc = π/12,  = [7, 7]. The registration error results are shown in Table 2.

= [7, 7]. The registration error results are shown in Table 2.

Fig. 9.

Model of the abdominal US phantom used for validation. The blue phantom (Blue Phantom, Kirkland, WA) is made of silicone with acoustic properties similar to human tissue. The phantom has different cystic regions with different echogenicity. The phantom was mounted on a casing simulating a human torso that can be used to explore the region using either an endoscopic or laparoscopic probe

Table 2.

Numerical analysis of LEPART as a robust multimodality registration method by Monte Carlo simulation

| Method | RMS Registration Error (mm) |

Execution timea (s) | |||

|---|---|---|---|---|---|

| Mean | SD | Min | Max | ||

| Spectral PC [13] without PSC | 12.997 | 0.178 | 12.896 | 13.281 | 3.73 |

| Spectral PC [13] with PSC | 3.376 | 2.599 | 1.135 | 14.241 | 3.85 |

| LEPART without PSC | 1.799 | 0.681 | 0.328 | 4.856 | 3.92 |

| LEPART with PSC | 1.558 | 0.781 | 0.179 | 4.414 | 4.25 |

Mean execution time in an Intel Centrino 2 GHz and Matlab implementation

LEPART with PSC achieves a registration whose RMS error is less than 2 mm. LEPART seems to be a robust approach to multimodality image registration even when PSC is not applied, therefore accuracy can be sacrificed for the sake of performance. We have noticed that Bc plays an important role in finding a stable solution, albeit limiting the range of displacement that can be recovered. Increasing Bc above π/4 leads to unstable results. The results for one of the realizations before and after registration are shown in Fig. 10.

Fig. 10.

Example of one of the registrations for one of the tumor a before and b after applying LEPART with PSC

Retrospective study

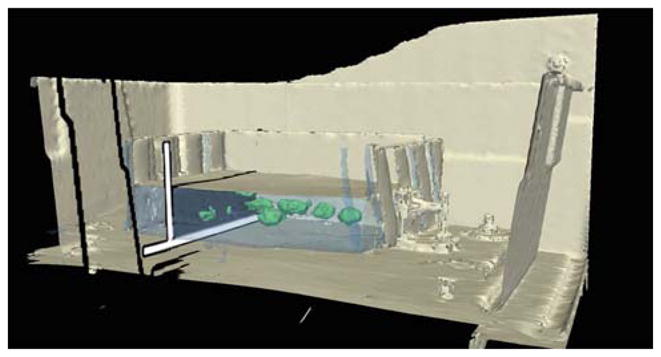

We have tested our method under real surgical conditions using a retrospective specimen abdominal study. A laparoscopic experiment was conducted in a porcine model using the system described in Sect. 2. A preoperative CT scans was acquired with a Siemens Sensation 64 with contrast-enhancement to visualize vascular structures. The CT resolution was 0.6 mm isotropic. The US images were provided by a BK Panther Laparoscopic Ultrasound system. The laparoscopic probe was equipped with an electromagnetic tracker (Micro-BIRD, Ascension Technology Corp., Burlington, VT), and was connected to the computer through a PCI board. The sensor attached to the endoscope tip was 1.8 mm in diameter and 8.4 mm in length. Data from the US system and the tracking system were recorded using our navigation system for two targeted structures: the celiac-aorta branch with Doppler enabled and the right kidney. The acquisitions were done using the nominal system operation after an initial rigid body registration. The goal was to successfully recover the displacement using LEPART retrospectively.

The results for the celiac-aorta branch are shown in Fig. 11. Figure 11a depicts the clear displacement in the z-direction with respect to the US probe. While the branch is imaged in the US, as it is reflected in the Doppler signal shown in Fig. 11b, the reformatted CT provided by the system, shown in Fig. 11c, does not align with the underlying US. The results after applying LEPART is shown in Fig. 11d. The displacement recovered by LEPART was (−0.6, −7.5, 2.7) mm defined with respect to the local US frame. We can see how the method is able to recover the z-displacement until the branch point is clearly visible while it was missing using standard tracked US, as depicted in Fig. 11a. Being able to recover z-displacements is critical given that in plane misregistration can be easily identified by the tracked US system operator.

Fig. 11.

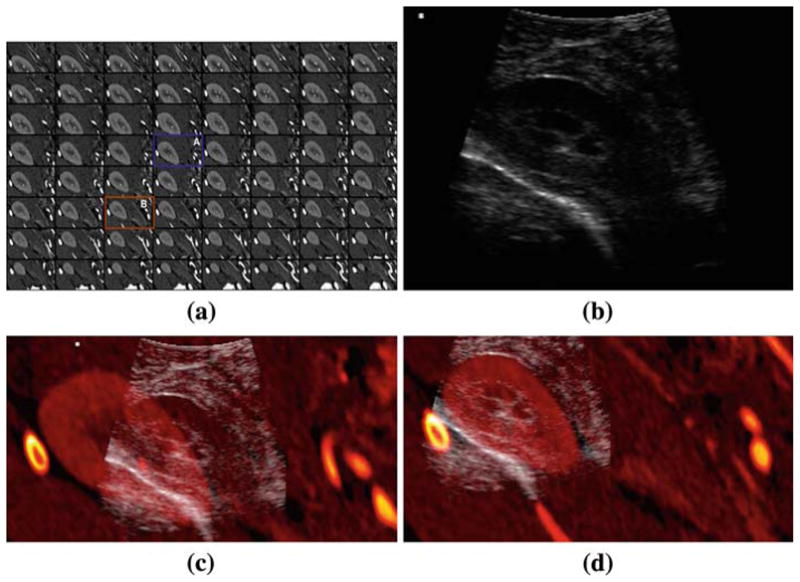

Registration in an animal model: celiac-aorta branch. a 3D view of the tracked probe with respect to the anatomy extracted from CT before applying the proposed registration method. b Ultrasound image of the celiac-aorta branch corresponding to the 3D view shown in (a). Fusion of the CT and US before (c) and after (d) registration. The branch point is successfully recovered in the reformatted CT view after registration

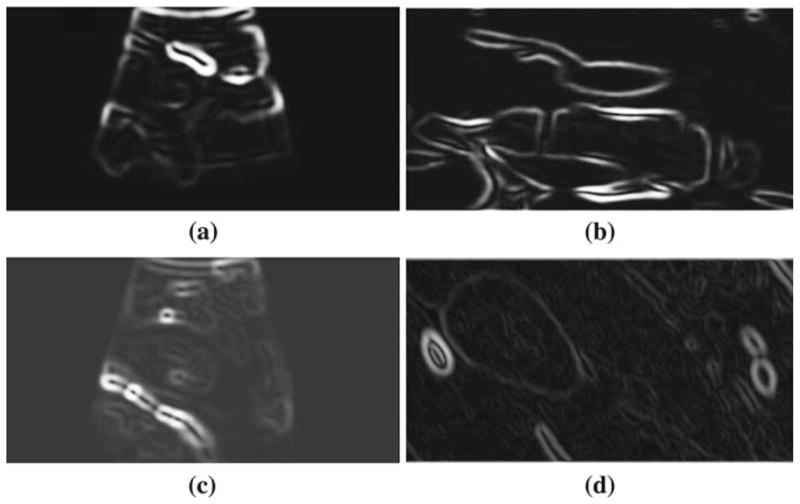

The registration result for the kidney case is shown in Fig. 12. The displacement computed by LEPART was (−9.6, −16.8, −4.5) mm. Both an out-of-plane and in-plane displacement is recovered to yield a good alignment of the outermost surface of the kidney, as well as the internal capsule. The registration worked reasonably well given the amount of edge content present in the presented cases. The edge maps generated by the PSC step described in Sect. 5, that are used as inputs for LEPART, are shown in Fig. 13. LEPART is able to recover the discrepancy between the images even though some edges are either spurious or partially missing in the US source.

Fig. 12.

Registration in an animal model: right kidney. a Sequence of the CT volume reformatted in the local frame given by the US probe coordinate system. b The CT slice corresponding to the US plane before (A) and after (B) registration are highlighted. Fusion of the CT and US before (c) and after (d) registration. The kidney surface observable in the US is accurately aligned with the surface defined by CT

Fig. 13.

Edge images used by LEPART as input data to generate the registration results. a and b depicts the edge images from US (gUS) and CT (gCT), respectively, for the celiac-aorta branch case. c and d shows the same edge maps for the kidney case. For the CT edge images, only one slice is shown corresponding to the optimal slice resolved by the registration algorithm

Discussion

The presented registration framework has been developed to be used with abdominal laparoscopic and endoscopic ultrasound images without relying on matching specific geometric characteristics. Instead, it uses edge information between tissues with different acoustic properties and CT densities to perform PC. In this sense, our approach is not organ specific. However, the edge features must be present in both the US and CT images. This is not always the case, due to the aberrations and distortions usually encountered in US imaging, for example shadowing. In our experience, the kidneys and the vascular structures in the abdomen present enough contrast and edge information to guide the registration process. As long as minimal conditions are met for the amount of structural content both in the US and the CT images, the algorithm is not organ-specific per se.

Edge information has been used as the main intermediate modality to achieve PSC and be able to deal with the multimodality nature of the problem. However, other approaches to PSC are possible and recent works on simulation of US images from [10,19] have shown encouraging results. In turn, the simulated US is used in conjunction with the acquired US to perform the registration. It is our interest to explore how LEPART performs when using the simulated US as its input. We expect to exploit the phase information contained in tissues with well-defined speckled characteristics that we are not currently benefiting from.

Our results show that the registration is achieved within a few seconds on a conventional laptop PC. A specialized implementation using advanced computing techniques that leverage the Graphics Processing Unit (GPU) is expected to achieve at least 10 frame per seconds registration update, which should suffice for clinical use. This target frame rate is based on the fact that the underlying core operation of the presented approach is the FFT. It has been shown that the FFT can be optimally implemented on a GPU [6]. Such implementations are currently commercially available in CUDA, the parallel computing architecture developed by NVIDIA.

Our current method poses several limitations that have to be considered. LEPART is only capable of recovering small translations of more or less rigid structures, which may limit its utility to reduced field of view imaging volumes. Larger displacements and other types of deformations might be accommodated by applying a fit of the deformation model over a temporal window. Another major limitation is related to the fact that enough structural information has to be present in the US images to achieve a successful registration. During a regular intervention, some US frames lack any information at all, mostly due to shadowing or poor acoustic coupling. Those frames ideally should not be considered in the registration process. An automatic method to perform frame selection would be desired before LEPART, or even any other registration method, is applied. We are currently exploring different alternatives based on the information content provided by the US image. Lastly, the current study only presents a phantom validation that may reveal limited information about the registration accuracy of LEPART in a clinical situation, future research lines will include an in-vivo validation of LEPART. The clinical registration examples were based on a porcine animal model. Further research is needed to assess the performance of LEPART in human studies.

The systems which may clinically use LEPART are comparatively low in cost. They consist of a personal computer workstation with parallel graphics capability, a flat panel display, and an electromagnetic tracker system. At current commercial prices, this system can be implemented in an endoscopy or laparoscopy suite for less than $15,000. In addition, the system requires very little training; as shown in prior work [15,16]; new users use it effectively with little orientation and no training.

Conclusions

In this paper, we have presented a multimodality registration method for preoperative CT images and intraoperative US endoscopic images to guide endoscopic interventions. Unlike traditional registration methods, the registration paradigm presented in this paper is unique in that:

The registration technique can potentially work in a real-time mode producing continuous updates of the changes between the preoperative image and the US image.

The registration technique is local to the location of the US frame. The locality of the image source allow us to use the assumption of quasi-translational misalignments.

LEPART appears to be a fast and robust technique for near real-time multimodality registration (under 5 s) of 2D to 3D data sets. For the cases studied, the LEPART approach gives significantly smaller registration error (Table 2) in comparison to traditional PC techniques.

Acknowledgments

Portions of this work were sponsored by NIH grant U41 RR019703, CIMIT and the US Department of the Army under DAMD 17-02-2-0006. The information does not necessarily reflect the position of the government and no official endorsement should be inferred. Carl-Fredrik Westin was supported in part by the NIH grants R01 MH074794, P41 RR13218.

Contributor Information

Raúl San José Estépar, Email: rjosest@bwh.harvard.edu, Laboratory of Mathematics in Imaging, Brigham and Women’s Hospital, Boston, MA, USA, Surgical Planning Laboratory, Brigham and Women’s Hospital, Boston, MA, USA.

Carl-Fredrik Westin, Email: westin@bwh.harvard.edu, Laboratory of Mathematics in Imaging, Brigham and Women’s Hospital, Boston, MA, USA, Surgical Planning Laboratory, Brigham and Women’s Hospital, Boston, MA, USA.

Kirby G. Vosburgh, Email: kvosburgh@partners.org, Surgical Planning Laboratory, Brigham and Women’s Hospital, Boston, MA, USA. Center for Integration of Medicine and Innovative Technology (CIMIT), Boston, MA, USA

References

- 1.de Lathauwer L, de Moor B, Vandewalle J. A multilinear singular value decomposition. SIAM J Matrix Anal Appl. 2000;21(4):1253–1278. [Google Scholar]

- 2.San José Estépar R, Stylopoulos N, Ellis R, Samset E, Westin C-F, Thompson C, Vosburgh K. Towards scarless surgery: an endoscopic ultrasound navigation system for transgastric access procedures. Comput Aided Surg. 2007;12(6):311–324. doi: 10.3109/10929080701746892. [DOI] [PubMed] [Google Scholar]

- 3.Foroosh H, Zerubia J, Berthod M. Extension of phase correlation to sub-pixel registration. IEEE Trans Image Process. 2002;11(3):188–200. doi: 10.1109/83.988953. [DOI] [PubMed] [Google Scholar]

- 4.Jensen JA. Field: a program for simulating ultrasound systems. Proceedings of the 10th Nordic-Baltic conference on Biomedical imaging, medical and biological engineering and computing in tampere; 1996. pp. 351–353. [Google Scholar]

- 5.Kaplan D, Ma Q. On the statistical characteristic of log-compressed rayleigh signals: theoretical formulation and experimental results. J Acoust Soc Am. 1994;95(3):1396–1400. [Google Scholar]

- 6.Kenneth M, Edward A. The FFT on a GPU. SIG-GRAPH/Eurographics workshop on Graphics hardware 2003 proceedings; 2003. pp. 112–119. [Google Scholar]

- 7.Leroy A, Mozer P, Payan Y, Troccaz J. Rigid registration of freehand 3D ultrasound and CT-scan kidney images. Lect Notes Comput Sci MICCAI 04. 2004;3216:837–844. [Google Scholar]

- 8.Malandain G, Roche A, Pennec X, Ayache N. Rigid registration of 3-D ultrasound with MR images: a new approach combining intensity and gradient information. IEEE Trans Medical Imaging. 2001;20(10):1038–1048. doi: 10.1109/42.959301. [DOI] [PubMed] [Google Scholar]

- 9.Penney GP, Blackall JM, Hamady MS, Sabharwal T, Adam A, Hawkes DJ. Registration of freehand 3D ultrasound and magnetic resonance liver images. Med Image Anal. 2004;8:81–91. doi: 10.1016/j.media.2003.07.003. [DOI] [PubMed] [Google Scholar]

- 10.Shams R, Hartley R, Navab N. Real-time simulation of medical ultrasound from CT images. Medical image computing and computer-assisted intervention (MICCAI); New York, USA. September 2008; 2008. pp. 734–741. [DOI] [PubMed] [Google Scholar]

- 11.Soliman SS, Srinath MD. Continuous and discrete signals and systems. Prentice-Hall; Upper Saddle River: 1990. [Google Scholar]

- 12.Scott HW. A subspace identification extension to the phase correlation method. IEEE Trans Medical Imaging. 2003;22(2):277–280. doi: 10.1109/TMI.2002.808359. [DOI] [PubMed] [Google Scholar]

- 13.Scott HW, Westin C-F. Identification of translational displacements between N-dimensional data sets using the high order SVD and phase correlation. IEEE Trans Image Process. 2005;14(7):884–889. doi: 10.1109/tip.2005.849327. [DOI] [PubMed] [Google Scholar]

- 14.Stylopoulos N, Vosburgh K. Assessing technical skill in surgery and endoscopy: a set of metrics and an algorithm (C-PASS) to assess skills in surgical and endoscopic procedures. Surg Innov. 2007;14(2):113–121. doi: 10.1177/1553350607302330. [DOI] [PubMed] [Google Scholar]

- 15.Vosburgh KG, Stylopoulos N, San José Estépar R, Ellis RE, Samset E, Thompson CC. EUS and CT improve efficiency and structure identification over conventional EUS. Gastrointest Endoscopy. 2007;65(6):866–870. doi: 10.1016/j.gie.2006.09.021. [DOI] [PubMed] [Google Scholar]

- 16.Vosburgh KG, Stylopoulos N, Thompson CC, Ellis RE, Samset E, San José Estépar R. Novel real time tracking interface improves the use of laparoscopic and endoscopic ultrasound in the abdomen. Int J Comput Assist Radiol Surg. 2006;1(Suppl 1):282–284. [Google Scholar]

- 17.Wagner RF, Smith SW, Sandrik JM, Lopez H. Statistics of speckle in ultrasound b-scans. IEEE Trans Sonics Ultrason. 1983;30(3):156–163. [Google Scholar]

- 18.Wein W, Khamene A, Clevert D, Kutter O, Navab N. Simulation and fully automatic multimodal registration of medical ultrasound. Lect Notes Comput Sci MICCAI 07. 2007;4791:136–143. doi: 10.1007/978-3-540-75757-3_17. [DOI] [PubMed] [Google Scholar]

- 19.Wein W, Röper B, Navab N. Integrating diagnostic B-mode ultrasonography into CT-based radiation treatment planning. IEEE Trans Med Ima. 2007;26(6):866–879. doi: 10.1109/TMI.2007.895483. [DOI] [PubMed] [Google Scholar]

- 20.Wolfgang W, Shelby B, Ali K, Matthew RC, Nassir N. Automatic CT-ultrasound registration for diagnostic imaging and image-guided intervention. Med Image Anal. 2008;12(5):577–585. doi: 10.1016/j.media.2008.06.006. [DOI] [PubMed] [Google Scholar]