Abstract

Background

Optimal decision making requires that organisms correctly evaluate both the costs and benefits of potential choices. Dopamine transmission within the nucleus accumbens (NAc) has been heavily implicated in reward learning and decision making, but it is unclear how dopamine release may contribute to decisions that involve costs.

Methods

Cost-based decision making was examined in rats trained to associate visual cues with either immediate or delayed rewards (delay manipulation) or low effort or high effort rewards (effort manipulation). After training, dopamine concentration within the NAc was monitored on a rapid timescale using fast-scan cyclic voltammetry.

Results

Animals exhibited a preference for immediate or low effort rewards over delayed or high effort rewards of equal magnitude. Reward-predictive cues, but not response execution or reward delivery, evoked increases in NAc dopamine concentration. When only one response option was available, cue-evoked dopamine release reflected the value of the future reward, with larger increases in dopamine signaling higher value rewards. In contrast, when both options were presented simultaneously, dopamine signaled the better of two options, regardless of the future choice.

Conclusions

Phasic dopamine signals in the NAc reflect two different types of reward cost and encode potential rather than chosen value under choice situations.

Keywords: Dopamine, nucleus accumbens, decision making, reward, motivation, cost

Introduction

The ability to weigh the costs and benefits of potential actions is critical for adaptive decision making and is disrupted in numerous psychiatric disorders (1–3). For decisions that involve rewards, two fundamental costs are the effort and the time that must be invested to obtain them. Cost-based decision making engages a specific network of brain nuclei including the nucleus accumbens (NAc) and midbrain dopaminergic neurons (4). Dopamine depletion or antagonism in the NAc produces profound deficits in operant responding when reinforcement is contingent upon high response costs (5), and NAc manipulations bias animals away from choices that involve high effort or long delays, even when those choices lead to larger or superior rewards (5, 6). NAc dopamine is also heavily implicated in behavioral responses to reward-paired cues and in the ability of such cues to influence decision making. Reward-paired stimuli evoke robust dopamine release in the NAc (7, 8), and the magnitude of dopamine signaling reflects complex information concerning the value of predicted rewards (9–12). Thus, dopamine release in the NAc may not only be necessary to overcome large costs, but may also facilitate choice behavior when available options have different costs. Here, we reveal that phasic dopamine transmission in the NAc reflects two different types of reward cost (effort and delay) when only one option is available, but encodes the value of the best option rather than the chosen option under decision situations.

Methods and Materials

NAc dopamine concentration was monitored in rats (n = 10) trained in either effort-based or delay-based decision tasks (Figures 1A and S1; also see Supplementary Methods in Supplement 1). In these tasks, rewards of equal magnitude (45 mg sucrose pellets) were made available at either low or high value in pseudo-randomly ordered trials, with 90 total trials per behavioral session. On forced-choice trials (60 per session), distinct 5 s cue lights signaled the available response option and future reward value. In the effort task, cues predicted low cost (FR1) and high cost (FR16) rewards, whereas in the delay task cues predicted immediate (FR1, 0 s delay) and delayed (FR1, 5 s delay) rewards. On forced-choice trials, responses on the non-cued option constituted errors, which terminated the trial without reward. On free-choice trials (30 per session), both cue lights were presented simultaneously and either option (low or high cost in the effort task; immediate or delayed reward in the delay task) could be selected. These trials provided a behavioral index of value preference. Following initial training, all rats were surgically prepared for electrochemical recording in the NAc core (see Supplementary Methods in Supplement 1). After recovery and additional training, changes in NAc dopamine concentration were recorded in real time using fast-scan cyclic voltammetry during behavioral performance (n = 7 sessions from 6 animals for the effort task; n = 8 sessions from 4 animals for the delay task; see Figure S2 in Supplement 1 for histological reconstruction).
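The session structure described above can be summarized as a small data model. The sketch below is a minimal illustration, assuming the 60 forced-choice trials are split evenly between the two cues and using a plain shuffle in place of the unspecified pseudo-random ordering rule; `TASKS`, `make_session`, and the trial labels are hypothetical names, not part of the original methods.

```python
import random

# Reward schedules taken from the task description: "ratio" is the fixed-ratio
# (FR) response requirement, "delay_s" the programmed delay before pellet delivery.
TASKS = {
    "effort": {"high_value": {"ratio": 1, "delay_s": 0.0},
               "low_value": {"ratio": 16, "delay_s": 0.0}},
    "delay": {"high_value": {"ratio": 1, "delay_s": 0.0},
              "low_value": {"ratio": 1, "delay_s": 5.0}},
}
CUE_DURATION_S = 5.0  # cue light precedes lever extension on every trial


def make_session(seed=None, n_forced_each=30, n_free=30):
    """Return one 90-trial session as a shuffled list of trial labels.

    Assumption: forced-choice trials are split evenly between the two cues,
    and a plain shuffle stands in for the unspecified pseudo-random rule.
    """
    rng = random.Random(seed)
    trials = (["forced_high_value"] * n_forced_each
              + ["forced_low_value"] * n_forced_each
              + ["free_choice"] * n_free)
    rng.shuffle(trials)
    return trials
```

A fixed `seed` makes a session order reproducible, for example `make_session(seed=1)`.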

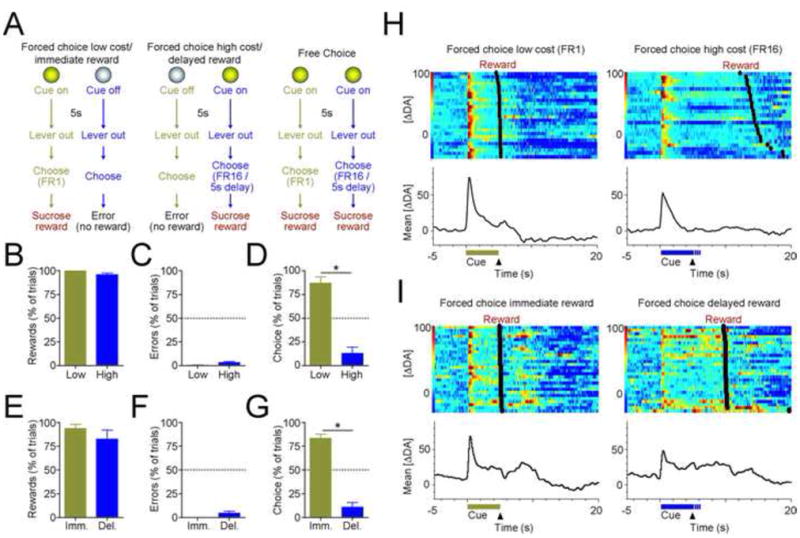

Figure 1.

Behavioral preference tracks reward cost. (A) Schematic representing effort-based and delay-based behavioral tasks. On forced-choice low cost/immediate reward trials (left panel), a cue light was presented for 5 s and was followed by extension of two response levers into the behavioral chamber. A single lever press (FR1) on the lever corresponding to the cue light led to immediate delivery of a reward (45 mg sucrose) into a centrally located food receptacle. Responding on the other lever did not produce reward delivery and terminated the trial. On forced-choice high cost/delayed reward trials, the other cue light was presented for 5 s before lever extension. On these trials, a reward was delivered after either sixteen responses (FR16, effort-based decision task) or a delay (FR1 + 5 s delay, delay-based decision task). Responses on the opposite lever terminated the trial and no reward was delivered. On free-choice trials, both cues were presented, and animals could select either response option. After training, dopamine concentration in the NAc was measured using fast-scan cyclic voltammetry during a single 90-trial behavioral session. Behavioral performance in effort-based (B–D) and delay-based (E–G) decision tasks during electrochemical recording. All data are mean ± SEM. (B,E) Percentage of possible rewards obtained on forced-choice trials. Animals overcame high effort requirements and reward delays to maximize rewards. (C,F) The percentage of errors on forced-choice trials in both tasks was significantly below chance level (50%; p < 0.0001 for all comparisons), indicating behavioral discrimination between cues. (D,G) Response allocation on free-choice trials, as percentage of choices. The dashed line indicates the indifference point. Animals robustly preferred the low cost and immediate reward options (*p < 0.01). (H,I) Behavior-related changes in dopamine across multiple trials for representative animals on the effort (H) and delay (I) tasks. Heat plots represent individual trial data, rank-ordered by the interval from lever extension (black triangle) to reward delivery (black circles), whereas the bottom trace represents the average of all trials. Data are aligned to cue onset (horizontal bars). For all trial types, dopamine release peaks after cue presentation, but high value cues evoke larger increases in dopamine concentration.

Results

During behavioral sessions, animals readily overcame high effort demands or long delays to obtain rewards (Figure 1B, E) and discriminated between reward-predictive cues to reduce errors on forced-choice trials (error rates significantly below chance levels; p < 0.0001 for all comparisons; Figure 1C,F). On free-choice trials, animals exhibited a marked preference for low cost and immediate reward options over high cost and delayed reward options (paired t-test on choice allocation; p < 0.01 for both comparisons; Figure 1D,G), indicating that the value of future rewards was substantially discounted by heavy costs. See Supplementary Results (in Supplement 1) for additional behavioral findings.
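The two behavioral indices reported here (error rate on forced-choice trials relative to the 50% chance level, and choice allocation on free-choice trials) reduce to simple proportions. A minimal sketch, assuming each trial is logged as the cued and pressed option labels; the function names and the decision to exclude omitted trials from the error denominator are assumptions, since the source does not specify how omissions were handled.

```python
CHANCE_PCT = 50.0  # two levers: a random press lands on the non-cued lever half the time


def forced_error_pct(forced_trials):
    """Percentage of responded forced-choice trials with a press on the non-cued lever.

    forced_trials: list of (cued, pressed) pairs; pressed is None if the animal
    did not respond. Assumption: omissions are excluded from the denominator.
    """
    responded = [(cued, pressed) for cued, pressed in forced_trials if pressed is not None]
    errors = sum(1 for cued, pressed in responded if pressed != cued)
    return 100.0 * errors / len(responded)


def choice_allocation_pct(free_choices, option="high_value"):
    """Percentage of free-choice trials on which `option` was selected."""
    return 100.0 * sum(1 for choice in free_choices if choice == option) / len(free_choices)
```

A session would then show discrimination when `forced_error_pct(...)` falls well below `CHANCE_PCT`, and a preference when `choice_allocation_pct(...)` departs from the 50% indifference point.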

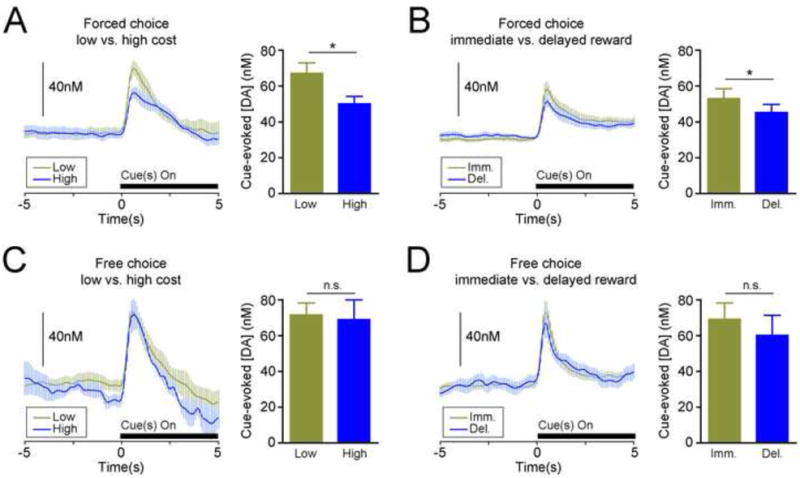

Consistent with previous reports (7, 8), reward-predictive cues in all trial types of both decision tasks evoked phasic increases in dopamine release (Figures 1H,I; see Figure S3 in Supplement 1 for single-trial examples). Increases occurred immediately after cue presentation and were of short duration, as dopamine concentration returned to near baseline levels before lever extension and behavioral responding. No increases were observed during lever press responses or reward delivery (Dunnett’s comparison with baseline; p > 0.05 for all trial types). Therefore, our analysis focused on whether cue-evoked dopamine signals encoded future reward value or other decision parameters. On forced-choice trials, where cues were presented independently and only one option was available, cue-evoked dopamine release tracked the value of future rewards regardless of the type of cost involved. Low cost cues produced significantly larger increases in NAc dopamine concentration than high cost cues (paired t-test, t = 4.859, df = 6, p = 0.0028; Figure 2A). Likewise, immediate reward cues in the delay task evoked larger dopamine increases than delayed reward cues (t = 3.58, df = 7, p = 0.009; Figure 2B). Dopamine signaling in the nearby NAc shell showed the same trend, but the difference between cue-evoked dopamine signals did not reach significance (t = 1.803, p = 0.12; see Supplemental Results and Figure S4 in Supplement 1).
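The forced-choice comparison rests on a per-session peak cue-evoked signal and a paired t-test across sessions. The sketch below illustrates one way to compute both, assuming a 10 Hz voltammetric sampling rate, a 1 s pre-cue baseline, and a peak search over the 5 s cue period; these window choices and the helper names are hypothetical, and the code returns only the t statistic and degrees of freedom (a p-value would additionally require a t-distribution CDF, for example from SciPy).

```python
import math
import statistics

SAMPLE_HZ = 10  # assumption: one voltammetric scan every 100 ms


def peak_cue_response(trace, cue_index, baseline_s=1.0, window_s=5.0, hz=SAMPLE_HZ):
    """Peak dopamine change after cue onset, relative to the mean pre-cue baseline.

    trace: dopamine concentration samples for one trial; cue_index: sample at cue onset.
    Baseline and window lengths are hypothetical choices, not the authors' settings.
    """
    n_base = int(baseline_s * hz)
    n_win = int(window_s * hz)
    baseline = statistics.fmean(trace[cue_index - n_base:cue_index])
    return max(trace[cue_index:cue_index + n_win]) - baseline


def paired_t(x, y):
    """Paired t statistic and df for matched per-session values (e.g. high vs low cost)."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = statistics.fmean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1
```

With seven effort sessions, `paired_t` returns df = 6, consistent with the degrees of freedom reported for the effort task.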

Figure 2.

Dopamine release in the NAc core encodes the value of the best available response. (A,B) Left panels: Change in dopamine concentration on forced-choice trials, aligned to cue onset (black bar). Right panels: Peak cue-evoked dopamine signal on forced-choice trials. Cue presentation on low cost and immediate reward trials led to significantly larger increases in dopamine concentration than cue presentation on high cost and delayed reward trials, respectively. (C,D) Change in dopamine concentration (left panels) and peak dopamine signals (right panels) on free-choice trials. Conventions as in A,B. On free-choice trials, cue-evoked dopamine signals did not reflect the value of the chosen option. All data are mean ± SEM. *p < 0.01. DA, dopamine; n.s., not significant.

Because high cost trials in the effort task also effectively imposed a delay between the initiation of responding and reward delivery (Figure S5 in Supplement 1), we next sought to determine whether dopamine signaling encoded effort in addition to reward delay, or encoded reward delay alone. As the average reward delay on high cost trials (5.57 ± 0.49 s) did not differ from the delay on delayed reward trials in the delay task (one-sample t-test; t = 1.16, df = 6, p = 0.29; Figure S5B in Supplement 1), we directly compared the difference in cue-evoked dopamine signals on forced-choice trials between tasks (Figure S6 in Supplement 1). This analysis revealed that the difference between cue-evoked dopamine signals in the effort condition was significantly greater than the difference observed in the delay condition (unpaired t-test; t = 2.31, df = 13, p = 0.029; Figure S6B in Supplement 1). Specifically, the difference in dopamine evoked by low and high cost cues was more than double the difference in dopamine evoked by immediate and delayed reward cues, indicating that larger dopamine signals on high value trials were not fully explained by reward delay alone.
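The cross-task comparison above takes one difference score per session (high value peak minus low value peak) and compares the two groups of sessions with an unpaired t-test. Assuming the classic pooled-variance (Student's) form, 7 effort sessions and 8 delay sessions give df = 7 + 8 - 2 = 13, matching the reported value, and a one-sample test over the 7 effort sessions gives df = 6, presumably against the fixed 5 s programmed delay. A minimal sketch with hypothetical helper names:

```python
import math
import statistics


def value_differences(high, low):
    """Per-session difference score: high value peak minus low value peak."""
    return [h - l for h, l in zip(high, low)]


def one_sample_t(values, mu):
    """One-sample t statistic and df, testing whether mean(values) differs from mu."""
    n = len(values)
    t = (statistics.fmean(values) - mu) / (statistics.stdev(values) / math.sqrt(n))
    return t, n - 1


def student_t(a, b):
    """Unpaired t with pooled variance (Student's form); df = n_a + n_b - 2."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * statistics.variance(a)
              + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    t = (statistics.fmean(a) - statistics.fmean(b)) / math.sqrt(pooled * (1 / na + 1 / nb))
    return t, na + nb - 2
```

In this sketch, `student_t(value_differences(effort_high, effort_low), value_differences(delay_high, delay_low))` would compare the effort and delay difference scores; the argument names are placeholders for per-session peak lists.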

Cue-evoked dopamine release could signal either the value of the best available response option or the value of the option that is eventually chosen (9); these two quantities are conflated on forced-choice trials. Therefore, we investigated dopamine signaling on free-choice trials (where the chosen option and the best option are not always identical) by separating trials in which animals chose the lower value reward from those in which they chose the higher value reward. This analysis revealed that dopamine signals on free-choice trials were unrelated to the value of the chosen option (Figure 2C,D). Rather, the simultaneous presentation of both high and low value cues on free-choice trials evoked similar NAc core dopamine release whether animals subsequently chose the high or the low value option (effort-based task, t = 0.154, df = 3, p = 0.8871; delay-based task, t = 0.817, df = 4, p = 0.4599). For both experiments, cue-evoked dopamine signals on free-choice low value trials were not significantly different from cue-evoked signals on forced-choice high value trials (paired t-tests; both ps > 0.5). Thus, cue-evoked dopamine release on choice trials appeared to mimic the high value dopamine signal rather than the low value dopamine signal, even when the low value action was subsequently chosen.
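Separating free-choice trials by the option the animal went on to select amounts to grouping per-trial peaks by choice within each session. The sketch below assumes per-trial peaks have already been extracted; the helper names are hypothetical. A session in which one option was never chosen cannot contribute to a paired within-session comparison; this is one possible reason, not stated in the source, why the free-choice tests report fewer degrees of freedom than the forced-choice tests.

```python
import statistics
from collections import defaultdict


def mean_peak_by_choice(free_trials):
    """Average peak cue-evoked signal per chosen option within one session.

    free_trials: iterable of (chosen_option, peak) pairs, e.g. ("low_value", 12.3).
    """
    groups = defaultdict(list)
    for chosen, peak in free_trials:
        groups[chosen].append(peak)
    return {option: statistics.fmean(peaks) for option, peaks in groups.items()}


def paired_sessions(session_means, a="high_value", b="low_value"):
    """Keep only sessions that contain both choice types, returned as (a, b) pairs.

    A session with no trials of one type has no mean for that option, so it is
    dropped from the paired comparison.
    """
    return [(m[a], m[b]) for m in session_means if a in m and b in m]
```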

Discussion

Dopamine neurons encode a reward prediction error signal in which cues that predict rewards evoke phasic increases in firing rate, whereas fully expected rewards do not alter dopamine activity (13). This signal is also sensitive to a number of features of the upcoming reward, as cues that predict larger, immediate, or more probable rewards evoke larger increases in dopamine neuron activity than cues that predict smaller, delayed, or less probable rewards (9–11, 14). In this way, dopamine neurons are thought to contribute to reward-based decision making by broadcasting the value of potential actions to striatal and prefrontal circuits that control motivated behavior. Here, we demonstrate that dopamine release in the NAc core reflects both the effort and the temporal delay associated with future rewards. Cues that signaled low cost or immediate rewards evoked greater increases in dopamine concentration than cues that signaled high cost or delayed rewards, respectively. These results indicate that the cost-discounted value of impending rewards is integrated with reward-prediction signals within the NAc, which could be critical for tuning behavioral performance in the face of different costs. As such, they may help explain why dopamine manipulations in the NAc produce such drastic effects on cost-based decision making (15–17).

Efficient reward-related decision making requires that organisms discriminate between the costs of available response options under choice situations. Importantly, value-based dopamine signals could presciently encode either the value of a future choice or the value of the best available choice, regardless of the future decision (9, 18). Here, free-choice trials not only revealed a behavioral preference for low cost rewards, but also allowed us to examine which type of valuation signal was encoded by NAc dopamine release. When both options were available, cue-evoked dopamine signals did not differ according to the upcoming choice, but instead reflected the high value option. Such signals are consistent with recent reports of dopamine neuron activity (9) and suggest that in situations in which decisions are required, NAc dopamine transmission encodes the value of the best available option instead of the value of the subsequently chosen option (see Supplemental Materials in Supplement 1 for further discussion).
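The two candidate coding schemes discussed above make different predictions only on free-choice trials in which the lower value option is chosen. The toy model below makes that contrast explicit; the option values are arbitrary placeholders rather than measured quantities, and `predicted_signal` is a hypothetical helper.

```python
VALUES = {"high_value": 1.0, "low_value": 0.4}  # hypothetical values, arbitrary units


def predicted_signal(available, chosen, rule):
    """Predicted cue-evoked signal (arbitrary units) under one of two hypotheses.

    available: dict mapping each cued option to its assumed value.
    chosen: key of the option the animal goes on to select.
    rule: "best_available" (max over cued options) or "chosen" (value of the pick).
    """
    if rule == "best_available":
        return max(available.values())
    if rule == "chosen":
        return available[chosen]
    raise ValueError(f"unknown rule: {rule}")
```

On a forced-choice low value trial (`{"low_value": 0.4}`) both rules predict 0.4, so forced-choice data alone cannot distinguish them. On a free-choice trial where the low value option is chosen, `"best_available"` predicts 1.0 while `"chosen"` predicts 0.4; the free-choice results reported here follow the first pattern.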

Supplementary Material

Acknowledgments

The authors would like to thank Mitchell F. Roitman, Robert A. Wheeler, and Brandon J. Aragona for helpful discussions, and Kate Fuhrmann, Laura Ciompi, and Jonathan Sugam for technical assistance.

Footnotes

Financial Disclosure: This research was supported by NIDA (DA 021979 to J.J.D., DA 017318 to R.M.C. and R.M.W., & DA 10900 to R.M.W.). All authors report no biomedical financial interests or potential conflicts of interest.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Green L, Myerson J. A discounting framework for choice with delayed and probabilistic rewards. Psychological Bulletin. 2004;130:769–792. doi: 10.1037/0033-2909.130.5.769.

- 2. Heerey EA, Robinson BM, McMahon RP, Gold JM. Delay discounting in schizophrenia. Cogn Neuropsychiatry. 2007;12:213–221. doi: 10.1080/13546800601005900.

- 3. Scheres A, Tontsch C, Thoeny AL, Kaczkurkin A. Temporal Reward Discounting in Attention-Deficit/Hyperactivity Disorder: The Contribution of Symptom Domains, Reward Magnitude, and Session Length. Biol Psychiatry. 2009. doi: 10.1016/j.biopsych.2009.10.033.

- 4. Croxson PL, Walton ME, O’Reilly JX, Behrens TE, Rushworth MF. Effort-based cost-benefit valuation and the human brain. J Neurosci. 2009;29:4531–4541. doi: 10.1523/JNEUROSCI.4515-08.2009.

- 5. Salamone JD, Correa M, Mingote S, Weber SM. Nucleus accumbens dopamine and the regulation of effort in food-seeking behavior: implications for studies of natural motivation, psychiatry, and drug abuse. J Pharmacol Exp Ther. 2003;305:1–8. doi: 10.1124/jpet.102.035063.

- 6. Cardinal RN, Pennicott DR, Sugathapala CL, Robbins TW, Everitt BJ. Impulsive choice induced in rats by lesions of the nucleus accumbens core. Science. 2001;292:2499–2501. doi: 10.1126/science.1060818.

- 7. Day JJ, Roitman MF, Wightman RM, Carelli RM. Associative learning mediates dynamic shifts in dopamine signaling in the nucleus accumbens. Nat Neurosci. 2007;10:1020–1028. doi: 10.1038/nn1923.

- 8. Roitman MF, Stuber GD, Phillips PE, Wightman RM, Carelli RM. Dopamine operates as a subsecond modulator of food seeking. J Neurosci. 2004;24:1265–1271. doi: 10.1523/JNEUROSCI.3823-03.2004.

- 9. Roesch MR, Calu DJ, Schoenbaum G. Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nat Neurosci. 2007;10:1615–1624. doi: 10.1038/nn2013.

- 10. Fiorillo CD, Tobler PN, Schultz W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science. 2003;299:1898–1902. doi: 10.1126/science.1077349.

- 11. Tobler PN, Fiorillo CD, Schultz W. Adaptive coding of reward value by dopamine neurons. Science. 2005;307:1642–1645. doi: 10.1126/science.1105370.

- 12. Gan JO, Walton ME, Phillips PE. Dissociable cost and benefit encoding of future rewards by mesolimbic dopamine. Nat Neurosci. 2010;13:25–27. doi: 10.1038/nn.2460.

- 13. Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- 14. Fiorillo CD, Newsome WT, Schultz W. The temporal precision of reward prediction in dopamine neurons. Nat Neurosci. 2008. doi: 10.1038/nn.2159.

- 15. Salamone JD, Arizzi MN, Sandoval MD, Cervone KM, Aberman JE. Dopamine antagonists alter response allocation but do not suppress appetite for food in rats: contrast between the effects of SKF 83566, raclopride, and fenfluramine on a concurrent choice task. Psychopharmacology (Berl). 2002;160:371–380. doi: 10.1007/s00213-001-0994-x.

- 16. Salamone JD, Cousins MS, Bucher S. Anhedonia or anergia? Effects of haloperidol and nucleus accumbens dopamine depletion on instrumental response selection in a T-maze cost/benefit procedure. Behav Brain Res. 1994;65:221–229. doi: 10.1016/0166-4328(94)90108-2.

- 17. Floresco SB, Tse MT, Ghods-Sharifi S. Dopaminergic and glutamatergic regulation of effort- and delay-based decision making. Neuropsychopharmacology. 2008;33:1966–1979. doi: 10.1038/sj.npp.1301565.

- 18. Morris G, Nevet A, Arkadir D, Vaadia E, Bergman H. Midbrain dopamine neurons encode decisions for future action. Nat Neurosci. 2006;9:1057–1063. doi: 10.1038/nn1743.
