Abstract

Objectives

To investigate whether pharmacy students' anonymous peer assessment of a medication management review (MMR) was constructive, consistent with the feedback provided by an expert tutor, and enhanced the students' learning experience.

Design

Fourth-year undergraduate pharmacy students were randomly and anonymously assigned to a partner and participated in an online peer assessment of their partner's MMR.

Assessment

An independent expert graded a randomly selected sample of the MMRs using a schedule developed for the study. A second expert evaluated the quality of the peer and expert feedback. Students also completed a questionnaire and participated in a focus group interview. Student peers gave significantly higher marks than an expert for the same MMRs; however, no significant difference was detected in the quality of written feedback between the students and the expert. The majority of students agreed that this activity was a useful learning experience.

Conclusions

Anonymous peer assessment is an effective means of providing additional constructive feedback on student performance in the medication review process. Exposure to other students' work and the giving and receiving of peer feedback were perceived as valuable by students.

Keywords: peer assessment, medication therapy management, assessment

INTRODUCTION

Peer assessment in higher education is effective,1-12 encourages students to take responsibility for their own learning, and helps them to gain a clear understanding of the standards expected of them.4-6 Peer assessment activities can promote genuine interchange of ideas, demonstrating to students that their experiences are valued and their judgements respected, and provide opportunities for students to reflect critically on their performance.4,6-9

Peer assessment involves the assessor reviewing, summarizing, clarifying, constructing feedback, and identifying incorrect and missing knowledge.13 These are all cognitively demanding activities, and students report that they are more critical and confident as a result of participating in peer assessment.5 Engaging in critical evaluation can also have generalized positive effects on the evaluator's own work, and students often subsequently review their own work from the perspective of their peer assessor role, using the skills learned as a peer assessor.7,10 Reported benefits of peer assessment include improved skills in 3 areas: comparison of approaches, comparison of standards, and exchange of information.14 Peer assessment also can benefit academic staff members by reducing workload15 and providing new insights into students' learning processes.7 It also can encourage academic staff members to provide greater clarity regarding assessment objectives, purposes, criteria, and grading scales, and may increase students' interest in these matters.10

In Australia, the expansion of clinical services provided by pharmacists includes medication management reviews (MMRs).16 In the Faculty of Pharmacy at the University of Sydney, all bachelor of pharmacy students take a clinical practice course in the second semester of their fourth year, in which they must complete 4 MMRs during a 100-hour clinical placement to meet assessment requirements. Real patient profiles are used for the MMRs, and a variety of topic areas and levels of complexity are covered. One submitted MMR is assessed by the course tutor, and students are given grades and written feedback. For the remaining 3 reviews, students receive only a completion grade, and global feedback is provided to the class based on the instructor's scrutiny of a sample of the MMRs submitted.

To complete an MMR, students interpret the patient's history; identify, interpret, and evaluate a range of articles from the literature relevant to the patient's case; identify and prioritize therapeutic and other issues relevant to the short- and long-term management of the patient for consideration by a prescriber and/or other health care professional; make appropriate recommendations in the context of the patient's history; and finally, write an appropriately worded report for a prescriber or other health care professional. The task is consistent with the MMR program that is currently conducted in Australia by accredited pharmacists, with the goal of maximizing patient benefit from their medication regimen, and preventing medication-related problems through a team approach involving the patient's general practitioner and pharmacist.16 Pharmacists are accredited to conduct MMRs across all disease states.

In their subject evaluations, students have consistently expressed dissatisfaction with the level of personal feedback they received on their MMRs. Although academic staff members have endeavoured to provide as much quality feedback as possible, the large class size (approximately 180 students) has made this a challenging task for a relatively small teaching team. Because peer assessment has been shown to be effective not only in providing feedback but also in helping students to develop important related skills, the academic staff members decided to incorporate an element of peer feedback into the assessment regime for this subject.

There are some caveats to peer assessment in pharmacy education, such as ensuring that students receive adequate training and understand the relevance of the peer assessment activity to their main assessment objectives.17-22 In the studies cited, peer assessment occurred in a variety of settings encompassing a range of topic areas and study designs, including triangulation of peer assessment scores with self-assessment and/or expert assessment. Results showed inflated self-assessment when compared with the assessment of others.

No previous study has investigated the use of peer assessment in teaching students the pharmacist's role in medication review. Our objective was to investigate the effectiveness of using student peer assessment of MMRs, and determine (1) whether the feedback provided by students on a peer's MMR was constructive; (2) whether it was consistent with the feedback provided by an expert; and (3) the extent to which the use of peer feedback enhanced the students' learning experience.

DESIGN

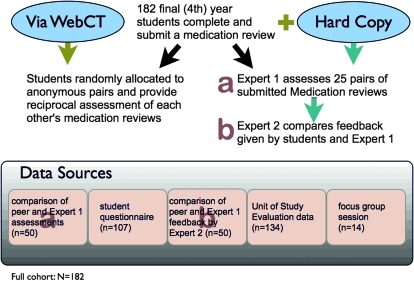

Ethical approval for the study was obtained from the Human Research Ethics Committee of the University of Sydney. Figure 1 provides a summary of the study procedures and the data sources. Instead of submitting 4 MMRs as previously required, students were asked to submit 2 for instructor assessment and 1 for peer feedback. We estimated that the time and effort required to assess and provide feedback on a peer's work was a comparable replacement for the fourth MMR.5 Students were provided with complete instructions on completing the MMRs and the peer assessment. An MMR submission form and a flowchart of the MMR process were made available to students online as part of their course material (links to PDF versions of the documents are available from the author). The submission form gave students precise and clear guidelines on what to include in their submitted MMR, while the process flowchart set out the method to follow in preparing their findings and recommendations. Criteria for evaluation were articulated in the 2007 Clinical Practice subject outline (available from the author upon request). Logistical issues, such as the tutorial rooms required to conduct the tutorials and computers for the Internet-based part of the study, were all addressed before the study began.

Figure 1.

Process used to conduct a peer assessment activity.

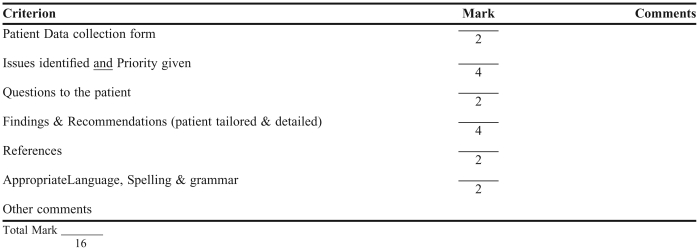

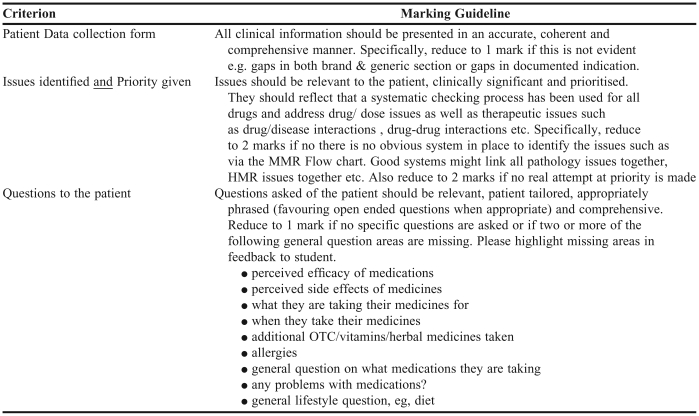

The fourth-year pharmacy students enrolled in the course undertook the revised assessment regime, which had 2 stages: (1) writing and submitting their own MMR and (2) providing written feedback and a grade (0 to 16 points) on a fellow student's MMR. Students structured their written comments using a peer assessment schedule developed by experts and staff members (Appendix 1). Adequate guidance was provided in the schedule to ensure that students understood the grading criteria.23 To avoid the scoring leniency and narrow range of grades (usually at the high end of the rating scale) often seen in peer assessment, the scoring criteria on the schedule were made more specific.24 Students also received verbal in-class instructions from the course instructor on how to provide constructive peer feedback, which reiterated the written guidelines provided online in the course materials.

The peer feedback activity was conducted online through a threaded discussion activity in WebCT (WebCT Campus Edition 6.1, Blackboard Inc, Washington DC). One hundred eighty-two students were randomly assigned to 1 of 91 groups of 2 students. Each pair's MMR documents were attached to their discussion group in WebCT, allowing them to download their peer's MMR and subsequently upload their peer assessment. The activity was configured so that participants were anonymous to each other,25 and could read only their partner's MMR and assessment. (Instructions for setting up this activity are available from the author.)
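The paper does not say how the random pairing was generated (the groups themselves were set up in WebCT). As a minimal sketch, assuming a simple shuffle-and-split of a list of student identifiers, the Python below forms anonymous pairs of 2; the identifiers, the `group-NN` labels, the seed, and the function name `assign_anonymous_pairs` are all hypothetical and are not WebCT features.

```python
import random


def assign_anonymous_pairs(student_ids, seed=None):
    """Randomly split an even-sized cohort into groups of 2.

    Each pair is keyed by a neutral, hypothetical group label, so partners
    can be told which group to open without learning each other's names.
    """
    if len(student_ids) % 2 != 0:
        raise ValueError("cohort size must be even to form pairs of 2")
    rng = random.Random(seed)          # seeded so the allocation can be reproduced
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    pairs = {}
    for i in range(0, len(shuffled), 2):
        label = f"group-{i // 2 + 1:02d}"
        pairs[label] = (shuffled[i], shuffled[i + 1])
    return pairs


cohort = [f"student-{n:03d}" for n in range(1, 183)]  # 182 hypothetical IDs
groups = assign_anonymous_pairs(cohort, seed=2007)
print(len(groups))  # 91
```

Keeping the mapping from labels to students on the instructor's side only is what preserves anonymity between partners; each student would see just their own group label.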

All students were invited to participate in the study. Participation involved students giving permission for their assessment submissions to be used as data in the study.

ASSESSMENT

Expert Evaluation

The MMRs of 25 student pairs (ie, 50 reviews) randomly sampled from the 91 pairs were assessed by an independent evaluator, expert 1 (a qualified pharmacist accredited in the preparation of MMRs in Australia16 and an experienced pharmacy teacher not involved in teaching this subject). Expert 1 used the same evaluation schedule provided to the students (Appendix 1), giving each MMR a score from 0 to 16 and providing written comments on each report. In the next stage, a second independent evaluator, expert 2 (also an accredited pharmacist not involved in teaching this subject), evaluated the quality of the students' and expert 1's written comments for the same randomly selected sample of submitted MMRs (50 of 182). To assess comment quality, expert 2 used a feedback schedule developed for this study and based on Boud's1 framework for giving and receiving constructive feedback. The highest possible score for comment quality was 24.
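Sampling at the pair level, rather than drawing 50 individual MMRs, keeps both members of each pair in the sample, which is what later allows pair to serve as a blocking factor. A minimal Python sketch, assuming hypothetical pair labels and a fixed seed (the study's actual sampling mechanism is not described):

```python
import random


def sample_pairs(pair_labels, k, seed=None):
    """Draw k pairs without replacement; both MMRs in a drawn pair are evaluated."""
    rng = random.Random(seed)
    return sorted(rng.sample(list(pair_labels), k))


pair_labels = [f"group-{n:02d}" for n in range(1, 92)]  # 91 hypothetical pairs
chosen = sample_pairs(pair_labels, k=25, seed=1)
n_reviews = 2 * len(chosen)    # each pair contributes 2 MMRs
print(len(chosen), n_reviews)  # 25 50
```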

Data Analysis

The relationship between the student peer and expert 1 grades was investigated using one-way analysis of variance (ANOVA) with pair (1, …, 25) as a blocking factor, marker (expert, peer) as the treatment factor, and mark (out of 16) as the response variate. With the questionnaire data, the proportion of respondents who agreed and disagreed with each item was evaluated. For all statistical analyses, P values of ≤ 0.05 were considered significant. All ANOVA analyses were performed in GenStat, Release 9.1, software (Rothamsted Research, England). ANOVA assumptions for these 2 data sets, including homogeneity of variance and normality of residuals, were met.
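To make the blocked design concrete, the sketch below computes the treatment F statistic for an additive block + treatment model from first principles. It is a hypothetical illustration, not the GenStat procedure, and it assumes a balanced layout in which every block (pair) holds the same number of marks from each marker; the marks are invented. With only 2 markers and one MMR per block, this F equals the square of the paired t statistic on the same differences, which the example cross-checks. Converting F to a P value needs the F distribution (available, for example, in SciPy) and is omitted to keep the sketch dependency-free.

```python
from collections import defaultdict
from statistics import mean, stdev


def block_anova(records):
    """Treatment F test for an additive block + treatment model.

    records: (block, treatment, response) tuples from a balanced design,
    i.e. every block holds the same number of responses for each treatment.
    Returns (F, df_treatment, df_error).
    """
    ys = [y for _, _, y in records]
    grand = mean(ys)
    by_block, by_treat = defaultdict(list), defaultdict(list)
    for block, treat, y in records:
        by_block[block].append(y)
        by_treat[treat].append(y)
    ss_total = sum((y - grand) ** 2 for y in ys)
    ss_block = sum(len(v) * (mean(v) - grand) ** 2 for v in by_block.values())
    ss_treat = sum(len(v) * (mean(v) - grand) ** 2 for v in by_treat.values())
    ss_error = ss_total - ss_block - ss_treat  # residual after both effects
    df_treat = len(by_treat) - 1
    df_error = len(ys) - len(by_block) - len(by_treat) + 1
    f_stat = (ss_treat / df_treat) / (ss_error / df_error)
    return f_stat, df_treat, df_error


# Invented marks out of 16: one MMR per block here, marked by both markers.
expert = [10, 9, 11, 8, 12, 10]
peer = [12, 12, 13, 11, 13, 12]
records = [(i, "expert", m) for i, m in enumerate(expert)]
records += [(i, "peer", m) for i, m in enumerate(peer)]
f_stat, df1, df2 = block_anova(records)

# Cross-check: with 2 treatments, F equals the squared paired t statistic.
diffs = [p - e for e, p in zip(expert, peer)]
t_paired = mean(diffs) / (stdev(diffs) / len(diffs) ** 0.5)
print(df1, df2)  # 1 5
```

Blocking on pair removes between-pair differences in MMR quality from the error term, so the marker comparison is made within pairs.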

A comparison of the quality of written feedback provided by student peers and expert 1 (as evaluated by expert 2) was undertaken using a paired sign test in SPSS, version 15.0. This test was chosen because the assumption of homogeneity of variance for a paired t test and the assumptions regarding the shape of the distributions of the data for the Wilcoxon matched pairs test were not met.
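The sign test uses only the direction of each paired difference: under the null hypothesis, a peer's feedback is equally likely to score above or below expert 1's, so after ties are dropped the count of positive differences follows a Binomial(n, 0.5) distribution. A minimal Python sketch of the exact two-sided version, with invented scores; statistical packages may switch to a normal approximation for larger n, so results can differ slightly from SPSS output.

```python
from math import comb


def sign_test(x, y):
    """Exact two-sided paired sign test; tied pairs (x == y) are dropped."""
    pos = sum(1 for a, b in zip(x, y) if a > b)
    neg = sum(1 for a, b in zip(x, y) if a < b)
    n = pos + neg
    if n == 0:
        return 1.0                     # no informative pairs
    k = min(pos, neg)
    # P(X <= k) for X ~ Binomial(n, 0.5); doubled for a two-sided test
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Invented feedback-quality scores (out of 24) for 8 MMRs
student = [15, 18, 9, 20, 14, 16, 11, 19]
expert1 = [12, 13, 12, 14, 12, 12, 12, 13]
p = sign_test(student, expert1)
print(p)  # 0.2890625
```

Because only signs are used, the test is insensitive to the much wider spread in student feedback quality, which is why a variance difference can coexist with a non-significant sign test.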

Findings

The 182 participating students were aged 21 to 22 years, and 65% were female. For the sampled MMRs, the grades given by expert 1 and by the student peer assessors were significantly different (P < 0.001): the peer assessors' grades (mean = 12.33 out of 16) were higher than those given by expert 1 (mean = 10.1 out of 16). The peer grades awarded to the students in each pair who submitted their work first were not significantly different from those awarded to the students who submitted second (P = 0.238).

The sign test indicated a difference in the quality of written feedback between the students and expert 1 that approached but did not reach significance (P = 0.086), with student feedback scoring marginally higher. However, the variances differed markedly: the quality of the students' feedback had a much wider range (mean = 13.5 ± 5.4) than that of the feedback provided by expert 1 (mean = 12.1 ± 2.4). Hence, as judged by expert 2, there was no consistent difference in the quality of written feedback provided by the students and that provided by expert 1.

Student Perceptions

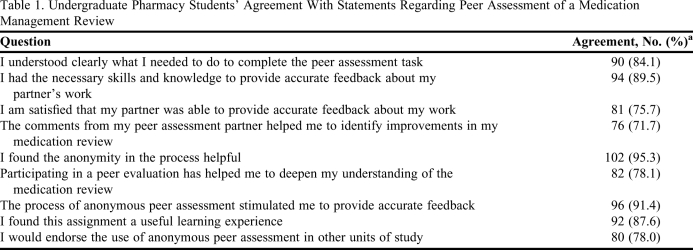

At the end of the semester, all students were invited to complete an anonymous questionnaire (Table 1) related to the peer feedback activity and their learning. The questionnaire consisted of nine 4-point Likert-scale items (strongly agree, agree, disagree, strongly disagree), with the opportunity to provide open-ended written responses for each item. The questionnaire investigated students' perceived ability to complete an MMR task; whether they felt they had the necessary skills to provide accurate feedback to their colleagues; their satisfaction with the feedback received from their colleagues; whether they felt the activity led to improvement in their ability to conduct an MMR, and whether they felt the activity provided a useful learning experience. A briefing session was held with students to familiarize them with the questionnaire and clarify any items before they completed it.

Table 1.

Undergraduate Pharmacy Students' Agreement With Statements Regarding Peer Assessment of a Medication Management Review

a Agreement = strongly agree + agree; disagreement = disagree + strongly disagree

At this time, students also were asked to complete the Unit of Study Evaluation (USE)26 questionnaire, which is part of the University of Sydney's standardized learning and teaching evaluation system. It contains 11 items related to key aspects of learning and teaching, such as clarity of outcomes and standards, teaching effectiveness, assessment and feedback to promote learning, and an “overall satisfaction” item. The USE focuses on the overall quality of the learning experience rather than teacher performance. We had administered the USE to students enrolled in the same subject in the previous year (N = 146), thus enabling a comparison with the current cohort receiving the new intervention.

In their questionnaire responses, the majority of students agreed that the peer assessment task was a useful learning experience (Table 1) and that they had the necessary skills to provide accurate feedback to their partner on their MMR. Similarly, 72% of students agreed that the comments from their peer assessment partner helped them improve the way they conducted their MMR, and 78% agreed that participating in this task helped them deepen their understanding of the MMR process. Forty-one percent (44/107) of students added comments to their questionnaire responses.
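Table 1's footnote collapses the 4-point scale as agreement = strongly agree + agree and disagreement = disagree + strongly disagree. A minimal Python sketch of that tally for one questionnaire item, with invented responses; it assumes (the paper does not say) that blank or unrecognized responses are excluded from the denominator.

```python
from collections import Counter

AGREE = {"strongly agree", "agree"}
DISAGREE = {"disagree", "strongly disagree"}


def agreement_summary(responses):
    """Return (% agreement, % disagreement) for one 4-point Likert item.

    Blank, missing, or unrecognized responses are excluded from the
    denominator (an assumption, not a rule stated in the paper).
    """
    counts = Counter(r.strip().lower() for r in responses if r)
    answered = sum(counts[c] for c in AGREE | DISAGREE)
    if answered == 0:
        return 0.0, 0.0
    agree = sum(counts[c] for c in AGREE)
    disagree = sum(counts[c] for c in DISAGREE)
    return 100 * agree / answered, 100 * disagree / answered


item = ["Agree"] * 5 + ["Strongly agree"] * 3 + ["Disagree"] * 2 + [None]
print(agreement_summary(item))  # (80.0, 20.0)
```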

Students' USE responses (n = 134) showed that more students than in the previous year (62% versus 45%) thought they were receiving timely feedback with regard to their progress in conducting MMRs.

Peer Review Focus Group

At the end of the semester, an open invitation was extended to the students to attend a focus group session to elicit further comment about the peer assessment activity. One of the authors (J.W.) prepared a series of open-ended questions as the basis for a semi-structured interview and facilitated the session. The questions were:

(1) How did you feel about the process being anonymous? What would be your preference in this regard?

(2) What skills do you think are needed to give peer feedback? How confident are you that both you and your peer-review partner possess them?

(3) Before you completed your peer review, you received feedback from your tutor about your first Medication Review. To what extent did this help you provide feedback to your partner?

(4) What other information do you think was needed to help you with this task?

(5) How do you think this peer review process could be improved?

(6) Could you tell me what you've learned from this peer review process? How much do you think this can be applied in professional life?

(7) Any other comments?

Data from the open-ended written comments on the University's standardized Unit of Study Evaluation questionnaire, together with a recording of the focus group conversation, were summarized thematically. The focus group participants (n = 14) stated that before carrying out this peer assessment task, they had “never” shown their work to other students. They valued the exposure to other students' work because it gave them the opportunity to gain different perspectives on the task. However, the consensus among focus group participants was that they had put more work into their peer assessment than their partner had. In contrast, only one of those present stated that the partner's peer assessment was “unsatisfactory.” Some students also expressed concern about the lack of an objective “right answer” or a “standardized” grade in the review they received. Students were also concerned that being linked in pairs created the possibility of a student “getting revenge” for a poor grade or highly critical feedback. In response to this concern, the grades allocated first and second within each pair were compared, but no significant difference was found. Consistent with the questionnaire responses, focus group participants strongly endorsed maintaining anonymity in the peer assessment. Participants mentioned that some students had revealed their identity in the properties of their uploaded files; however, students did not see this as a cause for concern. Focus group comments also indicated that the use of a grade caused students to concentrate initially on the score they received rather than on their peer's comments. The group agreed that replacing numerical scores with terms such as “good” and “very good” would encourage students to provide more constructive feedback.

DISCUSSION

Our finding that peers gave higher marks than an independent expert is consistent with other studies that found less agreement between student and staff grades when students were inexperienced in the procedure and had not contributed significantly to identifying the assessment criteria.5-7 The quality of the students' feedback to each other varied markedly, whereas the quality of the expert's feedback was more consistent. As a result, the potential value and usefulness of peer feedback would have differed from one recipient student to another. Through the marks they awarded, students also communicated to their peers how well the criteria for the task had been met.

The numerical questionnaire data, together with the students' written comments, are consistent with the generally favorable view of peer assessment held by students that is evident in other studies.6,25,27-29 For peer assessment to work, there must be openness, trust, and clarity among instructors and students; an appropriate scientific framework to make the process a valid exercise25; and assurance that the process is fair and accurate.6 The strong agreement among students that they understood what they needed to do to complete the task is an endorsement of the guidelines that were provided both in class and in the assessment schedule. Also reassuring was the students' strong agreement that the task was a useful experience and that anonymous peer assessment should be used in other subjects.

Most of the negative questionnaire comments were confined to items 3 and 4, indicating that some feedback was not constructive and was either too detailed (or “picky”) or too general to be of use. Although these views were not reflected in the subsequent focus group discussion, previous researchers have noted that poor performers may not accept peer feedback as fair and accurate,10 and that better students tend to be more critical of their weaker peers and are prone to grade higher and to over-comment.25 Nevertheless, with few exceptions, these students agreed that the peer assessment experience was useful and should be used in other course subjects. While acknowledging that a small proportion of the students did not evaluate the experience favorably, the questionnaire data support plans to expand the use of anonymous peer assessment within our different programs of study at both undergraduate and postgraduate levels. The substantial improvement in responses to the USE item concerning timely feedback has also reinforced the value of this peer assessment activity.

The focus group comments, summed up in one student's words as “I worked harder on the peer assessment than my partner did,” revealed a strong perception within the group that partners had not put as much effort into the peer assessment process as participants themselves had. The authors acknowledge that, for practical reasons, the focus group was self-selected and thus may not have been representative of the student cohort. However, when asked whether they were dissatisfied with the quality of the feedback they had received, only one of those present answered in the affirmative. The focus group session also revealed a potential weakness in the design of this activity: pairing students to provide feedback to each other, and including a grade in that feedback, fostered an impression that some partners responded to highly critical feedback with a low “revenge” grade in return. Although the actual grades showed no evidence of this, the future use of grades (as opposed to descriptive feedback only) is being reconsidered. In addition, steps will be taken to ensure that peer feedback is released to all students simultaneously, for example, by requiring students to submit their peer assessments centrally and then releasing all students' peer assessments at the same time. Students also valued the exposure to their peer's work because it gave them a different perspective on the task. This outcome supports previous findings that peer assessment can cultivate a greater understanding of the standards expected, enabling students to judge their performance relative to that of their peers.9

CONCLUSION

This study demonstrated the effectiveness of using anonymous peer feedback in an undergraduate pharmacy course as an additional means of providing constructive feedback on performance. Both the exposure to other students' work and the giving and receiving of peer feedback were perceived as valuable by the students. Students also reported confidence in the reliability of their peers' feedback. Peer assessment can promote the genuine interchange of ideas and demonstrate to students that their experiences are valued and their judgements respected. A future controlled study using more objective measures of student learning could determine whether students gained a deeper understanding of the medication review process through peer assessment of MMRs.

ACKNOWLEDGMENTS

The authors would like to acknowledge the research assistance provided by Lindsay Walker, Gabrielle Smith, and Carlene Smith.

Appendix 1. Schedule for Peer Assessment of Medication Management Review

Examples of marking guidelines that accompanied the peer assessment schedule:

REFERENCES

- 1. Boud D. Enhancing learning through self assessment. London: Kogan Page; 1995.

- 2. Fry H, Ketteridge S, Marshall S. A handbook for teaching and learning in higher education: enhancing academic practice. London: Kogan Page; 1999.

- 3. James R, McInnis C, Devlin M, University of Melbourne. Australian Universities Teaching Committee. Assessing learning in Australian universities: ideas, strategies and resources for quality in student assessment. Melbourne, Canberra: Centre for the Study of Higher Education; 2002. http://www.cshe.unimelb.edu.au/assessinglearning/docs/AssessingLearning.pdf. Accessed May 24, 2010.

- 4. Boud D, Cohen R, Sampson J. Peer learning and assessment. Assess Eval Higher Educ. 1999;24(4):413–426.

- 5. Falchikov N. Peer feedback marking: developing peer assessment. Innovat Educ Teach Int. 1995;32(2):175–187.

- 6. Dochy F, Segers M, Sluijsmans D. The use of self-, peer and co-assessment in higher education: a review. Stud Higher Educ. 1999;24(3):331–350.

- 7. Topping KJ, Smith EF, Swanson I, Elliot A. Formative peer assessment of academic writing between postgraduate students. Assess Eval Higher Educ. 2000;25(2):149–166.

- 8. Brown S, Dove P. Self and peer assessment. Birmingham, UK: Standing Conference on Educational Development; 1991.

- 9. Zlatic TD. Abilities-based assessment within pharmacy education: preparing students for practice of pharmaceutical care. J Pharm Teach. 2000;7(3):5–27.

- 10. Topping K. Peer assessment between students in colleges and universities. Rev Educ Res. 1998;68(3):249–276.

- 11. Fry H, Ketteridge S, Marshall S, editors. A Handbook for Teaching and Learning in Higher Education: Enhancing Academic Practice. 2nd ed. London; Sterling, VA: Kogan Page; 2003.

- 12. van den Berg I, Admiraal W, Pilot A. Peer assessment in university teaching: evaluating seven course designs. Assess Eval Higher Educ. 2006;31(1):19–36.

- 13. Van Lehn KA, Chi MTH, Baggett W, Murray RC. Progress report: towards a theory of learning during tutoring. Pittsburgh, PA: Learning Research and Development Center, University of Pittsburgh; 1995.

- 14. Sluijsmans D, Dochy F, Moerkerke G. Creating a learning environment by using self-, peer- and co-assessment. Learning Environments Res. 1998;1(3):293–319.

- 15. Cho K, Schunn CD, Wilson RW. Validity and reliability of scaffolded peer assessment of writing from instructor and student perspectives. J Educ Psychol. 2006;98(4):891–901.

- 16. Medication Management Reviews. Australian Government Department of Health and Ageing; 2008. http://www.quitnow.info.au/internet/main/publishing.nsf/Content/medication_management_reviews.htm. Accessed May 24, 2010.

- 17. Malcolmson C, Shaw J. The use of self- and peer-contribution assessments within a final year pharmaceutics assignment. Pharm Educ. 2005;5(3):169–174.

- 18. Austin Z, Gregory PAM. Evaluating the accuracy of pharmacy students' self-assessment skills. Am J Pharm Educ. 2007;71(5):Article 89. doi:10.5688/aj710589.

- 19. Banga AK. Assessment of group projects beyond cooperative group effort. J Pharm Teach. 2005;11(2):115–123.

- 20. Steensels C, Leemans L, Buelens H, Laga E, Lecoutere A, Laekeman G, et al. Peer assessment: a valuable tool to differentiate between student contributions to group work? Pharm Educ. 2006;6(2):111–118.

- 21. Marriott JL. Use and evaluation of “virtual” patients for assessment of clinical pharmacy undergraduates. Pharm Educ. 2007;7(4):341–349.

- 22. Shah R. Improving undergraduate communication and clinical skills: personal reflections of a real world experience. Pharm Educ. 2004;4(1):1–6.

- 23. Falchikov N, Goldfinch J. Student peer assessment in higher education: a meta-analysis comparing peer and teacher marks. Rev Educ Res. 2000;70(3):287–322.

- 24. Miller PJ. The effect of scoring criteria specificity on peer and self-assessment. Assess Eval Higher Educ. 2003;28(4):383–394.

- 25. Davies P. Peer assessment: judging the quality of students' work by comments rather than marks. Innovat Educ Teach Int. 2006;43(1):69–82.

- 26. About the Unit of Study Evaluation System. Institute for Teaching and Learning, The University of Sydney. http://www.itl.usyd.edu.au/use/. Accessed May 24, 2010.

- 27. Orsmond P, Merry S, Reiling K. The importance of marking criteria in the use of peer assessment. Assess Eval Higher Educ. 1996;21(3):239–250.

- 28. Cheng W, Warren M. Having second thoughts: student perceptions before and after a peer assessment exercise. Stud Higher Educ. 1997;22(2):233–240.

- 29. Hanrahan SJ, Isaacs G. Assessing self- and peer-assessment: the students' views. Higher Educ Res Dev. 2001;20(1):53–70.