Abstract

Tool use depends on processes represented in distinct regions of left parietal cortex. We studied the role of visual experience in shaping neural specificity for tools in parietal cortex by using functional magnetic resonance imaging with sighted, late-blind, and congenitally blind participants. Using a region-of-interest approach in which tool-specific areas of parietal cortex were identified in sighted participants viewing pictures, we found that specificity in blood-oxygen-level-dependent responses for tools in the left inferior parietal lobule and the left anterior intraparietal sulcus is independent of visual experience. These findings indicate that motor- and somatosensory-based processes are sufficient to drive specificity for representations of tools in regions of parietal cortex. More generally, some aspects of the organization of the dorsal object-processing stream develop independently of the visual information that forms the major sensory input to that pathway in sighted individuals.

Keywords: tools, blind humans, conceptual knowledge, dorsal visual pathway, fMRI

Complex tool use requires the integration of diverse types of information. Consider the simple act of picking up a fork and eating. Such an action requires visually recognizing the fork as the target of the action, transporting and shaping the hand according to the spatial position and volumetric properties of the fork, and then retrieving and implementing complex action knowledge about how to manipulate the fork according to its function. Evidence from functional imaging and neuropsychology indicates that the neural systems supporting tool use are principally localized within left parietal and frontal cortices. Within parietal cortex, an important distinction has been made between regions in posterior parietal cortex along the intraparietal sulcus that parse visual information for the purposes of object-directed reaching and grasping (e.g., Binkofski et al., 1998; Culham et al., 2003; Frey, Vinton, Norlund, & Grafton, 2005; Goodale & Milner, 1992; Pisella, Binkofski, Lasek, Toni, & Rossetti, 2006; Rizzolatti & Matelli, 2003; Ungerleider & Mishkin, 1982) and the left inferior parietal lobule, which is critical for processing the complex actions required for tool use (e.g., Boronat et al., 2005; Canessa et al., 2008; Goldenberg, 2009; Heilman, Rothi, & Valenstein, 1982; Hermsdörfer, Terlinden, Mühlau, Goldenberg, & Wohlschläger, 2007; Johnson-Frey, 2004; Kellenbach, Brett, & Patterson, 2003; Rumiati et al., 2004).

To date, research using functional imaging to study object representations within dorsal-stream structures has been based on sighted individuals and has generally used experimental paradigms involving visually presented objects (e.g., Chao & Martin, 2000; Johnson-Frey, Newman-Norlund, & Grafton, 2005; Mahon et al., 2007; Rumiati et al., 2004; but see Noppeney, Price, Penny, & Friston, 2006, for work with auditory words in sighted participants). A common finding is that viewing manipulable objects, such as tools and utensils, compared with viewing large, nonmanipulable nonliving things, animals, or faces leads to differential blood-oxygen-level-dependent (BOLD) responses in regions of left parietal cortex (for a review, see Martin, 2007). An important issue that is not addressed by those studies is whether the observed neural specificity for manipulable objects in regions of parietal cortex requires visual experience with those objects.

On the basis of the available functional imaging and neuropsychological research, it can be hypothesized that specialization for manipulable objects in regions of parietal cortex is driven, in part, by interactions between those regions and motor- and somatosensory-based processes in neighboring brain areas. A previous study on hand movements in congenitally blind and sighted participants (Fiehler, Burke, Bien, Röder, & Rösler, 2008) found increased bilateral BOLD responses in primary somatosensory cortex independent of the visual experience of participants (see also Lingnau, Gesierich, & Caramazza, 2009, for data from sighted participants). Those data indicate that the neural systems supporting kinesthetic feedback during manual movements do not require visual experience in order to develop. Furthermore, the anterior intraparietal sulcus, the terminal projection of the dorsal visual pathway, has strong reciprocal connections with frontal motor areas (for reviews, see Geyer, Matelli, Luppino, & Zilles, 2000; Rizzolatti & Craighero, 2004). It is also known that damage to the left inferior parietal lobule can result in an impairment for manipulating tools, without necessarily impairing (visually based) object-directed grasping and reaching (for a review, see Johnson-Frey, 2004).

Thus, we hypothesized that processes in the left inferior parietal lobule that support complex object-associated actions would be relatively independent of the visual experience of participants. We tested this hypothesis using functional magnetic resonance imaging (fMRI) to study BOLD responses to tools, animals, and nonmanipulable objects in sighted, late-blind, and congenitally blind participants while they were performing a size-judgment task on auditorily presented words. In order to independently localize “tool-preferring” regions of the left parietal lobule using the standard picture-viewing approach, we also ran a picture-viewing localizer with sighted participants.

Method

Informed consent was obtained in writing (from sighted participants) and verbally (from blind participants) under approved University of Trento and Harvard University protocols for the use of human participants in research.

Auditory size-judgment task

Participants performed an auditory size-judgment task in the MRI scanner. The items for this task came from three semantic categories: tools (n = 24; e.g., saw, corkscrew, scissors, fork), animals (n = 24; e.g., butterfly, horse, cat, turtle), and nonmanipulable objects (n = 24; e.g., bed, table, truck, fence; see Mahon et al., 2007, for a detailed discussion of the classification of items by semantic category). Every trial of the experiment consisted of a miniblock in which 6 spoken words, all from the same category, were presented. The duration of each miniblock was 20 s. The order of the 6 items within a miniblock, the assignment of the 6 items (out of the pool of 24 items) to each miniblock, and the order of miniblocks were random, with the restriction that no two consecutive miniblocks came from the same semantic category (stimulus type).

Within every functional run, all stimuli were presented once, for a total of four miniblocks per semantic category (and 12 miniblocks in total). Each run lasted approximately 10 min, and constituted a replication of the full experimental design. Sighted participants (S1–S7) each completed three functional runs, whereas late-blind participants (LB1–LB3) each completed four runs. Congenitally blind participants completed either four runs (CB1) or five runs (CB2–CB3).
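The randomization constraints described above can be illustrated with a minimal Python sketch. It is not the authors' stimulus-presentation code: the function name `make_run`, the placeholder item labels, and the use of rejection sampling to avoid consecutive same-category miniblocks are all assumptions made for illustration.

```python
import random

CATEGORIES = ("tools", "animals", "nonmanipulable")
ITEMS_PER_MINIBLOCK = 6


def make_run(item_pools, rng):
    """Build one run: a list of (category, [6 items]) miniblocks.

    item_pools maps each category to its 24 items. Every item is used
    exactly once per run, giving 4 miniblocks per category (12 in total).
    """
    # Randomly assign each category's items to miniblocks of 6.
    miniblocks = {}
    for category in CATEGORIES:
        items = list(item_pools[category])
        rng.shuffle(items)
        miniblocks[category] = [
            items[i:i + ITEMS_PER_MINIBLOCK]
            for i in range(0, len(items), ITEMS_PER_MINIBLOCK)
        ]

    # Assumed approach: reshuffle the category order until no two
    # consecutive miniblocks share a category (rejection sampling).
    order = [c for c in CATEGORIES for _ in miniblocks[c]]
    while True:
        rng.shuffle(order)
        if all(a != b for a, b in zip(order, order[1:])):
            break

    return [(category, miniblocks[category].pop()) for category in order]


if __name__ == "__main__":
    # Placeholder labels stand in for the real spoken words.
    pools = {c: [f"{c}_{i}" for i in range(24)] for c in CATEGORIES}
    for category, words in make_run(pools, random.Random(1)):
        print(category, words)
```

Rejection sampling is chosen here only because it is easy to verify; any procedure that yields a random order without adjacent repeats would satisfy the stated restriction.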

Participants’ task was to compare the size of the second through sixth items in each miniblock with the size of the first. If all six objects (referred to by the words) had more or less the same size, participants responded by pushing a button with the index finger of the right hand. If at least one of the objects was different in size from the first, participants responded with the index finger of the left hand. At jittered intervals after the offset of the last of the six words, participants were presented with an auditory response cue (a tone lasting 200 ms; jittering was conducted in 0.5-s steps from 2 to 8 s and was distributed with hyperbolic density). Participants were instructed to make their response as soon as they heard the auditory response cue, but not before. The next trial began 20 s after the onset of the auditory response cue (i.e., there was no auditory stimulation for 20 s).
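As a rough illustration of the jittered response cue, the sketch below enumerates the 13 possible delays (2 to 8 s in 0.5-s steps) and samples from them. The text does not define "hyperbolic density" further, so the weighting used here (probability proportional to 1/delay, so that shorter delays are more frequent) is an assumption, as are the function names.

```python
import random


def jitter_values(start=2.0, stop=8.0, step=0.5):
    """Possible cue delays in seconds: 2.0, 2.5, ..., 8.0."""
    n = int(round((stop - start) / step)) + 1
    return [start + i * step for i in range(n)]


def hyperbolic_weights(values):
    """Assumed weighting: probability proportional to 1 / delay."""
    raw = [1.0 / v for v in values]
    total = sum(raw)
    return [w / total for w in raw]


def sample_jitter(rng, n_trials):
    """Draw one cue delay per miniblock."""
    values = jitter_values()
    return rng.choices(values, weights=hyperbolic_weights(values), k=n_trials)


if __name__ == "__main__":
    # One delay for each of the 12 miniblocks in a run.
    print(sample_jitter(random.Random(0), 12))
```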

The auditory size-judgment task ensured that participants attended to the stimuli and was designed so that both sighted and blind participants could complete it. Because the task is based on a relative judgment, and because assignment of items to miniblocks was random (and thus different for every run, both within and across participants), there was no objective level for “correct” performance. The three groups of participants judged similar percentages of miniblocks to be composed of items that were roughly the same size: Sighted participants judged 25.2%, congenitally blind participants judged 26.5%, and late-blind participants judged 17.4% of trials as meeting this criterion.

Category-localizer experiment

After completing the auditory size-judgment task, the 7 sighted participants completed a standard category-localizer task in which they viewed pictures corresponding to the spoken word cues used in the auditory size-judgment task. Thirteen participants who did not participate in the auditory size-judgment task also completed the category-localizer experiment (see Methods in the Supplemental Material available online for details of the design of the localizer task).

Imaging and analysis

Magnetic resonance data were collected at the Center for Mind/Brain Sciences, University of Trento, on a Bruker BioSpin MedSpec 4-T scanner (Bruker BioSpin GmbH, Rheinstetten, Germany), using standard imaging procedures, and were analyzed using BrainVoyager Version 1.9 (Goebel, Esposito, & Formisano, 2006). After we excluded the first two volumes of each run, preprocessing consisted (in the following order) of slice-time correction, motion correction, and linear-trend removal in the temporal domain (cutoff: three cycles within the run). Functional and anatomical data were normalized to Talairach space (Talairach & Tournoux, 1988), and functional data were spatially smoothed with a 4.5-mm full-width at half-maximum filter (for full details of the scanning parameters and analysis procedures, see Methods in the Supplemental Material; see also Mahon, Anzellotti, Schwarzbach, Zampini, & Caramazza, 2009).

We used a random-effects analysis to analyze the data from the group of 20 participants who viewed pictures. The analyses of the remaining data sets were fixed-effects analyses with separate study (i.e., run) predictors. This approach allowed us to define regions of interest (ROIs) using population-level statistics (random effects) and then test within those ROIs using data from the participants who completed the auditory size-judgment task. This analysis approach does not permit generalization of the data from the blind participants to the population of blind individuals, but rather supports inferences about whether visual experience is necessary in order for the effects to be present. We report results of analyses of individual participants’ data in Figures S1 and S2 in the Supplemental Material.

The principal statistical contrast of interest consisted of contrasting tool stimuli against animal stimuli and nonmanipulable-object stimuli (with animal and nonmanipulable-object stimuli collapsed together, and tools and nontools weighted equally). This contrast was carried out separately for each data set (sighted participants viewing pictures, sighted participants performing auditory size judgments, late-blind participants performing auditory size judgments, and congenitally blind participants performing auditory size judgments). In order to test the experimental hypothesis and to avoid issues of nonindependence in the definition of ROIs (for a discussion of this issue, see Kriegeskorte, Simmons, Bellgowan, & Baker, 2009), we defined three ROIs in parietal cortex on the basis of data from sighted participants viewing pictures (using a threshold of p < .05, corrected for false discovery rate for the entire brain volume; Genovese, Lazar, & Nichols, 2002). We then tested BOLD responses within those ROIs for the auditory size-judgment task, separately for each participant group. For the overlap analysis, we computed the statistical contrast maps of tool stimuli versus the other stimuli within each data set separately. For the data sets from the auditory size-judgment task, we used a threshold of p < .005 (corrected for false discovery rate for the entire brain volume).
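Two ingredients of this analysis can be sketched in a few lines of Python. The first function applies balanced contrast weights (tools +1, animals −0.5, nonmanipulable objects −0.5), which is one common way to weight tools and nontools equally; the exact weights entered in BrainVoyager are not given in the text. The second function implements the basic Benjamini-Hochberg step-up rule for false-discovery-rate thresholding; whether the software used exactly this variant is likewise an assumption. Function names and example numbers are hypothetical.

```python
def tool_contrast(beta_tools, beta_animals, beta_nonmanip):
    """Tools versus the two nontool categories collapsed together.

    The weights sum to zero, so equal responses to all three stimulus
    types give a contrast value of 0.
    """
    weights = (1.0, -0.5, -0.5)  # assumed balanced weighting
    betas = (beta_tools, beta_animals, beta_nonmanip)
    return sum(w * b for w, b in zip(weights, betas))


def fdr_threshold(p_values, q):
    """Benjamini-Hochberg cutoff for a list of voxelwise p values.

    Returns the largest sorted p value p(i) satisfying p(i) <= (i / m) * q,
    or None if no voxel survives. Voxels with p at or below the returned
    cutoff are declared significant at FDR level q.
    """
    m = len(p_values)
    cutoff = None
    for i, p in enumerate(sorted(p_values), start=1):
        if p <= (i / m) * q:
            cutoff = p
    return cutoff


if __name__ == "__main__":
    # Invented beta estimates and p values, for illustration only.
    print(tool_contrast(1.0, 0.5, 0.5))
    print(fdr_threshold([0.001, 0.008, 0.039, 0.041, 0.9], q=0.05))
```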

Results

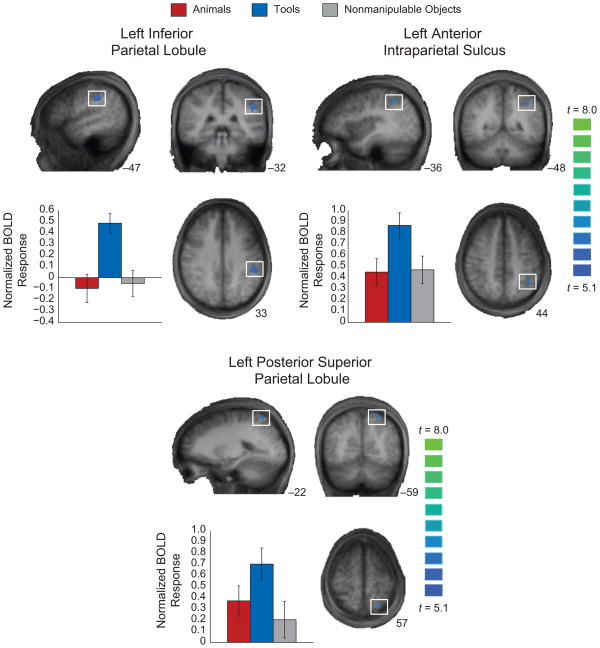

Three regions within the left parietal lobule showed differential BOLD responses for tool stimuli compared with the other object types in sighted participants viewing pictures (see Fig. 1), replicating previous studies. The first region, in the left inferior parietal lobule, is critical for processing complex object-associated actions (e.g., Heilman et al., 1982; Johnson-Frey, 2004; Mahon et al., 2007; Rumiati et al., 2004). The second region, in the left anterior intraparietal sulcus, is important for calculating the volumetric properties of objects relevant to shaping the hand during object grasping (e.g., Binkofski et al., 1998; Frey et al., 2005). The third region, in a more posterior and superior region of parietal cortex than the previous two regions, is important for calculating visuo-motor information relevant for transporting the hand to the correct spatial location of an object (e.g., Culham et al., 2003; Goodale & Milner, 1992; Ungerleider & Mishkin, 1982). The locations (Talairach & Tournoux, 1988) and strength of the effects for the peak voxels within the three ROIs were as follows: left inferior parietal cortex (−51, −37, 34), t = 7.01, p < 10⁻⁵; left anterior intraparietal sulcus (−39, −49, 43), t = 7.07, p < 10⁻⁵; and left posterior superior parietal cortex (−24, −61, 55), t = 7.32, p < 10⁻⁵. As in previous studies, all three regions showed larger BOLD responses when sighted participants viewed pictures of tools than when they viewed pictures of the other stimuli, despite the fact that participants were not instructed to perform any overt or covert object-directed actions. These three regions in normalized space served as ROIs for analyses of the data from the sighted, late-blind, and congenitally blind participants who performed the auditory size-judgment task.

Fig. 1.

Differential blood-oxygen-level-dependent (BOLD) responses for tool stimuli in sighted participants viewing pictures. The brain images show three regions of interest (ROIs) within left parietal cortex. These ROIs were defined by contrasting tool stimuli against the other stimulus types (threshold of p < .05, corrected for false discovery rate for the whole brain volume). The bar graphs show BOLD estimates for the three stimulus types in each of these regions for the same data set; statistical tests were not performed over the data in these graphs, as those data are not independent of the ROI selection. Error bars reflect the standard errors of the mean. The locations and statistics for the effects are provided in the Results section. Talairach coordinates for the respective dimensions are shown adjacent to (bottom right of) the anatomical images. BOLD responses are plotted as normalized values (z scores).

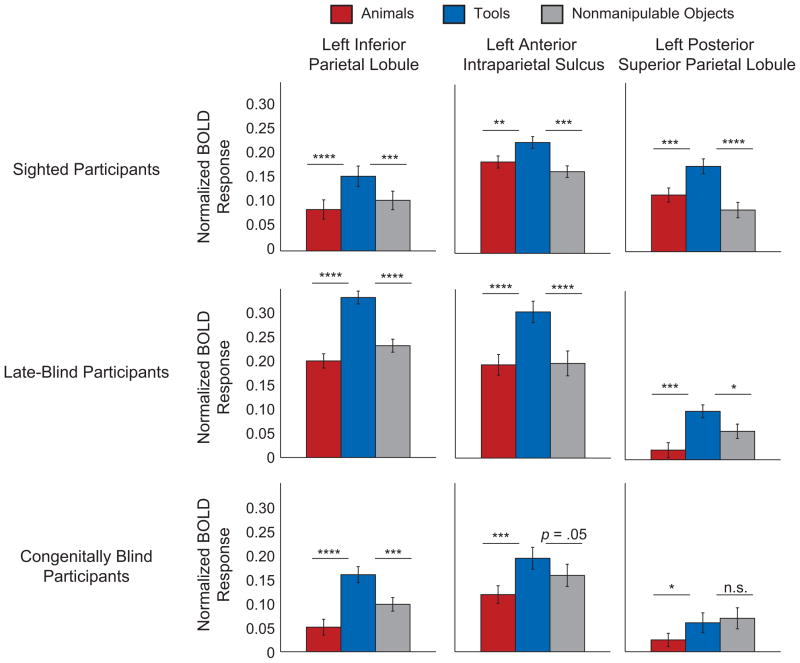

We tested the hypothesis that visual experience is not necessary for differential BOLD responses for tool stimuli in those ROIs. As illustrated in Figure 2, sighted and blind participants performing the auditory size-judgment task showed the same pattern of differential BOLD responses for tool stimuli compared with the other object types in the left inferior parietal lobule and the left anterior intraparietal sulcus. The pattern of differential BOLD responses for tool stimuli in those regions was observed in both late-blind and congenitally blind participants. In the posterior superior parietal ROI in congenitally blind participants, BOLD responses did not differ between tool stimuli and nonmanipulable objects, whereas both of these object types evoked larger BOLD responses than did animal stimuli (see Table 1 for t values and Fig. 2 for normalized BOLD responses by condition).

Fig. 2.

Mean blood-oxygen-level-dependent (BOLD) responses during the auditory size-judgment task as a function of stimulus type. Results are shown separately for each region of interest (defined on the basis of sighted participants viewing pictures; see Fig. 1) and participant group. Error bars reflect standard errors of the mean. Asterisks indicate a significant difference in BOLD responses (*p < .05, **p < .01, ***p < .001, ****p < .00001).

Table 1.

Values of t Statistics for the Region-of-Interest Analyses of the Auditory Size-Judgment Task

| Region of interest | Tools > animals: Sighted participants | Tools > animals: Late-blind participants | Tools > animals: Congenitally blind participants | Tools > nonmanipulable objects: Sighted participants | Tools > nonmanipulable objects: Late-blind participants | Tools > nonmanipulable objects: Congenitally blind participants |
|---|---|---|---|---|---|---|
| Left inferior parietal lobule | 4.6**** | 6.5**** | 6.0**** | 3.3*** | 5.0**** | 3.4*** |
| Left anterior intraparietal sulcus | 2.7** | 5.7**** | 4.1*** | 3.9*** | 5.6**** | 2.0* |
| Left posterior superior parietal lobule | 3.6*** | 4.1*** | 2.0* | 5.8**** | 2.2* | −0.5 |

Note: The region-of-interest analyses contrasted blood-oxygen-level-dependent responses for tool stimuli versus the other stimuli (animals and nonmanipulable objects) for each participant group and region of interest. Degrees of freedom on the t statistics were as follows: sighted participants: 4,907; late-blind participants: 2,808; congenitally blind participants: 3,261.

*p ≤ .05. **p < .01. ***p < .001. ****p < .00001.
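The relation between the t values in Table 1 and the significance markers can be checked approximately. With several thousand degrees of freedom, the t distribution is very close to the standard normal, so the sketch below uses a normal approximation from the Python standard library rather than an exact t distribution. It also assumes two-tailed tests, which the table note does not state explicitly. The function names are hypothetical.

```python
import math


def two_tailed_p_normal(t):
    """Two-tailed p value, treating t as a standard normal deviate.

    This is an approximation that is reasonable only because the degrees
    of freedom here are in the thousands.
    """
    return math.erfc(abs(t) / math.sqrt(2.0))


def stars(p):
    """Map a p value onto the significance markers used in Table 1."""
    if p < 0.00001:
        return "****"
    if p < 0.001:
        return "***"
    if p < 0.01:
        return "**"
    if p <= 0.05:
        return "*"
    return ""


if __name__ == "__main__":
    # Rounded t values from the congenitally blind columns of Table 1.
    for t in (6.0, 4.1, 2.0, 3.4, -0.5):
        p = two_tailed_p_normal(t)
        print(f"t = {t:5.1f}  p ~ {p:.2e}  {stars(p)}")
```

Because the tabled t values are rounded to one decimal place, a check of this kind can only confirm that the reported markers are plausible, not reproduce the exact p values.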

As described in the Method section, fixed-effects analyses were used to analyze the data sets from the auditory size-judgment task. We chose this analysis approach because the sample sizes of the groups of participants performing the auditory size-judgment task were smaller than what would be required for random-effects analysis. One analytical concern that attends the use of fixed-effects analyses, however, is that they may yield significant effects at the group level despite substantial heterogeneity in effects for individual participants. To address this concern, we conducted a series of individual-participant ROI analyses, using the same ROIs as for the group-level analyses. These analyses (shown in Fig. S1 in the Supplemental Material) demonstrated that the pattern of group-level fixed effects in congenitally and late-blind participants was present at the individual-participant level.

Somewhat surprisingly, the individual-participant ROI analyses of auditory size judgments revealed substantial variability among sighted participants in whether or not they showed differential BOLD responses for tool stimuli. These differences in results between the fixed-effects and individual-participant analyses for the sighted participants highlight the potential frailty of fixed-effects analyses, and, in particular, their susceptibility to being carried by a few participants. However, the discrepancy between the findings for sighted participants in these two analyses does not undermine the conclusion that visual experience is not necessary for tool specificity to emerge. That conclusion is based on the results observed for congenitally blind and late-blind participants, which were not different at the group and individual-participant levels (Figs. 2 and S1). Furthermore, the ROIs themselves were initially defined on the basis of sighted participants viewing pictures, so there is no question about whether these ROIs show the theoretically predicted patterns of neural specificity. An issue for future research is whether the use of auditory stimulation in sighted participants, relative to using visual stimuli, leads to increased intersubject heterogeneity in the strength and locations of preferences for tool stimuli in parietal cortices.

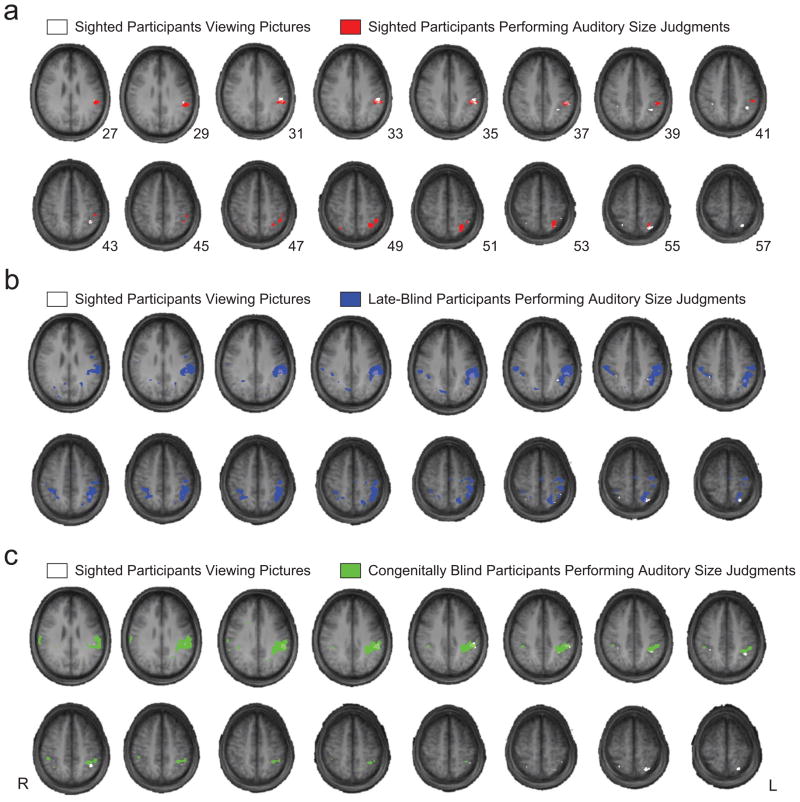

Finally, in order to have a broader view of regions of parietal cortex that show differential BOLD responses for tool stimuli in the three groups of participants, we created a series of overlap maps. Specifically, we overlaid the statistical contrast map (tool stimuli > other object types) for the sighted participants viewing pictures with the corresponding maps for the three groups of participants performing auditory size judgments (see Fig. 3). The results of the overlap analysis were consistent with the ROI analyses. The greatest overlap was observed in the left inferior parietal lobule and the left anterior intraparietal sulcus, and there was less overlap in posterior superior parietal cortex (see Fig. S2 in the Supplemental Material for individual participants’ contrast maps). This analysis showed that the activation clusters for the effect of interest (i.e., tool specificity) were relatively restricted to the expected regions when contrasts were defined within each data set and there were no ROI restrictions.

Fig. 3.

Overlap analysis of statistical contrast maps. Statistical contrast maps for all groups of participants were defined by contrasting responses to tool stimuli against responses to the other stimulus types, for each data set separately. The statistical contrast maps for sighted participants viewing pictures (see also Fig. 1) were then overlaid with those for (a) sighted, (b) late-blind, and (c) congenitally blind participants performing auditory size judgments. The color overlays indicate all voxels that were at or above the statistical threshold for each group of participants (sighted participants viewing pictures: p < .05, corrected for false discovery rate for the whole brain volume; all other data sets: p < .005, corrected for false discovery rate for the whole brain volume). L = left; R = right.
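The logic of the overlap maps can be sketched as a set intersection over suprathreshold voxels. The example assumes each data set is available as a mapping from voxel coordinates to an FDR-corrected p value; that data layout, the function names, and the toy values are illustrative assumptions rather than a description of the BrainVoyager workflow.

```python
def suprathreshold(corrected_p_map, threshold):
    """Set of voxels whose FDR-corrected p value is at or below threshold."""
    return {voxel for voxel, p in corrected_p_map.items() if p <= threshold}


def overlap(picture_map, auditory_map,
            picture_threshold=0.05, auditory_threshold=0.005):
    """Split voxels into both-maps, picture-only, and auditory-only sets.

    Default thresholds follow the values reported for the picture-viewing
    and auditory size-judgment data sets.
    """
    pictures = suprathreshold(picture_map, picture_threshold)
    auditory = suprathreshold(auditory_map, auditory_threshold)
    return {
        "both": pictures & auditory,
        "pictures_only": pictures - auditory,
        "auditory_only": auditory - pictures,
    }


if __name__ == "__main__":
    # Toy voxel indices and invented corrected p values.
    picture_map = {(0, 0, 0): 0.001, (1, 0, 0): 0.02,
                   (2, 0, 0): 0.04, (3, 0, 0): 0.5}
    auditory_map = {(0, 0, 0): 0.0005, (1, 0, 0): 0.004,
                    (2, 0, 0): 0.2, (3, 0, 0): 0.9}
    for name, voxels in overlap(picture_map, auditory_map).items():
        print(name, sorted(voxels))
```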

Discussion

A large body of research across functional imaging and neuropsychology indicates that different regions of parietal cortex are required for different aspects of complex tool use. In sighted participants, those regions show differential BOLD responses during passive viewing of tool stimuli compared with a range of other object types. We replicated that basic finding, in that regions in the inferior parietal lobule, the anterior intraparietal sulcus, and the posterior superior parietal lobule all showed differential BOLD responses for tool stimuli compared with nontool stimuli. When those ROIs, as defined over sighted participants viewing pictures, were tested in sighted, late-blind, and congenitally blind participants performing an auditory size-judgment task, the same pattern of differential BOLD responses for tool stimuli was observed. These data indicate that the motor and somatosensory demands of complex object use are sufficient to drive neural specificity for tools (compared with animals and nonmanipulable objects) within the left inferior parietal lobule and the left anterior intraparietal sulcus. The lack of a dissociation between tool stimuli and nonmanipulable objects in the posterior superior parietal ROI in congenitally blind participants may derive from the fact that congenitally blind individuals do reach for and touch large nonmanipulable objects (e.g., tables, cars), and the possibility that plasticity within the congenitally blind brain remaps nonvisual inputs to that region of parietal cortex. However, further empirical work is required in order to fully understand the effect of visual deprivation on BOLD responses in posterior superior parietal cortex.

The central issue that is framed by these findings is how plasticity of function within the brains of blind humans remaps the available sensory inputs (e.g., audition, touch) so that they may guide action in the absence of vision. There is likely to be massive reorganization of the way in which the dorsal object-processing stream extracts action-relevant information about objects in the absence of vision. That is because the sensory modality of touch, unlike vision, is not a sense-at-a-distance, and audition, unlike vision and touch, does not convey volumetric information about objects. An important issue for further research concerns the nature of sensory inputs to the dorsal object-processing route in individuals who have not had visual experience and how the brain is able to negotiate the constraints imposed by motor and somatosensory experience in the absence of visual stimulation. An understanding of these issues will contribute to a broader theory about the principles that determine the organization and representation of object knowledge in the brain.


Acknowledgments

We thank Gianpaolo Basso and Manuela Orsini for technical assistance with the brain imaging, Stefano Anzellotti for his assistance running participants, the Unione Italiana Ciechi sezione di Trento for their help in recruiting blind participants, and Jessica Cantlon for her comments on the manuscript. B.Z.M. is now at the Department of Brain & Cognitive Sciences at the University of Rochester.

Funding

B.Z.M. was supported in part by a National Science Foundation Graduate Research Grant and an Eliot Dissertation Completion Grant. This research was supported in part by Grant DC006842 from the National Institute on Deafness and Other Communication Disorders (A.C.) and by a grant from the Fondazione Cassa di Risparmio di Trento e Rovereto. Additional support was provided by Norman and Arlene Leenhouts.

Footnotes

Declaration of Conflicting Interests

The authors declared that they had no conflicts of interest with respect to their authorship or the publication of this article.

Additional supporting information may be found at http://pss.sagepub.com/content/by/supplemental-data

References

- Binkofski F, Dohle C, Posse S, Stephan KM, Hefter H, Seitz RJ, Freund HJ. Human anterior intraparietal area subserves prehension: A combined lesion and functional MRI activation study. Neurology. 1998;50:1253–1259. doi: 10.1212/wnl.50.5.1253.

- Boronat CB, Buxbaum LJ, Coslett HB, Tang K, Saffran EM, Kimberg DY, Detre JA. Distinctions between manipulation and function knowledge of objects: Evidence from functional magnetic resonance imaging. Cognitive Brain Research. 2005;23:361–373. doi: 10.1016/j.cogbrainres.2004.11.001.

- Canessa N, Borgo F, Cappa SF, Perani D, Falini A, Buccino G, et al. The different neural correlates of action and functional knowledge in semantic memory: An fMRI study. Cerebral Cortex. 2008;18:740–751. doi: 10.1093/cercor/bhm110.

- Chao LL, Martin A. Representation of manipulable manmade objects in the dorsal stream. NeuroImage. 2000;12:478–484. doi: 10.1006/nimg.2000.0635.

- Culham JC, Danckert SL, DeSouza JF, Gati JS, Menon RS, Goodale MA. Visually guided grasping produces fMRI activation in dorsal but not ventral stream brain areas. Experimental Brain Research. 2003;153:180–189. doi: 10.1007/s00221-003-1591-5.

- Fiehler K, Burke M, Bien S, Röder B, Rösler F. The human dorsal action control system develops in the absence of vision. Cerebral Cortex. 2008;19:1–12. doi: 10.1093/cercor/bhn067.

- Frey SH, Vinton D, Norlund R, Grafton ST. Cortical topography of human anterior intraparietal cortex active during visually guided grasping. Cognitive Brain Research. 2005;23:397–405. doi: 10.1016/j.cogbrainres.2004.11.010.

- Genovese CR, Lazar NA, Nichols T. Thresholding of statistical maps in functional neuroimaging using the false discovery rate. NeuroImage. 2002;15:870–878. doi: 10.1006/nimg.2001.1037.

- Geyer S, Matelli M, Luppino G, Zilles K. Functional neuroanatomy of the primate isocortical motor system. Anatomy and Embryology. 2000;202:443–474. doi: 10.1007/s004290000127.

- Goebel R, Esposito F, Formisano E. Analysis of functional image analysis contest (FIAC) data with BrainVoyager QX: From single-subject to cortically aligned group general linear model analysis and self-organizing group independent component analysis. Human Brain Mapping. 2006;27:392–401. doi: 10.1002/hbm.20249.

- Goldenberg G. Apraxia and the parietal lobes. Neuropsychologia. 2009;47:1449–1459. doi: 10.1016/j.neuropsychologia.2008.07.014.

- Goodale MA, Milner D. Separate visual pathways for perception and action. Trends in Neurosciences. 1992;15:20–25. doi: 10.1016/0166-2236(92)90344-8.

- Heilman KM, Rothi LJ, Valenstein E. Two forms of ideomotor apraxia. Neurology. 1982;32:342–346. doi: 10.1212/wnl.32.4.342.

- Hermsdörfer J, Terlinden G, Mühlau M, Goldenberg G, Wohlschläger AM. Neural representations of pantomimed and actual tool use: Evidence from an event-related fMRI study. NeuroImage. 2007;36:T109–T118. doi: 10.1016/j.neuroimage.2007.03.037.

- Johnson-Frey SH. The neural basis of complex tool use in humans. Trends in Cognitive Sciences. 2004;8:71–78. doi: 10.1016/j.tics.2003.12.002.

- Johnson-Frey SH, Newman-Norlund R, Grafton ST. A distributed left hemisphere network active during planning of everyday tool use skills. Cerebral Cortex. 2005;15:681–695. doi: 10.1093/cercor/bhh169.

- Kellenbach ML, Brett M, Patterson K. Actions speak louder than functions: The importance of manipulability and action in tool representation. Journal of Cognitive Neuroscience. 2003;15:20–46. doi: 10.1162/089892903321107800.

- Kriegeskorte N, Simmons WK, Bellgowan SF, Baker CI. Circular analysis in systems neuroscience: The dangers of double dipping. Nature Neuroscience. 2009;12:535–540. doi: 10.1038/nn.2303.

- Lingnau A, Gesierich B, Caramazza A. Asymmetric fMRI adaptation reveals no evidence for mirror neurons in humans. Proceedings of the National Academy of Sciences, USA. 2009;106:9925–9930. doi: 10.1073/pnas.0902262106.

- Mahon BZ, Anzellotti S, Schwarzbach J, Zampini M, Caramazza A. Category-specific organization in the human brain does not require visual experience. Neuron. 2009;63:397–405. doi: 10.1016/j.neuron.2009.07.012.

- Mahon BZ, Milleville SC, Negri GA, Rumiati RI, Caramazza A, Martin A. Action-related properties shape object representations in the ventral stream. Neuron. 2007;55:507–520. doi: 10.1016/j.neuron.2007.07.011.

- Martin A. The representation of object concepts in the brain. Annual Review of Psychology. 2007;58:25–45. doi: 10.1146/annurev.psych.57.102904.190143.

- Noppeney U, Price CJ, Penny WD, Friston KJ. Two distinct neural mechanisms for category-selective responses. Cerebral Cortex. 2006;16:437–445. doi: 10.1093/cercor/bhi123.

- Pisella L, Binkofski F, Lasek K, Toni I, Rossetti Y. No double-dissociation between optic ataxia and visual agnosia: Multiple sub-streams for multiple visuo-manual integrations. Neuropsychologia. 2006;44:2734–2748. doi: 10.1016/j.neuropsychologia.2006.03.027.

- Rizzolatti G, Craighero L. The mirror-neuron system. Annual Review of Neuroscience. 2004;27:169–192. doi: 10.1146/annurev.neuro.27.070203.144230.

- Rizzolatti G, Matelli M. Two different streams for the dorsal visual system: Anatomy and functions. Experimental Brain Research. 2003;153:146–157. doi: 10.1007/s00221-003-1588-0.

- Rumiati RI, Weiss PH, Shallice T, Ottoboni G, Noth J, Zilles K, Fink GR. Neural basis of pantomiming the use of visually presented objects. NeuroImage. 2004;21:1224–1231. doi: 10.1016/j.neuroimage.2003.11.017.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. New York: Thieme; 1988.

- Ungerleider LG, Mishkin M. Two cortical visual systems. In: Ingle DJ, Goodale MA, Mansfield RJW, editors. Analysis of visual behavior. Cambridge, MA: MIT Press; 1982. pp. 549–586.
