Abstract

Vision and proprioception are the main sensory modalities that convey hand location and direction of movement. Fusion of these sensory signals into a single robust percept is now well documented. However, it is not known whether these modalities also interact in the spatial allocation of attention, which has been demonstrated for other modality pairings. The aim of this study was to test whether proprioceptive signals can spatially cue a visual target to improve its detection. Participants were instructed to use a planar manipulandum in a forward reaching action and determine during this movement whether a near-threshold visual target appeared at either of two lateral positions. The target presentation was followed by a masking stimulus, which made its possible location unambiguous, but not its presence. Proprioceptive cues were given by applying a brief lateral force to the participant’s arm, either in the same direction (validly cued) or in the opposite direction (invalidly cued) to the on-screen location of the mask. The d′ detection rate of the target increased when the direction of proprioceptive stimulus was compatible with the location of the visual target compared to when it was incompatible. These results suggest that proprioception influences the allocation of attention in visual space.

Keywords: Human, Vision, Proprioception, Cross-modal spatial attention, Motor control

Introduction

Information about the location and direction of one's own hand movement reaches the brain via the sensory modalities of vision and proprioception. Two mechanisms have been proposed for how the brain combines multimodal spatial information: multisensory integration and cross-modal spatial attention.

Multisensory integration is the fusion of separate unimodal spatial signals into a single percept, which provides a more certain representation of the stimulus (Ernst and Bulthoff 2004; van Beers et al. 2002). Visuoproprioceptive integration has been clearly demonstrated by experiments in which a discrepancy between visual and proprioceptive cues led participants to perceive their hand between the visually and proprioceptively cued locations (Rossetti et al. 1995; Welch and Warren 1986). The relative weighting of visual and proprioceptive information in this multimodal percept minimizes the variance of the position estimate (van Beers et al. 2002).

However, the interaction between sensory modalities—without integration into a single percept—is also evident in spatial attention tasks as the facilitation of stimulus processing when its location coincides with a stimulus in a different modality (Spence and Driver 1994). For instance, a visual stimulus is perceived faster and more accurately when presented in spatial proximity to a task-irrelevant stimulus in a different modality such as sound (Spence and Driver 1997) or a touch on the skin (Spence et al. 1998) compared with a condition when this stimulus is presented further away from the target. Cross-modal interactions in which a salient, non-predictive cue in one modality facilitates or inhibits the processing of a target in another modality have been found for all modality pairings that have been tested between vision, touch and audition (Spence and Driver 2004). It is at present unclear whether vision and proprioception also interact in this way; however, based on these previous studies we hypothesized that a proprioceptive cue would, like an auditory or a tactile one, facilitate visual detection when directed towards versus away from a visual target.

There is already substantial evidence that hand location can influence visual target detection; however, the link between proprioception and visual attention has not yet been demonstrated. Reed et al. (2006) investigated whether the static location of the hand (visible or not) affected spatial attention in healthy participants. In their experiments, participants had to place one hand next to a visual target location and then detect targets using highly predictive visual cues. The authors found that the detection of a visual stimulus improved nearer to the hand, supporting the idea that the presence of the hand affects the allocation of attention in visual space. However, it is difficult to argue that this effect is the direct result of proprioception influencing visual attention because participants knew where they were placing their hand before each trial, which leaves open the possibility of the effect being due to previous knowledge of body configuration (e.g. a multimodal body schema) rather than to the current proprioceptive input.

Similarly, di Pellegrino and Frassinetti (2000) showed that visual extinction in a parietally damaged patient was reduced when visual stimuli were presented near the contralesional finger; but since the effect disappeared when the hand was hidden, it could not have been purely due to proprioception. Schendel and Robertson (2004) demonstrated a similar effect in a patient with blindsight, in that his visual detection improved when his arm was placed in his blind field. However, once again both proprioception and prior knowledge of body configuration (e.g. a body schema acquired through vision or the efference copy of the motor command) could have accounted for this effect. Smith et al. (2008) investigated this result further and found no evidence of improved implicit or explicit visual target detection in the blind field as a consequence of moving the arm; they thus conclude that the effect is due to a spatial bias towards the space containing the extended arm and is not specific to proprioceptive coding.

The aim of the present study was to follow up on this previous work by explicitly testing whether proprioception influences the allocation of visual attention. We used a paradigm in which participants had to detect a visual target in the presence of a task-irrelevant, spatially directed proprioceptive stimulus. Participants made forward reaching arm movements holding a robotic manipulandum. During each voluntary movement a brief force pulse laterally perturbed their unseen hand either in the direction of the visual target (validly cued) or in the opposite direction (invalidly cued). The direction of this passive movement was random across trials and thus, we assumed, proprioception was the only source of spatial information about the hand. We reasoned that if proprioception (e.g. the direction of movement or the location of the hand) influences the distribution of attention in visual space in the same way as touch or audition does, then d′ detection rates would be higher in the validly cued than in the invalidly cued condition.

Materials and methods

Overview of the experiments

All experiments had two randomly interleaved conditions that differed by the directional congruence between the force applied to the hand and the location of the visual target. In the validly cued condition the force deviated the hand’s trajectory in the direction of the visual mask whereas in the invalidly cued condition the hand was deviated in the direction opposite to the visual mask. Experiment 1 was a pilot experiment in which we varied stimulus onset asynchrony (SOA, the timing difference between the onset of the perturbing force and the onset of the visual stimulus) in order to determine the optimal SOA for which the difference in visual detection between the validly cued and invalidly cued conditions was largest. Previous studies have shown an optimal facilitatory interaction between sensory modalities around 100–300 ms (Spence and Driver 1997; Spence et al. 1998). We therefore tested 5 different SOAs: 50, 100, 150, 200 or 250 ms. Experiment 2 used the best SOA (200 ms) to retest for a difference in visual detection rates between conditions, using a larger sample of new participants. Experiment 3 was similar to Experiment 2 except that the task was made more spatially consistent by the use of a projection-mirror system so that the visual stimuli were in the same plane as the participants’ hand. Experiment 4 was a control experiment in which participants performed a shorter version of the task while their horizontal eye movements were measured by a SKALAR eye-tracking system.

Participants

We tested 48 participants in this study. Six took part in Experiment 1 (2 male, 4 female, age range 21–33 years, median age 23 years); sixteen took part in Experiment 2 (3 male, 13 female, age range 19–35 years, median age 23 years); twenty-two took part in Experiment 3 (8 male, 14 female, age range 18–36 years, median age 22 years) and four took part in Experiment 4 (1 male, 3 female, age range 17–38 years, median age 22 years). All participants were healthy right-handed adults with no known motor or sensory abnormalities, and all had normal or corrected-to-normal vision. Only participants who were naïve to the purpose of the experiment were included. The experiment was approved by the local ethics committee at the University of Birmingham, and all participants gave their informed consent.

Task

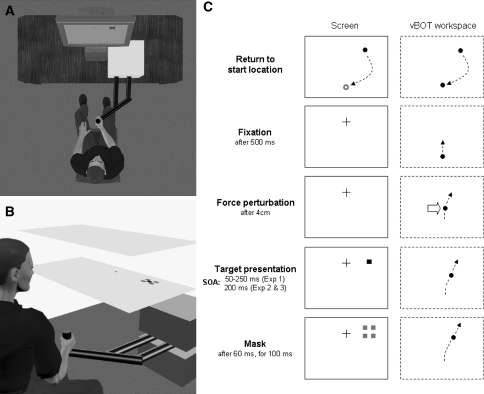

Participants used a vBOT robotic manipulandum (Howard et al. 2009) with their right hand. The manipulandum was held in a power grasp with the thumb uppermost and the palm of the hand to the right of the vertical handle. A computer sampled the position and velocity of the manipulandum and updated the forces imposed on the handle with millisecond resolution. In Experiment 1 and Experiment 2, participants controlled the movement of a cursor (black circle) subtending 0.57° visual angle on a white computer screen (40 × 30 cm), positioned frontoparallel 1 m in front of them (Fig. 1a). The movement of the manipulandum mapped 1:1 onto the movement of the cursor on the screen, so if the participants moved 10 cm using the robot, the cursor moved 10 cm (5.7° visual angle) on the screen. In Experiments 3 and 4, we used a projection-mirror system (Fig. 1b) to provide a more spatially consistent visuomotor environment where a forward movement of the hand would translate into an identical co-localized cursor movement on the horizontal screen, rather than a movement upwards as in the set-up of Experiments 1 and 2. In this set-up, participants gazed down into a mirror that reflected a horizontal screen positioned such that they saw stimuli as virtual images in the same plane as their hand on the robot underneath, and the visual cursor was spatially aligned with the position of the vBOT handle. The viewing distance to the most distant virtual image was 66 cm. Assuming that the arm length is around 70 cm, all visual stimuli were thus within peripersonal space. We kept the on-screen size of stimuli the same across experiments, so that the cursor size at the eye was increased to 0.87° in Experiment 3. A 10 cm hand movement caused the cursor to move 10 cm (8.7° visual angle). 
In Experiments 1 and 2, horizontal blinkers attached to a pair of safety goggles blocked the participants' direct view of their hand; in Experiments 3 and 4, the projection-mirror apparatus blocked the view of the hand. Participants responded with their right foot on a pair of foot switches. They were instructed to lift their toe if the target was present and lift their heel if it was absent.
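The visual angles quoted above can be checked with a short calculation. The sketch below is illustrative only: it assumes a small-angle approximation (angle in radians ≈ size / viewing distance) and an on-screen cursor diameter of 1 cm, which is inferred from the quoted 0.57° at 1 m rather than stated in the text. The function name `visual_angle_deg` is hypothetical.

```python
import math

def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Small-angle approximation: angle (rad) is roughly size / distance."""
    return math.degrees(size_cm / distance_cm)

# Assumption: a 1 cm cursor, inferred from 0.57 deg at a 1 m viewing distance.
CURSOR_CM = 1.0

for label, distance_cm in (("frontoparallel monitor", 100.0),
                           ("projection-mirror set-up", 66.0)):
    cursor = visual_angle_deg(CURSOR_CM, distance_cm)
    reach = visual_angle_deg(10.0, distance_cm)
    print(f"{label}: cursor {cursor:.2f} deg, 10 cm movement {reach:.1f} deg")
```

Under these assumptions the approximation reproduces the figures given for both set-ups; an exact arctangent would give a slightly smaller value at the shorter viewing distance.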

Fig. 1.

Force profile, experimental set-up and procedure. a Set-up for Experiments 1 and 2 with monitor placed frontoparallel. b Set-up for Experiments 3 and 4 with projection-mirror system. c Participants reached forward, experienced a lateral perturbation and had to detect a visual target that could appear in the direction of the perturbation (validly cued condition, shown) or opposite to it (invalidly cued condition). Left column screen display, right column participant’s hand position in vBOT workspace, empty arrow direction of perturbation, dashed arrow direction of hand/cursor motion

Before each trial, the participants moved the cursor to a start circle at the bottom centre of the screen, with the manipulandum aligned to the body midline. As soon as this position was reached the cursor and start circle disappeared and after 500 ms a fixation cross appeared at the top of the screen to signal the start of the trial. The distance between the start circle and the fixation cross was 16 cm (20 cm in Experiment 3). Participants were instructed to move the robot handle forward in a straight line at a comfortable speed towards the fixation point (Fig. 1c). After the hand had moved 4 cm (6 cm in Experiment 3), a force was applied laterally, perpendicular to the direction of motion. The direction (left or right) was randomized across trials. The applied force profile was based on a β-function that peaked at 13.35 N after 50 ms from onset and decayed to zero after 500 ms, as given by

|

where t is the time in seconds. Participants were instructed to continue to move forward after the force impacted on their arm, and not to attempt to correct laterally for the perturbation. Thus, their uncorrected hand path would be deviated diagonally towards the left or the right, depending on which side the force impacted (Fig. 3). The cursor only reappeared at the end of each trial so that there was no visual information about the deviated hand trajectory.

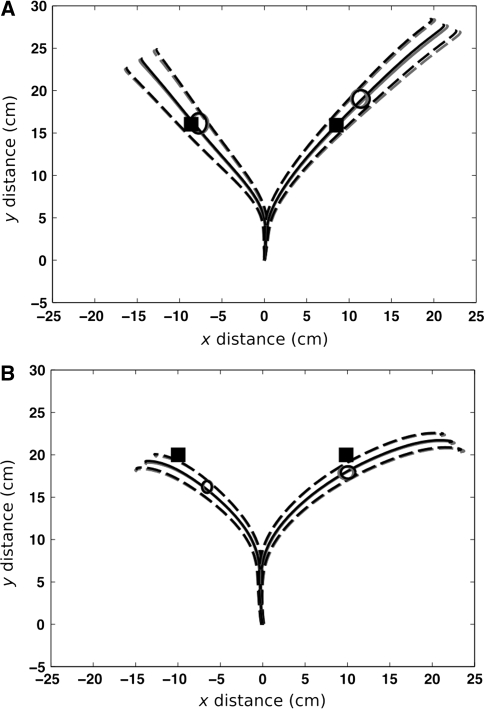

Fig. 3.

Hand path data. a Means (solid lines) and standard errors (dashed lines) of spatially resampled movement paths across all trials and participants for validly (grey) and invalidly (black) cued conditions in Experiment 2. The black squares show the target locations on-screen; the ellipses show the means and standard deviations of hand position at target onset. b Similar data for Experiment 3

After a predefined SOA from the onset of force, on a randomly interleaved 50% of the trials, a visual target consisting of a 0.57° (0.87° for Experiment 3) black or greyscale square was presented for 60 ms with equal probability at 5.73° (8.73°) to either the left or the right of fixation. Whether the target was present or not, a mask consisting of four 0.57° (0.87°) red squares arranged in a 1.71° (2.60°) square centred on the same location was then presented for 100 ms. The onset of mask presentation was 60 ms after the SOA interval. Randomisation of the trials was based on a Mersenne Twister random number generator.

Experiments 1–3 consisted of 480 trials, with a break every 120 trials to prevent fatigue and boredom. Across all trials, force pulse direction was uninformative about the location of the visual target, and we collected 120 trials each for the 4 combinations of target present/absent and direction validly cued/invalidly cued. Before each experiment the participants practiced the task and their 60% visual threshold was estimated using a maximum-likelihood threshold estimation algorithm, ML-PEST (Linschoten et al. 2001). On each trial, the threshold algorithm varied the contrast of the visual target, which was a greyscale square (see above), until the threshold criterion was reached.
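The balanced design described above (120 trials in each of the four target × cue cells, randomly interleaved) can be sketched as follows. This is a minimal illustration, not the authors' code: the function `build_trials`, the dictionary keys and the per-trial random choice of force direction are assumptions made for the example. Python's `random` module happens to use the Mersenne Twister, which matches the generator family named in the text.

```python
import random
from collections import Counter
from itertools import product

OPPOSITE = {"left": "right", "right": "left"}

def build_trials(n_per_cell=120, seed=None):
    """Build a shuffled trial list with n_per_cell trials per design cell."""
    rng = random.Random(seed)  # Mersenne Twister under the hood
    trials = []
    for target_present, cue in product((True, False), ("valid", "invalid")):
        for _ in range(n_per_cell):
            # Assumption: force direction is drawn at random on every trial.
            force_dir = rng.choice(("left", "right"))
            # The mask (and target, if present) is on the pushed side for
            # valid cues and on the opposite side for invalid cues.
            mask_side = force_dir if cue == "valid" else OPPOSITE[force_dir]
            trials.append({"target_present": target_present, "cue": cue,
                           "force_dir": force_dir, "mask_side": mask_side})
    rng.shuffle(trials)  # randomly interleave the four cell types
    return trials

trials = build_trials(seed=1)
cell_counts = Counter((t["target_present"], t["cue"]) for t in trials)
print(len(trials), dict(cell_counts))
```

Because valid and invalid trials are equally frequent, the force direction carries no information about the side on which the mask will appear.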

In Experiment 4, participants followed the same instructions as for Experiments 1–3 but for a shorter protocol designed to allow accurate eye tracking. Prior to beginning this experiment they underwent an eye-tracking calibration process. They fixated on three horizontal points in space while eye position data was collected that we then used to calibrate the subsequent tracking. Experiment 4 consisted of only 72 trials in a single block.

Data analysis

All data analysis took place in MATLAB and SPSS. Hit rate H and false alarm rate F for the validly and invalidly cued trials were transformed into Z-scores, and the d′ sensitivity measure was calculated using the standard formula: d′ = Z(H) − Z(F). Because we had the a priori hypothesis that the detection rate in the validly cued condition would be higher than in the invalidly cued condition, a one-tailed t-test was used in Experiment 2 to test whether the average d′ score for the validly cued condition was indeed larger than that for the invalidly cued condition. We used a between-groups ANOVA to test for differences between Experiments 2 and 3 and thus to determine whether we could pool the data from these experiments. For Experiments 2 and 3, we also calculated changes in two measures of response bias criterion (c and β), which are calculated as follows:

$$c = -\frac{Z(H) + Z(F)}{2}, \qquad \beta = \exp\left(\frac{Z(F)^2 - Z(H)^2}{2}\right)$$
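As an illustration of the signal detection measures used here, the sketch below computes d′ from the formula given in the text, together with c and β under their conventional definitions, c = −[Z(H) + Z(F)]/2 and β = exp{[Z(F)² − Z(H)²]/2}. These definitions are assumed rather than taken from the authors' scripts, and the function name `sdt_measures` and the example rates are hypothetical.

```python
from math import exp, log
from statistics import NormalDist

def sdt_measures(hit_rate: float, fa_rate: float):
    """Return (d_prime, c, beta) for a hit rate and a false alarm rate.

    Rates must lie strictly between 0 and 1; how extreme rates were
    handled in the study is not stated, so no correction is applied here.
    """
    z = NormalDist().inv_cdf       # inverse of the standard normal CDF
    zh, zf = z(hit_rate), z(fa_rate)
    d_prime = zh - zf              # sensitivity, as defined in the text
    c = -(zh + zf) / 2             # criterion (assumed standard form)
    beta = exp((zf ** 2 - zh ** 2) / 2)  # likelihood ratio (assumed form)
    return d_prime, c, beta

# Hypothetical rates, for illustration only.
d_prime, c, beta = sdt_measures(0.60, 0.05)
print(f"d' = {d_prime:.2f}, c = {c:.2f}, beta = {beta:.2f}")
print(f"ln(beta) equals c * d': {log(beta):.4f} vs {c * d_prime:.4f}")
```

With these definitions a positive c and a β above 1 both indicate a conservative tendency to report the target as absent, and ln β = c · d′, so the two bias measures are not independent.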

The position and velocity of the vBOT handle were sampled in Experiments 2 and 3 at 1000 Hz. Movement onsets and offsets were calculated using a velocity threshold of 1 cm/s. Trajectories were then spatially resampled over 150 samples evenly spaced across the distance travelled, to remove time dependence, before spatial averaging.
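The two processing steps described above can be sketched as follows. This is a minimal illustration under stated assumptions: "distance travelled" is taken to mean cumulative path length, onset is taken as the first sample whose speed exceeds the threshold, and linear interpolation is used between samples. The function names are hypothetical and this is not the authors' MATLAB code.

```python
from bisect import bisect_right
from math import hypot

def movement_onset(speeds_cm_s, threshold=1.0):
    """Index of the first sample whose speed exceeds the threshold, or None."""
    for i, speed in enumerate(speeds_cm_s):
        if speed > threshold:
            return i
    return None

def resample_by_distance(xs, ys, n_samples=150):
    """Interpolate a path at n_samples points evenly spaced in path length."""
    # Cumulative path length at every recorded sample.
    cum = [0.0]
    for i in range(1, len(xs)):
        cum.append(cum[-1] + hypot(xs[i] - xs[i - 1], ys[i] - ys[i - 1]))
    total = cum[-1]
    resampled = []
    for k in range(n_samples):
        target = total * k / (n_samples - 1)
        # Segment [i, j] that contains the target distance.
        j = min(bisect_right(cum, target), len(cum) - 1)
        i = j - 1
        seg = cum[j] - cum[i]
        frac = 0.0 if seg == 0 else (target - cum[i]) / seg
        resampled.append((xs[i] + frac * (xs[j] - xs[i]),
                          ys[i] + frac * (ys[j] - ys[i])))
    return resampled

# Toy path with unevenly spaced samples along a straight forward reach.
path = resample_by_distance([0.0, 0.0, 0.0], [0.0, 1.0, 4.0], n_samples=5)
print(path)
```

Resampling in space rather than time means that fast and slow trials contribute points at the same positions along the reach, so paths can be averaged point by point.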

The horizontal gaze position of the left eye was sampled at 200 Hz in Experiment 4. We identified all horizontal saccades in a window of 100–300 ms following force onset. Saccades were identified using a velocity threshold of 50 deg/s and an amplitude threshold of 3 degrees. Fischer et al. (1997) suggest an interval of 100–350 ms for saccade latency. Since in Experiment 4 the visual stimulus appeared 200 ms after force onset, any saccades detected later than 300 ms after force onset could conceivably be in response to the visual stimulus rather than to the force pulse.
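A velocity-and-amplitude criterion of this kind can be sketched as below. The thresholds, sampling rate and time window come from the text; everything else is an assumption made for illustration, including the use of simple sample-to-sample differences for velocity, inclusive window bounds, and index 0 of the trace corresponding to force onset. The function name `detect_saccades` is hypothetical.

```python
def detect_saccades(gaze_deg, fs_hz=200.0, vel_thresh=50.0, amp_thresh=3.0,
                    t_start=0.1, t_end=0.3):
    """Return (onset_time_s, signed_amplitude_deg) for each detected saccade.

    gaze_deg is horizontal gaze position in degrees; sample 0 is assumed
    to coincide with force onset.
    """
    dt = 1.0 / fs_hz
    velocity = [(gaze_deg[i + 1] - gaze_deg[i]) / dt
                for i in range(len(gaze_deg) - 1)]
    saccades = []
    i = 0
    while i < len(velocity):
        if abs(velocity[i]) > vel_thresh:
            start = i
            rightward = velocity[i] > 0
            # Extend the event while the eye keeps moving fast in one direction.
            while (i < len(velocity) and abs(velocity[i]) > vel_thresh
                   and (velocity[i] > 0) == rightward):
                i += 1
            amplitude = gaze_deg[i] - gaze_deg[start]
            onset = start * dt
            if abs(amplitude) >= amp_thresh and t_start <= onset <= t_end:
                saccades.append((onset, amplitude))
        else:
            i += 1
    return saccades

# Synthetic trace: fixation, then a 5 deg rightward step over 25 ms.
trace = [0.0] * 30 + [1.0, 2.0, 3.0, 4.0, 5.0] + [5.0] * 30
print(detect_saccades(trace))
```

The sign of the returned amplitude gives the saccade direction, which can then be compared with the direction of the force pulse on that trial.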

Results

One participant in Experiment 3 was excluded from the analysis because we discovered after testing that this participant was not naïve to the purposes of the experiment, leaving 21 participants in Experiment 3. Furthermore, a technical error meant that the final 13 trials of one participant in Experiment 4 were lost, leaving us with only 59 trials for that participant rather than 72.

Experiment 1

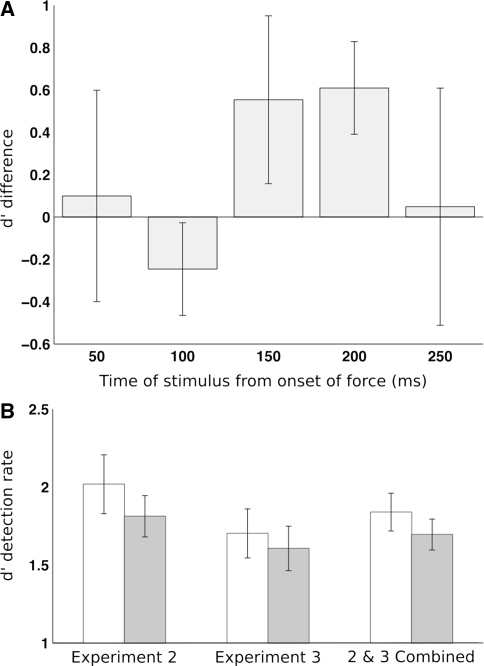

Figure 2a shows the mean difference in d′ across six participants between the validly cued and invalidly cued trials. While a one-way ANOVA on the differences showed no significant differences between the SOAs, the largest difference between validly and invalidly cued trials was at an SOA of 200 ms (although the difference was also high at 150 ms). Hence, we decided to use 200 ms as a fixed SOA in Experiments 2 and 3.

Fig. 2.

Detection rate results. a Mean and standard error of the difference in d′ sensitivity score (validly minus invalidly cued) for 5 different SOAs tested in Experiment 1. The difference at an SOA of 200 ms after force onset was the largest. b Means and standard errors of d′ sensitivity score for validly and invalidly cued conditions in Experiments 2 and 3, and for both experiments taken together. Participants were significantly more likely to be able to detect the target in the validly cued vs. the invalidly cued condition in Experiment 2 and in the combined data

Experiment 2

This experiment was a replication of Experiment 1 using only a single SOA of 200 ms with a new, larger participant group. The mean hit rate in Experiment 2 was 59.5% with a standard deviation of 18.2%. The d′ scores for the validly cued trials [mean: 2.02; SD: 0.75] were slightly higher than those for the invalidly cued trials [mean: 1.81; SD: 0.53]. Our a priori hypothesis predicted that the d′ sensitivity measure would be larger in the validly cued than in the invalidly cued trials, and this pattern was observed: a one-tailed t-test revealed a small but significant difference in d′ between the two conditions [t(15) = 1.88, p = 0.040]. Hence participants detected the target more reliably in the validly cued than in the invalidly cued condition.

Experiment 3

We wondered whether one reason for the small effect in Experiment 2 was the spatial incompatibility between the visual and proprioceptive spaces. Participants moved their arm (and received proprioceptive feedback) in the horizontal plane whereas cursor feedback of their movement and visual targets were presented in a vertical plane on the monitor in front of them. Experiment 3 addressed this issue using a projection-mirror system (see Materials and methods) in which the visual targets were coplanar with the hand movements and the virtual image of the cursor was spatially aligned with the vBOT handle, increasing the spatial compatibility between the visual and proprioceptive stimuli compared with Experiments 1 and 2. It has previously been shown that the more compatible the space in which the hand moves and the space in which the visual feedback is given (e.g. in the same plane as the hand as compared with a plane perpendicular to the plane in which the hand moved), the larger the magnitude of visuo-proprioceptive re-mapping as measured by the aftereffects in a prism adaptation task (Norris et al. 2001). The mean hit rate of the participants in Experiment 3 was 55.9% with a standard deviation of 18.0%. The d′ scores for the validly cued trials [mean: 1.70; SD: 0.72] were again higher than those for the invalidly cued trials [mean: 1.61; SD: 0.65]. However, in this case a one-tailed t-test showed no significant difference in d′ between the two conditions [t(20) = 1.12, p = 0.138].

A mixed ANOVA on the d′ scores across Experiments 2 and 3 (between-groups factor: experiment; repeated measures: condition, validly or invalidly cued) showed no significant effect of experiment [F(1,35) = 1.509, p = 0.227] and no interaction between experiment and condition [F(1,35) = 0.631, p = 0.432]. Thus, we failed to find evidence that the compatibility between the visual and proprioceptive spaces affects detection rates. Since there was neither an effect of experiment nor an interaction, we considered the results of Experiments 2 and 3 sufficiently similar for the data to be pooled. In the combined analysis a significant effect of condition was found [F(1,35) = 4.853, p = 0.034]: d′ scores were significantly higher in the validly cued than in the invalidly cued condition. Figure 2b shows the d′ scores for Experiments 2 and 3 individually, and also for the validly cued trials [mean: 1.84; SD: 0.74] and invalidly cued trials [mean: 1.70; SD: 0.60] from both experiments combined. Although we expected that better compatibility between the visual and proprioceptive spaces would further facilitate proprioceptively cued visual perception, we found no statistically significant difference in the effect between experiments.

We also measured the criterion or response bias using both c and β. From the pooled data, the mean values of c for the validly cued trials [mean: 0.667; SD: 0.443] and invalidly cued trials [mean: 0.721; SD: 0.359] were positive, and the mean values of β for the validly cued trials [mean: 11.41; SD: 27.57] and invalidly cued trials [mean: 4.25; SD: 3.54] were greater than 1. These positive values of c and supraunitary values of β indicate a systematic bias towards reporting that there was no target present. We used a similar ANOVA on these values as in the previous analysis of d′. For c, there was no significant effect of condition [F(1,35) = 2.22, p = 0.145] or of experiment [F(1,35) = 0.01, p = 0.923] and no significant interaction between them [F(1,35) = 0.06, p = 0.805]. For β, there was no significant effect of condition [F(1,35) = 2.86, p = 0.100] or of experiment [F(1,35) = 1.06, p = 0.309] and no significant interaction between them [F(1,35) = 0.84, p = 0.365]. These results indicate that the significant differences between the d′ values are not due to differences in response bias between the conditions.

As expected, t-tests for each experiment comparing hand position showed that participants were pushed significantly closer to the target at stimulus onset in the validly than in the invalidly cued condition for both Experiment 2 [t(15) = 16.91, p < 0.001] and Experiment 3 [t(20) = 40.55, p < 0.001] (see also Fig. 3). It has previously been suggested that the distance between the cue in one modality and the target in another modality is critical for detecting the effect of cross-modal attention (Prime et al. 2008). Namely, if the distance between the cue and the target increases, the benefit of a spatially valid cue is reduced because the target falls outside the focus of attention. To test whether in the current experiment the distance between the hand (proprioceptive cue) and the visual target affected the d′ detection rates, we calculated a correlation coefficient across participants between the distance from target to hand at stimulus onset and d′. For each participant, we subtracted the mean distance for the valid trials from the mean distance for invalid trials and calculated a correlation coefficient between this difference and the corresponding difference in d′. (For the data from Experiment 2 we performed two calculations: a transformed calculation, the difference between the target position and the unseen cursor position in the plane of the screen, and a total distance calculation, the Euclidean distance between the hand and the target on screen.) This correlation was not significant for either the transformed [r = 0.18, p = 0.500] or total [r = 0.37, p = 0.163] calculations for Experiment 2, or for Experiment 3 [r = −0.31, p = 0.176]. Thus, even though the hand was closer to the target at stimulus onset in the validly cued conditions, there is no evidence that this is responsible for the effect.
It is possible that aspects of hand proprioception other than the precise location of the hand (for instance the direction of hand motion or the body hemispace in which the hand was located) were responsible for the cueing effects we observed in Experiment 2.

Experiment 4

Another reason for the effects we found in Experiments 2 and 3 could be that participants’ eye movements were yoked to the involuntary movement of their arm when perturbed by the force. To explore this possibility, we tested 4 participants in an eye-tracking paradigm and looked for saccades in the direction of the force. On average, saccades were detected in only 9.0% of trials (SD: 7.0%). The percentage of trials with saccades in the direction of the force was 5.3% (SD: 4.3%) whereas the percentage of trials with saccades in the opposite direction was 3.6% (SD: 3.5%). A t-test showed no significant difference between the percentage of trials with saccades in the direction of the force and those in the opposite direction [t(3) = 0.95, p = 0.413]. Thus, the effect is unlikely to be driven by eye movements, which were infrequent and unbiased in their direction.

Movement data

Figure 3 shows the means and standard deviations of the spatially resampled participants’ hand paths from Experiment 2 (Fig. 3a) and Experiment 3 (Fig. 3b). A mixed ANOVA on the overall distance moved at stimulus onset (between-groups factor: Experiment 2 vs. 3; repeated measures: condition, validly or invalidly cued; force direction, left or right) showed significant effects of force direction [F(1,35) = 64.61, p < 0.001] and condition [F(1,35) = 5.81, p = 0.021] but not of experiment [F(1,35) = 1.057, p = 0.331]; participants moved further in the rightward force direction and in the validly cued condition. There were no interactions between the factors. A similar ANOVA on the peak velocity revealed a significant effect of force direction [F(1,35) = 187.93, p < 0.001]; participants moved faster in the rightward force condition. There was also a significant interaction between force direction and condition [F(1,35) = 4.62, p = 0.039]. Bonferroni-corrected t-tests of all combinations of left and right force conditions indicated that participants moved significantly more slowly in the left force conditions (validly or invalidly cued) than in the right force conditions (validly or invalidly cued) [t(36) ≥ 13.93, p ≤ 0.001].

Overall, these results indicate that participants moved further and faster in the rightward force condition than in the leftward force condition. This effect is clearly shown in Fig. 3; hand paths for rightward perturbed trials were deviated more laterally and were more variable in extent than hand paths from leftward perturbed trials. These effects reflect the biomechanical asymmetry of the human arm in this configuration. The hand was also moved further (though not faster) in the validly cued condition. However, the difference between conditions was only 0.11 cm so it is difficult to see how this could have contributed to the effect.

Discussion

In this study, we demonstrated that a visual target is easier to detect when proprioceptively cued. The detection rate was higher when the visual target was cued validly relative to invalidly by the hand location or its direction of movement. This study adds proprioception to the list of the modalities that influence the prioritization of visual processing resources in space (Spence and Driver 1997; Spence et al. 1998) and suggests a model of how vision and proprioception may interact during motor control that is complementary to the established multisensory integration model. Thus, proprioception can interact with vision not only by being merged with it into a single robust percept of hand position (Rossetti et al. 1995; Welch and Warren 1986) but also by helping allocate attentional resources towards the location of the hand or in the direction of hand movement in visual space.

Proprioception has been found to be necessary for the rapid processing of visual feedback (Balslev et al. 2007). TMS-induced depression of the contralateral somatosensory cortex was found to increase reaction times for the correction of the trajectory of finger movement in response to angular rotation of a cursor position relative to the finger position. If proprioception does indeed influence the allocation of visual attention near the hand position, then this kind of effect is to be expected, as TMS reduces somatosensory processing of the proprioceptive signals, thus leading to slowed responses to the visual perturbation. However, that finding is only indirect evidence for this interaction, and we have provided more direct evidence here.

Reed et al. (2006) have shown that visual detection improves at the static location of the hand. However, in their study it was unclear whether proprioception was the cause of the effect. For instance, it may be that the increase in visual detection they found was due to top–down knowledge of hand location (e.g. a mental representation of body configuration based for instance on previous visual input or the efferent copy of the motor command). In the current study we randomly manipulated hand position (deviating left or right of the midsagittal plane) so that proprioception was the only source of hand position information. Therefore, we argue that our study provides evidence for a direct link between proprioception and visual detection.

Previous studies have investigated whether proprioception interacts with vision (Klein 1977) or with vision and touch (Lyons et al. 2006) during motion perception. Lyons et al. (2006) found no effect of finger posture on the interaction between a moving tactile stimulus applied on the finger and visual motion. In a different experiment, Klein (1977) found that visual movement discrimination is slower and less accurate in the presence of passive finger movement going in opposite rather than similar directions, suggesting that proprioception may interact with vision for motion perception. In that study, however, the decrease in the efficiency of visual discrimination may have been the result of the incompatibility relationship between the direction of movement of the proprioceptive distractor (passive finger movement oriented to the left or to the right) and the required response (left or right visual motion). In the present study we control for this possibility by making the required response a simple target present/absent report, using the foot, so that the compatibility effect does not affect the response.

In the experiments we present here, participants were certain about the location of the target because of the visual mask, but were not certain of the target’s presence. The certainty about the position of the stimulus followed work by McDonald et al. (2000) in which the authors also used a masking stimulus to give veridical information about target location. It is important to note, then, that the certainty of an integrated multimodal percept combining the visual location of the mask with the proprioceptive location of the hand cannot explain the difference in accuracy between conditions, and thus our results cannot be explained by a model of visuoproprioceptive integration (van Beers et al. 1999).

We found evidence that proprioception affects visual detection; however, our design does not allow us to conclude which aspect of proprioceptive information is critical for directing attention in visual space. One possible candidate would be the location of the hand, so that the smaller the Euclidean distance to the target, the better the visual detection. Because the participants were instructed not to resist the perturbation, in the validly cued condition the visual target was presented both in the direction of the force and closer to the deviated hand than in the invalidly cued condition (Fig. 3). To determine whether the reduced hand-target distance contributed to the facilitation, we correlated, across participants, the difference in average hand-target distance at stimulus onset with the difference in d′ between the validly and invalidly cued conditions, in Experiments 2 and 3. A significant correlation would indicate that hand-target proximity might have contributed to the facilitation of target detection. However, these correlations were not significant and went in opposite directions for Experiments 2 and 3. Thus, even though the hand was clearly closer to the target at stimulus onset in the validly cued conditions, we found no evidence that the improvement in visual detection in validly cued relative to invalidly cued trials depends on the Euclidean distance to the target. It is possible that other proprioceptive information such as direction of movement or laterality of the hand (e.g. same or different side of the body as the visual target) may be better predictors of visual detection. Our experimental design did not allow us to distinguish whether the proprioceptive stimulus that facilitated visual detection was the direction of force or the side of space being moved into.
Future studies could test these possibilities by having the participants reach forward from the left or the right of the body midline, and giving perturbations that push them to the left or the right without crossing the midsagittal plane.
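
The across-participant analysis described above can be sketched as follows. This is a minimal illustration and not the analysis code used in the study: the function names (`d_prime`, `pearson_r`), the log-linear correction for extreme rates, and all numbers in the demo are assumptions made for the example.

```python
from math import sqrt
from statistics import NormalDist, mean


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index d' = z(hit rate) - z(false-alarm rate).

    Adding 0.5 to each count (log-linear correction) keeps rates of
    exactly 0 or 1 from giving infinite z-scores. This correction is an
    assumption of the sketch, not a detail taken from the study.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


if __name__ == "__main__":
    # Hypothetical per-participant values, invented for illustration only.
    # Each tuple: (d' valid, d' invalid, distance valid cm, distance invalid cm)
    participants = [
        (1.9, 1.5, 7.1, 9.0),
        (1.4, 1.3, 6.8, 9.4),
        (2.2, 1.6, 7.5, 8.7),
        (1.7, 1.6, 7.0, 9.1),
    ]
    d_diff = [valid - invalid for valid, invalid, _, _ in participants]
    dist_diff = [dv - di for _, _, dv, di in participants]
    print("r =", round(pearson_r(dist_diff, d_diff), 3))
```

A reliable negative correlation between the two difference scores would be the pattern expected if proximity of the hand to the target drove the facilitation.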

Another concern is that the increased visual detection in the validly cued condition reflects an interaction between touch and vision, rather than an interaction between proprioception and vision. It has previously been shown that when touch and vision co-localize in space, touch improves visual detection. Thus, a touch on the right hand speeds up the detection of a rightward visual stimulus when the hand is positioned to the right of the body midline, whereas when the right hand is positioned to the left of the body midline this effect is reversed, i.e. the touch on the right hand now improves the detection of a visual stimulus in the left hemispace (Kennett et al. 2001). In our experiments the right hand held the vertical handle of the manipulandum, which was gripped with the palm of the hand on the right and the fingers curled round to the left (see Fig. 1a, b). Thus, a force field pushes the palm or the fingers, causing a tactile stimulus, and if spatially lateralized, this tactile stimulus may have drawn attention towards its location. To test this possibility we compared the position of the manipulandum at the moment when the force peaked and found a mean difference of only 1.1 cm between left and right force pulses (left force mean: −0.42 cm, SD: 1.73 cm; right force mean: 0.65 cm, SD: 1.73 cm). Co-location of the cue and the target is critical for the cueing effect (Prime et al. 2008), and previous studies that demonstrated an interaction between touch and vision presented these stimuli at the same location in space (e.g. Spence et al. 1998). Although we cannot entirely rule out an explanation of our results in terms of an interaction between touch, proprioception and vision, the small separation in space between the tactile stimulation applied to the fingers or the palm, together with the relatively large horizontal separation between the tactile and the visual stimuli (8 or 10 cm), makes such an explanation unlikely.
Experiment 4 ruled out the possibility that eye movements, yoked to the passive movement of the hand in the same way as they are to active hand movements (Johanssen et al. 2001), could have caused a difference in visual detection in the direction in which the hand was pushed. The direction of the force applied to the hand had no statistically significant impact on the direction of the very few saccades we observed.

One question that arises from these results is whether the effects reflect attentional costs or attentional benefits. That is, does a valid cue benefit attention on the cued side, raising the stimulus detection rate, or does an invalid cue impose a cost on the uncued side, lowering it? One way to test this is with a ‘neutral’ condition in which the cue is not location specific. A common approach is to cue all possible locations simultaneously, so that this condition acts as a baseline. However, it is impossible for the robot to push the arm to the left and to the right at the same time, so the neutral condition would need to assist or resist the arm’s motion in the forward direction. Although our design does not allow us to separate attentional costs and benefits, we conclude that hand proprioception can prioritize visual processing resources so that objects located near the hand or in the direction of hand movement are better detected than objects located further from the hand position or trajectory.
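
As an illustration only, if a neutral-cue condition were available, the difference between validly and invalidly cued detection could be split into a benefit term and a cost term. The quantity labelled neutral below is assumed for this sketch and was not measured in the present experiments:

```latex
\begin{aligned}
\text{benefit} &= d'_{\text{valid}} - d'_{\text{neutral}},\\
\text{cost} &= d'_{\text{neutral}} - d'_{\text{invalid}},\\
d'_{\text{valid}} - d'_{\text{invalid}} &= \text{benefit} + \text{cost}.
\end{aligned}
```

The present design measures only the left-hand side of the last line, so a given cueing effect is compatible with any split between the two terms.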

It is interesting that Figs. 3a and b show movement data that look quite different from one another, even though participants performed essentially the same task. In Fig. 3a (Experiment 2), the hand paths after force onset are fairly straight, whereas in Fig. 3b (Experiment 3) they are much more curved. This difference in behaviour likely arises from the fact that participants tend to move differently when viewing their transformed hand path than when viewing their movement in the workspace (Messier and Kalaska 1997). Even though participants had no vision of their moving limb, these differences in behaviour still occurred. However, we found no differences in detection rates between the two experiments. Thus, trajectory control may be influenced by motor-visual spatial consistency independently of proprioceptive-visual cueing.

Experiment 1 suggests that the proprioceptive facilitation of visual processing varies with the time interval between the onset of the proprioceptive and visual stimuli. We found that in our set-up, the largest difference between validly and invalidly cued visual detection occurred when the interval between the onsets of the stimuli was 200 ms, with a difference of similar magnitude at 150 ms. While these are only preliminary data on which no statistical tests were performed, they are in line with previous results showing an optimal facilitatory interaction between vision and audition at 100–200 ms (Spence and Driver 1997) and between vision and touch at 100–300 ms (Spence et al. 1998). Shorter SOAs might not have allowed sufficient time for the proprioceptive stimuli to be processed and for attentional mechanisms to be engaged. At longer SOAs, inhibitory aftereffects such as inhibition of return, which becomes an important process at 300–500 ms post-cue (Klein 2000), or an opposite shift in attention in the direction of a potential movement correction (Baldauf et al. 2006) might have occurred, both of which would bias attention away from the target location and thus reduce the facilitatory effect. It is worth noting that there is no correlation between the d′ detection rate and the distance between target and hand position at stimulus onset in Experiment 1 [r = 0.066, p = 0.916], much as in the other experiments, which means that there is no evidence that hand-target distance is responsible for the d′ changes in this experiment.

We conclude that proprioception can affect the distribution of attention in visual space and suggest that spatially valid proprioceptive cues not only make the location of the visual stimulus more certain (van Beers et al. 1999) but can also improve visual detection in the space in which the hand is located. This mechanism would complement the change in visual attention induced by a planned, but not yet executed hand movement (Baldauf et al. 2006), which is related to the efferent copy of the motor command. We speculate that these two mechanisms together serve visuomotor control by increasing the visual salience of the hand during movement or of potential obstacles in the hand’s trajectory.

Acknowledgments

This work was supported by the Wellcome Trust [GR069439] and a postdoctoral research fellowship from the Danish Medical Research Councils (DB). We thank two anonymous reviewers for their helpful comments on the manuscript.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

References

- Baldauf D, Wolf M, Deubel H. Deployment of visual attention before sequences of goal-directed hand movements. Vis Res. 2006;46:4355–4374. doi: 10.1016/j.visres.2006.08.021.

- Balslev D, Miall RC, Cole J. Proprioceptive deafferentation slows down the processing of visual hand feedback. J Vis. 2007;7:1–7. doi: 10.1167/7.5.12.

- di Pellegrino G, Frassinetti F. Direct evidence from parietal extinction of enhancement of visual attention near a visible hand. Curr Biol. 2000;10:1475–1477. doi: 10.1016/S0960-9822(00)00809-5.

- Ernst MO, Bulthoff HH. Merging the senses into a robust percept. Trends Cogn Sci. 2004;8:162–169. doi: 10.1016/j.tics.2004.02.002.

- Fischer B, Biscaldi M, Gezeck S. On the development of voluntary and reflexive components in human saccade generation. Brain Res. 1997;754:285–297. doi: 10.1016/S0006-8993(97)00094-2.

- Howard IS, Ingram JN, Wolpert DM. A modular planar robotic manipulandum with end-point torque control. J Neurosci Methods. 2009;181:199–211. doi: 10.1016/j.jneumeth.2009.05.005.

- Johanssen RS, Westling G, Bäckström A, Flanagan JR. Eye-hand coordination in object manipulation. J Neurosci. 2001;21:6917–6932. doi: 10.1523/JNEUROSCI.21-17-06917.2001.

- Kennett S, Eimer M, Spence C, Driver J. Tactile-visual links in exogenous spatial attention under different postures: convergent evidence from psychophysics and ERPs. J Cogn Neurosci. 2001;13:462–478. doi: 10.1162/08989290152001899.

- Klein RM. Attention and visual dominance: a chronometric analysis. J Exp Psychol Hum Percept Perform. 1977;3:365–378. doi: 10.1037/0096-1523.3.3.365.

- Klein RM. Inhibition of return. Trends Cogn Sci. 2000;4:138–147. doi: 10.1016/S1364-6613(00)01452-2.

- Linschoten MR, Harvey LO, Eller PM, Jafek BW. Fast and accurate measurement of taste and smell thresholds using a maximum-likelihood adaptive staircase procedure. Percept & Psychophys. 2001;63:1330–1347. doi: 10.3758/bf03194546.

- Lyons G, Sanabria D, Vatakis A, Spence C. The modulation of crossmodal integration by unimodal perceptual grouping: a visuotactile apparent motion study. Exp Brain Res. 2006;174:510–516. doi: 10.1007/s00221-006-0485-8.

- McDonald JJ, Teder-Salejarvi WA, Hillyard SA. Involuntary orienting to sound improves visual perception. Nature. 2000;407:906–908. doi: 10.1038/35038085.

- Messier J, Kalaska JF. Differential effect of task conditions on errors of direction and extent of reaching movements. Exp Brain Res. 1997;115:469–478. doi: 10.1007/PL00005716.

- Norris SA, Greger BE, Martin TA, Thach WT. Prism adaptation of reaching is dependent on the type of visual feedback of hand and target position. Brain Res. 2001;905:207–219. doi: 10.1016/S0006-8993(01)02552-5.

- Prime DJ, McDonald JJ, Green J, Ward LM. When cross-modal spatial attention fails. Can J Exp Psychol. 2008;62:192–197. doi: 10.1037/1196-1961.62.3.192.

- Reed CL, Grubb JD, Steele C. Hands up: attentional prioritization of space near the hand. J Exp Psychol Hum Percept Perform. 2006;32:166–177. doi: 10.1037/0096-1523.32.1.166.

- Rossetti Y, Desmurget M, Prablanc C. Vectorial coding of movement: vision, proprioception, or both. J Neurophysiol. 1995;74:457–463. doi: 10.1152/jn.1995.74.1.457.

- Schendel K, Robertson LC. Reaching out to see: arm position can attenuate human visual loss. J Cogn Neurosci. 2004;16:935–943. doi: 10.1162/0898929041502698.

- Smith DT, Lane AR, Schenk T. Arm position does not attenuate visual loss in patients with homonymous field defects. Neuropsychologia. 2008;46:2320–2325. doi: 10.1016/j.neuropsychologia.2008.03.010.

- Spence CJ, Driver J. Covert spatial orienting in audition: exogenous and endogenous mechanisms. J Exp Psychol Hum Percept Perform. 1994;20:555–574. doi: 10.1037/0096-1523.20.3.555.

- Spence C, Driver J. Audiovisual links in exogenous covert spatial orienting. Percept & Psychophys. 1997;59:1–22. doi: 10.3758/bf03206843.

- Spence C, Driver J. Exogenous spatial-cueing studies of human cross-modal attention and multisensory integration. In: Spence C, Driver J, editors. Crossmodal space and crossmodal attention. Oxford: Oxford University Press; 2004. pp. 277–320.

- Spence C, Nicholls MER, Gillespie N, Driver J. Cross-modal links in exogenous covert spatial orienting between touch, audition, and vision. Percept & Psychophys. 1998;60:544–557. doi: 10.3758/bf03206045.

- van Beers RJ, Sittig AC, van der Gon JJD. Integration of proprioceptive and visual position-information: an experimentally supported model. J Neurophysiol. 1999;81:1355–1364. doi: 10.1152/jn.1999.81.3.1355.

- van Beers RJ, Baraduc P, Wolpert DM. Role of uncertainty in sensorimotor control. Phil Trans R Soc Lond Ser B-Biol Sci. 2002;357:1137–1145. doi: 10.1098/rstb.2002.1101.

- van Beers RJ, Wolpert DM, Haggard P. When feeling is more important than seeing in sensorimotor adaptation. Curr Biol. 2002;12:834–837. doi: 10.1016/S0960-9822(02)00836-9.

- Welch RB, Warren DH. Intersensory interactions. In: Boff KR, Kaufman L, Thomas JP, editors. Handbook of perception and human performance. New York: Wiley; 1986. pp. 21.1–25.36.