Abstract

The current fMRI adaptation study sought to elucidate the dimensions of syntactic complexity and their underlying neural substrates. For the first time with fMRI, we investigated repetition suppression (i.e., fMRI adaptation) for two orthogonal dimensions of sentence complexity: embedding position (right-branching vs center-embedding) and movement type (subject vs object). Two novel results were obtained: first, we found syntactic adaptation in Broca's area, and second, this adaptation was structured. Anterior Broca's area (BA 45) selectively adapted to movement type, while posterior Broca's area (BA 44) demonstrated adaptation to both movement type and embedding position (as did left posterior superior temporal gyrus and right inferior precentral sulcus). The functional distinction within Broca's area is critical not only to an understanding of the functional neuroanatomy of language, but also to theoretical accounts of syntactic complexity, demonstrating its multi-dimensional nature. These results imply that during syntactic comprehension, a large network of areas is engaged, but that only anterior Broca's area is selective to syntactic movement.

It is almost universally agreed that the linguistic comprehension function of some language regions of the brain is related to syntactic complexity. Less clear is the definition of this functional notion, even though its elucidation is crucial for an understanding of the functions of these brain regions – a long debated issue, especially concerning Broca's area (Bornkessel et al., 2005; Caplan et al., 2000; Friederici et al., 2006; Grodzinsky, 2000). In this paper, we report a highly structured, tightly controlled and counterbalanced fMRI adaptation experiment that helps elucidate this notion by considering (some of) its component parts, and localizing them in distinct cerebral loci. Our results refine our understanding of the functional anatomy of processes underlying syntactic complexity, and furthermore support the move to make functional subdivisions within Broca's area (Makuuchi et al., 2009).

Our investigation uses an array of complex sentences constructed around two orthogonal and independently motivated complexity factors: word-order canonicity (and its close relative, syntactic Movement in its varied instantiations) and place of embedding. Our method, fMRI adaptation, is based on the observation that a stimulus property, once repeated, yields a suppressed signal, and so helps to identify such properties that a particular brain region processes. If a given region is involved in processing a property X, then repetition of X results in a lower fMRI signal recorded in that region (Buckner et al., 1998; Grill-Spector and Malach, 2001; Kourtzi and Kanwisher, 2001). In the present study, X takes the form of the two types of syntactic complexity factors mentioned.

Our paradigm produced new results. Here are the highlights: a. for the first time, we show syntactic adaptation in Broca's area; b. we show that this region moreover is functionally subdivided along these syntactic complexity dimensions; c. we demonstrate that the anterior portion of Broca's area – and only this part – is syntactically selective, as it adapts to one complexity dimension (Canonicity/Movement type), but not the other (Embedding).

These results provide critical support for the claim that the anterior part of Broca's region has a highly specific (though not exclusive) role in sentence processing (Santi and Grodzinsky, 2007b). Though likely multi-functional, the specialty of this brain region in the domain of sentence processing is syntactic Movement.

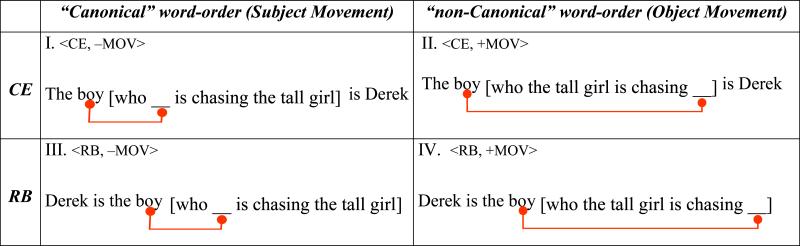

A long psycho- and neuro-linguistic tradition operationalizes the concept of syntactic complexity: sentence types whose comprehension is more difficult (as reflected by elevated reaction and reading time, level of comprehension errors, and the like) are considered more complex than those whose speed of processing and error levels are on the lower side. Structurally, complex sentences are typically created by embedding sentences within others (see Table 1 and compare against a baseline of conjoined sentences, generally of the form John is short and Mary is tall, or Derek is the boy, and the tall girl is chasing Derek). A word-order manipulation that displaces a constituent further increases complexity. These two structural manipulations can be crossed to generate a 2×2 matrix of relative clauses (Table 1; see SI_Figure1 and SI_Figure2 for syntactic tree representations). Since this matrix represents the stimulus design of the current study, let us further examine how these factors generate complexity.

Table 1.

Blue denotes relative clause and red the matrix clause (relative clause excluded).

Sentence Stimuli: CE: Center Embedded relative clauses; RB: Right-Branching relative clauses; MOV: Movement

Embedding

Embedded relative clauses (blue lowered script) that modify the subject of the (red) main clause (top row) intervene between the main subject and its predicate, which maintain an agreement dependency (in person, gender, and number). Such sentences are more difficult to process than those in which the (blue) relative modifies the object (bottom row) and the agreement relation within the (red) main clause is between adjacent elements (Blaubergs and Braine, 1974; Miller and Isard, 1964).

Canonicity/Movement

In all the sentences in Table 1, there must be a link between the boy (the relative head), who (the relative pronoun), and the empty position “_” to guarantee that we interpret the boy as chaser in I and III, and as chasee in II and IV. This link is short in the left column sentences where the boy, the main subject, which is modified by the relative clause, also functions as the subject of the relative itself (in which “_” precedes the embedded verb) and where the surface order of roles <chaser,chasee> is as in a “canonical” active sentence. However, in the right column sentences, where “canonicity” is reversed, with a surface order of <chasee,chaser>, the link between the boy (the relative head), who (the relative pronoun), and the empty position “_” is long. Indeed, the sentences in the left column are easier to process than those in the right column (Ford, 1983; King and Just, 1991; Traxler et al., 2002; Traxler et al., 2005). This is a Movement type (or ±“Canonicity”) contrast. We mention both perspectives on this contrast merely because this particular experiment is not designed to distinguish between them.

Note that Table 1 features an embedded clause in both rows, and a Movement relation in both columns (annotated by a line). Each of these dimensions, then, distinguishes a type – Movement type in the columns, and Embedding type in the rows. Movement in the left column (I, III) is “string-vacuous” (i.e., it does not lead to overt changes of word-order), which preserves the Movement contrast in the present context. The complex theoretical considerations that lead to the postulation of Movement even when there are no overt consequences are orthogonal to the question we are asking. In the constructions we use, such movement may in fact have no processing consequences: the proximity of the thematic assignee (the boy who is the chaser in I, III) to its assigner (is chasing) may enable direct assignment of the external thematic role. Yet this needn't concern us; we ask, rather, whether or not 2 central complexity factors can be teased apart. A “Movement type” (short subject vs long object movement) distinction would therefore suffice for our purposes, which is how we refer to our column contrast.

This array is a compact 2-dimensional arrangement of 4 sentence types composed of identical sets of words, yet whose different syntactic properties result in different meanings. Further, these syntactic properties have processing ramifications: the position of the embedded clause within the matrix clause has a processing cost, as Center-Embedded sentences in the top row have been shown to be more complex than the Right-Branching ones in the bottom row (Blaubergs and Braine, 1974; Miller and Isard, 1964). If complexity works in the reverse direction (i.e., right-branching is more complex than center-embedding), as some have claimed (Gibson et al., 2005), our adaptation design will still be equally sensitive to this contrast as it does not make any assumption of directionality. Likewise, the greater extraction “distance” of object-extracted relatives compared to subject-extracted ones (right vs. left column) is also correlated with processing difficulty (there is a similar subject/object processing asymmetry in wh-questions (De Vincenzi, 1996; Frazier and d'Arcais, 1989), suggesting that in general, movement from object position is more difficult to process than from subject position).

Psycholinguistically, then, both Embedding type and Movement type affect processing difficulty. Yet as Table 1 demonstrates, they are structurally orthogonal, providing a distinction that any theory of sentence processing must take into consideration. Though there have been a number of past attempts to account for these complexity distinctions (Kimball, 1973; Miller and Chomsky, 1963), a dominant current approach collapses both dimensions, ascribing them to differential demands made on Working Memory (WM; Gibson, 1998; Miller and Chomsky, 1963; Wanner and Maratsos, 1978). It contends that when a relative clause is Center-Embedded, it separates its head – the subject of the main clause (the boy, Table 1 top row) – from its predicate (is Derek), and that this separation interferes in the computation of the subject-verb agreement dependency, which in turn taxes WM, making more storage demands than in the Right-Branching case (Table 1 bottom row). In the ±Movement contrast, the distance between the position where an element is pronounced (the boy, who) and where it is interpreted (“_”) for its semantic role with respect to the predicate is greater in object relatives (Table 1 right column) than in subject relatives, and thus imparts greater demands on WM. Neurological evidence is also said to converge on this account, as Broca's area is the brain region most implicated in imaging experiments on syntactic complexity (and particularly canonicity), and aspects of WM are housed in this anatomical locus (Braver et al., 1997; Druzgal and D'Esposito, 2001; Smith and Jonides, 1999). It has for these reasons been claimed that WM demands are the underlying cause of these complexity effects (Caplan et al., 2000; Just et al., 1996; Stromswold et al., 1996).

Yet, are these orthogonal dimensions separable neurologically? This is an important question, given the different structural configurations in the rows and columns of Table 1. This is a question that any account, including the WM approach, would like an answer to: an affirmative answer would force a modular theory, whereas a negative one would fortify the claim that the underlying cause of difficulty in processing embedding and movement is one and the same.

To date, no imaging study has attempted to unpack the 2 dimensions of complexity we discussed. Experiments have either varied one dimension while keeping the other constant (Ben-Shachar et al., 2003; Ben-Shachar et al., 2004; Bornkessel et al., 2005; Caplan et al., 1999; Caplan et al., 2000; Grewe et al., 2005; Just et al., 1996; Stromswold et al., 1996), or manipulated Movement in different ways (Ben-Shachar et al., 2003; Ben-Shachar et al., 2004; Bornkessel et al., 2005; Caplan et al., 1999; Caplan et al., 2000; Grewe et al., 2005; Just et al., 1996; Stromswold et al., 1996). Results of the latter experiments have been used to attribute a selective role for Broca's area in movement. The lesion-based literature, which inspired much of the imaging work, has for the most part varied a single complexity dimension, using the ±Movement contrast to argue that Broca's aphasic patients are deficient in Movement (Ansell and Flowers, 1982; Beretta et al., 2001; Caplan and Futter, 1986; Friedmann and Shapiro, 2003; Grodzinsky, 1989, 2000; Sherman and Schweickert, 1989).

Interestingly, lesion studies that varied both dimensions suggest that a distinction may exist: a recent reanalysis of published comprehension data from 32 agrammatic Broca's aphasics distinguishes between the dimensions of complexity of relative clauses. Despite considerable individual variability, the Movement and Embedding dimensions are dissociable (Drai and Grodzinsky, 2006; Drai et al., 2001), as a robust comprehension performance contrast across Movement types is evinced, but Embedding position by and large has no effect on comprehension (Ansell and Flowers, 1982; Beretta et al., 2001; Caplan and Futter, 1986; Drai and Grodzinsky, 2006; Friedmann and Shapiro, 2003; Grodzinsky, 1989, 2000; Sherman and Schweickert, 1989; though see Hickok et al., 1993, for a somewhat different perspective). While not problem free, this work hints at the possibility of a neurological distinction among dimensions of complexity. Such a result, if obtained, would be of major theoretical significance, as it would lead to a more refined theory of sentence processing, and to a more detailed view of its neurological underpinnings.

No imaging study has attempted to distinguish between the two central dimensions of complexity by pitting them against one another. Against this background, we designed the present fMRI adaptation study. In it, we provide a novel perspective on the neurological representation of syntactic complexity, by teasing apart the two complexity factors. The idea here is to test the uniformity of the cerebral representation of syntactic complexity through adaptation. A generic theory of complexity predicts no neurological distinction between adaptation patterns to Embedding and to Movement. A processing theory that does distinguish between sources of complexity opens the way for inquiries such as ours, which seek a split in localization.

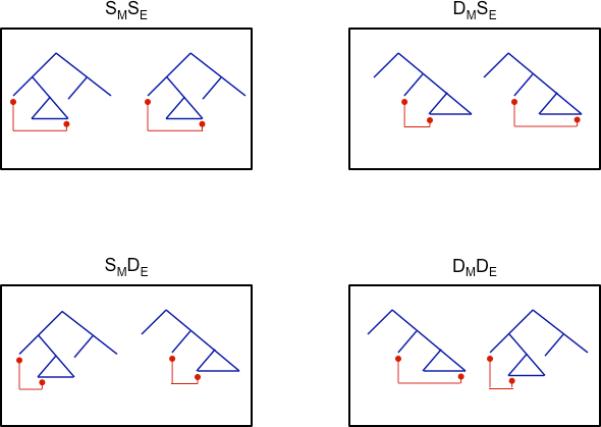

We turned to neural adaptation, which is induced by repetition (Buckner et al., 1998; Grill-Spector and Malach, 2001; Kourtzi and Kanwisher, 2001). Our experiment, modeled after a vision experiment by Kourtzi and Kanwisher (2001), repeated sentences which were either the same or different along the two complexity dimensions featured in Table 1. Each stimulus consisted of a 2-sentence sequence, which differed along zero, one, or two complexity dimensions. This resulted in a 2(movement type, embedding position) × 2(same, different) design. Thus, the first sentence was of the four types in Table 1, and the second was either the same or different along each complexity dimension. This design produced four conditions (Figure 1): sentence pairs can be a. Same Movement, Same Embedding (SMSE); b. Different Movement, Same Embedding (DMSE); c. Same Movement, Different Embedding (SMDE); d. Different Movement, Different Embedding (DMDE). We modeled our design after a vision study, while keeping in mind the fact that adaptation has been observed for 2-sentence pairs (Devauchelle et al., 2009), and neural adaptation has been observed with sentences in general (Dehaene-Lambertz et al., 2006; Devauchelle et al., 2009; Noppeney and Price, 2004).

Figure 1.

Presents a graphical representation of the sentence pairs in each of the four conditions.

Neural adaptation reduces the BOLD signal, and is expected in a given voxel whenever it considers the 2 sentences as Same. Therefore, if a voxel is sensitive to a change along either or both complexity dimensions, signal intensity in condition (a) would be lower than in conditions (b)-(d). If, however, the brain makes complexity distinctions, adaptation patterns would differentiate the conditions.
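The assignment of sentence pairs to the four conditions can be sketched in a few lines. This is only an illustration of the design logic described above, not the authors' stimulus-generation code; the function name `condition` and the tuple encoding of sentence types are assumptions introduced here.

```python
from itertools import product

# The four sentence types of Table 1: embedding position x movement type.
EMBEDDINGS = ("CE", "RB")      # center-embedded, right-branching
MOVEMENTS = ("-MOV", "+MOV")   # subject (short) vs object (long) movement
SENTENCE_TYPES = list(product(EMBEDDINGS, MOVEMENTS))


def condition(first, second):
    """Label a sentence pair as SMSE, DMSE, SMDE, or DMDE (hypothetical helper)."""
    movement = "SM" if first[1] == second[1] else "DM"
    embedding = "SE" if first[0] == second[0] else "DE"
    return movement + embedding


# Enumerate all 16 ordered pairs of sentence types and group them by condition.
pairs_by_condition = {}
for first, second in product(SENTENCE_TYPES, repeat=2):
    pairs_by_condition.setdefault(condition(first, second), []).append((first, second))

for name in ("SMSE", "DMSE", "SMDE", "DMDE"):
    print(name, len(pairs_by_condition[name]))
```

Under this encoding each condition receives four ordered pairs of sentence types, so every condition draws on the same four syntactic types and differs only in how they are sequenced within a trial.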

Materials and Methods

Subjects

Eighteen students from McGill University volunteered (11 females, mean age = 23.78 years, range: 19-47 years). One of the subjects was excluded from the analysis due to low behavioral scores (70% overall and around 50% in certain conditions). All participants were right-handed by self-report, native English speakers with normal hearing. Participants gave informed consent in accordance with the ethics committee of the Montreal Neurological Institute (MNI).

Stimuli

For each of the 4 conditions there were 48 unique sentences (see Supplementary Information for a complete list of sentences) divided up over two runs. Owing to a coding error, there were 49 trials of the DMDE condition, 47 of the DMSE condition, and 48 each of the SMSE and SMDE conditions. Within each condition the possible order of sentence type was counterbalanced. Critically, all four conditions contain the same syntactic types, but the pairs of sentences within a trial were different (see Table 3). This means that any resulting activation distinctions across conditions cannot be attributed to different degrees of syntactic difficulty across conditions, since each of them contained identical syntactic types that simply varied in their sequencing within the sentence pairs of each trial. Furthermore, given that there were no differences in task performance across the conditions, any argument about task complexity interacting with the conditions is diminished.

Table 3.

A schematic depiction of the counterbalancing of sentence orders in each condition where Subject (S), Verb (V), Object (O), and Noun (N)

| Embedding Position | Movement Type: Same | Movement Type: Different |
|---|---|---|
| Same | SMSE | DMSE |
| | <CE, –MOV>, <CE, –MOV> | <CE, –MOV>, <CE, +MOV> |
| | <CE, +MOV>, <CE, +MOV> | <CE, +MOV>, <CE, –MOV> |
| | <RB, –MOV>, <RB, –MOV> | <RB, –MOV>, <RB, +MOV> |
| | <RB, +MOV>, <RB, +MOV> | <RB, +MOV>, <RB, –MOV> |
| Different | SMDE | DMDE |
| | <CE, –MOV>, <RB, –MOV> | <CE, –MOV>, <RB, +MOV> |
| | <CE, +MOV>, <RB, +MOV> | <CE, +MOV>, <RB, –MOV> |
| | <RB, –MOV>, <CE, –MOV> | <RB, –MOV>, <CE, +MOV> |
| | <RB, +MOV>, <CE, +MOV> | <RB, +MOV>, <CE, –MOV> |

The recorded auditory sentences were on average 3274 ms long. Each trial began with one frame of silence (1800 ms) followed by a sentence (depending on sentence length there may be additional silence at the beginning), then 200 ms of silence, and then a second sentence (again, depending on sentence length there may be additional silence at the beginning). Compared to the first sentence, the second sentence had either the same syntax (i.e., SMSE), a change to both Movement type and Embedding position (i.e., DMDE), a change in Embedding position only (i.e., SMDE), or a change in Movement type only (i.e., DMSE). The second sentence of each pair in a trial contained identical lexical items, with the exception that one or two of the nouns changed, as demonstrated in (i) and (ii) for an SMSE condition (the changed noun is marked in bold). The subjects' task was to identify whether 1 or 2 nouns changed from the first to the second sentence of every trial. Half of the stimuli had a 1 noun change and half had a 2 noun change. When there was a one noun change it could be to any of the three nouns. When there was a two noun change, the noun within the relative was changed as well as either the proper name or the head of the relative. In this way, the two sentences always differed in meaning to some extent. Additionally, in the conditions in which Movement type changed (DMSE, DMDE), (most of) the sentences differed in thematic labeling, given that in one sentence the moved NP (e.g. the boy) was the agent and in the other it was the patient. In the conditions in which Embedding position changed (DMDE, SMDE), sentence meaning was also affected, albeit in a way that is more difficult to quantify: the semantic difference between (Derek is the boy who is chasing the tall girl) and (The boy who is chasing the tall girl is Derek) is better appreciated by considering that they each serve as an answer to a different question: “Who is the boy?” for the former, and “Who is Derek?” for the latter.

Following the second sentence, the subject was given 1300ms of silence to respond. A 500ms tone was played to indicate the end of the trial and that they should make a response by this time (see Figure 5 for a representation of the trial dynamics).
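The trial timing can be checked with a little arithmetic. The sketch below assumes that each sentence occupies a fixed 3500 ms slot (the figure given for the leading silence in the fMRI Data Analysis section) and that the 500 ms tone follows the 1300 ms response window; both are readings of the text rather than confirmed details, and the names are illustrative.

```python
TR_MS = 1800             # volume acquisition time (from fMRI Parameters)
SENTENCE_SLOT_MS = 3500  # assumed slot: leading silence plus sentence audio

# Ordered segments of one trial, in milliseconds (assumed layout).
TRIAL_SEGMENTS = [
    ("initial silent frame", 1800),
    ("sentence 1 slot", SENTENCE_SLOT_MS),
    ("inter-sentence silence", 200),
    ("sentence 2 slot", SENTENCE_SLOT_MS),
    ("response window", 1300),
    ("end-of-trial tone", 500),
]


def leading_silence_ms(sentence_ms):
    """Silence padded before a sentence so that it fills its slot."""
    return SENTENCE_SLOT_MS - sentence_ms


trial_ms = sum(duration for _, duration in TRIAL_SEGMENTS)
print(trial_ms, trial_ms / TR_MS)   # total duration and number of TRs
print(leading_silence_ms(3274))     # padding for an average-length sentence
```

Under these assumptions a trial spans a whole number of TRs, which would keep trial onsets aligned with volume acquisitions.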

Figure 5.

Presents the temporal dynamics of each trial. Positions of the three scans, relative to the trial, that were utilized in the analysis are also presented.

(i) The **waitress** [who the lawyer is smiling at _] is Dianna

(ii) The **witness** [who the lawyer is smiling at _] is Dianna

Given that there were two sentences per trial, it was not possible to directly assess comprehension. Our task may have an inherent bias, which is, crucially, a conservative one: if participants try to avoid comprehension, and instead scan the sequence of nouns heard in order to carry out this task, they would do so across all conditions. As a consequence, no structurally selective results would be predicted. And still, our main result demonstrates selective adaptation (see Results). Furthermore, it seems unlikely that suppressing most of the words on the way, and retaining only the nouns, would be more efficient or easier.

fMRI Parameters

Image acquisition was performed with a 1.5T Siemens SonataVision imager at the MNI in Montréal, Canada. A localizer was performed followed by whole-brain T1-weighted imaging for anatomical localization (256×256 matrix; 160-176 continuous 1.00 mm sagittal slices). Each functional volume was acquired with a 64 × 64 matrix size and a total volume acquisition time of 1800 ms. Each imaging run produced 582 acquisitions of the brain volume (20 slices, 5 mm thick, AC-PC plane; TE = 50 ms; TR = 1800 ms; FA = 40°; FOV = 320×320 mm).

fMRI Procedure

One to two days prior to MRI scanning, the subject came into the lab, was provided with task instructions, and was given a short practice session. This practice session provided an opportunity to address any questions or concerns participants may have had about the task, or otherwise, prior to being inside the imaging machine. On the day of MRI scanning the subject was positioned on the bed of the MRI machine. An air-vacuum pillow was used to minimize head movement. Stimuli were presented through high quality pneumatic-based headphones (Silent Scan 3100 System, Avotec Inc.) that attenuated surrounding noise by ~30dB. The session began with a short practice run of 8 sentences. The practice run provided an opportunity to confirm that the volume was adequate and that all equipment was properly connected, in addition to giving the subject a chance to warm up to the task. The subject then performed the task across two runs of stimuli with a high-resolution anatomical scan acquired between the two functional runs.

Stimuli were presented in a rapid event-related design. Null frames, additional to the one presented at the beginning of every trial, were included and were used to jitter stimulus onset. There were a total of 100 additional null frames that were presented individually or in multiples of the TR (i.e., in multiples of 2-8 frames). The duration and positioning of null frames as well as condition order across a run was optimized by using the Linux program Optseq (http://surfer.nmr.mgh.harvard.edu/optseq/). Presentation order of the runs was counterbalanced across subjects.

Behavioral Data Analysis

The behavioral percent correct data were averaged across runs and were then submitted to a 2(±Same Movement) × 2(±Same Embedding) Repeated Measures ANOVA in SPSS.
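For readers who want to reproduce the logic of the main-effect tests without SPSS, the following is a minimal sketch. It relies on the fact that, in a 2 × 2 fully within-subject design, each main effect has one numerator degree of freedom, so its F value equals the squared paired t statistic computed on per-subject marginal means. The function names and the accuracy values are hypothetical and serve only as illustration.

```python
from math import sqrt
from statistics import mean, stdev


def main_effect_F(scores_a, scores_b):
    """F(1, n-1) for a two-level within-subject effect.

    scores_a, scores_b: per-subject means at each level of the factor.
    With one numerator degree of freedom, F equals the squared paired t.
    """
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t * t, (1, n - 1)


def embedding_effect(acc):
    """acc: per-subject dicts of percent correct keyed by condition."""
    same = [(s["SMSE"] + s["DMSE"]) / 2 for s in acc]
    diff = [(s["SMDE"] + s["DMDE"]) / 2 for s in acc]
    return main_effect_F(same, diff)


# Hypothetical accuracies for three subjects, for illustration only.
example = [
    {"SMSE": 92, "DMSE": 90, "SMDE": 88, "DMDE": 86},
    {"SMSE": 95, "DMSE": 91, "SMDE": 90, "DMDE": 90},
    {"SMSE": 89, "DMSE": 91, "SMDE": 88, "DMDE": 84},
]
F, df = embedding_effect(example)
print(round(F, 2), df)
```

The Movement main effect follows the same pattern, averaging SMSE with SMDE and DMSE with DMDE instead.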

fMRI Data Analysis

Statistical fMRI analyses were performed with BrainVoyager QX v1.7 software (Goebel et al., 2006). The data were slice-scan time corrected (sinc interpolation), motion corrected, and had linear trends removed. The first two frames of silence (of a sequence of 7) in each run were excluded from the analysis due to saturation of the BOLD effect. The functional data were coregistered to the anatomical data through a two-step process. An initial alignment was performed based on position information contained in the header of the functional and anatomical files, followed by a fine-tuning alignment based on intensity correlation between the functional (inverted intensity values were used) and anatomical data in order to compensate for any head motion between the acquisition of the anatomical and the functional scans. In cases where the fine-tuning alignment did not produce an optimal alignment, minor manual adjustments were made. The individual functional and anatomical data were transformed into Talairach space and the talairached functional data were processed with a Gaussian spatial filter (FWHM = 8 mm).

Statistical analyses were based on the General Linear Model. Given that a rapid event-related design was used, the hemodynamic response function (HRF) of one condition overlapped with another and a deconvolution analysis was used to determine the signal associated with each condition. This analysis presents an advantage over the typical convolution-based GLM analysis, since it makes no assumptions about the shape of the hemodynamic response function. The deconvolution was performed over 11 scans from stimulus onset (i.e., 11 predictors per condition) for each of the four experimental conditions. Stimulus onset was taken as the beginning of the first frame following the 1800 ms of silence in a trial. Since sentence length varied, there was a variable amount of silence at the beginning of the stimulus (equivalent to 3500 ms minus sentence length). Since adaptation should not occur until the second sentence of a trial, the time points that entered into the contrast were those of the second half of the deconvolution and surrounding the peak. Specifically, the time points corresponding to scans 7-9 (of 1-11) were used in each contrast. There is evidence that adaptation, at least behaviorally, occurs by the second sentence and then plateaus (Devauchelle et al., 2009).
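The structure of such a deconvolution model can be illustrated with a small sketch that builds a finite-impulse-response design matrix with 11 predictors per condition and averages the estimates for scans 7-9. This is not BrainVoyager's implementation; the function names, the onsets, and the run length are hypothetical, and fitting the model (e.g. by least squares) is left out.

```python
N_LAGS = 11                      # scans modeled after each stimulus onset
CONDITIONS = ("SMSE", "DMSE", "SMDE", "DMDE")
CONTRAST_SCANS = (7, 8, 9)       # 1-based scans entering the contrasts


def fir_design_matrix(n_scans, onsets):
    """Build a finite-impulse-response (deconvolution) design matrix.

    onsets maps each condition to the 0-based scan indices at which its
    trials start. Column c * N_LAGS + lag is 1 at scan onset + lag.
    """
    n_cols = len(CONDITIONS) * N_LAGS
    X = [[0] * n_cols for _ in range(n_scans)]
    for c, cond in enumerate(CONDITIONS):
        for onset in onsets.get(cond, []):
            for lag in range(N_LAGS):
                scan = onset + lag
                if scan < n_scans:
                    X[scan][c * N_LAGS + lag] = 1
    return X


def contrast_window_mean(betas_for_condition):
    """Average one condition's 11 estimated betas over scans 7-9."""
    return sum(betas_for_condition[s - 1] for s in CONTRAST_SCANS) / len(CONTRAST_SCANS)


# Hypothetical onsets: two overlapping trials in a 20-scan run.
X = fir_design_matrix(20, {"SMSE": [1], "DMDE": [8]})
print(len(X), len(X[0]))
```

Because each lag has its own predictor, overlapping responses from neighboring trials can be separated without assuming a canonical response shape, provided the onsets are sufficiently jittered.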

Conjunction of Contrasts

The contrasts that entered into a conjunction analysis to isolate regions only responsive to movement type were the following: (1) DMDE – SMSE, (2) DMDE – SMDE, (3) DMSE – SMSE, and (4) DMSE – SMDE. In each of these contrasts a condition with movement type remaining the same (SMDE and SMSE) is being subtracted from one where it changes (DMSE, DMDE). A region specific to movement type should only reduce fMRI activation (i.e., adapt) when movement type remains unchanged. It should not distinguish between embedding position changing or remaining the same.

A conjunction of the following contrasts was used to see if any areas were responsive to embedding position only, although there is no theory, of which we are aware, that would make such a prediction: (1) DMDE – SMSE (2) DMDE – DMSE, (3) SMDE – SMSE, and (4) SMDE – DMSE. The logic here is the same as in the above conjunction.

Lastly, a conjunction of the following contrasts was used to determine which areas are responsive to both embedding position and movement type: (1) DMDE – SMSE, (2) SMDE – SMSE, and (3) DMSE – SMSE. When either (or both) the position of embedding or the type of movement changes (SMDE, DMSE, DMDE) the activation should be higher than when both are held constant. Hence, the identical condition, in which both embedding type and movement type are the same, is subtracted from those conditions in which one or both factors change. So long as there is a change to a property that the region is sensitive to, it may be released from the repetition suppression.

Note that these contrasts do not distinguish identity or difference between members of a pair in terms of syntax and semantic composition. It is impossible to change the syntax and hold semantic composition constant; however, the conditions do keep lexical semantics constant.

Any overlap between areas that adapt to both syntactic variables and those that adapt to only one syntactic variable is indicative of an area that adapts to both but is adapting more strongly to one over the other.
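The logic of the three conjunctions can be made concrete with a small sketch. It uses a minimum-across-contrasts rule on hypothetical response profiles; the actual analysis applied random-effects statistics to each contrast, so the function `conjunction`, the threshold, and the numeric profiles below are illustrative assumptions only.

```python
# Each contrast is (condition where the property changes, condition where it repeats).
MOVEMENT_ONLY = [("DMDE", "SMSE"), ("DMDE", "SMDE"), ("DMSE", "SMSE"), ("DMSE", "SMDE")]
EMBEDDING_ONLY = [("DMDE", "SMSE"), ("DMDE", "DMSE"), ("SMDE", "SMSE"), ("SMDE", "DMSE")]
BOTH = [("DMDE", "SMSE"), ("SMDE", "SMSE"), ("DMSE", "SMSE")]


def conjunction(signal, contrasts, threshold=0.0):
    """True if every contrast in the set exceeds the threshold.

    signal maps condition -> response estimate; a real analysis would test
    per-contrast random-effects statistics rather than raw differences.
    """
    return min(signal[a] - signal[b] for a, b in contrasts) > threshold


# Hypothetical response profiles (arbitrary units).
movement_selective = {"SMSE": 0.2, "SMDE": 0.2, "DMSE": 0.6, "DMDE": 0.6}
sensitive_to_both = {"SMSE": 0.2, "SMDE": 0.6, "DMSE": 0.6, "DMDE": 0.6}

for name, profile in (("movement-selective", movement_selective),
                      ("both", sensitive_to_both)):
    print(name,
          conjunction(profile, MOVEMENT_ONLY),
          conjunction(profile, EMBEDDING_ONLY),
          conjunction(profile, BOTH))
```

In this idealized setting a movement-selective profile passes only the movement conjunction, while a profile released from suppression by any change passes only the third conjunction. With graded real data a region can pass more than one, which is the overlap case described above.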

Random effect group maps corrected for serial correlations underwent a conjunction analysis and were thresholded at a voxel-wise p<.05 and cluster thresholded at p<.05 to correct for multiple comparisons, for positive betas only. Given that the voxel-wise p-value of .05 is applied to the intersection of 3 or 4 maps, the probability that any voxel in the conjunction analysis is due to chance is actually much lower. Given the dependence between some of the contrasts, a calculation is not straightforward. However, in the case of the 4-contrast conjunction, 2 contrasts are independent; thus, the probability for all 4 is lower than the .0025 (.05 × .05) associated with only these 2 contrasts. To confirm the reliability of the results we also looked at less stringent voxel-wise thresholds (p<.1, although not significant). Two methods are available for calculating conjunction analyses with a random effects model in BV: (I) random effect statistic calculated on the conjunction of contrasts based on their beta values or (II) conjunction of the random effect statistic of each contrast. Given the more conservative nature of (II) we chose this method.

Cytoarchitectonic Probability Calculations

For activation clusters that appeared within the left Inferior Frontal Gyrus (IFG), the probability that these clusters were within BA 44 and/or 45 was calculated using the probability maps of Amunts et al. (1999; http://www.bic.mni.mcgill.ca/cytoarchitectonics/). The probability calculations required a 5-step process: (I) extract Talairach coordinates for all voxels within a cluster; (II) convert the coordinates to MNI coordinates using the MATLAB script tal2mni (http://imaging.mrc-cbu.cam.ac.uk/imaging/MniTalairach), as the Amunts map (1999) is in the MNI coordinate system and ours are in that of Talairach and Tournoux (1988); (III) convert the MNI world coordinates to voxel coordinates of the MINC files (with respect to the probability maps of BA44 and BA45 that are within MNI space); (IV) extract probability values from the voxels within the probability maps; and (V) average all values within a cluster to obtain the final number. Since the values represent the number of subjects with overlapping cytoarchitectonic areas at any particular voxel, the average value was divided by the number of brains used in generating the cytoarchitectonic maps (i.e., 10) and multiplied by 100%.
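Steps (III) to (V) amount to a coordinate lookup followed by an average. The sketch below illustrates that arithmetic only; the helper names, the volume origin and voxel size, the example coordinate, and the overlap counts are hypothetical placeholders and are not taken from the actual probability maps or from tal2mni.

```python
N_BRAINS = 10  # brains underlying the probability maps (from the text)


def world_to_voxel(coord_mm, start_mm, step_mm):
    """Convert one MNI world coordinate (mm) to the nearest voxel index.

    start_mm and step_mm are the volume's per-axis origin and voxel size;
    the values used below are placeholders, not the real map header.
    """
    return tuple(round((c - s) / d) for c, s, d in zip(coord_mm, start_mm, step_mm))


def cluster_probability(overlap_counts, n_brains=N_BRAINS):
    """Mean percentage of brains whose area covers the cluster's voxels."""
    return 100.0 * sum(overlap_counts) / len(overlap_counts) / n_brains


# Hypothetical overlap counts sampled at four voxels of one cluster.
print(cluster_probability([4, 6, 5, 5]))
# Hypothetical coordinate and volume geometry.
print(world_to_voxel((-50.0, 30.0, 16.0), (-90.0, -126.0, -72.0), (1.0, 1.0, 1.0)))
```

In practice the origin and step sizes would be read from the header of each MINC probability map rather than hard-coded.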

Results

Behavioral

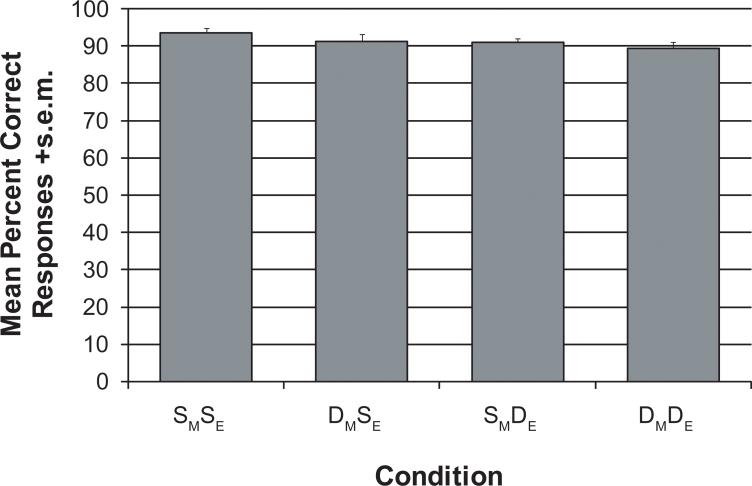

The subjects performed the task well without being at ceiling, as each condition had an average accuracy around 90% (see Figure 2). There was a significant main effect of Embedding (F(1, 16)=5.38, p=0.034), due to same-Embedding having a higher mean percentage correct than different-Embedding. There was no main effect of Movement, which is most likely due to more variability in the movement contrast, given that the two contrasts are of almost equal magnitude. Given that the task involves a response to a comparison between two sentences rather than a single one, there is no theoretical motivation to measure reaction time data.

Figure 2.

Presents the mean percent correct responses +s.e.m for each condition.

fMRI

The search for clusters that adapt to Movement and to Embedding was carried out through the calculation of contrasts, and conjunction analyses (see Methods for further elaboration). We report the following results:

1. The anterior LIFG (BA 45) adapted to Movement but not Embedding

This cluster was discovered through a conjunction of contrasts between Same Movement and Different Movement conditions. That is, contrasts between conditions that contained sentence pairs that were the Same on the Movement dimension, and conditions that were Different on this dimension. In each contrast, then, a Same Movement condition (namely, SMDE and SMSE) was subtracted from a Different Movement condition (DMSE and DMDE; Table 2, Figure 3). The absence of adaptation to Embedding is not an issue of cluster thresholding, as there were no clusters of any size in BA45. Furthermore, a less conservative 2 (+/- Same Movement) × 2(+/- Embedding) ANOVA analysis did not demonstrate a main effect of Embedding in this region, while it did clearly demonstrate a main effect of Movement.

Table 2.

A summary of the fMRI adaptation results indicating each cluster's anatomical landmark, hemisphere, mean Talairach coordinate, Brodmann area (BA), and volume in mm3.

| Landmark | Hemisphere | Talairach coordinates (x, y, z) | BA | Volume (mm3) |
|---|---|---|---|---|
| Adaptation to Movement Type and Embedding Position | | | | |
| Inferior Frontal Gyrus/Inferior Precentral Sulcus | Left | -41, 10, 31 | 44/6/8 | 5563 |
| Inferior Precentral Sulcus | Right | 48, 20, 36 | 6/9 | 2146 |
| Superior Temporal Gyrus | Left | -52, -34, 2 | 21/22 | 2378 |
| Adaptation to Movement Type | | | | |
| Inferior Frontal Gyrus | Left | -48, 29, 15 | 45 | 857 |

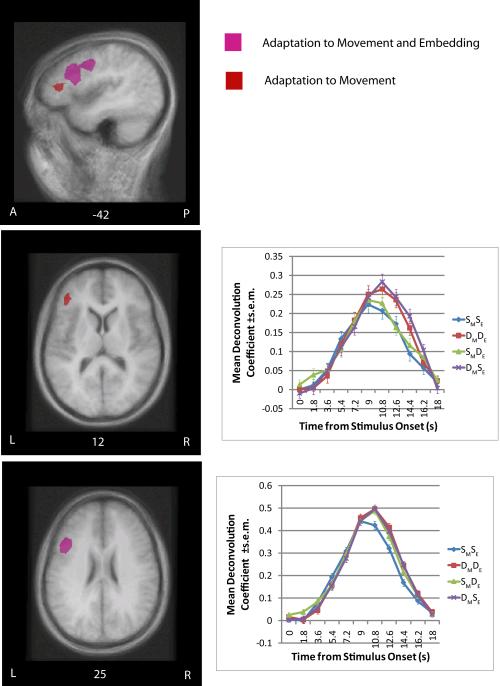

Figure 3.

Presents the clusters of adaptation in the left inferior frontal gyrus (IFG; Broca's area) overlaid on a group average MRI. The anatomical locations are presented sagittally and axially. The pink color corresponds to those areas that adapted to both movement type and embedding position; the red color corresponds to those areas that adapted to movement type only. The clusters are thresholded at equivalent thresholds (p<.05 voxel-wise and p<.05 map corrected for multiple comparisons). The deconvolution plots for areas that adapted to movement type only and to both movement type and embedding position are presented as well.

2. No region adapted to Embedding but not Movement

To search for such a cluster, a conjunction of the following contrasts was used: (1) DMDE – SMSE, (2) DMDE – DMSE, (3) SMDE – SMSE, and (4) SMDE – DMSE. The logic here is the same as in the above conjunction: subtract the conditions in which Embedding is the Same across the 2 sentences of each stimulus (SMSE, DMSE) from those in which it is Different (SMDE, DMDE). No current theory of which we are aware predicts that such a cluster should be found.

3. Three distinct regions adapted to both Movement and Embedding – posterior LIFG (BA44), the right inferior precentral sulcus (RiPS), and the left superior temporal gyrus (STG)

This was discovered through a conjunction of the following contrasts: (1) DMDE – SMSE, (2) SMDE – SMSE, and (3) DMSE – SMSE. When either the position of embedding or the type of movement changes (SMDE, DMSE), or when both change (DMDE), the activation should be higher than when both are held constant (see Table 2, Figure 4). Hence, the identical condition (SMSE), in which both embedding position and movement type are the same, is subtracted from those conditions in which one or both factors change.
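The logic of the three conjunctions can be made concrete with a small sketch. It is an illustration under stated assumptions, not the procedure used in the study (see Methods for that): the conjunction is implemented here as a minimum over contrast effects, the responses are made-up noise-free numbers rather than statistics, and the function and variable names are hypothetical. The Embedding and Movement+Embedding contrast lists follow the text; for Movement, the text names the conditions but not the exact pairings, so all four Different-minus-Same pairings are assumed.

```python
import numpy as np

# Condition order used for the columns of the toy response matrix.
CONDITIONS = ("SMSE", "SMDE", "DMSE", "DMDE")


def contrast(plus, minus):
    """Weight vector for the contrast `plus` - `minus` over CONDITIONS."""
    weights = np.zeros(len(CONDITIONS))
    weights[CONDITIONS.index(plus)] = 1.0
    weights[CONDITIONS.index(minus)] = -1.0
    return weights


CONJUNCTIONS = {
    # Assumed pairings: every Different Movement minus every Same Movement.
    "movement": [("DMSE", "SMSE"), ("DMSE", "SMDE"),
                 ("DMDE", "SMSE"), ("DMDE", "SMDE")],
    # As listed in the text for Embedding but not Movement.
    "embedding": [("DMDE", "SMSE"), ("DMDE", "DMSE"),
                  ("SMDE", "SMSE"), ("SMDE", "DMSE")],
    # As listed in the text for Movement and Embedding.
    "both": [("DMDE", "SMSE"), ("SMDE", "SMSE"), ("DMSE", "SMSE")],
}


def conjunction(responses, pairs, threshold=0.0):
    """True for voxels where every contrast in `pairs` exceeds `threshold`.

    `responses` has shape (n_voxels, 4), columns ordered as CONDITIONS.
    """
    effects = np.stack([responses @ contrast(p, m) for p, m in pairs])
    return effects.min(axis=0) > threshold


# Toy responses (assumed): lower values stand for an adapted signal.
toy_responses = np.array([
    [1.0, 1.0, 2.0, 2.0],  # voxel 0: releases only when Movement differs
    [1.0, 2.0, 2.0, 2.0],  # voxel 1: releases when either factor differs
    [1.0, 2.0, 1.0, 2.0],  # voxel 2: releases only when Embedding differs
])

toy_masks = {name: conjunction(toy_responses, pairs)
             for name, pairs in CONJUNCTIONS.items()}
```

With these toy responses, each conjunction selects exactly one voxel: the Movement conjunction picks voxel 0, the Movement+Embedding conjunction picks voxel 1, and the Embedding conjunction picks voxel 2. In the reported data, no region showed the third pattern.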

Figure 4.

Presents the clusters of adaptation in the left superior temporal gyrus (STG; upper panel) and right inferior precentral sulcus (iPS; lower panel) overlaid on a group average MRI. Each cluster is presented in sagittal and axial planes along with its deconvolution plot. The clusters are thresholded at p<.05 voxel-wise and p<.05 map corrected for multiple comparisons.

Localization and separation of clusters

We used cytoarchitectonic probability maps from the Jülich Brain Mapping project as our reference for Broca's region (see Methods for further elaboration). The Movement cluster in anterior LIFG had a 29% probability of being within BA45. This number is relatively high (Santi and Grodzinsky, 2007a). The Movement+Embedding cluster in the posterior LIFG had a 17% probability of being within BA44 (see Table 2, Figure 5). This cluster is quite large and extends beyond BA44. These two clusters had a small volume of overlap (47 mm3). The overlap of these contrasts is indicative of an interaction, whereby the region is sensitive to both embedding position and movement type, but more so to movement. Given the small size of the volume, it does not require much discussion. Moreover, relaxing the voxel-wise threshold (to p<.1) did not diminish the distinction across Broca's area; rather, both conjunctions simply produced larger clusters and consequently a larger area of overlap.

Discussion

Our results present a highly structured picture: they suggest an anatomical division of syntactic complexity, and specifically a functional parcellation of Broca's region along the two complexity dimensions. If decreased BOLD response to repeated percepts reflects the involvement of the adapted regions in processing, then left Brodmann Area 45 specializes in calculating Movement relations during sentence comprehension, whereas no particular brain area specializes in handling Embedding. Our results further suggest that a distinct set of brain areas participates in analyzing sentences that are syntactically complex along both dimensions (i.e., the left posterior STG, and to a lesser extent right iPS, and left Brodmann Areas 44/6/8). These are novel results that contribute to a more detailed and precise brain map of the language faculty, mainly by showing that Broca's region is not linguistically monolithic and that syntactic complexity is not a uniform notion. The results cannot be attributed to lexical semantics, since lexical items were equally consistent across all conditions that were contrasted to one another in testing for adaptation (ie, a decreased signal). We note that it is possible, however, that the left posterior STG, right iPS and left BA 44/6/8 were adapting to sentence-level (compositional) semantics, since sentential meaning was preserved more in the identical condition than in the other three conditions (it is impossible to preserve compositional semantics given the syntactic modifications under investigation). Such an account, importantly, cannot be given for the main result – the Movement-selectivity in adaptation of BA45. A meaning difference between the 2 members of the sentence pair exists in both complexity dimensions, and hence, on the semantic account, a meaning difference within a stimulus, whether induced by Movement or Embedding, should lead to an effect. The observed selectivity is therefore incompatible with a semantic composition explanation.

An alternative view is to recast the neural dissociation between these two syntactic factors, in BA45, in semantic terms. The movement conditions (DMSE, DMDE) contained pairs of sentences that had an NP moved from subject position in one case and from object position in the other. This resulted in different pairings between the theta roles and the NPs. For example, the DMSE condition contained a sentence with subject movement (see I in Table 1), where the boy was the agent, and a sentence with object movement (see II in Table 1), where the boy was the patient. On this view (proposed by an anonymous reviewer whom we thank), the current experiment cannot unpack sameness of gap position from thematic labeling of the NPs as the reason for the observed effect. This view should be considered against the fact that all conditions contained meaning differences (see Methods section) – the Embedding conditions also contained a meaning difference, which was distinct from the meaning difference in the Movement conditions. Either way, the critical point here, whether described in terms of semantics or syntax, is that BA45 demonstrates clear selectivity.

Moreover, the results are in line with some previous syntactic results: studies that contrasted Movement with other intra-sentential dependency relations, in English, singled out left BA 45 (Santi and Grodzinsky, 2007a, b) and a study that contrasted long distance object and short distance subject movement, in German, found activation at the border between BA44 and BA45 and at the border between the precentral and inferior frontal sulci (Fiebach et al., 2005). Though these studies showed that this region is not generally sensitive to syntactic dependencies, neither the current study nor previous ones precisely identify which aspect of this complex movement operation is responsible.

Finally, it is important to note that some earlier syntactic adaptation experiments obtained very different – and disparate – results. Thus one study found adaptation in the left anterior temporal pole (Noppeney and Price, 2004), whereas others homed in on the posterior superior temporal gyrus (Dehaene-Lambertz et al., 2006; Devauchelle et al., 2009). The results of these studies did not overlap, and none, moreover, found adaptation in Broca's area. The underlying reason for this disjointed picture has to do with the contrasts and the tasks used (see SI_Discussion).

In summary, the current study demonstrates that not all dimensions of syntactic complexity are treated equally in the brain. Distinctions are made along an anterior-to-posterior direction, with anterior aspects being selective to movement and posterior aspects demonstrating a general sensitivity to syntax. A structured brain map for aspects of syntax thus emerges. Importantly, our results do not exclude a relation between these brain areas and other cognitive or linguistic functions. They only indicate the manner in which each is involved in the processing of complex sentences.

Supplementary Material

Supplementary Figure Captions

Figure 1.

Presents tree diagrams for the two right-branching sentences. (a.) contains an object relative and (b.) contains a subject relative.

Figure 2.

Presents tree diagrams for the two center-embedded sentences. (a.) contains an object relative and (b.) contains a subject relative.

Acknowledgments

This work was supported by a Canada Research Chairs grant, by a SSHRC standard grant, and by NIH grant DC000494 (YG), and a Natural Sciences and Engineering Research Council (NSERC) scholarship, and Lloyd Carr-Harris Fellowship (AS).


References

- Amunts K, Schleicher A, Börgel U, Mohlberg H, Uylings HBM, Zilles K. Broca's region revisited: Cytoarchitecture and intersubject variability. Journal of Comparative Neurology. 1999;412:319–341. doi: 10.1002/(sici)1096-9861(19990920)412:2<319::aid-cne10>3.0.co;2-7.

- Ansell BJ, Flowers CR. Aphasic adults' use of heuristic and structural linguistic cues for sentence analysis. Brain and Language. 1982;16:61–72. doi: 10.1016/0093-934x(82)90072-4.

- Ben-Shachar M, Hendler T, Kahn I, Ben-Bashat D, Grodzinsky Y. The neural reality of syntactic transformations: Evidence from functional magnetic resonance imaging. Psychological Science. 2003;14:433–440. doi: 10.1111/1467-9280.01459.

- Ben-Shachar M, Palti D, Grodzinsky Y. Neural correlates of syntactic movement: Converging evidence from two fMRI experiments. NeuroImage. 2004;21:1320–1336. doi: 10.1016/j.neuroimage.2003.11.027.

- Beretta A, Schmitt C, Halliwell J, Munn A, Cuetos F, Kim S. The effects of scrambling on Spanish and Korean agrammatic interpretation: Why linear models fail and structural models survive. Brain and Language. 2001;79:407–425. doi: 10.1006/brln.2001.2495.

- Blaubergs MS, Braine MDS. Short-term memory limitations on decoding self-embedded sentences. Journal of Experimental Psychology-General. 1974;102:745–748.

- Bornkessel I, Zysset S, Friederici A, Von Cramon DY, Schlesewsky M. Who did what to whom? The neural basis of argument hierarchies during language comprehension. NeuroImage. 2005;26:221–233. doi: 10.1016/j.neuroimage.2005.01.032.

- Braver TS, Cohen JD, Nystrom LE, Jonides J, Smith EE, Noll DC. A parametric study of prefrontal cortex involvement in human working memory. NeuroImage. 1997;5:49–62. doi: 10.1006/nimg.1996.0247.

- Buckner RL, Goodman J, Burock M, Rotte M, Koutstaal W, Schacter D, Rosen B, Dale A. Functional-anatomic correlates of object priming in humans revealed by rapid presentation event-related fMRI. Neuron. 1998;20:285–296. doi: 10.1016/s0896-6273(00)80456-0.

- Caplan D, Alpert N, Waters G. PET studies of syntactic processing with auditory sentence presentation. NeuroImage. 1999;9:343–351. doi: 10.1006/nimg.1998.0412.

- Caplan D, Alpert N, Waters G, Olivieri A. Activation of Broca's area by syntactic processing under conditions of concurrent articulation. Human Brain Mapping. 2000;9:65–71. doi: 10.1002/(SICI)1097-0193(200002)9:2<65::AID-HBM1>3.0.CO;2-4.

- Caplan D, Futter C. Assignment of thematic roles to nouns in sentence comprehension by an agrammatic patient. Brain and Language. 1986;27:117–134. doi: 10.1016/0093-934x(86)90008-8.

- De Vincenzi M. Syntactic analysis in sentence comprehension: Effects of dependency types and grammatical constraints. Journal of Psycholinguistic Research. 1996;25:117–133. doi: 10.1007/BF01708422.

- Dehaene-Lambertz G, Dehaene S, Anton J-L, Campagne A, Ciuciu P, Dehaene G, Denghien I, Jobert A, LeBihan D, Sigman M, Pallier C, Poline J-B. Functional segregation of cortical language areas by sentence repetition. Human Brain Mapping. 2006;27:360–371. doi: 10.1002/hbm.20250.

- Devauchelle A, Oppenheim C, Rizzi L, Dehaene S, Pallier C. Sentence syntax and content in the human temporal lobe: an fMRI adaptation study in auditory and visual modalities. Journal of Cognitive Neuroscience. 2009;21:1000–1012. doi: 10.1162/jocn.2009.21070.

- Drai D, Grodzinsky Y. A new empirical angle on the variability debate: Quantitative neurosyntactic analyses of a large data set from Broca's Aphasia. Brain and Language. 2006;96:117–128. doi: 10.1016/j.bandl.2004.10.016.

- Drai D, Grodzinsky Y, Zurif E. Broca's aphasia is associated with a single pattern of comprehension performance: A reply. Brain and Language. 2001;76:185–192. doi: 10.1006/brln.2000.2288.

- Druzgal JT, D'Esposito M. Activity in fusiform face area modulated as a function of working memory load. Cognitive Brain Research. 2001;10:355–364. doi: 10.1016/s0926-6410(00)00056-2.

- Fiebach CJ, Schlesewsky M, Lohmann G, von Cramon DY, Friederici AD. Revisiting the role of Broca's area in sentence processing: Syntactic integration versus syntactic working memory. Human Brain Mapping. 2005;24:79–91. doi: 10.1002/hbm.20070.

- Ford M. A method for obtaining measures of local parsing complexity throughout sentences. Journal of Verbal Learning and Verbal Behavior. 1983;22:203–218.

- Frazier L, d'Arcais GBF. Filler driven parsing: A study of gap filling in Dutch. Journal of Memory and Language. 1989;28:331–344.

- Friederici AD, Fiebach CJ, Schlesewsky M, Bornkessel ID, Von Cramon DY. Processing linguistic complexity and grammaticality in the left frontal cortex. Cerebral Cortex. 2006;16:1709–1717. doi: 10.1093/cercor/bhj106.

- Friedmann N, Shapiro LP. Agrammatic comprehension of simple active sentences with moved constituents: Hebrew OSV and OVS structures. Journal of Speech, Language, and Hearing Research. 2003;46:288–297. doi: 10.1044/1092-4388(2003/023).

- Gibson E. Linguistic complexity: Locality of syntactic dependencies. Cognition. 1998;68:1–76. doi: 10.1016/s0010-0277(98)00034-1.

- Gibson E, Desmet T, Grodner D, Watson D, Ko K. Reading relative clauses in English. Cognitive Linguistics. 2005;16:313–354.

- Goebel R, Esposito F, Formisano E. Analysis of functional image analysis contest (FIAC) data with Brainvoyager QX: From single-subject to cortically aligned group general linear model analysis and self-organizing group independent component analysis. Human Brain Mapping. 2006;27:392–401. doi: 10.1002/hbm.20249.

- Grewe T, Bornkessel I, Zysset S, Wiese R, Von Cramon DY, Schlesewsky M. The emergence of the unmarked: A new perspective on the language-specific function of Broca's Area. Human Brain Mapping. 2005;26:178–190. doi: 10.1002/hbm.20154.

- Grill-Spector K, Malach R. fMR-adaptation: a tool for studying the functional properties of human cortical neurons. Acta Psychologica. 2001;107:293–321. doi: 10.1016/s0001-6918(01)00019-1.

- Grodzinsky Y. Agrammatic comprehension of relative clauses. Brain and Language. 1989;37:480–499. doi: 10.1016/0093-934x(89)90031-x.

- Grodzinsky Y. The neurology of syntax: language use without Broca's area. Behavioral and Brain Sciences. 2000;23:1–21. doi: 10.1017/s0140525x00002399.

- Hickok G, Zurif E, Canseco-Gonzalez E. Structural description of agrammatic comprehension. Brain and Language. 1993;45:371–395. doi: 10.1006/brln.1993.1051.

- Just MA, Carpenter PA, Keller TA, Eddy WF, Thulborn KR. Brain activation modulated by sentence comprehension. Science. 1996;274:114–116. doi: 10.1126/science.274.5284.114.

- Kimball J. Seven principles of surface structure parsing in natural language. Cognition. 1973;2:5–47.

- King J, Just MA. Individual differences in syntactic processing: the role of working memory. Journal of Memory and Language. 1991;30:580–602.

- Kourtzi Z, Kanwisher N. Representation of perceived object shape by the human lateral occipital complex. Science. 2001;293:1506–1509. doi: 10.1126/science.1061133.

- Makuuchi M, Bahlmann A, Anwander A, Friederici AD. Segregating the core computational faculty of human language from working memory. Proceedings of the National Academy of Sciences. 2009;106:8362–8367. doi: 10.1073/pnas.0810928106.

- Miller GA, Chomsky N. Finitary models of language users. Wiley; New York: 1963.

- Miller GA, Isard S. Free recall of self-embedded English sentences. Information and Control. 1964;7:292–303.

- Noppeney U, Price CJ. An fMRI study of syntactic adaptation. Journal of Cognitive Neuroscience. 2004;16:702–713. doi: 10.1162/089892904323057399.

- Santi A, Grodzinsky Y. Taxing Working Memory with Syntax: Bi-hemispheric Modulations. Human Brain Mapping. 2007a;28:1089–1097. doi: 10.1002/hbm.20329.

- Santi A, Grodzinsky Y. Working memory and syntax interact in Broca's area. NeuroImage. 2007b;37:8–17. doi: 10.1016/j.neuroimage.2007.04.047.

- Sherman JC, Schweickert J. Syntactic and semantic contributions to sentence comprehension in agrammatism. Brain and Language. 1989;37:419–439. doi: 10.1016/0093-934x(89)90029-1.

- Smith EE, Jonides J. Storage and executive processes in the frontal lobes. Science. 1999;283:1657–1661. doi: 10.1126/science.283.5408.1657.

- Stromswold K, Caplan D, Alpert N, Rauch S. Localization of syntactic comprehension by positron emission tomography. Brain and Language. 1996;52:452–473. doi: 10.1006/brln.1996.0024.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. Thieme; New York: 1988.

- Traxler MJ, Morris R, Seely R. Processing subject and object relative clauses: Evidence from eye movements. Journal of Memory and Language. 2002;47:69–90.

- Traxler MJ, Williams RS, Blozis SA, Morris RK. Working memory, animacy, and verb class in the processing of relative clauses. Journal of Memory and Language. 2005;53:204–224.

- Wanner E, Maratsos M. Linguistic theory and psychological reality. MIT Press; Cambridge, MA: 1978.
