Abstract

Objective

To examine the extent to which health plan quality measures capture physician practice patterns rather than plan characteristics.

Data Source

We gathered and merged secondary data from the following four sources: a private firm that collected information on individual physicians and their health plan affiliations, the National Committee for Quality Assurance, InterStudy, and the Dartmouth Atlas.

Study Design

We constructed two measures of physician network overlap for all health plans in our sample and linked them to selected measures of plan performance. Two linear regression models were estimated to assess the relationship between the measures of physician network overlap and the plan performance measures.

Principal Findings

The results indicate that in the presence of a higher degree of provider network overlap, plan performance measures tend to converge to a lower level of quality.

Conclusions

Standard health plan performance measures reflect physician practice patterns rather than plans' effort to improve quality. This implies that more provider-oriented measurement, such as would be possible with accountable care organizations or medical homes, may facilitate patient decision making and provide further incentives to improve performance.

Keywords: Physician network overlap, managed care, health plan, quality, accountable care organizations (ACO), HEDIS, CAHPS

One approach to reforming the health care system involves creating more competitive health care markets. Some proposals focus on competition among health plans, under the implicit assumption that well-informed purchasers and consumers can choose their plan based on costs, benefit structure, and quality (Robinson 1999; Scanlon et al. 2005). As a result, health plan performance measurement is common (Bundorf, Choudhry, and Baker 2006), reflecting the assumption that plans are responsible, at least in part, for the quality of care received by their members.

Yet plan control over quality is limited. Typical measures of health plan performance include process measures that likely reflect physician practice style and behavior. To the extent that hospitals and physicians contract with multiple health plans, plans may be less able to distinguish themselves in the marketplace on these measures (Chernew et al. 2004). As such, if the degree of physician overlap is large, comparing health plans based on performance measures may not necessarily convey the information that either consumers or policy makers seek about variation in the quality of care, limiting the usefulness of the measures for practical purposes.

Thus, the health plan may not be the most useful unit for measuring quality of care experienced by consumers. Instead, provider-oriented structures, such as accountable care organizations (ACOs) or medical homes, where the primary responsibility of delivering high-quality care is assumed to rest with the providers, may constitute a step in the right direction toward the goal of creating more meaningful quality metrics (Fisher et al. 2007). Of course, measurement at this more refined level can always be aggregated to the health plan level if plan-level comparisons are desired.

In this paper, we empirically explore the extent to which the overlap in health plans' physician networks is correlated with health plan performance using a unique dataset. Our results indicate that in the presence of a higher degree of provider network overlap, plan performance measures tend to converge to a lower level of quality.

In previous research, Chernew et al. (2004) demonstrated that a patient who switches plans has about a fifty–fifty chance of not needing to change his or her physician. Baker et al. (2004) showed that variation in the Healthcare Effectiveness Data and Information Set (HEDIS) scores may be explained by the systematic but unobserved heterogeneity of both providers and plans, suggesting that providers may indeed affect HEDIS scores.

CONCEPTUAL FRAMEWORK

Health plans' provider networks form for two reasons. First, by joining the list of “preferred” or “exclusive” providers affiliated with a health plan, a provider can gain access to a steady flow of patients and revenue; second, health plans benefit by obtaining discounts as well as some control over how the providers utilize resources to treat their enrollees (Ma and McGuire 2002). As the number of plans increases in a market while the number of providers remains unchanged, competition is likely to lead to increased overlap in the plans' provider networks.

The existing measures of plan performance, however, commonly include process measures (for example, rates of beta-blocker prescription following a heart attack, retinopathy exam rates for diabetics, and utilization rates of preventive services such as mammography screening or immunizations) that are likely to reflect physician practice styles and behaviors as well as the characteristics of the plan, its patients, and the market in which it operates. We therefore begin with the assumption that a health plan's scores on performance measures are jointly determined by its contracted physicians, its enrolled members and patients, and the plan's own characteristics, such as profit status, enrollment size, and time in business.

Plan performance is also assumed to be affected by market conditions and regional practice norms. In particular, regional variation in medical utilization, practice patterns, and costs has been well-documented in recent literature (Fisher et al. 2003). Given that health plans typically operate in multiple markets across several geographical regions, it is likely that their overall plan-level performance measures also reflect the variation in practice patterns and resource utilization rates across all of the markets in which they operate.

Existing literature indicates that managed care plans are in a unique position to implement quality improvement initiatives and have strong incentive to do so (Scanlon et al. 2000). Plans can influence physicians by the incentives they provide (e.g., payment), educational programs they offer, or information they provide about patients (e.g., chronic care registries). Plans may also directly influence patients through benefit design, prevention programs offered (e.g., smoking cessation programs), or disease or care management programs.

However, many of the available measures of plan performance such as HEDIS are more likely to be functions of physician practice styles and patterns that do not vary patient by patient (Glied and Zivin 2002). If the plan quality measures are ultimately driven by the physicians and, in essence, reflect their practice patterns, one would expect that plan performance measures are fundamentally explained by the number of physicians the plans have in common with other plans—that is, physician network overlap. Therefore, we expect that as physician network overlap increases, plan performance scores will converge.

We also expect higher overlap to be associated with plan performance converging to a lower level. We hypothesize that a greater degree of physician network overlap may lead to less incentive for individual plans to undertake quality improvement initiatives at the physician level because the benefits from such efforts would spill over to the plans' competitors, with whom the physicians may also contract (N. D. Beaulieu unpublished data; Beaulieu et al. 2006). Thus, as plans share more of their physician networks with their competitors, we expect individual plan performance measures to decline on average.

DATA

We gathered and merged relevant data from the following four sources: a private firm, the National Committee for Quality Assurance (NCQA), InterStudy, and the Dartmouth Atlas.

Plan Physician Network

Our data originated from a private firm that collected information on individual physicians and their health plan affiliations. This firm collected the data to generate a comprehensive database of plan–physician combinations for employers who wanted an easy way for their employees to search for affiliated physicians or to check if their physician was in any given plan. The firm gave us access to its proprietary electronic provider lists for 214 health maintenance organizations (HMOs) that reflect each plan's physician network for January 2001, 2002, and 2003.

We compared our physician sample against the area resource file (ARF) data and found that the physicians in our sample were fairly representative of all physicians in the United States during the same period in terms of geographical location. Our sample contained physicians from all 50 states plus the District of Columbia, with more than half concentrated in six states: California, New York, Ohio, Texas, Florida, and New Jersey. Moreover, over 95 percent of the physicians in the sample were located primarily in metropolitan statistical areas (MSAs). According to the ARF data in the same period, the top five states in terms of number of physicians were California, New York, Texas, Florida, and Pennsylvania (in our data, Pennsylvania was seventh); furthermore, ARF indicated that about 98 percent of all physicians in the United States were located in MSAs.

Because the firm provided specific physician names to interested employees and benefits managers, we believe the data to be highly accurate. Nevertheless, to assess the accuracy and reliability of the dataset, we checked the affiliations of all listed physicians in two large plans. We provided the physician lists and asked the plans to verify that all listed physicians were indeed affiliated with them. We found a combined error rate of less than 1.5 percent, suggesting that although the dataset had some noise, it was relatively accurate.

Because hospital-based physicians (radiologists, anesthesiologists, pathologists, and hospitalists) often do not appear on health plan provider lists, our measure of overlap is best interpreted as a measure of overlap among community-based physicians.

Measuring Network Overlap

We construct two measures of overlap. One is a “plan pair” measure that captures the overlap between any pair of plans. This allows us to test how overlap affects the convergence in quality performance. The other is the average number of health plans with which the physicians in a given plan have contracts. This allows us to investigate the effect of a plan's overlap with other plans on its own quality performance. In constructing these measures, we assume that physicians contracting with the same plan also belong to the same “network.” Thus, to some extent, we use the terms “physician network” and “plan affiliation” interchangeably. In reality, however, belonging to the same plan may not be equivalent to belonging to the same physician network, such as an independent physician association (IPA) or preferred provider organization (PPO); unfortunately, we are not able to observe the actual “network” to which each physician belongs.

A “plan pair” measure is defined for every unique combination of two plans. It is calculated as the number of physicians belonging to both plans divided by the total number of physicians belonging to either plan. For example, suppose plan A has 300 physicians and plan B has 500 physicians in their respective networks, and that 200 physicians appear in both networks (and are thus counted in both totals). Then the plan–pair overlap is 33.3 percent, that is, 200 divided by (300 + 500 − 200) = 600. This calculation is possible because our data allow us to observe each physician's plan affiliations directly.
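The plan–pair measure is an intersection-over-union ratio (a Jaccard index expressed as a percentage). A minimal Python sketch, assuming each plan's network is available as a collection of physician identifiers; the function names and identifiers are illustrative and not taken from the authors' code. It reproduces the worked example above:

```python
from itertools import combinations


def plan_pair_overlap(network_a, network_b):
    """Percent of physicians in either network who appear in both (Jaccard index x 100)."""
    a, b = set(network_a), set(network_b)
    either = a | b
    if not either:
        return 0.0
    return 100.0 * len(a & b) / len(either)


def all_pair_overlaps(networks):
    """Overlap for every unique pair of plans; `networks` maps plan id -> physician ids."""
    return {
        (i, j): plan_pair_overlap(networks[i], networks[j])
        for i, j in combinations(sorted(networks), 2)
    }


# Worked example: plan A lists physicians 0-299, plan B lists 100-599, so 200 are shared.
networks = {"A": range(0, 300), "B": range(100, 600)}
print(all_pair_overlaps(networks))  # roughly 33.3 percent for the pair ("A", "B")
```

Using sets means a physician listed twice in the same plan's file is counted once, which matches the idea of counting distinct physicians.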

The average number of plans contracted by physicians belonging to a given plan's network captures the degree to which a plan shares its physician panel with all other competitors in the market. For example, suppose a plan contracts with five physicians, each of whom also contracts with two other plans in the market (thus, each physician contracts with three plans in total). Then the average number of plans contracted by the plan's network physicians is three (3+3+3+3+3=15 divided by 5). A greater value on this measure might suggest less incentive for the plan to undertake quality improvement initiatives at the physician level because any benefits from such initiatives would spill over to the plan's competitors.
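The plan-level measure can be sketched the same way. This version assumes a dictionary mapping each plan in the market to its physician identifiers, and it counts the focal plan itself, so an exclusive network scores exactly 1. The names are hypothetical.

```python
from collections import Counter


def plans_per_physician(plan, networks):
    """Average number of plans (including `plan` itself) contracting with each of
    `plan`'s physicians. `networks` maps plan id -> iterable of physician ids."""
    # How many plans list each physician (duplicates within a plan count once).
    contracts = Counter(doc for members in networks.values() for doc in set(members))
    own = set(networks[plan])
    return sum(contracts[doc] for doc in own) / len(own)


# Worked example: five physicians, each also in two other plans (three plans in total).
doctors = ["d1", "d2", "d3", "d4", "d5"]
networks = {"P": doctors, "Q": doctors, "R": doctors}
print(plans_per_physician("P", networks))  # 3.0
```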

Note that the plan–pair overlap value of 0 percent corresponds to a plan-level overlap value of one plan per physician; that is, if every physician in a given plan contracts with only one plan, that implies that none of those physicians appears in any other plan's network (i.e., exclusive provider network). Given our method of constructing the overlap measure, however, a “zero overlap” can reflect one of two situations. First, it may reflect two plans in the same market using exclusive physician networks. This is most likely when either or both of the plans in a given plan pair are predominantly group or staff model HMOs.

Alternatively, it could reflect two plans operating in entirely different markets, resulting in zero overlap by definition. To assess whether our results are sensitive to this alternative definition of zero overlap, we obtained a separate set of results using a “full” sample that included all the plan pairs that do not operate in the same market, assigning an overlap value of zero for such plan pairs. Because we found no significant difference in our results from this broader definition of zero overlap, we focused only on the plan pairs that do operate in the same markets.

Plan Performance and Characteristics

We linked HEDIS and Consumer Assessment of Healthcare Providers and Systems (CAHPS) data obtained from the NCQA to the HMOs contained in the private firm's dataset for the corresponding years (i.e., 2001, 2002, and 2003). Ideally, we would have liked to observe plan performance measures at the geographical market level in order to explicitly account for the market-level factors that influence them. However, because HEDIS and CAHPS are measured at the plan level rather than at the market level (i.e., they reflect each plan's performance aggregated across all markets in which it operates), we were unable to achieve this level of detail in our dataset.

To account for the plan characteristics, we also merged in data from InterStudy's MSA Profiler and Competitive Edge. Because InterStudy and NCQA do not use common health plan identifiers, we matched the plans manually, relying on plan name and geographic service area. This resulted in a dataset of 189 health plans, representing about 66 percent of total commercial HMO enrollment in the United States as of 2001.

Our sample was more likely to include larger and older plans operating in large urban markets. We then added the 2002 and 2003 data for these plans to construct a dataset covering the 3-year period. We were unable to link approximately 20 percent of the plans in our network overlap data to the NCQA data, presumably because either these plans did not report data to NCQA or we could not find the appropriate match. Also, some plans that appeared in the 2001 data dropped out in later years, presumably due to mergers or acquisitions.

Selecting Plan Performance Measures

As dependent variables, we chose a subset of eight HEDIS and four CAHPS measures for analysis. Specifically, we focused on HEDIS measures related to breast cancer screening, adolescent immunization, and diabetes care. The selection of these measures was driven by our a priori expectation that they are more likely to be influenced by individual physician characteristics and practice styles than other available measures.

To check whether our method may pick up false associations between physician network overlap and plan performance measures, we also included the CAHPS “Claims Processing” measure as an outcome variable under the assumption that patients' assessment of plans' claims processing would depend on plans' behaviors and not on physician behaviors. We therefore expect a nonsignificant relationship between the “Claims Processing” measure and the physician overlap measure.

HEDIS Data Collection Methods

Plans could use either administrative or hybrid methods to collect the necessary data to compute their HEDIS scores. The administrative method relied on examining claims data to determine the relevant performance measures, while the hybrid method involved supplementing the claims data with chart audits of randomly selected samples of eligible enrollees. Thus, different collection methods might have led to differences in the plan performance measures (Pawlson, Scholle, and Powers 2007). Therefore, we created categorical variables to control for the differences in HEDIS collection methods in our regression analyses.

Regional Variation and Plan Enrollee Case Mix

Plan performance scores are further affected by enrollee characteristics and other unobserved market characteristics that lead to plan quality variation not attributable to plans' quality improvement activities or to physician practice patterns. To account for this, we created the following four indices: case mix, ambulatory care-sensitive conditions (ACSC), market share, and market dissimilarity index (MDI).

To capture the plan differences in terms of unobserved enrollee health status and severity of illnesses, we calculated the average enrollee prevalence rate of four chronic conditions (heart disease, diabetes, asthma, and hypertension) for each plan from the NCQA data. More specifically, for each of the chronic conditions, we obtained the percentage of each plan's total enrollee population identified as having that condition and calculated the average of the percentages to obtain our case mix index. A higher value of this index for a given plan thus indicated a sicker enrollee population for that plan.

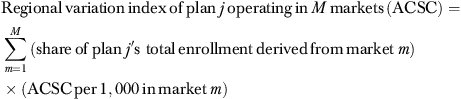
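The case mix index described above is an unweighted average of four prevalence percentages. A short Python sketch; the prevalence figures are invented for illustration and the function name is hypothetical.

```python
CONDITIONS = ("heart disease", "diabetes", "asthma", "hypertension")


def case_mix_index(prevalence_pct):
    """Unweighted mean of the four condition prevalence percentages for one plan."""
    return sum(prevalence_pct[c] for c in CONDITIONS) / len(CONDITIONS)


# Hypothetical prevalence figures (percent of enrollees) for a single plan.
plan = {"heart disease": 3.0, "diabetes": 5.0, "asthma": 8.0, "hypertension": 20.0}
print(case_mix_index(plan))  # 9.0
```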

To create an index that captures the regional variation in resource utilization that may affect plan performance measures, we merged in the Dartmouth Atlas data, which contain rich information on the variation in Medicare utilization and practice patterns across regions. Although our plan performance measures are relevant only for commercial HMOs, there is strong evidence (Needleman et al. 2003; Wennberg et al. 2004; Baker, Fisher, and Wennberg 2008) that treatment patterns and quality of care observed among the Medicare population are likely to be highly correlated with those among the general population. We based our index on hospital discharge rates for ACSC. Previous research suggests that ACSC discharge rates exhibit significant geographical variation (McCall, Harlow, and Dayhoff 2001), reflecting regional differences in utilization, access to health care, market conditions, and practice norms.

To obtain the ACSC index, we used the following formula:

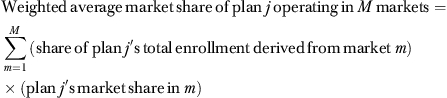

Market Characteristics

The degree to which the markets for physician practices and plans are concentrated in each locale may influence the degree of physician network overlap, possibly in ways related to provider practice patterns and quality. Moreover, the existence of a “dominant” plan in terms of local market share may induce all providers in the market to adopt certain practice styles and patterns.

Thus, to control for the effects of health plan market concentration on plan performance measures, we constructed a variable that captures how “dominant” each plan is, on average, across the markets it serves, using the following formula:

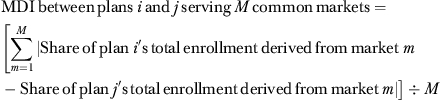
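Both the ACSC index and the market-share variable are described as averages across all markets a plan serves, but their formulas survive only as images. The sketch below assumes, as the text states for the plans-per-physician measure, that each market is weighted by the plan's share of its total enrollment there; that weighting, the market-share definition in the example, and all numbers are assumptions for illustration, not a transcription of either formula.

```python
def enrollment_weighted_mean(values_by_market, enrollment_by_market):
    """Mean of market-level values, weighting each market by the plan's share of
    its total enrollment in that market (an assumed weighting)."""
    total = sum(enrollment_by_market.values())
    return sum(
        enrollment / total * values_by_market[market]
        for market, enrollment in enrollment_by_market.items()
    )


# Hypothetical plan with 60,000 enrollees in market A and 40,000 in market B.
enrollment = {"A": 60_000, "B": 40_000}
acsc = {"A": 50.0, "B": 30.0}          # ACSC discharges per 1,000 Medicare enrollees
market_share = {"A": 0.25, "B": 0.10}  # assumed: plan's share of each market's enrollment
print(enrollment_weighted_mean(acsc, enrollment))
print(enrollment_weighted_mean(market_share, enrollment))
```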

From our data, it was not possible to directly identify all the relevant market characteristics that may impact the physician network overlap and plan performance, such as the competitiveness of the physician market. Instead, for any given plan pair, we constructed an MDI that is presumed to capture the differences in the unobserved market conditions that the plans face. MDI was calculated as

If plans i and j served the same markets and derived the exact same amount of “business” from each of those markets, MDI would be zero, indicating that the plans were subjected to identical market conditions. On the other hand, if the plans operated in different markets, MDI would approach one.
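The MDI formula itself is not reproduced in this section. Purely to illustrate an index with the two properties just described, the sketch below uses half the sum of absolute differences between the two plans' enrollment shares across markets (the classic index of dissimilarity). It is a hypothetical stand-in, not necessarily the authors' definition.

```python
def dissimilarity_index(shares_i, shares_j):
    """Half the L1 distance between two plans' enrollment-share distributions
    across markets: 0 for identical distributions, 1 for disjoint markets.
    Hypothetical stand-in for the MDI; each plan's shares should sum to 1."""
    markets = set(shares_i) | set(shares_j)
    return 0.5 * sum(abs(shares_i.get(m, 0.0) - shares_j.get(m, 0.0)) for m in markets)


print(dissimilarity_index({"A": 0.5, "B": 0.5}, {"A": 0.5, "B": 0.5}))  # 0.0
print(dissimilarity_index({"A": 1.0}, {"B": 1.0}))                      # 1.0
print(dissimilarity_index({"A": 0.5, "B": 0.5}, {"A": 1.0}))            # 0.5
```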

These indices were used as control variables in our regression models described in the next section. Refer to Table 1 for the complete list of the variables used for the analyses and their descriptions.

Table 1.

Variable Description

| Variable | Description |
|---|---|
| Dependent variables | |
| BCS | Percentage of continuously enrolled women aged 52–69 who had a mammogram during the measurement year |
| AMMR | Percentage of continuously enrolled adolescents who turned 13 during the measurement year and had a second dose of MMR immunization by their 13th birthday |
| AHB | Percentage of continuously enrolled adolescents who turned 13 during the measurement year and had three hepatitis B immunizations or a complete two-dose regimen by their 13th birthday |
| A1c Test | Percentage of continuously enrolled members with diabetes (Type 1 and Type 2) aged 18–75 who received hemoglobin A1c (HbA1c) testing during the measurement year |
| A1c Control | 100% minus the percentage of continuously enrolled members with diabetes (Type 1 and Type 2) aged 18–75 whose HbA1c was poorly controlled (>9.5%) during the measurement year, so that higher rates indicate better performance |
| Eye Exam | Percentage of continuously enrolled members with diabetes (Type 1 and Type 2) aged 18–75 who received an eye exam during the measurement year |
| Lipid Control | Percentage of continuously enrolled members with diabetes (Type 1 and Type 2) aged 18–75 whose most recent LDL-C level during the measurement year was below 130 mg/dL |
| Nephropathy | Percentage of continuously enrolled members with diabetes (Type 1 and Type 2) aged 18–75 who were monitored for nephropathy during the measurement year |
| Claims Processing | Member satisfaction rating of claims processing during the 12-month continuous enrollment period (0–100 scale) |
| Getting Care Quickly | Member satisfaction rating of how quickly patients were able to obtain care during the 12-month continuous enrollment period (0–100 scale) |
| Doctor Communication | Member satisfaction rating of how well doctors communicated with patients during the 12-month continuous enrollment period (0–100 scale) |
| Staff Helpful | Member satisfaction rating of how courteous and helpful their practitioner's office staff were during the 12-month continuous enrollment period (0–100 scale) |
| Explanatory variables | |
| Plan–Pair Overlap | Number of physicians in both plans' networks as a percentage of the number of physicians in either plan's network |
| Plans per Physician | Average number of health plans (including the plan itself) with which the physicians in a given plan contract |
| For-Profit | =1 if the plan has for-profit status; 0 if nonprofit |
| Both Plans NP | =1 if both plans have nonprofit status; 0 otherwise |
| One NP One FP | =1 if one plan has for-profit status and the other has nonprofit status; 0 otherwise |
| Both Plans FP | =1 if both plans have for-profit status; 0 otherwise (omitted category) |
| Plan Age >10 years | =1 if the plan is older than 10 years; 0 otherwise |
| Both <10 years | =1 if both plans are younger than 10 years; 0 otherwise |
| One <10 years One >10 years | =1 if one plan is older than 10 years and the other is younger than 10 years; 0 otherwise |
| Both >10 years | =1 if both plans are older than 10 years; 0 otherwise (omitted category) |
| Enrollment | Plan's total national enrollment, in hundreds of thousands |
| Staff/Group | Percentage of the plan's total enrollment in staff/group model HMOs |
| Network | Percentage of the plan's total enrollment in network model HMOs |
| IPA | Percentage of the plan's total enrollment in IPA model HMOs |
| # Model Type | Number of different HMO model types employed by the plan |
| Case Mix | Average prevalence rate of heart disease, asthma, diabetes, and hypertension among plan enrollees |
| % Medicare & Medicaid | Percentage of the plan's total enrollees enrolled in Medicare & Medicaid |
| ACSC | Average number of hospital discharges for ambulatory care-sensitive conditions per 1,000 Medicare enrollees across all markets served by the plan |
| Market Share | Average market share across all markets served by the plan |
| MDI | =0 if the plan pair serves the same markets; approaches 1 as the plans serve increasingly different markets |
| Administrative Method | =1 if the plan used the administrative data collection method only; 0 otherwise |
| Method: Both Hybrid | =1 if both plans in a plan pair used the hybrid data collection method; 0 otherwise (omitted category) |
| Method: Both Administrative | =1 if both plans in a plan pair used the administrative data collection method only; 0 otherwise |
| Method: Different | =1 if the plans in a plan pair used different data collection methods; 0 otherwise |

METHODOLOGY

To test the first hypothesis that a higher degree of physician network overlap is associated with convergence of plan performance measures between two plans, the following linear regression model was estimated:

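$$\mathrm{Diff}(\mathrm{Perform}_{ijtq}) = \beta_0 + \beta_1\,\text{Plan-Pair Overlap}_{ijt} + \beta_2'\,\mathrm{Diff}(\mathrm{Plan}_{ijt}) + \beta_3'\,\mathrm{Diff}(\mathrm{Patient}_{ijt}) + \beta_4'\,\mathrm{Diff}(\mathrm{Market}_{ijt}) + \beta_5'\,\mathrm{Year}_t + \varepsilon_{ijtq} \tag{1}$$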

where $\mathrm{Diff}(\mathrm{Perform}_{ijtq})$ denotes the absolute difference in scores on performance measure $q$ between plans $i$ and $j$ in year $t$; $\text{Plan-Pair Overlap}_{ijt}$ is the measure of physician network overlap between plans $i$ and $j$ in year $t$; $\mathrm{Diff}(\mathrm{Plan}_{ijt})$ is the vector of absolute differences in plan characteristics; $\mathrm{Diff}(\mathrm{Patient}_{ijt})$ captures the absolute difference in enrollee case mix; $\mathrm{Diff}(\mathrm{Market}_{ijt})$ denotes the absolute differences in the two plans' market characteristics; and $\mathrm{Year}_t$ is the indicator variable for year $t$.
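The dependent variable and the continuous controls in equation (1) are absolute differences computed for each pair of plans. A minimal Python sketch of building one year of the pair-level data, assuming plan variables are held in a dictionary per plan; the names and values are hypothetical, and pair-level categorical controls (such as Both Plans NP or Method: Different), which are not differences, are left out of the sketch.

```python
from itertools import combinations


def build_pair_rows(plans, overlaps):
    """One row per unordered plan pair for a single year.

    plans: plan id -> {variable name: numeric value} (performance score and covariates).
    overlaps: (i, j) with i < j -> plan-pair overlap.
    Continuous variables enter as absolute differences, as in equation (1).
    """
    rows = []
    for i, j in combinations(sorted(plans), 2):
        row = {"first": i, "second": j, "overlap": overlaps[(i, j)]}
        for var, value in plans[i].items():
            row["diff_" + var] = abs(value - plans[j][var])
        rows.append(row)
    return rows


# Hypothetical plans with a breast cancer screening score and a case mix index.
plans = {
    "P1": {"bcs": 70.0, "case_mix": 8.0},
    "P2": {"bcs": 75.0, "case_mix": 10.0},
    "P3": {"bcs": 68.0, "case_mix": 9.0},
}
overlaps = {("P1", "P2"): 40.0, ("P1", "P3"): 10.0, ("P2", "P3"): 25.0}
for row in build_pair_rows(plans, overlaps):
    print(row)
```

Sorting the plan ids fixes which plan is "first" and which is "second" in each pair, so the same pair keeps the same orientation across years.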

To test the second hypothesis that a higher degree of physician network overlap is associated with lower levels of performance at the individual plan level, the following model was estimated:

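$$\mathrm{Perform}_{itq} = \alpha_0 + \alpha_1\,(\text{Plans per Physician})_{it} + \alpha_2'\,\mathrm{Plan}_{it} + \alpha_3'\,\mathrm{Patient}_{it} + \alpha_4'\,\mathrm{Market}_{it} + \alpha_5'\,\mathrm{Year}_t + u_{itq} \tag{2}$$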

where $(\text{Plans per Physician})_{it}$ denotes the average number of health plans that the physicians in plan $i$ have contracted with, including plan $i$, and $\mathrm{Perform}_{itq}$ represents plan $i$'s performance on measure $q$ in year $t$. Other variables are defined analogously to those in equation (1).

We weighted the plans-per-physician measure to reflect the fact that plans typically operate in several markets and derive different shares of their business from each. The weight for each market was the share of the plan's total enrollment derived from that market, so markets from which a plan derives more of its business received greater weight.
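One reading of this weighting, sketched in Python: compute the average number of plans per physician within each market the plan serves, then combine the market-level averages using the plan's enrollment shares as weights. Whether contracts were counted within each market or nationally is not stated here; the sketch assumes within-market counts, and the data structures and numbers are hypothetical.

```python
from collections import Counter


def weighted_plans_per_physician(plan, networks_by_market, enrollment_by_market):
    """Enrollment-weighted mean over markets of the within-market average number of
    plans (including `plan`) that contract with each of `plan`'s physicians.

    networks_by_market: market -> {plan id -> physician ids in that market}.
    enrollment_by_market: market -> `plan`'s enrollment in that market.
    """
    total = sum(enrollment_by_market.values())
    weighted = 0.0
    for market, enrollment in enrollment_by_market.items():
        networks = networks_by_market[market]
        contracts = Counter(d for members in networks.values() for d in set(members))
        own = set(networks[plan])
        market_avg = sum(contracts[d] for d in own) / len(own)
        weighted += enrollment / total * market_avg  # weight = plan's enrollment share
    return weighted


# Hypothetical plan P: in market A its two physicians also contract with Q;
# in market B its physicians are exclusive. 75% of P's enrollment is in A.
networks_by_market = {
    "A": {"P": ["d1", "d2"], "Q": ["d1", "d2"]},
    "B": {"P": ["d3", "d4"]},
}
print(weighted_plans_per_physician("P", networks_by_market, {"A": 75, "B": 25}))  # 1.75
```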

Both equations (1) and (2) were estimated by ordinary least squares (OLS). A fixed-effects approach (i.e., including plan or plan–pair indicator variables to control for unobserved plan effects) was technically feasible. However, our data showed little variation in physician networks over time, because physician panels tend to remain stable, at least over short periods; as a result, the overlap coefficients in our fixed-effects models were generally not statistically significant. Moreover, because HEDIS and CAHPS measures were available only at the plan level, we could not include market fixed effects to capture unobserved market effects. We therefore report below only the results obtained from specifications without fixed effects.

Because the same physicians belong to multiple plans, the standard i.i.d. assumption on the error terms was likely to be violated. To account for this in equation (1), we implemented the multiway clustering method developed by Cameron, Gelbach, and Miller (2006). Because the unit of analysis in equation (1) was the plan pair, observations could be clustered in three ways: by the first plan in each pair, by the second plan in each pair, and by the plan pair itself, since each plan pair appeared up to three times in our sample given the longitudinal structure of the data.
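A compact NumPy sketch of multiway cluster-robust OLS standard errors via inclusion–exclusion, in the spirit of Cameron, Gelbach, and Miller: each non-empty subset of clustering dimensions contributes a one-way cluster "meat" computed on its intersection clusters, added for odd-sized subsets and subtracted for even-sized ones. The function names are hypothetical, no small-sample corrections are applied (statistical packages usually add them), and the simulated panel is synthetic. Multiway variance matrices are not guaranteed to be positive semidefinite in small samples.

```python
import itertools

import numpy as np


def _cluster_meat(scores, groups):
    """Sum over clusters of s_g s_g', where s_g adds up the score rows in cluster g."""
    k = scores.shape[1]
    meat = np.zeros((k, k))
    for g in np.unique(groups):
        s = scores[groups == g].sum(axis=0)
        meat += np.outer(s, s)
    return meat


def multiway_cluster_ols(X, y, cluster_dims):
    """OLS coefficients with a multiway cluster-robust covariance matrix.

    cluster_dims: list of length-n sequences of hashable cluster ids, one per dimension.
    A subset of r dimensions enters with sign (-1)^(r+1).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    scores = X * (y - X @ beta)[:, None]  # row i is x_i * e_i
    bread = np.linalg.inv(X.T @ X)
    meat = np.zeros((X.shape[1], X.shape[1]))
    d = len(cluster_dims)
    for r in range(1, d + 1):
        sign = 1.0 if r % 2 == 1 else -1.0
        for combo in itertools.combinations(range(d), r):
            # Observations share an intersection cluster if they match on every chosen dimension.
            keys = list(zip(*(cluster_dims[c] for c in combo)))
            ids = {}
            groups = np.array([ids.setdefault(key, len(ids)) for key in keys])
            meat += sign * _cluster_meat(scores, groups)
    return beta, bread @ meat @ bread


# Simulated plan-pair panel: 6 plans, 15 pairs, 3 years (all numbers are synthetic).
rng = np.random.default_rng(0)
rows = [(i, j, t) for i, j in itertools.combinations(range(6), 2) for t in range(3)]
first = np.array([i for i, _, _ in rows])
second = np.array([j for _, j, _ in rows])
pair = first * 10 + second
overlap = rng.uniform(0.0, 1.0, len(rows))
X = np.column_stack([np.ones(len(rows)), overlap])
y = 5.0 - 4.0 * overlap + rng.normal(0.0, 1.0, len(rows))
beta, vcov = multiway_cluster_ols(X, y, [first, second, pair])
```

When pairs keep a fixed orientation, a plan pair is exactly the intersection of its first and second plan, so in this setup the three-way estimate reduces algebraically to two-way clustering on the first and second plans.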

In equation (2), physician network overlap was also likely to make the error terms correlated across individual plans, with stronger correlation among plans that have larger overlap. To address this, we tried two alternative clustering approaches. In the first, we implemented simple one-way clustering by plan to capture serial correlation over time. In the second, we further grouped the plans into quartiles of the average number of plans per physician and re-estimated the models using two-way clustering. Because the first approach tended to yield slightly larger standard errors than the second, we report the results from the first approach as the more conservative estimates.

RESULTS

Table 2 shows the coefficient estimates on the measures of physician network overlap obtained from equations (1) and (2). As expected, neither equation shows a significant association between the CAHPS “Claims Processing” measure and network overlap. The coefficient on the overlap variable in equation (1) is negative for every measure and significant at the 10% level or better for nine of the twelve. This is consistent with our first hypothesis and suggests that plan performance converges as physician network overlap broadens, although the magnitude of convergence differs by performance measure.

Table 2.

Estimated Coefficients on Network Overlap Measure (SE in Parentheses)

| Performance measure | Plan–Pair Overlap (Equation (1)) | Plans per Physician (Equation (2)) |
|---|---|---|
| BCS | −4.61*** (1.07) | −0.54*** (0.15) |
| AMMR | −6.64** (2.66) | −0.95** (0.40) |
| AHB | −4.67* (2.57) | −0.40 (0.48) |
| A1c Test | −6.22 (4.00) | −0.51** (0.20) |
| A1c Control | −8.13** (3.37) | −0.77*** (0.28) |
| Eye Exam | −8.81*** (2.75) | −1.50*** (0.29) |
| Lipid Control | −5.77* (3.03) | −0.30 (0.21) |
| Diabetic Nephropathy | −9.01*** (3.48) | −1.25*** (0.24) |
| Claims Processing | −0.14 (0.89) | −0.23 (0.20) |
| Doctor Communication | −0.29 (0.42) | −0.19*** (0.06) |
| Getting Care Quickly | −1.86*** (0.65) | −0.70*** (0.12) |
| Office Staff Helpful | −0.81** (0.35) | −0.37*** (0.06) |

Notes. This table shows only the coefficient estimates on the measures of physician network overlap in the regression models. Coefficients on other covariates (including plan age, ownership status, enrollment, collection methods, etc.) were also estimated; for the complete regression results, see the Supporting Information.

***Significant at the 1% level.

**Significant at the 5% level.

*Significant at the 10% level.

The coefficient on the plans-per-physician variable from equation (2) is likewise negative for every measure and significant at the 5% level or better for nine of the twelve (the exceptions are AHB, Lipid Control, and Claims Processing). This pattern is consistent with our second hypothesis that an individual plan's performance declines as the physicians in the plan's network contract with other health plans.

Simulation

To facilitate interpretation of our regression results, we performed a series of simulation exercises based on the parameter estimates obtained from the two regression models, focusing on performance measures for which the coefficients on the overlap measures were statistically significant in both equations. Our goal was to simulate a series of policy “experiments” in which two otherwise identical hypothetical plans were subject to different levels of physician network overlap, holding all else equal. More specifically, both of the hypothetical plans were assumed to have been in operation for more than 10 years; were for-profit; and used the hybrid data collection method as of 2003. All other variables were given their mean values, as shown in the descriptive statistics table in the Supporting Information.

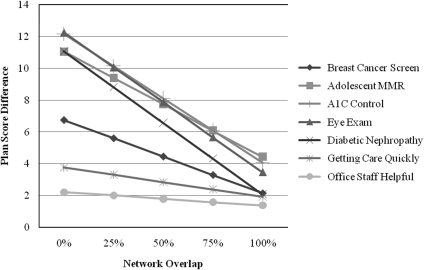

Across all seven health plan performance measures in Figure 1, the gaps in scores between the two plans narrow as network overlap increases. For some measures, appreciable differences may disappear entirely when plans share common physician panels. For diabetic nephropathy and eye exam rates in particular, moving from 0 percent to 100 percent overlap reduces the difference by about 9 percentage points.

Figure 1.

Simulated Convergence of Plan Performance Scores

At the same time, the degree of convergence is less dramatic for other measures. Moreover, the results generally indicate that the performance measures do not converge completely even when plans approach entirely overlapping physician networks. This suggests that plans do contribute to their own aggregate performance results, albeit less than would appear if physician network overlap were ignored. Note that our regression models cannot control for every plan-specific characteristic, such as a plan's unique disease management programs, that may give it a “competitive edge” over its rivals. Such unobserved plan characteristics likely account for the persistent residual differences in the plan performance measures.
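If the pairwise overlap measure enters Equation 1 linearly, the simulated narrowing maps directly onto its coefficient. Writing $g(o)$ for the predicted between-plan gap at overlap share $o \in [0, 1]$ (the symbols $\hat\alpha$, $\hat\beta$, $\bar{\mathbf{x}}$, and $\hat{\boldsymbol\gamma}$ are introduced here for illustration only), the roughly 9-percentage-point reduction reported for nephropathy and eye exam rates would correspond to:

$$
g(o) = \hat{\alpha} + \hat{\beta}\, o + \bar{\mathbf{x}}'\hat{\boldsymbol{\gamma}}, \qquad
g(0) - g(1) = -\hat{\beta} \approx 9 \text{ percentage points}
\;\Longrightarrow\; \hat{\beta} \approx -9 \text{ percentage points per unit of overlap.}
$$

Under the same assumption, the residual gap at full overlap, $g(1) = \hat{\alpha} + \hat{\beta} + \bar{\mathbf{x}}'\hat{\boldsymbol{\gamma}}$, is the portion that the paragraph above attributes to unobserved plan characteristics.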

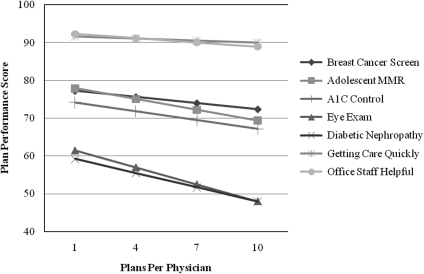

Figure 2 illustrates that the HEDIS and CAHPS measures decline as the physicians in our hypothetical plan's network contract with a greater number of other health plans. When the physicians move from an exclusive contracting relationship (i.e., one plan per physician) to contracting with as many as 10 plans, the nephropathy and eye exam plan performance measures decline by about 11–13 percentage points. This is consistent with the hypothesis that plans face a reduced incentive to improve quality when physicians are shared by multiple plans.

Figure 2.

Simulated Plan Performance Scores
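Likewise, if the plans-per-physician variable enters Equation 2 linearly with coefficient $\hat\delta$ (notation introduced here for illustration), the 11 to 13 percentage point decline described for Figure 2, when moving from 1 to 10 plans per physician, implies a per-plan effect of roughly:

$$
\hat{\delta}\,(10 - 1) \approx -11 \text{ to } -13 \text{ percentage points}
\;\Longrightarrow\; \hat{\delta} \approx -1.2 \text{ to } -1.4 \text{ percentage points per additional plan.}
$$

If the variable instead enters the model nonlinearly (for example, in logs), this back-of-the-envelope slope would not apply.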

CONCLUSION

Our results suggest that when there is overlap in health plans' physician provider networks, plan performance scores converge, generally resulting in lower values as physicians contract with multiple plans. We hypothesize that plans have less of an incentive to invest in quality when overlap is high, because returns to this investment may accrue to competing plans as well. Additionally, the investment required to improve quality may be larger when physicians are not exclusively affiliated with a single plan.
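The spillover argument can be made concrete with a stylized calculation. This is an illustration under explicit assumptions, not a model estimated in this study: a plan pays for a quality improvement at a shared physician's practice, the improvement reaches all of that physician's patients, and the investing plan values only the share of the benefit accruing to its own enrollees. The function names and the benefit and cost figures are hypothetical.

```python
def captured_benefit(total_benefit, plan_share):
    """Portion of a physician-level quality gain accruing to the investing plan.

    Stylized assumption: the gain reaches all of the physician's patients, and the
    investing plan's enrollees make up plan_share of those patients.
    """
    if not 0.0 <= plan_share <= 1.0:
        raise ValueError("plan_share must be between 0 and 1")
    return total_benefit * plan_share

def private_net_return(total_benefit, cost, n_plans):
    """Investing plan's net return when the physician's patients are split evenly across n_plans."""
    return captured_benefit(total_benefit, 1.0 / n_plans) - cost

# Hypothetical numbers: a gain worth 100 across all the physician's patients, costing 30.
for n in (1, 2, 5, 10):
    print(f"plans per physician = {n:2d}  private net return = {private_net_return(100.0, 30.0, n):6.1f}")
```

With these made-up numbers the investment pays off for the plan only when the physician contracts with fewer than about 3.3 plans (100/30), even though the total benefit exceeds the cost at every level of overlap.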

There are two practical implications of our study. First, end-users of HEDIS and CAHPS measures should be cautious about interpreting these commonly available health plan performance measures as metrics of health plan quality. Indeed, our results indicate that, if one's goal is to accurately assess the quality of care experienced by a group of consumers, the health care provider may be the more meaningful unit of observation. The current interest in provider-oriented entities such as ACOs or medical homes may help establish a more credible and useful unit for measuring the quality of care, while still allowing results to be aggregated to the plan level.

Second, as provider networks increasingly overlap, quality improvement initiatives that depend on physician participation may be more productive if sponsored at the community level rather than the individual plan level. Interestingly, this is consistent with the growing trend toward community-based approaches to quality improvement, such as the Department of Health and Human Services' Chartered Value Exchange effort and private, foundation-funded efforts such as the Robert Wood Johnson Foundation's Aligning Forces for Quality initiative (Hurley et al. 2009).

In either case, our analysis illustrates that significant overlap in provider networks can influence market outcomes. Proposals for insurance exchanges and other procompetitive strategies must recognize that overlap in physician provider networks will influence the way health plans compete, resulting in real quality differences for patients.

Acknowledgments

Joint Acknowledgment/Disclosure Statement: Funding was provided by the Agency for Healthcare Research and Quality (AHRQ) under Grant No. P01-HS10771. Daniel Maeng is supported by the Robert Wood Johnson Foundation's Aligning Forces for Quality evaluation project. Walter Wodchis is supported by a Canadian Institutes of Health Research New Investigator Award.

Disclosures: None.

Disclaimers: None.

SUPPORTING INFORMATION

Additional supporting information may be found in the online version of this article:

Appendix SA1: Author Matrix.

Table SA1: Descriptive Statistics for Variables in Equation 1 (S.D. in Parentheses)

Table SA2: Descriptive Statistics for Variables in Equation 2 (S.D. in Parentheses)

Table SA3: Regression Results for Plan Performance Convergence Analysis (Equation 1)

Table SA4: Regression Results for Plan-Level Performance Analysis (Equation 2)

Please note: Wiley-Blackwell is not responsible for the content or functionality of any supporting materials supplied by the authors. Any queries (other than missing material) should be directed to the corresponding author for the article.

REFERENCES

- Baker LC, Fisher ES, Wennberg JE. Variations in Hospital Resource Use for Medicare and Privately Insured Populations in California. Health Affairs. 2008;27(2):w123–34. doi: 10.1377/hlthaff.27.2.w123.

- Baker LC, Hopkins D, Dixon R, Rideout J, Geppert J. Do Health Plans Influence Quality of Care? International Journal for Quality in Health Care. 2004;16(1):19–30. doi: 10.1093/intqhc/mzh003.

- Beaulieu ND, Cutler DM, Ho K, Isham G, Lindquist T, Nelson A, O'Connor P. The Business Case for Diabetes Disease Management for Managed Care Organizations. Forum for Health Economics & Policy. 2006;9(1):Article 1.

- Bundorf K, Choudhry K, Baker L. The Effects of Health Plan Performance Measurement and Reporting on Quality of Care for Medicare Beneficiaries. Paper presented at the annual meeting of the Economics of Population Health: Inaugural Conference of the American Society of Health Economists; 2006.

- Cameron AC, Gelbach JB, Miller DL. Robust Inference with Multi-Way Clustering. NBER Technical Working Paper No. 327; 2006.

- Chernew ME, Wodchis WP, Scanlon DP, McLaughlin C. Overlap in HMO Physician Networks. Health Affairs. 2004;23(2):91–101. doi: 10.1377/hlthaff.23.2.91.

- Fisher ES, Staiger DO, Bynum JPW, Gottlieb DJ. Creating Accountable Care Organizations: The Extended Hospital Medical Staff. Health Affairs. 2007;26(1):w44–57. doi: 10.1377/hlthaff.26.1.w44.

- Fisher ES, Wennberg DE, Stukel TA, Gottlieb DJ, Lucas FL, Pinder EL. The Implications of Regional Variations in Medicare Spending. Part 1: The Content, Quality, and Accessibility of Care. Annals of Internal Medicine. 2003;138(4):273–87. doi: 10.7326/0003-4819-138-4-200302180-00006.

- Glied S, Zivin J. How Do Doctors Behave When Some (But Not All) of Their Patients Are in Managed Care? Journal of Health Economics. 2002;21:337–53. doi: 10.1016/s0167-6296(01)00131-x.

- Hurley RE, Keenan PS, Martsolf GR, Maeng DD, Scanlon DP. Early Experiences with Consumer Engagement Initiatives to Improve Chronic Care. Health Affairs. 2009;28(1):277–83. doi: 10.1377/hlthaff.28.1.277.

- Ma CA, McGuire TG. Network Incentives in Managed Health Care. Journal of Economics and Management Strategy. 2002;11(1):1–35.

- McCall N, Harlow J, Dayhoff D. Rates of Hospitalization for Ambulatory Care Sensitive Conditions in Medicare+Choice Population. Health Care Financing Review. 2001;22(3):127–45.

- Needleman J, Buerhaus PI, Mattke S, Stewart M, Zelevinsky K. Measuring Hospital Quality: Can Medicare Data Substitute for All-Payer Data? Health Services Research. 2003;38(6):1487–508. doi: 10.1111/j.1475-6773.2003.00189.x.

- Pawlson LG, Scholle SH, Powers A. Comparison of Administrative-Only versus Administrative Plus Chart Review Data for Reporting HEDIS Hybrid Measures. American Journal of Managed Care. 2007;13(10):547–9.

- Robinson JC. The Corporate Practice of Medicine: Competition and Innovation in Health Care. Berkeley, CA: University of California Press; 1999.

- Scanlon DP, Rolph E, Darby C, Doty HE. Are Managed Care Plans Organized for Quality? Medical Care Research and Review. 2000;57(Suppl 2):9–32. doi: 10.1177/1077558700057002S02.

- Scanlon DP, Swaminathan S, Chernew ME, Bost JE, Shevock J. Competition and Health Plan Performance: Evidence from Health Maintenance Organization Insurance Markets. Medical Care. 2005;43(4):338–46. doi: 10.1097/01.mlr.0000156863.61808.cb.

- Wennberg JE, Fisher ES, Stukel TA, Sharp SM. Use of Medicare Claims Data to Monitor Provider-Specific Performance among Patients with Severe Chronic Illness. Health Affairs. 2004;23(Variation suppl):VAR5–18. doi: 10.1377/hlthaff.var.5.
