Abstract

The medial temporal lobe (MTL), a set of heavily inter-connected structures including the hippocampus and underlying entorhinal, perirhinal and parahippocampal cortex, is traditionally believed to be part of a unitary system dedicated to declarative memory. Recent studies, however, demonstrated perceptual impairments in amnesic individuals with MTL damage, with hippocampal lesions causing scene discrimination deficits, and perirhinal lesions causing object and face discrimination deficits. The degree of impairment on these tasks was influenced by the need to process complex conjunctions of features: discriminations requiring the integration of multiple visual features caused deficits, whereas discriminations that could be solved on the basis of a single feature did not. Here, we address these issues with functional neuroimaging in healthy participants as they performed a version of the oddity discrimination task used previously in patients. Three different types of stimuli (faces, scenes, novel objects) were presented from either identical or different viewpoints. Consistent with studies in patients, we observed increased perirhinal activity when participants distinguished between faces and objects presented from different, compared to identical, viewpoints. The posterior hippocampus, by contrast, showed an effect of viewpoint for both faces and scenes. These findings provide convergent evidence that the MTL is involved in processes beyond long-term declarative memory and suggest a critical role for these structures in integrating complex features of faces, objects, and scenes into view-invariant, abstract representations.

Keywords: hippocampus, perirhinal cortex, memory, perception, fMRI

INTRODUCTION

It is well established that the medial temporal lobe (MTL) is essential for the formation of new memories (Squire et al., 2004, 2007). However, its role in processes beyond declarative memory, including high-order perception and short-term memory, remains controversial. For example, recent lesion studies in both humans and monkeys suggest that perirhinal cortex is necessary for processing complex conjunctions of object features, during both memory and perceptual tasks (Buckley et al., 2001; Bussey et al., 2002; Barense et al., 2005, 2007; Lee et al., 2005a; Taylor et al., 2007). In contrast to perirhinal cortex, the hippocampus does not seem critical for either object perception or memory (Baxter and Murray, 2001; Mayes et al., 2002; Barense et al., 2005; Saksida et al., 2006), but does seem critical for processing spatial and/or relational information, even in tasks with only perceptual or short-term mnemonic demands (e.g., Winters et al., 2004; Lee et al., 2005a; Hannula et al., 2006; Olson et al., 2006b; Hartley et al., 2007).

Importantly, the degree of impairment is influenced by the requirement to process conjunctions of object or spatial features. For example, individuals with bilateral hippocampal damage showed discrimination deficits for spatial scenes presented from different viewpoints, but performed normally if the scenes were presented from the same angle (Buckley et al., 2001; Lee et al., 2005b). By contrast, damage that included perirhinal cortex, but not hippocampus, impaired discrimination of faces presented from differing viewpoints, but spared performance when faces were presented from the same viewpoint. Functional neuroimaging of healthy participants has provided some support for these patient data (Lee et al., 2006a, 2008; Devlin and Price, 2007). However, these neuroimaging studies did not systematically investigate variations in viewpoint across different stimulus types, which have proved critical for eliciting perceptual impairments in individuals with MTL damage. Moreover, given that the perceptual object discriminations used in these studies involved common everyday items, it could be argued that the perirhinal activity reflected retrieval of a stored semantic memory for the object, rather than perceptual processing per se (Lee et al., 2006a; Devlin and Price, 2007).

The present study, therefore, used functional magnetic resonance imaging (fMRI) of healthy individuals while they performed oddity judgment for three types of trial-unique novel stimuli: objects, faces, and scenes. There were two different conditions for each stimulus type: (1) three stimuli presented from the same view, and (2) three stimuli presented from different views (Fig. 1). Stimuli presented from the same view could be discriminated by simple feature matching, whereas stimuli presented from different viewpoints required processing conjunctions of visual features. To address possible differences in difficulty, an additional size oddity baseline task was employed, the difficulty of which could be manipulated independently of conjunctive processing demands (Barense et al., 2007). We predicted that the contrast of different versus same view oddity conditions – corresponding to increased conjunctive processing demands – would elicit greater activity in perirhinal cortex for faces and objects, and greater activity in posterior hippocampus for scenes, when compared to the baseline contrast of difficult versus easy size oddity conditions.

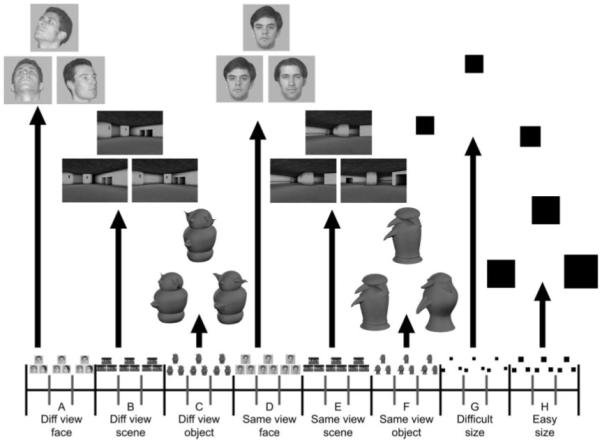

FIGURE 1.

Schematic diagram of a single block of (A) different view face oddity; (B) different view scene oddity; (C) different view object oddity; (D) same view face oddity; (E) same view scene oddity; (F) same view object oddity; (G) difficult size oddity; and (H) easy size oddity trials (for illustrative purposes, the correct answer is always the bottom right stimulus). Subjects were told that of the three pictures presented per trial, two depicted the same stimulus, whereas the third picture was of a different stimulus. They were instructed to select the different stimulus. Note that for each oddity trial the three pictures were presented simultaneously, thus minimizing mnemonic demands of the task. All stimuli were trial-unique.

METHODS

Participants

Thirty-four right-handed neurologically normal subjects were scanned in total [21 female, mean age = 23.20 yr; standard deviation (SD) = 3.71]. The data for six participants were excluded due to poor behavioral performance (performance more than two SDs below the group mean on at least one of the eight conditions). The data from one participant were excluded due to problems with his vision. The age range of the remaining 27 participants (17 female) was 18–31 yr (mean age = 23.49 yr; SD = 3.93). After the nature of the study and its possible consequences had been explained, all subjects gave informed written consent. This work received ethical approval from the Cambridgeshire Local Research Ethics Committee (LREC reference 05/Q0108/127).

Image Acquisition

The scanning was performed at the Medical Research Council Cognition and Brain Sciences Unit using a Siemens 3T TIM Trio. Three sessions were acquired for every subject. For each dataset, an echo planar imaging (EPI) sequence was used to acquire T2*-weighted image volumes with blood oxygen level dependent contrast. Because temporal lobe regions were the primary area of interest, thinner slices (32 axial-oblique slices of 2-mm thickness) were used in order to reduce susceptibility artifacts (interslice distance 0.5 mm, matrix size 64 × 64, in-plane resolution 3 × 3 mm, TR = 2,000 ms, TE = 30 ms, flip angle = 78°). The slices were acquired in an interleaved order, angled upward away from the eyeballs to avoid image ghosting of the eyes. Each EPI session was 600 s in duration, consisting of 6 dummy scans at the start to allow the MR signal to reach equilibrium, and 294 subsequent data scans. A T1 structural scan was acquired for each subject using a three-dimensional MPRAGE sequence (TR = 2,250 ms; TE = 2.99 ms; flip angle = 9°; field of view = 256 mm × 240 mm × 160 mm; matrix size = 256 × 240 × 160; spatial resolution = 1 × 1 × 1 mm).

Visual stimuli were presented during scanning with a program written using Microsoft Visual Basic 6.0 (Microsoft Corporation, Redmond, WA). The program was run on an IBM-compatible desktop computer connected to an LCD projector (1,024 × 768 pixels resolution) that projected onto a white screen situated behind the scanner subject bed. The screen could be seen with an angled mirror placed directly above the subject’s eyes in the scanner. The responses for the experimental task were made using three specified buttons on a four-button response box held in the right hand. Response times and accuracy were automatically recorded by the computer.

Experimental Paradigm

Subjects were administered a series of oddity discrimination tests, in which they were instructed to choose the unique stimulus from an array of three simultaneously presented items. They were told that of the three pictures presented per trial, two depicted the same stimulus, whereas the third picture was of a different stimulus. It is important to emphasize that for each oddity trial the three pictures were presented simultaneously, thus minimizing mnemonic demands of the task. Furthermore, to minimize mnemonic demands across trials, all stimuli were trial-unique. There were three stimulus-types of interest: unfamiliar faces, novel objects (greebles) and virtual reality scenes, plus an additional control stimulus (black squares). In addition, there was a manipulation of viewpoint, with stimuli presented from either identical or differing viewpoints. This manipulation meant that the different view conditions were not readily solvable on the basis of simple feature matching, but instead required processing multiple stimulus features. By contrast, the same view conditions were easily solvable on the basis of a single feature. There were eight conditions in total: different view face oddity, different view scene oddity, different view object oddity, same view face oddity, same view scene oddity, same view object oddity, difficult size oddity, and easy size oddity (more details below). For all eight conditions, the three images (faces, objects, scenes, or squares) were presented on a white screen with one stimulus positioned above the remaining two. Each trial lasted 6 s (5.5 s stimulus display time, 0.5 s intertrial interval), during which the participants were required to select the odd one out by pressing the corresponding button on the response box as quickly, but as accurately as possible. The location of the odd stimulus in the array was counterbalanced across each condition.

Each EPI session consisted of 96 trials divided equally into an ABCDEFGH–ABCDEFGH–ABCDEFGH–ABCDEFGH blocked design (see Fig. 1). A blocked design was utilized in order to maximize power. Each block comprised three trials of a given condition. Figure 1 illustrates a single ABCDEFGH series and a representative trial from each condition. The order of blocks was fixed within each EPI session, and the order of sessions was counterbalanced across subjects. Clear instructions and two short practice sessions (one outside and another inside the scanner) involving a different set of stimuli were administered prior to the start of scanning.
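The block structure described above can be sketched in a few lines of Python. This is only an illustrative reconstruction, not the presentation program used in the study: the function name `build_session` and the constant names are hypothetical, the condition order follows the A–H lettering of the Figure 1 caption, and onsets are measured from the first trial because the text does not state the interval between the dummy scans and the first trial.

```python
# Condition labels in the A-H order given in the Figure 1 caption.
CONDITIONS = [
    "different view face",    # A
    "different view scene",   # B
    "different view object",  # C
    "same view face",         # D
    "same view scene",        # E
    "same view object",       # F
    "difficult size",         # G
    "easy size",              # H
]
SERIES_PER_SESSION = 4   # ABCDEFGH repeated four times per session
TRIALS_PER_BLOCK = 3     # each block holds three trials of one condition
TRIAL_DURATION_S = 6.0   # 5.5 s display + 0.5 s intertrial interval
N_SESSIONS = 3


def build_session():
    """Return a list of (onset_s, condition) pairs for one EPI session.

    Onsets are relative to the first trial (an assumption; see lead-in).
    """
    trials = []
    onset = 0.0
    for _ in range(SERIES_PER_SESSION):
        for condition in CONDITIONS:
            for _ in range(TRIALS_PER_BLOCK):
                trials.append((onset, condition))
                onset += TRIAL_DURATION_S
    return trials


session = build_session()
n_trials = len(session)                      # trials per session
task_time_s = n_trials * TRIAL_DURATION_S    # task time per session
trials_per_condition = N_SESSIONS * SERIES_PER_SESSION * TRIALS_PER_BLOCK
print(n_trials, task_time_s, trials_per_condition)  # prints: 96 576.0 36
```

Under these assumptions, the 576 s of task time fits within the 294 data scans of each 600-s session, and each condition contributes 36 trials across the three sessions.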

Each condition was designed according to the following specific parameters:

Different view face oddity

On each trial, three grayscale images of human faces were presented on a gray background (256 × 256 pixels). Two of the images were of the same face taken from different viewpoints, whereas the third image was of a different face taken from another view. There were four viewpoints used in total: (1) a face looking directly ahead, (2) a face looking 45° to the left, (3) a face looking 45° to the right and tilted upward, and (4) a face looking upward. Each viewpoint was presented an equal number of times across all trials, and the position of each viewpoint in the 1 × 3 stimulus array was fully counterbalanced. A set of 72 trial-unique unfamiliar (i.e., nonfamous) male Caucasian faces (all aged 20–40 yr, with no spectacles) was used.

Same view face oddity

The same view face oddity task was identical to the different view face oddity condition, except that on every trial, all three faces were facing in the same direction (either directly ahead, 45° to the right, or 45° to the left). A set of 72 unfamiliar male Caucasian faces (all aged 20–40 yr, with no spectacles), different from those used in the different view face condition, was used. As in the different view face condition, all stimuli were trial-unique. It is important to note that the stimuli used in this condition differ from those employed in the same view face oddity conditions reported previously in nonhuman primate and patient studies (Buckley et al., 2001; Lee et al., 2005b, 2006b). Earlier studies presented the target face from one angle and the foils from differing angles. By contrast, in the present experiment the target and foil faces were all presented from the same angle. This alteration ensured that the same view face condition was more closely matched to the same view object and scene conditions (see below).

Different view scene oddity

On each trial, three grayscale images of three-dimensional virtual reality rooms were presented (460 × 309 pixels). Two of the images were of the same room taken from two different viewpoints, whereas the third image was of a different room taken from a third viewpoint. The two rooms in each trial were similar to each other but differed with respect to the size, orientation, and/or location of one or more features of the room (e.g., a window, staircase, wall cavity). A set of 72 rooms was used, created using a commercially available computer game (Deus Ex, Ion Storm L.P., Austin, TX) and a freeware software editor (Deus Ex Software Development Kit v1112f), with no room being used more than once in each session.

Same view scene oddity

The same view scene oddity condition was identical to the different view scene oddity task, except that all images were of rooms taken from the same viewpoint. Thus, two of the images were of identical rooms, whereas the third was of a different room presented from the same viewpoint. Each presented room was trial-unique.

Different view object oddity

“Greebles” were chosen because they represent a well-controlled set of novel, three-dimensional objects that are not perceived as faces except when the viewer is trained extensively (e.g., Gauthier and Tarr, 1997). None of our participants had any previous experience with greebles and thus, the greebles served as object stimuli for which participants would have little or no pre-existing semantic representation.

Three pictures of greebles were presented for each trial (320 × 360 pixels). Two of the images were of the same greeble presented from different viewpoints, whereas the third image was of a different greeble. For this condition, the greebles were always from the same family, the same gender and were of the same symmetry (i.e., asymmetrical vs. symmetrical). Within those criteria, the greebles for each trial were selected to produce the maximum amount of possible feature overlap between the odd-one-out and the foils, while also matching the difficulty of this condition to that of the different view face and scene tasks. Difficulty was equated through a series of behavioral pilot experiments. A set of 72 greebles was used, with no greeble repeated across trials.

Same view object (greeble) oddity

On the same view greeble task, the greebles were from different families. The greebles could be either the same or different gender, and be of the same or different symmetry; the different combinations of symmetry and genders were fully counterbalanced across trials. This condition was identical to the different view greeble condition in every other respect.

Difficult size oddity (baseline)

On each trial, three black squares were presented. The length of each side was randomly varied from 67 to 247 pixels, and the size of each square was trial-unique. In each trial, two of the squares were the same size, whereas the third square was either larger or smaller. The difference between the lengths of the two different sides varied between 9 and 15 pixels. The positions of squares were jittered slightly so that the edges did not line up along vertical or horizontal planes. Through pilot experiments outside the scanner, the difficulty of this condition was designed to closely match that of the different view oddity tasks.

Easy size oddity (baseline)

This condition was identical to the difficult size oddity task in every respect, except that the size differential between the different squares was greater. The length of each side was randomly varied from 40 to 268 pixels, and the difference between the lengths of the two different sides varied between 16 and 40 pixels. Through pilot experiments outside the scanner, the difficulty of this condition was designed to closely match that of the same view oddity tasks.
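As a rough illustration of the two size oddity conditions, the sketch below draws three side lengths per trial using the pixel ranges quoted above. It is not the original stimulus code: the function name `make_size_oddity_trial` is hypothetical, uniform integer sampling is assumed, both the base and the odd side are assumed to fall within the stated range, and the odd position is shuffled at random here whereas the study counterbalanced it. Position jitter and the trial-unique size constraint are omitted.

```python
import random


def make_size_oddity_trial(side_range, diff_range, rng):
    """Return (sides, odd_index): three side lengths in pixels, two equal.

    side_range: (min, max) side length; diff_range: (min, max) difference
    between the odd square and the two matching squares.
    """
    lo, hi = side_range
    d_lo, d_hi = diff_range
    while True:
        base = rng.randint(lo, hi)
        diff = rng.randint(d_lo, d_hi)
        odd = base + rng.choice((-diff, diff))  # odd square larger or smaller
        if lo <= odd <= hi:                     # assumed: odd side stays in range
            break
    sides = [base, base, odd]
    rng.shuffle(sides)                          # assumed: random odd position
    return sides, sides.index(odd)


# Pixel ranges taken from the condition descriptions above.
DIFFICULT = {"side_range": (67, 247), "diff_range": (9, 15)}
EASY = {"side_range": (40, 268), "diff_range": (16, 40)}

rng = random.Random(0)
difficult_sides, difficult_odd = make_size_oddity_trial(rng=rng, **DIFFICULT)
easy_sides, easy_odd = make_size_oddity_trial(rng=rng, **EASY)
```

The only difference between the two conditions in this sketch is the pair of ranges passed in, which mirrors the statement that the easy condition matched the difficult one in every respect except the size differential.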

Image Preprocessing

The fMRI data were preprocessed and analyzed using Statistical Parametric Mapping software (SPM5, http://www.fil.ion.ucl.ac.uk/spm/software/spm5/). Data preprocessing involved: (1) correcting all images for differences in slice acquisition time using the middle slice in each volume as a reference; (2) realigning all images with respect to the first image of the first run via sinc interpolation and creating a mean image (motion correction); (3) normalizing each subject’s structural scan to the Montreal Neurological Institute (MNI) ICBM152 T1 average brain template, and applying the resulting normalization parameters to the EPI images. The normalized images were interpolated to 2 × 2 × 2 mm voxels and smoothed with an 8-mm full-width at half-maximum isotropic Gaussian kernel (final smoothness ~12 × 12 × 11 mm).

fMRI Data Analysis

Following preprocessing, statistical analyses were first conducted at a single subject level. For each participant, each session and each condition, every trial was modeled using a regressor made from an on–off boxcar convolved with a canonical hemodynamic response function described in Friston et al. (1998). The duration of each boxcar was equal to the stimulus duration (i.e., 5.5 s). Incorrect trials and trials for which the subject failed to make a response were modeled separately as conditions of no interest. Thus, although the stimuli were presented in blocks of three stimuli, each trial was modeled separately so that incorrect trials could be excluded from the analysis. To account for residual artifacts after realignment, six estimated parameters of movement between scans (translation and rotation along x, y, and z axes) were entered as covariates of no interest. The resulting functions were then implemented in a General Linear Model (GLM), including a constant term to model session effects of no interest. The data and model were highpass filtered with a cut-off of 1/128 Hz, to remove low-frequency noise. The parameter estimates for each condition (i.e., different view face oddity, different view scene oddity, different view object oddity, same view face oddity, same view scene oddity, same view object oddity, difficult size oddity, and easy size oddity) were calculated on a voxel-by-voxel basis (Friston et al., 1995) to create one image for each subject and condition.
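To make the regressor construction concrete, the following standard-library Python sketch convolves a 5.5-s boxcar per trial with a double-gamma hemodynamic response function and samples the result once per scan. It is an approximation for illustration, not SPM5's implementation: the function names are hypothetical, the gamma shapes of 6 and 16 with a 1/6 undershoot ratio are assumed to match the canonical function the text cites, a 2-s scan interval is assumed, and the regressor is sampled at the start of each scan.

```python
import math


def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return math.exp((shape - 1) * math.log(t) - t - math.lgamma(shape))


def canonical_hrf(dt, length_s=32.0):
    """Double-gamma HRF sampled every dt seconds (assumed parameters)."""
    n = int(round(length_s / dt)) + 1
    h = [gamma_pdf(i * dt, 6.0) - gamma_pdf(i * dt, 16.0) / 6.0 for i in range(n)]
    total = sum(h)
    return [v / total for v in h]  # normalize to unit sum


def trial_regressor(onsets_s, duration_s, n_scans, tr_s, dt=0.1):
    """Boxcar (one per trial onset) convolved with the HRF, one value per scan."""
    n_fine = int(round(n_scans * tr_s / dt))
    boxcar = [0.0] * n_fine
    for onset in onsets_s:
        start = int(round(onset / dt))
        stop = int(round((onset + duration_s) / dt))
        for i in range(start, min(stop, n_fine)):
            boxcar[i] = 1.0
    hrf = canonical_hrf(dt)
    convolved = [0.0] * n_fine
    for i, b in enumerate(boxcar):
        if b:  # skip samples where the boxcar is off
            for j, h in enumerate(hrf):
                if i + j < n_fine:
                    convolved[i + j] += b * h
    step = int(round(tr_s / dt))
    return [convolved[k * step] for k in range(n_scans)]


# One block of three correct trials, 6 s apart, each displayed for 5.5 s.
regressor = trial_regressor([0.0, 6.0, 12.0], duration_s=5.5, n_scans=294, tr_s=2.0)
```

In the analysis described above, onsets of incorrect or missed trials would be passed to a separate regressor of no interest rather than to the condition regressor.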

Second-level, group analyses were conducted by entering the parameter estimates for the eight conditions for each subject into a single GLM, which treated subjects as a random effect. Within this model, three main t-contrasts were performed at every voxel (see Planned Comparisons in Results section), using a single error estimate pooled across conditions (Henson and Penny, 2003), whose nonsphericity was estimated using Restricted Maximum Likelihood, as described in Friston et al. (2002). Statistical parametric maps (SPMs) of the resulting T-statistic were thresholded after correction for multiple comparisons to a familywise error (FWE) of P < 0.05, using Random Field Theory (Worsley et al., 1995). For the MTL and other regions of interest (ROIs), these corrections were applied over volumes defined by anatomical ROIs (see below); for regions outside the MTL, the correction was applied over the whole brain. When more than one suprathreshold voxel appeared for a given contrast within a given brain region, we report the coordinate with the highest Z-score. We report multiple coordinates for a given brain region only when they are separated by a Euclidean distance of more than the 8-mm smoothing kernel. All reported stereotactic coordinates correspond to the MNI template.
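The peak-reporting rule at the end of this paragraph can be illustrated with a short Python sketch. The greedy procedure below (keep the highest-Z peak, then keep further peaks only if they lie more than 8 mm from every peak already kept) is one plausible reading of the rule rather than the authors' documented procedure, and `distinct_peaks` is a hypothetical name. The first three coordinates are the left-hemisphere peaks from Table 2; the fourth is an invented nearby peak added purely to show the exclusion.

```python
import math


def distinct_peaks(peaks, min_distance_mm=8.0):
    """Keep peaks in descending Z order, dropping any within min_distance_mm
    (Euclidean, in MNI mm) of a peak that has already been kept.

    peaks: list of (z_score, (x, y, z)) tuples.
    """
    kept = []
    for z, xyz in sorted(peaks, key=lambda p: p[0], reverse=True):
        if all(math.dist(xyz, other) > min_distance_mm for _, other in kept):
            kept.append((z, xyz))
    return kept


candidate_peaks = [
    (6.71, (-48, -64, -12)),  # Table 2, left hemisphere
    (6.18, (-44, -76, -4)),   # Table 2, left hemisphere
    (5.47, (-28, -90, -10)),  # Table 2, left hemisphere
    (5.00, (-46, -66, -12)),  # hypothetical peak about 2.8 mm from the first
]
reported = distinct_peaks(candidate_peaks)
print(len(reported))  # prints: 3
```

The three Table 2 peaks are all more than 8 mm apart (the closest pair is about 15 mm), so each would be reported separately, whereas the hypothetical fourth peak would be absorbed by its stronger neighbor.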

ROI analyses

The ROIs for the above small-volume corrections (SVCs) were defined by anatomical masks in MNI space. The perirhinal ROI was the probability map created by Devlin and Price (2007) (available at http://joedevlin.psychol.ucl.ac.uk/perirhinal.php). We included areas that had a 70% or more probability of being perirhinal cortex. The hippocampus ROI was defined based on the Anatomical Automatic Labeling atlas (Tzourio-Mazoyer et al., 2002). Further ROI analyses were conducted on the parahippocampal place area (PPA) and fusiform face area (FFA), areas known to be extensively involved in scene and face processing, respectively (Kanwisher et al., 1997; Epstein and Kanwisher, 1998), as well as the amygdala, as defined in Supporting Information. All ROIs were bilateral.

RESULTS

The following planned comparisons were performed to investigate the effect of viewpoint for each stimulus type, after removing differences related to difficulty via the difficult versus easy size oddity comparison:

Greater fMRI activity for different view faces versus same view faces relative to size difficulty control: (different view faces–same view faces) – (difficult size–easy size).

Greater fMRI activity for different view objects versus same view objects relative to size difficulty control: (different view objects–same view objects) – (difficult size–easy size).

Greater fMRI activity for different view scenes versus same view scenes relative to size difficulty control: (different view scenes–same view scenes) – (difficult size–easy size).
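The three planned comparisons above can be written as contrast weights over the eight condition estimates. The sketch below is illustrative only: the condition order and the helper name `interaction_contrast` are assumptions, not the column order of the actual design matrix.

```python
# Assumed column order of the eight condition estimates (hypothetical).
CONDITIONS = [
    "different view faces",
    "different view scenes",
    "different view objects",
    "same view faces",
    "same view scenes",
    "same view objects",
    "difficult size",
    "easy size",
]


def interaction_contrast(stimulus):
    """Weights for (different view - same view) - (difficult size - easy size)."""
    weights = dict.fromkeys(CONDITIONS, 0)
    weights[f"different view {stimulus}"] += 1
    weights[f"same view {stimulus}"] -= 1
    weights["difficult size"] -= 1
    weights["easy size"] += 1
    return [weights[c] for c in CONDITIONS]


for stimulus in ("faces", "objects", "scenes"):
    print(stimulus, interaction_contrast(stimulus))
# prints:
# faces [1, 0, 0, -1, 0, 0, -1, 1]
# objects [0, 0, 1, 0, 0, -1, -1, 1]
# scenes [0, 1, 0, 0, -1, 0, -1, 1]
```

Each weight vector sums to zero, so a positive contrast value indicates a view effect that exceeds the difficulty effect estimated from the size baseline.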

To ensure that any reliable interactions that resulted were not driven by baseline effects (i.e., interactions driven by the difficult vs. easy size comparison as opposed to the different view vs. same view comparison), we also tested the simple effect of different versus same view for each stimulus-type. To avoid selection bias in the case of the imaging data (because the interaction and simple effect contrasts are not orthogonal, any test of the simple effect in voxels that were selected for showing an interaction would be statistically biased, unless one used, as here, the same corrected statistical threshold for both contrasts), we concentrated on voxels that showed both a reliable interaction and a reliable simple effect of different versus same view that survived SVC for multiple comparisons (Fig. 2). Having said this, for completeness we also report separately (in Fig. 3) voxels in which we found both a trend toward an interaction and a trend for the different view versus same view simple effect at P < 0.001 (uncorrected).

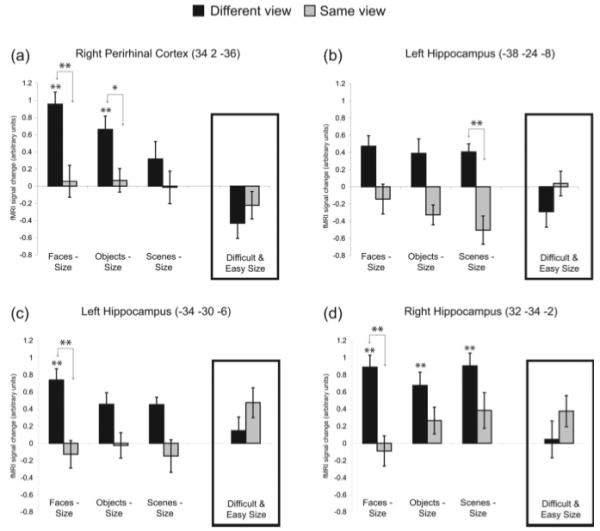

FIGURE 2.

Significant viewpoint effects. fMRI signal change relative to size baseline (i.e., difficult size was subtracted from the different view conditions and easy size was subtracted from the same view conditions) in suprathreshold voxels from the perirhinal and hippocampal regions of interest. Signal change for the difficult and easy size conditions is also shown in the inset box for each voxel. Significance is shown for the comparison of different vs. same view within each stimulus type (as indicated by arrows) or the comparison of a given condition relative to size baseline; **P < 0.05 (FWE-corrected), *P < 0.001 (uncorrected). Error bars represent standard error of mean (SEM).

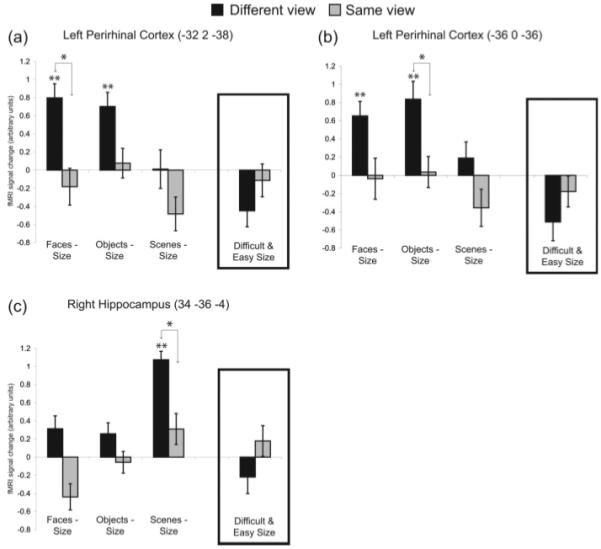

FIGURE 3.

Marginally significant viewpoint effects. fMRI signal change relative to size baseline (i.e., difficult size was subtracted from the different view conditions and easy size was subtracted from the same view conditions) in voxels from the perirhinal and hippocampal regions of interest that survive a threshold of P < 0.001 (uncorrected). Signal change for the difficult and easy size conditions is also shown in the inset box for each voxel. Significance is shown for the comparison of different vs. same view within each stimulus type (as indicated by arrows) or the comparison of a given condition relative to size baseline; **P < 0.05 (FWE-corrected), *P < 0.001 (uncorrected). Error bars represent SEM.

Finally, posthoc t-tests (FWE-corrected for multiple comparisons) were also performed for each brain region to investigate significant activity for each condition relative to its size baseline (i.e., different view conditions vs. difficult size and same view conditions vs. easy size). Given our directional hypotheses, all t-tests were one-tailed unless stated otherwise.

Behavioral Data

The accuracy and reaction time (RT) data are shown in Table 1. As planned, accuracy was lower and RTs were longer for difficult relative to easy size oddity baseline conditions, t(26)’s > 9.32; P’s < 0.001. In relation to our planned comparisons, the interaction contrasts for scenes revealed no greater difference in accuracy between different view scenes and same view scenes than between difficult versus easy size oddity conditions [i.e., t(26) = 0.87; P = 0.40, two-tailed]. In terms of RTs, the increase in RTs for different versus same view scenes was actually less than the increase for difficult versus easy size oddity [t(26) = −2.51; P < 0.05, two-tailed]. These results suggest that the corresponding planned comparison on the imaging data is not confounded by difficulty effects.

TABLE 1. Average Accuracy and Reaction Time (Correct Trials Only) Scores for Each Condition (Standard Deviations Shown in Parentheses).

| | Different view faces | Different view objects | Different view scenes | Difficult size | Same view faces | Same view objects | Same view scenes | Easy size |
|---|---|---|---|---|---|---|---|---|
| Proportion correct | 0.75 (0.08) | 0.74 (0.10) | 0.81 (0.08) | 0.82 (0.09) | 0.98 (0.03) | 0.99 (0.02) | 0.95 (0.05) | 0.98 (0.03) |
| Reaction times (ms) | 2,797 (425) | 3,163 (398) | 3,295 (380) | 2,259 (485) | 1,741 (317) | 1,797 (315) | 2,879 (338) | 1,677 (363) |

In contrast to pilot experiments performed outside the scanner, the planned comparisons for faces and objects showed that the decrease in accuracy for different versus same view conditions was greater than the decrease for difficult versus easy size oddity conditions [t(26)’s < −3.17; P’s < 0.005, two-tailed]. The increase in RTs for different versus same view conditions was also greater than that for difficult versus easy size oddity conditions [t(26)’s > 8.5; P’s < 0.001, two-tailed]. Although these results suggest that the different view face and object conditions were, contrary to expectations, more difficult than the difficult size oddity control [as confirmed by posthoc pairwise t-tests, t(26)’s > 2.7; P’s < 0.05, two-tailed], further considerations suggest that the imaging data for face and object conditions are unlikely to be confounded by difficulty (see Discussion).
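The direction of these behavioral interactions can be checked descriptively from the group means in Table 1. The sketch below applies the difference-of-differences logic to those means; it cannot reproduce the reported t-tests, which require per-subject data, and the helper name `difference_of_differences` is hypothetical.

```python
# Group means copied from Table 1.
ACCURACY = {
    "different view faces": 0.75, "different view objects": 0.74,
    "different view scenes": 0.81, "difficult size": 0.82,
    "same view faces": 0.98, "same view objects": 0.99,
    "same view scenes": 0.95, "easy size": 0.98,
}
RT_MS = {
    "different view faces": 2797, "different view objects": 3163,
    "different view scenes": 3295, "difficult size": 2259,
    "same view faces": 1741, "same view objects": 1797,
    "same view scenes": 2879, "easy size": 1677,
}


def difference_of_differences(means, stimulus):
    """(different view - same view) - (difficult size - easy size)."""
    view_effect = means[f"different view {stimulus}"] - means[f"same view {stimulus}"]
    size_effect = means["difficult size"] - means["easy size"]
    return view_effect - size_effect


for stimulus in ("faces", "objects", "scenes"):
    accuracy_interaction = round(difference_of_differences(ACCURACY, stimulus), 2)
    rt_interaction = difference_of_differences(RT_MS, stimulus)
    print(stimulus, accuracy_interaction, rt_interaction)
```

On the group means, the RT interaction is 474 ms for faces, 784 ms for objects, and −166 ms for scenes, and the accuracy interaction is negative for faces and objects but slightly positive for scenes, which matches the signs of the t-statistics reported in this section.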

Imaging Data

Effects of viewpoint: ROI analyses

To investigate our predictions, SVC analyses were performed on our two primary anatomical ROIs: bilateral perirhinal cortex and bilateral hippocampi. Local maxima surviving a threshold of P < 0.05 (FWE-corrected for multiple comparisons) within each region for the three planned comparisons of viewpoint effects (listed above) are reported below. We also report posthoc t-tests (FWE-corrected for multiple comparisons) for each condition relative to its size baseline (i.e., different view conditions vs. difficult size and same view conditions vs. easy size). For the perirhinal and hippocampal ROIs, the minimum Z-score required to survive the P < 0.05 (FWE-corrected for multiple comparisons) threshold was 3.4 and 3.5, respectively.

Perirhinal cortex

The planned interaction contrast for faces revealed viewpoint effects (i.e., more activity for different than same view conditions relative to their respective size baseline conditions) in the right perirhinal cortex [34, 2, −36, Z = 3.8] (Fig. 2a). The same voxel showed reliably greater activity for different than same views of faces alone (i.e., the simple effect also survived correction, Z = 4.0). Notably, this voxel also survived P < 0.001 (uncorrected) for the object interaction contrast (Z = 3.1). Posthoc, pairwise tests (FWE-corrected) relative to the size oddity baselines showed significant activity for different view objects and faces (Z’s > 4.3), but not for any other condition.

There were no voxels that survived an FWE correction of P < 0.05 for the objects or scenes planned comparison. However, when we lowered the threshold to P < 0.001 (uncorrected) we found a significant effect of viewpoint for faces and objects in two left perirhinal voxels [−32, 2, −38, Z = 3.39 and −36, 0, −36, Z = 3.3 for faces and objects, respectively] (Figs. 3a,b). Posthoc, pairwise tests (FWE-corrected) relative to the size oddity baselines showed significant activity for different view faces and objects in both voxels (Z’s > 3.5). We also performed the reverse contrast for each stimulus type [i.e., (same view–different view) – (easy size–difficult size)] and found no suprathreshold voxels.

Hippocampus

The planned interaction contrast for scenes showed a significant effect of viewpoint in the left hippocampus [−38, −24, −8, Z = 4.3] (Fig. 2b). The same voxel showed reliably greater activity for different than same views of scenes alone (i.e., the simple effect also survived correction, Z = 3.7). In addition, the planned interaction contrast for scenes revealed two voxels at the boundary between the hippocampus and thalamus [−14, −32, 10, Z = 3.9 and 12, −34, 10, Z = 4.1]. When we lowered the threshold to P < 0.001 (uncorrected), we observed a significant effect of viewpoint for scenes in the right posterior hippocampus [34, −36, −4, Z = 3.2] (Fig. 3c). Posthoc, pairwise tests (FWE-corrected) relative to the size oddity baselines showed significant activity in this voxel for different view scenes (Z = 6.3), but not for any other condition.

The planned interaction contrast for faces revealed voxels of significant activity in the left [−34, −30, −6, Z = 4.0] and right [32, −34, −2, Z = 4.1] hippocampus (Figs. 2c,d). These same voxels showed reliably greater activity for different than same views of faces alone (Z’s > 3.5). Posthoc pairwise tests (FWE-corrected) relative to the size oddity baselines revealed significant activity for the different view conditions of faces in both hippocampal voxels, scenes and objects in the right voxel (Z’s > 4.2), and a trend for scenes and objects in the left voxel (Z’s > 3.3).

There were no voxels in the hippocampus that survived an FWE correction for the objects planned comparison. We also performed the reverse contrast for each stimulus type [i.e., (same view–different view) – (easy size–difficult size)] and found no suprathreshold voxels.

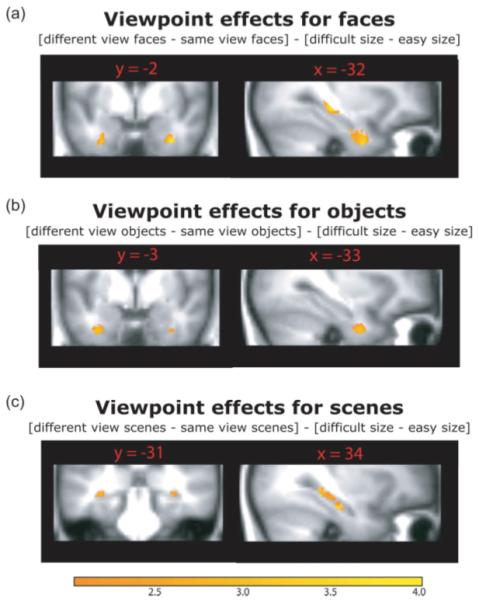

To show the spatial extent of these perirhinal and hippocampal activations, Figure 4 displays statistical maps for each of the three planned comparisons using a liberal threshold of P < 0.01 uncorrected. Statistical maps are superimposed on the mean structural image for all participants in the present study. An SVC was applied based on a mask image created by combining the perirhinal and hippocampal ROIs above.

FIGURE 4.

Regions of MTL activity in the whole-brain analysis of viewpoint for each of the three a priori contrasts. To show the spatial extent of the activations, these maps were thresholded at P < 0.01 (uncorrected). Statistical maps are superimposed on the mean structural image for all participants in the present study and the color bar reflects t-values. [Color figure can be viewed in the online issue which is available at www.interscience.wiley.com.]

Amygdala, PPA, and FFA

Results from ROI analyses for the PPA, FFA, and amygdala are reported in Supporting Information. It is noteworthy that the viewpoint effects reported for the perirhinal cortex and hippocampus were not observed in the amygdala, indicating that the observed results were not common to all MTL structures.

Effects of viewpoint: Whole-brain analysis

The same three planned comparisons were also performed on a voxel-by-voxel basis, to investigate brain regions outside the MTL showing any viewpoint effects. For maxima outside the MTL, a threshold of P < 0.05, two-tailed and FWE-corrected for the whole brain, was applied. The results for faces and objects are listed in Tables 2 and 3 (no regions outside the MTL showed viewpoint effects for scenes). All tables list regions of significant BOLD signal change outside the MTL for the specified contrast, ordered from anterior to posterior regions in the brain. Brodmann areas (BA), P and Z values, and stereotaxic coordinates (in mm) of peak voxels in MNI space are given. A threshold of P < 0.05 corrected for multiple comparisons via familywise error (FWE) using Random Field Theory (Worsley et al., 1995) was applied.

TABLE 2. View Effect Outside the MTL for Faces: Different View > Same View (With Size Difficulty Control): (Different View Faces–Same View Faces) – (Difficult Size–Easy Size).

| Brain region | BA | P (FWE) | Z value | x | y | z |
|---|---|---|---|---|---|---|
| Left hemisphere | | | | | | |
| Inferior occipital gyrus | 37 | <0.001 | 6.71 | −48 | −64 | −12 |
| Inferior occipital gyrus | 19 | <0.001 | 6.18 | −44 | −76 | −4 |
| Inferior occipital gyrus | 18 | 0.001 | 5.47 | −28 | −90 | −10 |
| Cerebellum | | <0.001 | 5.78 | −12 | −74 | −42 |
| Right hemisphere | | | | | | |
| Inferior temporal gyrus | 37 | <0.001 | 6.34 | 50 | −64 | −12 |
| Inferior occipital gyrus | 19 | 0.001 | 5.45 | 44 | −76 | −2 |
| Inferior occipital gyrus | 18 | 0.003 | 5.18 | 30 | −92 | −12 |
| Cerebellum | | <0.001 | 5.78 | 4 | −72 | −26 |

There were no suprathreshold clusters for the reverse contrast: (Same view faces–Different view faces) – (Easy size–Difficult size).

TABLE 3. View Effect Outside the MTL for Objects: Different View > Same View (With Size Difficulty Control): (Different View Objects–Same View Objects) – (Difficult Size–Easy Size).

| Brain region | BA | P (FWE) | Z value | x | y | z |
|---|---|---|---|---|---|---|
| Left hemisphere | | | | | | |
| Insula | 48 | 0.003 | 5.21 | −38 | 0 | 2 |
| Precentral gyrus | 48 | 0.023 | 4.72 | −46 | −4 | 26 |
| Superior temporal gyrus | 20 | 0.011 | 4.89 | −40 | −22 | −8 |
| Cerebellum | | 0.009 | 4.94 | −8 | −58 | −8 |
| Middle temporal gyrus | 37 | 0.013 | 4.86 | −50 | −66 | 0 |
| Right hemisphere | | | | | | |
| Inferior frontal gyrus, opercular part | 6 | 0.011 | 4.88 | 56 | 8 | 16 |
| Precentral gyrus | 43 | 0.008 | 4.96 | 58 | 2 | 24 |
| Insula | 48 | <0.001 | 5.94 | 40 | −2 | 8 |
| Lingual gyrus | 18 | 0.022 | 4.73 | 14 | −68 | 0 |

There were no suprathreshold clusters for the reverse contrast: (Same view objects–Different view objects) – (Easy size–Difficult size).

There were no suprathreshold clusters outside the MTL for either contrast involving scenes [i.e., (Different view scenes–Same view scenes) – (Difficult size–Easy size) or (Same view scenes–Different view scenes) – (Easy size–Difficult size)].

Finally, although nothing survived correction for either the whole brain or for the MTL in the comparison of difficult versus easy size oddity conditions, there were maxima showing greater activity at P < 0.001 (uncorrected) for the difficult size oddity condition in bilateral insula and right lateral frontal cortex, regions that have previously been associated with greater “effort” (Duncan and Owen, 2000).
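For orientation, the uncorrected thresholds quoted in this section can be related to approximate Z cutoffs. The sketch below is a rough guide under stated assumptions: it uses a one-tailed standard normal approximation, and the helper names `z_cutoff` and `one_tailed_p` are introduced here for illustration. It does not reproduce FWE-corrected thresholds, which depend on the search volume and image smoothness.

```python
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def z_cutoff(p_uncorrected):
    """One-tailed Z cutoff for an uncorrected p threshold (assumed one-tailed)."""
    return STANDARD_NORMAL.inv_cdf(1.0 - p_uncorrected)


def one_tailed_p(z):
    """One-tailed p value for a given Z score under the standard normal."""
    return 1.0 - STANDARD_NORMAL.cdf(z)


for p in (0.01, 0.001):
    print(f"P < {p} (uncorrected): Z > {z_cutoff(p):.2f}")
```

Under these assumptions the cutoffs come out at roughly Z = 2.33 for P < 0.01 and Z = 3.09 for P < 0.001.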

Stimulus specificity

In addition to the above planned comparisons, we also conducted further posthoc tests to determine whether we observed viewpoint effects that were greater for one stimulus type over another. There were six possible comparisons: (1) viewpoint effects for faces–viewpoint effects for scenes [i.e., (different view faces–same view faces) – (different view scenes–same view scenes)], (2) viewpoint effects for objects–viewpoint effects for scenes, (3) viewpoint effects for scenes–viewpoint effects for faces, (4) viewpoint effects for scenes–viewpoint effects for objects, (5) viewpoint effects for faces–viewpoint effects for objects, (6) viewpoint effects for objects–viewpoint effects for faces. Based on neuropsychological studies, we predicted greater viewpoint effects in the perirhinal cortex for faces and objects relative to scenes, and greater viewpoint effects in the hippocampus for scenes relative to faces and objects (Lee et al., 2005b, 2006b; Barense et al., 2007).
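The six pairwise comparisons listed above follow mechanically from the three stimulus types. The sketch below enumerates them as contrast weights over six conditions; the condition order and the function name `viewpoint_difference` are assumptions made for illustration, not the authors' design matrix.

```python
from itertools import permutations

STIMULI = ["faces", "scenes", "objects"]
VIEWS = ["different", "same"]

# Hypothetical condition order: every (stimulus, view) pair.
CONDITIONS = [(stim, view) for stim in STIMULI for view in VIEWS]


def viewpoint_difference(stim_a, stim_b):
    """Weights for (different_a - same_a) - (different_b - same_b)."""
    weights = []
    for stim, view in CONDITIONS:
        sign = 1 if view == "different" else -1
        if stim == stim_a:
            weights.append(sign)
        elif stim == stim_b:
            weights.append(-sign)
        else:
            weights.append(0)  # the third stimulus type is not involved
    return weights


# All ordered pairs of distinct stimulus types: 3 * 2 = 6 comparisons.
comparisons = {
    (a, b): viewpoint_difference(a, b) for a, b in permutations(STIMULI, 2)
}

for (a, b), weights in comparisons.items():
    print(f"{a} viewpoint effect > {b} viewpoint effect: {weights}")
```

Each comparison and its opposite (for example, faces versus scenes and scenes versus faces) have negated weights, which is why they are reported as separate directional tests.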

Face viewpoint effects > Scene viewpoint effects and Object viewpoint effects > Scene viewpoint effects

As predicted, we observed a greater effect of viewpoint for faces than for scenes in the perirhinal cortex (34, −2, −36, Z = 3.6; P < 0.05 FWE-corrected for multiple comparisons). In addition, we also observed a voxel that showed a greater viewpoint effect for faces than for scenes in the posterior hippocampus (−22, −32, −2, Z = 6.28; P < 0.05 FWE-corrected for multiple comparisons). There were no voxels in either the perirhinal cortex or hippocampus that showed suprathreshold activity for object viewpoint effects relative to scene viewpoint effects.

Scene viewpoint effects > Object viewpoint effects and Scene viewpoint effects > Face viewpoint effects

We identified a region at the boundary of the right hippocampus and thalamus that showed a greater viewpoint effect for scenes than for objects at a threshold of P < 0.05 FWE-corrected for multiple comparisons (14, −36, 10; Z = 3.6). When we lowered the threshold to P < 0.001 (uncorrected), we observed an additional region in the right hippocampus that showed a greater viewpoint effect for scenes than for objects (34, −38, −4, Z = 3.0). When viewpoint effects for scenes were compared to those observed for faces, we did not find any voxels in the hippocampus that showed greater scene viewpoint effects at a threshold of P < 0.05 FWE-corrected for multiple comparisons. When we lowered the threshold to P < 0.001 (uncorrected), we observed a voxel at the boundary of the right hippocampus and thalamus that showed a greater viewpoint effect for scenes than for faces (−12, −34, 10, Z = 3.1). There were no suprathreshold voxels showing greater viewpoint effects for scenes relative to faces or objects in the perirhinal cortex.

Face viewpoint effects > Object viewpoint effects and Object viewpoint effects > Face viewpoint effects

When viewpoint effects for faces were compared to those observed for objects, we identified a voxel in the right posterior hippocampus that showed greater viewpoint effects for faces (−26, −36, 2; Z = 3.5; P < 0.05 FWE-corrected for multiple comparisons). There were no suprathreshold voxels in the hippocampus that showed greater viewpoint effects for objects than for faces. Within the perirhinal cortex, there were no voxels that showed greater viewpoint effects for faces relative to objects, and vice versa.

DISCUSSION

Nonhuman primates and humans with perirhinal cortex damage have demonstrated discrimination impairments involving complex objects and faces presented from different viewpoints (Buckley et al., 2001; Barense et al., 2005, 2007; Lee et al., 2006b), whereas humans with hippocampal damage have shown selective deficits in discriminations involving different viewpoints of scenes (Lee et al., 2005b, 2006b). To provide convergent evidence, the present study used fMRI to scan healthy subjects while they made oddity judgments for various types of trial-unique stimuli. Consistent with both of our predictions from patient studies, when the discrimination increased the demand for processing conjunctions of features (i.e., different viewpoint conditions compared to same viewpoint conditions) we observed (1) increased perirhinal activity for judgments involving faces and objects and (2) increased posterior hippocampal activity for judgments involving scenes. In addition to these predicted findings, we also observed a significant effect of viewpoint for faces in the posterior hippocampus.

It is important to note that the experimental paradigm did not place an explicit demand on long-term memory. Within each trial the stimuli were simultaneously presented, and across trials the stimuli were trial-unique. Moreover, unlike in previous studies, the object stimuli were novel, thus ruling out an explanation that the activity is due to the retrieval of a stored long-term semantic memory for the object. These findings, when considered in combination with the discrimination deficits observed in patients after damage to these regions, provide strong evidence that MTL structures are responsible for integrating complex visual features comprising faces and objects—even in the absence of long-term memory demands. Furthermore, when taken together, these neuroimaging and patient findings suggest that there may be some degree of differential sensitivity, with the perirhinal cortex more involved in processing faces and possibly objects (relative to scenes) (see also Litman et al., 2009) and the posterior hippocampus more involved in processing scenes (relative to objects).

Although our favored interpretation is that the present MTL activity reflects perceptual processing, it might also be explicable in terms of very short-term memory across saccades between stimuli within a trial (Hollingworth et al., 2008). Several studies have reported impairments of short-term memory for stimulus conjunctions in amnesia (Hannula et al., 2006; Nichols et al., 2006; Olson et al., 2006a,b, although see Shrager et al., 2008), and fMRI studies have observed hippocampal activity in tasks typically considered to assess short-term memory (Ranganath and D’Esposito, 2001; Cabeza et al., 2002; Park et al., 2003; Karlsgodt et al., 2005; Nichols et al., 2006). This hypothesis is difficult to refute: if memory across saccades is considered the province of short-term memory, it is not immediately obvious how one could test perception of complex stimuli with a large number of overlapping features. More importantly, however, both our account and the short-term memory account of the present data force a revision of the prevailing view of MTL function by extending its function beyond long-term memory and by considering different functional contributions from different MTL structures.

Another potential interpretation of the present findings is that they reflect incidental encoding into long-term memory, rather than perceptual processing per se. Although it is not possible to disprove this hypothesis, there are reasons to believe that encoding is not exclusively responsible for our results. First, as mentioned above, patient studies have indicated that perirhinal and hippocampal regions are necessary to perform the face/object and scene discriminations, respectively. Second, a basic encoding explanation would predict MTL activity for our same view conditions (relative to our size oddity), which we did not find (Figs. 2 and 3). Third, a study using a similar oddity paradigm provided no evidence that activity in either perirhinal cortex, or in anterior hippocampus, is modulated by encoding (Lee et al., 2008). In this study, oddity discriminations were repeated across three sessions. Critically, the magnitude of activity in perirhinal cortex and anterior hippocampus remained similar irrespective of whether the stimuli were novel (in the first session) or familiar (in second or third sessions). If activity in these regions were modulated by encoding, it would be expected to decrease with stimulus repetitions. Finally, it is important to note that the proposal that the patterns of MTL activity in the current study are a correlate of perceptual processes and the proposal that they reflect incidental encoding are not mutually exclusive. There is no obvious reason why the same mechanism could not underlie both accounts: the stronger the perceptual representation, the greater the likelihood of successful memory encoding.

Finally, one might argue that the observed MTL effects are due to the fact that our different view oddity conditions were generally more “difficult,” as indicated by lower accuracy and/or longer RTs. In other words, our fMRI activations could reflect a generic concept like cognitive effort rather than perceptual processing. We included difficult and easy versions of a size oddity task, which do not require conjunctive processing, precisely to address this concern (as in Barense et al., 2007). In pilot behavioral experiments, we manipulated the squares’ sizes to match accuracy and RTs to the different and same view conditions. Unfortunately, while behavioral performance in the scanner was reasonably matched for scenes, it was not for faces or objects. Fortunately, there are several aspects of our analysis and results that argue strongly against a “difficulty” explanation. First, we analyzed correct trials only, discounting any explanation in terms of proportion of errors. Second, there was less activity for difficult than easy size conditions in all MTL ROIs, which is incompatible with a difficulty account (e.g., indicating that the greater MTL activity for the different than same view conditions cannot relate to the longer RTs per se). Third, a difficulty account could not explain the activity in posterior hippocampus related to scene processing, for which behavioral performance was matched with the size oddity conditions, nor the lack of reliable activity in perirhinal cortex related to scene processing, given that behavioral performance was worse in the different than same view scene condition. Finally, it is hard to see how a single “difficulty” explanation could explain the apparent anatomical differences between MTL structures in terms of conjunctive processing of faces and objects versus conjunctive processing of scenes.

It is interesting to consider how our claim that the perirhinal cortex and hippocampus support complex conjunctive processing for faces/objects and scenes respectively relates to other cognitive functions that have been attributed to these structures. Dual-process theories of recognition memory suggest that the perirhinal cortex mediates familiarity-based memory, whereas the hippocampus plays a critical role in recollective aspects of recognition (e.g., Aggleton and Brown, 1999). Another account proposes that memory for relationships between perceptually distinct items (i.e., relational memory) is dependent on the hippocampus (e.g., Eichenbaum et al., 1994), with the perirhinal cortex important for item-based memory. Our findings are not incompatible with these ideas. For example, it has been shown that familiarity-based memory was enhanced when participants were required to process stimulus configurations holistically through a unitization/integration process, effects which were localized outside the hippocampus (Mandler et al., 1986; Yonelinas et al., 1999; Giovanello et al., 2006; Quamme et al., 2007). It seems plausible that these processes and the feature integration processes assessed by the face and object discriminations in the current study may tax a similar perirhinal-based mechanism. By contrast, discrimination of complex scenes would require processing relations/associations between the various elements comprising the scene, which may require the relational/associative processes that have been attributed to the hippocampus.

Contrary to our predictions, in the hippocampus we also observed significant effects of viewpoint for faces, and significant activity for different view conditions of faces and objects relative to the difficult size baseline. Put differently, one could argue that the hippocampus showed a main effect of viewpoint, which cannot be attributed to difficulty, but only slight evidence of differential activity across the different stimulus types. This finding is inconsistent with the intact performance of patients with selective hippocampal damage on nearly identical oddity discriminations involving objects (Barense et al., 2007) and faces (Lee et al., 2005b). One potential explanation comes from a series of studies that have highlighted a hippocampal-based comparator mechanism (i.e., a match–mismatch signal) underlying associative novelty detection (Kumaran and Maguire, 2006a,b, 2007). It is possible that such a mechanism was operating in the present study—as a participant compared different stimuli within a trial, the hippocampus signaled a mismatch to the odd-one-out. It is important to note, however, that such an explanation would require the putative hippocampal comparator mechanism to be operating at extremely short (trans-saccadic) delays. Furthermore, given the intact performance of patients with hippocampal damage on this face and object oddity task, although this activity may be automatic, it does not appear necessary to support normal performance for the different view face and object oddity discriminations.

In summary, the present experiment adds to a growing body of evidence suggesting that the various structures within the MTL do not constitute a unitary system specialized for long-term declarative memory. Our observation that the perirhinal cortex and posterior hippocampus were more active for discriminations involving novel stimuli presented from different viewpoints suggests a role for these structures in integrating the complex features comprising objects, faces, and scenes into view-invariant abstract representations. Moreover, when considered in combination with neuropsychological findings in patients with damage to these regions, there appears to be some degree of differential sensitivity, with the perirhinal cortex more responsive to viewpoint changes involving faces than scenes and the posterior hippocampus more responsive to viewpoint changes involving scenes than objects. These processes are not restricted to the domain of long-term declarative memory, but are engaged during tasks involving scenes, faces, and objects, irrespective of the demands placed on mnemonic processing (Barense et al., 2005, 2007; Lee et al., 2005a,b), short-term working memory (Hannula et al., 2006; Olson et al., 2006a), long-term declarative memory (Pihlajamaki et al., 2004; Taylor et al., 2007), and nondeclarative memory (Chun and Phelps, 1999; Graham et al., 2006).

Acknowledgments

The authors thank Michael J. Tarr (Brown University, Providence, RI) for providing the greeble stimuli and Jessica A. Grahn and Christian Schwarzbauer for technical assistance.

Grant sponsor: Medical Research Council; Grant number: WBSE U.1,055.05.012.00001.01; Grant sponsor: Wellcome Trust Research Career Development Fellowship; Grant number: 082315; Grant sponsor: Peterhouse Research Fellowship.

Footnotes

Additional Supporting Information may be found in the online version of this article.

REFERENCES

- Aggleton JP, Brown MW. Episodic memory, amnesia, and the hippocampal-anterior thalamic axis. Behav Brain Sci. 1999;22:425–444. discussion 444–489.

- Barense MD, Bussey TJ, Lee ACH, Rogers TT, Davies RR, Saksida LM, Murray EA, Graham KS. Functional specialization in the human medial temporal lobe. J Neurosci. 2005;25:10239–10246. doi: 10.1523/JNEUROSCI.2704-05.2005.

- Barense MD, Gaffan D, Graham KS. The human medial temporal lobe processes online representations of complex objects. Neuropsychologia. 2007;45:2963–2974. doi: 10.1016/j.neuropsychologia.2007.05.023.

- Baxter MG, Murray EA. Opposite relationship of hippocampal and rhinal cortex damage to delayed nonmatching-to-sample deficits in monkeys. Hippocampus. 2001;11:61–71. doi: 10.1002/1098-1063(2001)11:1<61::AID-HIPO1021>3.0.CO;2-Z.

- Buckley MJ, Booth MC, Rolls ET, Gaffan D. Selective perceptual impairments after perirhinal cortex ablation. J Neurosci. 2001;21:9824–9836. doi: 10.1523/JNEUROSCI.21-24-09824.2001.

- Bussey TJ, Saksida LM, Murray EA. Perirhinal cortex resolves feature ambiguity in complex visual discriminations. Eur J Neurosci. 2002;15:365–374. doi: 10.1046/j.0953-816x.2001.01851.x.

- Cabeza R, Dolcos F, Graham R, Nyberg L. Similarities and differences in the neural correlates of episodic memory retrieval and working memory. Neuroimage. 2002;16:317–330. doi: 10.1006/nimg.2002.1063.

- Chun MM, Phelps EA. Memory deficits for implicit contextual information in amnesic subjects with hippocampal damage. Nat Neurosci. 1999;2:844–847. doi: 10.1038/12222.

- Devlin JT, Price CJ. Perirhinal contributions to human visual perception. Curr Biol. 2007;17:1484–1488. doi: 10.1016/j.cub.2007.07.066.

- Duncan J, Owen AM. Common regions of the human frontal lobe recruited by diverse cognitive demands. Trends Neurosci. 2000;23:475–483. doi: 10.1016/s0166-2236(00)01633-7.

- Eichenbaum H, Otto T, Cohen NJ. Two functional components of the hippocampal memory system. Behav Brain Sci. 1994;17:449–518.

- Epstein R, Kanwisher N. A cortical representation of the local visual environment. Nature. 1998;392:598–601. doi: 10.1038/33402.

- Friston K, Holmes AP, Worsley KJ, Poline JB, Frith CD, Frackowiak RS. Statistical parametric maps in functional imaging: A general linear approach. Hum Brain Mapp. 1995;2:189–210.

- Friston KJ, Fletcher P, Josephs O, Holmes A, Rugg MD, Turner R. Event-related fMRI: Characterizing differential responses. Neuroimage. 1998;7:30–40. doi: 10.1006/nimg.1997.0306.

- Friston KJ, Penny W, Phillips C, Kiebel S, Hinton G, Ashburner J. Classical and Bayesian inference in neuroimaging: Theory. Neuroimage. 2002;16:465–483. doi: 10.1006/nimg.2002.1090.

- Gauthier I, Tarr MJ. Becoming a “Greeble” expert: Exploring mechanisms for face recognition. Vision Res. 1997;37:1673–1682. doi: 10.1016/s0042-6989(96)00286-6.

- Giovanello KS, Keane MM, Verfaellie M. The contribution of familiarity to associative memory in amnesia. Neuropsychologia. 2006;44:1859–1865. doi: 10.1016/j.neuropsychologia.2006.03.004.

- Graham KS, Scahill VL, Hornberger M, Barense MD, Lee AC, Bussey TJ, Saksida LM. Abnormal categorization and perceptual learning in patients with hippocampal damage. J Neurosci. 2006;26:7547–7554. doi: 10.1523/JNEUROSCI.1535-06.2006.

- Hannula DE, Tranel D, Cohen NJ. The long and the short of it: Relational memory impairments in amnesia, even at short lags. J Neurosci. 2006;26:8352–8359. doi: 10.1523/JNEUROSCI.5222-05.2006.

- Hartley T, Bird CM, Chan D, Cipolotti L, Husain M, Vargha-Khadem F, Burgess N. The hippocampus is required for short-term topographical memory in humans. Hippocampus. 2007;17:34–48. doi: 10.1002/hipo.20240.

- Henson RNA, Penny WD. ANOVAs and SPM. Wellcome Department of Imaging Neuroscience; 2003. Technical Report.

- Hollingworth A, Richard AM, Luck SJ. Understanding the function of visual short-term memory: Transsaccadic memory, object correspondence, and gaze correction. J Exp Psychol Gen. 2008;137:163–181. doi: 10.1037/0096-3445.137.1.163.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Karlsgodt KH, Shirinyan D, van Erp TG, Cohen MS, Cannon TD. Hippocampal activations during encoding and retrieval in a verbal working memory paradigm. Neuroimage. 2005;25:1224–1231. doi: 10.1016/j.neuroimage.2005.01.038.

- Kumaran D, Maguire EA. The dynamics of hippocampal activation during encoding of overlapping sequences. Neuron. 2006a;49:617–629. doi: 10.1016/j.neuron.2005.12.024.

- Kumaran D, Maguire EA. An unexpected sequence of events: Mismatch detection in the human hippocampus. PLoS Biol. 2006b;4:e424. doi: 10.1371/journal.pbio.0040424.

- Kumaran D, Maguire EA. Match mismatch processes underlie human hippocampal responses to associative novelty. J Neurosci. 2007;27:8517–8524. doi: 10.1523/JNEUROSCI.1677-07.2007.

- Lee AC, Bussey TJ, Murray EA, Saksida LM, Epstein RA, Kapur N, Hodges JR, Graham KS. Perceptual deficits in amnesia: Challenging the medial temporal lobe ‘mnemonic’ view. Neuropsychologia. 2005a;43:1–11. doi: 10.1016/j.neuropsychologia.2004.07.017.

- Lee AC, Buckley MJ, Pegman SJ, Spiers H, Scahill VL, Gaffan D, Bussey TJ, Davies RR, Kapur N, Hodges JR, Graham KS. Specialization in the medial temporal lobe for processing of objects and scenes. Hippocampus. 2005b;15:782–797. doi: 10.1002/hipo.20101.

- Lee AC, Bandelow S, Schwarzbauer C, Henson RNA, Graham KS. Perirhinal cortex activity during visual object discrimination: An event-related fMRI study. Neuroimage. 2006a;33:362–373. doi: 10.1016/j.neuroimage.2006.06.021.

- Lee AC, Buckley MJ, Gaffan D, Emery T, Hodges JR, Graham KS. Differentiating the roles of the hippocampus and perirhinal cortex in processes beyond long-term declarative memory: A double dissociation in dementia. J Neurosci. 2006b;26:5198–5203. doi: 10.1523/JNEUROSCI.3157-05.2006.

- Lee AC, Scahill VL, Graham KS. Activating the medial temporal lobe during oddity judgment for faces and scenes. Cereb Cortex. 2008;18:683–696. doi: 10.1093/cercor/bhm104.

- Litman L, Awipi T, Davachi L. Category-specificity in the human medial temporal cortex. Hippocampus. 2009;19:308–319. doi: 10.1002/hipo.20515.

- Mandler G, Graf P, Kraft D. Activation and elaboration effects in recognition and word priming. Q J Exp Psychol. 1986;38A:645–662.

- Mayes AR, Holdstock JS, Isaac CL, Hunkin NM, Roberts N. Relative sparing of item recognition memory in a patient with adult-onset damage limited to the hippocampus. Hippocampus. 2002;12:325–340. doi: 10.1002/hipo.1111.

- Nichols EA, Kao YC, Verfaellie M, Gabrieli JD. Working memory and long-term memory for faces: Evidence from fMRI and global amnesia for involvement of the medial temporal lobes. Hippocampus. 2006;16:604–616. doi: 10.1002/hipo.20190.

- Olson IR, Moore KS, Stark M, Chatterjee A. Visual working memory is impaired when the medial temporal lobe is damaged. J Cogn Neurosci. 2006a;18:1087–1097. doi: 10.1162/jocn.2006.18.7.1087.

- Olson IR, Page K, Moore KS, Chatterjee A, Verfaellie M. Working memory for conjunctions relies on the medial temporal lobe. J Neurosci. 2006b;26:4596–4601. doi: 10.1523/JNEUROSCI.1923-05.2006.

- Park DC, Welsh RC, Marshuetz C, Gutchess AH, Mikels J, Polk TA, Noll DC, Taylor SF. Working memory for complex scenes: Age differences in frontal and hippocampal activations. J Cogn Neurosci. 2003;15:1122–1134. doi: 10.1162/089892903322598094.

- Pihlajamaki M, Tanila H, Kononen M, Hanninen T, Hamalainen A, Soininen H, Aronen HJ. Visual presentation of novel objects and new spatial arrangements of objects differentially activates the medial temporal lobe subareas in humans. Eur J Neurosci. 2004;19:1939–1949. doi: 10.1111/j.1460-9568.2004.03282.x.

- Quamme JR, Yonelinas AP, Norman KA. Effect of unitization on associative recognition in amnesia. Hippocampus. 2007;17:192–200. doi: 10.1002/hipo.20257.

- Ranganath C, D’Esposito M. Medial temporal lobe activity associated with active maintenance of novel information. Neuron. 2001;31:865–873. doi: 10.1016/s0896-6273(01)00411-1.

- Saksida LM, Bussey TJ, Buckmaster CA, Murray EA. No effect of hippocampal lesions on perirhinal cortex-dependent feature-ambiguous visual discriminations. Hippocampus. 2006;16:421–430. doi: 10.1002/hipo.20170.

- Shrager Y, Levy DA, Hopkins RO, Squire LR. Working memory and the organization of brain systems. J Neurosci. 2008;28:4818–4822. doi: 10.1523/JNEUROSCI.0710-08.2008.

- Squire LR, Stark CE, Clark RE. The medial temporal lobe. Annu Rev Neurosci. 2004;27:279–306. doi: 10.1146/annurev.neuro.27.070203.144130.

- Squire LR, Wixted JT, Clark RE. Recognition memory and the medial temporal lobe: A new perspective. Nat Rev Neurosci. 2007;8:872–883. doi: 10.1038/nrn2154.

- Taylor KJ, Henson RN, Graham KS. Recognition memory for faces and scenes in amnesia: Dissociable roles of medial temporal lobe structures. Neuropsychologia. 2007;45:2428–2438. doi: 10.1016/j.neuropsychologia.2007.04.004.

- Tzourio-Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, Mazoyer B, Joliot M. Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. Neuroimage. 2002;15:273–289. doi: 10.1006/nimg.2001.0978.

- Winters BD, Forwood SE, Cowell RA, Saksida LM, Bussey TJ. Double dissociation between the effects of peri-postrhinal cortex and hippocampal lesions on tests of object recognition and spatial memory: Heterogeneity of function within the temporal lobe. J Neurosci. 2004;24:5901–5908. doi: 10.1523/JNEUROSCI.1346-04.2004.

- Worsley KJ, Marrett S, Neelin P, Vandal AC, Friston KJ, Evans AC. A unified approach for determining significant signals in images of cerebral activation. Hum Brain Mapp. 1995;4:58–73. doi: 10.1002/(SICI)1097-0193(1996)4:1<58::AID-HBM4>3.0.CO;2-O.

- Yonelinas AP, Kroll NE, Dobbins IG, Soltani M. Recognition memory for faces: When familiarity supports associative recognition judgments. Psychon Bull Rev. 1999;6:654–661. doi: 10.3758/bf03212975.
