Abstract

A commitment to practice change (CTC) approach may be used in educational program evaluation to document practice changes, examine the educational impact relative to the instructional focus, and improve understanding of the learning-to-change continuum. The authors reviewed various components and procedures of this approach and discussed some practical aspects of its application, using as an example a study evaluating a presentation on menopausal care for primary care physicians. The CTC approach is a valuable evaluation tool, but it must be supplemented with other data to provide a complete picture of the impact of education on practice. From the evaluation perspective, the self-reported nature of CTC data is a major limitation of this method.

Keywords: commitment to practice change, program evaluation, continuing education

The translation of new knowledge into clinical practice can be a slow process, often taking a decade or more for research findings to find their way into routine patient care (Sussman, Valente, Rohrbach, Skara, & Pentz, 2006). The resulting gaps in the quality of healthcare have led to calls for action in many parts of the world (Committee on Quality of Healthcare in America, 2001; Legido-Quigley, McKee, Nolte, & Glinos, 2008). The traditional role of continuing medical education (CME), updating physicians on the latest scientific evidence, is not sufficient to respond to this reality (Marinopoulos et al., 2007). Therefore, in the past five years there has been a marked shift toward focusing CME activities on improving practice rather than disseminating information (Davis, Davis, & Bloch, 2008; Regnier, Kopelow, Lane, & Alden, 2005). This shift in the goals of CME programming has resulted in a concomitant shift in how CME activities need to be evaluated. Evaluators must now go beyond measuring learner satisfaction and change in medical knowledge to assess physician performance and patient outcomes. To date, only a limited number of CME evaluations have assessed impact at this level (Tian, Atkinson, Portnoy, & Gold, 2007). There is a pressing need to equip evaluators with valid, reliable and feasible methods of assessing practice and/or patient outcomes (Davis, Barnes, & Fox, 2003; Marinopoulos et al., 2007).

The commitment to change (CTC) approach is one tool available to CME evaluators for assessing the impact of education on clinical practice. The central, distinguishing feature of the CTC approach is that it asks participants in an educational activity to write down descriptions of the changes they propose to make as a result of what they learned during the activity (Purkis, 1982). Since the CTC approach was introduced to medical educators as an evaluation method in 1982 (Purkis, 1982), it has been increasingly used in the CME field both to facilitate and to measure practice change (Wakefield, 2004). This trend is likely due, in part, to the perception that the CTC approach is relatively easy and inexpensive to implement (Curry & Purkis, 1986; Jones, 1990). There are, however, significant gaps in the literature conceptualizing the CTC approach (Overton & MacVicar, 2008), and its critical components lack a clear definition (Mazmanian, Ratcliff, Johnson, Davis, & Kantrowitz, 1998; Wakefield, 2004). The purpose of this article is to improve understanding of the CTC approach as an evaluation tool and to raise awareness of several practical issues surrounding its application in educational program evaluation. We address these aims by providing an overview of the CTC approach from the program evaluator's perspective and by discussing an example of its use in evaluating a CME program on menopausal care.

What is the CTC approach?

The CTC approach is both a theoretical framework and a practical method for enabling and measuring behavioral change resulting from attendance at an educational event. It involves requesting CTC statements from learners and, when a follow-up is used, contacting them at a later time to ask about compliance with their statements and reasons for non-compliance (Mazmanian & Mazmanian, 1999; Purkis, 1982; Wakefield, 2004). A solid theoretical foundation explains how and why the CTC approach may facilitate learning and change, including Locke's goal-setting theory (Locke et al., 1981), Rogers' diffusion of innovations and communication networks (Rogers, 1995; Rogers & Kincaid, 1981), reflective learning (Schön, 1983), and the transtheoretical model of change (Prochaska, Redding, & Evers, 2002); these theories were discussed in Jones (1990), Mazmanian and Mazmanian (1999), Mazmanian, Waugh and Mazmanian (1997), Overton and MacVicar (2008), and Wakefield et al. (2003). There is also some empirical evidence of this effect of the CTC approach (Pereles et al., 1997), although the extent of its influence on educational outcomes remains largely unknown (Mazmanian & Mazmanian, 1999; Wakefield, 2004).

As an evaluation tool, the CTC approach can be used for several purposes. One common purpose is documenting the impact of education on clinical practice in quantitative terms, such as the number of intended practice changes, number of learners who made CTC statements, mean number of commitments per learner, and percentage of compliance with stated commitments (e.g., Mazmanian & Mazmanian, 1999). In addition, findings related to the reasons for non-implementation of a CTC statement may advance understanding of how change occurs in a given clinical area and, thus, what could be done to better address specific barriers to practice improvement with future educational and/or organizational interventions. Also, the collected CTC statements can be compared to activity objectives (Dolcourt & Zuckerman, 2003; Lockyer et al., 2005), activity content (Curry & Purkis, 1986; Pereles, Lockyer, Hogan, Gondocz, & Parboosingh, 1997), evidence-based messages incorporated into the content (Wakefield et al., 2003), and amount of time allocated to a content area relevant to the CTC statements (Lockyer et al., 2001). Such analyses are helpful to verify the program planners' assumptions; identify “major points of impact”, that is, instructional points that stimulated practice change (Purkis, 1982); and document anticipated and unanticipated learning outcomes (Dolcourt & Zuckerman, 2003).
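The quantitative indicators listed above are simple counts and ratios. The following Python sketch shows one way to compute them from follow-up data. The `Learner` record, its field names, and the sample statements are hypothetical, and we assume that compliance is calculated only over statements for which a follow-up answer was obtained and that the per-learner mean includes learners who made no commitment. Other studies may define these denominators differently.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class Learner:
    """One evaluation participant (hypothetical record layout, not from any cited study)."""
    learner_id: str
    # Maps each CTC statement to its follow-up status:
    # True = reported implemented, False = not implemented, None = no follow-up answer.
    commitments: Dict[str, Optional[bool]] = field(default_factory=dict)


def ctc_summary(learners: List[Learner]) -> Dict[str, float]:
    """Compute common quantitative CTC indicators for a cohort of learners."""
    statuses = [s for learner in learners for s in learner.commitments.values()]
    answered = [s for s in statuses if s is not None]
    return {
        # Total number of intended practice changes (CTC statements).
        "intended_changes": len(statuses),
        # Learners who wrote at least one CTC statement.
        "learners_with_ctc": sum(1 for learner in learners if learner.commitments),
        # Mean commitments per learner, including learners who made none (an assumption).
        "mean_per_learner": mean(len(learner.commitments) for learner in learners) if learners else 0.0,
        # Percentage of followed-up statements reported as implemented.
        "pct_compliance": 100 * sum(answered) / len(answered) if answered else 0.0,
    }


# Illustrative cohort with made-up statements and statuses.
cohort = [
    Learner("A", {"discuss HT risks with patients": True, "use visual aids": False}),
    Learner("B", {"review non-hormonal alternatives": True, "update handouts": None}),
    Learner("C"),  # attended but made no commitment
]
print(ctc_summary(cohort))
```

In a post-only design, only the first three indicators are available, because compliance requires a follow-up.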

There is considerable variation in how the CTC approach has been implemented in the CME field (Wakefield, 2004). To characterize variations in how CTC is defined and implemented as an evaluation method, we conducted a MEDLINE search using “commitment to change” and “continuing medical education” as search terms and also reviewed the references of the identified articles, yielding 18 published studies that employed the CTC approach to assess the outcomes of an educational activity. We then compared the CTC approaches used in these studies for similarities and differences. A major difference we found is whether the approach is used in a post-only design (in which information is gathered from participants only in the period immediately following an activity) or in a post/follow-up design (in which participants are asked to write CTC statements after the educational activity and are then queried at a later time about their progress in making changes). Many published CTC studies have used the post-only design (e.g., Neill, Bowman, & Wilson, 2001); indeed, it is considered likely that the majority of evaluations of continuing education programs for healthcare professionals using CTC have been limited to collecting CTC statements (Mazmanian & Mazmanian, 1999). However, some authors, notably Mazmanian and Mazmanian (1999) and Wakefield (2004), consider a follow-up to be an integral part of the approach.

We found other differences as well (Table 1). Procedures for collecting CTC statements varied in the wording of the question eliciting the statements, the use of a strength-of-commitment question, and the inclusion of a signature line. Approaches to conducting the follow-up also varied. We observed differences in the wording of questions about implementation, the scales used to assess implementation, the inclusion of additional questions (e.g., about barriers to change and intention to continue with the initiated changes), and the data collection procedure (e.g., written questionnaire versus verbal communication). The timing of the follow-up also varied.

Table 1.

Procedures of the Post/Follow-up Commitment to Change (CTC) Approach

| Stage | Component | Component Description | Procedure | Timing |
|---|---|---|---|---|
| Initial CTC request | Question soliciting a CTC statement | Participants are asked to list their intended changes in practice (if any) resulting from attending an educational event. Participants are often asked to identify up to 3-5 “specific,” “concrete” and/or “measurable” changes. The words “commitment to change” may or may not be used in the request (e.g., they may be included in a form title). | A form or a card is provided during or immediately after the event. A sample form may be part of the registration materials. A CTC request may be embedded in the course evaluation. Time may be left at the end of the event to complete the form. A self-addressed envelope may be used. Participants may be provided an incentive (e.g., registration fee discount). | A form/card is collected at the end of an educational event. |
| | Strength of commitment question | Participants may be asked to designate a level of commitment to a change, using a Likert scale (e.g., from 1 = lowest to 5 = highest level of commitment). | | |
| | Signature | Form may include a signature line. | | |
| Follow-up | Reminder of intended changes | The completed form/card is used as a reminder of personal intended changes. | Usually, a copy of the original CTC form/card and a questionnaire about implementation and barriers are mailed to participants. A follow-up may also be done through telephone interviewing. A 2nd follow-up may be used to reach either non-respondents only or all participants. | 1-6 months post event (a 2nd follow-up may be done 1-2 months after the 1st follow-up). |
| | Question(s) about implementation | Participants are asked if they implemented their intended changes. The answer choices may be yes/no or specific degrees of implementation (e.g., fully implemented/partially implemented/not implemented; implemented one out of three/two out of three/all three). | | |
| | Question(s) about barriers | In most cases, follow-up includes questions about barriers to change/reasons for non-implementation. A list with the barriers to choose from may be included. | | |
| | Additional questions | Participants may also be asked about: sources of information that precipitated changes; the degree of difficulty of making the change (e.g., using a 5-point Likert scale); the number of patients affected by the change; and intention to continue with the initiated changes. | | |

Note. Procedure and Timing entries apply to all components within the same stage.

To date, little empirical evidence has been available to guide the choice among these various options in designing a CTC evaluation. One notable exception is a study by Mazmanian and colleagues, which found that a learner's signature on a CTC form does not appear to influence self-reported compliance with CTC statements (Mazmanian, Johnson, Zhang, Boothby, & Yeatts, 2001). Other questions, such as how to decide on the optimal timing for a follow-up (Wakefield, 2004), have not been systematically examined.

In the absence of empirical evidence, several authors have theorized about the impact of various choices or offered rationales in support of different options. For example, on the issue of how to word the CTC question, Overton and MacVicar (2008) hypothesized that if the initial CTC request includes an explicit reference to “commitment”, it is likely to evoke an attitudinal commitment. However, if learners are asked to indicate intended practice changes, the request is likely to produce a behavioral commitment to action. Wakefield (2004) suggested that asking what participants “plan to do” rather than “plan to change” may result in CTC statements more predictive of actual changes in practice behavior.

We concluded that the variations in how the CTC approach has been used suggest that it can be tailored to a particular educational program and to program and evaluation purposes. At the same time, some uncertainty remains as to how different components and procedures of the CTC approach influence the results obtained through its application.

An Application of the CTC Approach to CME

Seeing the CTC approach as a useful evaluation tool, the authors employed it as part of an evaluation of an educational program on menopausal care developed for an audience of primary care physicians. The remainder of this paper consists of our reflections on this evaluation experience and a discussion of several issues regarding the CTC approach as a tool for evaluating CME activities. Although some data related to the impact of the evaluated program are presented below, it is not the intent of this paper to report the evaluation findings. Thus, these data are used to illustrate the application of the CTC approach rather than to provide a complete picture of how education influenced learners and their patients.

Description of the Educational Program

In 2006-2007, we evaluated the impact of a presentation entitled “Hormone Therapy: Communicating Benefits and Risks in Peri- and Postmenopausal Women”. The presentation was designed to provide state-of-the-science information about various approaches to relieving menopausal symptoms and to help physicians communicate more effectively with patients about the risks and benefits of different treatment options. It was delivered in multiple venues as part of the Improving Menopausal Care (IMC) educational program. The presentation lasted 60 to 75 minutes, with the time divided roughly equally between a review of the scientific evidence and strategies for communicating with patients about risks and benefits. The latter component included discussion of a case example and a question-and-answer period. The presentation was to be given by one faculty member for audiences of 15-100 attendees or by two faculty members for larger audiences (up to 500). Instructional materials included a slide presentation and a two-page quick reference guide for clinicians summarizing recommendations on contraception in perimenopause and on hormone therapy (HT) for menopausal symptoms. Although this was a brief, one-time educational activity, the intended outcome was a change at the level of clinical practice: improved communication with patients experiencing menopausal symptoms.

Evaluation Design

The IMC evaluation study was designed to inform decision-making about the future direction of the IMC program. Because single-event, lecture-based CME has been shown to have little or no impact on clinical practice and patient outcomes (Bloom, 2005; Mansouri & Lockyer, 2007; Marinopoulos et al., 2007), program planners and evaluators questioned whether the presentation was an appropriate educational strategy for improving menopausal care. The two main evaluation questions were: What are the effects of this educational program on the target audience and their patients? How do various aspects of the educational program contribute to the observed outcomes? A mixed-methods approach was used, combining direct observation of educational events, semi-structured interviews (individual and focus groups), and written surveys. Data were collected from a sample of learners pre-event (interviews), one week post-event (survey), and at follow-up at three and nine months (interviews). Additionally, for a subset of the sample, a 360-degree multisource feedback survey (Lockyer, 2003) was used to collect data from learners' peer physicians, healthcare team members and patients around the time of the second follow-up.

The CTC approach was integrated into the evaluation plan described above. The initial CTC request was one of seven questions in a survey mailed to evaluation participants about one week post-event. Participants were asked to indicate practice changes they planned to make as a result of attending the presentation and their level of commitment to each change using a 10-point scale (Figure 1).

Figure 1.

Initial CTC Request.

Three months post-event, during the first follow-up telephone interview, participants were presented with their CTC statements and asked 1) whether each planned change had been implemented (yes/no), 2) whether their level of commitment remained the same, 3) whether they had implemented any changes beyond those listed in their CTC statements, 4) whether they wanted to make any new commitments to change and, if so, what their level of commitment was, and 5) what barriers or enhancers they had encountered or anticipated in the implementation process. These questions were repeated about nine months post-event during the second follow-up telephone interview. Both follow-up interviews also included questions outside the CTC approach, related to satisfaction with the educational activity and self-assessment of knowledge and skill in menopausal care, as well as a clinical vignette. The mixed methods and the rich quantitative and qualitative data collected for this evaluation allowed us to gain insights into the meaning and value of various components of the CTC approach, as well as some challenges and limitations of using this approach in program evaluation.

Evaluation Participants

A total of 80 primary care physicians were recruited from approximately 1300 attendees at six educational events. Of these, 66 completed a post-event survey and all interviews, resulting in a completion rate of 82%. Thirteen participants took part in the 360-degree evaluation. Participants completing the survey and all interviews received a stipend of $500 to compensate for the approximately 3.5 hours required to complete this part of the evaluation. Physician participants in the 360-degree evaluation received an additional $360 to distribute the 360-degree surveys, and patients received a $10 incentive to complete their survey.

Issues in Using the CTC Approach for Evaluation

There may be little room for change in clinical practice

One basic assumption of the CTC approach is that there is an existing gap between optimal practice and actual practice that the educational program is designed to address. However, this assumption may not hold for all participants as some may already be practicing in a manner consistent with the desired outcome, such as a physician who reported that the presentation “didn't have much impact, except to reaffirm what I've been doing”. This becomes a critical issue when interpreting the results of a CTC evaluation. For example, in a hypothetical situation where education resulted in a very low number of CTC statements, what conclusion should be drawn? It is possible that the instructional content was insufficiently engaging or challenging to trigger the change. An alternative possibility is that learners were already practicing at the desired level in a given clinical area, which means the program planners' assumption about the extent of the practice gap was wrong. This suggests that a CTC approach should incorporate a mechanism for assessing or at least estimating baseline performance levels.

Quantitative CTC data are not sufficient to claim success or failure of education in closing practice gaps

One purported benefit of using the CTC approach as an evaluation tool is that it allows evaluators to measure the impact of education on clinical practice (e.g., Mazmanian & Mazmanian, 1999). As we note below, however, making judgments about the effectiveness of an educational activity in closing a practice gap based solely on quantitative data, such as the numbers of intended and implemented practice changes, is problematic for several reasons.

The CTC approach may yield statements other than intended practice changes

In the IMC evaluation, 58 of the 66 participants (88%) who completed the evaluation process submitted written CTC statements. However, some statements described an intent to learn more about the topic (e.g., “learn more about women's health issues”) rather than to move directly to making a change. White, Grzybowski and Broudo (2004) reported a similar finding. Other statements indicated intentions to continue rather than change existing practice (e.g., “cont[inue] to educate patients”). Wakefield and colleagues noted analogous instances of education leading to confirmation of existing practices (Wakefield, 2004; Wakefield et al., 2003). Some statements described changes in attitude rather than in practice. For instance, one participant wrote, “more willing to offer HT”.

The implication for designing a CTC approach is that evaluators should decide whether changes in practice are the only outcomes of interest or whether “movement in the right direction” is also important and relevant. If the latter, procedures for classifying and interpreting commitment statements that describe something other than changes in practice should be identified. Several options might be considered. One option is to include in the evaluation plan a process for sorting CTC statements into categories such as practice changes, additional learning activities, attitude changes, and confirmed existing practice. If this strategy is employed, it is desirable to have explicit criteria for deciding which types of statements will be included in the analysis, with a rationale to support the choice. Another option is to anticipate that some learners will find that the educational activity confirmed their current practice (no need to change) and to provide that as a response option on the form used to collect CTC statements. This does not ensure that CTC statements will then describe only intended changes, as Wakefield and colleagues discovered (Wakefield, 2004; Wakefield et al., 2003), but it may be expected to reduce the number of statements describing an intent to continue current practices.
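Where statements are sorted into categories, the explicit criteria could be written down as ordered coding rules. The Python sketch below is a hypothetical first-pass screen, not a validated coding scheme: the cue phrases are our own assumptions, chosen to match the example statements quoted above, and anything that matches no rule is flagged for manual coding rather than assumed to be a practice change. Human coders would still need to review every assignment.

```python
from typing import List, Tuple

# Hypothetical coding rules: (category, cue phrases). Order matters, because the
# first matching category wins (e.g. "more willing to offer HT" must be coded as an
# attitude change before the practice-change cue "offer" is checked).
CATEGORY_RULES: List[Tuple[str, Tuple[str, ...]]] = [
    ("additional learning", ("learn more", "read more", "attend")),
    ("confirmed existing practice", ("continue", "cont[inue]", "keep ")),
    ("attitude change", ("more willing", "more comfortable", "more open")),
    ("practice change", ("increase", "start", "offer", "discuss", "prescribe", "use ")),
]


def classify_statement(statement: str) -> str:
    """Return a provisional category for one CTC statement.

    Statements that match no cue are flagged for manual coding rather than
    being assumed to describe a practice change.
    """
    text = statement.lower()
    for category, cues in CATEGORY_RULES:
        if any(cue in text for cue in cues):
            return category
    return "unclassified (manual review)"


examples = [
    "learn more about women's health issues",
    "cont[inue] to educate patients",
    "more willing to offer HT",
    "increase education of risks of HT",
    "Vasomotor symptoms",
]
for s in examples:
    print(f"{s!r}: {classify_statement(s)}")
```

Keeping the rules in an ordered list makes the inclusion criteria explicit and easy to report alongside the rationale for the choice.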

Alternatively, in evaluations where documenting any intention to act is important, the evaluation form can be designed to capture a broader range of outcomes, including those other than a change in practice. For example, learners might be asked to indicate what actions they intend to take in the future, such as “I intend to learn more about the topic” or “I intend to make changes in my practice”. Learners who indicate an intention to change practice could then be asked to describe the intended changes. For either approach, a theoretical framework such as Slotnick's theory of physician learning (Slotnick, 1999) or Prochaska's transtheoretical model (Prochaska et al., 2002) can guide the choice of categories and the interpretation of results. Collecting participants' pre- and post-event self-assessments of their awareness, knowledge, communication skills and confidence related to menopausal care, along with their pre- and post-event responses to a clinical vignette, as we did in the IMC evaluation, may provide additional insights into learners' progress along the learning-to-change continuum.

Some changes may be undesirable or their desirability may be difficult to assess

One assumption of the CTC approach is that the change statements provided by participants will describe changes that are consistent with the best evidence. However, that is not always the case. Wakefield et al. (2003) reported that only about 56% of the intended changes were directly related to the evidence-based messages incorporated in the educational modules, although the researchers did not specify whether the remaining intentions included undesirable ones that did not comply with best practices in any clinical domain. We encountered one instance where an intended change was contradicted by the evidence described in the presentation: one participant reported a commitment to use HT “for treatment of osteoporosis”, whereas HT is indicated, in some situations, for the prevention rather than the treatment of postmenopausal osteoporosis. Furthermore, identifying an undesirable change is not only a data analysis problem but also potentially a patient safety and medical malpractice problem. The challenge it poses to evaluators is this: if the response can be linked to an individual, what, if anything, should be done to correct what appears to be a misunderstanding of the scientific data that were presented?

Our experience also suggests that in some cases it may be impossible to evaluate the desirability of changes described by participants. For example, if a CTC statement reads “increase use of HT”, the desirability of this change cannot be assessed without knowing the baseline. If the learner is currently unwilling to consider recommending HT for any patients but now sees that the evidence supports its use under specific circumstances, then an increase would be considered desirable. Under other circumstances, such an outcome might be highly undesirable.

The changes described by the CTC statement may be difficult to determine

Some changes may be hard to evaluate because written statements do not always clearly reflect the change that the writer had in mind. For example, in the IMC evaluation one respondent submitted a CTC statement that read “Vasomotor symptoms”. The follow-up interview provided a convenient opportunity to seek clarification of this ambiguous statement. When a follow-up is conducted through a mailed or online questionnaire, it may be difficult to obtain clarification, because doing so would require adding an individualized question to the questionnaire.

Practice changes may be initiated but not fully accomplished

During the follow-up interviews, some participants in the IMC evaluation hesitated to say “yes” or “no” when asked whether they had implemented their CTC statements. For example, one participant said: “I can't say that I fulfill that commitment 100% of the time. I can't give you a number of how many times I adequately discuss hormone replacement therapy with a patient.” Participants appeared uncertain in reporting compliance with their CTC statements when they did not practice in a new way consistently, tried a new practice approach with a limited number of patients, or implemented only selected aspects of a planned change. In these cases, the evaluators found it difficult to categorize a commitment as “implemented” or “not implemented”. Reflecting on this observation, we concluded that offering participants options that reflect various degrees of implementation, such as “fully implemented”, “partially implemented”, “could not be implemented at this time”, and “will not be implemented” (Lockyer et al., 2005), is preferable to a dichotomous “yes (implemented)”/“no (not implemented)” scale. The option of reporting a change as partially implemented would allow participants to report more accurately the practice changes that they initiated but have not fully accomplished.
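The practical consequence of a dichotomous scale can be illustrated with a small calculation. In the Python sketch below, the four response options follow Lockyer et al. (2005) as quoted above, while the `compliance_bounds` helper and the sample answers are hypothetical. Reporting both a strict rate (fully implemented only) and a lenient rate (fully or partially implemented) makes visible the uncertainty that a forced yes/no answer would hide.

```python
from enum import Enum
from typing import Iterable, Tuple


class Implementation(Enum):
    """Ordinal implementation options of the kind described by Lockyer et al. (2005)."""
    FULLY = "fully implemented"
    PARTIALLY = "partially implemented"
    NOT_YET = "could not be implemented at this time"
    WILL_NOT = "will not be implemented"


def compliance_bounds(responses: Iterable[Implementation]) -> Tuple[float, float]:
    """Return (strict, lenient) compliance percentages.

    strict counts only fully implemented changes; lenient also counts partially
    implemented ones. A forced yes/no answer would silently pick one of the two.
    """
    responses = list(responses)
    if not responses:
        return (0.0, 0.0)
    fully = sum(r is Implementation.FULLY for r in responses)
    partially = sum(r is Implementation.PARTIALLY for r in responses)
    n = len(responses)
    return (100 * fully / n, 100 * (fully + partially) / n)


# Illustrative follow-up answers for five commitments (made-up data).
answers = [
    Implementation.FULLY,
    Implementation.PARTIALLY,
    Implementation.PARTIALLY,
    Implementation.NOT_YET,
    Implementation.FULLY,
]
print(compliance_bounds(answers))
```

With these made-up answers the two rates differ by a factor of two, which shows how strongly a yes/no coding decision can shape a reported compliance figure.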

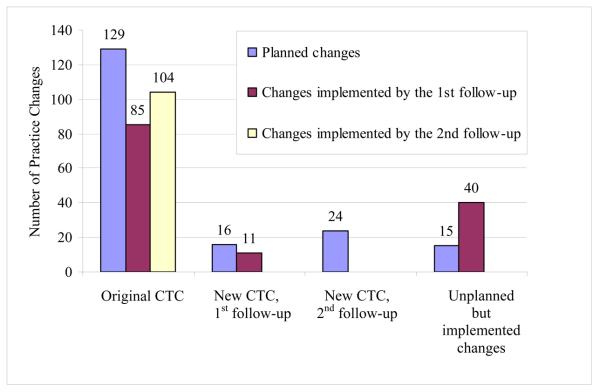

Practice changes can be made beyond or instead of those described in CTC statements

During follow-up interviews in the IMC evaluation, participants reported that they had implemented most of the changes to which they had committed (104 of 129, or about 80%). Interestingly, some participants also made new CTC statements and/or reported implementing practice changes that they had not stated in their post-event survey. The number of unplanned but implemented changes was substantial, constituting roughly one-third of all reported changes in practice (Figure 2). This suggests that the CTC approach may underestimate the extent of change following an educational program. The finding underscores the importance of conducting a follow-up and asking about implementation of both planned and any additional practice changes, especially when the goal is to obtain a more complete picture of the impact of education on practice and to explore the circumstances that led to each change as a means of assessing the contribution of the activity.

Figure 2.

Planning and Implementation of Changes by Participants of the Improving Menopausal Care Program Evaluation.
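The breakdown of implemented changes into planned and unplanned ones, of the kind summarized in Figure 2, can be tallied as in the Python sketch below. The `ReportedChange` record and the sample data are hypothetical; we assume that each change reported at follow-up is flagged according to whether it appeared in the post-event CTC survey. The `unplanned_share` value indicates how much change a count based only on the original CTC statements would miss.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ReportedChange:
    """A practice change reported at follow-up (hypothetical record layout)."""
    description: str
    planned: bool      # True if stated in the post-event CTC survey
    implemented: bool  # True if reported as implemented at follow-up


def change_breakdown(changes: List[ReportedChange]) -> Dict[str, float]:
    """Split implemented changes into planned and unplanned ones."""
    implemented = [c for c in changes if c.implemented]
    unplanned = [c for c in implemented if not c.planned]
    return {
        "implemented_total": len(implemented),
        "implemented_planned": len(implemented) - len(unplanned),
        "implemented_unplanned": len(unplanned),
        # Share of implemented changes that a CTC-statement-only count would miss.
        "unplanned_share": len(unplanned) / len(implemented) if implemented else 0.0,
    }


# Made-up follow-up data for one participant.
changes = [
    ReportedChange("discuss HT risks with patients", planned=True, implemented=True),
    ReportedChange("use visual aids", planned=True, implemented=False),
    ReportedChange("review non-hormonal alternatives", planned=True, implemented=True),
    ReportedChange("give printed information", planned=False, implemented=True),
]
print(change_breakdown(changes))
```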

Attributing changes to the activity can be challenging

Change in clinical practice is rarely the result of a single educational activity; the activity is often one of several factors leading to the change. As a result, if the goal is to evaluate the effectiveness of an activity in producing change, it is important to explore how the activity contributed to the reported changes. In the IMC evaluation, data from multiple sources were pieced together like a puzzle to reveal a picture of learning and change, which allowed us to draw conclusions about the impact of the educational event on clinical practice, including barriers to and enhancers of change. Information about barriers helped us understand instances where change did not occur. Analysis of enhancers, such as menopause-related learning resources that participants used after attending the presentation, was important in understanding the extent to which observed practice changes could be attributed to the presentation as opposed to other factors.

Limitation of the CTC approach related to self-reported data about practice changes

As IMC program evaluators, we were concerned that participants might inaccurately report changes made in clinical practice, given the conclusions of systematic reviews about the limited ability of physicians to accurately self-assess their performance (Davis et al., 2006). There is evidence that, although the CTC approach relies on participants' self-reports, it has some validity as a tool for predicting and assessing actual changes in practice, at least for physician prescribing. Wakefield et al. (2003) compared prescribing-related CTC statements with pharmacy registry data and found that these statements were predictive of actual practice change. Curry and Purkis (1986) demonstrated that CME participants' self-reports about compliance with their CTC statements accurately reflected prescribing changes documented through prescription pad copies. To our knowledge, the validity of the CTC approach for domains of medical practice other than prescribing is not well established. To address this concern, we attempted to validate reported practice changes by data triangulation, that is, by reviewing the CTC data against data from other sources (Mathison, 1988). One strategy we used was to present a clinical vignette followed by questions about physician actions in response to a clinical problem. The same vignette was used during the pre-event and follow-up interviews. Responses to the vignette revealed changes that were largely consistent with the overall pool of participants' reports of changes made. Occasionally, a direct connection between a change in a participant's response to the vignette and an implemented CTC statement was evident. For instance, in his pre-event response, one participant was not sure what to recommend to a patient interested in alternative therapies: “I don't have the information to guide them and advise them.” In the second follow-up response, he appeared to be more knowledgeable about HT versus alternative treatments and ready to use this knowledge in communicating with the patient. He also talked about using “visual aids” to show the patient her HT-related risk factors. This improvement in his response to the vignette was consistent with his CTC statements: “increase education of HT alternatives” and “increase education of risks of HT”. However, this component of the evaluation was not as useful as we had anticipated.

Another strategy was to check the CTC data against the 360-degree data (i.e., data collected from participants' patients, peers, and healthcare team members). In many cases, we found no evidence to support or refute claimed changes, because the 360-degree survey questions did not match the specific changes participants reported. However, where sufficient evidence was available, the data appeared to substantiate most participants' claims. For example, patients of a participant who claimed to have increased patient education about the risks of HT reported that this physician provided adequate and clear explanations of the risks and side effects of treatment choices for menopausal symptoms, answered all their questions, and gave them printed information and/or suggested other educational resources. Based on our experience, we recommend that evaluators consider data triangulation to validate and strengthen conclusions drawn from CTC data. Otherwise, as others have observed, data gathered through the CTC approach should be interpreted with caution because they are self-reported (Lockyer et al., 2001).

Limitations of This Paper

This paper is not a report of a research study examining the CTC approach but rather the evaluators' reflections on their experience using this approach to evaluate educational outcomes. In our view, in addition to research and theory, the practical knowledge and experience of CME practitioners who have used the CTC approach for evaluation is an important source of information on how to apply it. We recognize that the IMC evaluation study used here as an example had limitations, including the absence of a control group, self-selection of participants, the small number of evaluation participants relative to the number of program participants, reliance on self-reported data, and the possible effect of the evaluation itself. While these limitations should be considered when interpreting the evaluation findings, they do not preclude describing the CTC technique from the evaluator's perspective, identifying issues and challenges, and offering ideas for addressing them.

Conclusion

The CTC approach is a potentially valuable evaluation tool. It can be used to document self-reported clinical practice changes, examine the impact of an educational activity relative to its instructional focus, and improve understanding of the learning-to-change continuum in a given clinical area. However, CTC data must be supplemented with other data if the goal is a complete picture of the impact of education on practice. From the evaluation perspective, the self-reported nature of CTC data is a major limitation of this method. The CTC approach has been used in varied ways, suggesting that it can be tailored to a particular educational program and evaluation purpose. At the same time, uncertainty remains about how the different components and procedures of the CTC approach influence the results obtained through its application. Future research is needed to establish its validity in areas other than prescribing, to provide better guidance on tailoring the features of the CTC approach to the context in which it is used, and to assess the impact of the CTC approach itself on the educational outcomes it reveals.

Acknowledgements

We thank Chere C. Gibson, RD, PhD, for her many valuable contributions to the IMC evaluation study and her critical input on this publication. The IMC study was approved by the University of Wisconsin-Madison Health Sciences Institutional Review Board and funded by an educational grant from Wyeth Pharmaceuticals.

Biographies

Marianna B. Shershneva, MD, PhD, is an Assistant Scientist working with the University of Wisconsin Office of Continuing Professional Development in Medicine and Public Health. Her research and publications advance understanding of how physicians learn and change and explore innovative continuing medical education programs.

Min-fen Wang, PhD, is an Assistant Scientist in the Centers for Public Health Education and Outreach, University of Minnesota School of Public Health. Her current research interests are methods of assessing higher levels of educational outcomes and contextual influences on educational effectiveness.

Gary C. Lindeman, MS, PhD, is an educator and researcher, currently retired, who last worked as an Assistant Researcher with the University of Wisconsin Office of Continuing Professional Development in Medicine and Public Health.

Julia N. Savoy, MS, is a Project Assistant at the Wisconsin Center for Education Research and a doctoral student in the Department of Educational Leadership and Policy Analysis, University of Wisconsin-Madison.

Curtis A. Olson, PhD, is an Assistant Professor in the Department of Medicine at the University of Wisconsin-Madison and Head of the Research and Development Unit in the Office of Continuing Professional Development in Medicine and Public Health. His current research focuses on how teams of health care professionals use different types of knowledge and information to make changes in their clinical practice.

References

- Bloom BS. Effects of continuing medical education on improving physician clinical care and patient health: a review of systematic reviews. International Journal of Technology Assessment in Health Care. 2005;21:380–385. doi: 10.1017/s026646230505049x.

- Committee on Quality of Healthcare in America, Institute of Medicine. Crossing the quality chasm: A new health system for the 21st century. National Academy Press; Washington, DC: 2001.

- Curry L, Purkis I. Validity of self-reports of behavior changes by participants after a CME course. Journal of Medical Education. 1986;61:579–586. doi: 10.1097/00001888-198607000-00005.

- Davis D, Barnes BE, Fox R, editors. The continuing professional development of physicians: From research to practice. American Medical Association; Chicago: 2003.

- Davis N, Davis D, Bloch R. Continuing medical education: AMEE Education Guide no. 35. Medical Teacher. 2008;30:652–666. doi: 10.1080/01421590802108323.

- Davis DA, Mazmanian PE, Fordis M, Van Harrison R, Thorpe KE, Perrier L. Accuracy of physician self-assessment compared with observed measures of competence: A systematic review. JAMA. 2006;296:1094–1102. doi: 10.1001/jama.296.9.1094.

- Dolcourt J. Commitment to change: A strategy for promoting educational effectiveness. The Journal of Continuing Education in the Health Professions. 2000;20:156–163. doi: 10.1002/chp.1340200304.

- Dolcourt J, Zuckerman G. Unanticipated learning outcomes associated with commitment to change in continuing medical education. The Journal of Continuing Education in the Health Professions. 2003;23:173–177. doi: 10.1002/chp.1340230309.

- Eckstrom E, Desai SS, Hunter AJ, Allen E, Tanner CE, Lucas LM, Joseph CL, Ririe MR, Doak MN, Humphrey LL, Bowen JL. Aiming to improve care of older adults: An innovative faculty development workshop. Journal of General Internal Medicine. 2008;23(7):1053–1056. doi: 10.1007/s11606-008-0593-1.

- Green ML, Gross CP, Kernan WN, Wong JG, Holmboe ES. Integrating teaching skills and clinical content in a faculty development workshop. Journal of General Internal Medicine. 2003;18:468–474. doi: 10.1046/j.1525-1497.2003.20933.x.

- Habermann R, Butts N, Powers J, Pichert JW. Physician behavior changes following CME on the prospective payment system in long-term care: a pilot study. Journal of the American Medical Directors Association. 2002;3(1):12–15.

- Jones D. Viability of commitment to change evaluation strategy in continuing medical education. Academic Medicine. 1990;65:S37–S38. doi: 10.1097/00001888-199009000-00033.

- Legido-Quigley H, McKee M, Nolte E, Glinos IA. Assuring quality of health care in the European Union: A Case for Action. World Health Organization 2008, on behalf of the European Observatory on Health Systems and Policies. MPG Books; UK: 2008.

- Locke EA, Shaw KN, Saari LM, Latham GP. Goal setting and task performance: 1969–1980. Psychological Bulletin. 1981;90:125–152.

- Lockyer J. Multisource feedback in the assessment of physician competencies. The Journal of Continuing Education in the Health Professions. 2003;23:4–12. doi: 10.1002/chp.1340230103.

- Lockyer JM, Fidler H, Hogan DB, Pereles L, Wright B, Lebeuf C, Gerritsen C. Assessing outcomes through congruence of course objectives and reflective work. The Journal of Continuing Education in the Health Professions. 2005;25:76–86. doi: 10.1002/chp.12.

- Lockyer J, Fidler H, Ward R, Basson R, Elliott S, Toews J. Commitment to change statements: A way of understanding how participants use information and skills taught in an educational session. The Journal of Continuing Education in the Health Professions. 2001;21:82–89. doi: 10.1002/chp.1340210204.

- Mansouri M, Lockyer J. A meta-analysis of continuing medical education effectiveness. Journal of Continuing Education in the Health Professions. 2007;27:6–15. doi: 10.1002/chp.88.

- Marinopoulos SS, Dorman T, Ratanawongsa N, Wilson LM, Ashar BH, Magaziner JL, Miller RG, Thomas PA, Prokopowicz GP, Qayyum R, Bass EB. Effectiveness of Continuing Medical Education. Evidence Report/Technology Assessment No. 149 (Prepared by the Johns Hopkins Evidence-based Practice Center, under Contract No. 290-02-0018.) AHRQ Publication No. 07-E006. Agency for Healthcare Research and Quality; Rockville, MD: 2007.

- Mathison S. Why triangulate? Educational Researcher. 1988;17:13–17.

- Mazmanian PE, Mazmanian PM. Commitment to change: Theoretical foundations, methods, and outcomes. The Journal of Continuing Education in the Health Professions. 1999;19:200–207.

- Mazmanian P, Johnson R, Zhang A, Boothby J, Yeatts M. Effects of a signature on rates of change: A randomized controlled trial involving continuing education and the commitment-to-change model. Academic Medicine. 2001;76(6):642–646. doi: 10.1097/00001888-200106000-00018.

- Mazmanian P, Ratcliff S, Johnson R, Davis D, Kantrowitz M. Information about barriers to planned change: A randomized controlled trial involving continuing medical education lectures and commitment to change. Academic Medicine. 1998;73(8):882–886. doi: 10.1097/00001888-199808000-00013.

- Mazmanian PE, Waugh JL, Mazmanian PM. Commitment to change: Ideational roots, empirical evidence, and ethical implications. The Journal of Continuing Education in the Health Professions. 1997;17:133–140.

- Neill RA, Bowman MA, Wilson JP. Journal article content as a predictor of commitment to change among continuing medical education respondents. The Journal of Continuing Education in the Health Professions. 2001;21(1):40–45. doi: 10.1002/chp.1340210107.

- Overton GK, MacVicar R. Requesting a commitment to change: Conditions that produce behavioral or attitudinal commitment. The Journal of Continuing Education in the Health Professions. 2008;28(2):60–66. doi: 10.1002/chp.158.

- Pereles L, Lockyer J, Hogan D, Gondocz T, Parboosingh J. Effectiveness of commitment contracts in facilitating change in continuing medical education intervention. The Journal of Continuing Education in the Health Professions. 1997;17:27–31.

- Prochaska JO, Redding CA, Evers KE. The transtheoretical model and stages of change. In: Glanz K, Rimer BK, Clark NM, editors. Health Behavior and Health Education: Theory, Research, and Practice. 3rd Ed. Jossey-Bass; San Francisco: 2002. pp. 99–120.

- Purkis I. Commitment for change: An instrument for evaluating CME courses. Journal of Medical Education. 1982;57:61–63.

- Regnier K, Kopelow M, Lane D, Alden E. Accreditation for learning and change: Quality and Improvement as the outcome. The Journal of Continuing Education in the Health Professions. 2005;25(3):174–182. doi: 10.1002/chp.26.

- Rogers EM. Diffusion of Innovations. 4th Ed. The Free Press; New York: 1995.

- Rogers EM, Kincaid DL. Communication Networks: Toward a New Paradigm for Research. The Free Press; New York: 1981.

- Schön DA. The Reflective Practitioner: How Professionals Think in Action. Basic Books; New York: 1983.

- Slotnick HB. How doctors learn: Physicians' self-directed learning episodes. Academic Medicine. 1999;74(10):1106–1117. doi: 10.1097/00001888-199910000-00014.

- Sussman S, Valente TW, Rohrbach LA, Skara S, Pentz MA. Translation in the health professions: Converting science into action. Evaluation & the Health Professions. 2006;29(1):7–32. doi: 10.1177/0163278705284441.

- Tian J, Atkinson NA, Portnoy B, Gold RS. A systematic review of evaluation in formal continuing medical education. The Journal of Continuing Education in the Health Professions. 2007;27(1):16–27. doi: 10.1002/chp.89.

- Wakefield JG. Commitment to change: Exploring its role in changing physician behavior through continuing education. The Journal of Continuing Education in the Health Professions. 2004;24(4):197–204. doi: 10.1002/chp.1340240403.

- Wakefield J, Herbert C, Maclure M, Dormuth C, Wright J, Legare J, Brett-MacLean P, Premi J. Commitment to change statements can predict actual change in practice. The Journal of Continuing Education in the Health Professions. 2003;23(2):81–91. doi: 10.1002/chp.1340230205.

- White MI, Grzybowski S, Broudo M. Commitment to change instrument enhances program planning, implementation, and evaluation. The Journal of Continuing Education in the Health Professions. 2004;24:153–162. doi: 10.1002/chp.1340240306.