Abstract

For generations the study of vocal development and its role in language has been conducted laboriously, with human transcribers and analysts coding and taking measurements from small recorded samples. Our research illustrates a method to obtain measures of early speech development through automated analysis of massive quantities of day-long audio recordings collected naturalistically in children's homes. A primary goal is to provide insights into the development of infant control over infrastructural characteristics of speech through large-scale statistical analysis of strategically selected acoustic parameters. In pursuit of this goal we have discovered that the first automated approach we implemented not only tracks children's development on acoustic parameters known to play key roles in speech, but also differentiates vocalizations of typically developing children from those of children with autism or language delay. The method is totally automated, with no human intervention, allowing efficient sampling and analysis at unprecedented scales. The work shows the potential to fundamentally enhance research in vocal development and to add a fully objective measure to the battery used to detect speech-related disorders in early childhood. Thus, automated analysis should soon be able to contribute to screening and diagnosis procedures for early disorders, and more generally, the findings suggest fundamental methods for the study of language in natural environments.

Keywords: vocal development, automated identification of language disorders, all-day recording, automated speaker labeling, autism identification

Typically developing children in natural environments acquire a linguistic system of remarkable complexity, a fact that has attracted considerable scientific attention (1–6). Yet research on vocal development and related disorders has been hampered by the requirement for human transcribers and analysts to take laborious measurements from small recorded samples (7–11). Consequently, not only has the scientific study of vocal development been limited, but the potential for research to help identify vocal disorders early in life has, with a few exceptions (12–14), been severely restricted.

We begin, then, with the question of whether it is possible to construct a fully automated system (i.e., an algorithm) that uses nothing but acoustic data from the child's “natural” linguistic environment, isolates child vocalizations, extracts significant features, and assesses vocalizations in a way that is useful for predicting level of development and detecting developmental anomalies. There are major challenges for such a procedure. First, with recordings in a naturalistic environment, isolating child vocalizations from extraneous acoustic input (e.g., parents, other children, background noise, television) is vexingly difficult. Second, even if the child's voice can be isolated, there remains the challenge of determining features predictive of linguistic development. We describe procedures permitting massive-scale recording and use the data collected to show it is possible to track linguistic development with totally automated procedures and to differentiate recordings from typically developing children and children with language-related disorders. We interpret this as a proof of concept that automated analysis can now be included in the tool repertoire for research on vocal development and should be expected soon to yield further scientific benefits with clinical implications.

Naturalistic sampling on the desired scale capitalizes on a battery-powered all-day recorder weighing 70 g that can be snapped into a chest pocket of children's clothing, from which it records in completely natural environments (SI Appendix, “Recording Device”). Recordings with this device have been collected since 2006 in three subsamples: typically developing language, language delay, and autism (Fig. 1A and SI Appendix, “Participant Groups, Recording and Assessment Procedures”). In both phase I (2006–2008) and phase II (2009) studies (SI Appendix, Table S2), parents responded to advertisements and indicated if their children had been diagnosed with autism or language delay. Children in phase I with reported diagnosis of language delay were also evaluated by a speech-language clinician used by our project. Parents of children with language delay in phase II and parents of children with autism in both phases supplied documentation from the diagnosing clinicians, who were independent of the research. Parent-based assessments obtained concurrently with recordings (SI Appendix, Table S3) confirmed sharp group differences concordant with diagnoses; the autism sample had sociopsychiatric profiles similar to those of published autism samples, and low language scores occurred in autism and language delay samples (Fig. 1B and SI Appendix, Figs. S2–S4 and “Analyses Indicating Appropriate Characteristics of the Participant Groups”).

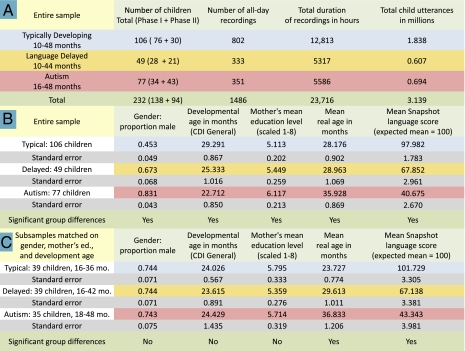

Fig. 1.

Demographics. (A) Characteristics of the child groups and recordings. (B) Demographic parameters indicating that groups differed significantly in terms of gender, mother's education level (a strong indicator of socioeconomic status), and general development age determined by the Child Development Inventory (CDI) (40), an extensive parent questionnaire assessment obtained for 86% of the children, including all those in the matched samples. This CDI age equivalent score is based on 70 items distributed across all of the subscales of the CDI, including language, motor development, and social skills. The groups also differed in age and in scores on the LENA Developmental Snapshot, a 52-item parent questionnaire measure of communication and language, obtained for all participants. (C) Demographic parameters for subsamples matched on gender, mother's education level, and developmental age.

Demographic information (Fig. 1B and SI Appendix, Tables S3 and S4) was collected through both phases: boys appeared disproportionately in the disordered groups, mother's educational level (a proxy for socioeconomic status) was higher for children with language disorders, and general developmental levels were low in the disordered groups. A total of 113 children were matched on these variables (Fig. 1C) to allow comparison with results on the entire sample.

Our dataset included 1,486 all-day recordings from 232 children, with more than 3.1 million automatically identified child utterances. The signal processing software reliably identified (SI Appendix, Tables S6 and S7) sequences of utterances from the child wearing the recorder, discarded cries and vegetative sounds, and labeled the remaining consecutive child vocalizations “speech-related child utterances” (SCUs; SI Appendix, Fig. S5). Additional analysis divided SCUs into “speech-related vocal islands” (SVIs), high-energy periods bounded by low-energy periods (SI Appendix, Figs. S6 and S7). Roughly, the energy criterion isolated salient “syllables” in SCUs. Analysis of SVIs focused on acoustic effects of rhythmic “movements” of jaw, tongue, and lips (i.e., articulation) that underlie syllabic organization, and on acoustic effects of vocal quality or “voice.” Infants show voluntary control of syllabification and voice in the first months of life and refine it throughout language acquisition (15, 16). Developmental tracking of these features by automated means at massive scales could add a major new component to language acquisition research. Given their infrastructural character, anomalies in development of rhythmic/syllabic articulation and voice might also suggest an emergent disorder (17, 18).
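The energy criterion described above (high-energy periods bounded by low-energy periods) can be illustrated with a minimal sketch. This is not the signal processing software used in the study; the frame-level energies, the fixed threshold, and the `min_frames` parameter are all hypothetical, and the actual criteria are given in the SI Appendix.

```python
from typing import List, Tuple


def find_vocal_islands(frame_energy_db: List[float],
                       threshold_db: float,
                       min_frames: int = 1) -> List[Tuple[int, int]]:
    """Return (start, end) frame indices (end exclusive) for each run of
    consecutive frames whose energy is at or above threshold_db."""
    islands = []
    start = None
    for i, energy in enumerate(frame_energy_db):
        if energy >= threshold_db:
            if start is None:
                start = i  # a high-energy run begins here
        elif start is not None:
            # A low-energy frame closes the current run.
            if i - start >= min_frames:
                islands.append((start, i))
            start = None
    # Close a run that extends to the end of the utterance.
    if start is not None and len(frame_energy_db) - start >= min_frames:
        islands.append((start, len(frame_energy_db)))
    return islands


# Hypothetical per-frame energies (dB) for one utterance.
energies = [30, 32, 55, 60, 58, 31, 29, 57, 62, 61, 59, 30]
islands = find_vocal_islands(energies, threshold_db=50, min_frames=2)
print(islands)
```

In this toy utterance the two runs of high-energy frames play the role of two salient "syllables" within one SCU.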

SVIs were analyzed on 12 infrastructural acoustic features reflecting rhythmic/syllabic articulation and voice and known to play roles in speech development (19) (SI Appendix, “Automated Acoustic Feature Analysis”). The features pertain to four conceptual groupings (SI Appendix, Table S5): (i) rhythm/syllabicity (RhSy), (ii) low spectral tilt/high pitch control (LtHp, designed to reflect certain voice characteristics), (iii) high bandwidth/low pitch control (BwLp, designed to reflect additional voice characteristics), and (iv) duration. The 12 features were assigned to the four groupings based on a combination of infrastructural vocal theory (19) and results of principal component (PC) analysis (PCA; SI Appendix, Table S10 and Fig. S12).

The algorithm determined the degree to which each SVI showed presence or absence of each of the 12 features, providing a measure of infrastructural vocal development on each feature. Each SVI was classified as plus or minus (i.e., present or absent) for each feature. The number of “plus” classifications per parameter varied from more than 2,400 per recording on the voicing (VC) parameter to fewer than 100 on the wide formant bandwidth parameter (SI Appendix, Fig. S8). To adjust for differences in length of recordings and amount of vocalization (i.e., “volubility”) across individuals and recordings, we took the ratio for each parameter of the number of SVIs classified as plus to the number of SCUs. This yielded 12 numbers, one for each parameter at each recording, reflecting per-utterance usage of the 12 features. The SVI/SCU ratio also varied widely, from more than 1.3 per utterance for VC to fewer than 0.05 for wide formant bandwidth (SI Appendix, Fig. S9). The 12-dimensional vector composed of SVI/SCU ratios normalized for age was used to predict vocal development and to classify recordings as pertaining to children with or without a language-related disorder (SI Appendix, “Statistical Analysis Summary”).
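The volubility adjustment described above amounts to dividing, for each parameter, the count of SVIs classified as plus by the number of SCUs in the recording. A minimal sketch follows; the parameter names and counts are hypothetical, only two of the 12 parameters are shown, and the subsequent age normalization is omitted.

```python
from typing import Dict


def svi_per_scu_ratios(plus_counts: Dict[str, int], n_scus: int) -> Dict[str, float]:
    """Per-utterance usage of each feature: plus-classified SVIs divided by SCUs."""
    if n_scus <= 0:
        raise ValueError("recording has no speech-related child utterances")
    return {name: count / n_scus for name, count in plus_counts.items()}


# Hypothetical counts for one recording (illustration only, not study data).
plus_counts = {"voicing": 2600, "wide_formant_bandwidth": 90}
ratios = svi_per_scu_ratios(plus_counts, n_scus=2000)
print(ratios)
```

Because a single SCU can contain several SVIs, a ratio above 1 (as for voicing here) is possible.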

The acoustic features were thus chosen as developmental indicators and as potential child group differentiators. Aberrations of voice have been noted from the first descriptions of autism spectrum disorders (ASDs) (20, 21); subsequent research has added evidence of abnormalities of prosody (10, 22–25) and articulatory features affecting rhythm/syllabicity (11, 26). Still, the standard diagnostic reference for mental/behavioral disorders (27) does not include vocal characteristics in assessment of ASD. Reasons appear to be that evidence of vocal abnormalities in autism is scattered and often vague, criteria for judgment of vocalization are not typically included in health professionals’ educational curricula, and reliability of judgment for vocal characteristics is problematic given individual variability (10, 26). Autism diagnosis is based heavily on “negative markers” such as joint attention deficit (28–31) and associated communication deficits (32–35). Abnormal vocal characteristics would constitute a positive marker that might enhance precision of screening or diagnosis (31).

Most children with language-related disorders, even without autism, also show articulation and voice anomalies (36–39). Our work explores the possibility that the automated approach may be useful in discriminating typical from language-disordered development, as well as in discriminating autism from language delay. The power of the automated approach will presumably depend on its ability to predict changes with age in typically developing children.

Results

Correlations between child age and SVI/SCU ratios for the 12 parameters showed clear evidence that the automated analysis predicts development: for the typically developing and language-delayed groups, five of the 12 correlations exceeded 0.4 in absolute value, at a high level of statistical significance (P < 10⁻¹⁰; Fig. 2A and SI Appendix, Table S9 A–D); all 12 correlations with age for the typically developing sample and seven of 12 for the language-delayed sample were statistically significant. The autistic sample, by contrast, showed little evidence of development on the parameters; all their correlations of acoustic parameters with age were below 0.2 in absolute value, and only two were statistically reliable (SI Appendix, Table S9 E and F). The typically developing group showed reliable negative correlations (P < 10⁻⁴) on three parameters for which the other groups showed positive correlations, illustrating that certain vocal tendencies diminished with age in the typically developing group but either did not diminish or increased with age in the others. Correlations of the 12 parameters with each other also revealed coherency within the four parameter groupings for all three child groups, but the autistic sample showed many more correlations not predicted by the four parameter groupings than the other child groups (SI Appendix, Table S9 A–F), a result suggesting children with autism organize acoustic infrastructure for vocalization differently from typically developing children or children with language delay.
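The age correlations reported above are correlations between one parameter's SVI/SCU ratio and child age across recordings. The sketch below computes a Pearson coefficient for hypothetical data and the per-test threshold implied by a Bonferroni correction over 12 parameters. The familywise alpha of 0.05 is an assumption, chosen because it agrees with the P < 0.004 criterion in the Fig. 2 caption.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical recordings: child age in months and one parameter's SVI/SCU ratio.
ages_months = [10, 16, 22, 28, 34, 40]
ratio = [0.20, 0.35, 0.30, 0.50, 0.55, 0.70]
r = pearson_r(ages_months, ratio)

# Bonferroni-corrected per-test threshold for 12 parameters (alpha assumed 0.05).
bonferroni_alpha = 0.05 / 12
print(round(r, 3), round(bonferroni_alpha, 4))
```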

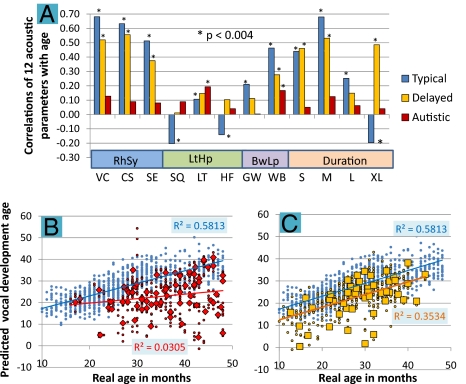

Fig. 2.

Results of correlational analysis and MLR analysis. (A) Correlations of acoustic parameter SVI/SCU ratio scores with age across the 1,486 recordings. In 10 of 12 cases, both typically developing and language-delayed children showed higher absolute values of correlations with age than children with autism (SI Appendix, Table S9 A–F). All 12 correlations for the typically developing sample and seven of 12 for the language-delayed sample were statistically significant after Bonferroni correction (P < 0.004). The autistic sample, by contrast, showed little evidence of development on the parameters; all correlations of acoustic parameters with age were below 0.2 in absolute value. (B) MLR for typically developing and autism samples. Blue dots represent real and predicted age (i.e., “predicted vocal development age”) for 802 recordings of typically developing children based on SVI/SCU ratios for the 12 acoustic parameters (r = 0.762, R² = 0.581). Red dots represent 351 recordings of children with autism, for which predicted vocal development ages (r = 0.175, R² = 0.031) were determined based on the typically developing MLR model. Each red diamond represents the mean predicted vocal development level across recordings for one of the 77 children with autism. (C) MLR for the typically developing (blue) and language-delayed samples (333 recordings; gold squares for 49 individual child averages; r = 0.594, R² = 0.353).

Multiple linear regression (MLR) also illustrated development and group differentiation. The 12 acoustic parameter ratios (SVIs/SCUs) for each recording were regressed against age for the typically developing sample, yielding a normative model for development of vocalization. Coefficients of the model were used to calculate developmental ages for autistic and language-delayed recordings, displayed along with data for the typically developing recordings in Fig. 2 B and C. The MLR model for the 802 recordings from the typically developing sample accounted for greater than 58% of variance in predicted age. MLR for the 106 typically developing children yielded yet higher prediction, accounting for more than 69% of variance, and suggesting that the automated analysis can provide a strong indicator of vocal development within this age range.
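The normative-model procedure described above (fit on the typically developing sample, then apply the coefficients to recordings from other groups) can be sketched with ordinary least squares. This is an illustration under stated assumptions, not the study's code: it uses two hypothetical ratio features instead of 12, made-up ages, and a small hand-written solver for the normal equations so that the example has no external dependencies.

```python
from typing import List, Sequence


def solve_linear_system(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system; predictors may be collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_mlr(features: Sequence[Sequence[float]], ages: Sequence[float]) -> List[float]:
    """Least-squares coefficients [intercept, b1, ..., bk] via the normal equations."""
    rows = [[1.0] + list(f) for f in features]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, ages)) for i in range(k)]
    return solve_linear_system(xtx, xty)


def predict_age(coefs: Sequence[float], feature_row: Sequence[float]) -> float:
    """Predicted vocal development age for one recording."""
    return coefs[0] + sum(c * v for c, v in zip(coefs[1:], feature_row))


def r_squared(coefs, features, ages) -> float:
    """Proportion of variance in age accounted for by the fitted model."""
    mean_age = sum(ages) / len(ages)
    ss_res = sum((y - predict_age(coefs, f)) ** 2 for f, y in zip(features, ages))
    ss_tot = sum((y - mean_age) ** 2 for y in ages)
    return 1.0 - ss_res / ss_tot


# Hypothetical typically developing recordings (two ratio features, age in months).
td_features = [[0.2, 0.9], [0.4, 0.8], [0.5, 0.5], [0.7, 0.6], [0.9, 0.2], [1.0, 0.3]]
td_ages = [12.0, 18.0, 24.0, 30.0, 40.0, 42.0]
coefs = fit_mlr(td_features, td_ages)
td_r2 = r_squared(coefs, td_features, td_ages)

# Apply the normative coefficients to a hypothetical recording from another group.
other_group_predicted_age = predict_age(coefs, [0.45, 0.7])
```

A recording whose predicted age falls well below the child's real age would correspond to a point below the typically developing trend in Fig. 2 B and C.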

The correlation of predicted vocal developmental age with real age based on MLR was much higher for the typically developing and language-delayed samples (Fig. 2C) than for the autism sample (Fig. 2B), in which the predicted levels were also generally considerably below those of the typically developing sample. The acoustic parameters within the four theoretical groupings were also regressed against age (SI Appendix, Fig. S11 A–D), with results suggesting strong differentiation of the typically developing and autistic samples for three of the four groupings (RhSy, LtHp, and duration). The low correlations with age for the autism sample in these MLR analyses based on the typically developing model do not imply that the autism sample showed no change with regard to the acoustic parameters across age. On the contrary, an independent MLR model for the autism sample alone using all 12 acoustic parameters as predictors accounted for more than 16% of variance (P < 0.001), indicating that the autism group did develop with age on the parameters. However, the nature of the change with regard to the parameters across age was clearly different in the two groups, as indicated by the MLR results.

Linear discriminant analysis (LDA) and linear logistic regression were used to model classification of children into the three groups based on the acoustic parameters. Here the ratio scores (SVIs/SCUs) for each of the 12 parameters were first standardized by calculating z-scores for each recording in monthly age intervals. Leave-one-out cross-validation (LOOCV) was the main holdout method to verify generalization of results to recordings not in the training samples (SI Appendix, “Statistical Analysis Summary”). Six pairwise comparisons (SI Appendix, Fig. S1), each based on 1,500 randomized splits into training and testing (i.e., holdout) samples of varying sizes, confirmed that LOOCV results were consistently representative of midrange randomly selected holdout results, and thus provide a useful standard for the data reported in the subsequent paragraphs. LOOCV results for LDA and linear logistic regression were nearly identical (SI Appendix, Table S1); the remainder of the present report thus focuses on LDA only.
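Leave-one-out cross-validation with LDA can be sketched for the simplest case: two groups and a single standardized feature. The study used 12 parameters, and its actual implementation is described in the SI Appendix; the scores, labels, and the choice of class-frequency priors below are all assumptions made for illustration.

```python
import math
from typing import List, Sequence


def lda_posterior_1d(train_x: Sequence[float], train_y: Sequence[int], x_new: float) -> float:
    """Posterior probability of class 1 under two-class LDA with one feature:
    Gaussian classes, a shared (pooled) variance, priors from class frequencies."""
    g0 = [x for x, y in zip(train_x, train_y) if y == 0]
    g1 = [x for x, y in zip(train_x, train_y) if y == 1]
    mu0 = sum(g0) / len(g0)
    mu1 = sum(g1) / len(g1)
    ss_within = sum((x - mu0) ** 2 for x in g0) + sum((x - mu1) ** 2 for x in g1)
    pooled_var = ss_within / (len(g0) + len(g1) - 2)
    # Log posterior odds of class 1 versus class 0 (linear in x_new).
    log_odds = ((mu1 - mu0) / pooled_var) * (x_new - (mu0 + mu1) / 2)
    log_odds += math.log(len(g1) / len(g0))
    return 1.0 / (1.0 + math.exp(-log_odds))


def loocv_posteriors(xs: List[float], ys: List[int]) -> List[float]:
    """Hold out each observation in turn, train on the rest, score the held-out one."""
    posteriors = []
    for i in range(len(xs)):
        train_x = xs[:i] + xs[i + 1:]
        train_y = ys[:i] + ys[i + 1:]
        posteriors.append(lda_posterior_1d(train_x, train_y, xs[i]))
    return posteriors


# Hypothetical age-standardized scores; 0 = typically developing, 1 = autism.
scores = [-1.2, -0.8, -0.5, -0.1, 0.3, 0.4, 0.9, 1.1, 1.6, 2.0]
labels = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
posteriors = loocv_posteriors(scores, labels)
predicted = [1 if p >= 0.5 else 0 for p in posteriors]
accuracy = sum(p == y for p, y in zip(predicted, labels)) / len(labels)
```

Because each posterior comes from a model that never saw the held-out observation, the resulting accuracy estimates generalization rather than fit to the training data.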

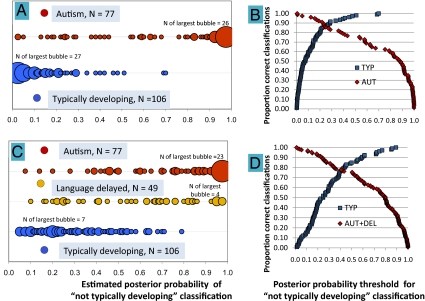

The results indicated strong correct identification of autism versus typical development (χ², P < 10⁻²¹), with sensitivity (i.e., hit rate) of 0.75 and specificity (i.e., correct rejection rate) of 0.98, based on a threshold (i.e., cutoff) posterior classification probability of 0.52 (Fig. 3A). At equal-error probability, sensitivity and specificity were 0.86 (crossover point, Fig. 3B). LDA also differentiated the typically developing sample from a combined autism and language-delay sample. At a cutoff of 0.56, specificity (i.e., correct identification of typically developing children) was 0.90. At the same cutoff, 80% of the autism sample and 62% of the language-delay sample were correctly classified as not being in the typically developing group (Fig. 3C). Sensitivity and specificity at equal-error probability for classification of children as being or not being typically developing were 0.79 (Fig. 3D). Fig. 4A supplies LDA data on all six possible binary classificatory comparisons of the three child groups and illustrates strongest discrimination of the autism versus typically developing samples (P < 10⁻²¹). For the entire samples (left column), both typically developing and autism groups were also very reliably (P < 10⁻⁵) differentiated from the language-delayed group, although sensitivity/specificity was lower (0.73 and 0.70) than in the differentiation of the typically developing from autism groups or in differentiation of the typically developing from the combined language disordered groups.
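Sensitivity and specificity at a given cutoff, and the equal-error crossover point, can be computed from posterior probabilities as in the following sketch. The posterior probabilities and labels are hypothetical; the grid search over cutoffs is one simple way to locate the crossover and is an assumption, not the study's documented procedure.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(posteriors: Sequence[float], labels: Sequence[int],
                            cutoff: float) -> Tuple[float, float]:
    """labels: 1 = disorder group, 0 = typically developing.
    A child is classified positive when the posterior is >= cutoff."""
    tp = sum(1 for p, y in zip(posteriors, labels) if y == 1 and p >= cutoff)
    fn = sum(1 for p, y in zip(posteriors, labels) if y == 1 and p < cutoff)
    tn = sum(1 for p, y in zip(posteriors, labels) if y == 0 and p < cutoff)
    fp = sum(1 for p, y in zip(posteriors, labels) if y == 0 and p >= cutoff)
    return tp / (tp + fn), tn / (tn + fp)


def equal_error_cutoff(posteriors: Sequence[float], labels: Sequence[int],
                       steps: int = 100) -> Tuple[float, float, float]:
    """Scan a grid of cutoffs and return (cutoff, sensitivity, specificity)
    for the first cutoff at which the two rates are closest."""
    best = None
    for k in range(1, steps):
        cutoff = k / steps
        sens, spec = sensitivity_specificity(posteriors, labels, cutoff)
        gap = abs(sens - spec)
        if best is None or gap < best[0]:
            best = (gap, cutoff, sens, spec)
    return best[1], best[2], best[3]


# Hypothetical posterior probabilities of autism classification.
pps = [0.05, 0.10, 0.20, 0.30, 0.60, 0.40, 0.70, 0.80, 0.90, 0.95]
labels = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
sens, spec = sensitivity_specificity(pps, labels, cutoff=0.52)
eer_cutoff, eer_sens, eer_spec = equal_error_cutoff(pps, labels)
```

Raising the cutoff trades sensitivity for specificity, which is why a single cutoff (such as 0.52) and the equal-error point can yield different pairs of rates.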

Fig. 3.

LDA with LOOCV indicating differentiation of child groups. Estimated classification probabilities were based on the 12 acoustic parameters. Results are displayed in bubble plots, with each bubble sized in proportion to the number of children at each x axis location (the number of children represented by the largest bubble in each line is labeled). The plots indicate classification probabilities (Left) and proportion-correct classification (Right) for LDA in (A and B) children with autism (red) versus typically developing children (blue) and (C and D) a combined group of children with autism (red) or language delay (gold) versus typically developing children (blue).

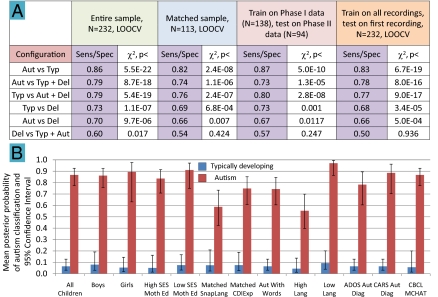

Fig. 4.

LDA showing group discrimination for various configurations and subsamples. (A) LDA data on the six binary configurations of the three groups for the entire sample (N = 232; LOOCV); subsamples matched on gender, mother's education level, and developmental level (n = 113; LOOCV); a different holdout method in which, instead of LOOCV, training was conducted on phase I (2006–2008) data (n = 138) and testing on phase II (2009) data (n = 94); and a testing sample based for each child on the first recording only (N = 232; LOOCV). (B) Bar graph comparisons for subsamples illustrating robustness of group differentiation in the autism versus typical development configuration only (based on LOOCV modeling). Means were calculated over logit-transformed posterior probabilities (PPs) of autism classification, then converted back to PPs. All comparisons showed robust group differentiation, including (from left to right) the entire sample (N = 232), boys (typical, n = 48; autism, n = 64), girls (typical, n = 58; autism, n = 13), children of higher socioeconomic status (SES) as indicated by mother's education level ≥6 on an eight-point scale (typical, n = 42; autism, n = 49) and lower SES (typical, n = 64; autism, n = 28). To assess the possibility that “language level” may have played a critical role in automated group differentiation, we compared 35 child pairs matched for developmental age (typical development group, mean age of 22.6 mo; autism group, mean age of 22.7 mo) on the Snapshot, a language/communication measure (SI Appendix, “Participant Groups and Recording Procedures”), and 46 child pairs matched on the raw score from a single subscale of the CDI (40), namely, the expressive language subscale (typical development group, mean score of 21.6; autism group, mean score of 21.5), and we found robust group differentiation on PPs. 
Similar results were obtained for 48 children in the autism sample whose parents reported they were using spoken words meaningfully and for typical and autism samples split at medians into subgroups of high or low language (High Lang, Low Lang) level on the Snapshot developmental quotient. A subsample of 29 children with autism for whom diagnosis had been based on the Autism Diagnostic Observation Schedule (ADOS) (41) and another of 24 children with autism diagnosed with the Childhood Autism Rating Scale (CARS) (42) also showed robust group discrimination for PPs. Finally, in phase II (typical, n = 30; autism, n = 77) administration of both the Child Behavior CheckList (CBCL) (43) and the Modified Checklist for Autism in Toddlers (MCHAT) (44) had supported group assignment based on diagnoses, and group differentiation of PPs for these children using the automated system was unambiguous.

Fig. 4A also reveals only slightly lower levels of group differentiation for modeling with LOOCV on the matched sample of 113 children. Holdout models (i.e., not based on LOOCV) with training on phase I data and testing on phase II data revealed similar results. Models based on LOOCV training for all recordings were also used to determine sensitivity and specificity rates on only the first recording available for each child, again with similar results seen in Fig. 4A. The first-recording analysis suggests the automated approach can discriminate groups quite reliably with a single all-day recording for each child.

Fig. 4B compares mean posterior probabilities of autism classification (LOOCV models) in subsamples for one configuration only: typically developing versus autism. Group differentiation was robust, applying to both genders and to higher and lower socioeconomic status (indicated by mother's reported education level). Further, differentiation was strong for subgroups matched on language development and expressive language measures, for the autism subgroup reported to be speaking in words, as well as for subgroups with high and low within-group language scores. Additional comparisons in Fig. 4B illustrate that subgroups diagnosed with autism through widely used tests documented in clinical reports supplied by parents (SI Appendix, Table S3) were differentiated sharply from the typically developing sample by the automated analysis. All comparisons of typically developing and autistic samples in Fig. 4B were highly statistically reliable (P < 10⁻¹¹). Additional evaluations of posterior probabilities for individuals and subgroups of special interest in the autism and language-delay samples further illustrated the robustness of the automated analysis in group differentiation (SI Appendix, “Results: Individual Children and Subgroups of Particular Interest in the Group Discrimination Analyses”; and SI Appendix, Figs. S14 and S15). Very low correlations of age with posterior probabilities (r = −0.07 and r = 0.11 for autism and typical samples, respectively) suggest group differentiation by this automated method was not importantly dependent on age within these samples (SI Appendix, Fig. S16).
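The Fig. 4 caption states that mean posterior probabilities were calculated over logit-transformed values and then converted back. A minimal sketch of that averaging follows; the input probabilities are hypothetical, and the function assumes every value lies strictly between 0 and 1 (the logit is undefined at the endpoints).

```python
import math
from typing import Sequence


def logit(p: float) -> float:
    """Log odds of a probability strictly between 0 and 1."""
    return math.log(p / (1.0 - p))


def inverse_logit(z: float) -> float:
    """Map log odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def mean_pp_via_logit(pps: Sequence[float]) -> float:
    """Average posterior probabilities on the logit scale, then back-transform."""
    z_values = [logit(p) for p in pps]
    return inverse_logit(sum(z_values) / len(z_values))


# Hypothetical posterior probabilities for one subgroup.
subgroup_pps = [0.99, 0.90, 0.50]
logit_mean = mean_pp_via_logit(subgroup_pps)
arithmetic_mean = sum(subgroup_pps) / len(subgroup_pps)
print(round(logit_mean, 3), round(arithmetic_mean, 3))
```

Averaging on the logit scale keeps values near 0 or 1 from being compressed, so the back-transformed mean can differ noticeably from the arithmetic mean of the probabilities.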

Long-term success of automated vocal analysis may depend on the “transparency” of modeling, the extent to which results are interpretable through vocal development theory. The present work was based on such a theory (SI Appendix, “The 12 Acoustic Parameters” and “Research in Development of Vocal Acoustic Characteristics”), and thus provides a beginning for explaining why the model worked in, for example, predicting age. In pursuit of such explanation we further evaluated correlations among SVI/SCU ratios for the 12 acoustic parameters. To alleviate confounding effects of intercorrelations among the parameters, it was useful to project the data along orthogonal principal components (PCs). The PCA (SI Appendix, Table S10 and Fig. S12 A–C) yielded one dominant PC in the typically developing sample (analysis conducted on all 802 recordings without differentiation of the recordings within child), accounting for 40% of variance, more than double that of any other PC. The dominant PC for the typically developing group was highly correlated with all of the a priori RhSy (rhythm/syllabicity) parameters: (i) canonical transitions (r = 0.88), reflecting for each recording the extent to which “syllables” (i.e., SVIs) had rapid formant transitions required in mature syllables, (ii) voicing (VC; r = 0.78), indicating whether SVIs had periodic vocal energy required for mature syllables, and (iii) spectral entropy (r = 0.71), indicating whether variations in vocal quality of SVIs were typical of variations occurring in mature speech. The dominant PC was also highly correlated with a parameter from the a priori duration grouping: (iv) medium duration (r = 0.91), reflecting SVI durations in the midrange for mature speech syllables.

When the four PCs of the typically developing sample were regressed against age at the child level, this dominant PC played the central role in age prediction (R² = 0.55, P < 10⁻¹⁹). The β-coefficient (0.73) for the dominant PC was more than three times that of any other PC, suggesting that the typically developing children's control of the infrastructure for syllabification provided the primary basis for modeling of vocal development age (SI Appendix, “Principal Components Analysis Indicating Empirical and Theoretical Organization of the Parameters”).
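The idea of a dominant PC and the share of variance it accounts for can be illustrated with a small sketch. It makes several assumptions that are not confirmed by the text: the PCA is taken over the correlation matrix of the parameters, the dominant component is found by power iteration (a simple stand-in for a full eigendecomposition), and the data are hypothetical with three features instead of 12.

```python
import math
from typing import List, Sequence, Tuple


def correlation_matrix(rows: Sequence[Sequence[float]]) -> List[List[float]]:
    """Pearson correlation matrix of the columns of rows."""
    n = len(rows)
    k = len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(k)]
    sds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / (n - 1))
           for j in range(k)]
    z = [[(r[j] - means[j]) / sds[j] for j in range(k)] for r in rows]
    return [[sum(zr[i] * zr[j] for zr in z) / (n - 1) for j in range(k)]
            for i in range(k)]


def dominant_component(corr: List[List[float]],
                       iterations: int = 200) -> Tuple[List[float], float]:
    """Power iteration: returns the leading eigenvector (loadings direction) and
    the proportion of total variance it accounts for."""
    k = len(corr)
    v = [1.0 / math.sqrt(k)] * k  # assumes this start is not orthogonal to the answer
    for _ in range(iterations):
        w = [sum(corr[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    eigenvalue = sum(v[i] * sum(corr[i][j] * v[j] for j in range(k))
                     for i in range(k))
    # The trace of a correlation matrix equals the number of variables.
    return v, eigenvalue / k


# Hypothetical data: features 1 and 2 move together, feature 3 is unrelated.
data = [[1.0, 2.0, 1.0], [2.0, 4.0, -1.0], [3.0, 6.0, -1.0], [4.0, 8.0, 1.0]]
loadings, variance_share = dominant_component(correlation_matrix(data))
print(round(variance_share, 3))
```

In this toy case the two correlated features load together on the dominant component, loosely analogous to the way the RhSy parameters and medium duration load on the dominant PC in the typically developing sample.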

PCA at the child level for the autism sample showed a considerably different structure of relations between acoustic parameters and PCs, and importantly, the first PC for the autism sample showed low correlations with the a priori RhSy acoustic parameters (SI Appendix, Table S10 and Fig. S12). When the four PCs for the autism sample were regressed against age, the PC with high correlations on the a priori RhSy parameters (PC2 in this case) yielded a β of 0.16, less than one fourth of that occurring for the typically developing sample. Prediction of age by this autism PC was not statistically significant. Thus, in the typically developing sample, the PC most associated with control of the RhSy parameters was highly predictive of age, but in the autism sample, no such predictiveness was found.

To quantify the role of RhSy parameters in group differentiation, the PCA model for the first four PCs in the typically developing sample was applied to child-level data from all three groups and then converted to standard scores based on the mean and SD for the typically developing group. Analysis of the mean standard scores (SI Appendix, Fig. S13) showed that, indeed, the PC associated with RhSy accounted for more than 3.5 times as much variance in group differentiation (adjusted R² = 0.21) as any of the other PCs, suggesting that children's control of the infrastructure for syllabification may have played the predominant role in group differentiation.

Discussion

The purpose of our work is fundamentally scientific: to develop tools for large-scale evaluation of vocal development and for research on foundations for language. The outcomes indicate that the time for basic research through automated vocal analysis of massive recording samples is already upon us. A typically developing child's age can be predicted at high levels (accounting for more than two thirds of variance) based on this first attempt at totally automated acoustic modeling with all-day recordings. The automated procedure has proven sufficiently transparent to offer suggestions on how the developmental age prediction worked—the primary factor appears to have been the child's command of the infrastructure for syllabification, a finding that should help to guide subsequent inquiries. From a practical standpoint, the present work illustrates the possibility of adding a convenient and fully objective evaluation of acoustic parameters to the battery of tests that is used with increasing success to identify children with language delay and autism. It appears that childhood control of the infrastructural features of syllabification played the central role in group differentiation, just as it played the central role in tracking development. The automated method showed higher accuracy in differentiating children with and without a language disorder than in differentiating the two language disorder groups (autism and language delay) from each other. Future work will be directed to evaluation of additional vocal factors that may differentiate subgroups of language disorder more effectively.

Based on the results reported here, there appears to be little reason for doubt that totally automated analysis of well selected acoustic features from naturalistic recordings can provide a monitoring system for developmental patterns in vocalization as well as significant differentiation of children with and without disorders such as autism or language delay. We are optimistic that the procedure can be improved, as this is our first attempt at automated infrastructural modeling, with all parameters designed and implemented in advance of any analysis of recordings. To date there have still been no adjustments made in the theoretically proposed parameters. Additional modeling (e.g., hierarchical, age-specific, nonlinear) can be invoked, modifications can be explored in acoustic features, and larger samples, especially from the entire spectrum of ASDs and other language-related disorders, can help tune the procedures. The future of research on vocal development will profit from the combination of traditional approaches using laboratory-based exhaustive analysis of small samples of vocalization with the enormous power that is now clearly possible to command through automated analysis of naturalistic recordings.

Materials and Methods

The following details of methods are provided in SI Appendix, SI Materials and Methods: (i) statistical analysis summary, (ii) recording device, (iii) participant groups and recording and assessment procedures, (iv) automated analysis algorithms, (v) the 12 acoustic parameters, and (vi) reliability of the automated analysis. The statistical analysis summary provides details regarding methods of prediction of development using MLR, as well as differentiation of child groups using LDA and logistic regression. An empirical illustration of reasons for using LOOCV in LDA and logistic regression analyses is also given. The recording procedures used in the present work as well as recruitment procedures for children in the three groups are explained along with selection criteria. Additional data are supplied on language and social characteristics of the participating groups. Automated analysis algorithms are described along with reliability data. All procedures were approved by the Essex Institutional Review Board.

Acknowledgments

Research by D.K.O. for this paper was funded by an endowment from the Plough Foundation, which supports his Chair of Excellence at The University of Memphis.

Footnotes

Conflict of interest statement: The recordings and hardware/software development were funded by Terrance and Judi Paul, owners of the previous for-profit company Infoture. Dissolution of the company was announced February 10, 2009, and it was reconstituted as the not-for-profit LENA Foundation. All assets of Infoture were given to the LENA Foundation. Before dissolution of the company, D.K.O., P.N., and S.F.W. had received consultation fees for their roles on the Scientific Advisory Board of Infoture. J.A.R., J.G., and D.X. are current employees of the LENA Foundation. S.G. and U.Y. are affiliates and previous employees of Infoture/LENA Foundation. None of the authors has or has had any ownership in Infoture or the LENA Foundation.

*This Direct Submission article had a prearranged editor.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1003882107/-/DCSupplemental.

References

- 1. de Villiers JG, de Villiers PA. Competence and performance in child language: Are children really competent to judge? J Child Lang. 1974;1:11–22.

- 2. Slobin D. Cognitive prerequisites for the development of grammar. In: Ferguson CA, Slobin DI, editors. Studies in Child Language Development. New York: Holt, Rinehart & Winston; 1973. pp. 175–208.

- 3. Bloom L. Language Development. Cambridge, MA: MIT Press; 1970.

- 4. Brown R. A First Language. London: Academic Press; 1973.

- 5. Pinker S. The Language Instinct. New York: Harper Perennial; 1994.

- 6. Cutler A, Klein W, Levinson SC. The cornerstones of twenty-first century psycholinguistics. In: Cutler A, editor. Twenty-First Century Psycholinguistics: Four Cornerstones. Mahwah, NJ: Erlbaum; 2005. pp. 1–20.

- 7. Locke JL. Phonological Acquisition and Change. New York: Academic Press; 1983.

- 8. Oller DK. The emergence of the sounds of speech in infancy. In: Yeni-Komshian G, Kavanagh J, Ferguson C, editors. Child Phonology, Vol 1: Production. New York: Academic Press; 1980. pp. 93–112.

- 9. Stark RE, Rose SN, McLagen M. Features of infant sounds: The first eight weeks of life. J Child Lang. 1975;2:205–221.

- 10. Sheinkopf SJ, Mundy P, Oller DK, Steffens M. Vocal atypicalities of preverbal autistic children. J Autism Dev Disord. 2000;30:345–354. doi: 10.1023/a:1005531501155.

- 11. Wetherby AM, et al. Early indicators of autism spectrum disorders in the second year of life. J Autism Dev Disord. 2004;34:473–493. doi: 10.1007/s10803-004-2544-y.

- 12. Oller DK, Eilers RE. The role of audition in infant babbling. Child Dev. 1988;59:441–449.

- 13. Eilers RE, Oller DK. Infant vocalizations and the early diagnosis of severe hearing impairment. J Pediatr. 1994;124:199–203. doi: 10.1016/s0022-3476(94)70303-5.

- 14. Masataka N. Why early linguistic milestones are delayed in children with Williams syndrome: Late onset of hand banging as a possible rate-limiting constraint on the emergence of canonical babbling. Dev Sci. 2001;4:158–164.

- 15. Oller DK, Griebel U. The origins of syllabification in human infancy and in human evolution. In: Davis B, Zajdo K, editors. Syllable Development: The Frame/Content Theory and Beyond. Mahwah, NJ: Lawrence Erlbaum and Associates; 2008. pp. 368–386.

- 16. Vihman MM. Phonological Development: The Origins of Language in the Child. Cambridge, MA: Blackwell Publishers; 1996.

- 17. Stark RE, Ansel BM, Bond J. Are prelinguistic abilities predictive of learning disability? A follow-up study. In: Masland RL, Masland M, editors. Preschool Prevention of Reading Failure. Parkton, MD: York Press; 1988.

- 18. Stoel-Gammon C. Prespeech and early speech development of two late talkers. First Lang. 1989;9:207–223.

- 19. Oller DK. The Emergence of the Speech Capacity. Mahwah, NJ: Lawrence Erlbaum Associates; 2000.

- 20. Kanner L. Autistic disturbances of affective contact. Nerv Child. 1943;2:217–250.

- 21. Asperger H. Autistic “psychopathy” in childhood. In: Frith U, editor. Autism and Asperger Syndrome. Cambridge, UK: Cambridge University Press; 1991. pp. 37–90.

- 22. Paul R, Augustyn A, Klin A, Volkmar FR. Perception and production of prosody by speakers with autism spectrum disorders. J Autism Dev Disord. 2005;35:205–220. doi: 10.1007/s10803-004-1999-1.

- 23. Pronovost W, Wakstein MP, Wakstein DJ. A longitudinal study of the speech behavior and language comprehension of fourteen children diagnosed atypical or autistic. Except Child. 1966;33:19–26. doi: 10.1177/001440296603300104.

- 24. McCann J, Peppé S. Prosody in autism spectrum disorders: a critical review. Int J Lang Commun Disord. 2003;38:325–350. doi: 10.1080/1368282031000154204.

- 25. Peppé S, McCann J, Gibbon F, O'Hare A, Rutherford M. Receptive and expressive prosodic ability in children with high-functioning autism. J Speech Lang Hear Res. 2007;50:1015–1028. doi: 10.1044/1092-4388(2007/071).

- 26. Shriberg LD, et al. Speech and prosody characteristics of adolescents and adults with high-functioning autism and Asperger syndrome. J Speech Lang Hear Res. 2001;44:1097–1115. doi: 10.1044/1092-4388(2001/087).

- 27. American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, DSM-IV-TR. Arlington, VA: American Psychiatric Association; 2000.

- 28. Baron-Cohen S, Allen J, Gillberg C. Can autism be detected at 18 months? The needle, the haystack, and the CHAT. Br J Psychiatry. 1992;161:839–843. doi: 10.1192/bjp.161.6.839.

- 29. Baron-Cohen S. Theory of mind and autism: a fifteen year review. In: Baron-Cohen S, Tager-Flusberg H, Cohen DJ, editors. Understanding Other Minds: Perspectives from Developmental Cognitive Neuroscience. Oxford: Oxford University Press; 2000. pp. 3–20.

- 30. Loveland KA, Landry SH. Joint attention and language in autism and developmental language delay. J Autism Dev Disord. 1986;16:335–349. doi: 10.1007/BF01531663.

- 31. Mundy P, Kasari C, Sigman M. Nonverbal communication, affective sharing, and intersubjectivity. Infant Behav Dev. 1992;15:377–381.

- 32. Mundy P, Sigman M, Kasari C. A longitudinal study of joint attention and language development in autistic children. J Autism Dev Disord. 1990;20:115–128. doi: 10.1007/BF02206861.

- 33. Rutter M. Diagnosis and definition of childhood autism. J Autism Child Schizophr. 1978;8:139–161. doi: 10.1007/BF01537863.

- 34. Tager-Flusberg H. A psycholinguistic perspective on language development in the autistic child. In: Dawson G, editor. Autism: Nature, Diagnosis, and Treatment. New York: Guilford; 1989. pp. 92–115.

- 35. Tager-Flusberg H. Language and understanding minds: connections in autism. In: Baron-Cohen S, Tager-Flusberg H, Cohen DJ, editors. Understanding Other Minds: Perspectives from Developmental Cognitive Neuroscience. Oxford: Oxford University Press; 2000. pp. 124–149.

- 36. Tyler AA, Sandoval KT. Preschoolers With Phonological and Language Disorders: Treating Different Linguistic Domains. Lang Speech Hear Serv Schools. 1994;25:215–234.

- 37. Conti-Ramsden G, Botting N. Classification of children with specific language impairment: Longitudinal considerations. J Speech Lang Hear Res. 1999;42:1195–1204. doi: 10.1044/jslhr.4205.1195.

- 38. Shriberg LD, Aram DM, Kwiatkowski J. Developmental apraxia of speech: I. Descriptive and theoretical perspectives. J Speech Lang Hear Res. 1997;40:273–285. doi: 10.1044/jslhr.4002.273.

- 39. Marshall CR, Harcourt-Brown S, Ramus F, van der Lely HK. The link between prosody and language skills in children with specific language impairment (SLI) and/or dyslexia. Int J Lang Commun Disord. 2009;44:466–488. doi: 10.1080/13682820802591643.

- 40. Ireton H, Glascoe FP. Assessing children's development using parents’ reports. The Child Development Inventory. Clin Pediatr (Phila). 1995;34:248–255. doi: 10.1177/000992289503400504.

- 41. Lord C, Rutter M, DiLavore PC, Risi S. Autism Diagnostic Observation Schedule. Los Angeles: Western Psychological Services; 2002.

- 42. Schopler E, Reichler RJ, DeVellis RF, Daly K. Toward objective classification of childhood autism: Childhood Autism Rating Scale (CARS). J Autism Dev Disord. 1980;10:91–103. doi: 10.1007/BF02408436.

- 43. Achenbach TM. Integrative Guide to the 1991 CBCL/4-18, YSR, and TRF Profiles. Burlington, VT: University of Vermont; 1991.

- 44. Robins DL, Fein D, Barton ML, Green JA. The Modified Checklist for Autism in Toddlers: An initial study investigating the early detection of autism and pervasive developmental disorders. J Autism Dev Disord. 2001;31:131–144. doi: 10.1023/a:1010738829569.
