Abstract

Verbal communication is a joint activity; however, speech production and comprehension have primarily been analyzed as independent processes within the boundaries of individual brains. Here, we applied fMRI to record brain activity from both speakers and listeners during natural verbal communication. We used the speaker's spatiotemporal brain activity to model listeners’ brain activity and found that the speaker's activity is spatially and temporally coupled with the listener's activity. This coupling vanishes when participants fail to communicate. Moreover, although on average the listener's brain activity mirrors the speaker's activity with a delay, we also found areas that exhibit predictive anticipatory responses. We connected the extent of neural coupling to a quantitative measure of story comprehension and found that the greater the anticipatory speaker–listener coupling, the greater the understanding. We argue that the observed alignment of production- and comprehension-based processes serves as a mechanism by which brains convey information.

Keywords: functional MRI, intersubject correlation, language production, language comprehension

Verbal communication is a joint activity by which interlocutors share information (1). However, little is known about the neural mechanisms underlying the transfer of linguistic information across brains. Communication between brains may be facilitated by a shared neural system dedicated to both the production and the perception/comprehension of speech (1–7). Existing neurolinguistic studies are mostly concerned with either speech production or speech comprehension, and focus on cognitive processes within the boundaries of individual brains (1). The ongoing interaction between the two systems during everyday communication thus remains largely unknown. In this study we directly examine the spatial and temporal coupling between production and comprehension across brains during natural verbal communication.

Using fMRI, we recorded the brain activity of a speaker telling an unrehearsed real-life story and the brain activity of a listener listening to a recording of the story. In the past, recording speech during an fMRI scan has been problematic due to the high levels of acoustic noise produced by the MR scanner and the distortion of the signal by traditional microphones. Thus, we used a customized MR-compatible dual-channel optic microphone that cancels the acoustic noise in real time and achieves high levels of noise reduction with negligible loss of audibility (see SI Methods and Fig. 1A). To make the study as ecologically valid as possible, we instructed the speaker to speak as if telling the story to a friend (see SI Methods for a transcript of the story and Movie S1 for an actual sample of the recording). To minimize motion artifacts induced by vocalization during an fMRI scan, we trained the speaker to produce as little head movement as possible. Next, we measured the brain activity of listeners (n = 11) listening to the recorded audio of the spoken story, thereby capturing the time-locked neural dynamics from both sides of the communication. Finally, we used a detailed questionnaire to assess the level of comprehension of each listener.

Fig. 1.

Imaging the neural activity of a speaker–listener pair during storytelling. (A) To record the speaker's speech during the fMRI scan, we used a customized MR-compatible recording device composed of two orthogonal optic microphones (Right). The source microphone captures both the background noise and the speaker's speech utterances (upper audio trace), and the reference microphone captures the background noise (middle audio trace). A dual-adaptive filter subtracts the reference input from the source channel to recover the speech (lower audio trace). (B) The speaker–listener neural coupling was assessed through the use of a general linear model in which the time series in the speaker's brain are used to predict the activity in the listeners’ brains. To capture the asynchronous temporal interaction between the speaker and the listeners, the speaker's brain activity was convolved with different temporal shifts. The convolution consists of both backward shifts (up to −6 s, intervals of 1.5 s, speaker precedes) and forward shifts (up to +6 s, intervals of 1.5 s, listener precedes) relative to the moment of vocalization (0 shift). For each brain area (voxel), the speaker's local response time course is used to predict the time series of the Talairach-normalized, spatially corresponding area in the listener's brain. The model thus captures the extent to which activity in the speaker's brain during speech production is coupled over time with the activity in the listener's brain during speech comprehension.
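The caption names a "dual-adaptive filter" but does not describe its algorithm. Purely to illustrate the principle of reference-based noise cancellation, the sketch below uses a generic normalized least-mean-squares (NLMS) adaptive noise canceller, which is an assumed stand-in and not the recording system's actual method. An FIR filter learns to map the noise-only reference channel onto the noise contained in the source channel, and the residual approximates the speech. The function name and its parameters (`n_taps`, `mu`, `eps`) are hypothetical.

```python
import numpy as np


def nlms_noise_cancel(source, reference, n_taps=32, mu=0.1, eps=1e-8):
    """Generic normalized-LMS adaptive noise canceller (illustrative, not the vendor's algorithm).

    source:    primary channel = speech + scanner noise
    reference: reference channel = scanner noise only
    Returns the residual (source minus the filtered reference), which approximates the speech.
    """
    source = np.asarray(source, dtype=float)
    reference = np.asarray(reference, dtype=float)
    w = np.zeros(n_taps)           # adaptive FIR weights
    buf = np.zeros(n_taps)         # most recent reference samples, newest first
    out = np.empty_like(source)
    for n in range(len(source)):
        buf = np.roll(buf, 1)
        buf[0] = reference[n]
        noise_est = w @ buf                       # estimated noise in the source channel
        e = source[n] - noise_est                 # residual = speech estimate
        w += mu * e * buf / (eps + buf @ buf)     # normalized LMS weight update
        out[n] = e
    return out
```

Because the speech is uncorrelated with the reference channel, the filter can only learn to remove the noise; a larger `mu` adapts faster but leaves more residual noise.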

Our ability to assess speaker–listener interactions builds on recent findings that a large portion of the cortex evokes reliable and selective responses to natural stimuli (e.g., listening to a story), which are shared across all subjects (8–11). These studies use the intersubject correlation method to characterize the similarity of cortical responses across individuals during natural viewing conditions (for a recent review, see ref. 12). Here we extend these ideas by looking at the direct interaction (not induced by shared external input) between brains during communication and test whether the speaker's neural activity during speech production is coupled with the shared neural activity observed across all listeners during speech comprehension.

We hypothesize that the speaker's brain activity during production is spatially and temporally coupled with the brain activity measured across listeners during comprehension. During communication, we expect significant production/comprehension couplings to occur if speakers use their comprehension system to produce speech, and listeners use their production system to process the incoming auditory signal (3, 13, 14). Moreover, because communication unfolds over time, we expect this coupling to exhibit important temporal structure. In particular, because the speaker's production-based processes mostly precede the listener's comprehension-based processes, the listener's neural dynamics should mirror the speaker's neural dynamics with some delay. Conversely, when listeners use their production system to emulate and predict the speaker's utterances, we expect the opposite: the listener's dynamics should precede the speaker's dynamics (14). When the speaker and listener are simply responding to the same shared sensory input (both can hear the same utterances), we instead predict synchronous alignment. Finally, if the neural coupling across brains serves as a mechanism by which the speaker and listener converge on the same linguistic act, the extent of coupling between a pair of conversers should predict the success of communication.

Results

Speaker–Listener Coupling Model.

We formed a model of the expected activity in the listeners’ brains during speech comprehension based on the speaker's activity during speech production (see Fig. 1B and Methods for model details). Due to both the spatiotemporal complexity of natural language and an insufficient understanding of language-related neural processes, conventional hypothesis-driven fMRI analysis methods are largely unsuitable for modeling the brain activity acquired during communication. We therefore developed an approach that circumvents the need to specify a formal model for the linguistic process in any given brain area by using the speaker's brain activity as a model for predicting the brain activity within each listener. To analyze the direct interaction of production and comprehension mechanisms, we considered only spatially local models that measure the degree of speaker–listener coupling within the same Talairach location. To capture the temporal dynamics, we first shifted the speaker's time courses backward (up to −6 s, intervals of 1.5 s, speaker precedes) and forward (up to +6 s, intervals of 1.5 s, listener precedes) relative to the moment of vocalization (0 shift). We then combined these nine shifted speaker time courses with linear weights to build a predictive model for the listener brain dynamics. Although correlations between shifted voxel time courses can complicate the interpretation of the linear weights, here these correlations are small, as shown by the mean voxel autocorrelation function (Fig. S1). The weights are thus an approximately independent measure of the contribution of the speaker dynamics for each shift. To further ensure that the minimal autocorrelations among regressors did not affect the model's temporal discriminability, we decorrelated the regressors within the model and repeated the analysis. Similar results were obtained in both cases (SI Methods).
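A minimal sketch of the spatially local coupling model for a single voxel follows. It assumes one sample per 1.5-s shift (so `max_shift=4` yields the nine regressors from −6 to +6 s) and zero-padding at the edges of the run; both are assumptions, as is the use of ordinary least squares via `numpy.linalg.lstsq`. The helper names are hypothetical.

```python
import numpy as np


def shifted_regressors(x, max_shift=4):
    """Return shifts tau = -max_shift..+max_shift and a matrix whose column k is x(t + tau_k).

    Negative tau: the speaker's earlier activity predicts the listener (speaker precedes).
    Positive tau: the speaker's later activity predicts the listener (listener precedes).
    Samples shifted in from outside the run are zero-padded (an assumption).
    """
    x = np.asarray(x, dtype=float)
    n = len(x)
    taus = np.arange(-max_shift, max_shift + 1)
    X = np.zeros((n, len(taus)))
    for k, tau in enumerate(taus):
        if tau < 0:
            X[-tau:, k] = x[:n + tau]   # X[t] = x[t + tau] for t >= -tau
        elif tau > 0:
            X[:n - tau, k] = x[tau:]    # X[t] = x[t + tau] for t < n - tau
        else:
            X[:, k] = x
    return taus, X


def fit_coupling(speaker, listener, max_shift=4):
    """Least-squares weights beta_tau in listener(t) ~ sum_tau beta_tau * speaker(t + tau)."""
    taus, X = shifted_regressors(speaker, max_shift)
    y = np.asarray(listener, dtype=float)
    # Remove means so that no separate intercept term is needed.
    X = X - X.mean(axis=0)
    y = y - y.mean()
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return taus, beta
```

Multiplying `taus` by 1.5 converts the shifts to seconds; a listener whose activity follows the speaker's by 3 s shows its largest weight at tau = −2 (−3 s).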

Speaker and Listener Brain Activity Exhibits Widespread Coupling During Communication.

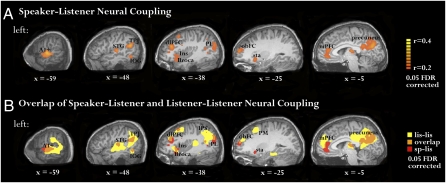

For each brain area, we identified significant speaker–listener couplings by applying an analytical F test to the overall model fit, and controlled for multiple comparisons across the volume using a fixed false discovery rate (γ = 0.05; see Methods for details). Similar results were obtained using a nonparametric permutation test (Fig. S2). Fig. 2A presents the results in the left hemisphere; similar results were obtained in the right hemisphere (Fig. S3A). Significant speaker–listener coupling was found in early auditory areas (A1+), the superior temporal gyrus, angular gyrus, and temporoparietal junction (areas often collectively referred to as Wernicke's area), the parietal lobule, the inferior frontal gyrus (also known as Broca's area), and the insula. Although the functions of these regions are far from clear, they have been associated with various linguistic production and comprehension processes (15–17). Moreover, both the parietal lobule and the inferior frontal gyrus have been associated with the mirror neuron system (18). Finally, we also observed significant speaker–listener coupling in a collection of extralinguistic areas known to be involved in processing the semantic and social aspects of the story (19), including the precuneus, dorsolateral prefrontal cortex, orbitofrontal cortex, striatum, and medial prefrontal cortex (see Table S1 for Talairach coordinates).
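The voxelwise test can be sketched as an F test of the full nine-regressor model against an intercept-only model, followed by a false discovery rate correction across voxels. This sketch assumes the Benjamini–Hochberg step-up procedure for the FDR step and independent residuals for the F distribution. BOLD time series are autocorrelated, so the nominal degrees of freedom here are optimistic, and the text does not state how this was handled. The function names are hypothetical.

```python
import numpy as np
from scipy import stats


def model_f_test(X, y):
    """F test of y ~ intercept + X against the intercept-only model.

    Assumes independent, normally distributed residuals.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    Xd = np.column_stack([np.ones(n), X])
    beta, *_ = np.linalg.lstsq(Xd, y, rcond=None)
    rss = np.sum((y - Xd @ beta) ** 2)        # residual sum of squares, full model
    tss = np.sum((y - y.mean()) ** 2)         # residual sum of squares, intercept only
    df1, df2 = p, n - p - 1
    F = ((tss - rss) / df1) / (rss / df2)
    return F, stats.f.sf(F, df1, df2)


def fdr_bh(pvals, q=0.05):
    """Benjamini-Hochberg step-up procedure; returns a boolean mask of rejected nulls."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    below = p[order] <= q * np.arange(1, m + 1) / m
    mask = np.zeros(m, dtype=bool)
    if below.any():
        k = np.nonzero(below)[0].max()        # largest rank meeting its threshold
        mask[order[:k + 1]] = True            # reject every hypothesis up to that rank
    return mask
```

Applied to a whole volume, one would collect the P value from `model_f_test` at every voxel and pass the resulting array to `fdr_bh(p, q=0.05)` to obtain the significance map.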

Fig. 2.

The speaker–listener neural coupling is widespread, extending well beyond low-level auditory areas. (A) Areas in which the activity during speech production is coupled to the activity during speech comprehension. The analysis was performed on an area-by-area basis, with P values defined using an F test and was corrected for multiple comparisons using FDR methods (γ = 0.05). The findings are presented on sagittal slices of the left hemisphere (similar results were obtained in the right hemisphere; see Fig. S3). The speaker–listener coupling is extensive and includes early auditory cortices and linguistic and extralinguistic brain areas. (B) The overlap (orange) between areas that exhibit reliable activity across all listeners (listener–listener coupling, yellow) and speaker–listener coupling (red). Note the widespread overlap between the network of brain areas used to process incoming verbal information among the listeners (comprehension-based activity) and the areas that exhibit similar time-locked activity in the speaker's brain (production/comprehension coupling). A1+, early auditory cortices; TPJ, temporal-parietal junction; dlPFC, dorsolateral prefrontal cortex; IOG, inferior occipital gyrus; Ins, insula; PL, parietal lobule; obFC, orbitofrontal cortex; PM, premotor cortex; Sta, striatum; mPFC, medial prefrontal cortex.

Brain areas that were coupled across the speaker and listener coincided with brain areas used to process incoming verbal information within the listeners (Fig. 2B). To compare the speaker–listener interactions (production/comprehension) with the listener–listener interactions (comprehension only), we constructed a listener–listener coupling map using similar analysis methods and statistical procedures as above. In agreement with previous work, the story evoked highly reliable activity in many brain areas across all listeners (8, 11, 12) (Fig. 2B, yellow). We note that the agreement with previous work is far from assured: the story here was both personal and spontaneous, and was recorded in the noisy environment of the scanner. The similarity in the response patterns across all listeners underscores a strong tendency to process incoming verbal information in similar ways. A comparison between the speaker–listener and the listener–listener maps reveals an extensive overlap (Fig. 2B, orange). These areas include many of the sensory-related, classic linguistic-related and extralinguistic-related brain areas, demonstrating that many of the areas involved in speech comprehension (listener–listener coupling) are also aligned during communication (speaker–listener coupling).
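The overlap map in Fig. 2B amounts to a voxelwise logical AND of two thresholded maps. A hypothetical helper that labels voxels following the figure's color scheme, and that also reports the fraction of speaker–listener voxels that appear in the listener–listener map (a summary the text itself does not report), might look like this:

```python
import numpy as np


def overlap_map(speaker_listener_sig, listener_listener_sig):
    """Label voxels: 0 = neither, 1 = listener-listener only (yellow),
    2 = speaker-listener only (red), 3 = both (orange).

    Also returns the fraction of speaker-listener voxels that are in the
    listener-listener map (illustrative summary statistic).
    """
    sl = np.asarray(speaker_listener_sig, dtype=bool)
    ll = np.asarray(listener_listener_sig, dtype=bool)
    labels = ll.astype(int) + 2 * sl.astype(int)
    frac = (sl & ll).sum() / max(sl.sum(), 1)   # guard against an empty map
    return labels, frac
```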

Speaker–Listener Neural Coupling Emerges Only During Communication.

To test whether the extensive speaker–listener coupling emerges only when information is transferred across interlocutors, we blocked the communication between speaker and listener. We repeated the experiment while recording a Russian speaker telling a story in the scanner, and then played the story to non–Russian-speaking listeners (n = 11). In this experimental setup, although the Russian speaker is trying to communicate information, the listeners are unable to extract the information from the incoming acoustic sounds. Using identical analysis methods and statistical thresholds, we found no significant coupling between the speaker and the listeners or among the listeners. Only at much more lenient thresholds did we find listener–listener coupling among the non–Russian-speaking listeners, and it was confined to early auditory cortices. This indicates that the reliable activity in most areas other than early auditory cortex depends on successful processing of the incoming information and is not driven by the low-level acoustic aspects of the stimuli.

As further evidence that extensive speaker–listener couplings rely on successful communication, we asked the same English speaker to tell another unrehearsed real-life story in the scanner. We then compared her brain activity while telling the second story with the brain activity of the listeners to the original story. In this experimental setup, the speaker transmits information and the listener receives information; however, the information is decoupled across the two sides of the communicative act. As with the Russian story, we found no significant coupling between the speaker and the listeners. We therefore conclude that coupling across interlocutors emerges only while they are engaged in shared communication.

Listeners’ Brain Activity Mirrors the Speaker's Brain Activity with a Delay.

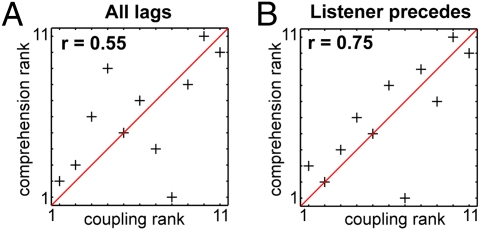

Natural communication unfolds over time: speakers construct grammatical sentences based on thoughts, convert these to motor plans, and execute the plans to produce utterances; listeners analyze the sounds, build phonemes into words and sentences, and ultimately decode utterances into meaning. In our model of brain coupling, the speaker–listener temporal coupling is reflected in the model's weights, where each weight multiplies a temporally shifted time course of the speaker's brain activity relative to the moment of vocalization (synchronized alignment, zero shift). As expected, and in agreement with previous work (12), the activity among the listeners is time locked to the moment of vocalization (Fig. 3A, blue curve). In contrast, in most areas, the activity in the listeners’ brains lagged behind the activity in the speaker's brain by 1–3 s (Fig. 3A, red curve). These lagged responses suggest that on average the speaker's production-based processes precede and likely induce the mirrored activity observed in the listeners’ brains during comprehension. These findings also allay a methodological concern that the speaker–listener neural coupling is induced simply by the fact that the speaker is listening to her own speech.

Fig. 3.

Temporal asymmetry between speaker–listener and listener–listener neural couplings. (A) The mean distribution of the temporal weights across significantly coupled areas for the listener–listener (blue curve) and speaker–listener (red curve) brain pairings. For each area, the weights are normalized to unit magnitude, and error bars denote SEMs. The weight distribution within the listeners is centered on zero (the moment of vocalization). In contrast, the weight distribution between the speaker and listeners is shifted: activity in the listeners’ brains lagged activity in the speaker's brain by 1–3 s. This suggests that on average the speaker's production-based processes precede and likely induce the listeners’ comprehension-based processes. (B) The speaker–listener temporal coupling varies across brain areas. Based on the distribution of temporal weights within each brain area, we divided the couplings into three temporal profiles: the activity in the speaker's brain precedes (blue); the activity is synchronized within ±1.5 s of the onset of vocalization (yellow); and the activity in the listener's brain precedes (red). In early auditory areas, the speaker–listener coupling is time locked to the moment of vocalization. In posterior areas, the activity in the speaker's brain preceded the activity in the listeners’ brains; in the mPFC, dlPFC, and striatum, the listeners’ brain activity preceded. Results differ slightly between the right and left hemispheres. (C) The listener–listener temporal coupling is time locked to the onset of vocalization (yellow) across all brain areas in the right and left hemispheres. Note that the unique speaker–listener temporal dynamics mitigate the methodological concern that the speaker's activity is similar to the listeners’ activity merely because the speaker is another listener of her own speech.
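The averaging in panel A can be sketched as follows, assuming that "unit magnitude" means unit Euclidean norm of each area's nine-element weight vector and that the weights are ordered from −6 to +6 s (negative = speaker precedes, as in Fig. 1B). The function names and the peak-lag summary are illustrative additions rather than part of the described analysis.

```python
import numpy as np

SHIFT_S = 1.5                          # spacing of the shifted regressors, in seconds
LAGS_S = np.arange(-4, 5) * SHIFT_S    # -6.0 ... +6.0 s; negative = speaker precedes


def mean_weight_profile(weights):
    """weights: (n_areas, 9) coupling weights, one row per significantly coupled area.

    Each row is scaled to unit Euclidean norm (an assumption about "unit magnitude"),
    then the rows are averaged; returns the mean profile and its standard error.
    """
    W = np.asarray(weights, dtype=float)
    W = W / np.linalg.norm(W, axis=1, keepdims=True)
    mean = W.mean(axis=0)
    sem = W.std(axis=0, ddof=1) / np.sqrt(W.shape[0])
    return mean, sem


def peak_lag_seconds(mean_profile):
    """Shift (in seconds) at which the mean weight profile peaks."""
    return float(LAGS_S[np.argmax(mean_profile)])
```

Normalizing each area first keeps areas with large raw weights from dominating the average, so the curve reflects the shape of the temporal profile rather than its amplitude.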

Neural Couplings Display Striking Temporal Differences Across the Brain.

The temporal dynamics of the speaker–listener coupling varied across brain areas (Fig. 3B). Among significantly coupled brain areas, important differences in dynamics are contained within the weights of the different temporally shifted regressors. To assess how these patterns varied across the brain, we categorized the weights as delayed (speaker precedes, −6 to −3 s), advanced (listener precedes, 3–6 s), or synchronous (−1.5 to 1.5 s). Although such categorizations increase statistical power, they also reduce the temporal resolution of the analysis: synchronous weights reflect processes that occur both at the point of vocalization (shift 0) and ±1.5 s around it, whereas delayed and advanced weights require shifts of at least 3 s. Next, for each area we performed a contrast analysis to identify brain areas in which the mean weight for each temporal category is significantly greater (P < 0.05) than the mean weight over the rest of the couplings (Methods). In early auditory areas (A1+) the speaker–listener coupling is aligned to the speech utterances (synchronized alignment; Fig. 3B, yellow); in posterior areas, including the right TPJ and the precuneus, the speaker's brain activity preceded the listener's brain activity (speaker precedes; Fig. 3B, blue); in the striatum and anterior frontal areas, including the mPFC and dlPFC, the listener's brain activity preceded the speaker's brain activity (listener precedes; Fig. 3B, red). To verify that our categorization of temporal couplings was independent of autocorrelations within the speaker's time series, we repeated the analysis after decorrelating the model's regressors. We found nearly exact overlap between the delayed, synchronous, and advanced maps obtained with the original and decorrelated models (97%, 97%, and 94%, respectively).
The result that significant speaker–listener couplings include substantially advanced weights may be indicative of predictive processes generated by the listeners before the moment of vocalization to enhance and facilitate the processing of the incoming, noisy speech input (14). Furthermore, the spatial specificity of the temporal coupling shows that it cannot simply be attributed to nonspecific, spatially global effects such as arousal. In comparison to the speaker–listener couplings, the comprehension-based processes in the listeners’ brains were entirely aligned to the moment of vocalization (Fig. 3C, yellow). Thus, the dynamics of neural coupling between the speaker and the listeners are fundamentally different from the neural dynamics shared among all listeners.
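The three temporal categories partition the nine shifts exactly, three shifts each. The sketch below builds the category masks and a per-area contrast. Because the text does not specify the contrast statistic, it assumes a one-sided one-sample t test across listeners on the difference between the mean in-category weight and the mean weight over the remaining shifts. Names are hypothetical, and `alternative="greater"` requires SciPy 1.6 or later.

```python
import numpy as np
from scipy import stats

SHIFT_S = 1.5
LAGS_S = np.arange(-4, 5) * SHIFT_S    # -6.0 ... +6.0 s

# Temporal categories as defined in the text (each covers three of the nine shifts).
CATEGORIES = {
    "speaker_precedes": (LAGS_S >= -6.0) & (LAGS_S <= -3.0),
    "synchronous": (LAGS_S >= -1.5) & (LAGS_S <= 1.5),
    "listener_precedes": (LAGS_S >= 3.0) & (LAGS_S <= 6.0),
}


def category_contrast(weights, category, alpha=0.05):
    """Test whether one temporal category dominates the coupling in a single area.

    weights: (n_listeners, 9) coupling weights for this area, one row per listener.
    Assumed statistic: one-sided one-sample t test across listeners on
    (mean weight inside the category) - (mean weight over the remaining shifts).
    """
    W = np.asarray(weights, dtype=float)
    mask = CATEGORIES[category]
    diff = W[:, mask].mean(axis=1) - W[:, ~mask].mean(axis=1)
    _, p = stats.ttest_1samp(diff, 0.0, alternative="greater")
    return p < alpha, p
```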

Extent of Speaker–Listener Neural Coupling Predicts the Success of the Communication.

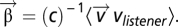

Humans use speech to convey information across brains. Here we administered a behavioral assessment to each listener at the end of the scan to quantify the amount of information transferred from the speaker to each listener (SI Methods and Fig. S4). We independently ranked the listeners’ behavioral scores and the spatial extent of significant neural coupling between the speaker and each listener, and found a strong positive correlation (r = 0.55, P < 0.07; Fig. 4A). The correlation between neural coupling and level of comprehension was robust to changes in the exact statistical threshold and remained stable across many statistically significant P values (Fig. S5A). These findings suggest that the stronger the neural coupling between interlocutors, the better the understanding. Next, we computed behavioral correlations separately for brain regions that show coupling at different delays (speaker precedes, synchronous, listener precedes). Remarkably, the extent of cortical areas where the listeners’ activity preceded the speaker's activity (red areas in Fig. 3B; contrast P < 0.03) provided the strongest correlation with behavior (r = 0.75, P < 0.01). This suggests that prediction is an important aspect of successful communication. Furthermore, the behavioral correlations for the overall coupling and for the listener-precedes areas increase to r = 0.76 (P < 0.01) and r = 0.93 (P < 0.0001), respectively, when we remove a single outlier listener (ranked eighth in Fig. 4 A and B). The correlation between comprehension and neural coupling was also robust to changes in the exact contrast threshold (Fig. S5B). Importantly, the correlation with the level of understanding cannot be attributed to low-level processes (e.g., the audibility of the audio file), as the correlation with behavior increases when we exclude early auditory areas (synchronous alignment, yellow areas in Fig. 3B).
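Correlating two independently ranked quantities is equivalent to Spearman's rank correlation. A minimal sketch with a hypothetical function name follows; its inputs would be, for example, the raters' comprehension score for each listener and the number of significant voxels in that listener's speaker–listener map. The P value returned by `scipy.stats.pearsonr` on ranks is an approximation, and the text does not say how its P values were computed. With only 11 listeners, a single outlier can move r substantially, as noted above.

```python
import numpy as np
from scipy import stats


def rank_correlation(behavior_scores, coupling_extent):
    """Pearson correlation of the two rankings (equivalent to Spearman's rho; ties get average ranks)."""
    rb = stats.rankdata(behavior_scores)
    rc = stats.rankdata(coupling_extent)
    r, p = stats.pearsonr(rb, rc)
    return float(r), float(p)
```

For instance, scores `[1, 2, 3, 4, 5]` paired with extents `[10, 20, 30, 50, 40]` differ only by one swapped pair of ranks and give r = 0.9.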

Fig. 4.

The greater the extent of neural coupling between a speaker and listener the better the understanding. (A) To assess the comprehension level of each individual listener, an independent group of raters (n = 6) scored the listeners’ detailed summaries of the story they heard in the scanner. We ranked the listeners’ behavioral scores and the extent of significant speaker–listener coupling and found a strong positive correlation (r = 0.54, P < 0.07) between the amount of information transferred to each listener and the extent of neural coupling between the speaker and each listener (Fig. 4A). These findings suggest that the stronger the neural coupling between interlocutors, the better the understanding. (B) The extent of brain areas where the listeners’ activity preceded the speaker's activity (red areas in Fig. 3B) provided the strongest correlation with behavior (r = 0.75, P < 0.01). These findings provide evidence that prediction is an important aspect of successful communication.

Discussion

Communication is a shared activity resulting in a transfer of information across brains. The findings shown here indicate that during successful communication, speakers’ and listeners’ brains exhibit joint, temporally coupled, response patterns (Figs. 2 and 3). Such neural coupling substantially diminishes in the absence of communication, such as when listening to an unintelligible foreign language. Moreover, more extensive speaker–listener neural couplings result in more successful communication (Fig. 4). We further show that on average the listener's brain activity mirrors the speaker's brain activity with temporal delays (Fig. 3 A and B). Such delays are in agreement with the flow of information across communicators and imply a causal relationship by which the speaker's production-based processes induce and shape the neural responses in the listener's brain. Though the sluggish BOLD response masks the exact temporal speaker–listener coupling, the delayed and advanced timescales (∼1–4 s) coincide with the timescales of some rudimentary linguistic processes (e.g., in this study, it took the speaker on average around 0.5 ± 0.6 s to produce words, 9 ± 5 s to produce sentences, and even longer to convey ideas). Moreover, we recently demonstrated that some high-order brain areas, such as the TPJ and the parietal lobule, have the capacity to accumulate information over many seconds (8).

Our analysis also identifies a subset of brain regions in which the activity in the listener's brain precedes the activity in the speaker's brain. The listener's anticipatory responses were localized to areas known to be involved in predictions and value representation (20–23), including the striatum and medial and dorsolateral prefrontal regions (mPFC, dlPFC). The anticipatory responses may provide the listeners with more time to process an input and can compensate for problems with noisy or ambiguous input (24). This hypothesis is supported by the finding that comprehension is facilitated by highly predictable upcoming words (25). Remarkably, the extent of the listener's anticipatory brain responses was highly correlated with the level of understanding (Fig. 4B), indicating that successful communication requires the active engagement of the listener (26, 27).

The notion that perception and action are coupled has long been argued by linguists, philosophers, cognitive psychologists, social psychologists, and neurophysiologists (2, 3, 24, 28–33). Our findings document the ongoing dynamic interaction between two brains during the course of natural communication, and reveal a surprisingly widespread neural coupling between the two, a priori independent, processes. Such findings are in agreement with the theory of interactive linguistic alignment (1). According to this theory, production and comprehension become tightly aligned on many different levels during verbal communication, including the phonetic, phonological, lexical, syntactic, and semantic representations. Accordingly, we observed neural coupling during communication at many different processing levels, including low-level auditory areas (induced by the shared input), production-based areas (e.g., Broca's area), comprehension-based areas (e.g., Wernicke's area and TPJ), and high-order extralinguistic areas (e.g., precuneus and mPFC) that can induce a shared contextual model of the situation (34). Interestingly, some of these extralinguistic areas are known to be involved in processing social information crucial for successful communication, including, among others, the capacity to discern the beliefs, desires, and goals of others (15, 16, 31, 35–38).

The production/comprehension coupling observed here resembles the action/perception coupling observed within mirror neurons (35). Mirror neurons discharge both when a monkey performs a specific action and when it observes the same action performed by another (39). Similarly, during the course of communication the production-based and comprehension-based processes seem to be tightly coupled to each other. Currently, however, direct proof of such a link remains elusive for two main reasons. First, mirror neurons have been recorded mainly in the ventral premotor area (F5) and the intraparietal area (PF/IPL) of the primate brain during observation and execution of rudimentary motor acts such as reaching or grabbing food. The speaker–listener neural coupling observed here extends far beyond these two areas. Furthermore, although area F5 in the macaque has been suggested to overlap with Broca's area in humans, a detailed characterization of the links between basic motor acts and complex linguistic acts is still missing (see refs. 40 and 41). Second, based on the fMRI activity recorded during production and comprehension of the same utterances, we cannot tell whether the speaker–listener coupling is generated by the activity of the same neural population that produces and encodes speech or by the activity of two intermixed but independent populations (42). Nevertheless, our findings suggest that, on the systems level, the coupling between action-based and perception-based processes is extensive and widely used across many brain areas.

The speaker–listener neural coupling exposes a shared neural substrate that exhibits temporally aligned response patterns across communicators. Previous studies have shown that during free viewing of a movie or listening to a story, the external shared input can induce similar brain activity across different individuals (8–11, 43, 44). Verbal communication enables us to convey information across brains, independent of the actual external situation (e.g., telling a story of past events). This phenomenon may be reflected in the ability of the speaker to directly induce similar brain patterns in another individual, via speech, in the absence of any other stimulation. Finally, recording the neural activity from both the speaker's brain and the listener's brain opens a new window into the neural basis of interpersonal communication, and may be used to assess verbal and nonverbal forms of interaction in both humans and other model systems (45). Further understanding of the neural processes that facilitate neural coupling across interlocutors may shed light on the mechanisms by which our brains interact and bind to form societies.

Methods

Subject Population.

One native-English speaker, one native-Russian speaker, and 12 native-English listeners, ages 21–30 y, participated in one or more of the experiments. Procedures were in compliance with the safety guidelines for MRI research and approved by the Princeton University Committee on Activities Involving Human Subjects. All participants provided written informed consent.

Experiment and Procedure.

To measure neural activity during communication, we first used fMRI to record the brain activity of a speaker telling a long, unrehearsed story. The speaker had three practice sessions inside the scanner telling real-life unrehearsed stories. These sessions allowed the speaker to familiarize herself with the conditions of storytelling inside the scanner and to learn to minimize head movements without compromising storytelling effectiveness. In the final fMRI session, the speaker told a new, unrehearsed, real-life, 15-min account of an experience she had as a freshman in high school (see SI Methods for the transcript). The story was recorded using an MR-compatible microphone (see below). The speech recording was aligned with the scanner's TTL backtick received at each TR. The same procedure was followed for the Russian speaker, who told an unrehearsed, real-life story in Russian. In focusing on a personally relevant experience, we strove both to approach the ecological setting of natural communication and to ensure an intention to communicate by the speaker.

We measured listeners’ brain activity during audio playback of the recorded story. We synchronized the functional time series to the speaker's vocalization using Matlab code (MathWorks Inc.) written to start playback of the speaker's recording at the onset of the scanner's TTL backtick. Eleven listeners listened to the recording of the English story. Ten of the listeners (and one new subject) listened to the recording of the Russian story. None of the listeners understood Russian. Our experimental design thus allows access to both sides of the simulated communication. Participants were instructed before the scan to attend as closely as possible to the story, and were told that they would be asked to provide a written account of the story immediately following the scan.

Recording System.

We recorded the speaker's speech during the fMRI scan using a customized MR-compatible recording system (FOMRI II; Optoacoustics Ltd.). More details are described in SI Methods and in Fig. 1A.

MRI Acquisition.

Subjects were scanned in a 3T head-only MRI scanner (Allegra; Siemens). More details are described in SI Methods.

Data Preprocessing.

fMRI data were preprocessed with the BrainVoyager software package (Brain Innovation, version 1.8) and with additional software written with Matlab. More details are described in SI Methods.

Model Analysis.

The coupling between speaker–listener and listener–listener brain pairings was assessed through the use of a spatially local general linear model in which temporally shifted voxel time series in one brain are linearly summed to predict the time series of the spatially corresponding voxel in another brain. Thus for the speaker–listener coupling we have

$$v_l(t) = \sum_{\tau=-\tau_{\max}}^{\tau_{\max}} \beta_\tau \, v_s(t+\tau) + \epsilon(t), \qquad [1]$$

where $v_s$ and $v_l$ are the speaker's and the listener's time series at the same voxel. The weights $\beta_\tau$ are determined by minimizing the RMS error and are given by $\boldsymbol{\beta} = C^{-1}\mathbf{b}$, with $b_m = \sum_t v_l(t)\, v_s^m(t)$. Here, $C$ is the (unnormalized) covariance matrix $C_{mn} = \sum_t v_s^m(t)\, v_s^n(t)$, and $\mathbf{v}_s$ is the vector of shifted voxel time series, $v_s^m(t) = v_s(t + \tau_m)$. We choose $\tau_{\max}$ to be large enough to capture important temporal processes while also minimizing the overall number of model parameters to maintain statistical power. We obtain similar results with other choices of $\tau_{\max}$.
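A minimal NumPy sketch of fitting Eq. 1 at a single voxel is shown below. It assumes both time series are already z-scored (zero mean) and that time points where a shift would run past either end of the scan are simply dropped; the function names `lagged_design` and `fit_coupling` are hypothetical, and this is an illustration, not the authors' Matlab code.

```python
import numpy as np


def lagged_design(v_s, tau_max):
    """Stack shifted copies v_s(t + tau) for tau = -tau_max, ..., tau_max.

    Time points whose shifts would fall outside the series are dropped
    (an assumption; edge handling is not specified in the text).
    """
    v_s = np.asarray(v_s, dtype=float)
    T = v_s.size
    t = np.arange(tau_max, T - tau_max)          # usable time indices
    taus = np.arange(-tau_max, tau_max + 1)      # lags in units of TR
    X = np.stack([v_s[t + tau] for tau in taus], axis=1)
    return X, t, taus


def fit_coupling(v_s, v_l, tau_max):
    """Least-squares (minimum RMS error) weights beta_tau of Eq. 1."""
    X, t, taus = lagged_design(v_s, tau_max)
    y = np.asarray(v_l, dtype=float)[t]
    C = X.T @ X          # C_mn = sum_t v_s(t + tau_m) v_s(t + tau_n)
    b = X.T @ y          # b_m  = sum_t v_l(t) v_s(t + tau_m)
    beta = np.linalg.solve(C, b)
    return beta, taus
```

For example, if the listener series is exactly `v_l[t] = 0.8 * v_s[t - 2]`, the fit returns a weight of 0.8 at τ = −2 (speaker precedes by two TRs) and zero at every other lag, up to rounding. Solving the normal equations directly mirrors the formula for β; `np.linalg.lstsq` would be the more numerically robust choice for ill-conditioned designs.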

We calculated the neural couplings for three brain pairings: (a) speaker, individual listener; (b) speaker, average listener; and (c) listener, average listener. In all three cases, the first brain in the pairing provides the independent variables in Eq. 1. The average listener time series was constructed by averaging the functional time series of the (n = 11) listeners at each location in the brain. The (listener, average listener) pairing was constructed by first building, for each listener, the [listener, (N−1) listener average] pairing, where the (N−1) average listener is the average of all other listeners. We then solved the coupling model (Eq. 1) for each of these pairings. Finally, to connect our findings to behavioral variability, we constructed the (speaker–listener) coupling separately for each of the N individual listeners.
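The average and leave-one-out listener time series can be sketched for a single voxel as follows, assuming the listeners' time series are stacked in an array of shape (N, T). The helper names are hypothetical. Subtracting each listener from the group sum yields all N leave-one-out averages without recomputing N separate means.

```python
import numpy as np


def average_listener(listeners):
    """Mean time series across listeners; `listeners` has shape (N, T)."""
    return np.asarray(listeners, dtype=float).mean(axis=0)


def leave_one_out_averages(listeners):
    """Row i is the (N-1) average of every listener except listener i."""
    listeners = np.asarray(listeners, dtype=float)
    n = listeners.shape[0]
    total = listeners.sum(axis=0)
    return (total[np.newaxis, :] - listeners) / (n - 1)


def listener_pairings(listeners):
    """Yield (listener, leave-one-out average) pairs.

    The first element plays the role of the predictor v_s in Eq. 1,
    the second the predicted series, matching the pairing order above.
    """
    loo = leave_one_out_averages(listeners)
    for i, own in enumerate(np.asarray(listeners, dtype=float)):
        yield own, loo[i]
```

Each yielded pair can then be passed to whatever routine fits Eq. 1, one voxel at a time.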

Statistical Analysis.

We identified statistically significant couplings by assigning P values through Fisher's F test. In detail, the model in Eq. 1 has $\nu_1 = 2\tau_{\max} + 1$ degrees of freedom, while $\nu_2 = T - \nu_1 - 1$, where T is the number of time points in the experiment. For the English (prom) story, T = 581, and for the Russian story, T = 451. For each model fit we construct the F statistic and associated P value

$$F = \frac{R^2/\nu_1}{(1 - R^2)/\nu_2}, \qquad P = 1 - f(F),$$

where $R^2$ is the fraction of variance explained by the fit of Eq. 1 and f is the cumulative distribution function of the F statistic with ($\nu_1$, $\nu_2$) degrees of freedom. We also assigned nonparametric P values by using a null model based on randomly permuted data (n = 1,000) at each brain location. The nonparametric null model produced P values very close to those constructed from the F statistic (Fig. S2). We correct for multiple statistical comparisons when displaying volume maps by controlling the false discovery rate (FDR). Following ref. 46, we place the N P values in ascending order, $P_1 \le P_2 \le \dots \le P_N$, and choose the maximum value $k$ such that

$$P_k \le \frac{k}{N}\, q,$$

where q is the FDR threshold.
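Both steps of this analysis can be sketched as below, assuming SciPy is available and that R² is the fraction of variance explained by the fit of Eq. 1. The names `coupling_p_value` and `fdr_mask` are hypothetical. `scipy.stats.f.sf` returns 1 − f(F) directly, which is more accurate in the far tail than subtracting the CDF from 1. The FDR step is the standard Benjamini–Hochberg procedure of ref. 46.

```python
import numpy as np
from scipy import stats


def coupling_p_value(r2, T, tau_max):
    """P value of an Eq. 1 fit from its R^2 via the F test."""
    nu1 = 2 * tau_max + 1            # number of lag weights
    nu2 = T - nu1 - 1                # residual degrees of freedom
    F = (r2 / nu1) / ((1.0 - r2) / nu2)
    return stats.f.sf(F, nu1, nu2)   # survival function = 1 - CDF


def fdr_mask(p_values, q):
    """Benjamini-Hochberg: True where a test survives FDR level q."""
    p = np.asarray(p_values, dtype=float)
    N = p.size
    order = np.argsort(p)                        # ascending P values
    thresholds = q * np.arange(1, N + 1) / N     # (k / N) * q
    passing = np.nonzero(p[order] <= thresholds)[0]
    mask = np.zeros(N, dtype=bool)
    if passing.size:
        k = passing[-1]                          # largest k meeting the bound
        mask[order[: k + 1]] = True              # P_1, ..., P_k are significant
    return mask
```

Note that every test ranked at or below the largest qualifying k is declared significant, even if its own P value exceeds its individual threshold; that is what distinguishes the step-up procedure from testing each P value separately.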

To identify significant listener–listener couplings, we applied the above statistical analysis to the model fits across all (n = 11) listener–average listener pairs. This is a statistically conservative approach aimed to facilitate comparison with the speaker–listener brain pairing. The greater statistical power contained within the (n = 11) different listener/average listener pairs can be fully exploited using nonparametric bootstrap methods for estimating the null distribution and calculating significant couplings (Fig. S6).
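The nonparametric null model (n = 1,000 per location) can be sketched as below. The text says only that the data were randomly permuted; the circular-shift scheme, the intercept column, and the function names here are assumptions made for illustration. A plain shuffle of time points would be another option, but it would ignore the temporal autocorrelation of fMRI signals.

```python
import numpy as np


def r_squared(v_s, v_l, tau_max):
    """Fraction of variance in v_l explained by Eq. 1 (with an intercept)."""
    v_s = np.asarray(v_s, dtype=float)
    v_l = np.asarray(v_l, dtype=float)
    T = v_s.size
    t = np.arange(tau_max, T - tau_max)
    cols = [v_s[t + tau] for tau in range(-tau_max, tau_max + 1)]
    X = np.column_stack(cols + [np.ones(t.size)])
    y = v_l[t]
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)


def permutation_p_value(v_s, v_l, tau_max, n_perm=1000, seed=0):
    """Nonparametric P value from circularly shifted speaker time series.

    Assumption: each null sample circularly shifts v_s by at least
    2 * tau_max + 1 TRs (mod T), so no shifted copy can line up with a
    true lag inside the model window, while the autocorrelation of v_s
    is preserved.
    """
    rng = np.random.default_rng(seed)
    T = len(v_s)
    observed = r_squared(v_s, v_l, tau_max)
    shifts = rng.integers(2 * tau_max + 1, T - 2 * tau_max, size=n_perm)
    null = np.array([r_squared(np.roll(v_s, s), v_l, tau_max) for s in shifts])
    # Add-one correction so the P value is never exactly zero.
    return (1 + np.sum(null >= observed)) / (n_perm + 1)
```

With n_perm = 1,000, the smallest attainable P value is 1/1,001, so very small parametric P values can only be checked against this null for agreement, not matched exactly.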

Coupling Categorization.

For each cortical location and brain pairing, the parameters $\beta_\tau$ of Eq. 1 fully characterize the local neural coupling. As seen in Fig. 3, temporal differences among the couplings reveal important differences between action and perception. To explore these differences, we categorize each coupling as delayed (speaker precedes, −6 to −3 s), advanced (listener precedes, 3 to 6 s), or synchronous (−1.5 to 1.5 s), based on the difference between the mean weight within each category and the mean weight outside it. We define the statistical significance of each category through a contrast analysis. For example, for the delayed category we define the contrast

$$c^\top \boldsymbol{\beta} = \frac{1}{N_{\mathrm{in}}} \sum_{\tau \in \mathrm{delayed}} \beta_\tau - \frac{1}{N_{\mathrm{out}}} \sum_{\tau \notin \mathrm{delayed}} \beta_\tau$$

and associated t statistic

$$t = \frac{c^\top \boldsymbol{\beta}}{\sqrt{\sigma^2\, c^\top C^{-1} c}},$$

where $N_{\mathrm{in}}$ and $N_{\mathrm{out}}$ are the numbers of lags inside and outside the category, $\sigma^2$ is the model variance, and $C$ is the covariance matrix of the shifted time series. The contrasts for the other categories are defined similarly. A large contrast indicates that the coupling is dominated by weights within that particular temporal category.
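A sketch of the category contrast and its t statistic for one voxel follows. It assumes a TR of 1.5 s (not stated in this section), so that lags of −6 to 6 s correspond to τ = −4, ..., 4. It also uses the residual sample variance as σ² and builds C from time sums, so that σ² c⊤C⁻¹c is the variance of the contrast. The function names are hypothetical, and this is an illustration consistent with the description above, not the authors' code.

```python
import numpy as np


def category_contrast(lags_s, lo, hi):
    """Contrast vector: mean weight inside [lo, hi] s minus mean weight outside."""
    lags_s = np.asarray(lags_s, dtype=float)
    inside = (lags_s >= lo) & (lags_s <= hi)
    return np.where(inside, 1.0 / inside.sum(), -1.0 / (~inside).sum())


def category_t(v_s, v_l, tau_max, tr, lo, hi):
    """t statistic of one temporal category for Eq. 1 at a single voxel.

    tr is the repetition time in seconds (1.5 s is an assumed example value).
    """
    v_s = np.asarray(v_s, dtype=float)
    v_l = np.asarray(v_l, dtype=float)
    T = v_s.size
    t = np.arange(tau_max, T - tau_max)
    taus = np.arange(-tau_max, tau_max + 1)
    X = np.stack([v_s[t + tau] for tau in taus], axis=1)
    y = v_l[t]
    C = X.T @ X                                    # covariance of shifted series
    beta = np.linalg.solve(C, X.T @ y)
    resid = y - X @ beta
    sigma2 = resid @ resid / (y.size - taus.size)  # model (residual) variance
    c = category_contrast(taus * tr, lo, hi)
    return (c @ beta) / np.sqrt(sigma2 * (c @ np.linalg.solve(C, c)))
```

With TR = 1.5 s, each of the three categories contains exactly three lags, so the delayed contrast weights those lags by 1/3 and the remaining six by −1/6. For a listener who follows the speaker by 4.5 s, the delayed t statistic comes out large and positive, while the advanced one is negative.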

Behavioral Assessment.

Immediately following the scan, the participants were asked to write down the story they had heard in as much detail as possible. Six independent raters scored each of these written accounts, and the resulting score was used as a quantitative and objective measure of the listener's understanding. More details are provided in SI Methods and Fig. S4.


Acknowledgments

We thank our colleagues Forrest Collman, Yadin Dudai, Bruno Galantucci, Asif Ghazanfar, Adele Goldberg, David Heeger, Chris Honey, Ifat Levy, Yulia Lerner, Rafael Malach, Stephanie E. Palmer, Daniela Schiller, and Carrie Theisen for helpful discussion and comments on the manuscript. G.J.S. was supported in part by the Swartz Foundation.

Footnotes

The authors declare no conflict of interest.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1008662107/-/DCSupplemental.

References

1. Pickering MJ, Garrod S. Toward a mechanistic psychology of dialogue. Behav Brain Sci. 2004;27:169–190; discussion 190–226. doi: 10.1017/s0140525x04000056.

2. Hari R, Kujala MV. Brain basis of human social interaction: From concepts to brain imaging. Physiol Rev. 2009;89:453–479. doi: 10.1152/physrev.00041.2007.

3. Liberman AM, Mattingly IG. The motor theory of speech perception revised. Cognition. 1985;21:1–36. doi: 10.1016/0010-0277(85)90021-6.

4. Branigan HP, Pickering MJ, Cleland AA. Syntactic co-ordination in dialogue. Cognition. 2000;75:B13–B25. doi: 10.1016/s0010-0277(99)00081-5.

5. Levelt WJM. Speaking: From Intention to Articulation. Cambridge, MA: MIT Press; 1989.

6. Wilson M, Knoblich G. The case for motor involvement in perceiving conspecifics. Psychol Bull. 2005;131:460–473. doi: 10.1037/0033-2909.131.3.460.

7. Chang F, Dell GS, Bock K. Becoming syntactic. Psychol Rev. 2006;113:234–272. doi: 10.1037/0033-295X.113.2.234.

8. Hasson U, Yang E, Vallines I, Heeger DJ, Rubin N. A hierarchy of temporal receptive windows in human cortex. J Neurosci. 2008;28:2539–2550. doi: 10.1523/JNEUROSCI.5487-07.2008.

9. Golland Y, et al. Extrinsic and intrinsic systems in the posterior cortex of the human brain revealed during natural sensory stimulation. Cereb Cortex. 2007;17:766–777. doi: 10.1093/cercor/bhk030.

10. Hasson U, Nir Y, Levy I, Fuhrmann G, Malach R. Intersubject synchronization of cortical activity during natural vision. Science. 2004;303:1634–1640. doi: 10.1126/science.1089506.

11. Wilson SM, Molnar-Szakacs I, Iacoboni M. Beyond superior temporal cortex: Intersubject correlations in narrative speech comprehension. Cereb Cortex. 2008;18:230–242. doi: 10.1093/cercor/bhm049.

12. Hasson U, Malach R, Heeger D. Reliability of cortical activity during natural stimulation. Trends Cogn Sci. 2010;14:40–48. doi: 10.1016/j.tics.2009.10.011.

13. Galantucci B, Fowler CA, Turvey MT. The motor theory of speech perception reviewed. Psychon Bull Rev. 2006;13:361–377. doi: 10.3758/bf03193857.

14. Pickering MJ, Garrod S. Do people use language production to make predictions during comprehension? Trends Cogn Sci. 2007;11:105–110. doi: 10.1016/j.tics.2006.12.002.

15. Fletcher PC, et al. Other minds in the brain: A functional imaging study of “theory of mind” in story comprehension. Cognition. 1995;57:109–128. doi: 10.1016/0010-0277(95)00692-r.

16. Völlm BA, et al. Neuronal correlates of theory of mind and empathy: A functional magnetic resonance imaging study in a nonverbal task. Neuroimage. 2006;29:90–98. doi: 10.1016/j.neuroimage.2005.07.022.

17. Sahin NT, Pinker S, Cash SS, Schomer D, Halgren E. Sequential processing of lexical, grammatical, and phonological information within Broca's area. Science. 2009;326:445–449. doi: 10.1126/science.1174481.

18. Fadiga L, Craighero L, D'Ausilio A. Broca's area in language, action, and music. Ann N Y Acad Sci. 2009;1169:448–458. doi: 10.1111/j.1749-6632.2009.04582.x.

19. Xu J, Kemeny S, Park G, Frattali C, Braun A. Language in context: Emergent features of word, sentence, and narrative comprehension. Neuroimage. 2005;25:1002–1015. doi: 10.1016/j.neuroimage.2004.12.013.

20. Koechlin E, Corrado G, Pietrini P, Grafman J. Dissociating the role of the medial and lateral anterior prefrontal cortex in human planning. Proc Natl Acad Sci USA. 2000;97:7651–7656. doi: 10.1073/pnas.130177397.

21. Krueger F, Grafman J. The human prefrontal cortex stores structured event complexes. In: Shipley T, Zacks JM, editors. Understanding Events: From Perception to Action. New York: Oxford Univ Press; 2008. pp. 617–638.

22. Gilbert SJ, et al. Functional specialization within rostral prefrontal cortex (area 10): A meta-analysis. J Cogn Neurosci. 2006;18:932–948. doi: 10.1162/jocn.2006.18.6.932.

23. Craig AD. How do you feel—now? The anterior insula and human awareness. Nat Rev Neurosci. 2009;10:59–70. doi: 10.1038/nrn2555.

24. Garrod S, Pickering MJ. Why is conversation so easy? Trends Cogn Sci. 2004;8:8–11. doi: 10.1016/j.tics.2003.10.016.

25. Schwanenflugel PJ, Shoben EJ. The influence of sentence constraint on the scope of facilitation for upcoming words. J Mem Lang. 1985;24:232–252.

26. Clark HH. Using Language. Cambridge, UK: Cambridge Univ Press; 1996.

27. Clark HH, Wilkes-Gibbs D. Referring as a collaborative process. Cognition. 1986;22:1–39. doi: 10.1016/0010-0277(86)90010-7.

28. Galantucci B, Fowler CA, Goldstein L. Perceptuomotor compatibility effects in speech. Atten Percept Psychophys. 2009;71:1138–1149. doi: 10.3758/APP.71.5.1138.

29. Merleau-Ponty M. The Phenomenology of Perception. Paris, France: Gallimard; 1945.

30. Gibson JJ. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin; 1979.

31. Amodio DM, Frith CD. Meeting of minds: The medial frontal cortex and social cognition. Nat Rev Neurosci. 2006;7:268–277. doi: 10.1038/nrn1884.

32. Rizzolatti G, Fadiga L, Gallese V, Fogassi L. Premotor cortex and the recognition of motor actions. Brain Res Cogn Brain Res. 1996;3:131–141. doi: 10.1016/0926-6410(95)00038-0.

33. Arbib M. Mirror system activity for action and language is embedded in the integration of dorsal and ventral pathways. Brain Lang. 2010;112:12–24. doi: 10.1016/j.bandl.2009.10.001.

34. Johnson-Laird PN. Mental Models: Towards a Cognitive Science of Language, Inference, and Consciousness. Cambridge, MA: Harvard Univ Press; 1995.

35. Gallagher HL, et al. Reading the mind in cartoons and stories: An fMRI study of ‘theory of mind’ in verbal and nonverbal tasks. Neuropsychologia. 2000;38:11–21. doi: 10.1016/s0028-3932(99)00053-6.

36. Gallagher HL, Frith CD. Functional imaging of ‘theory of mind’. Trends Cogn Sci. 2003;7:77–83. doi: 10.1016/s1364-6613(02)00025-6.

37. Saxe R, Carey S, Kanwisher N. Understanding other minds: Linking developmental psychology and functional neuroimaging. Annu Rev Psychol. 2004;55:87–124. doi: 10.1146/annurev.psych.55.090902.142044.

38. Saxe R, Kanwisher N. People thinking about thinking people. The role of the temporo-parietal junction in “theory of mind”. Neuroimage. 2003;19:1835–1842. doi: 10.1016/s1053-8119(03)00230-1.

39. Rizzolatti G, Fogassi L, Gallese V. Neurophysiological mechanisms underlying the understanding and imitation of action. Nat Rev Neurosci. 2001;2:661–670. doi: 10.1038/35090060.

40. Rizzolatti G, Arbib MA. Language within our grasp. Trends Neurosci. 1998;21:188–194. doi: 10.1016/s0166-2236(98)01260-0.

41. Arbib MA, Liebal K, Pika S. Primate vocalization, gesture, and the evolution of human language. Curr Anthropol. 2008;49:1053–1063; discussion 1063–1076. doi: 10.1086/593015.

42. Dinstein I, Thomas C, Behrmann M, Heeger DJ. A mirror up to nature. Curr Biol. 2008;18:R13–R18. doi: 10.1016/j.cub.2007.11.004.

43. Hanson SJ, Gagliardi AD, Hanson C. Solving the brain synchrony eigenvalue problem: Conservation of temporal dynamics (fMRI) over subjects doing the same task. J Comput Neurosci. 2009;27:103–114. doi: 10.1007/s10827-008-0129-z.

44. Jääskeläinen IP, et al. Inter-subject synchronization of prefrontal cortex hemodynamic activity during natural viewing. Open Neuroimaging J. 2008;2:14–19. doi: 10.2174/1874440000802010014.

45. Schippers MB, Roebroeck A, Renken R, Nanetti L, Keysers C. Mapping the information flow from one brain to another during gestural communication. Proc Natl Acad Sci USA. 2010;107:9388–9393. doi: 10.1073/pnas.1001791107.

46. Benjamini Y, Hochberg Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. J R Stat Soc B. 1995;57:289–300.
