Abstract

Paradoxically, improvements in emergency medicine have increased survival albeit with severe disability ranging from quadriplegia to “locked-in syndrome.” Locked-in syndrome is characterized by intact cognition yet complete paralysis, and hence these individuals are “locked-in” their own body, at best able to communicate using eye blinks alone. Sniffing is a precise sensory-motor acquisition entailing changes in nasal pressure. The fine control of sniffing depends on positioning the soft palate, which is innervated by multiple cranial nerves. This innervation pattern led us to hypothesize that sniffing may remain conserved following severe injury. To test this, we developed a device that measures nasal pressure and converts it into electrical signals. The device enabled sniffs to control an actuator with speed similar to that of a hand using a mouse or joystick. Functional magnetic resonance imaging of device usage revealed a widely distributed neural network, allowing for increased conservation following injury. Also, device usage shared neural substrates with language production, rendering sniffs a promising bypass mode of communication. Indeed, sniffing allowed completely paralyzed locked-in participants to write text and quadriplegic participants to write text and drive an electric wheelchair. We conclude that redirection of sniff motor programs toward alternative functions allows sniffing to provide a control interface that is fast, accurate, robust, and highly conserved following severe injury.

Keywords: locked-in, quadriplegic, soft palate

Paradoxically, improvements in emergency medicine have turned previously fatal injuries into survivable ones that are nevertheless accompanied by life-long severe disability ranging from quadriplegia to “locked-in syndrome” (1, 2). Locked-in syndrome is characterized by intact cognition yet complete paralysis (3–5), and hence these individuals are locked-in their own body, completely dependent on assistive technology. The locked-in state may follow stroke or trauma, and is also often the end stage of amyotrophic lateral sclerosis (6).

The most appealing prospect in assistive technology for the severely disabled is a genuine brain–computer or brain–machine interface (BCI/BMI), where brain activity would be harnessed (either invasively or noninvasively) to directly control devices (7–12). Although significant progress toward true BCIs has been made (10, 11, 13–15), these devices have yet to provide a solution for the masses of severely disabled (16). In contrast, slower measures that depend on autonomic functions such as salivary pH (17), or on brain activity as measured with functional MRI (fMRI) (18, 19), provide valuable windows of communication, but neither allows rapid self-expression. Thus, currently available means of communication and environmental control for high-level spinal cord injury rely on capturing residual control of body parts such as the head (20) or tongue (21), and solutions for locked-in syndrome rely on capturing residual control of the sphincter muscles or eye movements (22–24). Although these and other approaches provide solutions (25), there remains a profound need for effective human-to-machine interfaces that will function in severe disability (26).

Whether inspired air passes through the mouth or through the nose as a sniff is determined in part by positioning of the soft palate. Whereas a closed soft palate allows only oral airflow, an open soft palate allows nasal airflow (27). The resultant sniffs are highly coordinated motor acts directed at sensory acquisition (28, 29). Humans can rapidly modulate their own sniffs, changing airflow rate within 160 ms in accordance with odorant content (30). Furthermore, overall sniff duration (31) and pattern (32) are modulated in real time to optimize olfactory perception. In other words, sniffs may be used to convey a highly accurate binary signal (sniff onset or offset), as well as a highly accurate analog (sniff magnitude and duration) and directional (“sniff in” or “sniff out”) signal. Furthermore, because the soft palate is innervated by multiple cranial nerves (33, 34), we hypothesized that sniff modulation may remain highly conserved following injury. To test this, we built a sniff-dependent interface we call the sniff controller. The device measures sniffs, that is, changes in nasal pressure that vary with soft-palate positioning (Fig. 1 A and B). We then set out to test its use in both healthy and disabled participants.
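To make the binary, analog, and directional signals concrete, the following minimal sketch segments a sampled nasal-pressure trace into suprathreshold events and reports each event's onset, duration, peak magnitude, and direction. It is an illustration, not the authors' software: the names `SniffEvent` and `extract_sniffs`, the single symmetric threshold, and the sign convention (inhalation as negative pressure relative to atmosphere, exhalation as positive) are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class SniffEvent:
    onset_s: float     # binary signal: time the trace first crossed threshold
    duration_s: float  # analog signal: time spent beyond threshold
    peak: float        # analog signal: largest absolute deviation in the event
    direction: str     # directional signal: "in" or "out"


def extract_sniffs(pressure: Sequence[float], fs: float,
                   threshold: float) -> List[SniffEvent]:
    """Segment a baseline-corrected pressure trace (0 = atmospheric).

    Assumed convention: inhalation ("sniff in") gives negative pressure,
    exhalation ("sniff out") gives positive pressure.
    """
    events: List[SniffEvent] = []
    start: Optional[int] = None
    sign, peak = 0, 0.0

    def close(end: int) -> None:
        direction = "in" if sign < 0 else "out"
        events.append(SniffEvent(start / fs, (end - start) / fs, peak, direction))

    for i, p in enumerate(pressure):
        # -1 below -threshold, +1 above +threshold, 0 inside the dead band
        s = -1 if p <= -threshold else (1 if p >= threshold else 0)
        if start is None:
            if s != 0:
                start, sign, peak = i, s, abs(p)
        elif s == sign:
            peak = max(peak, abs(p))
        else:
            close(i)
            start = None
            if s != 0:  # an immediate reversal starts a new event
                start, sign, peak = i, s, abs(p)
    if start is not None:  # event still open at the end of the trace
        close(len(pressure))
    return events
```

For example, `extract_sniffs([0, 0, -2, -3, -1.5, 0, 0, 2, 2.5, 0], fs=10.0, threshold=1.0)` yields one "in" event starting at 0.2 s and lasting 0.3 s, followed by one "out" event starting at 0.7 s.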

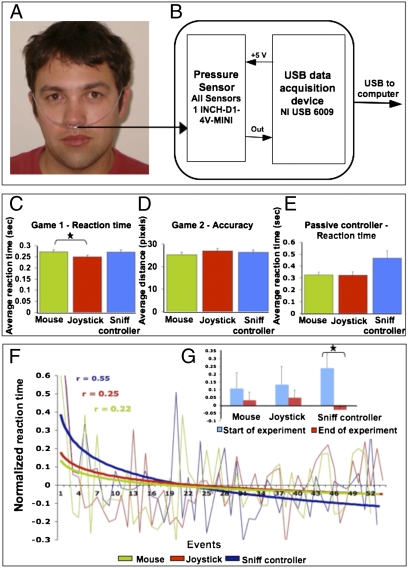

Fig. 1.

Sniffs provide rapid and accurate control. (A) The nasal cannula used to carry nasal pressure to the sensor. (B) The sniff controller. (C) Reaction time (RT) for game 1 using a mouse (M), joystick (J), or sniff controller (SC) [game 1 RT (s): M = 0.27 ± 0.06, J = 0.25 ± 0.05, SC = 0.27 ± 0.07; F = 3.78, P < 0.03, reflecting faster J than M (Tukey P < 0.04) but no other significant differences; game 2 RT (s): M = 0.63 ± 0.4, J = 0.58 ± 0.3, SC = 0.61 ± 0.4; F = 0.44, P = NS]. (D) Activation accuracy (distance from target in pixels) for game 2 using a mouse, joystick, or sniff controller. (E) Reaction time using a mouse, joystick, or passive sniff controller (PSC) [RT (s): M = 0.32 ± 0.07, PSC = 0.46 ± 0.18, Tukey P = 0.06; J = 0.32 ± 0.08, PSC = 0.46 ± 0.18, Tukey P = 0.057]. (F and G) Reaction time over a 5-min period (logarithmic fit). Note improvement with the sniff controller [normalized RT (Z score): first 8 trials M = 0.11 ± 0.32, last 8 trials M = 0.03 ± 0.162, t = 0.58, P = NS; first 8 trials J = 0.132 ± 0.37, last 8 trials J = 0.05 ± 0.153, t = 0.58, P = NS; first 8 trials SC = 0.24 ± 0.29, last 8 trials SC = 0.03 ± 0.07, t = 2.49, P < 0.02]. Error bars represent SE. *P < 0.05.

Results

To ask whether the sniff controller can “press a button” as would a healthy hand, we measured the performance of 36 healthy participants who played a series of computer games using either a computer mouse, a gaming joystick trigger, or the sniff controller (here sniff magnitude beyond a set threshold pressed the button). Despite life-long experience with the former, sniff-controller reaction time was equal to that of the mouse and joystick trigger (all Tukey P > 0.05) (Fig. 1 C and D). Furthermore, whereas reaction time was constant throughout the 5-min game for both the mouse and joystick, it in fact diminished over time (i.e., performance improved) for the sniff controller (P < 0.02) (Fig. 1 F and G). Finally, game 2 called for pressing the button as a function of a moving cursor's location, and thus also provided a measure of accuracy in addition to latency. Sniff-controller accuracy was equal to that of the mouse or joystick [distance accuracy (pixels): mouse = 25.35 ± 6.2, joystick = 26.91 ± 6.5, sniff controller = 26.35 ± 6.33; F = 0.5, P = nonsignificant (NS)] (game 3 in Fig. S1). In other words, sniffs can provide rapid and accurate control.

The sniff controller reflects changes in nasal pressure following positioning of the soft palate, yet the origin of the air passed through the nose is inconsequential to this. With artificially respirated individuals in mind, we developed the passive sniff controller. Here a small pump is used to generate a low-flow (3 L/min) stream of air into a nasal mask. The sniff controller measures pressure in this mask (Fig. S2). Opening the soft palate reduces mask pressure (as air can escape into the nose), and closing the soft palate increases mask pressure. This generates a signal independent of respiration. We tested this passive version in 10 healthy participants using a computer game, and found that it allowed fast responses, albeit slightly slower than those of a mouse or joystick trigger (a trend that did not reach significance; Tukey P = 0.057) (Fig. 1E). Thus, the sniff controller can be used independently of respiration.
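The passive mode can be read as a two-state classifier on mask pressure. The sketch below labels each sample as "open" or "closed" relative to the mask pressure measured with the palate closed, using hysteresis so that small fluctuations near a single cutoff do not toggle the state. The function name `palate_states`, the baseline-relative fractions, and their default values are hypothetical choices for illustration, not parameters reported for the device.

```python
from typing import List, Sequence


def palate_states(mask_pressure: Sequence[float], closed_baseline: float,
                  open_frac: float = 0.5, close_frac: float = 0.8) -> List[str]:
    """Label each mask-pressure sample 'open' or 'closed' with hysteresis.

    Assumes the pump-fed mask sits near closed_baseline when the soft palate
    is closed and that pressure drops when the palate opens (air escapes
    into the nose). The state switches to 'open' only below
    open_frac * baseline and back to 'closed' only above close_frac * baseline.
    """
    if not 0 < open_frac < close_frac <= 1:
        raise ValueError("need 0 < open_frac < close_frac <= 1")
    state = "closed"
    labels: List[str] = []
    for p in mask_pressure:
        if state == "closed" and p < open_frac * closed_baseline:
            state = "open"
        elif state == "open" and p > close_frac * closed_baseline:
            state = "closed"
        labels.append(state)
    return labels
```

With a baseline of 10 (arbitrary units), the trace `[10, 9, 4, 6, 7, 9, 10]` is labeled closed, closed, open, open, open, closed, closed: the dip to 4 opens the state, and it stays open until pressure recovers above 8. Because the label depends only on mask pressure, the same logic applies whether or not the user is breathing.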

We next replaced the gaming software with text-writing software that allowed selecting letters from the alphabet and words from a word-completion list (Movie S1). Two healthy subjects then used the passive sniff controller to write text while holding their breath for extended periods of time (to simulate a person without respiration). These subjects were able to write with the passive sniff controller (while not breathing) at a rate of one signal every 3.2 ± 0.14 s. Using word completion, this allowed these subjects to write a sentence containing 43 letters within 289 ± 55 s, or in other words at 6.73 s per letter (Movie S1). Implementation of intelligent word completion and writing codes (35) may allow normal writing speed at this signal-generating rate.

To investigate the neural substrates of using the sniff controller, we used fMRI to measure the brain response in 12 healthy participants during volitional closing and opening of the soft palate. We used a block-design paradigm where subjects alternated between 32 s of repeatedly opening and closing their soft palate and 32 s of rest. This can be thought of as a soft-palate equivalent of the classic finger-tapping task. Soft-palate positioning was monitored in real time using both spirometry and an MR acquisition that contained the soft palate itself.
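In a block design like this one, the expected BOLD response is commonly modeled by convolving a task/rest boxcar with a hemodynamic response function (HRF). The sketch below builds such a regressor for alternating 32-s blocks. It is not the analysis used in this study (which follows refs. 36 and 37): the repetition time (`tr_s = 2.0`), the number of cycles, and the SPM-style double-gamma HRF shape are all assumptions chosen for illustration.

```python
import math
from typing import List


def double_gamma_hrf(t_s: float) -> float:
    """Unnormalized double-gamma HRF at time t_s (seconds).

    Assumed SPM-style shape: a gamma peak (shape 6) minus one sixth of a
    gamma undershoot (shape 16), both with unit scale.
    """
    if t_s <= 0:
        return 0.0

    def gpdf(x: float, a: float) -> float:
        return x ** (a - 1) * math.exp(-x) / math.gamma(a)

    return gpdf(t_s, 6) - gpdf(t_s, 16) / 6.0


def block_regressor(n_cycles: int = 4, on_s: float = 32.0, off_s: float = 32.0,
                    tr_s: float = 2.0, hrf_len_s: float = 32.0) -> List[float]:
    """Task/rest boxcar sampled once per TR, convolved with the HRF."""
    on_n, off_n = round(on_s / tr_s), round(off_s / tr_s)
    boxcar = ([1.0] * on_n + [0.0] * off_n) * n_cycles
    kernel = [double_gamma_hrf(k * tr_s) for k in range(round(hrf_len_s / tr_s))]
    # Causal discrete convolution, truncated to the length of the run
    return [sum(kernel[k] * boxcar[i - k] for k in range(len(kernel)) if i - k >= 0)
            for i in range(len(boxcar))]
```

Voxels whose time courses correlate with this regressor would show task-related activity; the delayed rise and post-block undershoot are why a boxcar alone fits block-design data poorly.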

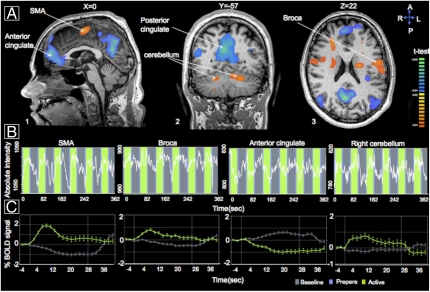

Consistent with the neural representation of sniffing (36, 37), volitional motor control of the soft palate involved a large network of brain structures (Table S1). These regions included several cortical (supplementary motor area BA6) and subcortical (left and right cerebellum) substrates, as well as a clear representation of soft-palate positioning within classical language areas, particularly in the Pars opercularis (Broca, BA44) (Fig. 2 and Fig. S3). This commonality with language production further implicates the sniff controller as a potentially intuitive alternative to language production. These results in healthy participants encouraged us to test our device in locked-in individuals.

Fig. 2.

Sniffs rely on a distributed neural substrate overlapping with language production. (A) Several regions with significantly lower or higher levels of activity during soft-palate repositioning as compared with a resting baseline. (B) Example time courses of fMRI signal from several key regions during volitional positioning of the soft palate. (C) The mean group fMRI signal change in the same key regions. Critically, note soft-palate positioning representation in the Pars opercularis (Broca, BA44) language area. SMA, supplementary motor area; BOLD, blood oxygen level dependent.

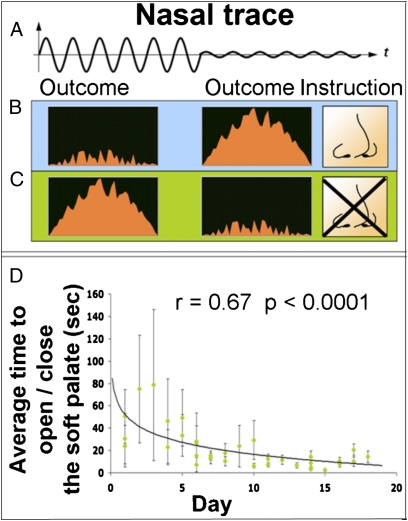

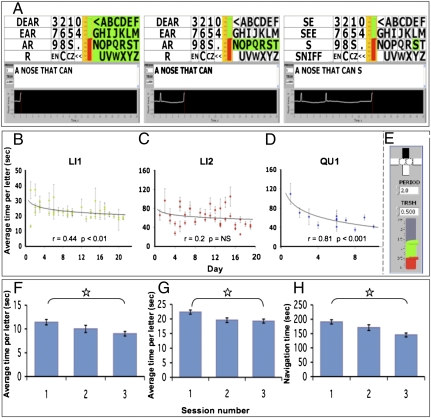

We approached LI1, a 51-y-old woman who was locked-in following a stroke that occurred ~7 mo prior (Fig. S4). LI1 had no peripheral motion, was unable to generate well-controlled eye blinks, and had a tracheostomy yet breathed independently. Although most of LI1’s respiratory flow passed in and out through the tracheostomy, we hypothesized that if she opened her soft palate, a portion of the respiratory flow would be detected at the nose as a sniff. We fitted LI1 with the sniff controller linked to biofeedback software (Fig. 3 B and C). Whereas LI1 was initially unable to direct airflow through her nose, she gained the ability over time such that after 19 d of 20 min/d of practice, LI1 generated a sniff within 2.3 ± 1.14 s of an instruction to do so (Fig. 3D). At this stage, we replaced the biofeedback software with text-writing software that allowed LI1 to sniff at an appropriate time to select letters from the alphabet and words from a word-completion list (Fig. 4A and Movie S2). LI1 started writing with this device at once, initially answering questions, and after a few days generated her first poststroke meaningful self-initiated communication, a profound personal message to her family. LI1 continues to use the device as her sole means of self-initiated meaningful expression. Her mean time per letter improved from 26.93 ± 15.81 to 20.66 ± 8.92 s (r = 0.44, P < 0.01) within 20 d (Fig. 4B), but has not further accelerated. To estimate the number of errors LI1 makes at this writing rate, we provided her with a daily task of writing (from memory) the beginning of a familiar Israeli song containing 78 characters (with spaces). Her mean number of errors (i.e., selecting the wrong letter, and then correcting using the backspace) across 10 d of practice was 13 ± 9.34 (~17%).

Fig. 3.

A locked-in participant learned to control the soft palate using biofeedback. (A) A schematic nasal trace reflecting first an open soft palate resulting in nasal flow, and then a closed soft palate resulting in blocked nasal flow. (B) An instruction to open the soft palate (open nose) is “rewarded” by a reduction in flame height when nasal airflow is detected, yet the flame grows when nasal flow is not detected. (C) An instruction to close the soft palate (blocked nose) is rewarded by a reduction in the flame height when no nasal airflow is detected, yet the flame grows when nasal flow is detected. (D) LI1’s latency to opening or closing the soft palate from the moment an instruction was given as a function of days of practice (logarithmic fit). Error bars represent SE.
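The reward rule in panels B and C, and the latency plotted in panel D, can be written compactly. In the sketch below, the flame shrinks whenever the detected nasal-flow state matches the instruction and grows otherwise, and latency is the time from instruction to the first matching sample. The function names, the fixed step size, the height bounds, and the idea of a per-sample boolean flow detector are hypothetical; the original biofeedback software is not described at this level of detail.

```python
from typing import Optional, Sequence


def _matches(instruction: str, nasal_flow: bool) -> bool:
    """True when the detected flow state is the one the instruction asks for."""
    if instruction not in ("open", "close"):
        raise ValueError("instruction must be 'open' or 'close'")
    return nasal_flow if instruction == "open" else not nasal_flow


def update_flame(height: float, instruction: str, nasal_flow: bool,
                 step: float = 0.1, min_h: float = 0.0, max_h: float = 1.0) -> float:
    """Shrink the flame (reward) on a correct state, grow it otherwise."""
    new = height - step if _matches(instruction, nasal_flow) else height + step
    return min(max_h, max(min_h, new))


def response_latency(flow_detected: Sequence[bool], fs: float,
                     instruction: str) -> Optional[float]:
    """Seconds from instruction onset (sample 0) to the first correct sample."""
    for i, flow in enumerate(flow_detected):
        if _matches(instruction, flow):
            return i / fs
    return None  # the instructed state was never reached
```

For instance, under "open", detected nasal flow lowers a flame at 0.5 to 0.4, whereas under "close" the same flow raises it to 0.6; `response_latency([False, False, True], fs=2.0, instruction="open")` returns 1.0 s.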

Fig. 4.

Writing with sniffs. (A) The sniff-controlled letter-board interface. The green highlight moves sequentially from the block of letters to the block of signs to the block of word completions repeatedly until selected by a sniff (sniff trace seen in the lower black window). Here the block of letters was selected (left panel), resulting in the green highlight now moving from line to line. The third line was selected by a sniff (middle panel), resulting in the green highlight now moving across the letters in the third line. The sixth letter (S) was selected by a sniff (right panel), resulting in the letter S being added to the text line, and the process then starts over. (B) Learning curve for letter generation for LI1. (C) Learning curve for letter generation for LI2. (D) Learning curve for letter generation for QU1 using the sniff-controlled cursor (all graphs logarithmic fit). (E) The sniff-controlled cursor interface. (Top) The active state denoted by the black bar moves sequentially across the four directions (rectangles) and the two mouse buttons (circles), and can be activated at any stage by a sniff. (Bottom) The red bar denotes the threshold for signal generation, and the green bar denotes current nasal flow. In other words, in the current state a signal was generated, and because the upper bar was currently blackened, the computer cursor would now move from the bottom toward the top of the screen (south to north). (F) Average time per letter for 10 quadriplegic participants in three consecutive trials using the letter-board interface (as in A). (G) Average time per letter for 10 quadriplegic participants in three consecutive trials using the navigational interface (as in E). (H) Ten quadriplegic participants’ total time for opening an internet browser and performing a search. All error bars represent SE. *P < 0.05.

Encouraged by the results with LI1, we approached LI2, a 42-y-old man who was locked-in for ~18 y following a car accident (Fig. S4). LI2 had no peripheral motion, and communicated by blinking one eye. A past attempt to apply an eye tracker had failed because LI2 “did not like it,” and LI2 remained dependent on the ability of his caretakers to read his eye blinks. Like LI1, LI2 had a tracheostomy yet breathed independently.

Unlike LI1, LI2 did not require a biofeedback training phase. LI2 generated a sniff at first try, and used the sniff controller to write his own name within 20 min of first fitting the device. LI2 compared the device to his previous experience with an eye tracker, writing that it is “more comfortable and more easy to use.” His average time per letter decreased within 3 wk from 60.67 ± 52.79 to 49.33 ± 36.17 s (r = 0.2, P = NS) (Fig. 4C). LI2 continues to use the sniff controller regularly.

We further approached LI3, a 64-y-old man who was locked-in following a stroke that occurred ~4 y prior. LI3 had seemingly intentional motion in his right hand, yet efforts to have him use it for communication through either writing or typing had failed for no apparent reason. His only means of communication was eye movements that were interpretable by his immediate family but did not allow any self-initiated expression. Despite 2 mo of daily practice, LI3 did not gain control of his soft palate. This is consistent with the ~25% of healthy volunteers screened for the fMRI study who similarly did not have volitional control of their soft palate at initial screening (SI Text). That said, because LI3 was also severely depressed, we could not determine whether this failure reflected a genuine inability or rather disinterest.

LI3 embodied a typical example of the complications often associated with evaluating the cognitive and emotional state of locked-in patients (38). The sniff controller, however, may also provide a means of communication for individuals who are not locked-in yet cannot speak or write. With this in mind, we approached QU1, a 63-y-old woman with quadriplegia resulting from severe multiple sclerosis. QU1 could speak, albeit with significant difficulty. Like LI2, QU1 did not require soft-palate biofeedback, and was able to write with the sniff controller shortly after its introduction at a rate of 33.53 ± 26.1 s per letter. This was the first time QU1 had written in 10 y. After 3 wk of writing with the letter-board software, we furnished QU1 with more sophisticated software that enabled sniff control of the computer cursor (Fig. 4E and Movie S3). QU1 mastered this rapidly, using the cursor to choose letters from a virtual keyboard initially at 109.42 ± 60.83 s per letter, yet within 3 wk at 41.83 ± 29.33 s per letter (r = 0.81, P < 0.001) (Fig. 4D). QU1 now uses the sniff controller to surf the net and write e-mail.

The above studies entailed a significant effort invested in each participant, much like separate case studies that involved detailed measurements performed daily over months. To further assess the practicality of the sniff controller for non-locked-in yet severely disabled individuals, we measured the performance of 10 quadriplegic participants who used the sniff controller for the first time, in two tasks. The first task entailed using the standard letter-board interface (Fig. 4A and Movie S2) to write three words (a total of 11 letters and 2 spaces). Quadriplegic participants wrote with ease, accelerating from 11.41 ± 1.8 to 9 ± 1.51 s per letter in only three tries [t(difference between first and third) = 3.7, P < 0.005] (Fig. 4F). The second task entailed using the navigational interface (Fig. 4E and Movie S3) to open an internet browser, write the same three words on a page, copy and paste them into a search engine, and perform the search. Again, quadriplegic participants completed this navigational task with ease, improving across three tries [time to open browser: first = 92.4 ± 20.96 s, second = 81.4 ± 18.31 s, third = 74.3 ± 15.5 s, t(difference between first and third) = 4.29, P < 0.002; average time per letter: first = 22.36 ± 2.29 s, second = 19.58 ± 2.45 s, third = 19.25 ± 2.14 s, t(difference between first and third) = 3.25, P < 0.01; time to copy, paste, and search: first = 98.3 ± 17.34 s, second = 89.2 ± 16.65 s, third = 70.8 ± 10.42 s, t(difference between first and third) = 3.57, P < 0.006] (Fig. 4 G and H). In other words, non-locked-in yet severely disabled individuals could use the sniff controller with little or no practice.

Encouraged by the ability to use the sniff controller for writing text in 2 of 3 locked-in individuals, 1 nearly locked-in individual, and 10 of 10 quadriplegic individuals, we set out to test its application to additional aspects of environmental control. We built a simple sniff-dependent code for directional motion where two successive “sniffs in” implied “forward,” two successive “sniffs out” implied backward, successive “sniffs out then in” implied “left,” and successive “sniffs in then out” implied “right” (Fig. 5 A–C). We linked this controller to an electric wheelchair, such that each activation of a command generated an incremental action in the intended direction. In other words, a “left” command turned the chair slightly left, an additional “left” command turned the chair more to the left, and so on.
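The two-sniff directional code can be decoded with a small state machine: hold the first sniff, and if a second sniff arrives within a time window, map the ordered pair to a command; otherwise treat the second sniff as the start of a new pair. The sketch below follows the mapping stated above, but the pairing window (`max_gap_s`), the event format, and the incremental speed and heading steps in `apply_commands` are hypothetical values, not the settings of the wheelchair software.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# Mapping from the text: in-in forward, out-out backward,
# out-then-in left, in-then-out right.
CODES: Dict[Tuple[str, str], str] = {
    ("in", "in"): "forward",
    ("out", "out"): "backward",
    ("out", "in"): "left",
    ("in", "out"): "right",
}


def decode_pairs(events: Sequence[Tuple[float, str]],
                 max_gap_s: float = 1.0) -> List[Tuple[float, str]]:
    """Turn time-stamped sniffs (t_s, 'in'|'out') into (t_s, command) pairs.

    A sniff not followed by another within max_gap_s (an assumed window)
    is discarded and the later sniff starts a new candidate pair.
    """
    commands: List[Tuple[float, str]] = []
    pending: Optional[Tuple[float, str]] = None
    for t, direction in events:
        if pending is not None and t - pending[0] <= max_gap_s:
            commands.append((t, CODES[(pending[1], direction)]))
            pending = None
        else:
            pending = (t, direction)
    return commands


def apply_commands(commands: Sequence[Tuple[float, str]], speed_step: float = 0.1,
                   turn_step_deg: float = 10.0) -> Tuple[float, float]:
    """Accumulate incremental actions: each command nudges speed or heading."""
    speed, heading = 0.0, 0.0
    for _, cmd in commands:
        if cmd == "forward":
            speed += speed_step
        elif cmd == "backward":
            speed -= speed_step
        elif cmd == "left":
            heading += turn_step_deg  # repeated "left" turns further left
        elif cmd == "right":
            heading -= turn_step_deg
    return speed, heading
```

Given sniffs at 0.0 s (in), 0.5 s (in), 3.0 s (out), 5.0 s (out), 5.4 s (in), 8.0 s (in), and 8.6 s (out), the decoder emits forward, left, and right; the isolated sniff at 3.0 s is dropped because the next one arrives 2 s later.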

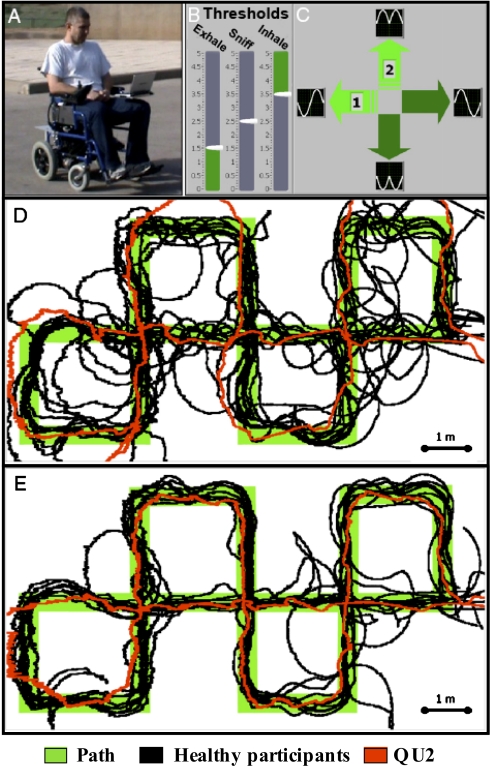

Fig. 5.

Driving with sniffs. (A) A healthy participant driving the sniff-controlled wheelchair. (B and C) The user interface. (B) The threshold settings for sniff-in and sniff-out activation levels. (C) The current direction. Note the sniff pattern denoted for each direction. For example, a turn left is sniff out then in. (D) Results of the first trial driving the sniff-controlled wheelchair. The complex path is denoted in green, with overlaid performance of 10 healthy participants in black and QU2 in red. (E) Results of the third trial driving the sniff-controlled wheelchair, same color code as above.

We first tested the ability of 10 healthy participants to drive the wheelchair along a complex 35-m-long path. The path consisted of many 90° turns, as these were relatively more difficult to manage using our interface. Nevertheless, subjects navigated this path with ease, and improved across three 3- to 5-min-long practice sessions [average distance from path (cm) using sniff controller: practice 1 = 29.52 ± 7.51, practice 2 = 27.06 ± 14.18, practice 3 = 19.01 ± 7.79, F = 3.35, P = 0.058; first vs. third: t = 3.67, P < 0.005] (Fig. 5 D and E and Movie S4). Subjects remained, however, more accurate with a standard joystick (J = 7.33 ± 1.34, t = 4.02, P < 0.003).

After finding that healthy participants could drive the wheelchair using the sniff controller, we set out to test whether a quadriplegic participant could do the same. We fitted the sniff controller to the existing chair of subject QU2, a 30-y-old man paralyzed from the neck down (C3) following a car accident that occurred 6 y earlier. Whereas QU2 performed worse than the average healthy participant at his first use of the sniff controller (QU2 error = 36.25 cm, average healthy error = 29.52 ± 7.51 cm, t = 2.83, P < 0.02), he was equal to healthy participants by his second (21.53 cm error, t = 1.17, P = 0.27) and third (16.68 cm, t = 0.89, P = 0.39) trials. In other words, a quadriplegic person could use the sniff controller to drive an electric wheelchair with high precision following a total of only 15 min of practice (Fig. 5 D and E).

Finally, a concern one may raise regarding the proposed sniff-control technology is that in high-demand situations (e.g., wheelchair control) it may lead to hyperventilation. To test this, 14 subjects activated the sniff controller at different rates (0.2, 0.25, 0.33, 0.5, and 1 Hz) continuously for 2 min, followed by 2 min of regular respiration in which we measured end-tidal CO2. There was no difference in end-tidal CO2 between the baseline period and the following sniffing periods (4.5% ± 0.3% at 0.2 Hz; 4.5% ± 0.4% at 0.25 Hz; 4.4% ± 0.4% at 0.33 Hz; 4.5% ± 0.5% at 0.5 Hz; and 4.3% ± 0.5% at 1 Hz; F = 2.2, P = NS) (Fig. S5), largely negating the concern of hyperventilation.

Discussion

We hypothesized that sniffs would allow precise device control, and that this would remain conserved in cases of severe disability. The former was confirmed in a series of experiments with 96 healthy participants, and the latter was confirmed in ongoing studies with 15 severely disabled participants. Initial observation suggests that sniff control was far slower in disabled than in healthy participants. This impression, however, is exacerbated by the fact that whereas most of the values reported in this manuscript for healthy participants were signal-generation latencies, most of the values for disabled participants were letter-generation latencies. Selecting a letter from the letter board, however, typically entails generating three separate signals: sniffing to select a “view” (letters, numbers, or word completion), sniffing to select a line, and then sniffing to select a letter. Furthermore, the opportunity to make each of these selections was subject to a preset interselection interval that further lengthened the process. To more directly gauge the difference between healthy and disabled users, one can compare activation of the computer game by healthy users (Fig. 1) to the biofeedback task performed by LI1 (Fig. 3). This comparison reveals an ~10-fold increase in reaction time for LI1 compared with healthy users (~2.5 s vs. ~250 ms). In turn, using the sniff controller, quadriplegic participants were equal to healthy participants at writing speed (Fig. 4 F–H) and wheelchair-driving accuracy (Fig. 5 D and E). In other words, disabled participants could use the sniff controller at speeds that allowed various tasks.
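The gap between signal latency and letter latency can be made explicit with a simple timing model of the hierarchical letter board (block, then line, then letter). In the sketch below, the highlight restarts at the first item of each level and advances every `dwell_s` seconds, the user responds `reaction_s` after the target is highlighted, and a fixed `pause_s` follows each selection. These parameter names and the model itself are assumptions for illustration; the actual scan and interselection intervals are not given here.

```python
from typing import Sequence


def letter_time(positions: Sequence[int], dwell_s: float,
                reaction_s: float, pause_s: float) -> float:
    """Estimated seconds to select one letter on a hierarchical scanning board.

    positions: 0-based index of the target at each level (e.g. block, line,
    letter). Each level costs the wait for the highlight to reach the target
    (index * dwell), the user's reaction time, and a fixed post-selection
    pause. The model assumes reaction_s <= dwell_s, so the sniff lands while
    the target is still highlighted.
    """
    if reaction_s > dwell_s:
        raise ValueError("reaction time exceeds dwell; the target would be missed")
    return sum(k * dwell_s + reaction_s + pause_s for k in positions)
```

Using the example in Fig. 4A (first block, third line, sixth letter, i.e. positions `(0, 2, 5)`) with an assumed 1.0-s dwell, 0.5-s reaction, and 1.0-s pause gives 11.5 s for a single letter, even though each individual signal takes only 0.5 s. Because every letter requires three reactions, a slower responder pays that difference three times per letter, and the dwell must also be lengthened to accommodate the slower reaction.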

Regarding activities such as wheelchair control, one may question the practical boundary conditions of the sniff controller: Could a person crash a wheelchair because they took a breath instead of sniffing? If the user has good control of the soft palate, then they can breathe independently of sniffing. However, considering the possibility of less-than-perfect control, the sniff controller can be programmed as a function of error cost. In the current wheelchair control software, all commands were double, that is, two consecutive sniffs in, two consecutive sniffs out, and so forth (Movie S4). The probability of unintentionally generating two consecutive breaths within a defined limited time window is low. If one wanted to control an actuator with even greater safety, one could implement an even more complex code, for example, two quick sniffs in followed by a sniff out to actuate. The probability of unintentionally activating such a sniff train is exceedingly low. However, the safer the code, the longer it takes to actuate. In this respect, our double-sniff code was a good compromise, as it was sufficiently fast to drive yet none of our naive participants, either healthy or quadriplegic, crashed the wheelchair during a total of 33 driving sessions. Finally, in this respect, we speculate that all users may significantly improve at sniff control over prolonged practice. Such improvement depends on the development of intricate volitional dissociation between sniffing and breathing. At the simplest level, volitional opting for a nasal over oral (or tracheal) inspiration is sufficient to generate sniff control. However, practice-derived control of the soft palate has allowed long-term healthy users such as coauthors A.P. and N. Sobel to use the sniff controller to write and talk at the same time, drive a wheelchair and talk at the same time, and so forth. We speculate that practice may allow many disabled users similar levels of control.
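The trade-off between code length and accidental activation can be illustrated with a back-of-the-envelope model. The sketch below assumes, purely for illustration, that stray sniff-like events occur as a Poisson process with rate `stray_rate_hz`; each further event then falls within `window_s` of the previous one with probability 1 − exp(−rate × window). A code of n events also requires the stray directions to spell a valid pattern (`p_pattern`, which is 1.0 for the double-sniff wheelchair code because every in/out pair maps to some command). The Poisson assumption, the parameter names, and the neglect of overlapping trains are all simplifications, not measurements from this study.

```python
import math


def false_command_rate(stray_rate_hz: float, window_s: float, code_len: int,
                       p_pattern: float = 1.0) -> float:
    """Approximate rate (per second) of accidentally completing a sniff code.

    Assumes stray events form a Poisson process, so successive inter-event
    gaps are independent; overlapping candidate trains are ignored.
    """
    if code_len < 1:
        raise ValueError("code_len must be at least 1")
    if not 0.0 <= p_pattern <= 1.0:
        raise ValueError("p_pattern must be a probability")
    # Chance that the next stray event arrives within the pairing window
    p_next = 1.0 - math.exp(-stray_rate_hz * window_s)
    return stray_rate_hz * p_next ** (code_len - 1) * p_pattern
```

Multiplying by 3600 gives events per hour. With a hypothetical rate of one stray event per 100 s and a 1-s window, moving from a double code (any pair valid) to a specific triple such as in-in-out (`p_pattern = 1/8` if directions are equally likely) lowers the estimated accidental-activation rate by roughly three orders of magnitude, while adding one more sniff to every intended command.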

A key question is how sniff control compares with other available assistive technologies. As noted in the introduction, genuine brain–computer or brain–machine interfaces provide the most promising direction (7–15), yet these devices have yet to provide a solution for the masses of severely disabled (16). For severely disabled individuals who are not locked-in, there are several types of assistive technology. These include the aforementioned tongue control, sip-puff, head-motion sensors, and eye tracking (20, 21, 25). Each of these methods has its advantages, yet we submit that the combination of simplicity, robustness, high degrees of freedom, minimal attentional demands, and neural conservation renders sniff control a particularly appealing solution (see SI Discussion for additional comparison across methods). As to assistive technology applicable in the locked-in state, aside from directly measuring brain activity or slow autonomic responses, to the best of our knowledge the only previously available solutions were sphincter control and eye tracking (22–24). Sniff control, however, costs a fraction of these methods, yet provides several advantages highlighted by the increased degrees of freedom offered by the combination of binary and proportional sniff-dependent signals.

Using the sniff controller, locked-in participants wrote at a rate similar to that measured by others using a noninvasive P300 brain–machine interface (39, 40). In the eyes of the healthy observer, writing at about 3 letters (LI1) or 1.5 letters (LI2) per min may seem frustratingly slow. Such writing speeds, however, are greeted with enthusiasm by locked-in individuals. The critical aspect of such assistive technology is that it allows self-generated meaningful expression rather than merely answering yes/no questions. The speed of this self-expression is less important to individuals who, put bluntly, have no other options. Indeed, after establishing communication with LI1 and LI2, we asked them to suggest improvements to the sniff controller. Both LI1 and LI2 are computer-savvy, and are clearly capable of raising the issue of writing speed, yet neither of them did. LI1 requested that we better secure the laptop to her wheelchair so that it would not fall (it never did, yet this concerned her). Finally, in this respect, it is noteworthy that the influential novel The Diving Bell and the Butterfly (41) was written by locked-in Jean-Dominique Bauby using eye movements at a rate of about 1 word every 2 min, a rate faster than LI2 sniff control but slower than LI1 sniff control. In other words, such writing rates allow meaningful self-expression.

With all of the above in mind, we conclude that redirection of sniff motor programs toward alternative functions allows sniffing to provide a control interface that is fast, accurate, robust, and highly conserved following severe injury. This approach, which may provide a host of viable solutions for the growing population of individuals who are severely disabled, now awaits testing in additional disorders of consciousness, including the vegetative state.

Methods

Ninety-six healthy and 15 severely disabled subjects participated in studies after providing informed consent to procedures approved by the Helsinki Committee. Disabled participants are described in detail in SI Methods. The sniff controller consisted of a nasal cannula that carries changes in nasal air pressure from the nose to a pressure transducer (Fig. 1B). The pressure transducer translates these pressure changes into an electrical signal that is passed to the computer via a USB connection. For computer games, subjects used the various actuators to indicate when a target changed its color, or when it arrived at an intended location. To write text with the sniff controller, using a simple interface (Fig. 4A and Movies S1 and S2), the user sniffed when a particular letter was highlighted (letters were highlighted sequentially) or, using a more sophisticated interface (Fig. 4E and Movie S3), the user sniffed when a particular cursor direction was “active” (the active state shifted sequentially). A block-design fMRI study of soft-palate closure was conducted using imaging methods previously described (36, 37), here using a random-effects statistical design. All statistical comparisons relied on repeated-measures analysis of variance followed by individual tests with Tukey correction for multiple comparisons whenever appropriate. See SI Methods for additional details regarding methods.

Supplementary Material

Acknowledgments

This work was supported by an FP7 Grant from the European Research Council (200850). N.S. is also supported by the James S. McDonnell Foundation.

Footnotes

Conflict of interest statement: The Weizmann Institute has filed for a patent on the sniff-controlled technology that is at the heart of this manuscript.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1006746107/-/DCSupplemental.

See Commentary on page 13979

References

1. Ling GS, Rhee P, Ecklund JM. Surgical innovations arising from the Iraq and Afghanistan wars. Annu Rev Med. 2010;61:457–468. doi: 10.1146/annurev.med.60.071207.140903.

2. Marris E. Four years in Iraq: The war against wounds. Nature. 2007;446:369–371. doi: 10.1038/446369a.

3. American Congress of Rehabilitation Medicine. Recommendations for use of uniform nomenclature pertinent to patients with severe alterations in consciousness. Arch Phys Med Rehabil. 1995;76:205–209. doi: 10.1016/s0003-9993(95)80031-x.

4. Plum F, Posner JB. The Diagnosis of Stupor and Coma. Philadelphia: F.A. Davis; 1966.

5. Smith E, Delargy M. Locked-in syndrome. BMJ. 2005;330:406–409. doi: 10.1136/bmj.330.7488.406.

6. Hayashi H, Kato S. Total manifestations of amyotrophic lateral sclerosis. ALS in the totally locked-in state. J Neurol Sci. 1989;93:19–35. doi: 10.1016/0022-510x(89)90158-5.

7. Kennedy PR, Bakay RA. Restoration of neural output from a paralyzed patient by a direct brain connection. Neuroreport. 1998;9:1707–1711. doi: 10.1097/00001756-199806010-00007.

8. Kübler A, Neumann N. Brain-computer interfaces: The key for the conscious brain locked into a paralyzed body. Prog Brain Res. 2005;150:513–525. doi: 10.1016/S0079-6123(05)50035-9.

9. Nicolelis MAL. Brain-machine interfaces to restore motor function and probe neural circuits. Nat Rev Neurosci. 2003;4:417–422. doi: 10.1038/nrn1105.

10. Birbaumer N, et al. A spelling device for the paralysed. Nature. 1999;398:297–298. doi: 10.1038/18581.

11. Wolpaw JR, McFarland DJ. Control of a two-dimensional movement signal by a noninvasive brain-computer interface in humans. Proc Natl Acad Sci USA. 2004;101:17849–17854. doi: 10.1073/pnas.0403504101.

12. Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain-computer interfaces for communication and control. Clin Neurophysiol. 2002;113:767–791. doi: 10.1016/s1388-2457(02)00057-3.

13. Sellers EW, Donchin E. A P300-based brain-computer interface: Initial tests by ALS patients. Clin Neurophysiol. 2006;117:538–548. doi: 10.1016/j.clinph.2005.06.027.

14. Hochberg LR, et al. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature. 2006;442:164–171. doi: 10.1038/nature04970.

15. Abbott A. Neuroprosthetics: In search of the sixth sense. Nature. 2006;442:125–127. doi: 10.1038/442125a.

16. Ryu SI, Shenoy KV. Human cortical prostheses: Lost in translation? Neurosurg Focus. 2009;27:E5. doi: 10.3171/2009.4.FOCUS0987.

17. Wilhelm B, Jordan M, Birbaumer N. Communication in locked-in syndrome: Effects of imagery on salivary pH. Neurology. 2006;67:534–535. doi: 10.1212/01.wnl.0000228226.86382.5f.

18. Monti MM, et al. Willful modulation of brain activity in disorders of consciousness. N Engl J Med. 2010;362:579–589. doi: 10.1056/NEJMoa0905370.

19. Owen AM, Coleman MR. Functional neuroimaging of the vegetative state. Nat Rev Neurosci. 2008;9:235–243. doi: 10.1038/nrn2330.

20. Dymond E, Potter R. Controlling assistive technology with head movements: A review. Clin Rehabil. 1996;10:93–103.

21. Struijk LN. An inductive tongue computer interface for control of computers and assistive devices. IEEE Trans Biomed Eng. 2006;53:2594–2597. doi: 10.1109/TBME.2006.880871.

22. Birbaumer N. Breaking the silence: Brain-computer interfaces (BCI) for communication and motor control. Psychophysiology. 2006;43:517–532. doi: 10.1111/j.1469-8986.2006.00456.x.

23. Schnakers C, et al. Cognitive function in the locked-in syndrome. J Neurol. 2008;255:323–330. doi: 10.1007/s00415-008-0544-0.

24. Majaranta P, Räihä KJ. Text entry by gaze: Utilizing eye-tracking. In: MacKenzie IS, Tanaka-Ishii K, editors. Text Entry Systems: Mobility, Accessibility, Universality. San Francisco: Morgan Kaufmann; 2007. pp. 175–191.

25. Fugger E, Asslaber M, Hochgatterer A. Mouth-controlled interface for human-machine interaction in education & training. In: Marincek C, Bühler C, Knops H, Andrich R, editors. Assistive Technology: Added Value to the Quality of Life, AAATE 01. Amsterdam: IOS; 2001. pp. 379–383.

26. Wellings DJ, Unsworth J. Fortnightly review. Environmental control systems for people with a disability: An update. BMJ. 1997;315:409–412. doi: 10.1136/bmj.315.7105.409.

27. Knecht M, Hummel T. Recording of the human electro-olfactogram. Physiol Behav. 2004;83:13–19. doi: 10.1016/j.physbeh.2004.07.024.

28. Mainland J, Sobel N. The sniff is part of the olfactory percept. Chem Senses. 2006;31:181–196. doi: 10.1093/chemse/bjj012.

29. Kepecs A, Uchida N, Mainen ZF. Rapid and precise control of sniffing during olfactory discrimination in rats. J Neurophysiol. 2007;98:205–213. doi: 10.1152/jn.00071.2007.

30. Johnson BN, Mainland JD, Sobel N. Rapid olfactory processing implicates subcortical control of an olfactomotor system. J Neurophysiol. 2003;90:1084–1094. doi: 10.1152/jn.00115.2003.

31. Sobel N, Khan RM, Hartley CA, Sullivan EV, Gabrieli JD. Sniffing longer rather than stronger to maintain olfactory detection threshold. Chem Senses. 2000;25:1–8. doi: 10.1093/chemse/25.1.1.

32. Laing DG. Natural sniffing gives optimum odour perception for humans. Perception. 1983;12:99–117. doi: 10.1068/p120099.

33. Strutz J, Hammerich T, Amedee R. The motor innervation of the soft palate. An anatomical study in guinea pigs and monkeys. Arch Otorhinolaryngol. 1988;245:180–184. doi: 10.1007/BF00464023.

34. Shimokawa T, Yi S, Tanaka S. Nerve supply to the soft palate muscles with special reference to the distribution of the lesser palatine nerve. Cleft Palate Craniofac J. 2005;42:495–500. doi: 10.1597/04-142r.1.

35. Ward DJ, MacKay DJ. Artificial intelligence: Fast hands-free writing by gaze direction. Nature. 2002;418:838. doi: 10.1038/418838a.

36. Simonyan K, Saad ZS, Loucks TM, Poletto CJ, Ludlow CL. Functional neuroanatomy of human voluntary cough and sniff production. Neuroimage. 2007;37:401–409. doi: 10.1016/j.neuroimage.2007.05.021.

37. Sobel N, et al. Sniffing and smelling: Separate subsystems in the human olfactory cortex. Nature. 1998;392:282–286. doi: 10.1038/32654.

38. Laureys S, et al. The locked-in syndrome: What is it like to be conscious but paralyzed and voiceless? Prog Brain Res. 2005;150:495–511. doi: 10.1016/S0079-6123(05)50034-7.

39. Guger C, et al. How many people are able to control a P300-based brain-computer interface (BCI)? Neurosci Lett. 2009;462:94–98. doi: 10.1016/j.neulet.2009.06.045.

40. Nijboer F, et al. A P300-based brain-computer interface for people with amyotrophic lateral sclerosis. Clin Neurophysiol. 2008;119:1909–1916. doi: 10.1016/j.clinph.2008.03.034.

41. Bauby J-D. The Diving Bell and the Butterfly. Paris: Editions Robert Laffont; 1997.
