SYNOPSIS

Objective

Existing knowledge of evidence-based chronic disease prevention is not systematically disseminated or applied. This study investigated state and territorial chronic disease practitioners' self-reported barriers to evidence-based decision making (EBDM).

Methods

In a nationwide survey, participants indicated the extent to which they agreed with statements reflecting four personal and five organizational barriers to EBDM. Responses were measured on a Likert scale from 0 to 10, with higher scores indicating a larger barrier to EBDM. We analyzed mean levels of barriers and calculated adjusted odds ratios for barriers that were considered modifiable through interventions.

Results

Overall, survey participants (n=447) reported higher scores for organizational barriers than for personal barriers. The largest reported barriers to EBDM were lack of incentives/rewards, inadequate funding, a perception of state legislators not supporting evidence-based interventions and policies, and feeling the need to be an expert on many issues. In adjusted models, women were more likely to report a lack of skills in developing evidence-based programs and in communicating with policy makers. Participants with a bachelor's degree as their highest degree were more likely than those with public health master's degrees to report lacking skills in developing evidence-based programs. Men, specialists, and individuals with doctoral degrees were all more likely to feel the need to be an expert on many issues to effectively make evidence-based decisions.

Conclusions

Approaches must be developed to address organizational barriers to EBDM. Focused skills development is needed to address personal barriers, particularly for chronic disease practitioners without graduate-level training.

Seven of every 10 Americans who die each year, or more than 1.7 million people, do so as a result of a chronic disease.1 In the United States, the direct and indirect costs of the most common chronic diseases totaled $1.3 trillion in 2003, with projected costs expected to reach $4.2 trillion in 2023.2 Evidence-based interventions have great potential to impact chronic disease, based on their reliance upon research-proven methods.3–6

Based on more than a decade of discourse, evidence-based public health is summarized as “making decisions based on the best available scientific evidence, using data and information systems systematically, applying program-planning frameworks, engaging the community in decision making, conducting sound evaluation, and disseminating what is learned.”7 Ideally, public health practitioners would make evidence-based decisions by employing these concepts in all chronic disease prevention programs. However, existing knowledge on effective chronic disease prevention is not systematically disseminated or applied.

There are now analytic tools that can identify evidence-based interventions and foster uptake. For example, the Task Force on Community Preventive Services has produced the Guide to Community Preventive Services (hereafter, the Community Guide), enumerating interventions that are effective in preventing chronic diseases.5 The topics covered in the Community Guide are diverse and include tobacco, physical activity, nutrition, cancer screening, diabetes, obesity, and other related topics. Despite the availability of such tools, numerous studies show that implementation of evidence-based interventions is low in many clinical and community settings.8–12

Effective programs do not achieve their potential if they are not disseminated beyond their original testing in research trials.13–15 Sparse knowledge exists regarding effective approaches for dissemination of research-tested interventions among real-world practice audiences. A systematic review of 35 dissemination studies found no strong evidence to recommend any particular dissemination strategy as effective in promoting the uptake of evidence-based chronic disease control interventions.16 Further research is necessary to understand the determinants and approaches that will enhance the dissemination of effective interventions. Given the 50 states' constitutional authority to protect the public's health, practitioners in state health departments are in a unique position to implement programs and services related to chronic disease control.17,18 These practitioners can provide rich insight into the processes by which evidence-based programs are implemented and disseminated.

Both individual and organizational factors can impede public health practitioners' ability to implement evidence-based programs.19 Previously identified barriers to implementation include lack of skilled personnel, lack of time to gather evidence, inadequate resources and funding, fragmented local and state public health services, and limited buy-in from leadership.8, 20–22 Additionally, public health decisions are made on short timescales due to short-term targets and budget cycles, impeding the ability to make longer-term plans that are often necessary for evidence-based interventions.23

To date, no U.S. studies have identified the salience of barriers to evidence-based practice among a representative sample of chronic disease practitioners. This article explores state and territorial chronic disease practitioners' barriers to evidence-based decision making (EBDM) and how these differ by characteristics of the practitioners. This analysis is part of an ongoing study that aims to increase the dissemination of evidence-based chronic disease interventions in public health agency settings.

METHODS

Survey development

State-level chronic disease practitioners from the 50 U.S. states and territories completed a 74-question online survey from June through August 2008. Along with questions about barriers to EBDM, the survey included questions about the use of the Community Guide and other resources, the importance and availability of key components of EBDM, personal chronic disease-related health behaviors of the respondent, and additional demographic information. Open-ended questions captured qualitative data on participants' perceptions of barriers to EBDM, changes needed to increase EBDM, and other resources for using evidence-based interventions. The survey was designed to be completed in 15 minutes.

The research team developed survey questions based on previous work24 and input from chronic disease practitioners. The survey was tested with a panel of consultants comprising chronic disease experts and a representative sample of the target population of the survey (n=12). After reviewing a draft of the survey, panel members gave cognitive response feedback in a one-hour telephone interview based on methods previously published.25–28 The research team incorporated this feedback into the final version of the survey.

Because it represents chronic disease practitioners at the state and territorial levels, the membership of the National Association of Chronic Disease Directors (NACDD) was chosen as the target population for this study. All members of NACDD were contacted by e-mail to explain the purpose of the study and the survey. One week later, participants received a link to the survey. Trained research staff phoned each participant to ensure receipt of the survey and to discuss any questions. Reminder e-mails were sent every few weeks until the survey closed.

Of particular relevance to this article were nine statements relating to barriers to evidence-based practices, presented on a Likert scale from 0 to 10, with 0 indicating “strongly disagree” and 10 indicating “strongly agree.” Participants reported the extent to which they agreed with statements reflecting four personal and five organizational barriers. Seven of the nine statements were reverse-coded so that for all barriers, a higher score indicated a larger barrier to EBDM.
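The reverse-coding step can be illustrated with a minimal sketch. It assumes the conventional reversal for a bounded scale (new score = scale maximum + scale minimum − original score); the function name and the example responses are hypothetical and are not taken from the survey data.

```python
# Minimal sketch of reverse-coding on the 0-10 agreement scale.
# Assumption: a reversed item is scored as (max + min - score), so that
# "strongly agree" (10) with a positively worded statement becomes 0.

SCALE_MIN, SCALE_MAX = 0, 10


def reverse_code(score):
    """Flip a 0-10 agreement score so that higher means a larger barrier."""
    if not SCALE_MIN <= score <= SCALE_MAX:
        raise ValueError(f"score {score} is outside {SCALE_MIN}-{SCALE_MAX}")
    return SCALE_MAX + SCALE_MIN - score


# Hypothetical responses to one positively worded statement.
responses = [0, 3, 10, 7]
reversed_scores = [reverse_code(r) for r in responses]
print(reversed_scores)
```

After this step, every barrier can be read in the same direction, which is what allows the personal and organizational barrier means to be compared.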

Analysis

Of 469 survey respondents (response rate = 65%), 95% reported working in state health departments. Analysis was restricted to those individuals, resulting in a final sample size of 447 participants. Descriptive statistics were calculated for each barrier. Sample sizes varied due to missing data. For barriers with greater than 10% missing data, a Chi-square analysis was performed to compare participants with and without missing data across the variables listed in Table 1. Additionally, the mean score for each barrier was calculated for each U.S. state, excluding states with fewer than four respondents for a given barrier statement. These mean scores were divided into quartiles and mapped to discern geographic patterns. Due to a low number of respondents per state (mean = 8.0, 95% confidence interval [CI] 6.9, 9.0; range: 1–18), state-level results are not reported. However, patterns are presented to inform future research.
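The state-level step described above (per-state means, exclusion of states with fewer than four respondents, then quartiles) might be sketched as follows. The records are hypothetical, the function names are illustrative, and the quartile cut points use the default method of Python's `statistics.quantiles`, which may differ from the software the research team used.

```python
# Sketch: mean barrier score per state, dropping states with fewer than
# four respondents for the statement, then assigning quartiles (1 = lowest).
from bisect import bisect_left
from collections import defaultdict
from statistics import mean, quantiles

MIN_RESPONDENTS = 4  # threshold stated in the text


def state_means(records, min_n=MIN_RESPONDENTS):
    """Return {state: mean score}, skipping missing values and small states."""
    by_state = defaultdict(list)
    for state, score in records:
        if score is not None:  # missing responses are ignored
            by_state[state].append(score)
    return {s: mean(v) for s, v in by_state.items() if len(v) >= min_n}


def assign_quartiles(means):
    """Map each state to a quartile of the state-level means."""
    cuts = quantiles(list(means.values()), n=4)  # three cut points
    return {s: bisect_left(cuts, m) + 1 for s, m in means.items()}


# Hypothetical (state, score) records for a single barrier statement.
records = (
    [("A", s) for s in (5, 6, 7)]             # three respondents: excluded
    + [("B", s) for s in (2, 2, 2, 2)]
    + [("C", s) for s in (3, 4, 4, 5)]
    + [("D", s) for s in (6, 6, 6, 6, None)]  # one missing response
    + [("E", s) for s in (8, 8, 8, 8)]
)

means = state_means(records)
quartile_by_state = assign_quartiles(means)
print(quartile_by_state)
```

Applying the exclusion per barrier statement, rather than per state overall, matters because missing data varied by item.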

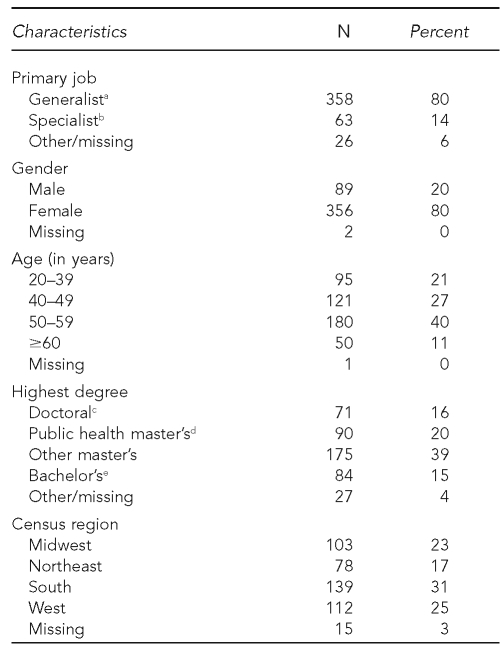

Table 1.

Characteristics of state and territorial chronic disease practitioners (n=447) who responded to a national survey on evidence-based decision making, 2008

^a Generalists included program managers/administrators/coordinators, program planners, division or bureau heads, division deputy directors, department heads, and an academic educator.

^b Specialists were health educators, epidemiologists, statisticians, program evaluators, community health nurses, social workers, dietitians, or nutritionists.

^c Doctor of Philosophy, Doctor of Public Health, Doctor of Medicine, Doctor of Science, or Doctor of Osteopathic Medicine

^d Master of Public Health or Master of Science in Public Health

^e Bachelor of Arts or Bachelor of Science

In the survey, participants recorded all of the academic degrees that they held. To incorporate this information into multivariate analyses, the research team created a variable for the highest degree achieved. Survey participants were coded in the following order: (1) doctoral degree (Doctor of Philosophy, Doctor of Public Health, Doctor of Science, Doctor of Medicine, or Doctor of Osteopathic Medicine); (2) public health master's degree (Master of Public Health or Master of Science in Public Health); (3) other master's degree; and then (4) bachelor's degree (Bachelor of Arts or Bachelor of Science).
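The ordered coding rule for highest degree can be sketched as a priority lookup. The doctoral, public health master's, and bachelor's categories follow the text; the abbreviations listed under "other master's" are assumptions added only so the example runs, and the function name is hypothetical.

```python
# Sketch: derive a single "highest degree" category from all degrees held,
# checking categories in the priority order given in the text.

DEGREE_PRIORITY = [
    ("doctoral", {"PhD", "DrPH", "ScD", "MD", "DO"}),
    ("public health master's", {"MPH", "MSPH"}),
    # Assumption: the paper does not list which degrees counted as
    # "other master's"; these abbreviations are placeholders.
    ("other master's", {"MS", "MA", "MBA", "MSW"}),
    ("bachelor's", {"BA", "BS"}),
]


def highest_degree(degrees):
    """Return the first category in priority order that matches any degree."""
    held = set(degrees)
    for label, members in DEGREE_PRIORITY:
        if held & members:
            return label
    return None  # no recognized degree reported


print(highest_degree(["BS", "MPH"]))
print(highest_degree(["BA", "MPH", "PhD"]))
```

Because the categories are checked in order, a participant holding both a public health master's degree and another master's degree is classified under the public health master's category.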

As in previous research, participants selected the job description that best reflected their primary position, and these responses were categorized by the research team into specialists and generalists.11 Generalists were program managers/administrators/coordinators, program planners, division or bureau heads, division deputy directors, department heads, or academic educators. Specialists were health educators, epidemiologists, statisticians, program evaluators, community health nurses, social workers, dietitians, or nutritionists.

The research team determined that lack of skills to develop evidence-based chronic disease programs (“develop”), lack of skills to effectively communicate evidence-based strategies to state-level policy makers (“communicate”), and feeling the need to be an expert on many issues to effectively make evidence-based decisions (“expert”) were the most modifiable barriers. Programs and interventions can be developed to modify these skills and perceptions. For those modifiable barriers, the scores were ranked and placed into tertiles. Using logistic regression models, we calculated adjusted odds ratios (AORs) and 95% CIs to compare those who reported the lowest third of barrier scores with those who reported the highest third. As there was a lack of previous research detailing the variables that impact barriers to EBDM, the following variables were included in a forced-entry, exploratory regression analysis: gender, age, highest degree, primary job, and census region.
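As a rough illustration of the tertile comparison, the sketch below splits scores into tertiles and computes an odds ratio with a 95% CI from a 2×2 table. It is deliberately simpler than the analysis described above: the odds ratio here is unadjusted (no covariates), the CI uses the standard log-based (Woolf) approximation, the tertile cut points come from `statistics.quantiles`, and all scores and counts are hypothetical.

```python
# Sketch: tertile assignment plus an UNADJUSTED odds ratio comparing the
# highest with the lowest tertile. The study itself fit logistic regression
# models with covariates; this only illustrates the comparison being made.
import math
from statistics import quantiles


def tertile_labels(scores):
    """Label each score 'low', 'middle', or 'high' using tertile cut points."""
    low_cut, high_cut = quantiles(scores, n=3)
    labels = []
    for s in scores:
        if s <= low_cut:
            labels.append("low")
        elif s > high_cut:
            labels.append("high")
        else:
            labels.append("middle")
    return labels


def odds_ratio_ci(a, b, c, d, z=1.96):
    """Odds ratio and 95% CI for a 2x2 table with nonzero cells.

    a = group 1 in highest tertile, b = group 1 in lowest tertile,
    c = group 2 in highest tertile, d = group 2 in lowest tertile.
    """
    estimate = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(estimate) - z * se_log)
    upper = math.exp(math.log(estimate) + z * se_log)
    return estimate, lower, upper


# Hypothetical barrier scores and hypothetical 2x2 counts.
labels = tertile_labels([0, 1, 2, 3, 4, 5, 6, 7, 8])
estimate, lower, upper = odds_ratio_ci(10, 20, 20, 10)
print(labels)
print(round(estimate, 2), round(lower, 2), round(upper, 2))
```

Participants in the middle tertile are left out of the comparison, which mirrors the contrast between the lowest and highest thirds of barrier scores.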

Other barriers addressed in this study included fears about personal job security (“job security”), chronic disease prevention not being a high organizational priority (“prevention priority”), lack of organizational incentives/rewards for EBDM (“incentives”), the organizational culture not supporting creative thinking or the use of new ideas (“supportive culture”), inadequate agency funding for developing and implementing evidence-based programs (“funding”), and state legislators being unsupportive of evidence-based interventions and policies (“legislators”). The research team considered expert, communicate, develop, and job security to be personal barriers, while incentives, funding, legislators, supportive culture, and prevention priority were deemed to be organizational barriers.

RESULTS

Table 1 shows selected characteristics of the sample. Among survey respondents who reported working in state health departments (n=447), 80% were classified as generalists and 63% identified their primary position as a program manager/administrator/coordinator. The majority (80%) were female. The average length of experience in public health was 15.4 years (95% CI 14.6, 16.2). The final sample represented all 50 U.S. states, the District of Columbia, American Samoa, Puerto Rico, the Republic of the Marshall Islands, and the U.S. Virgin Islands. Delaware, Georgia, Mississippi, Nevada, the District of Columbia, and each of the U.S. territories had fewer than four respondents.

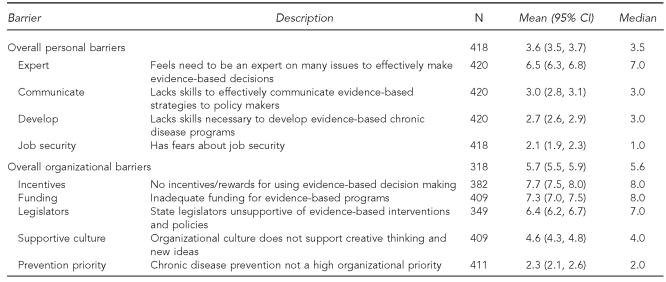

Seventy-one percent (n=318) of participants reported that they were aware of barriers to using evidence-based interventions or making evidence-based decisions. In response to the nine Likert scale barrier statements, higher scores were reported overall for organizational barriers (mean = 5.7; 95% CI 5.5, 5.9) than for personal barriers (mean = 3.6; 95% CI 3.5, 3.7) (Table 2). The most highly endorsed organizational barriers were incentives (mean = 7.7; 95% CI 7.5, 8.0), funding (mean = 7.3; 95% CI 7.0, 7.5), and legislators (mean = 6.4; 95% CI 6.2, 6.7). Among the personal barriers, expert was the major barrier (mean = 6.5; 95% CI 6.3, 6.8). Incentives and legislators each had greater than 10% missing data; however, looking across variables listed in Table 1, participants who did not answer these questions did not differ significantly from those who did at the p≤0.05 level.

Table 2.

State and territorial chronic disease practitioners' self-reported barriers^a to using evidence-based decision making to prevent chronic disease, 2008

^a Barrier scores were measured on a 0–10 Likert scale, with higher scores indicating a greater perceived barrier.

CI = confidence interval

In the exploratory analysis of regional differences in barriers, patterns emerged in several of the maps showing barrier quartiles by state (data not shown). For the develop barrier, a cluster of higher scores (third and fourth quartiles) emerged in the middle of the country. For the legislators barrier, most of the highest-quartile scores stretched across the South, with third-quartile scores clustering in the states surrounding Ohio. A visible clustering pattern appeared for the funding barrier, with many southern states reporting the highest barriers and states in the Rocky Mountain West reporting the lowest barriers.

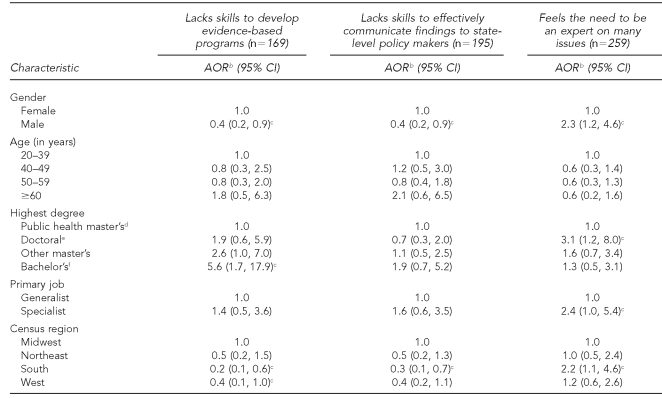

When the modifiable barriers were divided into tertiles, the lowest scores corresponded to responses of 0–1 for develop and communicate and 0–5 for expert. The highest scores corresponded to responses of 4–8 for develop, 4–10 for communicate, and 8–10 for expert. Table 3 shows the odds of reporting the highest levels for each of these barriers as compared with the lowest levels.

Table 3.

Odds of reporting highest tertile of scores^a for modifiable barriers to evidence-based decision making in a survey of state and territorial chronic disease practitioners, 2008

^a Barrier scores were measured on a 0–10 Likert scale, with higher scores indicating a greater perceived barrier.

^b AOR compared highest to lowest tertiles of barrier scores; logistic regression model included gender, age, degree, primary job, and census region as covariates.

^c Statistically significant at p<0.05

^d Master of Public Health or Master of Science in Public Health

^e Doctor of Philosophy, Doctor of Public Health, Doctor of Medicine, Doctor of Science, or Doctor of Osteopathic Medicine

^f Bachelor of Arts or Bachelor of Science

AOR = adjusted odds ratio

CI = confidence interval

Men and women reported significantly different barriers in develop, communicate, and expert. Controlling for age, highest degree, primary job, and census region, men were 60% less likely than women to report scores in the highest tertile for the barriers develop and communicate (for both barriers: AOR=0.4, 95% CI 0.2, 0.9). However, men were 2.3 times more likely to report scores in the highest tertile for the expert barrier (AOR=2.3, 95% CI 1.2, 4.6).

Compared with participants with a public health master's degree as their highest degree achieved, those with a bachelor's degree as their highest degree were 5.6 times as likely to report the highest scores for the develop barrier in the adjusted model (AOR=5.6, 95% CI 1.7, 17.9). Significant differences by highest degree and primary job type were also found for the expert barrier. Participants with a doctoral degree, as compared with those with a public health master's degree, were 3.1 times as likely to report the highest scores for the expert barrier, controlling for the other covariates (AOR=3.1, 95% CI 1.2, 8.0). Specialists also were 2.4 times as likely as generalists to report the highest scores for the expert barrier (AOR=2.4, 95% CI 1.0, 5.4).

Considering census regions, chronic disease practitioners in the South were less likely to report the highest scores for barriers in develop (AOR=0.2, 95% CI 0.1, 0.6) and communicate (AOR=0.3, 95% CI 0.1, 0.7) compared with the Midwest and more likely to report barriers in expert (AOR=2.2, 95% CI 1.1, 4.6). Practitioners in the West were also less likely to report barriers in develop compared with the Midwest (AOR=0.4, 95% CI 0.1, 1.0).

DISCUSSION

While experts agree that public health decisions should be science-based, chronic disease control programs are too often developed and implemented without utilizing practices found through research to be effective.7 A better understanding of the barriers to the dissemination of evidence-based practices has been needed, particularly in regard to organizational infrastructure barriers.29 While previous work has identified several barriers to EBDM,8, 20–22 this analysis was designed to quantify state-level chronic disease practitioners' assessments of how these barriers impact their work.

Participants in this survey indicated that organizational factors posed greater barriers to EBDM than did their personal skills and qualities as public health practitioners. Notably, inadequate funding for evidence-based programs, state legislators who did not support evidence-based interventions and policies, and a lack of incentives or rewards for using EBDM were the predominant organizational barriers to EBDM. While organizational barriers are difficult to modify through interventions, the results of this survey help communicate practitioners' opinions on the barriers that most restrict their practice of EBDM. A Designing for Dissemination conference identified that scientists, practitioners, and policy makers are disconnected in their views of dissemination.30 A growing body of evidence that addresses these disconnections may foster dialogue among the different groups, potentially resulting in funding, legislation, research, and organizational infrastructures that are more supportive of the public's health.

Despite the finding that the personal barriers were not the highest reported barriers, they may be the easiest to target for improvement. EBDM courses, such as the one developed by members of this team,11,31 could be employed to improve the skills of practitioners. According to the results of the logistic regression analysis in this survey, these courses could be tailored to help improve either the skills or beliefs of those groups that were found to have significant differences in their reports of personal barriers. For example, practitioners with a bachelor's degree as their highest degree were more than five times as likely to report a lack of skills in developing evidence-based programs compared with those who had a public health master's degree. Training courses could be developed to target practitioners without graduate-level training. Specialists (e.g., epidemiologists and health educators) and doctoral-level practitioners may benefit from basic skills training that complements their existing expertise.

Open-ended questions within this survey provided evidence that practitioners independently identified the same barriers that the quantitative aspects of this survey investigated. Participants stated that individuals' skills can impede EBDM, that funding is often inadequate to conduct evidence-based interventions, that political barriers exist, and that organizational barriers (e.g., lack of understanding, flexibility, or support) can undermine EBDM.32 Other qualitative work conducted by members of this team has highlighted the importance of leadership support, consistency in leadership direction, public health training for all staff members, funding, and the turnover of state government and its consequential changes in priorities.33

Limitations

A major limitation of this study was that data were self-reported. In particular, it is difficult to ascertain the difference between people's report of their personal skills and their actual skill level. One way to address this limitation would be to more objectively assess skills in practice settings (e.g., a manager's assessment) or to survey other stakeholders (e.g., policy makers or agency heads) on their commitment to EBDM. Additionally, those who responded to the survey may have had differing opinions on barriers to EBDM than those who did not respond, although studies of household surveys suggest that nonresponse bias is less likely with response rates higher than 65%.34 Data were not available to compare Table 1 characteristics with the entire NACDD membership.

Future research is needed to determine effective methods of overcoming these barriers (e.g., incorporating EBDM into funding requirements, making EBDM a component of practitioners' performance reviews, and offering tailored training to different types of practitioners). The discovery of geographic patterns in several of the barriers also warrants additional research, including state-specific data collection. States often differ in how they fund programs or in their laws and policies that influence public health spending and practice. Further evidence of regional differences in barriers to EBDM may indicate a need to prioritize funding or other resources by region. Also, this study did not consider the varying organizational structures of state health departments or the political climates within which they work. An important link exists between the structure of a state health department and its functioning capabilities.35

CONCLUSIONS

This survey examined public health practitioners' perceptions of barriers to EBDM. The results may improve state health departments' abilities to facilitate and encourage EBDM and, in turn, assist chronic disease practitioners in implementing chronic disease interventions that have been proven effective. Use of such interventions will improve the health of the public through the prevention of chronic diseases.

Footnotes

This work was funded through the Centers for Disease Control and Prevention grant #5R18DP001139-02 (Improving Public Health Practice through Translation Research) and contract #U48/DP000060 (Prevention Research Centers Program). The authors appreciate the assistance of the National Association of Chronic Disease Directors and all members who participated in the survey, and thank Melody Simchera for her contributions, as well as Fran Wheeler, David Momrow, Chris Maylahn, Russ Glasgow, LaDene Larsen, and Marc Manley for their input.

REFERENCES

1. Kung HC, Hoyert DL, Xu J, Murphy SL. Deaths: final data for 2005. Natl Vital Stat Rep. 2008 Apr 24;56:1–120.

2. DeVol R, Bedroussian A. An unhealthy America: the economic burden of chronic disease. Santa Monica (CA): Milken Institute; 2007 Oct.

3. Brownson RC, Bright FS. Chronic disease control in public health practice: looking back and moving forward. Public Health Rep. 2004;119:230–8. doi: 10.1016/j.phr.2004.04.001.

4. Remington PW, Brownson RC, Wegner MV, editors. Chronic disease epidemiology and control. 3rd ed. Washington: American Public Health Association; 2010.

5. Zaza S, Briss PA, Harris KW. The guide to community preventive services: what works to promote health? New York: Oxford University Press; 2005.

6. Kohatsu ND, Robinson JG, Torner JC. Evidence-based public health: an evolving concept. Am J Prev Med. 2004;27:417–21. doi: 10.1016/j.amepre.2004.07.019.

7. Brownson RC, Fielding JE, Maylahn CM. Evidence-based public health: a fundamental concept for public health practice. Annu Rev Public Health. 2009;30:175–201. doi: 10.1146/annurev.publhealth.031308.100134.

8. Brownson RC, Ballew P, Dieffenderfer B, Haire-Joshu D, Heath GW, Kreuter MW, et al. Evidence-based interventions to promote physical activity: what contributes to dissemination by state health departments. Am J Prev Med. 2007;33(1 Suppl):S66–73. doi: 10.1016/j.amepre.2007.03.011.

9. Balas EA. From appropriate care to evidence-based medicine. Pediatr Ann. 1998;27:581–4. doi: 10.3928/0090-4481-19980901-11.

10. Bero LA, Grilli R, Grimshaw JM, Harvey E, Oxman AD, Thomson MA. Closing the gap between research and practice: an overview of systematic reviews of interventions to promote the implementation of research findings. The Cochrane Effective Practice and Organization of Care Review Group. BMJ. 1998;317:465–8. doi: 10.1136/bmj.317.7156.465.

11. Dreisinger M, Leet TL, Baker EA, Gillespie KN, Haas B, Brownson RC. Improving the public health workforce: evaluation of a training course to enhance evidence-based decision making. J Public Health Manag Pract. 2008;14:138–43. doi: 10.1097/01.PHH.0000311891.73078.50.

12. Lawrence RS. Diffusion of the U.S. Preventive Services Task Force recommendations into practice. J Gen Intern Med. 1990;5(5 Suppl):S99–103. doi: 10.1007/BF02600852.

13. Brownson RC, Kreuter MW, Arrington BA, True WR. Translating scientific discoveries into public health action: how can schools of public health move us forward? Public Health Rep. 2006;121:97–103. doi: 10.1177/003335490612100118.

14. Cameron R, Brown KS, Best JA. The dissemination of chronic disease prevention programs: linking science and practice. Can J Public Health. 1996;87(Suppl 2):S50–3.

15. Oldenburg BF, Sallis JF, Ffrench ML, Owen N. Health promotion research and the diffusion and institutionalization of interventions. Health Educ Res. 1999;14:121–30. doi: 10.1093/her/14.1.121.

16. Ellis P, Robinson P, Ciliska D, Armour T, Brouwers M, O'Brien MA, et al. A systematic review of studies evaluating diffusion and dissemination of selected cancer control interventions. Health Psychol. 2005;24:488–500. doi: 10.1037/0278-6133.24.5.488.

17. McGowan AK, Brownson RC, Wilcox LS, Mensah GA. Prevention and control of chronic disease. In: Goodman RA, Lopez W, Matthews GW, Foster KL, editors. Law in public health practice. 2nd ed. New York: Oxford University Press; 2007. pp. 402–26.

18. Association of State and Territorial Directors of Health Promotion and Public Health Education, Centers for Disease Control and Prevention (US). Policy and environmental change: new directions for public health. Atlanta: CDC; 2001.

19. Bowen S, Zwi AB. Pathways to “evidence-informed” policy and practice: a framework for action. PLoS Med. 2005;2:e166. doi: 10.1371/journal.pmed.0020166.

20. Robinson K, Elliott SJ, Driedger SM, Eyles J, O'Loughlin J, Riley B, et al. Using linking systems to build capacity and enhance dissemination in heart health promotion: a Canadian multiple-case study. Health Educ Res. 2005;20:499–513. doi: 10.1093/her/cyh006.

21. Baker EL, Potter MA, Jones DL, Mercer SL, Cioffi JP, Green LW, et al. The public health infrastructure and our nation's health. Annu Rev Public Health. 2005;26:303–18. doi: 10.1146/annurev.publhealth.26.021304.144647.

22. Brownson RC, Gurney JG, Land GH. Evidence-based decision making in public health. J Public Health Manag Pract. 1999;5:86–97. doi: 10.1097/00124784-199909000-00012.

23. Taylor-Robinson DC, Milton B, Lloyd-Williams F, O'Flaherty M, Capewell S. Planning ahead in public health? A qualitative study of the time horizons used in public health decision-making. BMC Public Health. 2008;8:415. doi: 10.1186/1471-2458-8-415.

24. Brownson RC, Ballew P, Kittur ND, Elliot MB, Haire-Joshu D, Krebill H, et al. Developing competencies for training practitioners in evidence-based cancer control. J Cancer Educ. 2009;24:186–93. doi: 10.1080/08858190902876395.

25. Jobe JB, Mingay DJ. Cognitive research improves questionnaires. Am J Public Health. 1989;79:1053–5. doi: 10.2105/ajph.79.8.1053.

26. Jobe JB, Mingay DJ. Cognitive laboratory approach to designing questionnaires for surveys of the elderly. Public Health Rep. 1990;105:518–24.

27. Streiner DL, Norman GR. Health measurement scales: a practical guide to their development and use. 3rd ed. New York: Oxford University Press; 2003.

28. Willis GB. Cognitive interviewing: a tool for improving questionnaire design. Thousand Oaks (CA): Sage Publications, Inc.; 2004.

29. Kerner J, Rimer B, Emmons K. Introduction to the special section on dissemination: dissemination research and research dissemination: how can we close the gap? Health Psychol. 2005;24:443–6. doi: 10.1037/0278-6133.24.5.443.

30. National Cancer Institute. Conference summary report. Designing for Dissemination; 2002 Sep 19–20; Washington.

31. Franks AL, Brownson RC, Baker EA, Leet TL, O'Neall MA, Bryant C, et al. Prevention research centers: contributions to updating the public health workforce through training. Prev Chronic Dis. 2005;2:A26.

32. Dodson EA, Baker EA, Brownson RC. Use of evidence-based interventions in state health departments: a qualitative assessment of barriers and solutions. J Public Health Manag Pract. doi: 10.1097/PHH.0b013e3181d1f1e2. In press.

33. Baker EA, Brownson RC, Dreisinger M, McIntosh LD, Karamehic-Muratovic A. Examining the role of training in evidence-based public health: a qualitative study. Health Promot Pract. 2009;10:342–8. doi: 10.1177/1524839909336649.

34. Groves RM. Nonresponse rates and nonresponse bias in household surveys. Public Opin Q. 2006;70:646–75.

35. Beitsch LM, Brooks RG, Grigg M, Menachemi N. Structure and functions of state public health agencies. Am J Public Health. 2006;96:167–72. doi: 10.2105/AJPH.2004.053439.