Abstract

The paper investigates parameterized approximate message-passing schemes that are based on bounded inference and are inspired by Pearl's belief propagation algorithm (BP). We start with the bounded inference mini-clustering algorithm and then move to the iterative scheme called Iterative Join-Graph Propagation (IJGP), which combines both iteration and bounded inference. Algorithm IJGP belongs to the class of Generalized Belief Propagation algorithms, a framework that allowed connections with approximate algorithms from statistical physics, and is shown empirically to surpass the performance of mini-clustering and belief propagation, as well as a number of other state-of-the-art algorithms on several classes of networks. We also provide insight into the accuracy of iterative BP and IJGP by relating these algorithms to well known classes of constraint propagation schemes.

1. Introduction

Probabilistic inference is the principal task in Bayesian networks and is known to be an NP-hard problem (Cooper, 1990; Roth, 1996). Most of the commonly used exact algorithms such as join-tree clustering (Lauritzen & Spiegelhalter, 1988; Jensen, Lauritzen, & Olesen, 1990) or variable-elimination (Dechter, 1996, 1999; Zhang, Qi, & Poole, 1994), and more recently search schemes (Darwiche, 2001; Bacchus, Dalmao, & Pitassi, 2003; Dechter & Mateescu, 2007), exploit the network structure. While significant advances were made in the last decade in exact algorithms, many real-life problems are too big and too hard for them, especially when their structure is dense, since these algorithms are time and space exponential in the treewidth of the graph. Approximate algorithms are therefore necessary for many practical problems, although approximation within given error bounds is also NP-hard (Dagum & Luby, 1993; Roth, 1996).

The paper focuses on two classes of approximation algorithms for the task of belief updating. Both are inspired by Pearl's belief propagation algorithm (Pearl, 1988), which is known to be exact for trees. As a distributed algorithm, Pearl's belief propagation can also be applied iteratively to networks that contain cycles, yielding Iterative Belief Propagation (IBP), also known as loopy belief propagation. When the networks contain cycles, IBP is no longer guaranteed to be exact, but in many cases it provides very good approximations upon convergence. Some notable success cases are those of IBP for coding networks (McEliece, MacKay, & Cheng, 1998; McEliece & Yildirim, 2002), and a version of IBP called survey propagation for some classes of satisfiability problems (Mézard, Parisi, & Zecchina, 2002; Braunstein, Mézard, & Zecchina, 2005).

Although the performance of belief propagation is far from being well understood in general, one of the more promising avenues towards characterizing its behavior came from analogies with statistical physics. It was shown by Yedidia, Freeman, and Weiss (2000, 2001) that belief propagation can only converge to a stationary point of an approximate free energy of the system, called Bethe free energy. Moreover, the Bethe approximation is computed over pairs of variables as terms, and is therefore the simplest version of the more general Kikuchi (1951) cluster variational method, which is computed over clusters of variables. This observation inspired the class of Generalized Belief Propagation (GBP) algorithms, which work by passing messages between clusters of variables. As mentioned by Yedidia et al. (2000), there are many GBP algorithms that correspond to the same Kikuchi approximation. A version based on region graphs, called "canonical" by the authors, was presented by Yedidia et al. (2000, 2001, 2005). Our algorithm Iterative Join-Graph Propagation is a member of the GBP class, although it will not be described in the language of region graphs. Our approach is very similar to and was independently developed from that of McEliece and Yildirim (2002). For more information on BP state of the art research see the recent survey by Koller (2010).

We will first present the mini-clustering scheme which is an anytime bounded inference scheme that generalizes the mini-bucket idea. It can be viewed as a belief propagation algorithm over a tree obtained by a relaxation of the network's structure (using the technique of variable duplication). We will subsequently present Iterative Join-Graph Propagation (IJGP) that sends messages between clusters that are allowed to form a cyclic structure.

Through these two schemes we investigate: (1) the quality of bounded inference as an anytime scheme (using mini-clustering); (2) the virtues of iterating messages in belief propagation type algorithms, and the result of combining bounded inference with iterative message-passing (in IJGP).

In the background section (Section 2), we review the tree-decomposition scheme that forms the basis for the rest of the paper. By relaxing two requirements of the tree-decomposition, that of connectedness (via mini-clustering) and that of tree structure (by allowing cycles in the underlying graph), we combine bounded inference and iterative message-passing with the basic tree-decomposition scheme, as elaborated in subsequent sections.

In Section 3 we present the partitioning-based anytime algorithm called Mini-Clustering (MC), which is a generalization of the Mini-Buckets algorithm (Dechter & Rish, 2003). It is a message-passing algorithm guided by a user-adjustable parameter called i-bound, offering a flexible tradeoff between accuracy and efficiency in anytime style (in general, the higher the i-bound, the better the accuracy). The MC algorithm operates on a tree-decomposition and, similar to Pearl's belief propagation algorithm (Pearl, 1988), it converges in two passes, up and down the tree. Our contribution beyond other works in this area (Dechter & Rish, 1997; Dechter, Kask, & Larrosa, 2001) is twofold: (1) Extending the partition-based approximation for belief updating from mini-buckets to general tree-decompositions, thus allowing the computation of the updated beliefs for all the variables at once. This extension is similar to the one proposed by Dechter et al. (2001), but replaces optimization with probabilistic inference. (2) Providing empirical evaluation that demonstrates the effectiveness of the idea of tree-decomposition combined with partition-based approximation for belief updating.

Section 4 introduces the Iterative Join-Graph Propagation (IJGP) algorithm. It operates on a general join-graph decomposition that may contain cycles. It also provides a user adjustable i-bound parameter that defines the maximum cluster size of the graph (and hence bounds the complexity), therefore it is both anytime and iterative. While the algorithm IBP is typically presented as a generalization of Pearl's Belief Propagation algorithm, we show that IBP can be viewed as IJGP with the smallest i-bound.

We also provide insight into IJGP's behavior in Section 4. Zero-beliefs are variable-value pairs that have zero conditional probability given the evidence. We show that: (1) if a value of a variable is assessed as having zero-belief in any iteration of IJGP, it remains a zero-belief in all subsequent iterations; (2) IJGP converges in a finite number of iterations relative to its set of zero-beliefs; and, most importantly (3) that the set of zero-beliefs decided by any of the iterative belief propagation methods is sound. Namely any zero-belief determined by IJGP corresponds to a true zero conditional probability relative to the given probability distribution expressed by the Bayesian network.

Empirical results on various classes of problems are included in Section 5, shedding light on the performance of IJGP(i). We see that it is often superior, or otherwise comparable, to other state-of-the-art algorithms.

The paper is based in part on earlier conference papers by Dechter, Kask, and Mateescu (2002), Mateescu, Dechter, and Kask (2002) and Dechter and Mateescu (2003).

2. Background

In this section we provide background for exact and approximate probabilistic inference algorithms that form the basis of our work. While we present our algorithms in the context of directed probabilistic networks, they are applicable to any graphical model, including Markov networks.

2.1 Preliminaries

Notations: A reasoning problem is defined in terms of a set of variables taking values on finite domains and a set of functions defined over these variables. We denote variables or subsets of variables by uppercase letters (e.g., X, Y, Z, S, R. . .) and values of variables by lower case letters (e.g., x, y, z, s). An assignment (X1 = x1,. . ., Xn = xn) can be abbreviated as x = (x1,. . ., xn). For a subset of variables S, DS denotes the Cartesian product of the domains of variables in S. xS is the projection of x = (x1,. . ., xn) over a subset S. We denote functions by letters f, g, h, etc., and the scope (set of arguments) of the function f by scope(f).

Definition 1 (graphical model) (Kask, Dechter, Larrosa, & Dechter, 2005) A graphical model is a 3-tuple, $\mathcal{M} = \langle X, D, F \rangle$, where: X = {X1,. . ., Xn} is a finite set of variables; D = {D1,. . ., Dn} is the set of their respective finite domains of values; F = {f1,. . ., fr} is a set of positive real-valued discrete functions, each defined over a subset of variables $S_i \subseteq X$, called its scope, and denoted by scope(fi). A graphical model typically has an associated combination operator ⊗ (e.g., ⊗ ∈ {∏, ∑}, that is, product or sum). The graphical model represents the combination of all its functions: $\bigotimes_{i=1}^{r} f_i$. A graphical model has an associated primal graph that captures the structural information of the model:

Definition 2 (primal graph, dual graph) The primal graph of a graphical model is an undirected graph that has variables as its vertices and an edge connects any two vertices whose corresponding variables appear in the scope of the same function. A dual graph of a graphical model has a one-to-one mapping between its vertices and functions of the graphical model. Two vertices in the dual graph are connected if the corresponding functions in the graphical model share a variable. We denote the primal graph by G = (X, E), where X is the set of variables and E is the set of edges.

Definition 3 (belief networks) A belief (or Bayesian) network is a graphical model $\mathcal{B} = \langle X, D, G, P \rangle$, where G = (X, E) is a directed acyclic graph over variables X and P = {pi}, where pi = {p(Xi | pa(Xi))} are conditional probability tables (CPTs) associated with each variable Xi and pa(Xi) = scope(pi) − {Xi} is the set of parents of Xi in G. Given a subset of variables S, we will write P(s) as the probability P(S = s), where s ∈ DS. A belief network represents a probability distribution over X, $P(x_1, \ldots, x_n) = \prod_{i=1}^{n} P(x_i \mid x_{pa(X_i)})$. An evidence set e is an instantiated subset of variables. The primal graph of a belief network is called a moral graph. It can be obtained by connecting the parents of each vertex in G and removing the directionality of the edges. Equivalently, it connects any two variables appearing in the same family (a variable and its parents in the CPT).
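To make moralization concrete, here is a small Python sketch. The function `moral_graph`, the dictionary encoding of parent sets, and the four-variable network are hypothetical illustrations, not taken from the paper. Each family is turned into a clique, which both drops edge directions and connects co-parents.

```python
from itertools import combinations


def moral_graph(parents):
    """Return the moral graph of a DAG as a set of undirected edges.

    `parents` maps each variable X to the list pa(X). Every family
    {X} U pa(X) is turned into a clique, which keeps each original edge
    (without its direction) and "marries" every pair of co-parents.
    """
    edges = set()
    for child, pa in parents.items():
        for u, v in combinations([child, *pa], 2):
            edges.add(frozenset((u, v)))
    return edges


# Hypothetical network: A -> C <- B, C -> D.
parents = {"A": [], "B": [], "C": ["A", "B"], "D": ["C"]}
edges = moral_graph(parents)
print(sorted(tuple(sorted(e)) for e in edges))
# [('A', 'B'), ('A', 'C'), ('B', 'C'), ('C', 'D')]
```

The edge between A and B is the only one added by moralization, because A and B are co-parents of C.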

Two common queries in Bayesian networks are Belief Updating (BU) and Most Probable Explanation (MPE).

Definition 4 (belief network queries) The Belief Updating (BU) task is to find the posterior probability of each single variable given some evidence e, that is to compute P(Xi|e). The Most Probable Explanation (MPE) task is to find a complete assignment to all the variables having maximum probability given the evidence, that is to compute $\arg\max_{X} \prod_i p_i$.
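For very small networks both queries can be answered by brute-force enumeration of all complete assignments, which gives a reference point for the approximation schemes that follow. The sketch below is a minimal illustration under stated assumptions: the two-variable network, its CPT values, and the helper names (`joint`, `belief_updating`, `mpe`) are hypothetical. Its cost grows as d^n, which is what the structure-exploiting algorithms in the rest of the paper are designed to avoid.

```python
from itertools import product

# Hypothetical network A -> B over binary domains. A CPT maps a tuple
# (value of the child, values of its parents...) to a probability.
domains = {"A": (0, 1), "B": (0, 1)}
parents = {"A": (), "B": ("A",)}
cpt = {
    "A": {(0,): 0.6, (1,): 0.4},
    "B": {(0, 0): 0.9, (1, 0): 0.1, (0, 1): 0.2, (1, 1): 0.8},
}


def joint(x):
    """P(x) = prod_i P(x_i | x_pa(X_i)) for a complete assignment x."""
    p = 1.0
    for v, table in cpt.items():
        p *= table[(x[v], *(x[u] for u in parents[v]))]
    return p


def assignments(evidence):
    """Enumerate complete assignments that agree with the evidence."""
    names = list(domains)
    for values in product(*(domains[v] for v in names)):
        x = dict(zip(names, values))
        if all(x[v] == val for v, val in evidence.items()):
            yield x


def belief_updating(var, evidence):
    """P(var | e): sum the joint over consistent assignments, then normalize."""
    score = {val: 0.0 for val in domains[var]}
    for x in assignments(evidence):
        score[x[var]] += joint(x)
    p_e = sum(score.values())  # P(e)
    return {val: s / p_e for val, s in score.items()}


def mpe(evidence):
    """Most probable complete assignment consistent with the evidence."""
    return max(assignments(evidence), key=joint)


print(belief_updating("A", {"B": 1}))  # approx {0: 0.158, 1: 0.842}
print(mpe({"B": 1}))                   # {'A': 1, 'B': 1}
```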

2.2 Tree-Decomposition Schemes

Tree-decomposition is at the heart of most general schemes for solving a wide range of automated reasoning problems, such as constraint satisfaction and probabilistic inference. It is the basis for many well-known algorithms, such as join-tree clustering and bucket elimination. In our presentation we will follow the terminology of Gottlob, Leone, and Scarcello (2000) and Kask et al. (2005).

Definition 5 (tree-decomposition, cluster-tree) Let $\mathcal{B} = \langle X, D, G, P \rangle$ be a belief network. A tree-decomposition for $\mathcal{B}$ is a triple 〈T, χ, ψ〉, where T = (V, E) is a tree, and χ and ψ are labeling functions which associate with each vertex v ∈ V two sets, χ(v) ⊆ X and ψ(v) ⊆ P satisfying:

For each function pi ∈ P, there is exactly one vertex v ∈ V such that pi ∈ ψ(v), and scope(pi) ⊆χ(v).

For each variable Xi ∈ X, the set {v ∈ V|Xi ∈ χ(v)} induces a connected subtree of T. This is also called the running intersection (or connectedness) property.

We will often refer to a node and its functions as a cluster and use the term tree-decomposition and cluster-tree interchangeably.
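The two conditions of Definition 5 can be checked mechanically. The following Python sketch assumes clusters are identified by arbitrary hashable ids and that `tree_edges` already forms a tree (it does not verify acyclicity); the function name and the toy chain network are hypothetical.

```python
from collections import defaultdict


def is_tree_decomposition(tree_edges, chi, psi, scopes):
    """Check the two conditions of Definition 5.

    tree_edges: (u, v) pairs forming a tree over the cluster ids.
    chi: cluster id -> set of variables chi(v).
    psi: cluster id -> set of function names psi(v).
    scopes: function name -> set of variables scope(p_i).
    """
    # Condition 1: each function lies in exactly one cluster covering its scope.
    for f, scope in scopes.items():
        homes = [v for v in chi if f in psi.get(v, set())]
        if len(homes) != 1 or not scope <= chi[homes[0]]:
            return False
    # Condition 2 (running intersection): the clusters mentioning a variable
    # must form a connected subtree; check with a DFS restricted to them.
    adj = defaultdict(set)
    for u, v in tree_edges:
        adj[u].add(v)
        adj[v].add(u)
    for x in set().union(*chi.values()):
        nodes = {v for v in chi if x in chi[v]}
        start = next(iter(nodes))
        seen, stack = {start}, [start]
        while stack:
            for w in adj[stack.pop()] & nodes:
                if w not in seen:
                    seen.add(w)
                    stack.append(w)
        if seen != nodes:
            return False
    return True


# Hypothetical chain A -> B -> C with CPTs pA, pB, pC.
scopes = {"pA": {"A"}, "pB": {"A", "B"}, "pC": {"B", "C"}}
good = is_tree_decomposition(
    [(1, 2)], {1: {"A", "B"}, 2: {"B", "C"}}, {1: {"pA", "pB"}, 2: {"pC"}}, scopes
)
# B appears in clusters 1 and 3 but not in cluster 2, which lies between them.
bad = is_tree_decomposition(
    [(1, 2), (2, 3)],
    {1: {"A", "B"}, 2: {"A"}, 3: {"B", "C"}},
    {1: {"pA", "pB"}, 3: {"pC"}},
    scopes,
)
print(good, bad)  # True False
```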

Definition 6 (treewidth, separator, eliminator) Let D = 〈T, χ, ψ〉 be a tree-decomposition of a belief network $\mathcal{B}$. The treewidth (Arnborg, 1985) of D is $\max_{v \in V} |\chi(v)| - 1$. The treewidth of $\mathcal{B}$ is the minimum treewidth over all its tree-decompositions. Given two adjacent vertices u and v of a tree-decomposition, the separator of u and v is defined as $sep(u, v) = \chi(u) \cap \chi(v)$, and the eliminator of u with respect to v is elim(u, v) = χ(u) − χ(v). The separator-width of D is $\max_{(u,v)} |sep(u, v)|$. The minimum treewidth of a graph G can be shown to be identical to a related parameter called induced-width (Dechter & Pearl, 1987).
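The quantities of Definition 6 are simple set operations on the cluster labels. This sketch (hypothetical helper names) uses only the two clusters whose variable sets are quoted in Example 2, χ(1) = {A, B, C} and χ(2) = {B, C, D, F}; since the full join-tree of Figure 2b has more clusters, the widths computed here describe only this two-cluster fragment.

```python
def sep(chi, u, v):
    """Separator of adjacent clusters u and v: chi(u) & chi(v)."""
    return chi[u] & chi[v]


def elim(chi, u, v):
    """Eliminator of u with respect to v: chi(u) - chi(v)."""
    return chi[u] - chi[v]


def widths(tree_edges, chi):
    """Return (treewidth, separator-width) of a tree-decomposition."""
    treewidth = max(len(c) for c in chi.values()) - 1
    sep_width = max((len(sep(chi, u, v)) for u, v in tree_edges), default=0)
    return treewidth, sep_width


# Clusters 1 and 2 with the variable sets quoted in Example 2.
chi = {1: {"A", "B", "C"}, 2: {"B", "C", "D", "F"}}
print(sep(chi, 1, 2), elim(chi, 1, 2), elim(chi, 2, 1), widths([(1, 2)], chi))
```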

Join-tree and cluster-tree elimination (CTE) In both Bayesian network and constraint satisfaction communities, the most used tree-decomposition method is join-tree decomposition (Lauritzen & Spiegelhalter, 1988; Dechter & Pearl, 1989), introduced based on relational database concepts (Maier, 1983). Such decompositions can be generated by embedding the network's moral graph G into a chordal graph, often using a triangulation algorithm and using its maximal cliques as nodes in the join-tree. The triangulation algorithm assembles a join-tree by connecting the maximal cliques in the chordal graph in a tree. Subsequently, every CPT pi is placed in one clique containing its scope. Using the previous terminology, a join-tree decomposition of a belief network is a tree T = (V, E), where V is the set of cliques of a chordal graph G′ that contains G, and E is a set of edges that form a tree between cliques, satisfying the running intersection property (Maier, 1983). Such a join-tree satisfies the properties of tree-decomposition and is therefore a cluster-tree (Kask et al., 2005). In this paper, we will use the terms tree-decomposition and join-tree decomposition interchangeably.
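The text does not fix a particular triangulation algorithm. One standard option, assumed in the sketch below, is to eliminate vertices along an ordering and connect each eliminated vertex's not-yet-eliminated neighbours (fill-in edges); the largest such neighbourhood is the induced width along that ordering, and the maximal cliques created become candidate join-tree nodes. Connecting those cliques into a tree that satisfies running intersection is not shown. Function and variable names are hypothetical.

```python
from itertools import combinations


def triangulate(adj, order):
    """Triangulate a graph by eliminating its vertices along `order`.

    adj: vertex -> set of neighbours (e.g. the moral graph); not modified.
    Returns (chordal edge set, induced width along `order`, maximal cliques).
    """
    g = {v: set(nbrs) for v, nbrs in adj.items()}
    pos = {v: k for k, v in enumerate(order)}
    width, cliques = 0, []
    for v in order:
        later = {u for u in g[v] if pos[u] > pos[v]}  # not yet eliminated
        for a, b in combinations(later, 2):          # fill-in edges
            g[a].add(b)
            g[b].add(a)
        width = max(width, len(later))
        cliques.append({v} | later)
    edges = {frozenset((a, b)) for a in g for b in g[a]}
    maximal = [c for c in cliques if not any(c < d for d in cliques)]
    return edges, width, maximal


# Hypothetical 4-cycle A-B-C-D-A, eliminated in the order A, B, C, D.
cycle = {"A": {"B", "D"}, "B": {"A", "C"}, "C": {"B", "D"}, "D": {"A", "C"}}
edges, width, cliques = triangulate(cycle, ["A", "B", "C", "D"])
print(len(edges), width, cliques)  # one fill-in edge (B, D); width 2
```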

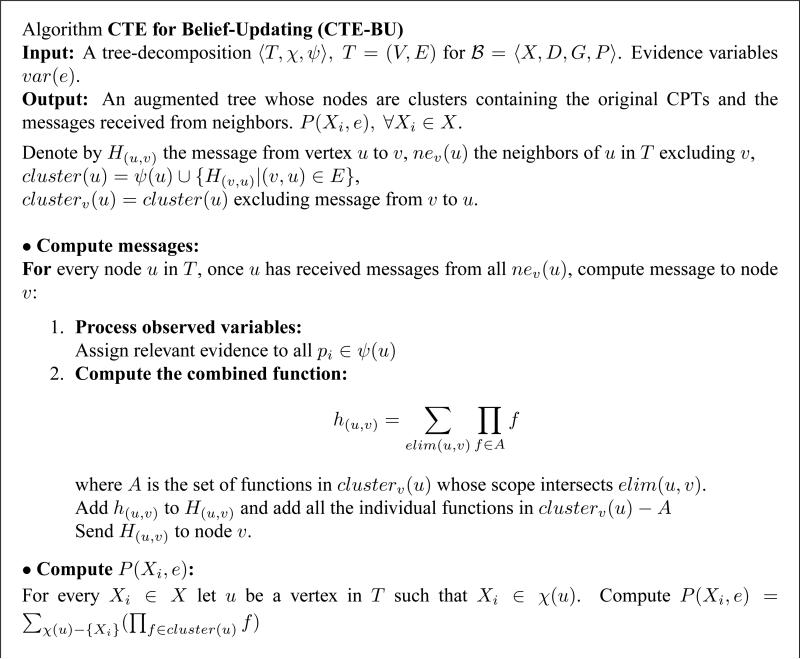

There are a few variants for processing join-trees for belief updating (e.g., Jensen et al., 1990; Shafer & Shenoy, 1990). We adopt here the version from Kask et al. (2005), called cluster-tree-elimination (CTE), that is applicable to tree-decompositions in general and is geared towards space savings. It is a message-passing algorithm; for the task of belief updating, messages are computed by summation over the eliminator between the two clusters of the product of functions in the originating cluster. The algorithm, denoted CTE-BU (see Figure 1), pays special attention to the processing of observed variables since the presence of evidence is a central component in belief updating. When a cluster sends a message to a neighbor, the algorithm operates on all the functions in the cluster except the message from that particular neighbor. The message contains a single combined function and individual functions that do not share variables with the relevant eliminator. All the non-individual functions are combined in a product and summed over the eliminator.

Figure 1.

Algorithm Cluster-Tree-Elimination for Belief Updating (CTE-BU).
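A single CTE-BU message can be sketched with a tiny table-based factor representation. This is a simplified illustration, assuming factors are stored as (scope, table) pairs: unlike CTE-BU in Figure 1, it multiplies every function together (it does not send individual functions separately or treat evidence specially) and then sums over the eliminator. All names and the two-CPT cluster are hypothetical.

```python
from itertools import product

# A factor is a pair (scope, table): scope is a tuple of variable names and
# table maps each tuple of values (in scope order) to a non-negative number.


def multiply(factors, domains):
    """Pointwise product of factors over the union of their scopes."""
    scope = tuple(dict.fromkeys(v for s, _ in factors for v in s))
    table = {}
    for vals in product(*(domains[v] for v in scope)):
        x = dict(zip(scope, vals))
        p = 1.0
        for s, t in factors:
            p *= t[tuple(x[v] for v in s)]
        table[vals] = p
    return scope, table


def sum_out(factor, elim_vars):
    """Sum a factor over the variables in elim_vars."""
    scope, table = factor
    keep = tuple(v for v in scope if v not in elim_vars)
    out = {}
    for vals, p in table.items():
        key = tuple(val for v, val in zip(scope, vals) if v not in elim_vars)
        out[key] = out.get(key, 0.0) + p
    return keep, out


def cte_message(psi_u, incoming, elim_vars, domains):
    """h_(u,v): sum over elim(u, v) of the product of the functions in
    psi(u) and the messages u received from neighbours other than v."""
    return sum_out(multiply(psi_u + incoming, domains), set(elim_vars))


domains = {"A": (0, 1), "B": (0, 1)}
p_a = (("A",), {(0,): 0.6, (1,): 0.4})
p_b_given_a = (("B", "A"), {(0, 0): 0.9, (1, 0): 0.1, (0, 1): 0.2, (1, 1): 0.8})
h = cte_message([p_a, p_b_given_a], [], ["A"], domains)
print(h)  # message over B: approx {(0,): 0.62, (1,): 0.38}
```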

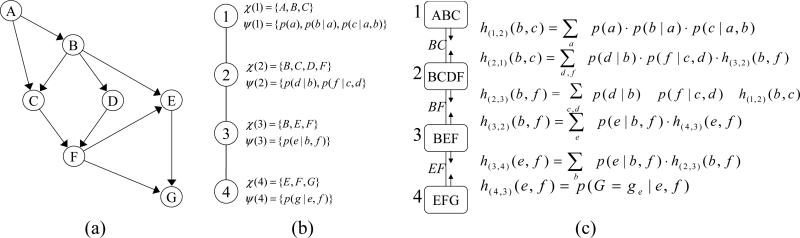

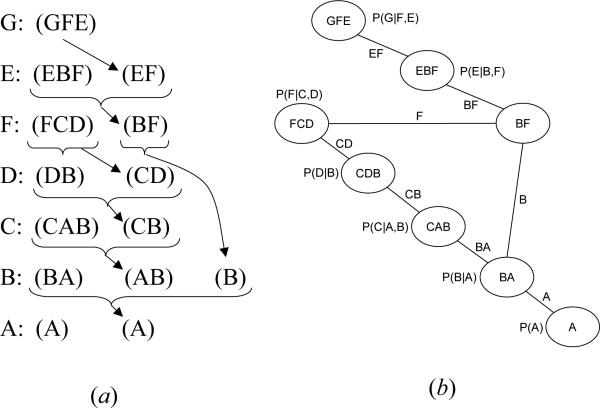

Example 1 Figure 2a describes a belief network and Figure 2b a join-tree decomposition for it. Figure 2c shows the trace of running CTE-BU with evidence G = ge, where h(u,v) is a message that cluster u sends to cluster v.

Figure 2.

(a) A belief network; (b) A join-tree decomposition; (c) Execution of CTE-BU.

Theorem 1 (complexity of CTE-BU) (Dechter et al., 2001; Kask et al., 2005) Given a Bayesian network $\mathcal{B} = \langle X, D, G, P \rangle$ and a tree-decomposition 〈T, χ, ψ〉 of $\mathcal{B}$, the time complexity of CTE-BU is $O(deg \cdot (n + N) \cdot d^{w^*+1})$ and the space complexity is $O(N \cdot d^{sep})$, where deg is the maximum degree of a node in the tree-decomposition, n is the number of variables, N is the number of nodes in the tree-decomposition, d is the maximum domain size of a variable, w* is the treewidth and sep is the maximum separator size.

3. Partition-Based Mini-Clustering

The time, and especially the space complexity, of CTE-BU renders the algorithm infeasible for problems with large treewidth. We now introduce Mini-Clustering, a partition-based anytime algorithm which computes bounds or approximate values on P(Xi, e) for every variable Xi.

3.1 Mini-Clustering Algorithm

Combining all the functions of a cluster into a product has a complexity exponential in its number of variables, which is upper bounded by the induced width. Similar to the mini-bucket scheme (Dechter, 1999), rather than performing this expensive exact computation, we partition the cluster into p mini-clusters mc(1),. . ., mc(p), each having at most i variables, where i is an accuracy parameter. Instead of computing $h_{(u,v)} = \sum_{elim(u,v)} \prod_{f \in \psi(u)} f$ as in CTE-BU, we can divide the functions of ψ(u) into p mini-clusters mc(k), k ∈ {1,. . ., p}, and rewrite $h_{(u,v)} = \sum_{elim(u,v)} \prod_{k=1}^{p} \prod_{f \in mc(k)} f$. By migrating the summation operator into each mini-cluster, yielding $g_{(u,v)} = \prod_{k=1}^{p} \sum_{elim(u,v)} \prod_{f \in mc(k)} f$, we get an upper bound on h(u,v). The resulting algorithm is called MC-BU(i).
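Why migrating the summation yields an upper bound: expanding the product of sums gives one term for every pair of values (x, x′) of the eliminated variable, whereas the exact message keeps only the pairs with x = x′, and all dropped terms are non-negative. The following sketch checks this numerically on a hypothetical cluster with two mini-clusters and one eliminated variable; the functions are random and the names are illustrative only.

```python
import random
from itertools import product

random.seed(0)
D = (0, 1)  # hypothetical binary domains for X, Y, Z
# Hypothetical cluster whose functions are split into two mini-clusters:
# mc(1) holds f1(X, Y), mc(2) holds f2(X, Z), and elim(u, v) = {X}.
f1 = {(x, y): random.random() for x, y in product(D, D)}
f2 = {(x, z): random.random() for x, z in product(D, D)}


def h_exact(y, z):
    """CTE-BU: sum over X of the full product (needs a table on X, Y, Z)."""
    return sum(f1[x, y] * f2[x, z] for x in D)


def g_mini(y, z):
    """MC-BU: sum migrated into each mini-cluster, then results multiplied."""
    return sum(f1[x, y] for x in D) * sum(f2[x, z] for x in D)


for y, z in product(D, D):
    print(y, z, round(h_exact(y, z), 4), "<=", round(g_mini(y, z), 4))
```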

Consequently, the combined functions are approximated via mini-clusters, as follows. Suppose u ∈ V has received messages from all its neighbors other than v (the message from v is ignored even if received). The functions in $cluster_v(u)$ that are to be combined are partitioned into mini-clusters {mc(1),. . ., mc(p)}, each one containing at most i variables. Each mini-cluster is processed by summation over the eliminator, and the resulting combined functions as well as all the individual functions are sent to v. It was shown by Dechter and Rish (2003) that the upper bound can be improved by using the maximization operator max rather than the summation operator sum on some mini-buckets. Similarly, lower bounds can be generated by replacing sum with min (minimization) for some mini-buckets. Alternatively, we can replace sum by a mean operator (taking the sum and dividing by the number of elements in the sum), in which case we derive an approximation of the joint belief instead of a strict upper bound.
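The effect of the alternative operators can be compared on a single eliminated variable. In this hypothetical sketch, `m1` and `m2` stand for the combined functions of two mini-clusters; summation is kept in mc(1) (using max everywhere would no longer bound the sum) while mc(2) uses sum, max, min, or mean. The inequalities follow from min(m2) ≤ m2(x) ≤ max(m2) ≤ sum(m2) for non-negative values; the mean-based value carries no guarantee.

```python
import random

random.seed(1)
# Hypothetical values, over the domain of a single eliminated variable X,
# of the combined function of mini-cluster mc(1) and of mini-cluster mc(2).
m1 = [random.random() for _ in range(4)]
m2 = [random.random() for _ in range(4)]

exact = sum(a * b for a, b in zip(m1, m2))  # no partitioning
sum_sum = sum(m1) * sum(m2)                 # summation in every mini-cluster
sum_max = sum(m1) * max(m2)                 # tighter upper bound
sum_min = sum(m1) * min(m2)                 # lower bound
sum_mean = sum(m1) * sum(m2) / len(m2)      # approximation, no guarantee

print(f"{sum_min:.3f} <= {exact:.3f} <= {sum_max:.3f} <= {sum_sum:.3f}")
print(f"mean-based estimate: {sum_mean:.3f}")
```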

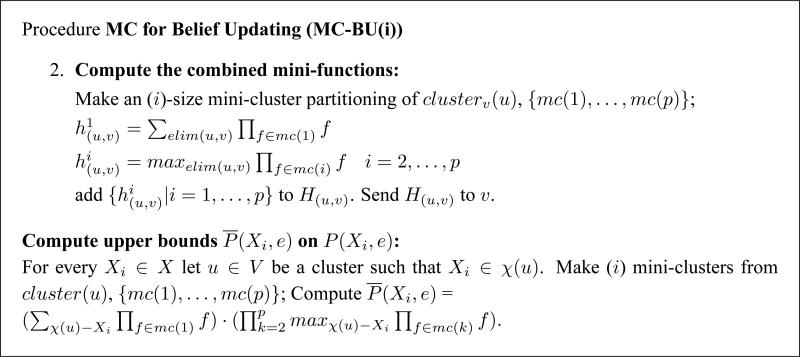

Algorithm MC-BU for upper bounds can be obtained from CTE-BU by replacing step 2 of the main loop and the final part of computing the upper bounds on the joint belief by the procedure given in Figure 3. In the implementation we used for the experiments reported here, the partitioning was done in a greedy brute-force manner. We ordered the functions according to their sizes (number of variables), breaking ties arbitrarily. The largest function was placed in a mini-cluster by itself. Then, we picked the largest remaining function and probed the mini-clusters in the order of their creation, trying to find one that together with the new function would have no more than i variables. A new mini-cluster was created whenever the existing ones could not accommodate the new function.

Figure 3.

Procedure Mini-Clustering for Belief Updating (MC-BU).
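The greedy partitioning described above can be sketched as follows. The function name and the index-based representation are hypothetical; ties are broken by input order (Python's `sorted` is stable, also with `reverse=True`), which is one instance of the arbitrary tie-breaking mentioned in the text. Applied to cluster 2 of Example 2 with i = 3, it reproduces the partition used in that example: {p(d|b), h(1,2)(b, c)} and {p(f|c, d)}.

```python
def greedy_partition(scopes, i_bound):
    """Greedy mini-cluster partitioning as described in the text.

    scopes: one set of variables per function to be combined.
    Returns a list of mini-clusters, each a list of indices into `scopes`.
    """
    # Largest scope first; sorted() is stable, so ties keep input order.
    order = sorted(range(len(scopes)), key=lambda k: len(scopes[k]), reverse=True)
    minis, mini_vars = [], []
    for k in order:
        for idx, vs in enumerate(mini_vars):  # probe in order of creation
            if len(vs | scopes[k]) <= i_bound:
                minis[idx].append(k)
                mini_vars[idx] = vs | scopes[k]
                break
        else:  # no existing mini-cluster can take it: open a new one
            minis.append([k])
            mini_vars.append(set(scopes[k]))
    return minis


# Cluster 2 of Example 2 with i = 3: p(d|b), h_(1,2)(b, c), p(f|c, d).
scopes = [{"B", "D"}, {"B", "C"}, {"C", "D", "F"}]
print(greedy_partition(scopes, 3))  # [[2], [0, 1]]
```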

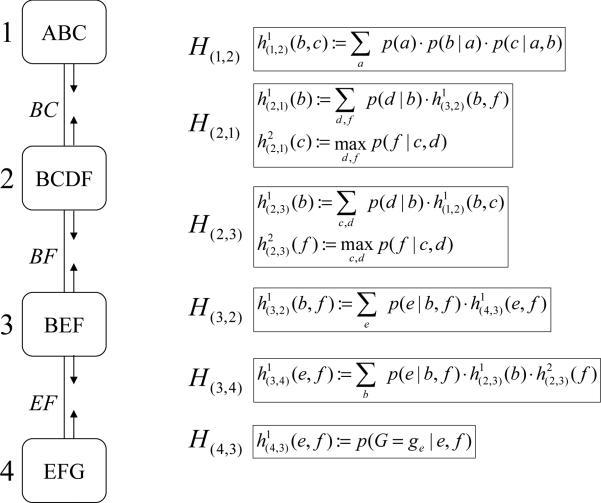

Example 2 Figure 4 shows the trace of running MC-BU(3) on the problem in Figure 2. First, evidence G = ge is assigned in all CPTs. There are no individual functions to be sent from cluster 1 to cluster 2. Cluster 1 contains only 3 variables, χ(1) = {A, B, C}, therefore it is not partitioned. The combined function is computed and the message is sent to node 2. Now, node 2 can send its message to node 3. Again, there are no individual functions. Cluster 2 contains 4 variables, χ(2) = {B, C, D, F}, and a partitioning is necessary: MC-BU(3) can choose mc(1) = {p(d|b), h(1,2)(b, c)} and mc(2) = {p(f|c, d)}. The combined functions $h^1_{(2,3)}$ and $h^2_{(2,3)}$ are computed by eliminating elim(2, 3) from the functions of mc(1) and mc(2), respectively, and the message $H_{(2,3)} = \{h^1_{(2,3)}, h^2_{(2,3)}\}$ is sent to node 3. The algorithm continues until every node has received messages from all its neighbors. An upper bound on p(a, G = ge) can now be computed by choosing cluster 1, which contains variable A. It doesn't need partitioning, so the algorithm just computes $\sum_{b,c} \prod_{f \in cluster(1)} f$, the product of the CPTs in ψ(1) and the message $H_{(2,1)}$, summed over B and C. Notice that unlike CTE-BU, which processes 4 variables in cluster 2, MC-BU(3) never processes more than 3 variables at a time.

Figure 4.

Execution of MC-BU for i = 3.

It was already shown that:

Theorem 2 (Dechter & Rish, 2003) Given a Bayesian network and the evidence e, the algorithm MC-BU(i) computes an upper bound on the joint probability P(Xi, e) of each variable Xi (and each of its values) and the evidence e.

Theorem 3 (complexity of MC-BU(i)) (Dechter et al., 2001) Given a Bayesian network $\mathcal{B} = \langle X, D, G, P \rangle$ and a tree-decomposition 〈T, χ, ψ〉 of $\mathcal{B}$, the time and space complexity of MC-BU(i) is $O(n \cdot hw^* \cdot d^i)$, where n is the number of variables, d is the maximum domain size of a variable and $hw^*$ bounds the number of mini-clusters.
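To get a feel for the gap between Theorems 1 and 3, the dominant terms can be evaluated directly. The sketch below drops all constant factors, so the numbers are only meaningful relative to each other, and every parameter value is hypothetical (binary variables, treewidth 16, i-bound 8); the function names are illustrative.

```python
def cte_bu_time(deg, n, N, d, w):
    """Dominant term of Theorem 1: deg * (n + N) * d ** (w + 1)."""
    return deg * (n + N) * d ** (w + 1)


def mc_bu_time(n, hw, d, i):
    """Dominant term of Theorem 3: n * hw * d ** i."""
    return n * hw * d ** i


# Hypothetical parameters: binary variables, treewidth 16, i-bound 8.
print(cte_bu_time(deg=3, n=50, N=40, d=2, w=16))  # 35389440
print(mc_bu_time(n=50, hw=10, d=2, i=8))          # 128000
```

The exponent is what matters: raising i by one doubles the MC-BU(i) term for binary variables, which is the knob that trades accuracy for time.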

Semantics of Mini-Clustering The mini-bucket scheme was shown to have the semantics of relaxation via node duplication (Kask & Dechter, 2001; Choi, Chavira, & Darwiche, 2007). We extend it to mini-clustering by showing how it can apply as is to messages that flow in one direction (inward, from leaves to root), as follows. Given a tree-decomposition D, where CTE-BU computes a function h(u,v) (the message that cluster u sends to cluster v), MC-BU(i) partitions cluster u into p mini-clusters u1,. . ., up, which are processed independently, and the resulting functions h(uk,v) are then sent to v. Instead, consider a different decomposition D′, which is just like D, with the exception that (a) instead of u, it has clusters u1,. . ., up, all of which are children of v, and each variable appearing in more than a single mini-cluster becomes a new variable, and (b) each child w of u (in D) is a child of uk (in D′), such that h(w,u) (in D) is assigned to uk (in D′) during the partitioning. Note that D′ is not a legal tree-decomposition relative to the original variables since it violates the connectedness property: the mini-clusters u1,. . ., up contain variables elim(u, v) but the path between the nodes u1,. . ., up (this path goes through v) does not. However, it is a legal tree-decomposition relative to the new variables. It is straightforward to see that the set H(u,v) computed by MC-BU(i) on D is the same as {h(uk,v) | k = 1,. . ., p} computed by CTE-BU on D′ in the direction from leaves to root.
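The node-duplication view can be checked numerically on the smallest interesting case. In this hypothetical sketch, cluster u is split into mini-clusters u1 = {f1(X, Y)} and u2 = {f2(X, Z)} with elim(u, v) = {X}; duplicating X into independent copies X1 and X2 makes the exact sum over the relaxed problem coincide with the MC-BU(i) message. Names and random tables are illustrative only.

```python
import random
from itertools import product

random.seed(2)
D = (0, 1, 2)  # hypothetical common domain of X, Y, Z
# Hypothetical cluster u split into mini-clusters u1 = {f1(X, Y)} and
# u2 = {f2(X, Z)}, with elim(u, v) = {X}.
f1 = {(x, y): random.random() for x, y in product(D, D)}
f2 = {(x, z): random.random() for x, z in product(D, D)}


def mc_on_D(y, z):
    """MC-BU(i) on D: X is eliminated separately in u1 and in u2."""
    return sum(f1[x, y] for x in D) * sum(f2[x, z] for x in D)


def cte_on_D_prime(y, z):
    """Exact elimination on D': X is duplicated into X1 (in u1) and X2
    (in u2), and the relaxed problem sums over both copies jointly."""
    return sum(f1[x1, y] * f2[x2, z] for x1, x2 in product(D, D))


# Largest discrepancy over all (y, z); only floating-point rounding remains.
print(max(abs(mc_on_D(y, z) - cte_on_D_prime(y, z)) for y, z in product(D, D)))
```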

If we want to capture the semantics of the outward messages from root to leaves, we need to generate a different relaxed decomposition (D″) because MC, as defined, allows a different partitioning in the up and down streams of the same cluster. We could of course stick with the decomposition in D′ and use CTE in both directions which would lead to another variant of mini-clustering.

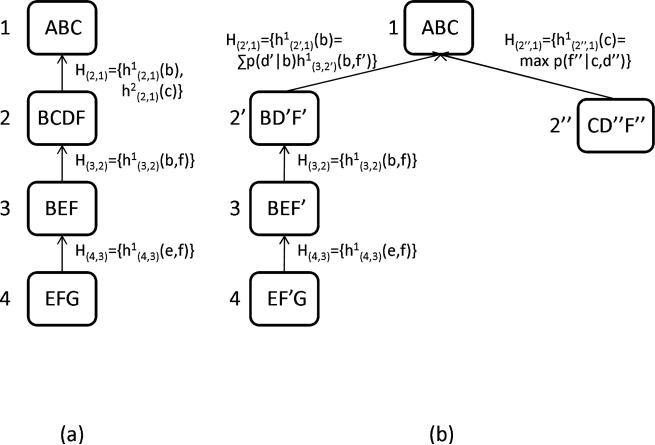

Example 3 Figure 5(a) shows a trace of the bottom-up phase of MC-BU(3) on the network in Figure 4. Figure 5(b) shows a trace of the bottom-up phase of CTE-BU algorithm on a problem obtained from the problem in Figure 4 by splitting nodes D (into D′ and D″) and F (into F′ and F″).

Figure 5.

Node duplication semantics of MC: (a) trace of MC-BU(3); (b) trace of CTE-BU.

The MC-BU algorithm computes an upper bound P̄(Xi, e) on the joint probability P(Xi, e). However, deriving a bound on the conditional probability P(Xi|e) is not easy when the exact value of P(e) is not available. If we just try to divide (multiply) P̄(Xi, e) by a constant, the result is not necessarily an upper bound on P(Xi|e). It is easy to show that normalization, $\bar{P}(x_i, e) / \sum_{x_i \in D_i} \bar{P}(x_i, e)$, with the mean operator is identical to normalization of MC-BU output when applying the summation operator in all the mini-clusters.
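A minimal sketch of this normalization, with hypothetical values and a hypothetical function name: because each mean divides a mini-cluster message by a count that does not depend on x_i, the mean-based output differs from the all-summation output by a constant factor, which normalization cancels.

```python
def normalize(upper):
    """Turn values P̄(x_i, e), one per value x_i, into an estimate of
    P(X_i | e). The result is an approximation, not a bound."""
    z = sum(upper.values())
    return {val: p / z for val, p in upper.items()}


upper = {0: 0.375, 1: 0.125}                      # hypothetical MC-BU output
scaled = {val: p / 4 for val, p in upper.items()}  # e.g. a mean over 4 entries
print(normalize(upper), normalize(scaled))        # both {0: 0.75, 1: 0.25}
```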

MC-BU(i) is an improvement over the Mini-Bucket algorithm MB(i), in that it allows the computation of P̄(Xi, e) for all variables with a single run, whereas MB(i) computes P̄(Xi, e) for just one variable, with a single run. When computing P̄(Xi, e) for each variable, MB(i) has to be run n times, once for each variable, an algorithm we call nMB(i). It was demonstrated by Mateescu et al. (2002) that MC-BU(i) has up to linear speed-up over nMB(i). For a given i, the accuracy of MC-BU(i) can be shown to be not worse than that of nMB(i).

3.2 Experimental Evaluation of Mini-Clustering

The work of Mateescu et al. (2002) and Kask (2001) provides an empirical evaluation of MC-BU that reveals the impact of the accuracy parameter on its quality of approximation and compares with Iterative Belief Propagation and a Gibbs sampling scheme. We will include here only a subset of these experiments which will provide the essence of our results. Additional empirical evaluation of MC-BU will be given when comparing against IJGP later in this paper.

We tested the performance of MC-BU(i) on random Noisy-OR networks, random coding networks, general random networks, grid networks, and three benchmark CPCS files with 54, 360 and 422 variables respectively (these are belief networks for medicine, derived from the Computer based Patient Case Simulation system, known to be hard for belief updating). On each type of network we ran Iterative Belief Propagation (IBP) - set to run at most 30 iterations, Gibbs Sampling (GS) and MC-BU(i), with i from 2 to the treewidth w* to capture the anytime behavior of MC-BU(i).

The random networks were generated using parameters (N,K,C,P), where N is the number of variables, K is their domain size (we used only K=2), C is the number of conditional probability tables and P is the number of parents in each conditional probability table. The parents in each table are picked randomly given a topological ordering, and the conditional probability tables are filled randomly. The grid networks have the structure of a square, with edges directed to form a diagonal flow (all parallel edges have the same direction). They were generated by specifying N (a square integer) and K (we used K=2). We also varied the number of evidence nodes, denoted by |e| in the tables. The parameter values are reported in each table. For all the problems, Gibbs sampling performed consistently poorly so we only include part of its results here.
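The (N, K, C, P) generation procedure can be sketched as below. The text does not say how the C tables are assigned to variables, so this sketch assumes (hypothetically) that C distinct variables, chosen at random, each receive P parents from earlier variables in a fixed topological order 0..N-1, while the remaining variables are roots; CPT rows are random and normalized. The function name and signature are illustrative, not the generator used in the experiments.

```python
import random
from itertools import product


def random_network(N, K, C, P, rng):
    """Hypothetical (N, K, C, P) random belief network generator.

    Assumption: C distinct variables get min(P, #predecessors) parents drawn
    from earlier variables in the order 0..N-1; all others are roots.
    Returns (parents, cpts); cpts[v] maps (x_v, *parent values) -> prob.
    """
    with_parents = set(rng.sample(range(N), C))
    parents, cpts = {}, {}
    for v in range(N):
        k = min(P, v) if v in with_parents else 0
        parents[v] = tuple(sorted(rng.sample(range(v), k)))
        table = {}
        for pa_vals in product(range(K), repeat=len(parents[v])):
            weights = [rng.random() for _ in range(K)]
            total = sum(weights)
            for x in range(K):  # normalize so each CPT row sums to 1
                table[(x, *pa_vals)] = weights[x] / total
        cpts[v] = table
    return parents, cpts


parents, cpts = random_network(N=10, K=2, C=6, P=2, rng=random.Random(0))
print(parents)
```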

In our experiments we focused on the approximation power of MC-BU(i). We compared two versions of the algorithm. In the first version, for every cluster, we used the max operator in all its mini-clusters, except for one of them that was processed by summation. In the second version, we used the operator mean in all the mini-clusters. We investigated this second version of the algorithm for two reasons: (1) we compare MC-BU(i) with IBP and Gibbs sampling, both of which are also approximation algorithms, so it would not be possible to compare with a bounding scheme; (2) we observed in our experiments that, although the bounds improve as the i-bound increases, the quality of bounds computed by MC-BU(i) was still poor, with upper bounds being greater than 1 in many cases. Notice that we need to maintain the sum operator for at least one of the mini-clusters. The mean operator simply performs summation and divides by the number of elements in the sum. For example, if A, B, C are binary variables (taking values 0 and 1), and f(A, B, C) is the aggregated function of one mini-cluster, and elim = {A, B}, then computing the message h(C) by the mean operator gives: $h(C) = \frac{1}{4} \sum_{A, B \in \{0, 1\}} f(A, B, C)$.
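The mean-operator message from the example above can be computed directly. The function `mean_message` and the choice f(A, B, C) = (A + B + C)/3 are hypothetical.

```python
from itertools import product


def mean_message(f, domain=(0, 1)):
    """h(C) from f(A, B, C) with elim = {A, B}, using the mean operator:
    sum over the |D_A| * |D_B| pairs (a, b), then divide by their number."""
    pairs = list(product(domain, repeat=2))
    return {c: sum(f[a, b, c] for a, b in pairs) / len(pairs) for c in domain}


# Hypothetical mini-cluster function over binary A, B, C.
f = {(a, b, c): (a + b + c) / 3 for a, b, c in product((0, 1), repeat=3)}
print(mean_message(f))  # approx {0: 0.333, 1: 0.667}
```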

We computed the exact solution and used three different measures of accuracy: 1) Normalized Hamming Distance (NHD) - we picked the most likely value for each variable for the approximate and for the exact, took the ratio between the number of disagreements and the total number of variables, and averaged over the number of problems that we ran for each class; 2) Absolute Error (Abs. Error) - is the absolute value of the difference between the approximate and the exact, averaged over all values (for each variable), all variables and all problems; 3) Relative Error (Rel. Error) - is the absolute value of the difference between the approximate and the exact, divided by the exact, averaged over all values (for each variable), all variables and all problems. For coding networks, we report only one measure, Bit Error Rate (BER). In terms of the measures defined above, BER is the normalized Hamming distance between the approximate (computed by an algorithm) and the actual input (which in the case of coding networks may be different from the solution given by exact algorithms), so we denote them differently to make this semantic distinction. We also report the time taken by each algorithm. For reported metrics (time, error, etc.) provided in the Tables, we give both mean and max values.
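The three accuracy measures can be sketched for a single problem instance (the paper additionally averages over instances). This is an illustration under two assumptions not fixed by the text: errors are averaged over all (variable, value) pairs at once, which coincides with per-variable averaging when all domains have the same size, and exact marginals are non-zero so that the relative error is defined. Names and data are hypothetical.

```python
def error_measures(approx, exact):
    """NHD, absolute error and relative error for one problem instance.

    approx, exact: one list of marginals P(X_i = x | e) per variable.
    Assumes every exact marginal is non-zero (relative error divides by it)
    and averages errors over all (variable, value) pairs at once.
    """
    def argmax(dist):
        return max(range(len(dist)), key=dist.__getitem__)

    nhd = sum(argmax(a) != argmax(x) for a, x in zip(approx, exact)) / len(exact)
    pairs = [(p, q) for a, x in zip(approx, exact) for p, q in zip(a, x)]
    abs_err = sum(abs(p - q) for p, q in pairs) / len(pairs)
    rel_err = sum(abs(p - q) / q for p, q in pairs) / len(pairs)
    return nhd, abs_err, rel_err


exact = [[0.75, 0.25], [0.25, 0.75]]     # hypothetical exact marginals
approx = [[0.625, 0.375], [0.75, 0.25]]  # hypothetical approximation
print(error_measures(approx, exact))     # (0.5, 0.3125, 0.8333...)
```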

In Figure 6 we show that IBP converges after about 5 iterations. So, while in our experiments we report its time for 30 iterations, its time is even better when sophisticated termination is used. These results are typical of all runs.

Figure 6.

Convergence of IBP (50 variables, evidence from 0-30 variables).

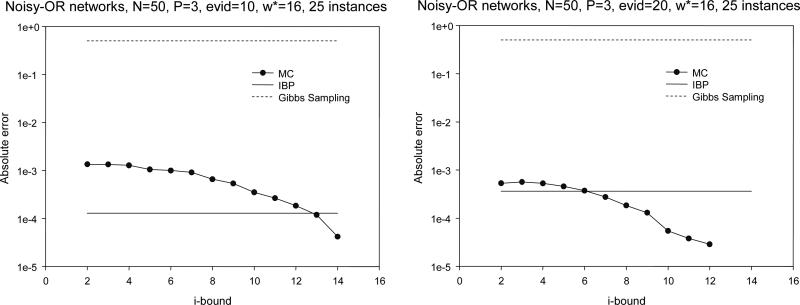

Random Noisy-OR networks results are summarized in Tables 1 and 2, and Figure 7. For NHD, both IBP and MC-BU gave perfect results. For the other measures, we noticed that IBP is about an order of magnitude more accurate when there is no evidence. However, as evidence is added, IBP's accuracy decreases while MC-BU's increases, and the two give similar results. We see that MC-BU gets better as the accuracy parameter i increases, which shows its anytime behavior.

Table 1.

Performance on Noisy-OR networks, w* = 10: Normalized Hamming Distance, absolute error, relative error and time.

N=50, P=2, 50 instances

| Algorithm | \|e\| | NHD max | NHD mean | Abs. Error max | Abs. Error mean | Rel. Error max | Rel. Error mean | Time max | Time mean |
|---|---|---|---|---|---|---|---|---|---|
| IBP | 0 | 0 | | 9.0E-09 | | 1.1E-05 | | 0.102 | |
| IBP | 10 | 0 | | 3.4E-04 | | 4.2E-01 | | 0.081 | |
| IBP | 20 | 0 | | 9.6E-04 | | 1.2E+00 | | 0.062 | |
| MC-BU(2) | 0 | 0 | 0 | 1.6E-03 | 1.1E-03 | 1.9E+00 | 1.3E+00 | 0.056 | 0.057 |
| MC-BU(2) | 10 | 0 | 0 | 1.1E-03 | 8.4E-04 | 1.4E+00 | 1.0E+00 | 0.048 | 0.049 |
| MC-BU(2) | 20 | 0 | 0 | 5.7E-04 | 4.8E-04 | 7.1E-01 | 5.9E-01 | 0.039 | 0.039 |
| MC-BU(5) | 0 | 0 | 0 | 1.1E-03 | 9.4E-04 | 1.4E+00 | 1.2E+00 | 0.070 | 0.072 |
| MC-BU(5) | 10 | 0 | 0 | 7.7E-04 | 6.9E-04 | 9.3E-01 | 8.4E-01 | 0.063 | 0.066 |
| MC-BU(5) | 20 | 0 | 0 | 2.8E-04 | 2.7E-04 | 3.5E-01 | 3.3E-01 | 0.058 | 0.057 |
| MC-BU(8) | 0 | 0 | 0 | 3.6E-04 | 3.2E-04 | 4.4E-01 | 3.9E-01 | 0.214 | 0.221 |
| MC-BU(8) | 10 | 0 | 0 | 1.7E-04 | 1.5E-04 | 2.0E-01 | 1.9E-01 | 0.184 | 0.190 |
| MC-BU(8) | 20 | 0 | 0 | 3.5E-05 | 3.5E-05 | 4.3E-02 | 4.3E-02 | 0.123 | 0.127 |

Table 2.

Performance on Noisy-OR networks, w* = 16: Normalized Hamming Distance, absolute error, relative error and time.

N=50, P=3, 25 instances

| Algorithm | \|e\| | NHD max | NHD mean | Abs. Error max | Abs. Error mean | Rel. Error max | Rel. Error mean | Time max | Time mean |
|---|---|---|---|---|---|---|---|---|---|
| IBP | 10 | 0 | | 1.3E-04 | | 7.9E-01 | | 0.242 | |
| IBP | 20 | 0 | | 3.6E-04 | | 2.2E+00 | | 0.184 | |
| IBP | 30 | 0 | | 6.8E-04 | | 4.2E+00 | | 0.121 | |
| MC-BU(2) | 10 | 0 | 0 | 1.3E-03 | 9.6E-04 | 8.2E+00 | 5.8E+00 | 0.107 | 0.108 |
| MC-BU(2) | 20 | 0 | 0 | 5.3E-04 | 4.0E-04 | 3.1E+00 | 2.4E+00 | 0.077 | 0.077 |
| MC-BU(2) | 30 | 0 | 0 | 2.3E-04 | 1.9E-04 | 1.4E+00 | 1.2E+00 | 0.064 | 0.064 |
| MC-BU(5) | 10 | 0 | 0 | 1.0E-03 | 8.3E-04 | 6.4E+00 | 5.1E+00 | 0.133 | 0.133 |
| MC-BU(5) | 20 | 0 | 0 | 4.6E-04 | 4.1E-04 | 2.7E+00 | 2.4E+00 | 0.104 | 0.105 |
| MC-BU(5) | 30 | 0 | 0 | 2.0E-04 | 1.9E-04 | 1.2E+00 | 1.2E+00 | 0.098 | 0.095 |
| MC-BU(8) | 10 | 0 | 0 | 6.6E-04 | 5.7E-04 | 4.0E+00 | 3.5E+00 | 0.498 | 0.509 |
| MC-BU(8) | 20 | 0 | 0 | 1.8E-04 | 1.8E-04 | 1.1E+00 | 1.0E+00 | 0.394 | 0.406 |
| MC-BU(8) | 30 | 0 | 0 | 3.4E-05 | 3.4E-05 | 2.1E-01 | 2.1E-01 | 0.300 | 0.308 |
| MC-BU(11) | 10 | 0 | 0 | 2.6E-04 | 2.4E-04 | 1.6E+00 | 1.5E+00 | 2.339 | 2.378 |
| MC-BU(11) | 20 | 0 | 0 | 3.8E-05 | 3.8E-05 | 2.3E-01 | 2.3E-01 | 1.421 | 1.439 |
| MC-BU(11) | 30 | 0 | 0 | 6.4E-07 | 6.4E-07 | 4.0E-03 | 4.0E-03 | 0.613 | 0.624 |
| MC-BU(14) | 10 | 0 | 0 | 4.2E-05 | 4.1E-05 | 2.5E-01 | 2.4E-01 | 7.805 | 7.875 |
| MC-BU(14) | 20 | 0 | 0 | 0 | 0 | 0 | 0 | 2.075 | 2.093 |
| MC-BU(14) | 30 | 0 | 0 | 0 | 0 | 0 | 0 | 0.630 | 0.638 |

Figure 7.

Absolute error for Noisy-OR networks.

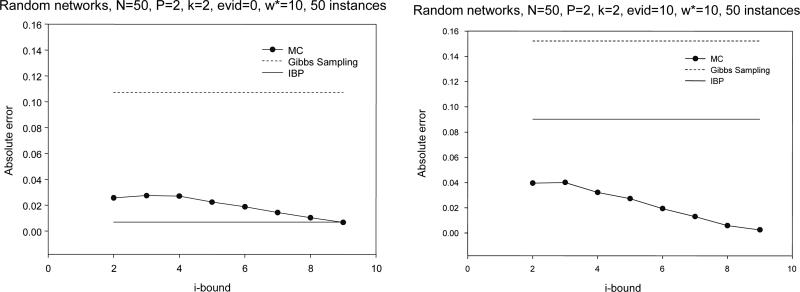

General random networks results are summarized in Figure 8. They are similar to those for random Noisy-OR networks. Again, IBP has the best result only when the number of evidence variables is small. It is remarkable how quickly MC-BU surpasses the performance of IBP as evidence is added (for more, see the results of Mateescu et al., 2002).

Figure 8.

Absolute error for random networks.

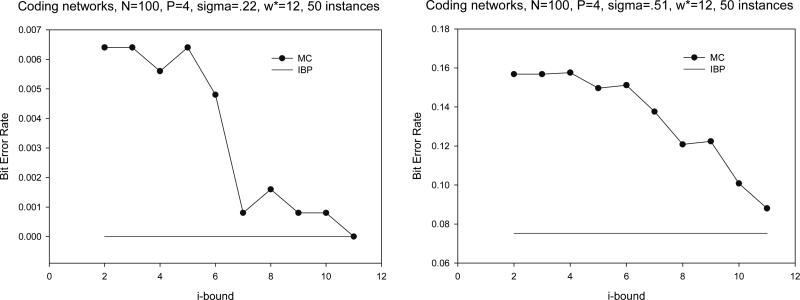

Random coding networks results are given in Table 3 and Figure 9. The instances fall within the class of linear block codes (σ is the channel noise level). It is known that IBP is very accurate for this class. Indeed, these are the only problems we experimented with where IBP outperformed MC-BU throughout. The anytime behavior of MC-BU can again be seen in the variation of the numbers in each column, and more vividly in Figure 9.

Table 3.

Bit Error Rate (BER) for coding networks.

| BER | σ = .22 max | σ = .22 mean | σ = .26 max | σ = .26 mean | σ = .32 max | σ = .32 mean | σ = .40 max | σ = .40 mean | σ = .51 max | σ = .51 mean | Time |
|---|---|---|---|---|---|---|---|---|---|---|---|
| N=100, P=3, 50 instances, w*=7 | | | | | | | | | | | |
| IBP | 0.000 | 0.000 | 0.000 | 0.000 | 0.002 | 0.002 | 0.022 | 0.022 | 0.088 | 0.088 | 0.00 |
| GS | 0.483 | 0.483 | 0.483 | 0.483 | 0.483 | 0.483 | 0.483 | 0.483 | 0.483 | 0.483 | 31.36 |
| MC-BU(2) | 0.002 | 0.002 | 0.004 | 0.004 | 0.024 | 0.024 | 0.068 | 0.068 | 0.132 | 0.131 | 0.08 |
| MC-BU(4) | 0.001 | 0.001 | 0.002 | 0.002 | 0.018 | 0.018 | 0.046 | 0.045 | 0.110 | 0.110 | 0.08 |
| MC-BU(6) | 0.000 | 0.000 | 0.000 | 0.000 | 0.004 | 0.004 | 0.038 | 0.038 | 0.106 | 0.106 | 0.12 |
| MC-BU(8) | 0.000 | 0.000 | 0.000 | 0.000 | 0.002 | 0.002 | 0.023 | 0.023 | 0.091 | 0.091 | 0.19 |
| N=100, P=4, 50 instances, w*=11 | | | | | | | | | | | |
| IBP | 0.000 | 0.000 | 0.000 | 0.000 | 0.002 | 0.002 | 0.013 | 0.013 | 0.075 | 0.075 | 0.00 |
| GS | 0.506 | 0.506 | 0.506 | 0.506 | 0.506 | 0.506 | 0.506 | 0.506 | 0.506 | 0.506 | 39.85 |
| MC-BU(2) | 0.006 | 0.006 | 0.015 | 0.015 | 0.043 | 0.043 | 0.093 | 0.094 | 0.157 | 0.157 | 0.19 |
| MC-BU(4) | 0.006 | 0.006 | 0.017 | 0.017 | 0.049 | 0.049 | 0.104 | 0.102 | 0.158 | 0.158 | 0.19 |
| MC-BU(6) | 0.005 | 0.005 | 0.011 | 0.011 | 0.035 | 0.034 | 0.071 | 0.074 | 0.151 | 0.150 | 0.29 |
| MC-BU(8) | 0.002 | 0.002 | 0.004 | 0.004 | 0.022 | 0.022 | 0.059 | 0.059 | 0.121 | 0.122 | 0.71 |
| MC-BU(10) | 0.001 | 0.001 | 0.001 | 0.001 | 0.008 | 0.008 | 0.033 | 0.032 | 0.101 | 0.102 | 1.87 |

Figure 9.

Bit Error Rate (BER) for coding networks.

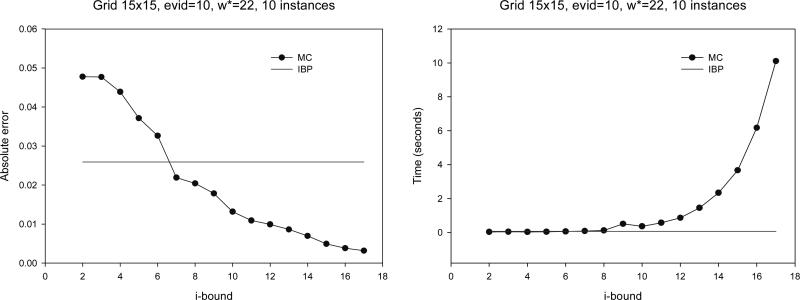

Grid networks results are given in Figure 10. We notice that IBP is more accurate when there is no evidence, and MC-BU is better as more evidence is added. The same behavior was consistently manifested for the smaller grid networks that we experimented with (from 7×7 up to 14×14).

Figure 10.

Grid 15×15: absolute error and time.

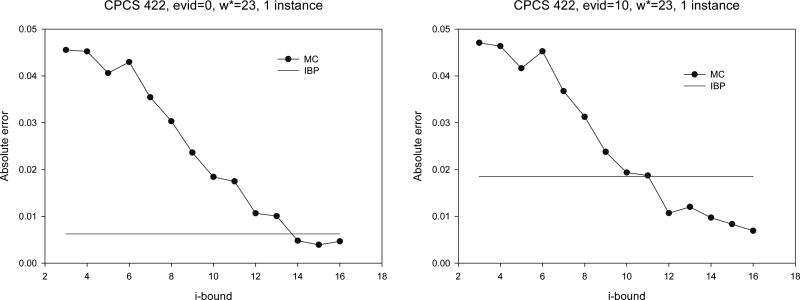

CPCS networks results

We also tested on three CPCS benchmark files. The results are given in Figure 11. Notice that the MC-BU scheme scales up to fairly large networks, such as the real-life example of CPCS422 (induced width 23). IBP is again more accurate when there is no evidence, but is surpassed by MC-BU when evidence is added. However, whereas MC-BU is competitive with IBP time-wise when the i-bound is small, its runtime grows rapidly as the i-bound increases. For more details on all these benchmarks, see the results of Mateescu et al. (2002).

Figure 11.

Absolute error for CPCS422.

Summary

Our results show that, as expected, IBP is superior to all other approximations for coding networks. However, for random Noisy-OR, general random, grid networks and the CPCS networks, in the presence of evidence, the mini-clustering scheme is often superior even in its weakest form. The empirical results are particularly encouraging as we use an un-optimized scheme that exploits a universal principle applicable to many reasoning tasks.

4. Join-Graph Decomposition and Propagation

In this section we introduce algorithm Iterative Join-Graph Propagation (IJGP), which, like mini-clustering, is designed to benefit from bounded inference, but also exploits iterative message-passing as used by IBP. Algorithm IJGP can be viewed as an iterative version of mini-clustering, improving the quality of approximation, especially for low i-bounds. Given a cluster of the decomposition, mini-clustering can potentially create a different partitioning for every message sent to a neighbor. This dynamic partitioning can happen because the incoming message from each neighbor has to be excluded when realizing the partitioning, so a different set of functions is split into mini-clusters for every message to a neighbor. We can define a version of mini-clustering where for every cluster we create a unique static partition into mini-clusters such that every incoming message can be included into one of the mini-clusters. This version of MC can be extended into IJGP by introducing some links between mini-clusters of the same cluster, and carefully limiting the interaction between the resulting nodes in order to eliminate over-counting.

Algorithm IJGP works on a general join-graph that may contain cycles. The cluster size of the graph is user-adjustable via the i-bound (providing the anytime nature), and the cycles in the graph allow the iterative application of message-passing. In Subsection 4.1 we introduce join-graphs and discuss their properties. In Subsection 4.2 we describe the IJGP algorithm itself.

4.1 Join-Graphs

Definition 7 (join-graph decomposition) A join-graph decomposition for a belief network is a triple D = 〈JG, χ, ψ〉, where JG = (V, E) is a graph, and χ and ψ are labeling functions which associate with each vertex v ∈ V two sets, χ(v) ⊆ X and ψ(v) ⊆ P such that:

1. For each pi ∈ P, there is exactly one vertex v ∈ V such that pi ∈ ψ(v), and scope(pi) ⊆ χ(v).
2. (connectedness) For each variable Xi ∈ X, the set {v ∈ V | Xi ∈ χ(v)} induces a connected subgraph of JG. The connectedness requirement is also called the running intersection property.

We will often refer to a node in V and its CPT functions as a cluster³ and use the terms join-graph decomposition and cluster-graph interchangeably. Clearly, a join-tree decomposition or a cluster-tree is the special case when the join-graph D is a tree.

It is clear that one of the problems of message propagation over cyclic join-graphs is over-counting. To reduce this problem we devise a scheme that avoids cyclicity with respect to any single variable. The algorithm works on edge-labeled join-graphs.

Definition 8 (minimal edge-labeled join-graph decompositions) An edge-labeled join-graph decomposition for a belief network is a four-tuple D = 〈JG, χ, ψ, θ〉, where JG = (V, E) is a graph, χ and ψ associate with each vertex v ∈ V the sets χ(v) ⊆ X and ψ(v) ⊆ P, and θ associates with each edge the set θ((v, u)) ⊆ X such that:

1. For each function pi ∈ P, there is exactly one vertex v ∈ V such that pi ∈ ψ(v), and scope(pi) ⊆ χ(v).
2. (edge-connectedness) For each edge (u, v), θ((u, v)) ⊆ χ(u) ∩ χ(v), such that ∀Xi ∈ X, any two clusters containing Xi can be connected by a path whose every edge label includes Xi.

Finally, an edge-labeled join-graph is minimal if no variable can be deleted from any label while still satisfying the edge-connectedness property.

Definition 9 (separator, eliminator of edge-labeled join-graphs) Given two adjacent vertices u and v of JG, the separator of u and v is defined as sep(u, v) = θ((u, v)), and the eliminator of u with respect to v is elim(u, v) = χ(u) − θ((u, v)). The separator width is max(u,v)|sep(u, v)|.

Edge-labeled join-graphs can be made label minimal by deleting variables from the labels while maintaining connectedness (if an edge label becomes empty, the edge can be deleted). It is easy to see that,

Proposition 1 A minimal edge-labeled join-graph does not contain any cycle relative to any single variable. That is, any two clusters containing the same variable are connected by exactly one path labeled with that variable.

Notice that every minimal edge-labeled join-graph is edge-minimal (no edge can be deleted), but not vice-versa.
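The label-minimization step described above can be sketched in a few lines of Python. This is an illustrative sketch under our own assumptions, not the authors' implementation: a join-graph is encoded as a hypothetical dictionary `clusters` mapping each node v to χ(v), and a dictionary `labels` mapping each edge (u, v) to θ((u, v)); the function names are ours. Variables are greedily deleted from labels whenever edge-connectedness survives the deletion, and a checker then tests the property stated in Proposition 1 (for every variable, the edges labeled with it form a tree over the clusters containing it).

```python
def edge_connected(clusters, labels, x):
    """True if all clusters containing x are linked by edges whose labels contain x.
    Assumes every label is a subset of the intersection of its endpoints' clusters."""
    holders = {v for v, scope in clusters.items() if x in scope}
    if len(holders) <= 1:
        return True
    adj = {v: set() for v in holders}
    for (u, v), label in labels.items():
        if x in label:
            adj[u].add(v)
            adj[v].add(u)
    start = next(iter(holders))
    seen, stack = {start}, [start]
    while stack:  # depth-first search restricted to x-labeled edges
        for w in adj[stack.pop()] - seen:
            seen.add(w)
            stack.append(w)
    return seen == holders


def minimize_labels(clusters, labels):
    """Greedily delete label variables while edge-connectedness still holds."""
    labels = {e: set(lab) for e, lab in labels.items()}  # work on a copy
    for e in sorted(labels):
        for x in sorted(labels[e]):
            labels[e].discard(x)
            if not edge_connected(clusters, labels, x):
                labels[e].add(x)  # x is needed on this edge: restore it
    return {e: lab for e, lab in labels.items() if lab}  # drop edges with empty labels


def single_variable_acyclic(clusters, labels):
    """Proposition 1 check: for each variable, its labeled edges form a spanning tree."""
    for x in set().union(*clusters.values()):
        holders = [v for v, scope in clusters.items() if x in scope]
        n_edges = sum(1 for lab in labels.values() if x in lab)
        if not edge_connected(clusters, labels, x) or n_edges != len(holders) - 1:
            return False
    return True


# Hypothetical triangle of clusters that all contain variable 4 (cf. Example 4)
clusters = {"A": {1, 2, 4}, "B": {2, 3, 4}, "C": {1, 3, 4}}
labels = {("A", "B"): {2, 4}, ("A", "C"): {1, 4}, ("B", "C"): {3, 4}}
print(single_variable_acyclic(clusters, labels))   # False: cycle relative to variable 4
minimal = minimize_labels(clusters, labels)
print(single_variable_acyclic(clusters, minimal))  # True
```

A single greedy pass suffices here because deleting labels can only remove connections: a deletion rejected earlier would still disconnect the graph later, so the result cannot be reduced further.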

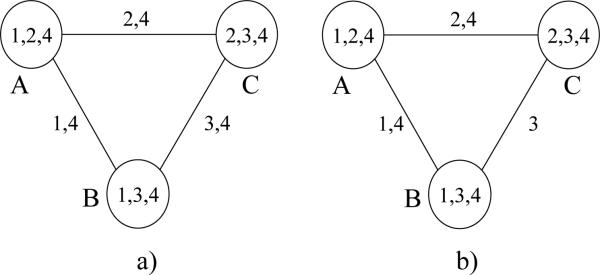

Example 4 The example in Figure 12a shows an edge-minimal join-graph which contains a cycle relative to variable 4, with edges labeled with separators. Notice, however, that if we remove variable 4 from the label of one edge, we will have no cycles (relative to single variables) while the connectedness property is still maintained.

Figure 12.

An edge-labeled decomposition.

The mini-clustering approximation presented in the previous section works by relaxing the join-tree requirement of exact inference into a collection of join-trees having smaller cluster size. It introduces some independencies in the original problem via node duplication and applies exact inference on the relaxed model, requiring only two passes of messages. For the class of IJGP algorithms we take a different route. We choose to relax the tree-structure requirement and use join-graphs which do not introduce any new independencies, and apply iterative message-passing on the resulting cyclic structure.

Indeed, it can be shown that any join-graph of a belief network is an I-map (independency map, Pearl, 1988) of the underlying probability distribution relative to node-separation. Since we plan to use minimal edge-labeled join-graphs to address over-counting problems, the question is what kind of independencies are captured by such graphs.

Definition 10 (edge-separation in edge-labeled join-graphs) Let D = 〈JG, χ, ψ, θ〉, JG = (V, E), be an edge-labeled decomposition of a Bayesian network. Let NW, NY ⊆ V be two sets of nodes, and EZ ⊆ E be a set of edges in JG. Let W, Y, Z be their corresponding sets of variables, namely $W = \bigcup_{v \in N_W} \chi(v)$, $Y = \bigcup_{v \in N_Y} \chi(v)$ and $Z = \bigcup_{e \in E_Z} \theta(e)$. We say that EZ edge-separates NW and NY in D if there is no path between NW and NY in the graph obtained from JG by removing the edges in EZ. In this case we also say that W is separated from Y given Z in D, and write 〈W|Z|Y〉D. Edge-separation in a regular join-graph is defined relative to its separators.
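Edge-separation is a plain reachability test, so it can be illustrated with a short Python sketch. The encoding is our own assumption (a dictionary `chi` for χ and a dictionary `theta` mapping edges to labels), and `edge_separates` and `variable_sets` are hypothetical helper names: the first removes the edges in EZ and runs a breadth-first search from NW; the second forms the variable sets W, Z, Y as unions of cluster and edge labels.

```python
from collections import deque


def edge_separates(nodes, edges, n_w, n_y, e_z):
    """True if no path links n_w to n_y once the edges in e_z are removed."""
    removed = {frozenset(e) for e in e_z}  # edges are undirected
    adj = {v: set() for v in nodes}
    for u, v in edges:
        if frozenset((u, v)) not in removed:
            adj[u].add(v)
            adj[v].add(u)
    seen, queue = set(n_w), deque(n_w)
    while queue:  # breadth-first search starting from N_W
        u = queue.popleft()
        if u in n_y:
            return False
        for w in adj[u] - seen:
            seen.add(w)
            queue.append(w)
    return True


def variable_sets(chi, theta, n_w, n_y, e_z):
    """W and Y are unions of cluster labels; Z is the union of the cut edges' labels."""
    W = set().union(*(chi[v] for v in n_w))
    Y = set().union(*(chi[v] for v in n_y))
    Z = set().union(*(theta[e] for e in e_z))
    return W, Z, Y


# Hypothetical chain join-graph 1 -- 2 -- 3
chi = {1: {"A", "B"}, 2: {"B", "C"}, 3: {"C", "D"}}
theta = {(1, 2): {"B"}, (2, 3): {"C"}}
print(edge_separates(chi, theta, {1}, {3}, {(2, 3)}))  # True
print(edge_separates(chi, theta, {1}, {3}, set()))     # False
print(variable_sets(chi, theta, {1}, {3}, {(2, 3)}))
```

In this toy case W = {A, B}, Z = {C} and Y = {C, D}; if the chain were a decomposition of some belief network, Theorem 4 would turn this edge-separation into the independence of {A, B} and {D} given {C}.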

Theorem 4 Any edge-labeled join-graph decomposition D = 〈JG, χ, ψ, θ〉 of a belief network is an I-map of P relative to edge-separation. Namely, any edge-separation in D corresponds to a conditional independence in P.

Proof: Let MG be the moral graph of BN. Since MG is an I-map of P, it is enough to prove that JG is an I-map of MG. Let NW and NY be disjoint sets of nodes and NZ be a set of edges in JG, and W, Z, Y be their corresponding sets of variables in MG. We will prove that 〈NW|NZ|NY〉JG implies 〈W|Z|Y〉MG by contradiction. Since the sets W, Z, Y may not be disjoint, we will actually prove that 〈W − Z|Z|Y − Z〉MG holds, this being equivalent to 〈W|Z|Y〉MG.

Suppose 〈W − Z|Z|Y − Z〉MG is false. Then there exists a path α = γ1, γ2,. . ., γn−1, β = γn in MG that goes from some variable α = γ1 ∈ W − Z to some variable β = γn ∈ Y − Z without intersecting variables in Z. Let Nv be the set of all nodes in JG that contain variable v ∈ X, and let us consider the set of nodes $S = \bigcup_{i=1}^{n} N_{\gamma_i}$.

We argue that S forms a connected sub-graph in JG. First, the running intersection property ensures that every Nγi, i = 1,. . ., n, remains connected in JG after removing the edges in NZ (otherwise, there must have been a path between the two disconnected parts in the original JG, which implies that γi is part of Z, a contradiction). Second, the fact that (γi, γi+1), i = 1,. . ., n − 1, is an edge in the moral graph MG implies that there is a conditional probability table (CPT) on both γi and γi+1, i = 1,. . ., n − 1 (and perhaps other variables). From property 1 of the definition of the join-graph, it follows that for all i = 1,. . ., n − 1 there exists a node in JG that contains both γi and γi+1. This proves the existence of a path in the mutilated join-graph (JG with NZ pulled out) from a node in NW containing α = γ1 to the node containing both γ1 and γ2 (Nγ1 is connected), then from that node to the one containing both γ2 and γ3 (Nγ2 is connected), and so on until we reach a node in NY containing β = γn. This shows that 〈NW|NZ|NY〉JG is false, concluding the proof by contradiction. □

Interestingly, however, deleting variables from edge labels or removing edges from edge-labeled join-graphs whose clusters are fixed will not increase the independencies captured by edge-labeled join-graphs. That is,

Proposition 2 Any two (edge-labeled) join-graphs defined on the same set of clusters, sharing (V, χ, ψ), express exactly the same set of independencies relative to edge-separation, and this set of independencies is identical to the one expressed by node separation in the primal graph of the join-graph.

Proof: This follows by looking at the primal graph of the join-graph (whose nodes are the original variables, with an arc connecting any two variables that appear together in some cluster) and observing that any edge-separation in a join-graph corresponds to a node separation in the primal graph and vice versa. □

Hence, the issue of minimizing computational over-counting due to cycles appears to be unrelated to the problem of maximizing independencies via minimal I-mapness. Nevertheless, to avoid over-counting as much as possible, we still prefer join-graphs that minimize cycles relative to each variable. That is, we prefer minimal edge-labeled join-graphs.

Relationship with region graphs

There is a strong relationship between our join-graphs and the region graphs of Yedidia et al. (2000, 2001, 2005). Their approach was inspired by advances in statistical physics, when it was realized that computing the partition function is essentially the same combinatorial problem that expresses probabilistic reasoning. As a result, variational methods from physics could have counterparts in reasoning algorithms. It was proved by Yedidia et al. (2000, 2001) that belief propagation on loopy networks can only converge (when it does so) to stationary points of the Bethe free energy. The Bethe approximation is only the simplest case of the more general Kikuchi (1951) cluster variational method. The idea is to group the variables together in clusters and perform exact computation in each cluster. One key question is then how to aggregate the results, and how to account for the variables that are shared between clusters. Again, the idea that everything should be counted exactly once is very important. This led to the proposal of region graphs (Yedidia et al., 2001, 2005) and the associated counting numbers for regions. They are given as a possible canonical version of graphs that can support Generalized Belief Propagation (GBP) algorithms. The join-graphs accomplish the same thing. The edge-labeled join-graphs can be described as region graphs where the regions are the clusters and the labels on the edges. The tree-ness condition with respect to every variable ensures that there is no over-counting.

A very similar approach to ours, also based on join-graphs but grounded in an information-theoretic perspective, was developed independently by McEliece and Yildirim (2002).

4.2 Algorithm IJGP

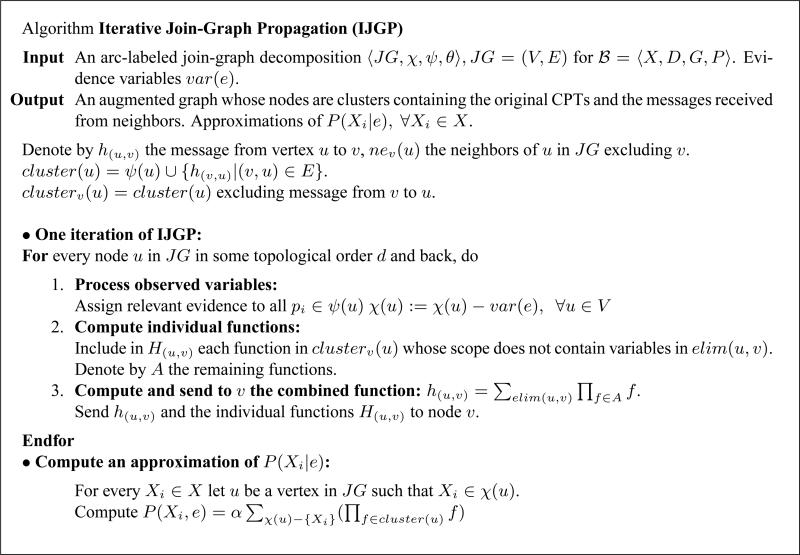

Applying CTE iteratively to minimal edge-labeled join-graphs yields our algorithm Iterative Join-Graph Propagation (IJGP), described in Figure 13. One iteration of the algorithm applies message-passing in a topological order over the join-graph, forward and back. When node u sends a message (or messages) to a neighbor node v, it operates on all the CPTs in its cluster and on all the messages sent from its neighbors, excluding the ones received from v. First, all individual functions that share no variables with the eliminator are collected and sent to v. All the remaining functions are combined in a product and summed over the eliminator between u and v.

Figure 13.

Algorithm Iterative Join-Graph Propagation (IJGP).
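The message computation described above can be sketched for discrete factors as follows. This is a hedged illustration, not the pseudocode of Figure 13: the factor encoding (a scope tuple plus a dictionary table) and the names `multiply`, `sum_out` and `ijgp_message` are our own, messages are left unnormalized, and the caller is responsible for the schedule that decides which incoming messages are available.

```python
from itertools import product

# A factor is a pair (scope, table): scope is a tuple of variable names and
# table maps each tuple of values (ordered as in scope) to a non-negative number.


def multiply(factors, domains):
    """Pointwise product of factors over the union of their scopes."""
    scope = tuple(sorted({x for s, _ in factors for x in s}))
    table = {}
    for values in product(*(domains[x] for x in scope)):
        assignment = dict(zip(scope, values))
        p = 1.0
        for s, t in factors:
            p *= t[tuple(assignment[x] for x in s)]
        table[values] = p
    return scope, table


def sum_out(factor, elim):
    """Sum a factor over the variables in elim."""
    scope, table = factor
    keep = tuple(x for x in scope if x not in elim)
    out = {}
    for values, p in table.items():
        key = tuple(v for x, v in zip(scope, values) if x not in elim)
        out[key] = out.get(key, 0.0) + p
    return keep, out


def ijgp_message(cpts_u, incoming, chi_u, label_uv, domains):
    """Messages cluster u sends to neighbor v.

    cpts_u   -- factors in psi(u)
    incoming -- messages u received from all neighbors except v
    """
    elim = set(chi_u) - set(label_uv)  # eliminator elim(u, v)
    funcs = list(cpts_u) + list(incoming)
    passthrough = [f for f in funcs if not set(f[0]) & elim]
    rest = [f for f in funcs if set(f[0]) & elim]
    messages = list(passthrough)  # functions free of the eliminator go to v unchanged
    if rest:
        messages.append(sum_out(multiply(rest, domains), elim))
    return messages


domains = {"A": [0, 1], "B": [0, 1]}
p_a = (("A",), {(0,): 0.6, (1,): 0.4})
p_b_given_a = (("B", "A"), {(0, 0): 0.8, (1, 0): 0.2, (0, 1): 0.3, (1, 1): 0.7})
h_w = (("B",), {(0,): 0.5, (1,): 2.0})  # hypothetical message from a third neighbor w
msgs = ijgp_message([p_a, p_b_given_a], [h_w], {"A", "B"}, {"B"}, domains)
```

With χ(u) = {A, B} and label {B}, the eliminator is {A}: the incoming function over B alone is forwarded as is, while P(A) and P(B|A) are multiplied and summed over A, yielding P(B) with P(B=0) = 0.6 and P(B=1) = 0.4.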

Based on the results by Lauritzen and Spiegelhalter (1988) and Larrosa, Kask, and Dechter (2001) it can be shown that:

Theorem 5

1. If IJGP is applied to a join-tree decomposition, it reduces to join-tree clustering, and therefore it is guaranteed to compute the exact beliefs in one iteration.
2. The time complexity of one iteration of IJGP is $O(deg \cdot (n + N) \cdot d^{w^*+1})$ and its space complexity is $O(N \cdot d^{\theta})$, where deg is the maximum degree of a node in the join-graph, n is the number of variables, N is the number of nodes in the graph decomposition, d is the maximum domain size, w* is the maximum cluster size and θ is the maximum label size.

For proof, see the properties of CTE presented by Kask et al. (2005).

The special case of Iterative Belief Propagation

Iterative belief propagation (IBP) is the iterative application, to an arbitrary Bayesian network, of Pearl's algorithm, which was originally defined for poly-trees (Pearl, 1988). We will describe IBP as an instance of join-graph propagation over a dual join-graph.

Definition 11 (dual graphs, dual join-graphs) Given a set of functions F = {f1,. . ., fl} over scopes S1,. . ., Sl, the dual graph of F is a graph DG = (V, E, L) that associates a node with each function, namely V = F, and an edge connects any two nodes whose functions' scopes share a variable, $E = \{(f_i, f_j) \mid S_i \cap S_j \neq \emptyset\}$. L is a set of labels for the edges, each edge being labeled by the shared variables of its nodes, $L = \{l_{ij} = S_i \cap S_j \mid (i, j) \in E\}$. A dual join-graph is an edge-labeled edge subgraph of DG that satisfies the connectedness property. A minimal dual join-graph is a dual join-graph for which none of the edge labels can be further reduced while maintaining the connectedness property.

Interestingly, there may be many minimal dual join-graphs of the same dual graph. We will define Iterative Belief Propagation on any dual join-graph. Each node sends a message over an edge whose scope is identical to the label on that edge. Since Pearl's algorithm sends messages whose scopes are singleton variables only, we highlight minimal singleton-label dual join-graphs.

Proposition 3 Any Bayesian network has a minimal dual join-graph where each edge is labeled by a single variable.

Proof: Consider a topological ordering d = X1,. . ., Xn of the nodes in the acyclic directed graph of the Bayesian network. We define the following dual join-graph. The node of the dual graph associated with pi is connected to the node associated with pj, j < i, whenever Xj ∈ pa(Xi). We label the edge between pj and pi by variable Xj, namely lij = {Xj}. The resulting edge-labeled subgraph of the dual graph satisfies connectedness: for each variable Xj, the nodes whose scopes contain Xj are pj and the CPTs of the children of Xj, and each of these is linked to pj by an edge labeled Xj, forming a star. (Take the original acyclic graph G and add to each node its CPT family, namely all the other parents that precede it in the ordering. Since G already satisfies connectedness, so does the minimal graph generated.) The resulting labeled graph is a dual graph with singleton labels. □
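The construction in this proof can be sketched directly. In this hedged Python illustration, each dual-graph node is named after the child variable of its CPT (our own convention), `parents` is a hypothetical dictionary encoding pa(Xi), and `connected_per_variable` checks the connectedness property variable by variable.

```python
def singleton_dual_join_graph(order, parents):
    """Link the node of P(X_i | pa(X_i)) to the node of P(X_j | pa(X_j))
    for every parent X_j of X_i, labeling the edge with {X_j}."""
    pos = {x: k for k, x in enumerate(order)}
    edges = {}
    for x in order:
        for p in parents[x]:
            assert pos[p] < pos[x], "order must be topological"
            edges[(p, x)] = {p}
    return edges


def connected_per_variable(scopes, edges):
    """Clusters containing each variable must be joined by edges labeled with it."""
    for var in set().union(*scopes.values()):
        holders = {n for n, s in scopes.items() if var in s}
        adj = {n: set() for n in holders}
        for (a, b), label in edges.items():
            if var in label:
                adj[a].add(b)
                adj[b].add(a)
        start = next(iter(holders))
        seen, stack = {start}, [start]
        while stack:
            for w in adj[stack.pop()] - seen:
                seen.add(w)
                stack.append(w)
        if seen != holders:
            return False
    return True


# The network of Example 5: CPTs P(A), P(B|A), P(C|A,B)
parents = {"A": [], "B": ["A"], "C": ["A", "B"]}
scopes = {x: {x, *pa} for x, pa in parents.items()}  # scope of each CPT
edges = singleton_dual_join_graph(["A", "B", "C"], parents)
print(edges)
print(connected_per_variable(scopes, edges))  # True
```

The graph produced here contains the cycle A, B, C as a graph, yet every label is a single variable, and for each variable the edges carrying it form a star, so there is no cycle relative to any single variable.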

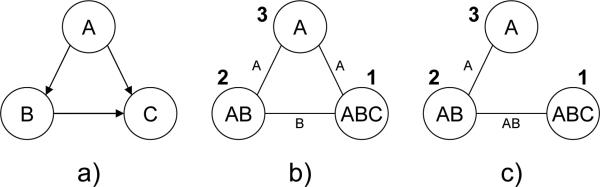

Example 5 Consider the belief network on 3 variables A, B, C with CPTs 1.P(C|A, B), 2.P(B|A) and 3.P(A), given in Figure 14a. Figure 14b shows a dual graph with singleton labels on the edges. Figure 14c shows a dual graph which is a join-tree, on which belief propagation can solve the problem exactly in one iteration (two passes up and down the tree).

Figure 14.

a) A belief network; b) A dual join-graph with singleton labels; c) A dual join-graph which is a join-tree.

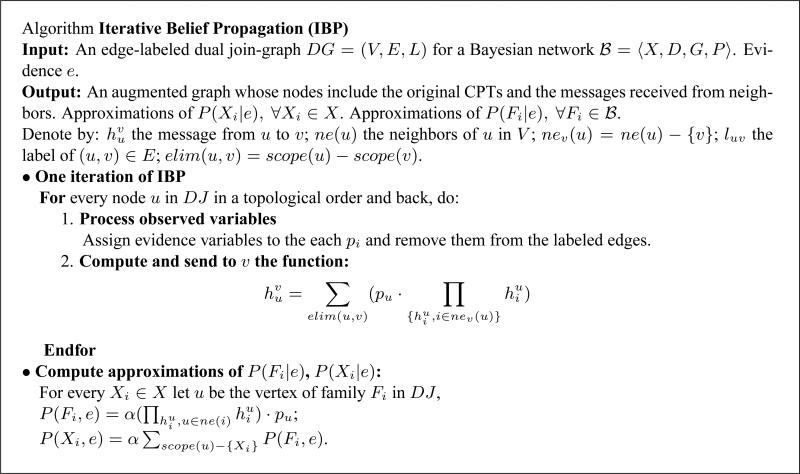

For completeness, we present algorithm IBP, which is a special case of IJGP, in Figure 15. It is easy to see that one iteration of IBP is time and space linear in the size of the belief network. It can be shown that when IBP is applied to a minimal singleton-labeled dual graph it coincides with Pearl's belief propagation applied directly to the acyclic graph representation. Also, when the dual join-graph is a tree IBP converges after one iteration (two passes, up and down the tree) to the exact beliefs.

Figure 15.

Algorithm Iterative Belief Propagation (IBP).

4.3 Bounded Join-Graph Decompositions

Since we want to control the complexity of join-graph algorithms, we will define them on decompositions having bounded cluster size. If the number of variables in a cluster is bounded by i, the time and space complexity of processing one cluster is exponential in i. Given a join-graph decomposition D = 〈JG, χ, ψ, θ〉, the accuracy and complexity of the (iterative) join-graph propagation algorithm depend on two different width parameters, defined next.

Definition 12 (external and internal widths) Given an edge-labeled join-graph decomposition D = 〈JG, χ, ψ, θ〉 of a network, the internal width of D is $\max_{v \in V} |\chi(v)|$, while the external width of D is the treewidth of JG as a graph.

Using this terminology we can now state our target decomposition more clearly. Given a graph G, and a bounding parameter i we wish to find a join-graph decomposition D of G whose internal width is bounded by i and whose external width is minimized. The bound i controls the complexity of join-graph processing while the external width provides some measure of its accuracy and speed of convergence, because it measures how close the join-graph is to a join-tree.

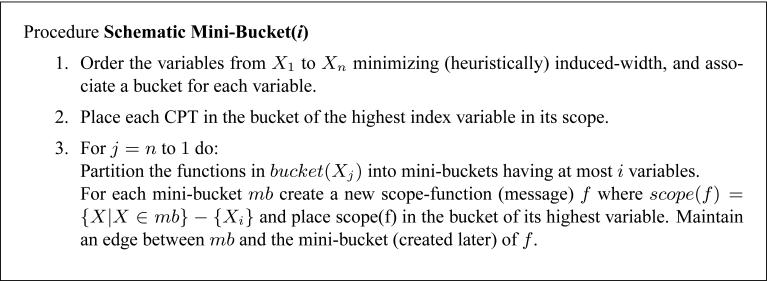

We can consider two classes of algorithms. One class is partition-based. It starts from a given tree-decomposition and then partitions the clusters until the decomposition has clusters bounded by i. An alternative approach is grouping-based. It starts from a minimal dual-graph-based join-graph decomposition (where each cluster contains a single CPT) and groups clusters into larger clusters as long as the resulting clusters do not exceed the given bound. In both methods one should attempt to reduce the external width of the generated graph-decomposition. Our partition-based approach, inspired by the mini-bucket idea (Dechter & Rish, 1997), is as follows.

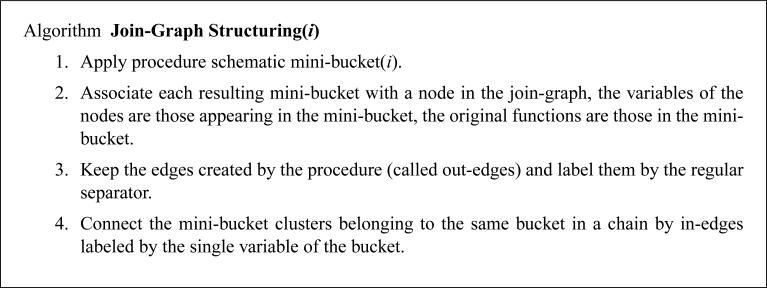

Given a bound i, algorithm Join-Graph Structuring(i) applies the procedure Schematic Mini-Bucket(i), described in Figure 17. The procedure only traces the scopes of the functions that would be generated by the full mini-bucket procedure, avoiding actual computation. The procedure ends with a collection of mini-bucket trees, each rooted in the mini-bucket of the first variable. Each of these trees is minimally edge-labeled. Then, in-edges, each labeled with a single variable, are introduced between mini-buckets of the same bucket, and they are added only to obtain the running intersection property between branches of these trees.

Figure 17.

Procedure Schematic Mini-Bucket(i).
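A scope-only trace in the spirit of Schematic Mini-Bucket(i) and Join-Graph Structuring(i) can be sketched as below. This is our own approximation, not a transcription of Figure 17: the greedy first-fit partitioning of each bucket is an assumed heuristic, a single function whose scope already exceeds i simply gets a mini-bucket of its own, and all mini-buckets of a bucket are chained by in-edges labeled with the bucket variable, as in case 1a of the proof of Proposition 4. The function name and the node naming (bucket variable, index) are hypothetical.

```python
def schematic_mini_bucket(order, scopes, i):
    """Trace only the scopes produced by mini-bucket(i) along `order`.

    Returns (nodes, edges): nodes maps (bucket_var, k) to a mini-bucket scope,
    and edges maps (u, v) to the label of the edge between them.
    """
    pos = {x: k for k, x in enumerate(order)}
    buckets = {x: [] for x in order}
    for s in scopes:  # each function goes to the bucket of its latest variable
        s = frozenset(s)
        buckets[max(s, key=pos.get)].append((s, None))
    nodes, edges = {}, {}
    for x in reversed(order):  # process buckets from last to first
        minis = []  # each entry: [scope, list of source mini-buckets]
        for s, src in buckets[x]:  # greedy first-fit partitioning (assumed)
            for mb in minis:
                if len(mb[0] | s) <= i:
                    mb[0] |= s
                    mb[1].append(src)
                    break
            else:  # no existing mini-bucket can absorb s: open a new one
                minis.append([set(s), [src]])
        for k, (scope, sources) in enumerate(minis):
            node = (x, k)
            nodes[node] = frozenset(scope)
            for src in sources:  # tree edge labeled with the message scope
                if src is not None:
                    edges[(src, node)] = nodes[src] - {src[0]}
            if k > 0:  # in-edge chaining the mini-buckets of bucket x
                edges[((x, k - 1), node)] = frozenset({x})
            out = frozenset(scope) - {x}  # scope of the generated function
            if out:
                buckets[max(out, key=pos.get)].append((out, node))
    return nodes, edges


# Hypothetical problem: bucket F must split into (F, C, D) and (B, F), as in Example 6
order = ["A", "B", "C", "D", "F"]
scopes = [{"A"}, {"A", "B"}, {"A", "C"}, {"B", "D"}, {"C", "D", "F"}, {"B", "F"}]
nodes, edges = schematic_mini_bucket(order, scopes, i=3)
for node, scope in nodes.items():
    print(node, sorted(scope))
```

In this toy run only bucket F is partitioned; its two mini-buckets send scopes {C, D} and {B} to the buckets of D and B, and they are joined by an in-edge labeled F.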

Proposition 4 Algorithm Join-Graph Structuring(i) generates a minimal edge-labeled join-graph decomposition having bound i.

Proof: The construction of the join-graph specifies the vertices and edges of the join-graph, as well as the variable and function labels of each vertex. We need to demonstrate that 1) the connectedness property holds, and 2) that edge-labels are minimal.

The connectedness property specifies that for any two vertices u and v, if u and v contain variable X, then there must be a path u, w1,. . ., wm, v between u and v such that every vertex on this path contains variable X. There are two cases here: 1) u and v correspond to two mini-buckets in the same bucket, or 2) u and v correspond to mini-buckets in different buckets. In case 1 we have two further cases: 1a) variable X is being eliminated in this bucket, or 1b) variable X is not eliminated in this bucket. In case 1a, each mini-bucket must contain X and all mini-buckets of the bucket are connected as a chain, so the connectedness property holds. In case 1b, vertices u and v connect to their respective parents, which in turn connect to their parents, and so on, until reaching the bucket in the scheme where variable X is eliminated. All nodes along this chain contain variable X, so the connectedness property holds. Case 2 resolves like case 1b.

To show that edge labels are minimal, we need to prove that there are no cycles with respect to edge labels. If there is a cycle with respect to variable X, then it must involve at least one in-edge (edge connecting two mini-buckets in the same bucket). This means variable X must be the variable being eliminated in the bucket of this in-edge. That means variable X is not contained in any of the parents of the mini-buckets of this bucket. Therefore, in order for the cycle to exist, another in-edge down the bucket-tree from this bucket must contain X. However, this is impossible as this would imply that variable X is eliminated twice. □

Example 6 Figure 18a shows the trace of procedure Schematic Mini-Bucket(3) applied to the problem described in Figure 2a. The decomposition in Figure 18b is created by algorithm Join-Graph Structuring. The only cluster partitioned is that of F, into two scopes (FCD) and (BF), connected by an in-edge labeled with F.

Figure 18.

Join-graph decompositions.

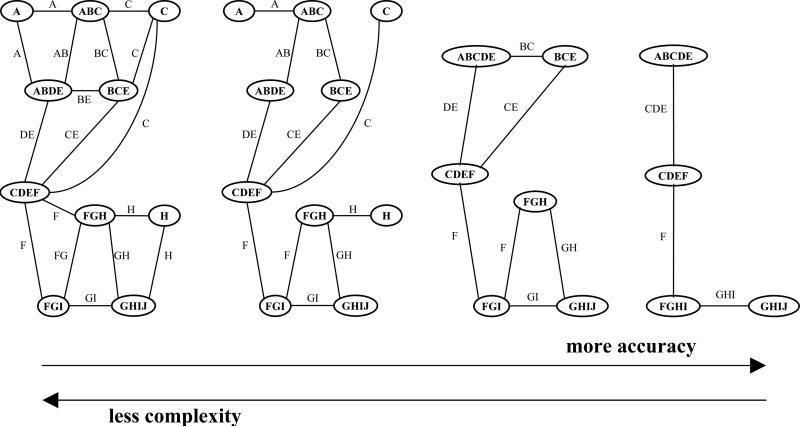

A range of edge-labeled join-graphs is shown in Figure 19. On the left side we have a graph with smaller clusters, but more cycles. This is the type of graph IBP works on. On the right side we have a tree decomposition, which has no cycles at the expense of bigger clusters. In between, there could be a number of join-graphs where maximum cluster size can be traded for number of cycles. Intuitively, the graphs on the left present less complexity for join-graph algorithms because the cluster size is smaller, but they are also likely to be less accurate. The graphs on the right side are computationally more complex, because of the larger cluster size, but they are likely to be more accurate.

Figure 19.

Join-graphs.

4.4 The Inference Power of IJGP

The question we address in this subsection is why propagating the messages iteratively should help. Why is IJGP upon convergence superior to IJGP with one iteration and superior to MC? One clue is provided by deterministic constraint networks, which can be viewed as “extreme probabilistic networks”. It is known that constraint propagation algorithms, whose messages are analogous to those sent by belief propagation, are guaranteed to converge and are guaranteed to improve with iteration. The propagation scheme of IJGP works similarly to constraint propagation relative to the flat network abstraction of the probability distribution (where all non-zero entries are normalized to a positive constant), and propagation is guaranteed to be more accurate, at least for that abstraction.

In the following we will shed some light on IJGP's behavior by making connections with the well-known concept of arc-consistency from constraint networks (Dechter, 2003). We show that: (a) if a variable-value pair is assessed as having a zero-belief, it remains as zero-belief in subsequent iterations; (b) any variable-value zero-beliefs computed by IJGP are correct; (c) in terms of zero/non-zero beliefs, IJGP converges in finite time. We have also empirically investigated the hypothesis that if a variable-value pair is assessed by IBP or IJGP as having a positive but very close to zero belief, then it is very likely to be correct. Although the experimental results shown in this paper do not contradict this hypothesis, some examples in more recent experiments by Dechter, Bidyuk, Mateescu, and Rollon (2010) invalidate it.

4.4.1 IJGP and Arc-Consistency

For any belief network we can define a constraint network that captures the assignments having strictly positive probability. We will show a correspondence between IJGP applied to the belief network and an arc-consistency algorithm applied to the constraint network. Since arc-consistency algorithms are well understood, this correspondence not only proves the target claims, but may provide additional insight into the behavior of IJGP. It justifies the iterative application of belief propagation, and it also illuminates its “distance” from being complete.

Definition 13 (constraint satisfaction problem) A Constraint Satisfaction Problem (CSP) is a triple 〈X, D, C〉, where X = {X1,. . ., Xn} is a set of variables associated with a set of discrete-valued domains D = {D1,. . ., Dn} and a set of constraints C = {C1,. . ., Cm}. Each constraint Ci is a pair 〈Si, Ri〉 where Ri is a relation Ri ⊆ DSi defined on a subset of variables Si ⊆ X and DSi is the Cartesian product of the domains of variables Si. The relation Ri denotes all compatible tuples of DSi allowed by the constraint. A projection operator π creates a new relation, $\pi_{S_j}(R_i) = \{x_{S_j} \mid x \in R_i\}$, where Sj ⊆ Si. Constraints can be combined with the join operator ⋈, resulting in a new relation, $R_i \bowtie R_j = \{x \in D_{S_i \cup S_j} \mid x_{S_i} \in R_i,\ x_{S_j} \in R_j\}$. A solution is an assignment of values to all the variables x = (x1,. . ., xn), xi ∈ Di, such that ∀Ci ∈ C, xSi ∈ Ri. The constraint network represents its set of solutions, namely $\bowtie_{i=1}^{m} R_i$.

Given a belief network, we define its flattening into a constraint network, called the flat network, where all the zero entries in a probability table are removed from the corresponding relation. The flat network is defined over the same set of variables and has the same set of domain values as the belief network.

Definition 14 (flat network) Given a Bayesian network, the flat network is a constraint network where the set of variables is X and, for every Xi ∈ X and its CPT P(Xi | pa(Xi)), we define a constraint RFi over the family Fi = {Xi} ∪ pa(Xi) of Xi as follows: for every assignment x = (xi, xpa(Xi)) to Fi, (xi, xpa(Xi)) ∈ RFi iff P(xi | xpa(Xi)) > 0.

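Theorem 6 Given a belief network over variables X = {X1,. . ., Xn}, for any tuple x = (x1,. . ., xn), P(x) > 0 if and only if x is a solution of the flat constraint network.

Proof: By the chain rule of Bayesian networks, $P(x) = \prod_{i=1}^{n} P(x_i \mid x_{pa(X_i)})$, so P(x) > 0 iff every factor is positive, iff $(x_i, x_{pa(X_i)}) \in R_{F_i}$ for every i (Definition 14), iff x satisfies every constraint of the flat network, i.e., x is one of its solutions. □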
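Definition 14 and Theorem 6 can be checked by brute force on a toy network. In this hedged Python sketch the CPT encoding is our own assumption (a dictionary from each variable to its parent tuple and a table keyed by the child value followed by the parent values), the two-variable network with the deterministic zero P(B=1 | A=0) = 0 is hypothetical, and the helper names are illustrative. The script flattens the CPTs, then compares the positive-probability assignments with the solutions of the flat network.

```python
from itertools import product


def flatten(cpts):
    """Keep exactly the family tuples whose CPT entry is positive (Definition 14)."""
    return {x: (pa, {t for t, p in table.items() if p > 0})
            for x, (pa, table) in cpts.items()}


def joint(cpts, x):
    """Chain rule: product of the CPT entries selected by the full assignment x."""
    prob = 1.0
    for var, (pa, table) in cpts.items():
        prob *= table[(x[var],) + tuple(x[u] for u in pa)]
    return prob


def is_solution(flat, x):
    """x satisfies every relation R_Fi of the flat network."""
    return all((x[var],) + tuple(x[u] for u in pa) in rel
               for var, (pa, rel) in flat.items())


# Hypothetical network A -> B; table keys are (value of child, values of parents)
cpts = {
    "A": ((), {(0,): 0.5, (1,): 0.5}),
    "B": (("A",), {(0, 0): 1.0, (1, 0): 0.0, (0, 1): 0.3, (1, 1): 0.7}),
}
flat = flatten(cpts)
variables = ["A", "B"]
positive, solutions = set(), set()
for values in product([0, 1], repeat=len(variables)):
    x = dict(zip(variables, values))
    if joint(cpts, x) > 0:
        positive.add(values)
    if is_solution(flat, x):
        solutions.add(values)
print(positive == solutions)  # True
```

Only the assignment A = 0, B = 1 is excluded, both because its probability is zero and because the flat relation for B's family no longer contains the tuple (1, 0).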

Constraint propagation is a class of polynomial time algorithms that are at the center of constraint processing techniques. They were investigated extensively in the past three decades and the most well known versions are arc-, path-, and i-consistency (Dechter, 1992, 2003).

Definition 15 (arc-consistency) (Mackworth, 1977) Given a binary constraint network (X, D, C), the network is arc-consistent iff for every binary constraint Rij ∈ C, every value v ∈ Di has a value u ∈ Dj s.t. (v, u) ∈ Rij.

Note that arc-consistency is defined for binary networks, namely networks whose relations involve at most two variables. When a binary constraint network is not arc-consistent, there are algorithms that can process it and enforce arc-consistency. These algorithms remove values that violate arc-consistency from the domains of the variables until an arc-consistent network is generated. Several arc-consistency algorithms with improved performance exist; however, we will consider a non-optimal distributed version, which we call distributed arc-consistency.

Definition 16 (distributed arc-consistency algorithm) The algorithm distributed arc-consistency is a message-passing algorithm over a constraint network. Each node is a variable and maintains a current set of viable values Di. Let ne(i) be the set of neighbors of Xi in the constraint graph. Every node Xi sends a message to every node Xj ∈ ne(i), consisting of the values in Xj's domain that are consistent with the current Di relative to the constraint Rji that they share. Namely, the message that Xi sends to Xj, denoted by $h_{(i,j)}$, is:

$$h_{(i,j)} = \pi_{X_j}\left(R_{ji} \bowtie D_i\right) \tag{1}$$

and in addition node i computes:

$$D_i \leftarrow D_i \cap \left(\bigcap_{k \in ne(i)} h_{(k,i)}\right) \tag{2}$$

Clearly the algorithm can be synchronized into iterations, where in each iteration every node computes its current domain based on all the messages received so far from its neighbors (Eq. 2), and sends a new message to each neighbor (Eq. 1). Alternatively, Equations 1 and 2 can be combined. The message Xi sends to Xj is:

$$h_{(i,j)} = \pi_{X_j}\left(R_{ji} \bowtie \left(D_i \cap \bigcap_{k \in ne(i)} h_{(k,i)}\right)\right) \tag{3}$$
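The synchronized form of distributed arc-consistency can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the paper's code: every binary constraint is stored in both orientations as a hypothetical dictionary `relations[(i, j)]` of allowed (value of Xi, value of Xj) pairs, all nodes update simultaneously in each round, and the loop stops once neither domains nor messages change.

```python
def distributed_arc_consistency(domains, relations):
    """Synchronous distributed arc-consistency.

    domains   -- {variable: iterable of values}
    relations -- {(i, j): set of allowed (value_i, value_j) pairs}; both (i, j) and (j, i) present
    """
    D = {x: set(vals) for x, vals in domains.items()}
    ne = {x: set() for x in D}
    for i, j in relations:
        ne[i].add(j)
    h = {(i, j): set(D[j]) for (i, j) in relations}  # initial messages prune nothing
    while True:
        # Eq. (2): intersect each domain with every incoming message
        new_D = {i: D[i].intersection(*(h[(k, i)] for k in ne[i])) for i in D}
        # Eq. (1): send X_j the values supported by the current D_i through the shared constraint
        new_h = {(i, j): {b for a, b in relations[(i, j)] if a in new_D[i]}
                 for (i, j) in relations}
        if new_D == D and new_h == h:
            return D
        D, h = new_D, new_h


values = {1, 2, 3}
domains = {"X": values, "Y": values, "Z": values}
relations = {}
for i, j in [("X", "Y"), ("Y", "Z")]:  # constraints X < Y and Y < Z
    less = {(a, b) for a in values for b in values if a < b}
    relations[(i, j)] = less
    relations[(j, i)] = {(b, a) for a, b in less}
result = distributed_arc_consistency(domains, relations)
print(result)  # {'X': {1}, 'Y': {2}, 'Z': {3}}
```

Domains only shrink from round to round, so on finite domains the loop must reach a fixed point; in this example it prunes each domain to a single value after a few rounds.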

Next we will define a join-graph decomposition for the flat constraint network so that we can establish a correspondence between the join-graph decomposition of a Bayesian network and the join-graph decomposition of its flat network. Note that for constraint networks, the edge labeling θ can be ignored.

Definition 17 (join-graph decomposition of the flat network) Given a join-graph decomposition D = 〈JG, χ, ψ, θ〉 of a Bayesian network, the join-graph decomposition Dflat = 〈JG, χ, ψflat〉 of the flat constraint network has the same underlying graph structure JG = (V, E) as D, the same variable-labeling of the clusters χ, and the mapping ψflat maps each cluster to relations corresponding to CPTs, namely Ri ∈ ψflat(v) iff CPT pi ∈ ψ(v).

The distributed arc-consistency algorithm of Definition 16 can be applied to the join-graph decomposition of the flat network. In this case, the nodes that exchange messages are the clusters (namely the elements of the set V of JG). The domain of a cluster in V is the set of tuples of the join of the original relations in the cluster (namely the domain of cluster u is $\bowtie_{R \in \psi_{flat}(u)} R$). The constraints are binary and involve clusters of V that are neighbors. For two clusters u and v, their corresponding values tu and tv (which are tuples representing full assignments to the variables in the cluster) belong to the relation Ru,v (i.e., (tu, tv) ∈ Ru,v) if their projections over the separator (or labeling θ) between u and v are identical, namely πθ((u,v))tu = πθ((u,v))tv.

We define below the algorithm relational distributed arc-consistency (RDAC), that applies distributed arc-consistency to any join-graph decomposition of a constraint network. We call it relational to emphasize that the nodes exchanging messages are in fact relations over the original problem variables, rather than simple variables as is the case for arc-consistency algorithms.

Definition 18 (relational distributed arc-consistency algorithm: RDAC over a join-graph) Given a join-graph decomposition of a constraint network, let Ri and Rj be the relations of two clusters (Ri and Rj are the joins of the respective constraints in each cluster), having the scopes Si and Sj, such that $S_i \cap S_j \neq \emptyset$. The message Ri sends to Rj, denoted h(i,j), is defined by:

$$h_{(i,j)} \leftarrow \pi_{S_i \cap S_j}\left(R_i\right) \tag{4}$$

where $ne(i) = \{j \mid S_i \cap S_j \neq \emptyset\}$ is the set of relations (clusters) that share a variable with Ri. Each cluster updates its current relation according to:

$$R_i \leftarrow R_i \bowtie \left(\bowtie_{k \in ne(i)} h_{(k,i)}\right) \tag{5}$$

Algorithm RDAC iterates until there is no change.

Equations 4 and 5 can be combined, just like in Equation 3. The message that Ri sends to Rj becomes:

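$$h_{(i,j)} \leftarrow \pi_{S_i \cap S_j}\left(R_i \bowtie \left(\bowtie_{k \in ne(i)} h_{(k,i)}\right)\right) \tag{6}$$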
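A minimal Python sketch of RDAC follows, alternating Eq. 4 and Eq. 5 synchronously until no cluster relation changes. It assumes a relation is stored as a pair (scope, set of tuples) and, as in Definition 18, that ne(i) contains every cluster sharing a variable with cluster i; the helper names `join`, `project` and `rdac` are hypothetical, and the joins are brute-force nested loops chosen for clarity rather than efficiency.

```python
from functools import reduce
from typing import Dict, Set, Tuple

# A relation is a pair (scope, tuples): scope is a tuple of variable names and
# tuples is a set of value tuples ordered like the scope.
Relation = Tuple[Tuple[str, ...], Set[tuple]]


def join(r: Relation, s: Relation) -> Relation:
    """Natural join of two relations (brute-force nested loops)."""
    (sr, tr), (ss, ts) = r, s
    scope = sr + tuple(v for v in ss if v not in sr)
    common = [v for v in ss if v in sr]
    out = set()
    for a in tr:
        row = dict(zip(sr, a))
        for b in ts:
            other = dict(zip(ss, b))
            if all(row[v] == other[v] for v in common):
                merged = {**row, **other}
                out.add(tuple(merged[v] for v in scope))
    return scope, out


def project(r: Relation, onto: Set[str]) -> Relation:
    """Projection of r onto the variables in `onto` (kept in r's scope order)."""
    sr, tr = r
    scope = tuple(v for v in sr if v in onto)
    idx = [sr.index(v) for v in scope]
    return scope, {tuple(t[k] for k in idx) for t in tr}


def rdac(clusters: Dict[str, Relation]) -> Dict[str, Relation]:
    """Synchronous RDAC: Eq. 4 (messages), then Eq. 5 (updates), until no change."""
    current = dict(clusters)
    names = list(current)
    scopes = {i: set(current[i][0]) for i in names}
    # ne(i): clusters whose scope shares at least one variable with cluster i
    ne = {i: [j for j in names if j != i and scopes[i] & scopes[j]] for i in names}
    while True:
        # Eq. 4: h_(i,j) = projection of R_i onto S_i ∩ S_j
        msgs = {(i, j): project(current[i], scopes[i] & scopes[j])
                for i in names for j in ne[i]}
        # Eq. 5: R_i <- R_i joined with all incoming messages (the scope is unchanged)
        new = {i: reduce(join, (msgs[(k, i)] for k in ne[i]), current[i])
               for i in names}
        if all(new[i][1] == current[i][1] for i in names):
            return current
        current = new
```

The relation of a cluster that holds several constraints can be formed first with `reduce(join, constraints)`. Because every message's scope is contained in the receiving cluster's scope, Eq. 5 only removes tuples, which is what guarantees termination.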

To establish the correspondence with IJGP, we define the algorithm IJGP-RDAC that applies RDAC in the same order of computation (schedule of processing) as IJGP.

Definition 19 (IJGP-RDAC algorithm) Given a Bayesian network, let Dflat = 〈JG, χ, ψflat, θ〉 be any join-graph decomposition of its flat network. The algorithm IJGP-RDAC is applied to the decomposition Dflat, and can be described as IJGP applied to the corresponding decomposition D of the Bayesian network, with the following modifications:

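- Instead of ∏, we use ⋈.
- Instead of ∑, we use π.
- At the end, we update the domains of variables by:

$$D_i \leftarrow D_i \cap \pi_{X_i}\left(\left(\bowtie_{v \in ne(u)} h_{(v,u)}\right) \bowtie \left(\bowtie_{R \in \psi_{flat}(u)} R\right)\right) \tag{7}$$

where u is the cluster containing Xi.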
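The substitution of ⋈ for ∏ and π for ∑ can be illustrated on a single cluster. The Python sketch below computes one message both ways by brute-force enumeration of the cluster's assignments: the sum-product message of IJGP, and the join-project message of IJGP-RDAC over the flat relations (the tuples where each function is nonzero). It is an illustration under stated assumptions, not the authors' code: incoming messages are simply treated as extra functions, tables are assumed to list every tuple of their scope, and the names `ijgp_message` and `rdac_message` are hypothetical.

```python
from itertools import product
from typing import Dict, List, Set, Tuple

# A function (CPT or incoming message) is a pair (scope, table) where table maps
# every value tuple of the scope to a nonnegative number.
Function = Tuple[Tuple[str, ...], Dict[tuple, float]]


def _assignments(functions, domains):
    """Enumerate full assignments (as dicts) to the variables of the cluster."""
    variables = sorted({v for scope, _ in functions for v in scope})
    for values in product(*(domains[v] for v in variables)):
        yield dict(zip(variables, values))


def ijgp_message(functions: List[Function], domains, sep) -> Dict[tuple, float]:
    """h_(u,v)(x) = sum over elim(u,v) of the product of the functions (sum-product)."""
    msg: Dict[tuple, float] = {}
    for full in _assignments(functions, domains):
        val = 1.0
        for scope, table in functions:
            val *= table[tuple(full[v] for v in scope)]
        key = tuple(full[v] for v in sep)
        msg[key] = msg.get(key, 0.0) + val
    return msg


def rdac_message(functions: List[Function], domains, sep) -> Set[tuple]:
    """Same computation with join instead of product and projection instead of sum."""
    # R_f: the flat relation of f, i.e. the tuples on which f is nonzero
    relations = [(scope, {t for t, p in table.items() if p > 0}) for scope, table in functions]
    result = set()
    for full in _assignments(functions, domains):
        # a full tuple belongs to the join iff each of its projections lies in R_f
        if all(tuple(full[v] for v in scope) in rel for scope, rel in relations):
            result.add(tuple(full[v] for v in sep))
    return result
```

On any input, the set of separator tuples with a nonzero IJGP message coincides with the IJGP-RDAC message, which is the correspondence stated in Proposition 7.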

Note that in algorithm IJGP-RDAC, we could first merge all constraints in each cluster u into a single constraint R. From our construction, IJGP-RDAC enforces arc-consistency over the join-graph decomposition of the flat network. When the join-graph Dflat is a join-tree, IJGP-RDAC solves the problem, namely it finds all the solutions of the constraint network.

Proposition 5 Given the join-graph decomposition Dflat = 〈JG, χ, ψflat, θ〉, JG = (V, E), of the flat constraint network corresponding to a given join-graph decomposition D of a Bayesian network, the algorithm IJGP-RDAC applied to Dflat enforces arc-consistency over the join-graph Dflat.

Proof: IJGP-RDAC applied to the join-graph decomposition Dflat = 〈JG, χ, ψflat, θ〉, JG = (V, E), is equivalent to applying RDAC of Definition 18 to a constraint network that has the vertices V as its variables and $\{\bowtie_{R \in \psi_{flat}(u)} R \mid u \in V\}$ as its relations. □

Following the properties of convergence of arc-consistency, we can show that:

Proposition 6 Algorithm IJGP-RDAC converges in O(m · r) iterations, where m is the number of edges in the join-graph and r is the maximum size of the domain Dsep(u,v) of a separator between two clusters (namely, the maximum number of tuples a separator relation can contain).

Proof: This follows from the fact that messages (which are relations) between clusters in IJGP-RDAC change monotonically, as tuples are only successively removed from the relations on separators. Every iteration that does not terminate the algorithm removes at least one tuple from some separator relation. Since the size of each relation on a separator is bounded by r and there are m edges, no more than O(m · r) iterations will be needed. □

In the following we will establish an equivalence between IJGP and IJGP-RDAC in terms of zero probabilities.

Proposition 7 When IJGP and IJGP-RDAC are applied in the same order of computation, the messages computed by IJGP are identical to those computed by IJGP-RDAC in terms of zero / nonzero probabilities. That is, h(u,v)(x) ≠ 0 in IJGP iff x ∈ h(u,v) in IJGP-RDAC.

Proof: The proof is by induction. The base case is trivially true since messages h in IJGP are initialized to a uniform distribution and messages h in IJGP-RDAC are initialized to complete relations.

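The induction step. Suppose that $h^{\text{IJGP}}_{(u,v)}$ is the message sent from u to v by IJGP. We will show that if $h^{\text{IJGP}}_{(u,v)}(x) \neq 0$, then $x \in h^{\text{IJGP-RDAC}}_{(u,v)}$, where $h^{\text{IJGP-RDAC}}_{(u,v)}$ is the message sent by IJGP-RDAC from u to v. Assume that the claim holds for all messages received by u from its neighbors. Let f ∈ clusterv(u) in IJGP and Rf be the corresponding relation in IJGP-RDAC, and t be an assignment of values to variables in elim(u, v). Since all the functions are nonnegative, a sum of their products is nonzero iff at least one of its terms is nonzero; and f(x,t) ≠ 0 iff the corresponding tuple belongs to Rf, by the definition of the flat network for CPTs and by the induction hypothesis for incoming messages. We have:

$$\begin{aligned}
h^{\text{IJGP}}_{(u,v)}(x) \neq 0
&\Leftrightarrow \sum_{elim(u,v)} \prod_{f \in cluster_v(u)} f(x,t) \neq 0 \\
&\Leftrightarrow \exists t,\ \prod_{f \in cluster_v(u)} f(x,t) \neq 0 \\
&\Leftrightarrow \exists t,\ \forall f,\ f(x,t) \neq 0 \\
&\Leftrightarrow \exists t,\ \forall f,\ \pi_{scope(R_f)}(x,t) \in R_f \\
&\Leftrightarrow \exists t,\ (x,t) \in \bowtie_{f} R_f \\
&\Leftrightarrow x \in \pi_{sep(u,v)}\Big(\bowtie_{f} R_f\Big) = h^{\text{IJGP-RDAC}}_{(u,v)}. \qquad \square
\end{aligned}$$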

Next we will show that IJGP computing marginal probability P(Xi = xi) = 0 is equivalent to IJGP-RDAC removing xi from the domain of variable Xi.

Proposition 8 IJGP computes P(Xi = xi) = 0 iff IJGP-RDAC decides that xi ∉ Di.

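Proof: According to Proposition 7, messages computed by IJGP and IJGP-RDAC are identical in terms of zero probabilities. Let f ∈ cluster(u) in IJGP and Rf be the corresponding relation in IJGP-RDAC, and t be an assignment of values to variables in χ(u)\Xi. We will show that when IJGP computes P(Xi = xi) = 0 (upon convergence), then IJGP-RDAC computes xi ∉ Di. Since normalization does not change which values are zero, we have:

$$\begin{aligned}
P(X_i = x_i) = 0
&\Leftrightarrow \sum_{\chi(u) \setminus X_i} \prod_{f \in cluster(u)} f(x_i,t) = 0 \\
&\Leftrightarrow \forall t,\ \prod_{f \in cluster(u)} f(x_i,t) = 0 \\
&\Leftrightarrow \forall t,\ \exists f,\ f(x_i,t) = 0 \\
&\Leftrightarrow \forall t,\ \exists R_f,\ \pi_{scope(R_f)}(x_i,t) \notin R_f \\
&\Leftrightarrow \forall t,\ (x_i,t) \notin \bowtie_{f} R_f \\
&\Leftrightarrow x_i \notin \pi_{X_i}\Big(\bowtie_{f} R_f\Big) \\
&\Leftrightarrow x_i \notin D_i.
\end{aligned}$$

Since arc-consistency is sound, so is the decision of zero probabilities. □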

Next we will show that P(Xi = xi) = 0 computed by IJGP is sound.

Theorem 7 Whenever IJGP finds P(Xi = xi) = 0, the probability P(Xi = xi | e) expressed by the Bayesian network conditioned on the evidence e is 0 as well.

Proof: According to Proposition 8, whenever IJGP finds P(Xi = xi) = 0, the value xi is removed from the domain Di by IJGP-RDAC; therefore the value xi is a no-good of the flat network, and from Theorem 6 it follows that P(Xi = xi | e) = 0 in the Bayesian network. □

In the following we will show that the time it takes IJGP to find all P(Xi = xi) = 0 is bounded.

Proposition 9 IJGP finds all P(Xi = xi) = 0 in finite time, that is, there exists a number k, such that no P(Xi = xi) = 0 will be found after k iterations.

Proof: This follows from the fact that the number of iterations it takes for IJGP to compute P(Xi = xi) = 0 is exactly the same number of iterations IJGP-RDAC takes to remove xi from the domain Di (Proposition 7 and Proposition 8), and the fact that the IJGP-RDAC runtime is bounded (Proposition 6). □

Previous results also imply that IJGP is monotonic with respect to zeros.

Proposition 10 Whenever IJGP finds P(Xi = xi) = 0, it stays 0 during all subsequent iterations.

Proof: Since we know that relations in IJGP-RDAC are monotonically decreasing as the algorithm progresses, it follows from the equivalence of IJGP-RDAC and IJGP (Proposition 7) that IJGP is monotonic with respect to zeros. □

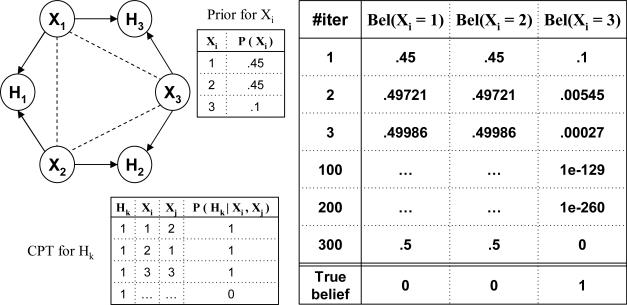

4.4.2 A Finite Precision Problem

On finite precision machines there is the danger that an underflow can be interpreted as a zero value. We provide here a warning that an implementation of belief propagation should not allow the creation of zero values by underflow. We show an example in Figure 20 where IBP's messages converge in the limit (i.e., in an infinite number of iterations), but they do not stabilize in any finite number of iterations. If all the nodes Hk are set to value 1, the belief for any of the Xi variables as a function of iteration is given in the table in Figure 20. After about 300 iterations, the finite precision of our computer can no longer represent the value of Bel(Xi = 3), which therefore appears to be zero, yielding the final updated belief (0.5, 0.5, 0), when in fact the true updated belief should be (0, 0, 1). Notice that (0.5, 0.5, 0) cannot be regarded as a legitimate fixed point for IBP. Namely, if we initialized IBP with the values (0.5, 0.5, 0), the algorithm would maintain them, appearing to have a fixed point; however, initializing IBP with zero values cannot be expected to yield correct results. When we initialize with zeros, we forcibly introduce determinism in the model, and IBP will always maintain it afterwards.

Figure 20. Example of a finite precision problem.

However, this example does not contradict our theory because, mathematically, Bel(Xi = 3) never becomes a true zero, and IBP never reaches a quiescent state. The example shows that a belief that is close to zero can be arbitrarily inaccurate. In this case, the inaccuracy seems to be due to the initial prior beliefs, which are very different from the posterior ones.
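One common way to honor the warning above is to store beliefs (or messages) as logarithms and normalize with the log-sum-exp trick. The Python sketch below does not reproduce the network of Figure 20; it assumes a hypothetical update in which Bel(X = 3) shrinks by a fixed factor relative to the other two values at every iteration, and compares plain normalization with log-space normalization. The names `normalize`, `normalize_log` and `run` are illustrative.

```python
import math
from typing import List


def normalize(values: List[float]) -> List[float]:
    total = sum(values)
    return [v / total for v in values]


def normalize_log(log_values: List[float]) -> List[float]:
    """Normalize beliefs stored as logarithms (log-sum-exp), so they never become exact zeros."""
    m = max(log_values)
    log_z = m + math.log(sum(math.exp(lv - m) for lv in log_values))
    return [lv - log_z for lv in log_values]


def run(iterations: int, shrink: float = 1e-3):
    """Hypothetical update in which Bel(X = 3) shrinks by `shrink` relative to the others."""
    lin = [1.0, 1.0, 1.0]   # beliefs in linear space
    logb = [0.0, 0.0, 0.0]  # the same beliefs in log space
    for _ in range(iterations):
        lin = normalize([lin[0], lin[1], lin[2] * shrink])
        logb = normalize_log([logb[0], logb[1], logb[2] + math.log(shrink)])
    return lin, logb
```

With `run(200)`, the linear-space value of Bel(X = 3) underflows to an exact 0.0 after roughly a hundred iterations and would then be indistinguishable from a zero inferred by IBP, whereas the log-space value remains a finite, very negative number.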

4.4.3 Accuracy of IBP Across Belief Distribution

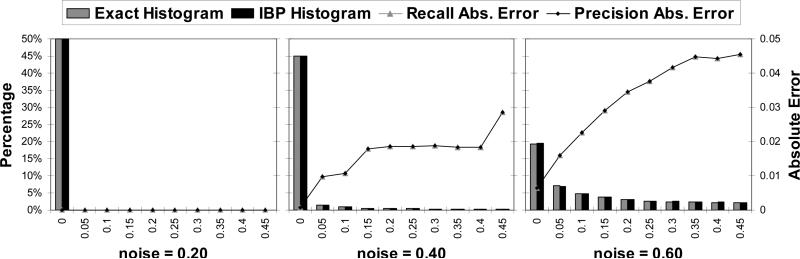

We present an empirical evaluation of the accuracy of IBP's predictions over the range of belief values from 0 to 1. These results also extend to IJGP. In the previous section, we proved that zero values inferred by IBP are correct, and we wanted to test the hypothesis that this property extends to small beliefs (namely, beliefs that are very close to zero). That is, if IBP infers a posterior belief close to zero, then it is likely to be correct. The results presented in this paper seem to support the hypothesis; however, new experiments by Dechter et al. (2010) show that it is not true in general. We do not yet have a good characterization of the cases in which the hypothesis is confirmed.

To test this hypothesis, we computed the absolute error of IBP per intervals of [0, 1]. For a given interval [a, b], where 0 ≤ a < b ≤ 1, we use measures inspired by information retrieval: Recall Absolute Error and Precision Absolute Error.

Recall is the absolute error averaged over all the exact posterior beliefs that fall into the interval [a, b]. For Precision, the average is taken over all the approximate posterior belief values computed by IBP to be in the interval [a, b]. Intuitively, Recall([a,b]) indicates how far the belief computed by IBP is from the exact, when the exact is in [a, b]; Precision([a,b]) indicates how far the exact is from IBP's prediction, when the value computed by IBP is in [a, b].
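The two measures can be sketched as follows. This is a minimal Python illustration, assuming the exact and IBP beliefs are given as two aligned lists (one entry per variable value and instance) and that both interval endpoints are inclusive; the function name is hypothetical, and an interval that contains no beliefs yields `None` for the corresponding measure.

```python
from typing import List, Optional, Sequence, Tuple


def _mean(xs: List[float]) -> Optional[float]:
    return sum(xs) / len(xs) if xs else None


def recall_precision_abs_error(exact: Sequence[float], approx: Sequence[float],
                               a: float, b: float) -> Tuple[Optional[float], Optional[float]]:
    """Recall: mean |exact - approx| over entries whose exact belief is in [a, b].
    Precision: the same mean over entries whose IBP belief is in [a, b].
    Returns None for a measure whose interval contains no entries."""
    pairs = list(zip(exact, approx))
    recall = _mean([abs(e - q) for e, q in pairs if a <= e <= b])
    precision = _mean([abs(e - q) for e, q in pairs if a <= q <= b])
    return recall, precision
```

The two measures differ only in which of the two beliefs selects the entries that are averaged, which is why an interval can have a small Recall but a large Precision error, or vice versa.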

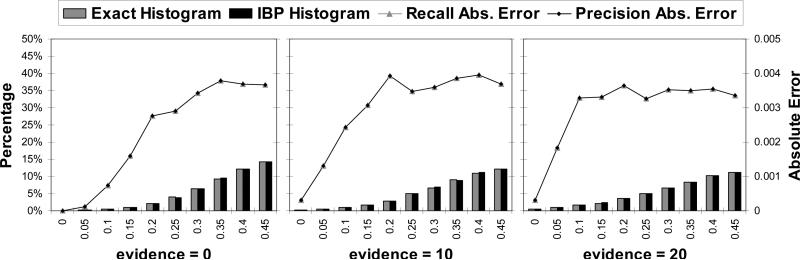

Our experiments show that the two measures are strongly correlated. We also show the histograms of the belief distribution for each interval, for the exact beliefs and for IBP, which are also strongly correlated. The results are given in Figures 21 and 22. The left Y axis corresponds to the histograms (the bars); the right Y axis corresponds to the absolute error (the lines).

Figure 21. Coding, N=200, 1000 instances, w*=15.

Figure 22. 10×10 grids, 100 instances, w*=15.

We present results for two classes of problems: coding networks and grid networks. All problems have binary variables, so the graphs are symmetric about 0.5 and we only show the interval [0, 0.5]. The number of variables, the number of iterations and the induced width w* are reported for each graph.

Coding networks

IBP is well known for its impressive performance on coding networks. We tested on linear block codes, with 50 nodes per layer and 3 parent nodes. Figure 21 shows the results for three different values of channel noise: 0.2, 0.4 and 0.6. For noise 0.2, all the beliefs computed by IBP are extreme. The Recall and Precision are very small, of the order of $10^{-11}$. So, in this case, all the beliefs are very small (ε-small) and IBP is able to infer them correctly, resulting in almost perfect accuracy (IBP is indeed perfect in this case for the bit error rate). When the noise is increased, the Recall and Precision curves tend to get closer to a bell shape, indicating higher error for values close to 0.5 and smaller error for extreme values. The histograms also show that fewer belief values are extreme as the noise is increased, so all these factors account for an overall decrease in accuracy as the channel noise increases. These networks are examples with a large number of ε-small probabilities, and IBP is able to infer them correctly (the absolute error is small).

Grid networks

We present results for grid networks in Figure 22. Contrary to the case of coding networks, the histograms show a higher concentration of beliefs around 0.5. However, the accuracy is still very good for beliefs close to zero. As evidence is increased, the absolute error peaks close to 0 and maintains a plateau, indicating lower accuracy for IBP.

5. Experimental Evaluation

As we anticipated in the summary of Section 3, and as can now be clearly seen from the structure of a bounded join-graph, there is a close relationship between the mini-clustering algorithm MC(i) and IJGP(i). In particular, one iteration of IJGP(i) is similar to MC(i). MC sends messages up and down along the clusters that form a set of trees. IJGP has additional connections that allow more interaction between the mini-clusters of the same cluster. Since this is a cyclic structure, iterating is facilitated, with its virtues and drawbacks.

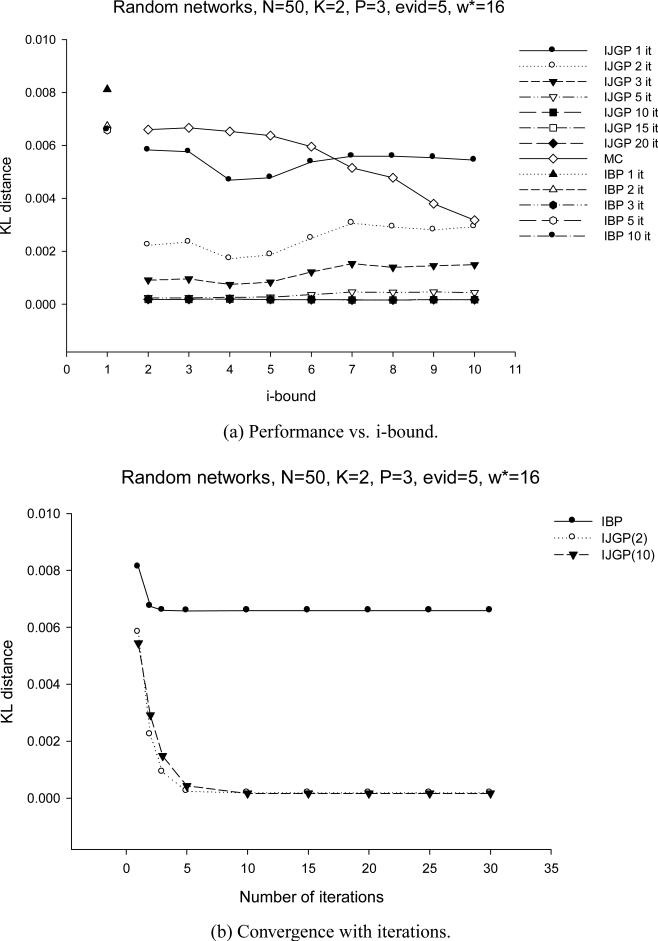

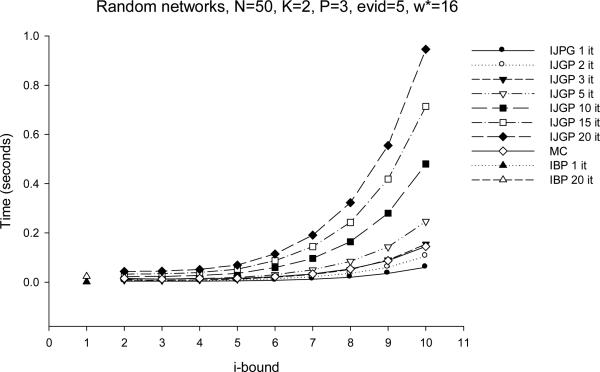

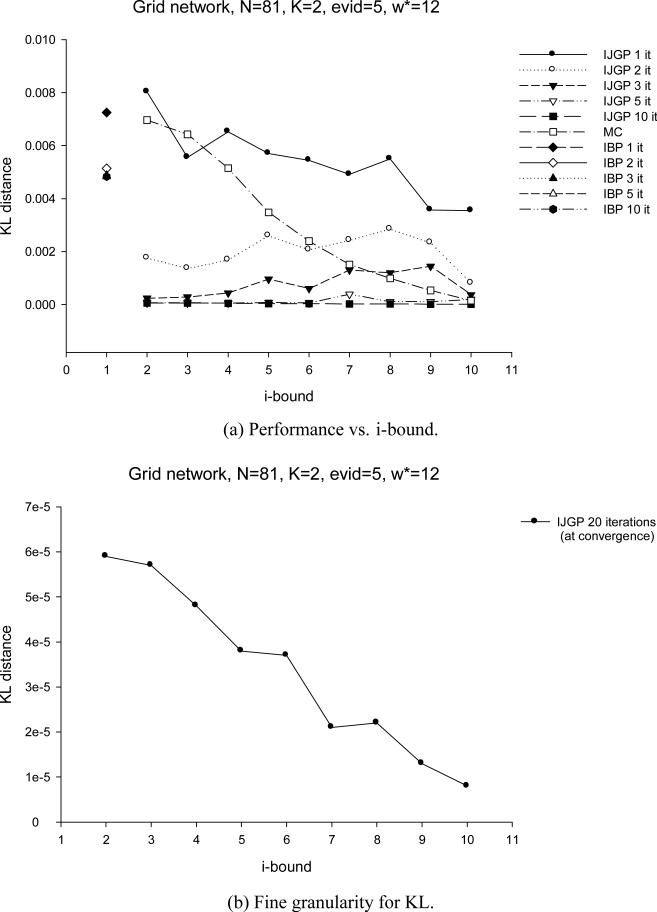

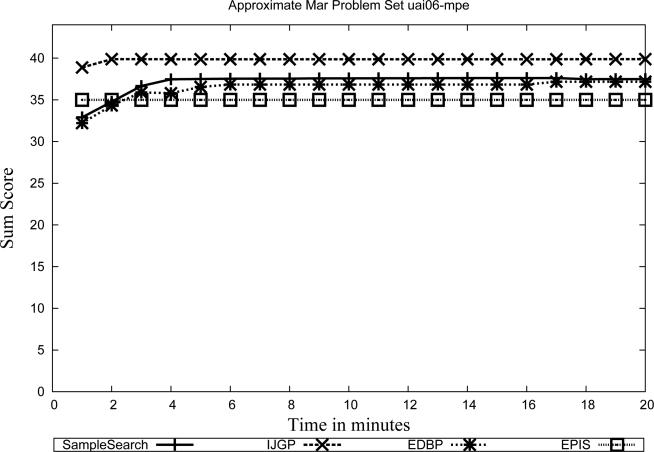

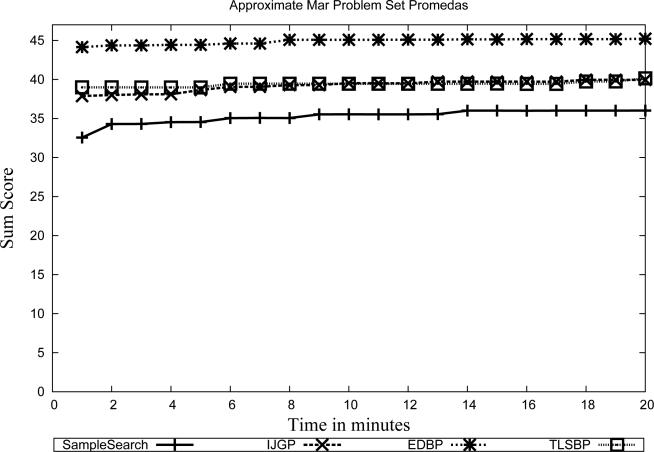

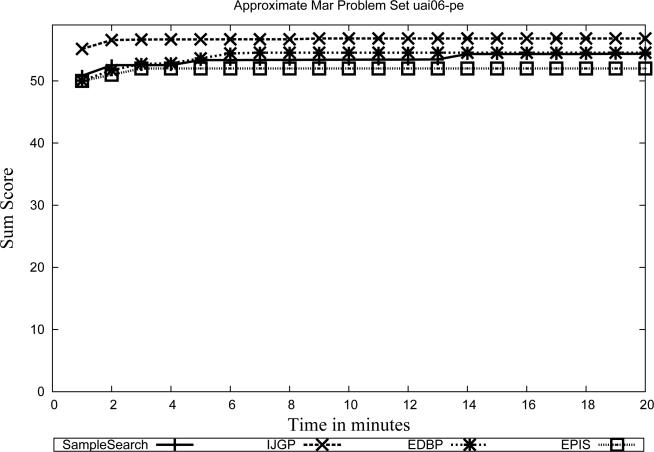

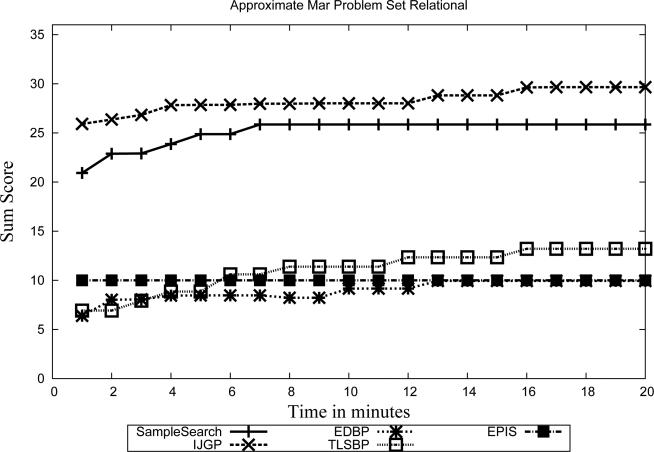

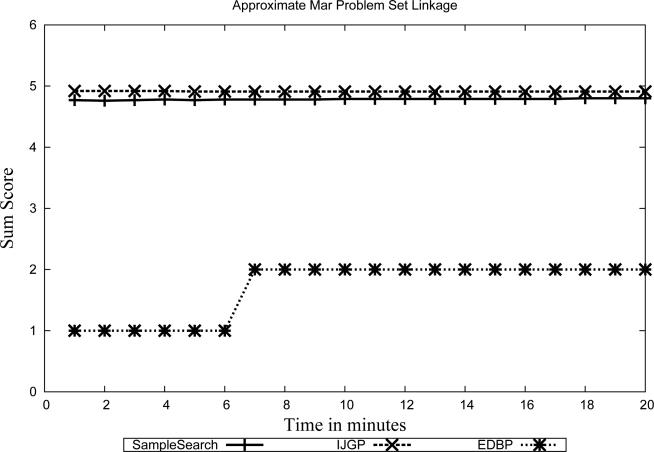

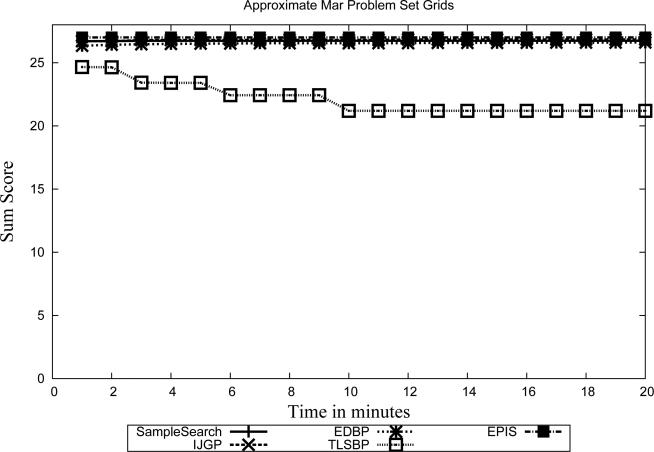

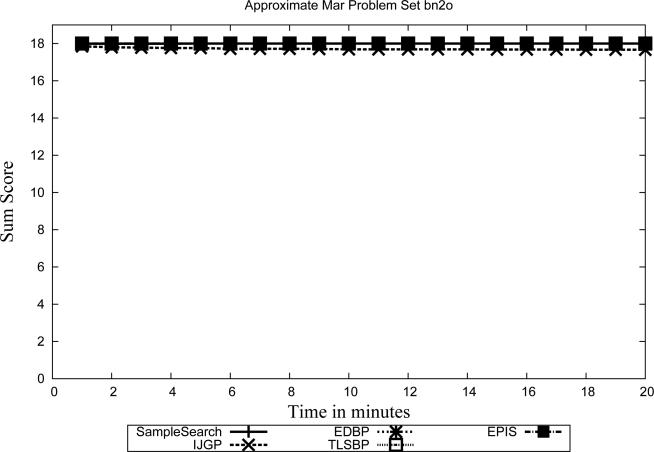

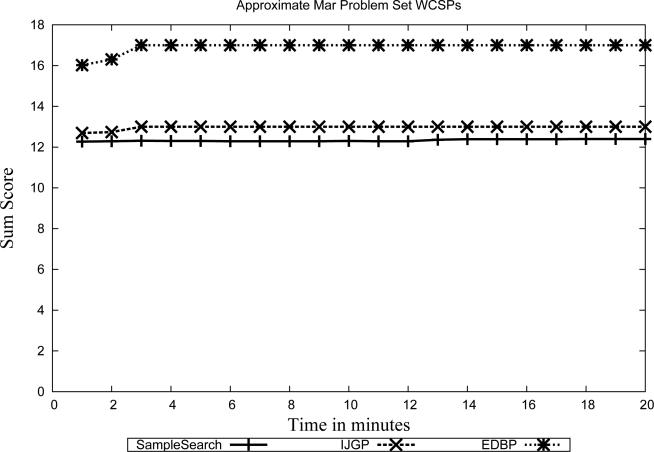

In our evaluation of IJGP(i), we focus on two different aspects: (a) the sensitivity of parametric IJGP(i) to its i-bound and to the number of iterations; (b) a comparison of IJGP(i) with publicly available state-of-the-art approximation schemes.

5.1 Effect of i-bound and Number of Iterations

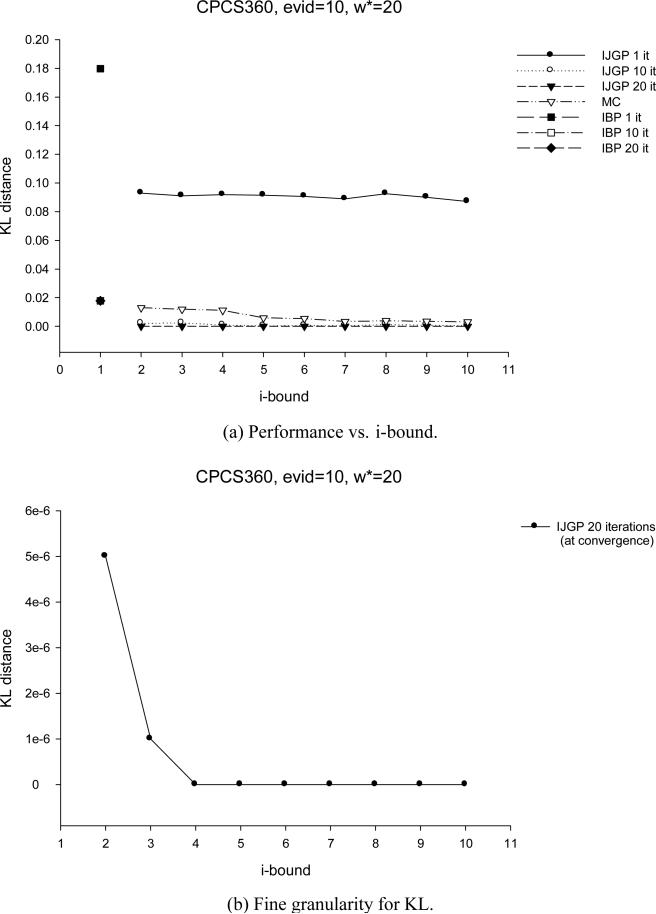

We tested the performance of IJGP(i) on random networks, on M-by-M grids, on the two benchmark CPCS files with 54 and 360 variables, respectively, and on coding networks. On each type of network, we ran IBP, MC(i) and IJGP(i), giving IBP and IJGP(i) the same number of iterations.

We use the partitioning method described in Section 4.3 to construct a join-graph. To determine the order of message computation, we recursively pick an edge (u,v), such that node u has the fewest incoming messages missing.
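The ordering heuristic can be sketched as a greedy loop over directed edges. The Python code below is one possible reading, not the authors' implementation: it assumes that the "missing" messages of a candidate edge (u,v) are the messages into u from neighbors other than v (those that h(u,v) depends on) that have not yet been scheduled, and it breaks ties by the sorted order of edges. The name `greedy_schedule` is hypothetical, and node names are assumed to be mutually comparable (e.g., all strings).

```python
from typing import Dict, Hashable, List, Set, Tuple

Edge = Tuple[Hashable, Hashable]


def greedy_schedule(edges: List[Edge]) -> List[Edge]:
    """Order every directed edge (u, v) of an undirected join-graph once, each time
    picking the edge whose source u has the fewest incoming messages still missing."""
    nbrs: Dict[Hashable, Set[Hashable]] = {}
    for u, v in edges:
        nbrs.setdefault(u, set()).add(v)
        nbrs.setdefault(v, set()).add(u)
    done: Set[Edge] = set()

    def missing(edge: Edge) -> int:
        # messages w -> u needed for h_(u,v) that are not yet scheduled
        u, v = edge
        return sum(1 for w in nbrs[u] if w != v and (w, u) not in done)

    pending = sorted((u, v) for u in nbrs for v in nbrs[u])
    order: List[Edge] = []
    while pending:
        best = min(pending, key=lambda e: (missing(e), e))
        pending.remove(best)
        done.add(best)
        order.append(best)
    return order
```

On a chain a - b - c, this ordering reproduces the familiar forward and backward pass: (a,b), (b,c), (c,b), (b,a). On a cyclic join-graph some edges necessarily start with missing messages, which the iterations of IJGP then fill in.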

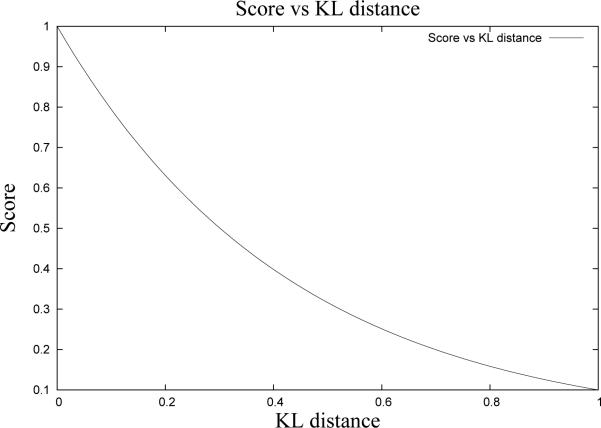

For each network except coding, we compute the exact solution and compare the accuracy using the absolute and relative error, as before, as well as the KL (Kullback-Leibler) distance $P_{exact}(X = a) \cdot \log\left(P_{exact}(X = a) / P_{approximation}(X = a)\right)$ averaged over all values, all variables and all problems. For coding networks we report the Bit Error Rate (BER) computed as described in Section 3.2. We also report the time taken by each algorithm.
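The averaging of the absolute error and of the KL term can be sketched as follows. This Python sketch assumes the exact and approximate marginals have been flattened into two aligned lists with one entry per (problem, variable, value); the relative error is omitted because its definition is given in an earlier section of the paper. The function names are hypothetical, the usual convention 0 · log 0 = 0 is applied, and an approximate zero paired with a nonzero exact value yields infinity.

```python
import math
from typing import Sequence


def absolute_error(exact: Sequence[float], approx: Sequence[float]) -> float:
    """Mean |P_exact - P_approx| over all entries."""
    return sum(abs(p - q) for p, q in zip(exact, approx)) / len(exact)


def kl_distance(exact: Sequence[float], approx: Sequence[float]) -> float:
    """Mean of P_exact * log(P_exact / P_approx) over all entries (0 * log 0 taken as 0)."""
    total = 0.0
    for p, q in zip(exact, approx):
        if p == 0:
            continue          # the term vanishes
        if q == 0:
            return math.inf   # an approximate zero for a nonzero exact value
        total += p * math.log(p / q)
    return total / len(exact)
```

For instance, an exact marginal (1, 0) approximated by (0.5, 0.5) gives an absolute error of 0.5 and an averaged KL term of log(2)/2.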