Abstract

Knowledge of musical rules and structures has been reliably demonstrated in humans of different ages, cultures, and levels of music training, and has been linked to our musical preferences. However, how humans acquire knowledge of and develop preferences for music remains unknown. The present study shows that humans rapidly develop knowledge and preferences when given limited exposure to a new musical system. Using a non-traditional, unfamiliar musical scale (Bohlen-Pierce scale), we created finite-state musical grammars from which we composed sets of melodies. After 25–30 min of passive exposure to the melodies, participants showed extensive learning as characterized by recognition, generalization, and sensitivity to the event frequencies in their given grammar, as well as increased preference for repeated melodies in the new musical system. Results provide evidence that a domain-general statistical learning mechanism may account for much of the human appreciation for music.

Keywords: statistical learning, auditory perception, music cognition, preference, grammar

Music we encounter every day is composed according to structural rules and principles. These rules dictate the relationship between pitches and chords over time (Piston, Devoto, & Jannery, 1987), and are thought to be analogous to linguistic grammar (Bernstein, 1973; Lerdahl & Jackendoff, 1983). The existence of these sorts of musical regularities across cultures has led some to conclude that structural principles in music are universal and moreover, that they reflect innate biases in human cognition (Justus & Hutsler, 2005), or even innate knowledge. Here we challenge the necessity of innate knowledge of musical structure by demonstrating that such knowledge can be quickly acquired via passive exposure.

People of different cultures, ages, and levels of music training have reliably been shown to possess knowledge of musical rules and structures consistent with their experience (Bigand, Poulin, Tillmann, Madurell, & D’Adamo, 2003; Bigand, Tillmann, Poulin-Charronnat, & Manderlier, 2005; Castellano, Bharucha, & Krumhansl, 1984; Koelsch, 2005; Koelsch, Gunter, Friederici, & Schröger, 2000; Trainor & Trehub, 1994). For instance, while Western listeners have been shown to be sensitive to the statistical structure of Western musical scales (Krumhansl & Kessler, 1982), Indonesian participants are sensitive to the statistical structure of tones in the Balinese scale (Castellano et al., 1984), and Finnish music students demonstrate knowledge of the statistical structure of North Sami yoiks, a native song tradition of Finland (Krumhansl et al., 2000).

Some aspects of musical knowledge begin to be apparent very early in life. Demany and Armand (1984), for instance, found evidence for knowledge of octave equivalence (the idea that pitches recur at the octave) in the first three months of life. Other types of musical knowledge appear to emerge later in development: sensitivity to key membership emerges at about 5 years of age, and it is not until about 7 years that children begin to show sensitivity to implied harmony (Trainor & Trehub, 1994). These developmental changes are consistent with the idea that experience and exposure are crucial to musical knowledge. Although neurophysiological markers of sensitivity to musical rule violations are larger in musicians relative to nonmusicians (Besson & Faïta, 1995), some neurophysiological signatures of harmonic and melodic rule sensitivity have been observed even in individuals without music training (Koelsch, Gunter, Friederici, & Schröger, 2000; Loui, Grent-’t-Jong, Torpey, & Woldorff, 2005; Miranda & Ullman, 2007), suggesting that explicit training may be helpful, but is not necessarily required, for the acquisition of such knowledge.

Despite all that we know about musical knowledge, its source remains an open question. While human knowledge of musical structure may be a result of innate constraints in our auditory system (Hauser & McDermott, 2003) or cognitive functions more specifically geared for music (Lerdahl & Jackendoff, 1983), such knowledge also may be acquired via exposure, suggesting a sensitivity to the statistical regularities present within and between sounds in the music of one’s cultural experiences (Hannon & Trainor, 2007; Huron, 2006; Krumhansl, 1990). Here we investigate the latter possibility: we test the hypothesis that humans can acquire structural regularities akin to the harmonic and grammatical regularities of music via passive exposure. This proposal is analogous to the statistical learning theory in language acquisition (Newport & Aslin, 2000; Saffran, Aslin, & Newport, 1996), which posits that language contains statistical regularities to which humans are sensitive, and that linguistic knowledge is acquired via the computation of such statistics. Importantly, in statistical learning theory, languages evolve to fit human learning abilities, explaining the existence of universals without recourse to innate substantive knowledge (Newport & Aslin, 2000), something also possible in music.

Studies investigating language learning have used many natural and artificial languages (e.g., Hudson Kam & Newport, 2005; Saffran et al., 1996). Similarly, studies investigating the acquisition of musical knowledge have used Western and non-Western musical sounds. Implicit learning of nonverbal material in the auditory modality has been investigated with tones in a context-independent grammar (Kuhn & Dienes, 2005), with tones and timbres in finite state grammars (Altmann, Dienes, & Goode, 1995; Tillmann & McAdams, 2004), and with rules of musical transformations (Dienes & Longuet-Higgins, 2004). While results demonstrate successful learning of artificial musical systems, these studies utilize pitches and some timbres (or combinations of timbres) that are familiar to participants from Western cultures. Thus, it remains unclear whether musical grammars can be learned successfully when the tones are defined by unfamiliar scales. In that regard, work investigating the learning of musical tuning systems using non-Western music has shown that while Western one-year-old infants and adults could detect mistunings only in familiar Western scales and not in augmented or non-Western (Javanese pelog) scales, six-month-old Western infants were able to perceive large mistunings in both Western and augmented scales but not in Javanese scales (Lynch & Eilers, 1992), suggesting that a combination of acculturation and innate predispositions might have contributed to the perception of musical tunings. Although the sense of scale and tuning is fundamental to music perception, it remains to be determined whether higher-order structural aspects of musical grammars can be acquired from passive exposure to a non-traditional, artificial musical scale.

In addition to challenges in demonstrating learning, the question of how musical knowledge relates to preferences is unclear. People around the world enjoy musical experiences and show different affective responses to different music. These affective responses also have been associated with the manipulation of musical structure: violations of typical musical structures and patterns have been linked to preferences in humans regardless of music training (Loui & Wessel, 2007; Steinbeis, Koelsch, & Sloboda, 2006), supporting the notion, first proposed by Meyer (1956) and later formalized by Narmour (1990) and others, that the violation of expectations (i.e., for common musical structures) elicits arousal and affect in music perception. Regarding the question of how exposure to structured music relates to the development of knowledge and affect, one possibility is that repeated listening to music leads to both knowledge and preference formation. This is consistent with several studies showing the effects of mere exposure on preference for artificial visual stimuli (e.g., Zajonc, 1968), and effects of mere exposure on preference for musical compositions (Peretz, Gaudreau, & Bonnel, 1998; Tan, Spackman, & Peaslee, 2006). In particular, Tan et al. showed a mere exposure effect, as indicated by increased preference ratings, after exposure to short complex compositions; in contrast, preference ratings decreased with repeated exposure for short, simple compositions, in line with the two-factor theory proposed by Berlyne (1971).

Another possibility is that different amounts or types of musical exposure contribute to affective change and structural knowledge respectively (Kuhn & Dienes, 2005; Szpunar, Schellenberg, & Pliner, 2004). As most people have had large but variable amounts of exposure to many different types of music, the possible dissociation between knowledge and affect is difficult to identify by looking at existing musical structures; however, this question can be addressed by using a novel musical system.

In the current study, the questions we ask pertain to the acquisition of musical knowledge and preference, similar to previous studies; however, our approach differs from previous investigations in using a novel and artificial musical system independent of prior associations: the non-octave-based Bohlen-Pierce scale. We created a novel musical system in this scale and exposed participants to exemplar melodies generated according to statistical regularities, and then tested participants in various ways to see whether they had acquired knowledge of the new musical system. Additionally, we explored whether this knowledge was related to changes in preferences for melodies in the new musical system.

Defining the New Musical System

We were interested in whether people can quickly learn: (a) the regularities underlying a new musical system, and (b) to like music composed in unfamiliar ways. We exposed people to melodies composed according to finite-state grammars and then tested them to see what they had learned. To ensure that they were indeed learning and not simply falling back on their existing knowledge of music, we used the Bohlen-Pierce scale. This scale was first proposed by Heinz Bohlen in the early 1970s and uses a set of mathematical relationships that are designed to be different from Western music, while still giving rise to a sense of tonality. Thus, it has been adopted by various contemporary composers and has even received some interest in music cognition and psychoacoustics (Krumhansl, 1987; Mathews, Pierce, Reeves, & Roberts, 1988; Sethares, 2004).

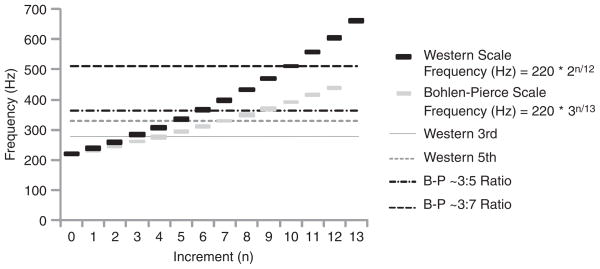

In the equal-tempered Western chromatic scale, frequencies of notes are based on 12 logarithmically even divisions of an octave, which is a 2:1 frequency ratio; thus, the equal-tempered Western scale is defined as frequency (Hz) = $k \cdot 2^{n/12}$, where k is constant and n is the number of steps along the chromatic scale. In contrast, the Bohlen-Pierce scale is based on 13 logarithmically even divisions of a tritave, which is a 3:1 ratio in frequency. Tones in one tritave of the Bohlen-Pierce scale are defined as frequency (Hz) = $k \cdot 3^{n/13}$, where k is constant and n is the number of steps along the Bohlen-Pierce scale. The actual frequencies used are shown in Figure 1, alongside the closest (analogous) frequencies in one octave of the Western chromatic scale.

FIGURE 1.

Frequencies along the Bohlen-Pierce scale compared to the Western scale. One cycle of each scale is shown in the figure. The starting point of the two scales is the same (220 Hz) and both scales are equal-tempered, thus making them analogs of each other except for the intrinsic differences in base and recurrence ($2^{n/12}$ vs. $3^{n/13}$). The horizontal dotted and dashed lines represent major third and perfect fifth intervals in the Western scale and approximate 3:5 and 3:7 intervals in the Bohlen-Pierce scale.
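The two scale definitions can be made concrete with a short sketch. It assumes k = 220 Hz, the shared starting point given in the Figure 1 caption; the function names are illustrative and do not come from the study's materials.

```python
def western_frequency(n, k=220.0):
    """Equal-tempered Western chromatic scale: k * 2^(n/12)."""
    return k * 2 ** (n / 12)


def bohlen_pierce_frequency(n, k=220.0):
    """Equal-tempered Bohlen-Pierce scale: k * 3^(n/13)."""
    return k * 3 ** (n / 13)


# One tritave of the Bohlen-Pierce scale, steps n = 0..13 (13 divisions).
bp_tritave = [bohlen_pierce_frequency(n) for n in range(14)]

# One octave of the Western chromatic scale, steps n = 0..12 (12 divisions).
western_octave = [western_frequency(n) for n in range(13)]
```

Under these assumptions the Western scale returns to a 2:1 ratio (440 Hz) after 12 steps, whereas the Bohlen-Pierce scale returns to a 3:1 ratio (660 Hz) after 13 steps.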

Using this scale, it is possible to define chords with pitches whose frequencies are approximately related to each other in low-integer ratios, which are perceived as being relatively consonant psychoacoustically (Kameoka & Kuriyagawa, 1969). One “major” chord in this new scale is defined as a set of three pitches that approximate a 3:5:7 ratio in frequency (Krumhansl, 1987). To create the actual melodies, we first created chord progressions, which were simply different chords occurring sequentially. We created two chord progressions, each of which consisted of four chords. The two chord progressions contained identical chords, but were reversed in sequential order relative to each other. Thus, the event frequencies for each note were the same for the two chord progressions, but transitional probabilities between the notes were different.

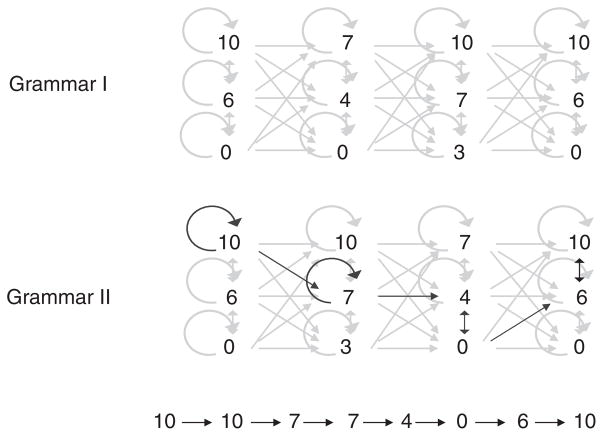

These chord progressions were then used to form two finite-state grammars, shown in Figure 2. Each chord was treated as a state, and each pitch as a possible node in the state. A melody (from either of the two grammars) began with a note selected from among the three pitches in the first chord. Next, the following note was selected by following any of the six possible pathways that lead either to: a repeated note, a note up or down within the same chord, or a move forward to one of the three notes in the next chord. One possible pathway through Grammar I is highlighted, resulting in the melody shown at the bottom of the figure. Actual melodies that the participants were exposed to ranged from four to eight notes. A small subset of melodies was legal in both grammars. These melodies were not used in Experiment 1, but were included in the exposure sets (but not the test items) in Experiments 2 and 3.

FIGURE 2.

(A) A diagram of the finite-state grammar illustrating the composition of melody from harmony. Each number represents n in the Bohlen-Pierce scale formula in Figure 1a. Legal paths of each grammar are shown by the arrows. (B) The derivation of one melody from one of the two grammars. Dark arrows illustrate the paths taken, whereas light arrows illustrate other possible paths that are legal in the grammar. The resultant melody is shown at the bottom of the figure.
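The generation procedure described above can be sketched as a random walk through the chord states. The chord contents below are hypothetical placeholders, not the chords shown in Figure 2, and the sketch assumes that the six pathways are the three notes of the current chord (including a repeat) plus the three notes of the next chord. It also assumes that a melody simply stops once it reaches the requested length.

```python
import random

# Hypothetical chord progression; each chord holds three Bohlen-Pierce
# scale steps (values of n). These are placeholders, not the study's chords.
# Steps (0, 6, 10) approximate a 3:5:7 frequency ratio.
GRAMMAR_I = [(0, 6, 10), (0, 4, 7), (3, 7, 10), (0, 6, 10)]

# Same chords in reversed order: identical event inventory,
# different transitions between notes.
GRAMMAR_II = list(reversed(GRAMMAR_I))


def generate_melody(grammar, length=8, rng=random):
    """Walk the grammar: stay within the current chord or advance one chord."""
    state = 0
    melody = [rng.choice(grammar[state])]
    while len(melody) < length:
        # Stay in the current chord (repeat or move to another chord tone).
        options = [(state, note) for note in grammar[state]]
        # Or move forward to any note of the next chord, if one exists.
        if state + 1 < len(grammar):
            options += [(state + 1, note) for note in grammar[state + 1]]
        state, note = rng.choice(options)
        melody.append(note)
    return melody
```

Passing a seeded generator, for example `generate_melody(GRAMMAR_I, 8, random.Random(1))`, makes the output reproducible.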

The artificial musical system adheres to various rules for a generative theory of tonal music (Lerdahl & Jackendoff, 1983). This makes the system viable as a new compositional tool from which large numbers of melodies can be generated, while being completely unfamiliar to all participants of our studies.1

The Present Studies

To investigate humans’ acquisition of various aspects of musical knowledge, we exposed participants to melodies from one of the two grammars and tested them to see what they had learned. We began by asking simply whether participants could recognize the particular melodies to which they had been exposed. However, we were primarily interested in learning above and beyond recognition. In particular, we tested for participants’ sensitivity to the exposure frequencies (rate of recurrence) of the various tones in the exposure melodies. We also tested for their ability to learn the underlying structure from the exposure melodies using a generalization test for the ability to distinguish novel grammatical from ungrammatical melodies. Moreover, we tested for participants’ formation of preferences for melodies in the new system, similar to the mere exposure effect (Zajonc, 1968). In Experiments 1 and 2 we explored the effect of exposure set size on learning outcomes, and in Experiment 3 we examined the contribution of music training to learning.

Experiment 1

In the first experiment, we were interested in testing whether prolonged exposure to certain subsets of items in a new musical grammar could lead to learning. To ensure maximal exposure within a relatively short time frame, we repeated a small number of melodies (five melodies from each grammar) many times. Then participants were tested for recognition (as a measure of rote memory), generalization (as a measure of grammar learning), goodness-of-fit ratings (as a measure of sensitivity to statistical regularities), and preference (as a measure of implicit memory and emotional response to music). As people with and without music training have been shown to behave differently in some musical tests (e.g., Krumhansl, 1990), we chose to conduct the initial experiment on persons with music training; in Experiment 3 we test directly for the contribution of Western music training to the ability to learn the new musical system.

Method

PARTICIPANTS

Participants were 17 female and 5 male undergraduate students from the University of California at Berkeley (age range = 18 to 22 years) who received course credit for participating. Inclusion criteria for participants included having normal hearing (assessed via self-report) and more than 5 years of music training. Actual participants’ music training ranged from 5 to 14 years (M = 9.0; SD = 2.2) in one or more instruments including piano, violin, viola, flute, clarinet, oboe, saxophone, trumpet, percussion, guitar, zither, and voice. Half of the participants heard melodies from Grammar I and the other half from Grammar II.

STIMULI

Stimuli in this experiment consisted of five melodies in each of the two grammars. Each note in a melody was a pure tone that lasted 500 ms, with envelope rise and fall times of 5 ms each. Melodies contained eight notes each and were arranged into a tone stream, in which each of the five melodies was presented 100 times total. The tone stream was segmented such that 500 ms of silence occurred between individual melodies, so that melodies were temporally separated from each other. An additional five melodies in each grammar were constructed for use as test stimuli. Test and exposure melodies were generated using Max/MSP (Zicarelli, 1998).
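As a rough illustration of the stimulus description above, the following sketch synthesizes a 500 ms pure tone with 5 ms onset and offset ramps and appends 500 ms of silence after a melody. The sample rate (44.1 kHz) and the linear ramp shape are assumptions; the text specifies neither, and the actual stimuli were generated in Max/MSP.

```python
import math

SAMPLE_RATE = 44100  # assumed; not stated in the text


def pure_tone(freq_hz, duration_s=0.5, ramp_s=0.005, sample_rate=SAMPLE_RATE):
    """Return a sine tone as a list of samples with linear rise and fall ramps."""
    n_samples = int(round(duration_s * sample_rate))
    n_ramp = int(round(ramp_s * sample_rate))
    samples = []
    for i in range(n_samples):
        # Gain rises from 0 over the first ramp and falls to 0 over the last.
        gain = min(1.0, i / n_ramp, (n_samples - 1 - i) / n_ramp)
        samples.append(gain * math.sin(2 * math.pi * freq_hz * i / sample_rate))
    return samples


def melody_with_gap(freqs_hz, gap_s=0.5, sample_rate=SAMPLE_RATE):
    """Concatenate the tones of one melody and append the inter-melody silence."""
    out = []
    for freq in freqs_hz:
        out.extend(pure_tone(freq, sample_rate=sample_rate))
    out.extend([0.0] * int(round(gap_s * sample_rate)))
    return out
```

With these settings, an eight-note melody plus its trailing silence spans 4.5 s of audio.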

PROCEDURE

Experiments were run in a sound-attenuated chamber. Each experiment was run in five phases, listed below in the order in which they were administered.

1. Pre-exposure probe tone ratings

The probe tone ratings test, developed by Krumhansl and Kessler (1982), measures sensitivity to the underlying frequency structure of music. The task involves listening to a melody (or melody fragment) that is followed by a probe tone. The listener rates how well the probe tone fits into the previous fragment. Ratings have been shown to reflect the statistics of musical compositions (Krumhansl, 1990); that is, tones that are most critical to a given harmonic structure or key are rated as better fitting than tones less critical to or not present in the key. In most existing musical systems, the most critical tones also happen to be the one occurring most frequently in pieces in that key. For instance, C is the most frequent note in pieces written in the key of C. The use of a novel set of occurrence frequencies in a novel musical system can disentangle the contributions of tone frequency and importance in the given musical structure.

In the present experiment, participants heard a melody from their assigned grammar followed by a probe tone. The probe tone ranged from 220 Hz (B-P note 1 in Figure 1) to 606.5 Hz (B-P note 13 in Figure 1). The participant’s task was to rate how well the probe tone fit the preceding melody on a scale of 1 to 7, where “1” was “poor” and “7” was “well.” There were 13 trials, all using the same melody, with the participant rating each probe tone once. If this task measures sensitivity to relative frequency, we would expect little difference in ratings given to individual tones at this point in the study, as participants have not yet been exposed to the melodies.

2. Exposure

Exposure lasted 25 min in total, during which participants listened to the melodies from their assigned exposure grammar. Stimuli were presented via headphones at 70 dB. Participants were given the option of drawing on paper as a way of passing the time while listening.

3a. Forced-choice recognition

This testing phase began immediately after the exposure phase. Participants were given a two-alternative forced-choice task. The task comprised two blocks of five trials (10 trials in total), each of which tested a different aspect of participants’ knowledge. The first, the recognition block, examined participants’ knowledge of the specific melodies to which they had been exposed. On each trial, two melodies were played one after another, with 0.5 s of silence between the two. Participants’ task was to indicate which melody (the first or second) sounded more familiar. One of the two melodies belonged to the set that had been presented during exposure, whereas the other melody was drawn from the alternate grammar; that is, it was one of the other participants’ five exposure melodies. Note that the right answer for one group of participants was the wrong answer for the other group, serving as a control for possible item effects.

3b. Forced-choice generalization

The second block of two-alternative forced-choice trials tested for generalization: whether participants had learned anything about the underlying grammar from which their exposure melodies had been generated. The participants’ task was similar to forced-choice recognition trials except participants heard two unfamiliar melodies, one of which was generated by their own grammar, whereas the other was generated by the other group’s grammar. In this block, if participants had extracted some information about the grammar underlying their exposure melodies, they should select the novel melodies generated by their grammar.

4. Post-exposure probe tone ratings

The fourth phase consisted of a probe tone ratings task that was identical to the first phase. If, over the course of exposure, participants had learned something about the relative frequencies of the various tones, we would expect ratings at this stage to be different from the pre-exposure ratings. In particular, the tones that occurred in the input melodies more frequently should be rated higher than those that occurred infrequently. Finally, if this task measures innate sensitivity to octave-based scales, we should see no change in ratings between the first and second test.

5. Preference ratings

In the final phase, participants were given 20 trials of preference ratings. This test examined whether participants could learn to like melodies in a novel musical system. On each trial, participants heard one melody and indicated their preference for it on a scale of 1 to 7, with “1” being the “least preferable” and “7” being the “most preferable.” Melodies were drawn from one of three possible sets: (1) the exposure set (old melody/same grammar), (2) the same grammar but not the exposure set (new melody/same grammar), or (3) the alternate grammar (new melody/different grammar). If participants can learn to like individual melodies based on mere exposure to those melodies, preferences should be higher for the old melodies than for either of the other two types of melodies. If instead preferences for the new melodies are based on aspects of the structure of the melodies, we might expect preferences for the new melodies in the same grammar to also be higher than preferences for melodies composed according to the other grammar. Alternatively, preferences for music might be unrelated to exposure, in which case we would expect little effect of exposure on preferences.

Results

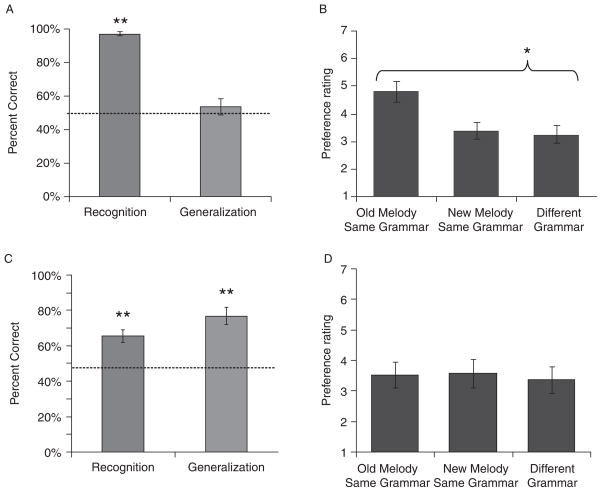

Mean performance on the recognition and generalization trials is shown in Figure 3. Performance was very good on recognition trials; participants recognized old melodies with very high accuracy and at a rate that was significantly above chance (50%), t(20) = 33.10, p < .001 (two-tailed). However, learning was restricted to the particular exposure melodies; performance on the generalization task (comparing new melodies from the exposure grammar to melodies from the other grammar) was not significantly different from chance, t(20) = 0.67, p = .41 (two-tailed; Figure 3a).

FIGURE 3.

(A) Two-alternative forced choice results for Experiment 1. (B) Preference rating results for Experiment 1. (C) Two-alternative forced choice results for Experiment 2. (D) Preference rating results for Experiment 2.
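The comparison of forced-choice accuracy against chance reported above is a one-sample t-test. A minimal sketch follows, assuming each participant contributes one proportion-correct score; the scores shown are hypothetical and are not the study's data.

```python
import math
from statistics import mean, stdev


def one_sample_t(scores, chance=0.5):
    """Return (t, df) for a one-sample t-test of the mean against chance."""
    n = len(scores)
    standard_error = stdev(scores) / math.sqrt(n)
    t = (mean(scores) - chance) / standard_error
    return t, n - 1


# Hypothetical per-participant proportions correct (illustration only).
example_scores = [1.0, 0.8, 1.0, 1.0, 0.8, 1.0]
t_value, df = one_sample_t(example_scores)
```

The resulting t value would then be compared against the two-tailed critical value for the given degrees of freedom.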

Preference ratings for the three types of melodies (old melodies, new melodies/same grammar, new melodies/other grammar) are shown in Figure 3b. There was a significant effect of melody type, F(2, 63) = 3.42, p < .038, and a further t-test contrasting ratings for old melodies against the other groups showed that participants rated the old melodies as significantly more preferable than new melodies in the same grammar, t(20) = 3.60, p = .0018, suggesting some implicit memory and preference formation for the repeated melodies. No significant difference in preference ratings was observed between new items in the exposure grammar and melodies in the other grammar, t(20) = 0.71, p = .71 (Figure 3b).

As mentioned, participants performed the probe tone task before and after exposure, allowing us to directly assess participants’ sensitivity to exposure frequencies of the various tones. Probe tone ratings collected before and after exposure were both significantly correlated with the exposure frequencies (pre-exposure r(20) = .36, SE = 0.05, t-test against chance level of 0: t(20) = 2.71, p < .05; post-exposure r(20) = .64, SE = 0.04, t(20) = 7.75, p < .001), but the difference between pre-exposure correlations and post-exposure correlations was not significant, t(20) = 1.22, p = .23. In a subsequent analysis, effects of the melody were partialled out using the partial correlation equation:

$$r_{XY \cdot Z} = \frac{r_{XY} - r_{XZ}\, r_{YZ}}{\sqrt{(1 - r_{XZ}^{2})(1 - r_{YZ}^{2})}}$$

where X was the ratings profile, Y was the exposure profile, and Z was the melody profile; that is, a profile that represents the melody used to obtain probe tone ratings. The melody profile was a plot of event frequencies of each tone (number of times each tone along the B-P scale occurred) in the melody that was presented at the start of each trial of the probe tone ratings procedure. When X was the pre-exposure ratings profile, $r_{XY \cdot Z}$ dropped to chance levels (not significantly above zero correlation), but when X was post-exposure ratings, $r_{XY \cdot Z}$ was significantly above chance. Thus, when effects of the melody used to obtain the ratings were partialled out using partial correlation, post-exposure ratings were still correlated with exposure frequencies, r′(19) = .26, t(20) = 3.00, p < .01, whereas pre-exposure ratings were not, r′(19) = .05, t(20) = 0.60, p = .55, with the partial correlation scores for post-exposure ratings being significantly higher than for pre-exposure ratings, t(20) = 2.95, p < .01.
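The partialling-out step can be sketched with the standard first-order partial correlation, computed from the three pairwise Pearson correlations among the ratings profile (X), the exposure profile (Y), and the melody profile (Z). The function names are illustrative, and the sketch assumes each profile is a list with one value per scale tone.

```python
import math


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def partial_from_r(r_xy, r_xz, r_yz):
    """Correlation of X and Y with Z held constant, from pairwise r values."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def partial_correlation(ratings, exposure, melody):
    """Partial correlation of ratings with exposure, controlling for melody."""
    return partial_from_r(
        pearson(ratings, exposure),
        pearson(ratings, melody),
        pearson(exposure, melody),
    )
```

When the melody profile is uncorrelated with both other profiles, the partial correlation reduces to the ordinary correlation between ratings and exposure.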

Discussion

In this experiment, we observed ceiling levels of recognition accuracy, combined with the inability to form generalizations. In addition, we observed an increase in preference for some melodies in the new musical system: after hearing a small set of melodies for a large number of repetitions, participants rated the previously-encountered melodies as more preferable than other melodies. This was similar to the mere exposure effect, first reported by Zajonc (1968), which refers to the finding that items become more preferable as a function of repeated exposure.

Probe tone data demonstrated increased sensitivity to the event frequencies of the new musical system: once the effect of the probe melody was partialled out, ratings correlated more strongly with the exposure frequencies after exposure than before. This is consistent with previous reports of probe tone ratings and their correlation with existing works in Western music (Krumhansl, 1990), but the present data extend the use of the probe tone methodology into a novel musical context.

The pattern of results obtained from forced-choice tests, i.e., successful recognition and unsuccessful generalization, suggests that participants may have overlearned the five melodies that were repeatedly presented to them during exposure, rather than learning the structure underlying the melodic exemplars. It is possible that this is due to the small number of exposure melodies. In an artificial language learning study, Gomez (2002) found that the ability to learn certain structures was dependent on set size of stimuli in the input, with increased set sizes leading to improved learning of underlying regularities. If this were the case, then increasing variability by adding to the set size of melodies presented might allow participants to generalize their knowledge to new instances of the same grammar. We investigate this possibility in Experiment 2 by increasing the set size of melodies.

Experiment 2

Method

PARTICIPANTS

Twenty-four undergraduate students (13 females, 11 males, aged 18 to 22 years) participated in this study. Recruitment criteria and group assignments were the same as Experiment 1. Participants reported an average of 9.6 years of music training (SD = 2.6; range = 5 to 14) in one or more instruments including piano, violin, viola, cello, flute, clarinet, saxophone, guitar, bass, and voice. None of the participants in Experiment 2 were in Experiment 1.

STIMULI AND PROCEDURE

Five hundred new melodies were generated for each of the two grammars. Experiment 2 was identical to Experiment 1 in procedure, except for the exposure phase, where instead of presenting 5 melodies, we presented 400 melodies in random order with no repeats. Each melody consisted of eight tones. Tones were similar in frequencies and amplitudes to those described in Experiment 1. The entire exposure phase lasted for 30 minutes.

Results

Performance on recognition and generalization is shown in Figure 3c. Results from recognition tests showed a similar pattern to Experiment 1: participants could still recognize old melodies significantly better than chance, t(23) = 5.77, p < .01. In addition, participants were above chance in identifying new melodies in the same grammar as being more familiar, t(23) = 4.26, p < .01, thus demonstrating successful generalization (Figure 3c).

Preference ratings for Experiment 2 are shown in Figure 3d. Here, participants rated old melodies in the same grammar as being similar in preference to new melodies in the same grammar or new melodies in the other grammar, F(2, 65) = 0.36, p = .70. Thus, the familiarity preference observed in Experiment 1 was not present in Experiment 2 (Figure 3d).

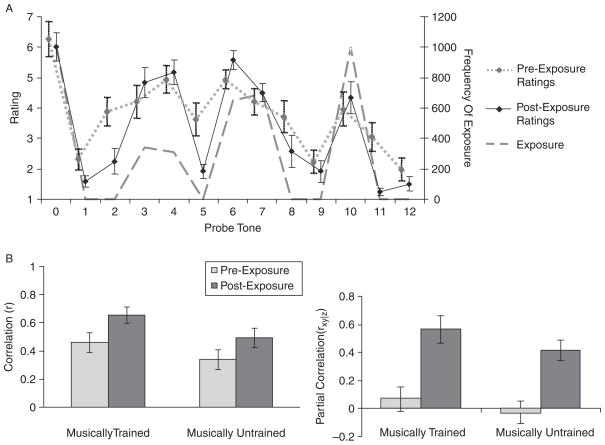

As in Experiment 1, pre-exposure probe tone ratings and post-exposure probe tone ratings were both correlated with exposure frequencies (pre-exposure r(22) = .46, SE = 0.07; post-exposure r(22) = .65, SE = 0.06; see Figure 5a). When effects of the melody used to obtain ratings were partialled out (using partial correlation as described in Experiment 1), pre-exposure correlations dropped to chance levels, r′(21) = .08, SE = 0.07, t(22) = 0.38, p = .36, whereas post-exposure correlations were significantly above chance, r′(21) = .56, SE = 0.07, t(22) = 3.17, p < .01 (see Figure 5b), suggesting that after brief exposure to a new musical system, participants became sensitive to its underlying statistical structure.

FIGURE 5.

(A) Pre- and post-exposure probe tone ratings and exposure frequencies from Experiment 2. The solid line represents post-exposure ratings, the dotted line represents pre-exposure ratings, and the dashed line represents the profile of the exposure set (exposure frequencies are plotted on the secondary y-axis). Error bars reflect between-subject standard errors. Exposure frequencies were more highly correlated with post-exposure ratings than with pre-exposure ratings, suggesting that participants became increasingly sensitive to the statistical structure of the musical system as a result of exposure. (B) Correlations of probe tone ratings with exposure frequencies pre- and post-exposure for trained (Experiment 2) and untrained (Experiment 3) individuals. Error bars reflect between-subject standard errors. (C) Partial correlations of probe tone ratings with exposure frequencies, while partialling out the effect of the melody profile (i.e., event frequencies of tones used in the melody which was played in probe tone trials to obtain ratings). Data from musically trained (Experiment 2) and untrained (Experiment 3) individuals show significant correlations with exposure for post-exposure ratings, but no correlation with exposure for pre-exposure ratings, suggesting that both groups of subjects had no prior knowledge of the musical system, but acquired sensitivity to the statistical structure of the new musical system during their period of exposure.

Discussion

Significant levels of recognition and generalization, combined with an increase in correlation for probe tone ratings after exposure, suggest that exposure to a large set of exemplars leads to generalized knowledge of a harmonically based artificial musical grammar, as compared to the more item-based knowledge observed in Experiment 1. However, the same was not true for preferences; exposure to a large number of melodies did not lead to changes in preference that extended to old and new melodies generated by the same grammar. Instead, participants in the current experiment showed low preference ratings for all of the melodies in the novel musical system.

Experiment 3

Thus far, the experiments have been conducted with participants with five or more years of music training. However, musicians differ from nonmusicians in several ways that may affect their learning. First, due to their training they may attend more to the structural aspects of music to which they are exposed. Furthermore, they may have more explicit knowledge of new music, and consequently be more willing and able to learn it. Both of these differences might make individuals with music training better able to learn the structure of unfamiliar music composed in a novel tuning system. Alternatively, individuals with music training might have more exposure to music composed in the octave and the statistical regularities found in most Western music, making them less able to learn the structure of unfamiliar music composed in a novel tuning system. Thus, in a third experiment we asked whether prior music training made a difference in participants’ ability to learn the musical structure. We replicated Experiment 2 with individuals who had no formal music training outside of normal school education.

Method

PARTICIPANTS

Twenty-four participants (13 females, 11 males, 18–22 years of age) were recruited in the same way as in Experiments 1 and 2, except that, instead of having five or more years of music training, they were required to have no music training outside of normal school education. All participants in Experiment 3 reported having normal hearing and no such music training.

STIMULI AND PROCEDURE

Experiment 3 was identical to Experiment 2 in stimuli and procedure.

Results

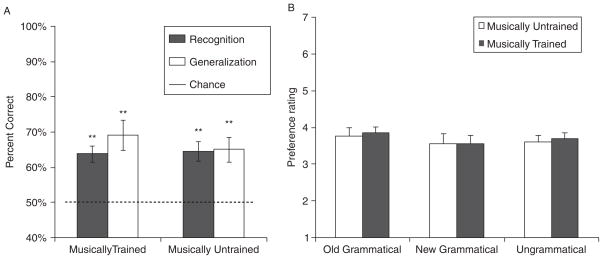

As shown in Figure 4a, the participants in Experiment 3 performed above chance in the recognition, t(23) = 4.97, p < .01, and generalization tests, t(23) = 2.35, p < .05. A comparison between trained participants from Experiment 2 and the untrained participants in the present experiment revealed no effect of music training, F(1, 92) = 1.98, n.s., and no interaction between recognition and generalization abilities and music training, F(1, 92) = 2.53, n.s. Thus, untrained participants appear to perform as well as participants with significant formal music training.
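For readers unfamiliar with the above-chance comparison, the following is a minimal sketch of a one-sample t-test against the 50% chance level of a two-alternative forced choice task. It assumes each participant contributes one proportion-correct score; the function name and the scores are hypothetical and are not the study's data.

```python
import math


def one_sample_t(scores, chance=0.5):
    """Return (t, df) for testing whether the mean of scores differs from chance."""
    n = len(scores)
    mean = sum(scores) / n
    # Unbiased sample variance (divides by n - 1).
    var = sum((s - mean) ** 2 for s in scores) / (n - 1)
    std_err = math.sqrt(var / n)
    return (mean - chance) / std_err, n - 1


# Hypothetical proportions correct for a handful of participants (NOT study data).
proportion_correct = [0.6, 0.7, 0.5, 0.8, 0.65, 0.55]
t_stat, df = one_sample_t(proportion_correct)
```

With 24 participants, such a test has 23 degrees of freedom, which matches the t(23) values reported here.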

FIGURE 4.

(A) Two-alternative forced choice results for musicians (Experiment 2) compared with nonmusicians (Experiment 3). (B) Preference ratings for musicians (Experiment 2) and nonmusicians (Experiment 3).

Preference ratings (shown in Figure 4b) showed no significant differences between Old Grammatical, New Grammatical, and Ungrammatical melodies, F(2, 69) = 0.15, n.s. As in the recognition and generalization tasks, the untrained participants’ ratings were very similar to the trained participants’ preference ratings; a two-way repeated-measures ANOVA on preference ratings with factors of melody type (Old Grammatical, New Grammatical, Ungrammatical) and music training (musician vs. nonmusician) revealed no significant effects of music training, F(1, 92) = 0.39, n.s., and no interaction between music training and melody type, F(1, 92) = 0.03, n.s.

Pre-exposure and post-exposure ratings were both correlated with exposure frequencies (pre-exposure r(22) = .34, p < .052, SE = 0.07; post-exposure r(22) = .49, p < .0075, SE = 0.07). Similar to trained participants in Experiment 2, ratings of the untrained participants in Experiment 3 showed an increase in correlation with exposure after hearing the set of melodies (Figure 5b). When effects of the melody presented to obtain the probe tone ratings were partialled out of the pre-exposure ratings (using the partial-correlation procedure described in Experiment 1), the resulting partial correlation dropped to chance levels, r′(21) = −.03, SE = 0.08, but the same procedure applied to post-exposure ratings still resulted in highly significant partial correlations, r′(21) = .42, SE = 0.08, t(23) = 6.34, p < .0001 (see Figure 5c), suggesting that participants without music training were able to acquire sensitivity to the statistical structure during exposure to the new musical system.

Discussion

Results from untrained participants in the current experiment showed the same pattern as for trained participants. Both groups were able to recognize individual melodies and to generalize their knowledge of the melodies to new melodies composed from the same grammar. Likewise, neither group showed any systematic preferences for familiar or grammatical melodies over ungrammatical melodies. As before, results of the probe tone task demonstrated an acquired sensitivity to the underlying statistical structure of the new musical system. Taken together, the results show that participants based their ratings only on what they learned from exposure to melodies in the new musical system, without relying on prior musical knowledge (i.e., presumably from the Western musical scale). This was true of both trained and untrained groups, suggesting that learning occurred regardless of prior training. In sum, the effects of learning and preference shown here are independent of long-term training and not reliant on knowledge acquired from explicit training in music.

General Discussion

Knowledge of musical rules and structures has been reliably demonstrated in people of different ages, cultures, and levels of music training (Krumhansl et al., 2000; Koelsch et al., 2000; Trainor & Trehub, 1994), but how this knowledge is gained from neural processing of sounds has remained unknown. Some have suggested that our knowledge of the “musical lexicon,” a collection of mental representations that contains knowledge that enables melody recognition, reflects biological modules specialized for music (Peretz & Coltheart, 2003). A related possibility, and one that we investigate in the present studies, is that knowledge of musical structure is implicitly acquired from passive exposure to acoustical and statistical properties of musical sounds in the environment. In three experiments we exposed people to melodies created in a novel musical system and using an unfamiliar tuning system. Thus, we were able to test for learning free of prior exposure. We found that even given limited exposure of 30 minutes, participants could learn many aspects of the new music, including individual melodies (as assessed by recognition tests) and event frequencies of tones (as assessed by probe-tone tests). Importantly, using generalization tests we also showed that participants could extract knowledge of a more abstract nature when they heard a larger number of melodies (in Experiments 2 and 3 as compared to Experiment 1), such that they could distinguish melodies composed in their exposure grammar from melodies composed in an alternate but very similar grammar. Both recognition and generalization results were observed after a single exposure to each melody, suggesting that learning is robust and our two-alternative forced choice methods were sensitive to underlying recognition and generalization abilities. In contrast, preference for the new music increased with repeated exposure to small numbers of melodies, and was limited to the exposure melodies. 
Combining the findings of increased preference without generalization in Experiment 1, and successful generalization without preference change in Experiments 2 and 3, we observe a double dissociation between generalization and preference. The observed dissociation between generalization and preference may suggest that grammar learning and affect involve separate mechanisms; however, it remains to be seen whether further exposure might lead to increased preference for previously unencountered items in the same grammar. In Experiment 3 we extended the findings to untrained individuals, thus demonstrating that the ability to learn a new musical system reflects mechanisms that are not limited to music training.

Results from Experiment 3 parallel those observed from other behavioral tests that evaluate learning and memory of music (Demorest, Morrison, Beken, & Jungbluth, 2008; Halpern, Bartlett, & Dowling, 1995) in observing no significant interaction between musicianship and test performance. The criteria we used in distinguishing musicians from nonmusicians were loose, with all individuals who had had five or more years of music training being allowed to participate as musicians. The trained participants’ reported years of training ranged from 5 to 14, across a variety of instruments. However, further analysis revealed no significant correlation between number of years of music training and measures of learning outcome, suggesting that the ability to learn the new musical system reflects a grammar-learning capability that is relatively independent of music training, and that may be shared with extramusical domains such as language and more general cognitive processing.

The current methods provide a test for learning and memory using a system free of prior exposure and affective associations, thus affording a new approach for investigations of auditory perception and music cognition that avoids the confounding factors of prior knowledge and long-term memory. As an application of artificial grammar learning, the present research converges with recent work investigating language acquisition and implicit learning studies more generally (Newport & Aslin, 2000; Reber, 1989) in providing evidence for a rapid, potentially domain-general learning mechanism in humans. By using an alternative musical system, the present research makes it possible to ask other questions about the nature of constraints on musical structure, by testing the relative ease of acquisition of different musical components such as harmony, melody, and timbre. Ongoing studies aim to tease apart the contributions of bigram frequencies, rule learning, and Gestalt perception to the present learning results. Although the current research may not directly reveal the origins of music or musical universals, it suggests that much of what we know and like about music can be learned.

Acknowledgments

We thank E. Hafter for valuable advice, C. Krumhansl for helpful discussions on the Bohlen-Pierce scale, and T. Wickens for statistical help and the use of laboratory space. We also thank E. Wu, P. Chen, and J. Wang for data collection and comments on this manuscript, and the University of California’s Academic Senate and dissertation research grant for funding this research.

Footnotes

Example melodies generated in Grammar I and Grammar II of the new musical system are available on http://sites.google.com/site/psycheloui/publications/downloads.

Contributor Information

Psyche Loui, Beth Israel Deaconess Medical Center and Harvard Medical School.

David L. Wessel, University of California, Berkeley

Carla L. Hudson Kam, University of California, Berkeley

References

- Altmann GTM, Dienes Z, Goode A. Modality independence of implicitly learned grammatical knowledge. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1995;21:899–912.

- Berlyne DE. Aesthetics and psychobiology. New York: Appleton-Century-Crofts; 1971.

- Bernstein L. The unanswered question. Cambridge, MA: Harvard University Press; 1973.

- Besson M, Faita F. An event-related potential (ERP) study of musical expectancy: Comparison of musicians with nonmusicians. Journal of Experimental Psychology: Human Perception and Performance. 1995;21:1278–1296.

- Bigand E, Poulin B, Tillmann B, Madurell F, D’Adamo DA. Sensory versus cognitive components in harmonic priming. Journal of Experimental Psychology: Human Perception and Performance. 2003;29:159–171. doi: 10.1037//0096-1523.29.1.159.

- Bigand E, Tillmann B, Poulin-Charronnat B, Manderlier D. Repetition priming: Is music special? Quarterly Journal of Experimental Psychology. 2005;58:1347–1375. doi: 10.1080/02724980443000601.

- Castellano MA, Bharucha JJ, Krumhansl CL. Tonal hierarchies in the music of North India. Journal of Experimental Psychology: General. 1984;113:394–412. doi: 10.1037//0096-3445.113.3.394.

- Demany L, Armand F. The perceptual reality of tone chroma in early infancy. Journal of the Acoustical Society of America. 1984;76:57–66. doi: 10.1121/1.391006.

- Demorest SM, Morrison SJ, Beken MN, Jungbluth D. Lost in translation: An enculturation effect in music memory performance. Music Perception. 2008;25:213–223.

- Dienes Z, Longuet-Higgins C. Can musical transformations be implicitly learned? Cognitive Science: A Multidisciplinary Journal. 2004;28:531–558.

- Gomez RL. Variability and detection of invariant structure. Psychological Science. 2002;13:431–437. doi: 10.1111/1467-9280.00476.

- Halpern AR, Bartlett JC, Dowling WJ. Aging and experience in the recognition of musical transpositions. Psychology and Aging. 1995;10:325–342. doi: 10.1037//0882-7974.10.3.325.

- Hannon EE, Trainor LJ. Music acquisition: Effects of enculturation and formal training on development. Trends in Cognitive Sciences. 2007;11:466–472. doi: 10.1016/j.tics.2007.08.008.

- Hauser M, McDermott J. The evolution of the music faculty: A comparative perspective. Nature Neuroscience. 2003;6:663–668. doi: 10.1038/nn1080.

- Hudson Kam CL, Newport EL. Regularizing unpredictable variation: The roles of adult and child learners in language formation and change. Language Learning and Development. 2005;1:151–195.

- Huron D. Sweet anticipation: Music and the psychology of expectation. Cambridge, MA: MIT Press; 2006.

- Justus T, Hutsler JJ. Fundamental issues in the evolutionary psychology of music: Assessing innateness and domain specificity. Music Perception. 2005;23:1–27.

- Kameoka A, Kuriyagawa M. Consonance theory part I: Consonance of dyads. Journal of the Acoustical Society of America. 1969;45:1451–1459. doi: 10.1121/1.1911623.

- Koelsch S. Neural substrates of processing syntax and semantics in music. Current Opinion in Neurobiology. 2005;15:1–6. doi: 10.1016/j.conb.2005.03.005.

- Koelsch S, Gunter TC, Friederici AD, Schröger E. Brain indices of music processing: ‘Non-musicians’ are musical. Journal of Cognitive Neuroscience. 2000;12:520–541. doi: 10.1162/089892900562183.

- Krumhansl CL. General properties of musical pitch systems: Some psychological considerations. In: Sundberg J, editor. Harmony and tonality. Stockholm: Royal Swedish Academy of Music; 1987. pp. 33–52.

- Krumhansl CL. Cognitive foundations of musical pitch. New York: Oxford University Press; 1990.

- Krumhansl CL, Kessler EJ. Tracing the dynamic changes in perceived tonal organization in a spatial representation of musical keys. Psychological Review. 1982;89:334–368.

- Krumhansl CL, Toivanen P, Eerola T, Toiviainen P, Jarvinen T, Louhivuori J. Cross-cultural music cognition: Cognitive methodology applied to North Sami yoiks. Cognition. 2000;76:13–58. doi: 10.1016/s0010-0277(00)00068-8.

- Kuhn G, Dienes Z. Implicit learning of nonlocal musical rules: Implicitly learning more than chunks. Journal of Experimental Psychology: Learning, Memory and Cognition. 2005;31:1417–1432. doi: 10.1037/0278-7393.31.6.1417.

- Lerdahl F, Jackendoff R. Generative theory of tonal music. Cambridge, MA: MIT Press; 1983.

- Loui P, Grent-’t-Jong T, Torpey D, Woldorff M. Effects of attention on the neural processing of harmonic syntax in Western music. Cognitive Brain Research. 2005;25:678–687. doi: 10.1016/j.cogbrainres.2005.08.019.

- Loui P, Wessel D. Harmonic expectation and affect in Western music: Effects of attention and training. Perception and Psychophysics. 2007;69:1084–1092. doi: 10.3758/bf03193946.

- Lynch MP, Eilers RE. A study of perceptual development for musical tuning. Perception and Psychophysics. 1992;52:599–608. doi: 10.3758/bf03211696.

- Mathews MV, Pierce JR, Reeves A, Roberts LA. Theoretical and experimental explorations of the Bohlen-Pierce scale. Journal of the Acoustical Society of America. 1988;84:1214–1222.

- Meyer LB. Emotion and meaning in music. Chicago, IL: University of Chicago Press; 1956.

- Miranda RA, Ullman MT. Double dissociation between rules and memory in music: An event-related potential study. NeuroImage. 2007;38:331–345. doi: 10.1016/j.neuroimage.2007.07.034.

- Narmour E. The analysis and cognition of basic melodic structures: The implication-realization model. Chicago, IL: University of Chicago Press; 1990.

- Newport EL, Aslin RN. Innately constrained learning: Blending old and new approaches to language acquisition. In: Howell SC, Fish SA, Keith-Lucas T, editors. Proceedings of the 24th Annual Boston University Conference on Language Development. Somerville, MA: Cascadilla Press; 2000. pp. 1–21.

- Peretz I, Gaudreau D, Bonnel AM. Exposure effects on music preference and recognition. Memory and Cognition. 1998;26:884–902. doi: 10.3758/bf03201171.

- Peretz I, Coltheart M. Modularity of music processing. Nature Neuroscience. 2003;6:688–691. doi: 10.1038/nn1083.

- Piston W, Devoto M, Jannery A. Harmony. New York: W. W. Norton; 1987.

- Reber A. Implicit learning and tacit knowledge. Journal of Experimental Psychology: General. 1989;118:219–235.

- Saffran JR, Aslin RN, Newport EL. Statistical learning by 8-month old infants. Science. 1996;274:1926–1928. doi: 10.1126/science.274.5294.1926.

- Sethares WA. Tuning timbre spectrum scale. New York: Springer Verlag; 2004.

- Steinbeis N, Koelsch S, Sloboda JA. The role of harmonic expectancy violations in musical emotions: Evidence from subjective, physiological, and neural responses. Journal of Cognitive Neuroscience. 2006;18:1380–1393. doi: 10.1162/jocn.2006.18.8.1380.

- Szpunar KK, Schellenberg EG, Pliner P. Liking and memory for musical stimuli as a function of exposure. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2004;30:370–381. doi: 10.1037/0278-7393.30.2.370.

- Tan SL, Spackman MP, Peaslee CL. The effects of repeated exposure on liking and judgment of intact and patchwork compositions. Music Perception. 2006;23:407–421.

- Tillmann B, McAdams S. Implicit learning of musical timbre sequences: Statistical regularities confronted with acoustical (dis)similarities. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2004;30:1131–1142. doi: 10.1037/0278-7393.30.5.1131.

- Trainor LJ, Trehub SE. Key membership and implied harmony in Western tonal music: Developmental perspectives. Perception and Psychophysics. 1994;56:125–132. doi: 10.3758/bf03213891.

- Zajonc RB. Attitudinal effects of mere exposure. Journal of Personality and Social Psychology. 1968;9:1–27.

- Zicarelli D. An extensible real-time signal processing environment for Max. Proceedings of the International Computer Music Conference; Ann Arbor, MI: University of Michigan Press; 1998. pp. 463–466.