Abstract

Multiple types of users (i.e. patients and care providers) have experiences with the same technologies in health care environments and may have different processes for developing trust in those technologies. The objective of this study was to assess how patients and care providers make decisions about the trustworthiness of mutually used medical technology in an obstetric work system. Using a grounded theory methodology, we conducted semi-structured interviews with 25 patients who had recently given birth and 12 obstetric health care providers to examine the decision-making process for developing trust in technologies used in an obstetric work system. We expected the two user groups to have similar criteria for developing trust in the technologies, though we found patients and physicians differed in processes for developing trust. Trust in care providers, the technologies’ characteristics and how care providers used technology were all related to trust in medical technology for the patient participant group. Trustworthiness of the system and trust in self were related to trust in medical technology for the physician participant group. Our findings show that users with different perspectives of the system have different criteria for developing trust in medical technologies.

INTRODUCTION

Research Question

How is trust in medical technology constructed by patients and care providers in a health care work system?

This study looks at patients as passive users of technologies whose trusting relationships with the technology have the potential to affect the way the technology is used or not used in their care. This research also conceptualizes trust in technology as a concept greater than user performance and behavioral tasks.

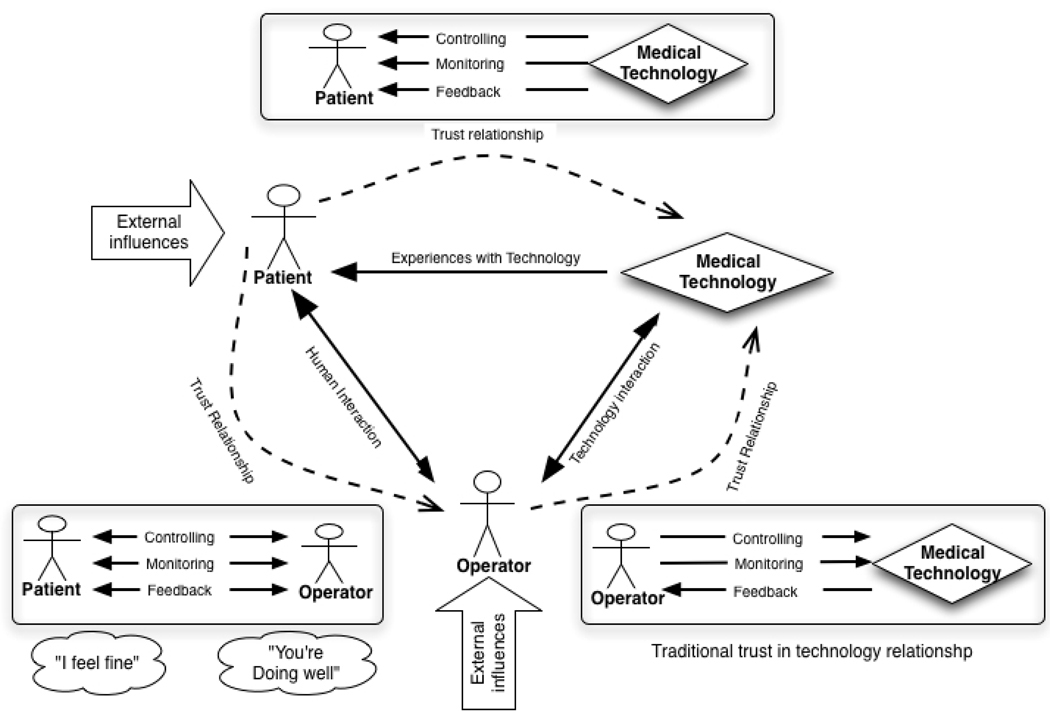

In order to understand health care systems and evaluate quality, it is important that we understand a person’s trust in the technologies used in health care systems. Trust is a fundamental aspect of doctor-patient relationships, and the introduction of technologies into that relationship can enhance or diminish the interpersonal relationship. Understanding trust in medical technologies will provide insight into decision making about which technologies will be accepted or rejected, which work system designs will lead to positive patient outcomes, and which will have the inverse effect. The majority of trust in technology research looks at trust in technology from the standpoint of the operator of the technology, which in a medical setting would be the care provider (physician, nurse or technician) (Parasuraman and Riley 1997, Jian et al. 1998, Parasuraman and Miller 2004). The conceptual model for this research hypothesizes relationships between the care provider and the technology, the care provider and the patient, and the patient and the technology (Figure 1).

Figure 1.

Relationship between patient, technology, and care provider

This model assumes that the patient’s experience with the technology is passive, meaning the machine does things to the patient without feedback from the patient. The machine monitors the patient’s health status, controls the patient’s bodily functions, and presents feedback to the patient. This model also assumes that the patient and the care provider have a reciprocal relationship, with the care provider as a mediator between the patient and the technology allowing the patient to operate the technology through commands to the care provider. For example, the patient can let the physician know if they want more medication from the machine and the physician can control the machine to administer the medication. As indicated in this model, it was expected that patients and physicians would experience technology in different ways, given the different levels of interaction they had with the technology.

Trust

Fundamentally, trust is a feeling of certainty that a person or thing will not fail and is often based on inconclusive evidence. There are several trust relationships, such as a human’s trust in another human (i.e. interpersonal trust), a human’s trust in a system or institution (i.e. social trust), and a human’s trust in a technology or device (i.e. trust in automation). Social trust, which is influenced by factors such as the media and a generalized social confidence in the institution, is defined as trust in an institution. Low social trust is exhibited when certain ethnic groups have low trust in health care as an institution because of historical oppression and deception of their cultural group (Doescher et al. 2000). If an individual patient trusts their doctor because the doctor has been assessed as trustworthy over time, this is interpersonal trust. To trust a technology is to believe that a tool, machine, or equipment will not fail (Sheridan 2002).

Trust has also been defined as an emotional characteristic, where patients have a comforting feeling of faith or dependence in a care provider’s intentions with common dimensions such as competence, compassion, privacy and confidentiality, reliability and dependability, and communication (Pearson and Raeke 2000). These features were also accommodated in the model developed here for patient trust in medical technology. Factors such as competence, compassion, privacy and confidentiality, reliability and dependability, and communication can be supported or ignored through a provider’s use of the technology. Providers can express competence by being able to use technology appropriately. They can express compassion by responding to technology that makes the patient experience uncomfortable, such as the fetal monitor belt or blood pressure cuff being too tight during contractions or the noise of an MRI. Providers can also express respect for privacy and confidentiality by assuring their patients that their records and other information are being kept private. They can express reliability and dependability in the way they respond to the technology. For example, providers can respond to alarms every time they sound or they can decide to only respond sometimes. Communication can affect trust through providers’ explanation of what the technology is doing and how they are using the feedback from the technology to make decisions.

The concept of human trust has been explored in complex automated systems (Dzindolet et al. 2003, Lee and See 2004, Madhavan et al. 2006) and online systems (Friedman et al. 2000, Daignault et al. 2002, Corritore et al. 2003). Key findings from this research include an understanding of the relationship of trust in automation in terms of reliance, compliance, and rejection. Trust in automated systems can lead to appropriate use, disuse, misuse, or abuse of the automation (Parasuraman and Riley 1997), and care providers’ belief in their own abilities is related to their respective trust in the automation (Lee and Moray 1992, Lee and Moray 1994). This study differs from trust in automation research in that it looks at the formation of trust in medical technology from the perspectives of multiple user groups (patients and care providers) that simultaneously use medical technology in separate and different ways.

Trust and health behavior

Trust literature in health care work systems has focused on interpersonal trust between patients and physicians (Anderson and Dedrick 1990, Thom et al. 1999, Pearson and Raeke 2000, Hall et al. 2002, Franks et al. 2005, Tarn et al. 2005, Arora and Gustafson 2009) and patients’ trust in health systems (Zheng et al. 2002, Balkrishnan et al. 2004). Several scales have been developed to measure patients’ trust in their care provider. The Trust in Physician Scale was validated by Thom et al. (1999); the researchers administered the scale to 414 patients and found high internal consistency and test-retest reliability (Thom et al. 1999). They concluded that the Trust in Physician Scale is a desirable psychometric instrument because it displays construct and predictive validity. They also concluded that the instrument’s metrics are distinct from patient satisfaction (Thom et al. 1999).

Patients’ trust has been linked to important organizational and economic factors, such as a decreased likelihood of a patient leaving a care provider’s practice or withdrawing from a health plan (Pearson and Raeke 2000). Studies have also linked physicians' comprehensive knowledge of patients and patients' trust in physicians to outcomes such as adherence and satisfaction (Safran et al. 1998a).

This research explores patients’ and care providers’ trust in medical technologies they use simultaneously. The conceptual model for this research argues that patient-provider trust relationships mediate how trust is developed in medical technologies and influence whether and how the technology is used in care-providing activities. To date there is little knowledge of the effects of trust or distrust in medical technology, by either patients or providers, on patient-physician interpersonal relationships.

Obstetrics as a domain

The care-providing process in obstetrics is currently characterized by steadily increasing physician usage of and reliance on technology (Davis-Floyd 1993, Davis-Floyd 1994, Klein et al. 2006). In parallel, the malpractice crisis is affecting obstetricians more than other health care systems, thus causing obstetricians to either limit the care they provide or leave the field altogether. The electronic fetal monitor is an example of automation technology and has been a key technology with regard to malpractice litigation in obstetrics (Cartwright 1998, Lent 1999, McCartney 2002). The fetal heart monitor measures fetal heartbeats during a mother’s labor to detect complications or abnormalities. In the past, a nurse or physician accomplished this monitoring manually with a fetal stethoscope. In recent years, a continuous electronic fetal heart-monitoring machine has replaced the manual method as a component of required standard care. The new automation allows for continuous monitoring and nurses’ monitoring of multiple patients (Cartwright 1998). This technology, like others, is subject to trust or distrust by the care provider. However, it is unclear what occurs when the patient trusts or distrusts a technology: will the patient influence the care provider to trust the technology more or less to match her own level of trust? Understanding the relationship between patients, care providers, and technologies is necessary for understanding health system issues such as malpractice and medical error. Understanding the definition of trust in medical technology is a necessary first step for understanding health care systems and technological interventions in those systems.
Characterizing trust in medical technology as it is constructed by members of the obstetric work system will serve as a foundation for theories about the role of patients in health care systems and will be a particularly important construct to understand for new technologies that depend on shared use of the same technology by patients and care providers, such as electronic personal health records.

Researchers have explored patient-provider trust relationships, but gaps in our collective knowledge of the antecedents, facets, and outcomes of this construct continue to exist. Understanding trust in relation to the sociotechnical work system may provide insight into patient-provider trust. This research is novel in that few studies have explored patients’ trust in technology (Timmons et al. 2008) and fewer still have explored obstetric patients. Obstetrics is a particularly important health domain to study because of its widespread impact; approximately 4.3 million women gave birth in the US in 2007 and over 99% of that population gave birth in a hospital (Martin et al. 2006). Additionally, fetal monitors are an ideal place to start exploring patients’ trust in medical technology because they are an important area of study in themselves and there is little available data in the patient decision-making and work system literature regarding this technology. Obstetrics has one of the highest incidences of patient-initiated malpractice cases, causing many obstetricians to limit the care they provide or leave the field (Rosenblatt et al. 1990, Bernstein 2005), and some studies argue that patient attitudes about the fetal monitor are an important variable in this phenomenon. Patients in obstetrics have been studied in terms of their requests for technologies and rejection of technologies that are standards of care (Nerum et al. 2006, Young 2006). Understanding the role of patient trust in technology in obstetrics may provide insight into patient requests for sometimes risky and unnecessary technologies and rejection of technologies that are necessary for providing safe care.

The purpose of this study was to understand patients’ and physicians’ trusting relationships with technology in obstetric work systems using qualitative research methods. The results are a model that describes how participants constructed a trusting relationship with the technology in the system.

METHODS

Qualitative research methods are well suited to uncovering meaning people assign to their experiences (Hignett 2003). To uncover participants’ understanding of their experiences with medical technology the methods used in this study involved a grounded theory analysis that included (Glaser and Strauss 1967, Charmaz 2006):

Developing codes, categories and themes inductively rather than imposing predetermined classifications on the data (Glaser and Strauss 1967).

Generating working hypotheses or assertions (Erickson 1985) from the data.

Analyzing narratives of participants’ experiences with medical technology (Polkinghorne 1991).

Grounded Theory

A grounded theory approach investigates actions, processes and interactions between people and artifacts to develop theories about these interactions in the field (Glaser and Strauss 1967, Charmaz 2006). Grounded theory has been successfully applied to studies of health systems and interactions with technologies (Charmaz 1990, Thom and Campbell 1997, Winkelman et al. 2005, Levy 2006). Grounded theory is the most appropriate approach in studies where little is known about the topic because it allows for the generation of new hypotheses that can be tested in future studies rather than forcing existing theories onto data and environments where they may be incongruous. The difference between grounded theory and phenomenological qualitative methods is that grounded theory moves the analysis beyond a description of the experience to generate a theory (Creswell 2007). The focus of grounded theory is unraveling the elements of an experience. From the examination of the components of the experience and their relationships, a theory is developed that provides insights into the “nature and meaning of an experience for a particular group of people in a particular setting” (Moustakas 1994). In this study we explore the nature and meaning of trusting technology for patients and care providers in an obstetric work system. A key feature of grounded theory is the notion of constant comparison, which compares the data inductively, creates codes and then groups them into categories to ascertain importance. Data are then compared to other pieces of data with similar categories. As the pieces are compared, additional categories and relationships emerge; categories are defined, described, and become the building blocks for the grounded theory (Schwandt 2007).

Participants

A maximum variation sampling framework was used in the theoretical sampling tradition. In this framework participants were included not for their representativeness but for their relevance to the research question (Patton 2001). Participants represented two major groups of obstetric system users: patients and physicians. Twenty-five new mothers represented the patient group. All mothers had given birth in a hospital and were between the ages of 19 and 35. Seventeen participants self-identified as White or Caucasian, five as Black or African-American, one as Asian, and one as Hispanic. The participants’ number of children ranged from one to four: 12 mothers had one child, nine had two children, four had three children, and two had four children. All names have been changed to respect participant privacy. Twelve obstetric clinicians participated in interviews to represent the provider group.

Procedure

Field data acquisition

Participants were recruited in a large southeastern rural area in the United States through an obstetric resident who facilitated data collection. Interviews were conducted because recruiting participants for focus group sessions was not feasible. Participants were informed about the study by a physician stakeholder at a hospital in Southwestern Virginia or volunteered directly in response to advertisements for the study. After participants gave informed consent, interviews took place in the patient’s room, a private space, or by telephone. Once verbal consent was received (due to HIPAA regulations), participants were reminded that the interview would be audio-recorded and that confidentiality would be maintained. Participants from the provider group were informed about the study by an obstetric resident and invited to participate. If they agreed to participate, interviews were scheduled by telephone.

Ethical Issues

Hospital and university ethics committees approved the protocol. The purpose of the study was explained both verbally and in writing to each potential participant, consent was requested, and each participant was informed of their right not to participate. Participants were all 18 years of age or older, so that consent could be provided legally. Confidentiality between the participants and the researcher was assured. Additionally, any identifying information from patients was withheld from the researcher, allowing informed consent to be provided verbally.

Apparatus

Questions for the semi-structured interview were related to understanding how participants experienced the technology and how that experience was related to attitudes of trust or distrust (see Table 1 and Table 2).

Table 1.

Example Interview Questions and Related Constructs

| Construct of Interest | No. | Question |
|---|---|---|
| User Experience | 1. | What kinds of medical technology were used? |
| | 2. | What did you notice about the technology and equipment that was used? (What did you see, hear, or feel) |
| | 3. | How did you feel about the stimulation from the technology? |
| Technological Trust | 4. | What made you feel that you could trust or not trust the medical equipment to perform effectively? |
| | 5. | What could be changed to help you trust the technology more? What would make you trust the technology less? |

Table 2.

The Relationship Between Interview Questions and Research Questions

| Research Question | Interview Question |
|---|---|
| How do patients and care providers experience medical technology? | Q1, Q2, Q3 |
| How do patients and care providers come to trust or distrust technology? | Q4, Q5 |

Data collection and analysis

Evidentiary adequacy is a concern for establishing rigor in qualitative research, specifically the extensiveness of the body of evidence used as data and the adequacy of time spent in the field (Erickson 1985, Morrow 2005). The data consisted of over 37 hours of recorded interviews, field notes, and demographic questionnaires. Verbatim transcripts were created for each interview. Memos about salient themes and observations were recorded during and after each interview by either the interviewer or a member of the research team. Thirty-seven verbatim transcripts, 370 pages total, resulted from these sampling strategies to provide the data for the study.

The analytical process was based on engagement with participants and immersion in the data, which were repeatedly sorted, coded, and compared using the constant comparative method (Muhr 2004, Morrow 2005). In accordance with grounded theory (Glaser and Strauss 1967), analysis began as the data were collected, and the data were reanalyzed once saturation had occurred (that is, once no new themes arose). At the conclusion of analysis, categories had been identified and linked into a preliminary framework (Levy 2006).

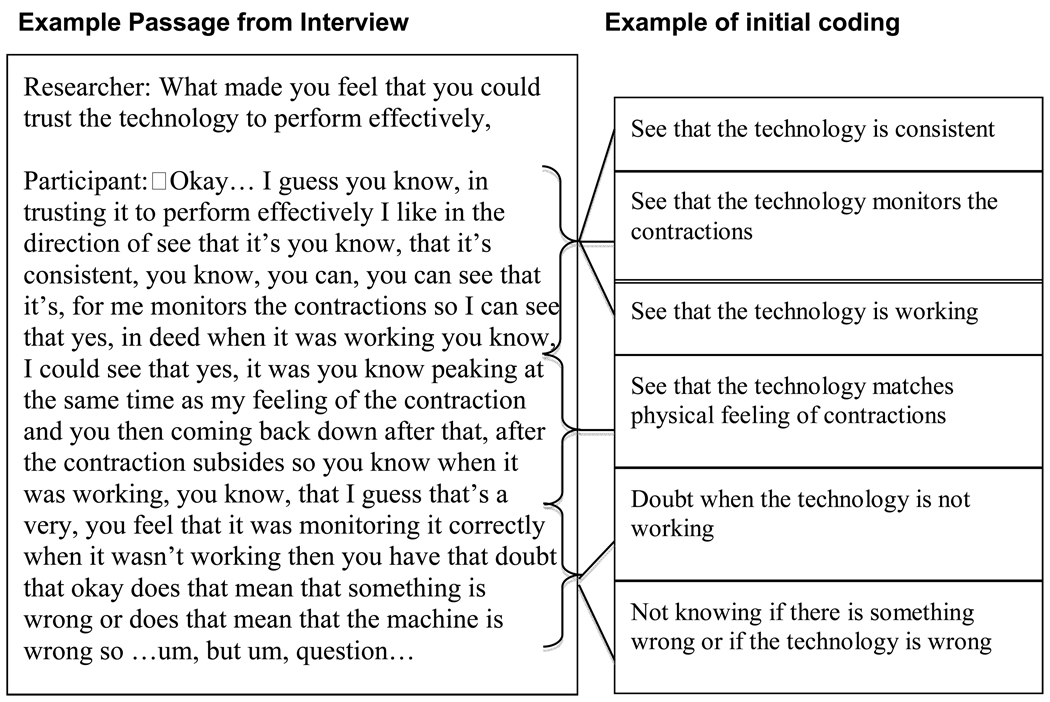

Each line of every transcript was coded in an initial open coding framework using Atlas.ti software (Muhr 2004, Charmaz 2006). Open codes were collected in vivo, that is, in the participants’ own words (see Figure 2). During the open coding process, theoretical, methodological, self-reflective, and analytical memos were written by members of the research team to bring meaning to the data. Theoretical memos consisted of explanations of the data using theoretical or working hypotheses or comparisons of the data to existing theories. Examples of working hypotheses included notions such as “patients’ trust in medical technology is an extension of existing theories such as technology acceptance” (Davis 1989) or “patients’ trust in medical technology is trust in care provider.” Methodological memos consisted of documentation of the methods used to categorize (code) and sort the data. This documentation included definitions of codes and categories and rules for inclusion or exclusion. Self-reflective memos consisted of the researchers’ own reactions to participant accounts. Analytical memos consisted of thoughts, questions, reflections, and speculations about the data. Three researchers were involved in data collection, coding, and analysis activities.

Figure 2.

Example of coding

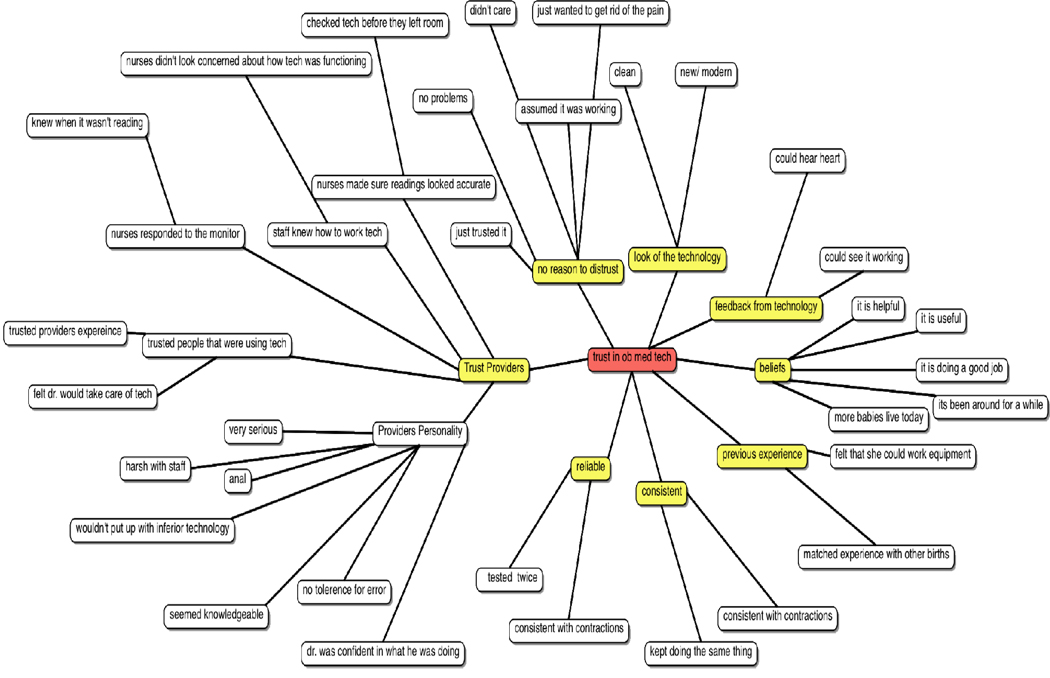

Open codes were grouped with similar codes to form code families or focused codes (see Figure 3). These codes were systematically compared and contrasted to create progressively more complex and comprehensive categories. These codes were described as themes and reassembled using axial coding procedures. Axial coding treats a grouping of codes as an axis around which the analyst describes relationships and specifies the dimensions of the category (Charmaz 2006).

Figure 3.

Example of code to category formation.

The purpose of axial coding was to reassemble the data after they had been fractured by line-by-line coding (Charmaz 2006). Data were incorporated from memos and other portions of the study, including demographic questionnaires and accounts of user experience, to form theory.

Accountability to the research process and generation of grounded theory was achieved through maintaining an audit trail that outlined the research process and the evolution of codes, categories, and theory (Miles and Huberman 1994, Wolf 2003). The audit trail consisted of chronological narrative entries of research activities, including pre-entry conceptualizations, entry into the field, interviews, transcription, initial coding, analytical activities, and the evolution of the trust in medical technology model. The audit trail also included a complete list of the 169 in vivo codes for trust in medical technology that formed the basis of analysis.

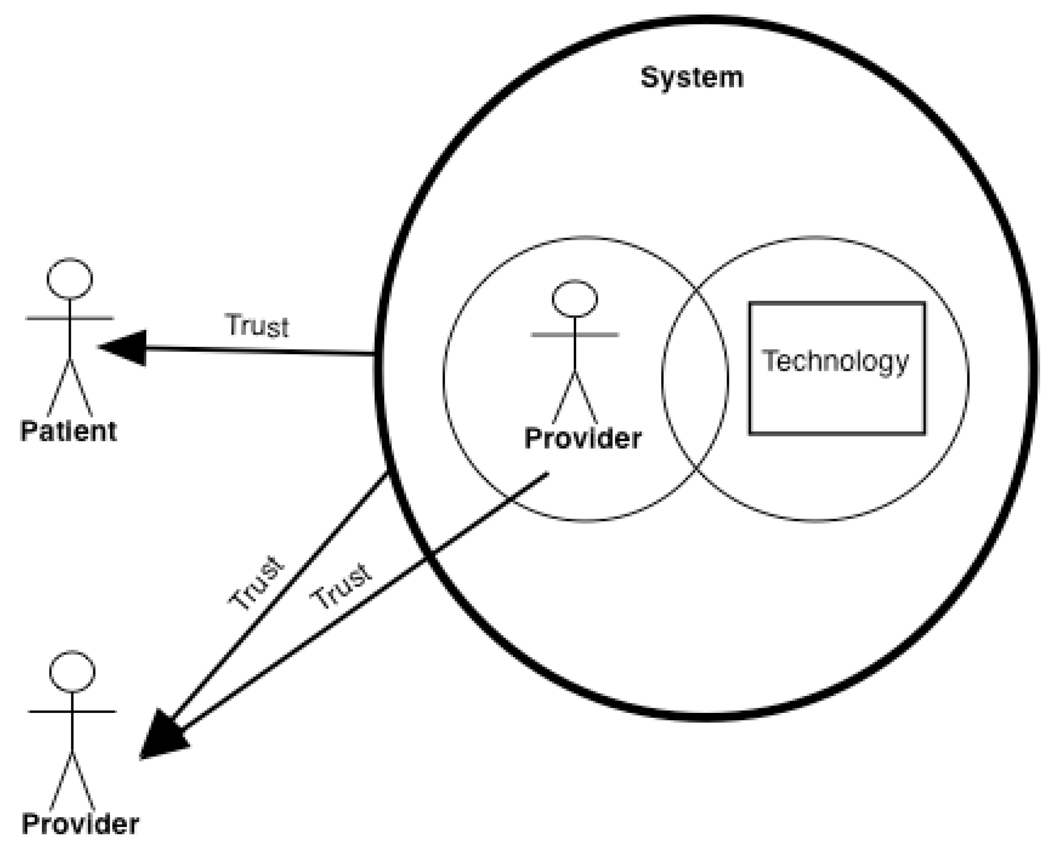

RESULTS

The grounded theory model for trust in medical technology in obstetric work systems that emerged from the current study is presented in Figure 4. The first section describes patients’ user experience with the technology. The second section describes the model of patients’ trust relationship with technology, and the third section describes a model for physicians’ trust relationship with technology.

Figure 4.

Model of patient and provider trust in medical technology

Patient’s Trust in Technology

Patients develop trust in medical technology by combining the technology and those who use the technology into a system and then evaluating the system to determine whether the technology is trustworthy or not. Major components of the system are trust in care providers, how providers use the technology, and characteristics of the technology (see Figure 5). Patients have direct relationships with the technology and the providers in the system, but it is how these two components interact that is a key component of trust formation. Mediating the decision to trust the system or not is a dynamic comparison of a person’s mental model of how the system should function and one’s personal beliefs. In the absence of mismatches, patients have “no reason to distrust the technology.” A description of the key themes that formulate this relationship follows.

Figure 5.

Patient's Trust in Medical Technology

Trust in health-care providers

Trusting care providers was the largest and most salient component of the trust in technology coding scheme, as assessed by having the greatest number of codes across participants and the highest frequency of codes in individual interviews. Participants expressed trust in providers as a component of trusting technology in a variety of ways. Trusting providers includes trusting that the provider is competent, capable, and knowledgeable enough to use the technology. “Amanda” described the doctor’s personality as a basis for developing trust in the technology by saying, “It wasn’t so much the equipment, it was the physician. Like, he was kind of a jerk, and he just wouldn’t put up with anything inferior.” She describes trust in care providers in terms of providers exhibiting behaviors worthy of trust. In her mind, her physician was a perfectionist who could not be bothered with bedside manner, which made him appear more competent as a physician. Specifically, she said “I didn’t trust him to give me *laugh* um good advice like how to deal with the pregnancy or give me emotional support *laugh* or anything like that. But I trusted him to cut me open and take the baby out and sew me back up.” In a similar example, “Sarah” described the physician’s behavior as influencing her trust in the technology; she said it was “the doctor’s confidence in what he was doing,” and that “he seemed very knowledgeable about the technology.” In another case, “Tiffany” “just kind of trusted the people that were using [the technology], that [they] were in charge of it.” Tiffany trusted the providers to do their jobs and believed that part of their job was to be able to use the technology.

Technology characteristics

The technology characteristics that were related to patients’ trust in medical technology were categorized as a) the look of the technology, b) perceived reliability, c) consistency, and d) feedback from the technology. The look of the technology was described as the technology being perceived as modern or high-tech. “Amy” described her trust in the technology by saying “everything just looked so pristine, and new and modern and that just makes you feel like, okay, this is not going to break down on me and kill me.” “Maria” also expressed her trust by saying “it appeared to be new, it was clean.” Reliability was experienced by only a few mothers because of the nature of their pregnancies. “Kelly” had twins, and because she had “things tested twice,” with each baby, she felt trust towards the technology. Some mothers felt trust because the technology was consistent in its functioning and with their own bodily experience. “Jennifer” described consistency by saying,

In trusting it to perform effectively I like to see that it’s… consistent… you can see that it’s, for me monitors the contractions so I can see that yes, indeed when it was working… I could see that yes, it was you know peaking at the same time as my feeling of the contraction and you then coming back down after that, after the contraction subsides so you know when it was working, you know, that I guess that’s a very, you feel that it was monitoring it correctly. When it wasn’t working then you have that doubt that okay does that mean that something is wrong or does that mean that the machine is wrong.

“Nicole” expressed consistency by saying “from when they first hooked it up, it kept doing the same thing all day and so I felt like it was working.”

Feedback from the technology was defined as obtaining any feedback from the technology as a factor in making the patient trust it. Specifically, it was defined as trusting the technology because the user can hear, see, or feel it doing something. “Rachel” described feedback from the technology by saying, “…I saw the times (on the monitors) when I was having a contraction…so that in itself made me know it (the monitor) is doing its job…I heard my son’s heartbeat so I knew it was working…” Specifically, hearing the baby’s heartbeat was a dominant theme in describing trust originating from feedback from the technology.

Trust in how providers use technology

The intersection between trusting providers and trusting the technology relates to how the providers use the technology. This theme was developed from the categories a) realizing the providers were not using the technology correctly and b) not trusting how the technology is used. “Jennifer” described her experience with observing how providers used the technology by saying,

I felt like it wasn’t necessarily the fetal monitors that were the problem but it was the staff’s use of the fetal monitors or mishandling of or not knowing the best ways to, to uh get the machine to work, to give them the data that they needed. It was hit or miss whether it was monitoring correctly, like at first it seemed like okay is the machine working okay but then you know with all its alarms going off but then you, more and more realize that it wasn’t necessarily the machine but the fact that it wasn’t… being used to its best ability.

In Jennifer’s experience, the providers’ mishandling of the technology contributed to her low trust in both the technology and the providers. “Heather” said, “I guess when it comes down to it, I trust the technology but I don’t necessarily trust how it’s used. So if I trusted that it was being used for my best interest, then I would be more okay with it being used.” “Lindsay” similarly expressed a feeling that the technology was being used inappropriately when she said “I just think that our culture hasn’t done a good job of saying that the technology is there if you need it, rather than the technology is there, let’s use it.”

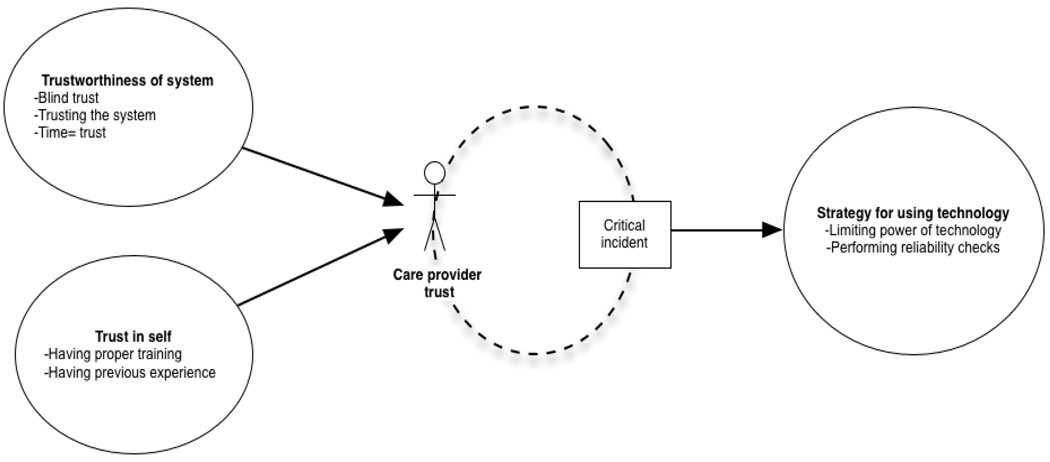

Provider Trust in Technology

In the providers’ trust in technology model, trust is made of the trustworthiness of the system and the providers’ trust in their own abilities to use the technology. This trust level is then dynamically mediated by the presence of critical incidents or outcomes. The result of this process is the formation of strategies that determine how much power the technology will have in the care providing process. The themes that make up this model are described below.

Trustworthiness of the system

The trustworthiness of the system is made of the themes a) generally not questioning trustworthiness or blind trust; b) assuming other members of the system have evaluated technology’s trustworthiness; and c) time equals trust. Blind trust is experiencing trust in the technology as a part of the total system and not questioning its trustworthiness. This was expressed by statements such as “as a whole I trust it” (Mark), “I go on the trusting side” (Janet) and “I don’t doubt it’s functioning” (Peter). Assuming that other members of the system evaluated the technology for trustworthiness was the notion that there are checks and balances in the system to determine when technologies should be used and when they should not. This includes beliefs that technicians and other personnel regularly check the system to make sure it is working and it is the provider’s role to trust that those entities are doing their jobs. This theme was expressed with statements such as “most medical technology is based on research” (Brenda). Another physician stated that they trusted the technology because “I imagine the equipment is calibrated and tested” (James). Another physician stated that the hospital had “policies in place for checking equipment,” (Dorothy). Time equals trust is the notion that the trust in the technology is based on the fact that the system has used the technology for a long time. This theme calls for trust in the status quo, by saying that this is the technology the medical world, this hospital, or the doctor has always used and therefore the individual has no stature to question its trustworthiness.

Trust in Self

Trust in self is the notion that a provider must trust their own ability to use the technology. This theme is made of two categories: a) having proper training with the technology and b) having previous experience with the technology. Having proper training with the technology was expressed as a factor in the trustworthiness of the technology through Frederick’s statement:

I think it has to do with if you are comfortable working in an environment where you have worked with the equipment in the past, and if not, then you have the proper orientation to using the equipment and the different buttons on it, the different alarms. And just kind of, I feel it essential being comfortable using the technology before D-day where we use it in the room and with the patient.

Critical incident

A critical incident is an event that has a significant effect, either positive or negative, on task performance or user satisfaction (Flanagan 1954). Critical incidents were described as mediating moments when the technologies failed to produce the expected results. These were limited to moments when the user realized the technology was not accurate or saw that the technology’s predictions did not match the outcomes. These critical incidents could possibly be expanded to include moments where technology breaks or causes harm as well. The critical incidents adjusted the providers’ trust levels and led them to create strategies that allow the technology less power in decision-making.

Strategy for decision-making

Providers discussed two decision-making strategies they used to accommodate their level of trust in the technology: a) allowing the technology limited power and b) performing reliability checks. Together, these strategies represented the most grounded theme (highest number of codes) in providers’ discussions of their trust in the technology. Limiting the power of the technology is a strategy that prevents full reliance on the technology for decision-making. This strategy included viewing the technology as “only part of the picture, not the end all” (Mary). “Henry” discussed this strategy by saying, “You have to look at the whole clinical picture and there are so many variables that play into it and the technology is a good part of that but I wouldn’t make a decision based only on the technology.” Reliability checks involved looking at the technology’s output in context and checking those findings against other factors the provider could observe. “John” described this by saying,

We have to be in the room to be able to tell what is going on… sometimes it looks like the baby’s heart rate is dropping but what actually is happening is the baby moved and now you are picking up the mom’s heartbeat, so you have to be in the room in order to tell what is going on.

DISCUSSION

Recent healthcare literature has explored the role of patients’ trust in physicians in patient behaviors such as adhering to medical advice, malpractice litigation (Thom et al. 2002, Boehm 2003) and seeking healthcare services (Pearson and Raeke 2000, Piette et al. 2005). Some researchers believe that changes in healthcare practices are undermining the trust relationship between patients and physicians (Pearson and Raeke 2000), particularly that technology replaces human elements in medical practice and therefore reduces patients’ trust in physicians (Boehm 2003). The findings of this research show that technology may redefine the trust relationship between patients and providers, along with the expectations each group holds, and that it operates as part of a larger work system.

Boehm (2003) defines trust-gaining behaviors for physicians as communication, revealing emotions, building relationships over time, and self-disclosure. Boehm (2003) argues that increasing interpersonal trust between patients and physicians is a solution to growing malpractice claims. Results of this research show that patient trust in physician can also be defined behaviorally as perceptions of a physician’s behavior with the system.

There is a distinction between social trust and interpersonal trust; interpersonal trust is trust developed over periods of interaction in which “trustworthy” behavior can be assessed, while social trust is trust in an organization (Pearson and Raeke 2000, Falcone and Castelfranchi 2001). Patients’ trust in physicians has been linked to behavioral outcomes such as adhering to medical advice and treatment (Thom et al. 1999), patient satisfaction (Safran et al. 1998b), health status (Safran et al. 1998b), and organizational and economic factors such as decreases in the possibility of a patient leaving a care provider’s practice and withdrawing from a health plan (Kao et al. 1998). Despite theoretical assumptions, only one study has actually examined the relationship of patient trust to these outcomes, and it was not able to establish a causal relationship (Safran et al. 1998a). The findings of this research suggest that the design of the technology and the integration of the technology within well-functioning systems may be related to patient trust in the provider, which is related to patient outcomes such as patient satisfaction and adherence to medical advice and treatment. Designing technologies and systems that allow for appropriate integration of humans and technologies has produced positive system outcomes in many work systems (Hendrick and Kleiner 2001).

A study of interpersonal relationships between patients and physicians found that patients prefer physicians with technical characteristics as opposed to interpersonal qualities (Fung et al. 2005). The current study shows that providers’ use of and competency with technology is a factor in patients’ trust in physicians. It also shows that the characteristics of the technology are only part of the equation for a patient’s trust in technology. Future research is needed to quantify the effects of patients’ trust in technology. Anecdotally, the findings of this research show that patient distrust leads to more time spent negotiating use of technology between patients and providers and more interruptions in system processes. Such interruptions in care have been found to be a source of human and system inefficiency and errors (Flynn et al. 1999, Chisholm and Collison 2008).

Researchers have investigated the relationship between physician styles and patient outcomes, and between patient personality traits and outcomes. An observational study examined patients’ perceptions of their physician’s interpersonal manner through ratings such as satisfaction, trust, knowledge of patient, and autonomy support (Franks et al. 2005). The researchers found a relationship between patients’ perceptions of their physician and health status decline, but believe this relationship is not a physician effect. Rather, they believe it results from another confounding variable (Franks et al. 2005). This indicates a need for more dynamic measures of physician traits. While some interpersonal trust studies recommend assessing psychological and personality traits to understand trust formation, attitudes towards technology would also be an insightful addition. Additionally, because the findings of this study allude to flexible mental model processing (Craik 1943), performance measures of physicians’ actions with the technology may also provide insight into the relationship between patients and providers.

Pearson and Raeke’s review paper sought to find the points of trust relationships in healthcare that are strong and to find the “emerging points of weakness that threaten health outcomes” (Pearson and Raeke 2000, p. 509). The results of this study showed that the interaction between providers and technology is a point that influences patient trust in technology and is therefore a point that can threaten health outcomes. Moments where providers are unable to use technology appropriately make patients vulnerable to errors and unnecessary interventions.

Our purposive sample and qualitative methodological framework do not allow us to generalize our results to the population of all patients and physicians. There are a number of reasons why our findings might be different among different groups of patients. First, we focused on mothers’ experiences during childbirth; patients with other conditions might have different expectations or experiences with medical technologies and care providers. The population of patients in this study was women who chose to give birth in a hospital setting; women who choose to give birth in a non-medical setting may perceive medical technology more negatively. The population of care providers in this study was obstetricians, who have specific experiences with specific technologies.

CONCLUSION

The findings from this research suggest patients trust what they perceive to be a well-functioning system (see Figure 4). This means that the trustworthiness of the technology cannot be separated from the work system in which it operates. Well-functioning systems are those in which users and technology work together confidently and efficiently.

A key characteristic of the research findings is the use of flexible mental models to form judgments about trustworthiness (Craik 1943). For both patients and physicians, trust is not static; the system’s functioning is compared against the user’s mental model of how that system should function. For patients, this model may be loosely defined as the absence of any signs of dysfunction. Participants described this as the absence of “uh-oh moments.” This can also be combined with beliefs about how the technology should be used, such as believing that a person does not need to use certain technologies unless there is a clinical reason for doing so. For physicians, the assessment of trust changes in relation to outcomes and to whether the technology’s feedback matches their clinical assessment of what is occurring with the patient.

These findings may resonate more clearly when conceived in terms of another system with active and passive users, such as commercial aviation. When a person decides to take an airplane for travel, their trust in the airplane is a function of the system as a whole and how the users in that system interact with the technology. A pilot commenting over the intercom that they do not understand what the red blinking light on the dashboard means will instill distrust in the passenger. Condensation forming on the ceiling or an unusual noise will be perceived as a surrogate for quality. Alternatively, a flight attendant confidently demonstrating how to use the airplane’s devices in emergency situations will instill trust in the system.

Similarly, the pilot must trust that the work system of which she is a part is well functioning. Pilots must trust that the manufacturers of the plane and its parts have checked the plane’s design for quality. They must also trust that the co-pilot, maintenance personnel, and air traffic controllers are all appropriately trained and have done their jobs effectively. They also have to have a level of trust in themselves; they must assess whether they have sufficient experience with a particular type of plane and whether they are alert enough to complete the flight. In short, do they feel confident in their abilities to interact with this technology to produce safe and efficient outcomes? The difference between these two systems is that the aviation industry has years of research and intervention in optimizing human-technology interaction and integration. However, even in this industry, overuse or misuse of automation occurs (Boehm-Davis et al. 2007).

Therefore, technologies must be well designed and usable, but other factors are important for trust to occur and practical guidelines emerge:

Care providers must be well trained in how to use the technology and must understand the “total system” concept, in which they have a key role. They must trust that they are prepared to interact with the technology in the system. This includes making sure care providers’ capabilities and limitations are well considered in system design, which is also the purview of designers.

Patients must have indications (i.e. feedback) that the system is functioning well. This includes an absence of moments creating uncertainty, such as false alarms or providers displaying usability issues with the technology.

Technologies must be designed for the factors of trustworthiness such as accuracy, reliability and consistency; what is usable for providers may also be usable for patients. It should be recognized that surrogate metrics for reliability such as modern-ness and cleanliness exist as well.

Patients will benefit from education and information about the technology and its use. When asked about education and the technology, most patients said that they were given little or no information about how the technology worked or would be used on them. The hospital tour showed them what technologies might be used, but this information was often brief and not necessarily useful.

Patients see themselves as the products of these work systems and perhaps outside stakeholders, rather than members of the system. While patients have relationships with both providers and the technologies, it is how all entities work together that influences trust in the technology. For providers, trust in the technology is essentially rooted in their trust in the system, which includes themselves.

Figure 6.

Care Provider’s Trust in Medical Technology

ACKNOWLEDGEMENTS

This work was partially supported by the Francis Research Fellowship and by grant UL1RR025011 from the Clinical and Translational Science Award (CTSA) program of the National Center for Research Resources, National Institutes of Health. The authors would also like to thank undergraduate researchers Sarah Brickel and Sahar Dagar for assistance in data collection and Dr. Pascale Carayon and two anonymous reviewers for feedback on earlier versions of this manuscript. The University of Wisconsin-Madison Systems Engineering Initiative for Patient Safety (SEIPS) provided support on this project.

Contributor Information

Enid N. H. Montague, Email: emontague@wisc.edu, University of Wisconsin-Madison, Industrial and Systems Engineering, 3270 Mechanical Engineering, 1513 University Avenue, Madison, 53706 United States.

Dr Woodrow W. Winchester, III, Virginia Polytechnic Institute and State University, Industrial and Systems Engineering, Grado Department of Industrial and Systems Engineering (0118), 250 Durham Hall, Blacksburg, 24061 United States.

Dr Brian M. Kleiner, Virginia Polytechnic Institute and State University, Industrial and Systems Engineering, Grado Department of Industrial and Systems Engineering (0118), 250 Durham Hall, Blacksburg, 24061 United States.

REFERENCES

- Anderson LA, Dedrick RF. Development of the trust in physician scale: A measure to assess interpersonal trust in patient-physician relationships. Psychol Rep. 1990;67(3.2):1091–1100. doi: 10.2466/pr0.1990.67.3f.1091.

- Arora N, Gustafson D. Perceived helpfulness of physicians’ communication behavior and breast cancer patients’ level of trust over time. Journal of General Internal Medicine. 2009;24(2):252–255. doi: 10.1007/s11606-008-0880-x. Available from: http://dx.doi.org/10.1007/s11606-008-0880-x.

- Balkrishnan R, Hall MA, Blackwelder S, Bradley D. Trust in insurers and access to physician: Associated enrollee behaviors and changes over time. Health Services Research. 2004;39(4). doi: 10.1111/j.1475-6773.2004.00259.x.

- Bernstein PS. Battling the obstetric malpractice crisis: Improving patient safety, part 1. Medscape Ob/Gyn Women's Health. 2005;10(2).

- Boehm FH. Building trust. Family Practice News. 2003;33(15) 12(1).

- Boehm-Davis D, Casali JG, Kleiner BM, Lancaster J, Saleem JJ, Wochinger K. Pilot performance, strategy and workload while executing approaches at steep angles and with lower landing minima. Human Factors: The Journal of the Human Factors and Ergonomics Society. 2007;49(5):759–772. doi: 10.1518/001872007X230145.

- Cartwright E. The logic of heartbeats: Electronic fetal monitoring and biomedically constructed birth. In: Davis-Floyd R, Dumit J, editors. Cyborg babies: From techno-sex to techno-tots. New York: Routledge; 1998.

- Charmaz K. 'Discovering' chronic illness: Using grounded theory. Soc Sci Med. 1990;30(11):1161–1172. doi: 10.1016/0277-9536(90)90256-r.

- Charmaz K. Constructing grounded theory. 2006.

- Chisholm CD, Collison EK. Emergency department workplace interruptions: Are emergency physicians "interrupt-driven" and "multitasking"? Academic Emergency Medicine. 2008;7(11):1239-124. doi: 10.1111/j.1553-2712.2000.tb00469.x. Available from: http://dx.doi.org/10.1111/j.1553-2712.2000.tb00469.

- Corritore CL, Kracher B, Wiedenbeck S. On-line trust: Concepts, evolving themes, a model. International Journal of Human-Computer Studies. 2003;58:737–758.

- Craik K. The nature of explanation. Cambridge: Cambridge University Press; 1943.

- Creswell JW. Qualitative inquiry and research design. 2nd ed. Thousand Oaks, Calif: SAGE; 2007.

- Daignault M, Shepherd M, Marche S, Watters C. Enabling trust online. Proceedings of the 3rd International Symposium on Electronic Commerce (ISEC’02). 2002.

- Davis FD. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly. 1989;13(3):319–340. Available from: http://www.jstor.org/stable/249008.

- Davis-Floyd R. Culture and birth: The technocratic imperative. International Journal of Childbirth Education. 1994;9(2):6–7.

- Davis-Floyd RE. The technocratic model of birth. In: Hollis ST, Pershing L, Young MJ, editors. Feminist theory in the study of folklore. University of Illinois Press; 1993. pp. 297–326.

- Doescher MP, Saver BG, Franks P, Fiscella K. Racial and ethnic disparities in perceptions of physician style and trust. Arch Fam Med. 2000:9. doi: 10.1001/archfami.9.10.1156.

- Dzindolet MT, Peterson SA, Pomranky RA, Pierce LG, Beck HP. The role of trust in automation reliance. Int. J. Human-Computer Studies. 2003;58:697–718.

- Erickson F. Qualitative methods in research on teaching. 1985:147.

- Falcone R, Castelfranchi C. Social trust: A cognitive approach. In: Castelfranchi C, Tan Y-H, editors. Trust and deception in virtual societies. Kluwer Academic Publishers; 2001. pp. 55–90.

- Flanagan JC. The critical incident technique. Psychological Bulletin. 1954;51(4):327–358. doi: 10.1037/h0061470.

- Flynn EA, Barker KN, Gibson JT, Pearson RE, Berger BA, Smith LA. Impact of interruptions and distractions on dispensing errors in an ambulatory care pharmacy. American Journal of Health-System Pharmacy. 1999;56(13):1319–1325. doi: 10.1093/ajhp/56.13.1319.

- Franks P, Fiscella K, Shields CG, Meldrum SC, Duberstein P, Jerant AF, Tancredi DJ, Epstein RM. Are patients' ratings of their physicians related to health outcomes. Annals of Family Medicine. 2005;3(3):229–234. doi: 10.1370/afm.267.

- Friedman B, Kahn PH, Howe DC. Trust online. Communications of the ACM. 2000;43(12).

- Fung CH, Elliott MN, Hays RD, Kahn KL, Kanouse DE, McGlynn EA, Spranca MD, Shekelle PG. Patients' preferences for technical versus interpersonal quality when selecting a primary care physician. Health Serv Res. 2005;40(4):957–977. doi: 10.1111/j.1475-6773.2005.00395.x. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=16033487.

- Glaser BG, Strauss AL. The discovery of grounded theory: Strategies for qualitative research. Chicago: Aldine; 1967.

- Hall MA, Camacho F, Dugan E, Balkrishnan R. Trust in the medical profession: Conceptual and measurement issues. Health Serv Res. 2002;37(5):1419–1439. doi: 10.1111/1475-6773.01070. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=12479504.

- Hendrick HW, Kleiner BM. Macroergonomics: An introduction to work system design. Santa Monica: Human Factors and Ergonomics Society; 2001.

- Hignett S. Hospital ergonomics: A qualitative study to explore the organizational and cultural factors. Ergonomics. 2003;46(9):882–903. doi: 10.1080/0014013031000090143.

- Jian JY, Bisantz AM, Drury CG. Proceedings of the Human Factors and Ergonomics Society. Chicago: Human Factors and Ergonomics Society; 1998. Towards an empirically determined scale of trust in computerized systems: Distinguishing concepts and types of trust; pp. 501–505.

- Kao AC, Green DC, Davis NA, Koplan JP, Cleary PD. Patients’ trust in their physician: Effects of choice, continuity, and payment method. Journal of General Internal Medicine. 1998;13(10):681–686. doi: 10.1046/j.1525-1497.1998.00204.x. Available from: http://www.blackwell-synergy.com/doi/abs/10.1046/j.1525-1497.1998.00204.x.

- Klein MC, Sakala C, Simkin P, Davis-Floyd R, Rooks JP, Pincus J. Why do women go along with this stuff? Birth. 2006;33(3):245–250. doi: 10.1111/j.1523-536X.2006.00110.x. Available from: http://www.blackwell-synergy.com/doi/abs/10.1111/j.1523-536X.2006.00110.x.

- Lee JD, Moray N. Trust, control strategies and allocation of function in human-machine systems. Ergonomics. 1992;35(10):1243–1270. doi: 10.1080/00140139208967392.

- Lee JD, Moray N. Trust, self-confidence, and operators' adaptation to automation. Int. J. Human-Computer Studies. 1994;40:153–184.

- Lee JD, See KA. Trust in automation: Designing for appropriate reliance. Human Factors. 2004;46(1):30–80. doi: 10.1518/hfes.46.1.50_30392.

- Lent M. The medical and legal risks of the electronic fetal monitor. Stanford Law Review. 1999;51(4):807–837.

- Levy V. Protective steering: A grounded theory study of the processes by which midwives facilitate informed choices during pregnancy. Journal of Advanced Nursing. 2006;53(1):114–124. doi: 10.1111/j.1365-2648.2006.03688.x.

- Madhavan P, Wiegmann DA, Lacson FC. Automation failures on tasks easily performed by operators undermine trust in automated aids. Human Factors: The Journal of the Human Factors and Ergonomics Society. 2006;48:241–256. doi: 10.1518/001872006777724408. Available from: http://www.ingentaconnect.com/content/hfes/hf/2006/00000048/00000002/art00004.

- Martin JA, Hamilton BE, Sutton PD, Ventura SJ, Menacker F, Kirmeyer S, Division of Vital Statistics. Births: Final data for 2007. National vital statistics reports. 2006;55(1):1–120. Available from: http://www.cdc.gov/nchs/fastats/births.htm.

- McCartney PR. Electronic fetal monitoring and the legal medical record. MCN. 2002:249. doi: 10.1097/00005721-200207000-00012.

- Miles MB, Huberman AM. Data management and analysis methods. In: Denzin NK, Lincoln YS, editors. Qualitative data analysis: An expanded sourcebook. 2nd ed. Thousand Oaks, CA; 1994. pp. 428–444.

- Morrow SL. Quality and trustworthiness in qualitative research in counseling psychology. Journal of Counseling Psychology. 2005;52(2):250–260.

- Moustakas C. Phenomenological research methods. Thousand Oaks, CA: SAGE; 1994.

- Muhr T. Atlas.ti. GmbH, Berlin: Scientific Software Development; 2004. User's manual for Atlas.ti 5.0.

- Nerum H, Halvorsen L, Sorlie T, Oian P. Maternal request for cesarean section due to fear of birth: Can it be changed through crisis-oriented counseling? Birth. 2006;33(3):221–228. doi: 10.1111/j.1523-536X.2006.00107.x. Available from: http://www.blackwell-synergy.com/doi/abs/10.1111/j.1523-536X.2006.00107.x.

- Parasuraman R, Miller C. Trust and etiquette in high-criticality automated systems. Communications of the ACM. 2004;47(4).

- Parasuraman R, Riley V. Humans and automation: Use, misuse, disuse, abuse. Human Factors: The Journal of the Human Factors and Ergonomics Society. 1997;39:230–253. doi: 10.1518/001872097778543886. Available from: http://www.ingentaconnect.com/content/hfes/hf/1997/00000039/00000002/art00006.

- Patton MQ. Qualitative research and evaluation methods. 3rd ed. SAGE; 2001.

- Pearson SD, Raeke LH. Patients’ trust in physicians: Many theories, few measures, and little data. Journal of General Internal Medicine. 2000;15:509–513. doi: 10.1046/j.1525-1497.2000.11002.x.

- Piette JD, Heisler M, Krein S, Kerr EA. The role of patient-physician trust in moderating medication nonadherence due to cost pressures. Arch Intern Med. 2005;165(15):1749–1755. doi: 10.1001/archinte.165.15.1749. Available from: http://archinte.ama-assn.org/cgi/content/abstract/165/15/1749.

- Polkinghorne D. Two conflicting calls for methodological reform. The Counseling Psychologist. 1991;19(1):103–114.

- Rosenblatt RA, Weitkamp G, Lloyd M, Schafer B, Winterscheid LC, Hart LG. Why do physicians stop practicing obstetrics? The impact of malpractice claims. Obstet Gynecol. 1990;76(2):245–250.

- Safran DG, Kosinski M, Tarlov AR. The primary care assessment survey: Tests of data quality and measurement performance. Medical Care. 1998a;36:728–739. doi: 10.1097/00005650-199805000-00012.

- Safran DG, Taira DA, Rogers WH, Kosinski M, Ware JE, Tarlov AR. Linking primary care performance to outcomes of care. Journal of Family Practice. 1998b;47(3):213–220.

- Schwandt T. The SAGE dictionary of qualitative inquiry. Third ed. Thousand Oaks, CA: SAGE; 2007.

- Sheridan TB. Humans and automation. Santa Monica: John Wiley and Sons; 2002.

- Tarn DM, Meredith LS, Kagawa-Singer M, Matsumura S, Bito S, Oye RK, Liu H, Kahn KL, Fukubara S, Wenger NS. Trust in one's physician: The role of ethnic match, autonomy, acculturation, and religiosity among Japanese Americans. Annals of Family Medicine. 2005;3(4):339–347. doi: 10.1370/afm.289.

- Thom DH, Campbell BB. Patient physician trust: An exploratory study. Journal of Family Practice. 1997;44(2) 169(8).

- Thom DH, Kravitz RL, Bell RA, Krupat E, Azari R. Patient trust in physician: Relationship to patient requests. Family Practice. 2002;19(5). doi: 10.1093/fampra/19.5.476.

- Thom DH, Ribisl KM, Stewart AL, Luke DA. Further validation and reliability testing of the trust in physician scale. Med Care. 1999;37(5):510–517. doi: 10.1097/00005650-199905000-00010. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=10335753.

- Timmons S, Harrison-Paul R, Crosbie B. How do lay people come to trust the automatic external defibrillator? Health Risk & Society. 2008;10(3):207–220.

- Winkelman WJ, Leonard KJ, Rossos PG. Patient-perceived usefulness of online electronic medical records: Employing grounded theory in the development of information and communication technologies for use by patients living with chronic illness. Journal of the American Medical Informatics Association. 2005;12(3):306–314. doi: 10.1197/jamia.M1712.

- Wolf ZR. Exploring the audit trail for qualitative investigators. Nurse Educator. 2003;28(4):175–178. doi: 10.1097/00006223-200307000-00008.

- Young D. "Cesarean delivery on maternal request": Was the NIH conference based on a faulty premise? Birth. 2006;33:171–174. doi: 10.1111/j.1523-536X.2006.00101.x. Available from: http://www.blackwell-synergy.com/doi/abs/10.1111/j.1523-536X.2006.00101.x.

- Zheng B, Hall MA, Dugan E, Kidd KE, Levine D. Development of a scale to measure patients' trust in health insurers. Health Serv Res. 2002;37(1):187–202. Available from: http://www.ncbi.nlm.nih.gov/entrez/query.fcgi?cmd=Retrieve&db=PubMed&dopt=Citation&list_uids=11949920.