Abstract

We study the Cox models with semiparametric relative risk, which can be partially linear with one nonparametric component, or multiple additive or nonadditive nonparametric components. A penalized partial likelihood procedure is proposed to simultaneously estimate the parameters and select variables for both the parametric and the nonparametric parts. Two penalties are applied sequentially. The first penalty, governing the smoothness of the multivariate nonlinear covariate effect function, provides a smoothing spline ANOVA framework that is exploited to derive an empirical model selection tool for the nonparametric part. The second penalty, either the smoothly-clipped-absolute-deviation (SCAD) penalty or the adaptive LASSO penalty, achieves variable selection in the parametric part. We show that the resulting estimator of the parametric part possesses the oracle property, and that the estimator of the nonparametric part achieves the optimal rate of convergence. The proposed procedures are shown to work well in simulation experiments, and then applied to a real data example on sexually transmitted diseases.

Keywords and phrases: Backfitting, partially linear models, penalized variable selection, proportional hazards, penalized partial likelihood, smoothing spline ANOVA

1. Introduction

In survival analysis, a problem of interest is to identify relevant risk factors and evaluate their contributions to survival time. Cox proportional hazards (PH) model is a popular approach to study the influence of covariates on survival outcome. Conventional PH models assume that covariates have a log-linear effect on the hazard function. These PH models have been studied by numerous authors; see, e.g., the references in [15]. The log-linear assumption can be too rigid in practice, especially when continuous covariates are present. This limitation motivates PH models with nonparametric relative risk. Some examples are [6, 11, 12, 23, 35]. However, nonparametric models may suffer from the curse of dimensionality. They also lack the easy interpretation in parametric risk models. PH models with semiparametric relative risk strike a good balance by allowing nonparametric risk for some covariates and parametric risk for others. The benefits of such models are two-folds. First, they have the merits of models with parametric risk, including easy interpretation, easy estimation and easy inference. Second, their nonparametric part allows a flexible form for some continuous covariates whose patterns are unexplored and whose contribution cannot be assessed by simple parametric models. For example, [10] proposed efficient estimation for a partially linear Cox model with additive nonlinear covariate effects. [3] studied partially linear hazard regression for multivariate survival data with time-dependent covariates via a profile pseudo-partial likelihood approach, where the only nonlinear covariate effect was estimated by local polynomials. But these models are limited to one nonparametric component or additive nonparametric components, ignoring the possible interactions between different nonparametric components. [30] proposed a partially linear additive hazard model whose nonlinear varying coefficients represent the interaction between the time-dependent nonlinear covariate and other covariates.

Variable selection in survival data has drawn much attention in the past decade. Traditional procedures such as Akaike information criterion (AIC) and Bayesian information criterion (BIC), as noted by [2], suffer from the lack of stability and lack of incorporating stochastic errors inherited in the stage of variable selection. [26] and [32] extended respectively the LASSO and the adaptive LASSO variable selection procedures to the Cox model. [8] extended the nonconcave penalized likelihood approach ([7]) to the Cox PH models. [4] studied variable selection for multivariate survival data. The Cox models considered in these three papers all assumed a linear form of covariate effects in the relative risk. More recently, [13] and [14] proposed procedures for selecting variables in semiparametric linear regression models for censored data, where the dependence of response over covariates was also assumed to be of linear form. Hence, the aforementioned variable selection procedures are limited in their rigid assumption of parametric covariate effects which may not be realistic in practice. We will fill in these gaps in three aspects: (i) our models are flexible with semiparametric relative risk, which allows nonadditive nonparametric components, without limiting to single or additive nonlinear covariate effects; and (ii) our approach can simultaneously estimate the parametric coefficient vector and select contributing parametric components; and (iii) our approach also provides a model selection tool for the nonparametric components.

Let the hazard function for a subject be

| (1.1) |

where h0 is the unknown baseline hazard, ZT = (UT, WT) is the covariate vector, β is the unknown coefficient vector, and η(w) = η(w1, … , wq) is an unknown multivariate smooth function. We propose a doubly penalized profile partial likelihood approach for estimation, following the general profile likelihood framework set up by [20]. Given β, η is estimated by smoothing splines through the minimization of a penalized log partial likelihood. Then the smoothing spline ANOVA decomposition not only allows the natural inclusion of interaction effects but also provides the basis for deriving an empirical model selection tool. After substituting the estimate of η, we obtain a profile partial likelihood, which is then penalized to get an estimate of β. To achieve variable selection in β, we use the smoothly clipped absolute deviation (SCAD) penalty. We show that our estimate of η achieves the optimal convergence rate, and our estimate of β possesses the oracle property such that the true zero coefficients are automatically estimated as zeros and the remaining coefficients are estimated as well as if the correct submodel were known in advance. Our numerical studies reveal that the proposed method is promising in both estimation and variable selection. We then apply it to a study on sexually transmitted diseases with 877 subjects.

The rest of the article is organized as follows. Section 2 gives the details of the proposed method, in the order of model description and estimation procedure (§2.1), model selection in the nonparametric part (§2.2), asymptotic properties (§2.3), and miscellaneous issues (§2.4) like standard error estimates and smoothing parameter selection. Section 3 presents the empirical studies, and Section 4 gives an application study. Remarks in Section 5 conclude the article.

2. Method

Let T be the failure time and C be the right-censoring time. Assume that T and C are conditionally independent given the covariate. The observable random variable is (X, Δ, Z), where X = min(T, C), Δ = I[T≤C], and Z = (U, W) is the covariate vector with U ∈ ℝd and W ∈ ℝq. With n i.i.d. (Xi, Δi, Zi), i = 1, … , n, we assume a Cox model for the hazard function as in (1.1).

2.1. Estimation and Variable selection for parametric parts

Let Yi(t) = I[Xi≥t]. We propose to estimate (β, η) through a penalized profile partial likelihood approach. Given β, η is estimated as the minimizer of the penalized partial likelihood

| (2.1) |

where the summation is the negative log partial likelihood representing the goodness-of-fit, J(η) is a roughness penalty specifying the smoothness of η, and λ > 0 is a smoothing parameter controlling the tradeoff. A popular choice for J is the L2-penalty which yields tensor product cubic splines (see, e.g., [9]) for multivariate W. Note that η in (2.1) is identifiable up to a constant, so we use the constraint ∫ η = 0.

Once an estimate η̂ of η is obtained, the estimator of β is then the maximizer of the penalized profile partial likelihood

| (2.2) |

where pθj (| · |) is the SCAD penalty on β ([7]).

The detailed algorithm for our estimation procedure is as follows.

Step 1. Find a proper initial estimate β̂(0). We note that, as long as the initial estimate is reasonable, convergence to the true optimizer can be achieved. Difference choices of the initial estimate will affect the number of iterations needed but not the convergence itself.

Step 2. Let β̂(k−1) be the estimate of β before the kth iteration. Plug β̂(k−1) into (2.1) and solve for η by minimizing the penalized partial likelihood lβ̂(k−1) (η). Let η̂(k) be the estimate thus obtained.

Step 3. Plug η̂(k) into (2.2) and solve for β by maximizing the penalized profile partial likelihood lη̂(k)(β). Let β̂(k) be the estimate thus obtained.

Step 4. Replace β̂(k−1) in Step 2 by β̂(k) and repeat Steps 2 and 3 until convergence to obtain the final estimates β̂ and η̂.

Our experience shows that the algorithm usually converges quickly within a few iterations. As in the classical Cox proportional hazards model, the estimation of baseline hazard function is of less interest and not required in our estimation procedure.

In Step 3, we use a one-step approximation to the SCAD penalty ([34]). It transforms the SCAD penalty problem to a LASSO-type optimization, where the celebrated LARS algorithm proposed in [5] can be used. Let lη̂(β) be the profile log partial likelihood in Step 3, and I(β) = −∇2lη̂ (β) be the Hessian matrix, where the derivative is with respect to β treating η̂ as fixed. Compute the Cholesky decomposition of I (β̂(k−1)) such that I (β̂(k−1)) = VTV. Let and . Decompose V and the new estimate β̂(k) accordingly such that V = [VA, VB] and .

(Step 3a) Let y = Vβ̂(k−1). Then for each j ∈ B, replace the j-th column of V by setting .

(Step 3b) Let be the projection matrix to the column space of VA. Compute y* = y − HAy and VB* = VB − HAVB.

- (Step 3c) Apply the LARS algorithm to solve

(Step 3d) Compute to obtain .

(Step 3e) For j ∈ A, set . For j ∈ B, set .

2.2. Model Selection for Nonparametric Component

While the SCAD penalty takes care of variable selection for the parametric components, we still need an approach to assess the structure of the nonparametric components. In this section, we will first transform the profile partial likelihood problem in (2.1) to a density estimation problem with biased sampling, and then derive a model selection tool based on the Kullback-Leibler geometry. In this part, we treat β as fixed, taking the value from the previous step in the algorithm.

Let (i1, … , iN) be the indices for the failed subjects. Then the profile partial likelihood in (2.1) for estimating η is

Consider the empirical measure on the discrete domain Wn = {W1, … , Wn} such that . Then defines a density function on Wn. Let a1(·), … , aN (·) be weight functions defined on the discrete domain Wn such that , p = 1, … , N. Alternatively, one can think of ap’s as vectors of weights with length n. Then each term in the profile partial likelihood, with the constant n ignored, becomes . Thus, this resembles a density estimation problem with bias introduced by the known weight function ap(·).

For two density estimates η1 and η2 in the above pseudo biased sampling density estimation problem, define their Kullback-Leibler distance as

| (2.3) |

Let η0 be the true function. Suppose the estimation of η0 has been done in a space ℋ1, but in fact η0 ∈ ℋ2 ⊂ ℋ1. Let η̂ be the estimate of η0 in ℋ1. Let η̃ be the Kullback-Leibler projection of η̂ in ℋ2, that is, the minimizer of KL(η̂, η) for η ∈ ℋ2, and ηc be the estimate from the constant model. Set η = η̃ + α(η̃ − ηc) for α real. Differentiation KL(η̂, η) with respect to α and evaluating at α = 0, one has

which, through straightforward calculation, yields

Hence the ratio KL(η̂, η̃)/KL(η̂, ηc) can be used to diagnose the feasibility of a reduced model η ∈ ℋ2: the smaller the ratio is, the more feasible the reduced model is.

2.3. Asymptotic Results

Denote by ℋm (W) the Sobolev space of functions on W whose mth order partial derivatives are square integrable. Let

and η̂* be the estimate of η0 in ℋ that minimizes the penalized partial likelihood

| (2.4) |

Note that ℋ is an infinite dimensional function space. Hence, in practice, the minimization of (2.4) is usually performed in a data-adaptive finite dimensional space

where NJ = {η ∈ ℋ, J(η) = 0} is the null space of J, and RJ is the reproducing kernel (see, e.g., [28]) in its complement space ℋJ = ℋ⊖NJ, and {Wi1, … , Wiqn} is a random subset of {Wi : i = 1, … , n}. When qn = n, one selects all the Wi, i = 1, … , n as the knots. This is the number of knots used in conventional smoothing splines. However, under the regression setting, [16] showed that a qn of the order n2/(r+1)+ε, ∀ε > 0 is sufficient to yield an estimate with the optimal convergence rate. Here r is a constant associated with the Sobolev space ℋ, e.g., r = 2m for splines of order m (one-dimension w) and r = 2m − δ, ∀δ > 0 for tensor product splines (multi-dimension w). We shall show that such an order for qn also works for the η estimation in our partially linear Cox model.

Let and sn[β, η](t) = sn (1; β, η)(t). Define

For any functions f and g, define

| (2.5) |

Write V (f) ≡ V (f, f). Let η̂ be the estimate that minimizes (2.4) in ℋn. Then we have

Theorem 2.1

Under Conditions A1-A7 in the Appendix,

This is the optimal convergence rate for estimate of a nonparametric function. In the view of Lemma 6.1, this theorem also indicates the same convergence rate in terms of the L2-norm. Also note that although a higher order of qn such as O(n) would yield the same convergence rate for η̂, it will make the function space ℋn too big to apply an entropy bound result that is critical in the proof of Theorem 2.2.

Let , where

Let be the true coefficient vector. Without loss of generality, assume that β20 = 0. Let s be the number of nonzero components in β0. Define , and

Define π1 : ℝd+q → ℝs such that π1 (u, w) = u1, where u1 is the vector of the first s components of u. Let V0(π1) be defined like V (π1) in (2.5) but with β replaced by β0.

Theorem 2.2

Under Conditions A1-A7 in the Appendix, if an = O(n−1/2), bn = o(1) and qn = o(n1/2), then

There exists a local maximizer β̂ of ℒP (β) such that ||β̂ − β0|| = Op(n−1/2).

-

Further assume that for all 1 ≤ j ≤ d, θj = o(1), , andWith probability approaching one, the root-n consistent estimator β̂ in (i) must satisfy β̂2 = 0 and

2.4. Miscellaneous Issues

In this section, we will propose the standard error estimates for both the parametric and the nonparametric components, and discuss the selection of the smoothing parameters θ and λ.

2.4.1. Standard error estimates

Let lp(β) be the profile log partial likelihood in the last iteration of Step 3 and

Then the standard errors for the nonzero coefficients of β̂ are given by the sandwich formula

Sometimes the standard errors for zero coefficients are also of interest. A discussion of this problem is in Section 5

In (2.1), η can be decomposed as η = η[0] + η[1] where η[0] lies in the null space of the penalty J representing the lower order part and η[1] lies in the complement space representing the higher order part. A Bayes model interprets (2.1) as a posterior likelihood when η[0] is assigned an improper constant prior and η[1] is assigned a Gaussian prior with zero mean and certain covariance matrix. The minimizer η̂ of (2.1) then becomes the posterior mode. When the minimization is carried out in a data-adaptive function space ℋn with basis functions ψ = (ψ1, … , ψqn)T, we can write η̂ = ψT ĉ. Then a quadratic approximation to (2.1) yields an approximate posterior covariance matrix for c, which can be used to construct point-wise confidence intervals for η.

2.4.2. Smoothing parameter selection

As shown in [33], the effective degrees of freedom for l1-penalty model is well approximated by the number of nonzero coefficients. Note that our SCAD procedure is implemented by a LASSO approximation at each step. Hence, if we let  be the set of nonzero coefficients, the AIC score for selecting θ in Step 3 is

where |Â| is the cardinality of Â.

As illustrated in Section 2.2, the estimation of η in Step 2 can be cast as a density estimation problem with biased sampling. Let KL(η0, η̂λ) be the Kullback-Leibler distance, as defined in (2.3), between the true “density” and the estimate . An optimal λ should minimize KL(η0, η̂λ) or the relative Kullback-Leibler distance

| (2.6) |

The second term of (2.6) is directly computable from the estimate η̂λ. But the first term needs to be estimated. Let ψ be the vector of spline basis functions as in the previous subsection and η = ψT c. Through a delete-one cross-validation approximation, a proxy for (2.6) can be derived as

where P1 = I − 11T / N, Q = (ψ(Wi1), … , ψ(WiN)), and H matrix for minimizing (2.1) with respect to the coefficient vector c. λ is chosen to minimize this score.

3. Numerical Studies

In the simulations, we generated failure times from the exponential hazard model with h(t|U, W) = exp[UT β0 + η0(W)]. We used the same settings for the parametric component, which consists of eight covariates Uj, j = 1, … , 8. The Uj’s were generated from a multivariate normal distribution with zero mean and Cov(Uj, Uk) = 0:5|j−k|. The true coefficient vector was β0 = (0:8; 0; 0; 1; 0; 0; 0:6; 0)T.

The theory in Section 2.3 gives the sufficient order for qn, the number of knots in our smoothing spline estimation of η. In practice, [16] suggested qn = kn2/(2m+1) with k = 10 if the tensor product splines of order m are used. Since we use tensor product cubic splines in all the simulations below, our choice is qn = 10n2/5.

3.1. Variable Selection for Parametric Components

The nonparametric part had one covariate W generated from Uniform(0, 1). Two different η0 were used:

Note that both functions satisfies . Given U and W, the censoring times were generated from exponential distributions such that the censoring rates are respectively 23% and 40%. Sample sizes n = 150 and 500 were considered. One thousand data replicates were generated for each of the four combinations of η0 and n.

For a prediction procedure M and the estimator (β̂M, η̂M) yielded from the procedure, an appropriate measure for the goodness-of-fit under Cox model with h0(t) ≡ 1 is the model error: ME(β̂M, η̂M) = E[(exp(−UT β̂M − η̂M(W)) − exp(−UT β0 − η0(W)))2]. The relative model error (RME) of M1 versus M2 is defined as the ratio ME(β̂M1, η̂M1)/ME(β̂M2, η̂M2). The procedure M0 with complete oracle is used as our benchmark. In M0, (U1, U4, U7, W) are known to be the only contributing covariates, the exact form of η0 is known, and the only parameters to be estimated are the coefficients of U1, U4, U7. Note that M0 can be implemented only in simulations, but is unrealistic in practice since neither the contributing covariates nor the form of η0 would be known. We then compare the performance of the following four procedures, including the proposed procedures, through their RMEs versus M0:

MA: procedure with partial oracle and misspecified parametric η0, that is, (U1, U4, U7, W) are known to be the only contributing covariates but η0 is misspecified to be of the parametric form η0(W) = βW W and βW is estimated together with the coefficients for (U1, U4, U7);

MB: procedure with partial oracle and estimated η0, that is, (U1, U4, U7, W) are known to be the only contributing covariates but the form of η0 is unknown, and η0 is estimated together with the coefficients for (U1, U4, U7) by penalized profile partial likelihood;

MC: the proposed partial linear procedure with the SCAD penalty on β;

MD: the proposed partial linear procedure with the adaptive LASSO penalty on β.

Procedure MA has a mis-specified covariate effect. We intend to show that the estimation results can be unsatisfactory if the semiparametric form of covariate effect is mistakenly specified as parametric. Procedure MB is “partial oracle” and expected to have equal or better performance than procedures MC and MD. Note, however, MB is unrealistic in practice since the contributing covariates would not be known. MC and MD are two versions of the proposed partial linear procedure with different penalties on β.

For each combination of η0 and n, we computed the following quantities out of the 1000 data replicates: the median RMEs of the complete oracle procedure M0 versus the procedures MA to MD, the average number of correctly selected nonzero coefficients (CC), the average number of incorrectly selected nonzero coefficients (IC), the proportion of under-fit replicates that excluded any nonzero coefficients, the proportion of correct-fit replicates that selected the exact subset model, and the proportion over-fit replicates that included all three significant variables and some noise variables. The results are summarized in Table 1. In general, a partial oracle with misspecified parametric η0 (Procedure MA) has much inferior performance when comparing with the other three procedures; the proposed procedure with the SCAD penalty (Procedure MC) or the adaptive LASSO penalty (Procedure MD) is competitive to the partial oracle with estimated η0 (Procedure MB); the SCAD penalty performs slightly better than the adaptive LASSO penalty. Also, the proposed procedure generally performs as well as the complete oracle. For Procedure MC, we also did some extra computation to evaluate the proposed standard error estimate of β. In Table 2, SD is the median absolute deviation divided by 0.6745 of the 1000 nonzero β̂’s that can be regarded as the true standard error, SDm is the median of the 1000 estimated SDs, and SDmad is the median absolute deviation of the 1000 estimated SDs divided by 0.6745. The standard errors were set to 0 for the coefficients estimated as 0s. The results in Table 2 suggests a good performance of the proposed standard error formula for β.

Table 1.

Variable Selection for Parametric Components (Section 3.1).

| No. of Non-zeros |

Proportion of |

|||||

|---|---|---|---|---|---|---|

| Procedure | MRME | CC | IC | Under-fit | Correct-fit | Over-fit |

|

n = 150, η0 = η0a (23% censoring) |

||||||

| MA | 0.168 | - | - | - | - | - |

| MB | 0.475 | - | - | - | - | - |

| MC | 0.409 | 2.998 | 0.825 | 0.002 | 0.476 | 0.522 |

| MD | 0.387 | 2.998 | 0.959 | 0.002 | 0.444 | 0.554 |

|

n = 150, η0 = η0b (40% censoring) |

||||||

| MA | 0.167 | - | - | - | - | - |

| MB | 0.711 | - | - | - | - | - |

| MC | 0.518 | 2.996 | 0.949 | 0.004 | 0.430 | 0.566 |

| MD | 0.563 | 2.998 | 1.131 | 0.002 | 0.378 | 0.620 |

|

n = 500, η0 = η0a (23% censoring) |

||||||

| MA | 0.056 | - | - | - | - | - |

| MB | 0.431 | - | - | - | - | - |

| MC | 0.396 | 3.000 | 0.717 | 0.000 | 0.525 | 0.475 |

| MD | 0.375 | 3.000 | 0.736 | 0.000 | 0.540 | 0.460 |

|

n = 500, η0 = η0b (40% censoring) |

||||||

| MA | 0.057 | - | - | - | - | - |

| MB | 0.712 | - | - | - | - | - |

| MC | 0.619 | 3.000 | 0.749 | 0.000 | 0.512 | 0.488 |

| MD | 0.628 | 3.000 | 0.776 | 0.000 | 0.529 | 0.471 |

Table 2.

Standard Deviations for β̂’s in the Partial Linear SCAD Procedure MC (Section 3.1).

| β̂1 | β̂4 | β̂7 | ||||

|---|---|---|---|---|---|---|

| n, censor % | SD | SDm(SDmad) | SD | SDm(SDmad) | SD | SDm(SDmad) |

| 150, 23% | 0.124 | 0.113(0.015) | 0.141 | 0.121(0.017) | 0.135 | 0.109(0.015) |

| 150, 40% | 0.159 | 0.135(0.017) | 0.188 | 0.145(0.021) | 0.155 | 0.128(0.019) |

| 500, 23% | 0.065 | 0.059(0.005) | 0.073 | 0.063(0.005) | 0.062 | 0.057(0.005) |

| 500, 40% | 0.075 | 0.070(0.006) | 0.088 | 0.076(0.006) | 0.078 | 0.066(0.006) |

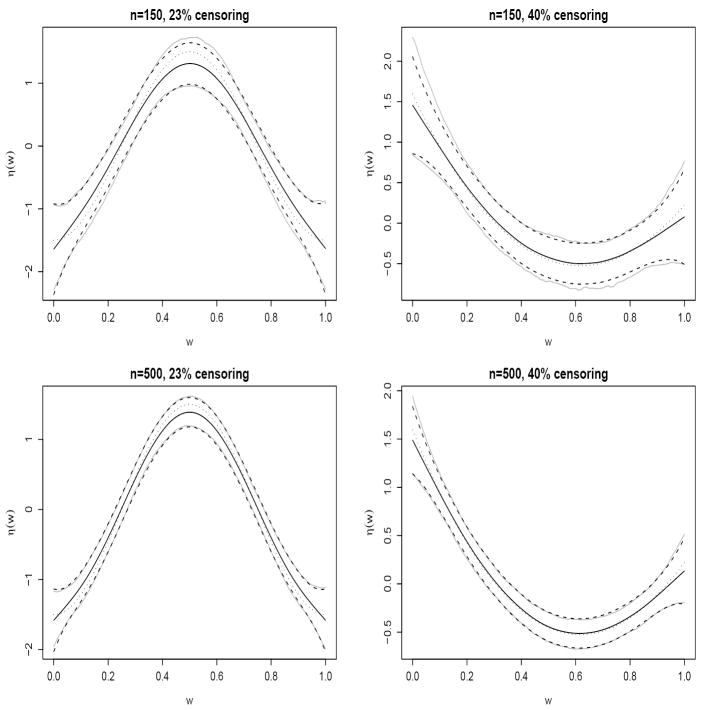

To examine the estimation of η0, we computed the point-wise estimates at the grid w = (0, 1, by = 0:01) for each data replicate. Then at each grid point, the mean, the 0.025 and the 0.975 quantiles of the 1000 estimates, together with the mean of the 1000 95% confidence intervals were computed. The results are in Figure 1. The plots show satisfactory nonparametric fits and standard error estimates.

Fig 1.

Estimates for Nonparametric Components (Section 3.1). Dotted lines are true function, solid lines are connected point-wise mean estimates, faded lines are connected 0.025 and 0.975 quantiles of the point-wise estimates, and dashed lines are the connected point-wise 95% confidence intervals.

3.2. Model Selection for Nonparametric Components

In this section, we present some simulations to evaluate the model selection tool for nonparametric part introduced in Section 2.2. We used the SCAD penalty on the parametric components in this section. Two covariates W1 and W2, independently generated from Uniform(0, 1), were used. We considered two scenarios for the true model of the nonparametric part: (i) nonparametric univariate model η0(W) = η01(W1) and (ii) nonparametric bivariate additive model η0(W) = η01(W1) + η02(W2). For scenario (i), the data sets generated in the last section were used, with W1 being the existing W covariate and W2 being an additional noise covariate. The fitted model was nonparametric additive in W1 and W2. The ratios KL(η̂, η̃)/KL(η̂, ηc) for the projections to the univariate models η0(W) = η01(W1) and η0(W) = η02(W2) were computed. For scenario (ii), we considered two sample sizes n = 150 and 300. The true η0 was

where η0a and η0b are as defined in Section 3.1. The censoring times were generated from exponential distributions such that the resulting censoring rates were respectively 25% and 39%. Note that both choices of η0 are additive in w1 and w2. The fitted model was the nonparametric bivariate full model with both the main effects and the interaction. Then the ratios KL(η̂, η̃)/KL(η̂, ηc) for the projections to the bivariate additive model and the two univariate models were computed. In both scenarios, we claim a reduced model is feasible when the corresponding ratio KL(η̂, η̃)/KL(η̂, ηc) < 0:05.

For each of the eight combinations of η0 and n, we simulated 1000 data replicates and computed the proportions of replicates that produced the following results in the reduced model: selected the main effect of W1, selected the main effect of W2, selected the interaction W1 : W2, under-fitted the model by excluding at least one truly significant effect, correctly fitted the model by reducing to the exact subset model, and over-fitted the model by including all the truly significant effects and some irrelevant effects. These proportion results are summarized in Table 3. It shows that the variable selection tool for the nonparametric component works very well. The better performance appears to be associated with bigger sample sizes and lower censoring rates.

Table 3.

Model Selection for Nonparametric Components (Section 3.2).

| Sample Size | Proportion of Selecting |

Proportion of |

||||

|---|---|---|---|---|---|---|

| W1 | W2 | W1 : W2 | Under-fit | Correct-fit | Over-fit | |

| True model: η0(w1, w2) = η0a(w1), 23% censoring |

||||||

| n = 150 | 1.000 | 0.036 | - | 0.000 | 0.964 | 0.036 |

| n = 500 | 1.000 | 0.002 | - | 0.000 | 0.998 | 0.002 |

| True model: η0(w1, w2) = η0b(w1), 40% censoring |

||||||

| n = 150 | 1.000 | 0.304 | - | 0.000 | 0.696 | 0.304 |

| n = 500 | 1.000 | 0.062 | - | 0.000 | 0.938 | 0.062 |

| True model: η0(w1, w2) = 0.7η0a(w1) + 0.3η0b(w2), 25% censoring |

||||||

| n = 150 | 1.000 | 0.998 | 0.084 | 0.002 | 0.914 | 0.084 |

| n = 300 | 1.000 | 1.000 | 0.013 | 0.000 | 0.987 | 0.013 |

| True model: η0(w1, w2) = η0a(w1) + η0b(w2), 39% censoring |

||||||

| n = 150 | 1.000 | 0.672 | 0.201 | 0.328 | 0.471 | 0.201 |

| n = 300 | 1.000 | 0.616 | 0.096 | 0.384 | 0.520 | 0.096 |

4. Example

An example in [17] is a study on two sexually transmitted diseases: gonorrhea and chlamydia. The purpose of the study was to identify factors that are related to time until reinfection by gonorrhea or chlamydia given an initial infection of either disease. A sample of 877 individuals with an initial diagnosis of gonorrhea or chlamydia were followed for reinfection. Recorded for each individual were follow-up time, indicator of reinfection, demographic variables including race (white or black, U1), marital status (divorced/separated, married, or single, U2 and U3), age at initial infection (W1), years of schooling (W2), and type of initial infection (gonorrhea, chlamydia, or both, U4 and U5), behavior factors at the initial diagnosis including number of partners in the last 30 days (U6), indicators of oral sex within past 12 months and within past 30 days (U7 and U8), indicators of rectal sex within past 12 months and within past 30 days (U9 and U10), and condom use (always, sometimes, or never, U11 and U12), symptom variables at time of initial infection including presence of abdominal pain (U13), sign of discharge (U14), sign of dysuria (U15), sign of itch (U16), sign of lesion (U17), sign of rash (U18), and sign of lymph involvement (U19), and symptom variables at time of examination including involvement vagina at exam (U20), discharge at exam (U21), and abnormal node at exam (U22).

We used qn = 10 · 8772/5 = 151 knots in all the analysis below. We first considered the partial linear Cox model

where η3(W3i) = η3(W1i, W2i) is the interaction term between W1 and W2. However, the interaction term was found to be negligible with the ratio KL(η̂, η̃)/KL(η̂, ηc) = 0.003. Hence, we took out this interaction term and refitted the model. In this model, neither W1 (age) nor W2 (years of schooling) in the nonparametric component were found to be negligible, with the ratios KL(η̂, η̃)/KL(η̂, ηc) equal to 0.633 for removing W1 and 0.259 for removing W2. Their effects are plotted in Figure 2 together with the 95% point-wise confidence interval. We can see that the hazard increased with age at both ends of the age domain (between age 13 and 20, and between age 38 and 48) and stayed flat in the middle, and that the hazard decreased with years of school from 6 years to 10 years but stayed flat afterwards. The fitted coefficients from the proposed method with the SCAD penalty are in Table 4 together with their standard error estimates. For comparisons, Table 4 also lists the fitted coefficients and standard errors for three other models, namely the proposed semiparametric relative risk model with the adaptive LASSO penalty, and the parametric relative risk models with the SCAD and the adaptive LASSO penalties. We can see that the SCAD penalty yielded sparser models than the adaptive LASSO penalty, and that both parametric models missed the age effect. Common factors identified by all the four procedures to be associated with reinfection risk are marital status, type of infection, oral sex behavior, condom use, sign of abdominal pain, sign of lymph involvement and sign of discharge at exam.

Fig 2.

Nonparametric Component Estimates for Sexually Transmitted Diseases Data. Left: Effect of age at initial infection. Right: Effect of years of schooling. Solid lines are the estimates, dashed lines are 95% confidence intervals, and dotted lines are the reference zero lines.

Table 4.

Fitted Coefficients and Their Standard Errors for Sexually Transmitted Diseases Data. (Models from top to bottom: semiparametric relative risk with SCAD penalty and with adaptive LASSO penalty, parametric relative risk with SCAD penalty and with adaptive LASSO penalty.)

| age | yschool | npart | raceW | maritalM | maritalS |

| -(-) | -(-) | 0(-) | 0(-) | 0(-) | 0.487(0.212) |

| -(-) | -(-) | 0.060(0.048) | -0.127(0.097) | 0(-) | 0.448(0.186) |

| 0(-) | -0.059(0.018) | 0(-) | 0(-) | 0(-) | 0.332(0.213) |

| 0(-) | -0.119(0.031) | 0.026(0.024) | 0(-) | 0(-) | 0.210(0.119) |

| typeC | typeB | oralY | oralM | rectY | rectM |

| -0.412(0.149) | -0.337(0.144) | -0.336(0.201) | -0.341(0.235) | 0(-) | 0(-) |

| -0.349(0.137) | -0.300(0.130) | -0.330(0.155) | -0.318(0.173) | 0(-) | 0(-) |

| -0.376(0.149) | -0.249(0.145) | -0.236(0.202) | -0.348(0.235) | 0(-) | 0(-) |

| -0.228(0.096) | -0.083(0.065) | -0.110(0.058) | -0.371(0.117) | 0(-) | 0(-) |

| abdom | disc | dysu | condS | condN | itch |

| 0.253(0.151) | 0(-) | 0.193(0.152) | 0(-) | -0.327(0.114) | 0(-) |

| 0.177(0.120) | 0(-) | 0.089(0.074) | 0.152(0.114) | -0.291(0.106) | 0(-) |

| 0.285(0.148) | 0(-) | 0(-) | 0(-) | -0.296(0.114) | 0(-) |

| 0.184(0.094) | 0(-) | 0(-) | 0(-) | -0.223(0.092) | 0(-) |

| lesion | rash | lymph | involve | discE | node |

| 0(-) | 0(-) | 0(-) | 0.423(0.166) | -0.460(0.220) | 0(-) |

| 0(-) | 0(-) | 0(-) | 0.327(0.159) | -0.407(0.209) | 0(-) |

| 0(-) | 0(-) | 0(-) | 0.392(0.168) | -0.443(0.221) | 0(-) |

| 0(-) | 0(-) | 0(-) | 0.289(0.133) | -0.280(0.163) | 0(-) |

5. Discussion

We have proposed a Cox PH model with semiparametric relative risk. The nonparametric part of the risk is estimated by smoothing spline ANOVA model and model selection procedure derived based on a Kullback-Leibler geometry. The parametric part of the risk is estimated by penalized profile partial likelihood and variable selection achieved by choosing a nonconcave penalty. Both theoretical and numerical studies show promising results for the proposed method. An important question in using the method in practice is which covariate effects should be treated as parametric. We suggest the following guideline for making choices. As a starting point, the effects of all the continuous covariates are put in the nonparametric part and those of the discrete covariates in the parametric part. If the estimation results show that some of the continuous covariate effects can be described by certain parametric forms such as linear form, then a new model can be fitted with those continuous covariate effects moved to the parametric part. In this way, one can take full advantage of the flexible exploratory analysis provided by the proposed method.

We thank a referee for raising the interesting question on the standard error estimates for the coefficients estimated to be 0 in β̂. [25] and [7] suggested to set these standard errors to 0s based on the belief that those covariates with zero coefficient estimates are not important. This is the approach adopted here. When such a belief is in doubt, nonzero standard errors are preferred even for coefficients estimated to be 0s. This problem has been addressed only in a few papers. [22] looked at the problem for LASSO but it is based on a smooth approximation. [24] presented a Bayesian approach and pointed out that no fully satisfactory frequentist solution had been proposed so far, no matter LASSO or SCAD variable selection procedure is considered. This problem presents an interesting challenge that we hope to address in some future work.

Another choice of pθ (| · |) is the adaptive LASSO penalty ([31]). Our simulations in §3.1 indicates a similar performance when compared to the SCAD penalty. So we decided not to present the details here.

Although our method is presented for time-independent covariates, a lengthier argument modifying [23] can yield similar theoretical results for external time-dependent covariates ([15]). However, the implementation of such extension is more complicated and not pursued here.

A recently proposed nonparametric component selection procedure in a penalized likelihood framework is the COSSO method in [19] where the penalty switches from J(η) to J1/2(η). Taking advantage of the smoothing spline ANOVA decomposition, the COSSO method does model selection by applying a soft thresholding type operation to the function components. An extension of COSSO to the Cox proportional hazards model with nonparametric relative risk is available in [18]. Although a similar extension to our proportional hazards model with semiparametric relative risk is of interest, it is not clear whether the theoretical properties of the COSSO method such as the existence and the convergence rate of the COSSO estimator can be transferred to the estimation of η under our semiparametric setting. Furthermore, the dimension of the function space in COSSO is O(n), too big to allow an entropy bound that is critical in deriving the asymptotic properties of β̂.

6. Proofs

For z = (u, w), let pz(t) = exp(uT β+η0(w))q(t, u, w)/s[β, η0](t) and f̄t ≡ ∫ ∫ f(u, w)pz (t)dudw. Let S̃(t, u, w) = E [Y(t) = 1∣U = u, W = w] = P(Y(t) = 1∣U = u, W = w) and q(t, u, w) = S̃(t, u, w)p(u, w), where p(u, w) is the density function of (U, W). Let Ƶ = U × W be the domain of the covariate Z = (UT, WT)T. We need the following conditions.

A1. The true coefficient β0 is an interior point of a bounded subset of ℝd.

A2. The domain Ƶ of covariate is a compact set in ℝd+q.

A3. Failure time T and censoring time C are conditionally independent given the covariate Z.

A4. Assume the observations are in a finite time interval [0, τ ]. Assume that the baseline hazard function h0(t) is bounded away from zero and infinity.

A5. Assume that there exist constants k2 > k1 > 0 such that k1 < q(t, u, w) < k2 and .

A6. Assume the true function η0 ∈ ℋ. For any η in a sufficiently big convex neighborhood B0 of η0, there exist constants c1, c2 > 0 such that c1eη0(w) ≤ eη(w) ≤ c2eη0(w) for all w.

A7. The smoothing parameter λ ≍ n−r/(r+1).

Condition A1 requires that β0 is not on the boundary of the parameter space. Condition A2 is also a common boundedness assumption on covariate. Condition A3 assumes noninformative censoring. Condition A4 is the common boundedness assumption on the baseline hazard. Condition A5 bounds the joint density of (T, Z) and thus also the derivatives of the partial likelihood. Condition A6 assumes that η0 has proper level of smoothness and integrates to zero. The neighborhood B0 in Condition A6 should be big enough to contain all the estimates of η0 considered below. When the members of B0 are all uniformly bounded, Condition A6 is automatically satisfied. The order for λ in Condition A7 matches that in standard smooth spline problems.

We first show the equivalence between V (·) and the L2-norm .

Lemma 6.1

Let f ∈ ℋ. Then there exist constants 0 < c3 ≤ c4 < ∞ such that

Proof

For z = (u, w), let pz (t) = exp(uT β + η0(w))q(t, u, w)/s[β, η0](t) and f̄t ≡ ∫ ∫ f(u, w)pz (t)dudw. Simple algebraic manipulation yields

By Conditions A4 and A5, there exist positive constants c1 and c2 such that

Let m(Ƶ) < ∞ be the Lebesgue measure of Ƶ. Then

The lemma follows from the Cauchy-Schwartz inequality and Condition A4.

Proof of Theorem 2.1

We will prove the results using an eigenvalue analysis of three steps. In the first step (linear approximation), we show the convergence rate Op(n−r/(r+1)) for the minimizer η̃ of a quadratic approximation to (2.4). In the second step (approximation error), we show that the difference between η̃ and the estimate η̂* in ℋ is also Op(n−r/(r+1)), and so is the convergence rate of η̂*. In the third step (semiparametric approximation), we show that the projection η* of η̂* in ℋn is not so different from either η̂* or the estimate η̂ in ℋn, and then the convergence rate of η̂ follows.

A quadratic function B is said to be completely continuous with respect to another quadratic functional A, if for any ε > 0, there exists a finite number of linear functionals L1, … , Lk such that Lj f = 0, j = 1, … , k, implies that B(f) ≤ εA(f); When a quadratic functional B is completely continuous with respect to another quadratic functional A, there exists eigenfunctions {ϕν, ν = 1, 2, ⋯} such that B(ϕν, ϕμ) = δνμ and A(ϕν, ϕμ) = ρν δνμ, where δνμ is the Kronecker delta and 0 ≤ ρν ↑ ∞. And functions satisfying A(f) < ∞ can be expressed as a Fourier series expansion f = Σνfνϕν, where fν = B(f, ϕν) are the Fourier coefficients. See, e.g., [29] and [9].

We first present two lemmas without proof. The first one follows directly from the results in Section 8.1 of [9] and Lemma 6.1. The second one is exactly Lemma 8.1 in [9].

Lemma 6.2

V is completely continuous to J and the eigenvalues ρν of J with respect to V satisfy that as ν → ∞, .

Lemma 6.3

As λ → 0, the sums , and are all order O(λ−1/r).

Step 1: Linear Approximation

A linear approximation η̃ to η̂* is the minimizer of a quadratic approximation to (2.4),

| (6.1) |

Let η = Σν ηνϕν and η0 = Σν ην,0ϕν be the Fourier expansions of η and η0. Plugging them into (6.1) and dropping the terms not involving η yield

| (6.2) |

where . The Fourier coefficients that minimize (6.2) are η̃ν = (γν + ην,0)/(1 + λρν). Note that ∫ ϕν (w)dw = 0 and V (ϕν) = 1. Straightforward calculation gives E[γν] = 0 and . Then,

| (6.3) |

Combining Lemma 6.3 and (6.3), we obtain that (V + λJ)(η̃ − η0) = Op(λ + n−1 λ−1/r), as n → ∞ and λ → 0.

Step 2: Approximation Error

We now investigate the approximation error η̂* − η̃ and prove the convergence rate of η̂*. Define Af,g(α) and Bf,g(α) respectively as the resulting functionals from setting η = f + αg in (2.4) and (6.1). Differentiating them with respect to α and then setting α = 0 yields

| (6.4) |

| (6.5) |

Set f = η̂* and g = η̂* − η̃ in (6.4), and set f = η̃ and g = η̂* − η̃ in (6.5). Then subtracting the resulted equations gives

| (6.6) |

where . Define

and S [f, g](t) be its limit. The following lemma is needed to proceed.

Lemma 6.4

Proof

Let f = Σνfνϕν and g = Σμgμϕμ be the Fourier series expansion of f and g. [1] shows that supt |sn[β, η0] − s[β, η0]| converges to zero in probability. Note that

defines a local martingale with mean zero. Combining the above uniform convergence result and the martingale property with the boundedness condition, we obtain that for any ν and μ,

Then from the Cauchy-Schwartz inequality and Lemma 6.3,

A Taylor expansion at η0 gives

Then by the mean value theorem, Condition A6, Lemma 6.4, and (6.6),

for some c ∈ [c1, c2]. Then the convergence rate of η̂* follows from that of η̃ proved in the previous step.

Step 3: Semiparametric Approximation

Our last goal is the convergence rate for the minimizer η̂ in the space ℋn. For any h ∈ ℋ ⊖ ℋn, one has h(Wil) = J(RJ(Wil,·),h) = 0, so for j = 1, 2 and . Hence, by the same arguments used in the proof of Lemma 6.4,

| (6.7) |

where the last equality follows from qn ≍ n2/(r+1)+ε and Condition A7.

Let η* be the projection of η̂* in ℋn. Setting f = η̂* and g = η̂* − η* in (6.4) and noting that J(η*, η̂* − η*) = 0, some algebra yields

| (6.8) |

Recall that with E[γν] = 0 and . An application of the Cauchy-Schwartz inequality and Lemma 6.3 shows that the first term in (6.8) is of order {(V + λJ)(η̂* − η*)}1/2Op (n−1/2 λ−1/2r). By the mean value theorem, Condition A6, Lemma 6.4, and (6.7), the remaining term in (6.8) is of order op({λJ(η̂*−η*)(V + λJ)(η̂*−η0)}1/2). These, combined with (6.8) and the convergence rates of η̂*, yield λJ (η̂* − η*) = Op(n−1 λ−1/r + λ) and V (η̂* − η*) = Op(n−1 λ−1/r + λ).

Note that J(η̂* − η*, η*) = J(η̂* − η*, η̂) = 0, so J(η̂*, η̂* − η̂), = J (η̂* − η*) + J (η*, η* − η̂). Set f = η̂ and g = η̂ − η* in (6.4), and set f = η̂* and g = η̂* − η̂ in (6.4). Adding the resulted equations yields

| (6.9) |

By the mean value theorem, Condition A6, and Lemma 6.4, the left-hand side of (6.9) is bounded from below by

For the right-hand side, the terms in the first and second brackets are respectively of the orders {(V + λJ)(η̂* − η*)}1/2Op(n−1/2 λ−1/2r) and op({λJ(η̂* − η*)(V + λJ)(η̂* − η0)}1/2) by similar arguments for (6.8), and the terms in the third bracket is of the order

by Condition 3, Lemma 6.4, and (6.7). Putting all these together, one obtains (V + λJ)(η̂ − η*) = Op(n−1 λ−1/r + λ) and hence (V + λJ)(η̂ − η0) = Op (n−1 λ−1/r + λ). And an application of Condition A7 yields the final convergence rates.

Proof for the Asymptotic Properties of β̂

Let Pn be the empirical measure of (Xi, Δi = 1, Zi), i = 1, … , n such that it is related to the empirical measure Qn of (Xi, Δi, Zi), i = 1, … , n by Let . Let P be its corresponding (sub)probability measure. Let L2(P) = {f : ∫ f2dP < ∞} and || · ||2 be the usual L2-norm. For any subclass F of L2(P) and any ε > 0, let N[](ε, F, L2(P)) be the bracketing number and

Lemma 6.5

Let m0(t, u, w; β, η) = uT β+η(w)−log s [β, η ](t), m1(t, u, w; s, β, η) = 1[s≤t] exp(uT β + η(w)), and m2(t, u, w; s, β, η, f) = 1[s≤t]f (u, w) exp(uT β + η(w)). Define the classes of functions

Then and J[](δ, Mj, L2(P)) = cjδ{qn + log(1/δ)}1/2 j = 1, 2.

Proof

The proof is similar to that of Corollary A.1 in [10] and thus omitted here.

Lemma 6.6

Proof

Write

where A1n = sn [U; β0, η̂] (t)−s [U; β0, η̂] (t) and A2n = sn [β0, η̂](t)−s[β0, η̂](t). Note that qn = o(n1/2), hence the result follows from Lemma 3.4.2 of [27] and Lemma 6.5.

Proof of Theorem 2.2

Let γn = n−1/2. To prove 2.2(i), we need to show that ∀δ > 0, there exists a large constant C such that

Consider ℒP(β0 + γnυ) − ℒP(β0). We can decompose it to the sum of Dn1 = lp(β0 + γnυ) − lp(β0) and the penalty difference Dn2. As shown in [7], under the assumption of an = O(n−1/2) and bn = o(1), n−1 Dn2 is bounded by

| (6.10) |

where s is the number of nonzero elements in β0.

Applying the second order Taylor expansion to n−1 Dn1 gives

| (6.11) |

with , and , where U(u, w) ≡ u.

Let . Note that . We have

| (6.12) |

where

Lemma 3.4.2 of [27] and Lemma 6.5 indicate that (Pn − P) {s−1[β0, η̂] (t) s[U − Ūn; β0, η̂](t)} = op(n−1/2), where the fact that qn = op(n−1/2) is used again. Also (Pn − P) {U − Ūn} = Op(n−1/2) by the LLN. Hence we have I1n = Op(n−1/2). Lemma 6.6 gives I2n = Op(n−1/2). Lastly, by the boundedness assumption I3n = P {s−1 [β0, η̂](t) s[Ūn − U; β0, η̂] (t)} = Op (E|Ūn − U|) = Op (n−1/2). Hence J1n = Op (n−1/2). Also, J2n converges to V(U) > 0. Thus, when C is sufficiently large, the second term in (6.11) dominates both terms in (6.10). And Theorem 2.2(i) follows.

Next, we shall show the sparsity of β̂. It suffices to show that for any given β1 satisfying ||β1 − β10|| = Op (n−1/2) and any j = s + 1, … , d, ∂ℒP (β)/∂βj > 0 for 0 < βj < Cn−1/2 and ∂ℒP (β)/∂βj < 0 for −Cn−1/2 < βj < 0. For βj ≠ 0 and j = s + 1, … , d,

Similar to bounding J1n, the first term can be shown to be Op(n−1/2). Recall that and . Hence the sign of ∂ℒp (β)/∂βj is completely determined by that of βj. Then β̂2 = 0.

Lastly, we show the asymptotic normality of β̂1 using the result in [21]. Let zi = (Xi, Δi, Zi). Note that β̂1 is the solution of the estimating equation:

| (6.13) |

where and . Let

be the Fréchet derivative of M(z, β10, η) at η0. Since the convergence rate of η̂ is n−r/[2(r+1)] = o(n−1/4), the linearization assumption (Assumption 5.1) in [21] is satisfied. A derivation similar to bounding (6.12) can verify the stochastic assumption (Assumption 5.2) in [21]. Direct calculation yields E[D(z, η − η0)] = 0 for η close to η0. Then the mean-square continuity assumption (Assumption 5.3) in [21] also holds with α(z) ≡ 0. By Lemma 5.1 in [21], β̂1 thus has the same distribution as the solution to the equation

A straightforward simplification yields the result.

Acknowledgments

We would like to thank the Associate Editor and two referees for their insightful comments that have improved the article.

Contributor Information

Pang Du, Department of Statistics, Virginia Tech, Blacksburg, VA 24061, USA, pangdu@vt.edu.

Shuangge Ma, Department of Epidemiology and Public Health, Yale University School of Medicine, New Haven, CT 06520, USA, shuangge.ma@yale.edu.

Hua Liang, Department of Biostatistics and Computational Biology, University of Rochester, Rochester, NY 14642, USA, hliang@bst.rochester.edu.

References

- 1.Andersen PK, Gill RD. Cox’s regression model for counting processes: A large sample study. Ann Statist. 1982;10:1100–1120. [Google Scholar]

- 2.Breiman Leo. Heuristics of instability and stabilization in model selection. Ann Statist. 1996;24(6):2350–2383. [Google Scholar]

- 3.Cai J, Fan J, Jiang J, Zhou H. Partially linear hazard regression for multivariate survival data. J Amer Statist Assoc. 2007;102(478):538–551. [Google Scholar]

- 4.Cai J, Fan J, Li R, Zhou H. Variable selection for multivariate failure time data. Biometrika. 2005;92:303–316. doi: 10.1093/biomet/92.2.303. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Efron B, Hastie T, Johnstone I, Tibshirani R. Least angle regression (with discussion) Ann Statist. 2004;32(2):407–499. [Google Scholar]

- 6.Fan J, Gijbels I, King M. Local likelihood and local partial likelihood in hazard regression. Ann Statist. 1997;25(4):1661–1690. [Google Scholar]

- 7.Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. J Amer Statist Assoc. 2001;96(456):1348–1360. [Google Scholar]

- 8.Fan J, Li R. Variable selection for Cox’s proportional hazards model and frailty model. Ann Statist. 2002;30(1):74–99. [Google Scholar]

- 9.Gu C. Smoothing Spline ANOVA Models. Springer-Verlag; New York: 2002. [Google Scholar]

- 10.Huang J. Efficient estimation of the partly linear additive Cox model. Ann Statist. 1999;27(5):1536–1563. [Google Scholar]

- 11.Huang JZ, Kooperberg C, Stone CJ, Truong YK. Functional ANOVA modeling for proportional hazards regression. Ann Statist. 2000;28(4):961–999. [Google Scholar]

- 12.Huang JZ, Liu L. Polynomial spline estimation and inference of proportional hazards regression models with flexible relative risk form. Biometrics. 2006;62:793–802. doi: 10.1111/j.1541-0420.2005.00519.x. [DOI] [PubMed] [Google Scholar]

- 13.Johnson BA. Variable selection in semiparametric linear regression with censored data. J Roy Statist Soc Ser B. 2008;70(2):351–370. [Google Scholar]

- 14.Johnson BA, Lin DY, Zeng D. Penalized estimating functions and variable selection in semiparametric regression models. J Amer Statist Assoc. 2008;103(482):672–680. doi: 10.1198/016214508000000184. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Kalbfleisch JD, Prentice RL. The Statistical Analysis of Failure Time Data. Wiley; New York: 2002. [Google Scholar]

- 16.Kim Y-J, Gu C. Smoothing spline Gaussian regression: More scalable computation via efficient approximation. J Roy Statist Soc Ser B. 2004;66:337–356. [Google Scholar]

- 17.Klein JP, Moeschberger ML. Survival Analysis: Techniques for Censored and Truncated Data. Springer-Verlag Inc; 1997. [Google Scholar]

- 18.Leng C, Zhang HH. Model selection in nonparametric hazard regression. Journal of Nonparametric Statistics. 2006;18(7-8):417–429. [Google Scholar]

- 19.Lin Y, Zhang HH. Component selection and smoothing in smoothing spline analysis of variance models. Ann Statist. 2006;34(5):2272–2297. [Google Scholar]

- 20.Murphy SA, van der Vaart AW. On profile likelihood (with discussion) J Amer Statist Assoc. 2000;95(450):449–465. [Google Scholar]

- 21.Newey WK. The asymptotic variance of semiparametric estimators. Econometrica. 1994;62:1349–1382. [Google Scholar]

- 22.Osborne Michael R, Presnell Brett, Turlach Berwin A. On the LASSO and its dual. J Comput Graph Statist. 2000;9(2):319–337. [Google Scholar]

- 23.O’Sullivan F. Nonparametric estimation in the Cox model. Ann Statist. 1993;21:124–145. [Google Scholar]

- 24.Park Trevor, Casella George. The Bayesian Lasso. J Amer Statist Assoc. 2008;103(482):681–686. [Google Scholar]

- 25.Tibshirani RJ. Regression shrinkage and selection via the lasso. J Roy Statist Soc Ser B. 1996;58:267–288. [Google Scholar]

- 26.Tibshirani Robert. The lasso method for variable selection in the Cox model. Statist in Med. 1997;16:385–395. doi: 10.1002/(sici)1097-0258(19970228)16:4<385::aid-sim380>3.0.co;2-3. [DOI] [PubMed] [Google Scholar]

- 27.van der Vaart AW, Wellner JA. Weak Convergence and Empirical Processes. Springer-Verlag Inc; 1996. [Google Scholar]

- 28.Wahba G. volume 59 of CBMS-NSF Regional Conference Series in Applied Mathematics. SIAM; Philadelphia: 1990. Spline Models for Observational Data. [Google Scholar]

- 29.Weinberger HF. volume 15 of CBMS-NSF Regional Conference Series in Applied Mathematics. SIAM; Philadelphia: 1974. Variational Methods for Eigenvalue Approximation. [Google Scholar]

- 30.Yin G, Li H, Zeng D. Partially linear additive hazards regression with varying coefficients. J Amer Statist Assoc. 2008;103(483):1200–1213. [Google Scholar]

- 31.Zou H. The adaptive LASSO and its oracle properties. J Amer Statist Assoc. 2006;101(476):1418–1429. [Google Scholar]

- 32.Zou H. A note on path-based variable selection in the penalized proportional hazards model. Biometrika. 2008;95(1):241–247. [Google Scholar]

- 33.Zou H, Hastie T, Tibshirani R. On the “degree of freedom” of the LASSO. Ann Statist. 2007;35(5):2173–2192. [Google Scholar]

- 34.Zou H, Li R. One-step sparse estimates in nonconcave penalized likelihood models (with discussion) Ann Statist. 2008;36(4):1509–1533. doi: 10.1214/009053607000000802. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Zucker DM, Karr AF. Nonparametric survival analysis with time-dependent covariate effects: A penalized partial likelihood approach. Ann Statist. 1990;18:329–353. [Google Scholar]