Summary

In the precedence effect, sounds emanating directly from the source are localized preferentially over their reflections. Although most studies have focused on the delay between the onset of a sound and its echo, humans still experience the precedence effect when this onset delay is removed. We tested in barn owls the hypothesis that an ongoing delay, equivalent to the onset delay, is discernible from the envelope features of amplitude-modulated stimuli and may be sufficient to evoke this effect. With sound pairs having only envelope cues, owls localized direct sounds preferentially and neurons in their auditory space-maps discharged more vigorously to them, but only if the sounds were amplitude modulated. Under conditions that yielded the precedence effect, acoustical features known to evoke neuronal discharges were more abundant in the envelopes of the direct sounds than of the echoes, suggesting that specialized neural mechanisms for echo suppression were unnecessary.

Introduction

In nature, sounds from an object of interest reflect off of nearby surfaces and arrive at our ears shortly after the arrival of the direct sound. If the sound is long and the delay is short, the direct and reflected waveforms will summate in each ear, altering the spectral and temporal features of the inputs to the auditory system. Despite this complexity in the physical signal, we effortlessly localize the actual sound-source and not the reflective surface. The perceptual dominance of the direct sound over the reflection, or echo, has been termed “localization dominance” (Litovsky et al., 1999) and has been documented in a variety of classes including birds, mammals, and invertebrates (Dent and Dooling, 2004). Localization dominance and its complement, lag “discrimination suppression”, which refers to the weaker perceptual salience of the echo, are components of the precedence effect (Blauert, 1997; Haas, 1951; Litovsky et al., 1999; Wallach et al., 1949). As the delay between the direct (lead) sound and the reflection (lag sound) increases, the direct-sound’s dominance subsides, the reflection’s salience increases, and the “echo threshold” is said to have been crossed.

Normally, a direct sound arrives slightly before its echo, and this time advantage has been the focus of most precedence effect studies, especially those that use transient stimuli such as clicks. Click-like stimuli have the practical advantage of eliminating the temporal overlap of the leading and lagging sounds. In nature, however, sounds are often longer than the delay so that the leading and lagging sounds overlap. Moreover, the onsets of naturalistic sounds are sometimes gradual, which can obscure the lead/lag relationship at the sound pair’s beginning. Interestingly, Zurek (1980) and, more recently, Dizon and Colburn (2006) showed that human listeners experience localization dominance even when the onset (and offset) time-of-arrival differences are removed from lead/lag sound pairs. Here, we test the hypothesis that the delays between corresponding envelope peaks, or “ongoing echo delays” (OEDs), may be sufficient to cause localization dominance.

This hypothesis predicts that localization dominance with lead/lag pairs having synchronized onsets (and offsets) would depend on the depth of envelope modulations. We test this prediction and examine possible neural correlates in the barn owl (Tyto alba), a nocturnal predator whose sound localization is guided by activity on a topographic map of auditory space generated in the external nucleus of its inferior colliculus (ICx; Konishi and Knudsen, 1978). Lesions of this auditory space-map lead to scotoma-like deficits in sound localization (Wagner, 1993), and the resolving power of its neurons can account for the owl’s auditory spatial discrimination (Bala et al., 2003; Bala et al., 2007). Moreover, studies have shown that at short delays, the neural representation of the direct sound is stronger on the map than that of the echo (Keller and Takahashi, 1996b; Nelson and Takahashi, 2008; Spitzer et al., 2004). Correspondingly, owls localize the direct sound preferentially (Keller and Takahashi, 1996b; Nelson and Takahashi, 2008; Spitzer and Takahashi, 2006), and their ability to discriminate changes in the locations of simulated echoes is poorer (Spitzer et al., 2003).

We first confirm that owls, like humans, localize the leading sound preferentially with only OEDs. This preference, however, required deep amplitude modulations. With stimuli used in the behavioral experiments, we also demonstrate that responses on the auditory space map are considerably stronger to the leading sound, but again, only if the envelopes were deeply amplitude-modulated. We propose a general mechanism for localization dominance based on temporal superposition and the temporal response properties of space map neurons. This mechanism requires no specialized circuitry for the “suppression” of echoes, such as lateral inhibition (Burger and Pollak, 2001; Fitzpatrick et al., 1995; Keller and Takahashi, 1996b; Lindemann, 1986; Pecka et al., 2007; Spitzer et al., 2004; Yin, 1994).

Results

Behavior

We first determined whether or not owls experience localization dominance when only OEDs are available, by measuring the accuracy and precision of the owl’s head turns. For owls, whose eyes are nearly immobile in their sockets, these head-saccades are natural orienting responses analogous to the ocular saccades of primates. The stimuli, synthesized as explained in Figure 1, consisted of broadband noise-bursts (2-11 kHz) multiplied by one of 50 different noise envelopes having spectra that encompassed the behavioral and neural (IC) modulation transfer functions of the barn owl (< 150 Hz, Dent et al., 2002; Keller and Takahashi, 2000). The noise envelopes were modulated at 0, 50, or 100% before delaying the lag sound by 3, 12, or 24 ms and truncating the leading and lagging segments.

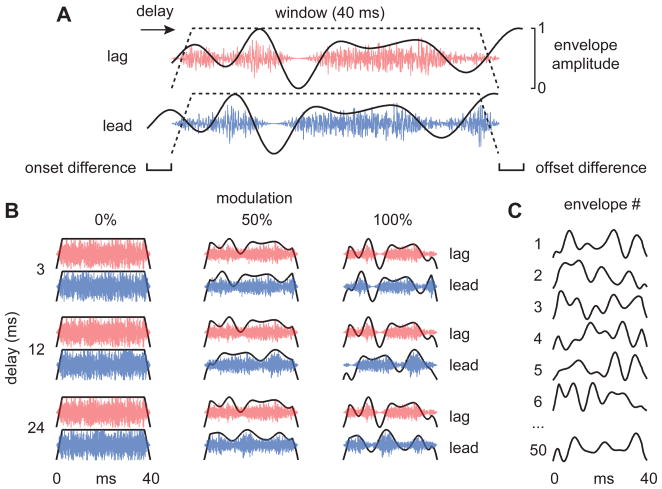

Figure 1. Stimulus configurations.

(A) Lead (bottom, blue) and lag (top, red) waveforms (100% modulation). Carriers were one of 50 arbitrary broadband noises (2-11 kHz). The stimuli were generated by delaying an identical copy of the lead sound 3, 12, or 24 ms and then truncating them so that the lead and lag sounds had synchronized onsets and offsets (trapezoidal dashed lines; 40 ms duration; 2.5 ms ramps). The delay was thus defined only by the ongoing echo delay (OED). (B) Three envelope modulation depths and three OEDs resulted in nine different stimulus combinations. Note that 0% modulation (left column) indicates that no modulation was purposely imposed; only the noise carrier’s intrinsic modulations were present. (C) Examples of the arbitrary envelopes used. All envelopes had random phase spectra and amplitude spectra that spanned the owl’s modulation transfer function (<150 Hz). For each behavioral trial, an envelope and carrier were chosen at random and applied to the lead and lag sounds. For physiology, all 50 envelopes were presented in a random order over 1–3 repetitions.

Prior to truncation, the lagging sound was an identical copy of the leading sound (same noise carrier, same envelope), but with a delay. For a trial, two speakers, diametrically opposed across the owl’s center of gaze, were chosen to emit a direct sound and a simulated echo (Fig. 2A). By thus positioning the sources, we could readily classify a saccade as being directed toward the lead or lag, even in cases when the error was large (Fig. 2B). Sounds were also presented from single speakers to monitor each bird’s localization performance in a standard localization task. We report data from three birds, C, S, and T.
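The stimulus construction described above and in Figure 1 can be sketched in Python. This is a minimal illustration, not the synthesis code used in the study: the sampling rate, the sum-of-cosines noise, the number of components, and the rule that maps modulation depth to gain are all assumptions introduced here. Only the 2-11 kHz carrier band, the <150 Hz envelope band, the 40 ms duration, the 2.5 ms ramps, and the OED values come from the text.

```python
import math
import random

FS = 40_000          # sampling rate in Hz (assumed; not stated in the text)
DUR = 0.040          # stimulus duration in s (40 ms, Fig. 1A)
RAMP = 0.0025        # onset/offset ramp in s (2.5 ms, Fig. 1A)


def random_tones(rng, n, f_lo, f_hi):
    """Return n (frequency, phase) pairs drawn uniformly from a band."""
    return [(rng.uniform(f_lo, f_hi), rng.uniform(0.0, 2.0 * math.pi))
            for _ in range(n)]


def tone_sum(tones, t):
    """Evaluate a sum of cosines, bounded by +/-1, at time t (t may be negative)."""
    return sum(math.cos(2.0 * math.pi * f * t + p) for f, p in tones) / len(tones)


def window(t):
    """Trapezoidal gate shared by lead and lag, so onsets and offsets coincide."""
    if t < 0.0 or t > DUR:
        return 0.0
    return min(1.0, t / RAMP, (DUR - t) / RAMP)


def lead_lag_pair(oed, depth, seed=0):
    """Build lead and lag waveforms that differ only by an ongoing delay."""
    rng = random.Random(seed)
    carrier = random_tones(rng, 60, 2_000.0, 11_000.0)   # 2-11 kHz carrier
    env = random_tones(rng, 12, 1.0, 150.0)              # envelope below 150 Hz

    def source(t):
        e01 = 0.5 * (1.0 + tone_sum(env, t))             # envelope in [0, 1]
        gain = (1.0 - depth) + depth * e01               # assumed depth rule
        return gain * tone_sum(carrier, t)

    times = [i / FS for i in range(int(round(DUR * FS)) + 1)]
    lead = [window(t) * source(t) for t in times]
    lag = [window(t) * source(t - oed) for t in times]   # delayed copy, same gate
    return lead, lag


lead, lag = lead_lag_pair(oed=0.003, depth=1.0)
```

Because the same gate is applied to both waveforms after the delay, the pair starts and stops together, and the delay survives only in the ongoing portion of the sound.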

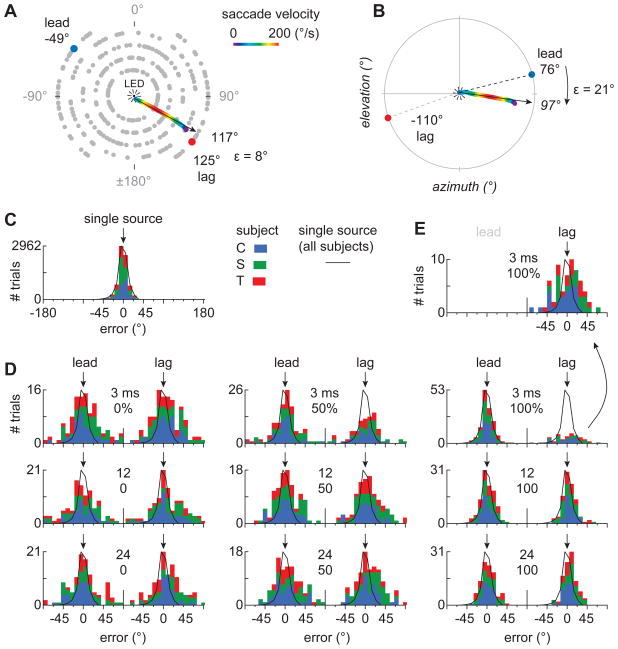

Figure 2. Head saccades.

(A) Speaker locations used in head-saccade experiments. Gray dots mark the possible speaker locations. At the start of a trial, the owl was required to fixate an LED (center) before a speaker or speaker-pair was activated. In trials with lead/lag pairs, the two speakers were diametrically opposed across the center of the array. The multicolored lines in A and B show sample saccades and their trajectories (arrow). Colors represent the angular velocity of the saccade. (C) Distribution of head-aims relative to single sources (0°) in three owls (C, S, and T). Each color represents a different bird. The black line is the normalized average distribution of head-aims for all 3 subjects. (D) Distributions of head saccades in precedence-effect trials for OEDs of 3, 12, and 24 ms and modulations of 0, 50, and 100%. The locations of the lead and lag sources are indicated by arrows (0°). The distributions converge at the center of the abscissa because the speakers were separated by 180°. The distribution of lag-directed saccades for an OED of 3 ms is magnified and re-plotted in (E).

Figure 2C shows the distribution of head-aims for single-source trials in all three birds. The abscissa represents localization error (ε), measured from the start of each saccade, using polar coordinates, and in reference to the polar angle of the speaker to which each saccade was directed (Fig. 2B). The center of each distribution represents saccade accuracy and its breadth represents saccade precision. Saccade radii, shown in Figure S1, were highly variable across trials and did not vary consistently with delay or modulation depth.
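The error measure and the lead/lag classification can be sketched as follows. This is an illustrative reading of the description above, with assumptions: head positions are treated as planar (x, y) coordinates, and a saccade is assigned to whichever of the two diametrically opposed sources lies closer in polar angle. The function names are hypothetical.

```python
import math


def polar_angle(start, end):
    """Polar angle (deg) of the vector from saccade start to end point."""
    dx, dy = end[0] - start[0], end[1] - start[1]
    return math.degrees(math.atan2(dy, dx))


def wrap180(angle):
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def classify_saccade(start, end, lead_angle):
    """Assign a saccade to the nearer of two diametrically opposed sources.

    Returns (label, error), where error is the signed angular deviation
    from the polar angle of the source the saccade was assigned to.
    """
    lag_angle = lead_angle + 180.0           # sources are 180 deg apart
    angle = polar_angle(start, end)
    err_lead = wrap180(angle - lead_angle)
    err_lag = wrap180(angle - lag_angle)
    if abs(err_lead) <= abs(err_lag):
        return "lead", err_lead
    return "lag", err_lag


# A head turn up and to the right, with the lead speaker at a polar angle of 30 deg.
label, error = classify_saccade(start=(0.0, 0.0), end=(10.0, 10.0), lead_angle=30.0)
```

With the sources 180° apart, the absolute error to the assigned source never exceeds 90°, which is why even imprecise saccades can be classified unambiguously.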

The upper left panel of Figure 2D shows the distribution of saccades elicited by lead/lag pairs with an OED of 3 ms and a modulation of 0%, i.e., when only the rather shallow modulations intrinsic to the noise carrier were present. Because the speaker pair was diametrically opposed across the central LED and the center of the owl’s gaze, the lead and lag sources are shown to be 180° apart. The distribution is bimodal and symmetric, indicating that the owls localized the lead and lag sources with equal frequency. In earlier studies with similar stimuli, but with the delays at the onset and offset intact, owls showed clear preferences for leading stimuli (Nelson and Takahashi, 2008; Spitzer and Takahashi, 2006). Without the explicit delay at the onset (or offset), we now observed no evidence for localization dominance (0% modulation). The center and right panels in the upper row show results obtained at the same 3 ms delay but with deeper modulations (50% center; 100% right). These distributions are asymmetric with more saccades to the leading source, suggesting that the owls experience localization dominance.

Thus, owls behaved in a manner consistent with localization dominance with stimulus pairs lacking onset (and offset) delays. Consistent with our hypothesis, the results also show that the ongoing delays in the envelope features were necessary and sufficient to induce localization dominance in owls.

Note that without the modulations (0%), the owls localized either source, suggesting that each sound of the pair was perceived as a spatially separate event. Although the two sounds’ carriers were identical, the 3-ms delay causes segments of the waveform in the leading source to align with segments in the lagging source that were 3 ms earlier and were therefore statistically uncorrelated in their fine-structure. Earlier studies have shown that the owl’s auditory system represents two, concomitant, uncorrelated noises as separate foci of activity on its auditory space map and that a delay of about 0.1 ms (100 μs) is sufficient to cause such decorrelation (Keller and Takahashi, 1996a, 2005). Thus, the decorrelated fine-structure allows two separate foci to form, while the envelope determines their relative strengths.
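The decorrelation argument can be checked with the textbook autocorrelation of band-limited noise. The sketch below assumes an idealized carrier with a flat spectrum between 2 and 11 kHz and ignores the envelope and the finite analysis window, so it only approximates the stimuli used here.

```python
import math


def bandpass_noise_autocorr(tau, f_lo=2_000.0, f_hi=11_000.0):
    """Normalized autocorrelation of noise with a flat spectrum on [f_lo, f_hi]."""
    bandwidth = f_hi - f_lo
    center = 0.5 * (f_lo + f_hi)
    x = math.pi * bandwidth * tau
    sinc = 1.0 if x == 0.0 else math.sin(x) / x
    return sinc * math.cos(2.0 * math.pi * center * tau)


for delay_ms in (0.0, 0.1, 3.0):
    rho = bandpass_noise_autocorr(delay_ms * 1e-3)
    print(f"delay {delay_ms:4.1f} ms -> correlation {rho:+.3f}")
```

Under these assumptions the correlation is already below 0.1 in magnitude at a delay of 0.1 ms and is essentially zero at 3 ms, consistent with the two sources being represented as separate foci.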

The lower two rows in Figure 2D show results for longer OEDs (12, 24 ms). The distributions are all bimodal and symmetric, suggesting that localization dominance no longer operates at these longer OEDs, regardless of the modulation depth.

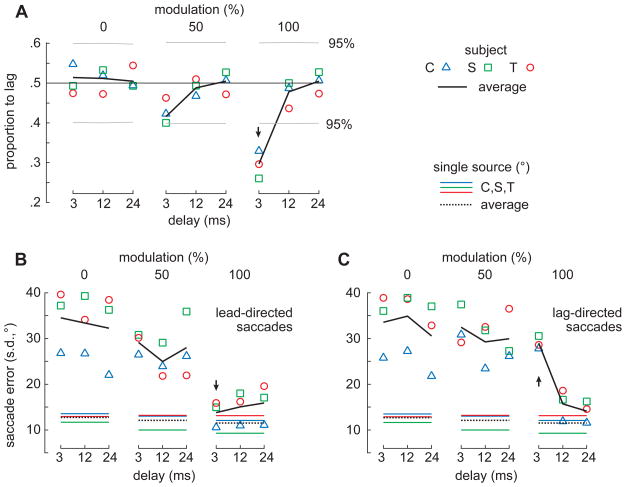

The effects of OED and modulation depth on localization dominance (Fig. 2D) are summarized in Figure 3A, which plots the proportion of lag-directed saccades for all subjects (black line; ± 95% confidence interval; gray horizontal lines) against OED for envelope modulations of 0% (left), 50% (center), and 100% (right). These data show that when the envelope modulations are minimal (left), owls saccade to the lag source on roughly half the trials, regardless of the OED. For deeper modulations and short OEDs (top, left), the owls preferentially localized the lead source (100%; subject C: p=0.046, S: p=0.039, T: p=0.0016, all subjects: p<1×10⁻⁶, contingency table analyses).
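A test of whether the proportion of lag-directed saccades departs from 0.5 can be sketched as below. The exact procedure used for the analyses above is not specified beyond "contingency table" and "log likelihood ratio", so this is one plausible form: a G-test against an equal split, plus a Wilson interval for the proportion. The counts are hypothetical and serve only to exercise the code.

```python
import math


def g_test_equal_split(n_lag, n_lead):
    """Log-likelihood-ratio (G) test of a 50:50 split between two categories.

    Returns (G, p), with p from the chi-square distribution with 1 degree
    of freedom, whose survival function is erfc(sqrt(G / 2)).
    """
    total = n_lag + n_lead
    expected = total / 2.0
    g = 0.0
    for observed in (n_lag, n_lead):
        if observed > 0:                       # 0 * log(0) is taken as 0
            g += 2.0 * observed * math.log(observed / expected)
    g = max(g, 0.0)                            # guard against rounding below zero
    return g, math.erfc(math.sqrt(g / 2.0))


def wilson_interval(successes, total, z=1.96):
    """Wilson score interval for a binomial proportion (95% for z = 1.96)."""
    p_hat = successes / total
    denom = 1.0 + z * z / total
    center = (p_hat + z * z / (2.0 * total)) / denom
    half = z * math.sqrt(p_hat * (1.0 - p_hat) / total
                         + z * z / (4.0 * total * total)) / denom
    return center - half, center + half


# Hypothetical counts, for illustration only (not data from this study).
n_lag, n_lead = 30, 70
g, p = g_test_equal_split(n_lag, n_lead)
low, high = wilson_interval(n_lag, n_lag + n_lead)
```

A proportion whose interval excludes 0.5 corresponds to the conditions flagged as significant in Figure 3A.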

Figure 3. Head saccade characteristics.

(A) Proportion of lag-directed saccades for each bird (colored symbols). Black lines show the average of the birds. Horizontal gray lines and an arrow indicate proportions that differed significantly from 0.5 (log likelihood ratio, 95% confidence). (B, C) Saccade error (ε), defined as the standard deviation (s.d.) of each saccade distribution (Figs. 2C-E), plotted against OED and modulation depth for lead-directed (B) and lag-directed (C) saccades. Colored lines show the error with single sounds. See also Figure S1.

Inspection of Figure 2 shows that localization error was also affected by OED and modulation depth. The outline of the distribution of saccades to single-sources (Fig. 2C) is superimposed on each histogram in Figure 2D and demonstrates that the distributions are broader (i.e., the saccades are less precise) than those toward single sources when modulations are shallow (Fig. 2D, left and center columns). When the modulations were maximal (right column), saccade precision to the lead and lag sources rivals that to single sources.

The trend noted above is more readily apparent in Figures 3B and C which plot saccade error against delay for the three modulation depths. Comparing across panels of Figure 3B, one can see that lead-directed saccades increased in precision (i.e., decreased in error) with modulation depth and were nearly as precise as saccades to single sources (colored lines, black dashed line) when the modulation depth was 100% (s.d.<~20°).

Lag-directed saccades (Fig. 3C) were generally imprecise for all OEDs at modulation depths of 0 and 50% and for the shortest OED (3 ms) at 100%. In the latter case, saccade precision was roughly equivalent to that towards unmodulated stimuli (0%; s.d.>~21°). For the longer delays at 100% modulation, saccades were nearly as precise as those to single sources. Comparison of points indicated by arrows in the right-hand panels of Figures 3B and C (at 3 ms) shows that saccades to deeply modulated lag sounds were significantly less precise than those that were lead-directed (subject C: p=0.0001, S: p=0.0001, T: p=0.0045, all subjects: p=0.0001; Levene's test).

Physiology

The behavioral results above suggest that owls experience localization dominance with stimuli in which the lead/lag relationship is defined only by the ongoing envelope features of the two sounds. We now describe the responses evoked in neurons of the owl’s auditory space map by stimuli used in the behavioral experiments.

The space-map neurons of our sample all had well-defined spatial receptive fields (SRF; N=28 cells) as shown in Figure 4A, which represents the firing rate (color-scale) of a typical cell evoked by stimuli placed at different locations in virtual auditory space. In the diagram, negative azimuths and elevations correspond to loci that are, respectively, to the bird’s left and below its eye-level. For trials with lead/lag pairs, one source, called the “target”, was placed in the cell’s SRF, and the other source, called the “masker” was placed outside the SRF at a mirror-symmetric location across the intersection of the owl’s mid-sagittal plane and eye-level (gray crosshair, Fig. 4A). For any given trial, the sound-source in the cell’s SRF, i.e., the target, could lead or lag.

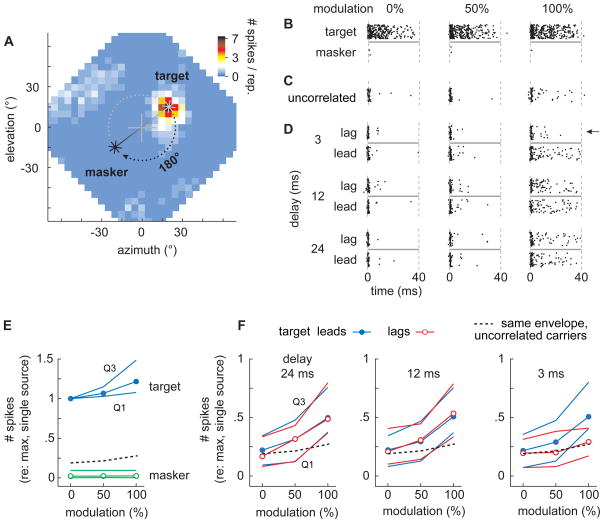

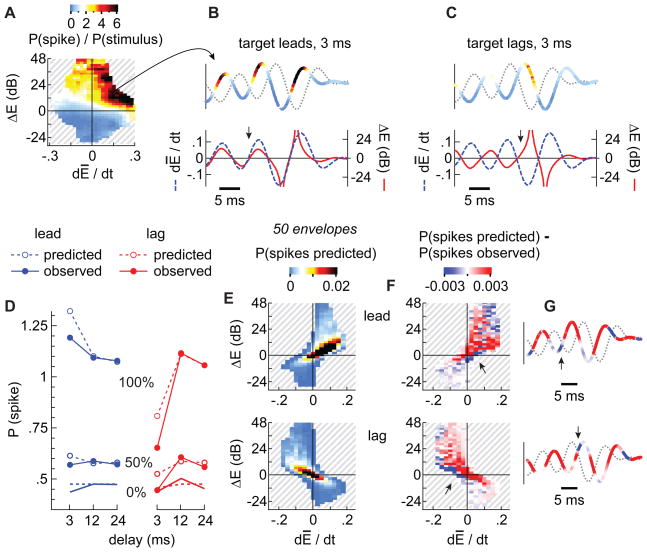

Figure 4. Neural responses.

(A) Spatial response of a typical space-map neuron. The octagonal region represents the locations in the frontal hemisphere from which virtual auditory stimuli were presented. Crosshairs at the center represent the center of the owl’s gaze. Pixel colors represent the average spike count elicited by a single source emitting broadband noise (100 ms). This cell’s spatial receptive field (SRF) was therefore in the upper-right quadrant of frontal space. In trials with lead/lag pairs, the source in the cell’s SRF is referred to as the target and the other source, which was always placed diametrically across from the center of gaze, is referred to as the masker. (B) Spike-raster plots of neural responses elicited by the target (top row) and masker (second row) having envelope modulations of 0%, 50%, and 100%. (C) Responses evoked by two uncorrelated noises (see text). (D) Responses evoked by lead/lag pairs analogous to those used in the behavioral experiments (envelope modulations of 0, 50, 100%; OEDs of 3, 12, 24 ms). Responses evoked by lagging targets are shown in the rows above those evoked by leading targets. (E) Median spike counts (normalized) plotted against modulation depth for targets (blue), maskers (green unfilled circles), and two uncorrelated noises (black dashed line). Thin lines demarcate the first and third quartiles about the median. (F) Median spike counts (normalized) elicited by leading (blue filled circles) and lagging targets (red unfilled circles) and by two simultaneous sources (black dashed lines) are plotted against modulation depth for OEDs of 24 ms (left), 12 ms (center), and 3 ms (right).

We also conducted trials with single-sources at the locations of the target and masker, and with two sources simultaneously emitting noise bursts with uncorrelated carriers but identical, synchronous envelopes from the masker and target loci (no OEDs). The latter stimulus, which we will refer to as “two uncorrelated noise carriers”, provides a reference against which to compare the neuronal responses elicited by lead/lag pairs. Specifically, the superposition of the leading and lagging sounds in each ear would be expected to cause the frequency-specific binaural cues at any given instant and in any given spectral band to correspond to different locations in auditory space, resulting in a spatial decorrelation. Given the sensitivity of space-map cells to these frequency-specific binaural cues (Euston and Takahashi, 2002; Spezio and Takahashi, 2003), the effects of the lead/lag delay must be differentiated from the effects of spatial decorrelation. The neural responses to two uncorrelated noise carriers thus allow us to account for the effects of delay per se, independently of spatial decorrelation.

The responses of a typical neuron to single sources placed at target and masker loci are shown as dot-raster displays for three envelope modulation depths (Fig. 4B). The top and bottom halves of each panel show the responses to the target and masker respectively. As shown, the target evoked a vigorous burst at the stimulus’ onset that decayed to a lower steady-state level. This burst/steady-state response property is clearest in the 0% modulation condition (Fig. 4B left). The responses increased slightly with increased modulation depths (Fig. 4B center, right). By contrast, the masker, which was outside the cell’s SRF, evoked few if any spikes regardless of modulation depth (Fig. 4B bottom rasters).

Responses evoked in the same neuron by two uncorrelated noises are shown in the spike-raster displays of Figure 4C. Responses evoked by lead/lag pairs having different combinations of OED and modulation depth are shown in Figure 4D. The top half of each panel shows the response when the target lagged, and the bottom half, when the target led. By and large, the firing rates are similar whether the target lagged or led, except in the case shown in the upper right, which depicts the response to a 3-ms OED and 100% modulation. In this case, the cell’s response to a leading target is considerably stronger than its response to a lagging target. Finally, as with single sources and two uncorrelated noises, deeper modulations caused higher firing rates whether the target led or lagged.

The observations above are summarized for our sample population of cells in the bottom panels of Figure 4 (50 envelopes, 1–3 repetitions). Figure 4E plots against modulation depth the normalized firing rate of all of our cells evoked by single maskers (green), single targets (blue), and by two uncorrelated noises (dashed line). Overall, targets evoked strong responses that increased slightly with modulation depth. Maskers, by contrast, evoked little if any activity regardless of modulation depth. The responses to two uncorrelated noises (dashed line) fell between these two extremes.

Each panel in Figure 4F shows the responses of cells to lead/lag stimuli plotted against modulation depth for OEDs of 24 (left), 12, or 3 ms. Responses to leading targets (blue) increased with modulation depth but did not vary with OED (P<1×10⁻⁶; df=26; Kruskal-Wallis, Student-Newman-Keuls test, P=0.05). Responses to the lagging targets (red) were similar to those of leading targets at the longer delays of 12 and 24 ms.

When the OED was 3 ms (Fig. 4F right), however, we observed an interesting difference in the responses to the lead and lag relative to those evoked by two uncorrelated noises. Specifically, the responses to the lead sound for the deeper modulations were stronger than those evoked by two uncorrelated noises, while the responses to the lagging sound were similar to but not less than those evoked by two uncorrelated noises. In other words, the weak response to the lag simply reflects the effects of spatial decorrelation resulting from the superposition of two sounds. There is no evidence of a suppression of the lag response that is attributable to the delay per se.

Our behavioral and physiological experiments show that the conditions that result in localization dominance (Figs. 2, 3), i.e., short OEDs and deep modulations, are also those that result in a preferential neuronal response to the leading source (Fig. 4). This correlation between the differential neural responses to the leading and lagging sources and localization dominance has been pointed out earlier, beginning with the work of Yin (1994). What causes the lead sound to evoke a greater neural response than that evoked by the lag sound at short delays, or by two uncorrelated noises, and why does this effect depend upon modulation depth?

Analyses of acoustical cues and neuronal responses

We hypothesize that the stronger neural response to the leading sound (above) might be explained by a combination of simple acoustical principles and the firing properties of space-map neurons.

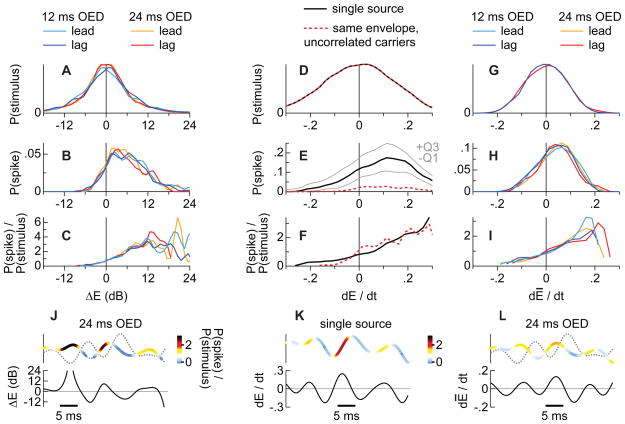

First, neurons in the owl’s space map are highly selective for the binaural cues that correspond to the locations of their SRFs (Fig. 4A). When two sources concurrently emit sounds with overlapping amplitude spectra, the frequency-specific binaural cues assume values that are the average of each individual source’s cues weighted by their amplitudes. Thus, if one of the two sources momentarily achieves a higher amplitude than the other, the binaural cues approximate the values of the higher-amplitude source for that moment (Blauert, 1997; Keller and Takahashi, 2005; Snow, 1954; Takahashi and Keller, 1994). A space map neuron is therefore likely to fire when the amplitude modulation at a given instant favors the target, which is in its SRF, over the masker. This preference for amplitudes that favor the target is shown in Figures 5A-C. Figure 5A shows the distribution of the relative amplitudes of target and masker, ΔE, sampled at regular (0.1 ms) intervals for conditions in which the two sounds were effectively uncorrelated (OEDs of 12 and 24 ms). The distribution in Figure 5A shows that positive (target > masker) and negative (target < masker) ΔE values were equally likely. The relative amplitude was also sampled within a ±1 ms time window surrounding each spike, instead of at regular intervals, and accumulated into the distribution of Figure 5B (28 cells). This distribution, by contrast, demonstrates that the probability of spiking is highest when ΔE > 0. Figure 5C scales the spiking probability by the frequency of occurrence of ΔE.
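The two ways of sampling ΔE, at regular 0.1 ms intervals and within ±1 ms of each spike, can be sketched as follows. This is a toy illustration under stated assumptions: ΔE is taken as the plain difference between target and masker envelopes, the spike-centered window is summarized by its mean, and both the sinusoidal envelopes and the spike times are hypothetical.

```python
import math

DT = 1e-4            # 0.1 ms sampling interval, as in the text
HALF_WIN = 1e-3      # +/- 1 ms window around each spike, as in the text


def delta_e(env_target, env_masker):
    """Relative amplitude, here taken as target minus masker (an assumption)."""
    return [a - b for a, b in zip(env_target, env_masker)]


def spike_triggered(values, spike_times):
    """Mean of `values` inside the +/- 1 ms window around each spike."""
    half = int(round(HALF_WIN / DT))
    out = []
    for t in spike_times:
        i = int(round(t / DT))
        seg = values[max(0, i - half): i + half + 1]
        if seg:
            out.append(sum(seg) / len(seg))
    return out


def fraction_positive(values):
    """Share of samples in which the target exceeds the masker."""
    return sum(v > 0.0 for v in values) / len(values)


# Toy envelopes: two 50 Hz modulations in antiphase, sampled for 100 ms.
n = 1000
env_target = [0.5 * (1.0 + math.sin(2.0 * math.pi * 50.0 * i * DT)) for i in range(n)]
env_masker = [0.5 * (1.0 - math.sin(2.0 * math.pi * 50.0 * i * DT)) for i in range(n)]
diff = delta_e(env_target, env_masker)

spikes = [0.005, 0.025, 0.045]   # hypothetical spike times (s) at target peaks
regular = fraction_positive(diff)
triggered = fraction_positive(spike_triggered(diff, spikes))
```

In this toy case roughly half of the regularly sampled values are positive, whereas all spike-centered values are, which is the kind of contrast shown between Figures 5A and 5B.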

Figure 5. Generic neuronal preferences.

(A) Distribution of ΔE values obtained from lead/lag pairs with OEDs of 12 ms (blue lines) or 24 ms (red or orange lines) and 100% modulation. At these OED values, the carriers and envelopes are uncorrelated within a short time window (see text). Positive values indicate that the target’s amplitude was higher than the masker’s. (B) Spiking probability plotted against ΔE shows that neurons discharged when the target’s amplitude was higher than the masker’s. The ordinate represents median spike probability per stimulus and sampled cell. (C) Spiking probability scaled to the likelihood of ΔE values, obtained by dividing the distributions in A and B. (D–F) Distributions analogous to A-C for dĒ/dt, measured for single sounds and two uncorrelated noise carriers (dashed line). Positive derivatives indicate that the envelope’s amplitude was rising. Thin lines in E show the first and third spike-probability quartiles for the sampled cells (N=28). (G-I) Distributions analogous to A-C for dĒ/dt and lead/lag pairs having OEDs of 12 and 24 ms (solid lines). Similarity of F and I suggests that the neurons’ preference for positive derivatives remains similar whether there are two sounds or just one. Upper traces in J-L show representative lead/lag envelope pairs; the thicker, colored line represents the target’s waveform while the dotted line represents the masker’s waveform. The color of the target’s envelope indicates spike probability (warmer colors represent higher probabilities). Lower traces show ΔE (J), dĒ/dt (K), and dĒ/dt (L) from which the probabilities were estimated. See also Figure S2.

Second, neurons of the owl’s space map, like those of many other auditory structures, are especially sensitive to the onsets of sounds. When a sound’s envelope is unmodulated, the only onset is at the beginning of the sound. The firing rate is correspondingly highest there and diminishes as the sound continues (Fig. 4B; 0% depth). For the more deeply amplitude-modulated sounds, the stimulus resembles a series of distinct peaks, and the rising edge of each such peak may effectively serve as an onset-like event. A space map neuron is therefore likely to fire on the rising edges of single sounds or when the target’s envelope, which is in its SRF, is rising. The preference for rising amplitudes is shown in Figures 5D-F, which are analogous to Figures 5A-C, except that the stimuli analyzed were the envelopes of single sounds or two uncorrelated noises. As shown (Figs. 5E,F), the probability of spiking is highest when the derivative of an envelope segment (dĒ/dt) is positive even though positive and negative derivatives were equally likely (Fig. 5D). Figures 5G-I show the distributions related to dĒ/dt, but for pairs of sounds. For this analysis, the derivative dĒ/dt was measured using the composite envelope Ē of the masker and target ([Etarget + Emasker] / 2), as shown for a representative envelope pair in Figure 5L. Importantly, the distributions of spikes (Fig. 5H,I) show a bias for positive values much like those for single sources (Fig. 5E,F), suggesting that a cell’s preference for rising amplitudes remains similar in one- and two-source conditions. Additional distributions for an OED of 3 ms and 50% modulations are shown in Figure S2.

We next tested our hypothesis (above) that it is the conjunction of these two stimulus features, ΔE and dĒ/dt, that determines the responses of space-map neurons to lead/lag pairs. More specifically, space map neurons are most likely to discharge when the amplitude of the target exceeds that of the masker (ΔE > 0) while the composite envelope is rising (dĒ/dt > 0). According to our hypothesis, the leading source elicits a higher spike rate because these spike-conducive conditions are more abundant for the lead than for the lag when OEDs are short and modulations are deep. With longer OEDs and shallower modulations, the advantage for the leading sound disappears. To test this hypothesis, estimates of the derivative and difference were assigned to (three-dimensional) frequency distributions having axes of ΔE and dĒ/dt. This procedure was repeated for all 50 pairs of envelopes used in the experiments.
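Counting how often the two spike-conducive conditions coincide can be sketched with a synthetic envelope. The code below is a simplified illustration, not the analysis pipeline used here: it assumes a random-phase sum of cosines below 150 Hz at full modulation depth, defines ΔE as target minus masker, approximates dĒ/dt by a forward difference of the composite envelope, and ignores onset and offset ramps. All function names are hypothetical.

```python
import math
import random

DT = 1e-4                                     # 0.1 ms sampling step


def random_envelope(seed, n_samples, n_tones=12, f_max=150.0):
    """Random-phase envelope in [0, 1] with components below f_max (Hz)."""
    rng = random.Random(seed)
    tones = [(rng.uniform(5.0, f_max), rng.uniform(0.0, 2.0 * math.pi))
             for _ in range(n_tones)]
    out = []
    for i in range(n_samples):
        t = i * DT
        s = sum(math.cos(2.0 * math.pi * f * t + p) for f, p in tones)
        out.append(0.5 * (1.0 + s / n_tones))
    return out


def conducive_fraction(samples, shift, target_leads):
    """Fraction of samples with delta_E > 0 and a rising composite envelope.

    `shift` is the OED in samples; the lag is the lead delayed by `shift`.
    """
    hits = total = 0
    for i in range(shift, len(samples) - 1):
        lead_now, lag_now = samples[i], samples[i - shift]
        lead_next, lag_next = samples[i + 1], samples[i + 1 - shift]
        d_e = lead_now - lag_now if target_leads else lag_now - lead_now
        slope = 0.5 * ((lead_next + lag_next) - (lead_now + lag_now)) / DT
        if d_e > 0.0 and slope > 0.0:
            hits += 1
        total += 1
    return hits / total


samples = random_envelope(seed=1, n_samples=5000)
for oed_ms in (3, 12):
    shift = int(round(oed_ms * 1e-3 / DT))
    lead_frac = conducive_fraction(samples, shift, target_leads=True)
    lag_frac = conducive_fraction(samples, shift, target_leads=False)
    print(f"OED {oed_ms:2d} ms: lead {lead_frac:.2f}, lag {lag_frac:.2f}")
```

The fractions correspond to the upper-right quadrant of a joint histogram of ΔE and dĒ/dt, computed once with the target leading and once with it lagging.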

Figures 6A and B show, respectively, the histograms for leading and lagging sounds that were 100% modulated and presented with an OED of 3 ms. Warmer colors represent high incidences of the derivatives and differences. As shown, there is a peak near the origin for both the leading (Fig. 6A) and lagging (Fig. 6B) conditions. However, it is also clear that for the leading sounds, there are more events in the upper right quadrant, which represents the spike-conducive conditions (dĒ/dt > 0; ΔE > 0; Fig. 5) that are rare in the histogram for the lagging sounds. This analysis suggests that leading sounds evoke more spikes simply because the conjunction of conditions that favor spiking is more prevalent in the leading sound than in the lagging sound for an OED of 3 ms and 100% modulation.
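Why a short OED favors the lead can be illustrated with a single envelope component. The calculation below is a simplified sketch, not the analysis used in the experiments: it assumes a sinusoidal component of frequency f delayed by τ, takes ΔE as the plain difference between the target and masker envelopes, and ignores the onset and offset ramps.

$$
\begin{aligned}
E_{\text{lead}}(t) &= \cos(\omega t + \varphi), \qquad E_{\text{lag}}(t) = \cos\bigl(\omega (t - \tau) + \varphi\bigr), \qquad \omega = 2\pi f,\\
E_{\text{lead}} - E_{\text{lag}} &= -2 \sin\!\left(\tfrac{\omega\tau}{2}\right) \sin\theta, \qquad \theta = \omega\left(t - \tfrac{\tau}{2}\right) + \varphi,\\
\frac{d\bar{E}}{dt} &= \frac{d}{dt}\,\frac{E_{\text{lead}} + E_{\text{lag}}}{2} = -\omega \cos\!\left(\tfrac{\omega\tau}{2}\right) \sin\theta,\\
\left(E_{\text{lead}} - E_{\text{lag}}\right)\frac{d\bar{E}}{dt} &= \omega \sin(\omega\tau)\,\sin^{2}\theta .
\end{aligned}
$$

When the target leads, ΔE equals the difference in the second line, so the product is non-negative whenever fτ < 1/2: ΔE and dĒ/dt then share a sign, and events fall in the upper-right and lower-left quadrants. When the target lags, ΔE changes sign and the same events move to the off-diagonal quadrants. For τ = 3 ms the condition holds for every component below about 167 Hz, which covers the <150 Hz envelope band. For τ = 12 ms the sign of sin(ωτ) alternates roughly every 42 Hz across that band, so no consistent lead/lag asymmetry is expected.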

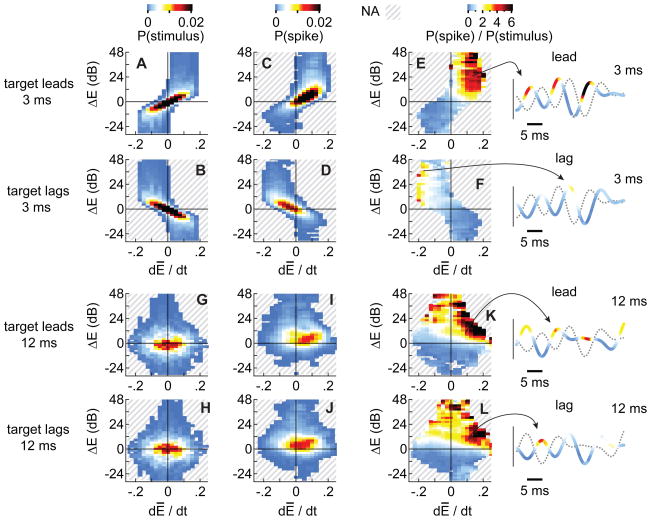

Figure 6. Analysis of spike-conducive features (100% modulation).

To analyze the stimulus envelopes, ΔE and dĒ/dt (see text) were measured at regular intervals for the 50 envelope pairs used in our experiments. Each measurement was then assigned to a frequency-distribution histogram with axes of dĒ/dt and ΔE. Distributions are shown for four stimulus conditions: 3 ms OED (A, B) or 12 ms OED (G, H); target leading (A, G) or lagging (B, H). Analogous measurements were then made at the times when spikes were evoked (C, D, I, J). Values represent median spike probability, per histogram bin, stimulus, and sampled cell. Cross-hatches represent areas for which there were no values. After scaling each stimulus distribution to the volume under each spike-specific distribution, spike-specific distributions were divided by the stimulus distributions. These quotients are shown for the same stimuli in panels E, F, K, and L. To the right of each panel are representative lead/lag envelope pairs; the thicker, colored line represents the target’s waveform while dotted lines represent the masker’s waveform. The color of the target’s envelope indicates spike probability (warmer colors represent higher probabilities).

This difference in the statistics of the leading and lagging envelopes is accentuated by the space-map neurons, which are highly sensitive to the spike-conducive conditions. To quantify this sensitivity, we first estimated ΔE and dĒ/dt values within a ±1 ms time window surrounding each spike (instead of at regular intervals) and assigned them to a frequency distribution. The spike-specific distributions are shown in Figures 6C and D for leading and lagging targets, respectively, normalized to the volumes under the distributions. As one might expect, there are more events for the leading sound than for the lagging sound because leading sounds evoke more spikes. To determine the efficacy of the spike-conducive conditions, we divided the normalized spike-specific distributions (Figs. 6C, D) by the stimulus distributions (Figs. 6A, B). The quotients, shown in Figures 6E and F for the leading and lagging targets, respectively, are quite different, with far more events in the upper right quadrant for the lead (Fig. 6E) than the lag (Fig. 6F). Inspection of the quotient histogram suggests that the cells are very sensitive to positive values of ΔE and dĒ/dt, especially when they are large. These large values are absent in the lagging sound’s envelopes.
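The division of a spike-specific distribution by a stimulus distribution can be sketched as below. This is a minimal illustration with assumptions: both histograms are scaled to unit volume before dividing, bins the stimulus never visited are left undefined, and the 2×2 counts are hypothetical values chosen only to exercise the code.

```python
def normalize(hist):
    """Scale a 2-D histogram (list of rows) to unit volume."""
    total = sum(sum(row) for row in hist)
    return [[v / total for v in row] for row in hist]


def quotient(spike_hist, stim_hist):
    """Bin-wise ratio of spike-triggered to stimulus distributions.

    Bins that the stimulus never visited are returned as None, since the
    ratio is undefined there.
    """
    spikes, stim = normalize(spike_hist), normalize(stim_hist)
    return [[s / q if q > 0 else None for s, q in zip(srow, qrow)]
            for srow, qrow in zip(spikes, stim)]


# Hypothetical 2x2 counts: rows index delta_E (<0, >0), columns index slope (<0, >0).
stim_counts = [[400, 100], [100, 400]]
spike_counts = [[5, 5], [10, 80]]
ratio = quotient(spike_counts, stim_counts)
```

A ratio above 1 in a bin means that spikes occurred there more often than the stimulus statistics alone would predict; in this made-up example that is the case only where ΔE > 0 and the slope is positive.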

Spike-probabilities computed from the quotient plots (Fig. 6E, F) are superimposed on examples of the leading and lagging envelopes in the right-most column of Figure 6. As shown in the upper two pairs of envelopes, spiking probabilities are higher for the lead than lag at 3 ms and occur along the rising edges of the lead envelope (warmer colors) and when the leading envelope has a higher amplitude than the lagging envelope.

Similar analyses were conducted for longer OEDs and shallower modulations, i.e., the conditions under which localization dominance failed. Figures 6G and H show the stimulus distributions for the leading and lagging sounds at an OED of 12 ms and a 100% modulation depth. Similar results were obtained with an OED of 24 ms and are not shown. Comparison of the two distributions shows that both the leading and lagging stimuli have an abundance of events in the upper right quadrant, where the conjunction of spike-conducive values is represented, and importantly, there is little lead/lag asymmetry. Correspondingly, neither the spike-specific distributions (Figs. 6I, J) nor their quotients (Figs. 6K, L) show a lead/lag asymmetry. The abundance of spike-conducive events for the leading and lagging stimuli is consistent, however, with the observation that both the leading and lagging stimuli evoke a high response (Fig. 4). Thus, the responses to both the lead and lag stimuli are strong and equal.

Figures 7A and B show the stimulus distributions for the leading and lagging sounds at an OED of 3 ms and a 50% modulation depth. As was the case when the stimuli were deeply modulated (Figs. 6A-F), the stimulus distributions for the leading and lagging sounds are asymmetric, i.e., there are more spike-conducive events (dĒ/dt > 0; ΔE > 0) for the leading sound than for the lagging sound. However, when the modulations are shallow and the amplitudes change slowly, neither the leading nor the lagging sound has a significant advantage over the other (Figs. 7C-F) because the magnitudes of dĒ/dt and especially ΔE remain small. The small magnitudes are, in turn, consistent with the weak responses to leading and lagging stimuli with 50% modulation (Fig. 4).

Figure 7. Analysis of spike-conducive features (50% modulation).

Plots shown here are analogous to those in Figures 6A-F except that dĒ/dt and ΔE were measured when the stimuli were modulated at 50% (OED = 3 ms).

Model verification

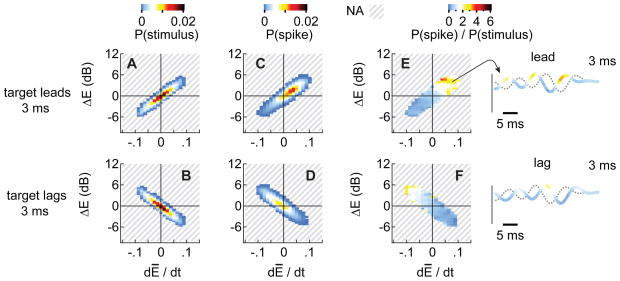

As an indication of how these spike-conducive conditions might account for the asymmetry in responses to lead and lag sounds, we parameterized a model of the average space map neuron with the sensitivity to dĒ/dt and ΔE derived from quotient histograms (e.g., Fig. 6K, L) obtained with long OEDs (12 and 24 ms). We then predicted the responses evoked by an OED of 3 ms.

Quotients corresponding to the longer delays were first averaged (Fig. 8A). Then, for every envelope in each of the 50 lead/lag pairs used in the physiology experiments (3 ms OED), we estimated the probability of a spike given the model neuron’s sensitivity to ΔE and dĒ/dt values. Estimates for representative lead and lag targets are shown in Figures 8B and C, respectively. The upper traces in each panel represent a lead/lag pair; the thicker, colored line represents the target while the dotted line represents the masker. The coloration of the envelope predicts the probability that the envelope segment would result in a spike, given its ΔE and dĒ/dt values. Note that spike-conducive conditions (warmer colors) were predicted to arise more frequently, and for longer periods of time, when the target led than when it lagged. Correspondingly, the lower traces show that both positive differences and derivatives coincided when the target led (Fig. 8B) but were out-of-phase when the target lagged (Fig. 8C). The spiking probabilities then were accumulated into distributions (Fig. 8E) and normalized to the overall probability with which spikes were evoked when the OED was long and the target led or lagged (i.e., to the volumes under plots shown in Figs. 6K and L).
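The prediction step can be sketched as a table lookup. This is a hedged illustration rather than the model as implemented: the helper names are hypothetical, the quotient tables are assumed to be dictionaries keyed by histogram bin, bins are averaged only over the tables that contain them, and bins absent from the averaged table are assigned a weight of zero.

```python
import math

def average_quotients(*tables):
    """Average quotient tables bin by bin (e.g., the 12 and 24 ms OED tables)."""
    keys = set().union(*tables)
    averaged = {}
    for k in keys:
        values = [t[k] for t in tables if k in t]
        averaged[k] = sum(values) / len(values)
    return averaged

def spike_weights(features, quotient, x_width=1.0, y_width=1.0):
    """Look up the model neuron's preference for each (dE/dt, delta_E) sample."""
    weights = []
    for x, y in features:
        key = (math.floor(x / x_width), math.floor(y / y_width))
        # Assumption: combinations never sampled at long OEDs contribute nothing.
        weights.append(quotient.get(key, 0.0))
    return weights

def relative_response(features, quotient):
    """Mean weight over an envelope, a stand-in for overall spike probability."""
    w = spike_weights(features, quotient)
    return sum(w) / len(w) if w else 0.0
```

Applied to the feature samples of a leading and a lagging target, `relative_response` gives a single number per envelope that can be compared across conditions once it is normalized to the overall spike probability, as described above.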

Figure 8. Model verification.

(A) The average preferences of a space-map neuron for combinations of ΔE and dĒ/dt were derived from the quotient histograms obtained with OEDs of 12 and 24 ms, 100% modulation (see also Figs. 6K,L) and used to predict responses obtained for stimuli with 3 ms OEDs and other modulation depths. The upper traces in B and C show representative lead (B) and lag (C) target envelopes color-coded according to their spike probabilities, derived from the quotient histogram (A). The lower traces show the derivative (blue) and differences (red) for the upper traces. (D) Overall predicted (open circles) and observed (filled circles) spike probabilities for lead (blue) and lag (red) targets. Not surprisingly, the predictions are nearly perfect for the 12 and 24 ms OEDs, the values on which the model neuron was parameterized. (E) Spike probabilities for lead and lag targets assigned to two-dimensional distributions. (F) Spikes predicted (E) minus spikes observed (e.g., Figs. 6C,D) per histogram bin, stimulus, and sampled cell. (G) Representative lead and lag target envelopes color-coded according to the differences between spikes predicted and spikes observed (F). Bluish colors and arrows indicate where the neural responses were underestimated.

Figure 8D compares the predicted (dashed lines) and observed (solid lines) spike probabilities for each of the modulation depths and OEDs. Because the model was parameterized using data when the OED was 12 and 24 ms, predictions for these stimuli were nearly perfect. The model was also reasonably accurate, however, in predicting the overall probabilities when the OED was 3 ms, although it slightly overestimated the responses to both the leading and lagging sounds.

Figure 8F, which plots the difference between predicted (Fig. 8E) and observed spike probabilities (Fig. 6C,D), confirms that spike probabilities were lower than predicted for large areas of feature spaces (red areas). A subtle but potentially interesting exception is indicated by the arrows pointing to a blue region near the origins of the upper (target led) and lower plots (target lagged). Projecting these spike probabilities onto the traces of the representative waveforms (Fig. 8G), we see that the cells fired more spikes than predicted immediately after a peak in the masker’s envelope, whether the target led (top, blue segments, arrows) or lagged (bottom). Quotients used to predict spikes were consistently small near the origin (Fig. 8A) and the differences shown (Figs. 8F,G) should therefore be viewed with caution. However, one might have expected even fewer spikes after a peak in the masker’s envelope (i.e., the source outside the SRF) if the representations of the two sound-sources on the map were inhibiting one another.

Discussion

Owls, like humans (Dizon and Colburn, 2006; Zurek, 1980), behaved in a manner consistent with localization dominance with stimulus pairs lacking onset (and offset) delays, provided that the envelopes were well modulated. Neurons in the owl’s space map also fired preferentially to the leading stimuli at short delays, but again, only when the modulations were deep. Analysis of the signals suggests that the acoustical conditions that favor spiking in the space map are more abundant for the leading sound at short delays than for the lag. At long delays or with shallow modulations, this asymmetry in the leading and lagging signals disappears, as does localization dominance and its neural parallel.

Integration of acoustics, physiology, & behavior

Our results suggest that localization dominance in the owl is a byproduct of generic neuronal preferences for onsets and binaural cues. Thus, when modulations are deep and the delay is short, an envelope-peak in the lead waveform is likely to be present by itself, briefly, causing the frequency-specific binaural cues to approximate values at the lead-source’s location. Moreover, its rising edge is unobstructed by the corresponding peak in the lagging waveform. The space-map neurons therefore respond vigorously, as they would to one of a series of brief transients from a single (i.e., unmasked) source. The requirement for a rising edge may be related to the finding that human localization dominance is absent if a signal’s rise time is slow (Rakerd and Hartmann, 1985). The corresponding peak in the lag and its rising edge, on the other hand, are likely to be superimposed with its counterpart in the lead, resulting in a spatial de-correlation. The space-map neurons, being sensitive to the coherency of spatial cues (Albeck and Konishi, 1995; Euston and Takahashi, 2002; Spezio and Takahashi, 2003; Yin et al., 1987), would therefore respond weakly.

Evidence for localization dominance and its neural parallel was not observed at longer delays or with shallower modulations. At the longer delays, the peaks in the lag and their rising edges were no longer masked by the corresponding peaks in the lead, evoking responses similar to those of the lead. With shallower modulations, neither source had an amplitude advantage over the other (ΔE ≈ 0), and the rises in amplitudes were slow (dĒ/dt ≈ 0). Thus, for delays tested here and a short integration time window (Joris et al., 2006; Siveke et al., 2008; Wagner, 1991, 1992), the lead/lag pair becomes equivalent to two simultaneous, uncorrelated noises, to each of which the space-map neurons respond weakly (Keller and Takahashi, 2005).

In nature, reflections are not simply delayed copies of the direct sound but have lower overall amplitudes. In terms of our model, the echo’s weakness would mean that ΔE would favor the direct sound consistently, thus further strengthening localization dominance.

Principles shared with other models

Our explanation is related to that of Tollin (1998) and Trahiotis and Hartung (2002) who attributed the weak response of an IC neuron to a lagging click (Fitzpatrick et al., 1995; Litovsky and Yin, 1998; Yin, 1994) to the generation of binaural cues that do not correspond to the neuron’s preferred values. These suboptimal binaural cues were attributed, in turn, to the superposition of the ringing responses of cochlear filters, passed through a hair cell model (Meddis et al., 1990), evoked by the lead and lag clicks in each ear (Hartung and Trahiotis, 2001). In our “envelope” hypothesis and in this “ringing” hypothesis, it is the superposition of waveforms, whether internal (Hartung and Trahiotis, 2001; Trahiotis and Hartung, 2002) or external, that alters the binaural cues and weakens the responses of neurons to the lagging sound, not neural inhibition.

Our explanation is also reminiscent of the process of “glimpsing,” in which a masked target sound is tracked by catching glimpses of the target during quiet periods in the masker (Miller and Licklider, 1950). With the stimuli used presently, glimpses of the lead or lag target become available whenever ΔE favors one source over the other. Thus, deep modulations tend to offer more such glimpses than shallow ones. The idea of glimpsing is also consistent with our recent study of unmodulated sounds (Nelson and Takahashi, 2008) which showed that the owl’s tendency to localize the lead or lag source depends on the lengths of the onset and offset time differences (Fig. 1A) during which clear glimpses of the lead or lag sounds were available. It is also found in the study by Devore et al. (2009), which showed that sounds are localized in reverberant rooms by “glimpsing” the spatial cues available at the onset of the sound when reflections from room-surfaces had yet to arrive. Here again, specialized mechanisms to prevent responses to reflections need not be invoked.

Localization Precision

Envelope modulation depth had an effect on saccade precision, in addition to its effect on the proportion of turns toward one source or the other. Interestingly, high saccade precision was observed under conditions that elicited robust firing on the space map. For instance, at a 3 ms delay and 100% modulation, the firing evoked by the leading sound was considerably stronger than that evoked by the lag or by two uncorrelated noises. Correspondingly, the saccades to the lead were not just more numerous; they were more precise. The saccades to the lag, when they occurred, had considerably greater error. When conditions were such that both the lead and lag sources evoked a strong response (12 or 24 ms OED, 100% modulation), the precision of saccades to both sources was equal and approximated that to single sources. Conversely, if both sources evoked a weak response, as when the modulations were shallow (0%), saccades to both sources had large errors.

Although a high spike count is associated with high precision for trials employing lead/lag pairs, it is interesting to note that the responses to paired stimuli were considerably lower than those evoked by single stimuli (Fig. 4), even though the saccades to some of these paired sounds (100% modulation) were nearly as precise as those to single sources. One interpretation may be that the putative function relating saccade precision and spike count is steep and asymptotic. Thus, the response to single sources is larger than needed for near-optimal precision. Precisely how vigorous firing observed in single units is related to saccade precision is unknown, although it is consistent with the observation that low-amplitude stimuli, which evoke fewer spikes on the space map, also elicit saccades with low precision (Whitchurch and Takahashi, 2006). One possibility is that high rates of firing in single space-map units reflect binaural cues that are stable over the duration of a sound, as is the case when there is only a single source. With a single sound source, the frequency-specific binaural cues remain at values corresponding to the source’s location and only the space-map cells tuned to that combination of cues will fire vigorously, resulting in a well-focused pattern of activity across the map. However, when there are multiple sources concurrently emitting sounds with overlapping spectra, as is the case when lead and lag sounds overlap, the binaural cues fluctuate over time, and the patterns of fluctuation may differ in each spectral band. As a result, the binaural cues assume values that correspond to different locations from moment to moment, briefly exciting neurons tuned to those various locations. The spatial pattern of activity on the map, i.e., the neural image, may therefore jitter, with no strong focus remaining at any one location for long. The owl’s saccadic system may make use of the space map’s image in a way that causes saccade precision to depend upon the spatial pattern of activity on the map.

Experimental Procedures

Animals

Experiments were carried out in 6 captive-bred adult barn owls (Tyto alba) under a protocol approved by the Institutional Animal Care and Use Committee of the University of Oregon. The birds were held in our colony under a permit from the US Fish and Wildlife Service (SCCL-723257).

Behavioral subjects

Three barn owls (C, S, and T), housed together in a single enclosure within our breeding colony, were hand-reared and trained to make head saccades toward single visual and auditory stimuli for a food reward. The owls’ weights were maintained at ~90% of the free-feeding level during training and testing.

Behavioral apparatus

Behavioral experiments were conducted in a double-walled anechoic chamber (Industrial Acoustics Co. IAC; 4.5 m × 3.9 m × 2.7 m). An owl was tethered to a 10 × 4 cm perch mounted atop a 1.15 m post in the chamber. A dispenser for food-rewards was also attached to the post near the owl’s talons. A custom-built, head-mounted, magnetic search-coil system (Remmel Labs) was used to measure the owl’s head movements in azimuth and elevation (Spitzer and Takahashi, 2006; Whitchurch and Takahashi, 2006). The coils were calibrated before each session to ensure accuracy within ±1° after adjusting for small differences in the angles with which head-posts were attached to each owl’s skull.

Sounds were presented from 10 dome tweeters (2.9-cm, Morel MDT-39), located 1.5 m from the bird. Speaker pairs were positioned with opposite polar angles (Fig. 2) relative to the location of a central fixation LED (2.9 mm, λ = 568 nm). Radii (r) were standardized across speaker pairs at 10°, 15°, 20°, 25°, and 30°. New polar angles were generated randomly for each speaker pair every 2 to 4 test sessions. Subjects were monitored continuously throughout test sessions using an infrared camera and infrared light source (Canon Ci-20R and IR-20W).

Behavioral stimuli

A session consisted primarily of single-source trials (~80%), amongst which we randomly interspersed paired-source trials. In paired-source trials, one of 50 different broadband (2–11 kHz) noises was randomly chosen and multiplied with one of 50 different low-pass filtered (<150 Hz) envelopes (Fig. 1C; 43, 52 or 64 ms initial durations). On a third of the trials, this envelope was flat and did not alter the natural envelopes of the noise carrier. On remaining trials, the envelope was scaled so that its deepest trough decreased to either 50% or 100% of its maximum (Fig. 1B). Each modulated noise was then copied and the lag sound was delayed 3, 12, or 24 ms. Lead and lag sounds were 100% correlated at this time. Finally, both sounds were multiplied with a single 40 ms window (2.5 ms linear ramps) to synchronize their onsets and offsets (Fig. 1A). Stimuli in single-source trials were drawn randomly from the pool of stimuli used in paired-source trials. Stimuli were converted to analog waveforms at 48.8-kHz and routed to speakers using two power multiplexers (RP2.1, HB7, PM2R, Tucker-Davis Technologies).
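The construction of a lead/lag pair can be sketched as follows. This is an illustrative reading of the procedure, not the stimulus-generation code: all lengths are in samples, the function names are hypothetical, the carrier and envelope are supplied by the caller, and "modulation depth" is assumed to mean that the deepest trough falls to (1 − depth) of the envelope maximum.

```python
def scale_depth(envelope, depth):
    """Rescale an envelope so its maximum is 1 and its deepest trough is 1 - depth."""
    lo, hi = min(envelope), max(envelope)
    if hi == lo or depth == 0:
        return [1.0] * len(envelope)   # flat envelope: carrier left unmodulated
    return [(1.0 - depth) + depth * (e - lo) / (hi - lo) for e in envelope]

def trapezoid_window(n, ramp):
    """Window of n samples with linear onset and offset ramps of `ramp` samples."""
    return [min(1.0, i / ramp, (n - 1 - i) / ramp) for i in range(n)]

def lead_lag_pair(carrier, envelope, depth, delay, window_len, ramp):
    """Return (lead, lag) with synchronized onsets and an ongoing delay.

    The modulated noise must span window_len + delay samples. The lag is the
    same waveform heard `delay` samples later, so lag[i + delay] equals lead[i]
    wherever the window is flat.
    """
    needed = window_len + delay
    if len(carrier) < needed or len(envelope) < needed:
        raise ValueError("carrier and envelope must span window_len + delay")
    gains = scale_depth(envelope, depth)
    modulated = [c * g for c, g in zip(carrier, gains)]
    win = trapezoid_window(window_len, ramp)
    lead = [modulated[i + delay] * win[i] for i in range(window_len)]
    lag = [modulated[i] * win[i] for i in range(window_len)]
    return lead, lag
```

At the 48.8-kHz conversion rate given above, a 3 ms delay corresponds to roughly 146 samples, the 40 ms window to roughly 1952 samples, and each 2.5 ms ramp to roughly 122 samples. The initial durations of 43, 52, and 64 ms are consistent with a 40 ms window plus the 3, 12, or 24 ms delay.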

Stimulus sound-pressure level (SPL) was roved in 1 dB increments between 27 and 33 dB across trials, in reference to the output of an acoustic calibrator (re: 20 μPa, 4321, Brüel & Kjær). The owl’s hearing threshold, measured with broadband noise, is approximately −9 dB SPL (Whitchurch and Takahashi, 2006). Stimuli were presented with equal SPL on paired-source trials.

Behavioral paradigm

Head orientation and angular velocity were monitored continuously (20 Hz, RP2, Tucker-Davis Technologies) throughout test sessions. Stimuli were presented only after the owl fixated the centrally located LED for a random period of 0.5 to 1 s. The LED was extinguished as soon as subjects oriented to within 3° of the LED. Trials were aborted if the owl moved beyond this 3° radius or if head velocity exceeded 2.6°/s before stimuli were presented. Once a trial was initiated, measurements were sampled at a rate of 1 kHz for 4.5 s, starting 1 s before the stimulus’ onset. Subjects were automatically fed a small piece of a mouse (~1 g), after a ~1 to 3 s delay, if saccade velocity decreased to less than 4°/s and if the saccade ended at a location that was within 5–8° of a previously activated speaker or member of a speaker pair.

Behavioral data analysis

After measuring baseline head-velocity for 250 ms prior to stimulus presentation, the beginning of a saccade was defined as the time at which velocity exceeded this baseline measure by 5 s.d. for 50 ms. The end of a saccade was defined as the time at which velocity decreased to below baseline by 8 s.d. for 50 ms.
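The onset and offset criteria can be sketched as a search for sustained threshold crossings. The sketch assumes 1 kHz sampling (so 250 ms and 50 ms correspond to 250 and 50 samples), uses hypothetical function names, and interprets the offset criterion as velocity falling below the baseline mean plus 8 s.d.; that interpretation is an assumption, since the wording above admits other readings.

```python
import statistics

def first_sustained(flags, start, run):
    """Index at which the first run of `run` consecutive True values begins."""
    count = 0
    for i in range(start, len(flags)):
        count = count + 1 if flags[i] else 0
        if count == run:
            return i - run + 1
    return None

def saccade_bounds(velocity, stim_index, baseline_n=250, hold_n=50,
                   on_sd=5.0, off_sd=8.0):
    """Return (start, end) sample indices of a saccade, or None if none is found."""
    baseline = velocity[stim_index - baseline_n:stim_index]
    mu = statistics.mean(baseline)
    sd = statistics.pstdev(baseline)
    above = [v > mu + on_sd * sd for v in velocity]
    start = first_sustained(above, stim_index, hold_n)
    if start is None:
        return None
    # Assumed offset rule: velocity stays below baseline + off_sd * sd for hold_n samples.
    below = [v < mu + off_sd * sd for v in velocity]
    end = first_sustained(below, start + hold_n, hold_n)
    return start, end
```

Requiring the criterion to hold for 50 consecutive samples keeps a brief velocity transient from being scored as the beginning or end of a saccade.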

Each saccade's polar angle (α ) was measured starting from the location where the saccade began to each subsequent point that was sampled along its trajectory (αs), weighted by head-velocity (v):

| Eqn. 1 |

Head-velocity was cubed because fewer points were sampled when head-velocity was high. Trajectories were measured in this way because the birds would frequently alter their trajectory as velocity decreased near the end of the saccade. Each saccade’s radius was measured from its start to its end and assumed a linear trajectory.
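Because Eqn. 1 is not reproduced in this text, the following sketch shows only one plausible reading of the description: a normalized average of the sampled polar angles, each weighted by the cube of the head velocity at that sample. The normalized form, the function name, and the use of a linear (non-circular) mean are assumptions.

```python
def weighted_polar_angle(angles_deg, velocities, power=3):
    """Velocity-weighted mean of the polar angles sampled along one saccade.

    angles_deg: polar angle from the saccade's starting point to each sample.
    velocities: head velocity at the same samples (same length, not all zero).
    Assumes angles do not wrap across +/-180 degrees within a saccade.
    """
    weights = [v ** power for v in velocities]
    total = sum(weights)
    if total == 0:
        raise ValueError("at least one velocity sample must be non-zero")
    return sum(a * w for a, w in zip(angles_deg, weights)) / total
```

With this weighting, the fast middle portion of a saccade dominates the estimate, while the slow terminal portion, where the birds often altered their trajectory, contributes little.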

Because the birds were first required to fixate a central LED, and because each speaker-pair was separated by ~180°, it was easy to determine whether saccades were lead- or lag-directed. Localization dominance was therefore estimated as the proportion of trials on which saccades were lag-directed. Saccade angles were rarely >75° (absolute polar coordinates re: either source at 0°; see Fig. 2; <1% of trials/subject) and were never equal with respect to each paired-source (±90°). In contrast with a similar previous study (Spitzer and Takahashi, 2006), saccades were rarely directed first toward one speaker and then toward the second, after making a ~180° hairpin turn (<0.5% of trials/subject). Reversals in saccade direction that did occur were as likely to occur on single-source trials and almost always corresponded to a spurious head movement prior to stimulus presentation.

Saccades with radii less than 5° or more than 45° were excluded from analysis. Also excluded were saccades to specific locations unassociated with the speakers (e.g., to the feeder or chamber door). Subject C rarely turned upward (−90° < polar angle < 90°; Fig. 3A) when single sounds were presented and instead made highly stereotyped saccades to the left (−90±10°), right (90±10°), or to the feeder (~ ±180°). These stereotyped saccades were excluded from analysis whether a single or paired stimulus was presented.

Neurophysiology

Experiments were conducted in a single-walled sound-attenuating chamber (Industrial Acoustics Co.; 1.8 m³) equipped with a stereotaxic frame and surgical microscope.

Single unit recordings were obtained from the space maps of 3 birds anesthetized with a mixture of ketamine and diazepam. Anesthesia was induced by an intramuscular injection of ketamine (22 mg/kg) and diazepam (5.6 mg/kg) and maintained with additional doses as needed (typically, every 2–3 hrs after induction).

Upon induction of anesthesia, the owl was given a subcutaneous injection of physiological saline (10 cc). Electrocardiographic leads and a temperature probe were attached and the owl was wrapped in a water-circulating blanket and placed in a stereotaxic frame. Axial body temperature was maintained between 35 and 40 °C. The owl’s head was secured to the frame by a previously implanted head-post. Earphones were inserted into the ear canals, and a recording-well, implanted previously (Euston and Takahashi, 2002), was opened and cleaned to admit a glass-coated tungsten electrode (1 MΩ < impedance < 12 MΩ at 1 kHz). The electrode’s output was amplified and then fed to a signal processor (Tucker-Davis Technologies, RP2), oscilloscope, and audio monitor.

Typical recording sessions involved 1 to 3 electrode penetrations and lasted less than 12 hrs. At the end of a session, the well was rinsed with a 0.25% solution of chlorhexidine and sealed. The owl was placed in an isolated recovery chamber until it recovered from anesthesia, after which, it was returned to its flight enclosure.

Neurophysiological stimulus presentation

Stimuli were presented in virtual auditory space (VAS) generated using individualized head related transfer functions (HRTF, Keller et al., 1998). HRTFs for each ear were measured from 613 locations in the frontal hemifield (Fig. 1F), band-pass filtered, and stored digitally as 255-point (8.5-ms; 30-kHz) finite impulse response filters (HRIR). Stimuli were convolved with the HRIRs, converted to analog waveforms at 48.8-kHz, amplified (RP2.1, HB6, Tucker-Davis Technologies), and presented over the earphones (Etymotic Research, ER-1). Stimuli were presented 30 dB above the response threshold of each space-specific neuron at its best location.
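Rendering a stimulus in virtual auditory space amounts to convolving it with the left- and right-ear impulse responses for the desired location. The sketch below is a plain-Python illustration with hypothetical function names and direct-form convolution; it is not the signal-processing chain used in the experiments.

```python
def convolve(signal, kernel):
    """Direct-form linear convolution; output length is len(signal) + len(kernel) - 1."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            out[i + j] += s * k
    return out

def render_virtual_source(signal, hrir_left, hrir_right):
    """Filter one stimulus with the HRIR pair measured for a single location."""
    return convolve(signal, hrir_left), convolve(signal, hrir_right)
```

With 255-point impulse responses, each ear signal is 254 samples longer than the input stimulus.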

We characterized each isolated unit’s SRF by presenting sounds from 290 virtual locations. The “best location” within an SRF was defined as the location that evoked the maximum average spike count per repetition. Stimuli presented from this location in subsequent tests are referred to as targets. Stimuli presented from outside the SRF are referred to as maskers.

Stimuli were the same as those used in our behavioral experiments except that they were presented in VAS rather than in the free-field. For each cell, we also presented a pair of independent (i.e., 0% correlated) noise carriers simultaneously and without a delay from both the masker and target loci, as well as noise-bursts from a single source at the location of the masker or the target. The stimuli (N=1050) were presented in a random order over 1–3 repetitions. The inter-stimulus interval was 600 ms.

Neurophysiological data analysis

Spike times were adjusted by subtracting the median first-spike latency of each cell’s response (9.4–14.3 ms; 0% modulation; 50–150 repetitions). Subtracting the median first-spike latency of all the cells (12.2 ms), as opposed to each individual cell’s measured latency, weakened but did not eliminate the overall biases that we observed for rising envelopes (dĒ/dt > 0) and binaural cues (ΔE > 0).

Each cell’s response was quantified by counting the spikes that the target evoked within a 50-ms time window (−5 to 45 ms, Figs. 4B-C). The spike count was then normalized to that evoked, in each cell, by single 40-ms sounds from the center of its SRF (Yin, 1994). We used non-parametric statistics because variation was often skewed in a positive direction when responses were weak.
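The latency adjustment and normalized spike count can be sketched as follows. The function names are hypothetical, spike times are assumed to be in milliseconds relative to stimulus onset, and the window is treated as closed at −5 ms and open at 45 ms, which is a choice made for illustration.

```python
import statistics

def median_first_spike_latency(trials):
    """Median, across repetitions, of the first spike time; silent trials are skipped."""
    firsts = [min(t) for t in trials if t]
    return statistics.median(firsts)

def mean_spike_count(trials, latency, window=(-5.0, 45.0)):
    """Mean number of latency-adjusted spikes per repetition inside the window."""
    lo, hi = window
    counts = [sum(lo <= s - latency < hi for s in t) for t in trials]
    return statistics.mean(counts)

def normalized_response(paired_trials, single_trials, latency):
    """Response to a target in a lead/lag pair relative to the single-source response."""
    return (mean_spike_count(paired_trials, latency)
            / mean_spike_count(single_trials, latency))
```

A normalized response of 1 indicates that the target in a pair evoked as many spikes as the same cell's response to a single source; values below 1 indicate a weaker response.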

To quantify the times-of-occurrence of spikes relative to the amplitude modulations, stimuli and neural responses were further analyzed by measuring: (1) the instantaneous amplitude of each target’s envelope in relation to the masker’s (ΔE = target dB − masker dB) and (2) the rate with which the composite envelope (Ē = (Etarget + Emasker)/2) was increasing or decreasing (dĒ/dt, t = ±1 ms). Differences and derivatives were sampled regularly (0.1 ms interval) across each of the 50 envelopes or were sampled at the times when spikes were evoked. Times near the onsets and offsets of the sounds (±5 ms) were excluded to avoid sampling each stimulus’ onset and offset ramp.
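The two envelope measures can be sketched as follows. Several details are assumptions made for illustration: the function name, envelopes supplied as positive linear amplitudes, conversion to decibels as 20·log10 of the amplitude ratio, the composite envelope averaged in linear units, and the derivative taken as a central difference across the ±1 ms window.

```python
import math

def envelope_features(e_target, e_masker, dt_ms=0.1, half_window_ms=1.0):
    """Return (delta_e, d_ebar_dt) for two equally sampled envelopes.

    delta_e is target minus masker level in dB. d_ebar_dt is the slope of the
    composite envelope (mean of target and masker) in amplitude units per ms.
    Samples without a full window on both sides are skipped.
    """
    k = round(half_window_ms / dt_ms)                 # samples on each side
    e_bar = [(t + m) / 2.0 for t, m in zip(e_target, e_masker)]
    delta_e, d_ebar_dt = [], []
    for i in range(k, len(e_bar) - k):
        delta_e.append(20.0 * math.log10(e_target[i] / e_masker[i]))
        d_ebar_dt.append((e_bar[i + k] - e_bar[i - k]) / (2.0 * half_window_ms))
    return delta_e, d_ebar_dt
```

Pairs of (dĒ/dt, ΔE) values obtained this way, either at regular intervals or at spike times, are the quantities that populate the two-dimensional histograms of Figures 6 and 7.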

Highlights.

The precedence effect in owls requires envelope modulations

Neural correlate of the precedence effect requires envelope modulations

Preferences for binaural cues and modulations can explain the precedence effect

No evidence for the suppression of directional information of echoes

Acknowledgments

We thank Drs. C. H. Keller and E. A. Whitchurch for technical support and helpful discussions. This study was supported by grants from the National Institute on Deafness and Other Communication Disorders: (F32) DC008267 to B.S.N. and (R01) DC003925.

References

- Albeck Y, Konishi M. Responses of neurons in the auditory pathway of the barn owl to partially correlated binaural signals. J Neurophysiol. 1995;74:1689–1700. doi: 10.1152/jn.1995.74.4.1689. [DOI] [PubMed] [Google Scholar]

- Bala ADS, Spitzer MW, Takahashi TT. Prediction of auditory spatial acuity from neuronal images on the owl’s auditory space map. Nature. 2003;424:771–773. doi: 10.1038/nature01835. [DOI] [PubMed] [Google Scholar]

- Bala ADS, Spitzer MW, Takahashi TT. Auditory spatial acuity approximates the resolving power of space-specific neurons. PLoS ONE. 2007:e675. doi: 10.1371/journal.pone.0000675. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Blauert J. Spatial Hearing: The Psychophysics of Human Sound Localization. Cambridge, MA: The MIT Press; 1997. Revised Edition. [Google Scholar]

- Burger MR, Pollak GD. Reversible inactivation of the dorsal nucleus of the lateral lemniscus reveals its role in the processing of multiple sound sources in the inferior colliculus of bats. J Neurosci. 2001;21:4830–4843. doi: 10.1523/JNEUROSCI.21-13-04830.2001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dent ML, Dooling R. The Precedence Effect in Three Species of Birds (Melopsittacus undulatus, Serinus canaria, and Taeniopygia guttata) J Comp Psychol. 2004;118:325–331. doi: 10.1037/0735-7036.118.3.325. [DOI] [PubMed] [Google Scholar]

- Dent ML, Klump GM, Schwenzfeier C. Temporal modulation transfer functions in the barn owl (Tyto alba) J Comp Physiol A. 2002;187:937–943. doi: 10.1007/s00359-001-0259-5. [DOI] [PubMed] [Google Scholar]

- Devore S, Ihlefeld A, Hancock K, Shinn-Cunningham B, Delgutte B. Accurate Sound Localization in Reverberant Environments Is Mediated by Robust Encoding of Spatial Cues in the Auditory Midbrain. Neuron. 2009;62:123–134. doi: 10.1016/j.neuron.2009.02.018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dizon MM, Colburn SH. The influence of spectral, temporal, and interaural stimulus variations on the precedence effect. J Acoust Soc Am. 2006;119:2947–2964. doi: 10.1121/1.2189451. [DOI] [PubMed] [Google Scholar]

- Euston DR, Takahashi TT. From spectrum to space: the contribution of level difference cues to spatial receptive fields in the barn owl inferior colliculus. J Neurosci. 2002;22:264–293. doi: 10.1523/JNEUROSCI.22-01-00284.2002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fitzpatrick DC, Kuwada S, Batra R, Trahiotis C. Neural responses to simple simulated echoes in the auditory brain stem of the unanesthetized rabbit. J Neurophysiol. 1995;74:2469–2486. doi: 10.1152/jn.1995.74.6.2469. [DOI] [PubMed] [Google Scholar]

- Haas H. Uber den einfluss eines einfachechos auf die horsamkeit von sprache [On the influence of a single echo on the intelligibility of speech] Acustica. 1951;1:49–58. [Google Scholar]

- Hartung K, Trahiotis C. Peripheral auditory processing and investigations of the “precedence effect” which utilize successive transient stimuli. J Acoust Soc Am. 2001;110:1505–1513. doi: 10.1121/1.1390339. [DOI] [PubMed] [Google Scholar]

- Joris PX, Louage DH, Cardoen L, van der Heijden M. Correlation Index: A new metric to quantify temporal coding. Hear Res. 2006;216–217:19–30. doi: 10.1016/j.heares.2006.03.010. [DOI] [PubMed] [Google Scholar]

- Keller CH, Hartung K, Takahashi TT. Head-related transfer functions on the barn owl: measurements and neural responses. Hear Res. 1998;118:13–34. doi: 10.1016/s0378-5955(98)00014-8. [DOI] [PubMed] [Google Scholar]

- Keller CH, Takahashi TT. Binaural cross-correlation predicts the responses of neurons in the owl's auditory space map under conditions simulating summing localization. J Neurosci. 1996a;16:4300–4309. doi: 10.1523/JNEUROSCI.16-13-04300.1996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Keller CH, Takahashi TT. Responses to simulated echoes by neurons in the barn owl's auditory space map. J Comp Physiol A. 1996b;178:499–512. doi: 10.1007/BF00190180. [DOI] [PubMed] [Google Scholar]

- Keller CH, Takahashi TT. Representation of Temporal Features of Complex Sounds by the Discharge Patterns of Neurons in the Owl’s Inferior Colliculus. J Neurophysiol. 2000;84:2638–2650. doi: 10.1152/jn.2000.84.5.2638. [DOI] [PubMed] [Google Scholar]

- Keller CH, Takahashi TT. Localization and Identification of Concurrent Sounds in the Owl’s Auditory Space Map. J Neurosci. 2005;25:10446–10461. doi: 10.1523/JNEUROSCI.2093-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Konishi M, Knudsen EI. Center-surround organization of auditory receptive fields in the owl. Science. 1978;202:778–780. doi: 10.1126/science.715444. [DOI] [PubMed] [Google Scholar]

- Lindemann W. Extension of a binaural cross-correlation model by contralateral inhibition. I Simulation of lateralization for stationary signals. J Acoust Soc Am. 1986;80:1608–1622. doi: 10.1121/1.394325. [DOI] [PubMed] [Google Scholar]

- Litovsky RY, Colburn HS, Yost WA, Guzman SJ. The precedence effect. J Acoust Soc Am. 1999;106:1633–1654. doi: 10.1121/1.427914. [DOI] [PubMed] [Google Scholar]

- Litovsky RY, Yin TCT. Physiological studies of the precedence effect in the inferior colliculus of the cat. II Neural mechanisms. J Neurophysiol. 1998;80:1302–1316. doi: 10.1152/jn.1998.80.3.1302. [DOI] [PubMed] [Google Scholar]

- Meddis R, Hewitt MJ, Shackleton TM. Implementation details of a computational model of the inner hair-cell auditory-nerve synapse. J Acoust Soc Am. 1990;87:1813–1816.

- Miller GA, Licklider JCR. The intelligibility of interrupted speech. J Acoust Soc Am. 1950;22:167–173.

- Nelson BS, Takahashi TT. Independence of echo-threshold and echo-delay in the barn owl. PLoS ONE. 2008;3:e3598. doi: 10.1371/journal.pone.0003598.

- Pecka M, Zahn TP, Saunier-Rebori B, Siveke I, Felmy F, Wiegrebe L, Klug A, Pollak GD, Grothe B. Inhibiting the inhibition: a neuronal network for sound localization in reverberant environments. J Neurosci. 2007;27:1782–1790. doi: 10.1523/JNEUROSCI.5335-06.2007.

- Rakerd B, Hartmann WM. Localization of sounds in rooms, II: The effects of a single reflecting surface. J Acoust Soc Am. 1985;78:524–533. doi: 10.1121/1.392474.

- Siveke I, Ewert SD, Grothe B, Wiegrebe L. Psychophysical and physiological evidence for fast binaural processing. J Neurosci. 2008;28:2043–2052. doi: 10.1523/JNEUROSCI.4488-07.2008.

- Snow WB. The effects of arrival time on stereophonic localization. J Acoust Soc Am. 1954;26:1071–1074.

- Spezio ML, Takahashi TT. Frequency-specific interaural level difference tuning predicts spatial response patterns of space-specific neurons in the barn owl inferior colliculus. J Neurosci. 2003;23:4677–4688. doi: 10.1523/JNEUROSCI.23-11-04677.2003.

- Spitzer MW, Bala ADS, Takahashi TT. Auditory spatial discrimination by barn owls in simulated echoic conditions. J Acoust Soc Am. 2003;113:1631–1645. doi: 10.1121/1.1548152.

- Spitzer MW, Bala ADS, Takahashi TT. A neuronal correlate of the precedence effect is associated with spatial selectivity in the barn owl's auditory midbrain. J Neurophysiol. 2004;92:2051–2070. doi: 10.1152/jn.01235.2003.

- Spitzer MW, Takahashi TT. Sound localization by barn owls in a simulated echoic environment. J Neurophysiol. 2006;95:3571–3584. doi: 10.1152/jn.00982.2005.

- Takahashi TT, Keller CH. Representation of multiple sound sources in the owl’s auditory space map. J Neurosci. 1994;14:4780–4793. doi: 10.1523/JNEUROSCI.14-08-04780.1994.

- Tollin DJ. Computational model of the lateralisation of clicks and their echoes. In: Greenberg S, Slaney M, editors. Proc. the NATO Advanced Study Institute on Computational Hearing. Il Ciocco: NATO Scientific and Environmental Affairs Division; 1998. pp. 77–82.

- Trahiotis C, Hartung K. Peripheral auditory processing, the precedence effect and responses of single units in the inferior colliculus. Hear Res. 2002;168:55–59. doi: 10.1016/s0378-5955(02)00357-x.

- Wagner H. A temporal window for lateralization of interaural time differences by barn owls. J Comp Physiol A. 1991;169:281–289. doi: 10.1007/BF00206992.

- Wagner H. On the ability of neurons in the barn owl's inferior colliculus to sense brief appearances of interaural time difference. J Comp Physiol A. 1992;170:3–11. doi: 10.1007/BF00190396.

- Wagner H. Sound-localization deficits induced by lesions in the barn owl's auditory space map. J Neurosci. 1993;13:371–386. doi: 10.1523/JNEUROSCI.13-01-00371.1993.

- Wallach H, Newman EB, Rosenzweig MR. The precedence effect in sound localization. Am J Psychol. 1949;62:315–336.

- Whitchurch EA, Takahashi TT. Combined auditory and visual stimuli facilitate head saccades in the barn owl (Tyto alba). J Neurophysiol. 2006;96:730–745. doi: 10.1152/jn.00072.2006.

- Yin TC. Physiological correlates of the precedence effect and summing localization in the inferior colliculus of the cat. J Neurosci. 1994;14:5170–5186. doi: 10.1523/JNEUROSCI.14-09-05170.1994.

- Yin TCT, Chan JCK, Carney LH. Effects of interaural time delays of noise stimuli on low-frequency cells in the cat's inferior colliculus. III. Evidence for cross-correlation. J Neurophysiol. 1987;58:562–583. doi: 10.1152/jn.1987.58.3.562.

- Zurek PM. The precedence effect and its possible role in the avoidance of interaural ambiguities. J Acoust Soc Am. 1980;67:952–964. doi: 10.1121/1.383974.
