Abstract

We present a nonparametric, probabilistic mixture model for the supervised parcellation of images. The proposed model yields segmentation algorithms conceptually similar to the recently developed label fusion methods, which register a new image with each training image separately. Segmentation is achieved via the fusion of transferred manual labels. We show that in our framework various settings of a model parameter yield algorithms that use image intensity information differently in determining the weight of a training subject during fusion. One particular setting computes a single, global weight per training subject, whereas another setting uses locally varying weights when fusing the training data. The proposed nonparametric parcellation approach capitalizes on recently developed fast and robust pairwise image alignment tools. The use of multiple registrations allows the algorithm to be robust to occasional registration failures. We report experiments on 39 volumetric brain MRI scans with expert manual labels for the white matter, cerebral cortex, ventricles and subcortical structures. The results demonstrate that the proposed nonparametric segmentation framework yields significantly better segmentation than state-of-the-art algorithms.

1 Introduction

Supervised image parcellation (segmentation) tools traditionally use atlases, which are parametric models that summarize the training data in a single coordinate system [1–9]. Yet, recent work has shown that more accurate segmentation can be achieved by utilizing the entire training data [10–16], by mapping each training subject into the coordinates of the new image via a pairwise registration algorithm. The transferred manual labels are then fused to generate a segmentation of the new subject. There are at least two advantages of this approach: (1) across-subject anatomical variability is better captured than in a parametric model, and (2) multiple registrations improve robustness against occasional registration failures. The main drawback of the label fusion (multi-atlas) approach is the computational burden introduced by the multiple registrations and the manipulation of the entire training data.

Early label fusion methods proposed to transfer the manual labels to the test image via nearest neighbor interpolation after pairwise registration [12, 14]. Segmentation labels of the test image were then estimated via majority voting. Empirical results suggested that errors in the manual labeling and in registration are averaged out during label fusion, resulting in accurate segmentation. More recent work has shown that a weighted averaging strategy can be used to improve segmentation quality [11]. The basic idea is that training subjects more similar to the test subject should carry more weight during label fusion. The practical advantages of various strategies based on this idea have lately been demonstrated [11, 13, 16]. Some of these strategies use the whole image to determine a single, global weight for each training subject [11, 15, 16], whereas others use local image intensities for locally adapting the weights [11, 13].

This paper presents a novel unified probabilistic model that enables local and global weighting strategies within a label fusion-like segmentation framework. We formulate segmentation using MAP, where a nonparametric approach is used to estimate the joint density on the image intensities and segmentation labels of the new subject. The proposed framework generalizes a model we recently presented at MICCAI 2009 [16], which is based on the assumption that the test subject is generated from a single, unknown training subject. That specific model leads to a segmentation algorithm that assigns greater importance to the training subjects that are globally more similar to the test subject and can be viewed as a particular instantiation of the more general approach presented in this paper. In addition, the proposed approach further enables two possible variants within the same framework. First, we present a local mixture model that assumes each voxel in the test image is generated from some training subject with a uniform prior, independently of other voxels. This local model yields a pixel-wise weighting strategy in segmentation. Second, we develop a semi-local mixture model that relaxes the independence assumption of the local model with a Markov Random Field prior. This model leads to a weighting strategy where intensity information in a local neighborhood is pooled in a principled manner.

In related literature, soft weighting of training subjects was recently used for shape regression [17], where the weights depended on the subjects’ age. The proposed nonparametric parcellation framework is also parallel to STAPLE [18], which fuses multiple segmentations of a single subject. In contrast, our framework handles multiple subjects and accounts for inter-subject variability through registration.

The paper is organized as follows. The next section presents the non-parametric generative model for image segmentation. In section 3, we discuss three instantiations of the framework. In section 4, we present inference algorithms for these instantiations. We conclude with experiments in section 5. We report experiments on 39 brain MRI scans that have corresponding manual labels, including the cerebral cortex, white matter, and sub-cortical structures. Experimental results suggest that the proposed nonparametric parcellation framework achieves better segmentation than the existing state-of-the-art algorithms.

2 Theory

Let {Ii} be N training images with corresponding label maps {Li}, i = 1, . . . ,N. We assume the label maps take discrete values from 1 to that indicate the label identity at each spatial location. We treat these training images as spatially continuous functions on by assuming a suitable interpolator. Let denote a new, previously unseen test image defined on a discrete grid . Let denote the spatial mapping (warp) from the test image coordinates to the coordinates of the training image i. We assume that {Φi} have been precomputed using a pairwise registration procedure, such as the one described in Section 4.1.

Our objective is to estimate the label map L̂ associated with the test image I. One common formulation to compute L̂ is via MAP:

| (1) |

where p(L, I|{Li, Ii, Φi}) denotes the joint probability of the label map L and image I given the training data.

Rather than using a parametric model for p(L, I|{Li, Ii, Φi}), we employ a non-parametric estimator, which is an explicit function of the entire training data, not a summary of it. Let denote an unknown (hidden) random field that, for each voxel in test image I, specifies the training image Ii that generated that voxel. Given M, the training data, and warps, and assuming the factorization depicted in the graphical model of Fig. 1, we can construct the conditional probability of generating the test image and label map:

| (2) |

| (3) |

| (4) |

where pM(x)(L(x), I(x)|LM(x), IM(x), ΦM(x)(x)) is the conditional probability of (L(x), I(x)) given that voxel x ∈ Ω of the test subject was generated from training subject M(x). Note that Eq. (4) assumes that (L(x), I(x)) are conditionally independent given the membership M(x), corresponding warp ΦM(x) and training data. Given a prior on M, we can view p(L, I|{Li, Ii, Φi}) as a mixture:

| (5) |

where denotes the marginalization over the unknown random field M . Substituting Eq. (4) into Eq. (5) yields:

| (6) |

In the next section, we present instantiations of the individual terms in Eq. (6).

Fig. 1.

Generative model for (L(x),I(x)) given M(x) = i and (Li,Ii,Φi). Φi is the mapping from the image coordinates to the template coordinates. Squares indicate non-random parameters, while circles indicate random variables. Shaded variables are assumed to be observed.

3 Model Instantiation

3.1 Image Likelihood

We adopt a Gaussian distribution with a stationary variance σ2 as the image likelihood:

| (7) |

3.2 Label Likelihood

We use the distance transform representation to encode the label prior information, cf. [19]. Let denote the signed distance transform of label l in training subject i, assumed to be positive inside the structure of interest. We define the label likelihood as:

| (8) |

where ρ > 0 is the slope constant and , where is the total number of labels including a background label. pi(L(x) = l|Li,Φi(x)) encodes the conditional probability of observing label l at voxel x ∈ Ω of the test image, given that it was generated from training image i.

3.3 Membership Prior

The latent random field encodes the local association between the test image and training data. We place a Markov Random Field (MRF) prior on M:

| (9) |

where β ≥ 0 is a scalar parameter, is a spatial neighborhood of voxel x, Zβ is the partition function that only depends on β, and δ(M(x), M(y)) = 1, if M(x) = M(y) and zero otherwise. In our implementation, includes the immediate 8 neighbors of each voxel. Similar models have been used in the segmentation literature, e.g. [5, 9], mainly as priors on label maps to encourage spatially contiguous segmentations. In contrast, we use the MRF prior to pool local intensity information in determining the association between the test subject and training data.

The parameter β influences the average size of the local patches of the test subject that are generated from a particular training subject. In this work, we consider three settings of the parameter β. For β = 0, the model effectively assumes that each test image voxel is independently generated from a training subject, drawn with a uniform prior. β → +∞ forces the membership of all voxels to be the same and corresponds to assuming that the whole test subject is generated from a single unknown training subject, drawn from a uniform prior. A positive, finite β favors local patches of voxels to have the same membership.

The β → +∞ case reduces to a model similar to the one we presented in [16], except now we make the simplifying assumption that the training data is apriori mapped to the test subject's coordinate frame as a preprocessing step. Due to this simplification, the warp cost in registration plays no role in the segmentation algorithms we present in this paper. Without this simplification, however, inference for finite values of β becomes intractable. As demonstrated in the next section, the resulting inference algorithms allow us to determine the association between the training data and test subject using local intensity information.

4 Algorithms

4.1 Efficient Pairwise Registration

To perform pairwise registration, we employ an efficient algorithm [20, 21] that uses a one-parameter subgroup of diffeomorphisms, where a warp Φ is parameterized with a smooth, stationary velocity field via an ODE [22]: and initial condition Φ(x, 0) = x. The warp Φ(x) = exp(v)(x) can be computed efficiently using scaling and squaring and inverted by using the negative of the velocity field: Φ−1 = exp(−v) [22].

We impose an elastic-like regularization on the stationary velocity field:

| (10) |

where λ > 0 is the warp stiffness parameter, Zλ is a partition function that depends only on λ, and xj and vk denote the j'th and k'th component (dimension) of position x and velocity v, respectively. A higher warp stiffness parameter λ yields more rigid warps.

To derive the registration objective function, we assume a simple additive Gaussian noise model, consistent with the image likelihood term described in Section 3.1. This model leads to the following optimization problem for registering the i-th training image to the test subject:

| (11) |

where σ2 is the stationary image noise variance, and . To solve Eq. (11), we use the bidirectional log-domain Demons framework [20], which decouples the optimization of the first and second terms by introducing an auxiliary transformation. The update warp is first computed using the Gauss-Newton method. The regularization is achieved by smoothing the updated warp field. It can be shown that the smoothing kernel corresponding to Eq. (10) can be approximated with a Gaussian; , where and γ > 0 controls the size of the Gauss-Newton step.

4.2 Segmentation Algorithms

Here, we present algorithms to solve the optimization problem of Eq. (6) for the three cases of β in the model presented in Section 3.

4.3 Global Mixture

First, we consider β → +∞, which is equivalent to a global mixture model, where the test subject is assumed to be generated from a single, unknown training subject. In this case, the segmentation problem in Eq. (6) reduces to

| (12) |

Eq. (12) cannot be solved in closed form. However, an efficient solution to this MAP formulation can be obtained via Expectation Maximization (EM). Here, we present a summary.

The E-step updates the posterior of the membership associated with each training image:

| (13) |

where L̂(n−1)(x) is the segmentation estimate of the test image from the previous iteration and . The M-step updates the segmentation estimate:

| (14) |

The E-step in Eq. (13) determines a single membership index for the entire training image, based on all the voxels. The M-step in Eq. (14) performs an independent optimization at each voxel x ∈ Ω; it determines the mode of a vector, where is the number of labels. The EM algorithm is initialized with and iterates between Equations (14) and (13), until convergence.

4.4 Local Mixture: Independent Prior

The second case we consider is β = 0, which corresponds to assuming a voxel-wise independent mixture model with a uniform prior on M:

| (15) |

where |Ω| is the cardinality of the image domain, i.e., the number of voxels. It is easy to show that the segmentation problem reduces to

| (16) |

where the image and label likelihood terms in the summation can be computed using Eqs. (7) and (8). The optimization problem can be solved by simply comparing numbers at each voxel.

4.5 Semi-local mixture: MRF Prior

Finally, we consider a finite, positive β. This leads to an MRF prior, which couples neighboring voxels and thus the exact marginalization of Eq. (6) becomes computationally intractable. An efficient approximate solution can be obtained using variational mean field [23]. The main idea of variational mean field is to approximate the posterior distribution of the membership p(M |I, L, {Ii, Li, Φi}), with a simple distribution q that is fully factorized:

| (17) |

The objective function of Eq. (6) can then be approximated by an easier-to-optimize lower bound, which is a function of q. This approximate problem can be solved via coordinate-ascent, where the segmentation L and approximate posterior q are updated sequentially, by solving the optimization for each variable while fixing the other. One particular formulation leads to a straightforward update rule for L:

| (18) |

where q(n−1) is an estimate of the posterior at the (n − 1)'th iteration. Eq. (18) is independent for each voxel and entails determining the mode of a vector. For a fixed segmentation estimate L̂(n)(x) the optimal q is the solution of the following fixed-point equation:

| (19) |

and . We solve Eq. (19) iteratively. The variational mean field algorithm alternates between Eqs. (19) and (18), until convergence.

5 Experiments

We validate the proposed framework on 39 T1-weighted brain MRI scans of dimensions 256 × 256 × 256, 1mm isotropic. Each MRI volume is an average of 3-4 scans and was gain-field corrected and skull-stripped. These volumes were then manually delineated by an expert anatomist into left and rightWhite Matter (WM), Cerebral Cortex (CT), Lateral Ventricle (LV), Hippocampus (HP), Thalamus (TH), Caudate (CA), Putamen (PU), Pallidum (PA) and Amygdala (AM). We use volume overlap with manual labels, as measured by the Dice score [24], to quantify segmentation quality. The Dice score ranges from 0to 1, with higher values indicating improved segmentation.

5.1 Setting Parameters Through Training

The proposed nonparametric parcellation framework has two stages with several input parameters. The registration stage has two independent parameters: γ that controls the step size in the Gauss-Newton optimization and α that determines the smoothness of the final warp. The segmentation stage has two additional input parameters: σ2, which is the intensity variance of the image likelihood in Eq. (7) and the slope ρ of the distance transform uses to compute the label prior in Eq. (8). Furthermore, the semi-local model of Section 4.5 has a non-zero, finite β parameter.

Nine subjects were used to determine the optimal values of these parameters. First, 20 random pairs of these nine subjects were registered for a range of values of γ and α. Registration quality was assessed by the amount of pairwise label overlap and used to select the optimal (γ*, α*) pair.

We used the optimal (γ*, α*) pair to register all 72 ordered pairs of the nine training subjects. We performed nine leave-one-out segmentations using both the global and local models to determine the corresponding optimal pairs of σ2 and ρ. The optimal pair for the local model was then used to determine the optimal value for β in the semi-local model. The optimal parameter values were finally used to segment the remaining 30 subjects.

5.2 Benchmarks

The first benchmark we consider is the whole-brain parcellation tool available in the Freesurfer software package [25]. The Freesurfer parcellation tool uses a unified registration-segmentation procedure that models across-scanner intensity variation [2, 3]. We consider this as a state-of-the-art benchmark, since numerous imaging studies across multiple centers have shown Freesurfer's a robustness and accuracy as a segmentation tool.

As a second benchmark, we use our implementation of the Label Fusion algorithm [12, 14]. We employ the pairwise registrations obtained with (γ*, α*) to transfer the labels of the training subjects via the trilinear interpolation of the probability maps, obtained by assigning 1 to entries corresponding to the manual labels and zero elsewhere. Segmentation is then computed through majority voting at each voxel. We use trilinear interpolation instead of nearest neighbor interpolation because we find that trilinear interpolation yields better results.

5.3 Results

We report test results for the 30 subjects not included in the group used for setting the algorithm parameters γ, α, σ2, ρ, and β. For each test subject, we treated the remaining subjects as training data in a cross-validation evaluation.

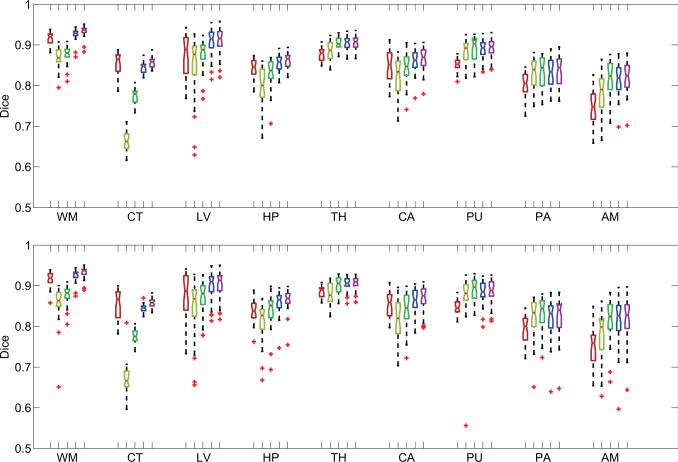

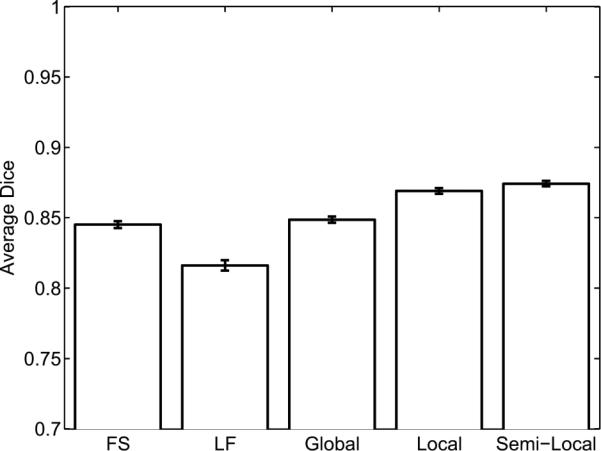

Fig. 2 illustrates a typical automatic segmentation result obtained with the local mixture model and overlaid on the MRI volume. Fig. 3 shows box-plots of Dice scores for the two benchmarks and the proposed non-parametric parcellation algorithms. Table 1 provides the mean Dice scores averaged over all subjects and both hemispheres. Fig. 4 provides an overall comparison between the average dice scores achieved by the algorithms.

Fig. 2.

A typical segmentation obtained with the local mixture model.

Fig. 3.

Boxplots of Dice scores for Freesurfer (red), Label Fusion (yellow), the global mixture model (green), the local mixture model (blue) and the semi-local mixture model (purple). Top row is left hemisphere. Bottom row is right hemisphere. Medians are indicated by horizontal bars. Boxes indicate the lower and upper quartiles and vertical lines extend to 1.5 inter-quartile spacing. ‘+’s indicate outliers.

Table 1.

Comparison of average dice scores. Boldface font indicates best scores for each structure. As a reference, the last row lists approximate average volumes.

| WM | CT | LV | HP | TH | CA | PU | PA | AM | |

|---|---|---|---|---|---|---|---|---|---|

| Freesurfer | 0.92 | 0.85 | 0.87 | 0.84 | 0.88 | 0.85 | 0.85 | 0.80 | 0.75 |

| Label Fusion | 0.85 | 0.66 | 0.84 | 0.77 | 0.86 | 0.80 | 0.86 | 0.81 | 0.75 |

| Global Mixture | 0.88 | 0.77 | 0.87 | 0.83 | 0.90 | 0.84 | 0.89 | 0.83 | 0.81 |

| Local Mixture | 0.93 | 0.84 | 0.90 | 0.86 | 0.90 | 0.86 | 0.88 | 0.82 | 0.82 |

| Semi-local Mixture | 0.93 | 0.86 | 0.91 | 0.87 | 0.91 | 0.87 | 0.89 | 0.83 | 0.82 |

| | |||||||||

| Volumes (×l03mm3) | 450 | 448 | 25 | 7 | 14 | 7 | 10 | 3 | 3 |

Fig. 4.

Average Dice scores for each algorithm (FS: Freesurfer, LF: Label Fusion, Global: Global Mixture, Local: Local Mixture, and Semi-Local: MRF-based model). Error bars show standard error. Each subject and ROI was treated as an independent sample with an equal weight.

On average, the local and semi-local mixture models yield better segmentations than the global mixture model, mainly due to the large improvement in the white matter, cerebral cortex and lateral ventricles, the segmentation of which clearly benefits from the additional use of local intensity information. A paired t-test between the local and semi-local models reveals that a statistically significant improvement is achieved with the MRF model that pools local intensity information. Yet, this improvement is overall quite modest: about 1% per ROI.

As discussed earlier, the global mixture model is similar to that of [16], except that [16] incorporates registration into the model. Despite this, we find that both algorithms achieve similar segmentation accuracy (results not shown).

A paired sample t-test implies that the difference in accuracy between the proposed semi-local mixture model and Freesurfer is statistically significant (p < 0.05, Bonferroni corrected) for all ROIs, except the cerebral cortex and right Caudate, where the two methods yield comparable results. The same results are obtained when comparing the local mixture model and Freesurfer.

Compared to the Label Fusion benchmark, the nonparametric parcellation algorithms (global, local and semi-local) yield significantly better segmentation (paired sample t-test, p < 0.05, Bonferroni corrected) in all regions, except Pallidum and Putamen, where the improvement over Label Fusion does not reach statistical significance. We note, however, that the results we report for our Label Fusion implementation are lower than the ones reported in [12]. This might be due to differences in the data and/or registration algorithm. Specifically, normalized mutual information (NMI) was used as the registration cost function in [12]. Entropy-based measures such as NMI are known to yield more robust alignment results. We leave a careful analysis of this issue to future work.

Table 2 lists the average run-times for all five algorithms. The parametric atlas-based Freesurfer algorithm is the fastest, mainly because it needs to compute only a single registration. The remaining algorithms take up more than 20 hours of CPU time on a modern machine, most of which is dedicated to the many registrations performed with the training data. The two iterative algorithms that solve the global mixture and semi-local mixture models (EM and variational mean field, respectively) require significantly longer run-times. The local-mixture model, on the other hand, requires minimal computation time once the registrations are complete, since it simply performs a voxelwise weighted averaging. Its run-timeis similar to that required by Label Fusion.

Table 2.

Approximate average run-time to segment one test subject (in CPU hours).

| Freesurfer | Label Fusion | Global Mixture | Local Mixture | Semi-local Mixture |

|---|---|---|---|---|

| 10 | 24 | 32 | 24 | 40 |

6 Conclusion

This paper presents a novel, nonparametric mixture model of images and label maps, that yields accurate image segmentation algorithms. The resulting algorithms are conceptually similar to recent label fusion (or multi-atlas) methods that utilize the entire training data, rather than a summary of it, and register the test subject to each training subject separately. Segmentation is then achieved by fusing the transferred manual labels. In the proposed framework, similarities between the test image and training data determine how the transferred labels are weighed during fusion. As we discuss in this paper, different settings of a model parameter yields various weighting strategies. Our experiments suggests that a semi-local strategy that is derived from an MRF model that encourages local image patches to be associated with the same training data provides the best segmentation results. We also show that a computationally less expensive local strategy that treats each voxel independently leads to accurate segmentations that are better than the current state-of-the-art.

We leave an investigation of various registration algorithms within the proposed framework to future work. It is clear that alternative strategies can be used to improve the alignment between the training data and test subject. For example, one could use a richer representation of diffeomorphic warps, cf. [4], or a more sophisticated registration cost function, cf. [12]. Since any multi-atlas segmentation algorithm will be robust against occasional registration failures, whether a better alignment algorithm will lead to more accurate segmentation remains an open question.

Acknowledgements

Support for this research is provided in part by: NAMIC (NIH NIBIB NAMIC U54-EB005149), the NAC (NIH NCRR NAC P41-RR13218), the mBIRN (NIH NCRR mBIRN U24-RR021382), the NIH NINDS R01-NS051826 grant, the NSF CAREER 0642971 grant, NCRR (P41-RR14075, R01 RR16594-01A1), the NIBIB (R01 EB001550, R01EB006758), the NINDS (R01 NS052585-01), the MIND Institute, and the Autism & Dyslexia Project funded by the Ellison Medical Foundation. B.T. Thomas Yeo is funded by the A*STAR, Singapore.

References

- 1.Ashburner J, Friston K. Unified segmentation. Neuroimage. 2005;26:839–851. doi: 10.1016/j.neuroimage.2005.02.018. [DOI] [PubMed] [Google Scholar]

- 2.Fischl B, et al. Sequence-independent segmentation of magnetic resonance images. Neuroimage. 2004;23:69–84. doi: 10.1016/j.neuroimage.2004.07.016. [DOI] [PubMed] [Google Scholar]

- 3.Han X, Fischl B. Atlas renormalization for improved brain MR image segmentation across scanner platforms. IEEE TMI. 2007;26(4):479–486. doi: 10.1109/TMI.2007.893282. [DOI] [PubMed] [Google Scholar]

- 4.Joshi S, Davis B, Jomier M, Gerig G. Unbiased diffeomorphism atlas construction for computational anatomy. Neuroimage. 2004;23:151–160. doi: 10.1016/j.neuroimage.2004.07.068. [DOI] [PubMed] [Google Scholar]

- 5.Van Leemput K, et al. Automated model-based bias field correction of MR images of the brain. 1999;18(10):885–896. doi: 10.1109/42.811268. [DOI] [PubMed] [Google Scholar]

- 6.Pohl K, et al. A bayesian model for joint segmentation and registration. Neuroimage. 2006;31:228–239. doi: 10.1016/j.neuroimage.2005.11.044. [DOI] [PubMed] [Google Scholar]

- 7.Twining C, et al. A unified information-theoretic approach to groupwise non-rigid registration and model building. In Proc. IPMI. 2005;LNCS 3565:1–14. doi: 10.1007/11505730_1. [DOI] [PubMed] [Google Scholar]

- 8.Tu Z, et al. Brain anatomical structure segmentation by hybrid discriminative/generative models. IEEE TMI. 2008;27(4):495–508. doi: 10.1109/TMI.2007.908121. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Yeo BTT, et al. Effects of registration regularization and atlas sharpness on segmentation accuracy. Medical Image Analysis. 2008;12(5):603–615. doi: 10.1016/j.media.2008.06.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Aljabar P, et al. Classifier selection strategies for label fusion using large atlas databases. In Proc. of MICCAI. 2007;LNCS 4791:523–531. doi: 10.1007/978-3-540-75757-3_64. [DOI] [PubMed] [Google Scholar]

- 11.Artaechevarria X, et al. Combination strategies in multi-atlas image segmentation: Application to brain MR data. IEEE TMI. 2009;28(8):1266–1277. doi: 10.1109/TMI.2009.2014372. [DOI] [PubMed] [Google Scholar]

- 12.Heckemann R, et al. Automatic anatomical brain MRI segmentation combining label propagation and decision fusion. Neuroimage. 2006;33(1):115–126. doi: 10.1016/j.neuroimage.2006.05.061. [DOI] [PubMed] [Google Scholar]

- 13.Isgum I, et al. Multi-atlas-based segmentation with local decision fusion-application to cardiac and aortic segmentation in CT scans. IEEE TMI. 2009;28(7) doi: 10.1109/TMI.2008.2011480. [DOI] [PubMed] [Google Scholar]

- 14.Rohlfing T, et al. Evaluation of atlas selection strategies for atlas-based image segmentation with application to confocal microscopy images of bee brains. NeuroImage. 2004;21(4):1428–1442. doi: 10.1016/j.neuroimage.2003.11.010. [DOI] [PubMed] [Google Scholar]

- 15.Rohlfing T, et al. Performance-based classifier combination in atlas-based image segmentation using expectation-maximization parameter estimation. IEEE TMI. 2004;23(8):983–994. doi: 10.1109/TMI.2004.830803. [DOI] [PubMed] [Google Scholar]

- 16.Sabuncu M, et al. Supervised nonparameteric image parcellation. In Proc. of MICCAI. 2009;LNCS 5762 doi: 10.1007/978-3-642-04271-3_130. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Davis B, et al. Population shape regression from random design data. In Proc. of ICCV. 2007:1–7. [Google Scholar]

- 18.Warfield S, et al. Simultaneous truth and performance level estimation (STAPLE): an algorithm for validation of image segmentation. TMI. 2004;23(7):903–921. doi: 10.1109/TMI.2004.828354. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Pohl K, et al. Logarithm odds maps for shape representation. MICCAI 2006. 2006;LNCS 4191:955–963. doi: 10.1007/11866763_117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Vercauteren T, et al. Symmetric log-domain diffeomorphic registration: A demons-based approach. In Proc of MICCAI. 2008;LNCS 5241:754–761. doi: 10.1007/978-3-540-85988-8_90. [DOI] [PubMed] [Google Scholar]

- 21.Sabuncu M, et al. Asymmetric image template registration. In Proc. of MICCAI. 2009;LNCS 5761 doi: 10.1007/978-3-642-04268-3_70. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Arsigny V, Commowick O, Pennec X, Ayache N. A log-Euclidean framework for statistics on diffeomorphisms. In Proc of MICCAI. 2006;LNCS 4190:924–931. doi: 10.1007/11866565_113. [DOI] [PubMed] [Google Scholar]

- 23.Jaakkola T. Tutorial on variational approximation methods. Advanced Mean Field Methods: Theory and Practice. 2000 [Google Scholar]

- 24.Dice L. Measures of the amount of ecologic association between species. Ecology. 1945;26(3):297–302. [Google Scholar]

- 25.Freesurfer http://surfer.nmr.mgh.harvard.edu.