Abstract

With high rates of trauma exposure among students, the need for intervention programs is clear. Delivery of such programs in the school setting eliminates key barriers to access, but there are few programs that demonstrate efficacy in this setting. Programs to date have been designed for delivery by clinicians, who are a scarce resource in many schools. This study describes preliminary feasibility and acceptability data from a pilot study of a new program, Support for Students Exposed to Trauma, adapted from the Cognitive Behavioral Intervention for Trauma in Schools (CBITS) program. Because of its “pilot” nature, all results from the study should be viewed as preliminary. Results show that the program can be implemented successfully by teachers and school counselors, with good satisfaction among students and parents. Pilot data show small reductions in symptoms among the students in the SSET program, suggesting that this program shows promise that warrants a full evaluation of effectiveness.

Keywords: school, trauma, violence, intervention

In the last two decades, there has been increasing attention to children's exposure to violence (Osofsky, 2003; Pynoos, Steinberg, & Goenjian, 1996; Stein, Jaycox, Kataoka, Rhodes, & Vestal, 2003). Early reports showed that between 20% and 50% of American children were victims of violence within their families, at school, and in their communities (Finkelhor & Dziuba-Leatherman, 1994). In fact, from 1976 to 2000 the rate of violent victimization among adolescents aged twelve to nineteen was higher than that of any other age group (Klaus & Rennison, 2002). Not only are a large number of American children victims of violence, but an even greater number may be traumatized by witnessing it. Minority children, those of lower socioeconomic status, and those in urban areas are disproportionately affected (see Stein et al., 2003, for a review).

A trauma is defined in the psychiatric literature as a sudden, life-threatening event in which an individual feels horrified, terrified, or helpless; such events include personally experiencing or witnessing violent assaults (American Psychiatric Association, 1994). Studies have documented a broad range of negative sequelae of trauma exposure for children and adolescents, including posttraumatic stress disorder (PTSD) (Jaycox et al., 2002; Singer, Anglin, Song, & Lunghofer, 1995; Stein et al., 2001); other anxiety problems (Finkelhor, 1995; Osofsky, Wewers, Hann, & Fick, 1993); depressive symptoms (Jaycox et al., 2002; Kliewer, Lepore, Oskin, & Johnson, 1998; Overstreet, 2000); dissociation (Putnam, 1997); impairment in school functioning (Hurt, Malmud, Brodsky, & Giannetta, 2001; Schwab-Stone et al., 1995); decreased intellectual functioning and reading ability (Delaney-Black et al., 2002); lower grade-point average (GPA) and more days of school absence (Hurt et al., 2001); and decreased rates of high school graduation (Grogger, 1997). Finally, trauma exposure is related to behavioral problems, particularly aggressive and delinquent behavior (Farrell & Bruce, 1997; Martinez & Richters, 1993; Ruchkin, Henrich, Jones, Vermeiren, & Schwab-Stone, 2007).

Schools have long been identified as an ideal entry point for improving children's access to mental health services (Allensworth, Lawson, Nicholson, & Wyche, 1997; Cooper, 2008). However, few mental health programs have been rigorously evaluated in the real-world setting of schools (Hoagwood & Erwin, 1997). Several intervention programs have been designed to help children cope with exposure to traumatic events, including violence (Jaycox, Morse, Tanielian, & Stein, 2006; Jaycox, Stein, Amaya-Jackson, & Morse, 2007). Most of these have not yet been evaluated, and only a handful have shown effectiveness in experimental studies (Jaycox, Stein, & Amaya-Jackson, in press). Moreover, most programs to date have been designed for delivery by mental health clinicians. Only a few have been designed for use by non-clinicians such as school counselors and teachers (e.g., Healing After Trauma Skills, or HATS; see Jaycox, Morse, Tanielian, & Stein, 2006, for a full listing), and these have not been fully evaluated. Our own program, the Cognitive-Behavioral Intervention for Trauma in Schools (CBITS; Jaycox, 2003), was developed in a collaboration among RAND, UCLA, and the Los Angeles Unified School District and is one of the few school programs with clear evidence of improved youth outcomes (Kataoka et al., 2003; Stein et al., 2003). Early quasi-experimental work with recent immigrant children showed that the program improved PTSD and depressive symptoms, as well as parent-reported behavioral problems (Kataoka et al., 2003), and a subsequent randomized controlled trial in the general school population replicated these findings (Stein et al., 2003). However, CBITS's reliance on clinically trained school mental health professionals limits its reach, because many schools lack such professionals.
This lack of trained clinicians is often a key barrier, as a study of the implementation of evidence-based programs in schools following Hurricane Katrina found (Jaycox et al., 2007). However, whether a trauma-focused intervention would be feasible for, and acceptable to, non-clinical school personnel remains unknown.

To address this question, this paper reports on an effort to adapt the CBITS program for use by school personnel without clinical training (Jaycox, Kataoka, Stein, Wong, & Langley, 2005); we called the adapted program Support for Students Exposed to Trauma, or SSET. The project aimed to establish the feasibility of the program, including both structural implementation issues and implementer fidelity to the manual, as a demonstration that this approach can be implemented as intended. We also conducted a small pilot study to observe change as a function of participation and to evaluate participant and parent satisfaction with the program.

Methods

Support for Students Exposed to Trauma (SSET)

The SSET program consists of 10 lessons designed to reduce posttraumatic and depressive symptoms and improve functioning in middle school youth who have been exposed to traumatic events (Jaycox & Langley, 2005). The core cognitive behavioral elements of the 10 lessons are the same as those used in CBITS. These include 1) psychoeducation (common reactions to stress or trauma), 2) relaxation training, 3) cognitive coping (thoughts and feelings, helpful thinking), 4) gradual in vivo mastery of trauma reminders and generalized anxiety (approaching safe situations that may be hard for me), 5) processing traumatic memories (newspaper and first-person stories and drawings), and 6) social problem-solving. The two programs differ mainly in four ways: SSET converts the CBITS session format to a lesson plan format, eliminates the individual breakout sessions, eliminates the parent sessions, and adapts the imaginal exposure to trauma conducted in CBITS into a more curricular format.

As in CBITS, each SSET lesson is designed to be completed within a class period (about 45 minutes). Lessons generally begin with a review of independent practice from the prior lesson, followed by a lesson plan that includes some didactic presentation of material, engagement activities to promote mastery of the skill, and a plan for independent practice before the next lesson.

Two teachers at one school, and a teacher and a school counselor at a second school, were identified by the principals as candidates to deliver the SSET program. Teaching experience varied: one teacher had taught social studies to middle school students for 10 years; another had 7 years of experience teaching 8th-grade algebra and algebra readiness and had also received some training in working with at-risk youth; and a third was in her first full year of teaching 6th-grade math. The school counselor had four years of experience and had received special training in School Safety, Peer Helpers, and suicide prevention. None of the implementers had specific clinical training, though all were professionals with an interest in students' social and emotional development.

The second author (AKL) trained all four implementers together over two days, using an implementation manual (Langley, Jaycox, & Dean, 2005) as well as the SSET manual. Each implementer conducted two groups (one immediate and one delayed) per year for two years. Groups met about once a week during the school day, during the teachers' planning period or at the school counselor's convenience. Implementers received weekly group or individual supervision while running groups in the first year and biweekly supervision in the second year. They also received a yearly stipend in exchange for giving up their planning period, as is done for other special programs run within the school district.

Setting

This project was conducted in two middle schools in the Los Angeles Unified School District (LAUSD), selected as a convenience sample. The first, located in the San Fernando Valley of Los Angeles, serves 1,840 students in grades 6-8 (91% Latino, 4% Filipino, 3% African American, 1% Caucasian, and 1% Asian/Pacific Islander) on a traditional academic calendar (September-June). It is a newly constructed school (3 years old), built to relieve overcrowding in the area. Eighty-two percent of its students are eligible for the free or reduced-price lunch program, and 59% are English learners. The second middle school, located in South Central Los Angeles, serves 2,403 students in grades 6-8 (82% Latino, 18% African American) on a four-track year-round calendar adopted to accommodate overcrowding. Eighty-eight percent of its students are eligible for the free or reduced-price lunch program, and 52% are English learners. Both schools qualify as Title I schools under the No Child Left Behind Act of 2001.

Recruitment & Screening Procedures

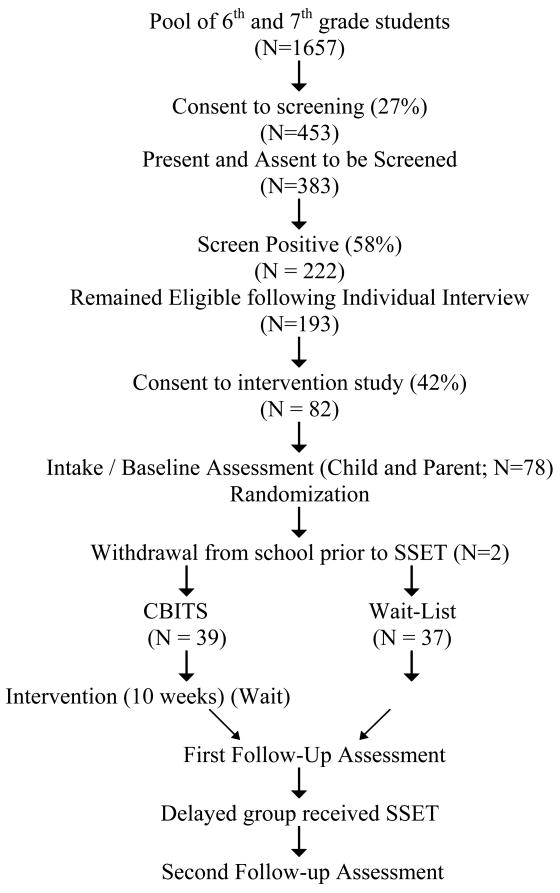

Figure 1 shows the flow of children through the study, in total across the two years. As in prior studies (Stein et al., 2003), we first obtained active parent consent and student assent and then used two measures to select students for inclusion in the SSET program. To encourage the return of consent forms, we offered a $50 incentive to every classroom in which 70% or more of students returned signed consent forms (whether consenting to or refusing participation). Parental consent forms for screening were sent home with 1,657 6th and 7th graders across the two middle schools over the two-year period. Eight hundred sixty-one (52%) of the forms were returned, and 453 (52%) of those returned gave consent. Three hundred eighty-three students (those with parental permission who also assented) completed the screening protocol in small groups, which assessed their exposure to violence and current PTSD symptoms. All procedures were reviewed and approved by the RAND and UCLA IRBs and by the LAUSD Program Evaluation and Research Branch.

Figure 1.

Flow Chart (Total of 2 Years)

Fifty-eight percent (n = 222) met both of the following criteria: 1) experience of severe violence in the prior year (responses on the Life Experiences Survey [LES; described below] indicating being the victim or witness of violence involving a knife or gun, or a summed score greater than 3, consistent with exposure to one or more violent events) and 2) current PTSD symptoms, indicated by a score of 11 or greater on the Child PTSD Symptom Scale (CPSS), reflecting moderate symptom severity. These criteria are consistent with earlier evaluations of the CBITS program (Kataoka et al., 2003; Stein et al., 2003), although that earlier work required exposure to more instances of community violence. These children were interviewed individually by research staff to validate the screener. One hundred ninety-three (87%) remained eligible after the individual interview, and a second parental consent form, requesting consent to participate in the intervention study, was sent home with these students. Ninety-eight (51%) of these forms were returned, with 82 (84%) consenting to participation.
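The two-part screening rule can be written as a single predicate. The Python sketch below is illustrative only, not the study's scoring code: the function and argument names are hypothetical, and it assumes the LES summed score and a knife-or-gun flag have already been derived from the survey items.

```python
def meets_screening_criteria(les_summed_score: int,
                             les_knife_or_gun: bool,
                             cpss_score: int) -> bool:
    """Hypothetical helper mirroring the two screening criteria.

    Criterion 1 (severe violence in the prior year): victim or witness of
    violence involving a knife or gun, OR a summed LES score greater than 3.
    Criterion 2 (current PTSD symptoms): a CPSS score of 11 or greater.
    A student must meet both criteria.
    """
    severe_violence = les_knife_or_gun or les_summed_score > 3
    moderate_ptsd = cpss_score >= 11
    return severe_violence and moderate_ptsd


# Made-up screening records: (LES summed score, knife/gun flag, CPSS score)
screened = [(5, False, 14), (2, True, 11), (2, False, 20), (6, True, 8)]
eligible = [record for record in screened if meets_screening_criteria(*record)]
print(len(eligible))  # 2
```

Note that a knife or gun event satisfies the violence criterion on its own, even when the summed score is 3 or less.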

Seventy-eight students consented to participate in the intervention and were randomized (within schools) either to the immediate program or to the waiting-list control group, as described below. Two students withdrew from school shortly after randomization, before groups began, leaving 76 in the analytic sample. Those assigned to the immediate group began the program within a few weeks and completed the post-program assessment at the end of the intervention. Those on the waiting list waited a comparable period, completed the post-program assessment, and then completed the program themselves. In the first year of the project, the calendar for one track within a school did not allow full implementation: the school year ended before students on the waiting list completed the program. These 10 students received the SSET program at the beginning of the next school year but did not complete the third assessment. Thus, all students except these 10 were assessed a final time, after both groups had received the program.

We developed procedures for reviewing and responding to the surveys at screening and after each assessment period, in order to identify students at higher levels of distress, to evaluate possible child abuse, and to ensure appropriate and timely referrals of students in need. These procedures used the assessment data as a trigger to initiate or augment the school's regular systems of care, including referrals for mental health intervention within the school, reports of suspected child abuse, and linking students with services in the community.

Randomization Procedures

Randomization was conducted separately within each school and track and was stratified by gender. To accommodate students who returned their parent consent forms late, we conducted an initial randomization for enrolled students and also randomized empty slots for late entries. The resulting groups were relatively equivalent, although there was a small difference in ethnic composition, with slightly more non-Hispanic students in the immediate intervention group (see Table 1).
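Stratified assignment of this kind can be sketched as follows. This Python example is a minimal illustration under stated assumptions, not the study's procedure: the record fields (`school`, `track`, `gender`), the fixed seed, and the shuffle-then-alternate scheme used to keep arms balanced within each stratum are all hypothetical, and the randomization of empty slots for late entries is not modeled.

```python
import random
from collections import defaultdict


def stratified_assign(students, seed=2005):
    """Assign each student to 'immediate' or 'delayed' within strata.

    Strata are (school, track, gender). Within each stratum the student IDs
    are shuffled and then assigned alternately, starting from a randomly
    chosen arm, so the two arms differ by at most one student per stratum.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for s in students:
        strata[(s["school"], s["track"], s["gender"])].append(s["id"])

    assignment = {}
    for key in sorted(strata):
        ids = strata[key][:]
        rng.shuffle(ids)
        arms = ["immediate", "delayed"]
        rng.shuffle(arms)  # random starting arm for this stratum
        for i, sid in enumerate(ids):
            assignment[sid] = arms[i % 2]
    return assignment


# Made-up roster: two schools, one track, alternating gender
students = [{"id": i, "school": "A" if i < 20 else "B", "track": "1",
             "gender": "F" if i % 2 else "M"} for i in range(35)]
groups = stratified_assign(students)
```

Alternating after a shuffle is one simple way to force near-equal arm sizes in small strata; simple coin-flip assignment within strata would not guarantee that balance.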

Table 1. Sample Demographics.

| Characteristic | Total sample (n=76) | Control (n=37) | Intervention (n=39) | Statistic | p-value |
|---|---|---|---|---|---|
| Gender (%) | | | | χ² = 0.61 | 0.44 |
| Male | 48.68 | 51.35 | 46.15 | | |
| Female | 51.32 | 48.65 | 53.85 | | |
| Age, mean years (SD) | 11.5 (0.7) | 11.5 (0.7) | 11.4 (0.6) | t = -0.49 | 0.63 |
| Hispanic (%) | | | | χ² = 6.11 | 0.01 |
| No | 3.95 | 2.70 | 5.13 | | |
| Yes | 96.05 | 97.30 | 94.87 | | |
| Parent marital status (%) | | | | χ² = 0.24 | 0.62 |
| Married | 52.63 | 64.86 | 41.03 | | |
| Widowed | 2.63 | 5.41 | 0.00 | | |
| Separated/divorced | 25.00 | 16.22 | 33.33 | | |
| Never married | 11.84 | 8.11 | 15.38 | | |
| Cohabiting | 7.89 | 5.41 | 10.26 | | |
| Parent education, mean years (SD) | 8.5 (3.6) | 8.2 (4.0) | 8.8 (3.2) | t = 0.55 | 0.58 |
| Parent employment status (%) | | | | χ² = 0.57 | 0.45 |
| Full time | 48.68 | 43.24 | 53.85 | | |
| Temporarily laid off | 2.63 | 0.00 | 5.13 | | |
| Part time | 22.37 | 29.73 | 15.38 | | |
| Unemployed | 1.32 | 2.70 | 0.00 | | |
| Retired | 2.63 | 0.00 | 5.13 | | |
| Homemaker | 22.37 | 24.32 | 20.51 | | |
| Family income (%) | | | | χ² = 0.74 | 0.74 |
| $4,999 or less | 13.16 | 16.22 | 10.26 | | |
| $5,000-14,999 | 27.63 | 29.73 | 25.64 | | |
| $15,000-24,999 | 38.16 | 29.73 | 46.15 | | |
| $25,000-39,000 | 10.53 | 13.51 | 7.69 | | |
| $40,000+ | 9.21 | 10.81 | 7.69 | | |
| Don't know | 1.32 | 0.00 | 2.56 | | |

Feasibility Measures

To measure fidelity to the SSET manual, all lessons were audio-recorded. We developed a fidelity measure, and three raters (two developers and one independent research rater) used it to rate three randomly selected lessons; the raters then discussed their ratings and modified the measure for clarity and to reach consensus. Following this procedure, the independent rater, who was not involved in the pilot study, used the measure to rate two components of each session: coverage and quality. Coverage was the degree to which the group leader covered the required elements unique to each lesson. The number of required elements varied by lesson; for example, lesson 1 had six required elements, while lesson 4 had nine. Each element was rated on a 0-3 scale: a rating of zero indicated that the element was not covered at all during the lesson, while a rating of three meant that the group leader covered the element thoroughly and integrated it into the larger context of treatment. From these ratings, we calculated an overall average coverage score, as well as average scores for each group leader and for each lesson.
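The coverage summaries amount to grouped means of 0-3 element ratings. The Python sketch below assumes a hypothetical layout in which ratings are keyed by (leader, lesson). Because lessons have different numbers of required elements, it first averages elements within a session and then averages sessions; this is one reasonable choice, not necessarily the one the authors used.

```python
from collections import defaultdict
from statistics import mean


def coverage_summary(sessions):
    """Summarize 0-3 coverage ratings.

    `sessions` maps a (leader, lesson) pair to the list of 0-3 ratings for
    that lesson's required elements (the number of elements varies by
    lesson). Each session is averaged over its elements first; the overall,
    per-leader, and per-lesson scores are means of those session averages.
    """
    session_scores = {key: mean(ratings) for key, ratings in sessions.items()}
    by_leader, by_lesson = defaultdict(list), defaultdict(list)
    for (leader, lesson), score in session_scores.items():
        by_leader[leader].append(score)
        by_lesson[lesson].append(score)
    return {
        "overall": mean(session_scores.values()),
        "by_leader": {k: mean(v) for k, v in by_leader.items()},
        "by_lesson": {k: mean(v) for k, v in by_lesson.items()},
    }


# Made-up ratings: lesson 1 has six required elements, lesson 4 has nine
sessions = {
    ("A", 1): [3, 3, 3, 3, 3, 2],
    ("A", 4): [2, 2, 3, 2, 2, 2, 3, 2, 2],
    ("B", 1): [3, 2, 3, 3, 3, 3],
}
summary = coverage_summary(sessions)
print(summary["overall"], summary["by_leader"], summary["by_lesson"])
```

Averaging all element ratings in one pool would instead give lessons with more required elements more weight.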

Overall quality of each lesson was assessed with 7 items, such as whether the group leader conveyed empathy and whether the group leader could motivate the children during the lesson. Each item was rated on a 0-3 scale with a unique set of anchors. For example, one item assessed whether the group leader presented the agenda at the beginning of the lesson and reviewed the lesson at the end. A score of 0 indicated that the group leader never presented the agenda or summarized the lesson, while a score of 3 indicated that the group leader summarized the agenda and the lesson and listed the points that would be covered and how they would help.

All four group leaders were asked to keep track of students' attendance during the course of the program, in order to assess feasibility from the perspective of student compliance with the program.

Child Screening and Outcome Measures

Screening measures were administered as described above, and primary outcome measures were administered at baseline, 3 months (after the immediate group received SSET), and 6 months (after the delayed group received SSET). Student assessments were conducted at school, in small groups, in English, and students received a small gift for completing each assessment. Parent assessments were conducted by telephone in English or Spanish, and parents received a $15 money order by mail upon completing the interview. Teacher assessments were requested from the students' English teachers at the same intervals. Measures included the CPSS, described above, as well as several other measures of mood and behavioral problems.

We used the Modified Life Experiences Survey (LES; Singer et al., 1995; Singer, Miller, Guo, Slovak, & Frierson, 1998) to assess exposure to violence through direct experience and witnessing of events at home, at school, and in the neighborhood. The original version was developed to determine the extent of violence exposure in the community; it includes 26 items assessing exposure in the past year and 12 items assessing lifetime exposure to these events. The scale shows a high degree of internal consistency on its subscales. As in prior studies (Jaycox et al., 2001; Kataoka et al., 2003; Stein et al., 2003), we modified the form to a 17-item measure to collect information acceptable to schools, omitting items assessing violence at home and child abuse and thus assessing community violence exposure only. Experience of severe violence in the prior year was defined by responses indicating being the victim or witness of violence involving a knife or gun, or by a summed score greater than 3, consistent with exposure to one or more violent events.

We used the Child PTSD Symptom Scale (CPSS; Foa, Treadwell, Johnson, & Feeny, 2001) to assess PTSD symptoms, both to determine child need and to examine child outcomes over time. This scale has been used with school-aged children as young as 8 and has shown good convergent and discriminant validity and high reliability (Foa et al., 2001). In our earlier work, its internal consistency was high (Cronbach's alpha = 0.89; Jaycox et al., 2002). In this study we used it as a continuous scale, as designed, and also used cut-points to determine study eligibility, as in prior work (Kataoka et al., 2003; Stein et al., 2003), and to define a “high symptoms” group (a score of 18 or higher) for exploratory analyses.

Children's Depression Inventory (CDI; Kovacs, 1981). This 27-item measure assesses children's cognitive, affective, and behavioral depressive symptoms. The scale has high internal consistency and moderate test-retest reliability, and it correlates in the expected direction with measures of related constructs (e.g., self-esteem, negative attributions, and hopelessness; Kendall, Cantwell, & Kazdin, 1989). Normative data are available (Finch et al., 1985). We used a 26-item version of the scale that omits an item about suicidal ideation. A high symptoms group was defined as those who scored 13 or higher on the depression scale.

Strengths and Difficulties Questionnaire, Parent Report and Teacher Report (SDQ; Goodman, 1997; Goodman, Meltzer, & Bailey, 1998). This questionnaire contains 25 items (20 assessing problem areas, namely emotional symptoms, conduct problems, hyperactivity/inattention, and peer relationship problems, and 5 assessing prosocial behavior), plus items that tap functional impairment related to these problems (Goodman, 1999). The scale compares favorably with the Rutter scales (Goodman, 1997) and the Child Behavior Checklist (Goodman & Scott, 1999) and distinguishes well between clinical and non-clinical samples. Norms for the scale are available from the nationally representative National Health Interview Survey. The follow-up version of the questionnaire includes two questions that assess change as a function of an intervention. High symptoms groups were defined as those who scored above the “abnormal” cutoff on the SDQ (16 for teacher-reported problems and 17 for parent-reported problems), as recommended by the questionnaire's developer.
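The four exploratory high-symptoms groups can be summarized as a table of cut-points. In this Python sketch the measure keys are hypothetical names. The CPSS (18 or higher) and CDI (13 or higher) thresholds come from the text. Treating the SDQ thresholds as inclusive (16 or higher for teacher report, 17 or higher for parent report) is an assumption, since "above the cutoff" could also be read as strictly greater.

```python
# Hypothetical cut-points for the exploratory "high symptoms" groups.
# CPSS and CDI thresholds are stated in the text; the inclusive (>=)
# SDQ thresholds are an assumption about how the cutoff was applied.
HIGH_SYMPTOM_CUTOFFS = {
    "cpss_ptsd": 18,       # Child PTSD Symptom Scale, 18 or higher
    "cdi_depression": 13,  # 26-item CDI, 13 or higher
    "sdq_teacher": 16,     # SDQ teacher report, assumed 16 or higher
    "sdq_parent": 17,      # SDQ parent report, assumed 17 or higher
}


def high_symptom_flags(scores: dict) -> dict:
    """Return, per measure, whether a baseline score is in the high group.

    Measures absent from `scores` are skipped, because each high-symptoms
    group is defined separately for each measure.
    """
    return {
        measure: scores[measure] >= cutoff
        for measure, cutoff in HIGH_SYMPTOM_CUTOFFS.items()
        if measure in scores
    }


flags = high_symptom_flags({"cpss_ptsd": 21, "cdi_depression": 12, "sdq_parent": 17})
print(flags)  # {'cpss_ptsd': True, 'cdi_depression': False, 'sdq_parent': True}
```

Because group membership is decided per measure, a child can be in the high group for one outcome and the low group for another. This is why the high-symptoms group Ns differ across measures.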

Satisfaction Measures

Parent and child satisfaction with SSET was assessed after the intervention. Parent satisfaction was based on 13 questions about items such as the privacy given to the child and family, the explanation of the intervention, and the professionalism of the group leaders. Each question was answered on a scale from 0 (very poor) to 6 (outstanding), and the individual scores were averaged to obtain an overall parent satisfaction score. Child satisfaction was determined in a similar manner. The child satisfaction survey had 15 questions about satisfaction with the group leader, the group in general, and the content of the intervention, with answer choices ranging from “not at all true” (0) to “very true” (3). An overall child satisfaction score was obtained by averaging the scores for the 15 questions. The child survey also included two open-ended questions asking what the child liked best about the group and what he or she did not like about it.
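Both satisfaction scores are item means on their own response scales (0-6 for the 13 parent items, 0-3 for the 15 child items). The Python sketch below is illustrative only. The function name is hypothetical, and skipping unanswered items rather than imputing them is an assumption, chosen to match the note that satisfaction data were not imputed.

```python
from statistics import mean
from typing import Optional, Sequence


def satisfaction_score(responses: Sequence[Optional[int]],
                       n_items: int, max_value: int) -> float:
    """Average the answered items of one satisfaction survey.

    `responses` holds one entry per item; None marks an unanswered item,
    which is skipped rather than imputed.
    """
    if len(responses) != n_items:
        raise ValueError(f"expected {n_items} responses, got {len(responses)}")
    answered = [r for r in responses if r is not None]
    if not answered:
        raise ValueError("no items were answered")
    if any(not 0 <= r <= max_value for r in answered):
        raise ValueError(f"responses must lie between 0 and {max_value}")
    return float(mean(answered))


parent = [6, 5, 6, 6, 4, 5, 6, None, 6, 5, 6, 6, 5]   # 13 items, 0-6 scale
child = [3, 3, 2, 3, 3, 2, 3, 3, 3, 1, 3, 3, 2, 3, 3]  # 15 items, 0-3 scale
print(satisfaction_score(parent, 13, 6), satisfaction_score(child, 15, 3))
```

The two scales differ in range, so parent and child scores can be compared only relative to their own maximums, not directly.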

Analysis

We computed descriptive statistics on parent and child satisfaction with SSET, implementer fidelity to the model, and student attendance and compliance to examine the feasibility and acceptability of this model.

Because the pilot study did not have enough participants to provide adequate power to detect statistically significant effects, we focus on trends and effect sizes rather than significance. With the number of participants included here, we could expect only a 14% chance of detecting a small effect (0.2) and a 58% chance of detecting a medium effect (0.5; Cohen, 1998). We therefore present the data and observed effect sizes along with basic significance tests. Because scores may also change over time without treatment, we calculated effects based on differences between groups as well as differences over time. For significance testing, we conducted linear regressions of scores on the primary outcome measures at the first follow-up, controlling for baseline scores, and examined the effect of intervention group. We added school and group leader to the models as fixed effects, one at a time, to see whether they changed the intervention effect, because the sample was too small to adjust for clustering of students within groups and schools. Finally, we examined outcomes within the high symptoms groups on each of the four measures, to observe the program's impact on more symptomatic children, as well as within the low symptoms groups.
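The power figures can be roughly checked with a normal approximation to a two-sided, two-sample comparison at alpha = .05. This Python sketch is an assumption about how such figures are typically obtained, not the authors' calculation. It uses the analytic group sizes from Table 1 (37 control, 39 intervention) and ignores the baseline covariate. It gives about 14% for d = 0.2 and about 59% for d = 0.5; a t-based calculation gives slightly lower power.

```python
from math import sqrt
from statistics import NormalDist


def two_sample_power(d: float, n1: int, n2: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided two-sample test for effect size d.

    Normal approximation: the standardized mean difference d is scaled by
    sqrt(n1 * n2 / (n1 + n2)) to give the expected test statistic.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    shift = d * sqrt(n1 * n2 / (n1 + n2))
    # Probability of rejecting in either tail under the alternative.
    return (1 - z.cdf(z_crit - shift)) + z.cdf(-z_crit - shift)


for d in (0.2, 0.5):
    print(d, round(two_sample_power(d, 37, 39), 2))
```

Adjusting for baseline scores, as the regressions do, typically increases power somewhat relative to this unadjusted approximation.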

Missing values were imputed five times, separately for each informant (student, teacher, and parent) and each imputation class. Six imputation classes were formed from the 3 time points and 2 treatment groups (immediate and delayed intervention). At baseline, items were imputed from within the respective group (immediate or delayed intervention) at the baseline wave. At the first follow-up, missing items for all individuals were imputed from the control group at the first follow-up, because missing values within the immediate group indicated that those students had not received treatment. At the second follow-up, after both groups should have received treatment, missing values were imputed from the first follow-up within the respective groups. We imputed individual scale items. Continuous variables were imputed using predictive mean matching. Dichotomous variables were imputed using logistic regression, with imputed values stochastically assigned 0 or 1 by Bernoulli sampling. Ordered categorical variables with few categories were imputed using ordered logistic regression. All imputations were implemented with PROC MI in SAS Version 9. Missing values on the satisfaction surveys were not imputed, because those surveys were gathered anonymously and were not linked to the rest of the data.
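Predictive mean matching fills a missing value with an actual observed value from a similar case, so imputed scores stay within the observed range. The Python sketch below is a simplified, single-predictor illustration, not the SAS PROC MI implementation the study used. The function name and example data are hypothetical. The sketch also omits one step of proper multiple imputation: drawing fresh regression coefficients for each imputation.

```python
import random
from statistics import mean


def pmm_impute(x, y, k=5, rng=None):
    """Single-predictor predictive mean matching (simplified sketch).

    x: fully observed predictor values (e.g., baseline scores).
    y: outcome values, with None marking missing entries.
    Fits y ~ x by ordinary least squares on the complete cases. For each
    missing y, it finds the k observed cases whose predicted values are
    closest to the missing case's prediction and copies the observed y of
    one randomly chosen donor.
    """
    rng = rng or random.Random()
    observed = [(xi, yi) for xi, yi in zip(x, y) if yi is not None]
    if len(observed) < 2:
        raise ValueError("need at least two observed outcomes")
    mx = mean(xi for xi, _ in observed)
    my = mean(yi for _, yi in observed)
    sxx = sum((xi - mx) ** 2 for xi, _ in observed)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in observed)
    slope = sxy / sxx if sxx else 0.0
    intercept = my - slope * mx

    def predict(v):
        return intercept + slope * v

    imputed = list(y)
    for i, (xi, yi) in enumerate(zip(x, y)):
        if yi is None:
            target = predict(xi)
            # The k observed cases whose predicted means are closest.
            donors = sorted(observed, key=lambda p: abs(predict(p[0]) - target))[:k]
            imputed[i] = rng.choice(donors)[1]
    return imputed


# Five imputations of a toy data set, one per random seed
x = [10, 12, 15, 20, 22, 25, 30]
y = [11, None, 14, 19, None, 24, 29]
imputations = [pmm_impute(x, y, k=2, rng=random.Random(seed)) for seed in range(5)]
```

Copying observed values, rather than using the regression prediction itself, is what distinguishes predictive mean matching from plain regression imputation.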

Results

The selected sample consisted of 76 students in the 6th and 7th grades (mean age 11.5 years, SD = 0.7; see Table 1) at two large middle schools in urban Los Angeles during two school years (2005-06 and 2006-07). Most students were Hispanic (96%): 88% reported their ethnic and racial status as Hispanic/White, 8% as Hispanic/African American, 3% as non-Hispanic/African American, and 1% as non-Hispanic/White. The sample was evenly split by gender (51% female, 49% male) and was of lower socioeconomic status, with parents reporting an 8th-grade education on average and 80% reporting a family income of $25,000 or less. There were no differences at baseline between students at the two schools.

Implementation and Fidelity

We randomly selected 26 of the 160 tapes (16%) to measure fidelity. Several tapes were of poor quality and could not be rated, so they were replaced with others. Overall, both coverage and quality were high. The average coverage rating was 2.39 out of 3, with a slight decrease from year 1 (2.43) to year 2 (2.35). Session ratings ranged from a low of 1.80 to a high of 3, with most sessions rating above 2.20. Average coverage varied by lesson: lesson 1 had the highest rating (2.92), and lesson 7 had the lowest (2.02). Coverage ratings also varied by group leader, ranging from 2.21 to 2.65, but all implementers were in the acceptable range of fidelity.

The average quality rating was 2.37 out of 3, with average session quality improving from 2.31 in year 1 to 2.45 in year 2. Quality ratings for the 26 sessions analyzed ranged from 1.86 to 3, with most sessions rating 2.14 or higher. Lesson 2 had the highest average quality rating (2.61), and lesson 9 had the lowest (2.14). Quality also varied by group leader, ranging from 2.31 to 2.59.

All group leaders except one teacher returned attendance data; that teacher did not return data for her four groups. Using the attendance data for the remaining twelve groups, we determined that students attended approximately 8 of the 10 lessons on average. Individual attendance varied widely, however, ranging from a low of one lesson to a high of all ten.

Child Outcomes

In the pilot study, we tracked the four main outcome variables over time within the immediate and delayed SSET groups (see Table 2). We also calculated “differences of differences” effect sizes, which reflect change over time in one group relative to the other. Between baseline and the first follow-up, while the immediate SSET group participated in the lessons, decreases in PTSD scores (treated ES = -.39, control ES = -.16, difference ES = -.23) and depression scores (treated ES = -.25, control ES = .07, difference ES = -.32) were more pronounced in the immediate group than in the comparison group. However, the difference between groups in change in parent-reported behavior problems was negligible (treated ES = -.39, control ES = -.28, difference ES = -.10). Teacher reports showed a small effect (treated ES = .006, control ES = .28, difference ES = -.28), with the immediate intervention group showing slight decreases and the delayed intervention group showing slight increases in teacher-reported behavior problems.
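The reported “differences of differences” follow a simple pattern: the control group's standardized change is subtracted from the treated group's, so -.39 - (-.16) = -.23 for PTSD. The text does not say which standard deviation was used to standardize each change. This Python sketch therefore assumes the pooled baseline SD of the two groups. Applied to the unimputed means in Table 2, it will not necessarily reproduce the reported values, which may have been computed on the imputed data.

```python
from math import sqrt


def pooled_sd(sd1: float, n1: int, sd2: float, n2: int) -> float:
    """Pooled standard deviation of two groups."""
    return sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def diff_in_diff_es(treated_pre, treated_post, control_pre, control_post, sd):
    """Standardized change in each group and the difference between them.

    `sd` is the standardizer (assumed here to be a pooled baseline SD).
    A negative difference means symptoms fell more in the treated group.
    """
    treated_es = (treated_post - treated_pre) / sd
    control_es = (control_post - control_pre) / sd
    return treated_es, control_es, treated_es - control_es


# PTSD means from Table 2; group sizes from Table 1 (39 immediate, 37 delayed)
sd = pooled_sd(10.37, 39, 10.00, 37)
print([round(v, 2) for v in diff_in_diff_es(17.46, 13.72, 19.41, 18.32, sd)])
```

Dividing both changes by the same standardizer keeps the difference interpretable as a single standardized effect.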

Table 2.

Main Outcome Measures by Intervention Group

| Measure / SSET group | Baseline (N=76), Mean (SD) | SSET delivered | First follow-up (N=76), Mean (SD) | SSET delivered | Second follow-up (N=66), Mean (SD) |
|---|---|---|---|---|---|
| PTSD self-report | | | | | |
| Delayed | 19.41 (10.00) | | 18.32 (11.31) | X | 15.59 (9.42) |
| Immediate | 17.46 (10.37) | X | 13.72 (8.43) | | 11.97 (9.07) |
| Depression self-report | | | | | |
| Delayed | 14.32 (9.20) | | 14.92 (9.02) | X | 12.91 (8.55) |
| Immediate | 13.87 (8.52) | X | 11.77 (7.90) | | 12.21 (9.79) |
| Parent-reported behavior problems | | | | | |
| Delayed | 12.46 (5.90) | | 11.30 (5.84) | X | 8.91 (6.08) |
| Immediate | 11.64 (5.80) | X | 9.72 (4.94) | | 8.41 (4.91) |
| Teacher-reported behavior problems | | | | | |
| Delayed | 8.59 (7.37) | | 9.30 (7.98) | X | 9.19 (6.86) |
| Immediate | 11.33 (7.87) | X | 10.28 (6.95) | | 10.47 (5.75) |

During the period between the first and second follow-ups, when the delayed SSET group participated in the lessons and the immediate group did not, scores in the immediate SSET group stayed about the same, while the delayed group showed some decrease in self-reported PTSD and depression scores and in parent-reported behavior problems (see Table 2). Teacher-reported behavior problems changed very little during this period.

The regression analyses of depression and PTSD scores at the first follow-up, controlling for baseline scores, revealed a significant intervention group effect for depression (Estimate = 0.65; T = -1.99, p = .046) and a non-significant trend for PTSD (Estimate = 0.58; T = -1.89, p = .058). These estimates of the intervention effect remained stable, with comparable levels of significance, when school or group leader was controlled as a fixed effect. Neither teacher nor parent reports of behavior problems showed a significant intervention effect (T = -0.19 and T = -1.22, respectively). Because we conducted four separate outcome tests, these results must be evaluated in light of the experimentwise error rate.

Next, we examined impact among children in the high-symptoms group for each measure (see Table 3). Among those in the high-symptoms groups, intervention effects were more pronounced, with a 10-point reduction in PTSD symptoms, a 5-point reduction in depressive symptoms, and a 5-point reduction in behavioral problems in the immediate intervention group between baseline and the first follow-up assessment, although the delayed intervention group also showed more modest reductions over the same period. In contrast, in the low-symptoms groups, we observed little or no change across time in either group (see Table 4).

Table 3.

Main Outcome Measures by Intervention Group for High-Symptoms Groups

| | Baseline N | Baseline Mean | Baseline SD | SSET groups | First Follow Up N | First Follow Up Mean | First Follow Up SD | SSET groups | Second Follow Up N | Second Follow Up Mean | Second Follow Up SD |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PTSD self-report | | | | | | | | | | | |
| Delayed | 20 | 26.50 | 7.37 | | 20 | 24.30 | 11.02 | X | 18 | 19.83 | 9.34 |
| Immediate | 19 | 26.32 | 6.38 | X | 19 | 15.58 | 9.22 | | 15 | 13.60 | 9.29 |
| Depression self-report | | | | | | | | | | | |
| Delayed | 16 | 23.19 | 6.71 | | 16 | 22.00 | 6.82 | X | 13 | 20.23 | 7.50 |
| Immediate | 21 | 20.05 | 6.38 | X | 21 | 15.00 | 7.50 | | 19 | 15.84 | 9.67 |
| Parent reported behavior problems | | | | | | | | | | | |
| Delayed | 9 | 20.11 | 2.89 | | 9 | 17.00 | 5.77 | X | 7 | 13.43 | 6.68 |
| Immediate | 6 | 22.67 | 3.20 | X | 6 | 14.67 | 6.50 | | 6 | 12.17 | 6.82 |
| Teacher reported behavior problems | | | | | | | | | | | |
| Delayed | 5 | 23.80 | 5.93 | | 5 | 17.80 | 13.07 | X | 5 | 15.00 | 8.34 |
| Immediate | 13 | 20.46 | 4.54 | X | 13 | 15.85 | 6.32 | | 11 | 14.36 | 5.46 |
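The baseline-to-first-follow-up changes behind the high-symptoms figures can be read off Table 3. The Python sketch below is a descriptive illustration, not the authors' analysis: it subtracts the delayed group's change from the immediate group's change, a simple difference-in-differences on group means. Cell sizes are small (as few as 5 students for teacher reports), baseline means differ between groups, and no uncertainty is computed, so the output should be read cautiously. Names are illustrative.

```python
# Table 3 (high-symptoms groups): (baseline mean, first follow-up mean).
# Only the immediate group received SSET between these two assessments.
HIGH_SYMPTOMS = {
    "PTSD self-report": {"Immediate": (26.32, 15.58), "Delayed": (26.50, 24.30)},
    "Depression self-report": {"Immediate": (20.05, 15.00), "Delayed": (23.19, 22.00)},
    "Parent reported behavior problems": {"Immediate": (22.67, 14.67), "Delayed": (20.11, 17.00)},
    "Teacher reported behavior problems": {"Immediate": (20.46, 15.85), "Delayed": (23.80, 17.80)},
}


def diff_in_diff(immediate, delayed):
    """Return (immediate change, delayed change, difference between them).

    Each change is follow-up minus baseline, so negative values mean fewer
    symptoms; a negative difference means the immediate group improved more.
    """
    imm_change = immediate[1] - immediate[0]
    del_change = delayed[1] - delayed[0]
    return round(imm_change, 2), round(del_change, 2), round(imm_change - del_change, 2)


if __name__ == "__main__":
    for measure, groups in HIGH_SYMPTOMS.items():
        imm, dly, did = diff_in_diff(groups["Immediate"], groups["Delayed"])
        print(f"{measure}: immediate {imm:+.2f}, delayed {dly:+.2f}, difference {did:+.2f}")
```

On these means, immediate-group PTSD scores fall by about 10.7 points, compared with 2.2 points in the delayed group. For teacher reports (5 delayed and 13 immediate students), however, the delayed group's 6.0-point drop exceeds the immediate group's 4.6. This shows how unstable such small cells can be.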

Table 4.

Main Outcome Measures by Intervention Group for Low-Symptoms Groups

| | Baseline N | Baseline Mean | Baseline SD | SSET groups | First Follow Up N | First Follow Up Mean | First Follow Up SD | SSET groups | Second Follow Up N | Second Follow Up Mean | Second Follow Up SD |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PTSD self-report | | | | | | | | | | | |
| Delayed | 17 | 11.06 | 4.85 | | 17 | 11.29 | 6.82 | X | 14 | 10.14 | 11.29 |
| Immediate | 20 | 9.05 | 4.86 | X | 20 | 11.95 | 7.42 | | 19 | 10.68 | 11.95 |
| Depression self-report | | | | | | | | | | | |
| Delayed | 21 | 7.57 | 2.82 | | 21 | 9.52 | 6.39 | X | 19 | 7.89 | 4.83 |
| Immediate | 18 | 6.67 | 3.51 | X | 18 | 8.00 | 6.73 | | 15 | 7.60 | 8.08 |
| Parent reported behavior problems | | | | | | | | | | | |
| Delayed | 28 | 10.00 | 4.27 | | 28 | 9.46 | 4.62 | X | 25 | 7.64 | 5.38 |
| Immediate | 33 | 9.64 | 3.38 | X | 33 | 8.82 | 4.12 | | 28 | 7.61 | 4.12 |
| Teacher reported behavior problems | | | | | | | | | | | |
| Delayed | 32 | 6.22 | 3.93 | | 32 | 7.97 | 6.19 | X | 27 | 8.11 | 6.14 |
| Immediate | 26 | 6.77 | 4.38 | X | 26 | 7.50 | 5.49 | | 23 | 8.61 | 4.98 |

Satisfaction

Of the 76 parents with children participating in SSET, 49 (64%) completed the parent satisfaction survey. Their individual satisfaction scores were averaged to create an overall satisfaction score of 4.50 out of 6, indicating that parent satisfaction was between “very good” and “excellent.” Individual scores did show some variability, but no parent was dissatisfied with SSET. The lowest score was 2.85, rating the intervention between “fair” and “good,” and the majority of parents rated the intervention “very good” or better.

Child satisfaction was high as well. Of the 76 students who participated in SSET, 64 (84%) filled out a satisfaction survey. More than half of these respondents (35 students) had satisfaction scores between “mostly true” and “very true,” and the overall average child satisfaction score was 2.52 out of 3, in the middle of that range. As with parent satisfaction, individual scores varied widely, from a low of 1.41 to a high of 3.00.
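The response rates and proportions in this section follow directly from the counts given. The small Python check below reproduces them. The helper name `pct` is illustrative. The check uses the 76 SSET participants as the denominator for both surveys, as the text states, and the 64 child respondents for the "more than half" figure.

```python
N_PARTICIPANTS = 76  # students (and their parents) in SSET, per the text


def pct(part: int, whole: int, digits: int = 1) -> float:
    """Percentage of `whole` represented by `part`, rounded for reporting."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return round(100 * part / whole, digits)


parent_response = pct(49, N_PARTICIPANTS)  # parent satisfaction surveys returned
child_response = pct(64, N_PARTICIPANTS)   # child satisfaction surveys returned
child_high = pct(35, 64)                   # respondents between "mostly true" and "very true"

if __name__ == "__main__":
    print(parent_response, child_response, child_high)  # 64.5 84.2 54.7
```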

Discussion

A primary aim of this pilot study was to establish the feasibility of implementing a trauma-focused intervention for children using school counselors and teachers rather than scarce specialized clinical mental health staff. Our findings indicate that the program itself does appear both feasible and acceptable. Parents and children reported a high degree of satisfaction with SSET. All four school teachers implementing SSET continued with the project for the full two years, running four groups each, and our data showed that they were able to deliver the program as intended, with good quality and a high degree of fidelity to the manual. This fidelity allows us to examine the outcomes of this small pilot study with some confidence; without it, our outcome data would not be readily interpretable. On the other hand, tracking students' attendance and compliance was a challenge for one of the teachers, who did not turn in her tracking forms. This could indicate that program implementation is burdensome, particularly when conducted within a research project that places extra demands on implementers.

The main obstacle to implementation in this project involved securing informed consent at two stages and screening children for violence exposure and PTSD symptoms. Uptake was low at the first consent stage, where only 27% of students agreed to participate in the screening, but the subsequent rate of screening into the program was high (193/383, or 50%). Parents may have been doing some self-selection as they decided whether to participate in the project, as suggested by this high screen-in rate compared to our earlier CBITS work (Jaycox et al., 2002; Stein et al., 2003). Securing consent for the second stage, the SSET groups, was also challenging. In this low-SES population, it was extremely difficult to contact parents and to get signed consent forms returned, even when parents appeared to want their child to participate. Besides raising issues for implementation in future work, the reduction in sample size during the consent process means that the results of this study may not generalize to the full group of students who would be eligible for such a program.
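The screening figures can be laid out as a simple funnel. The Python sketch below uses only counts stated in this paper: 383 students screened, 193 screened in, and the 76 who went on to participate in SSET (from the Satisfaction section). The 27% first-stage consent rate is left out because its denominator is not given here. Stage labels and the function name are illustrative.

```python
# (stage, number of students) in the order students moved through the study.
FUNNEL = [
    ("screened", 383),
    ("screened in", 193),
    ("participated in SSET", 76),
]


def stage_conversion(funnel):
    """Percentage of students at each stage who reached the next stage."""
    return [
        (f"{prev_stage} -> {stage}", round(100 * n / prev_n, 1))
        for (prev_stage, prev_n), (stage, n) in zip(funnel, funnel[1:])
    ]


if __name__ == "__main__":
    for step, rate in stage_conversion(FUNNEL):
        print(f"{step}: {rate}%")
```

About 39% of screened-in students ended up in a group. This fits the point that the second consent stage was also challenging, although the paper does not attribute every drop between these stages to consent.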

In this project, research staff led the consent and screening efforts. Some of the burden of the consent process was related to the research itself (detailed informed consent forms and requirements), but even without the research, school personnel would need to obtain some form of parental permission for both screening and the SSET program. Thus, these implementation challenges remain, particularly if consent and screening are to be conducted by school personnel without help from a research team. In such cases, it would likely help to incorporate these procedures into existing school routines; for instance, permission for screening might be included with the regular beginning-of-the-school-year forms. In regions with less poverty and daily stress, obtaining consent may be less difficult.

We found that the intervention may have small effects on self-reported PTSD and depressive symptoms, as well as on parent reported behavior problems, but we did not observe any effect on teacher reported behavior problems. In our earlier CBITS work, we found larger effects (1.08 for PTSD and .45 for depression; Stein et al., 2003) and, as here, no changes in teacher reported behavioral problems. However, in the SSET program several of the more clinical elements were removed to ease administration, and the individual sessions were eliminated, so smaller effects would be expected. Null findings in a small pilot cannot be considered conclusive, but the lack of effects on teacher reported behavior is important, and behavioral issues will need attention in the further development and refinement of the SSET program.
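The CBITS effect sizes cited above are standardized mean differences. The Python sketch below shows one common definition of such a figure: Cohen's d with a pooled standard deviation. The exact formula used by Stein et al. (2003) is not stated here and may differ, for example by adjusting for baseline scores. The inputs in the example are hypothetical, not study data.

```python
import math


def cohens_d(mean1: float, sd1: float, n1: int, mean2: float, sd2: float, n2: int) -> float:
    """Standardized mean difference (group 1 minus group 2) using the pooled SD."""
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (mean1 - mean2) / math.sqrt(pooled_var)


def cohen_label(d: float) -> str:
    """Cohen's conventional benchmarks: 0.2 small, 0.5 medium, 0.8 large."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "negligible"


if __name__ == "__main__":
    # Hypothetical groups: comparison mean 10, intervention mean 8, SD 2, n = 20 each.
    d = cohens_d(10.0, 2.0, 20, 8.0, 2.0, 20)
    print(d, cohen_label(d))  # 1.0 large
```

On these benchmarks, the CBITS PTSD effect (1.08) is large and the depression effect (.45) falls just below medium. These values set the reference point for expecting smaller effects from the pared-down SSET program.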

Examination of students with high symptoms showed more pronounced reductions, with a clear pattern of improvement for those in the immediate SSET group. Students with low symptoms showed little change, perhaps because of a floor effect: given the stressors of their environment, many students in this vulnerable population would not be expected to score much lower than the levels considered moderate here. Whether it is worthwhile for these students to participate in SSET is unclear. Over time, some of them will undoubtedly face additional stressors and exposure to violence, and the skills they learned in SSET could prove useful, but without longer-term follow-up data we cannot address this question. More research with a longer-term follow-up design is needed to decide whether students with lower baseline symptoms should be included.

Our project served students from lower socio-economic backgrounds, most of them Latino, the largest ethnic group in Los Angeles schools. As national attention turns to racial disparities in health care (Department of Health and Human Services, 2000, 2001), the Surgeon General has raised concerns about access to mental health services for minority children, including Latinos (U.S. Public Health Service, 2000). Delivering mental health services through the school system can address key financial and structural barriers that often prevent Latinos from receiving needed services (Garrison, Roy, & Azar, 1999).

Data from the current pilot show small short-term changes among those who participated in the program, but we did not examine longer-term effects. The second follow-up assessments suggest that gains made during the intervention were sustained, but we do not know whether they would persist over a longer period, or what downstream impact the program might have on functional as well as symptom outcomes. Our use of a wait-list control group in this pilot controls for the passage of time, but not for the attention and support offered to students. Future work should compare SSET to an active control condition that offers support to students without teaching the specific skills that are core elements of SSET.

Other limitations include the specific sample targeted in this project, namely ethnic minority students from inner-city schools; hence, the results may not generalize to other types of schools or students. Finally, if a program such as SSET is to be shown to be truly effective and valuable to school administrators, it will need to show clear links to improved school behavior and performance in addition to the mental health and behavioral outcomes assessed here.

This study demonstrated that the SSET program can be implemented successfully by teachers and school counselors, as shown by good coverage of lesson elements and high lesson quality. Although student attendance and compliance data were incomplete, the data that were collected showed that most students attended regularly and participated in the program, and both students and parents reported good to high satisfaction with the program. Pilot data show small reductions in symptoms among students in the SSET program. Overall, SSET appears to be a feasible approach for addressing violence-related PTSD and depression in low-income, urban students. Given the paucity of programs developed for use by school personnel, SSET meets a significant need and provides a model for a feasible, acceptable, and, pending further research, potentially effective approach. Evaluating the effectiveness of SSET is clearly warranted and is a crucial next step in the program's development.

Acknowledgments

The authors wish to acknowledge the contributions of a large team: Steven Evans, Phyllis Ellickson, Sheryl Kataoka (consultation), Dan McCaffrey (randomization), Fernando Cadavid (student screening and student and teacher surveys), Barbara Colwell, Roberta Bernstein, Pia Escudero (LAUSD school logistics), Diana Polo Huizar (parent interviews), Stefanie Stern (focus groups and adherence ratings), Anita Chandra (qualitative interviews), Taria Francois (data entry & manuscript preparation), Suzanne Blake, Daryl Narimatsu (school principals), and Patricia Fuentes-Gamboa, Yvette Landeros, Lajuana Worship, Maria Sanchez (SSET implementers). This project was supported by a grant from the National Institute of Mental Health (R01MH072591). The SSET manual is available upon request from the first author.

Footnotes

Missing values on the student assessment total scores reported in this paper ranged from none to nine, depending on the time point. Parent and teacher assessments had more missing values: 20–27 for teacher reports and 16–24 for parent reports across the time points.

References

- Allensworth D, Lawson E, Nicholson L, Wyche J. Schools and Health: Our Nation's Investment. Washington, DC: National Academy Press; 1997.

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, Fourth Edition (DSM-IV). Washington, DC: Author; 1994.

- Cohen J. Statistical Power Analysis for the Behavioral Sciences. 2nd ed. Hillsdale, NJ: Lawrence Erlbaum Associates; 1998.

- Cooper J. The federal case for school-based mental health services and supports. Journal of the American Academy of Child and Adolescent Psychiatry. 2008;47(1):4–8. doi: 10.1097/chi.0b013e31815aac71.

- Delaney-Black V, Covington C, Ondersma SJ, Nordstrom-Klee B, Templin T, Ager J, et al. Violence exposure, trauma, and IQ and/or reading deficits among urban children. Archives of Pediatrics and Adolescent Medicine. 2002;156(3):280–285. doi: 10.1001/archpedi.156.3.280.

- Department of Health and Human Services. Healthy People 2010: Understanding and Improving Health and Objectives for Improving Health. 2nd ed. Vol. 2. Washington, DC: U.S. Government Printing Office; 2000.

- Department of Health and Human Services. Mental Health: Culture, Race, and Ethnicity. A Supplement to Mental Health: A Report of the Surgeon General. Rockville, MD: Substance Abuse and Mental Health Services Administration, Center for Mental Health Services, National Institutes of Health, National Institute of Mental Health; 2001.

- Farrell AD, Bruce SE. Impact of exposure to community violence on violent behavior and emotional distress among urban adolescents. Journal of Clinical Child Psychology. 1997;26(1):2–14. doi: 10.1207/s15374424jccp2601_1.

- Finch AJ Jr, Saylor CF, Edwards GL. Children's Depression Inventory: Sex and grade norms for normal children. Journal of Consulting and Clinical Psychology. 1985;53(3):424–425. doi: 10.1037//0022-006x.53.3.424.

- Finkelhor D. The victimization of children: A developmental perspective. American Journal of Orthopsychiatry. 1995;65(2):177–193. doi: 10.1037/h0079618.

- Finkelhor D, Dziuba-Leatherman J. Children as victims of violence: A national survey. Pediatrics. 1994;94(4 Pt 1):413–420.

- Foa EB, Treadwell K, Johnson K, Feeny NC. The Child PTSD Symptom Scale: A preliminary examination of its psychometric properties. Journal of Clinical Child Psychology. 2001;30(3):376–384. doi: 10.1207/S15374424JCCP3003_9.

- Garrison EG, Roy IS, Azar V. Responding to the mental health needs of Latino children and families through school-based services. Clinical Psychology Review. 1999;19:199–219. doi: 10.1016/s0272-7358(98)00070-1.

- Goodman R. The Strengths and Difficulties Questionnaire: A research note. Journal of Child Psychology and Psychiatry. 1997;38:581–586. doi: 10.1111/j.1469-7610.1997.tb01545.x.

- Goodman R. The extended version of the Strengths and Difficulties Questionnaire as a guide to child psychiatric caseness and consequent burden. Journal of Child Psychology and Psychiatry. 1999;40:791–801.

- Goodman R, Meltzer H, Bailey V. The Strengths and Difficulties Questionnaire: A pilot study on the validity of the self-report version. European Child and Adolescent Psychiatry. 1998;7:125–130. doi: 10.1007/s007870050057.

- Goodman R, Scott S. Comparing the Strengths and Difficulties Questionnaire and the Child Behavior Checklist: Is small beautiful? Journal of Abnormal Child Psychology. 1999;27:17–24. doi: 10.1023/a:1022658222914.

- Grogger J. Local violence and educational attainment. The Journal of Human Resources. 1997:659–682.

- Hoagwood K, Erwin HD. Effectiveness of school-based mental health services for children: A 10-year research review. Journal of Child & Family Studies. 1997;6(4):435–451.

- Hurt H, Malmud E, Brodsky NL, Giannetta J. Exposure to violence: Psychological and academic correlates in child witnesses. Archives of Pediatrics & Adolescent Medicine. 2001;155:1351–1356. doi: 10.1001/archpedi.155.12.1351.

- Jaycox L. Cognitive-Behavioral Intervention for Trauma in Schools. Longmont, CO: Sopris West Educational Services; 2003.

- Jaycox LH, Kataoka SH, Stein BD, Wong M, Langley A. Responding to the needs of the community: A stepped care approach to implementing trauma-focused interventions in schools. Report on Emotional and Behavioral Disorders in Youth. 2005;5(4):85–88, 100–103.

- Jaycox LH, Langley AK. Support for Students Exposed to Trauma: The SSET Program. Lesson Plans, Worksheets, and Materials. 2005. Unpublished manual.

- Jaycox LH, Morse LK, Tanielian T, Stein BD. How Schools Can Help Students Recover from Traumatic Experiences: A Tool-Kit for Supporting Long-term Recovery. Technical Report TR-413. Santa Monica, CA: RAND Corporation; 2006. Available online: http://www.rand.org/pubs/technical_reports/TR413/

- Jaycox LH, Stein BD, Amaya-Jackson LM. School-based treatment for children and adolescents. In: Foa E, Keane TM, Friedman MJ, Cohen JA, editors. Effective Treatments for PTSD: Practical Guidelines from the International Society for Traumatic Stress Studies. 2nd ed. Guilford Publications; in press.

- Jaycox LH, Stein BD, Amaya-Jackson LM, Morse LK. School-based interventions for child traumatic stress. In: Evans SW, Weist M, Serpell Z, editors. Advances in School-Based Mental Health Interventions, Volume 2. Kingston, NJ: Civic Research Institute; 2007. pp. 16-1–16-19.

- Jaycox LH, Stein BD, Kataoka S, Wong M, Fink A, Escudero P, et al. Violence exposure, PTSD, and depressive symptoms among recent immigrant school children. Journal of the American Academy of Child and Adolescent Psychiatry. 2002;41(9):1104–1110. doi: 10.1097/00004583-200209000-00011.

- Jaycox LH, Tanielian T, Sharma P, Morse L, Clum G, Stein BD. Schools' mental health responses following Hurricanes Katrina and Rita. Psychiatric Services. 2007;58(10):1339–1343. doi: 10.1176/ps.2007.58.10.1339.

- Kataoka S, Stein BD, Jaycox LH, Wong M, Escudero P, Tu W, et al. A school-based mental health program for traumatized Latino immigrant children. Journal of the American Academy of Child and Adolescent Psychiatry. 2003;42(3):311–318. doi: 10.1097/00004583-200303000-00011.

- Kendall PC, Cantwell DP, Kazdin AE. Depression in children and adolescents: Assessment issues and recommendations. Cognitive Therapy and Research. 1989;13(2):109–146.

- Klaus P, Rennison CM. Age patterns in violent victimization, 1976-2000 (NCJ 190104). Washington, DC: U.S. Department of Justice, Office of Justice Programs, Bureau of Justice Statistics; 2002.

- Kliewer W, Lepore SJ, Oskin D, Johnson PD. The role of social and cognitive processes in children's adjustment to community violence. Journal of Consulting & Clinical Psychology. 1998;66(1):199–209. doi: 10.1037//0022-006x.66.1.199.

- Kovacs M. Rating scales to assess depression in school-aged children. Acta Paedopsychiatr. 1981;46(5-6):305–315.

- Langley AK, Jaycox LH, Dean KL. SSET: Group Leader Training Manual. 2005. Unpublished manual.

- Martinez P, Richters JE. The NIMH Community Violence Project: II. Children's distress symptoms associated with violence exposure. Psychiatry: Interpersonal and Biological Processes. 1993;56(1):22–35. doi: 10.1080/00332747.1993.11024618.

- Osofsky JD. Prevalence of children's exposure to domestic violence and child maltreatment: Implications for prevention and intervention. Clinical Child and Family Psychology Review. 2003;6(3):161–170. doi: 10.1023/a:1024958332093.

- Osofsky JD, Wewers S, Hann DM, Fick AC. Chronic community violence: What is happening to our children? Psychiatry. 1993;56(1):36–45. doi: 10.1080/00332747.1993.11024619.

- Overstreet S. Exposure to community violence: Defining the problem and understanding the consequences. Journal of Child & Family Studies. 2000;9(1).

- Putnam FW. Dissociation in Children and Adolescents: A Developmental Perspective. New York: Guilford; 1997.

- Pynoos RS, Steinberg AM, Goenjian A. Traumatic stress in childhood and adolescence: Recent developments and current controversies. In: van der Kolk BA, McFarlane AC, Weisaeth L, editors. Traumatic stress: The effects of overwhelming experience on mind, body, and society. New York: Guilford Press; 1996. pp. 331–358.

- Ruchkin V, Henrich CC, Jones SM, Vermeiren R, Schwab-Stone M. Violence exposure and psychopathology in urban youth: The mediating role of posttraumatic stress. Journal of Abnormal Child Psychology. 2007;35(4):578–593. doi: 10.1007/s10802-007-9114-7.

- Schwab-Stone ME, Ayers TS, Kasprow W, Voyce C, Barone C, Shriver T, et al. No safe haven: A study of violence exposure in an urban community. Journal of the American Academy of Child & Adolescent Psychiatry. 1995;34(10):1343–1352. doi: 10.1097/00004583-199510000-00020.

- Singer MI, Anglin TM, Song LY, Lunghofer L. Adolescents' exposure to violence and associated symptoms of psychological trauma. Journal of the American Medical Association. 1995;273(6):477–482.

- Singer MI, Miller DB, Guo S, Slovak K, Frierson T. The Mental Health Consequences of Children Exposed to Violence: Final Report. Cleveland, OH: Case Western Reserve University; 1998.

- Stein BD, Jaycox LH, Kataoka S, Rhodes HJ, Vestal KD. Prevalence of child and adolescent exposure to community violence. Clinical Child and Family Psychology Review. 2003;6(4):247–264. doi: 10.1023/b:ccfp.0000006292.61072.d2.

- Stein BD, Jaycox LH, Kataoka S, Wong M, Tu W, Elliott M, et al. A mental health intervention for school children exposed to violence. Journal of the American Medical Association. 2003;290:603–611. doi: 10.1001/jama.290.5.603.

- Stein BD, Zima BT, Elliott MN, Burnam MA, Shahinfar A, Fox NA, et al. Violence exposure among school-age children in foster care: Relationship to distress symptoms. Journal of the American Academy of Child and Adolescent Psychiatry. 2001;40(5):588–594. doi: 10.1097/00004583-200105000-00019.

- U.S. Public Health Service. Report of the Surgeon General's Conference on Children's Mental Health: A National Action Agenda. Washington, DC: Department of Health and Human Services; 2000.