Abstract

Background

Cultural competency is an important part of medical policy and practice, yet the evidence base for the effectiveness of training in this area is weak. One reason is the lack of valid, reliable, and feasible tools to measure knowledge, skill, and attitudes before and/or after cultural training. Given that cultural competency is a critical aspect of “professionalism” and “interpersonal and communication skills,” such a tool would help residency programs assess the impact of cultural training.

Objectives

The aim of this study is to enhance the feasibility and extend the validity of a tool to assess cultural competency in resident physicians. The work contributes to efforts to evaluate resident preparedness for working with diverse patient populations.

Method

Eighty-four residents (internal medicine, psychiatry, obstetrics-gynecology, and surgery) completed the Cross-Cultural Care Survey (CCCS) to assess their self-reported knowledge, skill, and attitudes regarding the provision of cross-cultural care. Analyses included descriptive statistics, factor analysis, internal consistency testing, and validity testing using bivariate correlations.

Results

Feasibility of the CCCS was demonstrated by reduced survey completion time and ease of administration, and the survey reliably measured knowledge, skill, and attitudes for providing cross-cultural care. Resident characteristics and amount of postgraduate training related differently to each of the 3 subscales of the CCCS.

Conclusions

Our study confirmed that the CCCS is a reliable and valid tool to assess baseline attitudes of cultural competency across specialties in residency programs. Implications of the subscale scores for designing training programs are discussed.

Background

Cultural competency education is a requirement of medical schools and residency programs in the United States.1,2 Mandates from the Liaison Committee on Medical Education and the Accreditation Council for Graduate Medical Education (ACGME) to include cultural content in the curriculum and to measure its efficacy have prompted attempts to improve the reliability, validity, and feasibility of cultural competency measures designed for this purpose. Until recently, these efforts were hampered by the lack of a standardized, validated tool specifically designed to assess the impact of cultural training in medicine.3

Cultural competency is relevant to 2 of the ACGME competencies: professionalism and interpersonal and communication skills.2 The ACGME requires that residents demonstrate compassion, respect, and responsiveness to patient needs, regardless of the patient's gender, age, culture, race, religion, disability, or sexual orientation. Some specialties, such as psychiatry, have detailed and specific program requirements regarding cultural competency.4

Acquiring cultural competence requires a multifaceted learning process. The medical education literature5,6 supports training that addresses varying learning styles and varying levels of competence at program entry. This complexity is reflected in cultural competence models that emphasize a tripartite assessment of knowledge, skill, and awareness or attitudes (KSAs).7 Training programs that integrate multiple opportunities for building cultural competence are presumed to be effective.8

To date there has been only limited research on the design and impact of cultural competency training.9 Much of the literature is descriptive in nature, and studies report mixed results. Self-assessments typically reflect significant increases in knowledge, attitudes, and awareness after cultural training is provided, while more objective behavior-based measures have yielded findings indicating varying degrees of effectiveness. Only a few studies10 have examined the impact of training on patient behavior change or health outcomes.

One challenge in evaluating the impact of cultural training is the lack of a standardized and validated assessment tool.3 Although one of the most commonly accepted definitions of culture is broad and includes race, ethnicity, gender, sexual orientation, religion, and limited English proficiency,11 most existing measures assess only competencies related to race and ethnicity.12,13 One measure developed to assess the impact of cultural training is the Cross-Cultural Care Survey (CCCS),14 which assesses resident preparedness to treat diverse patient populations. Initial reliability and validity of the CCCS have been documented.15 Unlike most measures, which focus primarily on attitudes, the CCCS is a multidimensional tool that assesses knowledge (preparedness), skill, attitudes, and the quantity of cultural content integrated into a resident training program. Our primary goal was to enhance the feasibility of this tool and to provide further support for its validity and reliability in assessing cultural competency among residents across specialties.

Methods

The study was conducted in 2009. We received permission from Elyse Park, PhD, to amend the original CCCS. Dr Park also provided comments on the revised version of our survey and was aware of our study goals. The study was granted an “exclusion” from the Institutional Review Board process (CHS No. 15822) by the Committee on Human Studies of the University of Hawaii at Manoa. The protocol was submitted to the Research and Institutional Review Committee of the Queen's Medical Center and received approval via an expedited process (RA-2008-015). The study's findings and conclusions do not necessarily represent the views of the Queen's Medical Center.

CCCS Measure Modifications

To further validate the survey and potentially expand its use beyond residents, we modified the measure to enhance its feasibility, or “user-friendliness.” During piloting of the initial version of the CCCS, the response rate was low, and many responses were missing or incomplete. Based on the pilot results, we eliminated questions while keeping the core content intact; these edits reduced completion time from 20 minutes to an average of 5 minutes.16 We also modified the subscales of the CCCS to align them with the current knowledge/skill/attitudes model of cultural competency. We added CCCS items representing the attitude component of cultural competence and reran the factor analysis to verify the stability of the new subscale and to ensure that it represented a separate dimension rather than duplicating the content of the original subscales. The components of the CCCS and related variables are described next.

To assess knowledge, the survey includes items on residents' perceived preparedness to care for various types of patients or pediatric patients' families (response choices are 1, “very unprepared”; 2, “somewhat unprepared”; 3, “somewhat prepared”; 4, “well prepared”; and 5, “very well prepared”). These items refer to patients from cultures different from the resident's own, patients with health beliefs at odds with Western medicine, patients with religious beliefs that might affect treatment, and patients with limited English proficiency. To assess skill, the survey asks residents to rate their skill in performing selected tasks or services believed to be useful in treating culturally diverse patients or pediatric patients' families (scored from 1, “not at all skillful,” to 5, “very skillful”). These items refer to addressing patients from different cultures, assessing patients' understanding of their illness, negotiating treatment plans, and working with interpreters. Three attitude items from the supplemental items of the CCCS were added to the multidimensional CCCS tool. These items were scored on a Likert-like scale ranging from 1, “not at all important,” to 4, “very important.”
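As an illustration of how these response scales translate into numbers, the following minimal Python sketch codes the preparedness labels quoted above as 1 to 5 and averages a resident's coded items into a subscale score. The label codes and scale ranges come from the text; scoring a subscale as the mean of its items is an assumption here (the text explicitly uses a mean score only for the postgraduate training section), and the names `PREPAREDNESS_CODES`, `code_preparedness`, and `subscale_mean` are hypothetical.

```python
from statistics import mean

# Numeric codes for the preparedness (knowledge) response labels given in the text.
PREPAREDNESS_CODES = {
    "very unprepared": 1,
    "somewhat unprepared": 2,
    "somewhat prepared": 3,
    "well prepared": 4,
    "very well prepared": 5,
}

# Valid score ranges per subscale, taken from the scale endpoints in the text.
KNOWLEDGE_RANGE = (1, 5)  # "very unprepared" .. "very well prepared"
SKILL_RANGE = (1, 5)      # "not at all skillful" .. "very skillful"
ATTITUDE_RANGE = (1, 4)   # "not at all important" .. "very important"


def code_preparedness(labels):
    """Convert preparedness response labels (case-insensitive) to 1-5 codes."""
    return [PREPAREDNESS_CODES[label.strip().lower()] for label in labels]


def subscale_mean(scores, score_range):
    """Hypothetical scoring rule: mean of one resident's item scores on a subscale."""
    low, high = score_range
    if not scores:
        raise ValueError("no item scores supplied")
    for s in scores:
        if not low <= s <= high:
            raise ValueError(f"score {s} outside {low}-{high}")
    return mean(scores)


# Usage: one resident's answers to three (hypothetical) knowledge items.
knowledge = subscale_mean(
    code_preparedness(["somewhat prepared", "well prepared", "very well prepared"]),
    KNOWLEDGE_RANGE,
)
```

Range checking is included because the attitude items use a 4-point scale while the other two subscales use 5 points, so a miscoded 5 on an attitude item is easy to introduce when entering data.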

Participants

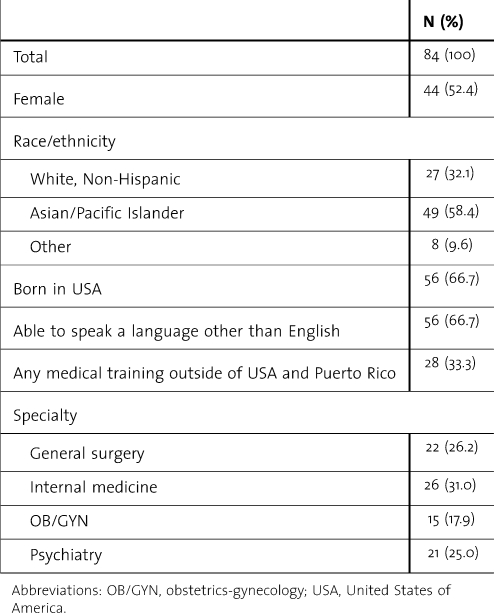

The sample consisted of 84 residents in 4 specialties from a community-based hospital with a university affiliation. Participants' characteristics are displayed in Table 1. Residents' social and demographic characteristics were collected along with the CCCS. To further study the validity of the CCCS, we assessed resident characteristics expected to relate to level of cultural competence: (1) whether residents were able to treat patients who speak a language other than English, and (2) whether they were born in the United States or another country. An additional section asked participants about the extent of cross-cultural care training beyond medical school; the mean score of its 10 items (α = .93) was used to reflect the quantity of cross-cultural postgraduate training. Residents also reported how often they feel helpless when dealing with patients from other cultures, on a Likert-like scale ranging from “never” to “often.”

Table 1.

Demographic Characteristics of Residents Across 4 Specialties

Survey Procedure

We used the CCCS to assess residents in internal medicine, obstetrics-gynecology, psychiatry, and surgery at an academic institution that has affiliations with several community-based hospitals. Faculty from the 4 specialties distributed the survey and assisted with collection of the completed forms. Individual resident participation was strictly voluntary, and the forms contained no identifying information; specialty and program year were the only information requested.

Statistical Analysis

SPSS version 15 (SPSS Inc, Chicago, IL) was used to conduct the analyses. The psychometric properties of the revised 19-item measure were examined to determine whether the shorter, more clinically feasible version of the CCCS is as psychometrically rigorous as the original version. Factor analysis was used to establish a preliminary structure based on the KSA cultural competence model. Before conducting the factor analysis, we tested the suitability of creating subscales within the CCCS by using the Kaiser-Meyer-Olkin (KMO) test. The KMO measure of sampling adequacy was 0.902, indicating that the items in the CCCS share a high degree of common variance. Principal components analyses were then performed on the 19 items, with an oblique rotation to facilitate interpretation. Components with eigenvalues greater than 1 were retained, and items were retained if their component loadings and interitem correlations were greater than .3. All items loaded at .6 or higher on their respective factors, and there were no cross-loadings.
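To show what the KMO statistic measures, the sketch below implements the standard overall KMO formula: the sum of squared off-diagonal correlations divided by that same sum plus the sum of squared partial correlations, where each partial correlation is taken from the inverse of the correlation matrix. It is an independent pure-Python illustration, not the SPSS routine used in the study; the function names `invert` and `kmo` and the example matrix are hypothetical. Values near 1, such as the 0.902 reported above, mean partial correlations are small relative to raw correlations, that is, the items share common variance.

```python
import math


def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    # Build the augmented matrix [A | I] and reduce the left half to the identity.
    aug = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def kmo(corr):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy for a correlation matrix."""
    inv = invert(corr)
    n = len(corr)
    r2 = p2 = 0.0
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            # Partial correlation of items i and j, controlling for all other items.
            partial = -inv[i][j] / math.sqrt(inv[i][i] * inv[j][j])
            r2 += corr[i][j] ** 2
            p2 += partial ** 2
    return r2 / (r2 + p2)


# Hypothetical 3-item correlation matrix with every pair correlated at .5.
example = [[1.0, 0.5, 0.5], [0.5, 1.0, 0.5], [0.5, 0.5, 1.0]]
```

For this example matrix each partial correlation works out to 1/3, so `kmo(example)` equals 9/13, about 0.69; adding more items that share the same correlation shrinks the partials and pushes the value toward 1.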

To assess construct validity and to examine residents' KSAs regarding cultural competence, we used t tests to compare knowledge, skill, and attitude subscale scores across groups defined by resident characteristics and experiences. Bivariate correlation coefficients were also computed to examine associations among the 3 components and between the components and resident characteristics/experiences.

Results

Subscales and Structure of the CCCS

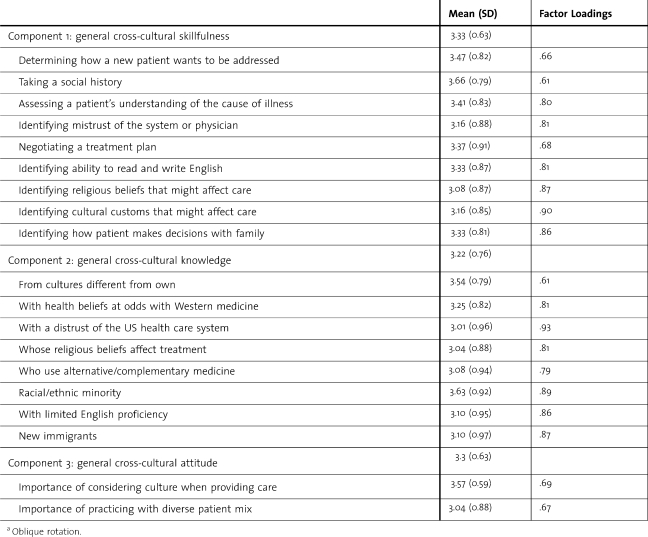

Consistent with the theoretical model, the factor analysis revealed 3 components (subscales) explaining 70% of the total variance (Table 2). Two subscales corresponded to the 2 original scales of the CCCS, and the 2 attitude items formed the new subscale. General cross-cultural skillfulness (skill) was the strongest factor, accounting for approximately 51% of the variance; the standardized Cronbach α indicated high internal consistency for its 9 items (α = .93). The second factor, general cross-cultural preparedness (knowledge), accounted for an additional 11% of the variance; the standardized Cronbach α for its 8 items indicated very high internal consistency (α = .94). The third factor, attitude toward culture (attitude), consisted of only 2 items but accounted for 8% of the variance; its standardized Cronbach α was adequate (α = .62). The revised CCCS thus can be used as 1 score or as 3 subscale scores.
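The standardized α values reported above depend only on the number of items k and their mean inter-item correlation r̄, through α = k·r̄ / (1 + (k − 1)·r̄). The sketch below implements that formula, the unstandardized (raw) Cronbach α computed from item scores, and the inverse relation that recovers r̄ from a reported α. The function names are hypothetical, and the study's own values came from SPSS rather than from this code.

```python
from statistics import variance


def standardized_alpha(corr):
    """Standardized Cronbach alpha from an item correlation matrix."""
    k = len(corr)
    if k < 2:
        raise ValueError("need at least 2 items")
    off_diag = [corr[i][j] for i in range(k) for j in range(k) if i != j]
    r_bar = sum(off_diag) / len(off_diag)  # mean inter-item correlation
    return k * r_bar / (1 + (k - 1) * r_bar)


def raw_alpha(items):
    """Unstandardized Cronbach alpha.

    `items` holds one list of scores per item, each in the same respondent order.
    """
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's total score
    item_var = sum(variance(scores) for scores in items)  # sample variances
    return k / (k - 1) * (1 - item_var / variance(totals))


def implied_mean_r(alpha, k):
    """Mean inter-item correlation implied by a standardized alpha for k items."""
    return alpha / (k - (k - 1) * alpha)
```

For the 2-item attitude subscale, `implied_mean_r(0.62, 2)` is about .45, so α = .62 corresponds to the two attitude items correlating at roughly .45. With only 2 items, standardized α reduces to the Spearman-Brown value 2r/(1 + r), which is one reason short subscales tend to show lower α than the 8- and 9-item subscales.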

Table 2.

Mean Scores and Principal Component Factor Analysis Loadings of the CCCS Among Residents Across 4 Medical Specialties

The means and standard deviations of the CCCS items by subscale are shown in Table 2 and illustrate participants' level of cultural competence. The knowledge and skill subscales were moderately associated (r = .66, P < .01). Attitude was positively correlated with skill (r = .23, P < .05) but was not associated with knowledge. These patterns further support the 3 subscales as relatively distinct components of cultural competence.
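Whether a bivariate correlation such as those above differs from zero is conventionally tested with t = r·√(n − 2) / √(1 − r²) on n − 2 degrees of freedom. The sketch below computes Pearson's r from raw scores and converts a reported r to that t statistic. The function names are hypothetical, and the worked figure assumes all 84 residents contributed to the correlation, which may not hold if some responses were missing.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length score lists."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two lists of equal length >= 3")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing whether a correlation differs from zero."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)
```

Under that assumption, `r_to_t(0.23, 84)` is about 2.14, above the two-tailed .05 critical value of roughly 1.99 for 82 degrees of freedom, which is in line with the reported P < .05 for the attitude-skill correlation.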

Cultural Competence of Residents

Resident Characteristics and Experiences

There were no differences in any component of cultural competence between US-born residents and those born in other countries. Residents reporting the ability to speak a second language had slightly higher perceived skill (M = 3.44 versus 3.1; t(54.78) = 2.23, P < .05) and a slightly more favorable attitude (M = 3.4 versus 3.11; t(54.84) = 2.05, P < .05) than monolingual English speakers.
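The fractional degrees of freedom reported above (54.78 and 54.84) are characteristic of Welch's t test, which does not assume equal group variances and estimates the degrees of freedom with the Welch-Satterthwaite approximation; SPSS reports this version in its "equal variances not assumed" row. Assuming that is the test used here, a minimal sketch follows. The name `welch_t` is hypothetical, and the p-value lookup, which requires the t distribution, is omitted to keep the example dependency-free.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's unequal-variance t statistic and Welch-Satterthwaite degrees of freedom."""
    va = variance(a) / len(a)  # squared standard error of group a's mean
    vb = variance(b) / len(b)  # squared standard error of group b's mean
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df
```

Because the degrees of freedom are estimated from the two groups' variances and sizes, they are generally not whole numbers, which is why values such as 54.78 appear instead of n₁ + n₂ − 2.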

Helplessness

As residents' knowledge of various cultural groups/populations increased, their level of helplessness when working with culturally diverse patients decreased (r = −.24, P < .05). In contrast, formal postgraduate training was positively related to helplessness (r = .21, P = .05): as the amount of reported training increased, the degree of helplessness also increased.

Quantity of Cultural Competence Training

The amount of postgraduate training did not relate to attitude toward the value of culture. Postgraduate training in culture related positively to both skill (r = .34, P < .01) and knowledge (r = .35, P < .001).

Discussion

This study supported the validity and reliability of an existing tool that measures resident preparedness to provide care to diverse patient populations. Additionally, this study enhanced the feasibility of the existing measure by making it easier to administer and use for assessment and evaluation purposes. The CCCS assesses residents' perceived cultural competence in the 3 arenas most often represented in models of cross-cultural care: (1) self-reported knowledge as reflected in preparedness to treat specific types of patients, manage specific issues and situations, or provide certain services; (2) self-assessment of skills; and (3) attitudes about the importance of cross-cultural care and desire to work with diverse patient populations. Our findings suggest the CCCS can be reliably used as 3 subscales reflecting these arenas.

While residents' average scores on each component of the CCCS were remarkably similar, the associations suggest that residents rate their knowledge for working with various populations and their ability to perform various skills as distinct. Preparedness to work with diverse patient populations was moderately associated with the amount of training during residency. The data suggest a link between knowledge and increased skillfulness, further supported by the finding that residents' level of helplessness decreased as their knowledge of various cultural groups increased. Although the direction of this relationship cannot be determined, this finding is consistent with data on the original version of the CCCS. A seemingly contradictory finding was that more postgraduate training was associated with greater levels of helplessness. While further study of the nature of this relationship is needed, one potential implication is that different types of training may be needed to increase residents' perceived knowledge. Alternatively, more training may increase residents' awareness of the limits of their knowledge and of the challenges they may encounter in providing cross-cultural care.

Previous training was moderately associated with perceived skill in implementing culturally responsive patient care. In previous studies, the amount of training and the presence of good role models were significantly correlated with perceived skillfulness.14 For example, residents who reported receiving little or no instruction were 8 times more likely to report low skill levels. Further analysis of those data showed that residents who received formal cultural competency training through Health Resources and Services Administration Title VII training grant programs perceived themselves as more skillful than those who had not participated.17 Additional outcome data from cultural competency training in specific programs are needed to develop training that increases residents' perceived skill.

Residents' attitudes were unrelated to knowledge and only modestly related to skill. This is consistent with the concept of cultural humility, which regards the patient as the “expert” on his or her condition, because it is impossible for a doctor to be “competent” in all aspects of every culture.18 So-called cultural factors are deemed important only if the patient indicates that they are important. Therefore, the doctor's attitude and openness are most critical: a doctor may be unfamiliar with a particular culture yet still be culturally sensitive and skilled.

Implications for Designing Cultural Competence Training Programs

Our findings suggest that developers of cultural competence training content will need to consider different approaches for each area of competence; otherwise, efforts to educate residents in cultural competency may not lead to desired outcomes. Postgraduate training did not correlate with residents' attitude toward culture. More troubling, increasing amounts of postgraduate training were related to higher levels of helplessness, suggesting that current training may not improve residents' self-efficacy to work with culturally diverse patients. Recently, a “social justice” component has been recommended to bolster understanding of cultural competency in medical education.19 This includes “critical self-reflection,” which involves setting aside one's potential biases and assumptions and adopting a perspective that considers the historical and social context that may shape a patient's perceptions of, and experiences with, health care and the health care system.

Limitations and Suggestions for Future Research

Our study has several limitations. Because the sample was limited to 1 institution, the results may not be readily generalizable. However, sampling residents from 4 programs lent additional support for using the CCCS to examine similarities and differences in training needs across groups within 1 institution. Nonetheless, further study with a larger sample at multiple program sites is needed to gain additional insight into the strengths and training needs of specific groups of residents. Additionally, the CCCS is a self-report measure and reflects the perceptions of the respondents. To guard against socially desirable responses, program directors are advised not to use the CCCS as a stand-alone assessment but as part of a “package” of tools for assessing cultural competency. Other tools may include a 360-degree evaluation or a cultural standardized patient examination. Use of the CCCS remains a viable option, as self-perceived competence is a routine component of medical education evaluations.

Future research needs to address the continued difficulty of applying current definitions of “culture” and “competency” to develop training programs. Program directors must also grapple with the lack of a standardized definition of what constitutes “training.” Outcomes for patient-provider relationships, and reliable indicators of patient improvement resulting from culturally competent care, also need to be defined. Nonetheless, further testing of the CCCS as an outcome measure for training programs appears warranted. Adapting the CCCS for other types of health care providers may also yield data for designing best practices in culturally competent medical education.

Research on the efficacy of cultural competency training and what constitutes evidence-based training is still in its infancy. Our study contributed to efforts to validate a standardized tool to assess baseline attitudes of cultural competency across specialties in residency programs. Development of outcome measures is needed to justify the emphasis on cultural training—starting in medical school and ongoing throughout a physician's career.

References

- 1. Liaison Committee on Medical Education. Functions and structure of a medical school: standards for accreditation of medical education programs leading to the MD degree. Available at: http://www.lcme.org/functions2008jun.pdf. Accessed January 15, 2010.

- 2. Accreditation Council for Graduate Medical Education. Common program requirements. Available at: http://acgme.org/acWebsite/dutyhours/dh_dutyhoursCommonPR07012007.pdf. Accessed October 1, 2009.

- 3. Chun M. B., Takanishi D. M., Jr. The need for a standardized evaluation method to assess efficacy of cultural competence initiatives in medical education and residency programs. Hawaii Med J. 2009;68(1):2–6.

- 4. Accreditation Council for Graduate Medical Education. ACGME program requirements for graduate medical education in psychiatry. Available at: http://www.acgme.org/acWebsite/downloads/RRC_progReq/400_psychiatry_07012007_u04122008.pdf. Accessed April 15, 2009.

- 5. Wong R. Y., Lee P. E. Teaching physicians geriatric principles: a randomized control trial on academic detailing plus printed materials versus printed materials only. J Gerontol A Biol Sci Med Sci. 2004;59:1036–1040. doi: 10.1093/gerona/59.10.m1036.

- 6. Weingardt K. R. The role of instructional design and technology in the dissemination of empirically supported, manual-based therapies. Clin Psychol Sci Pract. 2004;11(3):313–331.

- 7. Campinha-Bacote J. The Process of Cultural Competence in the Delivery of Healthcare Services: The Journey Continues. 5th ed. Cincinnati, OH: Transcultural C.A.R.E. Associates; 2007.

- 8. Vega W. Higher stakes ahead for cultural competence. Gen Hosp Psychiatry. 2005;27(6):446–450. doi: 10.1016/j.genhosppsych.2005.06.007.

- 9. Yamada A. M., Brekke J. S. Addressing mental health disparities through clinical competence not just cultural competence: the need for assessment of sociocultural issues in the delivery of evidence-based psychosocial rehabilitation services. Clin Psychol Rev. 2008;28(8):1386–1399. doi: 10.1016/j.cpr.2008.07.006.

- 10. Beach M. C., Cooper L. A., Robinson K. A., et al. Cultural competence: a systematic review of health care provider educational interventions. Med Care. 2005;43(4):356–373. doi: 10.1097/01.mlr.0000156861.58905.96.

- 11. Cross T. L., Bazron B. J., Dennis K. W., Isaacs M. R. Towards a Culturally Competent System of Care: A Monograph on Effective Services for Minority Children Who Are Severely Emotionally Disturbed. Washington, DC: Child and Adolescent Service System Program (CASSP) Technical Assistance Center, Georgetown University Child Development Center; 1989.

- 12. Gozu A., Beach M. C., Price E. G., et al. A systematic review of the methodological rigor of studies evaluating cultural competence training of health professionals. Acad Med. 2005;80(6):578–586. doi: 10.1097/00001888-200506000-00013.

- 13. Gozu A., Beach M. C., Price E. G., et al. Self-administered instruments to measure cultural competence of health professionals: a systematic review. Teach Learn Med. 2007;19(2):180–190. doi: 10.1080/10401330701333654.

- 14. Weissman J. S., Betancourt J. R., Campbell E. G., et al. Resident physicians' preparedness to provide cross-cultural care. JAMA. 2005;294(9):1058–1067. doi: 10.1001/jama.294.9.1058.

- 15. Park E. R., Chun M. B., Betancourt J. R., Green A. R., Weissman J. S. Measuring residents' perceived preparedness and skillfulness to deliver cross-cultural care. J Gen Intern Med. 2009;24(9):1053–1056. doi: 10.1007/s11606-009-1046-1.

- 16. Chun M. B. J., Huh J., Hew C., Chun B. An evaluation tool to measure cultural competency in graduate medical education. Hawaii Med J. 2009;68(5):116–117.

- 17. Green A. R., Betancourt J. R. Providing culturally competent care: residents in HRSA Title VII funded residency programs feel better prepared. Acad Med. 2008;83(11):1071–1079. doi: 10.1097/ACM.0b013e3181890b16.

- 18. Tervalon M., Murray-Garcia J. Cultural humility versus cultural competence: a critical distinction in defining physician training outcomes in multicultural education. J Health Care Poor Underserved. 1998;9(2):117–125. doi: 10.1353/hpu.2010.0233.

- 19. Kumagai A. K., Lypson M. L. Beyond cultural competence: critical consciousness, social justice, and multicultural education. Acad Med. 2009;84(6):782–787. doi: 10.1097/ACM.0b013e3181a42398.