Abstract

Objective

To validate standardized instructions for the creation, implementation, and performance assessment of a low-fidelity model for Pfannenstiel incision.

Study Design

The Pfannenstiel model used at the University of Florida-Jacksonville was broken down into composite steps and constructed by obstetrics-gynecology faculty from across the country. The model was then utilized at participants' home institutions and evaluated with respect to realism of the model, ability to replicate the simulation, appropriateness of the skills checklists, and perceived utility of a publication of similarly catalogued simulation modules for use in obstetrics-gynecology training programs.

Results

The model was correctly constructed by 18 of 19 participants (94.7%), and 13 of those 18 (72.2%) completed a post-construction, post-simulation survey. Responses indicated a high degree of perceived educational utility, feasibility of construction, and desire for additional catalogued modules.

Conclusions

A low-fidelity simulation model was developed, successfully reproduced using inexpensive materials, and implemented across multiple training programs. This model can serve as a template for developing, standardizing and cataloging other low-fidelity simulations for use in resident education. As discussions among medical educators continue regarding further restrictions on duty hours, it is highly likely that more programs will be looking for guidance in establishing quick, inexpensive, and reliable means of developing and assessing surgical skills in their learners. Furthermore, the Accreditation Council for Graduate Medical Education (ACGME) has well-defined goals of programs developing better and more reproducible tools for all of their assessments. For programs with limited resources, preparing and disseminating reproducible, validated tools could be invaluable in complying with future ACGME mandates.

Introduction

Historically, graduate medical education has been based on an apprenticeship model of learning. Surgical subspecialties in particular have a long tradition of attaining skills through long hours in multiyear training programs. With the advent of duty hour restrictions in 2003 by the Accreditation Council for Graduate Medical Education (ACGME), residents and fellows have seen a reduction in opportunities and experiences in the operating room.1 Opportunities for operative experience have been further impacted by fiscal concerns, patient-safety mandates, newly developed technology, faculty demands, and ethical issues. These concerns dictate that much of the teaching and assessment of surgical skills should be accomplished in the laboratory setting.2

In addition, residency programs are required to develop and implement tools that objectively evaluate 6 core competencies: patient care, medical knowledge, practice-based learning and improvement, interpersonal communication skills, professionalism, and systems-based practice. Surgical skills can comprise more than one of these competencies. Traditionally, surgical skills have been attained and assessed in the operating room by tallying numbers of procedures performed and by subjective skills assessments from attending faculty. These methods have questionable validity and poor reliability.2 In addition, standardization of resident skills assessment in the operating room is difficult due to variations in teaching/learning style, volume and breadth of types of cases, level of participation, and exposure to complications.

Reznick and colleagues3 discuss a new surgical-skills examination tool, the Objective Structured Assessment of Technical Skill (OSATS). In their study, OSATS demonstrated high reliability and construct validity, suggesting that this tool could be effectively used to measure residents' technical ability outside of the operating room using bench model simulations. The assessment of bench model skills was performed using a 2-tiered approach: (1) a global assessment designed to rate multiple dimensions of surgical performance, and (2) a procedure-specific checklist of separate items representative of necessary steps for effective performance of the surgical task.

Additional research4–6 has also shown that low-cost bench station OSATS can be as effective as expensive and elaborate animal models, both producing assessment capabilities with high levels of reliability and validity with blinding. Ault et al7 demonstrated the portability of OSATS and the global assessment of surgical skills. In their study, 2 iterations of OSATS at remote sites demonstrated psychometric properties showing that central administration with remote implementation of the examination was feasible and effective. Additionally, data from multiple studies8 indicate that simulations and low-fidelity bench models promote transfer of technical skills from bench to patients and that assessment of these skills can be used as a surrogate to measure surgical-skills acquisition.

The idea of formally and systematically integrating simulation into residency program curricula is a relatively new concept.7 Simulators range from simple objects or training “boxes” to technologically advanced virtual-reality trainers with haptic systems. When participating in a simulation, learners are often asked to suspend their disbelief to some degree in exchange for variable degrees of physical, conceptual, and emotional fidelity.9 Published reports describe simulations measuring skills including performing cervical dilation,10 performing ultrasound-guided amniocentesis,11 conducting breech delivery,12 and managing obstetric emergencies and trauma.13–16 For the most part, gynecologic simulators mirror those used to teach obstetric techniques: objects, box trainers, or task trainers (some equipped with sensors and computer-based software that provide feedback to the user).

Currently, no standard curriculum exists for obstetrics-gynecology simulation, and many programs cannot afford high-fidelity simulation models for training purposes. In addition, the complexity and fidelity of currently used simulation models vary substantially among residency programs. This study describes the validation of an inexpensive but realistic simulation model that can be incorporated into resident education. A single simulation module was developed and utilized at multiple US obstetrics-gynecology residency programs to assess its reproducibility, realism, and performance assessment tools. We then assessed the feasibility of a future publication of low-fidelity obstetrics-gynecology simulations for widespread use by residency programs.

Materials and Methods

The University of Florida College of Medicine–Jacksonville Institutional Review Board approved this pilot study. The current simulated abdominal-wall model developed and used at University of Florida College of Medicine–Jacksonville was broken down into composite parts for construction. Photographs of key elements of the construction process and detailed written instructions for each step were assembled as a PowerPoint file (Microsoft Corporation, Redmond, Washington) for distribution to participants. A “grocery list” detailing items required for the construction of the model, as well as suggested national retailers and the approximate cost of each item, was also created.

A model-building-session evaluation tool was developed to capture both demographic information about the participants and prior experience with model construction, cooking/baking, and arts/crafts. We assessed the latter activities because we hypothesized that prior experience would contribute to a more positive experience in simulation building. The evaluation tool also asked for individual commentary on difficult steps and errors in construction. Responses were recorded on a 4-point Likert scale ranging from strongly disagree to strongly agree (with no neutral response). The survey was reviewed for face validity by a group of 3 obstetrics-gynecology attending physicians active in simulations.

The first stage of this study occurred during a midterm meeting of the Association of Professors of Gynecology and Obstetrics (APGO) Solvay Educational Scholars Program held in Baltimore, Maryland, in 2008. Attending obstetrics-gynecology faculty who did not currently have simulation curricula or access to high-fidelity models or who were active in obstetrics-gynecology simulation were asked to participate in this study. First, they constructed the abdominal wall model using the provided materials, instructions, and accompanying photos. Faculty participants were then asked to evaluate the simulation model assembly experience using the session evaluation tool. Finally, we instructed participants to perform a simulated Pfannenstiel incision at their home institution and complete a questionnaire addressing the utility of the model, feasibility of the construction process, realism of the model as it relates to actual surgical techniques, and desire to access a publication of similarly catalogued low-fidelity obstetrics-gynecology simulations.

Results

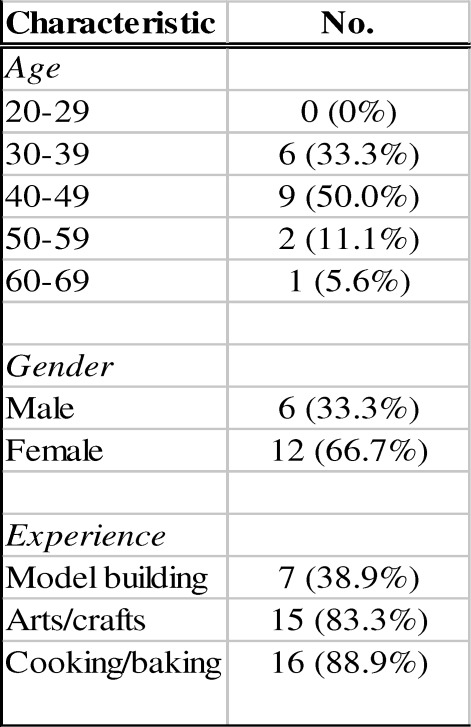

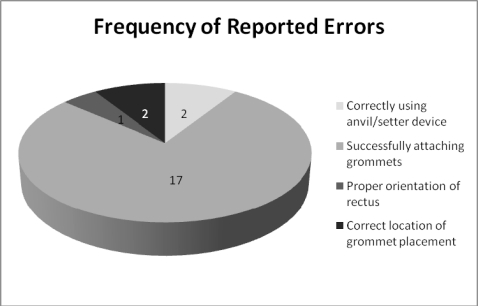

All 19 of the available obstetrics-gynecology faculty meeting the above criteria consented to participate in the study; of these, 18 (94.7%) constructed a simulated abdominal wall model during the session. Six (33.3%) of the participants were men and 12 (66.7%) were women. The participants' ages ranged from 30 to 60 years. The total time from the start of construction to a completed model ranged from 25 to 60 minutes. Sixteen participants (88.9%) stated that they had experience with cooking or following a recipe. Fifteen (83.3%) reported experience with arts and crafts, and 7 (38.9%) reported specific experience in model building (figure 1).

The participants also reported their perceived success in completing the model assembly. Twelve participants (66.7%) reported a completed assembly, while 6 (33.3%) reported that they did not feel that they completed the assembly process during the session. Of particular note, all 18 participants reported difficulty attaching the grommets to the edge of the model as outlined in the assembly instructions (likely due to incorrectly sized grommets provided by the investigator). We did not consider this problem to represent an error or an incompletely constructed model. When the models were compared with a standardized model (constructed by the investigator), all 18 participants (100%) were considered to have completed the construction process. The number of deviations in assembly from the standardized model was also recorded: 6 participants (33.3%) reported no errors, while 12 participants (66.7%) reported 1–3 errors (figure 2).

Figure 1. Demographics of Participants (n = 18)

Figure 2. Frequency of Reported Errors

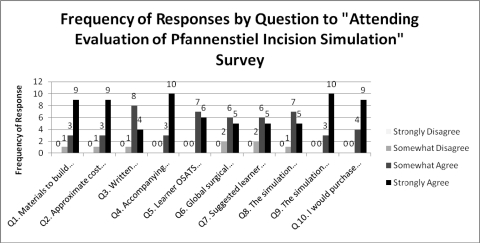

Of the 18 participants, 13 (72.2%) completed the actual simulated Pfannenstiel incision at their home institution using their constructed model and returned a completed survey to the investigator. Figure 3 illustrates the frequency of responses to each survey question.

Figure 3. Frequency of Responses by Question to “Attending Evaluation of Pfannenstiel Incision Simulation” Survey
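The proportions reported in this section can be recomputed from the raw counts as a simple arithmetic check. The following is a minimal sketch in Python and is not part of the original study: the helper name `pct` and the dictionary labels are illustrative assumptions, while the counts themselves are taken from the text above.

```python
# Recompute the percentages reported in the Results from the raw counts.
# The helper name `pct` and the labels below are hypothetical; only the
# counts come from the article text.

def pct(count: int, total: int) -> float:
    """Return count/total as a percentage rounded to one decimal place."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * count / total, 1)


CONSENTED = 19  # faculty who consented to participate
BUILDERS = 18   # participants who constructed a model during the session

reported = {
    "constructed a model (of those consented)": pct(BUILDERS, CONSENTED),
    "men": pct(6, BUILDERS),
    "women": pct(12, BUILDERS),
    "cooking or recipe experience": pct(16, BUILDERS),
    "arts and crafts experience": pct(15, BUILDERS),
    "model-building experience": pct(7, BUILDERS),
    "self-reported completed assembly": pct(12, BUILDERS),
    "returned home-institution survey": pct(13, BUILDERS),
}

for label, value in reported.items():
    print(f"{label}: {value}%")
```

Every denominator other than the first is the 18 participants who built a model, not the 19 who consented, which is why the survey response rate is 13 of 18 rather than 13 of 19.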

Discussion

Many reports describe the utility of simulations in medical education. No consensus currently exists, however, on how to specifically implement simulation curricula into obstetrics-gynecology residency education. This pilot study demonstrated that a low-fidelity model for an abdominal wall can be cheaply and quickly reproduced by a diverse group of obstetrics-gynecology faculty using a set of standardized instructions. The portability of the Pfannenstiel skin incision module to multiple residency programs was also demonstrated in this study. Participants indicated a high degree of agreement that this simulation could be easily constructed with low-cost materials and incorporated into their training programs, and that a catalog of other low-fidelity simulations would be useful for their training centers.

As discussions among medical educators continue regarding further restrictions on duty hours, it is highly likely that more programs will be looking for guidance in establishing quick, inexpensive, and reliable means of developing and assessing surgical skills in their learners. Furthermore, the ACGME has well-defined goals for developing better and more reproducible tools for all of their assessments. Low-fidelity simulators are cost-effective; preparing and disseminating them as reproducible, validated tools could be invaluable in complying with future ACGME mandates.

Limitations of this study include the small sample size of 18 as well as the selection bias that results from sampling obstetrics-gynecology educators who indicate their active interest in medical education and curriculum design and feedback by attending an APGO meeting. While this group might be considered maximally motivated, it is also reflective of the population that would be constructing and using the model in actual practice (ie, obstetrics-gynecology residency educators). A potential concern when envisioning a catalog of simulations is that ease of construction, simplicity of use, and expense will vary among simulated models. Fortunately, conversations with educators who have initiated simulation programs suggest that this variability is not perceived as a major concern. There is, in fact, a small body of simulations that have been developed independently and yet with remarkable consistency. This includes the Loop Electrocautery Excision Procedure (LEEP) sausage model, which in some form has been “invented” by a dozen or more gynecology educators. Experiences like these suggest that where interest in simulation exists, construction ideas are readily proposed and replicated.

We believe that the rate-limiting step for widespread implementation of simulation education is not the perceived value (which is well supported by literature) nor the inability to identify future need. Rather, we suspect it is the “deer in the headlights” phenomenon that leads already busy and multitasking educators to believe that they cannot comply with the trend. Making available a manual of easy and inexpensive simulations would go far to overcome this concern. Further research might also ultimately form the basis of a unified basic simulation curriculum that could be applied by and to all programs training obstetrics-gynecology residents.

Acknowledgments

The authors would like to acknowledge Diane Magrane, MD, Andrew M. Kaunitz, MD, Guy I. Benrubi, MD, and Elisa Zenni, MD.

Footnotes

Kelly A. Best, MD, is Assistant Professor, Associate Program Director at University of Florida College of Medicine–Jacksonville; Brent E. Seibel, MD, is Assistant Professor, Director of Simulation Education at University of Florida College of Medicine–Jacksonville; and Deborah S. Lyon, MD, is Associate Professor, Program Director at University of Florida College of Medicine–Jacksonville.

Editor's Note: The online version of this article includes a "grocery list" for materials, an Evaluation of Simulation Model Assembly Experience, an Attending Evaluation of Pfannenstiel Incision Simulation, and photographs of the completed abdominal wall model.

References

1. Blanchard M., Amini S., Frank T. Impact of work hour restrictions on resident case experience in an obstetrics and gynecology residency program. Am J Obstet Gynecol. 2004;191(5):1746–1751. doi: 10.1016/j.ajog.2004.07.060.

2. Reznick R. K. Teaching and testing clinical skills. Am J Surg. 1993;165(3):358–361. doi: 10.1016/s0002-9610(05)80843-8.

3. Reznick R., Regehr G., MacRae H., Martin J., McCulloch W. Testing technical skill via an innovative “bench station” examination. Am J Surg. 1997;173(3):226–230. doi: 10.1016/s0002-9610(97)89597-9.

4. Lentz G., Mandel L., Lee D., Gardella C., Melville J., Goff B. A. Testing surgical skills of obstetric and gynecologic residents in a bench laboratory setting: validity and reliability. Am J Obstet Gynecol. 2001;184(7):1462–1470. doi: 10.1067/mob.2001.114850.

5. Goff B. A., Nielsen P. E., Lentz G. M. Surgical skills assessment: a blinded examination of obstetrics and gynecology residents. Am J Obstet Gynecol. 2002;186(4):613–617. doi: 10.1067/mob.2002.122145.

6. Nielsen P. E., Foglia L. M., Mandel L. S., Chow G. E. Objective structured assessment of technical skills for episiotomy repair. Am J Obstet Gynecol. 2003;189(3):1257–1260. doi: 10.1067/s0002-9378(03)00812-3.

7. Ault G., Reznick R., MacRae H. Exporting a technical skills evaluation technology to other sites. Am J Surg. 2001;182(3):254–256. doi: 10.1016/s0002-9610(01)00700-0.

8. Beaubien J. M., Baker D. P. The use of simulation for training teamwork skills in healthcare: how low can we go? Qual Saf Health Care. 2004;13(suppl 1):151–156. doi: 10.1136/qshc.2004.009845.

9. Rudolph J. W., Simon R. E., Raemer D. B. Which reality matters? Questions on the path to high engagement in healthcare simulation. Simul Healthc. 2007;2(3):161–163. doi: 10.1097/SIH.0b013e31813d1035.

10. Tuffnell D. J., Bryce F., Johnson N., Lilford R. J. Simulation of cervical change in labour: reproducibility of expert assessment. Lancet. 1989;2(8671):1089–1090. doi: 10.1016/s0140-6736(89)91094-5.

11. Maher J. E., Kleinman G. E., Lile W. The construction and utility of an amniocentesis trainer. Am J Obstet Gynecol. 1998;179(5):1225–1227. doi: 10.1016/s0002-9378(98)70136-x.

12. Deering S., Brown J., Hodor J. Simulation training and resident performance of a singleton vaginal breech delivery. Obstet Gynecol. 2006;107(1):86–89. doi: 10.1097/01.AOG.0000192168.48738.77.

13. American College of Obstetricians and Gynecologists (ACOG). Post partum hemorrhage. ACOG practice bulletin no. 76. Obstet Gynecol. 2006;108:1039–1047. doi: 10.1097/00006250-200610000-00046.

14. Anderson E. R., Black R., Brocklehurst P. Acute obstetric emergency drill in England and Wales: a survey of practice. BJOG. 2005;112(3):372–375. doi: 10.1111/j.1471-0528.2005.00432.x.

15. Goffman D., Heo H., Pardanni S. Improving shoulder dystocia management among resident and attending physicians using simulations. Am J Obstet Gynecol. 2008;199(3):294e1–294e5. doi: 10.1016/j.ajog.2008.05.023.

16. Johanson R., Akhtar S., Edwards C. MOET: Bangladesh—an initial experience. J Obstet Gynaecol Res. 2002;28(4):217–223. doi: 10.1046/j.1341-8076.2002.00039.x.