Abstract

Objective:

To quantify the accuracy of commonly used intracerebral hemorrhage (ICH) predictive models in ICH patients with and without early do-not-resuscitate (DNR) orders.

Methods:

Spontaneous ICH cases (n = 487) from the Brain Attack Surveillance in Corpus Christi study (2000–2003) and the University of California, San Francisco (June 2001–May 2004) were included. Three models (the ICH Score, the Cincinnati model, and the ICH grading scale [ICH-GS]) were compared to observed 30-day mortality with a χ2 goodness-of-fit test first overall and then stratified by early DNR orders.

Results:

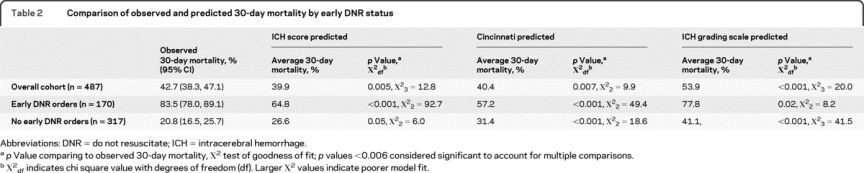

Median age was 71 years, 49% were female, median Glasgow Coma Scale score was 12, median ICH volume was 13 cm³, and 35% had early DNR orders. Overall observed 30-day mortality was 42.7% (95% confidence interval [CI] 38.3–47.1), with the average model-predicted 30-day mortality for the ICH Score, Cincinnati model, and ICH-GS at 39.9% (p = 0.005), 40.4% (p = 0.007), and 53.9% (p < 0.001). However, for patients with early DNR orders, the observed 30-day mortality was 83.5% (95% CI 78.0–89.1), with the models predicting mortality of 64.8% (p < 0.001), 57.2% (p < 0.001), and 77.8% (p = 0.02). For patients without early DNR orders, the observed 30-day mortality was 20.8% (95% CI 16.5–25.7), with the models predicting mortality of 26.6% (p = 0.05), 31.4% (p < 0.001), and 41.1% (p < 0.001).

Conclusions:

ICH prognostic model performance is substantially impacted when stratifying by early DNR status, possibly giving a false sense of model accuracy when DNR status is not considered. Clinicians should be cautious when applying these predictive models to individual patients.

GLOSSARY

- BASIC = Brain Attack Surveillance in Corpus Christi;
- CI = confidence interval;
- DNR = do not resuscitate;
- GCS = Glasgow Coma Scale;
- ICH = intracerebral hemorrhage;
- ICH-GS = intracerebral hemorrhage grading scale;
- ROC = receiver operating characteristic;
- SFGH = San Francisco General Hospital;
- UCSF = University of California San Francisco.

Many predictive models have been proposed to stratify risk of death following intracerebral hemorrhage (ICH) based on factors known early in the hospital course.1–5 While many of these models have been shown to perform well on average,1,6–8 using them to predict the outcome for an individual patient may be problematic.

None of the commonly used predictive models have accounted for the impact of do-not-resuscitate (DNR) orders on mortality after ICH. Early DNR orders are common after ICH9,10 and have been associated with a doubling in the chances of death after ICH even despite adjusting for traditional predictors of mortality.10 While the association between DNR orders and death is strong and consistently seen in several studies,9–11 the interpretation of this association is complex. DNR orders may simply represent a proxy for disease severity or comorbidities not accounted for in statistical models12; alternatively, there is evidence to suggest that DNR orders after ICH may be representative of a reduction in the overall level of aggressiveness of treatment provided to ICH patients.9

Use of ICH predictive models without considering the impact of early DNR orders may lead to inaccurate estimates of mortality risk for individual patients. Our goal was to quantify the accuracy of several common ICH predictive models when stratifying by early DNR orders.

METHODS

Case identification and data collection.

This project combined patients from 2 separate studies of ICH to create a single large diverse cohort of ICH cases for analysis. The first source of patients for this project was from the Brain Attack Surveillance in Corpus Christi Project (BASIC), an ongoing population-based stroke surveillance project in Nueces County, TX.13 Methods for the case identification and selection of BASIC cases have been previously reported.10,13 Briefly, active and passive surveillance is used at all 7 hospitals in Nueces County, TX, to identify stroke cases among patients >44 years old. Trained abstractors record data from the chart and all cases are validated by study neurologists based on review of source documentation. BASIC cases of ICH identified from January 1, 2000, through December 31, 2003, were included.

The second source of patients for this analysis was a prospective study of all adult ICH patients (age >18) admitted through the emergency departments at the University of California San Francisco (UCSF) Medical Center or San Francisco General Hospital (SFGH) from June 1, 2001, through May 31, 2004. Cases were identified prospectively by treating physicians or by review of clinical service admission logs.

Case definition of ICH for both studies was a nontraumatic spontaneous intracerebral or isolated intraventricular hemorrhage. Warfarin-associated hemorrhages and hemorrhage due to vascular malformation were included, while ICH due to known tumor or hemorrhagic conversion of ischemic stroke was excluded. Status as dead or alive at 30 days was determined through in-person or telephone interview (California only), review of medical records, or search of state and national databases as previously described.10,14

CT scans were reviewed for volume and location of hemorrhage by study investigators as previously described.10,15 Hemorrhage volume was calculated using the ABC/2 method.16 Intraventricular hemorrhage was coded as present or absent.
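As a rough illustration of the ABC/2 calculation cited above, the sketch below estimates hemorrhage volume from three measurements. It assumes the common simplified form of the method, in which A is the largest hemorrhage diameter on the CT slice with the most blood, B is the diameter perpendicular to A, and C is the number of slices showing hemorrhage multiplied by the slice thickness. The function name, parameter names, and example measurements are hypothetical and are not taken from the study protocol; the slice-weighting refinements of the published method are omitted.

```python
def abc2_volume_cm3(a_cm: float, b_cm: float, n_slices: int, slice_thickness_cm: float) -> float:
    """Approximate hemorrhage volume (cm^3) as A * B * C / 2."""
    if min(a_cm, b_cm, slice_thickness_cm) < 0 or n_slices < 0:
        raise ValueError("measurements must be non-negative")
    c_cm = n_slices * slice_thickness_cm  # vertical extent of the hemorrhage
    return a_cm * b_cm * c_cm / 2.0


# Hypothetical measurements, not study data: 4 cm x 3 cm on 5 slices of 0.5 cm each.
example_volume = abc2_volume_cm3(4.0, 3.0, 5, 0.5)
print(f"{example_volume:.1f} cm^3")
```

Dividing by 2 approximates the volume of an ellipsoid with those three diameters, which is why only simple bedside measurements are needed.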

Definition of DNR orders.

DNR orders were defined as a clearly documented plan in the medical record to limit cardiopulmonary resuscitation or mechanical ventilation in the event of a cardiac or respiratory arrest. Decisions on DNR status were left to the individual clinician and the patient or family. DNR orders were considered early if written within 24 hours of hospital presentation (BASIC) or within the first full day of hospitalization (California). The BASIC study had a restriction that DNR orders were not coded if they were written after at least one clinical examination consistent with brain death even if there was no formal declaration of brain death. Nine cases met this criterion and were not coded as early DNR. Investigators and chart abstractors were unaware of the specific study goals at the time of data collection.

Selection of ICH predictive models for analysis.

A total of 22 ICH models predicting mortality were reviewed for applicability to the present study. A 2005 review of ICH predictive models1 provided the main source of models for consideration, though several additional models were also reviewed for applicability.4,17–19 The primary criterion for selection of a model for analysis was whether all elements required for the model were available in our dataset; 8 models met this criterion. From these 8 models, the ICH Score3 and a model developed in Cincinnati2 were selected, as these are 2 of the more widely cited ICH predictive models. The 6 remaining possible models used essentially the same elements as the ICH Score (age, ICH volume, Glasgow Coma Scale score [GCS], intraventricular hemorrhage, and infratentorial hemorrhage), though with different scoring systems,4,18,20–22 or addition of heart disease as a predictor.23 From these 6 additional candidate models, the ICH grading scale (ICH-GS)4 was selected as it was reported to outperform the ICH Score for prediction of mortality in its development cohort.4 Patients in the current study were not part of the initial ICH Score development cohort as that group was from 1997 to 1998.3 The components of the 3 predictive models studied are shown in appendix e-1 on the Neurology® Web site at www.neurology.org. All patients included had complete data available for these models.

Statistical analysis.

Continuous variables were summarized with medians and quartiles and categorical variables were summarized as proportions. Baseline characteristics of the Texas and California cohorts were compared with t tests (age and ICH volume), χ2 tests (categorical variables), or Wilcoxon rank sum test (GCS) as appropriate. Overall model performance was first assessed with the Cuzick test for trend, which is a nonparametric test assessing for an ordinal trend of increasing mortality with higher predicted mortality.24 The primary statistical analysis consisted of a comparison of each model's predicted mortality with the actual observed mortality in the study cohort with a χ2 test of goodness of fit.25 With this statistical test, lower p values (or higher χ2 values) indicate poorer model fit to the observed data. Within each category of predicted mortality for a model, the number of patients predicted to die by the model was determined by multiplying the model-predicted mortality by the number of patients. For example, the predicted mortality for an ICH Score of 3 is 72%.3 The study cohort had 94 individuals with an ICH Score of 3, therefore 94 × 72% = 67.7 deaths were expected according to the model. This predicted number of 67.7 was compared to the actual mortality of 67 cases. A χ2 statistic was calculated as the sum of (observed − expected)²/expected across the strata of predicted mortality, and compared to a χ2 distribution with (number of mortality categories − 2) degrees of freedom. Cells with fewer than 5 subjects expected to die were collapsed with adjacent cells. Among its 6 categories, the Cincinnati model had 2 pairs with similar predicted mortalities (44% and 46%, and 74% and 75%), and each pair was collapsed for analysis purposes. Since the primary analysis involved a series of 9 separate statistical comparisons, a p value of 0.006 (or 0.05/9) was used as the threshold for significance. Bar charts comparing the observed and model-predicted mortality by level of predicted mortality and stratified by DNR status were created for each model. Summary statistics and determination of model scores were calculated in Stata 9 (StataCorp LP, College Station, TX), SAS 9.1.2 (SAS Institute, Cary, NC), or R version 2.9.0 (2009, R Foundation for Statistical Computing).
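The goodness-of-fit calculation described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the code used in the study (which was run in Stata, SAS, or R): the helper names are hypothetical, sparse cells are merged with the following cell (the text says only "adjacent"), and every row of `toy_strata` except the ICH Score 3 row is made up for demonstration.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) for a chi-square variable with integer df >= 1."""
    if df < 1:
        raise ValueError("df must be at least 1")
    y = x / 2.0
    if df % 2 == 0:
        a, q = 1.0, math.exp(-y)             # Q(1, y)
    else:
        a, q = 0.5, math.erfc(math.sqrt(y))  # Q(1/2, y)
    # Recurrence for the regularized upper incomplete gamma function:
    # Q(a + 1, y) = Q(a, y) + y**a * exp(-y) / Gamma(a + 1)
    while a < df / 2.0:
        q += y ** a * math.exp(-y) / math.gamma(a + 1.0)
        a += 1.0
    return q


def collapse_sparse(cells, min_expected=5.0):
    """Merge cells with fewer than min_expected expected deaths into a neighbor."""
    merged = []
    for obs, exp in cells:
        if merged and merged[-1][1] < min_expected:
            # The previous cell is still sparse, so fold this cell into it.
            merged[-1] = (merged[-1][0] + obs, merged[-1][1] + exp)
        else:
            merged.append((obs, exp))
    # A sparse final cell has no following neighbor, so merge it backward.
    if len(merged) > 1 and merged[-1][1] < min_expected:
        obs, exp = merged.pop()
        merged[-1] = (merged[-1][0] + obs, merged[-1][1] + exp)
    return merged


def goodness_of_fit(strata, min_expected=5.0):
    """strata: list of (n_patients, predicted_mortality, observed_deaths)."""
    cells = [(obs, n * p) for n, p, obs in strata]
    cells = collapse_sparse(cells, min_expected)
    stat = sum((obs - exp) ** 2 / exp for obs, exp in cells)
    df = len(cells) - 2  # number of mortality categories minus 2, as in the text
    return stat, df, chi2_sf(stat, df)


# The one cell described in the text: ICH Score of 3, predicted mortality 72%.
expected_score3 = 94 * 0.72  # about 67.7 expected deaths, versus 67 observed

# Illustrative strata only; all rows except (94, 0.72, 67) are made up.
toy_strata = [
    (100, 0.00, 2),
    (120, 0.13, 20),
    (90, 0.26, 30),
    (94, 0.72, 67),
    (60, 0.97, 55),
]
stat, df, p = goodness_of_fit(toy_strata)
print(round(expected_score3, 1), round(stat, 2), df, round(p, 3))
```

Collapsing sparse cells also handles a category with a predicted mortality of 0%, which would otherwise put a zero in the denominator.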

Standard protocol approvals, registrations, and patient consents.

This study was approved by the Institutional Review Boards of the University of Michigan, the University of California San Francisco, and the individual hospital systems.

RESULTS

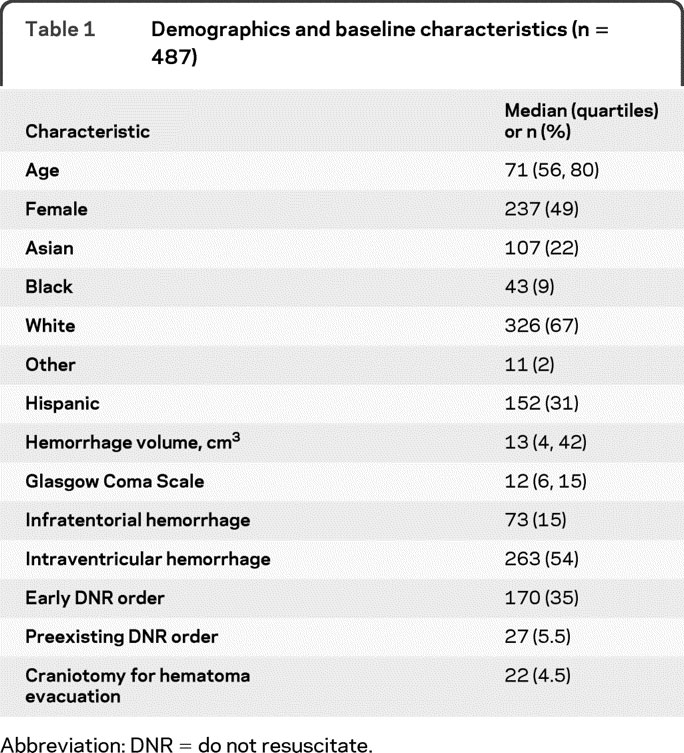

A total of 487 patients were available from the 2 study cohorts and all are included in this analysis (Texas 244, California 243). Median age was 71 years (quartiles 56, 80) and 49% of the population was female. Additional baseline characteristics of the study population are shown in table 1. Early DNR orders were recorded in 170/487 (34.9%) of the study population, and 30-day mortality was 208/487 (42.7%). There was no difference in use of early DNR orders (p = 0.68) or 30-day mortality (p = 0.49) between the Texas and California study populations. Texas patients were older (median 74 vs 64, p < 0.001), more often Hispanic (56.6% vs 5.8%, p < 0.001), had a smaller hemorrhage volume (median 24 vs 35.5, p = 0.001), and were less likely to have infratentorial hemorrhage (11.1% vs 18.9%) than California patients. There were no differences by cohort in gender, GCS, or presence of intraventricular hemorrhage.

Table 1 Demographics and baseline characteristics (n = 487)

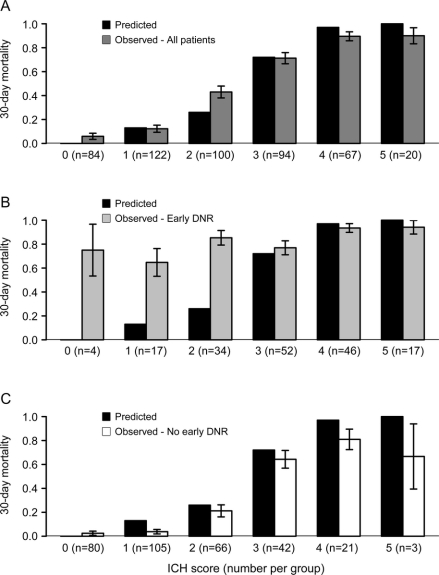

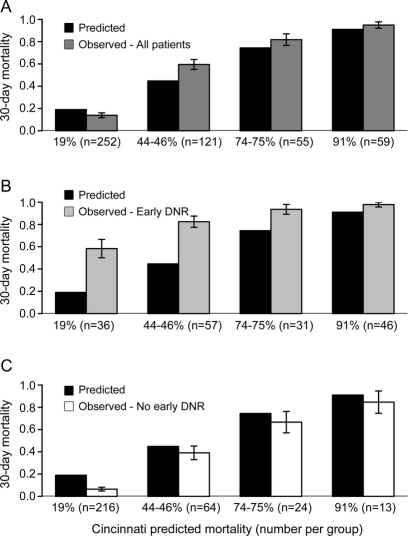

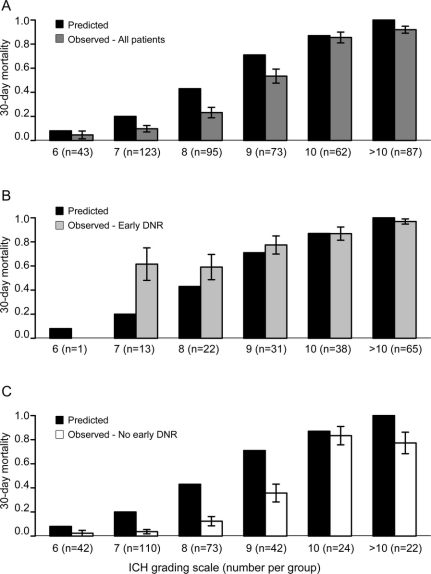

Increasing scores in all 3 models were associated with increasing 30-day mortality (p < 0.001, Cuzick test for trend). The observed mortality and the average predicted mortality from various models are shown in table 2, overall and stratified by early DNR status. All 3 models numerically underpredicted 30-day mortality in those with DNR orders, and overpredicted 30-day mortality in those without DNR orders. Goodness-of-fit p values comparing observed and predicted mortality are shown in table 2 and discussed in further detail below. Figures 1 through 3 show the comparison of the predicted and observed mortality broken down by strata of predicted mortality for each of the 3 models.

Table 2 Comparison of observed and predicted 30-day mortality by early DNR status

Figure 1 Comparison of observed and predicted mortality for the intracerebral hemorrhage (ICH) Score

Comparison of ICH Score predicted 30-day mortality with actual observed mortality for (A) the overall cohort, (B) patients with early do-not-resuscitate (DNR) orders, and (C) patients without early DNR orders. Note that the predicted mortality for an ICH Score of 0 is 0%, and therefore there is no solid black bar shown for an ICH Score of 0. Error bars represent the standard error.

Figure 2 Comparison of observed and predicted mortality for the Cincinnati model

Comparison of Cincinnati model–predicted 30-day mortality with actual observed mortality for (A) the overall cohort, (B) patients with early do-not-resuscitate (DNR) orders, and (C) patients without early DNR orders. Predicted mortality categories of 44% and 46% and 74% and 75% were pooled for the graph and for the primary analysis. Error bars represent the standard error.

Figure 3 Comparison of observed and predicted mortality for the intracerebral hemorrhage (ICH) grading scale (ICH-GS)

Comparison of ICH-GS–predicted 30-day mortality with actual observed mortality for (A) the overall cohort, (B) patients with early do-not-resuscitate (DNR) orders, and (C) patients without early DNR orders. No patients had an ICH-GS of 13. Only 4 patients had an ICH-GS of 5 (all survived to 30 days) and are not shown in the figure. One patient with early DNR orders had an ICH-GS of 6 (deceased) and is not shown in B. Error bars represent the standard error.

When considering all patients both with and without early DNR orders (first row of table 2), the average 30-day mortality predicted by the ICH Score (39.9%) and the Cincinnati model (40.4%) were fairly similar to the overall observed mortality (42.7%). In contrast, the goodness-of-fit test indicated borderline lack of model fit for both the ICH Score (χ2 = 12.8, 3 df, p = 0.005) and the Cincinnati model (χ2 = 9.8, 2 df, p = 0.007) based on the corrected threshold p value of 0.006. The reason for this discrepancy between the similar average predicted mortality and the borderline significant goodness-of-fit test is apparent in figures 1A and 2A: the averages are similar because the overpredictions for some categories of predicted mortality balance out the underpredictions for other categories. The ICH-GS overestimated the average mortality compared with the observed mortality for the overall cohort (53.9% vs 42.7%) and in every stratum of risk (figure 3A) and showed lack of model fit (χ2 = 20.0, 3 df, p < 0.001).
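A small numerical sketch may help show how a model's average predicted mortality can match the observed mortality while the category-level fit is poor. The two strata below are made up purely for illustration and do not correspond to any model or cohort in this study.

```python
# Made-up strata: (n_patients, predicted_mortality, observed_deaths)
toy = [
    (100, 0.20, 30),  # model underpredicts: 20 expected, 30 observed
    (100, 0.60, 50),  # model overpredicts: 60 expected, 50 observed
]

n_total = sum(n for n, _, _ in toy)
avg_predicted = sum(n * p for n, p, _ in toy) / n_total
observed_rate = sum(d for _, _, d in toy) / n_total

# Category-level discrepancy: sum of (observed - expected)^2 / expected
chi2_stat = sum((d - n * p) ** 2 / (n * p) for n, p, d in toy)

print(f"average predicted {avg_predicted:.1%}, observed {observed_rate:.1%}")
print(f"chi-square statistic {chi2_stat:.2f}")
```

The averages agree because the two errors have opposite signs and cancel, whereas the χ2 statistic squares each error and so accumulates both.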

For patients with early DNR orders (row 2 of table 2), all 3 models numerically underpredicted average 30-day mortality. Goodness-of-fit tests indicated a greater lack of model fit for the ICH Score (χ2 = 92.7, 2 df, p < 0.001, figure 1B) and Cincinnati model (χ2 = 49.4, 2 df, p < 0.001, figure 2B) than was seen for each model in the overall cohort. Goodness-of-fit tests for the ICH-GS indicated no difference between model-predicted and observed mortality in individuals with early DNR orders (χ2 = 8.2, 2 df, p = 0.02, figure 3B) based on the corrected threshold p value of 0.006.

For individuals without early DNR orders, all 3 models tended to overestimate the average 30-day mortality (row 3 of table 2). Goodness-of-fit tests indicated no difference between model-predicted and observed mortality for the ICH Score (χ2 = 6.0, 2 df, p = 0.05, figure 1C). In contrast, both the Cincinnati model (χ2 = 18.6, 2 df, p < 0.001, figure 2C) and the ICH-GS (χ2 = 41.5, 2 df, p < 0.001, figure 3C) showed a difference between model-predicted and observed mortality in these individuals without early DNR orders.
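To make the multiple-comparison threshold concrete, the sketch below applies 0.05/9 to the 9 goodness-of-fit p values reported in the text. It is only an illustration: the dictionary layout is an assumption, and p values reported as "< 0.001" are entered as the upper bound 0.001.

```python
ALPHA = 0.05
N_COMPARISONS = 9
threshold = ALPHA / N_COMPARISONS  # about 0.0056, reported in the text as 0.006

# Reported p values by (group, model); "< 0.001" is entered as 0.001 (an upper bound).
reported_p = {
    ("overall", "ICH Score"): 0.005,
    ("overall", "Cincinnati"): 0.007,
    ("overall", "ICH-GS"): 0.001,
    ("early DNR", "ICH Score"): 0.001,
    ("early DNR", "Cincinnati"): 0.001,
    ("early DNR", "ICH-GS"): 0.02,
    ("no early DNR", "ICH Score"): 0.05,
    ("no early DNR", "Cincinnati"): 0.001,
    ("no early DNR", "ICH-GS"): 0.001,
}

# A comparison shows significant lack of fit when p falls below the corrected threshold.
lack_of_fit = {key: p < threshold for key, p in reported_p.items()}

for (group, model), flagged in lack_of_fit.items():
    label = "lack of fit" if flagged else "no significant lack of fit"
    print(f"{group:13s} {model:11s} {label}")
```

Using the rounded threshold of 0.006 instead of 0.05/9 gives the same classification for these 9 values.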

Additional post hoc exploratory analyses were performed to assess the impact of the different cohorts and preexisting DNR orders on our findings. When examining the Texas and California cohorts separately, the trends of overestimating average mortality for those without DNR orders and underestimating average mortality for those with DNR orders persisted for all 3 models. Restricting the analysis to cases without preexisting DNR orders also showed persistent overestimation of average mortality for those without DNR orders and underestimation of average mortality for those with DNR orders for all models.

DISCUSSION

Early DNR orders dramatically impact the performance of existing ICH predictive models. The 3 models investigated tended to underestimate 30-day mortality in patients with early DNR orders, and overestimate mortality in those without early DNR orders. Relatively high 30-day mortality was noted in patients with early DNR orders even in otherwise good prognostic categories for all 3 models. Applying these predictive models to an individual patient without considering early DNR status could lead to inaccurate predictions of mortality risk with potentially critical implications for patient and family decision-making. Our findings highlight the problems that can occur in any prognostic model if an important predictive factor is not considered.26

Although each of the 3 models examined tended to underestimate mortality for patients with DNR orders and overestimate risk for those without such orders, there were differences among the models. The ICH-GS tended to overestimate mortality in the overall study cohort. Therefore the overprediction of mortality in those without early DNR orders was even more dramatic than in the other models (figure 3C), and the ICH-GS actually seemed to perform better in patients with early DNR orders (comparing the p values across the ICH-GS in table 2). In contrast, the ICH Score actually performed best in patients without early DNR orders. Use of DNR orders varies dramatically across institutions,9 and it is possible that the differential model performance by DNR status observed here is representative of underlying differences in DNR use in the original development cohorts for each model. This hypothesis cannot be proven as the DNR status is unknown for all of the development cohorts. Alternatively, the differential model performance could be explained by other differences in patient characteristics across the model development cohorts, or due to the differential weighting of risk factors within the models.

The impact of withdrawal of supportive treatment on the performance of the ICH Score and the Cincinnati model was assessed previously in a single-center study of 241 patients.27 The authors of this study concluded that withdrawal of supportive treatment did not impact model performance, as the receiver operating characteristic (ROC) curves and model R² were similar in the overall cohort and in the cohort excluding those with withdrawal of support. However, the sensitivity of both models for predicting 30-day mortality was reduced when removing patients who had withdrawal of support, indicating that the models were less able to predict who will die in this restricted group of patients.27 Even though overall model performance was not affected as measured by ROC curves and model R², the change in sensitivity indicates that there was some degree of change in model performance. ROC curves may not detect clinically meaningful differences in individual risk assessment when used to assess the performance of prognostic models, and assessment of model calibration with methods such as the χ2 goodness-of-fit test has been suggested.28 It is difficult to directly compare this prior analysis with our results, since withdrawal of supportive treatment and early DNR orders are distinct, yet related, concepts. A DNR order is typically a necessary prerequisite for withdrawal of supportive treatment. Our analysis may therefore be more reflective of the decisions made early in the course of ICH treatment.

All clinical prognostic models, whether defined by an explicit predictive score, or a clinician's experience and intuition, have a degree of uncertainty. DNR status appears to further complicate the predictive accuracy of existing models. How to counsel patients and families in the face of this uncertainty is a challenge for the practicing clinician. Some guidance can be found in a study of surrogate decision-makers in the medical intensive care unit, which reported that the majority (155/179, 87%) of surrogates wanted physicians to discuss prognosis, even when it was uncertain. Many of the surrogate decision-makers wanted the physicians to explicitly discuss uncertainty when making prognostic estimates.29

Several factors may have confounded our findings. First, it is possible that the early DNR orders served as a marker of comorbid illness or hemorrhage severity that was not captured by the predictive models. Data on comorbid conditions likely to impact DNR decisions, such as cancer or dementia, were not collected. It is unlikely that preexisting DNR orders accounted for our findings. A low proportion of cases (5.5%) had preexisting DNR orders clearly documented in the chart. Additionally, the post hoc exploratory analysis excluding cases with preexisting DNR orders did not alter the findings.

Our analysis has limitations. This was a post hoc combination of 2 separately collected datasets. Therefore there were some minor differences in the data collection procedures, and there may have been differences in the patient treatment protocols. However, a post hoc exploratory analysis demonstrated that the trends of underestimating mortality for patients with early DNR orders and overestimating mortality for those without early DNR orders persisted in each cohort separately. This combined dataset provided a racially and ethnically diverse cohort which may be more broadly representative of ICH patients in general. The specific reasons for the use of early DNR orders were not identified for individual patients, and future studies should assess reasons for DNR orders including patient and family preferences for treatment. Finally, we do not have data on functional outcome or quality of life on the entire cohort, which would be helpful to know if survivors without early DNR orders were left with severe disability. The functional outcome of the California cohort has previously been reported, and very few patients (13/243, 5%) had severe disability (modified Rankin of 5) at 12 months.14

AUTHOR CONTRIBUTIONS

Statistical analysis was conducted by Dr. D.B. Zahuranec, Dr. B.N. Sánchez, and Dr. J.C. Hemphill III.

DISCLOSURE

Dr. Zahuranec has received speaker honoraria from the Society of Vascular and Interventional Neurology and has received/receives research support from the NIH/NINDS (R01 NS38916 [coinvestigator] and R01NS42167 [local coinvestigator]), the American Heart Association (postdoctoral fellowship 0625692Z), and the University of Michigan (Cardiovascular Center McKay Grant Award). Dr. Morgenstern has served on a one-time advisory board for Genentech Inc. and serves on a medical adjudication board for Wyeth; receives research support from AGA Medical Corporation, the NIH/NINDS (R01 NS38916 [PI], R01 NS050372 [coinvestigator], U01 NS052510 [coinvestigator], U01 NS056975 [coinvestigator], U54 NS057405 [coinvestigator], and R01 NS062675 [PI]); and has served as a research consultant for the Alaska Native Medical Center. Dr. Sánchez receives research support from the NIH (R01 ES007821 [coinvestigator], R21 DA024273 [coinvestigator], U54NS057405 [coinvestigator], R01 ES016932 [coinvestigator], R01 ES017022 [coinvestigator], R01 NS38916 [coinvestigator], and R01 NS062675 [coinvestigator]). Dr. Resnicow serves on the editorial board of Health Psychology and receives research support from the NIH (P50 CA 101451 [PI], RO1 HL085420 [coinvestigator], RO1 DK064695 [coinvestigator], R01 HL068971 [coinvestigator], R34 MH 079123 [coinvestigator], R01 HL085400 [PI], R01 HL089491 [coinvestigator], and R21 CA134961 [PI]) and the Centers for Disease Control (U48 DP0005 [coinvestigator]). Dr. White receives research support from the NIH (K23 AG032875 [PI], R01 HLO094553 [PI], and R21 HL094975) and the Greenwall Foundation Faculty Scholars Program in Bioethics. Dr. Hemphill has received stock and stock options for serving on scientific advisory boards of Innercool Therapies and Ornim; has received funding for travel from Novo Nordisk; has served as a consultant for Novo Nordisk, UCB, and Medivance; serves on the speakers' bureau of the Network for Continuing Medical Education; has received research support from Novo Nordisk, the NIH (NINDS K23 NS41240 [PI] and U10 NS058931 [PI]), and the University of California; and has performed case reviews and given expert testimony regarding stroke or neurocritical care.

Supplementary Material

Address correspondence and reprint requests to Dr. Darin B. Zahuranec, University of Michigan Cardiovascular Center, 1500 East Medical Center Drive, SPC#5855, Ann Arbor, MI 48109-5855; zdarin@umich.edu

Supplemental data at www.neurology.org

e-Pub ahead of print on July 7, 2010, at www.neurology.org.

Study funding: Supported by the NIH (RO1 NS38916 and K23 NS41240) and the American Heart Association (postdoctoral fellowship 0625692Z).

Disclosure: Author disclosures are provided at the end of the article.

Received December 10, 2009. Accepted in final form May 7, 2010.

REFERENCES

1. Ariesen MJ, Algra A, van der Worp HB, Rinkel GJ. Applicability and relevance of models that predict short term outcome after intracerebral haemorrhage. J Neurol Neurosurg Psychiatry 2005;76:839–844.

2. Broderick JP, Brott TG, Duldner JE, Tomsick T, Huster G. Volume of intracerebral hemorrhage: a powerful and easy-to-use predictor of 30-day mortality. Stroke 1993;24:987–993.

3. Hemphill JC 3rd, Bonovich DC, Besmertis L, Manley GT, Johnston SC. The ICH Score: a simple, reliable grading scale for intracerebral hemorrhage. Stroke 2001;32:891–897.

4. Ruiz-Sandoval JL, Chiquete E, Romero-Vargas S, Padilla-Martinez JJ, Gonzalez-Cornejo S. Grading scale for prediction of outcome in primary intracerebral hemorrhages. Stroke 2007;38:1641–1644.

5. Tuhrim S, Horowitz DR, Sacher M, Godbold JH. Validation and comparison of models predicting survival following intracerebral hemorrhage. Crit Care Med 1995;23:950–954.

6. Clarke JL, Johnston SC, Farrant M, Bernstein R, Tong D, Hemphill JC 3rd. External validation of the ICH Score. Neurocrit Care 2004;1:53–60.

7. Hallevi H, Dar NS, Barreto AD, et al. The IVH score: a novel tool for estimating intraventricular hemorrhage volume: clinical and research implications. Crit Care Med 2009;37:969–974.

8. Weimar C, Ziegler A, Sacco RL, Diener HC, Konig IR. Predicting recovery after intracerebral hemorrhage–an external validation in patients from controlled clinical trials. J Neurol 2009;256:464–469.

9. Hemphill JC 3rd, Newman J, Zhao S, Johnston SC. Hospital usage of early do-not-resuscitate orders and outcome after intracerebral hemorrhage. Stroke 2004;35:1130–1134.

10. Zahuranec DB, Brown DL, Lisabeth LD, et al. Early care limitations independently predict mortality after intracerebral hemorrhage. Neurology 2007;68:1651–1657.

11. Shepardson LB, Youngner SJ, Speroff T, Rosenthal GE. Increased risk of death in patients with do-not-resuscitate orders. Med Care 1999;37:727–737.

12. Sulmasy DP. Do patients die because they have DNR orders, or do they have DNR orders because they are going to die? Med Care 1999;37:719–721.

13. Morgenstern LB, Smith MA, Lisabeth LD, et al. Excess stroke in Mexican Americans compared with non-Hispanic Whites: the Brain Attack Surveillance in Corpus Christi Project. Am J Epidemiol 2004;160:376–383.

14. Hemphill JC 3rd, Farrant M, Neill TA Jr. Prospective validation of the ICH Score for 12-month functional outcome. Neurology 2009;73:1088–1094.

15. Zahuranec DB, Gonzales NR, Brown DL, et al. Presentation of intracerebral haemorrhage in a community. J Neurol Neurosurg Psychiatry 2006;77:340–344.

16. Kothari RU, Brott T, Broderick JP, et al. The ABCs of measuring intracerebral hemorrhage volumes. Stroke 1996;27:1304–1305.

17. Cheung RT, Zou LY. Use of the original, modified, or new intracerebral hemorrhage score to predict mortality and morbidity after intracerebral hemorrhage. Stroke 2003;34:1717–1722.

18. Godoy DA, Pinero G, Di Napoli M. Predicting mortality in spontaneous intracerebral hemorrhage: can modification to original score improve the prediction? Stroke 2006;37:1038–1044.

19. Weimar C, Roth M, Willig V, Kostopoulos P, Benemann J, Diener HC. Development and validation of a prognostic model to predict recovery following intracerebral hemorrhage. J Neurol 2006;253:788–793.

20. el Chami B, Milan C, Giroud M, Sautreaux JL, Faivre J. Intracerebral hemorrhage survival: French register data. Neurol Res 2000;22:791–796.

21. Mase G, Zorzon M, Biasutti E, Tasca G, Vitrani B, Cazzato G. Immediate prognosis of primary intracerebral hemorrhage using an easy model for the prediction of survival. Acta Neurol Scand 1995;91:306–309.

22. Qureshi AI, Safdar K, Weil J, et al. Predictors of early deterioration and mortality in black Americans with spontaneous intracerebral hemorrhage. Stroke 1995;26:1764–1767.

23. Nilsson OG, Lindgren A, Brandt L, Saveland H. Prediction of death in patients with primary intracerebral hemorrhage: a prospective study of a defined population. J Neurosurg 2002;97:531–536.

24. Cuzick J. A Wilcoxon-type test for trend. Stat Med 1985;4:87–90.

25. Hosmer DW, Lemeshow S. Applied Logistic Regression. New York: Wiley; 1989.

26. Harrell FE Jr, Lee KL, Mark DB. Multivariable prognostic models: issues in developing models, evaluating assumptions and adequacy, and measuring and reducing errors. Stat Med 1996;15:361–387.

27. Matchett SC, Castaldo J, Wasser TE, Baker K, Mathiesen C, Rodgers J. Predicting mortality after intracerebral hemorrhage: comparison of scoring systems and influence of withdrawal of care. J Stroke Cerebrovasc Dis 2006;15:144–150.

28. Cook NR. Use and misuse of the receiver operating characteristic curve in risk prediction. Circulation 2007;115:928–935.

29. Evans LR, Boyd EA, Malvar G, et al. Surrogate decision-makers' perspectives on discussing prognosis in the face of uncertainty. Am J Respir Crit Care Med 2009;179:48–53.
