Abstract

Failure to selectively attend to a facial feature, in the part-to-whole paradigm, has been taken as evidence of holistic perception in a large body of face perception literature. In this paper we demonstrate that although failure of selective attention is a necessary property of holistic perception, its presence alone is not sufficient to conclude holistic processing has occurred. One must also consider the cognitive properties that are a natural part of information-processing systems, namely, mental architecture (serial, parallel), a stopping rule (self-terminating, exhaustive), and process dependency. We demonstrate that an analytic model (nonholistic) based on a parallel mental architecture and a self-terminating stopping rule can predict failure of selective attention. The new insights in our approach are based on the systems factorial technology, which provides a rigorous means of identifying the holistic/analytic distinction. The main goal of the study was to compare potential changes in architecture when two second-order relational facial features are manipulated across different face contexts. Supported by simulation data, we suggest that the critical concept for modeling holistic perception is the interactive dependency between features. We argue that without conducting tests for architecture, stopping rule, and dependency, apparent holism could be confounded with analytic perception. This research adds to the list of converging operations for distinguishing between analytic and holistic forms of face perception.

Keywords: Holistic, analytic, face perception, information processing, systems factorial technology, selective attention

Face perception is so important in our daily routines. But have you ever thought that your life can depend on it? In one case of false identification, a victim mistakenly identified an innocent person as a rapist (What Jennifer Saw, 2009). In this case, the face of a wrongly accused person, among other cues, served as the basis for making the wrong conviction. To understand when and why we make mistakes, we need to examine how we perceive faces—how the information coming from different face parts (eyes, nose, lips, etc.) is combined into a single perceptual experience of a single face. In the perception literature two hypotheses have been dominant. The holistic hypothesis assumes that objects (and faces) are perceived as whole entities and not as a sum of independent features.1 The analytic (or feature-based) hypothesis, in contrast, assumes that perception of an object's details is important in everyday life and suggests that perception is conducted on individual features that make up an object.

In the current scientific literature the question is no longer whether faces are exclusively processed as holistic units or as independent facial features. There is vast evidence supporting both hypotheses. The real question is how to tell when one or the other process is in play. What is the basis of holistic face perception that led the rape victim to make her error?

One major approach to distinguishing holistic and analytic face perception has been based on the experimental method dubbed the part-to-whole paradigm. This approach explores whether it is possible to attend selectively to a facial feature (a “part”) under different face contexts (the “whole”; Farah, Tanaka, & Drain, 1995; Farah, Wilson, Drain, & Tanaka, 1998; Tanaka & Farah, 1991, 1993; Tanaka & Sengco, 1997). For example, a task could be to recognize Joe's eyes embedded in different contexts: Joe's face, Bob's face, or in isolation. (Joe's face would be considered an old-face context and Bob's face a new-face context.) If recognition of Joe's eyes is not affected by the change of face context, then selective attention to Joe's eyes has succeeded. In this case face perception has relied on feature-based properties and is not affected by a face's wholeness/configuration. According to the part-to-whole view, facial features are stored in memory separately. Due to very efficient feature selectivity, all attention is diverted to Joe's eyes while all other facial features are ignored.

From a holistic perspective, facial features are stored as a unit representation and not as separate representations. In this case, selective attention to Joe's eyes should fail and recognition accuracy of Joe's eyes should decrease under different face contexts (e.g., when Joe's eyes are in Bob's face). The wholeness of a face, or its configural completeness, prevents accurate feature recognition.

The results of many studies that employed the part-to-whole paradigm showed that selective attention to isolated face parts can, in fact, fail. In other words, face parts were recognized better in their old-face context than when they were in a new-face context, in isolation (Davidoff & Donnelly, 1990; Donnelly & Davidoff, 1999; Leder & Bruce, 2000; Tanaka & Farah, 1993; Tanaka & Sengco, 1997), or in a face with scrambled facial features (Homa, Haver, & Schwartz, 1976; Mermelstein, Banks, & Prinzmetal, 1979). Also recognition of prelearned face parts was hindered by facial context, even if this context was irrelevant for recognition (Leder & Carbon, 2005). These results, by and large, support holistic perception and indicate a rather small role for analytic face perception (Farah et al., 1995, 1998; Tanaka & Farah, 1991, 1993; Tanaka & Sengco, 1997).

In general, the failure of selective attention exhibited in the part-to-whole paradigm has provided an important clue to understanding the holistic properties of face perception, but what we can learn from this failure is limited. We claim that although the failure of selective attention is a necessary component of holistic perception, in itself it is not sufficient to explain it. The part-to-whole paradigm and its focus on the failure of selective attention ignores the cognitive properties that are a natural part of information-processing systems.

In the next section we will briefly review the fundamental cognitive properties of these systems and what role they play in holistic and analytic perception. We will then demonstrate why it is necessary to explore information-processing systems to distinguish holistic from analytic perception successfully.

The Fundamental Properties of Information-Processing Systems

The theory of information-processing systems aims to explain the structure and organization of the mental processes underlying cognition and decision making (e.g., Townsend, 1984; Townsend & Wenger, 2004a). In face perception, for instance, it is largely unknown how information coming from separate feature units is combined into a single final decision. In what order are features processed? Processing can be serial, parallel, or coactive, corresponding to three types of mental architecture. In a serial architecture features are processed in a sequential manner, one after another. In a parallel architecture, all features are processed simultaneously. A coactive architecture pools the features into a single perceptual unit, prior to a single overall decision.

When do we stop searching for the most informative features? This is known as a stopping rule. In the case of self-terminating processing, search can terminate on a single feature. In the case of exhaustive processing all features must be processed.

Another important property of information-processing systems is the dependency between processes. An independent feature-processing system means that during face recognition, feature recognition processes do not corroborate each other. In a dependent feature-processing system, feature recognition processes can either help each other and make the overall decision more efficient or compete and make the overall decision less efficient. These are known as facilitatory and inhibitory dependent systems, respectively.

These three properties—mental architecture, stopping rule, and dependency—can be combined to produce different information-processing models, but none of them have been examined in combination with a part-to-whole paradigm.

Analytic and Holistic Perception as Information-Processing Systems

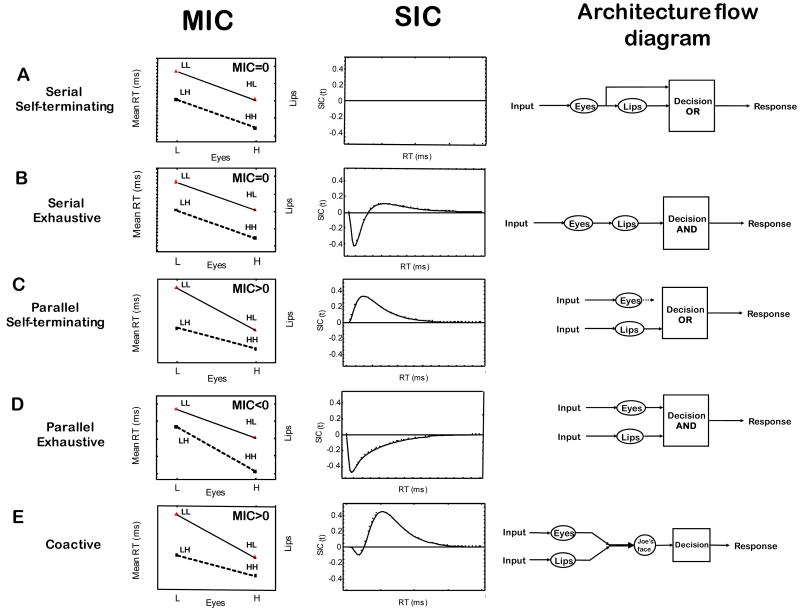

In Figure 1 (right column) we show the schematics of several information-processing systems that include serial, parallel, and coactive architectures. As can be seen, both serial and parallel architectures utilize feature-based face representations.2 Each feature is processed separately, and the outcome is sent to a decision box (OR or AND). The decision box defines the stopping rule mechanism: Processing could terminate on the completion of a single process (OR) or on the completion of all processes (AND). In sum, the models A to D in Figure 1 are considered to be different variants of information-processing models that promote analytic face perception.

Figure 1.

Mean interaction contrast (MIC; left column) and survivor interaction contrast (SIC; middle column) across different architectures and stopping rules (rows A–E), and diagrams of the major types of architecture flow (right column). For illustration purposes the analysis is based on processing of two facial features (eyes and lips). RT: Response time. HH, HL, LH, and LL: The first letter denotes the saliency level (L for low, H for high) of the eye separation, and the second letter denotes the saliency level of the lip position.

Here it can be argued that parallel processing and an exhaustive stopping rule are better suited to holistic perception than to analytic perception. Although this may be true, both parallel processing and an exhaustive stopping rule can be performed in a very analytic fashion and thus cannot support the strong holistic hypothesis when considered in isolation.

The coactive architecture, model E in Figure 1, combines a parallel architecture, exhaustive stopping rule, and feature dependency (Townsend & Wenger, 2004b; Wenger & Townsend, 2001). The coactively processed unit (Figure 1E, “Joe's face”) corresponds to a template-like mental representation of a face. In the coactive system, the results of simultaneous processing of all features are combined into one single unit. It is assumed that these processes act on each other in a facilitatory way (dependent feature processing). Thus, the third fundamental property of information-processing systems, dependency, is the defining property of coactive processing and can be used to distinguish between holistic and analytic perception.

To stress the importance of testing for the presence of mental architecture, stopping rule, and dependency, we will present as an example a case in which processing is strictly analytic but produces a “holistic” effect of failure of selective attention. A detailed analysis of the predictions of each information-processing model displayed in Figure 1 will not be presented in this paper. Rather, we will focus on a version of a parallel self-terminating model that can be easily identified using the advanced response time technology that we will describe later.

Information-Processing Systems and the Failure of Selective Attention

In the part-to-whole paradigm, failure of selective attention is taken to imply holistic perception. Successful selective attention is taken to imply analytic perception. This logic is challenged when mental architecture, stopping rule, and process dependency are taken into account. These three fundamental properties of information-processing systems provide a richer playground for holistic/analytic assessment.

The intriguing question is which of the models, if any, presented in Figure 1 (except the coactive model) can predict failure of selective attention and at the same time possess analytic perceptual properties. It is easy to demonstrate that an analytic model based on a parallel mental architecture and a self-terminating stopping rule (model C, Figure 1), colloquially known as the “horse-race model” (e.g., Eidels, Townsend, & Algom, in press; LaBerge, 1962; Marley & Colonius, 1992; Pike, 1973; Townsend & Ashby, 1983; Van Zandt, Colonius, & Proctor, 2000; Vickers, 1970), can predict failure of selective attention, thus suggesting holistic perception is at work. The horse-race model is feature based (analytic) because it assumes that features are stored as independent memory representations and are processed independently, as well. The model is parallel and self-terminating because it assumes that all stored features are processed simultaneously, and the processing terminates on recognition of any feature. The “horse” that arrives first wins the race—that is, the first facial feature to be recognized triggers the response.

For example, consider the task of recognizing Joe's eyes when embedded in Joe's face or Bob's face, or when presented in isolation. The novel idea of the horse-race model is that all facial features (including both Joe's and Bob's) are stored as noisy memory representations, and during the task all of these features race to be recognized. The first-to-be-recognized feature is used to make an overall decision. According to this model, from trial to trial a different facial feature might arrive first to be recognized. Sometimes Bob's nose could be recognized first, instead of the target Joe's eyes. In this case the overall decision (“This was not Joe's face; it was Bob's face”) would be incorrect. The model can thus predict failure of selective attention to a single facial feature under different face contexts: Both the new-face (i.e., Bob's face) and isolation contexts reduce the recognition accuracy of Joe's eyes.
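The race described above can be illustrated with a minimal simulation sketch. It is not the model fitted in this paper: the exponential finishing times, the equal channel rates, the three-feature face, and the function names `race_winner` and `eyes_accuracy` are all assumptions introduced only for illustration, and the isolation context is not modeled. Each feature of the probe face races independently, and the response is the identity signaled by whichever feature finishes first.

```python
import random


def race_winner(rates, rng):
    """Return the index of the feature channel that finishes first.

    Finishing times are drawn from exponential distributions, an
    assumption made only for this sketch.
    """
    times = [rng.expovariate(rate) for rate in rates]
    return times.index(min(times))


def eyes_accuracy(owners, rates, n_trials=20000, seed=1):
    """Proportion of trials on which the response is 'Joe'.

    owners[i] names the stored face that feature i belongs to. The target
    (Joe's eyes) is feature 0. The response is the identity signaled by
    the first-finishing feature, so it is correct only when a Joe feature
    wins the race.
    """
    rng = random.Random(seed)
    correct = 0
    for _ in range(n_trials):
        winner = race_winner(rates, rng)
        if owners[winner] == "Joe":
            correct += 1
    return correct / n_trials


# Hypothetical equal processing rates for eyes, nose, and mouth.
rates = [1.0, 1.0, 1.0]

old_face = eyes_accuracy(["Joe", "Joe", "Joe"], rates)  # Joe's eyes in Joe's face
new_face = eyes_accuracy(["Joe", "Bob", "Bob"], rates)  # Joe's eyes in Bob's face

print(f"old-face context: {old_face:.3f}")
print(f"new-face context: {new_face:.3f}")
```

Under these assumptions accuracy is perfect in the old-face context and drops to roughly one third in the new-face context, even though every channel is independent and feature based. The drop reflects only which channel wins the race, not any holistic representation.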

Properly diagnosing the fundamental properties of information-processing systems is essential to distinguishing between holistic and analytic face perception. Significant work has been done on developing appropriate diagnostic tests. One approach is termed systems factorial technology (SFT).

Using Systems Factorial Technology (SFT) to Identify Fundamental Face-Processing Characteristics

SFT is a suite of methodologies that permits the assessment of a set of critical properties of an information-processing system, all within the same basic paradigm. SFT springs from generalizations of Sternberg's (1969) additive factors method and Schweickert's latent network theory (e.g., Schweickert, 1978; Schweickert & Townsend, 1989; Townsend, 1984; Townsend & Ashby, 1983). Our current approach is perhaps best captured by the Townsend and Nozawa (1995) double factorial paradigm. SFT has been successfully employed in a wide variety of cognitive tasks (e.g., Egeth & Dagenbach, 1991; Eidels, Townsend, & Pomerantz, 2008; Fific, 2006; Fific, Nosofsky & Little, in press; Fific, Nosofsky, & Townsend, 2008; Fific, Townsend, & Eidels, 2008; Ingvalson & Wenger, 2005; Johnson, Blaha, Houpt, & Townsend, 2009; Sung, 2008; Townsend & Fific, 2004; Wenger & Townsend, 2001, 2006).

In SFT tests, two statistics allow for assessment of the fundamental properties of cognitive systems: the signatures of a mean interaction contrast (MIC) and of a survivor interaction contrast (SIC) function. Both statistics allow for delineation between serial, parallel, and coactive architectures employing different stopping rules (see Figure 1, left and middle columns, for the shapes of the MIC and SIC predicted by each model). For tutorials on SFT, the reader is referred to several recent publications (e.g., Fific, Nosofsky, et al., 2008; Fific, Townsend, et al., 2008; Townsend & Fific, 2004; Townsend, Fific, & Neufeld, 2007).

SFT: Methodology, Measures, and Tests

Suppose that our aim is to explore information-processing properties of a simple task involving detection of two facial features to learn whether the two processes are organized in serial, parallel, or coactive fashion. Also assume that, via experimental control, we can manipulate these two processes such that we can turn them off or on (absent/present), or manipulate the number of processes in a task (load). The question is whether these manipulations are sufficient to infer processing order and stopping rule. The answer is negative. Decades of research on this problem have indicated that we will readily confuse parallel and serial processing orders (e.g., Townsend, 1969, 1971, 1972; Townsend & Ashby, 1983). To infer the correct underlying mental architecture we need an additional manipulation.

The solution advocated by SFT is that instead of manipulating load and switching off/on, a particular process should be speeded up or slowed down, in a factorial manner. Let us define that the first factor is detection of the eyes and the second factor detection of the lips. Let us also manipulate each process's level of saliency, which allows for speeding up or slowing down of a particular process. (In what follows, H indicates a fast process, or high saliency and L a slow process, or low saliency.) Then the factorial combination of two factors with their two saliency levels leads to four experimental conditions, HH, HL, LH, and LL—the so-called double factorial design (2 × 2, as employed in an analysis of variance). For example, HL indicates a condition where the first factor (eyes) is of high salience and the second factor (lips) is of low salience. It is important to note that using this design, the different mental architectures will exhibit different data patterns of mean reaction times and, more importantly, their corresponding survivor functions, which brings us to the two main statistical tests used in SFT.

Mean Interaction Contrast (MIC)

MIC is based on the premise of an interactive analysis of variance (ANOVA) test and is a key computation in the additive factors method that Sternberg made popular (1969; see also Schweickert, 1978; Schweickert & Townsend, 1989):

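$$\mathrm{MIC} = (\overline{RT}_{LL} - \overline{RT}_{LH}) - (\overline{RT}_{HL} - \overline{RT}_{HH}) = \overline{RT}_{LL} - \overline{RT}_{LH} - \overline{RT}_{HL} + \overline{RT}_{HH} \tag{1}$$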

where RT is response time. This statistic is obtained by the double difference of mean RTs associated with each level of separate experimental factors (in this case, 2 × 2 factorial conditions). So, for example, mean $RT_{HL}$ indicates mean response time for the condition where the first factor (eyes) is of high salience and the second factor (lips) is of low salience. The left column of Figure 1 shows typical patterns of MIC tests that are expected for different mental architectures combined with different stopping rules.
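As a minimal sketch of this double difference, the snippet below computes the MIC from per-condition response times, using the form MIC = (mean RT_LL − mean RT_LH) − (mean RT_HL − mean RT_HH). The response times and the function name `mean_interaction_contrast` are hypothetical and serve only to make the arithmetic concrete.

```python
from statistics import mean


def mean_interaction_contrast(rts):
    """MIC = (mean RT_LL - mean RT_LH) - (mean RT_HL - mean RT_HH).

    rts maps each condition label ('HH', 'HL', 'LH', 'LL') to a list of
    response times. The first letter is the eye-separation saliency and
    the second letter is the lip-position saliency.
    """
    m = {cond: mean(values) for cond, values in rts.items()}
    return (m["LL"] - m["LH"]) - (m["HL"] - m["HH"])


# Hypothetical response times in milliseconds (not data from this study).
rts = {
    "HH": [400, 420, 410],  # mean 410
    "HL": [450, 470, 460],  # mean 460
    "LH": [455, 465, 460],  # mean 460
    "LL": [560, 580, 570],  # mean 570
}

mic = mean_interaction_contrast(rts)
print(mic)  # (570 - 460) - (460 - 410) = 60
```

In this made-up example the MIC is positive (60 ms), that is, the pattern of mean RTs is overadditive.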

The pattern of “additivity” is reflected by an MIC value of 0. In an ANOVA, additivity is indicated by an absence of interaction between factors, thus implying that the effects of individual factors simply “add” together. In Sternberg's (1969) additive factors method this finding supported serial processing, in which the total response time is the sum of individual times stemming from each factor. Likewise, “overadditivity” is reflected by MIC>0 (a positive MIC), and “underadditivity” is reflected by MIC<0 (a negative MIC). Formal proofs of the results expressed below are provided by Townsend (1984) for parallel and serial systems, and for a wide variety of stochastic mental networks by Schweickert and Townsend (1989). Townsend and Thomas (1994) showed the consequences of the failure of selective influence when channels (items, features, etc.) are correlated.

If processing is strictly serial, then regardless of whether a self-terminating or an exhaustive stopping rule is used, the MIC value will equal zero; that is, the pattern of mean RTs will show additivity. For instance, if processing is serial exhaustive, then the increase in mean RTs for LL trials relative to HH trials will simply be the result of the two individual processes slowing down, giving us the pattern of additivity illustrated in Figure 1B, left column. Parallel exhaustive processing results in a pattern of underadditivity of the mean RTs (MIC<0). Finally, both parallel self-terminating processing and coactive processing will lead to a pattern of overadditivity of the mean RTs (MIC>0), as illustrated in Figure 1C and E.
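These MIC predictions can be checked with a small Monte Carlo sketch. The version below assumes independent, exponentially distributed channel times with hypothetical means of 100 ms (high saliency) and 200 ms (low saliency), and a random processing order for the serial self-terminating model. The coactive model is not simulated, because it requires additional assumptions about how channel activations are pooled. All names are illustrative.

```python
import random

MEAN_MS = {"H": 100.0, "L": 200.0}  # hypothetical mean channel times


def simulate_rt(architecture, rule, eyes, lips, rng):
    """Simulate one response time for a given architecture and stopping rule."""
    a = rng.expovariate(1.0 / MEAN_MS[eyes])  # eye-separation channel
    b = rng.expovariate(1.0 / MEAN_MS[lips])  # lip-position channel
    if architecture == "serial":
        if rule == "exhaustive":
            return a + b  # both features processed, one after another
        # Self-terminating: stop after whichever feature is processed first;
        # the processing order is assumed to be random.
        return a if rng.random() < 0.5 else b
    if rule == "exhaustive":
        return max(a, b)  # parallel AND: wait for the slower channel
    return min(a, b)  # parallel OR: the faster channel triggers the response


def simulated_mic(architecture, rule, n=40000, seed=3):
    """Estimate the MIC from n simulated trials per factorial condition."""
    rng = random.Random(seed)
    m = {}
    for eyes in "HL":
        for lips in "HL":
            total = sum(
                simulate_rt(architecture, rule, eyes, lips, rng) for _ in range(n)
            )
            m[eyes + lips] = total / n
    return (m["LL"] - m["LH"]) - (m["HL"] - m["HH"])


results = {
    (arch, rule): simulated_mic(arch, rule)
    for arch in ("serial", "parallel")
    for rule in ("self-terminating", "exhaustive")
}
for (arch, rule), mic in results.items():
    print(f"{arch:8s} {rule:16s} MIC = {mic:7.2f} ms")
```

Under these assumptions the two serial models should yield MIC values near zero, the parallel self-terminating model a positive MIC, and the parallel exhaustive model a negative MIC, up to sampling noise.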

Although a helpful statistic, MIC has limited diagnostic properties. In the current study, a value of MIC>0 would not discriminate between coactive or parallel self-terminating processing. That is, a value of MIC>0 does not indicate whether holistic or analytic perception occurred. However, values of MIC=0 or MIC<0 would indicate an analytic form of perception. The limitations of MIC are overcome by the SIC test.

Survivor Interaction Contrast (SIC)

More diagnostic power is provided when RTs are examined at the full distribution level. The survivor function, S(t), for a random variable T (which in the present case corresponds to the time of processing) is defined as the probability that the process T takes greater than t time units to complete, S(t)=P(T>t). Note that for time-based random variables, when t=0 it is the case that S(t)=1, and as t approaches infinity it is the case that S(t) approaches 0. Slower processing is associated with greater values of the survivor function across the time domain.

In a manner analogous to calculating the mean RTs, one can compute the survivor functions associated with each of the four main types of facial feature combinations, which we denote $S_{LL}(t)$, $S_{LH}(t)$, $S_{HL}(t)$, and $S_{HH}(t)$. Because processing is presumably slower for low-saliency than for high-saliency facial features, the survivor function for LL targets will tend to be of the highest magnitude, the survivor functions for LH and HL targets will tend to be of intermediate magnitude, and the survivor function for HH targets will be of the lowest magnitude.

In a manner analogous to calculating the MIC, Townsend and Nozawa (1995) showed how to compute the SIC. Specifically, at each sampled value of t, one computes

$$\mathrm{SIC}(t) = [S_{LL}(t) - S_{LH}(t)] - [S_{HL}(t) - S_{HH}(t)] \tag{2}$$

As illustrated in Figure 1, middle column, the different processing architectures under consideration yield distinct predictions of the form of the SIC function. Moreover, the SIC signatures are invariant across different underlying distribution functions. This is because SFT is a nonparametric methodology, meaning that it does not depend on specific assumptions regarding the particular form of the statistical distribution (gamma, Weibull, ex-Gaussian, etc.). The SIC is closely related to the MIC, because the value of the MIC is simply the integral of the SIC. That is, the signed area under the SIC curve (the area of its positive portions minus the area of its negative portions) equals the MIC value (e.g., Townsend & Ashby, 1983).
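The relation between the SIC and the MIC can be illustrated numerically. The sketch below estimates each survivor function empirically, forms SIC(t) = [S_LL(t) − S_LH(t)] − [S_HL(t) − S_HH(t)] on a time grid, and compares the area under the curve with the MIC computed from the same data. The simulated response times (a parallel self-terminating race between two exponential channels), the 1 ms grid step, and the function names are assumptions made for illustration only.

```python
import random
from bisect import bisect_right
from statistics import mean


def survivor(sorted_rts, t):
    """Empirical survivor function S(t): proportion of RTs greater than t."""
    return 1.0 - bisect_right(sorted_rts, t) / len(sorted_rts)


def sic_curve(rts, grid):
    """SIC(t) = [S_LL(t) - S_LH(t)] - [S_HL(t) - S_HH(t)] at each grid point."""
    s = {cond: sorted(values) for cond, values in rts.items()}
    return [
        (survivor(s["LL"], t) - survivor(s["LH"], t))
        - (survivor(s["HL"], t) - survivor(s["HH"], t))
        for t in grid
    ]


# Hypothetical data: the faster of two independent exponential channels
# (mean 100 ms at high saliency, 200 ms at low saliency) gives the RT.
rng = random.Random(7)
mean_ms = {"H": 100.0, "L": 200.0}
rts = {
    eyes + lips: [
        min(rng.expovariate(1 / mean_ms[eyes]), rng.expovariate(1 / mean_ms[lips]))
        for _ in range(5000)
    ]
    for eyes in "HL"
    for lips in "HL"
}

dt = 1.0  # grid step in ms
t_max = max(max(values) for values in rts.values())
grid = [k * dt for k in range(int(t_max / dt) + 2)]  # covers every observed RT
sic = sic_curve(rts, grid)

m = {cond: mean(values) for cond, values in rts.items()}
mic = (m["LL"] - m["LH"]) - (m["HL"] - m["HH"])
area = sum(sic) * dt  # Riemann-sum approximation of the integral of the SIC

print(f"MIC = {mic:.2f} ms, area under SIC = {area:.2f} ms")
```

Because the integral of a survivor function of a nonnegative variable equals its mean, the two printed values should agree up to the discretization error of the grid.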

If processing is serial self-terminating, then the value of the SIC is equal to zero at all time values t (Figure 1A, middle column).3 For serial exhaustive processing, the SIC is negative at low values of t, but positive thereafter, with the total area spanned by the negative portion of the function equal to the total area spanned by the positive portion (Figure 1B). Note that for both serial self-terminating and serial exhaustive cases, the integral of the SIC function (i.e., the MIC) is equal to zero, which is a case of mean RT additivity.

If processing is parallel self-terminating, then the value of the SIC is positive at all values of t (Figure 1C). The integral of the SIC in this case is, of course, positive, corresponding to a pattern of mean RT overadditivity, MIC>0. By contrast, for parallel exhaustive processing, the SIC is negative at all values of t (Figure 1D). The negative integral corresponds to the pattern of mean RT underadditivity, MIC<0.
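For independent parallel channels these two signatures can be verified in closed form. In the sketch below each channel is assumed to have an exponential survivor function with a hypothetical mean of 100 ms (high saliency) or 200 ms (low saliency). The OR survivor function is the product of the channel survivor functions, and the AND survivor function is one minus the product of the channel distribution functions. Independence, the exponential form, and the function names are assumptions of this illustration.

```python
import math

RATE = {"H": 1 / 100.0, "L": 1 / 200.0}  # hypothetical channel rates per ms


def channel_survivor(level, t):
    """Survivor function of one exponential channel at saliency level."""
    return math.exp(-RATE[level] * t)


def s_parallel_or(eyes, lips, t):
    """Self-terminating: no response yet only if both channels are unfinished."""
    return channel_survivor(eyes, t) * channel_survivor(lips, t)


def s_parallel_and(eyes, lips, t):
    """Exhaustive: no response yet if at least one channel is unfinished."""
    finished_both = (1.0 - channel_survivor(eyes, t)) * (
        1.0 - channel_survivor(lips, t)
    )
    return 1.0 - finished_both


def sic(model_survivor, t):
    """SIC(t) = [S_LL(t) - S_LH(t)] - [S_HL(t) - S_HH(t)]."""
    return (model_survivor("L", "L", t) - model_survivor("L", "H", t)) - (
        model_survivor("H", "L", t) - model_survivor("H", "H", t)
    )


grid = [5.0 * k for k in range(1, 201)]  # 5 ms to 1000 ms
sic_or = [sic(s_parallel_or, t) for t in grid]
sic_and = [sic(s_parallel_and, t) for t in grid]

print("parallel self-terminating SIC positive everywhere:", all(v > 0 for v in sic_or))
print("parallel exhaustive SIC negative everywhere:", all(v < 0 for v in sic_and))
```

With identical channels, as assumed here, the SIC reduces algebraically to the squared difference between the low- and high-saliency channel survivor functions for the OR model and to its negative for the AND model, which is why the sign never changes.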

Finally, for the present research purposes, the most critical case involves the signature for coactive processing. In this case the SIC shows a small negative blip at early values of t but then shifts to being strongly positive thereafter (Figure 1E). The predicted pattern of MIC overadditivity is the same as for parallel self-terminating processing, but these two processing architectures are now sharply distinguished in terms of the form of their predicted SIC functions.

The SIC test can diagnose a diverse set of information-processing models (A–E, Figure 1) and thus can serve as a tool for distinguishing between holistic and analytic face perception. Although the SIC test is more diagnostic than MIC we will use both throughout the current study. We include MIC tests mainly for reasons of practicality: (a) MIC patterns allow for visual inspection of mean RTs accompanied by the standard error bars, and (b) MIC tests are directly linked to ANOVA tests for judging significance of SIC shapes. That is, additivity, overadditivity, and underadditivity are inferred by testing the interaction between factors of interest.

Systems Factorial Technology: Methodological Modifications

To apply SFT to the current part-to-whole paradigm we needed to modify the original task (e.g., Tanaka & Sengco, 1997). First, instead of recognition accuracy, SFT uses RT as a dependent variable. Analysis of RT provides a powerful means for identification of mental processes (e.g., Luce, 1986; Ratcliff, 1978; Thornton & Gilden, 2007; Townsend & Ashby, 1983; Townsend & Nozawa, 1995) that taps into decision making, mental architectures, stopping rules, and processing dependency.

Second, most previous implementations of SFT employed search tasks where one or more features or items form a target set embedded within a set of distractors (e.g., Fific, Townsend, et al., 2008; Innes-Ker, 2003; Townsend & Fific, 2004; Wenger & Townsend, 2001). One goal of the present study was to generalize the methodology to a categorization task in which subjects assign each stimulus pattern to a category group. Our present subjects learned to categorize a set of faces into one of two groups (e.g., Fific, Nosofsky, et al., 2008; Fific et al., in press). The categorization paradigm is a reasonable facsimile of the kind of ecological task that we all engage in every day, classifying faces and objects into multiple categories. The nature of the categorization task does not confound holistic with analytic perception. Both holistic and analytic perceptions are possible options here: In an analytic mode subjects could assess only one feature of interest and classify a face. In a holistic mode subjects may need to access all available features and then categorize the stimuli.

Third, first-order relational features are generally thought to refer to the spatial relationships of the individual features located at their canonical positions, such as eyes above nose, nose above mouth, and so on. Second-order relational features are usually defined by spatial displacement of facial features within their canonical face positions (Diamond & Carey, 1986), which are considered to be important in face perception (Haig, 1984; Hosie, Ellis, & Haig, 1988; Leder & Bruce, 1998, 2000; Leder & Carbon, 2004; Maurer, Le Grand, & Mondloch, 2002; Mondloch, Geldart, Maurer, & Le Grand, 2003; Rhodes, 1988; Searcy & Bartlett, 1996; Sergent, 1984). In this study we aimed to investigate second-order relational features that involve spatial relationships rather than, say, specific eyes or a particular nose. This goal is accomplished as a natural part of our categorization design. We manipulated the spatial position of two second-order relational features: eye separation and lip position.4

Fourth, we tested the role of a stopping rule (self-terminating vs. exhaustive) by designing two types of task, each employing a different logical rule (OR/AND). In the OR condition a decision could be made after processing either one of the two manipulated facial features. The stopping rule here is defined as self-terminating. Thus, the analytic strategy is viable because attending to a single facial feature provides sufficient information for correct categorization. Complete processing of all facial features was required in the AND condition. The associated stopping rule is defined as exhaustive, where the decision component waits for positive recognition of all facial features, thus perhaps facilitating a holistic strategy.

Fifth, the SFT tests are applicable at the individual subject level. With SFT we are able to deliver accurate tests for individual subjects without the potential pitfalls of data averaging across subjects (Ashby, Maddox, & Lee, 1994; Estes, 1956). Summary statistics, as are usually reported in research papers, tend to wipe out the individual differences. In some cases this could lead to incorrect conclusions (e.g., Regenwetter et al., 2009). In our previous study employing SFT using a short-term memory paradigm, we found significant differences between subjects adopting either serial or parallel processing strategies (Townsend & Fific, 2004), which would have been obscured if the data had been averaged.

Experimental Design and Hypotheses

Following up on the basic idea contained in the part-to-whole paradigm, we designed a task in which subjects had to categorize two facial features into two face categories. Similar to Tanaka and Sengco (1997), we explored whether it is possible to selectively attend to these two facial features when face context changes. Extending Tanaka and Sengco's logic to our present categorization task, we expected that categorization would be faster, with fewer errors, when the features were seen in a previously learned face than when they were seen in a new face context.

The novel approach in this study is that the current design and SFT allow us to assess the underlying mental architecture, stopping rule, and process dependency used in the task. We predicted that when forced by the manipulation of face context, subjects' processing architecture would switch from coactive or parallel to slow serial processing (Signatures E and B in Figure 1, respectively). In terms of the stopping rule, in the OR condition it was possible to correctly classify a face based on only one processed feature. The most plausible decision strategy in this condition, if the information-processing system utilizes only featural properties, should be based on a parallel self-terminating or serial self-terminating architecture. So, we expected to observe Signature A or C in Figure 1. In the case of holistic processing, which involves integrating facial features, we expected to observe the coactive architecture, Signature E in Figure 1.

In the AND condition, only a subset of the processing architectures discussed previously are plausible candidates for a classification strategy. In particular, it would be implausible to see evidence of any form of self-terminating processing in the present case. The candidate processing architectures correspond to serial exhaustive and parallel exhaustive, Signatures B and D in Figure 1, respectively. Because of the assumed holistic nature of face stimuli, however, our key hypothesis was that we would be able to observe evidence of coactive processing (Signature E in Figure 1) in this condition.

Method

Subjects

The subjects were 12 graduate and undergraduate students (7 females and 5 males) from Indiana University between the ages of 18 and 40 years. All subjects were paid for their participation.

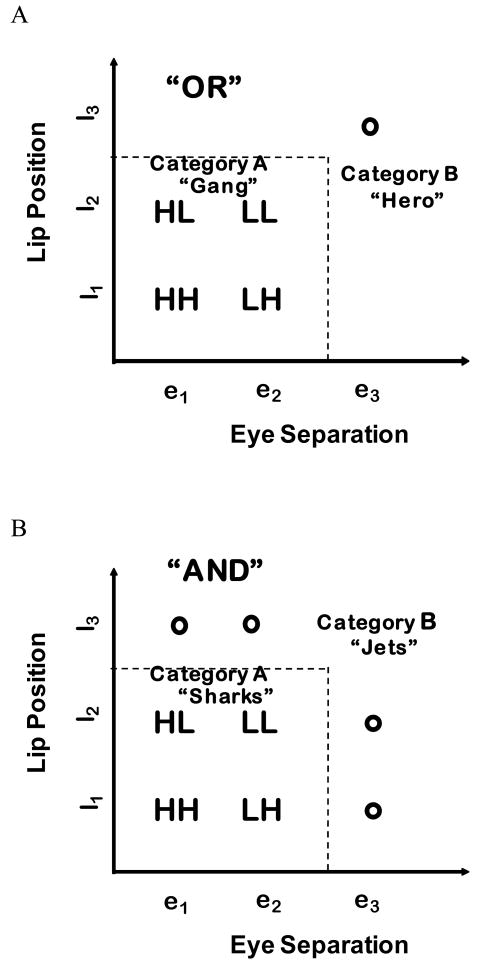

Stimuli

In a categorization task a face was presented on a computer monitor and the task was to classify it as belonging to one of two face categories. In the OR condition the categories were gang and hero; in the AND condition they were Jets and Sharks. All facial stimuli were formed in the two-dimensional face space, varying along eye separation and lip position saliency. In the OR condition, the gang category consisted of four faces that did not share any facial features with the one face that constituted the hero category (Figure 2A). In the AND condition, Sharks and Jets both consisted of four faces and shared some facial properties (Figure 2B).

Figure 2.

Two-dimensional face-space defined by eye separation and lip position, for the OR condition (A) and the AND condition (B). Factorial combination of the facial features and the salience levels produces four conditions: HH, HL, LH, and LL. The first letter denotes the saliency level (L for low, H for high) of the eye separation, and the second letter denotes the saliency level of the lip position. There are three values for the distance between the base of the nose and the upper lip, l1, l2, and l3 on the y-axis, and three values for the distance between eye centers, e1, e2, and e3 on the x-axis.

All faces were designed using the Faces 3 program (IQ Biometrix, Inc., 2006), which allows the distance between facial features to be changed. In Figure 2, the values of e1, e2, and e3 on the x-axis (eye separation) correspond to distances of 45, 50, and 55 pixels between eye centers. Values of l1, l2, and l3 on the y-axis (lip position) correspond to distances of 7, 11, and 13 pixels between the nose base and the upper lip. We used the same values, which were predetermined in a pilot study, for both experimental conditions (OR and AND). The size of an individual stimulus face was 135 × 185 pixels. Viewed from a distance of 100 cm, each stimulus subtended a visual angle of about 2.5–3°. A Pentium PC was used to run the study on a CRT monitor, with a display resolution of 1,024 × 768 pixels.
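The reported visual angle can be related to physical stimulus size through the standard relation θ = 2·arctan(s / 2d). The sketch below inverts that relation to show roughly what on-screen size 2.5–3° at 100 cm implies. The function names are illustrative, and because the monitor's physical pixel pitch is not reported, no pixel-to-centimeter conversion is attempted.

```python
import math


def visual_angle_deg(size_cm, distance_cm):
    """Visual angle (degrees) subtended by an object of a given size."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_for_angle_cm(angle_deg, distance_cm):
    """Physical size (cm) that subtends a given visual angle."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


# Reported viewing geometry: 100 cm distance, about 2.5-3 degrees.
for angle in (2.5, 3.0):
    size = size_for_angle_cm(angle, 100)
    print(f"{angle} deg at 100 cm corresponds to about {size:.2f} cm")
```

Under these assumptions the stimulus would span roughly 4.4 to 5.2 cm on the screen.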

The face context was manipulated to ascertain potential changes in mental architecture during categorization of the two second-order relational facial features (the test phase, see Table 1). Three face examples are displayed in Figure 3A–C. All three faces possess the same second-order relational separation of eyes and lips, but they represent different contexts (old face, new face, and features alone, respectively; see below).

Table 1. Study Design.

| Session | Context | Task | Purpose |
|---|---|---|---|
| **Learning phase** | | | |
| 1–12ᵃ | Old face | Categorization | Application of MIC and SIC |
| | New face | Complete identification | New-face context learning: preparation for Phase 2 |
| **Test phase** | | | |
| 1 | Old face | Categorization | Application of MIC and SIC |
| | New face | Complete identification | New-face context learning, continued |
| 2 | Old face, new face | Categorization | Application of MIC and SIC |
| | New face | Complete identification | New-face context learning, continued |
| 3 | Old face, features alone | Categorization | Application of MIC and SIC |
| | New face | Complete identification | New-face context learning, continued |
| 4–12 | Latin square samples of Sessions 1–3 | | |

Note. MIC: mean interaction contrast; SIC: survivor interaction contrast.

ᵃ The OR condition had 12 sessions; the AND condition had more (around 16, depending on the subject).

Figure 3.

Examples of face contexts. The contexts in A, B, and C were used in a categorization task. The context in D was used in a complete identification task. Note that the eye separation and lip position are the same across all face stimuli in the figure, except in (D).

Design and Procedure

The experiment was divided into two phases, a learning phase and a test phase. We analyze and report results from the test phase only.

In both phases subjects received two types of tasks: categorization and complete identification. Our main focus is on categorization task performance, measured by RT and accuracy, which allows application of SFT tests and assessment of the mental architecture. The complete identification task served only as a control for a novelty effect.

Design of a single categorization task trial

The procedure used in the categorization task was the same in all face context conditions. RT was recorded from the onset of the stimulus display to the time of response. Each trial began with a central fixation point (crosshair) presented for 1,070 ms, followed by a high-pitched warning tone that lasted for 700 ms. Then a face was presented for 190 ms. The subjects' task was to decide to which of the two categories the face belonged and to indicate their choice by pressing the left or right mouse button. If the categorization was incorrect, subjects received an error message; no feedback was given after a correct classification. Trial presentation order was randomized within each session. Subjects were instructed to attempt to achieve high accuracy but to respond as quickly as possible within that constraint.

In all context conditions, only four stimulus conditions were subsequently analyzed using SIC and MIC tests. These are denoted HH, HL, LH, and LL in Figure 2A and B. Feature saliency is denoted as low (L) or high (H), depending on the distance, along one dimension, between the feature values of two faces that belong to different categories (A, B). For example, the distance between eye separation relations e1 and e3 is larger (denoted as high saliency) than the distance between e2 and e3 (denoted as low saliency). A low feature saliency prompts slower decisions if only that feature is used for categorization. A high feature saliency allows for faster decisions if only that feature is used for categorization.

Next, we describe the designs for the categorization task utilizing an old-face context in the learning phase and different face contexts in the test phase.

The learning phase

We used the following experimental design: Feature Configuration (eye separation, lip position) × Feature Saliency (high vs. low discriminability). The main goal of the learning phase was to train the subjects to become experts in the categorization of faces. Subjects were assigned to either the OR or the AND condition (between-subjects factor). Each subject ran multiple sessions as described in Table 1. The subjects in the OR condition participated in a total of 12 learning sessions. The AND subjects required a longer learning period and received around 16 sessions, until they reached a plateau of high classification accuracy (usually less than 10% errors).

Both the OR and AND subjects received detailed instructions concerning which face properties could be used to differentiate the two face categories of gang and hero (OR condition) or Sharks and Jets (AND condition). This constituted what we called the old-face context. The reason for illustrating the full stimulus set and for providing explicit instructions about the category structure was to speed classification learning and to make the data collection process more efficient, especially in the AND condition.

In the OR condition, the subjects were given the following instructions (see Figure 3A): “The differences between faces are based on eye separation and lip position relative to the nose. A hero's face appears to be more SPREAD OUT (eyes are separated the most, and lips are at their lowest position). Gang member faces appear to be more compact—eyes are closer and lips are higher (closer to the nose).”

In the AND condition, along with giving detailed instructions, we illustrated the stimulus categories from Figure 3B. The instructions emphasized that in Jets' faces one of the two facial features was spread out more, such that either the eyes were separated more or the lips were displayed lower relative to the nose.

The test phase

The goal of the test phase was to investigate how well people categorize faces based on two well-learned second-order relational features (eye separation and lip position) when they are displayed in different face contexts (old face, new face, and features alone; see Table 1 for the presentation order of contexts). In the test phase we used the following experimental design: Feature Saliency (high vs. low discriminability) × Feature Configuration (eye separation, lip position) × Face Context (old face, new face, and features alone). Subjects were assigned to the same logical rule condition (AND or OR) as in the learning phase. All other factors were within subject. Each face context was tested in a separate blocked session, which will be described in more detail below. Each face context was used four times across 12 sessions. The order of presentation of a face context, for each subject, was counterbalanced using a Latin-square design. Thus the subjects participated in a total of 12 sessions in Phase 2 in either the OR or the AND condition.
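The counterbalancing described above can be illustrated with a short sketch. It builds a cyclic 3 × 3 Latin square over the three face contexts and strings four of its rows together, so that each context occurs four times across 12 sessions. The rotation rule and the function names are assumptions made for illustration; the exact sampling scheme used in the study is not specified beyond Table 1.

```python
from collections import Counter

CONTEXTS = ["old face", "new face", "features alone"]


def cyclic_latin_square(items):
    """Each row and each column contains every item exactly once."""
    n = len(items)
    return [[items[(row + col) % n] for col in range(n)] for row in range(n)]


def session_order(subject_index, n_blocks=4):
    """Concatenate Latin-square rows into a session sequence.

    Assumption: consecutive blocks of three sessions use successive
    rows, and the starting row is rotated by the subject index.
    """
    square = cyclic_latin_square(CONTEXTS)
    order = []
    for block in range(n_blocks):
        order.extend(square[(subject_index + block) % len(square)])
    return order


order = session_order(subject_index=0)
print(order)
print(Counter(order))  # every context appears four times
```

Rotating the starting row by subject spreads the possible orders across subjects while keeping the per-context session count fixed at four.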

The old-face context

In the categorization tasks subjects had to decide whether a displayed face belonged to one of the two categories: gang or hero in the OR condition, or Jets or Sharks in the AND condition. The old-face context appeared in both the learning and the test phases. The facial outline used in the old-face context is displayed in Figure 3A. The subjects performed a total of 600 trials. Each category was presented 300 times, with each stimulus face in the gang group, the Jets group, and the Sharks group being presented 75 times. The hero category consisted of only one face, presented 300 times.

The new-face context

In the new-face context condition the subjects received the following instructions: “After an incident that occurred between the gang and the hero, the members from both groups are hiding from the police. We are informed that they have disguised their faces in order not to be recognized. However ALL of them wear the same disguise. The disguise covers everything except the eyes and lips. Have in mind that lip position and eye separation are the same as before because the disguise does not cover them.” In the new-face context condition it was emphasized that the critical second-order relational information (eye separation and lip position), recognition of which was necessary and sufficient to generate a correct response, did not change. In one session, subjects received 400 trials of the old-face context and 200 of the new-face context, presented in random order. We used more faces from the old-face context than from the new-face context because pilot studies indicated that an approximately 2:1 ratio produced the most sizeable disruptive effects on holistic face processing. Because the experiment was long and relied on many RT observations, it was necessary to slow down learning of the new-face-context faces. Note that if these faces had been blocked rather than mixed with the old-face-context faces, subjects could have learned the new-face-context faces successfully. In that case the new-face-context faces would no longer appear as novel configurations but as another set of old-face-context faces. From the new-face context session we analyzed only faces with the new context. Of the 200 new-face trials, 100 faces belonged to the Jets, Sharks, or gang (25 per single face) and 100 to the hero.

The features-alone context

In the features-alone context condition the subjects were told a similar story to that in the old-face-context sessions except for the following: “In this session some of the faces presented will possess the eyes and lips only!” (as illustrated in Figure 3C). The design and procedure for this condition were identical to those for the new-face context: features-alone-context trials were mixed with the old-face-context trials, with the same 2:1 (old face:features alone) ratio as in the new-face context condition.
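The 2:1 mixing of old-face trials with test-context trials can be sketched as follows for the OR condition. The 25-per-face and 100-hero counts for the test context come from the text; the face labels are hypothetical, and the 1:1 category split within the 400 old-face trials is an assumption, since the text does not state it.

```python
import random
from collections import Counter


def build_mixed_session(test_context, seed=None):
    """Return a shuffled list of (context, face) trials for one session."""
    gang_faces = ["gang_1", "gang_2", "gang_3", "gang_4"]  # hypothetical labels
    trials = []
    # 200 test-context trials: 25 per gang face plus 100 hero trials.
    for face in gang_faces:
        trials += [(test_context, face)] * 25
    trials += [(test_context, "hero")] * 100
    # 400 old-face trials; the equal category split is an assumption.
    for face in gang_faces:
        trials += [("old face", face)] * 50
    trials += [("old face", "hero")] * 200
    # Randomize so that the two contexts are interleaved, not blocked.
    random.Random(seed).shuffle(trials)
    return trials


session = build_mixed_session("new face", seed=1)
print(Counter(context for context, _ in session))
```

Calling the same function with `"features alone"` would give the analogous session for the features-alone context.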

The complete identification task

The new-face context that was introduced in the learning phase was used later in the test phase (cf. Figure 3B and D). We presented four new faces that possessed the same face background in both the learning and test phases. The relation between eye and lip position was constant across all four faces. The eyes and lips used in this part did not appear in any other part of the study. In the complete identification task the subjects were instructed to learn the new group of four faces, each of which was given a name (John, Peter, Saul, and Eric). One face was presented on a monitor at a time, and the task was to learn to recognize individual faces. Subjects pressed one of four buttons (labeled 1–4) on a numerical pad. If identification was incorrect, they received an error message and were provided with the correct name of that face. Each face was presented in 75 trials, for a total of 300 trials.

The purpose of the complete identification task was to control for a novelty effect of the new-face context. Note that in the test phase, the new-face context was intended to disrupt holistic processes of identification. To avoid confounding the effect of novelty with the effect of disruption, it was important to allow subjects to learn this face context in the learning phase, prior to the critical test phase.

Results

Face-Context Effect: Basic Mean RT and Accuracy Analyses for the OR and AND Conditions

We first explore the effects of the type of face context—old face, new face, and features alone—on categorization performance. Preliminary analyses are necessary to establish a platform for more theoretically significant SFT tests. Given that all subjects exhibited a uniform pattern of results, we present the averaged results across subjects. We used a repeated measures ANOVA with a 2 × 2 × 3 design: Feature Saliency (high vs. low discriminability) × Feature Configuration (eye separation, lip position) × Face Context (old face, new face, and features alone), separately for the OR and AND conditions. Note that the old-face context trials were taken from the test phase.

Mean RT analyses

The main effects of eye separation (high, low) and lip position (high, low) saliency were significant for both the OR condition, F(1, 5) = 208.13, p < .01, ηp² = .98 and F(1, 5) = 36.76, p < .01, ηp² = .88, respectively, and the AND condition, F(1, 5) = 69.03, p < .01, ηp² = .93 and F(1, 5) = 62.55, p < .01, ηp² = .93, respectively. For both facial features, high saliency produced significantly faster responses than low saliency.

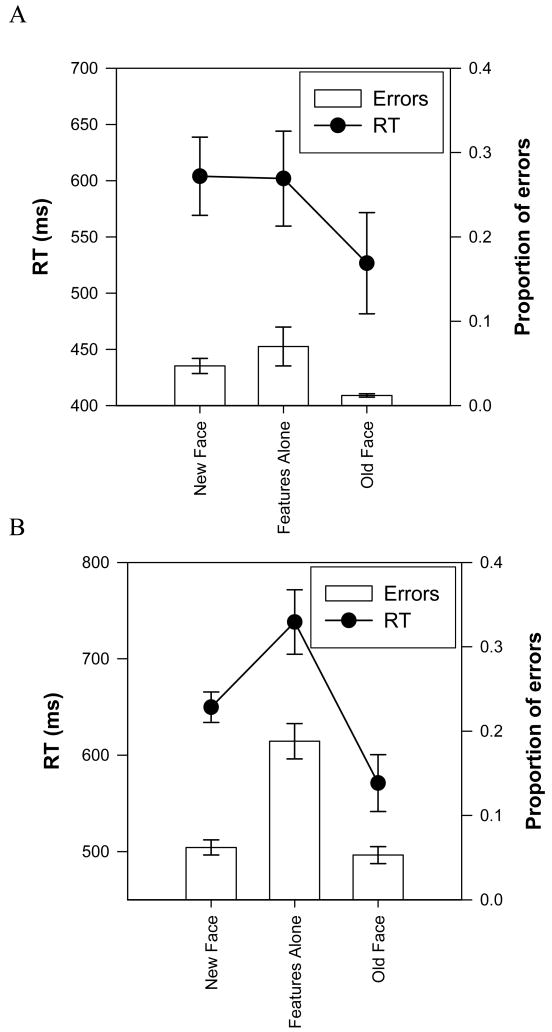

The main effect of face context was significant for both the OR condition, F(2, 10) = 7.12, p < .01, ηp² = .59, and the AND condition, F(2, 10) = 12.58, p < .01, ηp² = .72. The mean RT for each group, averaged over subjects, is presented in Figure 4A and B.
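As a consistency check on the reported effect sizes, partial eta squared can be recovered from an F ratio and its degrees of freedom with the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The sketch below applies it to the mean RT statistics reported above; the function name and row labels are illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error, reported partial eta squared)
reported = [
    ("OR, eye separation", 208.13, 1, 5, 0.98),
    ("OR, lip position", 36.76, 1, 5, 0.88),
    ("AND, eye separation", 69.03, 1, 5, 0.93),
    ("AND, lip position", 62.55, 1, 5, 0.93),
    ("OR, face context", 7.12, 2, 10, 0.59),
    ("AND, face context", 12.58, 2, 10, 0.72),
]

for label, f_value, df_effect, df_error, eta in reported:
    recomputed = round(partial_eta_squared(f_value, df_effect, df_error), 2)
    print(f"{label}: recomputed {recomputed:.2f}, reported {eta:.2f}")
```

Each recomputed value rounds to the reported one, so the F ratios and effect sizes in this subsection are mutually consistent.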

Figure 4.

Mean response times (RTs) for different face groups in the OR condition (A) and in the AND condition (B), averaged across all subjects. Error bars around the means indicate standard errors; bars indicate mean proportion of errors.

To test the differences between the mean RTs of the face contexts, we conducted pairwise comparisons using the post hoc least significant difference test. On average, the old-face context exhibited the fastest mean RTs (Figure 4A, B). The new-face and features-alone contexts produced significantly slower RTs than the old-face context. In the OR condition, RTnew − RTold = 77 ms, p < .05, SE = 27 ms, and RTfeatures alone − RTold = 75 ms, p < .05, SE = 19 ms; in the AND condition, RTnew − RTold = 79 ms, p < .05, SE = 27 ms, and RTfeatures alone − RTold = 167 ms, p < .05, SE = 38 ms. When the Bonferroni adjustment was applied, the RTnew − RTold difference did not reach significance at the p = .1 level in either the OR or the AND condition.

Accuracy analysis

Accuracy was measured as the proportion of incorrect categorization trials (errors) in a given condition. The main effects of eye separation (high, low) and lip position (high, low) saliency were significant (or marginally significant) for both the OR condition, F(1, 5) = 27.64, p < .01, ηp² = .85 and F(1, 5) = 35.51, p < .01, ηp² = .88, respectively, and the AND condition, F(1, 5) = 6.02, p = .058, ηp² = .55 and F(1, 5) = 603.57, p < .01, ηp² = .99, respectively. For both facial features, high saliency produced more accurate responses than low saliency.

The main effect of face context was marginally significant for the OR condition, F(2, 10) = 3.95, p = .054, ηp² = .44, and was significant for the AND condition, F(2, 10) = 56.6, p < .01, ηp² = .92. The mean error level for each group, averaged over subjects, is presented in Figure 4A and B.

To test the differences in mean accuracy between the face contexts, we conducted pairwise comparisons using the post hoc least significant difference test. The new-face and features-alone contexts produced less accurate responses than the old-face context. In the OR condition, P(errors)new − P(errors)old = .035, p < .05, SE = .009, and P(errors)features alone − P(errors)old = .058, p = .059, SE = .024; in the AND condition, P(errors)features alone − P(errors)old = .14, p < .01, SE = .013. In the AND condition, the accuracy difference between the old-face and new-face contexts did not reach significance (see Figure 4B), possibly due to ceiling effects.5

These categorization results replicate previously reported part-to-whole accuracy results (e.g., Tanaka & Sengco, 1997) at the latency response level. The results strikingly demonstrate the effect of superior processing of the facial features that were embedded in their original learned context, as opposed to when they were embedded in a new face context, or when the face context was removed.

Individual Subject Analysis

MIC and SIC results for the OR condition

For each individual subject, we conducted a two-way ANOVA on the RT data using eye separation saliency (high or low) and lip position saliency (high or low) as factors. As covariate variables we used session (1 to 4) and trial order (the order in which single trials appeared in each session). The covariates session and trial order were used to account for the variability coming from between-session and between-trial learning effects. The results of the ANOVAs are summarized in Tables 2 and 3. We report the main effects of eye separation and lip position saliency, each indicating whether second-order relational features can produce a perceptual effect and act as a feature. We also report the interaction between eye separation and lip position saliency, which serves as the test for significance of an MIC test, that is, whether the MIC score indicates underadditivity, additivity, or overadditivity.
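The MIC score tested by this interaction term is the double difference of the four factorial mean RTs, MIC = (RT_LL − RT_LH) − (RT_HL − RT_HH). The sketch below computes it from trial-level RTs; the RT values are invented for illustration only and are not data from the study.

```python
from statistics import mean


def mean_interaction_contrast(rts_by_condition):
    """MIC = (RT_LL - RT_LH) - (RT_HL - RT_HH), computed on condition means.

    Keys follow the paper's notation: first letter = eye separation
    saliency, second letter = lip position saliency (H = high, L = low).
    """
    m = {cond: mean(rts) for cond, rts in rts_by_condition.items()}
    return (m["LL"] - m["LH"]) - (m["HL"] - m["HH"])


# Hypothetical RTs in ms, for illustration only.
example_rts = {
    "HH": [560, 600],
    "HL": [600, 640],
    "LH": [580, 620],
    "LL": [680, 720],
}

mic = mean_interaction_contrast(example_rts)
label = "overadditive" if mic > 0 else "underadditive" if mic < 0 else "additive"
print(mic, label)
```

In practice the sign of the MIC is interpreted only together with the significance of the ANOVA interaction, as described above.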

Table 2. Summarized ANOVA Results for Three Face Context Conditions: The OR Condition.

| Subject | Effect | df (old) | F (old) | Sig. (old) | Partial η² (old) | Power (old) | df (new) | F (new) | Sig. (new) | Partial η² (new) | Power (new) | df (alone) | F (alone) | Sig. (alone) | Partial η² (alone) | Power (alone) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Eyes | 1 | 69.20 | .01 | 0.06 | 1.00 | 1 | 63.22 | .01 | 0.14 | 1.00 | 1 | 60.58 | .01 | 0.14 | 1.00 |
| | Lips | 1 | 73.90 | .01 | 0.06 | 1.00 | 1 | 38.96 | .01 | 0.09 | 1.00 | 1 | 0.80 | .37 | 0.00 | 0.15 |
| | Eyes × Lips | 1 | 32.63 | .01 | 0.03 | 1.00 | 1 | 25.55 | .01 | 0.06 | 1.00 | 1 | 0.50 | .48 | 0.00 | 0.11 |
| | Error | 1,167 | | | | | 379 | | | | | 382 | | | | |
| 2 | Eyes | 1 | 128.60 | .01 | 0.10 | 1.00 | 1 | 54.59 | .01 | 0.13 | 1.00 | 1 | 49.52 | .01 | 0.12 | 1.00 |
| | Lips | 1 | 93.35 | .01 | 0.07 | 1.00 | 1 | 14.54 | .01 | 0.04 | 0.97 | 1 | 1.00 | .32 | 0.00 | 0.17 |
| | Eyes × Lips | 1 | 43.20 | .01 | 0.04 | 1.00 | 1 | 3.02 | .08 | 0.01 | 0.41 | 1 | 0.09 | .76 | 0.00 | 0.06 |
| | Error | 1,180 | | | | | 381 | | | | | 374 | | | | |
| 3 | Eyes | 1 | 228.56 | .01 | 0.16 | 1.00 | 1 | 134.11 | .01 | 0.27 | 1.00 | 1 | 149.55 | .01 | 0.28 | 1.00 |
| | Lips | 1 | 153.23 | .01 | 0.12 | 1.00 | 1 | 125.64 | .01 | 0.26 | 1.00 | 1 | 14.32 | .01 | 0.04 | 0.97 |
| | Eyes × Lips | 1 | 86.50 | .01 | 0.07 | 1.00 | 1 | 72.11 | .01 | 0.17 | 1.00 | 1 | 5.97 | .02 | 0.02 | 0.68 |
| | Error | 1,172 | | | | | 358 | | | | | 385 | | | | |
| 4 | Eyes | 1 | 67.27 | .01 | 0.05 | 1.00 | 1 | 71.77 | .01 | 0.13 | 1.00 | 1 | 72.66 | .01 | 0.17 | 1.00 |
| | Lips | 1 | 19.67 | .01 | 0.02 | 0.99 | 1 | 0.45 | .50 | 0.00 | 0.10 | 1 | 12.09 | .01 | 0.03 | 0.93 |
| | Eyes × Lips | 1 | 6.47 | .01 | 0.01 | 0.72 | 1 | 1.44 | .23 | 0.00 | 0.22 | 1 | 14.17 | .00 | 0.04 | 0.96 |
| | Error | 1,178 | | | | | 466 | | | | | 365 | | | | |
| 5 | Eyes | 1 | 151.43 | .01 | 0.11 | 1.00 | 1 | 46.85 | .01 | 0.11 | 1.00 | 1 | 118.15 | .01 | 0.25 | 1.00 |
| | Lips | 1 | 51.72 | .01 | 0.04 | 1.00 | 1 | 69.82 | .01 | 0.16 | 1.00 | 1 | 1.47 | .23 | 0.00 | 0.23 |
| | Eyes × Lips | 1 | 23.59 | .01 | 0.02 | 1.00 | 1 | 14.04 | .01 | 0.04 | 0.96 | 1 | 0.01 | .94 | 0.00 | 0.05 |
| | Error | 1,181 | | | | | 368 | | | | | 365 | | | | |
| 6 | Eyes | 1 | 132.37 | .01 | 0.10 | 1.00 | 1 | 47.03 | .01 | 0.11 | 1.00 | 1 | 56.49 | .01 | 0.15 | 1.00 |
| | Lips | 1 | 91.73 | .01 | 0.07 | 1.00 | 1 | 6.49 | .01 | 0.02 | 0.72 | 1 | 17.24 | .01 | 0.05 | 0.99 |
| | Eyes × Lips | 1 | 61.12 | .01 | 0.05 | 1.00 | 1 | 4.78 | .03 | 0.01 | 0.59 | 1 | 4.10 | .04 | 0.01 | 0.52 |
| | Error | 1,182 | | | | | 373 | | | | | 333 | | | | |

Note. Column suffixes denote the face context: old = old-face context; new = new-face context; alone = feature-alone context. Sig. = significance; Power = observed power.

Table 3. Summarized ANOVA Results for Three Face Context Conditions: The AND Condition.

| Subject | Effect | df (old) | F (old) | Sig. (old) | Partial η² (old) | Power (old) | df (new) | F (new) | Sig. (new) | Partial η² (new) | Power (new) | df (alone) | F (alone) | Sig. (alone) | Partial η² (alone) | Power (alone) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 7 | Eyes | 1 | 60.78 | .01 | 0.05 | 1.00 | 1 | 11.59 | .01 | 0.03 | 0.92 | 1 | 0.83 | .36 | 0.00 | 0.15 |
| | Lips | 1 | 262.02 | .01 | 0.19 | 1.00 | 1 | 17.56 | .01 | 0.05 | 0.99 | 1 | 86.55 | .01 | 0.21 | 1.00 |
| | Eyes × Lips | 1 | 4.76 | .03 | 0.00 | 0.59 | 1 | 0.12 | .73 | 0.00 | 0.06 | 1 | 5.87 | .02 | 0.02 | 0.68 |
| | Error | 1,125 | | | | | 373 | | | | | 318 | | | | |
| 8 | Eyes | 1 | 111.53 | .01 | 0.09 | 1.00 | 1 | 17.64 | .01 | 0.05 | 0.99 | 1 | 16.22 | .01 | 0.05 | 0.98 |
| | Lips | 1 | 195.96 | .01 | 0.15 | 1.00 | 1 | 77.20 | .01 | 0.17 | 1.00 | 1 | 28.05 | .01 | 0.08 | 1.00 |
| | Eyes × Lips | 1 | 11.88 | .01 | 0.01 | 0.93 | 1 | 0.27 | .60 | 0.00 | 0.08 | 1 | 1.01 | .32 | 0.00 | 0.17 |
| | Error | 1,129 | | | | | 369 | | | | | 314 | | | | |
| 9 | Eyes | 1 | 93.95 | .01 | 0.08 | 1.00 | 1 | 9.24 | .01 | 0.03 | 0.86 | 1 | 19.08 | .01 | 0.05 | 0.99 |
| | Lips | 1 | 279.11 | .01 | 0.19 | 1.00 | 1 | 44.86 | .01 | 0.11 | 1.00 | 1 | 129.99 | .01 | 0.28 | 1.00 |
| | Eyes × Lips | 1 | 3.84 | .05 | 0.00 | 0.50 | 1 | 4.62 | .03 | 0.01 | 0.57 | 1 | 3.93 | .05 | 0.01 | 0.51 |
| | Error | 1,159 | | | | | 365 | | | | | 339 | | | | |
| 10 | Eyes | 1 | 96.22 | .01 | 0.08 | 1.00 | 1 | 23.91 | .01 | 0.06 | 1.00 | 1 | 6.01 | .02 | 0.02 | 0.69 |
| | Lips | 1 | 133.29 | .01 | 0.11 | 1.00 | 1 | 26.73 | .01 | 0.07 | 1.00 | 1 | 53.61 | .01 | 0.15 | 1.00 |
| | Eyes × Lips | 1 | 1.97 | .16 | 0.00 | 0.29 | 1 | 0.02 | .88 | 0.00 | 0.05 | 1 | 4.31 | .04 | 0.01 | 0.54 |
| | Error | 1,098 | | | | | 374 | | | | | 307 | | | | |
| 11 | Eyes | 1 | 58.35 | .01 | 0.05 | 1.00 | 1 | 10.16 | .01 | 0.03 | 0.89 | 1 | 18.74 | .01 | 0.05 | 0.99 |
| | Lips | 1 | 192.00 | .01 | 0.14 | 1.00 | 1 | 78.22 | .01 | 0.18 | 1.00 | 1 | 65.94 | .01 | 0.17 | 1.00 |
| | Eyes × Lips | 1 | 0.02 | .90 | 0.00 | 0.05 | 1 | 1.55 | .21 | 0.00 | 0.24 | 1 | 0.04 | .85 | 0.00 | 0.05 |
| | Error | 1,166 | | | | | 368 | | | | | 334 | | | | |
| 12 | Eyes | 1 | 66.96 | .01 | 0.06 | 1.00 | 1 | 66.57 | .01 | 0.16 | 1.00 | 1 | 19.81 | .01 | 0.07 | 0.99 |
| | Lips | 1 | 199.82 | .01 | 0.15 | 1.00 | 1 | 41.56 | .01 | 0.11 | 1.00 | 1 | 16.39 | .01 | 0.06 | 0.98 |
| | Eyes × Lips | 1 | 1.26 | .26 | 0.00 | 0.20 | 1 | 0.06 | .82 | 0.00 | 0.06 | 1 | 0.02 | .89 | 0.00 | 0.05 |
| | Error | 1,095 | | | | | 349 | | | | | 282 | | | | |

Note. Column suffixes denote the face context: old = old-face context; new = new-face context; alone = feature-alone context. Sig. = significance; Power = observed power.

The main effects of eye separation and lip position saliency were highly significant for most subjects across the different face context conditions (Table 2). The exceptions involved lip saliency, which did not reach significance for Subject 4 in the new-face context condition or for Subjects 1, 2, and 5 in the features-alone context condition. The interaction between eye separation and lip position saliency was significant for most subjects across the face context conditions; it was not significant for the subjects just mentioned in those same contexts, nor for Subject 2 in the new-face context condition (p = .08).

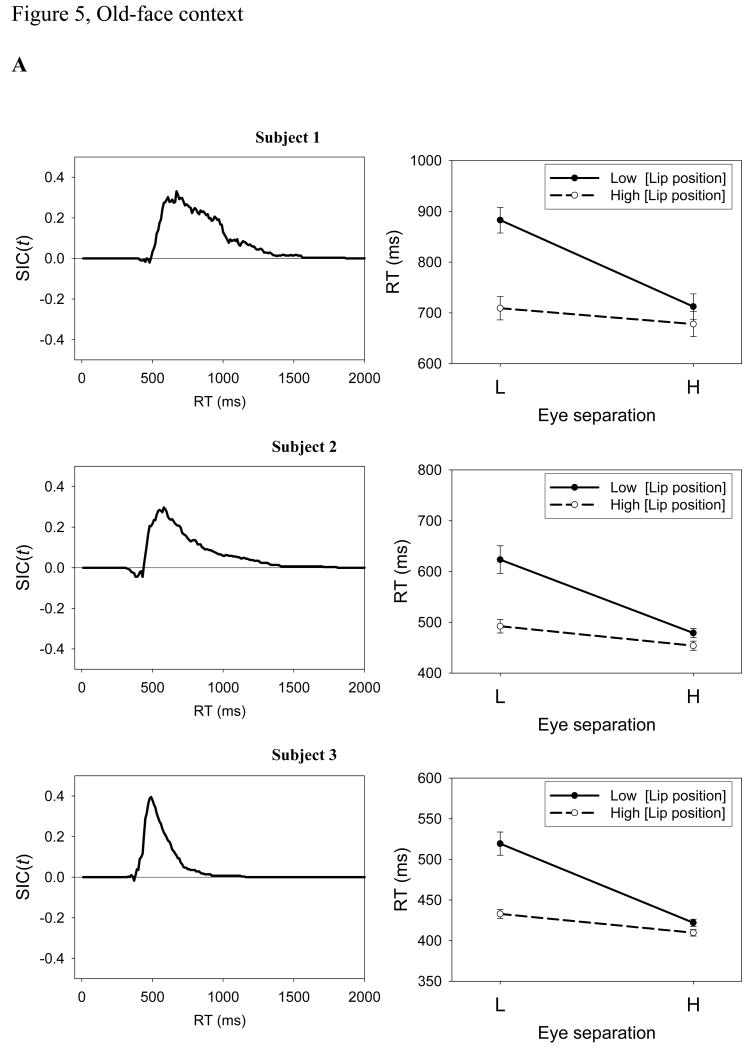

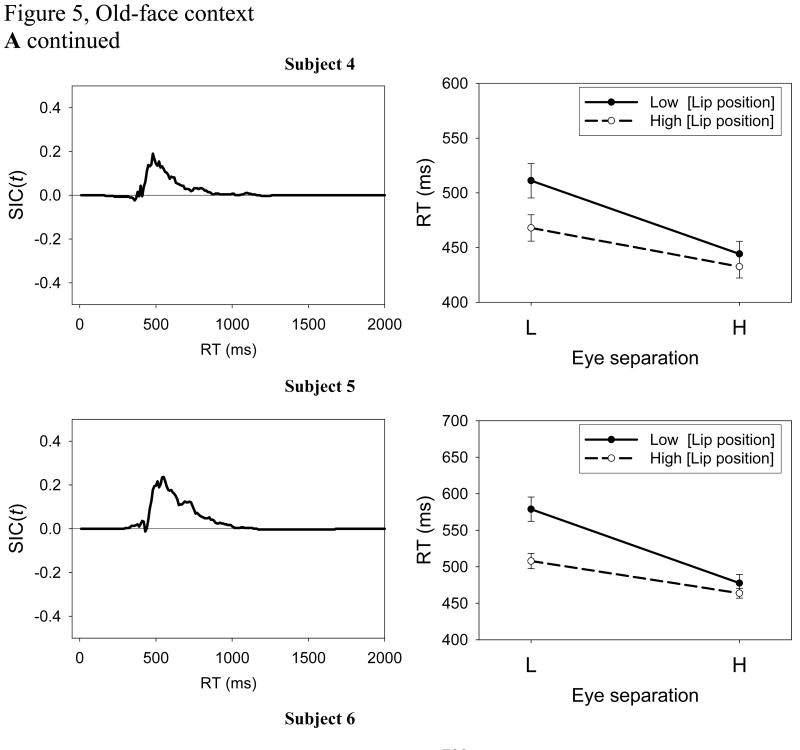

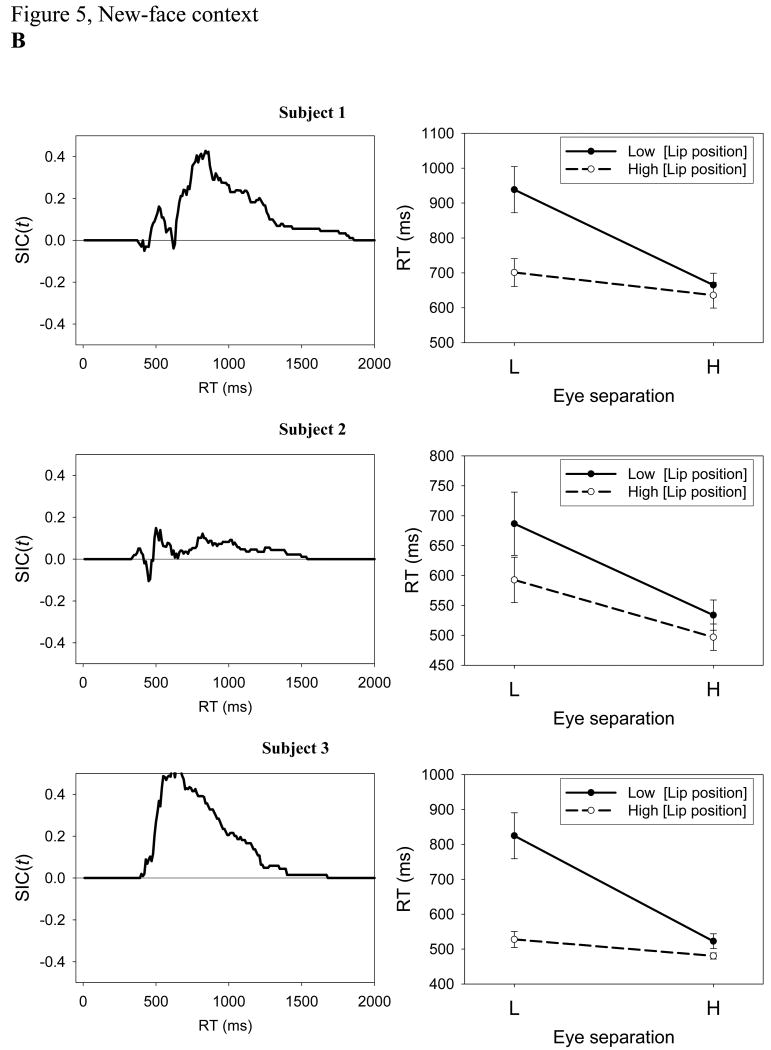

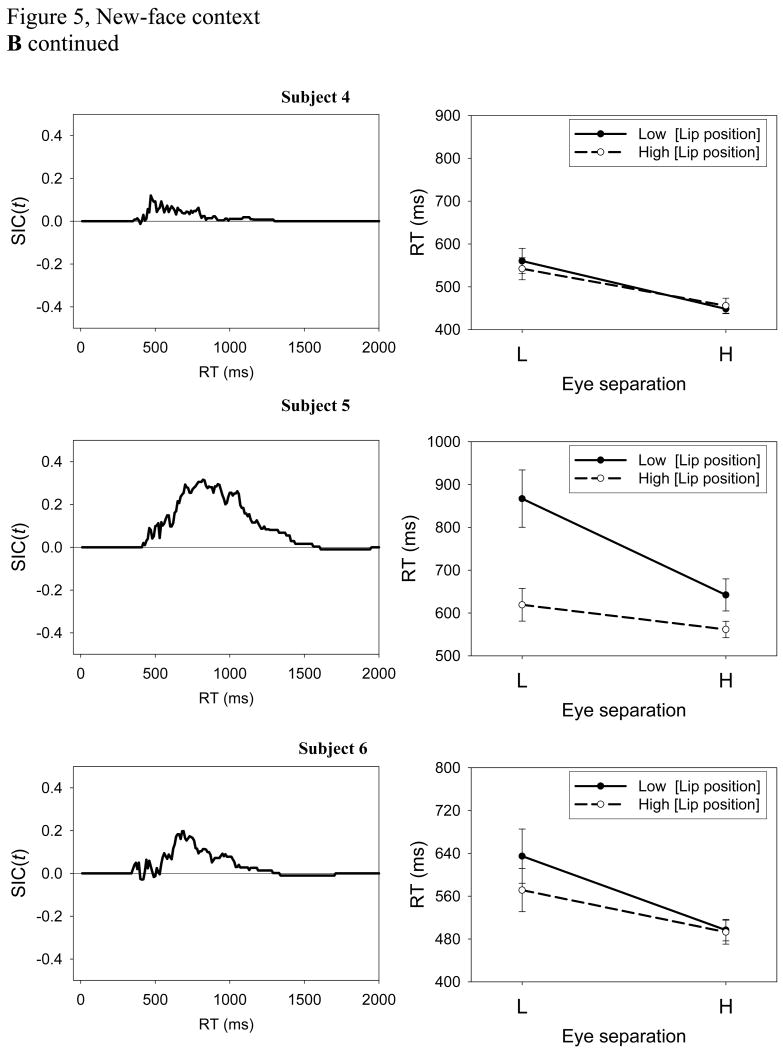

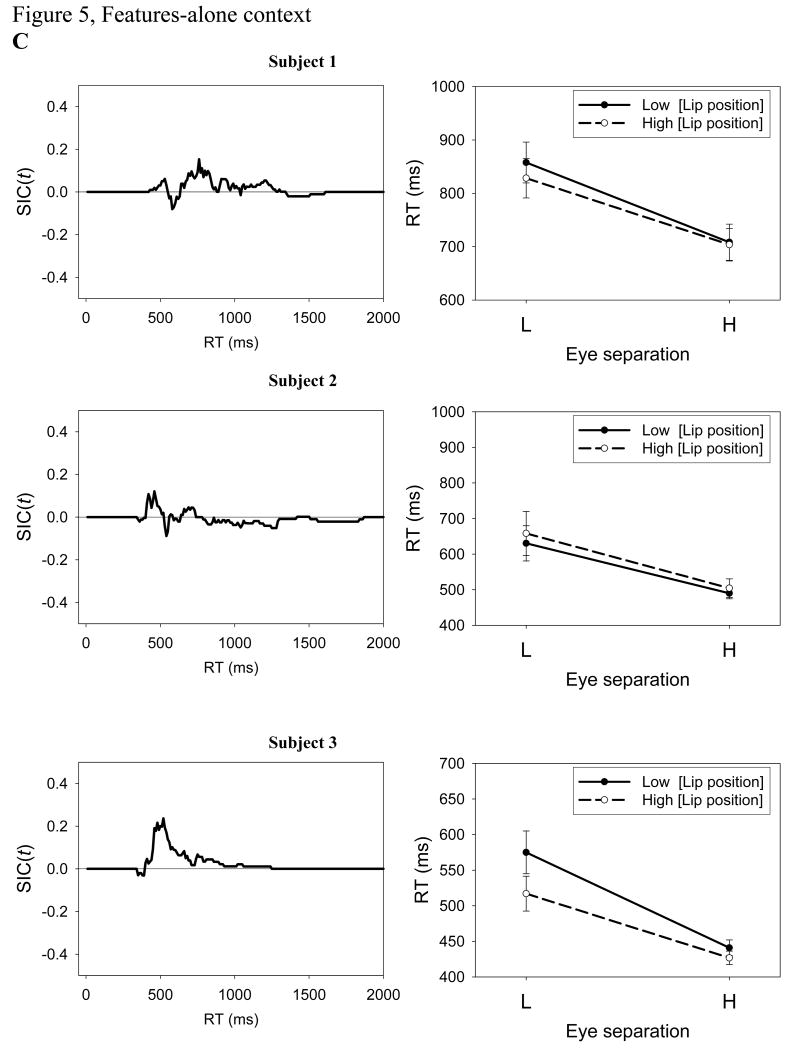

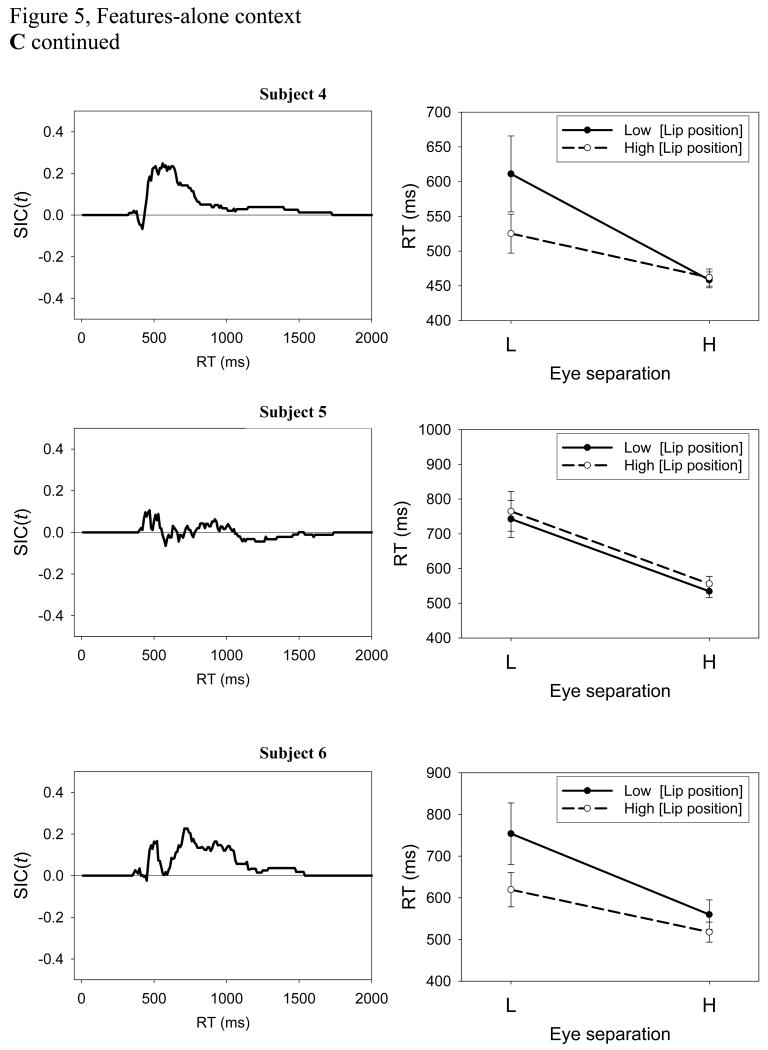

The critical question concerns the results for the MIC. As shown in Figure 5A–C, right column, the MIC values were positive for all subjects and for the different face contexts, revealing overadditivity, which is consistent with the assumption of parallel self-terminating processing. This was further confirmed by SIC tests.

Figure 5.

The systems factorial technology (SFT) test results for the OR condition for (A) the old-face context, (B) the new-face context, and (C) the features-alone context conditions for gang-member faces, across all subjects. The SIC function [SIC(t)] results are displayed in the left column and the MIC results in the right column. A dotted line connects points with high lip position saliency (H), and a solid line connects low saliency (L) points.

The more detailed SIC analyses indicated that the data were dominated by positive unimodal SIC functions (displayed in Figure 5A–C, left column). This is a strong indicator of a parallel self-terminating processing architecture (e.g., as in Figure 1C). In the old-face context condition (Figure 5A, left column) all subjects exhibited clear, positive SIC functions, with overadditive MIC values. Subjects 2 and 6 exhibited small negative deviations at the earliest times that could, in principle, be indicators of coactive processing. However, the departure is too small, and it most likely reflects random deviation due to noise in the distributions' tails rather than significant negativity. In any case we can infer that in the OR condition, old-context faces were processed by a parallel processing architecture, most probably terminating on the first completed face feature.
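The SIC function discussed here is the same double difference as the MIC, applied at each time point to the empirical survivor functions: SIC(t) = [S_LL(t) − S_LH(t)] − [S_HL(t) − S_HH(t)], where S(t) = P(RT > t). The sketch below is a minimal pure-Python illustration; the RT values are invented and the function names are not taken from the study.

```python
from bisect import bisect_right


def survivor(sorted_rts, t):
    """Empirical survivor function S(t) = proportion of RTs greater than t."""
    return 1.0 - bisect_right(sorted_rts, t) / len(sorted_rts)


def survivor_interaction_contrast(rts_by_condition, time_points):
    """SIC(t) = [S_LL(t) - S_LH(t)] - [S_HL(t) - S_HH(t)] at each time point."""
    s = {cond: sorted(rts) for cond, rts in rts_by_condition.items()}
    return [
        (survivor(s["LL"], t) - survivor(s["LH"], t))
        - (survivor(s["HL"], t) - survivor(s["HH"], t))
        for t in time_points
    ]


# Hypothetical RTs in ms, for illustration only.
toy_rts = {
    "HH": [300, 400],
    "HL": [400, 500],
    "LH": [400, 500],
    "LL": [500, 600],
}

print(survivor_interaction_contrast(toy_rts, [350, 450, 550]))
```

With real data the function would be evaluated on a fine time grid and plotted; a curve that stays positive corresponds to the unimodal positive signature described above.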

In the new-face context condition, we again observed mostly positive unimodal SIC functions (Figure 5B). Based on the comparison of the old-face to the new-face context, we conclude that the change of face context did not alter the processing architecture. Even though the face context in the old-face context condition was almost entirely replaced with a new one, the processing architecture involved in the detection of eye and lip configuration remained parallel, with a self-terminating stopping rule.

Strikingly, in the features-alone context condition, SIC analysis revealed a different picture for three of the subjects: Although Subjects 3, 4, and 6 exhibited the typical evidence for parallel self-terminating processing, Subjects 1, 2, and 5 revealed another kind of MIC and SIC function (Figure 5C). Detailed analysis conducted on these individual subjects revealed a nonsignificant main effect of lip position (low, high) in contrast to a significant main effect of eye separation (low, high). In fact, their SIC functions appear flat, which is usually the signature of self-terminating serial processing. A reasonable explanation for this behavior is that in the test phase, in the OR condition in which all features but lips and eyes are removed, the nose is not present to aid in perception of the lip position. So the default strategy could be to use only the eye separation and to ignore the lip position.

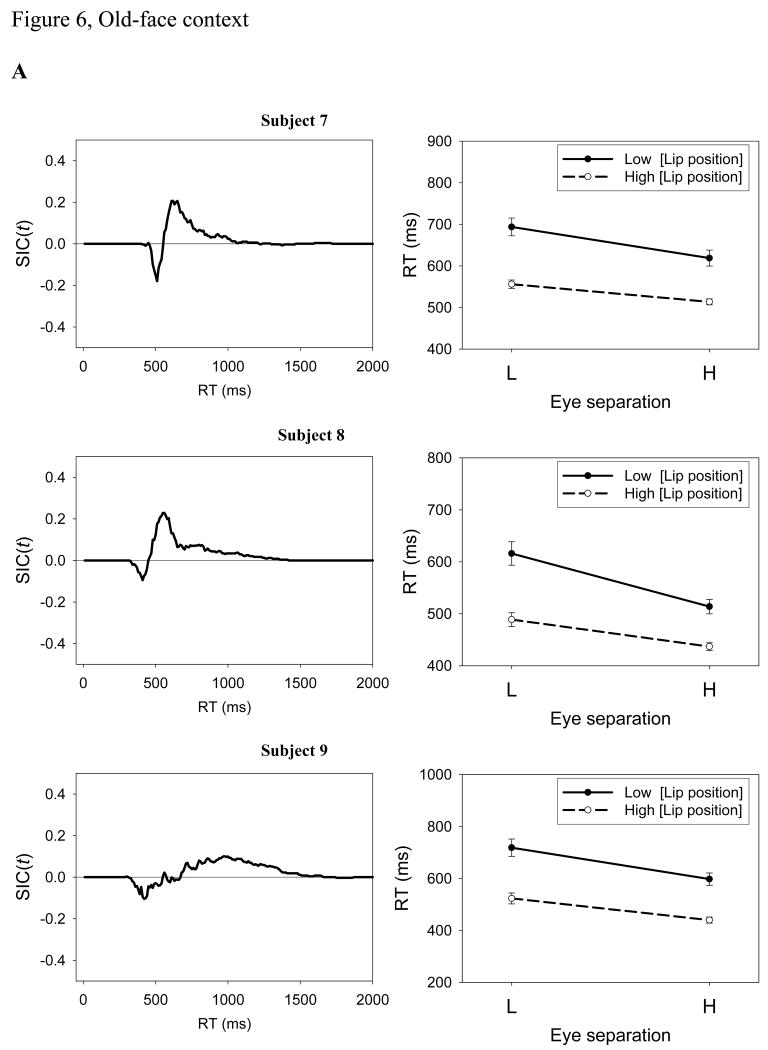

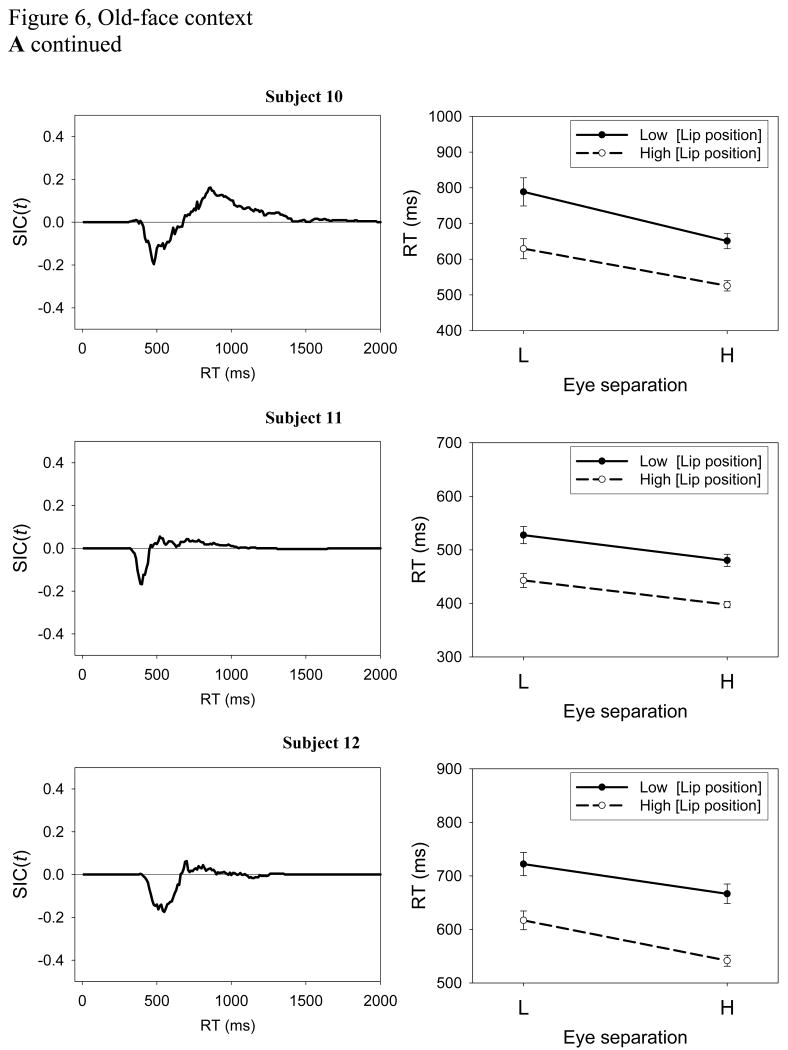

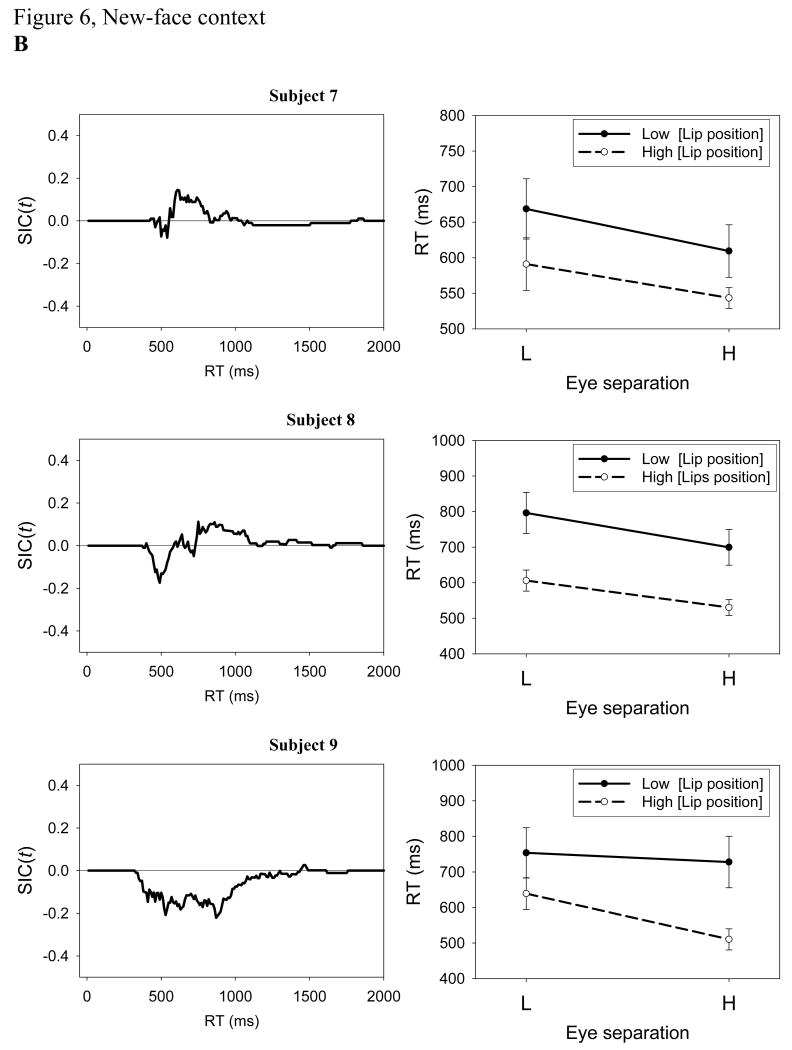

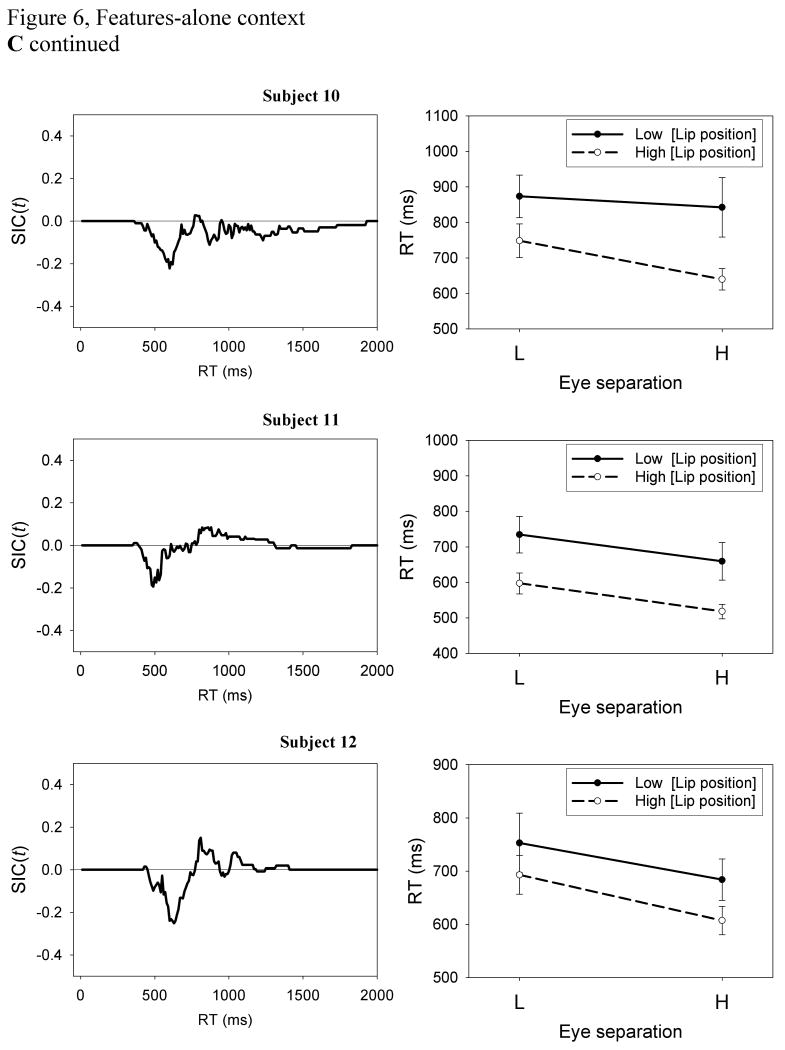

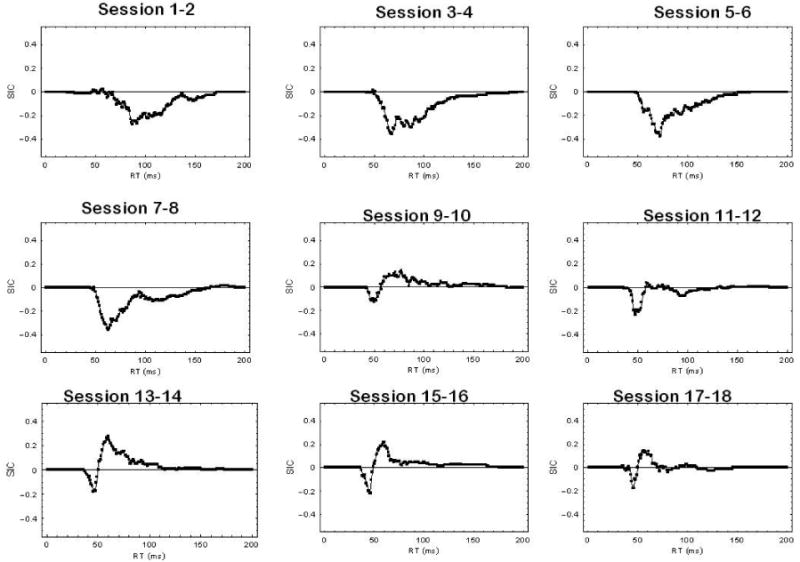

MIC and SIC results for the AND condition

The main effects of eye separation and lip position saliency were highly significant for all subjects across the different face context conditions (Table 3). The only exception to this was Subject 7 in the features-alone context condition, who exhibited nonsignificant eye separation saliency. The interaction between eye separation and lip position saliency was significant for Subjects 7, 8, and 9 in the old-face context condition. In the new-face context condition, only Subject 9 showed significant interaction between the two. In the features-alone context condition, Subjects 7, 9, and 10 showed significant interactions. All other subjects showed nonsignificant interaction, thus indicating additivity.

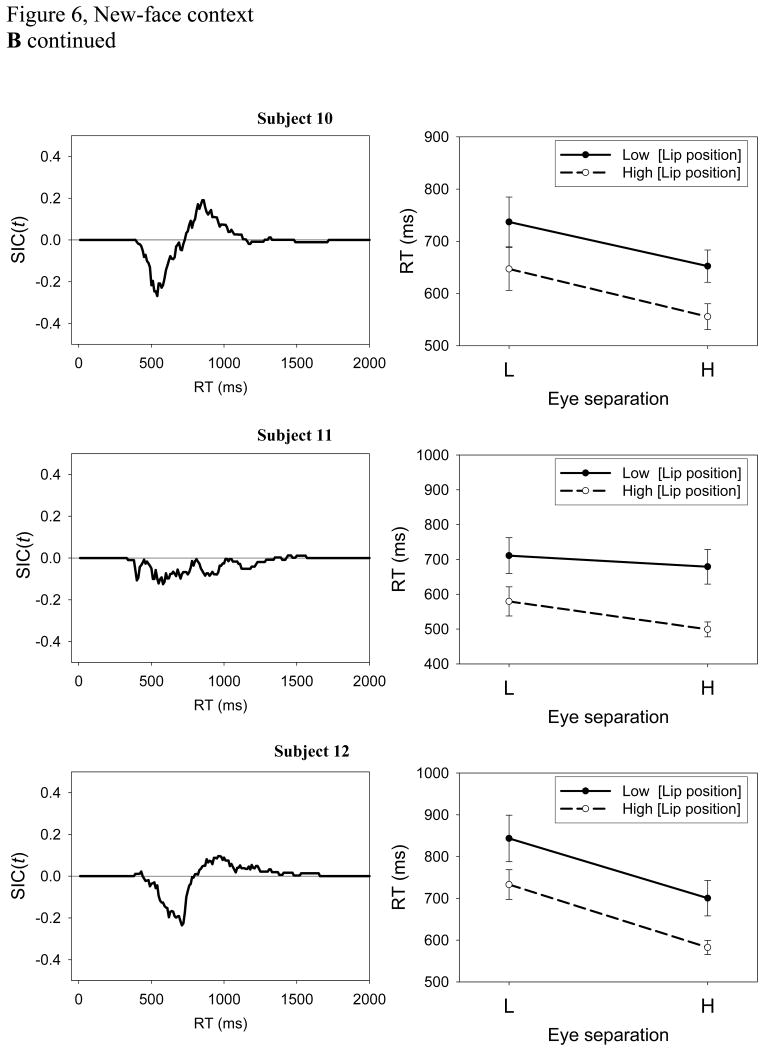

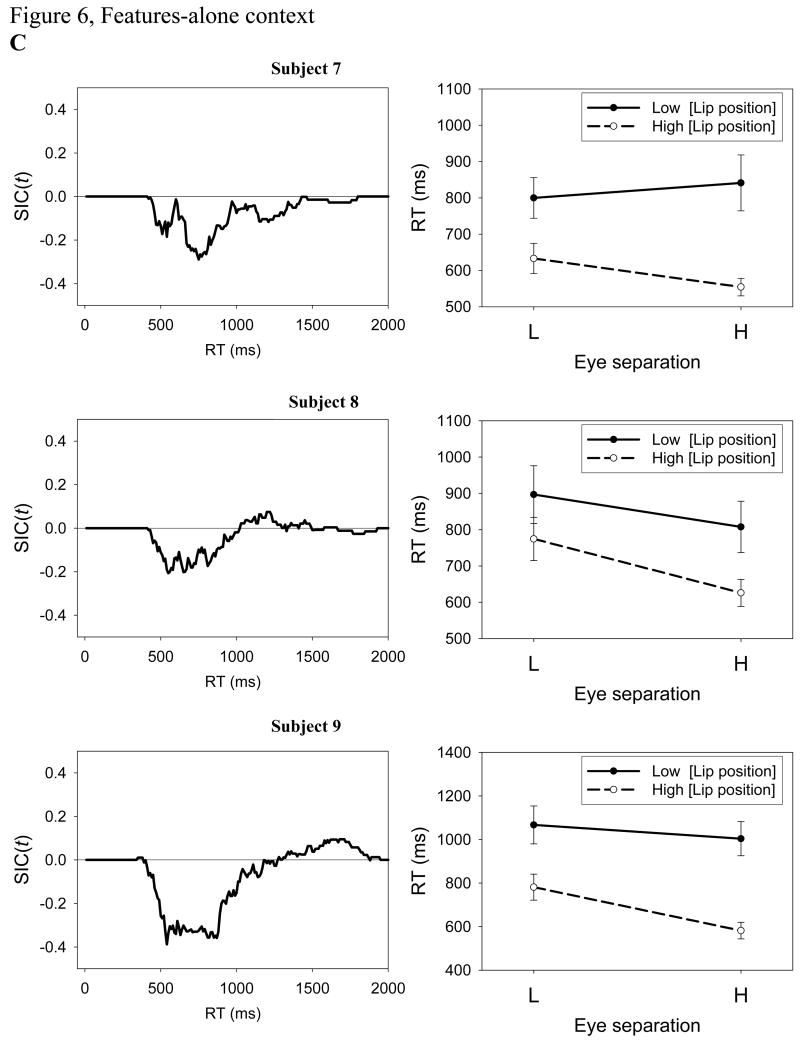

The MIC and SIC results for individual subjects are presented in Figure 6A–C for the old-face, new-face, and features-alone context conditions, respectively. Although different subjects across different face context conditions exhibited a variety of SIC function shapes, all observed SIC shapes were regular and interpretable within the framework of SFT. The only exception was Subject 7 in the features-alone context condition: This subject's mean RT for the HL condition is slightly slower than the mean RT for the LL condition, but even this difference does not exceed the calculated confidence intervals. The summary of identified mental architectures is presented in Table 4.

Figure 6.

The SFT test results for the AND condition for (A) the old-face context, (B) the new-face context, and (C) the features-alone context conditions for gang-member faces, across all subjects. The SIC function [SIC(t)] results are displayed in the left column and the MIC results in the right column. A dotted line connects points with high lip position saliency (H), and a solid line connects low saliency (L) points.

Table 4. The AND Condition: Inferred Mental Architectures Across Three Face Contexts, for Individual Subjects.

| Subject | Features-alone context | New-face context | Old-face context |
|---|---|---|---|
| 7 | Parallel | Serial | Coactive |
| 8 | Serial | Serial | Coactive |
| 9 | Parallel | Parallel | Coactive |
| 10 | Parallel | Serial | Serial |
| 11 | Serial | Serial/Parallel | Serial |
| 12 | Serial | Serial | Serial |

In Table 4 we display inferred mental architectures across individual subjects, for each face context. Each conclusion is obtained by evaluating both the shape of the observed SIC (Figure 6A–C) and the ANOVA interaction term Feature Saliency (high vs. low discriminability) × Feature Configuration (eye separation, lip position; Table 3), indicating statistical significance of an SIC shape.
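The MIC part of this inference can be written as a small decision rule for the AND condition, in which only exhaustive and coactive architectures are candidates: a nonsignificant interaction is read as additivity (serial exhaustive), a significant negative MIC as underadditivity (parallel exhaustive), and a significant positive MIC as overadditivity (coactive). The sketch below is a simplification under those assumptions. The function name and the alpha default are illustrative, and it does not replace inspection of the SIC shape.

```python
def infer_and_architecture(mic, interaction_p, alpha=0.05):
    """Map the MIC sign and interaction p value to a candidate architecture.

    Applies to the AND (exhaustive) condition only. Reported p values are
    rounded, so cases at the alpha boundary need the unrounded value.
    """
    if interaction_p >= alpha:
        # Nonsignificant interaction: treated as additivity by convention.
        return "serial exhaustive"
    if mic < 0:
        # Significant underadditivity.
        return "parallel exhaustive"
    # Significant overadditivity.
    return "coactive"


# Hypothetical MIC values (ms) paired with illustrative p values.
print(infer_and_architecture(mic=-12.0, interaction_p=0.26))
print(infer_and_architecture(mic=-25.0, interaction_p=0.02))
print(infer_and_architecture(mic=30.0, interaction_p=0.01))
```

The three calls return a serial exhaustive, a parallel exhaustive, and a coactive label, respectively.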

In the old-face context condition (Figure 6A) Subjects 7, 8, and 9 exhibited SIC coactive signatures, which, as noted earlier, are characterized by a small negative departure, followed by a larger positive region. Subjects 10, 11, and 12, on the other hand, exhibited an additive S-shaped SIC function, with the positive area approximately equal to the negative area, corresponding to a serial exhaustive signature. This result will be treated further in the Discussion. Although Subject 12 exhibited an SIC function that is mainly negative, along with a negative MIC, the statistical test for the interaction between eye separation and lip position saliency did not reach significance (p = .26). By convention, a nonsignificant interaction is treated as additivity; together with the recognizable S-shaped SIC function, this leads us to infer a serial exhaustive architecture.

In the new-face context condition (Figure 6B), four subjects exhibited MIC additivity and S-shaped SIC functions (Subjects 7, 8, 10, and 12), which is a signature of serial exhaustive processing. Subject 11 exhibited MIC additivity (p = .21) and a mainly negative SIC function. Only Subject 9 exhibited a purely negative SIC function accompanied by a significant interaction (Table 3), thus suggesting parallel exhaustive processing. Although Subject 7's SIC function bears similarity to a coactive signature (Subject 7, Figure 6B, left column), it is important to note that the corresponding MIC test for that function indicated additivity. Therefore, we cannot accept this SIC function as an indisputable indication of coactive processing.

In the features-alone context condition (Figure 6C), the observed SIC functions for three subjects were negative over time (which corresponds to observed underadditivity of the MIC scores; Figure 6C, right column). This is consistent with parallel exhaustive processing. Exceptions were the data of Subjects 8, 11, and 12, which exhibited MIC additivity and S-shaped SIC functions, the signature of serial exhaustive processing. In the AND condition, both main effects of eye separation (high, low) and lip position (high, low) saliency were significant, which contrasted with the features-alone context condition findings in the OR condition. This suggests that both manipulated facial features produced significant perceptual effects, despite the challenge in perceiving lip position in the features-alone context condition.

Overall, the SIC test results indicated the presence of coactivation in the old-face context condition, but not for an incongruent context. Subjects in the new-face context condition showed signatures of slow serial exhaustive processing. But surprisingly, the features-alone context condition indicated the presence of a parallel exhaustive architecture. If we think of parallel processing as generally being more efficient than serial processing, this seems odd given the overall slower mean RT for the features-alone context condition, compared to the new-face context condition's overall mean RT (Figure 4B). We will address this issue in the Discussion section.

Discussion

Summary

One important operational implication of the holistic hypothesis is that a well-learned face context should facilitate perception of features that were learned together. An earlier approach utilized the part-to-whole paradigm (e.g., Davidoff & Donnelly, 1990; Donnelly & Davidoff, 1999; Leder & Bruce, 2000; Tanaka & Farah, 1993; Tanaka & Sengco, 1997), in which the failure of selective attention to individual facial features was taken as critical evidence for holistic processing.

The overall results of the current study replicate the failure of selective attention, but this time at the RT level (Figure 4A, B): The well-learned context (old face) facilitated categorization in comparison to (a) face contexts that were learned but had not previously been associated with the critical features (new face), as well as (b) the absence of a face context (features alone). These results also extend the validity of the part-to-whole technique to categorization and multiple features.

In the Introduction we demonstrated potential weaknesses when failure of selective attention is examined in isolation to infer holistic perception. To improve the diagnosticity of the part-to-whole paradigm, we recommended an assessment of the information-processing properties using SFT. Overall, the SFT tests revealed signatures of both analytic and holistic face perception, in the form of serial, parallel, and coactive SIC signatures, across different face context conditions.

The SFT tests indicate that subjects in the OR condition were strongly analytic: They focused on the identification of a single facial feature and used it for the overall response. In doing so they were on average slower in the new-face and features-alone context conditions than in the old-face context condition. This came as a striking confirmation of our central dilemma: The SFT tests revealed that failure of selective attention was also associated with analytic face perception.

The present OR condition results in the old-face and new-face context conditions reveal that the architecture is overwhelmingly confirmatory of parallel self-terminating processing: The subjects processed the two second-order relational features in parallel and were able to cease processing as soon as either was completed. In the features-alone context condition, there was an apparent tendency on the part of some subjects to look first for the eye separation feature. If the target eye feature was present, the decision and response were made without the necessity of assessing the other feature. If the target feature eye separation was not indicative of a gang member, the subject logically had to process the lip position cue. This is a serial self-terminating system (Figure 1A), which predicts the kind of flat SIC functions that some subjects evinced.
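The architecture signatures discussed in this section can be illustrated with a small simulation. The sketch below is not the analysis code used in the study: the exponential channel finishing times, the mean values in `MEAN_MS`, and all function names are assumptions chosen for illustration. It relies only on the standard definitions MIC = (RT_LL - RT_LH) - (RT_HL - RT_HH) and SIC(t) = [S_LL(t) - S_LH(t)] - [S_HL(t) - S_HH(t)], where S is the survivor function of the RT distribution.

```python
import random

# Hypothetical mean finishing times (ms) of one feature channel
# at high (H) and low (L) salience; illustrative values only.
MEAN_MS = {"H": 200.0, "L": 400.0}


def simulate_rts(model, eye, lip, n, rng):
    """Simulate n RTs for one factorial cell (eye, lip are 'H' or 'L')."""
    rts = []
    for _ in range(n):
        t_eye = rng.expovariate(1.0 / MEAN_MS[eye])
        t_lip = rng.expovariate(1.0 / MEAN_MS[lip])
        if model == "parallel_self_terminating":
            rts.append(min(t_eye, t_lip))   # first channel to finish wins
        elif model == "parallel_exhaustive":
            rts.append(max(t_eye, t_lip))   # wait for both channels
        elif model == "serial_exhaustive":
            rts.append(t_eye + t_lip)       # one feature after the other
        elif model == "serial_self_terminating":
            rts.append(t_eye)               # eyes first, stop on a target
        else:
            raise ValueError(f"unknown model: {model}")
    return rts


def factorial_cells(model, n=20000, seed=1):
    """Return RT samples for the four cells HH, HL, LH, LL."""
    rng = random.Random(seed)
    return {e + l: simulate_rts(model, e, l, n, rng)
            for e in "HL" for l in "HL"}


def mean(xs):
    return sum(xs) / len(xs)


def mic(cells):
    """Mean interaction contrast."""
    return ((mean(cells["LL"]) - mean(cells["LH"]))
            - (mean(cells["HL"]) - mean(cells["HH"])))


def survivor(rts, t):
    """Empirical survivor function S(t) = P(RT > t)."""
    return sum(rt > t for rt in rts) / len(rts)


def sic(cells, t):
    """Survivor interaction contrast at time t."""
    return ((survivor(cells["LL"], t) - survivor(cells["LH"], t))
            - (survivor(cells["HL"], t) - survivor(cells["HH"], t)))
```

Under these assumptions, the parallel self-terminating model yields a positive MIC and positive SIC values, the parallel exhaustive model yields negative ones, and both serial models yield a MIC close to zero, with the serial self-terminating SIC staying near zero across time.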

A directly related issue is what the mechanism of RT slowing is when a face context changes. We argue that the most parsimonious hypothesis is the adjustment of decision criteria during feature recognition. The subjects might have set up higher decision response criteria when a face context was changed in the new-face and features-alone context conditions than in the old-face context condition. The exact mechanism of the decision criteria adjustment is an important part of the framework of sequential sampling models (e.g., Busemeyer, 1985; Link & Heath, 1975; Ratcliff, 1978; Ratcliff & McKoon, 2008). For example, assume that the task is to decide whether Joe's eyes were presented. With this approach it is assumed that an eye detector collects small pieces of evidence over time. As soon as the accumulated evidence reaches a decision criterion the response is generated. A perceiver can set up a high decision criterion for detecting Joe's eyes. The higher the decision criterion is, the longer it takes to accumulate the critical evidence, and accuracy increases as well due to longer collection time. By setting a decision criterion to a high value the perceiver acts to avoid premature and erroneous responses in the new-face and features-alone context conditions, in the OR condition. We argue that the results in our study could be related to such a mechanism.
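The criterion-adjustment account can be made concrete with a minimal random-walk sketch. This is a generic two-boundary random walk, not a model fitted to the present data: the step probability `p_up`, the criterion values, and the function names are illustrative assumptions. Evidence moves up or down by one unit per step, and a response is produced when the accumulated evidence reaches either +criterion (correct) or -criterion (error).

```python
import random


def random_walk_trial(criterion, p_up, rng):
    """Run one trial; return (number of steps, whether response is correct)."""
    evidence = 0
    steps = 0
    while abs(evidence) < criterion:
        evidence += 1 if rng.random() < p_up else -1
        steps += 1
    return steps, evidence >= criterion


def summarize(criterion, p_up=0.55, n_trials=2000, seed=7):
    """Return (mean decision time in steps, proportion correct)."""
    rng = random.Random(seed)
    trials = [random_walk_trial(criterion, p_up, rng) for _ in range(n_trials)]
    mean_steps = sum(steps for steps, _ in trials) / n_trials
    accuracy = sum(correct for _, correct in trials) / n_trials
    return mean_steps, accuracy


if __name__ == "__main__":
    for criterion in (5, 15):
        mean_steps, accuracy = summarize(criterion)
        print(criterion, round(mean_steps, 1), round(accuracy, 3))
```

In this sketch, raising the criterion lengthens the mean decision time and raises accuracy while leaving the evidence process itself unchanged, which is the pattern the criterion-adjustment hypothesis attributes to the new-face and features-alone context conditions.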

The decision mechanism is logically independent of the architecture (serial, parallel). That is, the magnitude of a decision criterion can vary freely and not affect the underlying processing architecture, which is the precursor for an explanation of the current results.

Along with the above hypothesis, the decision component termed “decisional bias” has been studied intensively in face perception (Richler, Gauthier, Wenger, & Palmeri, 2008; Wenger & Ingvalson, 2002, 2003; Wenger & Townsend, 2006) and has been found to be one of the major suspects for confounding feature-based and holistic models of perception. Employing advanced general recognition theory (GRT; Ashby & Townsend, 1986), which provides a more detailed account of all sorts of perceptual dependencies, these studies found that this decision component plays a key role in face perception.

Our AND results also evidenced failure of selective attention at the mean RT level. Unlike in the OR condition, in the AND condition the failure could be attributed to holistic processes. Several subjects exhibited coactive processing associated with categorization in the old-face context, but then switched to analytic processing when this learned facial context was disrupted. Overall the individual-subject SFT analysis revealed heterogeneity of coactive, serial, and parallel architectures across face context conditions. Importantly, almost all the SIC functions are interpretable within SFT, and all showed an exhaustive stopping rule, which is the defining property of the AND condition.

Two explanations are viable for the AND condition. First, we consider the architecture-switching hypothesis. According to this hypothesis, subjects switch (not necessarily intentionally) between different mental architectures depending on their overall efficiency in face processing in different face context conditions. Although efficiency here needs a more explicit definition, switching should presumably occur between a coactive architecture (as a representative of a holistic strategy) on one side, and parallel and serial architectures (as representatives of an analytic strategy) on the other. That is, switching should occur between holistic processing exhibited in the old-face context condition and feature-based processing exhibited in the new-face and features-alone context conditions. Based on individual subjects' performance across the three context conditions, switching appeared to occur for only three subjects (7, 8, and 9; see Table 4).

The switching hypothesis provides a reasonable explanation for only half the subjects, and it is challenged by one particular finding. In the features-alone context condition half of the subjects were classified as parallel processors and the other half as serial processors. In the new-face context condition most of them were classified as serial. Mean RTs (Figure 4B) showed that, on average, subjects were slower in the features-alone context condition than in the new-face context condition. Admittedly, the switching hypothesis cannot adequately explain why half of the subjects showed parallel processing in the features-alone context, nor why they would switch to faster, but serial, feature-by-feature processing in the new-face context condition.

As an alternative to the above hypothesis we tested the interactive-parallel hypothesis. The following discussion pertains to the third fundamental property of the information processing systems—processing dependency. Thus, we will relax the assumption that facial feature detectors are independent and will allow them to act interactively. In the interactive-parallel hypothesis we argue that subjects do not switch between different mental architectures under different face context conditions. The processing strategy, across different contexts, relies entirely on a parallel exhaustive information-processing model (e.g., Townsend & Wenger, 2004b), in the AND condition. However, we propose that the parallel exhaustive model was aided by an interactive mechanism between face feature detectors. Here we argue for a facilitatory interactive-parallel mechanism that assumes that face feature detectors (of eyes, lips, relational components, etc.) help each other during the process of feature detection (for more details see Fific, Townsend, et al., 2008, pp. 596–597; see also Diederich, 1995; Townsend & Nozawa, 1995; Townsend & Wenger, 2004b).

The initiative to test the interactive-parallel hypothesis came from the learning phase of the study. In this phase, subjects learned to categorize facial features in the old-face context only, and each session was identical to an old-face context condition in the test phase. The SFT test can be applied in each session with the potential to reveal the fundamental information-processing properties, through the course of learning. We decided not to include these data to limit the present research scope. However, it is not possible to overlook that the data showed a very regular type of SIC change throughout learning (see a typical example in the Appendix). At the beginning of learning almost all subjects showed prevalence of the negative SIC function, indicating parallel exhaustive processing. The SIC shape gradually changed to an S-shaped SIC function midway through learning. And for most of the subjects (except Subject 6), the SIC reached the coactive signature for the last learning sessions. At the same time, all subjects exhibited mean RT speed-up as a function of learning session. Judging from this evidence, it can be argued that learning to categorize faces yielded the transformation of an initial parallel system into an interactive-parallel system, by constant increase in the amount of positive facilitation between facial feature detectors.

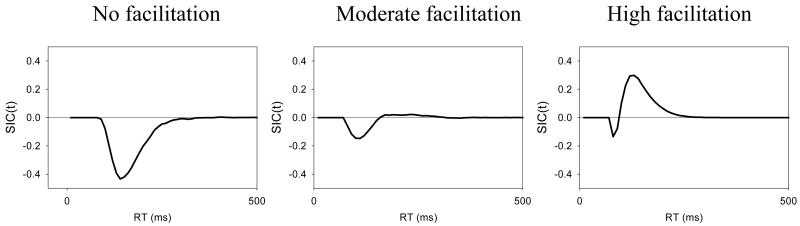

We suggest that a facilitatory interactive-parallel mechanism may be responsible for producing the observed data patterns in the AND condition. In the features-alone context condition no facilitation is available from the context, because almost the entire face context is removed, so a parallel exhaustive architecture is revealed as a negative SIC function. However, some facilitation between two isolated facial features is also a possibility, so some subjects showed close to S-shaped SICs. In the new-face context condition, the context is available, albeit incongruent, and it can still provide moderate facilitation between feature detectors. In the old-face context condition, a congruent face context provides the strongest facilitation between facial feature detectors.

We simulated the interactive parallel-exhaustive model under different context conditions and plotted the SIC curves in Figure 7. SICs for the facilitatory interactive-parallel model, for different degrees of facilitation, are presented in a sequence of three panels. The left panel corresponds to a no facilitation case. Clearly in this case, SIC(t) is always negative, as predicted by a parallel exhaustive model. As we gradually increase the magnitude of the interaction (from left to right in Figure 7), the positive part becomes proportionally larger until it exceeds the size of the negative part (resulting, of course, in positive MIC values).6 We argue that the nature of holistic perception can be adequately captured by the facilitatory mechanism as described here. Naturally, considerably more work is required to put this inference to the test.

Figure 7.

Three simulated SIC curves for the interactive-parallel-exhaustive model. SIC curves were obtained for three levels of facilitation (no, moderate, and high facilitation), simulating an increase in perceptual holism. For each experimental condition (HH, HL, LH, and LL) we simulated 5,000 RT trials (for more details see Fific, Townsend, et al., 2008).
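The logic of a facilitatory interactive-parallel exhaustive model can be sketched in a few lines. The code below is not the simulation that produced Figure 7. It is a simplified counter model built on stated assumptions: the per-step count probabilities in `P_COUNT`, the value of `CRITERION`, the cross-talk parameter `facilitation`, and all function names are hypothetical. Each feature detector accumulates its own counts plus a fraction of the other detector's counts, and a response is made only when both detectors have reached the criterion (exhaustive stopping).

```python
import random

# Hypothetical probability of one evidence count per time step,
# for high (H) and low (L) feature salience.
P_COUNT = {"H": 0.10, "L": 0.05}
CRITERION = 10  # counts each detector needs before it is finished


def trial_rt(eye, lip, facilitation, rng):
    """Time steps until BOTH detectors reach the criterion."""
    acc_eye = acc_lip = 0.0
    t = 0
    while acc_eye < CRITERION or acc_lip < CRITERION:
        t += 1
        x_eye = 1.0 if rng.random() < P_COUNT[eye] else 0.0
        x_lip = 1.0 if rng.random() < P_COUNT[lip] else 0.0
        # Each detector also receives a share of the other's evidence.
        acc_eye += x_eye + facilitation * x_lip
        acc_lip += x_lip + facilitation * x_eye
    return t


def simulate_cells(facilitation, n=1000, seed=3):
    """Return RT samples for the four factorial cells HH, HL, LH, LL."""
    rng = random.Random(seed)
    return {e + l: [trial_rt(e, l, facilitation, rng) for _ in range(n)]
            for e in "HL" for l in "HL"}


def mean(xs):
    return sum(xs) / len(xs)


def mic(cells):
    """Mean interaction contrast."""
    return ((mean(cells["LL"]) - mean(cells["LH"]))
            - (mean(cells["HL"]) - mean(cells["HH"])))


def survivor(rts, t):
    """Empirical survivor function S(t) = P(RT > t)."""
    return sum(rt > t for rt in rts) / len(rts)


def sic(cells, t):
    """Survivor interaction contrast at time t."""
    return ((survivor(cells["LL"], t) - survivor(cells["LH"], t))
            - (survivor(cells["HL"], t) - survivor(cells["HH"], t)))
```

With `facilitation = 0.0` the detectors are independent and the sketch behaves as a standard parallel exhaustive model, with a negative MIC. With `facilitation = 1.0` both detectors accumulate the pooled evidence, which makes the system effectively coactive and turns the MIC positive. Intermediate values can be explored by evaluating `sic` over a grid of time points.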

To test whether the interactive-parallel hypothesis relates to individual subjects, we will focus on individual findings. Inspection of Table 4 indicates that all subjects (except Subject 11 to some extent) underwent the same order of change of SIC shape as predicted by the interactive-parallel model. It is important to note that the subjects, for the most part, did not show all three stages, but rather only two. This can be explained by the amount of facial feature facilitation exhibited across the three face contexts, due to individual differences.

When compared to the architecture-switching hypothesis, the interactive-parallel hypothesis appears to be a more plausible mechanism, for several reasons: (a) Individual subjects' data also conformed to the order of predicted stages of the interactive-parallel model; (b) the transition of the SIC curve, as predicted by the interactive-parallel hypothesis, was observed in another study dealing with a related visual-grouping phenomenon in a visual search task (Fific, Townsend, et al., 2008); (c) the preliminary analysis conducted on the learning sessions data agreed with the prediction of the interactive-parallel model; and (d) supporting the strong holistic hypothesis, Thomas (2001a, 2001b) showed strong evidence for such an interactive mechanism being employed in face perception.

The test of the interactive-parallel hypothesis may leave some questions yet to be answered, but we think that in its current form it finds solid support in the data. Note that this study represents an isolated attempt to address the important issue of individual differences in perception, thus revealing new dilemmas about data interpretation and hypothesis testing. Averaging the individual subjects' data leads to far simpler hypothesis testing but is mute with respect to the intricate differences exhibited across individuals. For example, averaging the SIC curves in the old-face context in the AND condition results in a clear mean coactive signature, thus completely masking the subjects who do not conform to coactive processing (Subjects 10, 11, and 12). These findings should serve as a solid reason to call for more work on this topic, to achieve a critical mass of evidence.