Abstract

Objective

The purpose of this study was to evaluate and validate an offline, automated scalp EEG-based seizure detection system and to compare its performance to commercially available seizure detection software.

Methods

The test seizure detection system, IdentEvent™, was developed to improve the efficiency of post-hoc review of long-term EEG in epilepsy monitoring units. It translates multi-channel scalp EEG signals into multiple EEG descriptors and recognizes ictal EEG patterns. Detection criteria and thresholds were optimized on 47 long-term scalp EEG recordings selected for training (47 subjects, ~3653 hours with 141 seizures). The detection performance of IdentEvent was evaluated on a separate test dataset consisting of 436 EEG segments obtained from 55 subjects (~1200 hours with 146 seizures). Each test EEG segment was reviewed by three independent epileptologists, and the presence or absence of seizures in each segment was determined by majority rule. Seizure detection sensitivity and false detection rate were calculated for IdentEvent as well as for a commercially available detection software (Persyst’s Reveal®, version 2008.03.13, with three parameter settings). Bootstrap re-sampling was applied to establish 95% confidence intervals of the estimates and to compare the performance of the two detection algorithms.

Results

The overall detection sensitivity of IdentEvent was 79.5% with a false detection rate (FDR) of 2 per 24 hours, whereas the comparison system had 80.8%, 76%, and 74% sensitivity using its three detection thresholds (perception score) with FDRs of 13, 8, and 6 per 24 hours, respectively. Bootstrap 95% confidence intervals of the performance difference revealed that the two detection systems had comparable detection sensitivity, but IdentEvent generated a significantly (p < 0.05) smaller FDR.

Conclusions

The study validates the performance of the IdentEvent™ seizure detection system.

Significance

With comparable detection sensitivity, an improved false detection rate makes the automated seizure detection software more useful in clinical practice.

Keywords: Scalp EEG seizure detection, Independent seizure review, Bootstrap re-sampling, Pattern-Match Regularity Statistic (PMRS), Artifact rejection, Spatiotemporal dynamics

1. Introduction

For long-term video-EEG recordings, often lasting multiple days to weeks in epilepsy monitoring units, an epileptologist typically does not review the entire video-EEG record due to large volumes of video-EEG data and high patient throughput. In many clinical centers, pre-review of long-term recordings is performed by EEG technologists and/or epilepsy fellows, a markedly labor-intensive and time-consuming task. Despite the availability of seizure detection software to aid in the identification of ictal events, many centers forego its use because of less than ideal seizure detection sensitivity and unacceptably high rates of false detections, frequently due to common artifacts. In our experience, a recognized consequence of this process is that seizure events can go undetected in review and ultimately are not included in the description and interpretation of the ictal recordings.

Seizure detection software has advanced considerably in the last several years, with the aim of improving the precision and accuracy of seizure identification. A new Reveal algorithm by Persyst using matching pursuit, small neural network rules, and connected-object hierarchical clustering had sensitivities and false detection rates that compared favorably with two other algorithms (Sensa and CNet) (Wilson et al., 2004). Using wavelet decomposition and feature extraction (Khan and Gotman, 2003), a new method for automatic seizure detection and onset warning was developed, featuring a user-tunable threshold to exploit the trade-off between sensitivity and detection delay while maintaining an acceptable false detection rate (Saab and Gotman, 2005). Based on power spectral analysis techniques, an algorithm for offline seizure detection in scalp EEG was applied in a standardized way with fixed parameters for all subjects and demonstrated high detection sensitivity, a reasonably low false detection rate, and parameter tuning to patient-specific seizures (Hopfengärtner et al., 2007). Most recently, a novel procedure for online, real-time automatic detection of polymorphic ictal patterns using alpha-, beta-, theta-, and delta-rhythmic activity, amplitude depression, and polyspikes resulted in reliable, early, and accurate detection within the first few seconds of ictal patterns without the need to adapt the system to specific patients (Meier et al., 2008).

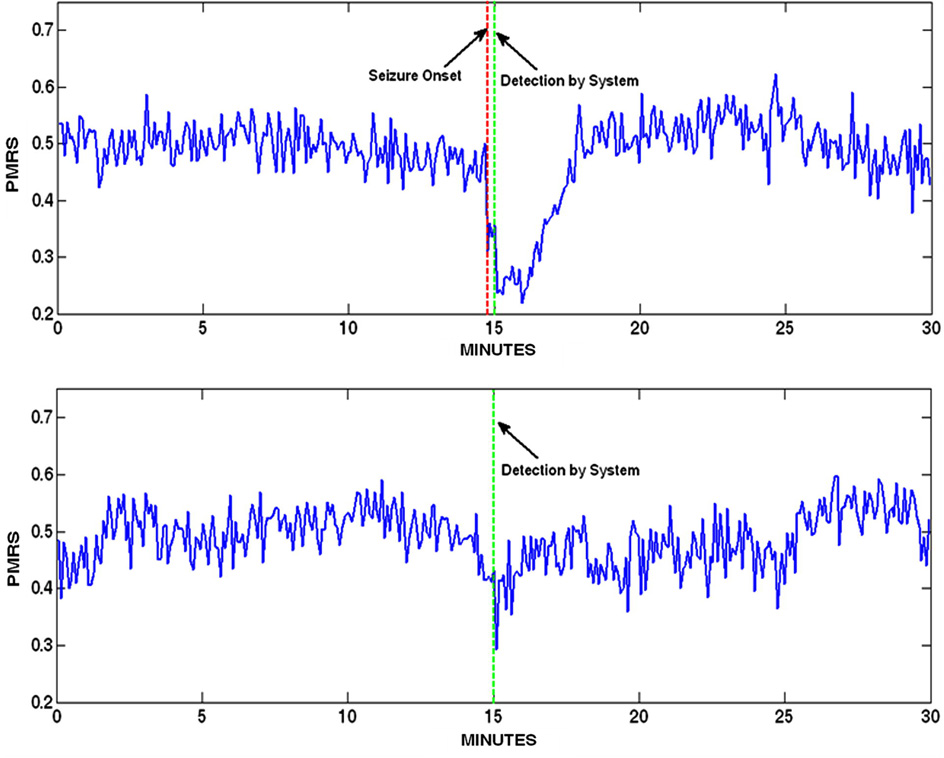

In this paper, we introduce a novel automated seizure detection system, IdentEvent, for efficient scanning of long-term scalp EEG recordings and identification of ictal EEG segments. The system processes multi-channel scalp EEG signals and translates them into three main EEG descriptors: pattern-match regularity statistic (PMRS), local maximum frequency (LMF), and amplitude variation (AV). Similar to Approximate Entropy (ApEn) (Pincus, 1991), PMRS quantifies the regularity of a time series by estimating the likelihood that a signal pattern is repeated within it. Unlike ApEn, which uses a “value match” criterion, PMRS adopts a “pattern match” criterion, which results in faster computation and a measurement that is more robust with respect to the algorithm parameters (Supplementary Material, Appendix A). PMRS values become smaller when the signal is in a less complex (i.e., more predictable) state. Therefore, during a seizure, because ictal EEG is highly organized and ordered, PMRS values drop significantly from their interictal background values. Fig. 1 demonstrates this PMRS behavior in one EEG channel that was involved in ictal activity.
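The exact PMRS definition is given in Supplementary Appendix A and is not reproduced here. For orientation only, the sketch below implements ApEn (Pincus, 1991), the value-match measure that PMRS is contrasted with, using the common defaults m = 2 and r = 0.2 × SD (assumptions, not IdentEvent parameters). It illustrates the property both statistics share: a regular, predictable signal scores lower than an irregular one.

```python
import math
import random
import statistics


def approximate_entropy(u, m=2, r_factor=0.2):
    """Approximate Entropy (Pincus, 1991) of a 1-D sequence.

    Tolerance r = r_factor * SD(u). Two templates "value match" when every
    pair of corresponding samples differs by at most r (Chebyshev distance).
    """
    n = len(u)
    r = r_factor * statistics.pstdev(u)

    def phi(length):
        count = n - length + 1
        templates = [u[i:i + length] for i in range(count)]
        total = 0.0
        for t in templates:
            # Self-matches are counted, as in the original definition.
            matches = sum(
                1 for s in templates
                if max(abs(a - b) for a, b in zip(t, s)) <= r
            )
            total += math.log(matches / count)
        return total / count

    return phi(m) - phi(m + 1)


# A periodic (highly regular) signal versus uniform random noise.
regular = [math.sin(2 * math.pi * i / 20) for i in range(200)]
rng = random.Random(0)
noise = [rng.random() for _ in range(200)]
print(approximate_entropy(regular), approximate_entropy(noise))
```

The quadratic template comparison is what makes ApEn slow on long records; the text attributes PMRS's faster computation to its different matching criterion.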

Figure 1.

An example of a PMRS curve before, during, and after a seizure (between two vertical dotted red lines). PMRS values drop significantly during the ictal period compared to other periods.

Local maximum frequency quantifies the largest local frequency within the calculation window. In IdentEvent, local frequency was estimated by counting positive zero crossings (after removing the mean) in each one-second epoch within the calculation window (Supplementary Material, Appendix B). This feature is used primarily for artifact rejection when the EEG segment is dominated by muscle activity. Amplitude variation is simply the standard deviation of the EEG signal within the calculation window (Supplementary Material, Appendix C); it is likewise used for artifact rejection when the EEG segment contains significant movement or recording artifacts. With a built-in artifact rejection module, IdentEvent further examines the spatiotemporal dynamics of these EEG descriptors to determine whether an EEG segment contains ictal EEG patterns. This process runs sequentially for each 5.12-second EEG epoch.
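A minimal sketch of the two artifact-rejection descriptors, assuming a 200 Hz sampling rate (so a 5.12-second window is 1,024 samples) and consecutive, non-overlapping one-second sub-epochs. The actual definitions are in Supplementary Appendices B and C; the function names and test signals here are hypothetical.

```python
import math
import statistics

FS = 200                 # hypothetical sampling rate (Hz)
N = round(5.12 * FS)     # 1024 samples per 5.12-s window


def local_max_frequency(window, fs):
    """Largest count of positive-going zero crossings in any 1-s sub-epoch.

    The window is mean-removed first; sub-epochs are non-overlapping blocks
    of fs samples (an assumption for this sketch).
    """
    mean = statistics.fmean(window)
    x = [v - mean for v in window]
    step = int(fs)
    best = 0
    for start in range(0, len(x) - step + 1, step):
        seg = x[start:start + step]
        crossings = sum(1 for a, b in zip(seg, seg[1:]) if a < 0 <= b)
        best = max(best, crossings)
    return best


def amplitude_variation(window):
    """Standard deviation of the samples in the window."""
    return statistics.pstdev(window)


def tone(freq_hz, amp=50.0, phase=0.3):
    return [amp * math.sin(2 * math.pi * freq_hz * i / FS + phase) for i in range(N)]


eeg_like = tone(10)   # 10-Hz rhythmic activity
emg_like = tone(40)   # fast, muscle-like activity
print(local_max_frequency(eeg_like, FS), local_max_frequency(emg_like, FS))
print(round(amplitude_variation(eeg_like), 2))
```

Fast, muscle-like activity yields a much higher LMF than a 10 Hz rhythm, which is why a high LMF can flag muscle artifact. A crossing that straddles two sub-epochs is counted in neither, so depending on phase a 10 Hz tone can score 9 rather than 10.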

The purpose of the present study was to evaluate and validate the detection performance of IdentEvent in long-term scalp EEG recordings collected from epilepsy monitoring units. To control for potential variability in the clinical determination of seizure occurrence, all test EEG segments in this performance testing study were independently reviewed by three epileptologists. A “majority rule” method was used to determine the occurrence of seizure events when evaluating and comparing the performance of IdentEvent with an FDA-approved and widely used clinical seizure detection software (Persyst’s Reveal®, version 2008.03.13). The main hypothesis of this study was that IdentEvent performs at least as well as Reveal.

2. Methods

2.1. Subject Population

EEG recordings used in this study were collected from subjects 18 years of age or older who were admitted to Allegheny General Hospital (AGH, Pittsburgh, PA) and the Medical University of South Carolina (MUSC, Charleston, SC) for long-term video-EEG recordings for diagnostic or presurgical evaluation. Collection of EEG data was approved by the Institutional Review Boards of AGH and MUSC, as well as the Western Institutional Review Board (WIRB). All subjects with epileptic seizures from whom informed consent was obtained were included in the study; patients with nonepileptic events were excluded. Test subjects were enrolled sequentially from the clinical sites. It was anticipated that enrolled subjects would include an equal number of males and females, and an ethnic and racial distribution that reflected the general catchment population of each clinical site.

2.2. Test Dataset (EEG)

Test Subjects

Fifty-five long-term scalp EEG recordings (from 55 subjects) were included in the test dataset for this study: 18 collected from AGH and 37 from MUSC. The selection was based solely on the seizure occurrences reported from the clinical centers. As a result, 50 subjects had at least one seizure recorded and the remaining five subjects had no seizures. None of these EEG recordings in the test dataset was used in the algorithm development or the parameter training process.

Independent Seizure Review and EEG Segment Sampling

The objective determination of what constitutes a seizure event, including events with and without clinical manifestations, is not always straightforward. A “theoretical gold standard” for such a determination is for an epileptologist to review a video-EEG record of a suspected seizure with all available patient-specific clinical information. Because there is inter-rater variability among epileptologists in identifying seizure events based solely on EEG review (Wilson et al., 2003), each of the test EEG segments was marked independently by three epileptologists, who had no involvement in the algorithm development, study design, data analysis, or interpretation of the results. Although we expected scoring correspondence to be high among expert EEG readers (Wilson et al., 2003), only the seizure events that were marked by at least two of the three epileptologists were included in the performance testing. Because carefully reviewing the entire EEG recordings of all 55 test subjects (over 4,500 hours of EEG recordings) would have been prohibitively time-consuming and costly, multiple EEG segments (~2 to 3 hours each) were sampled from each of the test EEG recordings. The sampling procedure is described step by step below:

For each subject, each continuous long-term EEG recording was evenly segmented. The length of each segment was set between 2 and 3 hours, but kept as close to 3 hours as possible. For example, if a recording had 23 hours and 44 minutes, then it was segmented into 8 segments with 2 hours and 58 minutes each.
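The segmentation rule amounts to choosing the smallest number of equal segments that keeps each segment at or below 3 hours. A sketch with times in minutes (the function name is hypothetical):

```python
import math


def segment_recording(total_minutes, max_len=180, min_len=120):
    """Split a recording into equal segments of at most max_len minutes.

    Using the fewest segments that respect max_len keeps each segment as
    close to 3 h as possible. Returns (start, end) pairs in minutes.
    """
    n = math.ceil(total_minutes / max_len)
    length = total_minutes / n
    if length < min_len:
        raise ValueError("recording too short for 2-3 h segments")
    return [(i * length, (i + 1) * length) for i in range(n)]


segments = segment_recording(23 * 60 + 44)   # 23 h 44 min
print(len(segments), segments[0][1])          # 8 178.0 (2 h 58 min each)
```

For recordings of at least 6 hours this rule also keeps every segment at or above 2 hours, since T / ceil(T/180) > 180T / (T + 180) ≥ 120 whenever T ≥ 360 minutes.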

Based on the seizure events recorded in the clinical report, a segment was labeled as a “seizure” segment when at least one documented seizure was within the segment. Otherwise, it was labeled as a “non-seizure” segment. For “seizure” segments, since the segmentation was independent of the seizure times, each reported seizure was randomly located within the segment.

In order to include as many seizures and types of seizures as possible, all “seizure” segments were included in the performance testing. However, when there were more than 6 “seizure” segments (3% of the anticipated 200 total seizures) in one test dataset, only 6 “seizure” segments were randomly sampled (using statistical software Splus/R) from all “seizure” segments in the dataset. This procedural step was included to avoid “over-weighting” by limiting the inclusion of seizures to no more than 3% per patient.

“Non-seizure” segments were randomly sampled (same procedure as above) from each of the test datasets. When the total number of “non-seizure” segments was less than or equal to 5, all “non-seizure” segments were sampled. Otherwise, at least 5 “non-seizure” segments were sampled. Due to the large number of “non-seizure” segments, it was anticipated that at least 50% of the total EEG segments sampled would be “non-seizure” segments.
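The labeling and per-subject sampling rules above can be sketched as follows. Assumptions: a segment is labeled by whether a reported seizure onset falls inside it, random draws use Python's `random` module rather than S-Plus/R, and exactly five non-seizure segments are drawn when more are available (the text says "at least 5"). Function names are hypothetical.

```python
import random


def label_segments(segments, seizure_onsets):
    """Label a segment 'seizure' if any reported onset falls inside it."""
    labels = []
    for start, end in segments:
        has_seizure = any(start <= t < end for t in seizure_onsets)
        labels.append("seizure" if has_seizure else "non-seizure")
    return labels


def sample_segments(labels, rng, max_seizure=6, n_non_seizure=5):
    """Return sorted indices of the segments sampled for one subject."""
    sz = [i for i, lab in enumerate(labels) if lab == "seizure"]
    non = [i for i, lab in enumerate(labels) if lab == "non-seizure"]
    if len(sz) > max_seizure:          # cap seizure segments at 6
        sz = rng.sample(sz, max_seizure)
    if len(non) > n_non_seizure:       # keep all if <= 5, else draw 5
        non = rng.sample(non, n_non_seizure)
    return sorted(sz + non)


segments = [(i * 180, (i + 1) * 180) for i in range(10)]   # minutes
labels = label_segments(segments, [100, 200, 1000])
print(labels.count("seizure"), sample_segments(labels, random.Random(1)))
```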

The random sampling process was conducted by independent personnel (no involvement in the algorithm development and study design).

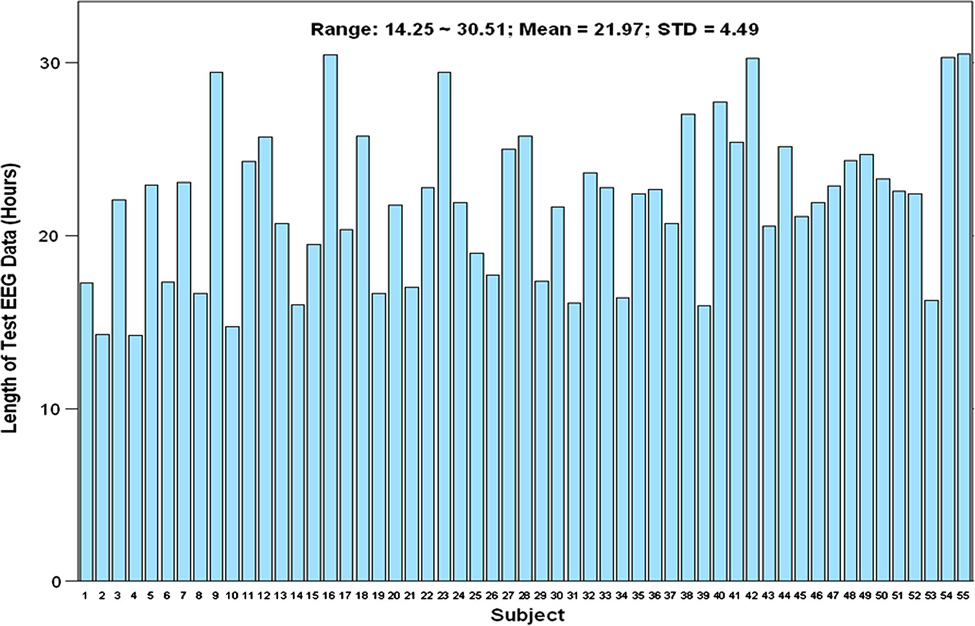

Results: A total of 153 “seizure” segments and 283 “non-seizure” segments were sampled for this performance testing study. Most of the subjects had 5 “non-seizure” segments sampled, except the six subjects with longer recordings and the one subject that had a shorter recording. The total duration of the 436 sampled EEG segments was 1,208.24 hours. Fig. 2 shows the number of sampled segments for all test subjects. Fig. 3 shows the length of EEG data sampled from each of the test subjects.

Figure 2.

Number of sampled “seizure” and “non-seizure” segments for all 55 test subjects.

Figure 3.

Length of sampled EEG segments for all 55 test subjects.

Because the clinical reports may contain false negatives, seizures could have been recorded within the sampled “non-seizure” segments. Therefore, the independent epileptologists were blinded to the segment categories (i.e., “seizure” or “non-seizure”) and reviewed all sampled segments in full.

An event was considered an electrographic seizure only when it was identified by the majority of the expert panel (at least 2 out of 3) performing the review. The resultant “seizure” events constituted the basis (“clinical truth”) for the performance evaluation of the detection sensitivity and false detection rate of the detection software.

For most of the electrographic seizures identified, the onset times varied slightly among the EEG experts but were always within 30 seconds of each other. Therefore, marks by different reviewers that fell within a two-minute window were systematically considered the same event, and the earliest marked onset time was taken as the onset time of the seizure. Additionally, the reviewers were asked to document the patient’s physiological state(s) (awake, drowsy, and/or asleep) during the recording.
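The majority-rule merging of reviewer marks can be sketched as below. The grouping rule (every mark in a group lies within two minutes of the group's earliest mark) is an assumption; the text does not specify how chains of marks spanning more than two minutes were handled. The function name is hypothetical.

```python
def consensus_events(marks, window_s=120, min_reviewers=2):
    """Merge reviewer marks into consensus seizure onsets (seconds).

    marks: list of (onset_seconds, reviewer_id). A group of marks becomes
    an event only if at least min_reviewers distinct reviewers contributed;
    its onset is the earliest marked time.
    """
    groups = []
    current = []
    for t, reviewer in sorted(marks):
        if current and t - current[0][0] > window_s:
            groups.append(current)
            current = []
        current.append((t, reviewer))
    if current:
        groups.append(current)
    return [g[0][0] for g in groups if len({r for _, r in g}) >= min_reviewers]


marks = [(100, "A"), (115, "B"), (1000, "C"), (5000, "A"), (5010, "B"), (5020, "C")]
print(consensus_events(marks))  # [100, 5000]; the lone mark at 1000 s is dropped
```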

Results of Independent Review

a. Test Seizure Sample

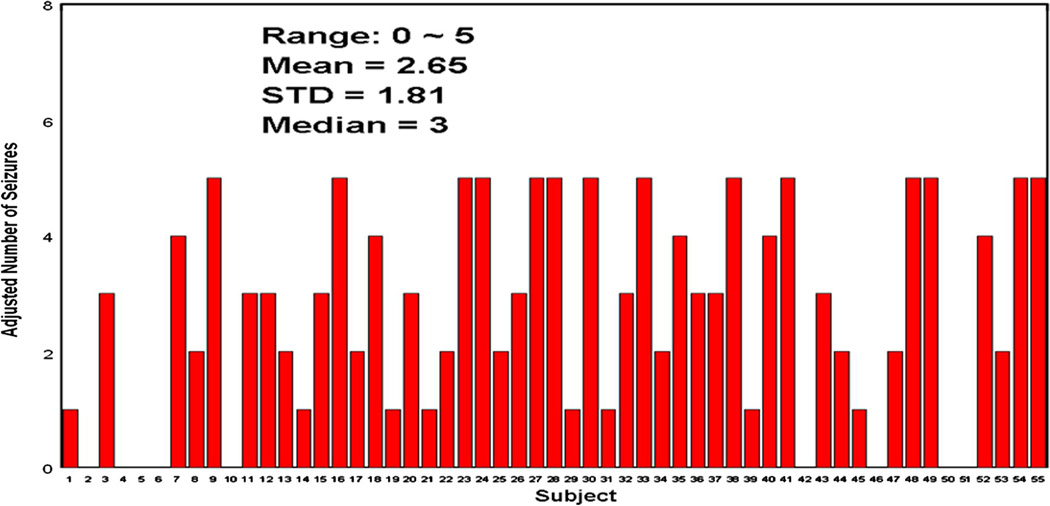

The expert panel identified a total of 209 electrographic seizures. However, 53 seizures were identified in one subject, and seven other subjects each had more than 5 seizures identified. To ensure that no more than 3% of the total number of seizures was obtained from any individual patient, all subjects with more than 5 seizures identified by the panel had their seizure events randomly down-sampled to 5 seizures (only 5 seizures selected). As a result, a final total of 146 electrographic seizures were included in the performance testing. Fig. 4 shows the numbers of seizures identified by the EEG review panel after the “random seizure down-sampling.” Among 146 test seizures, 145 were partial seizures of which 62 were secondarily generalized; 1 seizure was an atypical absence seizure.
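The per-subject cap can be sketched as a seeded random draw without replacement. The cap of 5 is from the text; the function name, seed, and toy data are hypothetical.

```python
import random


def cap_seizures(seizures_by_subject, cap=5, seed=0):
    """Randomly down-sample each subject's seizures to at most `cap`."""
    rng = random.Random(seed)
    capped = {}
    for subject, onsets in seizures_by_subject.items():
        if len(onsets) > cap:
            onsets = sorted(rng.sample(onsets, cap))
        capped[subject] = list(onsets)
    return capped


capped = cap_seizures({"S1": list(range(53)), "S2": [10, 20]})
print({s: len(v) for s, v in capped.items()})  # {'S1': 5, 'S2': 2}
```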

Figure 4.

Adjusted number of seizures (randomly down-sampled when the number of seizures was > 5) for all 55 test subjects.

b. Inter-rater Variability

The inter-rater variability was evaluated by calculating Cohen’s kappa coefficient (κ) (Cohen, 1960) between each pair of EEG reviewers. It was calculated based on the probabilities of the “observed agreement” and “chance agreement”. If the reviewers are in complete agreement, then κ = 1. If there is no agreement (other than what would be expected by chance), then κ ≤ 0.
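A minimal sketch of Cohen's kappa for two reviewers. The unit being rated (for example, a per-segment seizure/no-seizure judgment) is an assumption, since the text does not state which unit was used.

```python
def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical labels on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(rater_a)
    categories = set(rater_a) | set(rater_b)
    # Observed agreement: fraction of items labeled identically.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal rate, summed over labels.
    p_chance = sum(
        (rater_a.count(c) / n) * (rater_b.count(c) / n) for c in categories
    )
    if p_chance == 1.0:
        raise ValueError("kappa undefined: chance agreement is 1")
    return (p_observed - p_chance) / (1 - p_chance)


print(cohens_kappa([1, 1, 0, 0], [1, 0, 0, 0]))  # 0.5
```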

The kappa coefficients for all pairs of reviewers ranged from 0.641 to 0.790, with an overall weighted average of 0.680. Based on the interpretation by Landis and Koch (1977), the kappa statistics for all pairs of EEG reviewers indicated “substantial agreement” (0.61 to 0.80).

c. Subjects’ Physiologic States

An assessment of the physiological state(s) of the patient (e.g., awake, drowsy, asleep) during the EEG recording was made by the independent reviewers. An EEG segment was considered to contain a specific physiologic state only when it was indicated by at least two independent reviewers. Table 1 summarizes the observations of the test EEG segments that contain each of the states. Test EEG recordings in all subjects but one contained all three physiologic states. This observation suggests that detection performance (especially the false detection rate) was adequately evaluated across the physiologic states typically captured in long-term EEG recordings.

Table 1.

Subjects’ Physiologic States in Test EEG Segments

| | Awake | Drowsy | Asleep |
|---|---|---|---|
| Percentage of subjects | 100% (55/55) | 100% (55/55) | 98.2% (54/55) |
| Range of within-subject percentage* | 50% to 100% | 40% to 100% | 0.0% to 100% |
| Mean within-subject percentage* | 91.3% | 81.1% | 52.9% |
| Median within-subject percentage* | 100% | 80% | 50% |

*Within-subject percentage is the percentage of EEG segments within a subject that exhibited a given physiologic state.

2.3. Seizure Detection System (IdentEvent)

The proposed detection system uses an algorithm that analyzes previously recorded scalp EEG one computational window (5.12-second epoch) at a time and generates seizure detections when sufficient criteria are met. The algorithm begins by filtering the signals to retain only the frequency ranges corresponding to neuronal activity in scalp ictal EEG and calculating a number of mathematical descriptors from the raw signal. After the mathematical descriptors are calculated, the EEG epoch must pass a set of artifact rejection criteria (ARC) designed to eliminate false detections arising from common sources in scalp EEG recordings (muscle contraction, movement, sleep patterns, etc.). If the ARC are passed, the EEG epoch is further examined to determine whether its signal characteristics (via its mathematical descriptors) match the criteria designed for detection of unilateral-onset (left- or right-sided) or bilateral-onset seizure activity. The criteria consist of the aforementioned mathematical descriptors compared to a series of pre-determined thresholds, using different channel combinations. When all signal characteristics pass the criteria, the time of the EEG epoch is stored. This stored time is then accessible by the software, allowing the EEG-trained user to easily review the marked EEG epochs to confirm or reject detected seizure occurrences. Appendix D (in the Supplementary Material) gives a detailed description of the proposed detection algorithm (Fig. 5).
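The per-epoch flow described above (descriptors, then artifact rejection, then onset-specific detection criteria) can be sketched as a generic loop. This is not the IdentEvent implementation: the descriptor function and rules are hypothetical placeholders, epochs are single-channel lists for simplicity, and treating the left-, right-, and bilateral-onset criteria as alternatives (any one suffices, each enforcing all of its own thresholds) is an assumption.

```python
import statistics
from typing import Callable, Dict, List, Sequence

Descriptors = Dict[str, float]


def detect_seizures(
    epochs: Sequence[Sequence[float]],
    epoch_len_s: float,
    compute_descriptors: Callable[[Sequence[float]], Descriptors],
    artifact_rules: List[Callable[[Descriptors], bool]],
    detection_rules: List[Callable[[Descriptors], bool]],
) -> List[float]:
    """Return start times (s) of epochs flagged as ictal."""
    detections: List[float] = []
    for index, epoch in enumerate(epochs):
        d = compute_descriptors(epoch)
        if any(rule(d) for rule in artifact_rules):
            continue  # epoch failed the artifact rejection criteria
        if any(rule(d) for rule in detection_rules):
            detections.append(index * epoch_len_s)
    return detections


# Hypothetical toy descriptor and thresholds, for illustration only.
def toy_descriptors(epoch):
    return {"av": statistics.pstdev(epoch)}


artifact_rules = [lambda d: d["av"] > 100.0]           # very large amplitude
detection_rules = [lambda d: 20.0 < d["av"] <= 100.0]  # placeholder ictal rule
epochs = [[0, 1, 0, -1] * 4, [0, 40, 0, -40] * 4, [0, 500, 0, -500] * 4]
print(detect_seizures(epochs, 5.12, toy_descriptors, artifact_rules, detection_rules))  # [5.12]
```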

Figure 5.

A flow chart of the IdentEvent seizure detection system.

The test detection algorithm was developed and trained on a training dataset consisting of 47 long-term scalp EEG recordings with a total of more than 3,600 recording hours. This rich dataset allowed us to optimize the parameters and detection criteria used in the algorithm. Because this dataset was for training purposes, the seizure events were identified based on the electrographic and clinical observations (i.e., EEG and video recording) of the epileptologists at the clinical sites. Based on this training database, all the detection and rejection parameters were determined and remained unchanged in the performance validation study.

2.4. Performance Evaluation

Determining True and False Detections

A detection by the software tested (IdentEvent or Reveal) was considered a true detection when the time of the detection was within two minutes of the seizure onset; onset time was determined by the review panel. A detection was considered a false detection when the detection time was beyond two minutes of any electrographic event identified by the review panel. It is worth noting that the two-minute window (reasonable but arbitrary) used here is only for determining whether a detection is true or false. When used clinically, the user does not necessarily need to review two minutes of EEG for each detection, especially for those detections that are obviously false. However, if the detection is a true detection, the user will always want to look back and forth around the detection.

A better determination of true and false detections would be based on the seizure onset and offset times determined by the EEG review panel. However, inter-rater variability in determining seizure offset time was anticipated to be much greater than in determining onset time. This is because, in most electrographic seizures (especially those with generalization), ictal scalp EEG signals exhibit significant muscle and/or movement artifacts in the latter part of the ictal period, making it very difficult to determine precisely when the seizure actually terminated (offset). Therefore, in this performance testing study, a systematic two-minute “ictal” interval was used to determine whether a detected event was true or false. The two-minute window was chosen because seizures in Epilepsy Monitoring Units (EMUs) are often shorter than two minutes. Furthermore, with seizure detection software intended for post-hoc EEG review, users review the EEG recording before and after each detected event. Thus, even a true detection occurring two minutes after seizure onset will still help the user quickly identify and review the event.
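The scoring rule can be sketched as follows. The text says a detection counts as true when it lies within two minutes of the panel's onset; this sketch assumes the tolerance applies symmetrically (before or after onset), which is an interpretation rather than a stated detail. The function name is hypothetical.

```python
def score_detections(detections, onsets, window_s=120):
    """Score detections (s) against consensus seizure onsets (s).

    Returns (number of seizures detected at least once,
             number of false detections).
    """
    detected = sum(
        any(abs(t - onset) <= window_s for t in detections) for onset in onsets
    )
    false_detections = sum(
        all(abs(t - onset) > window_s for onset in onsets) for t in detections
    )
    return detected, false_detections


# Two detections near the seizure at 1000 s count once; 3000 s and 8000 s are false.
print(score_detections([1030, 1050, 3000, 8000], [1000, 5000]))  # (1, 2)
```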

Estimating Performance Statistics - Detection Sensitivity and False Detection Rate

a. Detection Sensitivity

Detection sensitivities were not calculated for individual subjects because most subjects' recordings contained only a small number of seizures (mean 2.65 per subject, as determined by the EEG review panel). Instead, we calculated an overall detection sensitivity as the total number of true detections divided by the total number of electrographic seizures (146 in 55 test subjects) to estimate the true sensitivity of the test algorithm. Because the outcome responses (detected or not detected) constitute clustered binary data, the standard error of the estimate must be adjusted to account for the correlation within each cluster (i.e., subject) (Rao and Scott, 1992; Durkalski et al., 2003). The adjusted standard error is calculated as:

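$$\widehat{\mathrm{SE}}(\hat{p}) = \sqrt{\frac{K}{K-1}\cdot\frac{1}{N^{2}}\sum_{i=1}^{K}\left(x_i - \hat{p}\,k_i\right)^{2}} \qquad \text{(eq. 1)}$$

where $K$ = total number of test subjects (with seizures only); $N$ = total number of test seizures; $x_i$ = number of true detections for subject $i$; $k_i$ = number of test seizures in subject $i$; and $\hat{p}$ = estimated overall sensitivity.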
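The cluster-adjusted standard error for this ratio estimator (Rao and Scott, 1992), assumed here to take the standard form K/(K − 1) · Σ(x_i − p̂k_i)² / N² under the square root, and the asymptotic interval p̂ ± 1.96 × SE can be computed directly from per-subject counts. As a consistency check with Section 3.1, 0.7945 ± 1.96 × 0.0436 gives [0.71, 0.88]. The function name and toy counts are hypothetical.

```python
import math


def clustered_sensitivity(x, k, z=1.96):
    """Overall sensitivity with a cluster-adjusted standard error.

    x[i]: true detections in subject i; k[i]: test seizures in subject i
    (only subjects with at least one seizure; needs at least 2 subjects).
    Returns (p_hat, se, (lower, upper)).
    """
    K, N = len(k), sum(k)
    p_hat = sum(x) / N
    resid_ss = sum((xi - p_hat * ki) ** 2 for xi, ki in zip(x, k))
    se = math.sqrt(K / (K - 1) * resid_ss / N ** 2)
    return p_hat, se, (p_hat - z * se, p_hat + z * se)


p_hat, se, ci = clustered_sensitivity(x=[2, 1, 1], k=[2, 3, 1])
print(round(p_hat, 4), round(se, 4))  # 0.6667 0.2546
```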

We report an asymptotic 95% confidence interval (using the adjusted standard error and an asymptotic result; Rao and Scott, 1992) as well as an empirical bias-corrected and accelerated (BCa) bootstrap 95% confidence interval (Efron and Tibshirani, 1994) of the detection sensitivity for both IdentEvent and Reveal (with perception score = 0.5, 0.8, and 0.9). The bootstrap analysis was performed using the statistical software Splus/R.
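A sketch of a subject-level (cluster) BCa bootstrap interval in pure Python, following the standard bias-correction and jackknife-acceleration formulas described by Efron and Tibshirani. The toy data, seed, and function names are hypothetical; the study itself used S-Plus/R.

```python
import random
from statistics import NormalDist


def pooled_sensitivity(subjects):
    """subjects: list of (true_detections, seizures) per subject."""
    return sum(x for x, _ in subjects) / sum(k for _, k in subjects)


def bca_interval(subjects, stat, n_boot=3000, alpha=0.05, seed=0):
    """BCa bootstrap interval, resampling whole subjects with replacement."""
    rng = random.Random(seed)
    n = len(subjects)
    theta_hat = stat(subjects)
    boots = sorted(stat(rng.choices(subjects, k=n)) for _ in range(n_boot))
    nd = NormalDist()

    # Bias correction z0: share of bootstrap estimates below the point estimate.
    share = sum(b < theta_hat for b in boots) / n_boot
    share = min(max(share, 1 / n_boot), 1 - 1 / n_boot)  # keep inv_cdf finite
    z0 = nd.inv_cdf(share)

    # Acceleration a: leave-one-subject-out jackknife.
    jack = [stat(subjects[:i] + subjects[i + 1:]) for i in range(n)]
    jbar = sum(jack) / n
    num = sum((jbar - j) ** 3 for j in jack)
    den = 6 * sum((jbar - j) ** 2 for j in jack) ** 1.5
    a = num / den if den > 0 else 0.0

    def adjusted(q):
        z = nd.inv_cdf(q)
        return nd.cdf(z0 + (z0 + z) / (1 - a * (z0 + z)))

    lo_idx = int(adjusted(alpha / 2) * (n_boot - 1))
    hi_idx = int(adjusted(1 - alpha / 2) * (n_boot - 1))
    return theta_hat, (boots[lo_idx], boots[hi_idx])


toy = [(1, 1), (2, 2), (1, 2), (3, 3), (0, 1), (2, 3), (5, 5), (1, 1), (2, 2), (0, 2),
       (3, 4), (1, 1), (2, 2), (4, 5), (1, 2), (2, 2), (1, 1), (3, 3), (0, 1), (2, 2)]
theta, (lo, hi) = bca_interval(toy, pooled_sensitivity)
print(theta, lo, hi)
```

Resampling whole subjects, rather than individual seizures, is what preserves the within-subject correlation that eq. 1 also accounts for.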

b. False Detection Rate

Because this study included a sufficient duration of EEG recording for each test subject (range ~14.25 to 30.521 hours; mean = 21.97 hours; Fig. 3), covering different physiologic states (Table 1), we calculated the false detection rate for each test subject as the number of false detections divided by the total number of EEG hours. The 95% confidence interval of the mean false detection rate was likewise calculated as a BCa bootstrap confidence interval by re-sampling subjects.
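Per-subject false detection rates and their mean across subjects are simple arithmetic; a sketch with hypothetical counts and durations:

```python
def false_detection_rates(false_counts, hours):
    """Per-subject false detections per 24 h and their mean across subjects."""
    per_subject = [24.0 * f / h for f, h in zip(false_counts, hours)]
    return per_subject, sum(per_subject) / len(per_subject)


rates, mean_rate = false_detection_rates([2, 0, 3], [24.0, 20.0, 12.0])
print(rates, round(mean_rate, 3))  # [2.0, 0.0, 6.0] 2.667
```

The mean of per-subject rates (2.667 here) weights every subject equally, whereas a pooled rate, 24 × total false detections / total hours, would be 24 × 5 / 56 ≈ 2.14 for the same data.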

Statistical Comparison of Detection Performance

a. Comparison of Detection Sensitivity

Statistical comparison of the detection performance of IdentEvent and Reveal addressed both detection sensitivity and false detection rate. The overall hypothesis was that IdentEvent offers detection sensitivity not inferior to that of Reveal, with a significantly improved false detection rate. Statistical tests were therefore performed for each measure. The two hypotheses were:

- H_sensitivity: The overall seizure detection sensitivity of IdentEvent software was not inferior to that of Reveal software. (Non-inferiority hypothesis; δ = −10%)

- H_FDR: The mean false detection rate (FDR) of IdentEvent software was superior to (smaller than) that of Reveal software. (Superiority hypothesis)

As described above, the outcome responses for detecting a seizure (detected or not detected) constitute clustered binary data because many subjects contribute multiple responses. Furthermore, both IdentEvent and Reveal were tested on the same datasets. Therefore, to test the null hypothesis (H0: τ ≤ δ) while respecting this within-subject data structure, we applied the bootstrap method, re-sampling the test subjects 3,000 times, to estimate the BCa 90% confidence interval of the true difference (τ) between the two overall detection sensitivities; the lower limit of this 90% confidence interval was used to test the non-inferiority hypothesis at a significance level of 0.05 (one-sided test). H0 was rejected when the lower limit of the bootstrap confidence interval was greater than δ (−10%). A non-inferiority margin of δ = −10% was chosen to accommodate comparisons across a wide range of Reveal threshold settings (perception scores = 0.5, 0.8, and 0.9, with 0.5 being the most sensitive setting).
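A sketch of the paired, subject-level bootstrap for the sensitivity difference. For brevity it uses a percentile interval rather than the BCa interval used in the study; the function name, seed, and toy data are hypothetical.

```python
import random


def noninferiority_bootstrap(subjects, delta=-0.10, n_boot=3000, level=0.90, seed=0):
    """Paired cluster bootstrap test of sensitivity non-inferiority.

    subjects: list of (k, x_a, x_b) = seizures, detections by system A,
    detections by system B for one subject. Both systems are scored on the
    same resample, preserving the pairing. Non-inferiority is declared when
    the lower limit of the two-sided `level` interval exceeds delta.
    """
    rng = random.Random(seed)

    def diff(sample):
        n = sum(k for k, _, _ in sample)
        return (sum(a for _, a, _ in sample) - sum(b for _, _, b in sample)) / n

    boots = sorted(diff(rng.choices(subjects, k=len(subjects))) for _ in range(n_boot))
    tail = (1 - level) / 2
    lower = boots[int(tail * (n_boot - 1))]
    upper = boots[int((1 - tail) * (n_boot - 1))]
    return (lower, upper), lower > delta


same = [(3, 2, 2), (2, 2, 2), (1, 0, 0)]   # identical performance
worse = [(3, 0, 3), (2, 0, 2)]             # system A misses everything
print(noninferiority_bootstrap(same), noninferiority_bootstrap(worse))
```

A 90% two-sided interval is used because its lower limit corresponds to a one-sided test at the 0.05 level.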

b. Comparison of False Detection Rate

As described previously, false detection rates (of IdentEvent and Reveal) were estimated for each test subject because sufficiently long EEG data and various patient physiologic states were included in the test data for each subject. Therefore, to test the hypothesis H_FDR (the mean false detection rate of IdentEvent is superior to, i.e., less than, that of Reveal), we applied a nonparametric paired two-sample test, the Wilcoxon signed-rank test. The null hypothesis (H0: the two detection devices have equal mean false detection rates) was rejected when the p-value of the test was < 0.05.
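A standard-library sketch of a one-sided Wilcoxon signed-rank test using the normal approximation (no continuity or tie-variance correction; zero differences dropped). Strictly, the test concerns a location shift of the paired differences rather than the mean itself. In practice a library routine such as SciPy's `scipy.stats.wilcoxon` with `alternative='less'` would normally be used; the function name and toy data here are hypothetical.

```python
import math
from statistics import NormalDist


def wilcoxon_signed_rank_less(a, b):
    """One-sided test that paired values a tend to be smaller than b.

    Returns (W_plus, p_value) from the normal approximation.
    """
    d = [x - y for x, y in zip(a, b) if x != y]  # drop zero differences
    n = len(d)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:  # assign average ranks to tied |d| values
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of 1-based ranks i+1 .. j+1
        for m in range(i, j + 1):
            ranks[order[m]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    return w_plus, NormalDist().cdf((w_plus - mean) / sd)


# Hypothetical per-subject FDRs: system A lower than system B in every subject.
w, p = wilcoxon_signed_rank_less([0.0] * 10, [float(i) for i in range(1, 11)])
print(w, round(p, 4))
```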

3. Results

3.1. Detection Sensitivity

The detection sensitivity for each test subject (with at least one seizure identified by EEG review panel) from IdentEvent is shown in Fig. 6. Overall observed sensitivity achieved almost 80% (79.45%) with an analytical standard error (by equation 1) equal to 0.0436. Therefore, an asymptotic 95% confidence interval of the IdentEvent detection sensitivity can be constructed as [0.71, 0.88]. The 95% BCa bootstrap confidence interval (number of bootstrap re-sampling = 3,000) of the IdentEvent detection sensitivity = [0.70, 0.87], which is similar to the asymptotic confidence interval.

Figure 6.

IdentEvent’s detection sensitivity for each individual subject. The independent EEG reviewers identified at least one seizure in 46 subjects.

When the same test dataset was processed by Reveal using its default setting for scalp EEG (perception score = 0.5), the overall observed sensitivity was almost 81% (80.8%), with an analytical standard error of 0.039. The asymptotic 95% confidence interval is therefore [0.73, 0.88] ([0.72, 0.88] by bootstrap). With the less sensitive settings (perception scores = 0.8 and 0.9), which yield fewer false detections, the observed sensitivity was 76% (se = 0.046; 95% CI = [0.67, 0.85]) and 74% (se = 0.049; 95% CI = [0.64, 0.84]), respectively.

3.2. False Detection Rate

The IdentEvent false detection rate (per 24 hours) for each subject is shown in Fig. 7. In the test dataset, IdentEvent performed with a mean false detection rate of 2.1 per 24 hours, with its bootstrap standard error equal to 0.49. The 95% BCa bootstrap confidence interval (number of bootstrap re-sampling = 3,000) of the IdentEvent false detection rate = [1.3, 3.3].

Figure 7.

IdentEvent’s false detection rate per 24 hours for all test subjects.

For Reveal with a perception score of 0.5, the mean false detection rate was 13.1 per 24 hours, with a bootstrap standard error of 1.76 and a 95% BCa bootstrap confidence interval of [10.1, 17.1]. With perception scores of 0.8 and 0.9, Reveal had mean false detection rates of 7.9 (se = 1.16; 95% CI = [5.83, 10.51]) and 5.7 (se = 0.93; 95% CI = [4.13, 7.84]) per 24 hours, respectively.

3.3. Statistical Comparison of Detection Performance

a. Comparison of Detection Sensitivity

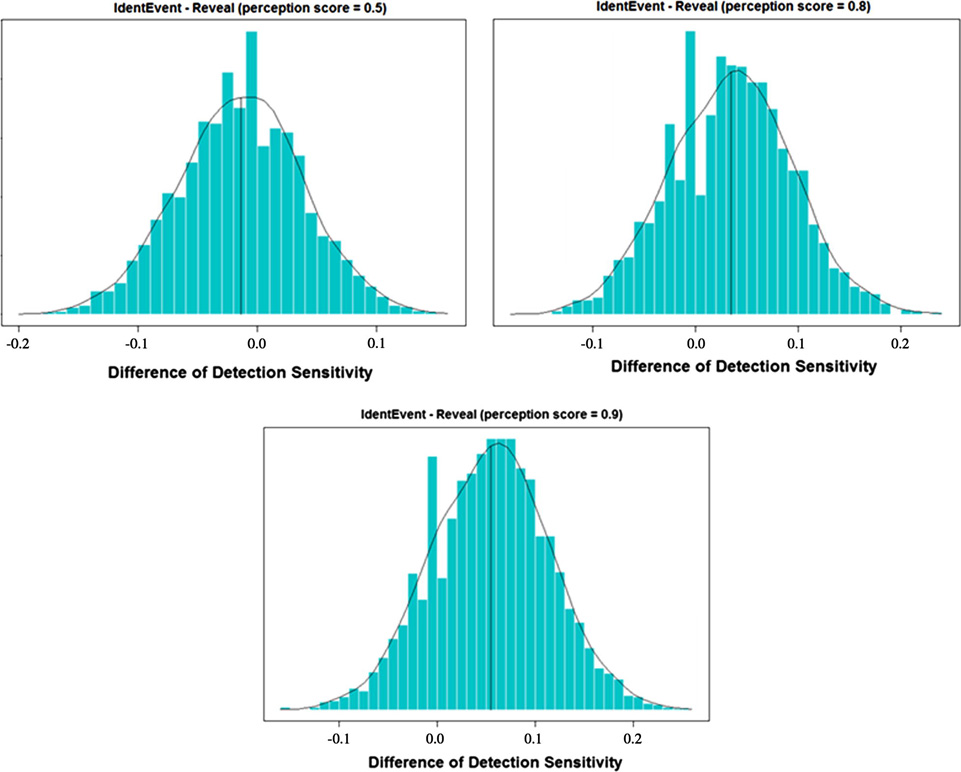

As described in Section 2.4, the comparison hypothesis was tested by constructing the 95% confidence interval of the difference in overall detection sensitivity between the two algorithms. The bootstrap BCa confidence interval was estimated as (−0.0997, 0.075). Because the lower limit of the interval was greater than δ (= −0.1), the null hypothesis was rejected. Therefore, we concluded that, with Reveal’s perception score set to 0.5, the detection sensitivity of IdentEvent was not inferior to that of Reveal at a 5% significance level. Similar results were obtained with Reveal’s perception scores of 0.8 and 0.9, for which the 95% bootstrap BCa confidence intervals were (−0.064, 0.129) and (−0.042, 0.178), respectively. The bootstrap distributions of sensitivity differences are shown in Fig. 8.

Figure 8.

Bootstrap distribution of the sensitivity difference (sensitivity_IdentEvent − sensitivity_Reveal). Reveal perception scores were set to 0.5, 0.8, and 0.9.

b. Comparison of False Detection Rate

The comparison hypothesis of false detection rate was tested by the Wilcoxon signed-rank test. The observed mean false detection rates were 2.1 and 13.1 per 24 hours from IdentEvent and Reveal (perception score = 0.5), respectively. The Wilcoxon signed-rank test yielded a p-value < 0.001, which suggested that, at a 0.05 significance level, the mean false detection rate from IdentEvent was significantly smaller than that of Reveal when its perception score was set at 0.5. The bootstrap 95% confidence interval [−15.0, −8.0] further confirmed this finding.

The test yielded a p-value < 0.001 when Reveal’s perception score was 0.8, again indicating that, at a 0.05 significance level, the mean false detection rate of IdentEvent was significantly smaller. The bootstrap 95% confidence interval for IdentEvent versus Reveal (perception score = 0.8), [−8.5, −3.6], further confirmed this finding. When Reveal’s perception score was 0.9, the p-value was 0.0026, again indicating that, at a 0.05 significance level, the mean false detection rate of IdentEvent was significantly smaller. The bootstrap 95% confidence interval [−5.8, −1.9] further confirmed this finding. The bootstrap distributions are shown in Fig. 9.

Figure 9.

Bootstrap distribution of the false detection rate difference (false detection rate_IdentEvent − false detection rate_Reveal). The Reveal perception score was set to 0.5, 0.8, and 0.9.

4. Discussion

4.1. Summary of the Findings

This study validated the performance of an offline seizure detection algorithm and demonstrated that a novel algorithm, embodied in IdentEvent, resulted in a significantly improved false detection rate with comparable detection sensitivity, when compared to the commercially available software, Reveal. With pre-fixed parameter settings, the overall detection sensitivity of IdentEvent was 79.5% with a false detection rate of 2 per 24 hours, whereas the sensitivities of Reveal were 80.8%, 76%, and 74% using three detection thresholds (perception scores), with corresponding false detection rates of 13, 8, and 6 per 24 hours. The statistical comparisons revealed that: (1) the detection sensitivity of IdentEvent was not inferior to that of Reveal (at each of the three settings tested); and (2) the false detection rate of IdentEvent was significantly smaller than that of Reveal at each of the three settings tested.

4.2. Detection Delay

Seizure detection delay, defined as the time elapsed between the first occurrence of clear electrographic changes due to seizure activity and the first detection reported by an algorithm, was not explored in this study. Detection delay is clearly critical for a seizure detection algorithm coupled to an automated intervention system; such algorithms have typically been applied to intracranial EEG recordings (Peters et al., 2001; Tsakalis et al., 2006; Founta et al., 2007). Scalp EEG-based seizure detection algorithms are most often used for rapid offline review of long-term EEG recordings or for online detection to alert health care staff when a seizure occurs; both applications benefit greatly from a detector with a low false detection rate, which reduces unnecessary work for the staff. From a validation point of view, detection delay is less important for these applications than the ability to accurately detect that a seizure occurred, particularly because electrographic seizure onset times are sometimes indeterminate, even among experienced epileptologists.

4.3. Performance of Other Existing Scalp EEG-Based Seizure Detection Algorithms

Many automated scalp EEG seizure detection methods have been proposed in the last decade. Saab and Gotman (2005) used a Bayesian formulation that outputs a variable based on the probability that a section of EEG contains seizure activity. Their algorithm had a sensitivity of 78% and a false detection rate of 0.86/h (~21 per day) in a dataset of 360 hours of scalp EEG containing 69 seizures from 16 subjects. The algorithm was designed to detect seizure onset early (median detection delay of 9.8 seconds), and this emphasis may have contributed to its high false detection rate.
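Published false detection rates are quoted either per hour or per 24 hours, so comparing them requires a simple unit conversion. The minimal sketch below makes that conversion explicit; its function names are hypothetical. A rate of 0.86 false detections per hour corresponds to 0.86 × 24 ≈ 20.6, or about 21 per day. The same conversion applies to the per-hour figures quoted for other algorithms.

```python
def per_24h(rate_per_hour: float) -> float:
    """Convert a false detection rate from events/hour to events/24 hours."""
    return rate_per_hour * 24.0


def fdr_per_24h(false_detections: int, hours_reviewed: float) -> float:
    """False detection rate per 24 hours from a raw count and the EEG duration reviewed."""
    if hours_reviewed <= 0:
        raise ValueError("hours_reviewed must be positive")
    return 24.0 * false_detections / hours_reviewed


if __name__ == "__main__":
    print(round(per_24h(0.86), 2))  # 20.64
```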

Based on power spectral analysis, Hopfengärtner et al. (2007) reported the performance of an offline seizure detection algorithm on a total of 3248 hours of scalp EEG recordings containing 148 seizures from 19 subjects. The algorithm was evaluated with three EEG montages in two frequency bands (3–12 Hz and 12–18 Hz). The montages were referenced to the Fz-Cz-Pz average, referenced to the common average, and bipolar. In the 3–12 Hz band-pass filtered EEG signals, the method yielded average sensitivities of 90.9%, 59.5%, and 45.2% for the three montages, respectively. The corresponding false detection rates were 0.29 per hour (~7 per 24 hours), 0.03 per hour (~0.7 per 24 hours), and 0.04 per hour (~1 per 24 hours). Detection sensitivities were lower (< 60%) in the 12–18 Hz band-pass filtered signals. Detection performance was acceptable with the Fz-Cz-Pz average reference in the lower frequency band. Even so, performance data from additional subjects are needed to establish the robustness of the algorithm.
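Band-limited spectral power is the kind of quantity that power-spectrum-based detectors build on. The sketch below computes periodogram power in a half-open frequency band using a naive discrete Fourier transform. Because the band is half-open, the 3–12 Hz and 12–18 Hz bands do not double-count the 12 Hz bin. This is a generic illustration, not the method of Hopfengärtner et al. (2007). The function name, the demeaning step and the O(N²) transform (chosen for self-containment rather than speed) are assumptions.

```python
import math
from typing import Sequence


def band_power(signal: Sequence[float], fs: float, f_lo: float, f_hi: float) -> float:
    """Periodogram power summed over DFT bins with f_lo <= f < f_hi (Hz).

    Naive O(N^2) DFT for illustration; a practical implementation would use an FFT.
    """
    n = len(signal)
    mean = sum(signal) / n
    x = [v - mean for v in signal]  # remove the DC offset
    power = 0.0
    for k in range(1, n // 2 + 1):
        f = k * fs / n
        if not (f_lo <= f < f_hi):
            continue
        re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
        im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
        power += (re * re + im * im) / n
    return power


if __name__ == "__main__":
    fs = 128.0
    eeg = [math.sin(2 * math.pi * 6.0 * t / fs) for t in range(256)]  # 2 s of a 6 Hz rhythm
    print(band_power(eeg, fs, 3.0, 12.0) > band_power(eeg, fs, 12.0, 18.0))  # True
```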

Meier et al. (2008) recently reported a new approach for automatic online and real-time seizure detection in scalp EEG. The algorithm was assessed in EEG recordings from 57 subjects, totaling 43 hours of EEG segments (91 seizures). The test sample excluded “subclinical” (electrographic-only) seizures. Such seizures are not infrequent in EMU recordings and may constitute all of a particular patient’s ictal events. On this sample, the algorithm was reported to achieve a mean sensitivity of 90%. The mean false detection rate was over 12 per 24 hours, mostly arising from EEG segments with amplitude-depression seizures. Including subclinical seizures in the test sample would have made the results more applicable to routine EMU monitoring.
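A few lines of arithmetic show how excluding subclinical seizures affects a reported sensitivity. In the sketch below, the `Seizure` record and the counts in the example are hypothetical and are not data from Meier et al. (2008). They only show that dropping a harder-to-detect subset from the denominator raises the estimate.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Seizure:
    detected: bool
    subclinical: bool  # electrographic-only, no clinical manifestation


def sensitivity(seizures: List[Seizure], include_subclinical: bool = True) -> float:
    """Fraction of seizures detected, optionally excluding subclinical events."""
    pool = [s for s in seizures if include_subclinical or not s.subclinical]
    if not pool:
        raise ValueError("no seizures in the evaluated sample")
    return sum(s.detected for s in pool) / len(pool)


if __name__ == "__main__":
    # Hypothetical sample: 9 of 10 clinical and 2 of 5 subclinical seizures detected.
    sample = ([Seizure(True, False)] * 9 + [Seizure(False, False)]
              + [Seizure(True, True)] * 2 + [Seizure(False, True)] * 3)
    print(sensitivity(sample, include_subclinical=False))  # 0.9
    print(round(sensitivity(sample), 3))                     # 0.733
```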

4.4. Discrepancy Between Reveal’s False Detection Rate in This Study and Previously Reported Rates

Reveal’s false detection rates observed in this study (5.7–13.1 false positives per day) were slightly higher than those previously reported (2.4–14.4 false positives per day) (Wilson et al., 2004). One possible explanation for this discrepancy is that Wilson et al. (2004) estimated false detection rates exclusively from EEGs recorded in non-epileptic subjects. Sensitivity and false detection rate were therefore evaluated in two different populations. This design is generally valid in clinical trials of binary diagnostic tests, where sensitivity is evaluated in a patient population and specificity in normal controls. However, seizure detection software is an adjunctive diagnostic tool. It is designed to help determine when a seizure occurred, not to classify a patient as normal or abnormal. Sensitivity should therefore be evaluated on seizure events. Specificity (false detections) should be evaluated on seizure-free EEG from the same patient population in which the software is intended to be used. Limiting specificity testing to a normal (non-epileptic) population would yield misleadingly low false detection rates. It would also not reflect how accurately the software performs in actual clinical use.
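The evaluation design argued for here can be sketched as event-based scoring. Sensitivity is computed over seizure events, and false detections are counted in the seizure-free EEG of the same recordings. Several parts of the sketch are assumptions for illustration: the overlap rule (any temporal overlap counts as a hit), the interval representation and the function names. They are not the scoring rules of this study or of Wilson et al. (2004). Seizure intervals are assumed not to overlap each other.

```python
from typing import Sequence, Tuple

Interval = Tuple[float, float]  # (start_s, end_s)


def _overlaps(a: Interval, b: Interval) -> bool:
    return a[0] < b[1] and b[0] < a[1]


def score_recording(seizures: Sequence[Interval], detections: Sequence[Interval],
                    duration_s: float) -> Tuple[int, int, int, float]:
    """Return (seizures detected, seizures total, false detections, seizure-free hours)."""
    detected = sum(any(_overlaps(s, d) for d in detections) for s in seizures)
    false_det = sum(not any(_overlaps(d, s) for s in seizures) for d in detections)
    seizure_free_h = (duration_s - sum(end - start for start, end in seizures)) / 3600.0
    return detected, len(seizures), false_det, seizure_free_h


def summarize(scores: Sequence[Tuple[int, int, int, float]]) -> Tuple[float, float]:
    """Pooled sensitivity and false detections per 24 h of seizure-free EEG."""
    detected = sum(s[0] for s in scores)
    total = sum(s[1] for s in scores)
    false_det = sum(s[2] for s in scores)
    free_h = sum(s[3] for s in scores)
    return detected / total, 24.0 * false_det / free_h
```

Both the seizures and the seizure-free time come from the same patients' recordings. The false detection rate therefore reflects the background EEG and artifacts of the population in which the software will be used.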

The present performance testing used EEG recordings from patients with demonstrated or suspected epilepsy, the primary target population for seizure detection software. Recordings were not excluded on the basis of quality, provided they were deemed clinically acceptable. For these reasons, we believe that the performance statistics reported here are more accurate than those reported in Wilson et al. (2004), and that the study design is more appropriate for performance testing.

4.5. User Tuneability

This study aimed to develop an automated seizure detection system that provides clinically useful information for patients undergoing long-term EEG monitoring. The system was intended to work without requiring parameter input from clinical users. This aim was achieved by validating system performance with pre-fixed parameter settings in a standard EEG recording montage. However, the ictal EEG patterns of certain patients may not be captured. One possible cause is an unusual location of the reference channel. Another is ictal activity with higher amplitudes than most others, which exceeds the default amplitude threshold for artifact rejection. For such cases, the detection system should ideally offer easy-to-use tuning, so that users could switch to a different detection montage or adjust the amplitude threshold for artifact rejection from its default setting. Similarly, tuning may help reduce false detections in patients in whom the user observes the same EEG pattern repeatedly causing false detections.
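The kind of user tuning described in this section could be exposed as a small settings object whose defaults are the pre-fixed, validated parameters. Everything in this sketch is hypothetical: the field names, the montage labels and the default values are not IdentEvent's actual parameters.

```python
from dataclasses import dataclass, replace

ALLOWED_MONTAGES = {"bipolar", "common_average", "referential"}  # hypothetical labels


@dataclass(frozen=True)
class DetectionSettings:
    montage: str = "bipolar"            # hypothetical default detection montage
    amplitude_reject_uv: float = 500.0  # hypothetical artifact-rejection threshold (µV)


def tuned(base: DetectionSettings, **changes) -> DetectionSettings:
    """Return a per-patient copy of the settings; the validated defaults stay unchanged."""
    new = replace(base, **changes)
    if new.montage not in ALLOWED_MONTAGES:
        raise ValueError(f"unknown montage: {new.montage!r}")
    if new.amplitude_reject_uv <= 0:
        raise ValueError("amplitude_reject_uv must be positive")
    return new


DEFAULTS = DetectionSettings()
# Example: a patient whose high-amplitude ictal rhythm was being rejected as artifact.
patient_settings = tuned(DEFAULTS, montage="common_average", amplitude_reject_uv=800.0)
```

The settings are immutable, and each per-patient configuration is derived as a copy. This makes reverting to the validated defaults trivial.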

4.6. PMRS Review Feature

In addition to the seizure detection report, scanning PMRS values over time provided an efficient way to identify seizures in long-term recordings because: (1) PMRS can be scanned in a much longer time window (e.g., 30 minutes per page) than raw signals; (2) PMRS is very sensitive to sudden changes in signal properties; and (3) most importantly, the pattern of PMRS during an ictal period can be visually distinguished from that of artifacts (see Fig. 10 for an example).

Figure 10.

PMRS time series from 15 minutes before to 15 minutes after a detection. Top: Detection of a seizure. PMRS values throughout the ictal period are markedly lower than in the surrounding periods, forming a concave (U-shaped) profile. Bottom: Detection of a sudden sleep stage change. PMRS drops sharply during the change but quickly returns to baseline afterward.
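The visual distinction in Fig. 10 contrasts a sustained depression of PMRS during a seizure with a brief dip at a sleep stage transition. It can be approximated by measuring how long PMRS stays below a threshold. In this sketch, PMRS is treated as an already-computed numeric series. The threshold and the minimum run length are hypothetical values, and this is not a feature of IdentEvent as described here.

```python
from typing import List, Optional, Sequence, Tuple


def low_runs(values: Sequence[float], threshold: float) -> List[Tuple[int, int]]:
    """Half-open index runs [start, end) in which values stay below threshold."""
    runs: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, v in enumerate(values):
        if v < threshold and start is None:
            start = i
        elif v >= threshold and start is not None:
            runs.append((start, i))
            start = None
    if start is not None:
        runs.append((start, len(values)))
    return runs


def classify_dips(values: Sequence[float], threshold: float,
                  min_sustained: int) -> List[Tuple[int, int, str]]:
    """Label each below-threshold run as 'sustained' (seizure-like) or 'transient'."""
    return [(s, e, "sustained" if e - s >= min_sustained else "transient")
            for s, e in low_runs(values, threshold)]


if __name__ == "__main__":
    pmrs = [1.0, 1.0, 0.2, 1.0, 1.0, 0.3, 0.3, 0.3, 0.3, 1.0]
    print(classify_dips(pmrs, threshold=0.5, min_sustained=3))
    # [(2, 3, 'transient'), (5, 9, 'sustained')]
```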

4.7. Computation Performance

Running on a desktop PC with an Intel dual-core 2 GHz processor, the IdentEvent algorithm processed a 24-hour standard scalp EEG recording in slightly less than 30 minutes (roughly 50 times faster than real time). Because IdentEvent is a fully threaded application, users can expect better performance on systems with faster processors or additional cores. This throughput suggests that the IdentEvent detection algorithm will be clinically useful for identifying recorded seizures and supporting timely EEG review.
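The speed-up relative to real time is the recording duration divided by the processing time. A one-line helper makes the arithmetic explicit; its name is hypothetical. A 24-hour recording processed in 30 minutes is 48 times real time, and a factor of 50 corresponds to a processing time of 28.8 minutes.

```python
def realtime_factor(recording_hours: float, processing_minutes: float) -> float:
    """How many times faster than real time a recording was processed."""
    if processing_minutes <= 0:
        raise ValueError("processing_minutes must be positive")
    return recording_hours * 60.0 / processing_minutes


print(realtime_factor(24.0, 30.0))  # 48.0
```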

4.8. Detection in Other Clinical Settings and Patient Populations

In this study, the detection algorithm was evaluated in a population of adult patients admitted to EMUs for diagnostic or pre-surgical evaluation. Reliable seizure detection is equally important in more acute clinical settings, such as intensive care units and emergency departments, and for pediatric patients of all ages. However, EEG background activity and ictal patterns vary widely across these clinical settings and patient populations, and this heterogeneity may confound the assessment of algorithm performance. We plan to develop and test the algorithm in a pediatric population, in which, for example, detection of prolonged generalized spike-and-wave discharges will be assessed.

4.9. Limitations and Future Improvement

IdentEvent has limited ability to detect seizures that produce little or no change in the scalp EEG. Furthermore, IdentEvent can miss seizures when the EEG is dominated by movement or muscle artifact. This is because it systematically rejects EEG epochs with abnormally high amplitude variation (typical of movement artifact) or high signal frequency (typical of muscle artifact). We anticipate that adding an automatic artifact removal method (LeVan et al., 2006) will improve detection performance in these situations. In addition, with further algorithm training and validation testing, we expect that IdentEvent can also be used to analyze long-term EEG recordings from intensive care units and pediatric patient populations.
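The artifact-related misses described above follow from rejecting epochs on amplitude variation and signal frequency. A minimal sketch of such a rule is shown below. It uses peak-to-peak range as the amplitude measure and half the zero-crossing rate as a crude frequency proxy. Both measures, the function names and the thresholds are assumptions, not IdentEvent's actual criteria. A genuinely high-amplitude ictal rhythm would be rejected by the same rule, which is why the amplitude threshold matters.

```python
from typing import Optional, Sequence


def dominant_freq_proxy(epoch: Sequence[float], fs: float) -> float:
    """Approximate dominant frequency (Hz) as half the zero-crossing rate of the demeaned epoch."""
    mean = sum(epoch) / len(epoch)
    x = [v - mean for v in epoch]
    crossings = sum(1 for a, b in zip(x, x[1:]) if (a < 0) != (b < 0))
    return crossings * fs / len(epoch) / 2.0


def rejection_reason(epoch: Sequence[float], fs: float,
                     max_range_uv: float = 500.0,
                     max_freq_hz: float = 30.0) -> Optional[str]:
    """Return why an epoch would be rejected, or None if it is kept (thresholds hypothetical)."""
    if max(epoch) - min(epoch) > max_range_uv:
        return "amplitude"       # movement-like: abnormally large amplitude variation
    if dominant_freq_proxy(epoch, fs) > max_freq_hz:
        return "high_frequency"  # muscle-like: dominant activity above max_freq_hz
    return None
```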

Supplementary Material

Acknowledgments

This work was supported by NIH-NINDS grants 5R01NS050582 to JCS and 1R43NS064647 to DSS. Co-authors DSS, RTK, and JCS have a financial interest in the described seizure detection software.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Cohen J. A coefficient of agreement for nominal scales. Educ Psychol Meas. 1960;20(1):37–46.

- Durkalski VL, Palesch YY, Lipsitz SR, Rust PF. Analysis of clustered matched-pair data for a non-inferiority study design. Stat Med. 2003;22:279–290. doi: 10.1002/sim.1385.

- Efron B, Tibshirani RJ. An Introduction to the Bootstrap. Boca Raton: Chapman & Hall/CRC; 1994.

- Founta KN, Smith R. A novel closed-loop stimulation system in the control of focal, medically refractory epilepsy. Acta Neurochir Suppl. 2007;97(2):357–362. doi: 10.1007/978-3-211-33081-4_41.

- Hopfengärtner R, Kerling F, Bauer V, Stefan H. An efficient, robust and fast method for the offline detection of epileptic seizures in long-term scalp EEG recordings. Clin Neurophysiol. 2007;118:2332–2343. doi: 10.1016/j.clinph.2007.07.017.

- Khan YU, Gotman J. Wavelet-based automatic seizure detection in intracerebral electroencephalogram. Clin Neurophysiol. 2003;114(5):898–908. doi: 10.1016/s1388-2457(03)00035-x.

- Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33:159–174.

- LeVan P, Urrestarazu E, Gotman J. A system for automatic artifact removal in ictal scalp EEG based on independent component analysis and Bayesian classification. Clin Neurophysiol. 2006;117:912–927. doi: 10.1016/j.clinph.2005.12.013.

- Meier R, Dittrich H, Schulze-Bonhage A, Aertsen A. Detecting epileptic seizures in long-term human EEG: A new approach to automatic online and real-time detection and classification of polymorphic seizure patterns. J Clin Neurophysiol. 2008;25(3):119–131. doi: 10.1097/WNP.0b013e3181775993.

- Peters TE, Bhavaraju NC, Frei MG, Osorio I. Network system for automated seizure detection and contingent delivery of therapy. J Clin Neurophysiol. 2001;18(6):545–549. doi: 10.1097/00004691-200111000-00004.

- Pincus SM. Approximate entropy as a measure of system complexity. Proc Natl Acad Sci USA. 1991;88:2297–2301. doi: 10.1073/pnas.88.6.2297.

- Rao JNK, Scott AJ. A simple method for the analysis of clustered binary data. Biometrics. 1992;48(2):577–585.

- Saab ME, Gotman J. A system to detect the onset of epileptic seizures in scalp EEG. Clin Neurophysiol. 2005;116:427–442. doi: 10.1016/j.clinph.2004.08.004.

- Tsakalis K, Chakravarthy N, Sabesan S, Iasemidis LD, Pardalos PM. A feedback control systems view of epileptic seizures. Cybern Syst Analysis. 2006;42(4):483–495.

- Wilson SB, Scheuer ML, Plummer C, Young B, Pacia S. Seizure detection: correlation of human experts. Clin Neurophysiol. 2003;114(11):2156–2164. doi: 10.1016/s1388-2457(03)00212-8.

- Wilson SB, Scheuer ML, Emerson RG, Gabor AJ. Seizure detection: evaluation of the Reveal algorithm. Clin Neurophysiol. 2004;115:2280–2291. doi: 10.1016/j.clinph.2004.05.018.
