Abstract

Technological innovation, broadly defined as the development and introduction of new drugs, devices, and procedures, has played a major role in advancing the field of cardiothoracic surgery. It has generated new forms of care for patients and improved treatment options. Innovation, however, comes at a price. Total national health care expenditures now exceed $2 trillion per year in the United States and all current estimates indicate that this number will continue to rise. As we continue to seek the most innovative medical treatments for cardiovascular disease, the spiraling cost of these technologies comes to the forefront. In this article, we address 3 challenges in managing the health and economic impact of new and emerging technologies in cardiothoracic surgery: (1) challenges associated with the dynamics of technological growth itself; (2) challenges associated with methods of analysis; and (3) the ways in which value judgments and political factors shape the translation of evidence into policy. We conclude by discussing changes in the analytical, financial, and institutional realms that can improve evidence-based decision-making in cardiac surgery.

Keywords: health policy, cost effectiveness, technology, clinical trials

In few fields of public policy are the use and cost of services so powerfully driven by rapid innovation as they are in medicine, where new drugs, procedures, and devices continuously emerge. Cardiothoracic surgery is particularly innovative, with new forms of surgical care being introduced at a high rate that offer the promise of reducing pain, speeding recovery, and ultimately decreasing morbidity and mortality. Unfortunately, along with expanding diagnostic and therapeutic options, health care costs have risen dramatically. According to the Centers for Medicare and Medicaid Services, over the past 3 decades health care spending has grown at an average annual rate of 2.5% faster than the economy as measured by the nominal gross domestic product.1 Annual spending on health care rose to $2 trillion in 2005 and is estimated to reach $4 trillion (20% of the gross domestic product) by 2015. Medical care for cardiovascular diseases accounted for 6 of the 20 most expensive conditions billed to Medicare in 2006, totalling $103 billion2 (Table 1).

Table 1.

Top 10 Most Expensive Conditions Billed to Medicare in 2006

| Rank | Principal Diagnosis | Total National Hospital Bill (millions) | Percentage of National Bill | Number of Hospital Stays (thousands) |
|---|---|---|---|---|
| 1 | Coronary artery disease | $29,245 | 6.8 | 659 |
| 2 | Congestive heart failure (CHF) | $23,915 | 5.4 | 835 |
| 3 | Sepsis | $20,319 | 4.8 | 430 |
| 4 | Acute myocardial infarction (AMI, heart attack) | $19,090 | 4.3 | 384 |
| 5 | Pneumonia | $17,541 | 4.0 | 729 |
| 6 | Osteoarthritis | $16,017 | 3.6 | 423 |
| 7 | Complication of device, implant, or graft | $15,965 | 3.6 | 370 |
| 8 | Respiratory failure, insufficiency, arrest (adult) | $14,869 | 3.4 | 251 |
| 9 | Cardiac dysrhythmias | $13,278 | 3.0 | 491 |
| 10 | Acute cerebrovascular disease (stroke) | $10,855 | 2.4 | 351 |

Adapted with permission from Andrews RM: The national hospital bill: the most expensive conditions by payer, 2006. HCUP Statistical Brief #59. Rockville, MD, Agency for Healthcare Research and Quality, September 2008. Available at: http://www.hcup-us.ahrq.gov/reports/statbriefs/sb59.pdf.

Many economists and policymakers believe that technological advances are a key driver of health expenditure growth.3 In response, policymakers feel an intensifying imperative to manage both the health and the economic impact of new and emerging technologies. To inform public policy and budgetary decisions, interest has increased in developing a more rigorous clinical and economic evidence base. Within the American Recovery and Reinvestment Act of 2009, $1.1 billion in research dollars has been allocated for comparative effectiveness research.4 Nevertheless, despite increased investment in evaluative research, managing innovation in health care technology remains a formidable task.

In this article, we address the 3 following challenges in managing the health and economic impact of new and emerging technologies in cardiothoracic surgery: (1) challenges associated with the dynamics of technological growth itself; (2) challenges associated with our methods of analysis; and (3) the ways in which political factors and value judgments shape the translation of evidence into policy. Through an understanding of these challenges we can move beyond simply identifying the problem of rising costs in health care technology to initiating change in our evidence-based models and ultimately our policy decisions.

Dynamics of Technological Change

Cardiothoracic surgery has a rich tradition of innovation. From the development of cardiopulmonary bypass to the design of devices for the failing heart, technology continues to transform the field. However, when an area of medicine is so heavily impacted by technology, managing the dynamics of technological growth presents several significant challenges. At the outset, policymakers must understand the many areas within cardiothoracic surgery where technological growth is occurring. Through this understanding, policymakers can make decisions based not only on the costs of new technology but also on how these innovations may impact the field and affect delivery of care.

Coronary revascularization is one area of cardiothoracic surgery that has been heavily influenced by technological growth. Driven in part by the need to develop less invasive approaches for the management of coronary artery disease, surgeons have created numerous revascularization strategies, including off-pump coronary artery bypass grafting (CABG), minimally invasive CABG, endoscopic CABG, hybrid revascularization, and transmyocardial revascularization. In the area of heart failure, mechanical circulatory support devices, including ventricular assist devices (VADs) and ventricular replacement devices, have played a major role in advancing the field for both adults and children. Moreover, as cell-based strategies begin to gradually translate from the laboratory to clinical trials, management of advanced heart failure will continue to be transformed. Valvular surgery has undergone significant change from improvements in valves themselves to new approaches to valvular surgery, including minimally invasive surgery, robotic surgery, and now clinical trials of percutaneous repairs. Within cardiac surgery, there has been a significant increase in the number of concomitant ablation procedures performed for atrial fibrillation. The growth of ablation procedures has been driven, in part, by the development of a variety of ablation catheters that allow ablation to be performed with greater technical ease than the surgical approach used with the original Cox-Maze procedure. In the management of aortic pathology, including aortic aneurysms and dissections, thoracic endovascular aortic repair has provided surgeons with new, less invasive options for the management of aortic disease. Finally, within the field of general thoracic surgery, video-assisted thoracic surgery has significantly impacted the approach to lung resections, esophageal resections, lung volume reduction surgery, and thoracic sympathectomy. 
Technological growth has transformed many of the most common cardiothoracic surgical procedures and has led to new operations for previously untreatable disease, like hypoplastic left heart syndrome.

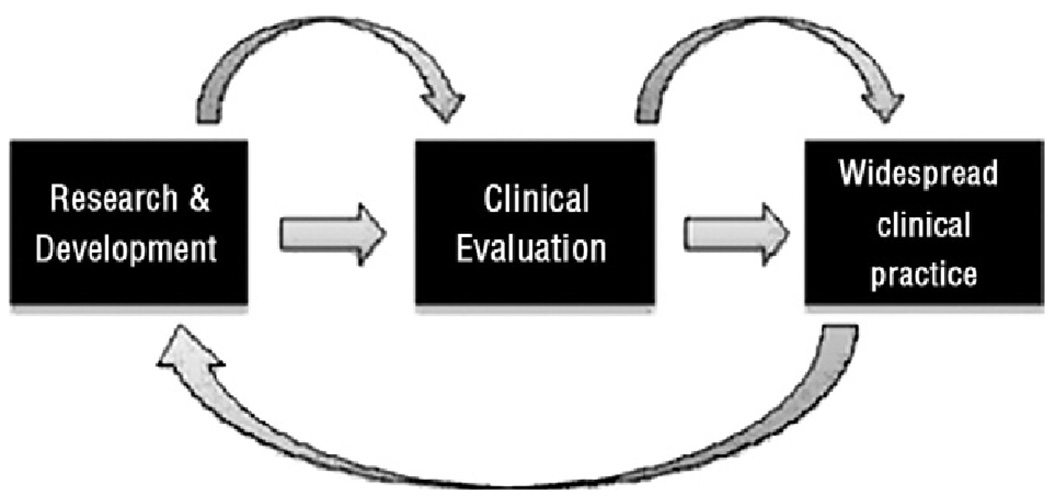

Understanding the rate of innovation and patterns of growth in new surgical procedures, however, is not the only challenge. Policymakers must understand and contend with the fact that much innovation occurs after a new procedure is introduced into widespread practice: “learning by doing” is an important component of technological change in surgery. As part of this process, physicians begin to understand how to best use a new procedure within the context of other therapeutic options, operator and institutional learning curves begin to plateau, and patient selection is refined, which ultimately improves outcomes. Moreover, the experience gained from using technology in clinical practice may provide important feedback to the research enterprise, enabling bench scientists, clinicians, and engineers to further modify a technology (Fig. 1).

Figure 1.

Flow of technological change.

The evolution of the left ventricular assist device (LVAD) provides a strong example of learning by doing in clinical practice. The Randomized Evaluation of Mechanical Assistance for the Treatment of Congestive Heart Failure (REMATCH) trial was a multicenter study designed to compare long-term implantation of LVADs with optimal medical management for patients with end-stage heart failure who required, but did not qualify to receive, cardiac transplantation.5 The results of this trial led to FDA approval and Medicare reimbursement for LVADs for long-term use. As this procedure disseminated into practice, feedback from clinicians resulted in critical changes to the device, such as locking screw rings to prevent detachment of the blood-transport conduits to and from the pump, and stimulated the development of new-generation devices to contend with uncorrectable limitations of the first-generation device. Meanwhile, clinicians improved their management of LVAD patients by modifying operative techniques and developing clinical protocols to prevent and manage driveline infections, which were the Achilles heel of these devices. They also began to explore patient characteristics that defined optimal candidates for this particular therapy.6 In the 2 years following Medicare approval, an analysis of a postmarketing registry showed that the overall survival rate of LVAD patients remained similar to that seen in the trial. However, stratification of destination therapy candidates by risk factors, such as poor nutrition, hematological abnormalities, and markers of end organ dysfunction, correlated with dramatically different 1-year survival rates.7 Insights into these factors improved patient selection.

Such incremental improvements in procedure and clinical management typically tend to expand the number of patients who benefit within a given disease category. For example, only 4% of coronary artery disease patients treated with CABG surgery today would have met the eligibility criteria of the trials that established its initial efficacy.8 Over time, improvements in surgical technique expanded the use of CABG surgery to patients with acute myocardial infarction, patients with acute cardiogenic shock, elderly people, and patients with multiple comorbidities, a pattern that holds for many other technologies. Historically, type B aortic dissections, for example, have been treated with antihypertensive therapy as the risks of open surgery outweighed the potential benefits. As the feasibility and safety of stent-graft therapy for thoracic aneurysms have been investigated, the technique has gradually been applied to aortic dissections to avert aneurysmal dilation and rupture.9 Similarly, the percutaneous aortic valve represents a technology that, as part of the Placement of Aortic Transcatheter Valve trial, is currently being evaluated in a limited population but will likely expand to a broader group of patients over the next decade. Thus, the target population for procedures often expands to include less sick patients (for whom the risks of the procedure are now acceptable) and sometimes to sicker patients, who initially were too high risk to be considered candidates.

The fact that target populations expand highlights the elasticity of demand, which can pose challenges for policymakers, who may watch evidence-based answers morph before their eyes into new questions. These highly dynamic patterns of evolution and adaptation, in turn, create challenges for our evaluative methods.

Challenges in Measuring Health and Economic Effects

Clinical Outcomes

Managing technology is a multifaceted, dynamic task. Better information on the efficacy and cost-effectiveness of medical technologies can guide policymaking, and industrialized countries have expanded their investment in clinical evaluative research. However, challenges in the analytical enterprise exist.

Randomized controlled trials (RCTs) remain the gold standard in clinical evaluation. Rigorous evidence from RCTs and other well-controlled clinical studies can inform policy decisions about the efficacy and safety of a new technology. Patients, physicians, and policymakers want to establish the benefits of new technologies early and introduce them into general use guided by evidence. As such, there has been an increase in RCTs over time. RCTs in surgery, however, face unique challenges compared to the well-developed schemes used to test pharmaceutical treatments.

For example, complete masking, an important technique for controlling observational bias, is often difficult, if not impossible, to carry out in surgical trials. Also, while a pharmaceutical agent generally does not undergo change while it progresses through the clinical trials process, surgical procedures typically undergo extensive refinement during the development process. Thus, the results of a comparative surgical trial will depend on when in the evolution of a procedure the comparison was made. This phenomenon creates a challenge when deciding when to bring a surgical procedure or device to clinical trial. A delay in assessment may result in widespread use of a technology propagated by single-institution or retrospective reports of success that eliminate the equipoise necessary to justify randomization of patients. Conversely, evaluating a procedure too early will potentially result in increased morbidity and mortality associated with the learning experience rather than the technology itself; surgical trials need to account for learning curves. RCTs are also typically conducted in specialized centers with well-defined populations. Trials of emerging procedures often call into question how significantly the specialized skill of a surgeon and volume of a clinical center will affect the generalizability of the results. In addition, equipoise for randomization may be more difficult to achieve in trials that compare surgical to pharmacologic treatments (as patient and physician preferences may be strong for such radically different treatment options) than in trials comparing 2 pharmaceuticals.

Finally, surgical trials face obstacles as target populations are typically much smaller than those for nonsurgical therapies. This affects the design of trial endpoints. In an ideal scenario, a clinical endpoint is relevant, easy to interpret, and sensitive to treatment differences. At times, however, a single primary endpoint is undesirable because either clinically important events are relatively infrequent (and would require the randomization of a large number of patients) or the treatment effect is manifested on a variety of important endpoints. A composite endpoint may highlight the difference between treatment arms by combining different endpoints (eg, in LVAD trials by combining mortality and device reliability in device replacement-free survival). Composite endpoints have several potential advantages—increased statistical precision and efficiency (based on higher event rates), smaller and less costly sample sizes and shorter study lengths. There are also several disadvantages.10 Most notably, describing the clinical benefit claim on a composite endpoint may be difficult, and it is also difficult to describe a scoring system that, on a sound scientific basis, weighs the relative importance of the parameters of a composite.11 This is especially problematic if the components of a composite endpoint are not consistently superior for one therapy.
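The link between event rates and sample size described above can be made concrete with a rough calculation. The sketch below uses the standard normal-approximation formula for comparing 2 proportions; the event rates are hypothetical values chosen only for illustration and are not taken from any trial discussed in this article. Both scenarios assume the same 25% relative risk reduction.

```python
from math import ceil
from statistics import NormalDist


def n_per_arm(p_control: float, p_treated: float,
              alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate patients per arm for a two-sided test of two proportions.

    Normal-approximation formula; real trial planning would also account
    for dropout, interim analyses, and time-to-event methods.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_treated * (1 - p_treated)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p_control - p_treated) ** 2)


# Hypothetical event rates (assumptions for illustration only).
single = n_per_arm(0.08, 0.06)     # infrequent single endpoint
composite = n_per_arm(0.20, 0.15)  # more frequent composite endpoint

print(f"single endpoint: {single} per arm")
print(f"composite endpoint: {composite} per arm")
```

Under these assumed rates the composite endpoint needs roughly a third as many patients per arm, which is the efficiency argument for composites. The calculation says nothing about interpretability, which is the disadvantage discussed in the text.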

The recently published Synergy between percutaneous coronary intervention (PCI) with Taxus and Cardiac Surgery (SYNTAX) trial, in which 1800 patients with left main or 3-vessel coronary artery disease were randomized to undergo CABG or PCI to determine which was the better revascularization strategy, demonstrates the potential challenge with composite endpoints.12 A cursory review of the study would lead to the conclusion that CABG is the better treatment because it resulted in lower rates of the combined endpoint of major adverse cardiac events or cerebrovascular events at 1 year. A closer analysis, however, demonstrates that the composite endpoint, which included death from any cause, stroke, myocardial infarction, or repeat revascularization, was largely driven by the need for revascularization in the PCI group. Both groups at 12 months had similar rates of death from any cause and myocardial infarction.12 However, although not powered for this individual component of the composite, stroke was lower in the PCI group. These results make interpretation of trial outcomes challenging. In addition to understanding the effects of a composite, the follow-up time is important to consider. In SYNTAX, the follow-up time of 12 months limits the evaluation of long-term efficacy of PCI versus CABG in patients with severe coronary artery disease. Often in surgical trials, adverse events are front loaded and benefits are seen over a longer time. In this context, making health policy decisions on the results of randomized trials becomes challenging because the true risks and benefits may not be apparent at the end of the trial.

Thus, although RCTs can provide a firm foundation of expertise about the efficacy and safety of a novel procedure at a certain point in its evolution, the importance of learning by doing means that the results of a trial may become less relevant over time. As technologies evolve in clinical practice, therefore, evaluations should be revisited. Observational studies and “pragmatic” or “practical” RCTs in high-cost, high-prevalence conditions can be useful for analyzing changing outcomes.

Over the past decade, national clinical registries have grown in popularity due to increased attention to outcomes research and quality assurance. The Society of Thoracic Surgeons National Database, established in 1989, serves as one such example. The Society of Thoracic Surgeons database currently captures outcomes from 85% of the cardiac surgery centers in the US and includes 3.6 million patient records. In addition, the national cardiovascular data registry of the American College of Cardiology contains over 1 million patient records. On a local level, many states, including New York, Massachusetts, and California, require that all centers performing cardiac surgery collect patient data and report outcomes.

A unique example of a recently created registry, which is sponsored by the Centers for Medicare and Medicaid Services, the US Food and Drug Administration (FDA), and the National Heart, Lung, and Blood Institute, is the Interagency Registry for Mechanically Assisted Circulatory Support (INTERMACS). INTERMACS currently contains data from 88 academic medical centers and over 1500 patients who have received mechanical circulatory support devices (MCSDs). A unique feature of INTERMACS is its capacity for continued, longitudinal assessment of MCSDs. As noted previously, during clinical trials a technology will only be studied for a relatively short period on a small, often homogeneous study population. Changes in technology may not be captured during the course of a trial, calling into question the future benefits of a particular device or technique. Through INTERMACS, for example, MCSDs can be continuously assessed in an effort to study not only the diffusion of this technology but also the patient characteristics that may aid in continued refinement of patient selection. Furthermore, adverse events and survival may be analyzed in relation to patient risk factors, device type, or whether a patient was receiving a device as a bridge to transplant or destination therapy. As the registry is continuously updated, changes in risk factors and outcomes may be monitored over time as technology evolves and clinical decision-making becomes more refined.

Registries, however, are not without limitations. With any registry there may be less monitoring of data quality than in a clinical trial; there is a significant financial burden on data collection and reporting, and the ability to adjust for important differences among patients when making comparisons may be hampered by limited knowledge of what patient characteristics affect the evolution of the disease. The newer methods of analysis, such as propensity scores, address some of these limitations, and registries, by nature of their patient volumes and longitudinal follow-up, are a valuable resource for ongoing assessment.
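To illustrate how propensity-based methods adjust for differences among patients, the following is a minimal sketch of inverse probability weighting on an invented registry. All patient counts are hypothetical. The propensity score is estimated here simply as the treated fraction within each level of a single binary risk factor; an actual registry analysis would typically model many covariates (for example, with logistic regression) and would still be vulnerable to unmeasured confounders.

```python
from collections import defaultdict

# Hypothetical registry rows: (high_risk, treated, died). Invented data.
rows = (
    [(1, 1, 1)] * 30 + [(1, 1, 0)] * 50    # high risk, treated
    + [(1, 0, 1)] * 10 + [(1, 0, 0)] * 10  # high risk, untreated
    + [(0, 1, 1)] * 2 + [(0, 1, 0)] * 18   # low risk, treated
    + [(0, 0, 1)] * 16 + [(0, 0, 0)] * 64  # low risk, untreated
)


def propensity_by_stratum(rows):
    """Estimate P(treated | risk level) as the treated fraction per level."""
    counts = defaultdict(lambda: [0, 0])  # level -> [treated, total]
    for high_risk, treated, _ in rows:
        counts[high_risk][0] += treated
        counts[high_risk][1] += 1
    return {level: t / n for level, (t, n) in counts.items()}


def naive_difference(rows):
    """Unadjusted mortality difference, treated minus untreated."""
    treated = [died for _, t, died in rows if t]
    control = [died for _, t, died in rows if not t]
    return sum(treated) / len(treated) - sum(control) / len(control)


def ipw_difference(rows):
    """Mortality difference after inverse probability weighting."""
    score = propensity_by_stratum(rows)
    weighted_deaths = {0: 0.0, 1: 0.0}
    weight_total = {0: 0.0, 1: 0.0}
    for high_risk, treated, died in rows:
        # Probability of receiving the treatment this patient actually got.
        p = score[high_risk] if treated else 1 - score[high_risk]
        weighted_deaths[treated] += died / p
        weight_total[treated] += 1 / p
    return (weighted_deaths[1] / weight_total[1]
            - weighted_deaths[0] / weight_total[0])


print(f"naive difference: {naive_difference(rows):+.4f}")
print(f"weighted difference: {ipw_difference(rows):+.4f}")
```

In this constructed example the treated group is dominated by high-risk patients, so the unadjusted comparison makes the treatment look harmful, whereas the weighted comparison reverses the sign. The reversal is a property of the invented data, not a finding about any device or procedure.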

Moreover, if a procedure or device undergoes substantial incremental change or is used in a different patient population, another randomized trial to test the effectiveness of this new indication for use may be warranted. “Practical” clinical trials address questions that arise through further clinical use by selecting clinically relevant interventions to compare; by including diverse populations of patients from a variety of practice settings; and by collecting data on a broad range of health outcomes.13 The movement toward “large, simple” trials, which seek a broader representation of patients and practitioners, tries to make clinical research more efficient and economical while holding bias and imprecision at bay. These trials tend to be large and expensive, however, and public sector funds for this type of research have been limited, allowing for substantial uncertainty to continue.

Economic Outcomes

Increasingly, policymakers seek rigorous evidence not only about efficacy and safety but also about the cost of new technologies to guide their decisions. In the past, cost-effectiveness analysis (CEA) was often done after clinical evidence had been collected. The recent trend is to conduct these analyses prospectively within RCTs. However, cost is often a secondary endpoint; that is, trial sample size (based on a primary clinical endpoint) may be inadequate to show a definitive statistical difference in cost or cost-effectiveness. Statisticians and health economists continue to debate preferred methodologies (for example, whether cost-effectiveness or net health benefit is the appropriate metric) and how to construct coherent data summaries that will allow policymakers to evaluate research data with due regard for statistical uncertainty.14 There is also controversy on discounting, the assignment of values in defining quality-adjusted life years, and the inclusion of future lost earnings in cost calculations.15–17 Furthermore, one important limitation is that cost-effectiveness analyses often do not take technological change into account. Thus, economic analyses may need to be repeated as technology evolves to capture the true effect of the innovation.
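The 2 metrics named above can be written down in a few lines. The sketch below uses the conventional definitions of the incremental cost-effectiveness ratio (incremental cost divided by incremental effect) and of net health benefit (incremental effect minus incremental cost divided by a willingness-to-pay threshold). The input figures and the threshold are hypothetical and serve only to show that, when the new therapy adds benefit, the 2 metrics give the same accept or reject answer.

```python
def icer(delta_cost: float, delta_qalys: float) -> float:
    """Incremental cost-effectiveness ratio: extra cost per extra QALY."""
    if delta_qalys <= 0:
        raise ValueError("ratio is not interpretable without added benefit")
    return delta_cost / delta_qalys


def net_health_benefit(delta_cost: float, delta_qalys: float,
                       threshold: float) -> float:
    """QALYs gained minus the QALYs the extra spending could buy elsewhere."""
    return delta_qalys - delta_cost / threshold


# Hypothetical inputs (assumptions for illustration only).
delta_cost = 150_000.0   # extra cost of the new therapy, in dollars
delta_qalys = 0.5        # extra quality-adjusted life years
threshold = 100_000.0    # assumed willingness to pay per QALY

ratio = icer(delta_cost, delta_qalys)
nhb = net_health_benefit(delta_cost, delta_qalys, threshold)

print(f"ICER: ${ratio:,.0f} per QALY")
print(f"net health benefit: {nhb:+.2f} QALYs")
```

With these assumed inputs the ratio exceeds the threshold and the net health benefit is negative, so both metrics point the same way. The debate referred to in the text concerns mainly their statistical behavior (a ratio is unstable when the denominator is near zero), not their decision rule.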

LVADs offer an interesting case in the economic assessment of a rapidly evolving technology. The REMATCH trial clearly demonstrated, among patients with end-stage heart failure, better survival, functional status, and quality-of-life benefits in using LVADs for long-term support over medical management.5 Despite these benefits, it was evident during the study that the trial device, the HeartMate XVE, was plagued by several shortcomings, including limited durability and serious adverse events, such as bleeding, infections, and thromboembolism. These limitations led to both high resource usage and costs among the device group.18 The high cost of destination therapy during REMATCH, however, was driven largely by the cost of the index hospitalization, hospital readmissions, and need for device replacement. Blue Cross and Blue Shield projected initial cost-effectiveness ratios, based on limited information about the price of medical therapy, between $500,000 and $1.4 million per quality-adjusted life year, which exceeded all conventional benchmark measures of cost-effectiveness.19 As VAD technology evolved, surgeon learning curves began to plateau, postoperative management improved, and patient selection became refined, the cost-effectiveness ratios for VADs declined to a level that economists would consider cost-effective (a commonly accepted level is about $100,000 per life year saved).20 Thus, just as the dynamics of technological growth challenge assessment in RCTs, so too does rapid technological growth influence cost-effectiveness research.

Value Judgments and Public Policy

Our methods of assessment, such as the RCT and CEA, gain legitimacy from the same claims to strict scientific validity that medicine itself asserts, but, as with medical science, the interpretation and ultimate application of analytical findings may vary considerably. Specialized organizations, payment methods, consumer attitudes, and political interests all shape the movement of evidence into policy. Increasingly, surgical procedures include devices and biologicals (eg, stem cell transplantation), which require FDA approval. Major new procedures may also involve national coverage and reimbursement decisions by major insurers, such as Medicare. Even in the setting of the most rigorous clinical evidence, regulatory and reimbursement decisions depend heavily on the following value judgments: (1) are the benefits worth the risks and (2) are the costs worth the benefits? Our best analytical methods do not eliminate the vexing tradeoffs between the benefits provided (considering the available alternatives) and the acceptability of risk and cost incurred to achieve them. These judgments depend on the interests and values of stakeholders—scientists, physicians, patients, policymakers, purchasers, and insurance institutions.

A case in point can be found in flosequinan, an oral inotropic heart failure medication. This drug was approved in the early 1990s to improve quality of life and functional status, but after its introduction it was found to reduce survival and therefore was withdrawn from the United States market. When the risk-benefit tradeoff was later posed to patients with heart failure, however, 40% of those questioned would have accepted the higher risk of death (≥5%) to achieve a better quality of life.21 Thus, value judgments are pivotal in regulatory decision-making, and outcomes depend heavily on the representation of stakeholder groups in advisory decision-making panels.

Another important policy decision is coverage and reimbursement, and whether cost-effectiveness (CE) ratios should be used, as some health economists argue, as strict thresholds in making these decisions. The downside of using CE ratios as rigid thresholds is that doing so may not leave room for important qualitative aspects to influence decision-making, such as whether a technology is mature or evolving. There is variation throughout the world in the degree and manner in which cost-effectiveness is used in making these decisions. In the UK, for example, the National Institute for Health and Clinical Excellence, founded in 1999, makes central recommendations to the National Health Service about the cost-effectiveness of particular treatments. Currently, Medicare does not explicitly consider costs in making coverage decisions. For LVADs, the National Institute for Health and Clinical Excellence recommended not funding these devices for long-term use in the National Health Service (only for bridge to transplantation), while Medicare did approve coverage. As such, using CE ratios with a strict threshold (rather than providing guidance in combination with other factors) would ignore the prospect for improvement in technology and preclude the development of a promising therapy.

Conclusions

The cost of health care in the US continues to rise and many hope that the recent federal investment in rigorous assessment of both efficacy and cost-effectiveness of new technology will lead to better decisions regarding allocation of limited financial resources to the best available treatments. The design of rigorous randomized trials or otherwise well-controlled studies and economic analyses undoubtedly will provide a more solid foundation for clinical and policy decision-making. The rapid pace of technological growth, however, creates several challenges for policymakers.

Devices, drugs, and surgical techniques evolve during the course of their application. Analyses, therefore, are often a chapter behind the class, offering answers to yesterday’s questions. In addition, innovation in devices or improvements in surgical technique may expand the target population for a given technology beyond what was initially studied. The dynamics of technological growth create challenges for our methods of assessment in RCTs and CEA. Even the most rigorous evidence-based studies are caught in a ceaseless “question and answer” dynamic. RCTs, by their design, exclude some populations to whom the intervention will almost surely be applied. Current methods of resource-based costing also have certain limitations affected by technological growth. CEA performed at the time a technology is studied in a clinical trial does not typically take into account the potential for improvements in cost-effectiveness that will occur as, with ongoing learning, techniques improve, patient selection is refined, or device shortcomings are re-engineered. Thus, the pace of technology challenges our methods of assessment.

There are 3 potential areas where evidence-based decision-making can be improved. The first area for improvement is methodological. Randomized trials of novel procedures are challenging, but promising innovative designs, such as adaptive trial designs, are emerging. Moreover, pragmatic randomized trials can be designed to address issues that arise in further use or experience with a new procedure. Analytical means to compare effectiveness of different treatment modalities in a nonexperimental setting, such as propensity scores, are becoming more sophisticated, and the informatics technology to capture the needed data as part of the delivery of health care (rather than a separate research project) is growing stronger. Certain limitations of CEA may be overcome with methodological solutions. CEAs have incorporated approaches to deal with statistical uncertainty in evaluating empiric data through sensitivity analyses. However, sensitivity analyses most often focus on changing the discount rate or the price of the intervention being studied. They rarely account for more subtle, but highly impactful, forms of innovation, including both technological change and learning. For example, assessing changes in cost at different time points within a trial may provide a trajectory to estimate future improvements in cost associated with improvement of a given technique or device. Another basis for modeling change could draw upon adverse events that limit the net benefit of a technology; variations in clinical center and adverse event rates could indicate potential achievable performance.
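A sensitivity analysis that varies a learning parameter alongside the discount rate might look like the sketch below. Every number is hypothetical: the cost and quality-adjusted life year streams are invented, and `upfront_reduction` is an illustrative stand-in for learning effects such as a shorter index hospitalization; it is not a parameter from any published model.

```python
def discounted_total(values, rate):
    """Present value of a yearly stream; year 0 is not discounted."""
    return sum(v / (1 + rate) ** year for year, v in enumerate(values))


def cost_per_qaly(upfront_cost, followup_cost, qalys_by_year,
                  rate, upfront_reduction=0.0):
    """Discounted incremental cost per discounted incremental QALY.

    upfront_reduction is the assumed fractional fall in first-year cost
    attributable to learning and device refinement.
    """
    costs = [upfront_cost * (1 - upfront_reduction)]
    costs += [followup_cost] * (len(qalys_by_year) - 1)
    return discounted_total(costs, rate) / discounted_total(qalys_by_year, rate)


# Hypothetical incremental streams (assumptions for illustration only).
UPFRONT = 200_000.0
FOLLOWUP = 20_000.0
QALYS = [0.4, 0.6, 0.6, 0.5, 0.4]

for rate in (0.0, 0.03, 0.05):
    for reduction in (0.0, 0.2, 0.4):
        ratio = cost_per_qaly(UPFRONT, FOLLOWUP, QALYS, rate, reduction)
        print(f"discount {rate:.0%}, learning {reduction:.0%}: "
              f"${ratio:,.0f} per QALY")
```

In this toy example, moving the discount rate from 0% to 5% shifts the ratio by only a few percent, while a 40% fall in upfront cost lowers it by more than a quarter. That pattern depends entirely on the assumed inputs, but it shows why omitting learning from a sensitivity analysis can leave out the most influential source of change.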

The second area is strengthening the evaluative enterprise, which would require a stable and adequate funding base to support the acquisition of new data and the advancement of analytical tools. Trials of surgical procedures (especially if they do not involve a new drug or device) depend heavily on public sector funds, including those from the Agency for Healthcare Research and Quality, the National Institutes of Health (NIH), and the Department of Veterans Affairs. The public sector, however, invests much less than the private sector in evaluative research (eg, it is estimated that the NIH spends about 10% of its budget on clinical trials and other evaluative studies). Several positive developments are underway that may ameliorate this situation. In particular, the recent emphasis on comparative effectiveness research in the American Recovery and Reinvestment Act of 2009 (with its $1.1 billion for studies aimed at comparative effectiveness), and the support by NIH of clinical trial networks, such as the Cardiothoracic Surgery Clinical Trials Network, offer unprecedented opportunities.22

The third area for improvement is to strengthen the process of translating research into public policy. Quantitative evidence rarely speaks for itself; that is, it seldom tells policymakers unequivocally what course to take in allocating health care resources. Regulatory policymakers must struggle with value judgments as they weigh risks vs benefits; payers and other stakeholders must contemplate costs and benefits in their full complexity. The decision-making process could be strengthened by involving patient groups, clinicians, and representatives of the public in the analysis of new technologies. Translating analysis into public policy is a highly dynamic process that requires not only an understanding of the limitations that technological growth imposes on analytical techniques but also value judgments as policymakers assess the evidence to decide which technologies are truly most effective.

Acknowledgments

Portions of this article have been adapted from Gelijns AC, et al. Evidence, politics, and technological change. Health Aff. 2005;24:29–40.

This research was supported in part by NIH Training Grant 5T32HL007854-13 to Dr. Iribarne, and by U01HL088942 and HL77096 from the National Heart, Lung, and Blood Institute of the National Institutes of Health. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Heart, Lung, and Blood Institute or the National Institutes of Health.

References

1. Centers for Medicare and Medicaid Services. National Health Expenditures Data. Baltimore, MD: Centers for Medicare and Medicaid Services; 2009. Available at: http://www.cms.hhs.gov/NationalHealthExpendData/

2. Andrews RM. The National Hospital Bill: The Most Expensive Conditions by Payer, 2006. Rockville, MD: Agency for Healthcare Research and Quality; 2008. Available at: http://www.hcup-us.ahrq.gov/reports/statbriefs/sb59.pdf

3. Cutler DM, McClellan M. Is technological change in medicine worth it? Health Aff. 2001;20:11–29. doi: 10.1377/hlthaff.20.5.11.

4. The American Recovery and Reinvestment Act of 2009, H.R. 1. Washington, DC: The Library of Congress; 2009. Available at: http://thomas.loc.gov/cgi-bin/query/z?c111:H.R.1.enr.

5. Rose EA, Gelijns AC, Moskowitz AJ, et al. Long-term mechanical left ventricular assistance for end-stage heart failure. N Engl J Med. 2001;345:1435–1443. doi: 10.1056/NEJMoa012175.

6. Aaronson KD, Patel H, Pagani FD, et al. Patient selection for left ventricular assist device therapy. Ann Thorac Surg. 2003;75 suppl:S29–S35. doi: 10.1016/s0003-4975(03)00461-2.

7. Lietz K, Long JW, Kfoury AG, et al. Outcomes of left ventricular assist device implantation as destination therapy in the post-REMATCH era: Implications for patient selection. Circulation. 2007;116:497–505. doi: 10.1161/CIRCULATIONAHA.107.691972.

8. Hlatky M, Lee KL, Harrell FE Jr, et al. Tying clinical research to patient care by use of an observational database. Stat Med. 1984;3:375–384. doi: 10.1002/sim.4780030415.

9. Nienaber CA, Zannetti S, Barbieri B, et al. Investigation of stent grafts in patients with type B aortic dissection: Design of the INSTEAD trial – A prospective, multicenter, European randomized trial. Am Heart J. 2005;149:592–599. doi: 10.1016/j.ahj.2004.05.060.

10. Freemantle N, Calvert M, Wood J, et al. Composite outcomes in randomized trials: Greater precision but with greater uncertainty? J Am Med Assoc. 2003;289:2554–2559. doi: 10.1001/jama.289.19.2554.

11. Hauptman PJ. Measurement of end points in heart failure trials: Jousting at windmills? Mount Sinai J Med. 2004;71:298–304.

12. Serruys PW, Morice MC, Kappetein AP, et al. Percutaneous coronary intervention versus coronary-artery bypass grafting for severe coronary artery disease. N Engl J Med. 2009;360:961–972. doi: 10.1056/NEJMoa0804626.

13. Tunis SR, Stryer DB, Clancy CM, et al. Practical clinical trials: Increasing the value of clinical research for decision making in clinical and health policy. J Am Med Assoc. 2003;290:1624–1632. doi: 10.1001/jama.290.12.1624.

14. Heitjan F, Moskowitz AJ, Whang W, et al. Problems with interval estimates of the incremental cost-effectiveness ratio. Med Decis Mak. 1999;19:9–15. doi: 10.1177/0272989X9901900102.

15. Garber A, Phelps CE. Economic foundations of cost-effectiveness analysis. J Health Econ. 1997;16:1–31. doi: 10.1016/s0167-6296(96)00506-1.

16. Finkelstein E, Corso P. Cost-of-illness analyses for policy making: A cautionary tale for use and misuse. Pharmacoecon J Outcomes Res. 2003;3:367–369. doi: 10.1586/14737167.3.4.367.

17. Inadomi JM. Decision analysis and economic modeling: A primer. Eur J Gastroenterol Hepatol. 2004;6:535–542. doi: 10.1097/00042737-200406000-00005.

18. Oz MC, Gelijns AC, Miller L, et al. Left ventricular assist devices as permanent heart failure therapy: The price of progress. Ann Surg. 2003;238:577–583. doi: 10.1097/01.sla.0000090447.73384.ad.

19. Blue Cross Blue Shield Association Technology Evaluation Center. Special report: Cost-effectiveness of left-ventricular assist devices as destination therapy for end-stage heart failure. Chicago TEC Assessment Program. 2004;19(2):1–35.

20. Miller LW, Nelson KE, Bostic RR, et al. Hospital costs for left ventricular assist devices for destination therapy: Lower costs for implantation in the post-REMATCH era. J Heart Lung Transplant. 2006;25:778–784. doi: 10.1016/j.healun.2006.03.010.

21. Rector T, Cohn JN. Assessment of patient outcome with the Minnesota living with heart failure questionnaire: Reliability and validity during a randomized, double-blind, controlled trial of pimobendan. Am Heart J. 1992;124:1017–1025. doi: 10.1016/0002-8703(92)90986-6.

22. Steinbrook R. Health care and the American Recovery and Reinvestment Act. N Engl J Med. 2009;360:1057–1069. doi: 10.1056/NEJMp0900665.