Abstract

When we observe someone shift their gaze to a peripheral event or object, a corresponding shift in our own attention often follows. This social orienting response, joint attention, has been studied in the laboratory using the gaze cueing paradigm. Here, we investigate the combined influence of the emotional content displayed in two critical components of a joint attention episode: The facial expression of the cue face, and the affective nature of the to-be-localized target object. To this end, we presented participants with happy and disgusted faces as cueing stimuli, and with neutral (Experiment 1) or pleasant and unpleasant (Experiment 2) pictures as target stimuli. The findings demonstrate an effect of ‘emotional context’ confined to participants viewing pleasant pictures. Specifically, gaze cueing was boosted when the emotion of the gazing face (i.e., happy) matched that of the targets (pleasant). This modulation by emotional context highlights the vital flexibility that a successful joint attention system requires in order to assist our navigation of the social world.

Keywords: Attention, Emotion, Eye gaze, Facial expression

The ability to determine the direction of another person's attention is of great importance in the complex social environment we inhabit. Utilizing this information, we can infer the internal mental states of conspecifics, which allows us to make predictions about their future actions and how they might respond to our own behaviour (Emery, 2000). Furthermore, the attention system has developed a tendency to use social gaze direction as a powerful cue. Hence, when we see someone look somewhere, we will often shift our own attention to the same object or part of a scene (Moore & Dunham, 1995). This “joint attention” mechanism serves several important functions; for example, it supports noun acquisition in infants (Charman et al., 2001). It can also alert us to events or objects that have just appeared or have gone unnoticed.

Over the last decade, joint attention behaviour has been the focus of much research in cognitive psychology as we attempt to understand the mechanisms underlying the propensity to align our attention with that of others (e.g., Friesen & Kingstone, 1998; see Frischen, Bayliss, & Tipper, 2007, for review). Using an adapted Posner (1980) cueing paradigm, participants typically view a face looking left or right prior to the presentation of a peripheral to-be-detected target. The usual instruction to subjects is to ignore the eyes since they are nonpredictive of target location. Nevertheless, reaction time (RT) advantages are found for cued (i.e., looked-at) targets over the RTs to targets at uncued locations. This work has investigated the relative automaticity of the effects on covert attention (e.g., Driver et al., 1999) and individual differences in clinical and nonclinical populations (e.g., Bayliss, di Pellegrino, & Tipper, 2005; Ristic et al., 2005).

Like gaze direction, facial expressions shed light on the mental states and likely future behaviour of others. As such, fluent recognition and interpretation of these signals is of vital importance during social interactions. There is abundant evidence that the perception of facial emotion and the perception of gaze direction influence each other. For example, the recognition advantage for approach-oriented emotions (e.g., anger) over avoidance-oriented emotions (e.g., fear) is greater when the eyes of the face are directed straight ahead than when they are averted (Adams & Kleck, 2003; but see Bindemann, Burton, & Langton, 2008). Furthermore, our evaluations of the pleasantness of objects are influenced by a combination of gaze direction and facial emotion. That is, the affective evaluation of an object that is looked at by a face is influenced by the emotional expression of that face. For example, an object that is looked at by a disgusted face is liked less than when it is looked at by a happy face, whereas the emotional expression has no effect on objects that are not the recipient of social attention (Bayliss, Frischen, Fenske, & Tipper, 2007; Bayliss, Paul, Cannon, & Tipper, 2006; see also Corneille, Mauduit, Holland, & Strick, 2009). The neural mechanisms underpinning the processing of social signals from the eyes and from facial expressions also overlap. For example, the amygdala and superior temporal sulcus have been found to be important for the evaluation of both types of signal (Adams, Gordon, Baird, Ambady, & Kleck, 2003; Engell & Haxby, 2007; Kawashima et al., 1999).

There have also been several investigations of the combined influence of facial emotion and gaze direction on attention. Given the wealth of evidence for important attention-emotion interactions (see Yiend, 2010, for review) and the previous evidence of gaze-emotion interactions, it is surprising that the data from gaze cueing paradigms have thus far been rather mixed. For example, in a series of experiments comparing neutral, happy, angry, and fearful facial expressions, Hietanen and Leppänen (2003) found no modulation of gaze cueing as a function of emotional expression. Further, Graham, Friesen, Fichtenholtz, and LaBar (2010) found null effects of emotion on attention except at extended cue–target stimulus onset asynchronies, suggesting that the processing of gaze and emotion is, at least initially, independent. Similarly, Bayliss et al. (2007) demonstrated identical cueing effects for happy and disgusted faces. Bonifacci, Ricciardelli, Lugli, and Pellicano (2008) showed equivalent effects on overt orienting of gaze produced by angry and neutral faces (though the angry faces held attention, as described by Fox, Russo, & Dutton, 2002). That happy faces produce cueing effects equivalent to those of neutral faces is also supported by work by Holmes, Richards, and Green (2006) and Pecchinenda, Pes, Ferlazzo, and Zoccolotti (2008).

On the other hand, there does appear to be one emotional expression that has been shown to boost gaze cueing in some circumstances: fear. Mathews, Fox, Yiend, and Calder (2003) found that the eyes of fearful faces produced stronger gaze cueing effects than those of a neutrally expressive face. Critically, however, this pattern of data was obtained only in a group of participants with higher-than-average (yet subclinical) levels of anxiety. This interaction with anxiety might explain other failures to demonstrate modulation of cueing as a function of emotional expression (Hietanen & Leppänen, 2003). The findings of Mathews et al. have been replicated by Holmes et al. (2006), Pecchinenda et al. (2008), and Tipples (2006), by Putman, Hermans, and van Honk (2006) with dynamic gaze, and by Fox, Mathews, Calder, and Yiend (2007), who also showed that angry faces do not boost cueing in the same way as fearful faces (see Fox et al., 2002). However, it is important to note that low-level stimulus factors may account for the specific effect of fearful faces on gaze cueing, since the eye region of a fearful face is particularly salient due to the enlarged area of visible sclera (cf. Tipples, 2005).

Here, we propose that emotional facial expressions, in general, do not inevitably modulate gaze cueing as a pure function of the emotional content of the face. This view is supported by the lack of specific, replicable effects in the extant literature (with the exception of enhanced gaze cueing from fearful faces in anxious participants). As indicated earlier, even this latter effect could result from the low-level attributes of a fearful face rather than from the interpretation of the facial emotion. Hence, in examining the interactions between emotion and attention systems in social orienting behaviour, we feel that forming ordinal predictions about which emotions should exert more control over attention than others may be misguided. Why should attention treat the gaze cue of a disgusted face differently from that of a happy face if all other factors are neutral and constant?

Rather, we suggest here that the systems that give rise to joint attention are imbued with a great degree of flexibility, which allows gaze-triggered orienting responses to be regulated as a function of emotional context. In previous studies, the emotional expression of the face did not relate to anything in the experimental environment. The targets to which participants responded were usually simple neutral stimuli, such as letters or dots. Therefore, it is possible that in these sparse, emotionally neutral displays, the links between motivational and attentional neural circuits are not strongly activated. Conversely, in a rich environment containing many threatening or noxious stimuli, the gaze of a happy person may be ignored as irrelevant information due to the evaluative clash between the facial emotion and the emotional context. On the other hand, the gaze of the same happy person at an enjoyable social function may have great influence on one's attention, as it matches the general context of the situation. If we can create an experimental context that resembles these examples, we may observe consistent modulation of gaze cueing as a function of emotional expression.

One recent study has approached the issue of emotional context in gaze cueing. Pecchinenda et al. (2008) used emotionally valenced words as targets. They found modulation of gaze cueing as a function of facial expression only when participants had to explicitly judge the emotional content of the word (i.e., a pleasant/unpleasant discrimination task). Specifically, disgusted and fearful faces elicited stronger gaze cueing than happy and neutral faces. However, when the task was to judge letter case (i.e., an upper/lower case discrimination task), each emotional face produced equivalent cueing effects. Hence, these authors concluded that gaze cueing can be context-based if the task draws attention to the emotional content of the target stimuli.

In the present study, we aim to take this concept further. First, we used emotional pictures as targets, which may yield a more automatic appreciation of the emotional content of the stimuli even in a task that does not demand explicit valence recognition. Second, we aimed to create specific emotional contexts. That is, rather than having a general emotional context in which positive and negative stimuli are randomly encountered, each group of participants encountered either only negative or only positive stimuli. We feel that this latter approach may have more ecological validity, because real-world social and environmental episodes often have extended emotional consistency, such as encountering positive stimuli while travelling through France on a family holiday versus negative stimuli while travelling through postwar Iraq.

Therefore, in this study we predicted that facial emotion would modulate gaze cueing such that larger cueing effects would be observed from gaze cues presented in an emotionally congruent context. As noted above, in order to establish a consistent emotional context for each participant, we manipulated the emotional content of the targets between groups. Hence, separate groups of participants responded solely to negatively valenced or solely to positively valenced target stimuli. We demonstrate modulation of gaze cueing such that happy faces elicit stronger gaze cueing only when presented in the positive context of pleasant target stimuli. This is evidence that social orienting mechanisms are indeed equipped with a high degree of flexibility in determining how strongly to utilize gaze cues in varying contexts.

EXPERIMENT 1

Our previous study investigating gaze cueing and emotion (Bayliss et al., 2007) used a face that produced either a disgusted or happy expression. Both versions of this face produced significant and almost identical gaze cueing effects (19 ms and 20 ms, respectively). The targets we used in that study were mundane household objects, which participants had to categorize. Hence, we are confident that, in a neutral emotional context, these gaze cue stimuli produce equivalent cueing effects. However, the main experiment in the present study (Experiment 2) uses standardized emotionally laden target stimuli (taken from the International Affective Picture System, IAPS) in a slightly different task (localization) with modified temporal parameters of stimulus presentation. Hence, it was critical to confirm that, in a neutral context with stimuli of relatively low arousal, the efficacy of our gaze stimuli is not affected by emotional expression. This would enable us to draw conclusions from any modulation observed in the groups viewing positive or negative target stimuli in the critical Experiment 2.

Method

Participants. Twenty female participants recruited from the School of Psychology completed this experiment (mean age = 19.3 years) in return for course credits. All-female samples were used throughout this study because our previous work has shown that females show stronger gaze cueing effects (Bayliss et al., 2005), and because large gender differences in emotion processing (e.g., Cahill, 2006) could potentially introduce additional variance into our data. All participants gave informed consent and were unaware of the goal of the study.

Stimuli. The stimuli were presented on a 15-inch monitor placed 60 cm from the participants and controlled with E-Prime software. The gaze cue stimulus was a female face from the NimStim face set. The happy and disgusted versions of this face were used, and were manipulated to generate leftward and rightward gazing versions of each stimulus. The faces measured approximately 12 (height) × 8 (width) cm, the pupils 0.5 × 0.6 cm, and the eye region itself 0.5 × 1.5 cm, and were presented in the centre of the screen. The target stimuli were taken from the IAPS (Lang, Bradley, & Cuthbert, 2005). These stimuli were selected to provide a neutral baseline measure of gaze cueing to complex visual targets, against which to compare the findings of Experiment 2, which used stimuli that diverge in valence. Hence, 80 neutrally valenced stimuli (mean valence = 5.04 on a 1–9 scale) with relatively low arousal (mean arousal = 3.99) were selected. The content of the pictures included animals, people, foodstuffs, and landscapes (see Appendix for details). These stimuli were presented 9.5 cm from central fixation, filling a rectangular placeholder measuring 7.0 × 9.5 cm. Fixation was indicated by a cross measuring 0.5 × 0.5 cm (see Figure 1 for examples of stimuli).
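Stimulus sizes above are given in centimetres at a 60 cm viewing distance. For comparison with studies that report degrees of visual angle, an extent s centred on the line of sight subtends θ = 2·arctan(s / 2D), and a point at eccentricity e lies arctan(e / D) from fixation. The following Python sketch is a minimal illustration under stated assumptions: a flat screen, a head fixed at exactly 60 cm, and the 9.5 cm eccentricity measured from fixation to the target centre, none of which the text specifies. The function names are hypothetical.

```python
import math

VIEWING_DISTANCE_CM = 60.0  # from the Stimuli section; assumes a fixed head position


def visual_angle_deg(size_cm: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Angle (degrees) subtended by an extent centred on the line of sight."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def eccentricity_deg(offset_cm: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Angular distance (degrees) of a point offset_cm from fixation on a flat screen."""
    return math.degrees(math.atan(offset_cm / distance_cm))


# Face height x width (12 x 8 cm) and assumed target-centre eccentricity (9.5 cm).
print(f"face: {visual_angle_deg(12):.1f} x {visual_angle_deg(8):.1f} deg")  # face: 11.4 x 7.6 deg
print(f"target eccentricity: {eccentricity_deg(9.5):.1f} deg")  # target eccentricity: 9.0 deg
```

If the 9.5 cm was instead measured to the inner edge of the placeholder, the eccentricity of the picture centre would be correspondingly larger.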

Figure 1.

Examples of the disgust and happy faces, alongside neutral, negative, and positive targets. Actual target codes from the IAPS are found in the Appendix. The stimuli shown here are copyright-free images that are similar to some of the targets we presented. To view this figure in colour, please see the online issue of the Journal.

Design. There were two within-subjects factors central to the statistical analysis. First, cueing determined whether the eyes looked at (valid) or away from (invalid) the eventual target location. Second, face emotion could be either happy or disgusted. The correspondence of the target stimuli to the cueing and face emotion variables was carefully controlled. Each participant viewed (and responded to) each target stimulus only once. The 80 target stimuli were grouped into four sets of 20. The condition to which each set corresponded (valid/invalid and happy/disgusted face; i.e., four combinations in total) was counterbalanced across participants.
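The counterbalancing of stimulus sets across conditions can be illustrated with a simple rotation (Latin square) scheme. This is a minimal sketch assuming that the assignment is rotated by one position for each successive participant; the text states only that the assignment was counterbalanced, so the rotation rule, the `assign_sets` function, and the 0 to 79 target numbering are all hypothetical.

```python
from itertools import product

# The four within-subject cells from the Design: cueing x face emotion.
CONDITIONS = list(product(["valid", "invalid"], ["happy", "disgusted"]))


def assign_sets(participant_index: int, n_sets: int = 4) -> dict[int, tuple[str, str]]:
    """Map each stimulus set (0..3) to a condition for one participant.

    The mapping shifts by one position per participant (an assumed rotation
    rule), so across every four consecutive participants each set appears
    once in each condition.
    """
    shift = participant_index % n_sets
    return {s: CONDITIONS[(s + shift) % n_sets] for s in range(n_sets)}


# Split 80 target IDs (hypothetical numbering 0..79) into four sets of 20.
targets = list(range(80))
stimulus_sets = [targets[i * 20:(i + 1) * 20] for i in range(4)]

for p in range(4):
    print(p, assign_sets(p))
```

With 20 participants, this rotation would cycle five times, so each set would serve in each condition for five participants.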

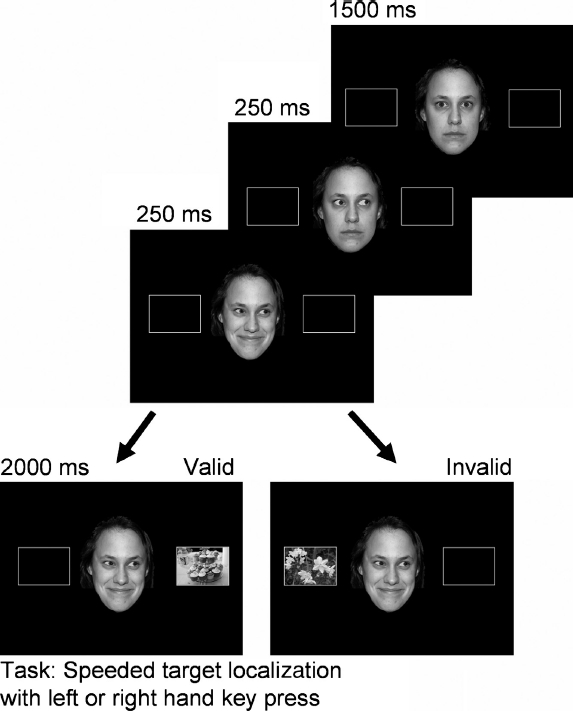

Procedure. On each trial, a white fixation cross was presented on a black screen for 600 ms. Next, the female face, with direct gaze and a neutral emotional expression, appeared in the centre of the screen, flanked by two rectangular placeholders, for 1500 ms. Then, the eyes in the face moved to the left or right. After 250 ms, the emotional expression changed to either happy or disgusted. After a further 250 ms, the target appeared in one of the placeholders (see Figure 2). After 2000 ms, the entire screen cleared, and a further 2 s elapsed before the next trial began. Participants were instructed to maintain fixation at the centre of the screen throughout each trial, and to ignore the direction of gaze, the emotional expression of the face, and the content of the target picture. It was impressed upon the participants that the only relevant feature of the trial was the location of the target. They were to respond to it as quickly and as accurately as possible by pressing the “c” key on a standard keyboard with their left index finger for left targets and the “m” key with their right index finger for right targets. Importantly, the target remained on the screen for 2 s regardless of when the participant responded, ensuring that each participant was exposed to each picture for the same amount of time.
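Because several events follow one another in quick succession, it can help to lay a trial out as a cumulative schedule. The Python sketch below simply adds up the durations stated in the Procedure; the event labels and the `build_schedule` helper are hypothetical names rather than parts of the original experiment script. It makes explicit that the target appeared 500 ms after the gaze shift and 250 ms after the expression change.

```python
# Event durations (ms) taken from the Procedure, in presentation order.
# The event labels are illustrative, not names from the original experiment script.
TRIAL_EVENTS = [
    ("fixation", 600),
    ("neutral face, direct gaze", 1500),
    ("gaze shift", 250),
    ("expression change", 250),
    ("target", 2000),  # fixed duration, regardless of response time
    ("blank interval", 2000),
]


def build_schedule(events):
    """Return ({label: onset_ms}, total_ms), with onsets measured from trial start."""
    onsets, t = {}, 0
    for label, duration in events:
        onsets[label] = t
        t += duration
    return onsets, t


onsets, trial_length = build_schedule(TRIAL_EVENTS)
target_onset = onsets["target"]
gaze_target_soa = target_onset - onsets["gaze shift"]
emotion_target_soa = target_onset - onsets["expression change"]

print(target_onset, gaze_target_soa, emotion_target_soa, trial_length)  # 2600 500 250 6600
```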

Figure 2.

Illustration of the time course of stimulus presentation in an example trial. To view this figure in colour, please see the online issue of the Journal.

Participants completed four practice trials (with novel targets) before completing a single experimental block of 80 trials. Trial order was randomized with respect to cueing and face emotion, the direction the eyes looked (left and right an equal number of times), target location (left and right an equal number of times), and individual target stimulus. After the experiment, participants were fully debriefed.
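One way to satisfy these randomization constraints is to build the 80 trials cell by cell and then shuffle them. This is a hypothetical Python sketch, not the authors' E-Prime implementation: it assumes each cueing × emotion cell contains 20 trials with the target on the left in half of them, and it leaves out the pairing of individual pictures with trials. Because gaze direction follows from validity and target side, balancing target location within each cell also balances gaze direction.

```python
import random
from collections import Counter


def build_trials(seed=None):
    """Build and shuffle one 80-trial block (hypothetical sketch).

    Each validity x emotion cell has 20 trials: target on the left in 10,
    on the right in 10. Valid gaze points at the target side; invalid gaze
    points at the opposite side.
    """
    rng = random.Random(seed)
    trials = []
    for validity in ("valid", "invalid"):
        for emotion in ("happy", "disgusted"):
            for target_side in ["left"] * 10 + ["right"] * 10:
                if validity == "valid":
                    gaze = target_side
                else:
                    gaze = "right" if target_side == "left" else "left"
                trials.append({"validity": validity, "emotion": emotion,
                               "target": target_side, "gaze": gaze})
    rng.shuffle(trials)
    return trials


trials = build_trials(seed=1)
print(Counter(t["gaze"] for t in trials))    # 40 left, 40 right
print(Counter(t["target"] for t in trials))  # 40 left, 40 right
```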

Results

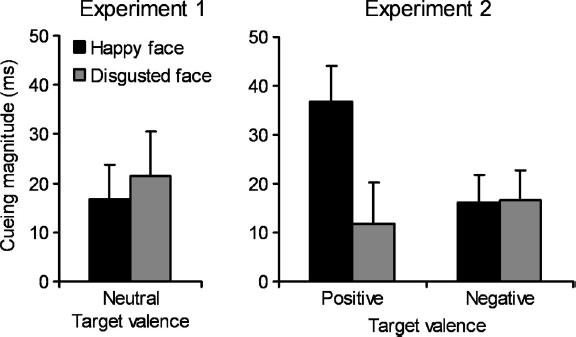

Errors and misses (<1% of trials) were removed prior to the calculation of median reaction times for each participant in each condition. These medians were submitted to a 2 (cueing: Valid vs. invalid) × 2 (facial expression: Happy vs. disgusted) repeated-measures ANOVA. This revealed a significant main effect of cueing, F(1, 19) = 7.0, MSE = 1038, p = .016, ηp² = .269, due to faster reaction times when the target appeared at the cued location (389 ms) than at uncued locations (408 ms). Neither the main effect of facial emotion nor the interaction approached significance, Fs < 1.3, ps > .25. Follow-up t-tests showed that both the happy face, t(19) = 2.33, p = .031, and the disgusted face, t(19) = 2.31, p = .032, produced significant cueing effects (16 ms and 21 ms, respectively; see Table 1 and Figure 3).
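The follow-up t-tests compare each participant's invalid and valid median RTs. Testing the per-participant cueing effects (invalid minus valid) against zero is equivalent to a paired t test, with t = mean / (SD / √n) and n − 1 degrees of freedom. The Python sketch below illustrates this step; the RT values are invented for illustration and are not data from the study, and the function names are hypothetical.

```python
import math
import statistics


def cueing_effects(valid_medians, invalid_medians):
    """Per-participant cueing effects: invalid minus valid median RT (ms)."""
    return [inv - val for val, inv in zip(valid_medians, invalid_medians)]


def one_sample_t(effects):
    """t statistic (df = n - 1) testing whether the mean effect differs from zero.

    Applied to cueing effects, this equals a paired t test of valid vs.
    invalid median RTs.
    """
    n = len(effects)
    se = statistics.stdev(effects) / math.sqrt(n)
    return statistics.mean(effects) / se, n - 1


# Hypothetical per-participant median RTs (ms) for one face condition;
# illustrative numbers only, NOT data from the study.
valid = [380, 402, 365, 410, 395, 372, 388, 420]
invalid = [401, 415, 390, 418, 393, 399, 402, 446]

effects = cueing_effects(valid, invalid)
t, df = one_sample_t(effects)
print(f"mean cueing = {statistics.mean(effects):.1f} ms, t({df}) = {t:.2f}")
# For a single-df within-subject effect, the equivalent F(1, df) equals t squared.
print(f"F(1, {df}) = {t ** 2:.2f}")
```

By the same identity, the happy-face test above, t(19) = 2.33, corresponds to F(1, 19) ≈ 5.43.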

Table 1.

Median reaction times (ms) for each group in each condition, with standard deviations in parentheses

| Target valence | Happy face: Valid | Happy face: Invalid | Disgusted face: Valid | Disgusted face: Invalid |
|---|---|---|---|---|
| Exp. 1: Neutral targets | 391 (62) | 408 (72) | 386 (74) | 407 (68) |
| Exp. 2: Pleasant targets | 411 (109) | 448 (106) | 424 (105) | 436 (107) |
| Exp. 2: Unpleasant targets | 384 (110) | 401 (103) | 388 (108) | 405 (116) |

Figure 3.

Graphs illustrating the mean cueing effects (invalid minus valid) for each condition for Experiments 1 and 2. Error bars denote standard errors of the mean cueing effects.

Discussion

This experiment showed that our gaze cueing stimuli produce equivalent gaze cueing effects with happy and disgusted facial expressions in the context of complex standardized emotionally neutral target stimuli. This replicates the gaze cueing portion of our previous paper using emotional gaze cues (Bayliss et al., 2007). Using these data as a baseline for the magnitude of gaze cueing we can expect these gaze cues to elicit in a neutral context, we now explore the impact of negative and positive contexts on gaze cueing in Experiment 2.

EXPERIMENT 2

In this critical second experiment, we explore our hypothesis that placing participants in a particular emotional context (negative or positive) can modulate the level to which the attention system responds to gaze cues produced by negatively or positively emoting faces. Hence, we used the same task and procedure with two new groups of participants who responded to either negative or positive visual targets. We predict stronger attention shifts in response to faces whose emotional expression is congruent with the overall emotional context defined by the target stimuli.

Method

Participants. Forty female participants, recruited from the School of Psychology, Bangor University, completed this experiment (mean age = 20.2 years) in return for course credits. All participants gave informed consent and were unaware of the goal of the study. These participants were randomly assigned to one of two groups. One group (n = 20) viewed happy and disgusted faces producing gaze cues while localizing pleasant stimuli; the second group (n = 20) viewed the same happy and disgusted faces, but responded to unpleasant stimuli.

Stimuli. The experimental set-up and face stimuli used in Experiment 1 were used here. The new target stimuli were 80 positive and 80 negative pictures. The mean valence of the selected positive pictures was 7.11 (SD = 0.44), and the mean valence of the negative pictures was 3.04 (SD = 0.58). The mean arousal ratings were 4.52 and 5.71, respectively. Using a large number of affective stimuli is advantageous in several ways. Because each picture is presented only once, habituation of emotional or visual responses to the pictures over the course of the experiment is avoided. A single presentation of each target also prevents memory and perceptual fluency effects from influencing reaction times. The main aim in selecting these stimuli was to place the participant in an overall unpleasant or pleasant context. Thus, the heterogeneity of the stimuli was a necessary feature of the stimulus set (see General Discussion for possible downsides of such heterogeneity).

Design. Experiment 2 included the same within-subjects factors as Experiment 1 (cueing and facial emotion), with target valence (positive vs. negative) as an additional between-subjects factor. As in Experiment 1, each participant viewed each target stimulus only once. The 80 unpleasant and the 80 pleasant pictures were each grouped into four sets of 20. The condition to which each set corresponded (valid/invalid and positive/negative face; i.e., four combinations in total) was counterbalanced across participants.

Procedure. The experimental procedure was identical to that of Experiment 1. In this experiment, however, prior to random group assignment, the experimenter explained to the participants that some of the images might be distressing. All participants were then shown examples of novel pleasant and unpleasant stimuli from the IAPS set and, to avoid causing upset, were given an additional chance to withdraw consent (none did). After the experiment, participants were fully debriefed.

Results

Errors and misses, accounting for <1% of trials in both groups of participants, were removed prior to analysis of participants’ median reaction times in each condition (see Table 1). A mixed-measures ANOVA was conducted, with target valence (pleasant vs. unpleasant targets) as the between-subjects factor, and face emotion (positive vs. negative) and cueing (valid vs. invalid gaze cues) as within-subjects factors. The main effect of cueing was significant, F(1, 38) = 28.8, MSE = 577, p < .001, ηp² = .431, due to faster reaction times when the eyes looked at the target (402 ms) than when the eyes looked away from the target (422 ms). The interaction between face emotion and cueing approached significance, F(1, 38) = 4.01, MSE = 380, p = .052, ηp² = .095, due to stronger cueing from the happy face (27 ms) than from the disgusted face (14 ms). However, this effect was driven by the predicted three-way interaction between face emotion, cueing, and the between-subjects factor, target valence, F(1, 38) = 4.32, MSE = 380, p = .044, ηp² = .102. This interaction reflected stronger gaze cueing when the emotion expressed by the face was congruent with the valence of the targets being presented (27 ms) than when the face expressed an incongruent emotion (14 ms; see Figure 3). No other main effects or interactions approached significance, Fs < 1.1, ps > .3.
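The congruency contrast described above can be made concrete with the group values in Table 1. The Python sketch below computes each cell's cueing effect (invalid minus valid) and averages the congruent and incongruent cells. It uses the rounded Table 1 entries rather than participant-level data, so results can differ from the text by about a millisecond (14.5 ms here versus the reported 14 ms). It also shows that the three-way interaction contrast, (happy minus disgusted cueing in the pleasant group) minus (happy minus disgusted cueing in the unpleasant group), equals the sum of the congruent cells minus the sum of the incongruent cells. The variable names are illustrative.

```python
# Group RTs (ms) from Table 1, Experiment 2: (valid, invalid).
TABLE1_EXP2 = {
    ("pleasant", "happy"): (411, 448),
    ("pleasant", "disgusted"): (424, 436),
    ("unpleasant", "happy"): (384, 401),
    ("unpleasant", "disgusted"): (388, 405),
}


def cueing_effect(valid_rt, invalid_rt):
    """Invalid minus valid RT; positive values mean faster responses at the cued side."""
    return invalid_rt - valid_rt


effects = {cell: cueing_effect(*rts) for cell, rts in TABLE1_EXP2.items()}

# A face is 'congruent' when its valence matches the target valence.
CONGRUENT = {("pleasant", "happy"), ("unpleasant", "disgusted")}
congruent = [e for cell, e in effects.items() if cell in CONGRUENT]
incongruent = [e for cell, e in effects.items() if cell not in CONGRUENT]
congruent_mean = sum(congruent) / len(congruent)
incongruent_mean = sum(incongruent) / len(incongruent)
print(congruent_mean, incongruent_mean)  # 27.0 14.5

# Three-way interaction as a difference of differences.
interaction_contrast = (
    (effects[("pleasant", "happy")] - effects[("pleasant", "disgusted")])
    - (effects[("unpleasant", "happy")] - effects[("unpleasant", "disgusted")])
)
print(interaction_contrast)  # 25
```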

To further investigate the source of the three-way interaction, follow-up contrasts were performed on the cueing data. For participants in the negative targets group, both the disgusted face, t(19) = 2.92, p = .009, and the happy face, t(19) = 2.80, p = .011, produced significant cueing effects (17 ms and 16 ms, respectively). This mirrors the finding from Experiment 1, where facial emotion did not modulate cueing in the context of neutral targets (see also Bayliss et al., 2007). However, participants in the positive targets group showed a markedly different pattern. These participants showed a significant gaze cueing effect for happy faces, t(19) = 5.12, p < .001 (37 ms), but the 12 ms cueing effect elicited by the disgusted face was nonsignificant, t(19) = 1.38, p = .19. A final contrast confirmed that the 37 ms gaze cueing effect elicited by happy gaze cues was significantly stronger than that elicited by disgusted gaze cues when viewing positive targets, F(1, 19) = 7.59, MSE = 417, p = .013, ηp² = .285.
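The partial eta squared values reported in these analyses can be recovered from each F ratio and its degrees of freedom. Since ηp² = SS_effect / (SS_effect + SS_error) and F = (SS_effect / df_effect) / (SS_error / df_error), it follows that ηp² = F·df_effect / (F·df_effect + df_error). The Python sketch below applies this standard identity to the F values reported in this paper as a consistency check; the function name is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error, reported partial eta squared)
REPORTED = [
    ("Exp. 1 cueing", 7.0, 1, 19, 0.269),
    ("Exp. 2 cueing", 28.8, 1, 38, 0.431),
    ("Exp. 2 face x cueing", 4.01, 1, 38, 0.095),
    ("Exp. 2 three-way", 4.32, 1, 38, 0.102),
    ("Exp. 2 positive-group contrast", 7.59, 1, 19, 0.285),
]

for label, f, df1, df2, reported in REPORTED:
    print(f"{label}: recomputed {partial_eta_squared(f, df1, df2):.3f}, reported {reported:.3f}")
```

Each recomputed value agrees with the reported one to three decimal places.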

Discussion

The data from this experiment support our overall hypothesis that gaze cueing can be modulated by emotional expression as a function of emotional context. Interestingly, the overall context congruity effect was driven solely by participants in the positive context. Participants in the negative context produced a pattern of gaze cueing effects similar to that demonstrated in Experiment 1 (neutral context). This suggests that, in the present paradigm, emotional context modulates gaze cueing only when the targets are positive. Specifically, when all the targets in the cueing paradigm are pleasant, a happy face produces stronger gaze cueing effects than a disgusted face does.

Although the results of this second experiment are clear, the one-sided nature of the emotional context effect is rather surprising. That is, a positive context boosted gaze cueing from a happy face relative to a disgusted face, whereas the predicted reverse pattern was not observed in the negative context. To confirm the pattern of data from Experiment 2, we tested a further 40 participants in a replication of Experiment 2. The only difference in design was that the negative facial expression was changed from disgust to fear. Fear was chosen as our new negative facial expression because, as we noted in the introduction, this expression most reliably modulates gaze cueing, and so could potentially yield a different effect from disgusted faces.

The pattern of data was virtually identical to that of Experiment 2: Participants viewing positive targets showed stronger cueing effects with happy faces (29 ms) than with fearful faces (8 ms), yielding a significant Face × Cueing interaction in this additional group of participants, F(1, 19) = 5.15, MSE = 395, p = .035, ηp² = .213. In contrast, participants responding to negatively valenced targets were not influenced by facial emotion (cueing from happy faces = 8 ms, fearful faces = 12 ms). Hence, in two groups of participants viewing only positive targets, happy faces produced stronger gaze cueing than negative faces did, whereas in a total of four groups of participants viewing neutral or negative targets (one group from Bayliss et al., 2007, and three groups in this paper), we failed to observe modulation of gaze cueing as a function of facial expression.

Hence, we are confident in our conclusion that a positive emotional context leads to a greater sensitivity towards the social gaze of a smiling face, whereas in a negative or neutral context the gaze cues of emoting people are treated equally by the attention system. There may be stimulus- or procedure-related reasons for the lopsided nature of our findings that future work may clarify, but this experiment demonstrates that at the very least, emotional context derived from target processing can modulate gaze cueing as a function of facial expression.

GENERAL DISCUSSION

We used a gaze cueing paradigm to investigate the influence of two sources of emotional content on visual social orienting: The emotion of the cue face and the emotional content of the target. Previous work has produced mixed results concerning interactions between gaze cueing and facial expressions. Either gaze cueing tends to be unaffected by emotional expression, or rather limited effects are observed, in which fearful faces elicit stronger cueing in anxious participants (e.g., Mathews et al., 2003). Further research has suggested that the participant's task, specifically whether it involves explicit emotional discrimination, can influence the degree to which the emotional content of the cue face modulates gaze cueing (Pecchinenda et al., 2008). Our novel observation is that gaze direction and face emotion can interact when the emotional context is manipulated in an implicit and consistent manner, as when consistently negative or positive target stimuli merely have to be localized (and not recognized).

This study confirmed the standard gaze cueing effect, whereby target processing was facilitated when a nonpredictive gaze was oriented towards the target (Friesen & Kingstone, 1998). More importantly, this orienting response to social gaze was modulated by emotional information contained in the two most critical components of a joint attention episode: The face producing the gaze cue, and the object to which attention is directed. Together, these features contribute to the overall affective context of the environment, and as such have an interactive effect on the degree to which the gaze direction of the face affects the observer's spatial attention. Thus, even though neither the emotional expression of the face nor the emotional content of the target picture was relevant to the participants’ ongoing task of simply locating the target, emotional context did influence gaze cueing in a rather specific way. That is, attention was affected more by averted gaze when the face expressed an emotion congruent with the target valence, but only when the objects in the visual environment (i.e., the targets) were positively valenced.

Our study focused on the valence of the target stimuli. However, the arousal ratings of these stimuli were not perfectly matched across our stimulus samples. Due to the large number of stimuli we used, and the overall negative correlation between arousal and valence in the IAPS set, r = −.28, n = 1194, p < .001, the negative stimuli we used were slightly more arousing than the positive set. However, this difference is unlikely to explain our results, since our neutral stimulus set had a lower mean arousal rating than either of the emotional sets. Hence, the emotional context effect on gaze cueing was demonstrated in the stimulus set with an intermediate level of arousal (the positive set, mean arousal = 4.52, compared with 3.99 for the neutral set and 5.71 for the negative set), rather than in the most or least arousing set. We therefore contend that the valence ratings, which varied more strongly across our sets, were responsible for the differences in gaze cueing found here. The role of arousal, however, is clearly of interest for future study. Furthermore, other ways of instilling an emotional context, such as mood induction, could yield modulation of gaze cueing as a function of emotional expression even in the context of neutral target stimuli. It is not clear, for example, whether the mere presence of our emotional target stimuli is sufficient to modulate cueing, or whether the participant must respond to the stimuli. This could easily be tested by presenting emotional stimuli between trials while using standard geometric stimuli as targets. One further noteworthy issue is that our conclusions may be limited to interactions between females (the gender of both our participants and our stimulus face); it is entirely possible that other gender combinations would give rise to rather different data.
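The significance of the arousal–valence correlation quoted above can be checked from r and n alone, using the standard test statistic t = r·√(n − 2) / √(1 − r²) with n − 2 degrees of freedom. The Python sketch below applies it to the reported values; the function name is illustrative.

```python
import math


def correlation_t(r: float, n: int) -> tuple[float, int]:
    """t statistic (df = n - 2) for testing a Pearson correlation against zero."""
    df = n - 2
    return r * math.sqrt(df) / math.sqrt(1 - r ** 2), df


t, df = correlation_t(-0.28, 1194)
print(f"t({df}) = {t:.1f}")  # t(1192) = -10.1
```

A |t| of about 10 on 1192 degrees of freedom lies far beyond conventional thresholds, consistent with the reported p < .001.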

Our findings are complementary to those of Pecchinenda et al. (2008), whose participants responded to emotional target words preceded by a fearful, disgusted, happy, or neutral face. When participants responded to the case (upper/lower) in which the words were presented, the cueing effects were identical. However, when the emotional content of the word was relevant to the task (positive/negative), stronger cueing was elicited following disgusted and fearful gaze cues. Our data are similar in that we demonstrate that the emotional content of the face can influence gaze cueing when the targets have emotional content. Hence, both reports point to the flexibility of the system, and to the importance of considering the content and attributes of the “object” of joint attention (i.e., the visual target).

There are, however, key differences between Pecchinenda et al. (2008) and the present study. First, we find modulation of gaze cueing even though the emotional content of the targets was entirely irrelevant to the participant's task. We therefore contend that the valence of the stimuli can affect attention even in a simple localization task; the fact that we used pictures instead of words may be critical on this point. Second, we find that targets with different emotional content produce different effects on attention: Orienting to positive targets is modulated by facial expression, whereas orienting to negative targets is not. Pecchinenda et al.’s data, on the other hand, are contingent on attention to emotional content per se. Finally, and most interestingly of all, Pecchinenda et al. report modulation of gaze cueing by negative emotions, as previous reports have, and find that cueing from happy faces is, if anything, suppressed. Our data instead show a boost in cueing from the social signal of a smiling face when the targets are pleasant as compared with when they are unpleasant.
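The “boost” discussed above refers to the size of the gaze cueing effect, that is, the RT advantage for cued (looked-at) targets over uncued targets, computed here as mean uncued RT minus mean cued RT. A minimal sketch of that computation for each expression × target-valence cell follows. It assumes hypothetical trial records with the field names shown; it is not the authors' analysis pipeline and omits steps such as error and outlier handling and statistical testing that a real analysis would need.

```python
from collections import defaultdict
from collections.abc import Iterable
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Trial:
    """One hypothetical trial record (field names are illustrative assumptions)."""
    expression: str      # cue-face expression, e.g. "happy" or "disgusted"
    target_valence: str  # target category, e.g. "pleasant" or "unpleasant"
    cued: bool           # True if the target appeared where the face looked
    rt_ms: float         # reaction time in milliseconds


def cueing_effects(trials: Iterable[Trial]) -> dict[tuple[str, str], float]:
    """Return mean uncued RT minus mean cued RT for each
    (expression, target valence) cell. Positive values mean that
    looked-at targets were localized faster."""
    # For each cell, collect cued (True) and uncued (False) RTs separately.
    cells: dict[tuple[str, str], dict[bool, list[float]]] = defaultdict(
        lambda: {True: [], False: []}
    )
    for trial in trials:
        cells[(trial.expression, trial.target_valence)][trial.cued].append(trial.rt_ms)
    return {
        cell: mean(rts[False]) - mean(rts[True])
        for cell, rts in cells.items()
        if rts[True] and rts[False]  # skip cells missing either trial type
    }
```

A boost of the kind described above would correspond to a larger value in the happy/pleasant cell than in the comparison cells; whether such a difference is reliable is a question for inferential statistics, not for this sketch.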

This is an interesting aspect of the data presented here. While we take the view that these data are evidence for the combination of emotional context with facial expression in gaze cueing, it is somewhat surprising that these effects are significant only when the context is positive: With pleasant targets, cueing by happy faces is boosted, rather than cueing being reduced in the other conditions. As can be gleaned from our introduction and from the Pecchinenda et al. (2008) study discussed above, all of the consistent modulations in the extant literature have involved negative facial emotions, almost always fearful faces. This boost for happy faces cueing attention in a positive context is therefore novel, but also surprising given the previous literature. One question that arises, then, is: Given that other authors have shown stronger cueing for fearful (Holmes et al., 2006; Mathews et al., 2003) and disgusted faces (Pecchinenda et al., 2008), and that fearful faces seem to produce the most consistent modulation across studies and participants (Tipples, 2005), why do the negative faces, when paired with negative targets, not elicit large cueing effects in the way that happy faces paired with pleasant targets do?

Despite the lack of perfect symmetry in our results, the overall pattern nevertheless supports our hypothesis that emotional context, not simply facial expression, can modulate gaze cueing. We intentionally used a heterogeneous stimulus set for this study in order to avoid habituation effects from repeatedly viewing the same stimuli; in the present experiment, each participant viewed each stimulus only once. It is possible that the lower specificity of the negative stimuli produced a match in valence between an unpleasant target and the negative face, but a mismatch between the precise emotion evoked by a given target (e.g., a snake evokes fear) and the emotion expressed by the face (disgust). This mismatch may have made the representation of the emotional context ambiguous for the groups of participants viewing negative targets (see Hansen & Shantz, 1995). On the other hand, since happiness is the only basic emotion of positive valence, each positively valenced target perfectly matched the emotion expressed by the smiling face. This may be why we see consistent effects of context only in the groups of participants responding to positive stimuli.

An alternative view of our data is that the potential mismatch on some trials between negative targets and the face (e.g., a threatening target that evokes fear vs. a disgusted expression that signals contamination) is not, in fact, the cause of the failure to observe context effects in the negative stimuli groups. On this view, we did not fail to detect a corresponding context effect for negative stimuli because of a stimulus selection issue; rather, gaze cueing can be selectively modulated only in a positive context. This idea, which clearly requires further work beyond the scope of the present paper, suggests that the attention system selectively prioritizes processing the gaze of happy faces in pleasant surroundings. This notion is consistent with the idea that gaze monitoring is strongly linked to affiliative behaviour (e.g., Argyle & Dean, 1965; Carter & Pelphrey, 2008; Kleinke, 1986).

Indeed, joint attention activates the reward systems of the human brain (Schilbach et al., in press). We suggest that gaze following is fundamentally more suited to collaborative, positive social situations (e.g., gaze exchanges between a baby and a caregiver), in which it supports learning about others' behaviour and intentions, and about the social world, in contexts such as kin cooperation, sharing, and friendship. In a negative situation, by contrast, the collaborative nature of gaze monitoring is less important as a purely social function, and the system is tuned more to the gaze cue as a simple means of gathering information about potential threats. Hence, the emotional facial expression is not encoded as strongly and therefore fails to modulate gaze cueing. This idea may at first appear speculative, but it fits well with trends noted previously by this laboratory. For example, Bayliss et al. (2007) showed that objects looked at by a stimulus face are rated more favourably than objects the face ignores, but only if the face is happy. Similarly, trustworthiness judgements of faces that engage in joint attention with participants are modulated more strongly when the faces are smiling than when they are neutral or angry (Bayliss, Griffiths, & Tipper, 2009). These various sources of evidence therefore suggest that, when it comes to joint attention behaviours, there is something special about positive emotional contexts.

To conclude, we present evidence that orienting to the direction of social gaze is modulated by the emotional context in which it is presented. This confirms our previous assertions that considering the object of joint attention as the critical component in social attention episodes will yield significant advances in our understanding of social cueing (Bayliss et al., 2007; Bayliss & Tipper, 2005; Frischen et al., 2007). Our results reveal a degree of flexibility in the gaze cueing system that allows for the integration of multiple sources of information to guide attention. The attention and emotion systems interact at a number of levels, and the role of contextual information is likely to be critical at each (see Frischen, Eastwood, & Smilek, 2008). Our findings reinforce the general view that such context-driven flexibility is important in order to allow our mechanisms of spatial attention to aid rapid detection of the most relevant stimuli in our constantly changing environment.

Acknowledgments

This work was supported by a Leverhulme Early Career Fellowship awarded to APB, and by a Wellcome Programme grant to SPT. The MacBrain Face Stimulus Set (NimStim) was developed by Nim Tottenham (tott0006@tc.umn.edu), supported by the John D. and Catherine T. MacArthur Foundation. The authors thank Cheree Baker, Sarah Dabbs, Nicola Ferreira, and Michelle Ames for assistance with data collection.

APPENDIX: DETAILS OF INTERNATIONAL AFFECTIVE PICTURE SYSTEM (IAPS) IMAGES USED

Experiment 1

Neutral images

Valence: Mean = 5.04, SD = 0.42, maximum = 5.8, minimum = 4.1

Arousal: Mean = 3.99, SD = 0.78, maximum = 6.5, minimum = 2.4

1030, 1121, 1675, 2102, 2191, 2221, 2235, 2372, 2383, 2385, 2396, 2410, 2445, 2487, 2514, 2575, 2635, 2780, 5120, 5395, 5532, 5534, 5535, 5920, 6900, 7037, 7039, 7042, 7043, 7044, 7046, 7054, 7055, 7057, 7058, 7096, 7130, 7160, 7170, 7180, 7182, 7186, 7188, 7190, 7207, 7211, 7217, 7234, 7236, 7237, 7242, 7247, 7248, 7249, 7285, 7484, 7487, 7493, 7500, 7504, 7506, 7546, 7547, 7550, 7560, 7597, 7620, 7640, 7700, 7710, 7820, 7830, 8010, 8060, 8192, 8475, 9070, 9080, 9210, 9913

Experiment 2

Positive images

Valence: Mean = 7.10, SD = 0.44, maximum = 7.9, minimum = 5.8

Arousal: Mean = 4.52, SD = 1.08, maximum = 7.3, minimum = 2.5

1333, 1419, 1450, 1463, 1510, 1540, 1590, 1600, 1601, 1602, 1603, 1604, 1610, 1620, 1650, 1660, 1670, 1720, 1721, 1722, 1731, 1740, 1810, 1811, 1812, 1900, 2092, 2216, 2373, 2791, 5000, 5001, 5010, 5030, 5200, 5201, 5220, 5300, 5480, 5551, 5594, 5611, 5623, 5626, 5660, 5700, 5711, 5750, 5779, 5781, 5800, 5811, 5814, 5820, 5849, 5890, 5891, 5910, 5994, 7200, 7270, 7280, 7325, 7330, 7350, 7400, 7470, 7502, 7508, 8162, 8170, 8185, 8200, 8370, 8420, 8461, 8470, 8496, 8499, 8501

Negative images

Valence: Mean = 3.04, SD = 0.58, maximum = 4.0, minimum = 2.0

Arousal: Mean = 5.71, SD = 0.76, maximum = 7.4, minimum = 3.5

1019, 1052, 1090, 1111, 1120, 1201, 1205, 1220, 1270, 1275, 1280, 1300, 1525, 1932, 2053, 2661, 2683, 2688, 2692, 2710, 2717, 2722, 2751, 2981, 3220, 3230, 3250, 3280, 3500, 5961, 5971, 5972, 5973, 6020, 6021, 6190, 6200, 6210, 6230, 6241, 6260, 6300, 6312, 6360, 6370, 6410, 6415, 6550, 6570, 6610, 6821, 6825, 6834, 6940, 7380, 8230, 8231, 9005, 9008, 9042, 9050, 9090, 9140, 9190, 9250, 9254, 9265, 9270, 9320, 9330, 9341, 9424, 9435, 9480, 9490, 9560, 9561, 9592, 9630, 9830

Contributor Information

Andrew P. Bayliss, School of Psychology, University of Queensland, St. Lucia, Australia.

Stefanie Schuch, Institut für Psychologie, RWTH Aachen University, Aachen, Germany.

Steven P. Tipper, School of Psychology, Bangor University, Bangor, UK.

References

- Adams R. B., Gordon H. L., Baird A. A., Ambady N., Kleck R. E. Effects of gaze on amygdala sensitivity to anger and fear faces. Science. 2003;300:1536. doi: 10.1126/science.1082244.

- Adams R. B., Kleck R. E. Perceived gaze direction and the processing of facial displays of emotion. Psychological Science. 2003;14:644–647. doi: 10.1046/j.0956-7976.2003.psci_1479.x.

- Argyle M., Dean J. Eye contact, distance and affiliation. Sociometry. 1965;28(3):289–304.

- Bayliss A. P., di Pellegrino G., Tipper S. P. Sex differences in eye-gaze and symbolic cueing of attention. Quarterly Journal of Experimental Psychology. 2005;58A(4):631–650. doi: 10.1080/02724980443000124.

- Bayliss A. P., Frischen A., Fenske M. J., Tipper S. P. Affective evaluations of objects are influenced by observed gaze direction and emotional expression. Cognition. 2007;104(3):644–653. doi: 10.1016/j.cognition.2006.07.012.

- Bayliss A. P., Griffiths D., Tipper S. P. Predictive gaze cues affect face evaluations: The effect of facial emotion. European Journal of Cognitive Psychology. 2009;21(7):1072–1084. doi: 10.1080/09541440802553490.

- Bayliss A. P., Paul M. A., Cannon P. R., Tipper S. P. Gaze cueing and affective judgments of objects: I like what you look at. Psychonomic Bulletin and Review. 2006;13(6):1061–1066. doi: 10.3758/bf03213926.

- Bayliss A. P., Tipper S. P. Gaze and arrow cueing of attention reveals individual differences along the autism-spectrum as a function of target context. British Journal of Psychology. 2005;96(1):95–114. doi: 10.1348/000712604X15626.

- Bindemann M., Burton A. M., Langton S. R. H. How do eye gaze and facial expression interact? Visual Cognition. 2008;16(6):708–733.

- Bonifacci P., Ricciardelli P., Lugli L., Pellicano A. Emotional attention: Effects of emotion and gaze direction on overt orienting of attention. Cognitive Processing. 2008;9:127–133. doi: 10.1007/s10339-007-0198-3.

- Cahill L. Why sex matters for neuroscience. Nature Reviews Neuroscience. 2006;7(6):477–484. doi: 10.1038/nrn1909.

- Carter E. J., Pelphrey K. A. Friend or foe? Brain systems involved in the perception of dynamic signals of menacing and friendly social approaches. Social Neuroscience. 2008;3(2):151–163. doi: 10.1080/17470910801903431.

- Charman T., Baron-Cohen S., Swettenham J., Baird G., Cox A., Drew A. Testing joint attention, imitation, and play as infancy precursors to language and theory of mind. Cognitive Development. 2001;15(4):481–498.

- Corneille O., Mauduit S., Holland R. W., Strick M. Liking products by the head of a dog: Perceived orientation of attention induces valence acquisition. Journal of Experimental Social Psychology. 2009;45(1):234–237.

- Driver J., Davis G., Ricciardelli P., Kidd P., Maxwell E., Baron-Cohen S. Gaze perception triggers reflexive visuospatial orienting. Visual Cognition. 1999;6:509–540.

- Emery N. J. The eyes have it: The neuroethology, function and evolution of social gaze. Neuroscience and Biobehavioral Reviews. 2000;24:581–604. doi: 10.1016/s0149-7634(00)00025-7.

- Engell A. D., Haxby J. V. Facial expression and gaze-direction in human superior temporal sulcus. Neuropsychologia. 2007;45(14):3234–3241. doi: 10.1016/j.neuropsychologia.2007.06.022.

- Fox E., Mathews A., Calder A. J., Yiend J. Anxiety and sensitivity to gaze direction in emotionally expressive faces. Emotion. 2007;7(3):478–486. doi: 10.1037/1528-3542.7.3.478.

- Fox E., Russo R., Dutton K. Attentional bias for threat: Evidence for delayed disengagement from emotional faces. Cognition and Emotion. 2002;16(3):355–379. doi: 10.1080/02699930143000527.

- Friesen C. K., Kingstone A. The eyes have it! Reflexive orienting is triggered by nonpredictive gaze. Psychonomic Bulletin and Review. 1998;5:490–495.

- Frischen A., Bayliss A. P., Tipper S. P. Gaze-cueing of attention: Visual attention, social cognition and individual differences. Psychological Bulletin. 2007;133(4):694–724. doi: 10.1037/0033-2909.133.4.694.

- Frischen A., Eastwood J. D., Smilek D. Visual search for faces with emotional expressions. Psychological Bulletin. 2008;134(5):662–676. doi: 10.1037/0033-2909.134.5.662.

- Graham R., Friesen C. K., Fichtenholtz H. M., LaBar K. S. Modulation of reflexive orienting to gaze direction by facial expressions. Visual Cognition. 2010;18(3):331–368.

- Hansen C. H., Shantz C. A. Emotion-specific priming: Congruence effects on affect and recognition across negative emotions. Personality and Social Psychology Bulletin. 1995;21(6):548–557.

- Hietanen J. K., Leppänen J. M. Does facial expression affect attention orienting by gaze direction cues? Journal of Experimental Psychology: Human Perception and Performance. 2003;29:1228–1243. doi: 10.1037/0096-1523.29.6.1228.

- Holmes A., Richards A., Green S. Anxiety and sensitivity to eye gaze in emotional faces. Brain and Cognition. 2006;60(3):282–294. doi: 10.1016/j.bandc.2005.05.002.

- Kawashima R., Sugiura M., Kato T., Nakamura A., Hatano K., Ito K., et al. The human amygdala plays an important role in gaze monitoring: A PET study. Brain. 1999;122:779–783. doi: 10.1093/brain/122.4.779.

- Kleinke C. L. Gaze and eye contact: A research review. Psychological Bulletin. 1986;100(1):78–100.

- Lang P. J., Bradley M. M., Cuthbert B. N. International Affective Picture System (IAPS): Digitized photographs, instruction manual and affective ratings (Tech. Rep. No. A-6). Gainesville, FL: University of Florida; 2005.

- Mathews A., Fox E., Yiend J., Calder A. The face of fear: Effects of eye gaze and emotion on visual attention. Visual Cognition. 2003;10:823–835. doi: 10.1080/13506280344000095.

- Moore C., Dunham P. J., editors. Joint attention: Its origins and role in development. Hove, UK: Lawrence Erlbaum Associates Ltd; 1995.

- Pecchinenda A., Pes M., Ferlazzo F., Zoccolotti P. The combined effect of gaze direction and facial expression on cueing spatial attention. Emotion. 2008;8(5):628–634. doi: 10.1037/a0013437.

- Posner M. I. Orienting of attention. Quarterly Journal of Experimental Psychology. 1980;32A:3–25. doi: 10.1080/00335558008248231.

- Putman P., Hermans E., van Honk J. Anxiety meets fear in perception of dynamic expressive gaze. Emotion. 2006;6(1):94–102. doi: 10.1037/1528-3542.6.1.94.

- Ristic J., Mottron L., Friesen C. K., Iarocci G., Burack J. A., Kingstone A. Eyes are special but not for everyone: The case of autism. Cognitive Brain Research. 2005;24:715–718. doi: 10.1016/j.cogbrainres.2005.02.007.

- Schilbach L., Wilms M., Eickhoff S. B., Romanzetti S., Tepest R., Bente G., et al. Minds made for sharing: Initiating joint attention recruits reward-related neurocircuitry. Journal of Cognitive Neuroscience. (in press).

- Tipples J. Orienting to eye gaze and face processing. Journal of Experimental Psychology: Human Perception and Performance. 2005;31:843–856. doi: 10.1037/0096-1523.31.5.843.

- Tipples J. Fear and fearfulness potentiate automatic orienting to eye gaze. Cognition and Emotion. 2006;20(2):309–320.

- Yiend J. The effects of emotion on attention: A review of attentional processing of emotional information. Cognition and Emotion. 2010;24(1):3–47.