Abstract

Studies that have assessed whether children prefer contingent reinforcement (CR) or noncontingent reinforcement (NCR) have shown that they prefer CR. Preference for CR has, however, been evaluated only under continuous reinforcement (CRF) schedules. The prevalence of intermittent reinforcement (INT) warrants an evaluation of whether preference for CR persists as the schedule of reinforcement is thinned. In the current study, we evaluated 2 children's preference for contingent versus noncontingent delivery of highly preferred edible items for academic task completion under CRF and INT schedules. Children (a) preferred CR to NCR under the CRF schedule, (b) continued to prefer CR as the schedule of reinforcement became intermittent, and (c) exhibited a shift in preference from CR to NCR as the schedule became increasingly thin. These findings extend the generality of and provide one set of limits to the preference for CR. Applied implications, variables controlling preferences, and future research are discussed.

Keywords: concurrent-chains arrangement, contingency strength, contingent reinforcement, intermittent reinforcement, noncontingent reinforcement, preference assessment

The importance of determining children's preference for behavioral interventions or teaching strategies increases when different interventions or strategies are equally effective (e.g., Hanley, Piazza, Fisher, Contrucci, & Maglieri, 1997; Heal & Hanley, 2007). For instance, Hanley et al. evaluated the preferences of two children with intellectual disabilities for differential reinforcement of alternative behavior (DRA) and noncontingent reinforcement (NCR) treatments that were equally effective in reducing severe problem behavior. Preferences were determined within a concurrent-chains arrangement composed of initial and terminal links. In the initial link, the child pressed one of three concurrently available switches that produced access to a DRA, an NCR, or an extinction schedule that operated in the terminal links. The largely biased response allocation in the initial links indicated a preference to obtain adult social interaction under a DRA schedule for both children. Thus, a preference for contingent reinforcement (CR) over NCR was demonstrated.

The reliability and generality of children's preferences to obtain reinforcement via contingencies were further evaluated by Luczynski and Hanley (2009). In a play context, eight children of typical development experienced DRA, NCR, and extinction schedules. Social interaction was provided immediately following every vocal response (“excuse me”) in DRA, whereas the amount and temporal distribution of social interaction were yoked on a time-based schedule in NCR. Seven of eight children preferred DRA over NCR, and one child was indifferent. These results provided additional support for the notion that children prefer CR to NCR.

Similar findings regarding children's preference for CR over NCR were reported by Singh (1970) and Singh and Query (1971). Using between-subjects designs and grouped data analyses, these authors evaluated 5- to 12-year-old children's preferences for obtaining the same rate of marbles contingently (by pressing a lever while sitting on the left chair) or noncontingently (by simply sitting on the right chair). The children obtained a mean of 64% of marbles via pressing the lever across both studies, providing further support for children's preference for CR.

Taken together, these four studies have shown that children prefer CR. NCR is a highly relevant comparison schedule when considering children's preferences for CR for both experimental and ecological reasons. Regarding the former, the influence of the contingency can be isolated from other reinforcement parameters when comparing NCR and CR. Regarding the latter, many have argued that socially mediated contingent reward undermines the automatic reinforcement associated with task completion. This notion is usually described differently, as the undermining of intrinsic motivation or as the overjustification effect (Deci, Koestner, & Ryan, 1999; Lepper, Greene, & Nisbett, 1973). These assertions regarding the deleterious effects of reinforcement have resulted in strong advocacy against the use of contingent reinforcement (or reward) in popular press books (e.g., Kohn, 1993) and are often echoed by early childhood teachers when describing their reluctance to program reinforcement to promote learning in the classroom. The alternative to programming CR is providing reinforcement noncontingently. In addition, evaluating the effects of and children's preference for the noncontingent delivery of reinforcement during academic work is important because NCR has precedent as a maintenance tactic in behavior analysis (e.g., Dozier et al., 2001; Dunlap, Koegel, Johnson, & O'Neill, 1987).

When NCR and CR are simultaneously available, preference for CR has been observed across responses (mands and lever presses), settings (clinical, laboratory, and play contexts), ethnicities (American and American Indian), and children with and without disability. Nevertheless, the conditions for evaluating preference for CR have been restricted to a continuous reinforcement schedule (CRF) in which every response produces a reinforcer. Limiting preference assessments to CRF schedules is problematic because CRF schedules do not necessarily emulate how CR is implemented when adopted as a behavior-change tactic. A common goal in practice is to thin the schedule of reinforcement to increase the practicality for caregivers and teachers. Given the prevalence of intermittent (INT) reinforcement in clinical and educational contexts (Baer, Blount, Detrich, & Stokes, 1987; Cooper, Heron, & Heward, 2007; Martens et al., 2002; McGinnis, Friman, & Carlyon, 1999), whether preference for CR persists as the schedule of reinforcement is thinned warrants investigation.

In the current study, we evaluated preferences of two children of typical development for CR or yoked NCR. In contrast to Hanley et al. (1997) and Luczynski and Hanley (2009), who included social reinforcers and mands in the contingent relation, we included edible reinforcers and an academic target response commonly encountered in educational settings in the contingent relation. We arranged edible reinforcement to limit the likelihood of large fluctuations in motivation across sessions, a factor left to vary in previous evaluations (Hanley et al.; Luczynski & Hanley). The use of edible items with typically developing children who had not been clinically referred indicates the translational nature of our evaluation, but the presence of a relevant academic task and reinforcement schedules that emulate those used in practical contexts highlights the evaluation's applied relevance. Selecting an academic response, delivering edible reinforcement, and evaluating preference under an INT reinforcement schedule were also arranged to further evaluate the generality of preference for CR. To observe the impact of an INT reinforcement schedule on children's preference, we delivered reinforcement on a CRF schedule before and after arranging a progressively increasing INT schedule of reinforcement.

METHOD

Participants, Setting, and Materials

Two typically developing boys who attended an inclusive preschool participated. Children were selected based on demonstrating the skill of completing an identity-matching task (printed numbers 1 through 10) with 90% accuracy and their consistent daily assent when asked to participate. At the onset of the study, Tim was 5 years 6 months old and Dave was 4 years 9 months old. Both children had demonstrated a preference for differential reinforcement over NCR to access social interaction (Luczynski & Hanley, 2009). Over 2 and 7 months had passed between that evaluation and the current study for Tim and Dave, respectively.

Sessions were conducted in a room (3 m by 3 m) equipped with a one-way observation window, a table, and two chairs. The child sat at the middle of a table, and the experimenter sat directly across from the child.

Small (10 cm by 10 cm) colored cards served as initial-link stimuli, and large (0.5 m by 1 m) colored cards served as discriminative stimuli in the terminal links. The academic task involved comparison stimuli made of 10 squares (4.5 cm by 8.5 cm) permanently fixed in a row that depicted the numbers 1 through 10 in ascending order. Behind the row on the poster board was a pile of 50 replica cards that served as sample stimuli. The 50 cards included five sets of numbers 1 through 10 in a mixed order. The identity-matching task was selected because it was similar to tasks presented during direct instructional periods in preschools.

Preassessments

Highly preferred edible items were used as reinforcers to maintain a high and steady level of motivation across sessions. Twelve snack items were assessed in a paired-item preference assessment as described by Fisher et al. (1992). The top four items were used in the efficacy and preference assessments described below.

A preference assessment was also conducted to identify moderately preferred colors to function as initial-link stimuli in an attempt to decrease the likelihood that selections would be influenced by an existing color bias. The procedures mimicked those outlined for the edible preference assessment with the exception that every color selection resulted in the same consequence (brief social praise; i.e., no differential consequences). Each child's preference hierarchy was examined, and the colors ranked as neither the highest nor the lowest were assigned randomly to each schedule.

General Concurrent-Chains Arrangement

Each session consisted of one initial-link selection and one subsequent terminal-link experience in a concurrent-chains arrangement (Hanley et al., 1997). Three sessions were typically conducted each day to limit the child's consumption of edible items. Between sessions, the experimenter and child engaged in a variety of child-selected activities (e.g., playing tag, soccer) for 3 to 6 min. Prior to each day's sessions, the four top-ranked edible items were equally spaced apart and presented to the child, from which one selection was made. The selected item was then delivered as the reinforcer for that day's sessions. We first evaluated the effects of CR, NCR, and no reinforcement (no Sr+) on academic responding (efficacy evaluation). No Sr+ served as a control condition for interpreting response allocation in the initial link. Next, we evaluated children's preference when reinforcement was delivered under continuous and INT schedules of reinforcement (preference assessment).

Data Collection and Response Measurement

The initial-link response, card selection, was defined as handing the experimenter one of the available cards located 25 cm apart on the session room door. Card selections were scored using paper and pencil. In the terminal links, research assistants stood behind the one-way observation window and scored correct academic responses when the child placed and released a sample stimulus with his hand over more than half of the identical comparison stimulus; incorrect academic responses were scored when the child placed and released a sample stimulus with his hand over more than half of a nonidentical comparison stimulus. If neither of these responses was observed, no change occurred in the experimenter's behavior. Observers also scored reinforcer deliveries in the terminal link when the experimenter placed an edible item in the child's hand, smiled, and delivered a thumbs up. Data were collected using a continuous measurement system with handheld computers. The number of total responses and total reinforcer deliveries were each divided by the session's duration (typically 3 min) to convert the child's and experimenter's behavior to a rate (responses per minute). The computers provided a moment-to-moment record of correct and incorrect academic responses and reinforcer deliveries.

Interobserver Agreement

Interobserver agreement was assessed by having a second observer simultaneously but independently score initial-link card selections, correct and incorrect academic responses, and reinforcer deliveries. Initial-link agreement for card selections was calculated by dividing the number of selections for which both observers scored the same colored card by the total number of selections. Agreement data were collected for 100% of initial-link selections and resulted in 100% agreement for both children. In the terminal links, agreement for correct and incorrect academic responses and reinforcer deliveries was determined by partitioning the duration of terminal links into 10-s bins and comparing data collectors' observations on an interval-by-interval basis. Within each interval, the smaller number of scored events was divided by the larger number; these quotients were then converted to a percentage and averaged across the intervals for all sessions. Percentage of sessions scored by a second observer was 53% for Dave and 44% for Tim. Mean agreement for academic responses was 96% for Dave (session range, 69% to 100%) and 93% for Tim (session range, 62% to 100%). Mean agreement for reinforcer deliveries was 98% for Dave (session range, 85% to 100%) and 97% for Tim (session range, 72% to 100%).
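The interval-by-interval calculation for the terminal links can be sketched in code for a single session. This is a minimal illustration rather than the software used in the study: the function name `interval_agreement` and its arguments are hypothetical, and counting intervals in which neither observer scored an event as full agreement is an assumption that the text does not specify.

```python
import math


def interval_agreement(times_a, times_b, session_s, bin_s=10.0):
    """Mean interval-by-interval agreement (%) for one session.

    times_a, times_b: event timestamps (s) scored by each observer.
    session_s: duration of the terminal link in seconds.
    bin_s: interval width; 10 s in the procedure described above.
    """
    n_bins = math.ceil(session_s / bin_s)
    if n_bins < 1:
        raise ValueError("session_s must be positive")

    counts_a = [0] * n_bins
    counts_b = [0] * n_bins
    for times, counts in ((times_a, counts_a), (times_b, counts_b)):
        for t in times:
            # Events at the very end of the session fall in the last bin.
            idx = min(int(t // bin_s), n_bins - 1)
            counts[idx] += 1

    quotients = []
    for a, b in zip(counts_a, counts_b):
        if a == b:
            # ASSUMPTION: equal counts, including 0 and 0, score as 1.0.
            quotients.append(1.0)
        else:
            quotients.append(min(a, b) / max(a, b))
    return 100.0 * sum(quotients) / len(quotients)
```

For example, if one observer records events at 1, 2, and 12 s and the other at 1, 12, and 13 s in a 30-s link, the three bins yield quotients of .5, .5, and 1, for a session mean of about 67%.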

Experimental Design

A multielement design was used to determine the effects of CR, NCR, and no Sr+ on correct academic responding. A concurrent-chains arrangement was used to determine children's preference for CR or NCR. A reversal design was used to determine the impact of the CR schedule (CRF vs. INT) on children's preferences for the schedules.

Efficacy Evaluation

Efficacy sessions involved the experimenter prompting the selection of one colored card from those concurrently available in the initial link, followed by the child experiencing the associated reinforcement schedule in the terminal link. This provided the children with equal exposure to the terminal links within a multielement design and allowed the effects of the reinforcement schedules on academic responding to be evaluated. The order of prompted selections across sessions was random and counterbalanced and allowed children to repeatedly experience the association between the colored card selections and the programmed reinforcement schedules.

During the initial link, the experimenter stood next to the child and said, “Hand me the [color] card,” referring to one of the cards located on the session room door. The child and experimenter then entered the room, in which contingency-specifying instructions and role playing were conducted prior to the start of the session. When the child selected the colored card associated with the CR schedule, the experimenter held up the card and said,

[Child's name], when you hand me the [color] card, I will give you a piece of food and a thumbs up when you correctly match a number. You can match a number by taking one card from the pile and matching it [simultaneously modeled]. If you make a mistake, I will point to the correct card [simultaneously modeled]. When I give you a piece of food, eat it right away.

Under the NCR schedule, the experimenter held up the associated colored card and said, “[Child's name], when you hand me the [color] card, I will sometimes give you a piece of food and a thumbs up and sometimes I will not. When I give you a piece of food, eat it right away.” Under the no-Sr+ schedule, the experimenter held up the associated colored card and said, “[Child's name], when you hand me the [color] card, I will not give you a piece of food, and I will not give you a thumbs up when you correctly match the numbers.”

After providing the instructions for a given schedule, the experimenter then initiated a brief role play by saying, “Let's practice, one, two, three, start.” In the CR role play, the experimenter used hand-over-hand guidance to prompt an academic response that was immediately followed by a small piece of an edible item, thumbs up, and smile. In the NCR role play, the experimenter delivered an edible item with a thumbs up and smile immediately, independent of responding. By contrast, during the no-Sr+ role play, the experimenter diverted his eyes and head away from the child for 10 s, resulting in the absence of reinforcement. The session then began, and the following conditions were implemented.

In the CR condition, the child was seated at the table facing the fixed row of 10 comparison numbers on the poster board and the pile of 50 sample numbers. Every independent and prompted correct response resulted in the immediate delivery of an edible item with a thumbs up and smile. Incorrect responses were followed by a correction prompt in which the experimenter touched the matching number. Tim and Dave emitted mostly correct responses, with only 2% (10 errors in 563 responses) and 4% (32 errors in 876 responses) incorrect responses, respectively. After delivery of a reinforcer, the experimenter removed the correctly matched sample stimulus from the array of comparison stimuli. The child experienced the schedule's contingencies for 2 min or until 10 edible items had been delivered. The session durations for NCR and no-Sr+ sessions were yoked to the previous CR session. In NCR, the frequency and temporal distribution of reinforcement were also yoked to that observed in the preceding CR session. Yoking involved segmenting the duration of a CR session into 5-s intervals and marking an X for each reinforcer delivery. During the next NCR session, the marked data sheet was present but out of the child's view, and the experimenter delivered reinforcement for every X within an interval. During no-Sr+ sessions, the experimenter directed his eyes and head toward the table. If incorrect academic responses occurred during NCR and no Sr+, the experimenter removed the sample stimulus and provided correction prompts as in CR.
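The yoking procedure can be illustrated with a short sketch that turns the reinforcer timestamps from a CR session into the marked data sheet used in the next NCR session. The function names are hypothetical, and the sketch assumes only what is stated above (5-s intervals, one mark per delivery); the exact moment of delivery within a marked interval is not specified in the text and is left open here.

```python
import math


def build_yoking_sheet(reinforcer_times, session_s, interval_s=5):
    """Count reinforcer deliveries (the X marks) in each 5-s interval."""
    n_intervals = math.ceil(session_s / interval_s)
    sheet = [0] * n_intervals
    for t in reinforcer_times:
        # A delivery at the final second is assigned to the last interval.
        idx = min(int(t // interval_s), n_intervals - 1)
        sheet[idx] += 1
    return sheet


def ncr_delivery_plan(sheet, interval_s=5):
    """List (interval start in s, number of deliveries) for marked intervals."""
    return [(i * interval_s, marks) for i, marks in enumerate(sheet) if marks > 0]


# Hypothetical CR session: 25 s long with four reinforcer deliveries.
sheet = build_yoking_sheet([3.0, 7.5, 8.2, 21.0], session_s=25)
plan = ncr_delivery_plan(sheet)
```

Because the sheet preserves both the count and the interval of each delivery, the NCR session reproduces the amount and approximate temporal distribution of reinforcement from the preceding CR session without reference to the child's responding.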

Preference Assessments

CRF preference assessment

In the initial link, the experimenter asked the child to “Hand me the card that you like the best.” Both children always made a selection following the initial prompt. The child then immediately entered the session room and experienced the contingency-specifying instructions and role play associated with the selected card. At the start of the session, correct responses produced an edible item on a CRF schedule (i.e., following every correct response). The placement of cards in the initial link was determined randomly for the first session and then rotated clockwise for each subsequent session. The assessment continued until one colored card was selected four more times than any other card; this defined a preferred terminal link.
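The stopping rule for the CRF preference assessment can be expressed as a small check on cumulative selections. The sketch below is illustrative only; the function name, the option labels, and the selection sequence in the example are hypothetical.

```python
def preferred_link(selections, options=("CR", "NCR", "no Sr+"), margin=4):
    """Return (preferred option, session number) once one card has been
    selected `margin` more times than any other card, else (None, n)."""
    counts = {option: 0 for option in options}
    for session, choice in enumerate(selections, start=1):
        counts[choice] += 1
        ranked = sorted(counts.values(), reverse=True)
        # Compare the leading card with the next most selected card.
        if ranked[0] - ranked[1] >= margin:
            leader = max(counts, key=counts.get)
            return leader, session
    return None, len(selections)


# Hypothetical sequence: one NCR selection delays the criterion to session 6.
result = preferred_link(["CR", "CR", "NCR", "CR", "CR", "CR"])
```

With exclusive selection of one card, the criterion is met in four sessions; each selection of a competing card extends the assessment by at least one session.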

INT preference assessment

When the child met the preference criterion under the CRF schedule, preference between the schedules continued to be evaluated as the schedule of reinforcement during CR was progressively thinned. Each initial-link selection toward CR increased the number of correct academic responses required to produce reinforcement by a unit of 1. That is, the fixed-ratio (FR) schedule increased by 1 (e.g., FR 1, FR 2, FR 3) following each session in which CR was selected. The instructions remained as experienced in the CRF assessment, except the experimenter specified the required number of correct matches in CR. Given that reinforcement in NCR was yoked to that in CR, as the schedule of reinforcement became thinner in CR, a corresponding decrease in reinforcement rate was experienced in NCR. A shift in preference was defined as four selections toward either NCR or no Sr+ without a selection toward CR.
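The thinning rule and the shift criterion can be sketched together as follows. This is a hedged illustration: the function name is hypothetical, and the FR value in effect at the first INT session (`start_fr`) is treated as a parameter because the text does not state it explicitly.

```python
def run_int_assessment(selections, start_fr=1, shift_n=4):
    """Track the FR value across sessions and detect a preference shift.

    Returns (FR value programmed in CR at each session, session number at
    which the shift criterion was met or None).
    """
    fr = start_fr  # ASSUMPTION: starting value is supplied by the caller
    non_cr_run = 0
    fr_by_session = []
    for session, choice in enumerate(selections, start=1):
        fr_by_session.append(fr)
        if choice == "CR":
            fr += 1          # each CR selection thins the schedule by 1
            non_cr_run = 0   # a CR selection resets the shift count
        else:
            non_cr_run += 1  # NCR and no Sr+ both count toward a shift
            if non_cr_run >= shift_n:
                return fr_by_session, session
    return fr_by_session, None


# Hypothetical selection sequence for illustration only.
choices = ["CR", "CR", "NCR", "CR", "NCR", "NCR", "no Sr+", "NCR"]
fr_values, shift_session = run_int_assessment(choices)
```

Note that the FR value changes only after CR selections, so selections toward NCR or no Sr+ leave the CR requirement (and therefore the yoked NCR rate) unchanged for the next session.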

Procedural Fidelity

The experiment necessitated programming specific FR values in CR and a yoked amount of reinforcement in NCR. In CR, fidelity measures determined whether reinforcers were delivered only following the academic response that completed a particular FR schedule (dependent fidelity) and within 2 s of that response (temporal fidelity). Dependent fidelity allowed the assessment of errors of omission or commission, and temporal fidelity allowed for timing errors (e.g., delayed reinforcement) to be detected. In NCR, fidelity measures determined whether the amount of edible items delivered matched that experienced in the preceding CR session. The fidelity measures were determined from each session's data stream.

Response–reinforcer occurrences with fidelity were defined as the schedule-specified number of responses followed by a reinforcer. Occurrences with error included the schedule-specified number of responses not followed by a reinforcer (error of omission) or a reinforcer preceded by another reinforcer before a response was observed (error of commission). The number of occurrences with fidelity was divided by the total number of occurrences (those with fidelity plus those with error) and converted to a percentage of dependent fidelity. The occurrences with fidelity in the dependent fidelity assessment were then analyzed for temporal fidelity. Occurrences with temporal fidelity were defined as responses that completed the schedule requirement followed by a reinforcer within 2 s. That number was then divided by the total number of occurrences with dependent fidelity and converted to a percentage. The dependent and temporal fidelity percentages were then averaged across all sessions to yield a single fidelity percentage for each measure and child. Mean overall percentages of fidelity were 99% for Dave (session range, 90% to 100%) and 98% for Tim (session range, 80% to 100%). The results confirmed that reinforcement was delivered as specified by the programmed FR schedule with minimal error.
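The two CR fidelity percentages for a single session can be sketched as below. The function names and the example counts are hypothetical, and treating a latency of exactly 2 s as on time is an assumption about the boundary.

```python
def dependent_fidelity(n_with_fidelity, n_omission, n_commission):
    """Percentage of response-reinforcer occurrences delivered as scheduled."""
    total = n_with_fidelity + n_omission + n_commission
    return 100 * n_with_fidelity / total


def temporal_fidelity(latencies_s, window_s=2.0):
    """Percentage of occurrences with dependent fidelity in which the
    reinforcer followed the schedule-completing response within the window.

    latencies_s: response-to-reinforcer latencies (s) for those occurrences.
    """
    # ASSUMPTION: a latency equal to the window counts as on time.
    on_time = sum(1 for latency in latencies_s if latency <= window_s)
    return 100 * on_time / len(latencies_s)


# Hypothetical session: 18 correct deliveries, 1 omission, 1 commission.
dep = dependent_fidelity(18, 1, 1)
temp = temporal_fidelity([0.5, 1.9, 2.4, 1.0])
```

Only occurrences that already met the dependent criterion enter the temporal calculation, so the two percentages describe separate sources of error.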

For NCR sessions, the procedural fidelity measure involved determining the number of reinforcers delivered in each pair of CR and NCR sessions, dividing the smaller number by the larger number, and converting the ratio to a percentage. The percentages were averaged across all sessions to yield a single fidelity percentage for each child. Mean overall percentages of fidelity were 99% for Dave (session range, 89% to 100%) and 99% for Tim (session range, 91% to 100%). These results demonstrate that the amount of reinforcement yoked to NCR was similar to that delivered in CR, which diminished the confounding effect of differences in obtained reinforcement across the schedules as an explanation for preference outcomes.
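The NCR fidelity measure for one pair of sessions reduces to a ratio of reinforcer counts. A minimal sketch follows, with a hypothetical function name; scoring a pair with no deliveries in either session as 100% is an assumption not addressed in the text.

```python
def ncr_fidelity(cr_reinforcers, ncr_reinforcers):
    """Smaller reinforcer count divided by the larger, as a percentage."""
    larger = max(cr_reinforcers, ncr_reinforcers)
    if larger == 0:
        # ASSUMPTION: no deliveries in either session counts as a match.
        return 100.0
    return 100 * min(cr_reinforcers, ncr_reinforcers) / larger


# Hypothetical pair: 10 reinforcers in CR, 9 delivered in the yoked NCR session.
pair_fidelity = ncr_fidelity(10, 9)
```

Because the smaller count is always the numerator, the measure penalizes both under-delivery and over-delivery in NCR equally.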

Contingency Strength Analysis

To determine whether a positive contingency was indeed in place during CR and absent during NCR, contingency strength values experienced across both schedules were quantified, as described by Luczynski and Hanley (2009). In addition to describing the contingency strengths that existed in CR and NCR, these data allowed an assessment of the degree to which contingency strength changed in CR as a function of the progressively INT schedule of reinforcement.

Two conditional probabilities, composed of independent correlations between responses and reinforcers, were used to produce a contingency strength that could be interpreted along a continuum from 1 to −1 and described in terms of positive, neutral, and negative contingencies, as outlined by Hammond (1980). Specifically, the response conditional probability was calculated by counting the number of times at least one reinforcer occurred within 4 s following each correct response and then dividing that number by the total number of correct responses within a session. This yielded a proportional score between 0 and 1. The event conditional probability was calculated by counting the number of times a reinforcer was not preceded within 4 s by a correct response and dividing that number by the total number of reinforcers within a session, which also yielded a proportional score between 0 and 1. Subtracting the event conditional probability from the response conditional probability produced a contingency strength value between 1 and −1.
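The contingency strength calculation can be sketched from response and reinforcer timestamps. This is an illustration under stated assumptions rather than the analysis code used in the study: the function name is hypothetical, a session with no correct responses is assigned a response conditional probability of 0, and a session with no reinforcers is assigned an event conditional probability of 0.

```python
def contingency_strength(response_times, reinforcer_times, window_s=4.0):
    """Response conditional probability minus event conditional probability."""

    def linked(response_t, reinforcer_t):
        # True when the reinforcer occurs within the window after the response.
        return 0 <= reinforcer_t - response_t <= window_s

    if response_times:
        followed = sum(
            1 for r in response_times
            if any(linked(r, s) for s in reinforcer_times)
        )
        p_response = followed / len(response_times)
    else:
        p_response = 0.0  # ASSUMPTION: undefined ratio treated as 0

    if reinforcer_times:
        unpreceded = sum(
            1 for s in reinforcer_times
            if not any(linked(r, s) for r in response_times)
        )
        p_event = unpreceded / len(reinforcer_times)
    else:
        p_event = 0.0  # ASSUMPTION: undefined ratio treated as 0

    return p_response - p_event


# Hypothetical sessions for illustration.
crf = contingency_strength([1, 5, 9], [1.5, 5.5, 9.5])         # every response reinforced
ncr = contingency_strength([], [2, 6])                         # reinforcers, no responding
fr2 = contingency_strength([0, 5, 10, 15], [5.5, 15.5])        # every second response reinforced
```

Under these assumptions, a CRF session yields 1, an NCR session without responding yields −1, and thinning the schedule lowers the value because a smaller proportion of correct responses is followed by a reinforcer within 4 s.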

RESULTS

Efficacy Evaluation

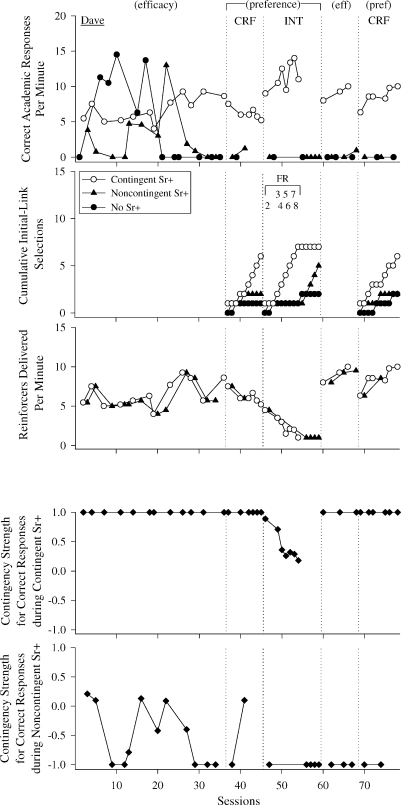

Dave's correct academic responding initially occurred across all schedules during the efficacy evaluation, with variable rates of responding in NCR and no Sr+ and relatively stable rates in CR (Figure 1). After experiencing the programmed contingencies in each terminal link about 10 times, his rate of academic responding decreased to zero in NCR and no Sr+, but was maintained at a steady level in CR. This general pattern of academic responding persisted through the rest of his evaluation. These data indicate that academic responding became sensitive to the programmed contingencies, which was an important prerequisite to assessing relative preference between the reinforcement schedules.

Figure 1.

Correct academic responses per minute (top row) during contingent reinforcement (open circles), noncontingent reinforcement (filled triangles), and no reinforcement (filled circles) schedules. Cumulative initial-link selections (second row) during the continuous reinforcement (CRF) and intermittent reinforcement (INT) preference assessments. Reinforcers delivered per minute (third row) and contingency strength values for contingent (fourth row) and noncontingent (bottom row) reinforcement sessions for Dave.

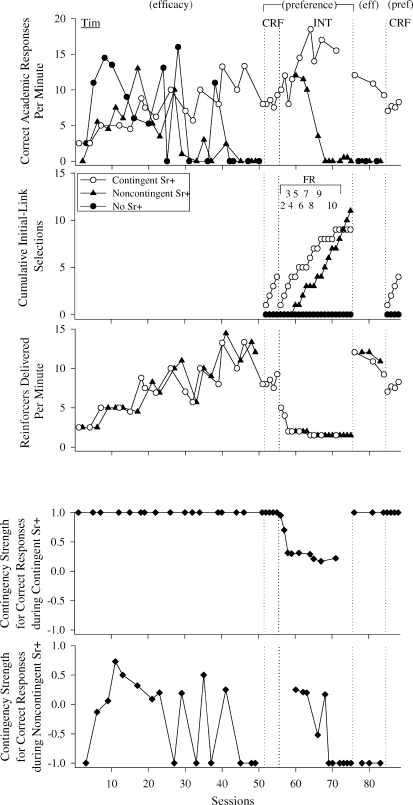

Tim also initially emitted academic responding across all reinforcement schedules with relatively high, variable rates in NCR and no Sr+ and more moderate, stable rates in CR (Figure 2). Like Dave, following extended exposure to the programmed contingencies, Tim's academic responding decreased to zero in NCR and no Sr+ while remaining at a relatively high level in CR. This general pattern of academic responding persisted through the rest of his evaluation as well, with the exception of an increase in academic responding during NCR when an INT schedule was introduced.

Figure 2.

Correct academic responses per minute (top row) during contingent reinforcement (open circles), noncontingent reinforcement (filled triangles), and no reinforcement (filled circles) schedules. Cumulative initial-link selections (second row) during the continuous reinforcement (CRF) and intermittent reinforcement (INT) preference assessments. Reinforcers delivered per minute (third row) and contingency strength values for contingent (fourth row) and noncontingent (bottom row) reinforcement sessions for Tim.

Preference Assessments

In the CRF preference assessment, Dave allocated four more selections toward the colored card associated with gaining access to CR than to the cards associated with NCR or no Sr+, thus demonstrating a preference for CR during academics. After a preference for CR was observed under a continuous schedule, the INT preference assessment was initiated. As the INT schedule for correct academic responses became thinner during CR, he continued to allocate selections to access CR. Selections of CR persisted until the intermittency of the schedule reached FR 8. Thereafter, he allocated all selections to access reinforcement under the NCR schedule (with the exception of one selection toward no Sr+). The reallocation of selections suggested a shift in preference from response-dependent to response-independent reinforcement as the schedule became relatively thin.

After Dave experienced another brief history of obtaining contingent reinforcement on a CRF schedule, the second CRF preference assessment was conducted. He allocated four more selections toward CR, replicating his initial preference and demonstrating that the INT schedule exerted functional control over the shift in preference toward NCR.

In Tim's CRF preference assessment, he allocated selections exclusively toward the colored card associated with CR. Thus, like Dave, he also preferred CR during academics. Selections exclusive to CR continued as the schedule requirement increased to FR 5 during the INT preference assessment. Subsequent selections, however, were more evenly distributed between CR and NCR. After he experienced an FR 10 schedule, a more pronounced change in his pattern of selections was observed, with four consecutive selections toward NCR.

In the second CRF preference assessment, Tim again allocated selections exclusively towards CR. This replicated preference for CR under a CRF schedule and provided evidence that the shift in preference was functionally related to the schedule of contingent reinforcement.

Reinforcement Amount

The data presented in the third panel of both figures allow a comparison of the rate of reinforcement experienced by Dave and Tim during CR and NCR sessions under a CRF schedule and decreases in obtained reinforcement as the INT schedule was thinned. The experimenter delivered nearly identical levels of reinforcement across both schedules throughout all phases. In addition, both children experienced similar decreases in the rate of reinforcement as the schedule of reinforcement was thinned. Therefore, there was no advantage with respect to reinforcement amount for selecting CR or NCR.

Contingency Strength Analysis

During the efficacy evaluation, both children experienced a contingency strength of 1 across all sessions in CR (the strongest positive contingency), indicating that reinforcement was delivered immediately (i.e., within 4 s) following every correct academic response and never in its absence. A strong contingency strength is expected under contingent and continuous reinforcement schedules. The presence of academic responding during NCR resulted in a mixture of positive, neutral, and negative contingency strength values ranging from .2 to −1. The range of values suggests that academic responding adventitiously co-occurred with a varying number of scheduled reinforcer deliveries across sessions. Following repeated exposure to yoked time-based reinforcer deliveries, academic responding decreased to zero in NCR, and both students experienced a contingency strength of −1 (the strongest negative contingency) prior to entering the CRF preference assessment.

During the INT preference assessment, as the schedule of reinforcement became thinner in CR, a concomitant weakening in the strength of the positive contingency was observed for both students. At FR 8 and FR 10, in which a shift in preference occurred for both students, contingency strengths in CR weakened to only slightly positive values of .18 and .22, respectively. Returning to a CRF schedule in the efficacy evaluation reestablished the strongest contingency strength of 1.

DISCUSSION

This study assessed children's preference for contingent and noncontingent edible reinforcement under both continuous and INT schedules of reinforcement for completing academic work. Both children preferred CR over NCR under a CRF schedule. When reinforcement was delivered on a progressively increasing INT schedule, children's preference for CR persisted. However, as the reinforcement schedule became thinner and the contingency in the CR condition grew weaker, preference shifted towards NCR. After the return to a CRF schedule, preference for CR was observed again, which demonstrated that preference shifts were functionally related to reinforcement intermittency.

Preference for CR over NCR delivered under a CRF schedule systematically replicates the results of Hanley et al. (1997), Luczynski and Hanley (2009), Singh (1970), and Singh and Query (1971). Arranging a response (academic work) and reinforcer (edible items) that were qualitatively different from those in previous research extends the generality of this phenomenon to additional response and reinforcer types. A more notable extension was the evaluation of preference under a schedule type (INT reinforcement) that emulates a common practice in early childhood education and behavioral treatments. The results indicated that children's preference for response-dependent reinforcement is not limited to CRF schedules. That is, children may still prefer to complete academic work rather than obtain reinforcement freely even as the schedule of reinforcement is thinned (Dave and Tim preferred CR at FR values of 7 and 9, respectively). Observing preference for CR in an instructional context under INT reinforcement provides some evidence that thinning the reinforcement schedule following the acquisition of an academic response (Baer et al., 1987; Cooper et al., 2007; Martens et al., 2002; McGinnis et al., 1999) can still result in a preferred learning context.

Hanley et al. (1997) explained preference for CR by asserting that the programmed contingency allowed children to access reinforcement at moments when it was most valuable. Responding to access reinforcement in Hanley et al. and Luczynski and Hanley (2009) was variable, and children did not maximize reinforcement; thus, fluctuation in the value (i.e., establishing operations) of the reinforcers was assumed in these studies. This suggests the value of a contingency is found in the inherent response-dependent mechanism that allows children to match obtained reinforcement to personal motivating operations.

This proximate advantage afforded by a strong positive contingency was probably reduced in the current study relative to that found in Hanley et al. (1997) and Luczynski and Hanley (2009) because small amounts of highly preferred edible items were used as reinforcers, and no competing reinforcers (e.g., additional toy items) were present. As evidence, responding in the CR condition occurred at similar rates across sessions (Dave, 7.3 responses per minute, SD = 1.6; Tim, 8.0 responses per minute, SD = 1.8), and both children earned all 10 reinforcers in the majority of sessions (93% of sessions for each). The high, stable rates of responding in CR provide evidence that large fluctuations in motivation were likely absent; thus, the presumed momentary advantage of a contingency in the CR condition of our analysis was essentially absent. Therefore, it is necessary either to rely on phylogenic (evolutionary history) explanations, ontogenic (an individual's learning history) explanations, or both (see discussion in Catania & Sagvolden, 1980), or to search for relevant factors in the experimental arrangement controlling preference for CR. We conducted a post hoc analysis to identify possible factors that may have influenced preference for CR.

Reinforcement rates were comparable across CR and NCR (see the third panels of each figure); thus, preference for CR cannot be explained by differences in rates of reinforcement. Instructions provided by the experimenter, self-generated rules (e.g., “Doing work is good”; see Horne & Lowe, 1993), or both cannot entirely account for the results of the current investigation because preference shifted to NCR when the schedule of reinforcement was thinned. Responding decreased to zero in the no-Sr+ condition prior to assessing preference. Therefore, supplemental automatic reinforcement produced by engaging in the academic response in CR does not seem to be a plausible explanation for the preference outcomes.

Fisher, Thompson, Hagopian, Bowman, and Krug (2000) and Mischel, Ebbesen, and Zeiss (1972) asserted that engaging in academic responding may serve to mediate time periods between reinforcer deliveries. This assertion was based on children tolerating delays more effectively when alternative activities were present during the delay periods. Yoking the amount and distribution of reinforcement from CR to NCR produced variable interreinforcement times, and with only academic materials present in the room, the child did not behave much during the interreinforcement times in NCR (i.e., he simply waited). By contrast, engaging in the academic response filled the time periods when edible items were not being consumed in CR. That is, engaging in academic responding may have functioned as a mediating activity and influenced preference toward CR in the CRF preference assessment.

Although the presence of a mediating activity may account for the observed preferences under the CRF schedule, it does not predict shifts in preference at thinner schedules of reinforcement. As the FR schedule increased, so did the time to complete it; therefore, engaging in academic behavior as a means to mediate the time until reinforcement should have been of even greater value in CR as the schedule was thinned, but our data showed that it was not.

A more plausible account is that accessing reinforcement under an FR schedule added value to CR through a conditioned reinforcement process in the form of response completions becoming discriminative for reinforcement delivery. Completing responses within an FR schedule can acquire a discriminative function, especially in our progressively increasing FR schedule, because correct responses were correlated with the subsequent delivery of reinforcement. Thus, engaging in academic responses may have produced conditioned reinforcement in CR, not via automatic reinforcement but as discriminative stimuli within the reinforcement contingency. However, this conditioned reinforcement may have been at strength under only relatively dense reinforcement schedules, such that a weakening in conditioned reinforcement may have occurred (i.e., the discriminative function of completing responses decreased) as the schedule of reinforcement became relatively thin.

Taken together, preference for CR and the shift in preference from CR to NCR may have been influenced by an interaction between the effort associated with the FR requirement and the supplemental value of conditioned reinforcement in CR. In the CRF preference assessment, the conditioned reinforcement may have been greater than the effort of completing one academic response, leading to a preference for CR. However, at higher FR values, the increased response effort combined with the weakening of the conditioned value of completing each academic response may have resulted in the shift in preference away from CR and toward NCR. This explanation, based on an interaction between response effort and conditioned reinforcement, seems the most plausible for both the initial preference for CR and the shift in preference as a function of reinforcement intermittency.

The aforementioned explanation for the shift in preference from CR to NCR is conceptually systematic and may be an accurate account, but previous research has implicated the role of accessing reinforcement under a strong positive contingency (Hanley et al., 1997; Luczynski & Hanley, 2009). The weakening of the positive contingency strength in CR as the schedule of reinforcement was thinned may have removed this appetitive feature, and therefore shifted preference (see the second panels of each figure). Finally, if the children's behavior was sensitive to subtle, temporally extended events, preference shifts may have occurred because further increases in response requirements under CR, which also increased delays under NCR, were avoided by selecting NCR.

Our tentative inferences regarding potential controlling variables for children's preference for CR and shifts in preference toward NCR at thinner schedules of reinforcement warrant further research. For instance, to better understand the impact of response effort, comparisons of different target responses that vary in associated response effort may be useful. To better understand possible conditioned reinforcement effects, programming variable-interval schedules in comparison to FR schedules could provide information as to whether the conditioned value of responding during the FR schedules controls preference for CR. If fluctuations in conditioned reinforcement influence children's preference between CR and NCR schedules, a strategy for maintaining preference for CR might be to include more explicit conditioned reinforcers as the CR schedule is thinned to stave off the shift in preference to NCR.

Our results, in combination with previous research on children's preference for CR versus NCR, continue to show that children prefer to obtain CR under a relatively dense schedule regardless of the type of response, reinforcer, and context arranged. The thinning of reinforcement to resemble practical application showed that two children still preferred to work to obtain reinforcement in an instructional setting. However, our results also showed that a less preferred learning context may develop as the schedule of reinforcement becomes relatively thin. Due to the limitations of our investigation, which include our small number of intersubject replications, the absence of intrasubject replication for preference shifts, the children's participation in previous research, and the limited parameters evaluated, further research should continue to identify the boundary conditions of preference for contingent reinforcement across different responses, reinforcers, and schedules. These studies may lead to a better understanding of how to design preferred environments that involve programmed reinforcement.

REFERENCES

- Baer R.A, Blount R.L, Detrich R, Stokes T.F. Using intermittent reinforcement to program maintenance of verbal/nonverbal correspondence. Journal of Applied Behavior Analysis. 1987;20:179–184. doi: 10.1901/jaba.1987.20-179.

- Catania A.C, Sagvolden T. Preference for free choice over forced choice in pigeons. Journal of the Experimental Analysis of Behavior. 1980;34:77–86. doi: 10.1901/jeab.1980.34-77.

- Cooper J.O, Heron T.E, Heward W.L. Applied behavior analysis (2nd ed.). Englewood Cliffs, NJ: Pearson Prentice Hall; 2007.

- Deci E.L, Koestner R, Ryan R.M. A meta-analytic review of experiments examining the effects of external rewards on intrinsic motivation. Psychological Bulletin. 1999;125:627–668. doi: 10.1037/0033-2909.125.6.627.

- Dozier C.L, Carr J.E, Enloe K, Landaburu H, Eastridge D, Kellum K.K. Using fixed-time schedules to maintain behavior: A preliminary investigation. Journal of Applied Behavior Analysis. 2001;34:337–340. doi: 10.1901/jaba.2001.34-337.

- Dunlap G, Koegel R.L, Johnson J, O'Neill R.E. Maintaining performance of autistic clients in community settings with delayed contingencies. Journal of Applied Behavior Analysis. 1987;20:185–191. doi: 10.1901/jaba.1987.20-185.

- Fisher W, Piazza C.C, Bowman L.G, Hagopian L.P, Owens J.C, Slevin I. A comparison of two approaches for identifying reinforcers for persons with severe and profound disabilities. Journal of Applied Behavior Analysis. 1992;25:491–498. doi: 10.1901/jaba.1992.25-491.

- Fisher W.W, Thompson R.H, Hagopian L.P, Bowman L.G, Krug A. Facilitating tolerance of delayed reinforcement during functional communication training. Behavior Modification. 2000;24:3–9. doi: 10.1177/0145445500241001.

- Hammond L.J. The effect of contingency upon the appetitive conditioning of free-operant behavior. Journal of the Experimental Analysis of Behavior. 1980;34:297–304. doi: 10.1901/jeab.1980.34-297.

- Hanley G.P, Piazza C.C, Fisher W.W, Contrucci S.A, Maglieri K.M. Evaluation of client preference for function-based treatments. Journal of Applied Behavior Analysis. 1997;30:459–473. doi: 10.1901/jaba.1997.30-459.

- Heal N.A, Hanley G.P. Evaluating preschool children's preferences for motivational systems during instruction. Journal of Applied Behavior Analysis. 2007;40:249–261. doi: 10.1901/jaba.2007.59-05.

- Horne P.J, Lowe C.F. Determinants of human performance on concurrent schedules. Journal of the Experimental Analysis of Behavior. 1993;59:29–60. doi: 10.1901/jeab.1993.59-29.

- Kohn A. Punished by rewards. Boston: Houghton-Mifflin; 1993.

- Lepper M.R, Greene D, Nisbett R.E. Undermining children's intrinsic interest with extrinsic reward: A test of the “overjustification” hypothesis. Journal of Personality and Social Psychology. 1973;28:129–137.

- Luczynski K.C, Hanley G.P. Do children prefer contingencies? An evaluation of the efficacy of and preference for contingent versus noncontingent social reinforcement during play. Journal of Applied Behavior Analysis. 2009;42:511–525. doi: 10.1901/jaba.2009.42-511.

- Martens B.K, Ardoin S.P, Hilt A.M, Lannie A.L, Panahon C.J, Wolfe L.A. Sensitivity of children's behavior to probabilistic reward: Effects of a decreasing-ratio lottery system on math performance. Journal of Applied Behavior Analysis. 2002;35:403–406. doi: 10.1901/jaba.2002.35-403.

- McGinnis J.C, Friman P.C, Carlyon W.D. The effect of token rewards on “intrinsic” motivation for doing math. Journal of Applied Behavior Analysis. 1999;32:375–379.

- Mischel W, Ebbesen E.G, Zeiss A.R. Cognitive and attentional mechanisms in delay of gratification. Journal of Personality and Social Psychology. 1972;21:204–218. doi: 10.1037/h0032198.

- Singh D. Preference for bar-pressing to obtain reward over free-loading in rats and children. Journal of Comparative and Physiological Psychology. 1970;73:320–327.

- Singh D, Query W.T. Preference for work over “freeloading” in children. Psychonomic Science. 1971;24:77–79.