Abstract

Purpose

This letter gives details of two releases of audio recordings from speakers who stutter that can be accessed on the web. Most of the recordings are from school-age children. The recordings are available on the UCLASS website, and information is provided about how to access the site. A description of the recordings and background information about the speakers who contributed recordings to UCLASS Releases One and Two are given. Release One contains monologs only. Release Two has monologs, readings and conversations. Three optional software formats that can be used with the archive are described (though processing the archive is not restricted to these formats). Some perceptual assessment of the quality of each recording is given. An assessment of the strengths and limitations of the recording archive is presented. Finally, some past applications and future research possibilities using the recordings are discussed.

Keywords: Developmental stuttering, UCL Archive of Stuttered Speech (UCLASS) http://www.speech.psychol.ucl.ac.uk/, PRAAT http://www.praat.org, SFS http://www.phon.ucl.ac.uk/resource/sfs/, CHILDES http://childes.psy.cmu.edu, the Wellcome Trust http://www.wellcome.ac.uk

Audio recordings of stuttered speech can be used for research and clinical purposes, but such recordings have not always been easy to obtain in sufficient numbers to fulfill these needs. Speech recordings have been collected at University College London (UCL) for a number of years. The recordings are from children who were referred to clinics in London for assessment of stuttering. The intention was to establish a sample of children aged 8-10 years who were followed up to at least age 12. Children were eligible for inclusion if the diagnosis of stuttering was confirmed by a clinician who specialized in that disorder. The aim was to use this sample to see whether the children had persisted or recovered in stuttering at age 12 plus, and then to examine whether a range of measures obtained at 8-10 could have distinguished the persistent from the recovered children (Davis, Shisca & Howell, 2007; Howell, 2007; Howell, Davis & Williams, 2006; Howell, Davis & Williams, in press). All children who attended the clinics and met these criteria were recorded but not all of them were included in the longitudinal study, for a variety of reasons. For example, some of the children who lived a long way from the capital only visited the London clinic once for a specialist consultation. Another major reason was that some of the children were outside the 8-10 year age range. Also, some of the recordings were short.

The surplus recordings may be useful for training clinicians about the characteristics of stuttering, and for various research applications. Some examples of research applications are: 1) To establish the acoustic properties of stuttered speech; 2) For use as criteria against which to assess whether stuttering occurs in the speech of people with various genetic disorders (van Borsel & Tetnowski, 2007). Some of the unused recordings (where the participants have given written approval) were released previously as UCLASS Release One and more are released here as UCLASS Release Two (UCLASS stands for UCL Archive of Stuttered Speech). The web address for the UCLASS site is http://www.uclass.psychol.ucl.ac.uk/index_new.htm where both Releases can be obtained.

The Release One recordings, all monologs, were from speakers with a wide range of ages (5 years 4 months to 47 years). For the convenience of users, they were prepared in CHILDES, PRAAT and SFS formats, all of which are freeware available on the Internet that can process speech-language recordings. The software formats are widely used for language (CHILDES) and speech (PRAAT and SFS) analysis. Preparing the recordings in these popular formats before they are put on the site avoids unnecessary duplication of work. The details provided here should allow users to use the recordings with other packages. Access to the recordings, the suitable free software packages that were identified, some general software utilities written to handle recordings from people who stutter coded in SFS format and some suggested investigations which could be made using the UCLASS Release One recordings were described in Howell and Huckvale (2004). The recordings and software were supplied as part of the international move towards increased open access to scientific resources in general. In making the recordings available, it was also hoped that they would permit investigations that had not occurred to us and, in turn, that they would provide more information about stuttering. Visits to the UCLASS website have been monitored and showed that the data have continued to be downloaded at the same high rate as when they were first released.

This convinced us that people were finding these recordings useful and that it was worthwhile releasing recordings that have become available since 2004 (the date of UCLASS Release One and the associated description given in Howell & Huckvale, 2004). The data from Release One and Release Two can be grouped together for teaching and research purposes. However, Release Two was set up as a separate database so that ongoing work by users of Release One was not disrupted. Release Two contains conversational and read sample types as well as monologs (all from children). No restrictions are placed on use of the recordings and software other than acknowledgement of the source of the recordings and of the funding agency that has supported our work (the Wellcome Trust). All preparatory work that has been conducted on these recordings to date (preparation in different formats, transcription conventions, any transcriptions performed etc.) has been made available. The analyses we have conducted on these recordings are often limited (most of our time is spent on longitudinal recordings that are available at both target ages, 8-10 and 12 plus, and these are not included in UCLASS). Results are given even when they are limited to a few recordings to illustrate procedures. It is up to users to decide what analyses they wish to conduct, which software they wish to use, whether they want to follow our conventions, and how to arrange ways of sharing time-consuming activities like transcription and software development. We do not intend to be involved in all activities associated with these recordings, in the hope that this opens up new perspectives on stuttering (although obviously we are interested in any findings).

Summary information is given in Table 1. Column one indicates release and sample type (monolog, reading, conversation) and column two gives the respective total number of recordings. Age range, speakers' mean age and the standard deviation (sd) are presented in columns three to five. Median age is given in column six. The number of recordings broken down by gender is given in columns seven and eight.

Table 1.

The UCLASS Release number and sample type are indicated in column one. The number of recordings is given in column two. Age range, mean age, its sd and median age for all recordings are presented in columns three to six (all ages are given in NNyNNm format). Number of files from males is given in column seven and number from females is given in column eight.

| Category | N | Age range | Mean age | sd (age) | Median age | Male | Female |
|---|---|---|---|---|---|---|---|
| Release 1 (monolog) | 138 | 5y4m – 47y0m | 13y2.86m | 6y1m | 12y1m | 120 | 18 |
| Release 2 (monolog) | 82 | 7y10m – 20y1m | 12y2.9m | 2y3.89m | 11y7m | 76 | 6 |
| Release 2 (reading) | 108 | 7y10m – 20y7m | 13y0.53m | 2y8.7m | 11y11.5m | 93 | 15 |
| Release 2 (conversation) | 128 | 5y4m – 20y7m | 12y2.71m | 2y4.52m | 12y1m | 110 | 18 |

In the following, information concerning how to access the UCLASS website is given, followed by a description of recordings and sample types available in UCLASS Release One and UCLASS Release Two. The optional software formats are then described, followed by the strengths and limitations of the recording archive and software packages that have been made available. Finally, some past applications and possible future research directions are discussed.

Accessing the UCLASS site

As indicated, the web address for the UCLASS site is http://www.uclass.psychol.ucl.ac.uk/index_new.htm. On entering the site, there is an acknowledgement to the Wellcome Trust. Some general details are given about Release One and Release Two, and about the fluency-enhancing effects of frequency shifted feedback (FSF) on speakers who stutter (Howell, El-Yaniv & Powell, 1987). Howell, Davis, Bartrip and Wormald (2004) give details about some analyses of these recordings. After this, there are links to Releases One and Two, and to a separate site about the FSF recordings (UCLASS-FSF).

Users who are familiar with UCLASS Release One and who have the freeware package they want to use can go directly to the Release Two list of recordings for download. For new users, details of the recordings available for download for each release are given in the next two sections of this letter.

In the middle of the display as you enter the Release One website, links are given which provide access to software packages for speech-language processing (CHILDES, PRAAT and SFS). All these are available for various platforms (Windows, Linux, Solaris Unix, SunOS Unix). CHILDES and PRAAT are available in Mac versions, but SFS is not. Although the recordings have been prepared for these three widely used freeware packages for users' convenience, access is not restricted to these packages. The recordings can also be accessed by general utility software. For example, it is possible to use RealPlayer for the .wav recordings, to use MS Access for the spreadsheet data, and to view, cut and paste the text of transcriptions from an Internet Explorer window. The freeware packages can be used on any PC manufactured in the last 10 years, although replay facilities are required for users who wish to listen to the recordings. The UCLASS recordings do not require any unusual computer hardware either. There are no restrictions on access to software or recordings provided the sites are up.

For both UCLASS Releases, all recordings had been given ethical approval at the time of their collection, and written permission was given for their release. The UCLASS archives are undergoing continuous accumulation. Release Three will appear in 2009.

UCLASS Release One

Overview

UCLASS Release One can be accessed through the main site, as just discussed, or directly using the URL http://www.uclass.psychol.ucl.ac.uk/uclass1.htm. On entering the site, there is an announcement which states that all the recordings are spontaneous monolog sample types and which gives the release date. The main sections that follow provide access to 1) tabulated information about the individual speakers whose recordings are available, 2) the audio recordings that are available for download, 3) the stand-alone transcriptions (only available for some of the recordings, which also applies to recordings described in the remaining sections), 4) audio recordings with transcriptions aligned against the audio record (available only in SFS) and 5) transcriptions of recordings with time markers that point to the location of the segments in the audio recording (available for all three freeware formats). Further details about each of these follow.

Tabulated information about speakers and recordings (also available in an ACCESS table)

Information about the speakers whose recordings are included is given in an ACCESS table (the original ACCESS file can be downloaded to provide a searchable database which can be used to select speakers e.g. by gender or age). The ACCESS table starts with the recording's filename in column one. This begins with a code for gender (M/F), then has underscore and a four figure code to uniquely identify the speaker, underscore age NNyNm (where “y” stands for years and “m” stands for months), followed by underscore and a number indicating which recording in that month this represents (this is usually “1”). The second column repeats gender (M for male and F for female) to facilitate search for this attribute. The next three columns provide information on handedness (left, right, not known), whether there is a reported past history of stuttering in the family (no, yes or not known) and age of stuttering onset (in months). Column six repeats age at the time of recording (in months), to facilitate search for this attribute again. The seventh column indicates where the recording was made (clinic, UCL or home). Recording conditions are given in column eight as either quiet room (QR) or sound-treated room (STR). The recordings in the STR are usually better quality than those in a QR.
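The filename convention just described can also be unpacked programmatically, which may help users who want to select recordings without opening the ACCESS file. The following Python sketch is illustrative only: the regular expression is inferred from the description above and from filenames that appear in this letter, and the names `parse_filename` and `Recording` are hypothetical (they are not part of any UCLASS software).

```python
import re
from typing import NamedTuple

# Pattern inferred from the filename description: gender code, four-figure
# speaker code, age as <years>y<months>m, and the recording number in that month.
FILENAME_RE = re.compile(
    r"^(?P<gender>[MF])_(?P<speaker>\d{4})_"
    r"(?P<years>\d{1,2})y(?P<months>\d{1,2})m_(?P<index>\d+)$"
)


class Recording(NamedTuple):
    gender: str       # "M" or "F"
    speaker: str      # four-figure speaker code, kept as text
    age_months: int   # age at recording, converted to months
    index: int        # which recording in that month


def parse_filename(name: str) -> Recording:
    """Split a UCLASS-style filename (without extension) into its fields."""
    match = FILENAME_RE.match(name)
    if match is None:
        raise ValueError(f"not a recognised UCLASS filename: {name!r}")
    age_months = int(match["years"]) * 12 + int(match["months"])
    return Recording(match["gender"], match["speaker"], age_months, int(match["index"]))


# Filenames taken from elsewhere in this letter.
names = ["M_1207_13y5m_1", "F_0142_11y5m_1", "M_0541_11y3m_2"]
recordings = [parse_filename(n) for n in names]

# Example selection: male speakers younger than 12 years (144 months).
young_males = [r for r in recordings if r.gender == "M" and r.age_months < 144]
print(young_males)
```

Recordings that share the same `speaker` field come from the same person, so grouping on that field is one way to find speakers with multiple recordings.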

The ninth column gives the type of therapy received (family based treatment, F, or holistic treatment, H). For both types of treatment, parents were taught to identify the behaviors they were using that were helpful or detrimental to their child's fluency. This information was used to change the child's communication environment. During treatment, the parents and children were given instructions, training and exercises to deal with the problem, including using slow rates of speech in family interactions, use of different communication styles (looking at the child during conversation etc.), spending more time talking directly with the child (not in situations where there was television or other distractions), and how to cope with bullying, teasing etc. In all cases, treatment was reported to be restricted to that given at the clinic.

The tenth column gives time (in months) between therapy and when the recording was made. The eleventh column shows whether the speaker had any history of hearing problems. The twelfth and thirteenth columns indicate whether the speaker had a history of language problems or special educational needs (SEN) respectively. Children with no SEN or language problems other than the stuttering were at their age-appropriate developmental level. The fourteenth, fifteenth and sixteenth columns show whether any manual transcripts are available (orthographic, phonetic and, for the recordings that have phonetic transcriptions, those which are time-aligned, respectively). The seventeenth, eighteenth, nineteenth and twentieth columns provide perceptual scores for each recording concerning background acoustic noise, environmental noise, speaker clarity and interlocutor intrusiveness (these are described after optional software formats below). No word counts are available for the recordings in this Release. Eighty-one different speakers contributed 138 monolog recordings to Release One. None of the recordings were contributed by speakers who spoke a language other than English primarily in the home. There are multiple recordings available from some speakers which can be identified from the filename in the ACCESS table as those having the same four figure code. Table 2 summarizes details of speakers who contributed multiple recordings for Releases One and Two (broken down by sample type in the latter case).

Table 2.

Details of materials available for Releases One and Two. Total recordings, total numbers of speakers and speakers classified by the numbers of recordings they contributed are indicated for Release One monologs and Release Two monologs, readings and conversations.

| Release and material type | Total recordings | Total speakers | # contributed: 1 | # contributed: 2 | # contributed: 3 | # contributed: 4 | # contributed: 5 | # contributed: others |
|---|---|---|---|---|---|---|---|---|
| Release One Monolog | 138 | 81 | 54 | 17 | 3 | 3 | 1 | 1@7 1@8 1@9 |
| Release Two Monolog | 82 | 35 | 15 | 7 | 2 | 8 | 3 | |
| Release Two Reading | 108 | 44 | 18 | 8 | 6 | 8 | 1 | 2@6 1@7 |
| Release Two Conversation | 128 | 81 | 58 | 12 | 3 | 6 | 0 | 1@6 1@7 |
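The entries in Table 2 can be cross-checked with simple arithmetic. The Python sketch below assumes that an entry such as "1@7" in the last column means that one speaker contributed seven recordings; this reading is our assumption for illustration, although it is the one under which the speaker and recording totals in each row add up.

```python
# Each row of Table 2: (total recordings, total speakers,
#                       {recordings per speaker: number of such speakers}).
# "1@7" is read as "one speaker contributed seven recordings" (an assumption).
table2 = {
    "Release One Monolog": (138, 81, {1: 54, 2: 17, 3: 3, 4: 3, 5: 1, 7: 1, 8: 1, 9: 1}),
    "Release Two Monolog": (82, 35, {1: 15, 2: 7, 3: 2, 4: 8, 5: 3}),
    "Release Two Reading": (108, 44, {1: 18, 2: 8, 3: 6, 4: 8, 5: 1, 6: 2, 7: 1}),
    "Release Two Conversation": (128, 81, {1: 58, 2: 12, 3: 3, 4: 6, 5: 0, 6: 1, 7: 1}),
}


def totals(counts):
    """Return (recordings, speakers) implied by a per-speaker breakdown."""
    speakers = sum(counts.values())
    recordings = sum(per_speaker * n for per_speaker, n in counts.items())
    return recordings, speakers


for label, (n_recordings, n_speakers, counts) in table2.items():
    consistent = totals(counts) == (n_recordings, n_speakers)
    print(f"{label}: breakdown matches totals = {consistent}")
```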

Information on handedness, age of onset and family history has been provided for speakers where available. Table 3 gives numbers and percentages of speakers and recordings for which this information is available for the monolog sample types for Release One (similar data from Release Two given for monolog, reading and conversation sample types are included too).

Table 3.

Numbers and percentages (in brackets) of speakers and recordings for which handedness, age of onset and family history are available for UCLASS Releases One and Two.

| Release and sample type | Handedness: speakers | Handedness: recordings | Age of onset: speakers | Age of onset: recordings | Family history: speakers | Family history: recordings |
|---|---|---|---|---|---|---|
| Release One Monolog | 33 (40.7%) | 85 (61.6%) | 34 (42.0%) | 76 (55.1%) | 25 (30.9%) | 58 (42.0%) |
| Release Two Monolog | 34 (94.4%) | 80 (97.6%) | 28 (77.8%) | 70 (85.4%) | 18 (50.0%) | 40 (48.7%) |
| Release Two Reading | 42 (95.5%) | 104 (96.3%) | 37 (84.1%) | 99 (91.7%) | 23 (52.3%) | 55 (50.9%) |
| Release Two Conversation | 50 (61.7%) | 94 (73.4%) | 47 (58.0%) | 86 (67.2%) | 23 (52.3%) | 56 (43.7%) |

Audio recordings

The audio recordings can be accessed in WAV, SFS or MP3 format. Click on the desired format to access a particular type. Users then see a list of filenames that correspond to those in the ACCESS table (plus an extension indicating the format type). Recordings are selected by clicking on the filenames of those required. Once recordings have been accessed they can be handled as any other recording in that format (e.g. played, analyzed or stored).

Stand-alone transcriptions

Details follow of the available transcriptions of the speech that are separate from the audio recordings. Transcriptions are available in orthographic (31) and machine-readable phonetic (25) forms (users can select the required form). Orthographic transcriptions use regular orthography (dysfluencies are spelled out as they are heard). Phonetic transcriptions use an adapted form of the JSRU machine-readable phonetic transcription scheme (Howell & Huckvale, 2004). The adaptations include conventions for marking word types (content by “:” and function by “/”), dysfluencies and their types. A full description of the transcription conventions used is available at http://www.speech.psychol.ucl.ac.uk/ where it is found under Shared Resources/ How We Transcribe.

Transcriptions aligned against audio waveform

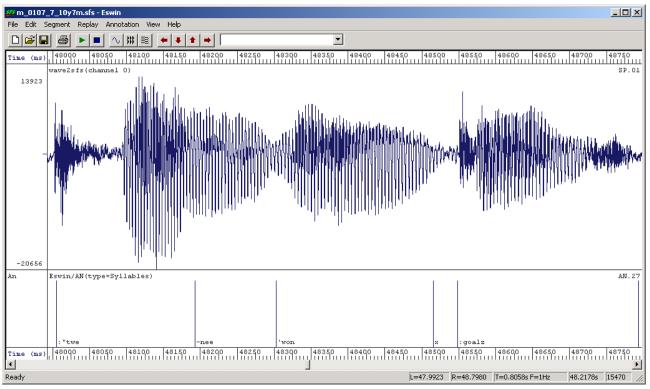

A limited number (13 described here and a further 12 below) of the phonetic transcriptions have been prepared with time markers that indicate where the corresponding speech events occur in the recording (aligned transcriptions). SFS has a useful way of visualizing aligned transcriptions. It allows multiple representations of speech to be displayed on the same time axis. An example of a recording in SFS format that shows an oscillogram (top line) and a time-aligned transcription of the syllables in the utterance (second line) is given in Figure 1 (the child says “tweneee one goals”). Aligned transcriptions are available in SFS format alone.

Figure 1.

Example of an SFS display showing the oscillogram (top row) and a transcription aligned against this (second row).

The listing of transcriptions aligned against audio waves also includes the 12 examples used to illustrate how SFS can be employed to process recordings of speakers who stutter, as described in Howell and Huckvale (2004). These example recordings are listed separately for the convenience of readers who want to follow the exercises for themselves. Most of these transcriptions are aligned syllabically (i.e. marked at time points that correspond to syllable onsets). Some are aligned at the word level. Finally, one of the recordings has the start and end of phonological words indicated (Selkirk, 1984). The particular way phonological word segmentations are made on spontaneous speech recordings is described in Howell (2004).

Transcriptions with time markers

The final section gives the transcriptions with time markers appropriate for the three software formats (CHILDES, PRAAT and SFS) for all 25 recordings with aligned transcriptions. The transcriptions are given an extension indicating level of alignment (.syll, .word and .pw for syllable, word and phonological word level respectively) and a second extension for two of the formats (.cha and .grid for CHILDES and PRAAT respectively). The way time information is represented in all three formats is described in the CHILDES part of the section on optional software formats.
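The extension scheme for time-marked transcriptions lends itself to automatic sorting of downloaded files. The Python sketch below is a minimal illustration under two assumptions: that a file with only a level extension (and no .cha or .grid) is the SFS version, and that the filename stem shown is a made-up placeholder rather than a real UCLASS recording. The function name `describe_transcription` is hypothetical.

```python
from typing import Tuple

# Extensions as described in the text.
LEVELS = {"syll": "syllable", "word": "word", "pw": "phonological word"}
FORMATS = {"cha": "CHILDES", "grid": "PRAAT"}


def describe_transcription(filename: str) -> Tuple[str, str]:
    """Return (software format, alignment level) for a time-marked transcription."""
    parts = filename.split(".")
    # Two extensions: alignment level followed by a format extension.
    if len(parts) >= 3 and parts[-1] in FORMATS and parts[-2] in LEVELS:
        return FORMATS[parts[-1]], LEVELS[parts[-2]]
    # One extension: level only, taken here to be the SFS version (assumption).
    if len(parts) >= 2 and parts[-1] in LEVELS:
        return "SFS", LEVELS[parts[-1]]
    raise ValueError(f"unrecognised transcription filename: {filename!r}")


# Placeholder stem, not a real recording name.
for name in ["M_0000_10y0m_1.syll.cha", "M_0000_10y0m_1.word.grid", "M_0000_10y0m_1.pw"]:
    print(name, "->", describe_transcription(name))
```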

UCLASS Release Two

Overview

The web address for accessing UCLASS Release Two directly is http://www.uclass.psychol.ucl.ac.uk/uclass2.htm. The website can also be accessed through the general UCLASS site as indicated earlier. Release Two starts with a similar announcement to Release One. In Release Two, the recordings are again available in CHILDES, PRAAT and SFS formats. Unlike Release One, some of the speakers in this release spoke a language other than English in the home. The sample types in this release include spontaneous monologs (the only form available in Release One), reading and conversation. To date, the conversations have not been processed in detail, so transcriptions are absent and word counts of each recording (available in this release for monologs and readings) have not been made. Some speakers who were included in Release One provided readings and conversations as well as the monologs (only the latter were included in Release One). The reading and conversation recordings of these speakers are included as part of Release Two. For each sample type, Table 4 presents the number and percentage of Release One speakers who are included in Release Two, the number and percentage of Release Two recordings contributed by these speakers, and the number and percentage of Release Two speakers who were also Release One speakers.

Table 4.

Details of speakers and recording overlap for the three speech sample types (reading, monolog, conversation) between UCLASS Releases One and Two.

| Sample type | The proportion of speakers in Release One who were also speakers for Release Two | The proportion of recordings in Release Two provided by speakers for Release One | The proportion of speakers in Release Two who were also speakers for Release One |
|---|---|---|---|
| Monologs | 4/81 (4.99%) | 6/82 (7.3%) | 4/35 (11.4%) |
| Reading | 14/81 (17.3%) | 40/108 (37.0%) | 14/44 (31.8%) |
| Conversation | 45/81 (55.5%) | 77/128 (60.2%) | 45/81 (55.5%) |

The top part of the Release Two site parallels that of Release One in terms of the five main sections (tabulated information, audio recordings, stand-alone transcriptions, transcriptions aligned against audio recordings, and transcriptions with time markers). However, with the extra sample types in Release Two, there are more access points, and some additional information has been provided in the information-tables. There is an additional (sixth) section where scripts referred to in the optional software formats section can be obtained.

Tabulated information about speakers and recordings (also available as ACCESS tables)

There are three ACCESS tables (one per sample type) and these are similar to those in Release One, with some supplementary information. For all three sample types, the recording filename appears in column one as for Release One, and gender is written in full in column two (female or male). Columns three to five provide information on handedness (left, right), whether there is a reported past history of stuttering in the family (no, yes or not known) and age of stuttering onset (in months) as was done for Release One. Age at the time of recording (in months), where the recording took place (Clinic, home, UCL) and recording conditions (sound treated room, STR or quiet room, QR) are given in the following three columns.

Different amounts of information are tabulated in the remaining columns for each sample type. For monologs, word counts (column nine) are reported next. These counts were made by playing the recording at a reduced speed and tallying and then counting the words. Users are warned that this is only an approximate word count value. The minimum number of words in the monolog sample type is 19 and the maximum is 322, with a mean number of words across all recordings of 181.64 (sd = 61.24). As indicated in the table, it was not possible to count the words for recording M_1207_13y5m_1 because it was unclear (this child spoke Yoruba primarily in the home). Columns 10 to 13 contain information also available for Release One. These are (in order) type of therapy (family or holistic), time between therapy and recording (in months), self reported history of hearing problems (no, yes), and language problems (no, yes). Column 14 is an additional one, which identifies the children whose primary home language (PHL) was not English. The primary language used in the family home is indicated (there are four recordings from one child who spoke Arabic in the home, and one recording each for a child where Somali, Tamil and Yoruba were the primary language spoken in the home). All the rest of the recordings were from children who spoke English as their primary home language. Column 15 supplies information (also available for Release One) concerning whether the child had SEN (indicated by no, yes). Children with no SEN or language problems other than the stuttering were again at their age-appropriate developmental level. Columns 16-18 provide information about whether orthographic, phonetic and time-aligned transcriptions are available (all coded as no, yes), as was done with Release One. 
Perceptual scores concerning background acoustic noise, environmental noise, speaker clarity and interlocutor intrusiveness are given in columns 19-22 (obtained in the same way as with UCLASS Release One). Thirty-five different speakers contributed 82 monolog sample types to Release Two. These 82 recordings are 25.8% of the total recordings in Release Two. Seven of the 82 (8.5%) recordings were contributed by the four speakers whose primary home language was not English.

The ACCESS table for the reading sample type has one extra column (column nine), indicating what text was used. Several specific texts are indicated. These are “Arthur the Rat”, from Abercrombie (1964) coded as ATR, a text developed at UCL entitled “One more week to Easter”, coded as OMW (the text is given in Appendix A), and the set passages used in Riley's (1994) Stuttering Severity Instrument version three coded as SSI-3 (the SSI-3 text number is given in brackets). There are also sundry other texts coded as Other. Statistics on the number of texts that were read are given in the section describing the audio recordings. The subsequent columns are the same as they appeared with the monologs. The word counts for the read sample type (column ten) are based on the printed texts, so these are approximate (as sometimes speakers miss out or repeat words). The minimum number of words is 111 and the maximum is 420. The mean number of words is 263.62 (sd = 110.33). Forty-four different speakers contributed 108 reading recordings to Release Two. These 108 recordings represent 33.9% of the total recordings in Release Two. Three of the 128 (2.3%) recordings were contributed by three speakers whose primary home language was not English (Tamil, Yoruba and Urdu).

The ACCESS table for the conversation sample type has 17 of the same columns as the monologs. Column nine (word counts) is omitted. Eighty-one different speakers contributed 128 conversation recordings to Release Two. These 128 recordings represent 40.3% of the total recordings in Release Two. Fifteen of the 128 (11.7%) recordings were contributed by fourteen speakers whose primary home language was not English. Child M_0103, who primarily spoke Arabic at home, provided conversations at 11y7m and at 12y6m.
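The share of Release Two accounted for by each sample type follows directly from the recording counts given above. As a worked illustration (a sketch, not part of the UCLASS materials), the following Python lines recompute the shares from the three counts; the results agree with the quoted percentages to within rounding of the first decimal place.

```python
# Recording counts for Release Two, taken from the text.
release_two = {"monolog": 82, "reading": 108, "conversation": 128}

total = sum(release_two.values())  # all Release Two recordings
shares = {kind: 100 * n / total for kind, n in release_two.items()}

print(f"total recordings: {total}")
for kind, share in shares.items():
    print(f"{kind}: {share:.2f}% of Release Two")
```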

Tables 1 and 2 contain details of what recordings are available in Release Two and for which speakers there are multiple recordings for each sample type. Summary statistics on the availability of handedness, age of onset and family history information for the different sample types in Release Two are included in Table 3.

Audio recordings

The audio recordings are accessed in the same way as with Release One. The texts that were read and recorded were the “Arthur the Rat” passage (25), “One more week to Easter” (14), various SSI-3 texts (42) and others (27). The 42 SSI-3 text-readings were appropriate for the child's reading age (Riley, 1994). As indicated earlier, these are coded in column nine of the reading ACCESS table. The numbers of readings of SSI-3 texts by different speakers are given in Table 5.

Table 5.

Number of speakers (column two) for the SSI-3 passages indicated in column one.

| SSI-3 passage number | Number of speakers on corresponding passage |
|---|---|
| 1 | 5 |
| 2 | 4 |
| 3 | 9 |
| 4 | 3 |
| 5 | 3 |
| 6 | 2 |
| 7 | 2 |
| 1 and 2 | 6 |
| 3 and 4 | 6 |
| 7 and 8 | 2 |

There are five children (total of five recordings) for whom there are reading sample types but no conversational sample types and these are listed in the left-hand column of Table 6. There are three children (total of eight recordings) for whom we have monolog sample types but do not have conversational sample types (listed in the right-hand column of Table 6).

Table 6.

Details of speakers for whom there is a reading but no conversation (left-hand column) and for whom there is a monolog but no conversation (right-hand column).

| Reading but no conversation (N=5) | Monolog but no conversation (N=8) |
|---|---|
| F_0142_12y4m_1 | F_0142_11y3m_ |
| M_0098_12y8m_1 | F_0142_11y5m_1 |
| M_0541_12y3m_1 | F_0142_11y7m_1 |
| M_0575_15y3m_1 | F_0142_12y0m_1 |
| M_1146_10y7m_1 | M_0541_11y3m_2 |
| | M_0541_11y8m_1 |
| | M_0541_12y3m_1 |
| | M_0545_15y3m_1 |

Stand-alone transcriptions

Four monolog recordings (4.9% of the total) from three speakers have been orthographically transcribed (one speaker, M_1017, provided two recordings). All speakers were male and spoke English as their primary language at home. Two monolog recordings from different speakers (2.4% of the total recordings) have been phonetically transcribed. Again both are from male speakers who spoke English as their primary language in the home. Two read recordings (1.9%) have been orthographically transcribed (one of “Arthur the Rat” by speaker M_0065 and one of “One more week to Easter” by speaker M_0874). These were again different speakers, both male, who spoke English as their primary language in the home.

Transcriptions aligned against audio wave

Transcriptions aligned against the audio track in SFS format are presented for the same recordings as appeared as stand-alone transcriptions (the four orthographic monologs, two phonetic monologs and two orthographic readings). Orthographic transcriptions are aligned at the word level and phonetic transcriptions at the syllable level.

Transcriptions with time markers

Orthographic transcriptions with time markers that point to the location of the segments in the audio recordings are given for CHILDES, PRAAT and SFS formats, as was done for Release One (see also the description below). These transcriptions are at either the syllable or the word level, depending on the recording, for all three freeware formats (the level of the transcription can be identified by the extension, .syll or .word respectively). A second, non-aligned CHILDES format, which does not include time markers, is also included as it may be useful for users of this software (it is described in the section on optional software formats).

Transcription conversion scripts

The orthographic transcriptions with time markers that point to the location of the segments in the audio recordings look different in CHILDES format than in PRAAT and SFS formats (the latter two look similar). The bottom part of the Release Two site shows the transcription of the same extract in each of the three software formats. The CHILDES format used here is available for both UCLASS Releases. The particular CHILDES format used is described below in the CHILDES part of the section entitled “Optional software formats,” as some understanding of CHILDES conventions is required in order to interpret the example transcripts. A second CHILDES format (flat transcription, also described below) has been included too. The last line of the site provides access to three PERL scripts, which allow SFS transcriptions to be converted to the two CHILDES formats and PRAAT TextGrid format.
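To give a feel for what a conversion to PRAAT TextGrid format involves, the Python sketch below writes a one-tier TextGrid from a list of labelled intervals. It is not a port of the PERL scripts on the site and does not read SFS files: the input form (start time, end time, label) is a hypothetical stand-in for a time-marked transcription, the times in the example are invented, and the function name `to_textgrid` is our own.

```python
from typing import List, Tuple

# (start in seconds, end in seconds, label); hypothetical input form.
Interval = Tuple[float, float, str]


def to_textgrid(intervals: List[Interval], tier_name: str = "word") -> str:
    """Serialise contiguous labelled intervals as a one-tier Praat TextGrid (long text format)."""
    xmin, xmax = intervals[0][0], intervals[-1][1]
    lines = [
        'File type = "ooTextFile"',
        'Object class = "TextGrid"',
        "",
        f"xmin = {xmin}",
        f"xmax = {xmax}",
        "tiers? <exists>",
        "size = 1",
        "item []:",
        "    item [1]:",
        '        class = "IntervalTier"',
        f'        name = "{tier_name}"',
        f"        xmin = {xmin}",
        f"        xmax = {xmax}",
        f"        intervals: size = {len(intervals)}",
    ]
    for i, (start, end, label) in enumerate(intervals, start=1):
        text = label.replace('"', '""')  # quotes inside labels are doubled
        lines += [
            f"        intervals [{i}]:",
            f"            xmin = {start}",
            f"            xmax = {end}",
            f'            text = "{text}"',
        ]
    return "\n".join(lines) + "\n"


# Invented times, for illustration only.
example = [(0.0, 0.42, "twenty"), (0.42, 0.61, "one"), (0.61, 1.1, "goals")]
print(to_textgrid(example))
```

The sketch assumes the intervals are contiguous and in time order, so that the tier runs from the first start time to the last end time without gaps.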

Optional software formats

CHILDES is the most appropriate format for those who wish to do language analyses. PRAAT and SFS both have good sound replay and acoustic analysis facilities. Choice between PRAAT and SFS is to some extent a matter of personal preference. Our own reason for choosing SFS is because it provides convenient transcription facilities for recordings of the length of those that appear in UCLASS Releases One and Two.

CHILDES

CHILDES was developed specifically for research into child language. It has three components which refer to different operations performed on language data (which include transcriptions and recordings): The data conventions are provided by CHAT (Codes for the Human Analysis of Transcripts), the software package is CLAN (Computerized Language ANalysis) and the repository for the data is CHILDES (CHIld Language Data Exchange System). CLAN allows users to perform a large number of automatic analyses after the requisite CHAT codes have been entered by the user. The analyses include morpho-syntactic analysis, word frequency counts, word searches, co-occurrence analyses and mean length of utterance counts (these are not provided by PRAAT or SFS).

CLAN works in different modes, which are selected from a pull-down menu. Two of the important ones that could be employed with the UCLASS recordings are CHAT and Sonic mode. New transcriptions are typed and edited in CHAT mode. CHAT transcripts can be linked to speech recordings in Sonic mode. Sonic mode has the standard waveform editing facilities (replay, sound demarcation, transcription, scrolling etc.).

CHAT works on the basis of tiers that contain different information. Annotations in CHAT are spread over one or more tiers. The first and main tier starts with an asterisk (*), which is followed by a three-letter code. In our CHAT transcripts, we have used SUB as the label for the main tier (an abbreviation for “Subject,” which in most cases refers to a child). More detailed annotations are marked on dependent tiers, which start with % followed by a three-letter abbreviation indicating what is marked. The following example has a main tier (*SUB) and a dependent tier (%mor) in which the morpho-lexical features of the words in the main tier are marked.

*SUB: well go get it!

%mor: ADV|well V|go&PRES V|get&PRES PRO|it!
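The tier layout in this example can be read mechanically. The following Python sketch is only an illustration of that layout and is not part of CLAN: the function name `parse_chat_tiers` is hypothetical, and the sketch assumes simple one-line tiers of the kind shown above (no continuation lines or header lines).

```python
def parse_chat_tiers(lines):
    """Group CHAT-style lines into main tiers with their dependent tiers."""
    utterances = []
    for raw in lines:
        line = raw.strip()
        if not line:
            continue  # blank lines separate tiers in the printed example
        marker, _, text = line.partition(":")
        text = text.strip()
        if marker.startswith("*"):
            # Main tier: asterisk followed by a three-letter speaker code.
            utterances.append({"speaker": marker[1:], "main": text, "dependent": {}})
        elif marker.startswith("%") and utterances:
            # Dependent tier: attach it to the most recent main tier.
            utterances[-1]["dependent"][marker[1:]] = text
    return utterances


example = [
    "*SUB: well go get it!",
    "",
    "%mor: ADV|well V|go&PRES V|get&PRES PRO|it!",
]
parsed = parse_chat_tiers(example)
print(parsed[0]["speaker"], parsed[0]["dependent"]["mor"].split())
```

Keeping each dependent tier attached to its main tier mirrors the way CHAT annotations are described here, with one main line carrying any number of % tiers.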

Both UCLASS Release One and UCLASS Release Two have CHAT transcripts included in Sonic mode. CHAT has a specific phonetic (%pho) dependent tier. The transcription text appears in this tier (either one syllable or one word appears in each %pho line). In this particular convention, there is no transcription text in the main tier. The main tier has pointers (given in milliseconds) to the audio recording which shows this version is in Sonic mode.

A second CHAT convention has been included in UCLASS Release Two. This is plain text that corresponds to the entire transcription, which is read into the main *SUB tier (called a flat transcription). No pointers to the recording occur because the main tier corresponds to the entire recording. This new CHAT convention is not, therefore, in Sonic mode. This convention may be useful for users who only want to do language analyses.

Three PERL scripts are supplied that convert SFS annotations to 1) sonic CHAT (ann2clan.perl), 2) flat CHAT (ann2clan-flat.perl) and 3) PRAAT TextGrid (annot2grid.perl) transcriptions. They can be obtained via the links at the bottom of the Release Two site. Flat transcription is not supplied for UCLASS Release One recordings because we do not want to alter that site, as doing so may disrupt people currently using it. The scripts allow anyone wishing to have these alternative transcriptions to generate them.
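To give a sense of what a conversion to TextGrid involves, here is a minimal Python sketch that writes time-marked labels as a one-tier PRAAT TextGrid in the long text format. It is not a port of annot2grid.perl, whose behavior may differ, and it does not read SFS files: the function name `to_textgrid`, the tier name and the example times are all assumptions made for illustration.

```python
def to_textgrid(intervals, tier_name="words"):
    """Render (start_s, end_s, label) tuples as a one-tier TextGrid string.

    Assumes the tuples are sorted and non-overlapping. Gaps are filled with
    empty intervals so that the interval tier is contiguous.
    """
    filled, cursor = [], 0.0
    for start, end, label in intervals:
        if start > cursor:
            filled.append((cursor, start, ""))  # unlabeled stretch
        filled.append((start, end, label))
        cursor = end
    out = [
        'File type = "ooTextFile"',
        'Object class = "TextGrid"',
        "",
        "xmin = 0",
        f"xmax = {cursor}",
        "tiers? <exists>",
        "size = 1",
        "item []:",
        "    item [1]:",
        '        class = "IntervalTier"',
        f'        name = "{tier_name}"',
        "        xmin = 0",
        f"        xmax = {cursor}",
        f"        intervals: size = {len(filled)}",
    ]
    for i, (start, end, label) in enumerate(filled, start=1):
        text = label.replace('"', '""')  # double quotes are escaped by doubling
        out += [
            f"        intervals [{i}]:",
            f"            xmin = {start}",
            f"            xmax = {end}",
            f'            text = "{text}"',
        ]
    return "\n".join(out) + "\n"


# Invented word timings (in seconds), for illustration only.
grid = to_textgrid([(0.25, 0.6, "well"), (0.6, 0.9, "go")])
print(grid)
```

Because the output is plain text, the same list of intervals could equally be written out in one of the CHAT conventions described above.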

The main strength of CHILDES derives from the fact that it fulfills many of the specific requirements of a large and geographically-distributed research community, who work in the fields of child language and language acquisition. CLAN stores all annotation tiers in the same matrix file, and this lends itself to applications where a researcher annotates a small amount of language in detail and where the resulting annotation needs to be analyzed across different levels. CHILDES has been successful as a language data exchange system because it has a user-friendly interface and because it includes a large range of analysis options. A problem is that the annotation schemes supplied in CLAN are basic and relating them to more complex annotation schemes is not straightforward. Its integral speech analysis facilities are rudimentary. Further details on CHILDES can be found at the website http://childes.psy.cmu.edu.

PRAAT

PRAAT is an audio analysis tool. It is simple to use and reads all standard audio file-formats such as WAV and MP3. The software incorporates sub-tools for neural net analysis, speech synthesis and some statistical functions. A scripting facility is available which allows users to write customized functions.

PRAAT was developed for editing and analyzing recordings, and for sound synthesis. The software reads a recording, and start and end segments can be marked when transcription is required. After transcription is complete, the transcribed text is stored with the time markers as a text file.

One of PRAAT's strengths is that it is excellent for manipulating recordings. PRAAT outputs transcription as text files, and this makes it compatible with many other language tools. It offers a simple and easy to use segmentation interface. The software is well supported and source code is provided. The main problems arise because it was not initially developed for transcription. As a result, long segments of speech are difficult to see in their entirety, and scrolling to inspect different segments could be improved. The PRAAT site is at http://www.praat.org and the manual can be accessed within the software.

SFS

Speech Filing System (SFS) provides an integrated method for dealing with different sources of information about speech recordings. The raw audio record is at the core of the system. A number of other analyses can be conducted (e.g. transcriptions by listeners, computation of spectrograms) on the same recording. The filing part of SFS provides the system that integrates analyses from these several sources for visual or statistical inspection, as was illustrated in the discussion of Figure 1. SFS has its own format but does allow other formats to be written. The integration of these sources of information is the attraction, although there are utilities that allow the audio recordings to be uploaded or dumped in .WAV or other standard formats and, similarly, .TXT files can be dumped or uploaded. The software provided by SFS includes many of the same facilities as PRAAT. SFS offers various options for people wishing to write their own scripts (in MATLAB, C, and speech measurement language, SML). SML is a high level scripting language for manipulating information stored in SFS format and is described in section 1.5 of the SFS manual at http://www.phon.ucl.ac.uk/resource/sfs/. The SFS website can be found at the same address.

In summary, CHILDES is best for language analysis of the UCLASS recordings; both PRAAT and SFS can be used for audio analysis; and SFS is our preference for fluency analyses that link audio and transcriptional information together.

Perceptual evaluation of UCLASS Release One and Release Two recordings

The recordings differ in quality, which is a reality when recording large numbers of participants in clinics. A gross indication of the recording environments is given in columns seven and eight of the ACCESS tables for both Releases (whether the recordings were made in the clinic, at UCL or in the home, and whether the room was quiet or sound-treated, respectively).

Here some further perceptual evaluation is offered that readers may find useful when deciding which recordings to select for teaching and research purposes. Quality is especially important if the recordings are going to be used for acoustic analyses. For each recording in UCLASS Release One and UCLASS Release Two, two five-second extracts (one at the beginning and one at the end) were selected at random and assessed by the senior author. Two extracts per recording were judged to reduce the possibility that an unrepresentative extract had been selected. A MATLAB program played each extract exactly as it appears on the UCLASS site (so, for instance, there was no gain control). All extracts were judged on four characteristics (all judgments were made on a four-point scale as described later). The characteristics judged were background acoustic noise, environmental noise, speaker clarity and interlocutor intrusiveness.

Acoustic noise was mainly due to tape hiss and microphone problems. Environmental noise included sounds outside of the room (e.g. noise associated with people moving in corridors, birds singing outside windows) and noises in the room (e.g. when something was dropped, tables were kicked, the child moved and brushed clothing on the microphone). Both acoustic and environmental noise can be short lived and, consequently, can differ within a recording.

Speaker clarity concerned the inherent properties of the speaker's voice orthogonal to the stutter. The judgment of clarity included whether the child had a breathy voice quality, low volume etc. A person might stutter, but nevertheless have good voice quality and volume. An attempt was also made to judge clarity irrespective of accent. Regional UK accents are possibly more diverse than those in the US (though sub-cultural accents are not necessarily more diverse in the US than in the UK). However, provided these aspects of accent did not affect clarity, they did not influence the judgment.

Some interlocutors interrupted the speaker who stuttered. Dealing with interruptions is a part of normal conversational interaction, and as such is an appropriate occurrence. The interlocutors did not necessarily know the speakers who stuttered and sometimes they butted into the conversation in a way judged not normal. A column for interlocutor intrusiveness is included for all sample types. This is most relevant for conversation, less so for spontaneous speech and marginally relevant for reading sample types (for the latter, only when the experimenter instructed, or corrected procedural mistakes that the child made).

Each of the four characteristics was judged on a four-point scale for every extract (one = good, two = satisfactory, three = poor and four = bad). For all four characteristics, the average of the judgments for the two extracts from each recording is given in columns 17-20 for Release One and 19-22 for Release Two. The columns for each Release are in the order acoustic noise, environmental noise, speaker clarity and interlocutor intrusiveness. Judgments as high as four on any of the scales are rare, and such high values may be of interest in their own right. For example, there are good acoustic recordings of speakers who have poor voice quality. These recordings may be relevant to some research questions. We have provided these judgments; it is up to users to choose the samples that best fit their needs and selection criteria. While there is a range of quality, there are enough samples of sufficient quality to give adequate choice for many applications.
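The averaging just described can be written down in a few lines. The Python sketch below is an illustration only: the ratings are invented, and the names `average_judgments` and `meets_threshold`, as well as the cut-off of two, are assumptions rather than anything used in preparing the ACCESS tables.

```python
from statistics import mean

# Order follows the columns of the ACCESS tables.
CHARACTERISTICS = (
    "acoustic noise",
    "environmental noise",
    "speaker clarity",
    "interlocutor intrusiveness",
)


def average_judgments(first_extract, second_extract):
    """Average the 1-4 ratings of the two extracts for each characteristic."""
    return {
        name: mean((first_extract[name], second_extract[name]))
        for name in CHARACTERISTICS
    }


def meets_threshold(averages, worst_allowed=2):
    """True if every averaged rating is at or better than worst_allowed."""
    return all(value <= worst_allowed for value in averages.values())


# Invented ratings for one recording (1 = good ... 4 = bad).
start = {"acoustic noise": 1, "environmental noise": 2,
         "speaker clarity": 1, "interlocutor intrusiveness": 1}
end = {"acoustic noise": 2, "environmental noise": 2,
       "speaker clarity": 1, "interlocutor intrusiveness": 3}

averages = average_judgments(start, end)
print(averages, meets_threshold(averages))
```

A user interested mainly in acoustic analyses might apply such a threshold only to the two noise columns, whereas a study of voice quality might deliberately select high speaker-clarity values.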

Strengths and Limitations of UCLASS

Two major strengths of having these sample types on a website are that access is immediate and guaranteed. Also there are a large number of recordings. Having information available to many people simultaneously allows the possibility of sharing round time-consuming work, like transcription. The statistics on downloads of recordings provide evidence that the UCLASS sites are being extensively used.

Similar conventions to those described here are being adopted in Iran (Howell & Nilipour, in press). This involves providing access to some of the same free software formats as described above, and establishment of a shared archive of speech recordings. This archive will have an associated website in Farsi (available in 2008, initially only in Iran). The intention is to facilitate collaboration and recording-exchange on projects about stuttering by workers from different parts of Iran. There are plans to do similar things in other countries. A number of different languages are used in Iran and this will allow cross-linguistic comparison using just their own recording archive and the English UCLASS archives. The advantages of such archives are that sharing of resources and expertise, and access to minority groups within archives (cases where a second language is spoken, where stuttering is comorbid with other disorders and so on), are made far more efficient, which serves to accelerate research.

The two main limitations of the archives concern what has been provided and issues associated with use of an audio format. Though there are large numbers of recordings, there are some research areas which call for more to be available. A particular case is machine recognition of stuttering (see the next section). A setup through which users submit their own recordings and analyses, like that available in CHILDES, would help solve this problem. A lot of case history information has been provided in UCLASS: age of stuttering onset, handedness, history of sensory and educational problems etc. But more is always desirable (e.g. more information about the characteristics of stuttering closer to onset of the disorder). Another limitation may be that the recordings contain only about 180 words each on average.

The sound quality is somewhat variable. We have tried to obviate this to some extent by indicating where the recording took place, the recording conditions and perceptual judgments about the recordings. Making recordings in homes, clinics, schools etc. leads to variable sound quality, so the automatic recognition systems (ASR) for stuttering described in the next section will need to cope with a range of recording qualities. Including recordings of variable quality in the training of ASR schemes may therefore be advantageous for developing a working system. In addition, extraction of signals from noise is receiving attention (e.g. wavelet analysis) because many people want to record speech when individuals are in scanners, which are noisy (Howell, Anderson & Lucero, in press). These techniques may also be applicable to the UCLASS recordings. Care is needed when analyzing audio recordings for stuttering. For instance, it may be difficult to distinguish silences from stuttering-related behaviors like blocks, although in our experience recordings mostly contain some auditory sign that a block is taking place. Future work should also consider employing video recordings.

Applications and Future Research Directions

There are pure and applied research applications for UCLASS. Pure research has been, or can be, conducted on behavioral topics, on establishing sub-types of stuttering, on use of UCLASS as a basis of comparison with stuttering-like speech-language behaviors in other disorders and many more. Application areas include clinical assessment and training, and machine recognition of stutterings. Examples of use of UCLASS from these areas follow.

Behavioral investigations

There are past and future research components at language, speech and acoustic levels. Marshall's (2005) study is an example of past behavioral research that used UCLASS Release One recordings. She investigated whether stuttering rates in English-speaking adults and children were influenced by phonological and morphological complexity at the ends of words. Marshall disentangled the two forms of complexity so that the impact of each on stuttering could be tested. The analysis of recordings from UCLASS Release One showed that phonological and morphological complexity at the end of a word did not influence stuttering rates in the spontaneous speech of English-speaking children and adults who stutter.

Another need is to see whether sample type (monolog, reading, conversation) affects stuttering rate. There are sufficient recordings in the two UCLASS Releases to enable comparison to be made between stuttering rates on spontaneous, read and conversational sample types, an area where recordings are sparse at present (Howell, 2008; van Borsel, Dor, & Rondal, 2007). Another urgent future need in the language area is conversational analysis (Acton, 2004). Little has been done on conversational patterns of speakers who stutter in general and none at all has been reported with either UCLASS Release.

In future acoustics work, it would be informative to replicate Zebrowski's (1994) work on the duration of the dysfluencies of stuttered children (mean age eight years) using the children around this age available in Release One and Release Two. Another important area for investigation is how stuttering patterns in the UCLASS recordings, which were obtained in late childhood, compare to those observed near onset of the disorder (Yairi & Ambrose, 2005; Zebrowski, 1991).

Sub-typing

Stuttering is a heterogeneous disorder and sub-typing has often been advocated as a way of tackling this heterogeneity. However, there is a paucity of past empirical work on sub-groups of stutterers that uses large numbers of recordings to sub-group them. One aspect that could be examined is cluttering, which has received considerable recent attention (Ward, 2006). Many speakers who clutter are referred to stuttering clinics (there are no clinics that just take clutterers). Consequently, there could be a sub-type with cluttering in the UCLASS Releases, and these speakers could be located for study using one of the definitions of cluttering that distinguish it from stuttering (Ward, 2006). Particular things that could be examined are whether the distribution of dysfluencies and speech rate differ between cluttering and stuttering. There are also probably sufficient recordings to sub-divide the groups in other ways in order to examine whether risk factors known to be associated with persistent stuttering, such as family history, gender, and early versus late onset, lead to different patterns of stuttering.

Comparing dysfluent behaviors

UCLASS Releases One and Two can be used as the basis for comparison between dysfluent behavior in developmental stuttering and in other clinical and genetic disorders. UCLASS has not been applied for this purpose to date, but this is a potential area where UCLASS will be used, given the rising interest in the overlap of symptoms with other clinical disorders (Howell, 2007) and the growing interest in whether developmental stuttering occurs in persons with known genetic disorders (van Borsel & Tetnowski, 2007).

Some clinical conditions where similarities with developmental stuttering have been noted are attention deficit hyperactivity disorder, selective mutism and social phobia (an anxiety disorder). The UCLASS Releases could be used as a basis for comparing stuttering with speech from these disorders, which conceivably should help the understanding of the relationship between psychological and fluency problems in general. Symptoms like those seen in stuttering are also seen in palilalia and tic disorders. Slurring, which may be like stuttered prolongation, occurs in substance abuse. Again, the degree of similarity between stuttering and these aspects of language needs to be established to locate common neural mechanisms. Some of the recordings of older speakers in Release One could be used for comparison with disorders that occur later in life and that have been reported to lead to stuttering emergence or possibly reemergence. Such disorders include Alzheimer's and Parkinson's disease.

A word of caution is appropriate. If UCLASS recordings from those who stutter are compared with recordings from other populations, then investigators should consider whether the following independent variables have been matched and/or controlled: 1) age, 2) gender, 3) primary language and accent, 4) speech sampling conditions and procedures, 5) disfluency type classifications and analyses, etc.

Clinical applications

An ongoing use of UCLASS for clinicians is the provision of recordings of stuttered speech to students for teaching, and for testing new assessment methods as they become available. In future work, students could be trained to measure speech rate and make stuttering frequency counts, to see how their results compare with those of their instructors (which could be standardized) and to appreciate the heterogeneous forms that stuttering can take. To address assessment procedures, Howell (2005) reported a study that used UCLASS Release One. He examined how the time interval assessment procedure (used in some clinics to assess stuttering) depended on the length of the interval being judged. A clinical study that would merit replication using the UCLASS recordings is the classic investigation of Kully and Boberg (1988), which showed that inter-clinic agreement about stuttering events was poor. The standard of clinicians' training and ways of assessing stuttered speech have improved in the 20 years since the Kully and Boberg study was performed, and it is necessary to establish whether this has improved inter-clinic agreement or not.

For clinical comparison, age information has been provided in the ACCESS tables, and persons without language or SEN problems can be selected and matched against target speakers from other test groups (either on chronological age or, in cases where cognitive delay has been assessed, on developmental or language scores). There are also longitudinal recordings from some of the speakers, which may be useful for comparing developmental trends across disorders.

Machine recognition of stutterings

Assessment tasks are laborious and very time consuming. Although they need to be done accurately, Kully and Boberg's (1988) study indicates that assessments vary dramatically between clinics. There has been interest for some years in developing computational methods to address these issues because they provide replicable results and can be assessed for accuracy (Howell, Sackin & Glenn, 1997a; 1997b). Howell and Huckvale (2004) gave details of how some SFS utilities could be used for automatic recognition of stuttered dysfluencies. Huckvale (2005) reviewed different methods for recognizing dysfluencies, and reported some preliminary results using UCLASS Release One. Hayes and Howell (2005) described a study, using connectionism, to establish which words were likely to be stuttered. Connectionism models mental or behavioral phenomena as the emergent processes of interconnected networks of simple units. They used the phonological characteristics of words as input units and dysfluent versus fluent events as output units.

Future work on automatic recognition of stuttering events will focus on read sample types (the SSI-3 texts available in UCLASS Release Two). Noth and Haderlein from the University of Erlangen-Nuremberg are collaborating with the UCL team to implement and train automatic recognizers for assessing speech using Riley's (1994) SSI-3 texts. This uses the approach described in Noth, Niemann, Haderlein, Decher, Eysholdt, Rosanowski and Wittenberg (2000). Peter Heeman from the Center for Spoken Language Understanding at the Oregon Health and Science University uses the orthographic word transcriptions, the time-aligned phonetic annotations, and the CHILDES annotations as training material in his automatic recognition work.

Conclusions

Readers have been alerted to the extensive set of recordings of stuttered speech available on the Internet. These recordings have been prepared for three widely used free software formats. Users should be able to access both the stuttered recordings and the analysis software. Together, the recordings and software will allow them to investigate the language and speech behavior of speakers who stutter. Some specific areas they could investigate have been described.

Acknowledgments

This work was supported by grant 072639 from the Wellcome Trust to Peter Howell.

Appendix A

The “One more Week to Easter” passage

There is only one more week to Easter. I have already started my holiday. The idea of visiting my uncle during this Easter is wonderful. His farm is in this village down in Cornwall. This village is very peaceful and beautiful. I have asked my aunt if I can bring Sam, my dog, with me. I promise her I will keep him under control. He attacked and he ate some animals from her farm in October. But he is part of the family and I cannot leave him behind.

In my uncle's village, there is a shop which sells a lot of things. The owner of the shop has known my uncle since young. They were old friends. This shop sells kites among other things. I had been given one by my Dad. I couldn't believe my eyes when I opened it up. It was just an amazing thing. He had also taught me how to fly it. I enjoy it and it is fun. It brings me out to the open space more often. I am also seeing my Dad more often. He stays away from his office at weekends. That is good. There are all sorts of kites. I am actually getting myself some more during this visit.

During this visit, I'm going to ride a horse. I wasn't allowed to do that because I wasn't old enough. But I am older now. My aunt is very good at riding and she will teach me. I am asking to be allowed to stay with my aunt for the summer. I am excited by the idea. Besides riding, I can make some pocket money by helping out in the farm. They have got some cows and sheep, as well as pigs and some chickens. But I don't want to work with pigs.

They are about eight miles from the sea. My uncle can take me out on his new boat. He has spent his spare time building this boat. I am always being amazed by what he can do. He was an airman and his life is an interesting story on its own and he is full of surprises. In April, he is constructing a tree-house for Amy, my older cousin. Over Easter, it will be her eleventh birthday. If Mum lets me, I will buy her some chocolates. But Amy will be disappointed if I can't get her any. But I think she will understand. She has got no idea that I'm arranging a surprise party for her.

References

- Abercrombie D. English Phonetic Texts. London: Faber & Faber; 1964.

- Acton C. A conversation analytic perspective on stammering: Some reflections and observations. Stammering Research. 2004;1:249–270.

- Davis S, Shisca D, Howell P. Anxiety in speakers who persist and recover from stuttering. Journal of Communication Disorders. 2007;40:398–417. doi: 10.1016/j.jcomdis.2006.10.003.

- Hayes J, Howell P. A connectionist evaluation of schemes to measure difficulty of words based on their phonological structure. In: Cangelosi A, Bugmann G, Borisyuk R, editors. Modeling Language, Cognition and Action: Proceedings of the 9th Neural Computation and Psychology Workshop. Singapore: World Scientific; 2005.

- Howell P. Comparison of two ways of defining phonological words for assessing stuttering pattern changes with age in Spanish speakers who stutter. Journal of Multilingual Communication Disorders. 2004;2:161–186. doi: 10.1080/14769670412331271105.

- Howell P. The effect of using time intervals of different length on judgments about stuttering. Stammering Research. 2005;1:364–374.

- Howell P. Signs of developmental stuttering up to age eight and at 12 plus. Clinical Psychology Review. 2007;27:287–306. doi: 10.1016/j.cpr.2006.08.005.

- Howell P. Do individuals with fragile X syndrome show developmental stuttering or not? Comment on “Speech fluency in fragile X syndrome” by van Borsel, Dor and Rondal. Clinical Linguistics and Phonetics. 2008;22:163–167. doi: 10.1080/02699200701777631.

- Howell P, Anderson A, Lucero J. Motor timing and fluency. In: Maasen B, van Lieshout PHHM, editors. Speech motor control: New developments in basic and applied research. Oxford: Oxford University Press; in press.

- Howell P, Davis S, Bartrip J, Wormald L. Effectiveness of frequency shifted feedback at reducing disfluency for linguistically easy, and difficult, sections of speech (original audio recordings included) Stammering Research. 2004;1:309–315.

- Howell P, Davis S, Williams R. Late childhood stuttering. Journal of Speech, Language and Hearing Research. doi: 10.1044/1092-4388(2008/048). in press.

- Howell P, Davis S, Williams SM. Auditory abilities of speakers who persisted, or recovered, from stuttering. Journal of Fluency Disorders. 2006;31:257–270. doi: 10.1016/j.jfludis.2006.07.001.

- Howell P, El-Yaniv N, Powell DJ. Factors affecting fluency in stutterers when speaking under altered auditory feedback. In: Peters H, Hulstijn W, editors. Speech Motor Dynamics in Stuttering. New York: Springer Press; 1987. pp. 361–369.

- Howell P, Huckvale M. Facilities to assist people to research into stammered speech. Stammering Research. 2004;1:130–242.

- Howell P, Nilipour R. Handbook for Resources for Treatment and Research of Stuttering (in Farsi) in press.

- Howell P, Sackin S, Glenn K. Development of a two-stage procedure for the automatic recognition of dysfluencies in the speech of children who stutter: I. Psychometric procedures appropriate for selection of training material for lexical dysfluency classifiers. Journal of Speech, Language and Hearing Research. 1997a;40:1073–1084. doi: 10.1044/jslhr.4005.1073.

- Howell P, Sackin S, Glenn K. Development of a two-stage procedure for the automatic recognition of dysfluencies in the speech of children who stutter: II. ANN recognition of repetitions and prolongations with supplied word segment markers. Journal of Speech, Language and Hearing Research. 1997b;40:1085–1096. doi: 10.1044/jslhr.4005.1085.

- Huckvale M. Automatic detection of lexical dysfluencies in speech - a review and baseline implementations; Paper presented at The Characteristics and Assessment of Stuttered Speech Conference; London, England. 2005.

- Kully D, Boberg E. An investigation of interclinic agreement in the identification of fluent and stuttered syllables. Journal of Fluency Disorders. 1988;13:309–318.

- MacWhinney B. The CHILDES Project: Tools for analyzing talk. Second Edition New York: Lawrence Erlbaum Associates; 1995.

- Marshall C. The impact of word-end phonology and morphology on stuttering. Stammering Research. 2005;1:375–391.

- Noth E, Niemann H, Haderlein T, Decher M, Eysholdt U, Rosanowski F, Wittenberg T. Automatic stuttering recognition using Hidden Markov Models; International Conference on Spoken Language Processing (ICSLP); Beijing, China. 2000. pp. 65–68.

- Riley GD. Stuttering Severity Instrument for Children and Adults. Third Edition. Austin, TX: Pro-Ed; 1994.

- Selkirk E. Phonology and syntax: The relation between sound and structure. Cambridge, MA: MIT Press; 1984.

- van Borsel J, Dor O, Rondal J. Speech fluency in fragile X syndrome. Clinical Linguistics and Phonetics. 2007 doi: 10.1080/02699200701601997. available on-line through iFirst.

- van Borsel J, Tetnowski JA. Fluency disorders in genetic syndromes. Journal of Fluency Disorders. 2007;32:279–296. doi: 10.1016/j.jfludis.2007.07.002.

- Ward D. Stuttering and Cluttering: Frameworks for Understanding and Treatment. Hove, England: Psychology Press; 2006.

- Yairi E, Ambrose NG. Early childhood stuttering. Austin, TX: PRO-ED; 2005.

- Zebrowski PM. Duration of the speech disfluencies of beginning stutterers. Journal of Speech and Hearing Research. 1991;34:483–491. doi: 10.1044/jshr.3403.183.

- Zebrowski PM. Duration of sound prolongation and sound/syllable repetition in children who stutter. Journal of Speech and Hearing Research. 1994;37:254–263. doi: 10.1044/jshr.3702.254.