Abstract

This article reviews evidence from basic and translational research with pigeons and humans suggesting that the persistence of operant behavior depends on the contingency between stimuli and reinforcers, and considers some implications for clinical interventions.

Keywords: operant contingencies, Pavlovian contingencies, multiple schedules, resistance to change, pigeons, humans

The metaphor of behavioral momentum proposes that the rate of responding under constant conditions of reinforcement is analogous to the velocity of a moving object, and the persistence of that rate in the face of a challenge depends on the behavioral analogue of physical mass. Thus, steady-state response rate and its resistance to change are independent aspects of behavior, just as velocity and mass are independent aspects of a moving object. Moreover, behavioral momentum theory proposes that response rate depends on operant response–reinforcer contingencies, whereas resistance to change depends on Pavlovian stimulus–reinforcer contingencies (for review, see Nevin & Grace, 2000).

Characterizing Contingencies

Every serious practitioner of behavior analysis is familiar with the effects of contingencies between operant responses and reinforcers. Under the most basic operant contingency, a reinforcer is presented every time a designated target response occurs and is never presented in the absence of the response. This contingent relation can be weakened or changed in various ways. For example, the reinforcer may not be presented after every instance of the target response but only if some other condition is met, such as “if 10 responses have occurred” (fixed-ratio 10), or “if 1 min has elapsed since the preceding reinforcer” (fixed-interval 1 min). Another way to alter the basic contingency is to present reinforcers contingent on the nonoccurrence of the response; for example, if the target response does not occur for 10 s (differential reinforcement of other behavior [DRO] 10 s). Or the contingency may be abolished altogether by arranging that reinforcers are presented independently of the target response (noncontingent reinforcement [NCR]); for example, at variable times (VT) averaging 1 min. The effects of these contingencies, given that an effective reinforcer has been identified, are so well known and so repeatable that they can be illustrated under poorly controlled conditions in student laboratory courses.
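The difference between a response-contingent VI schedule and a response-independent VT schedule can be illustrated with a small simulation. This is a hypothetical sketch rather than a procedure from any study reviewed here. The function `simulate_vi_vt` and its parameters are invented for illustration, and intervals are assumed to be exponentially distributed (a constant probability of reinforcement per unit time), whereas laboratory schedules often use other interval distributions.

```python
import random


def simulate_vi_vt(session_s, vi_mean_s, response_times, vt_mean_s=None, seed=0):
    """Hypothetical sketch: count reinforcers earned on a VI schedule and
    delivered by a concurrent VT schedule during one session.

    Intervals are drawn from an exponential distribution (an assumption;
    real schedules often use other interval lists).
    Returns (vi_reinforcers, vt_reinforcers).
    """
    rng = random.Random(seed)

    # VI: when an interval elapses, a reinforcer is "set up" and is delivered
    # only at the next response; timing of the next interval starts then.
    vi_count = 0
    setup_at = rng.expovariate(1 / vi_mean_s)
    for t in sorted(response_times):
        if t >= session_s:
            break
        if t >= setup_at:
            vi_count += 1
            setup_at = t + rng.expovariate(1 / vi_mean_s)

    # VT: a reinforcer is delivered whenever an interval elapses,
    # regardless of whether the target response has occurred.
    vt_count = 0
    if vt_mean_s is not None:
        t = rng.expovariate(1 / vt_mean_s)
        while t < session_s:
            vt_count += 1
            t += rng.expovariate(1 / vt_mean_s)

    return vi_count, vt_count
```

With a response every second in a 1-hr session, for example `simulate_vi_vt(3600, 60, range(3600), vt_mean_s=30)`, the VI count should fall near 60 and the VT count near 120, varying with the seed. If no responses occur, the VI schedule yields nothing while the VT schedule still delivers reinforcers, which is the sense in which NCR abolishes the operant contingency.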

The strength of the operant contingency may be varied by changing the probabilities of reinforcement given that a response occurs, or does not occur, in brief segments of time (Hammond, 1980). The contingency may then be expressed as the difference between those probabilities (e.g., Wasserman, Elek, Chatlosh, & Baker, 1993). In the usual free-operant situation, in which time is not readily sliced into segments, the strength of the operant contingency may be captured by the proportion of all reinforcers that are contingent on the designated response.
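The two ways of expressing operant contingency strength mentioned above, a difference between conditional probabilities for discrete time segments and the proportion of reinforcers that are response contingent for free-operant data, can be sketched as follows. This is a minimal illustration, not code from any of the cited studies; the function names `delta_p` and `proportion_contingent` are hypothetical.

```python
def delta_p(p_given_response, p_given_no_response):
    """Operant contingency as a difference of conditional probabilities.

    p_given_response: probability of a reinforcer in a time segment
        in which the target response occurs.
    p_given_no_response: probability of a reinforcer in a segment
        in which it does not occur.
    Positive: reinforcement depends on responding; zero: no contingency
    (as under NCR); negative: reinforcement depends on not responding
    (as under DRO).
    """
    for p in (p_given_response, p_given_no_response):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    return p_given_response - p_given_no_response


def proportion_contingent(contingent_per_hr, independent_per_hr):
    """Free-operant index: share of all reinforcers that depend on the response."""
    total = contingent_per_hr + independent_per_hr
    if total <= 0:
        raise ValueError("at least one reinforcer rate must be positive")
    return contingent_per_hr / total
```

For example, `proportion_contingent(60, 120)` gives one third, and a DRO-like arrangement, in which reinforcers occur only in segments without the response, yields a negative `delta_p`.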

Contingencies between stimuli and reinforcers can be specified and modified in similar ways. In the strongest contingency, a reinforcer is presented every time a brief stimulus such as a tone is presented and not otherwise, a procedure that is familiar to students of introductory psychology as Pavlovian or respondent conditioning. The Pavlovian contingency can be modified in the same general ways as operant contingencies. For example, the reinforcer may be presented after only some of the tones (partial reinforcement, analogous to a variable-ratio [VR] schedule for an operant response), or it may be presented only in the absence of the tone (inhibitory conditioning, analogous to DRO for an operant response). In discrete-trial procedures, the stimulus–reinforcer contingency may be quantified by determining the probabilities of reinforcer presentation when a designated stimulus is present and when it is absent for an equivalent period of time, and then calculating the correlation coefficient phi (Gibbon, Berryman, & Thompson, 1974). An alternative measure, proposed by Gibbon (1981) and better suited to free-operant discrimination procedures, is the ratio of the reinforcer rate in the presence of a designated stimulus to the overall reinforcer rate in the experimental setting.
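Both Pavlovian measures described above can be written in a few lines. In this sketch the 2 × 2 cell labels `a` through `d` and the function names are hypothetical conventions chosen for illustration; phi is the standard correlation coefficient for a 2 × 2 table, and the ratio follows the verbal definition attributed to Gibbon (1981) above.

```python
import math


def phi_coefficient(a, b, c, d):
    """Phi correlation for a 2x2 table of trials or equivalent time samples.

    a: stimulus present, reinforcer presented
    b: stimulus present, no reinforcer
    c: stimulus absent, reinforcer presented
    d: stimulus absent, no reinforcer
    """
    denom = math.sqrt((a + b) * (c + d) * (a + c) * (b + d))
    if denom == 0:
        raise ValueError("phi is undefined when a row or column total is zero")
    return (a * d - b * c) / denom


def gibbon_ratio(rate_in_stimulus, overall_rate):
    """Reinforcer rate during the stimulus divided by the overall reinforcer rate."""
    if overall_rate <= 0:
        raise ValueError("overall reinforcer rate must be positive")
    return rate_in_stimulus / overall_rate
```

A perfect excitatory contingency, `phi_coefficient(10, 0, 0, 10)`, gives 1.0; equal reinforcement with and without the stimulus gives 0; and reinforcement only in the stimulus's absence, as in inhibitory conditioning, gives -1.0. A `gibbon_ratio` of 1 means that the stimulus carries no information about the reinforcer rate.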

Stimulus–reinforcer (hereafter Pavlovian) contingencies are of obvious interest to researchers who study autoshaped key pecking, conditioned suppression, or other forms of respondent conditioning, but may be neglected by practitioners of behavior analysis because their effects are often overshadowed by the sheer power of response–reinforcer contingencies to control behavior. However, Pavlovian contingencies deserve consideration because they are embedded in any application of operant contingencies that involves stimulus control. Indeed, they may operate to undercut some of the effects of operant contingencies that are widely used and highly successful in reinforcement-based interventions in clinical settings.

Basic Research: Effects of Added Response-Independent Reinforcers

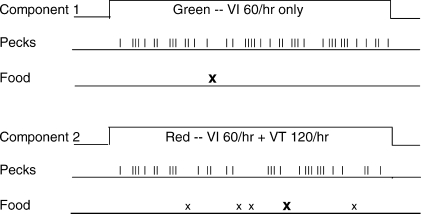

An example of operant–Pavlovian interactions from the pigeon laboratory involves a multiple schedule of reinforcement with different operant and Pavlovian contingencies in its components (Nevin, Tota, Torquato, & Shull, 1990, Experiment 1). As shown in Figure 1, there are two components, signaled by different-colored lights on the pecking key, that last for fixed durations and alternate successively in time. In Component 1 (green), responses are reinforced after variable intervals averaging 1 min (VI 1 min), yielding 60 reinforcers per hour. In Component 2 (red), responses are reinforced according to the same VI 1-min schedule (60 reinforcers per hour), and, in addition, reinforcers are presented independently of responding at variable times averaging 30 s (VT 30 s), yielding an additional 120 reinforcers per hour. The operant contingency is stronger in the green, VI-only component because all reinforcers are response contingent, whereas in the red, VI+VT component, only one third of the reinforcers are response contingent. Conversely, the Pavlovian contingency is stronger for the VI+VT component because the ratio of the reinforcer rate in that component to the total reinforcer rate in the experiment, (60+120)/(60+120+60), is three times the value of the ratio in the VI-only component, 60/(60+120+60).
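The operant and Pavlovian indices for the two components can be computed exactly from the reinforcer rates given for Figure 1. This is a minimal sketch with hypothetical names (`components`, `contingency_summary`); the Pavlovian index divides each component's reinforcer rate by the sum of the component rates, matching the form of the ratio used in the text.

```python
from fractions import Fraction

# Reinforcers per hour in each component (values from the text).
components = {
    "VI-only": {"contingent": 60, "independent": 0},
    "VI+VT": {"contingent": 60, "independent": 120},
}


def contingency_summary(components):
    """Return operant and Pavlovian indices for each component as exact fractions.

    operant: proportion of the component's reinforcers that are response contingent
    pavlovian: component reinforcer rate divided by the sum of component rates
    """
    totals = {name: c["contingent"] + c["independent"] for name, c in components.items()}
    grand_total = sum(totals.values())
    return {
        name: {
            "operant": Fraction(components[name]["contingent"], total),
            "pavlovian": Fraction(total, grand_total),
        }
        for name, total in totals.items()
    }


summary = contingency_summary(components)
# VI-only: operant 1, pavlovian 1/4; VI+VT: operant 1/3, pavlovian 3/4
```

If the two components are of equal duration (an assumption; the text states only that they last for fixed durations), dividing instead by the time-weighted overall rate of 120 reinforcers per hour gives ratios of 1.5 and 0.5, so the threefold difference between components is unchanged.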

Figure 1.

A time-line diagram of a multiple schedule in which reinforcers are available in both components according to VI 1-min schedules (60 reinforcers per hour; X) and in one component, reinforcers are also presented independently of responding on a VT 30-s schedule (120 reinforcers per hour; x).

To evaluate the effects of these contingencies, Nevin et al. (1990, Experiment 1) exposed pigeons to the multiple-schedule procedure (Figure 1) for 30 sessions. All pigeons exhibited higher response rates in the VI-only than in the VI+VT component, a result that makes sense in relation to the difference in operant contingencies. The same result has been obtained with single VI schedules when response-independent reinforcers were introduced (Rachlin & Baum, 1972) and is well established in the basic research literature.

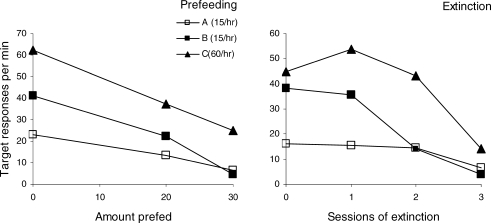

Nevin et al. (1990, Experiment 1) also arranged two tests of resistance to change: prefeeding, in which the pigeons received 40 to 60 g of food 1 hr before selected daily sessions with the usual contingencies operating in both components, and extinction, in which all reinforcers were discontinued in both components. The results are presented in Figure 2, averaged across pigeons because all exhibited the same effects. The left panel presents baseline response rates averaged over five sessions and the average rates of responding in five prefeeding sessions. As noted above, baseline response rates were higher in the VI-only component, but during prefeeding, response rates were higher in the VI+VT component. The same was true for extinction: Over successive extinction sessions, response rates decreased later and to a lesser extent in the VI+VT component than in the VI-only component. Although the differences between components were not large, the reversal of ordering from baseline to prefeeding or to extinction was highly reliable. The conclusion is that resistance to change depended on the Pavlovian contingency, which was stronger in the VI+VT component, whereas baseline response rates depended on the operant contingency.
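Resistance to change is commonly expressed in this literature as test-session response rate relative to baseline, although the text above describes the result only as an ordinal reversal of absolute rates. The two descriptions are linked: if one component's rate is higher in baseline but lower during a test, the other component must have retained a larger proportion of its baseline rate. The sketch below checks both; the function names are hypothetical, and the rates are invented for illustration and are not the data of Nevin et al. (1990).

```python
def proportion_of_baseline(baseline_rate, test_rate):
    """Test-session response rate expressed relative to baseline."""
    if baseline_rate <= 0:
        raise ValueError("baseline rate must be positive")
    return test_rate / baseline_rate


def order_reverses(baseline, test):
    """True if the ordering of two components' rates flips from baseline to test.

    baseline, test: (rate_component_1, rate_component_2) pairs.
    """
    (b1, b2), (t1, t2) = baseline, test
    return (b1 - b2) * (t1 - t2) < 0


# Hypothetical rates (responses per minute), invented for illustration only.
baseline = (80.0, 60.0)    # (VI-only, VI+VT)
prefeeding = (30.0, 40.0)  # (VI-only, VI+VT)
```

Here `order_reverses(baseline, prefeeding)` is `True`, and the proportions of baseline are 0.375 for the VI-only component and about 0.67 for the VI+VT component.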

Figure 2.

Rates of responding by pigeons during baseline (BL) and prefeeding test sessions (left) and during baseline and extinction test sessions (right) in the multiple VI, VI+VT schedules diagrammed in Figure 1 (from Nevin et al., 1990, Experiment 1). Note that the order of response rates for components with and without added VT reinforcers reversed during the tests.

Since 1990, the finding of increased resistance to change in the presence of a stimulus correlated with added noncontingent reinforcers has been replicated with rats (Harper, 1999), including studies with qualitatively different VI and VT reinforcers (Grimes & Shull, 2001; Shahan & Burke, 2004), with goldfish (Igaki & Sakagami, 2004), and with college students (Cohen, 1996), so it has substantial generality.

Basic Research: Effects of Added Reinforcers for Alternative Behavior

At a conference in 1988, Rick Shull told me that he and his students were getting similar results with pigeons in multiple concurrent VI schedules when the added reinforcers were contingent on an explicit alternative response. In Component A, a target response (pecking the right key) was reinforced on a VI 240-s schedule (15 reinforcers per hour) and an alternative response (pecking the left key) was reinforced concurrently on a VI 80-s schedule (45 reinforcers per hour). In Component B, the target response obtained 15 reinforcers per hour while the alternative response was not reinforced, and in Component C, the target response obtained 60 reinforcers per hour while the alternative was not reinforced. Thus, the ratio of the reinforcer rate in a component to the overall reinforcer rate was the same in Components A and C, that is, (15+45)/(15+45+15+60) and 60/(15+45+15+60), and was four times greater than the corresponding ratio in Component B, that is, 15/(15+45+15+60).
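The component ratios stated above can be verified with exact arithmetic. This is a minimal sketch with hypothetical variable names; the reinforcer rates are those given in the text, and the denominator is the sum of all reinforcer rates across the three components, as in the ratios above.

```python
from fractions import Fraction

# Reinforcers per hour in each component (values from the text).
target = {"A": 15, "B": 15, "C": 60}
alternative = {"A": 45, "B": 0, "C": 0}

component_totals = {k: target[k] + alternative[k] for k in target}
grand_total = sum(component_totals.values())  # 135 reinforcers per hour

# Stimulus-reinforcer (Pavlovian) ratio for each component
pavlovian = {k: Fraction(v, grand_total) for k, v in component_totals.items()}

# Share of each component's reinforcers earned by the target response
target_share = {k: Fraction(target[k], component_totals[k]) for k in target}
```

The Pavlovian ratios come out as 4/9 for Components A and C and 1/9 for Component B, whereas the target response earns only one quarter of the reinforcers in Component A but all of them in Components B and C.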

As shown in Figure 3, baseline response rates were as predicted by the literature on concurrent and single VI schedules: The target response rate was about one third of the alternative response rate in Component A, roughly matching the obtained ratio of reinforcers, and was substantially higher in Component B, in which no alternative reinforcers were available. Target response rate was highest in Component C, in which its reinforcer rate was 60 per hour rather than 15 per hour.
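The "about one third" figure for Component A follows from the matching relation for concurrent schedules. Under strict matching (an idealization; obtained data often deviate, and the generalized matching law adds sensitivity and bias parameters), the ratio of target response rate B_T to alternative response rate B_A equals the ratio of their obtained reinforcer rates R_T and R_A:

```latex
\frac{B_T}{B_A} = \frac{R_T}{R_A} = \frac{15}{45} = \frac{1}{3}
```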

Figure 3.

Rates of target-key responding for baseline and prefeeding test sessions (left) and for baseline (at 0 on the x axis) and extinction test sessions in three-component multiple concurrent VI VI schedules (right; from Nevin et al., 1990, Experiment 2). During baseline training, the target response obtained 15 reinforcers per hour in Components A and B, and an alternative response obtained 45 reinforcers per hour concurrently in Component A only. In Component C, the target response obtained 60 reinforcers per hour. Note that the order of response rates for Components A and B reversed during the tests.

Shull and his students also conducted prefeeding and extinction tests of resistance to change. The effects of prefeeding 20 or 30 g in successive sessions are shown in the upper left panel of Figure 3, together with baseline data, averaged across pigeons. Target response rate in Component A was less reduced by prefeeding than in Component B, and there was a reversal of ordering at 30 g that was evident in the data of all 3 pigeons. The data for extinction are displayed similarly in the upper right panel, and again the reversal in the ordering of Components A and B was evident in the data of all 3 pigeons. For both prefeeding and extinction, resistance to change in Component C was greater than in Component B, as expected from the literature on resistance to change in multiple schedules. These results, which were published as Experiment 2 in Nevin et al. (1990), suggest that resistance to change of a target response depended on the total reinforcement in a component, just as in the VI+VT component in Experiment 1.

Clinical Intervention and Translation

In Experiment 1 of Nevin et al. (1990), the presentation of response-independent reinforcers in Component 2 parallels the use of NCR in applied analyses. In Experiment 2, reinforcement of an explicit alternative response in Component A parallels the use of differential reinforcement of alternative behavior (DRA) in applied analyses. Both NCR and DRA are common features of clinical interventions designed to reduce the frequency of a target problem response. In a comprehensive review of functional analysis methods used to treat self-injurious behavior (SIB) in people with developmental disabilities, Iwata et al. (1994) found that, in 152 cases in which NCR was employed, SIB decreased to below 10% of its pretreatment level in 84% of the interventions. The success rate was 83% for DRA. Comparable success rates were reported by Asmus et al. (2004) for 138 participants who engaged in aggression and disruption as well as SIB. These findings exemplify the power of reinforcement contingencies to reduce clinically significant problem behavior. But there is a worrisome implication of the data of Nevin et al. (1990) presented above: The success of NCR and DRA in reducing problem behavior may have the unintended consequence of making that behavior more resistant to further efforts to reduce or eliminate it, because both DRA and NCR strengthen the contingency between stimuli and reinforcers.

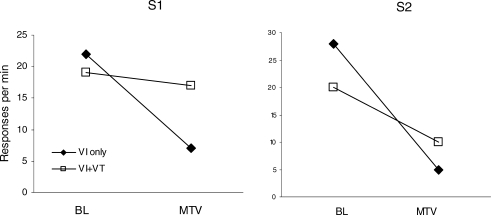

Bud Mace and I discussed the VI+VT results (Figure 2) and their implications at a conference about a year before they were published, and within weeks, he and his colleagues at Lehigh and Rutgers implemented a close replication of the VI-only versus VI+VT procedure with 2 adult residents with mental retardation in a group home. The target response was sorting utensils, with the two components of the multiple schedule defined by utensil color (green or red) and presented in alternation. Sorting both green and red items was reinforced according to a VI 1-min (60 reinforcers per hour) schedule, and in addition, response-independent reinforcers were presented according to a VT 30-s (120 reinforcers per hour) schedule while the participant was engaged in sorting one of the colors. Reinforcers were small cups of popcorn for 1 participant and coffee for the other. After 10 to 15 sessions, resistance to change was tested by turning on a video monitor with excerpts from an MTV program. The results, published by Mace et al. (1990, Part 2), are presented for individual participants, pooled over two replications of video disruption, in Figure 4. The differences between baseline rates and the effects of disruptors in the two components are ordinally similar to but substantially clearer than the pigeons' results in Figures 2 and 3.

Figure 4.

Rates of responding on a sorting task by 2 adults with mental retardation (from Mace et al., 1990). Sorting utensils in multiple-schedule components defined by different utensil colors was reinforced according to a VI 1-min schedule (60 reinforcers per hour). In addition, response-independent reinforcers were given according to a VT 30-s schedule (120 reinforcers per hour) in one component. After baseline training (BL), sorting was disrupted by presenting excerpts from an MTV program (MTV). Note that the order of sorting rates for components with and without added VT reinforcers reversed during the tests.

Mace et al. (2009) have confirmed the results of Nevin et al. (1990, Experiment 2) with rats, demonstrating that concurrent reinforcement of an alternative response increased the resistance to extinction of a target response, and have obtained comparable results with problem behavior in children with developmental disabilities. They have also shown that these increases in resistance to extinction could be circumvented by reinforcing an alternative response in a distinctively different stimulus situation, so that alternative reinforcers do not enter into the Pavlovian contingency that governs the persistence of problem behavior. The effectiveness of their procedure deserves experimental analysis in a reverse translation to the basic research laboratory.
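The logic of moving alternative reinforcement to a different stimulus situation can be expressed with the ratio measure from Gibbon (1981) described earlier. In this hypothetical sketch, the session is split equally between two contexts, and the rates of 15 target and 45 alternative reinforcers per hour are borrowed from Nevin et al. (1990, Experiment 2) purely for illustration; the text does not give the parameters used by Mace et al. (2009), and the function name `context_ratios` is invented.

```python
from fractions import Fraction


def context_ratios(contexts):
    """Gibbon-style ratio for each stimulus context.

    contexts: name -> (share_of_session_time, {source: reinforcers_per_hr})
    Returns each context's reinforcer rate divided by the time-weighted
    overall reinforcer rate across all contexts.
    """
    rates = {name: sum(sources.values()) for name, (_, sources) in contexts.items()}
    overall = sum(Fraction(share) * rates[name] for name, (share, _) in contexts.items())
    if overall == 0:
        raise ValueError("no reinforcers in any context")
    return {name: Fraction(rate) / overall for name, rate in rates.items()}


half = Fraction(1, 2)

# Hypothetical: alternative reinforcement delivered where the target behavior occurs
same = context_ratios({
    "target context": (half, {"target": 15, "alternative": 45}),
    "other context": (half, {}),
})

# Hypothetical: alternative reinforcement moved to a distinctively different context
separate = context_ratios({
    "target context": (half, {"target": 15}),
    "other context": (half, {"alternative": 45}),
})
```

The overall reinforcer rate is 30 per hour in both arrangements, yet the ratio for the context in which the target behavior occurs falls from 2 to 1/2 when alternative reinforcement is relocated, a fourfold reduction in the stimulus–reinforcer relation that, on the account given here, governs persistence.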

Acknowledgments

This article is based on an invited talk in a symposium on translational research at the meetings of the Association for Behavior Analysis, Phoenix, Arizona, May 2009; I thank Tim Hackenberg for arranging the event.

REFERENCES

- Ahearn W.H, Clark K.M, Gardenier N.C, Chung B.I, Dube W.V. Persistence of stereotypic behavior: Examining the effects of external reinforcers. Journal of Applied Behavior Analysis. 2003;36:439–448. doi: 10.1901/jaba.2003.36-439.

- Asmus J.M, Ringdahl J.E, Sellers J.A, Call N.A, Andelman M.S, Wacker D.P. Use of a short-term inpatient model to evaluate aberrant behavior: Outcome data summaries from 1996 to 2001. Journal of Applied Behavior Analysis. 2004;37:283–304. doi: 10.1901/jaba.2004.37-283.

- Cohen S.L. Behavioral momentum of typing behavior in college students. Journal of Behavior Analysis and Therapy. 1996;1:36–51.

- Gibbon J. The contingency problem in autoshaping. In: Locurto C.M, Terrace H.S, Gibbon J, editors. Autoshaping and conditioning theory. New York: Academic Press; 1981. pp. 285–308.

- Gibbon J, Berryman R, Thompson R.L. Contingency spaces and measures in classical and instrumental conditioning. Journal of the Experimental Analysis of Behavior. 1974;21:585–605. doi: 10.1901/jeab.1974.21-585.

- Grimes J.A, Shull R.L. Response-independent milk delivery enhances persistence of pellet-reinforced lever pressing by rats. Journal of the Experimental Analysis of Behavior. 2001;76:179–194. doi: 10.1901/jeab.2001.76-179.

- Hammond L.J. The effect of contingency upon the appetitive conditioning of free-operant behavior. Journal of the Experimental Analysis of Behavior. 1980;34:297–304. doi: 10.1901/jeab.1980.34-297.

- Harper D.N. Drug-induced changes in responding are dependent on baseline stimulus-reinforcer contingencies. Psychobiology. 1999;27:95–104.

- Igaki T, Sakagami T. Resistance to change in goldfish. Behavioural Processes. 2004;66:139–152. doi: 10.1016/j.beproc.2004.01.009.

- Iwata B.A, Pace G.M, Dorsey M.F, Zarcone J.R, Vollmer T.R, Smith R.G, et al. The functions of self-injurious behavior: An experimental-epidemiological analysis. Journal of Applied Behavior Analysis. 1994;27:215–240. doi: 10.1901/jaba.1994.27-215.

- Mace F.C, Lalli J.S, Shea M.C, Lalli E.P, West B.J, Roberts M, et al. The momentum of human behavior in a natural setting. Journal of the Experimental Analysis of Behavior. 1990;54:163–172. doi: 10.1901/jeab.1990.54-163.

- Mace F.C, McComas J.J, Mauro B.C, Progar P.R, Taylor B, Ervin R, et al. The persistence-strengthening effects of DRA: An illustration of bidirectional translational research. The Behavior Analyst. 2009;32:293–300. doi: 10.1007/BF03392192.

- Nevin J.A, Grace R.C. Behavioral momentum and the law of effect. Behavioral and Brain Sciences. 2000;23:73–130. doi: 10.1017/s0140525x00002405.

- Nevin J.A, Tota M.E, Torquato R.D, Shull R.L. Alternative reinforcement increases resistance to change: Pavlovian or operant contingencies? Journal of the Experimental Analysis of Behavior. 1990;53:359–379. doi: 10.1901/jeab.1990.53-359.

- Rachlin H, Baum W.M. Effects of alternative reinforcement: Does the source matter? Journal of the Experimental Analysis of Behavior. 1972;18:231–241. doi: 10.1901/jeab.1972.18-231.

- Shahan T.A, Burke K.A. Ethanol-maintained responding of rats is more resistant to change in a context with added non-drug reinforcement. Behavioural Pharmacology. 2004;15:279–285. doi: 10.1097/01.fbp.0000135706.93950.1a.

- Wasserman E.A, Elek S.M, Chatlosh D.L, Baker A.G. Rating causal relations: Role of probability in judgments of response-outcome contingency. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1993;19:174–188.