Abstract

Background

Despite significant policy concerns about the role of inpatient resource utilization in rising medical costs, residents receive little information about their practice patterns and the effect of those patterns on resource use. Improved knowledge about their practice patterns and costs might reduce resource utilization and better prepare physicians for today's health care market.

Methods

We surveyed residents in the internal medicine residency at the Hospital of the University of Pennsylvania. Based on needs identified via the survey, discussions with experts, and a literature review, a curriculum was created to help increase residents' knowledge about benchmarking their own practice patterns and using objective performance measures in the health care market.

Results

The response rate to our survey was 68%. Only 37% of residents reported receiving any feedback on their utilization of resources, and only 20% reported receiving feedback regularly. Even fewer (16%) developed, with their attending physician, a concrete improvement plan for resource use. A feedback program was developed that included automatic review of the electronic medical record to provide trainee-specific feedback on resource utilization and outcomes of care, including number of laboratory tests per patient day, laboratory cost per patient day, computed tomography scan ordering rate, length of stay, and 14-day readmission rate. Results were benchmarked against those of peers on the same service. Objective feedback was provided biweekly by the attending physician, who also created an action plan with the residents. In addition, an integrated didactic curriculum was provided to all trainees on the hospitalist service on a biweekly basis.

Conclusions

Interns and residents do not routinely receive feedback on their resource utilization or ways to improve efficiency. A method for providing objective data on individual resource utilization in combination with a structured curriculum can be implemented to help improve resident knowledge and practice. Ongoing work will test the impact on resource utilization and outcomes.

Background

Despite significant policy concerns about the role of inpatient resource utilization in rising medical costs, little information is provided to physicians-in-training regarding their own practice patterns. Improved knowledge about their own practice patterns and hospital costs might reduce resource utilization, better prepare physicians-in-training for today's health care market, and improve the quality of care provided. Benchmarking of physicians has, in fact, been investigated as a quality-improvement tool that addresses individual physicians' practice patterns.1,2

Benchmarking is “the continuous process of measuring products, services and practices against the toughest competitors or those known as leaders in the field.”3 As an educational tool, benchmarking is attractive as it addresses several requirements of successful adult learning: (1) deficiencies are identified by providing comparative feedback (physicians-in-training and those in practice respond best when an educational intervention is aimed at a known problem); (2) learners are actively participating; and (3) instruction is in real time, objective, and can help discover mechanisms of improvement.4

Comparative feedback is a strong motivator for adult learners and, thus, has often been incorporated into quality-improvement measures.5,6 One study,7 which provided physicians with feedback on their individual laboratory utilization compared to that of their peers, demonstrated a significant and immediate reduction in utilization of laboratory tests. It also demonstrated that comparative feedback can lead to a long-lasting reduction in laboratory utilization that continues after the feedback is stopped. On the other hand, feedback about excessive ordering of a single test, such as serum calcium, without comparative feedback, did not result in a significant reduction in ordering.8

We hypothesized that physicians-in-training are not currently receiving feedback—especially not comparative feedback—on their resource utilization. Even if given, we hypothesized that this feedback was rarely used to implement an intervention. We surveyed the residents of the internal medicine residency program at our institution to understand the current feedback mechanisms and their deficiencies. At the same time, we assessed the residents' knowledge of the costs of commonly ordered tests, investigating our hypothesis that such knowledge would be poor. The deficiencies identified were then used to develop real-time objective and comparative feedback on resource utilization, which supplemented an integrated and structured curriculum highlighting key concepts of provider benchmarking, resource utilization, and hospital costs. We hypothesize that this represents an important opportunity to improve the teaching of medical residents as well as the efficiency of their practice.

Methods

Setting

This study was conducted at the Hospital of the University of Pennsylvania (HUP), a tertiary-care, academic medical center. The general medicine service at HUP is associated with more than 5000 admissions per year, which account for approximately 40% of all admissions to the Department of Medicine at HUP. On this service, teams include 2 attending physicians who rotate every 2 weeks. Each supervises 4 residents who each, in turn, manage 2 interns.

Participants and Survey

The internal medicine residency program at the University of Pennsylvania consists of 150 residents whose years of training span 3 years for categorical and preliminary residents and 4 years for those seeking a dual board certification including combined internal medicine and dermatology or internal medicine and pediatrics. A survey evaluating current feedback practices was distributed to all interns and residents in the program. The survey was developed from a previously validated evaluation survey, was tested to ensure construct validity via expert review and individual interviews, and was finally tested on a small group of residents. The survey was administered at the end of the respondents' academic year using SurveyMonkey (http://www.surveymonkey.com; SurveyMonkey, Menlo Park, CA). It addressed the frequency of feedback that residents received from the attending physicians during their rotation in the general medicine service at HUP. It also asked about feedback on resource utilization and whether action plans were created with the attending physician after a feedback session. The final component of the survey asked participants to estimate the cost of commonly ordered tests, including complete blood count with differential, electrolyte panel, arterial blood gas, cardiac panel (creatine kinase and troponin), unenhanced computed tomography (CT) scan of the chest, and a CT scan of the abdomen and pelvis with oral and intravenous contrast. The study was approved by the Institutional Review Board.

Curriculum Design

On the basis of deficiencies identified by the survey, discussions with experts, and a literature review, we created a curriculum to increase trainees' knowledge about benchmarking their own practice patterns and using objective performance measures in the health care market. The curriculum consisted of biweekly, small-group didactic sessions each lasting 20 minutes, followed by brief discussion. It provided an overview of pay-for-performance and benchmarking in use in the health care market, including a review of key concepts of pay-for-performance and hospital quality measures. It also highlighted the Accreditation Council for Graduate Medical Education (ACGME) competencies of practice-based learning and systems-based practice. The curriculum was supplemented with the presentation of a case in which a patient was readmitted within 14 days. Areas for improvement that could have prevented the readmission were then discussed. Finally, the curriculum reviewed costs of commonly ordered laboratory tests and provided examples of appropriate resource utilization. Its goal was not to reduce resource utilization in general but to reduce waste. An example was given in which a serum rheumatologic screening panel was ordered without checking previous laboratory data obtained and entered in the system only 1 week earlier.

Benchmarking Report

Any patient admitted to the Department of Medicine at HUP during the 8 months between June 2007 and January 2008 was automatically included in the data set; inclusion was triggered by the order for admission to the department. When a patient is admitted, the interns assign themselves as the primary intern for that patient on an Internet-based result review site called MedView (The University of Pennsylvania Health System, Philadelphia, PA). These assignments are done with high accuracy, as they are electronically connected to the resident sign-out system. The intern is then linked to the resident and attending physician via an automated interface with the Web-based scheduling software COAST (Flexible Informatics, Fort Washington, PA). The schedule is frequently updated because nurses and other physicians use the schedule to identify residents and interns on call on any given night. The provider name and identification are collected per patient day.
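The attribution step described above can be pictured as a join between per-patient-day intern assignments and the team schedule. The following is a minimal sketch under stated assumptions: the record layouts, the field names, and the `attribute_patient_days` helper are hypothetical and are not taken from MedView or COAST, whose schemas are not described in this report.

```python
from collections import namedtuple
from datetime import date

# Hypothetical record layouts (assumptions for illustration only).
Assignment = namedtuple("Assignment", ["patient_id", "day", "intern"])
TeamDay = namedtuple("TeamDay", ["day", "intern", "resident", "attending"])


def attribute_patient_days(assignments, schedule):
    """Link each patient day to the intern, resident, and attending on that day."""
    team_by_key = {(t.day, t.intern): t for t in schedule}
    attributed = []
    for a in assignments:
        team = team_by_key.get((a.day, a.intern))
        if team is None:
            # No schedule entry for this intern and day: skip rather than guess.
            continue
        attributed.append(
            (a.patient_id, a.day, a.intern, team.resident, team.attending)
        )
    return attributed


# Made-up example data.
schedule = [
    TeamDay(date(2007, 6, 1), "intern_a", "resident_x", "attending_1"),
    TeamDay(date(2007, 6, 2), "intern_a", "resident_x", "attending_1"),
]
assignments = [
    Assignment("patient_1", date(2007, 6, 1), "intern_a"),
    Assignment("patient_1", date(2007, 6, 2), "intern_a"),
    Assignment("patient_2", date(2007, 6, 3), "intern_a"),  # no schedule entry
]
patient_days = attribute_patient_days(assignments, schedule)
```

Keying the lookup on the pair of day and intern mirrors the statement that provider information is collected per patient day, so a change of team during a stay would be reflected in the attribution.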

Because patients are assigned to their primary team, information such as number of laboratory tests ordered per day, cost of laboratory tests per day, length of stay, and readmission rate within a 14-day period was abstracted for all patients cared for by each individual provider. This allowed for the generation of objective benchmarking reports on resource utilization. An average for laboratory and CT scan ordering and cost, as well as length of stay and readmission rate, was obtained for all patients admitted to the general medicine service during the data collection period. This allowed us to determine cutoff points, such that an individual provider's resource utilization could be benchmarked against the norm and assigned a quintile. These reports were then provided to the residents on a biweekly basis by their attending physician as a form of comparative feedback.
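To illustrate how a provider's value might be placed in a quintile relative to peers, the sketch below derives four cutoff points from a set of peer means and assigns a quintile from 1 (lowest utilization) to 5 (highest). It is an assumption-laden illustration: the report does not state how the cutoffs were computed in Stata, the function names are hypothetical, and the peer values are made up.

```python
from bisect import bisect_left
from statistics import quantiles


def quintile_cutoffs(peer_values):
    """Return the four cut points that split peer values into five groups."""
    # method="inclusive" treats the peer values as the whole comparison group
    # (an assumption; other quantile definitions give slightly different cutoffs).
    return quantiles(peer_values, n=5, method="inclusive")


def assign_quintile(value, cutoffs):
    """Return 1 to 5; a value equal to a cutoff falls in the lower quintile."""
    return bisect_left(cutoffs, value) + 1


# Made-up mean laboratory tests per patient day for 11 peers.
peer_means = [3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0, 11.0, 12.0, 13.0]
cutoffs = quintile_cutoffs(peer_means)
low_user = assign_quintile(4.2, cutoffs)
high_user = assign_quintile(12.5, cutoffs)
```

Reporting a quintile rather than a raw mean keeps the feedback comparative, which is the property the benchmarking reports were designed to provide.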

Statistical Analysis

For the summary statistics and quintile ranges for the benchmarking reports, data were analyzed with Stata 10.0 for Macintosh (StataCorp, College Station, TX).

Results

Current Feedback Practice

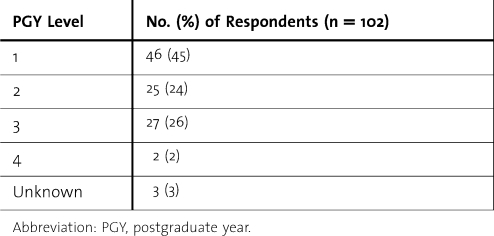

Our survey of the residents in the internal medicine residency program at HUP had a 68% response rate (102 of 150). Table 1 describes the breakdown of respondents, based on level of training. Table 2 shows that only 37% of respondents were provided some feedback about their resource utilization, and just 20% reported receiving feedback regularly. Even fewer (16%) developed a concrete plan with their attending physician for improving their resource utilization, and only 28% reported receiving any corrective feedback.

Table 1.

Level of Training of Survey Respondents

Table 2.

Summary Statistics of Feedback Survey

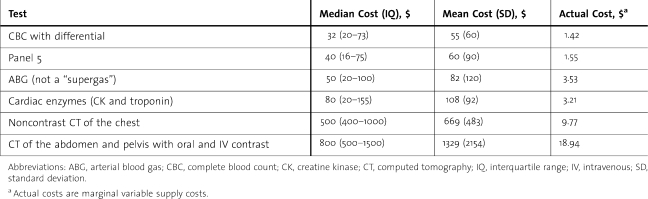

Knowledge of Costs

Ninety percent (92 of 102) of the respondents also completed the cost portion of the survey. Sixty-three percent of these respondents reported that they had no idea about the actual costs and were providing their best guess. As demonstrated in Table 3, residents tended to overestimate the variable marginal supply costs of commonly ordered tests. For example, the direct cost of performing one more complete blood count with differential at our institution is $1.42. The responses ranged from $1 to $300, with an interquartile range for the respondents' best guesses of $20 to $73 and a median of $32.
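The summary measures quoted for the complete blood count (median, interquartile range, and the gap between the median guess and the actual cost) can be reproduced with a few lines of code. The sketch below is illustrative only: the individual responses are made up and were chosen solely so that their summary matches the figures reported above, the function name is hypothetical, and the quartile definition is an assumption because the report does not specify one.

```python
from statistics import median, quantiles


def summarize_estimates(estimates, actual_cost):
    """Summarize cost guesses and compare the median guess with the actual cost."""
    # Quartiles with the "inclusive" definition (an assumption).
    q1, _, q3 = quantiles(estimates, n=4, method="inclusive")
    med = median(estimates)
    return {
        "min": min(estimates),
        "max": max(estimates),
        "median": med,
        "iqr": (q1, q3),
        "median_to_actual_ratio": med / actual_cost,
    }


# Made-up responses in dollars; NOT the survey data.
hypothetical_guesses = [1, 10, 20, 25, 32, 50, 73, 150, 300]
summary = summarize_estimates(hypothetical_guesses, actual_cost=1.42)
```

With the reported median of $32 and actual cost of $1.42, the ratio works out to roughly 22.5, which gives a sense of the size of the overestimate.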

Table 3.

House Staff Impression of Costs (in Dollars) Compared to Actual Costs of Commonly Ordered Tests

Based on the results of the survey that highlighted areas of improvement in both feedback and provider knowledge of resource utilization and costs, a new feedback program was designed with real-time objective data on resource utilization integrated with a structured curriculum.

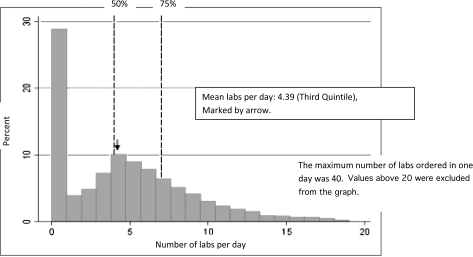

New Feedback Program

The electronic medical record is automatically reviewed to provide trainee-specific feedback on resource utilization and outcomes of care, including (1) mean number of laboratory tests per patient day, (2) mean laboratory cost per patient day, (3) CT scan ordering rate, (4) length of stay, and (5) 14-day readmission rate. Results are benchmarked against those of their peers on the same service. The Figure shows the mean number of laboratory tests per patient day as it would appear on a sample benchmarking report provided to the residents. Each benchmark was presented in comparison to the performance of all residents on the same service in the preceding months. In their feedback, residents indicated that the reports were more meaningful if presented in this comparative manner rather than as a mean value in isolation for each resident. These reports are reviewed biweekly by the attending physician, with the residents, as a form of objective feedback. The attending physician and residents then create an action plan for any areas of improvement or significant outliers that are identified. The most common action plans related to specific examples of readmissions, which involved discussion of possible reasons that led to readmission and how to prevent it in the future. This was supplemented with a biweekly didactic curriculum to provide an introduction to resource utilization, provider benchmarking, and hospital costs.
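Two of the five report measures lend themselves to a short worked example. The sketch below computes a mean number of laboratory tests per patient day and a 14-day readmission rate from made-up data. The function names, the tuple layout, and the exact readmission definition (a discharge followed by a new admission of the same patient within 14 days, with all discharges in the denominator) are assumptions; the report does not spell out its definitions.

```python
from datetime import date


def labs_per_patient_day(lab_counts_by_day):
    """Mean number of laboratory tests per patient day."""
    if not lab_counts_by_day:
        return 0.0
    return sum(lab_counts_by_day) / len(lab_counts_by_day)


def readmission_rate(stays, window_days=14):
    """Share of discharges followed by a readmission within window_days.

    Each stay is a (patient_id, admit_date, discharge_date) tuple.
    """
    if not stays:
        return 0.0
    readmitted = 0
    for i, (patient, _admit, discharge) in enumerate(stays):
        for j, (other_patient, other_admit, _other_discharge) in enumerate(stays):
            gap = (other_admit - discharge).days
            if i != j and other_patient == patient and 0 <= gap <= window_days:
                readmitted += 1
                break
    return readmitted / len(stays)


# Made-up data for one provider.
daily_lab_counts = [8, 6, 4, 6]
stays = [
    ("patient_a", date(2007, 6, 1), date(2007, 6, 5)),
    ("patient_a", date(2007, 6, 15), date(2007, 6, 18)),  # 10 days after discharge
    ("patient_b", date(2007, 6, 2), date(2007, 6, 6)),
    ("patient_b", date(2007, 7, 1), date(2007, 7, 3)),  # 25 days after discharge
]
mean_labs = labs_per_patient_day(daily_lab_counts)
rate = readmission_rate(stays)
```

In this example only the first stay of patient_a counts as followed by a readmission, so 1 of 4 discharges is flagged.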

Figure.

A Resident Physician's Utilization of Laboratory Resources Is Benchmarked Against That of His or Her Peers on the Same Service and Displayed Graphically to Provide Comparative Feedback

Discussion

Our survey of the residents in the internal medicine residency program demonstrated that only about one-third of residents received feedback on their resource utilization, that few develop plans for improvement, and that overall knowledge of costs of commonly ordered tests is poor. These deficiencies led to the development of real-time objective and comparative feedback to residents on their resource utilization. This feedback system was integrated with a structured curriculum to review the concepts of provider benchmarking, resource utilization, and hospital costs. The curriculum had 3 goals: to provide an overview of pay-for-performance and benchmarking in use in the current health care market; to highlight the core competencies of practice-based learning and systems-based practice as outlined by the ACGME; and to review costs of commonly ordered laboratory tests while discussing examples of appropriate resource utilization. Using a combined lecture and case-based discussion, this curriculum was provided to all trainees in the general medicine service at HUP on a biweekly basis. This combination of didactics and feedback, aimed at the deficiencies identified in current feedback practice and provider knowledge, may represent an important opportunity to improve the teaching of medical residents and the efficiency of their practice.

Feedback is 1 of 6 general methods that have been found to be effective for changing physician behavior; yet, for feedback to be effective, physicians must be aware of their own practice and must be willing to modify their behaviors.9–11 Measuring laboratory utilization can provide individual physicians with an insight into their own ordering practice. By coupling objective, comparative reports of individual resource utilization with directed feedback from the attending physician on service with the resident, the 2 physicians can create an action plan together. Since the right amount of resource utilization or the right length of stay is not known, the feedback was designed mainly to allow trainees to understand their own practice pattern and not necessarily to reduce resource utilization unless outliers or specific situations (such as a readmission) were identified. Our utilization reports improve an individual provider's knowledge of his or her own behavior and act as a starting point of improvement for the physician-in-training, under the supervision of the attending physician.

Real-time feedback may also be used as a tool for quality improvement. Benchmarking uses continuous comparison to the best performers to identify areas for possible improvement.3 For example, Achievable Benchmarks of Care build on the idea of relative feedback for physicians by using top performers as the comparison.2,12,13 This has been shown to improve the effectiveness of quality-improvement interventions.14 By providing residents with information about their resource utilization compared to that of their peers, the comparative feedback provides a mechanism to identify areas of quality improvement.

One limitation of this study is that the effect of this type of intervention on resource utilization, house staff knowledge, and future practice patterns still needs to be investigated. The real-time benchmarking of physicians, however, is feasible and can be implemented for continuous improvement by using existing information systems. Its implementation alone improves the feedback given to residents. Another limitation is that this is a single-site study. Different deficiencies in feedback to residents may exist at other institutions, which would call for alternative interventions. This may limit the ability to generalize from our findings. Finally, residents were provided with information about the variable marginal supply costs of the most commonly ordered tests. To date, little information is available about which cost information most affects physician behavior, and variable marginal supply cost may not be the best one.

Conclusion

Interns and residents do not routinely receive feedback on their utilization of resources or ways to improve their efficiency. Furthermore, their knowledge of the cost of commonly ordered laboratory and radiology tests is poor. A method providing objective and comparative data about an individual provider's resource utilization in combination with a structured curriculum can be implemented to help improve trainee knowledge.

Footnotes

C. Jessica Dine, MD, MSHPR, is Assistant Professor of Medicine at the University of Pennsylvania; Jean Miller, MD, is Assistant Professor of Clinical Medicine at the University of Pennsylvania; Alexander Fuld, MD, is Fellow in Hematology and Oncology at Dartmouth University; Lisa M. Bellini, MD, is Professor of Medicine at University of Pennsylvania School of Medicine; and Theodore J. Iwashyna, MD, PhD, is Assistant Professor of Medicine at the University of Michigan.

References

- 1. Tomas S. Benchmarking: a technique for improvement. Hosp Mater Manage Q. 1993;14(4):78–82.

- 2. Weissman N. W., Allison J. J., Kiefe C. I., et al. Achievable benchmarks of care: the ABCs of benchmarking. J Eval Clin Pract. 1999;5(3):269–281. doi: 10.1046/j.1365-2753.1999.00203.x.

- 3. Camp R. Benchmarking: Finding and Implementing Best Practices. Milwaukee, Wisconsin: ASQC Press; 1994.

- 4. Slotnick H. B. How doctors learn: the role of clinical problems across the medical school-to-practice continuum. Acad Med. 1996;71(1):28–34. doi: 10.1097/00001888-199601000-00014.

- 5. Hayes R. P., Ballard D. J. Review: feedback about practice patterns for measurable improvements in quality of care—a challenge for PROs under the Health Care Quality Improvement Program. Clin Perform Qual Health Care. 1995;3(1):15–22.

- 6. Jencks S. F., Wilensky G. R. The health care quality improvement initiative: a new approach to quality assurance in Medicare. JAMA. 1992;268(7):900–903.

- 7. Gama R., Nightingale P. G., Broughton P. M., et al. Modifying the request behaviour of clinicians. J Clin Pathol. 1992;45(3):248–249. doi: 10.1136/jcp.45.3.248.

- 8. Young D. W. Improving laboratory usage: a review. Postgrad Med J. 1988;64(750):283–289. doi: 10.1136/pgmj.64.750.283.

- 9. Greco P. J., Eisenberg J. M. Changing physicians' practices. N Engl J Med. 1993;329(17):1271–1273. doi: 10.1056/NEJM199310213291714.

- 10. Hershey C. O., Goldberg H. I., Cohen D. I. The effect of computerized feedback coupled with a newsletter upon outpatient prescribing charges: a randomized controlled trial. Med Care. 1988;26(1):88–94. doi: 10.1097/00005650-198801000-00010.

- 11. Parrino T. A. The nonvalue of retrospective peer comparison feedback in containing hospital antibiotic costs. Am J Med. 1989;86(4):442–448. doi: 10.1016/0002-9343(89)90343-4.

- 12. Kiefe C. I., Weissman N. W., Allison J. J., Farmer R., Weaver M., Williams O. D. Identifying achievable benchmarks of care: concepts and methodology. Int J Qual Health Care. 1998;10(5):443–447. doi: 10.1093/intqhc/10.5.443.

- 13. Kiefe C. I., Woolley T. W., Allison J. J., Box J. B., Craig A. S. Determining benchmarks: a data driven search for the best achievable performance. Clin Perform Qual Health Care. 1994;2(4):190–194.

- 14. Kiefe C. I., Allison J. J., Williams O. D., Person S. D., Weaver M. T., Weissman N. W. Improving quality improvement using achievable benchmarks for physician feedback: a randomized controlled trial. JAMA. 2001;285(22):2871–2879. doi: 10.1001/jama.285.22.2871.