Abstract

Background

The Accreditation Council for Graduate Medical Education has mandated multisource feedback (MSF) in the ambulatory setting for internal medicine residents. Few published reports demonstrate actual MSF results for a residency class, and fewer still include clinical quality measures and knowledge-based testing performance in the data set.

Methods

Residents participating in a year-long group practice experience called the “long-block” received MSF that included self, peer, staff, attending physician, and patient evaluations, as well as concomitant clinical quality data and knowledge-based testing scores. Residents were given a rank for each data point compared with peers in the class, and these data were reviewed with the chief resident and program director over the course of the long-block.

Results

Multisource feedback identified residents who performed well on most measures compared with their peers (10%), residents who performed poorly on most measures compared with their peers (10%), and residents who performed well on some measures and poorly on others (80%). Each high-, intermediate-, and low-performing resident had at least one aspect of the MSF that was significantly lower than the others, and this served as the basis of formative feedback during the long-block.

Conclusion

Use of multisource feedback in the ambulatory setting can identify high-, intermediate-, and low-performing residents and suggest specific formative feedback for each. More research needs to be done on the effect of such feedback, as well as the relationships between each of the components in the MSF data set.

Introduction

Recognizing that the assessment of clinical competence is multifactorial,1–4 the Accreditation Council for Graduate Medical Education (ACGME) has mandated multisource feedback (MSF) in the ambulatory setting for internal medicine residents.5 Multisource feedback, also known as a 360-degree evaluation, was initially developed by the manufacturing industry for quality improvement purposes, with a focus on individuals working in teams.6 As medical care has become increasingly team-based, MSF has become an accepted method of feedback for physician performance4,7–13 and has been used to measure interpersonal communication, professionalism, and teamwork behaviors for residency trainees in multiple settings.14–22 Yet few studies to date have combined the evaluation of the behaviors noted here with measures of residents' medical knowledge and clinical quality.

The purpose of this study was to describe the assessment and feedback procedures used at the University of Cincinnati to evaluate residents in the ambulatory setting. We were particularly interested in the rankings provided through various modalities, including self, peer, staff, attending physician, and patient evaluations, as well as clinical quality data, in-house testing, and in-training examination testing results. Relative rankings on each modality were used to guide feedback discussions with the program director and chief resident.

Methods

In 2006, the University of Cincinnati internal medicine residency program created the ambulatory long-block23 as part of the ACGME Educational Innovation Project.24 The long-block occurs from the 17th to the 29th month of residency and is a year-long continuous ambulatory group-practice experience involving a close partnership between the residency and a hospital-based clinical practice. Long-block residents follow approximately 120 to 150 patients each, have office hours 3 half-days per week on average, and are responsive to patient needs (by answering messages, refilling medications, and so forth) every day. Otherwise, long-block residents rotate on electives and research experiences with minimal overnight call.

The ambulatory team is divided into 6 mini-teams with 3 to 4 long-block residents and 1 registered nurse or licensed practical nurse per team. Additional support for the mini-teams includes a nurse practitioner, a social worker, a pharmacotherapy clinician, an anticoagulation clinician, and multiple faculty preceptors. The team uses a disease registry to track quality. Once a week, the entire team meets to review care delivery. The long-block MSF consists of self, peer, staff, attending physician, and patient evaluations, as well as clinical quality data, in-house testing, and in-training examination testing results.

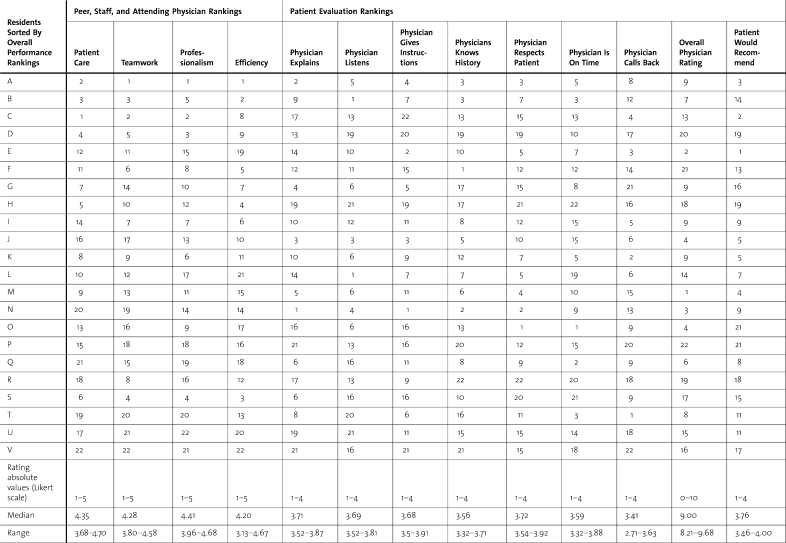

Peer, staff, and attending physician evaluations consisted of 4 questions scored on a 5-point Likert scale. Each member of the care team was asked to rate each resident on 4 dimensions: patient care, teamwork, professionalism, and efficiency. Residents received their score in comparison to the class mean and range, as well as a rank for each question. The data in table 1 represent the final evaluation for this particular long-block; they were collected from 22 residents, 13 staff members (nurses, pharmacists, social workers), and 12 attending physicians. If an evaluator did not have enough contact to rate a resident, he or she abstained. On average, each resident received 28 ratings on each dimension. Written comments were also obtained from the care team, but they are not included in this analysis.
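The per-question feedback described above (a score set against the class mean and range, plus a rank) can be sketched in a few lines. This is an illustrative sketch only, not the program's actual tooling: the function name `summarize_dimension`, the tie-handling rule (tied residents share the better rank), and the sample ratings are all assumptions and are not study data.

```python
from statistics import mean


def summarize_dimension(scores):
    """Summarize one rated dimension (for example, teamwork) for a class.

    `scores` maps a resident label to that resident's average Likert rating.
    Returns the class mean, the class range, and a 1-based rank per resident.
    Assumption: tied residents share the better (lower) rank.
    """
    values = list(scores.values())
    ranks = {
        resident: 1 + sum(other > score for other in values)
        for resident, score in scores.items()
    }
    return {
        "mean": mean(values),
        "range": (min(values), max(values)),
        "ranks": ranks,
    }


# Hypothetical teamwork ratings on a 5-point scale (not study data).
teamwork = {"A": 4.8, "B": 4.6, "C": 4.6, "D": 4.1}
summary = summarize_dimension(teamwork)
print(summary["ranks"])
```

A resident's report would then pair his or her own score with `summary["mean"]`, `summary["range"]`, and the entry in `summary["ranks"]`.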

Table 1.

Resident Rankings for Peer, Staff, Attending Physician, and Patient Evaluations

Patient evaluations were collected immediately after a clinical encounter using a 10-question adaptation of the Consumer Assessment of Health Plans Study (Clinician and Group Survey–Adult Primary Care)25 (table 1). Patients indicated how often they had seen the physician they were rating (the average for this class was 4 visits by the time of the evaluation) and then they answered 9 questions using a 4-, 5-, or 10-point Likert scale. For this analysis, residents were evaluated by an average of 24 patients each.

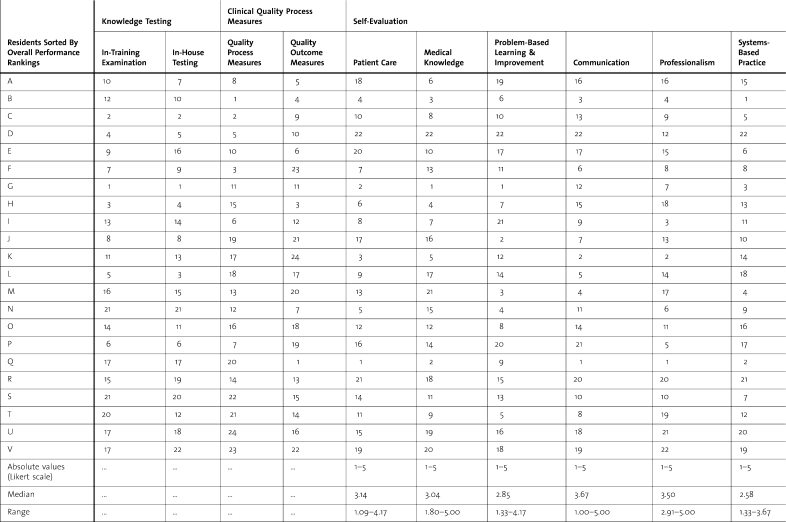

Quality data (table 2) were collected using a disease registry. The main clinical data were drawn from the diabetes physician recognition program of the National Committee for Quality Assurance.26 Residents reviewed their personal data monthly and received absolute values on each measure as well as ranks in comparison to their peers and the group as a whole. Residents also received composite rankings on overall outcome measures (A1c, low-density lipoprotein, and blood pressure) and process measures (smoking status documentation, eye examinations, foot examinations, nephropathy checks, and total patient load). Long-block residents managed 35 diabetic patients on average.

Table 2.

Resident Rankings for Knowledge-Based Testing, Clinical Quality, and Self-Evaluation

Information regarding testing came from 2 main sources. The first was the score on the in-training examination taken before the long-block. The second score came from a series of board-preparatory examinations taken over the course of the long-block. These tests covered the core of internal medicine as well as the main subspecialties. Residents received their scores in comparison to the class mean and range, as well as a rank for each test (table 2).

The self-evaluation was given at the start of the long-block and consisted of 45 questions encompassing the 6 core ACGME competencies.27 Each question was scored on a 5-point Likert scale with 1 being an “area where I know that I need improvement,” 3 being an “area where I think that I perform adequately,” and 5 being an “area where I think that I am very skilled.” An average score for each core competency self-evaluation section was generated, and then this score was compared to the group as a whole (table 2). Residents received their score in comparison to the class mean and range, as well as a rank for each section.

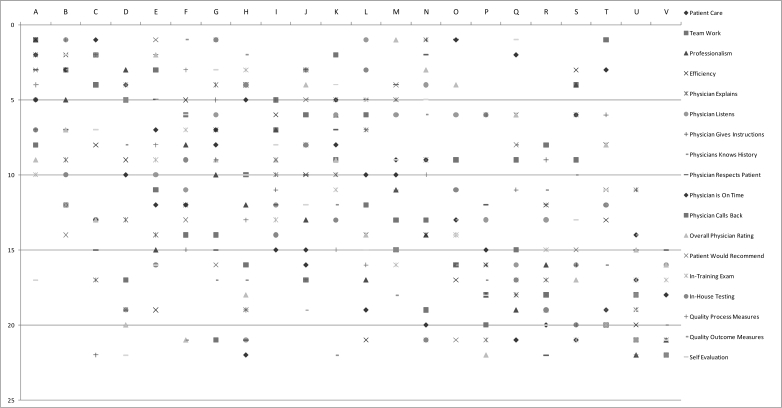

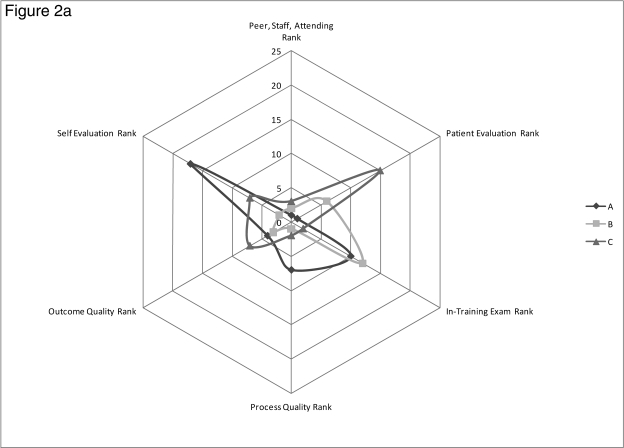

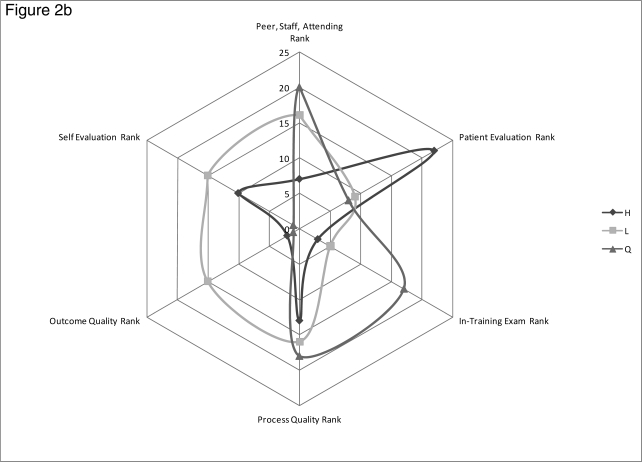

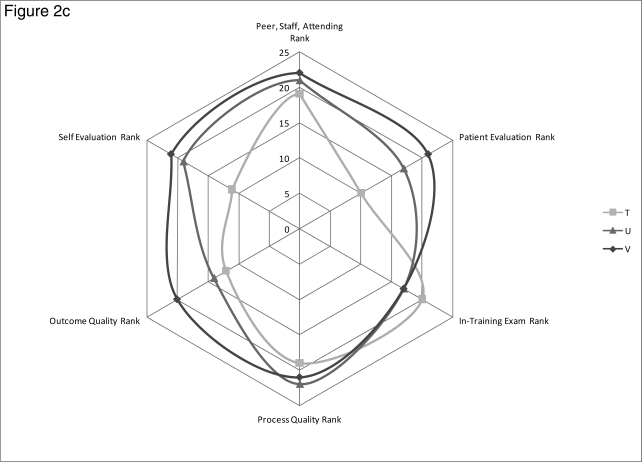

Table 1 displays the ranking data for each resident in the long-block class for peer, staff, attending physician, and patient evaluations. Table 2 contains the ranking data for knowledge-based testing, clinical quality measures, and the self-evaluations. For both tables the Likert scale and class median and ranges are shown where appropriate. For this analysis, residents were sorted by overall performance rank by summing the peer, staff, attending physician, patient, testing, and quality ranks, with resident A being the highest rated and resident V being the lowest rated (tables 1 and 2). Specific rankings are out of 22 for each measure except for the quality measures, which are out of 24 because the data for 2 faculty members were included in the quality data set. We calculated Cronbach alpha for the 360-degree evaluations, the patient evaluations, and the self-evaluations and obtained values of .84, .92, and .89, respectively. Figure 1 displays the ranking data from both tables in graphic form. Each column represents a specific resident (A through V). Data points closer to the top of the figure indicate higher relative rankings for a given evaluation entity than data points at the bottom of the figure. Data were also placed into radar graphs (figure 2) to display and compare MSF components for individual residents. Data points closer to the center of the graph represent higher rankings than data points near the edge.
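The two calculations named in this paragraph, ordering residents by summed ranks and computing Cronbach alpha, might look roughly like the sketch below. The text does not say which software or tie-breaking rule was used, so the function names, the alphabetical tie-break, and all sample values are assumptions. The alpha function uses the standard formula, alpha = k/(k-1) × (1 − sum of item variances / variance of total scores).

```python
from statistics import variance


def overall_order(rank_tables):
    """Order residents by the sum of their ranks across modalities.

    `rank_tables` maps a modality name to a {resident: rank} dictionary.
    The lowest rank sum comes first. Assumption: ties in the sum are
    broken alphabetically by resident label.
    """
    totals = {}
    for ranks in rank_tables.values():
        for resident, rank in ranks.items():
            totals[resident] = totals.get(resident, 0) + rank
    order = sorted(totals, key=lambda resident: (totals[resident], resident))
    return order, totals


def cronbach_alpha(item_scores):
    """Cronbach alpha for a list of respondents, each a list of item scores."""
    k = len(item_scores[0])
    item_vars = [variance([row[i] for row in item_scores]) for i in range(k)]
    total_var = variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical ranks for three residents on three modalities (not study data).
rank_tables = {
    "peer_staff_attending": {"A": 1, "B": 2, "C": 3},
    "patient": {"A": 2, "B": 1, "C": 3},
    "testing": {"A": 1, "B": 3, "C": 2},
}
order, totals = overall_order(rank_tables)

# Hypothetical two-item questionnaire whose items move together perfectly.
alpha = cronbach_alpha([[1, 2], [2, 3], [3, 4]])
print(order, totals, alpha)
```

Summing ranks weights every modality equally. Other weightings are possible, but the text describes only the simple sum.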

Figure 1.

Spread of Multisource Feedback Data for Each Resident

Each column represents a specific resident (A through V). Data points closer to the top of the figure indicate higher relative rankings for a given evaluation entity than data points at the bottom of the figure.

Figure 2.

Radar Graphs for Overall Rankings for Each of the Major Multisource Feedback Categories

A, Ratings for residents A through C. B, Ratings for residents H, L, and Q. C, Ratings for residents T through V. Data points closer to the center of the graph represent higher rankings than data points closer to the edge.

Residents met with the program director and chief resident in the 4th and 11th months of the long-block to review the global MSF data. Residents were asked to reflect on the relative rankings in each data set and develop a learning plan to improve any deficits. The program director and chief resident used the data to guide their specific formative feedback. If problems were identified in any of the evaluation areas before these meetings, ad hoc conferences were convened.

The study received a waiver from the University of Cincinnati institutional review board.

Results

The MSF identified residents who performed well on most measures compared with their peers (residents A and B), residents who performed poorly on most measures compared with their peers (residents U and V), and residents who performed well on some measures and poorly on others (residents C through T) (tables 1 and 2, figure 1). Each relatively high-, intermediate-, and low-performing resident had at least one aspect of the MSF that was significantly lower than the others.

Examples of focused feedback for relatively higher rated residents included:

Resident A (figure 2a) scored well in each of the performance categories, but had a low self-evaluation rating. This prompted a discussion regarding stress levels and making sure this resident's internal monologue was not excessively negative.

Resident B (figure 2a) scored highly in every category except the in-training examination, leading to a plan for improved studying even though test scores were above average.

Resident C (figure 2a) scored highly in most categories, but was rated lower by patients when compared with peers. This led to a review of the specific components of the patient evaluation that were lower (table 2–physician explains and physician gives instructions) and a plan for each.

Residents in the middle of the class received similar reviews and developed specific plans based on the data. Examples include:

Resident H (figure 2b) scored highly in the peer/staff/attending physician efficiency category (table 1) and on test scores but was rated last in the class on overall patient evaluations. This led to a reflection that efficiency as seen by the care team could be negatively perceived by patients.

Resident L (figure 2b) ranked first in the physician listens portion of the patient evaluation (table 2), but 19th in the physician is on time category (table 2) and 21st in the efficiency section of the peer/staff/attending physician evaluation (table 1). This prompted a discussion of the potential tradeoffs of patient care: more time spent with patients could have potentially negative consequences on coworker perception within the practice.

Resident Q's self-evaluation scores were first overall, first in knowledge, and second in patient care, but actual performance was 16th overall, 17th in knowledge, and 20th in patient care (figure 2b). This led the resident to reflect on calibrating self-assessment and performance. Other examples of significant discordance in MSF domains include resident N (2nd in patient evaluations, 18th in peer/staff/attending physician evaluation, and 21st in testing) and resident S (4th in peer/staff/attending physician evaluation, 16th in patient evaluation, and 20th in overall clinical quality). The program director used these data to develop specific plans of reflection and action.
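The discordance between self-assessed and observed rank described for resident Q can be expressed as a simple rank gap. The sketch below uses the ranks quoted in the text for resident Q. The function name `rank_gaps` and the flagging threshold of 10 places are hypothetical choices for illustration and were not part of the study.

```python
def rank_gaps(self_ranks, observed_ranks):
    """Return observed rank minus self-evaluation rank for each shared domain.

    A positive gap means the resident ranked higher on self-evaluation
    than on observed performance.
    """
    return {
        domain: observed_ranks[domain] - self_ranks[domain]
        for domain in self_ranks
        if domain in observed_ranks
    }


# Ranks reported in the text for resident Q (out of 22).
resident_q_self = {"overall": 1, "knowledge": 1, "patient care": 2}
resident_q_observed = {"overall": 16, "knowledge": 17, "patient care": 20}

gaps = rank_gaps(resident_q_self, resident_q_observed)

# Hypothetical threshold: flag domains where the ranks differ by 10 or more.
DISCORDANCE_THRESHOLD = 10
flagged = [d for d, gap in gaps.items() if abs(gap) >= DISCORDANCE_THRESHOLD]
print(gaps, flagged)
```

The same gap could be computed between any two modalities, such as patient evaluations and peer/staff/attending physician evaluations for residents N and S.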

Relatively lower-performing residents (residents T, U, and V, figure 2c) were ranked lower in almost every category, and the MSF identified a number of opportunities for improvement.

Discussion

When assessing the performance of a class of 22 residents, one might expect to encounter consistency within the performance measure outcomes. One possible pattern would have been for higher-performing residents to rank higher on most measures and lower-performing residents to rank lower on most measures (eg, resident A is ranked highest in most categories, resident B is ranked next in most categories, resident C is ranked third in most, and so forth). Instead, our data show a more heterogeneous set of scores for each resident on the various components of the MSF. Although residents A and B are ranked higher in many components in the MSF and residents U and V are ranked lower, the majority of residents received both high and low rankings. The variation in ranking of the components of the MSF allows for specific, high-value formative feedback for each resident. Inclusion of clinical quality data and testing scores added an important dimension to the MSF. Limiting the evaluation to a single modality or only the behavioral components of a typical 360-degree evaluation (communication, professionalism, and teamwork behaviors) would have missed significant feedback opportunities for many residents.

Single end-of-rotation rating forms typically offer few high-value formative feedback opportunities for residents. Raters often use a global impression of the resident, creating a halo effect that fails to distinguish different dimensions of clinical care.28,29 This problem exists for poorer performing residents but may actually be accentuated for higher performing residents; stellar evaluations often suggest that the resident should “keep on doing what they are doing” and little else. Use of MSF allows for focused, improvement-oriented feedback for even the highest rated residents. Although there could have been a halo effect for single raters on each type of rating instrument, we did not see this across rating instruments.

This study has several limitations. First, the extensive MSF detailed here was completed for one residency class within a unique ambulatory educational structure and may not be generalizable to other residencies. We included collection of feedback directly in the work flow of our long-block redesign, and the continuous contact between residents, staff, and patients made it relatively easy to collect a significant amount of feedback. Residencies with other ambulatory structures may find this degree of data collection unsustainable. However, use of MSF that includes the behavioral components of a typical 360-degree evaluation as well as clinical quality measures and test scores is generalizable. Second, most of the evaluation tools, with the exception of the Consumer Assessment of Health Plans Study questions and the in-training examination, have not been validated in research studies. However, regarding the peer, staff, attending physician, and patient evaluations, studies have shown an acceptable generalizability coefficient, with a minimum of 25 patient and 10 peer evaluations,4 that we were able to achieve for each resident. Third, the rankings presented in the tables represent normative comparisons and not absolute values. It is possible that this residency class as a whole could compare favorably or unfavorably to another residency class, illustrating the danger of using normative comparisons as the standard of measurement. However, the clinical quality measurement for the class as a whole bested many national benchmarks. In addition, the absolute value medians and ranges of the peer, staff, attending physician, and patient ratings were quite high overall, and it is not clear what the minimum rating should be for clinical competence. Rankings were therefore used to identify relative weaknesses in resident performance and to guide improvement strategies. Fourth, we did not show results of the feedback generated by the MSF data. The long-block lasts only 1 year. It takes time for meaningful data to be generated, and the most important evaluation period occurred in the 11th month of the long-block. However, both the residents and program director thought that these evaluations were among the most complete and constructive used in the residency program. Plans are underway to better evaluate resident progress while on the long-block and after returning to the inpatient services.

Conclusion

An ambulatory practice MSF system that includes self, peer, staff, attending physician, and patient evaluations, as well as corresponding clinical quality data and knowledge-based testing performance can identify relatively high-, intermediate-, and low-performing residents, and suggest high-value focused formative feedback for each. More research needs to be done on the effect of such feedback, as well as the relationships between each of the components in the MSF data set.

Footnotes

All authors are at the University of Cincinnati Academic Health Center. Eric J. Warm, MD, is Associate Professor and Program Director, Department of Internal Medicine; Daniel Schauer, MD, is Assistant Professor, Department of Internal Medicine; Brian Revis, MD, is Chief Resident, Department of Internal Medicine; and James R. Boex, PhD, MBA, is Professor, Department of Medical Education.

References

- 1. Holmboe E. S., Hawkins R. E. Methods for evaluating the clinical competence of residents in internal medicine: a review. Ann Intern Med. 1998;129:42–48. doi: 10.7326/0003-4819-129-1-199807010-00011.

- 2. Holmboe E. S., Rodak W., Mills G., McFarlane M. J., Schultz H. J. Outcomes-based evaluation in resident education: creating systems and structured portfolios. Am J Med. 2006;119:708–714. doi: 10.1016/j.amjmed.2006.05.031.

- 3. Chaudhry S. I., Holmboe E., Beasley B. W. The state of evaluation in internal medicine residency. J Gen Intern Med. 2008;23:1010–1015. doi: 10.1007/s11606-008-0578-0.

- 4. Lipner R. S., Blank L. L., Leas B. F., Fortna G. S. The value of patient and peer ratings in recertification. Acad Med. 2002;77(suppl 10):S64–S66. doi: 10.1097/00001888-200210001-00021.

- 5. Accreditation Council for Graduate Medical Education. ACGME program requirements for graduate medical education in internal medicine. Available at: http://www.acgme.org/acWebsite/downloads/RRC_progReq/140_internal_medicine_07012009.pdf. Accessed November 29, 2009.

- 6. Lockyer J. Multisource feedback in the assessment of physician competencies. J Contin Educ Health Prof. 2003;23:4–12. doi: 10.1002/chp.1340230103.

- 7. Connors G., Munro T. W. 360-degree physician evaluations. Healthc Exec. 2001;16:58–59.

- 8. Hall W., Violato C., Lewkonia R., et al. Assessment of physician performance in Alberta: the physician achievement review. CMAJ. 1999;161:52–57.

- 9. Violato C., Marini A., Toews J., Lockyer J., Fidler H. Feasibility and psychometric properties of using peers, consulting physicians, co-workers, and patients to assess physicians. Acad Med. 1997;72(10 suppl 1):S82–S84. doi: 10.1097/00001888-199710001-00028.

- 10. Ramsey P. G., Wenrich M. D., Carline J. D., Inui T. S., Larson E. B., LoGerfo J. P. Use of peer ratings to evaluate physician performance. JAMA. 1993;269:1655–1660.

- 11. Wenrich M. D., Carline J. D., Giles L. M., Ramsey P. G. Ratings of the performances of practicing internists by hospital-based registered nurses. Acad Med. 1993;68:680–687. doi: 10.1097/00001888-199309000-00014.

- 12. Violato C., Lockyer J., Fidler H. Multisource feedback: a method of assessing surgical practice. BMJ. 2003;326:546–548. doi: 10.1136/bmj.326.7388.546.

- 13. Fidler H., Lockyer J. M., Toews J., Violato C. Changing physicians' practices: the effect of individual feedback. Acad Med. 1999;74:702–714. doi: 10.1097/00001888-199906000-00019.

- 14. Thomas P. A., Gebo K. A., Hellmann D. B. A pilot study of peer review in residency training. J Gen Intern Med. 1999;14:551–554. doi: 10.1046/j.1525-1497.1999.10148.x.

- 15. Musick D. W., McDowell S. M., Clark N., Salcido R. Pilot study of a 360-degree assessment instrument for physical medicine & rehabilitation residency programs. Am J Phys Med Rehabil. 2003;82:394–402. doi: 10.1097/01.PHM.0000064737.97937.45.

- 16. Duffy F. D., Gordon G. H., Whelan G., et al. Assessing competence in communication and interpersonal skills: the Kalamazoo II report. Acad Med. 2004;79:495–507. doi: 10.1097/00001888-200406000-00002.

- 17. Davis J. D. Comparison of faculty, peer, self, and nurse assessment of obstetrics and gynecology residents. Obstet Gynecol. 2002;99:647–651. doi: 10.1016/s0029-7844(02)01658-7.

- 18. Higgins R. S., Bridges J., Burke J. M., O'Donnell M. A., Cohen N. M., Wilkes S. B. Implementing the ACGME general competencies in a cardiothoracic surgery residency program using 360-degree feedback. Ann Thorac Surg. 2004;77:12–17. doi: 10.1016/j.athoracsur.2003.09.075.

- 19. Davies H., Archer J., Southgate L., Norcini J. Initial evaluation of the first year of the foundation assessment programme. Med Educ. 2009;43:74–81. doi: 10.1111/j.1365-2923.2008.03249.x.

- 20. Archer J., Norcini J., Southgate L., Heard S., Davies H. Mini-PAT (peer assessment tool): a valid component of a national assessment programme in the UK? Adv Health Sci Educ Theory Pract. 2008;13:181–192. doi: 10.1007/s10459-006-9033-3.

- 21. Wood J., Collins J., Burnside E. S., et al. Patient, faculty, and self-assessment of radiology resident performance: a 360-degree method of measuring professionalism and interpersonal/communication skills. Acad Radiol. 2004;11:931–939. doi: 10.1016/j.acra.2004.04.016.

- 22. Archer J. C., Norcini J., Davies H. A. Use of SPRAT for peer review of paediatricians in training. BMJ. 2005;330:1251–1253. doi: 10.1136/bmj.38447.610451.8F.

- 23. Warm E. J., Schauer D. P., Diers T., et al. The ambulatory long-block: an accreditation council for graduate medical education (ACGME) educational innovations project (EIP). J Gen Intern Med. 2008;23:921–926. doi: 10.1007/s11606-008-0588-y.

- 24. Residency Review Committee for Internal Medicine. Educational innovation project; 2005. Available at: http://www.acgme.org/acWebsite/RRC_140/140_EIPindex.asp. Accessed March 15, 2008.

- 25. Hargraves J. L., Hays R. D., Cleary P. D. Psychometric properties of the consumer assessment of health plans study (CAHPS) 2.0 adult core survey. Health Serv Res. 2003;38:1509–1527. doi: 10.1111/j.1475-6773.2003.00190.x.

- 26. National Committee for Quality Assurance. Diabetes recognition program. Available at: http://www.ncqa.org/tabid/139/Default.aspx. Accessed November 29, 2009.

- 27. Accreditation Council for Graduate Medical Education. ACGME Outcome Project. Available at: http://www.acgme.org/outcome/comp/compMin.asp. Accessed March 15, 2008.

- 28. Haber R. J., Avins A. L. Do ratings on the American Board of Internal Medicine resident evaluation form detect differences in clinical competence? J Gen Intern Med. 1994;9:140–145. doi: 10.1007/BF02600028.

- 29. Silber C. G., Nasca T. J., Paskin D. L., Eiger G., Robeson M., Veloski J. J. Do global rating forms enable program directors to assess the ACGME competencies? Acad Med. 2004;79:549–556. doi: 10.1097/00001888-200406000-00010.