Abstract

Songbirds, which, like humans, learn complex vocalizations, provide an excellent model for the study of acoustic pattern recognition. Here we examined the role of three basic acoustic parameters in an ethologically relevant categorization task. Female zebra finches were first trained to classify songs as belonging to one of two males and then asked whether they could generalize this knowledge to songs systematically altered with respect to frequency, timing, or intensity. Birds' performance on song categorization fell off rapidly when songs were altered in frequency or intensity, but they generalized well to songs that were changed in duration by >25%. Birds were not deaf to timing changes, however; they detected these tempo alterations when asked to discriminate between the same song played back at two different speeds. In addition, when birds were retrained with songs at many intensities, they could correctly categorize songs over a wide range of volumes. Thus although they can detect all these cues, birds attend less to tempo than to frequency or intensity cues during song categorization. These results are unexpected for several reasons: zebra finches normally encounter a wide range of song volumes but most failed to generalize across volumes in this task; males produce only slight variations in tempo, but females generalized widely over changes in song duration; and all three acoustic parameters are critical for auditory neurons. Thus behavioral data place surprising constraints on the relationship between previous experience, behavioral task, neural responses, and perception. We discuss implications for models of auditory pattern recognition.

INTRODUCTION

How our brains allow us to recognize words, faces, and other patterns is an outstanding problem in neuroscience (Jusczyk and Luce 2002; Kourtzi and DiCarlo 2006). Pattern recognition is difficult because every stimulus is unique. To understand speech, for example, our brains must be able to discount variations in volume, prosody, and accent irrelevant to a word's meaning and to locate differences between words essential to meaning. How does the brain achieve these two tasks? One model (Cadieu et al. 2007; Serre et al. 2007; Sugrue et al. 2005) is that it transforms the stimulus to emphasize the most important differences, then classifies these representations according to some set of rules.

While neural representations of complex stimuli have been studied extensively, the relationship between these representations and classification behavior remains much less well understood. Many physiological studies assume that animals classify stimuli as we do (Hung et al. 2005; Kreiman et al. 2006) or explicitly train animals on categories chosen by experimenters (Freedman et al. 2001). Finally, many investigations have focused on the visual system, using patterns that are relatively stable over time. In contrast, acoustic patterns vary in time, leading to distinct temporal activity patterns. While several studies have demonstrated that these temporal patterns of spiking carry significant auditory information (Narayan et al. 2006), their role in acoustic pattern recognition has not been tested behaviorally.

Songbirds provide a useful model for studying the relationship between neural representations and acoustic pattern recognition. Like humans, songbirds recognize both groups and individuals (Appeltants et al. 2006; Becker 1982; Brooks and Falls 1975a,b; Burt et al. 2000; Gentner et al. 2000; D. B. Miller 1979; E. H. Miller 1982; Nelson 1989; Nelson and Marler 1989; Prather et al. 2009; Scharff et al. 1998; Stripling et al. 2003; Vignal et al. 2004, 2008) on the basis of their learned vocalizations. A bird's song is a high-dimensional stimulus, not unlike a human voice, with structure that can be parameterized in many ways. Although a bird's song is recognizable, each rendition is different from the last: its volume will depend on the distance between singer and listener; background noises (often other songs) will corrupt the signal (Zann 1996); and the pitch and duration of individual notes will vary slightly (Glaze and Troyer 2006; Kao and Brainard 2006). To recognize a bird by his song, then, females must be able both to discriminate between the songs of different individuals and to ignore the variations in a single bird's song.

The neural representation of songs, particularly in the primary auditory area field L (Fortune and Margoliash 1992, 1995; Vates et al. 1996), has been studied in detail (Nagel and Doupe 2006, 2008; Narayan et al. 2006; Sen et al. 2001; Theunissen et al. 2000; Wang et al. 2007; Woolley et al. 2005, 2006, 2009). Most field L neurons are highly tuned in frequency, time, or both (Nagel and Doupe 2008; Woolley et al. 2009). In addition, field L responses change significantly with mean volume (Nagel and Doupe 2006, 2008). The behavioral limits of song recognition have received comparatively little attention, however. How much variation in pitch or duration can birds tolerate before a song becomes unrecognizable? Over what range of volumes are birds able to discriminate songs of different individuals? These questions are critical to understanding how the representation of song features or parameters by neurons in the zebra finch brain is related to birds' perception of these features and their behavioral responses to song.

We used generalization of a learned discrimination (Beecher et al. 1994; Gentner and Hulse 1998) as a model paradigm for studying song recognition. In this operant task, a bird is taught to categorize two sets of songs obtained from two different conspecific individuals. The birds can use any of the parameters available in the training set to make the categorization. In this sense, the task differs greatly from other psychophysical tasks in which a single stimulus parameter is varied, and the subject is therefore intentionally focused on this parameter. After learning the categorization task, birds continue to perform but are intermittently tested using novel “probe” stimuli. Probe stimulus sets contain song renditions modified along a single parameter. How the bird categorizes these altered songs can thus reveal which aspects of the original songs are used for categorization. Probe stimuli are rewarded without respect to the bird's choice, ensuring that the bird cannot learn the “correct” answer for these stimuli from the reward pattern. Performance significantly above chance on a probe stimulus is considered evidence for “generalization” to that probe, i.e., the altered stimulus still falls within the perceptual category defined by the bird in the task.

Here we used this generalization paradigm to investigate the role of three simple parameters in behavioral song recognition: pitch (fundamental frequency), duration, and intensity. We chose these parameters because frequency, temporal, and intensity selectivity are the parameters most frequently used to characterize neural responses to sound; thus we hoped that this behavioral study would provide a basis for comparing neural and behavioral responses to complex ethologically relevant stimuli. We found that birds trained to classify songs did not generalize well to pitch-shifted songs but were surprisingly robust in their categorization of songs with altered duration. Moreover, most birds generalized only to volumes within 20–40 dB of the training stimuli. However, when explicitly trained to do so, birds could discriminate songs with different durations and correctly classify songs over a 50 dB volume range. Together these data suggest that birds use different aspects of the neural representation of song to solve different tasks and that behavioral experiments are necessary to determine how animals categorize stimuli in different contexts. By studying behavioral categorization in animal models like songbirds, where neural representations can be well-characterized, we may gain insights into how human brains perform pattern recognition.

METHODS

Behavioral task and training

We trained adult female zebra finches (n = 21) to perform a classification task in a two-alternative forced choice paradigm. Of these, 10 were trained to classify the songs of two unfamiliar conspecific males played with a mean amplitude of 73 dB SPL, 5 were trained to classify the same songs played at 30 dB SPL, and 6 were trained to discriminate compressed from expanded songs from the same male. Training took place in custom-built operant cages (Lazlo Bocskai, Ken McGary, University of California at San Francisco) and was controlled using a custom-written Matlab program that interfaced with a TDT RP2 or RX8 board (Tucker Davis Technologies, Alachua, FL). Playback of songs was made from small bookshelf speakers (Bose, Framingham, MA). Intensity of playback was calibrated using pure tones and a calibrated microphone (Brüel and Kjaer, Naerum, Denmark).

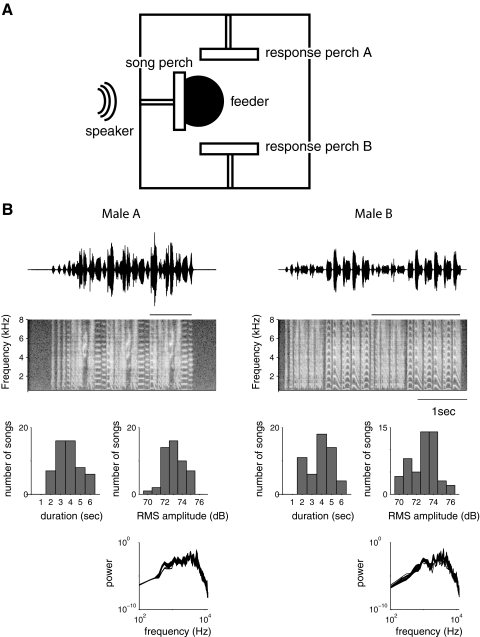

A trial began when the female hopped on a central “song perch” that faced a speaker (Fig. 1A). Following this hop, the computer selected a stimulus from a database of songs from two individuals, described in detail in the following text. The female had to decide which male the song belonged to and to report her choice by hopping on one of two response perches, located to the right (for male A) and to the left (for male B) of the song perch. If she classified the song correctly, a feeder located under the song perch was raised for 2–5 s, allowing her access to food. If she classified the song incorrectly, no new trials could be initiated for a time-out period of 20–30 s. Females had ≤6 s after song playback ended to make a response and were allowed to respond at any point during song playback (see Fig. 1B for song durations); playback was halted as soon as a response was made. If no response was made within 6 s of the song ending, the trial was scored as having no response, and a new trial could be initiated by hopping again on the song perch.
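The trial rules above can be summarized as a small scoring function. This is a minimal Python sketch, not the authors' Matlab program: the names are hypothetical, and drawing the feeder and time-out durations uniformly from the stated 2–5 s and 20–30 s ranges is an assumption, since the text gives only the ranges.

```python
import random
from dataclasses import dataclass
from typing import Optional

RESPONSE_WINDOW_S = 6.0  # responses allowed until 6 s after the song ends


@dataclass
class TrialResult:
    outcome: str      # "correct", "incorrect" or "no_response"
    feeder_s: float   # time the feeder stays raised (0 if no reward)
    timeout_s: float  # time during which no new trial can be started


def score_training_trial(song_male: str, song_end_s: float,
                         perch: Optional[str], response_s: Optional[float],
                         rng: random.Random) -> TrialResult:
    """Score one training trial (hypothetical helper).

    `perch` is "A" (right perch), "B" (left perch) or None. Times are measured
    from the song-perch hop; responses during playback are allowed, and
    playback would be halted at that moment.
    """
    if perch is None or response_s is None or response_s > song_end_s + RESPONSE_WINDOW_S:
        return TrialResult("no_response", 0.0, 0.0)
    if perch == song_male:
        # Assumption: feeder duration drawn uniformly from the 2-5 s range.
        return TrialResult("correct", rng.uniform(2.0, 5.0), 0.0)
    # Assumption: time-out duration drawn uniformly from the 20-30 s range.
    return TrialResult("incorrect", 0.0, rng.uniform(20.0, 30.0))
```

For example, a hop on the A perch 1.9 s into a 3 s song from male A scores as correct, whereas a hop 9.5 s into the same trial falls outside the 6 s window and scores as no response.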

Fig. 1.

An operant discrimination task to study song categorization. A: diagram of the operant cage seen from above. Three perches are located on three sides of the cage. After a hop on the central perch, the computer plays a song from the speaker located directly in front of it. The bird can respond by hopping on either of the 2 response perches. After a correct answer, a feeder (2 in diam, 0.5 in deep, located 0.5–0.75 in beneath the cage) is raised to be flush with the cage floor for 2–5 s, allowing the bird access to seed. After an incorrect answer, all perches cease to function for a 20–30 s time-out. B: songs used for training. The goal of our study was to train birds to associate each response perch with the songs of 1 individual. Example songs from the 2 individuals chosen are shown here both as oscillograms (sound pressure vs. time, top) and spectrograms (frequency vs. time, 2nd row). Each song is composed of several repeated motifs; in each song, 1 motif is indicated by the bar over the spectrograms. The 2 individuals differ in the temporal pattern (oscillogram) and frequency content (spectrogram) of their syllables. Fifty songs from each individual were used in training to avoid over-training on a single song rendition. The distribution of overall song durations, mean (RMS) amplitudes, and power spectra are similar for the 2 individuals as shown (bottom).

Birds required 1–2 wk of training to acquire this task. Training consisted of four stages. In “song mode,” the female was habituated to the operant cage. Food was freely available. Hopping on the song perch produced a song drawn at random from a separate habituation database, which contained no songs from the two individuals used for classification trials. The habituation database included 28 song renditions from each of four zebra finches, for a total of 112 stimuli. In “food mode,” food was restricted, and the female had to hop on one of the two response perches to raise the feeder. The song perch continued to produce songs from the habituation database. Food and song could be procured independently. In “sequence mode,” the female had to hop first on the song perch, then on either of the two side perches, within 6 s, to receive a reward. Finally, in “discrimination mode,” the female began classification trials. The habituation song set was replaced with two sets of training songs, and trials were rewarded (with food) or punished (with a 20–30 s time-out) depending on which response perch the female chose.

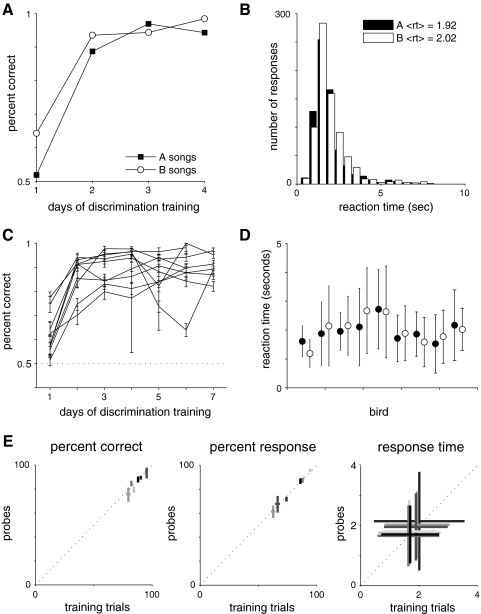

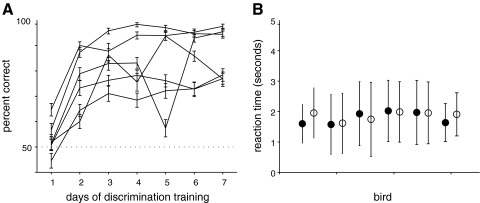

Birds spent 1 or 2 days on each of the first three training stages (song, food, and sequence). Birds were moved to the next stage of training when they performed >200 hops per day (for song mode), or earned >200 rewards per day (for food and sequence mode). Females generally learned the classification task (>75% correct) within 2–5 days of beginning classification trials (Fig. 2, A and C). One female did not perform significantly above chance after three weeks of training and was removed from the study.
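The stage-advancement criteria can be stated compactly in code. This Python sketch encodes only the thresholds given in the text (>200 hops in song mode, >200 rewards in food and sequence modes, >75% correct in discrimination mode); the function names are hypothetical, and the 1–2 day minimum per stage is not modeled.

```python
STAGES = ("song", "food", "sequence", "discrimination")
DAILY_THRESHOLD = 200  # >200 hops (song mode) or >200 rewards (food, sequence modes)
CRITERION = 0.75       # classification learned at >75% correct


def next_stage(stage: str, hops_per_day: int, rewards_per_day: int) -> str:
    """Stage for the following day under the advancement rule (hypothetical helper)."""
    if stage == "song":
        count = hops_per_day
    elif stage in ("food", "sequence"):
        count = rewards_per_day
    else:
        return stage  # discrimination mode is the final stage
    if count > DAILY_THRESHOLD:
        return STAGES[STAGES.index(stage) + 1]
    return stage


def learned_task(n_correct: int, n_responses: int) -> bool:
    """True once the fraction of correct responses exceeds the criterion."""
    return n_responses > 0 and n_correct / n_responses > CRITERION
```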

Fig. 2.

Learning and generalization of the song categorization task. A: learning curve for 1 individual showing percent of correct responses on A (■) and B (□) songs as a function of the number of days of discrimination training. By the 2nd day of training, this bird performed well above chance on both stimulus types. This bird had 9 days of pretraining (song, food, and sequence modes, as described in methods) before beginning discrimination trials. B: distribution of reaction times (after training) for the bird in A. The mean reaction times were 1.92 s for A songs and 2 s for B songs. This indicates that the bird generally heard ≥1 full motif of the song but usually responded before the stimulus had played to completion (Fig. 1B, 2–6 s overall duration). C: learning curves for 9 trained birds. Means ± SD of percent correct are shown for each day. The dotted line at 0.5 represents chance behavior. All show greater than chance performance by the second day of training. D: mean and SD of reaction time for all A (●) and B (○) songs for each bird. E: responses to novel renditions of the same 2 birds' songs were used as a control to test whether birds had learned to associate the perch with an individual rather than simply memorizing a particular set of acoustic signals. Novel renditions were presented as probes, meaning that they occurred with low probability, and were rewarded at a fixed rate regardless of the birds' response (see methods). Left: the mean and 95% confidence intervals on the percent of correct responses for novel probe songs (y axis) vs. training songs (x axis). Middle: the mean and 95% confidence intervals for the percent of responses to these 2 types of stimuli. Right: the mean and SD of reaction time. In all cases, responses to novel renditions of the birds' songs were not significantly different from responses to the songs used in training.

Females occasionally developed a bias in which they classified most of one individual's songs correctly (>90% correct) but performed more poorly (70–90% correct) on songs from the other individual. When this happened, we altered the percent of correct trials that received a reward so that the preferred perch was rewarded at a lower rate (70–90%). This procedure was usually successful at correcting large biases in performance, and reward rates were equalized over 1–3 subsequent days. Of 21 birds used in the study, 12 developed occasional biases lasting 1–3 days. Data from days on which the two perches were rewarded at different rates were not used for analysis.

In addition to this manual correction, the computer automatically adjusted the rate of song presentation to play more songs associated with the nonpreferred perch. At the beginning of a session, the computer formed a list of all songs in the database. When a song was played and elicited a response, it was removed from this list. Songs that did not receive a response remained on the list. Songs were drawn from this list until all songs in the database had elicited a response. At the end of such a “block,” the computer calculated the percent correct for “A” songs and “B” songs over the last 25 responses to each type of song. If these percentages were unequal, it randomly removed A or B songs from the next block so that the proportion of A songs presented increased as performance on A songs fell relative to performance on B songs (and vice versa): P(next A) = P(correct on B)/[P(correct on A) + P(correct on B)].

According to this formula, if the bird's percent correct was the same for A and B songs, both sets of songs would be presented equally. However, if she got only one set of songs correct (for example, if she chose the A perch on all trials and so got all As correct and all Bs incorrect), the computer would adjust song presentation rates so that only songs associated with the nonpreferred perch were presented during the next block. Together with manual adjustment of reward rates, this adaptive song presentation scheme helped ensure that females classified both sets of songs correctly and did not develop too strong a bias toward one perch or the other.
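The adaptive block scheme can be sketched in Python as follows. The helper names are hypothetical, and two details are assumptions not stated in the text: the favored song type is kept whole while the other type is randomly subsampled, and both types are presented equally if neither has any correct responses (where the formula is undefined).

```python
import random
from typing import List, Sequence

WINDOW = 25  # responses per song type used to estimate percent correct


def recent_percent_correct(correct_flags: Sequence[bool], window: int = WINDOW) -> float:
    """Fraction correct over the last `window` responses to one song type."""
    recent = list(correct_flags)[-window:]
    return sum(recent) / len(recent) if recent else 0.0


def prob_next_a(pc_a: float, pc_b: float) -> float:
    """P(next A) = P(correct on B) / [P(correct on A) + P(correct on B)]."""
    total = pc_a + pc_b
    if total == 0:
        return 0.5  # assumption: present both types equally when nothing is correct
    return pc_b / total


def build_block(a_songs: Sequence[str], b_songs: Sequence[str],
                p_a: float, rng: random.Random) -> List[str]:
    """Randomly drop songs of the disfavored type so that A makes up ~p_a of the block."""
    a, b = list(a_songs), list(b_songs)
    if p_a >= 0.5:
        n_b = min(len(b), round(len(a) * (1 - p_a) / p_a))
        b = rng.sample(b, n_b)
    else:
        n_a = min(len(a), round(len(b) * p_a / (1 - p_a)))
        a = rng.sample(a, n_a)
    block = a + b
    rng.shuffle(block)
    return block
```

With 50 songs per male, a bird that answers every A song correctly and every B song incorrectly gets P(next A) = 0, so the next block contains only the 50 B songs; equal performance leaves all 100 songs in the block.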

Females were allowed to work continually for the duration of their 14 h day. In general, females received all of their food by performing this task. However, bird weight was monitored closely, and birds received supplemental food when their weight decreased ≥10%. Birds had free access to water throughout the experiment. Water was located on one side of the cage, next to one of the two response perches. After birds reached criterion in their discrimination behavior, the overall reward rate for correct trials was lowered to 75–95%, to increase the total number of trials birds performed each day. Whether a bird was rewarded or not on a given trial was determined randomly; no songs were specifically chosen to be rewarded or not. Correct trials that did not produce a reward did not produce punishment. All incorrect trials continued to be punished with a time-out. UCSF's Institutional Animal Care and Use Committee approved all experimental procedures.

Classification of novel and altered stimuli

Probe trials were used to test the trained females' ability to generalize the learned classification to new or altered stimuli. Probe stimuli could be novel bouts from the two males used for training or songs from the training set altered along a single parameter. Probe trials were introduced only after birds reached criterion and were performing stably at the lowered reward rate (75–95%). Probe trials were randomly interspersed between training trials at a low rate (10–15% of trials), were rewarded at a fixed rate equal to the overall reward rate (75–95%) regardless of which response perch the female chose, and were never punished. This ensured that no information about the “correct” category of the probe was contained in its reward rate and that females could not easily discriminate probe trials from normal trials on the basis of reward. Probe trials that did not receive a response were not rewarded and remained on the probe stimulus list until they elicited a response. In this task, females were considered to generalize only if their performance was above chance for both A and B probe types. To perform significantly below chance, birds would have to systematically classify A probe songs as B songs and B probe songs as A songs, because percent correct was always calculated for A and B songs taken together.
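The reward contingencies for probe and training trials after criterion can be sketched in Python. Names are hypothetical, as is the default probe rate of 0.12 (the text gives a range of 10–15%). The sketch makes explicit why reward carries no information about a probe's category: a probe is rewarded with the same probability, `reward_rate`, whichever perch is chosen, and that probability equals the rate for correct training trials.

```python
import random
from typing import List, Optional, Tuple


def is_probe_trial(rng: random.Random, probe_rate: float = 0.12) -> bool:
    """Intersperse probes at a low rate (0.12 is an assumed value within 10-15%)."""
    return rng.random() < probe_rate


def trial_consequences(is_probe: bool, choice: Optional[str], correct_choice: str,
                       reward_rate: float, rng: random.Random) -> Tuple[bool, bool]:
    """Return (rewarded, timed_out); choice is "A", "B", or None for no response."""
    if choice is None:
        return False, False  # no response: no reward and no punishment
    if is_probe:
        # Same reward probability whichever perch is chosen; probes are never punished.
        return rng.random() < reward_rate, False
    if choice == correct_choice:
        # Correct training trial: rewarded at reward_rate; unrewarded ones are not punished.
        return rng.random() < reward_rate, False
    return False, True  # incorrect training trial: time-out


def update_probe_list(probe_list: List[str], probe: str, responded: bool) -> List[str]:
    """Probes stay on the list until they elicit a response."""
    if responded:
        probe_list.remove(probe)
    return probe_list
```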

Song stimuli

The training database consisted of 100 song bouts, 50 from one male (“male A”, Fig. 1B) and 50 from another (“male B”, Fig. 1B). We used this large database to ensure that females learned to associate each perch with an individual and not with a few specific renditions of his song. Each bout consisted of one to six repeated “motifs” (the learned syllable sequence; see bars above the spectrograms in Fig. 1B) and was drawn randomly from a set of recording sessions containing both directed (sung to a female) and undirected (sung alone) song. The original song files for male A were recorded over the course of 4 days and contained 13 directed and 59 undirected bouts. For male B, 14 directed and 96 undirected bouts were recorded over the course of 2 days. Bouts were separated by ≥2 s of silence. Song recordings were made with a stationary microphone located within a normal housing cage (53 × 53 × 53 cm); males were allowed to move freely during recording. For playback, we normalized the songs such that the mean intensity over the stimulus set was the same for songs of both individuals (male A: 73.0 ± 1.1 dB RMS SPL, calculated over the total duration of the stimulus, and 91.8 ± 1.2 dB SPL max; male B: 72.7 ± 1.8 dB RMS SPL and 92.5 ± 1.8 dB SPL max) but preserved the range of variation in volume recorded at the microphone. All intensities reported in the paper are dB SPL calculated over the total duration of the stimulus. The mean song volume was chosen to approximate what a female housed with the male in a soundbox might hear. (For comparison, the average loudness of a male's song at 0.4 m, a distance larger than the cage width or the typical male-female distance during directed song, is 60–70 dB; Cynx and Gell 2004.) When necessary, bouts were cropped at the end of a motif so that the total duration was not more than 6 s. All stimuli were filtered between 250 Hz and 8 kHz (2-pole Butterworth filter). When necessary, low levels of white noise were added to the recordings to ensure that the background noise level was the same for all stimuli (37 dB SPL). The two stimulus sets thus had similar distributions of total duration, RMS volume, and overall power spectrum (Fig. 1B, bottom) but had quite distinct syllable structure and timing (Fig. 1B, top). Human listeners (lab members) could easily identify the singer of each song.

Song manipulations

We used a phase vocoder algorithm (Flanagan and Golden 1966) to independently manipulate the overall pitch and duration of training songs. To alter the duration of a song without changing its pitch, the vocoder broke it into short overlapping segments and took the Fourier transform of each segment, yielding a magnitude and phase for each frequency. We used segments of 256 samples, or 10.5 ms at 25 kHz, overlapping by 128 samples. This produced a function of time and frequency similar to the spectrogram often used to visualize an auditory stimulus (such as those shown in Fig. 1B, middle) but with a phase value as well as a magnitude for each point.

We then chose a new time scale for the song and interpolated the magnitude and phase of the signal at the new time points based on the magnitude and phase at nearby times.

Finally, the vocoder reassembled the stretched or compressed song from the interpolated spectrogram. This process yielded a song that was slower or faster than the original with largely unchanged frequency information.

To alter the pitch of a song without changing its duration, we first used the preceding algorithm to stretch or compress the song in time. We then resampled it back to its original length yielding a song with a higher (for stretched songs) or lower (for compressed songs) pitch but the same duration and temporal pattern of syllables as the original. Songs altered in pitch or duration were not re-filtered after this manipulation.
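The vocoder steps above (analysis into overlapping Fourier segments, interpolation onto a new time grid, resynthesis) and the stretch-then-resample pitch shift can be sketched in Python with NumPy. This is a minimal textbook phase vocoder, not the authors' code. Only the 256-sample segments and 128-sample overlap come from the text; the Hann window, the linear interpolation of magnitude with phase accumulated from frame-to-frame phase differences, and the linear-interpolation resampler are assumptions, and the function names are hypothetical.

```python
import numpy as np

N_FFT = 256  # segment length in samples (from the text)
HOP = 128    # 256-sample segments overlapping by 128 samples


def stft(x, n_fft=N_FFT, hop=HOP):
    """Hann-windowed short-time Fourier transform, one row per segment."""
    win = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop:i * hop + n_fft] * win for i in range(n_frames)])
    return np.fft.rfft(frames, axis=1)


def istft(S, n_fft=N_FFT, hop=HOP):
    """Weighted overlap-add resynthesis from a (frames x bins) array."""
    win = np.hanning(n_fft)
    frames = np.fft.irfft(S, n=n_fft, axis=1)
    length = n_fft + hop * (len(frames) - 1)
    out, norm = np.zeros(length), np.zeros(length)
    for i, frame in enumerate(frames):
        out[i * hop:i * hop + n_fft] += frame * win
        norm[i * hop:i * hop + n_fft] += win ** 2
    return out / np.maximum(norm, 1e-8)


def time_stretch(x, factor):
    """Change duration by `factor` (>1 = slower) while keeping pitch roughly fixed."""
    S = stft(x)
    n_frames, n_bins = S.shape
    # New time grid in units of original frames; a step of 1/factor stretches by factor.
    steps = np.arange(0, n_frames - 1, 1.0 / factor)
    # Phase advance per hop expected at each bin's center frequency.
    omega = 2 * np.pi * HOP * np.arange(n_bins) / N_FFT
    phase = np.angle(S[0])
    out = np.empty((len(steps), n_bins), dtype=complex)
    for j, t in enumerate(steps):
        i, frac = int(t), t - int(t)
        # Interpolate magnitude between the two neighboring frames...
        mag = (1 - frac) * np.abs(S[i]) + frac * np.abs(S[i + 1])
        out[j] = mag * np.exp(1j * phase)
        # ...and advance phase by each bin's measured frequency (deviation wrapped to +-pi).
        dphi = np.angle(S[i + 1]) - np.angle(S[i]) - omega
        dphi -= 2 * np.pi * np.round(dphi / (2 * np.pi))
        phase = phase + omega + dphi
    y = istft(out)
    target = int(round(len(x) * factor))
    return np.pad(y, (0, max(0, target - len(y))))[:target]


def pitch_shift(x, factor):
    """Stretch by `factor`, then resample to the original length: pitch times factor."""
    stretched = time_stretch(x, factor)
    positions = np.linspace(0, len(stretched) - 1, len(x))
    return np.interp(positions, np.arange(len(stretched)), stretched)
```

With a 128-sample hop, each frequency bin can track only frequencies within about ±fs/(2·hop) of its center (≈±98 Hz at 25 kHz), so some spectral smearing is expected; a smaller hop reduces it. As in the text, nothing is re-filtered after resampling, so upward shifts of energy near the upper band edge can alias.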

The pitch and duration of individual zebra finch notes are known to vary across song renditions. Previous analyses indicate that pitch can vary by 1–3% (≈0.01–0.04 octaves) (Kao and Brainard 2006), while duration can vary by 1–4% (Cooper and Goller 2006; Glaze and Troyer 2006). Variation within the normal range for individual birds should not affect the bird's classification of an individual's song in an operant classification task, whereas variation beyond that range might or might not, depending on the importance of the parameter to recognition and classification. We therefore created songs with durations and pitches increased and decreased by 0, 1, 2, 4, 8, 16, 32, and 64%. This resulted in durations ranging from 1/1.64 or 61% to 164% of the original song length and pitches ranging from 0.71 octaves lower to 0.71 octaves higher than the original pitch. Pitch-shifted and time-dilated songs were generated from 2 or 10 different base stimuli: 1 or 5 from the A training set and 1 or 5 from the B training stimuli. We computed the averaged responses to each manipulation of the 2 or 10 base stimuli to calculate performance as a function of pitch shift or duration change. Songs with 0% change were processed in the same way as other manipulated stimuli and were presented as probe stimuli to ensure that nothing about our manipulation process or probe stimulus presentation alone caused the birds to respond differently.
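The arithmetic relating these percent changes to octaves (log2 of the frequency ratio) can be checked with a few lines of Python. The sketch reproduces the 0.71-octave maximum shift and the 61% minimum duration, and shows that a 1–3% change corresponds to roughly 0.01–0.04 octaves. Function names are illustrative only.

```python
import math

PERCENT_CHANGES = (0, 1, 2, 4, 8, 16, 32, 64)  # values from the text


def scale_factors(percents=PERCENT_CHANGES):
    """Multiplicative factors: each increase (1 + p/100) and its reciprocal."""
    ups = [1 + p / 100 for p in percents]
    return sorted(set(ups + [1 / f for f in ups]))


def octaves(factor: float) -> float:
    """Size of a frequency ratio in octaves."""
    return math.log2(factor)
```

Rounding `octaves(1 + p / 100)` to two decimals for each value in `PERCENT_CHANGES` gives 0, 0.01, 0.03, 0.06, 0.11, 0.21, 0.40, and 0.71 octaves, and `scale_factors()` yields 15 distinct factors because the 0% condition is shared by the up and down series.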

The loudness or volume of song stimuli was adjusted by scaling the digital representation of the song before outputting it to the speaker. Because this scaling applied equally to the song and to the background noise in each recording, baseline noise levels in the presented songs remained consistently 46 dB below the mean amplitude of the song.
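Digital level changes follow the standard decibel relation for amplitude, gain = 10^(ΔdB/20). The Python/NumPy sketch below shows that scaling a recording (song and background noise together) moves its level by a chosen number of decibels while leaving the song-to-noise ratio unchanged. `ref_rms`, the digital RMS that the speaker calibration would map to 0 dB SPL, is a hypothetical calibration constant; the text describes calibration with pure tones and a microphone but gives no such value.

```python
import numpy as np


def rms(x: np.ndarray) -> float:
    return float(np.sqrt(np.mean(np.square(x))))


def level_db(x: np.ndarray, ref_rms: float) -> float:
    """Level in dB relative to a calibration reference (hypothetical ref_rms -> 0 dB SPL)."""
    return 20.0 * np.log10(rms(x) / ref_rms)


def scale_by_db(x: np.ndarray, delta_db: float) -> np.ndarray:
    """Multiply the waveform by 10**(delta_db / 20); -20 dB divides amplitude by 10."""
    return x * 10.0 ** (delta_db / 20.0)


def rescale_to_level(x: np.ndarray, target_db: float, ref_rms: float) -> np.ndarray:
    """Scale a recording so that its RMS level equals target_db."""
    return scale_by_db(x, target_db - level_db(x, ref_rms))


def snr_db(signal: np.ndarray, noise: np.ndarray) -> float:
    return 20.0 * np.log10(rms(signal) / rms(noise))
```

For example, moving a song from the 73 dB training level to 30 dB corresponds to `scale_by_db(x, -43.0)`, a gain of about 0.007; because noise and song are scaled by the same factor, their ratio in decibels does not change.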

Data analysis

A trial was initiated every time the bird hopped on the song perch. Each trial could be scored as correct, incorrect, or no response. The percent of correct responses (“percent correct”) was calculated by dividing the total number of correct responses by the total number of trials to which the bird responded. The percent of responses (“percent response”) was calculated by dividing the total number of responses by the total number of trials. Reaction time was calculated from the song perch hop to the subsequent response hop. Ninety-five percent confidence intervals on percent correct were calculated by fitting the total number of correct responses, and the total number of responses, to a binomial model (binofit.m, Matlab). The same algorithm was used to estimate confidence intervals on percent response. Error bars on reaction time measurements represent the mean SD across several days. For estimates of behavior on control trials, we show the mean and SD of percent correct and percent response across days. We use this estimate rather than the binomial estimate because there are so many more control training trials (9 control trials for every probe) that the binomial estimate of error on control trials is exceedingly small.

As a convention, in this paper we state that a female generalized to a probe stimulus when the lower bound of the 95% confidence interval on her percent correct was >50% (chance); in addition, the term “correct” response here refers to correct classification of a song as belonging to individual A or B.
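The performance measures and the generalization criterion can be sketched in Python. MATLAB's binofit returns Clopper-Pearson (exact) intervals; this sketch computes the same kind of interval with the standard library only, by bisection on the binomial cumulative distribution. Function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def _binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p), with terms computed in log space."""
    if k < 0:
        return 0.0
    if k >= n or p <= 0.0:
        return 1.0
    if p >= 1.0:
        return 0.0
    total = 0.0
    for i in range(k + 1):
        log_pmf = (math.lgamma(n + 1) - math.lgamma(i + 1) - math.lgamma(n - i + 1)
                   + i * math.log(p) + (n - i) * math.log1p(-p))
        total += math.exp(log_pmf)
    return min(total, 1.0)


def _solve(f, target: float, increasing: bool, iters: int = 80) -> float:
    """Bisection on [0, 1] for f(p) = target, with f monotonic in p."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if (f(mid) < target) == increasing:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def clopper_pearson(k: int, n: int, alpha: float = 0.05) -> Tuple[float, float]:
    """Exact (Clopper-Pearson) confidence interval for the proportion k/n."""
    if n <= 0 or not 0 <= k <= n:
        raise ValueError("need n > 0 and 0 <= k <= n")
    half = alpha / 2
    lower = 0.0 if k == 0 else _solve(lambda p: 1.0 - _binom_cdf(k - 1, n, p), half, True)
    upper = 1.0 if k == n else _solve(lambda p: _binom_cdf(k, n, p), half, False)
    return lower, upper


def summarize(outcomes: Sequence[str], alpha: float = 0.05) -> dict:
    """outcomes: "correct", "incorrect" or "no_response" for each trial."""
    n_correct = sum(o == "correct" for o in outcomes)
    n_resp = sum(o != "no_response" for o in outcomes)
    lower, upper = clopper_pearson(n_correct, n_resp, alpha)
    return {
        "percent_correct": n_correct / n_resp,       # correct / responded trials
        "percent_response": n_resp / len(outcomes),  # responded / all trials
        "ci_correct": (lower, upper),
        "generalized": lower > 0.5,                   # lower confidence bound above chance
    }
```

For instance, 5 correct out of 10 responses gives an interval of about 0.187 to 0.813, whereas 80 of 100 gives a lower bound well above 0.5. To apply the stricter rule from the probe-trial description (above chance for both A and B probes), `summarize` would be called separately on each probe type.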

RESULTS

Classification training and generalization

Nine of the 10 initial females trained on the classification task at 73 dB reached criterion within 7 days of beginning classification trials (Fig. 2, A and C), consistent with this being an ethologically relevant task for the birds. One bird failed to reach criterion (75%) after 17 days of training and was removed from the study. Performance by the remaining birds was good, with >80% of songs classified correctly. Mean response times ranged from 1.4 to 2.7 s (Fig. 2, B and D), long enough to hear one to two motifs of each song but generally shorter than the entire song bout.

As a control, to test whether females had learned to associate different individuals with different perches rather than memorizing the rewarded classification of all 100 songs in the database, we tested the responses of seven females to 10 novel song bouts from each male that were not included in the original training set. These novel song bouts were presented as probe stimuli (see methods). Responses to novel bouts were not significantly different from responses to training songs in percent of correct responses (Fig. 2E, left), percent of songs responded to (Fig. 2E, middle), or reaction time (Fig. 2E, right). These data indicate that classification was robust to bout-to-bout variations in individual song structure and suggest that these females had learned to associate each perch with an individual rather than with the exact song variants used in the training.

Classification of pitch-shifted songs

To examine the role of pitch in classifying songs of different individuals, we presented trained females with pitch-shifted versions of training songs and observed how the females classified these novel stimuli. Pitch-shifted songs were presented in a probe configuration as described in the preceding text and in methods. We shifted the pitch of songs from each male by ±0, 0.01, 0.03, 0.06, 0.11, 0.21, 0.40, and 0.71 octaves, equal to multiplying and dividing the frequency by 100, 101, 102, 104, 108, 116, 132, and 164%. This logarithmic series of shifts was chosen to span and exceed the range of natural variability in note pitch; the pitch of individual notes has been shown to vary by 1–3% of their fundamental frequency in undirected song (Kao and Brainard 2006). As illustrated in Fig. 3A, the frequency content of pitch-shift probes was altered, but their temporal pattern (including the sequence of harmonic and noisy notes) was not. As a result, human listeners could readily distinguish even strongly pitch-shifted songs of one individual from those of the other.

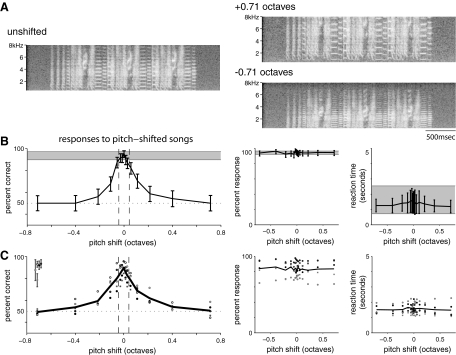

Fig. 3.

Responses to pitch-shifted songs. A: examples of pitch-shifted songs. Left: unshifted song. Top right: same song shifted up by 0.71 octaves (64%). Bottom right: same song shifted down by 0.71 octaves (64%). In this manipulation, the frequency content of the song was changed but the temporal pattern remained the same. A shift in pitch leads to vertical expansion or compression of notes with harmonic structure. Pitch-shifted probe stimuli were presented at low probability (10–15%) between unaltered training trials and were rewarded without regard to the female's response. B: performance of one bird on pitch-shifted probe stimuli. Left: percent correct as a function of pitch shift (solid line). Error bars represent 95% confidence intervals on percent correct obtained by fitting data to a binomial distribution. Gray bar, the mean ± SD of the bird's performance on control training trials, averaged across the same days (n = 16); dashed lines (- - -), the range of natural pitch variation in individuals' songs (±3%); dotted line (· · ·), chance behavior. The bird's performance overlaps with control performance within this range and falls off rapidly outside it. Middle: percent response as a function of pitch shift (solid line) with 95% confidence intervals. Gray bar, the mean ± SD of percent response for control trials. Response rates do not change with pitch and overlap with response rates for control trials. Right: reaction time as a function of pitch shift (solid line) with SD. Gray bar, the mean ± SD of reaction time for control trials. Reaction times for probe trials fall within the range of reaction times for control trials. C: responses of 5 birds (including the example in B) to pitch-shifted probe stimuli. Left: differently shaded symbols represent the percent correct for each bird at each condition. The black line represents the mean across birds. Error bars at the top left represent the mean ± SD of each bird's performance on control trials. Data from the same bird are represented by the same symbol as in the main plot. All birds show a decrease in performance for songs outside the range of natural variability and chance behavior for the largest pitch shifts. Middle: percent response for all birds as a function of pitch shift, with the mean across birds shown in black. Right: mean reaction time for all birds, with the mean across birds shown in black.

For five birds, we manipulated a single song bout from each male; for three of these birds, we repeated the experiment manipulating five song bouts from each male. No significant differences were found between these results, and the data from both were pooled.

Figure 3B (left) shows one female's response to pitch-shifted probe songs. The gray bar represents the mean ± SD across days of her performance on control trials (rewarded at 75–95% if correct, punished if incorrect). For small pitch shifts (<0.05 octaves), her performance on probes overlapped with her control performance. However, her performance fell off rapidly for shifts larger than those naturally observed (±3%, indicated by - - -), and she performed at chance for pitch shifts of 0.2 octaves or greater. The middle and right-hand panels of Fig. 3B show this bird's percent response (middle) and reaction time (right) for probes (—) and control trials (gray bars). Percent response and reaction times for probes were not statistically different from those for control trials.
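The probe protocol described here and in the Fig. 3 legend (rare probes interleaved among training trials, probes rewarded regardless of the bird's choice, correct control responses rewarded on a fraction of trials, errors punished, and no-response trials kept separate from errors) can be sketched as a small simulation. This is a hypothetical illustration, not the authors' control software: the class and function names, the 0.85 reward probabilities, and the reading of "rewarded at 75–95%" as a per-trial reward probability are our assumptions.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Trial:
    stimulus: str                 # label for the song file (hypothetical naming)
    is_probe: bool                # True for altered probe songs
    correct_perch: Optional[str]  # "A" or "B" for training songs; None for probes

def schedule(training: List[Trial], probes: List[Trial], n_trials: int,
             p_probe: float, rng: random.Random) -> List[Trial]:
    """Interleave rare probe trials (probability p_probe) among training trials."""
    trials = []
    for _ in range(n_trials):
        if probes and rng.random() < p_probe:
            trials.append(rng.choice(probes))
        else:
            trials.append(rng.choice(training))
    return trials

def outcome(trial: Trial, response: Optional[str], rng: random.Random,
            p_reward_correct: float = 0.85, p_reward_probe: float = 0.85) -> str:
    """Consequence of one trial; response is "A", "B", or None (no perch chosen)."""
    if response is None:
        return "no_response"          # counted separately from wrong-perch errors
    if trial.is_probe:
        # Non-differential reward: the draw ignores which perch was chosen
        return "reward" if rng.random() < p_reward_probe else "no_reward"
    if response == trial.correct_perch:
        return "reward" if rng.random() < p_reward_correct else "no_reward"
    return "punish"
```

Because the probe outcome never looks at `response`, a bird cannot infer an imposed "correct" answer for probes from the reward pattern, which is the property the paradigm relies on.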

Similar results were seen in all birds tested on these probes (n = 5). As shown in Fig. 3C (left), all birds performed close to their control levels for probe stimuli within the range of natural within-individual variation, but performed poorly for shifts outside this range. All birds performed at chance for the largest pitch shifts (±0.71 octaves). Percent response and reaction time did not change significantly with pitch (Fig. 3C, middle and right). Together these data indicate that pitch strongly determines how songs are classified in this task.
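The pitch shifts in this section are quoted both in octaves and in percent. The conversion is a power of two: a shift of s octaves multiplies every frequency by 2^s, so 0.05 octaves is about 3.5%, 0.2 octaves about 15%, and 0.71 octaves about 64%, while the ±3% natural range corresponds to roughly ±0.04 octaves. The minimal Python sketch below performs these conversions and also builds the symmetric probe series from the factors listed in the next section (100–164%, each applied upward and downward); the function names are illustrative.

```python
import math

# Probe scale factors; each is applied both upward (x f) and downward (/ f)
FACTORS = [1.00, 1.01, 1.02, 1.04, 1.08, 1.16, 1.32, 1.64]

def octaves_to_ratio(octaves: float) -> float:
    """Frequency ratio for a shift of `octaves` (positive = upward)."""
    return 2.0 ** octaves

def ratio_to_octaves(ratio: float) -> float:
    """Size of a frequency ratio expressed in octaves."""
    return math.log2(ratio)

def probe_set(factors=FACTORS):
    """Sorted, de-duplicated multiplicative probe values (1.0 appears once)."""
    return sorted(set(factors) | {1.0 / f for f in factors})

print(round(octaves_to_ratio(0.71), 3))   # 1.636, the 64% shift in Fig. 3A
print(round(ratio_to_octaves(1.03), 3))   # 0.043 octaves, the upper edge of the +/-3% range
```

The resulting series has 15 values, running from 1/1.64 (about 61%) to 1.64.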

Classification of songs with altered duration

To examine the importance of stimulus duration for classification of individuals' songs, we presented probe versions of the training stimuli that were stretched or compressed in time. The frequency content of these songs was preserved, as were the relative durations of different syllables and intervals (Fig. 4A, methods). Probe stimuli with altered duration were constructed from the same song bouts used to create pitch-shifted probes. Duration, like pitch, was multiplied and divided by 100, 101, 102, 104, 108, 116, 132, and 164% (equivalent to durations ranging from 61 to 164% of the original song length). The duration of individual notes varies by 1–4% in natural song (Cooper and Goller 2006; Glaze and Troyer 2006; Kao and Brainard 2006). Our manipulations of duration and pitch thus spanned an equivalent range, both in terms of percent change and in comparison to the range of natural within-individual variability in each parameter.
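The Acknowledgments credit phase vocoder code by D.P.W. Ellis, and the phase vocoder of Flanagan and Golden (1966) appears in the reference list, which suggests that the duration probes were generated with a phase vocoder (details are in methods). As an illustration only, the NumPy sketch below stretches or compresses a signal in time without changing its frequency content: it interpolates STFT magnitudes at fractional frame positions and accumulates each bin's measured phase advance. It is not the authors' code; the periodic Hann window, the 512-point FFT, and the 75% overlap are our assumptions.

```python
import numpy as np

def hann(n: int) -> np.ndarray:
    """Periodic Hann window; its squares sum to a constant at 75% overlap."""
    return 0.5 - 0.5 * np.cos(2 * np.pi * np.arange(n) / n)

def stft(x: np.ndarray, n_fft: int) -> np.ndarray:
    hop, win = n_fft // 4, hann(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop:i * hop + n_fft] * win for i in range(n_frames)])
    return np.fft.rfft(frames, axis=1)               # shape: (frames, bins)

def istft(X: np.ndarray, n_fft: int) -> np.ndarray:
    hop, win = n_fft // 4, hann(n_fft)
    frames = np.fft.irfft(X, n=n_fft, axis=1) * win   # synthesis window
    out = np.zeros(hop * (len(frames) - 1) + n_fft)
    for i, fr in enumerate(frames):                   # overlap-add
        out[i * hop:i * hop + n_fft] += fr
    # Divide by the summed squared window (1.5 for Hann at 75% overlap)
    return out * hop / np.sum(win ** 2)

def time_stretch(x: np.ndarray, factor: float, n_fft: int = 512) -> np.ndarray:
    """Change duration by `factor` (>1 longer, <1 shorter) while keeping frequencies."""
    hop = n_fft // 4
    X = stft(x, n_fft)
    # Expected phase advance per hop for each frequency bin
    omega = 2 * np.pi * hop * np.arange(X.shape[1]) / n_fft
    # Fractional read positions into the analysis frames, 1/factor apart
    positions = np.arange(0, X.shape[0] - 1, 1.0 / factor)
    phase = np.angle(X[0])
    Y = np.empty((len(positions), X.shape[1]), dtype=complex)
    for k, t in enumerate(positions):
        i, frac = int(t), t - int(t)
        mag = (1 - frac) * np.abs(X[i]) + frac * np.abs(X[i + 1])  # interpolate magnitude
        Y[k] = mag * np.exp(1j * phase)
        # Deviation of the measured phase advance from omega, wrapped to [-pi, pi]
        dphi = np.angle(X[i + 1]) - np.angle(X[i]) - omega
        dphi -= 2 * np.pi * np.round(dphi / (2 * np.pi))
        phase = phase + omega + dphi                  # accumulate true-frequency advance
    return istft(Y, n_fft)
```

For example, `time_stretch(song, 1.32)` would give a 132% duration probe and `time_stretch(song, 1 / 1.32)` a 76% probe. Because analysis and synthesis use the same hop, reading input frames 1/factor apart is what changes the duration.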

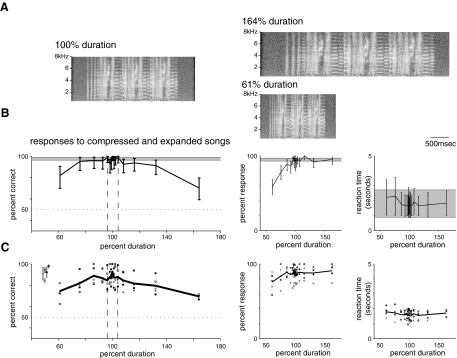

Fig. 4.

Responses to songs with altered duration. A: examples of songs with altered duration. Left: unaltered song. Top right: same song expanded by 64%. Bottom right: same song compressed by a factor of 1.64 (duration = 1/1.64, or 61%). Expansion or compression alters the duration of both syllables and intervals but changes neither their relative durations nor the frequency content of the song. Duration probes were presented at low probability between unaltered training trials and were rewarded without regard to the female's response. B: performance of 1 bird on duration probe stimuli. Left: percent correct as a function of song duration (—). Error bars and gray bar as described in the legend for Fig. 3B; - - -, the range of natural variability in individuals' song durations (±4%). From 76 to 132% duration the bird's performance on probes overlaps with her performance on control trials, and she performs significantly above chance on all probes. Middle: percent response as a function of song duration. Response rates for probes were comparable to response rates for control trials except for the most compressed songs. Right: reaction time as a function of duration. Reaction time generally did not increase with song duration. C: responses of the same 5 birds to probe stimuli with altered durations. All symbols as in Fig. 3C. Left: percent correct as a function of probe song duration. All birds performed significantly above chance for all duration probe stimuli, although their performance decreased below control levels for the largest changes (61 and 164% duration). Middle: percent response as a function of song duration. Many birds showed a small decrease in response rate only to the most compressed (61% duration) stimuli. Right: reaction time as a function of song duration. Most birds showed no change in reaction time with changes in song duration.

Females showed far greater tolerance for percentage changes in song duration than for percentage changes in song pitch. Figure 4B (left) shows percent correct as a function of probe song duration for the example bird shown in Fig. 3B. One hundred percent duration indicates the control interpolation, with no overall change in duration; the gray bar represents the bird's performance on normally rewarded control trials, and - - - represent the range of natural variation (±4%). In contrast to her behavior on pitch-shifted probes, this female responded well above chance to all duration probes, including those compressed or expanded well beyond the range of natural variability of duration within an individual. For duration changes ≤32%, her performance overlapped with her control performance. For a 64% change in duration, her performance dropped but remained above chance. Percent response (Fig. 4B, middle) was similar to control for all probes except the most compressed (61% duration). Reaction times (Fig. 4B, right) were not significantly different from control for any probe.

Data from four additional birds (Fig. 4C) confirmed this result. Birds performed well above chance on all probes, which covered a roughly 2.7-fold range of song durations (61–164%). Performance dropped slightly for large duration changes (61 and 164% of original duration) but remained above chance (95% confidence intervals) for all birds. Most birds showed a small drop in response rates for the most compressed songs (61% duration) but responded equally well to all other probe stimuli. Reaction times increased with song duration for one bird but did not change for the other birds. Together these data indicate that syllables and intervals within a broad range of durations are sufficient for classification of songs from different individuals.

Females might generalize to songs with altered duration because they consider them to be similar to the training songs or because they are unable to distinguish them from the training songs. To test whether females generalized because they were simply unable to discriminate compressed and expanded songs, we trained six naive females to discriminate songs played at 132% of the original duration from songs played at 1/1.32, or 76%, of the original duration. We chose these two values because responses to both were indistinguishable from responses to training stimuli in the first experiment.

Figure 5 shows percent correct as a function of training day for birds trained on the duration classification task. These curves are very similar to those for birds trained on the individual classification task and suggest that the duration task is no harder than discriminating two individuals. These data indicate that birds are capable of discriminating expanded from contracted songs but that they treat them similarly in the individual classification task.

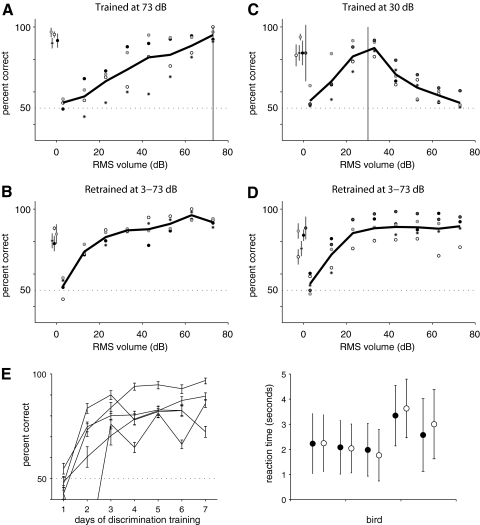

Fig. 5.

Learned discrimination of songs with different durations. A: learning curves for 6 naive birds trained to discriminate songs that differed only in duration. Mean ± SD of percent correct are shown for each day. Birds were trained to discriminate 50 versions of the original B song, compressed to 76% of the original duration, from 50 versions of the same B songs expanded to 132% of their original duration. These two manipulations were chosen because when birds were asked to categorize songs of two different individuals, the responses to these compressed and expanded songs were not significantly different from responses to the original songs. When explicitly trained to discriminate based on duration, all birds learned to discriminate better than chance by the 2nd day of training. B: mean ± SD of reaction time for compressed (●) and expanded (○) songs for all birds. There was no significant difference in reaction time for the two different song types.

Classification of songs with altered mean volume

To examine the effects of song volume on song classification, we tested four birds, trained on songs with a mean amplitude of 73 dB, with probe stimuli played at a range of volumes (3–73 dB in 10 dB steps, Fig. 6A). Ten silent probes (with the same durations as the original probe songs) were included as well. To compare the birds' generalization abilities to their discrimination thresholds, we then retrained these birds at the full range of volumes and repeated the generalization test (Fig. 6B).
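The probe levels differ in 10 dB steps. Because a level difference in dB is 20 log10 of an amplitude ratio, each 20 dB is a tenfold change in amplitude, so the 3–73 dB probe series spans a factor of about 3,000 in amplitude. The sketch below assumes that the playback chain is linear, so that a digital gain maps directly onto a change in dB SPL; the names and the `ref` calibration constant are hypothetical, and the sketch does not reproduce the authors' calibration procedure.

```python
import numpy as np

TRAIN_DB = 73                        # RMS level of the training songs
PROBE_DB = list(range(3, 74, 10))    # 3, 13, ..., 73 dB

def gain_for_level(target_db: float, reference_db: float) -> float:
    """Amplitude multiplier that moves a signal from reference_db to target_db."""
    return 10.0 ** ((target_db - reference_db) / 20.0)

def rms_db(x: np.ndarray, ref: float = 1.0) -> float:
    """RMS level in dB re `ref` (ref stands in for a playback calibration constant)."""
    return 20.0 * np.log10(np.sqrt(np.mean(np.square(x))) / ref)

def make_probes(song: np.ndarray, train_db: float = TRAIN_DB, levels=PROBE_DB) -> dict:
    """Copies of `song` rescaled from train_db to each probe level."""
    return {lvl: song * gain_for_level(lvl, train_db) for lvl in levels}
```

For example, `gain_for_level(53, 73)` is 0.1, so the generalization range of roughly 20–40 dB reported below corresponds to a 10- to 100-fold change in amplitude.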

Fig. 6.

Testing generalization from one volume to another. A: performance of 4 birds trained at 73 dB RMS on probe songs played at 3–73 dB. Birds did not consistently generalize across volumes: 1 bird performed significantly worse than its control behavior for all tested stimuli softer than 73 dB, whereas another performed significantly worse for stimuli below 63 dB. Two other birds showed a small amount of generalization, but performed significantly worse than control for stimuli softer than 43 and 33 dB, respectively. B: performance of the same 4 birds after retraining on stimuli played at 3–73 dB. Performance at low volumes (13–43 dB) improved for all birds, indicating that their initial generalization performance at these intensities was not as good as the birds' ability to discriminate these stimuli. C: performance of 5 birds trained with 30 dB RMS songs on probe songs at 3–73 dB. The birds performed significantly above chance for songs from 13–43 dB but performed at chance level for songs played at 63 and 73 dB. The vertical line in A and C indicates the RMS volume of the training songs. D: performance of the same birds as in C on identical probes after 1–2 days of retraining with songs at many volumes. All symbols as in Fig. 3C. After retraining, the birds performed above chance for all songs between 13 and 73 dB. These data indicate that failure to correctly classify loud songs in C was not due to an inability to discriminate them. E, left: learning curves for 5 naive birds trained to discriminate songs of two different individuals played at 30 dB RMS. Mean ± SD of percent correct are shown for each day. All birds learned to discriminate better than chance by the 3rd day of training. Right: mean ± SD of reaction times for birds trained on 30 dB songs.

Surprisingly, birds did not generalize consistently across volumes in this task, although there was variation across birds. Two birds performed significantly worse than their control behavior even on stimuli played 10–20 dB softer than the mean training volume, a third performed significantly worse for stimuli >30 dB softer, and a fourth showed performance significantly different from her behavior on training trials when songs were >40 dB softer than the training set. For all birds, performance on low-volume generalization trials after training at 73 dB alone was significantly worse than performance after retraining at all volumes (Fig. 6B), indicating that the initial reduced performance was not due to an inability to hear or to discriminate stimuli played at these low levels.

One explanation for these results is that birds trained at 73 dB do not attend to stimuli played at much softer volumes. To address this possibility, we trained five naive birds to discriminate songs played at 30 dB RMS, then tested them on the same range of probe stimuli (3–73 dB; Fig. 6C). We found that none of these birds correctly generalized the learned classification to louder volumes. Birds responded above chance only to stimuli within 20 dB of the training volume. In response to the loudest stimuli (73 dB) all birds performed at chance.

As with the birds initially trained at 73 dB, retraining of these birds on all volumes improved performance markedly. Within 1 day of retraining, all birds responded above chance to stimuli from 13 to 73 dB (Fig. 6D). Together these data suggest that birds do not automatically generalize a song classification learned at one volume to songs played at a very different volume. Instead, they generalize only within a range of ∼20–40 dB.

DISCUSSION

The goal of this study was to determine what parameters govern whether a female zebra finch classifies a novel song as belonging to a familiar individual and to provide a basis for comparing neural response properties to an ethologically relevant classification behavior. In general, we expect that different parameters of communication sounds may be important for different tasks. Human listeners, for example, use different cues to determine the identity of a word versus the identity of its speaker (Avendaño et al. 2004; O'Shaughnessy 1986; Walden et al. 1978). Different aspects of song may carry information about a singer's species, family, identity, distance, and social intent (Becker 1982; Marler 1960; Miller 1982). In this study, we chose to focus on the parameters underlying classification of songs from two conspecific individuals.

We used an established method, generalization of a learned classification task, as a paradigm for studying the cues birds use to associate a song with an individual (Beecher et al. 1994; Gentner and Hulse 1998). In this operant task, a bird is taught to categorize many stimuli without any constraint on the cues used for identification, then tested on novel probe stimuli in which particular cues are modified. Probe stimuli are rewarded without respect to the bird's choice, ensuring that the bird cannot learn the imposed “correct” answer for these stimuli from the reward pattern. Performance significantly above chance on a probe stimulus is considered evidence for generalization to that probe. By presenting sets of natural stimuli and letting the bird choose what to attend to, this method allows one to determine the strategies birds use to categorize complex stimuli in a given context. This paradigm has several other advantages: birds learn it quickly, it makes it possible to present many test stimuli in a short period of time, it can test both male and female responses with the same procedure, and it is easy to distinguish incorrect responses (choice of the wrong perch), which signify a failure to generalize the classification, from simple lack of response (no perch chosen), which could signify tiredness, indifference, or lack of attention. Other assays, such as measuring approach behavior (Miller 1979), calls (Vicario 2004; Vicario et al. 2002), singing (Stripling et al. 2003), or displays (Nowicki et al. 2002; O'Lochlen and Beecher 1999) in response to song presentation, have also been used successfully to gauge mate recognition and song familiarity, but with these assays it is often difficult to distinguish incorrect responses from absent ones. Techniques such as multidimensional scaling (e.g., Dooling et al. 1987) have also provided insight into what acoustic parameters birds use to group or classify calls; however, this approach requires the experimenter to parameterize the differences between the stimuli being classified. This can be difficult to do in the case of complex songs, where syllable structure and timing differ greatly between individuals.
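The generalization criterion described above, performance significantly above chance on a probe, can be made concrete with a binomial confidence interval. The figure legends state only that intervals came from fitting a binomial distribution, so the Wilson score interval used here is a stand-in and not necessarily the authors' method. We also assume that chance is 50% in this two-perch task and that no-response trials are excluded from the counts.

```python
import math
from statistics import NormalDist

def wilson_ci(n_correct: int, n_trials: int, level: float = 0.95):
    """Wilson score interval for a binomial proportion (stand-in interval method)."""
    if n_trials <= 0:
        raise ValueError("n_trials must be positive")
    z = NormalDist().inv_cdf(0.5 + level / 2)        # about 1.96 for a 95% interval
    p = n_correct / n_trials
    denom = 1 + z ** 2 / n_trials
    center = (p + z ** 2 / (2 * n_trials)) / denom
    half = z * math.sqrt(p * (1 - p) / n_trials + z ** 2 / (4 * n_trials ** 2)) / denom
    return max(0.0, center - half), min(1.0, center + half)

def above_chance(n_correct: int, n_trials: int, chance: float = 0.5,
                 level: float = 0.95) -> bool:
    """Generalization criterion: the whole interval lies above chance."""
    low, _ = wilson_ci(n_correct, n_trials, level)
    return low > chance
```

With these counts, 30 correct out of 40 probe responses gives a lower bound near 0.60 and counts as generalization, whereas 22 of 40 does not.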

Because our goal was to identify parameters used to identify conspecific individuals, we made an effort to choose a training set that included a large range of the variations in an individual's song that a female housed in our laboratory might encounter. We therefore trained females on 50 song renditions from each male, which varied in bout length, social intent (directed vs. undirected), mean volume (over a range experienced in a standard laboratory cage), and the pitch and duration of individual notes. We confirmed that females had learned to associate each perch with an individual rather than with a specific set of song renditions by testing whether they correctly generalized the task to novel song renditions drawn from the same set of recordings. We note that some variations a female might encounter even in the lab were not included in the training set (such as background noise from other birds), nor were variations that a laboratory female would be unlikely to encounter (such as recordings from the same male over a large range of distances). These choices may have affected females' ability to generalize to songs strongly altered in intensity, as we discuss further below.

After verifying that females had correctly learned the task, we probed their performance by asking whether they could generalize the classification to songs altered in frequency, duration, and intensity. In these experiments, our goal was to test stimuli both inside and outside the range of natural variability to compare the birds' generalization behavior to the response properties of auditory neurons. From the perspective of auditory neurons, which are highly sensitive to these parameters, one might expect that any change would strongly affect classification. Alternatively, one might expect that a bird's experience is the best predictor of the critical parameters. A given male zebra finch's song varies little in pitch and duration but can in principle vary over a large range of intensities. Thus a strategy based on the cues most informative for song discrimination might dictate classification sensitive to changes in pitch and duration but not intensity. Our results show, however, that birds do not classify stimuli according to either of these simple predictions.

For one, we found that birds relied much more on pitch than on duration (in terms of percentage difference) to categorize modified songs as belonging to one of two individuals. Although these cues vary by similar amounts within naturally occurring songs (1–3% for pitch, 1–4% for duration), females showed much narrower tolerance for pitch than for duration. Females generalized over a wider range than the 0.5% just noticeable difference for pitch measured in most avian species (Dooling et al. 2000), suggesting that discrimination abilities did not limit birds' ability to perform the classification task on pitch-shifted stimuli. However, performance on pitch-shifted stimuli fell to chance rapidly once stimuli fell outside of the range of natural variation. Studies in other songbird species have shown that generalization of a tone sequence discrimination task to new frequencies is constrained to the frequency range encompassed by the original training stimuli (Hulse and Cynx 1985; Hulse et al. 1998). Our results are consistent with these findings and illustrate that this frequency range constraint holds for more complex natural stimuli.

In contrast, females performed significantly above chance over a more than twofold range of syllable durations. Just noticeable differences reported in the literature for timing vary depending on the question being asked. Parakeets can detect a change of 10–20% in the duration of a signal (Dooling and Haskell 1978), while zebra finches are able to detect gaps of only 2–3 ms in broadband noise (Okanoya and Dooling 1990). In our study, females were easily able to learn to discriminate songs compressed to 76% of their original duration (a factor of 1.32) from songs expanded to 132%, although birds trained on the individual classification task generalized completely to both of these stimuli. Together with our results for pitch-shifted stimuli, these data support the idea that discrimination abilities do not necessarily predict generalization abilities and that generalization must be tested separately.

More naturalistic studies of songbirds, although they tend to examine a smaller range of variation, also support the importance of frequency for vocal identification (Brooks and Falls 1975b; for review, see Falls 1982; Vignal et al. 2008). A greater selectivity for frequency than timing cues for individual identification has also been seen in mice. Auditory cortex responses of mouse mothers carry more information about pup calls than those of virgins, primarily because of changes in frequency coding (Galindo-Leon et al. 2009; Liu and Schreiner 2007).

These results parallel aspects of human speaker identification. Like zebra finches, humans depend heavily on pitch cues to identify speakers (O'Shaughnessy 1986) but not to identify phonemes (Avendaño et al. 2004). Word duration can be important as well (Walden et al. 1978) but is less reliable than spectral features. Thus the acoustic parameters these songbirds use to identify individuals may be closer to those humans use to identify speakers than to those used to identify words.

These findings on pitch and duration have important consequences for understanding how neural responses to song may be related to perceptual behavior in this task. Many neurons in field L, the avian analog of primary auditory cortex, are tightly tuned in time (Nagel and Doupe 2008), causing them to respond to syllable onsets with precisely time-locked spikes (Woolley et al. 2006). This temporal pattern of spiking provides significant information about what song was heard (Narayan et al. 2006), and several papers have therefore suggested that these temporal patterns of spiking are critical for birds' discrimination and recognition of songs (Larson et al. 2009; Wang et al. 2007). Because these patterns are primarily driven by syllable onsets, the absolute temporal pattern of spiking in a single neuron should change as a song is expanded or contracted in time. The ability of birds to generalize across large changes in duration calls into question simple models that use such temporal spiking patterns in field L to classify songs.

An alternate model of song classification could be based on the particular population of cells activated by song rather than on individual neurons' temporal pattern of firing. Because many field L neurons are tightly tuned in frequency, the subset of field L neurons that fire during the course of a song will depend on the spectral content of that song and will thus vary between songs of different individuals. For neurons tuned primarily to frequency, a pitch-shifted song should activate a different population of neurons than the original song, while a song altered only in duration should activate the same ensemble as the original. The observed tolerance for duration shifts over pitch shifts supports this model. According to this scheme, birds should tolerate only frequency shifts smaller than the typical spectral tuning widths of field L neurons, which would not shift the population of neurons activated. In fact, in our behavioral task, females' limited tolerance for pitch-shifted songs showed remarkable similarity to spectral tuning widths: the half-width of the behavioral pitch tuning curves was 0.11–0.22 octaves, while the half-widths of field L neurons have a minimum of 0.13 octaves and a median of 0.33 octaves (Nagel and Doupe 2008). This suggests that which cells are active at a given time is more important for this classification task than the precise pattern of activity in those cells.
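The contrast between a population code and a spike-timing code can be illustrated with a deliberately crude toy model. It assumes a hypothetical four-syllable song; a bank of model neurons with Gaussian tuning on a log-frequency axis, with a width of 0.33 octaves borrowed from the median quoted above and treated here as a full width at half maximum (an arbitrary choice); a population code that sums each neuron's activation over the whole song; and a timing code represented by an onset-locked neuron whose spikes must fall within 5 ms of the original onset times. None of this is fitted to data or taken from Nagel and Doupe (2008).

```python
import numpy as np

# Hypothetical four-syllable song: center frequency (Hz), onset (ms), duration (ms)
FREQS = np.array([600.0, 1200.0, 2400.0, 3600.0])
ONSETS = np.array([0.0, 100.0, 220.0, 350.0])
DURS = np.array([80.0, 100.0, 90.0, 120.0])

# Model neurons: Gaussian tuning on a log2-frequency axis, best frequencies 250-8000 Hz
CFS_OCT = np.linspace(np.log2(250.0), np.log2(8000.0), 60)
FWHM_OCT = 0.33                                    # illustrative tuning width
SIGMA_OCT = FWHM_OCT / (2 * np.sqrt(2 * np.log(2)))

def population_vector(freqs, durs):
    """Time-integrated activation of each model neuron, scaled to unit length."""
    dist = CFS_OCT[:, None] - np.log2(freqs)[None, :]
    act = np.exp(-0.5 * (dist / SIGMA_OCT) ** 2) @ durs
    return act / np.linalg.norm(act)

def onset_match(onsets_a, onsets_b, tol_ms=5.0):
    """Fraction of onset-locked spike times that line up within tol_ms."""
    return float(np.mean(np.abs(onsets_a - onsets_b) <= tol_ms))

def compare(stretch=1.0, shift_oct=0.0):
    """(population similarity, spike-timing similarity) of an altered song vs the original."""
    pop = float(population_vector(FREQS, DURS)
                @ population_vector(FREQS * 2.0 ** shift_oct, DURS * stretch))
    timing = onset_match(ONSETS, ONSETS * stretch)
    return pop, timing

print(compare(stretch=1.32))    # population similarity ~1, timing 0.25
print(compare(shift_oct=0.71))  # population similarity well below 1, timing 1.0
```

Under these assumptions, time-stretching leaves the normalized population vector unchanged but breaks onset alignment, whereas a pitch shift does the reverse, which is the qualitative pattern the behavioral data favor.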

Temporal patterns of spiking may be used for other types of auditory tasks, however. For example, our data show that birds can discriminate songs that differ only in duration, suggesting that they can use timing information to make perceptual decisions. Moreover, because behavioral categorization eventually falls off at large duration shifts (64% change), temporal patterns likely play some role in song categorization. This may reflect a dependence of perceptual classification on the slow temporal evolution of an ensemble of neurons active during song, however, rather than on the details of spike timing in individual neurons.

In a second set of experiments, we asked birds whether they could generalize a song classification task learned at one stimulus intensity to another. The ability to generalize across intensities is considered fundamental to pattern recognition tasks in both the auditory and visual domains. Yet most auditory neurons show strong nonlinearities in their response as a function of sound level (Geisler 1998; Nagel and Doupe 2006). An outstanding question has therefore been where in the auditory system invariance to volume emerges (Billimoria et al. 2009; Drew and Abbott 2003; Wang et al. 2007).

Surprisingly, we found that in this task most females did not generalize to songs >20 dB louder or softer than the training stimuli. This held true even when the probe songs were much louder than the training stimuli (while still within naturalistic sound levels) and belies the simple idea that birds automatically generalize singer identity across volumes. One possible explanation for this finding lies in the role of intensity cues in behavior: studies in the field have shown that male birds respond differently to playback of a neighbor's song from different distances and positions (Brooks and Falls 1975a; Naguib and Todt 1998; Stoddard et al. 1991). Our training set contained only songs recorded over a relatively narrow range of distances—those a male could reach within a standard laboratory cage. That females did not generalize to songs played back at very different volumes may indicate that the category they learned was “male A at a given distance” rather than simply “male A.” This could be tested by explicitly training on recordings made at a larger range of distances. These data also raise the possibility that the different representations of loud and soft sounds in field L have perceptual consequences, and that intensity invariance is not a central or automatic function of the ascending auditory system; in the visual system, some perceptual invariances can also be unexpectedly labile (Cox et al. 2005).
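To express the ~20–40 dB generalization range in terms of distance, one can assume idealized spherical spreading in a free field, where sound pressure falls as 1/distance, or about 6 dB per doubling of distance. Real propagation adds excess attenuation (air absorption, vegetation, reflections from cage walls), so this is only a rough illustration: under that assumption, a 20 dB change corresponds to a tenfold change in distance and a 40 dB change to a hundredfold change. The function names and the 0.3 m reference distance in the usage example are hypothetical.

```python
import math

def level_change_db(d_ref: float, d_new: float) -> float:
    """Level change (dB) when the source-listener distance goes from d_ref to d_new,
    assuming ideal spherical spreading (pressure proportional to 1/distance)."""
    return -20.0 * math.log10(d_new / d_ref)

def distance_for_drop(d_ref: float, drop_db: float) -> float:
    """Distance at which the level has fallen by drop_db relative to d_ref."""
    return d_ref * 10.0 ** (drop_db / 20.0)

print(round(level_change_db(1.0, 2.0), 2))   # -6.02 dB per doubling of distance
```

For example, under this assumption a song heard at 0.3 m would be 20 dB softer at `distance_for_drop(0.3, 20)`, about 3 m, and 40 dB softer at about 30 m.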

Understanding how the brain allows humans and animals to recognize complex patterns is a fundamental goal of neuroscience. Often, however, physiological studies assume that we know what tasks the brain solves, such as building invariance to position, scale, and luminance in the visual system, to concentration in the olfactory system, and to intensity in the auditory system. Our data suggest that animals can classify stimuli in unexpected ways and do so differently depending on the task at hand. Relating neural activity to perceptual behavior will require detailed and quantitative assessments of behavior as well as of neural activity.

GRANTS

This work was supported by National Institute of Mental Health Grants MH-55987 and MH-077970 to A. J. Doupe and by a Howard Hughes Medical Institute fellowship to K. I. Nagel.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

ACKNOWLEDGMENTS

We thank T. Gentner, B. Dooling, and D. Schoppik for advice in setting up the behavioral apparatus, L. Bocskai and D. Floyd for constructing the behavioral boxes, K. McGary for help with electronics, S. C. Woolley for recording the birdsongs, and S. Sober and M. Brainard for comments on the manuscript. Phase vocoder code by D.P.W. Ellis.

Present address of K. Nagel: Dept. of Neurobiology, Harvard Medical School, 220 Longwood Ave. Boston, MA 02115.

REFERENCES

- Avendaño C, Deng L, Hermansky H, Gold B. The analysis and representation of speech. In: Speech Processing in the Auditory System. New York: Springer-Verlag, 2004, p. 63–100

- Becker PH. The coding of species-specific characteristics in bird sounds. In: Acoustic Communication in Birds, edited by Kroodsma DE, Miller EH, Ouellet H. New York: Academic, 1982, vol. 1, p. 213–252

- Beecher MD, Campbell SE, Burt JM. Song perception in the song sparrow: birds classify by song type but not by singer. Anim Behav 47: 1343–1351, 1994

- Billimoria CP, Kraus BJ, Narayan R, Maddox RK, Sen K. Invariance and sensitivity to intensity in neural discrimination of natural sounds. J Neurosci 28: 6304–6308, 2009

- Brooks RJ, Falls JB. Individual recognition by song in white-throated sparrows. II. Effects of location. Can J Zool 53: 1412–1420, 1975a

- Brooks RJ, Falls JB. Individual recognition by song in white-throated sparrows. III. Song features used in individual recognition. Can J Zool 53: 1749–1761, 1975b

- Burt JM, Lent KL, Beecher MD, Brenowitz EA. Lesions of the anterior forebrain song control pathway in female canaries affect song perception in an operant task. J Neurobiol 42: 1–13, 2000

- Cadieu C, Kouh M, Pasupathy A, Connor CE, Riesenhuber M, Poggio T. A model of V4 shape selectivity and invariance. J Neurophysiol 98: 1733–1750, 2007

- Cooper BG, Goller F. Physiological insights into the social-context-dependent changes in the rhythm of the song motor program. J Neurophysiol 95: 3798–3809, 2006

- Cox DD, Meier P, Oertelt N, DiCarlo JJ. Breaking position-invariant object recognition. Nat Neurosci 8: 1145–1147, 2005

- Cynx J, Gell C. Social mediation of vocal amplitude in a songbird, Taeniopygia guttata. Anim Behav 67: 451–455, 2004.

- Dooling RJ, Haskell RJ. Auditory duration discrimination in the parakeet. J Acoust Soc Am 63: 1640–1642, 1978.

- Dooling RJ, Lohr B, Dent ML. Hearing in birds and reptiles. In: Comparative Hearing, edited by Dooling RJ, Fay RR, Popper AN. New York: Springer-Verlag, 2000, p. 308–360.

- Dooling RJ, Park TJ, Brown SD, Okanoya K. Perceptual organization of acoustic stimuli by budgerigars (Melopsittacus undulatus). II. Vocal signals. J Comp Psychol 101: 367–381, 1987.

- Drew PJ, Abbott LF. Model of song selectivity and sequence generation in area HVc of the songbird. J Neurophysiol 89: 2697–2706, 2003.

- Falls JB. Individual recognition by sound in birds. In: Acoustic Communication in Birds, edited by Kroodsma DE, Miller EH, Ouellet H. New York: Academic, 1982, vol. 2, p. 237–278.

- Flanagan JL, Golden RM. Phase vocoder. Bell Syst Tech J 45: 1493–1509, 1966.

- Fortune ES, Margoliash D. Cytoarchitectonic organization and morphology of cells of the field L complex in male zebra finches (Taeniopygia guttata). J Comp Neurol 325: 388–404, 1992.

- Fortune ES, Margoliash D. Parallel pathways and convergence onto HVc and adjacent neostriatum of adult zebra finches (Taeniopygia guttata). J Comp Neurol 360: 413–441, 1995.

- Freedman DJ, Riesenhuber M, Poggio T, Miller EK. Categorical representation of visual stimuli in the primate prefrontal cortex. Science 291: 312–316, 2001.

- Galindo-Leon EE, Lin FG, Liu RC. Inhibitory plasticity in a lateral band improves cortical detection of natural vocalizations. Neuron: 705–716, 2009.

- Geisler CD. From Sound to Synapse: Physiology of the Mammalian Ear. Oxford, UK: Oxford Univ. Press, 1998.

- Gentner TQ, Hulse SH. Perceptual mechanisms for individual vocal recognition in European starlings, Sturnus vulgaris. Anim Behav 56: 579–594, 1998.

- Gentner TQ, Hulse SH, Bentley GE, Ball GF. Individual vocal recognition and the effect of partial lesions to HVc on discrimination, learning, and categorization of conspecific song in adult songbirds. J Neurobiol 42: 117–133, 2000.

- Glaze CM, Troyer TW. Temporal structure in zebra finch song: implications for motor coding. J Neurosci 26: 991–1005, 2006.

- Gross-Isserhof R, Lancet D. Concentration-dependent changes of perceived odor quality. Chem Senses 13: 191–204, 1998.

- Hulse SH, Cynx J. Relative pitch perception is constrained by absolute pitch in songbirds. J Comp Psychol 99: 176–196, 1985.

- Hulse SH, Page SC, Braaten RF. Frequency range size and the frequency range constraint in auditory perception by European starlings (Sturnus vulgaris). Anim Learn Behav 18: 238–245, 1990.

- Hung CP, Kreiman G, Poggio T, DiCarlo JJ. Fast readout of object identity from macaque inferior temporal cortex. Science 310: 863–866, 2005.

- Jusczyk PW, Luce PA. Speech perception and spoken word recognition: past and present. Ear Hear 23: 2–40, 2002.

- Kao MH, Brainard MS. Lesions of an avian basal ganglia circuit prevent context-dependent changes to song variability. J Neurophysiol 96: 1441–1455, 2006.

- Kourtzi Z, DiCarlo JJ. Learning and neural plasticity in visual object recognition. Curr Opin Neurobiol 16: 152–158, 2006.

- Kreiman G, Hung CP, Kraskov A, Quiroga RQ, Poggio T, DiCarlo JJ. Object selectivity of local field potentials and spikes in the macaque inferior temporal cortex. Neuron 49: 433–445, 2006.

- Larson E, Billimoria CP, Sen K. A biologically plausible computational model for auditory object recognition. J Neurophysiol 101: 323–331, 2009.

- Liu RC, Schreiner CE. Auditory cortical detection and discrimination correlates with communicative significance. PLoS Biol 5: 1426–1439, 2007.

- Marler P. Bird songs and mate selection. In: Animal Sounds and Communication, edited by Lanyon WE, Tavolga WN. Washington, DC: Am Inst Biol Sci, 1960, p. 348–367.

- Miller DB. The acoustic basis of mate recognition by female zebra finches (Taeniopygia guttata). Anim Behav 27: 376–380, 1979.

- Miller EH. Character and variance shift in the acoustic signals of birds. In: Acoustic Communication in Birds, edited by Kroodsma DE, Miller EH, Ouellet H. New York: Academic, 1982, vol. 1, p. 253–285.

- Nagel KI, Doupe AJ. Temporal processing and adaptation in the songbird auditory forebrain. Neuron 51: 845–859, 2006.

- Nagel KI, Doupe AJ. Organizing principles of spectro-temporal encoding in the avian primary auditory area field L. Neuron 58: 938–955, 2008.

- Naguib M, Todt D. Recognition of neighbors' song in a species with large and complex song repertoires: the Thrush Nightingale. J Avian Biol 29: 155–160, 1998.

- Narayan R, Graña G, Sen K. Distinct time scales in cortical discrimination of natural sounds in songbirds. J Neurophysiol 96: 252–258, 2006.

- Nelson DA. Song frequency as a cue for recognition of species and individuals in the field sparrow (Spizella pusilla). J Comp Psychol 103: 171–176, 1989.

- Nelson DA, Marler P. Categorical perception of a natural stimulus continuum: birdsong. Science 244: 976–978, 1989.

- Nowicki S, Searcy WA, Peters S. Quality of song learning affects female response to male bird song. Proc Biol Sci 269: 1949–1954, 2002.

- Okanoya K, Dooling RJ. Detection of gaps in noise by budgerigars (Melopsittacus undulatus) and zebra finches (Peophila guttata). Hear Res 50: 185–192, 1990.

- O'Loghlen AL, Beecher MD. Mate, neighbour and stranger songs: a female song sparrow perspective. Anim Behav 58: 13–20, 1999.

- O'Shaughnessy D. Speaker recognition. IEEE ASSP Mag 3: 4–17, 1986.

- Prather JF, Nowicki S, Anderson RC, Peters S, Mooney R. Neural correlates of categorical perception in learned vocal communication. Nat Neurosci 12: 221–228, 2009.

- Scharff C, Nottebohm F, Cynx J. Conspecific and heterospecific song discrimination in male zebra finches with lesions in the anterior forebrain pathway. J Neurobiol 36: 81–90, 1998.

- Sen K, Theunissen FE, Doupe AJ. Feature analysis of natural sounds in the songbird auditory forebrain. J Neurophysiol 86: 1445–1458, 2001.

- Serre T, Oliva A, Poggio T. A feedforward architecture accounts for rapid categorization. Proc Natl Acad Sci USA 104: 6424–6429, 2007.

- Shamir M, Sen K, Colburn HS. Temporal coding of time-varying stimuli. Neural Comput 19: 3239–3261, 2007.

- Stoddard PK, Beecher MD, Horning CL, Campbell SE. Recognition of individual neighbors by song in the song sparrow, a species with song repertoires. Behav Ecol Sociobiol 29: 211–215, 1991.

- Stripling R, Milewski L, Kruse AA, Clayton DF. Rapidly learned song-discrimination without behavioral reinforcement in adult male zebra finches (Taeniopygia guttata). Neurobiol Learn Mem 79: 41–50, 2003.

- Sugrue LP, Corrado GS, Newsome WT. Choosing the greater of two goods: neural currencies for valuation and decision making. Nat Rev Neurosci 6: 363–375, 2005.

- Theunissen FE, Sen K, Doupe AJ. Spectral-temporal receptive fields of nonlinear auditory neurons obtained using natural sounds. J Neurosci 20: 2315–2331, 2000.

- Vates GE, Broome BM, Mello CV, Nottebohm F. Auditory pathways of caudal telencephalon and their relation to the song system of adult male zebra finches. J Comp Neurol 366: 613–642, 1996.

- Vicario DS. Using learned calls to study sensory-motor integration in songbirds. Ann NY Acad Sci 1016: 246–262, 2004.

- Vicario DS, Raksin JN, Naqvi NH, Thande N, Simpson HB. The relationship between perception and production in songbird vocal imitation: what learned calls can teach us. J Comp Physiol [A] 188: 897–908, 2002.

- Vignal C, Mathevon N, Mottin S. Audience drives male songbird response to partner's voice. Nature 430: 448–451, 2004.

- Vignal C, Mathevon N, Mottin S. Mate recognition by female zebra finch: analysis of individuality in male call and first investigations on female decoding process. Behav Processes 77: 191–198, 2008.

- Walden BE, Montgomery AA, Gibeily GJ, Prosek RA, Schwartz DM. Correlates of psychological dimensions in talker similarity. J Speech Hear Res 21: 265–275, 1978.

- Wang L, Narayan R, Graña G, Shamir M, Sen K. Cortical discrimination of complex natural stimuli: can single neurons match behavior? J Neurosci 27: 582–589, 2007.

- Woolley SM, Fremouw TE, Hsu A, Theunissen FE. Tuning for spectro-temporal modulations as a mechanism for auditory discrimination of natural sounds. Nat Neurosci 8: 1371–1379, 2005.

- Woolley SM, Gill PR, Theunissen FE. Stimulus-dependent auditory tuning results in synchronous population coding of vocalizations in the songbird midbrain. J Neurosci 26: 2499–2512, 2006.

- Woolley SM, Gill PR, Fremouw T, Theunissen FE. Functional groups in the avian auditory system. J Neurosci 29: 2780–2793, 2009.

- Zann RA. The Zebra Finch: A Synthesis of Field and Laboratory Studies. Oxford, UK: Oxford Univ. Press, 1996.