Abstract

Recent studies have described vestibular responses in the dorsal medial superior temporal area (MSTd), a region of extrastriate visual cortex thought to be involved in self-motion perception. The pathways by which vestibular signals are conveyed to area MSTd are currently unclear, and one possibility is that vestibular signals are already present in areas that are known to provide visual inputs to MSTd. Thus, we examined whether selective vestibular responses are exhibited by single neurons in the middle temporal area (MT), a visual motion-sensitive region that projects heavily to area MSTd. We compared responses in MT and MSTd to three-dimensional rotational and translational stimuli that were either presented using a motion platform (vestibular condition) or simulated using optic flow (visual condition). When monkeys fixated a visual target generated by a projector, half of MT cells (and most MSTd neurons) showed significant tuning during the vestibular rotation condition. However, when the fixation target was generated by a laser in a dark room, most MT neurons lost their vestibular tuning whereas most MSTd neurons retained their selectivity. Similar results were obtained for free viewing in darkness. Our findings indicate that MT neurons do not show genuine vestibular responses to self-motion; rather, their tuning in the vestibular rotation condition can be explained by retinal slip due to a residual vestibulo-ocular reflex. Thus, the robust vestibular signals observed in area MSTd do not arise through inputs from area MT.

Introduction

Self-motion perception relies heavily on both visual motion (optic flow) and vestibular cues (for review, see Angelaki et al., 2009). Neurons with directional selectivity for both visual and vestibular stimuli are strong candidates to mediate multisensory integration for self-motion perception. In particular, neurons in both the dorsal portion of the medial superior temporal area (MSTd) and the ventral intraparietal area (VIP) show selectivity to complex optic flow patterns experienced during self-motion (Tanaka et al., 1986; Duffy and Wurtz, 1991, 1995; Graziano et al., 1994; Schaafsma and Duysens, 1996; Bremmer et al., 2002a), and also respond selectively to translation and rotation of the body in darkness (Duffy, 1998; Bremmer et al., 1999, 2002b; Froehler and Duffy, 2002; Schlack et al., 2002; Gu et al., 2006; Takahashi et al., 2007). Recent experiments have linked area MSTd to perception of heading based on vestibular signals (Gu et al., 2007), and have implicated MSTd neurons in combining optic flow and vestibular cues to enhance heading discrimination (Gu et al., 2008).

Despite considerable focus on the roles of MSTd and VIP in visual-vestibular integration, the pathways by which vestibular signals reach these areas remain unknown. One hypothesis is that visual and vestibular inputs arrive at MSTd/VIP through distinct pathways, including a vestibular pathway that remains unidentified. An alternative hypothesis is that vestibular signals are already present in areas that are known to provide visual inputs to MSTd and VIP. The middle temporal (MT) area is an important visual motion processing region in the superior temporal sulcus (STS) (Born and Bradley, 2005), and projects heavily to other functional areas in macaques, including MSTd and VIP (Maunsell and van Essen, 1983; Ungerleider and Desimone, 1986). Area MT has mainly been studied in relation to purely visual functions (Born and Bradley, 2005), and MT neurons are generally not thought to receive strong extra-retinal inputs. For example, MT neurons were reported to show little response during smooth pursuit eye movements, whereas MST neurons were often strongly modulated by pursuit (Komatsu and Wurtz, 1988; Newsome et al., 1988). However, a recent study showed that responses of many MT neurons to visual stimuli were modulated by extra-retinal signals, which serve to disambiguate the depth sign of motion parallax (Nadler et al., 2008). This finding could reflect either vestibular or eye movement inputs to MT. Hence, we investigated whether MT neurons respond to translational and rotational vestibular stimulation.

We used an experimental protocol similar to that used previously to characterize visual and vestibular selectivity in MSTd (Gu et al., 2006; Takahashi et al., 2007). In control experiments, we measured vestibular tuning in complete darkness or during fixation of a laser-generated fixation point in a dark room. We find that MT responses are not modulated by vestibular stimulation in the absence of retinal image motion. We conclude that, although visual motion signals in MSTd likely arise through projections from MT (Maunsell and van Essen, 1983; Ungerleider and Desimone, 1986), vestibular information reaches MSTd through distinct, as yet uncharacterized, routes.

Materials and Methods

Responses of MT and MSTd neurons were recorded from three adult rhesus monkeys (Macaca mulatta) chronically implanted with a head restraint ring and a scleral search coil to monitor eye movements. A recording grid was placed inside the ring and used to guide electrode penetrations into the superior temporal sulcus (for details, see Gu et al., 2006). All animal procedures were approved by the Washington University Animal Care and Use Committee and fully conformed to National Institutes of Health guidelines. Monkeys were trained using operant conditioning to fixate visual targets for fluid rewards.

Single neurons were isolated using a conventional amplifier, a bandpass eight-pole filter (400–5000 Hz), and a dual voltage–time window discriminator (BAK Electronics). The times of occurrence of action potentials and all behavioral events were recorded with 1 ms resolution by the data acquisition computer. Eye movement traces were low-pass filtered and sampled at 250 Hz. Raw neural signals were also digitized at 25 kHz and stored to disk for off-line spike sorting and additional analyses.

Areas MT and MSTd were identified based on the characteristic patterns of gray and white matter transitions along electrode penetrations, as examined with respect to magnetic resonance imaging scans, and based on the response properties of single neurons and multiunit activity (for details, see Gu et al., 2006). Receptive fields of MT/MSTd neurons were mapped by moving a patch of drifting random dots around the visual field and observing a qualitative map of instantaneous firing rates on a custom graphical interface. In some neurons, quantitative receptive field maps were also obtained (see Fig. 4) using a reverse correlation method as described below. We recorded from any MT/MSTd neuron that was spontaneously active or that responded to a large field of flickering random dots.

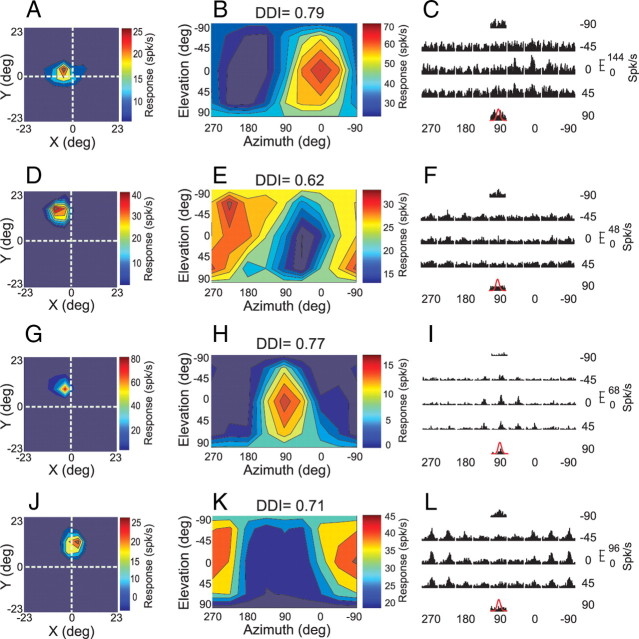

Figure 4.

Receptive field maps and vestibular rotation responses for 4 MT neurons tested in the projector condition. A, D, G, J, Receptive field maps as obtained using a multi-patch reverse correlation method (see Materials and Methods). Each color map represents a region of visual space with a length and width one-half as large as the display screen. The fixation point was presented at the intersection of the dashed white lines. The cell depicted in A had a receptive field that overlapped the fixation point, whereas the other cells did not. B, E, H, K, Vestibular rotation tuning profiles, format as in Figure 1A,B. C, F, I, L, PSTHs corresponding to the 26 distinct directions of rotation tested in the projector condition. Format as in Figure 1C,D.

Vestibular and visual stimuli.

Data were collected in a virtual reality apparatus (for details, see Gu et al., 2006; Takahashi et al., 2007) that consists of a six-degree-of-freedom motion platform (MOOG 6DOF2000E; Moog) and a stereoscopic video projector (Mirage 2000; Christie Digital Systems). Animals viewed visual stimuli projected onto a tangent screen (60 × 60 cm) at a viewing distance of 32 cm, resulting in a field of view of ∼90 × 90°. Because the apparatus was enclosed on all sides with black, opaque material, there was no change in the visual image during movement, other than visual stimuli presented on the tangent screen and small retinal slip induced by fixational eye movements. The visual stimulus consisted of a three-dimensional (3D) cloud of “stars,” which was generated by an OpenGL graphics board (nVidia Quadro FX3000G) having a pixel resolution of 1280 × 1024.

The “rotation” protocol consisted of real or simulated rotations (right hand rule) around 26 distinct axes separated by 45° in both azimuth and elevation (see Fig. 1, inset). This included all combinations of movement vectors having 8 different azimuth angles (0°, 45°, 90°, 135°, 180°, 225°, 270°, and 315°) and 3 different elevation angles: 0° (the horizontal plane), −45°, and 45° (8 × 3 = 24 directions). For example, azimuth angles of 0° and 180° (elevation = 0°) correspond to pitch-up and pitch-down rotations, respectively. Azimuth angles of 90° and 270° (elevation = 0°) correspond to roll rotations (right-ear-down and left-ear-down, respectively). Two additional stimulus conditions had elevation angles of –90° or 90°, corresponding to leftward or rightward yaw rotation, respectively (bringing the total number of directions to 26). Each movement trajectory had a duration of 2 s and consisted of a Gaussian velocity profile. Rotation amplitude was 9° and peak angular velocity was 23.5°/s. Five repetitions of each motion direction were randomly interleaved, along with a null condition in which the motion platform remained stationary and only a fixation point appeared on the display screen (to assess spontaneous activity). Note that the rotation stimuli were generated such that all axes of rotation passed through a common point that was located in the mid-sagittal plane of the head along the interaural axis. Thus, the animal was always rotated around this point at the center of the head.
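The set of 26 stimulus directions described above can be enumerated with a short sketch. The function names and the azimuth/elevation-to-Cartesian mapping are illustrative assumptions, not the convention of the original stimulus software. The trial count assumes that the null condition is repeated as often as each direction.

```python
import math

def stimulus_directions():
    """Return the 26 (azimuth, elevation) pairs, in degrees."""
    # 8 azimuths x 3 elevations = 24 directions
    dirs = [(az, el) for el in (-45, 0, 45) for az in range(0, 360, 45)]
    # Two poles (azimuth is arbitrary there; 0 is used as a placeholder)
    dirs += [(0, -90), (0, 90)]
    return dirs

def to_unit_vector(az_deg, el_deg):
    """Convert azimuth/elevation to a 3D unit vector (assumed convention)."""
    az, el = math.radians(az_deg), math.radians(el_deg)
    return (math.cos(el) * math.cos(az),
            math.cos(el) * math.sin(az),
            math.sin(el))

directions = stimulus_directions()
vectors = [to_unit_vector(az, el) for az, el in directions]

# Five repetitions of each direction plus the null (stationary) condition
n_trials = 5 * (len(directions) + 1)
```

Under these assumptions `n_trials` matches the block size of 135 trials quoted for the later viewing-condition experiments.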

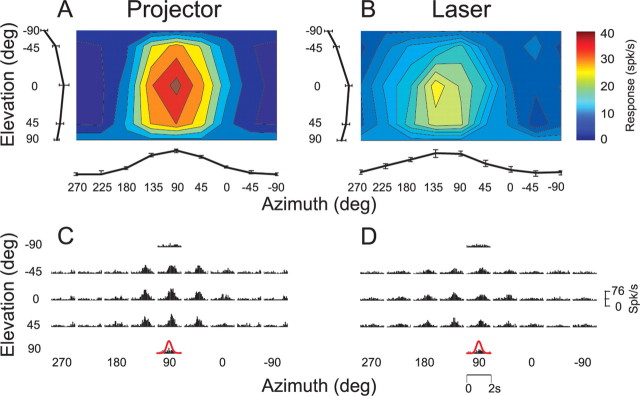

Figure 1.

A–D, 3D rotation tuning for an MT neuron tested during the vestibular condition (A, C), and the visual condition (B, D). The fixation point and visual stimuli were generated by a video projector in this case. Color contour maps in A and B show mean firing rate plotted as a function of 26 distinct azimuth and elevation angles (see inset). Each contour map shows the Lambert cylindrical equal-area projection of the original spherical data (Gu et al., 2006). Tuning curves along the margins of each color map illustrate mean ±SEM firing rates plotted as a function of either elevation or azimuth (averaged across azimuth or elevation, respectively). The PSTHs in C and D illustrate the corresponding temporal response profiles (each PSTH is 2 s in duration). The red Gaussian curves (bottom) illustrate the stimulus velocity profile.

The “translation” protocol consisted of straight translational movements along the same 26 directions described above for the rotation protocol. Again, each movement trajectory had a duration of 2 s with a Gaussian velocity profile. Translation amplitude was 13 cm (total displacement), with a peak acceleration of ∼0.1 G (0.98 m/s²) and a peak velocity of 30 cm/s.
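The stated translation parameters can be cross-checked with a minimal sketch. It assumes that the Gaussian is centered in the 2 s trial and that its width is set so the area under the velocity curve equals the total displacement. The width is not reported in the text, so `sigma` here is derived, not quoted.

```python
import math

def gaussian_velocity(total_displacement, peak_velocity, duration=2.0, dt=0.001):
    """Gaussian velocity profile centered in the trial.

    sigma follows from: displacement = peak_velocity * sigma * sqrt(2*pi).
    """
    sigma = total_displacement / (peak_velocity * math.sqrt(2 * math.pi))
    n = int(round(duration / dt)) + 1
    t = [i * dt for i in range(n)]
    v = [peak_velocity * math.exp(-0.5 * ((ti - duration / 2) / sigma) ** 2)
         for ti in t]
    return t, v, sigma

dt = 0.001
# Translation protocol: 0.13 m total displacement, 0.30 m/s peak velocity
t, v, sigma = gaussian_velocity(0.13, 0.30, dt=dt)

# Numerical integral of velocity (should recover the displacement)
displacement = sum(v) * dt

# Central-difference acceleration and its peak magnitude, in m/s^2
accel = [(v[i + 1] - v[i - 1]) / (2 * dt) for i in range(1, len(v) - 1)]
peak_accel = max(abs(a) for a in accel)
```

With these inputs the derived peak acceleration comes out close to 0.1 G, in line with the approximate value given above.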

The rotation and translation protocols both included two stimulus conditions. (1) In the “vestibular” condition, the monkey was moved in the absence of optic flow. The screen was blank, except for a fixation point that remained at a fixed head-centered location throughout the motion trajectory (i.e., the fixation point moved with the animal). (2) In the “visual” condition, the motion platform was stationary, while optic flow simulating movement through a cloud of stars was presented on the screen. In initial experiments, MT neurons were tested with both vestibular and visual (optic flow) versions of the rotation and translation protocols, interleaved within the same block of trials (for details, see Takahashi et al., 2007).

To further explore the origins of response selectivity observed under the vestibular rotation condition, subsequent experiments concentrated only on the vestibular rotation stimulus, which was delivered under 3 different viewing conditions, in separate blocks of trials (135 trials per block). The first viewing condition, which we refer to as the “projector” condition, was identical to that used in previous studies to characterize the vestibular responses of MSTd neurons (Gu et al., 2006; Takahashi et al., 2007). In this case, the animal foveated a central, head-fixed target that was projected onto the screen by the Mirage projector. The projected fixation target was a small (0.2° × 0.2°) yellow square with a luminance of 76 cd/m². In this condition, the animal was not in complete darkness because the projector produced a background illumination of 1.8 cd/m². The background image produced by the Mirage DLP projector has a faint but visible “screendoor” pattern. Thus, the visual background in the projector condition did contain a subtle visual texture.

In the second viewing condition, which we refer to as the “laser” condition, the projector was turned off and the animal was in darkness, except for a small fixation point that was projected onto the display screen by a head-fixed red laser. The laser-projected fixation target was also ∼0.2° × 0.2° in size, and had an apparent luminance similar to that in the projector condition despite the different spectral content. Even following extensive dark adaptation, the display screen was not visible to human observers in the laser condition. Correspondingly, measurements with a Tektronix J17 photometer did not reveal a measurable luminance greater than zero.

For both the projector and laser conditions, the animal was required to foveate the fixation target for 200 ms before the onset of the motion stimulus (fixation windows spanned 2 × 2° of visual angle). The animals were rewarded at the end of each trial for maintaining fixation throughout the stimulus presentation. If fixation was broken at any time during the stimulus, the trial was aborted and the data were discarded. Finally, the third viewing condition, which we refer to as the “darkness” condition, consisted of motion in complete darkness; both the projector and laser were turned off and the animal was freely allowed to make eye movements. In this block of trials, there was no behavioral requirement to fixate, and rewards were delivered manually to keep the animal alert.

We did not collect data using the translation protocol in all of the above viewing conditions because we found little modulation of MT responses during vestibular translation in the projector condition (see Fig. 2C,D; supplemental Fig. 1, available at www.jneurosci.org as supplemental material).

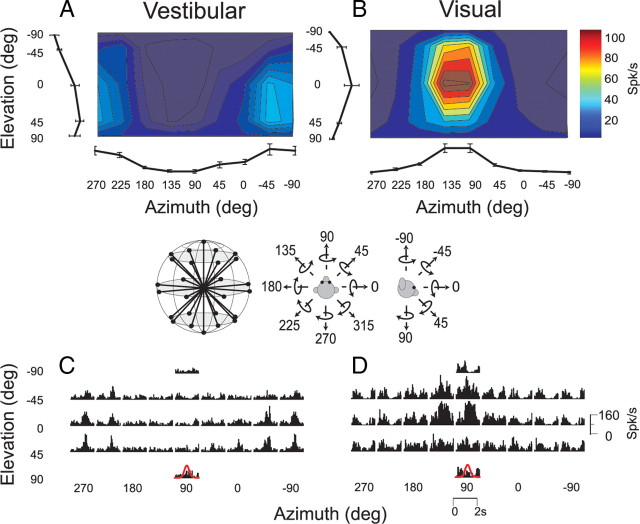

Figure 2.

A–D, Summary of the selectivity of MT neurons in response to (A, B) visual and vestibular rotation and (C, D) visual and vestibular translation. Scatter plots in A and C compare the DDI in the visual versus vestibular conditions. Histograms in B and D show the absolute difference in 3D direction preferences between visual and vestibular conditions (|Δ preferred direction|) for the rotation and translation protocols, respectively. Data in B, D are included only for neurons with significant tuning in both stimulus conditions. All data are from the projector condition.

Data analysis.

Analysis and statistical tests were performed in Matlab (MathWorks) using custom scripts. For each stimulus direction and motion type, we computed neural firing rate during the middle 1 s interval of each trial and averaged across stimulus repetitions to compute the mean firing rate (Gu et al., 2006). Mean responses were then plotted as a function of azimuth and elevation to create 3D tuning functions. To plot these spherical data on Cartesian axes, the data were transformed using the Lambert cylindrical equal-area projection (Snyder, 1987), where the abscissa represents azimuth angle and the ordinate corresponds to a sinusoidally transformed version of the elevation angle. Statistical significance of directional selectivity was assessed using one-way ANOVA for each neuron.
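As an illustration of the first two steps, the sketch below computes a firing rate over the central 1 s of a 2 s trial and maps a direction onto Lambert cylindrical equal-area coordinates. It is written in Python rather than the Matlab used for the original analysis. The function names are hypothetical, and spike times are assumed to be in seconds relative to stimulus onset.

```python
import math

def middle_rate(spike_times_s, trial_duration=2.0, window=1.0):
    """Firing rate (spikes/s) in the central `window` seconds of a trial."""
    start = (trial_duration - window) / 2
    stop = start + window
    n_spikes = sum(1 for s in spike_times_s if start <= s < stop)
    return n_spikes / window

def lambert_xy(az_deg, el_deg):
    """Lambert cylindrical equal-area coordinates.

    The abscissa is the azimuth (deg); the ordinate is sin(elevation),
    which lies in [-1, 1].
    """
    return az_deg, math.sin(math.radians(el_deg))

# Example: three of five spikes fall in the 0.5-1.5 s analysis window
example_rate = middle_rate([0.1, 0.6, 0.9, 1.4, 1.6])
example_xy = lambert_xy(90, 45)
```

The sine transform compresses elevations near the poles, so equal areas on the sphere occupy equal areas on the plotted map.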

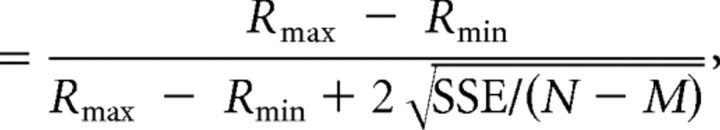

The neuron's preferred direction for each stimulus condition was described by the azimuth and elevation of the vector sum of the individual responses (after subtracting spontaneous activity). In this computation, the mean firing rate in each trial is considered to represent the magnitude of a 3D vector, the direction of which was defined by the azimuth and elevation angles of the particular stimulus (Gu et al., 2006). To quantify the strength of spatial tuning, we computed a direction discrimination index (DDI) (Prince et al., 2002; DeAngelis and Uka, 2003), as follows:

$$\mathrm{DDI} = \frac{R_{\max} - R_{\min}}{R_{\max} - R_{\min} + 2\sqrt{SSE/(N - M)}}$$

where Rmax and Rmin are the mean firing rates of the neuron along the directions that elicited maximal and minimal responses, respectively. SSE is the sum squared error around the mean responses, N is the total number of observations (trials), and M is the number of stimulus directions (M = 26). This index quantifies the amount of response modulation (due to changes in stimulus direction) relative to the noise level.
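The two tuning measures can be sketched as follows. The DDI is assumed to take the standard form (Rmax − Rmin)/(Rmax − Rmin + 2√(SSE/(N − M))) from the cited work. The data layout (a dictionary keyed by direction) and the azimuth/elevation-to-Cartesian convention are illustrative assumptions.

```python
import math

def ddi(responses):
    """Direction discrimination index.

    responses: dict mapping direction -> list of single-trial firing rates.
    """
    means = {d: sum(r) / len(r) for d, r in responses.items()}
    span = max(means.values()) - min(means.values())
    # Sum squared error of single trials around their direction means
    sse = sum((x - means[d]) ** 2 for d, r in responses.items() for x in r)
    n = sum(len(r) for r in responses.values())   # total trials
    m = len(responses)                            # number of directions
    noise = 2 * math.sqrt(sse / (n - m))
    return span / (span + noise) if span + noise > 0 else 0.0

def preferred_direction(mean_rates, spontaneous=0.0):
    """Azimuth and elevation (deg) of the vector sum of responses.

    mean_rates: dict mapping (az_deg, el_deg) -> mean firing rate.
    """
    sx = sy = sz = 0.0
    for (az_deg, el_deg), rate in mean_rates.items():
        az, el = math.radians(az_deg), math.radians(el_deg)
        w = rate - spontaneous   # response magnitude along this direction
        sx += w * math.cos(el) * math.cos(az)
        sy += w * math.cos(el) * math.sin(az)
        sz += w * math.sin(el)
    az_pref = math.degrees(math.atan2(sy, sx)) % 360
    el_pref = math.degrees(math.atan2(sz, math.hypot(sx, sy)))
    return az_pref, el_pref
```

Under this form, a neuron with no trial-to-trial variability and any response modulation has a DDI of 1, and the index falls toward 0 as noise grows relative to the modulation.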

Eye movement analysis.

In the projector and laser conditions, animals were required to maintain fixation on a head-fixed target during rotation or translation. Thus, no eye movements were required for the animal to maintain fixation, and the animal should suppress reflexive eye movements driven by the vestibulo-ocular reflex (VOR). Failure to fully suppress the VOR in these stimulus conditions would lead to retinal slip that could elicit visual responses that might be mistakenly interpreted as vestibular responses. Thus, it is important to characterize any residual eye movements that occur in the fixation trials, as well as the more substantial eye movements that would be expected in the darkness condition due to the VOR.

Because torsional eye movements were not measured in these experiments, we could not quantify eye movements that might occur in response to components of roll rotation. Thus, we focused our eye movement analyses on rotations and translations about the 8 stimulus directions within the fronto-parallel plane (yaw and pitch rotations; lateral and vertical translations), as these movements may be expected to elicit horizontal and vertical eye movements. Eye velocity was computed by differentiating filtered eye position traces (boxcar filter, 50 ms width). Fast phases, including saccades, were removed by excluding periods where absolute eye velocity exceeded 6°/s. Data from each individual run were then averaged across stimulus repetitions into mean horizontal and vertical eye velocity profiles (see Fig. 3A,B).
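The velocity computation described above might look like the following sketch. It assumes the 250 Hz sampling rate given earlier, rounds the 50 ms boxcar to an odd number of samples (13), and uses a central difference for differentiation. None of these implementation details are specified in the text, and the function names are hypothetical.

```python
def boxcar(x, width):
    """Centered moving average; the window shrinks at the trace edges."""
    half = width // 2
    out = []
    for i in range(len(x)):
        lo, hi = max(0, i - half), min(len(x), i + half + 1)
        out.append(sum(x[lo:hi]) / (hi - lo))
    return out

def eye_velocity(position_deg, fs=250.0, boxcar_ms=50.0, fast_thresh=6.0):
    """Eye velocity (deg/s) with fast phases marked as None."""
    width = max(1, int(round(boxcar_ms * fs / 1000.0)))
    if width % 2 == 0:
        width += 1                      # force an odd, centered window
    smooth = boxcar(position_deg, width)
    # Central difference on the smoothed position trace
    vel = [(smooth[i + 1] - smooth[i - 1]) * fs / 2
           for i in range(1, len(smooth) - 1)]
    vel = [vel[0]] + vel + [vel[-1]]    # pad to the original length
    # Exclude samples whose absolute velocity exceeds the threshold
    return [v if abs(v) <= fast_thresh else None for v in vel]

# Example: a slow 2 deg/s drift, then the same trace with a 5 deg step
drift = [2.0 * i / 250.0 for i in range(500)]
step = [0.0 if i < 250 else 5.0 for i in range(500)]
drift_velocity = eye_velocity(drift)
step_velocity = eye_velocity(step)
```

In this example the slow drift survives the threshold while the samples around the step are excluded, which is the intended effect of removing fast phases before averaging.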

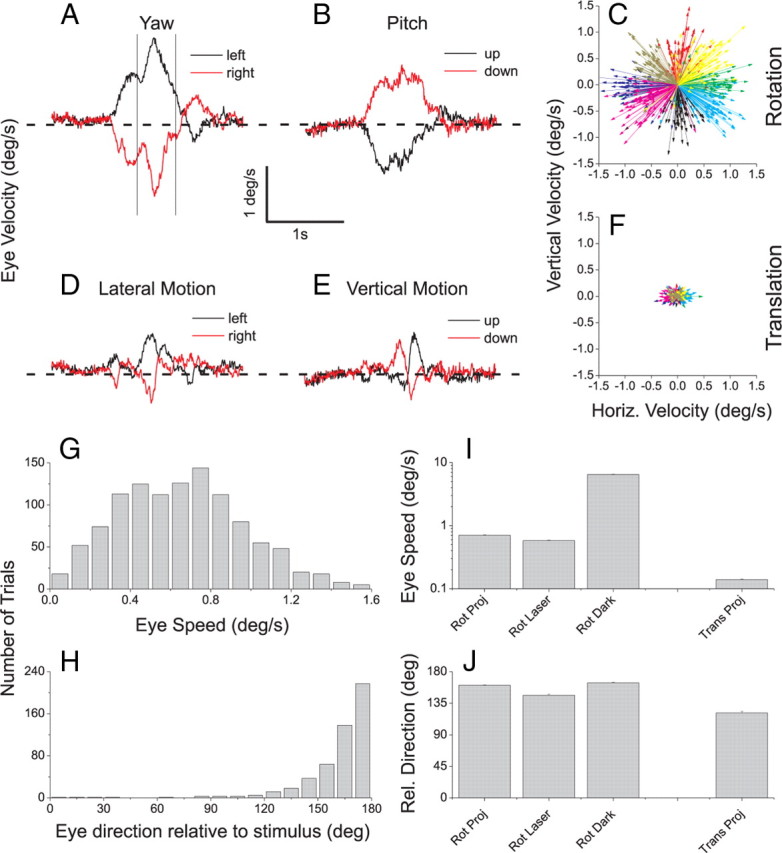

Figure 3.

Eye movement analysis showing incomplete suppression of reflexive eye movements. A, Average horizontal eye velocity is shown during leftward (black) and rightward (red) yaw rotation (vestibular condition). Traces show average eye velocity across all of the recording sessions for which data are shown in Figure 2. B, Average vertical eye velocity during upward (black) and downward (red) pitch rotation. C, Vector plot summary of the residual eye velocities in response to vestibular rotation for one monkey in the projector condition. Each vector represents the average eye velocity for one direction of rotation in one experimental session (green, leftward yaw; red, pitch down; purple, rightward yaw; black, pitch up). D, Average horizontal eye velocity during left/right translation. E, Average vertical eye velocity during up/down translation. F, Vector plot summary for the vestibular translation condition for the same animal as in C (green, left; red, down; purple, right; black, up). G, Distribution of eye speed (vector magnitude) for the same data depicted in C. H, Distribution of eye direction relative to stimulus direction for the data depicted in C. I, Average eye speed across animals for vestibular rotation (projector, laser, and darkness conditions) and vestibular translation (projector condition only). J, Average eye direction relative to stimulus direction for vestibular rotation and translation (format as in I).

To quantify the direction and speed of eye movements relative to stimulus motion, we measured the time-average horizontal and vertical components of eye velocity within a 400 ms time window (0.9–1.3 s poststimulus onset) that generally encompassed the peak eye velocity (see Fig. 3A). The eye velocity components were then averaged across stimulus repetitions for each distinct direction of motion. Thus, for each recording session, we obtained an average eye velocity vector for each different direction of motion within the fronto-parallel plane. A set of such eye movement vectors for one animal is shown in Figure 3C for vestibular rotation in the projector condition.
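A minimal sketch of this summary step is given below. It assumes velocity traces sampled at 250 Hz that start at stimulus onset, with excluded fast-phase samples stored as `None`. The two-dimensional direction convention (0° rightward, 90° upward) and the function names are hypothetical.

```python
import math

def window_mean(velocity, fs=250.0, t_start=0.9, t_stop=1.3):
    """Mean velocity in the analysis window, ignoring excluded samples."""
    i0, i1 = int(round(t_start * fs)), int(round(t_stop * fs))
    vals = [v for v in velocity[i0:i1] if v is not None]
    return sum(vals) / len(vals) if vals else float("nan")

def eye_vector(h_trials, v_trials, fs=250.0):
    """Average (horizontal, vertical) eye velocity across repetitions."""
    h = [window_mean(tr, fs) for tr in h_trials]
    v = [window_mean(tr, fs) for tr in v_trials]
    return sum(h) / len(h), sum(v) / len(v)

def speed_and_direction(vec, stim_dir_deg):
    """Eye speed and absolute angle (0-180 deg) between eye and stimulus."""
    speed = math.hypot(vec[0], vec[1])
    eye_dir = math.degrees(math.atan2(vec[1], vec[0]))
    diff = abs((eye_dir - stim_dir_deg + 180) % 360 - 180)
    return speed, diff

# Example: two repetitions with leftward residual eye velocity
h_trials = [[-0.7] * 500, [-0.9] * 500]
v_trials = [[0.0] * 500, [0.0] * 500]
vec = eye_vector(h_trials, v_trials)
speed, diff = speed_and_direction(vec, stim_dir_deg=0.0)
```

Here a rightward stimulus direction paired with leftward eye velocity yields a directional difference of 180°, the signature expected of an incompletely suppressed compensatory reflex.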

Receptive field mapping and reverse correlation analysis.

It was important to determine the receptive field locations of MT neurons relative to both the fixation target and the boundaries of the display screen, as retinal slip of these visual features may be expected to elicit responses. For a subset of MT neurons, we obtained quantitative maps of visual receptive field structure using a multi-patch reverse correlation method that we have previously used to characterize MSTd receptive fields (for details, see Chen et al., 2008).

Briefly, either a 4 × 4 or 6 × 6 grid covered a region of the display screen containing the receptive field of the MT neuron under study. At each location in the grid, a patch of drifting random dots was presented. Dots drifted in one of 8 possible directions, 45° apart, and the direction of motion of each patch was chosen randomly (from a uniform distribution) every 100 ms (6 video frames). The direction of motion of each patch was independent of the other patches presented simultaneously. The speed of motion was generally 40°/s, although this was sometimes reduced for MT neurons that preferred slower speeds.

Responses of MT neurons to this multipatch stimulus were analyzed using reverse correlation, as detailed previously (Chen et al., 2008). For each location in the mapping grid, each spike was assigned to the direction of the stimulus that preceded the spike by T ms, where T is the reverse correlation delay. This was repeated for a range of correlation delays from 0 to 200 ms, yielding a direction-time map for each location in the mapping grid. The correlation delay that produced the maximal variance in the direction maps was then selected, and a direction tuning curve for each grid location was computed at this peak correlation delay. These direction tuning curves were fit with a wrapped Gaussian function, and the amplitude of the tuning curve at each grid location was taken as a measure of neural response for each stimulus location. These values were then plotted as a color contour map (see Fig. 4) to visualize the spatial location and extent of the visual receptive field. These quantitative maps were found to agree well with initial estimates of MT receptive fields based on hand mapping.
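The core of the reverse correlation step can be sketched for a single grid location. This is a simplified illustration, not the analysis of Chen et al. (2008): it counts spikes per direction without normalizing by how often each direction was shown, selects the delay from one location instead of the full set of direction maps, and omits the wrapped Gaussian fit. Function names and the 10 ms delay step are assumptions.

```python
def direction_histogram(spike_times_ms, direction_seq, delay_ms,
                        update_ms=100, n_dirs=8):
    """Count spikes by the direction shown `delay_ms` before each spike.

    direction_seq[k] is the direction index (0..n_dirs-1) displayed during
    the k-th 100 ms update interval at this grid location.
    """
    counts = [0] * n_dirs
    for s in spike_times_ms:
        idx = int((s - delay_ms) // update_ms)
        if 0 <= idx < len(direction_seq):
            counts[direction_seq[idx]] += 1
    return counts

def variance(xs):
    """Population variance of a list of numbers."""
    m = sum(xs) / len(xs)
    return sum((x - m) ** 2 for x in xs) / len(xs)

def best_delay(spike_times_ms, direction_seq, delays_ms=range(0, 201, 10)):
    """Delay (ms) whose direction histogram has the largest variance."""
    return max(delays_ms,
               key=lambda d: variance(
                   direction_histogram(spike_times_ms, direction_seq, d)))
```

A delay that matches the response latency concentrates spikes in the bins of the preferred direction, so the histogram variance peaks there. Mismatched delays spread spikes across neighboring stimulus intervals and flatten the histogram.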

Results

Data were collected from 102 MT and 53 MSTd neurons recorded from 6 hemispheres of three monkeys. Two monkeys were used for MT and two for MSTd recordings. One monkey was therefore used for both MT and MSTd recordings. Neurons were not selected according to directional tuning for visual or vestibular stimuli; rather, any neuron was recorded that exhibited spontaneous activity or activity in response to flicker of a large-field random-dot stimulus. In initial recordings from area MT, we used the same experimental protocol used by Takahashi et al. (2007) to study MSTd neurons, hereafter referred to as the projector condition (see Materials and Methods). Figure 1 illustrates data from an example MT neuron tested with the 3D rotation protocol, in which the animal was rotated around 26 axes corresponding to all combinations of azimuth and elevation angles in increments of 45° (see inset). Each movement trajectory, either real (vestibular condition) or visually simulated (visual condition), had a duration of 2 s and consisted of a Gaussian velocity profile. This MT neuron showed clear tuning for both vestibular and visual rotations, as illustrated by the color contour plots of mean firing rate (Fig. 1A,B) and by the corresponding peristimulus time histograms (PSTHs) (Fig. 1C,D).

Of 55 MT cells tested under both the vestibular and visual rotation conditions, about half (27, 49%) showed significant tuning in the vestibular condition (ANOVA, p < 0.05), whereas all 55 showed significant tuning in the visual condition. Rotational tuning, as assessed by a direction discrimination index (or DDI, see Materials and Methods), was significantly stronger in the visual than the vestibular condition overall (Wilcoxon signed-rank test, p ≪ 0.001) (Fig. 2A). As shown in Figure 2B, direction preferences for visual versus vestibular rotation tended to be opposite, as was also seen regularly in area MSTd (Takahashi et al., 2007).

In contrast to the rotation condition, only 8/47 (17%) MT cells showed significant tuning during the vestibular translation condition (ANOVA, p < 0.05). All 47 neurons showed significant visual translation tuning. In general, vestibular translation responses were quite weak, and resulted in DDI values that were much smaller than those elicited by visual translation stimuli (Fig. 2C) (Wilcoxon signed-rank test, p ≪ 0.001). Inspection of PSTHs from all neurons revealed that response modulations were generally not consistent with either stimulus velocity or acceleration. Supplemental Figure 1, available at www.jneurosci.org as supplemental material, shows responses from 4 MT neurons with the largest DDI values in the vestibular translation condition. Among the 8 neurons with weak but significant spatial tuning, the difference in direction preferences between the visual and vestibular translation conditions was relatively uniform and not biased toward 180° (Fig. 2D).

The rotational stimuli used here normally generate a robust rotational vestibulo-ocular reflex (RVOR). Although the animals were trained to suppress their RVOR, the 2 × 2° fixation window allows for some residual eye velocity, thus resulting in a visual motion stimulus caused by the fixation point moving relative to the retina. Furthermore, because the DLP projector created substantial background illumination with a very faint screendoor texture (see Materials and Methods), these residual eye movements could activate MT neurons and account for the observed responses in the vestibular rotation condition (Fig. 2A, filled symbols).

Some aspects of the data in Figure 2 support this interpretation. First, the direction preference of MT cells in the vestibular rotation condition was generally opposite to their visual preference (Fig. 2B). Because stimulus directions are referenced to body motion (real or simulated), a vestibular response that is due to retinal slip from an incompletely suppressed RVOR would result in a direction preference opposite to that seen in the visual condition. Second, MT responses were weaker for vestibular translation than vestibular rotation. This result is expected if responses are driven by retinal slip, because the translational VOR (TVOR) is much weaker than the RVOR over the range of stimulus parameters used here (for review, see Angelaki and Hess, 2005). These considerations support the possibility that the observed MT responses to vestibular stimulation might not represent an extra-retinal response to self-motion but rather reflect the exquisite sensitivity of MT neurons to retinal motion slip. Thus, it is critical to characterize the residual eye movements that occur during fixation in the projector condition.

Eye movement analysis

To determine whether there were systematic eye movements in response to vestibular rotation and translation in the projector condition, we analyzed eye traces measured during movements within the fronto-parallel plane (see Materials and Methods for details). Figure 3A shows the average horizontal eye velocity across 54 sessions in response to vestibular yaw rotation. Leftward yaw rotation (black trace) results in a rightward eye movement with a peak velocity near 2°/s. Similarly, rightward yaw rotation (red trace) results in leftward eye velocity as expected from an incompletely suppressed RVOR. A similar pattern of eye traces is seen in Figure 3B for vestibular pitch rotation. These residual eye movements are summarized for one animal by the vector plot in Figure 3C. Each vector represents the average eye velocity for a particular direction of motion in one recording session, measured during a 400 ms time window centered on the peak eye velocity (Fig. 3A, vertical lines). For example, the set of green vectors in Figure 3C represents eye velocity in response to leftward yaw rotation, and the red vectors represent eye movements in response to pitch down. The distribution of eye speeds (vector magnitudes) corresponding to the data of Figure 3C is shown in Figure 3G, and it can be seen that the average eye speed is <1°/s. Figure 3H shows that the residual eye movements in response to vestibular rotation were generally opposite to the direction of head rotation.

During vestibular translation, some residual eye velocity was also observed (Fig. 3D,E). Note, however, that these residual eye movements were substantially smaller in amplitude and narrower in time than those seen during rotation. They were also more biphasic in waveform (Fig. 3E), thus resulting in less cumulative retinal slip. This difference is emphasized by the vector plot in Figure 3F, which shows that eye movements for this animal were much smaller in response to vestibular translation and less systematically related to stimulus direction. These data are summarized across animals in Figure 3, I and J. Mean eye speed in response to translation (0.14°/s) was significantly smaller than mean eye speed (0.71°/s) in response to rotation (Fig. 3I, leftmost vs rightmost bars) (p < 0.001, t test). Moreover, the average directional difference between eye and stimulus was larger (161°) for rotation than translation (121°), as shown in Figure 3J.

The eye movement analyses of Figure 3 are consistent with the possibility that significant vestibular rotation tuning in the projector condition may be driven by residual eye movements that are much stronger than those seen in the vestibular translation condition. We next examined whether these vestibular rotation responses depended on receptive field location.

Receptive field analysis

If MT responses in the vestibular rotation condition are driven by retinal slip due to an incompletely suppressed RVOR, then these responses may depend on receptive field location. Retinal slip of the fixation target or the visible screen boundaries might be expected to activate MT neurons with receptive fields that are near the fovea or at large eccentricities, respectively. Alternatively, MT neurons at all eccentricities might respond to retinal slip of the faint visual texture produced by the background luminance of the projector. Our analysis suggests that both of these factors contribute to the observed responses.

Figure 4 shows receptive field maps measured using a reverse correlation technique (Chen et al., 2008) (left column), vestibular rotation tuning profiles (middle column), and response PSTHs (right column) for 4 example MT neurons. The neuron in Figure 4A–C has a receptive field that overlaps the fovea (Fig. 4A), and shows robust responses in the vestibular rotation condition that are well tuned (Fig. 4B,C). These responses may be driven by retinal slip of the fixation target. In contrast, the other three neurons depicted in Figure 4 have receptive fields that are well separated from both the fixation target and the boundaries of the projection screen (screen boundaries are at ±45°), yet these neurons still produce robust responses in the vestibular rotation condition. We infer that the responses of these MT neurons are likely driven by retinal slip of the faint background texture produced by the DLP projector.

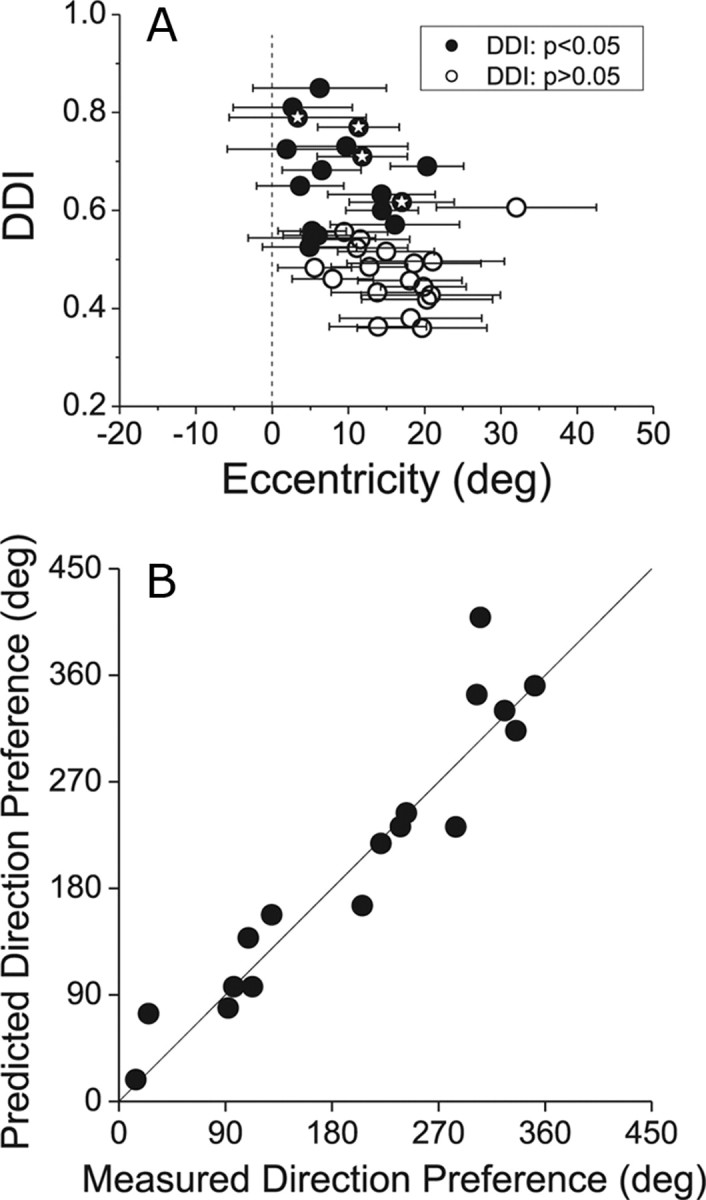

Figure 5A summarizes the relationship between directional selectivity (DDI) in the vestibular rotation condition and receptive field location. The location of data points along the abscissa represents the eccentricity of the center of the receptive field, and the horizontal error bars represent the size of the receptive field (±2 SD of a two-dimensional Gaussian fit). Filled symbols denote MT neurons with significant vestibular rotation tuning (p < 0.05), and symbols containing stars indicate the example neurons of Figure 4. There is a significant negative correlation (Spearman r = −0.50, p < 0.01) between DDI and receptive field eccentricity, indicating that proximity of the receptive field to the fixation point does contribute to vestibular rotation tuning. Nevertheless, there are several neurons (including the 3 examples in Fig. 4D–L) that show significant vestibular rotation tuning yet have receptive fields well separated from the fovea and screen boundaries. Thus, retinal slip of both the fixation point and the faint visual background appears to drive MT responses.

Figure 5.

A, Relationship between the strength of directional tuning in the vestibular rotation condition (using the projector) and the eccentricity of MT receptive fields. Each receptive field map, as in Figure 4, was fit with a two-dimensional Gaussian function. The eccentricity of the center of the receptive field is plotted on the abscissa, and the DDI is plotted on the ordinate. Filled symbols denote neurons with statistically significant rotation tuning (p < 0.05). Horizontal error bars represent receptive field size as ±2 SD of the Gaussian fit. Thus, the horizontal error bars contain 95% of the area of the receptive field. Symbols filled with stars indicate the 4 example neurons shown in Figure 4. B, Comparison of measured visual direction preferences with predicted preferences from vestibular rotation tuning in the projector condition. See Results for details. The strong correlation suggests that vestibular rotation tuning in the projector condition reflects visual responses to retinal slip.

If rotation tuning in the projector condition is driven by retinal slip due to an incompletely suppressed RVOR, then the 3D rotation preference of MT neurons should be linked to the 2D visual direction preference of the neurons. For 17 MT neurons with significant vestibular rotation tuning, reverse correlation maps were available to test this prediction. For each of these neurons, we computed the projection of the preferred 3D rotation axis onto the fronto-parallel plane (for 10/17 neurons, the 3D rotation preference was within 40° of the fronto-parallel plane, and none of the neurons had a 3D rotation preference within 30° of the roll axis). By adding 90° to this projected rotation preference (right-hand rule), we predicted the 2D visual motion direction within the fronto-parallel plane that should best activate the neuron, given that residual eye movements are opposite to the fronto-parallel component of rotation (Fig. 3H,J). This predicted direction preference is plotted on the ordinate in Figure 5B. From the reverse correlation map, we extracted the 2D visual direction preference of each neuron by averaging the direction preferences for each location within the map that showed significant directional tuning (Chen et al., 2008). This measured visual direction preference is plotted on the abscissa in Figure 5B. Measured and predicted direction preferences were strongly correlated (circular-circular correlation, r = 0.98, p < 0.001), indicating that the vestibular rotation preference in the projector condition was highly predictable from the visual direction preference measured separately using reverse correlation.
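The geometric prediction described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the analysis code used in the study. It assumes a spherical convention in which azimuth 0° points along the interaural axis, azimuth 90° points along the roll (naso-occipital) axis, and elevation 90° points along the vertical axis; the function name `predicted_visual_direction` is hypothetical.

```python
import math

def predicted_visual_direction(azimuth_deg, elevation_deg):
    """Predict a 2D visual direction preference from a preferred 3D rotation axis.

    Assumed convention (not taken from the text): x = interaural axis,
    y = roll (naso-occipital) axis, z = vertical axis.
    Returns (predicted_direction_deg, angle_from_frontoparallel_plane_deg).
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = math.cos(el) * math.cos(az)  # interaural component
    y = math.cos(el) * math.sin(az)  # roll component, dropped by the projection
    z = math.sin(el)                 # vertical component

    # Direction of the rotation axis after projection onto the fronto-parallel plane.
    projected_deg = math.degrees(math.atan2(z, x))

    # Add 90 degrees to obtain the predicted 2D motion direction.
    predicted_deg = (projected_deg + 90.0) % 360.0

    # Angular distance of the 3D axis from the fronto-parallel plane.
    tilt_deg = math.degrees(math.asin(min(1.0, abs(y))))
    return predicted_deg, tilt_deg
```

Under these assumptions, an axis with a large second return value lies close to the roll axis, where the projection becomes unreliable; this is why the text reports how far each preferred axis lay from the fronto-parallel plane and from the roll axis.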

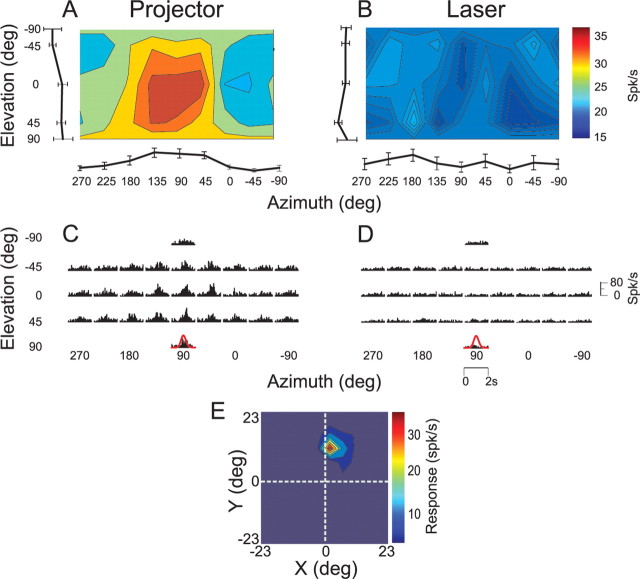

Vestibular rotation tuning in MT: projector versus laser and darkness conditions

To further test whether MT responses to vestibular rotation can be explained by retinal slip due to residual RVOR, two additional stimulus conditions were used (see Materials and Methods for details). In the laser condition, the fixation target was generated by a head-fixed laser in an otherwise completely dark room. This condition eliminates the faint visual background texture produced by the projector. In the darkness condition, no fixation point was presented and the animal was in complete darkness. Thus, visual fixation was not required and the animal was allowed to generate an RVOR. The darkness condition should eliminate any remaining responses driven by retinal slip of the fixation point.

Responses of an example MT neuron under both the projector and laser conditions are illustrated in Figure 6. This cell showed clear rotation tuning (Fig. 6A) (DDI = 0.61, ANOVA, p < 0.01) and stimulus-related PSTHs (Fig. 6C) when the fixation target was generated by the projector. When identical rotational motion was delivered while the monkey fixated a laser-generated target in an otherwise dark room, responses were much weaker and rotation tuning was no longer significant (Fig. 6B) (DDI = 0.49, ANOVA, p = 0.16). The corresponding PSTHs in the laser condition lacked clear stimulus-related temporal modulation (Fig. 6D). Given that both the projector and laser conditions involved fixation of a small (0.2 × 0.2°) visual target and that residual eye movements were similar in these two conditions (Fig. 3I,J), these data suggest that most of the response of this neuron to vestibular rotation was driven by retinal slip of the faintly textured background illumination of the projector. This inference is consistent with the observation that this neuron had a receptive field that did not overlap the fixation point or the screen boundaries (Fig. 6E).

Figure 6.

A–D, 3D direction tuning profiles for an MT neuron tested in the vestibular rotation condition when the fixation point is generated by (A, C) the video projector or (B, D) a head-fixed laser. Color contour maps in A and B show the mean firing rate as a function of azimuth and elevation angles (format as in Fig. 1). PSTHs in C and D show the corresponding temporal response profiles. E, Receptive field map for this neuron, format as in Figure 4.

We studied the responses of 41 MT cells (15 and 26 from each of the two animals used for MT recordings) under the projector condition and either the laser (n = 36) or darkness (n = 22) conditions (17 MT cells were tested under all 3 conditions). To quantify the similarity in rotational tuning across conditions, we computed a correlation coefficient between pairs of 3D tuning profiles, such as those shown in Figure 6, A and B. To obtain this correlation coefficient, the mean response to each direction in the projector condition was plotted against the mean response to the corresponding direction in the laser/darkness condition, and a Pearson correlation coefficient was computed from these data.
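The tuning-profile comparison described above amounts to a Pearson correlation across matched stimulus directions. A minimal sketch follows, assuming that each tuning profile is supplied as a list of mean firing rates ordered identically by direction in both conditions; the function name `tuning_profile_correlation` and the example values are hypothetical.

```python
import math

def tuning_profile_correlation(resp_a, resp_b):
    """Pearson correlation between two tuning profiles.

    resp_a, resp_b: mean responses (e.g., spikes/s), one value per stimulus
    direction, listed in the same direction order for both conditions.
    """
    if len(resp_a) != len(resp_b) or len(resp_a) < 2:
        raise ValueError("profiles must have equal length of at least 2")
    n = len(resp_a)
    mean_a = sum(resp_a) / n
    mean_b = sum(resp_b) / n
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(resp_a, resp_b))
    var_a = sum((a - mean_a) ** 2 for a in resp_a)
    var_b = sum((b - mean_b) ** 2 for b in resp_b)
    if var_a == 0 or var_b == 0:
        raise ValueError("correlation is undefined for a flat profile")
    return cov / math.sqrt(var_a * var_b)

# Hypothetical mean responses for four directions in two conditions.
projector = [12.0, 30.0, 18.0, 6.0]
laser = [9.0, 10.0, 11.0, 9.5]
r = tuning_profile_correlation(projector, laser)
```

A coefficient near zero indicates that the direction dependence seen in one condition is not reproduced in the other, whereas a value near 1 indicates that the tuning profile is preserved.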

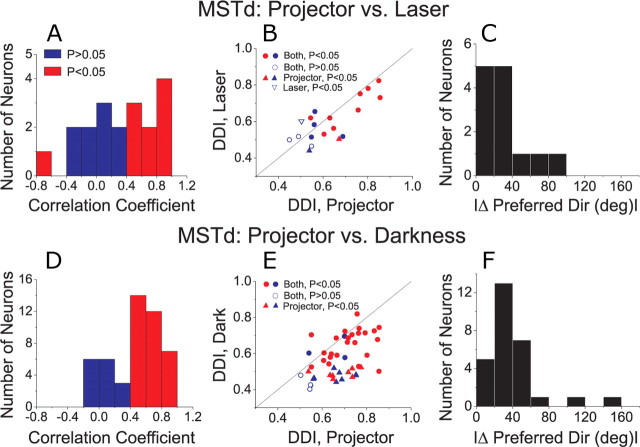

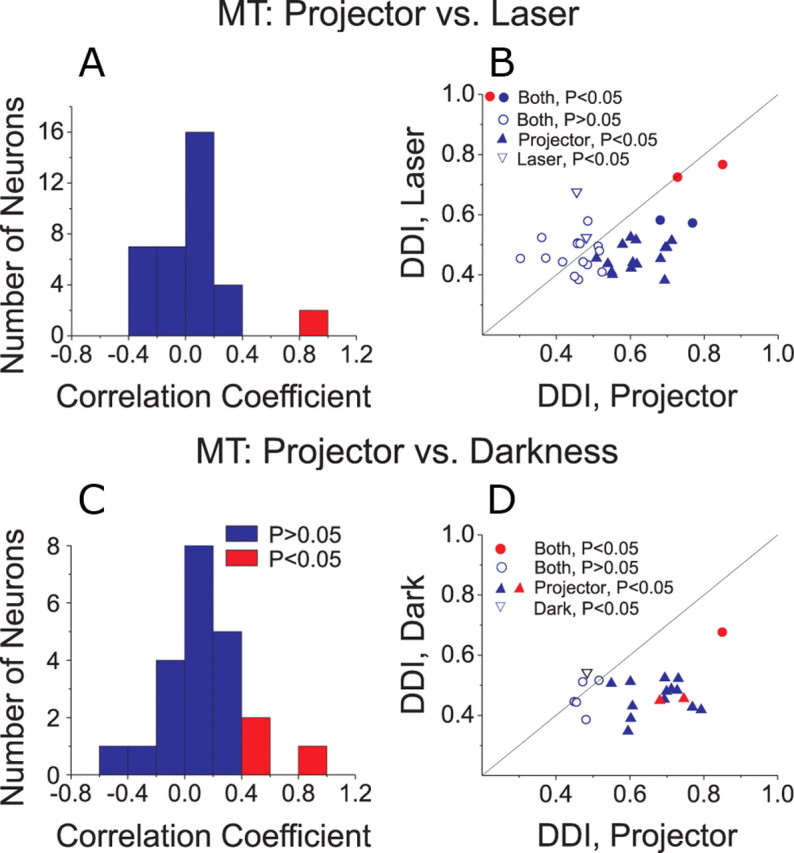

Among 36 MT cells tested under both the projector and laser conditions, only 2 (6%) showed a statistically significant correlation (p < 0.05) between the two tuning profiles (Fig. 7A), and the mean correlation coefficient across neurons was not significantly different from zero (t test, p = 0.29). This result is further illustrated by plotting the corresponding DDI values in Figure 7B. There was no significant correlation between the strength of rotational tuning under the projector and laser conditions, as assessed by DDI (p = 0.30). Only 6/36 (17%) MT cells showed significant tuning (ANOVA, p < 0.05) in the laser condition, and 4 of these neurons also showed significant tuning in the projector condition (Fig. 7B, filled circles). Two of these 4 neurons had receptive fields that overlapped the fovea, and these were the only neurons to show a significant correlation between rotational tuning in the projector and laser conditions (Fig. 7A,B, red bars/symbols). Responses of these two neurons, which had the two largest DDI values in the laser condition, were thus likely driven by retinal slip of the fixation target. Among the vast majority of neurons (30/36, 83%) with no significant tuning in the laser condition, half showed significant tuning in the projector condition (Fig. 7B, filled upright triangles, n = 15) and half showed no significant tuning in either the projector or laser conditions (Fig. 7B, open circles, n = 15).
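The four-way grouping of cells used in these comparisons can be made explicit with a short sketch. It assumes that each cell is summarized by one ANOVA p value per condition and that the criterion is p < 0.05; the function names and category labels are hypothetical.

```python
from collections import Counter

def classify_cell(p_projector, p_other, alpha=0.05):
    """Assign a cell to one of four tuning categories from two ANOVA p values."""
    sig_projector = p_projector < alpha
    sig_other = p_other < alpha
    if sig_projector and sig_other:
        return "both"
    if sig_projector:
        return "projector_only"
    if sig_other:
        return "other_only"
    return "neither"

def tally_categories(p_value_pairs, alpha=0.05):
    """Count cells per category; p_value_pairs holds (p_projector, p_other) tuples."""
    return Counter(classify_cell(a, b, alpha) for a, b in p_value_pairs)
```

For the projector versus laser comparison, the counts reported in the text (4 cells tuned in both conditions, 2 tuned in the laser condition only, 15 tuned in the projector condition only, and 15 tuned in neither) sum to the 36 cells tested.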

Figure 7.

A–D, Comparison of vestibular rotation tuning in MT between projector and laser (A, B) or darkness (C, D) conditions. A, C, Histograms show the distribution of correlation coefficients between 3D tuning profiles measured in the projector versus laser (A) or projector versus darkness (C) conditions. Red bars indicate cells with significant (p < 0.05) correlations. B, D, Scatter plots compare the DDI between stimulus conditions. Filled circles, Cells with significant tuning (ANOVA, p < 0.05) under both conditions. Open circles, Cells with nonsignificant tuning under both conditions. Filled upright triangles, Cells with significant tuning only in the projector condition. Open inverted triangles, Cells with significant tuning only in the laser condition. Red symbols denote cells with significant (p < 0.05) correlation coefficients between the projector/laser or projector/darkness conditions.

Figure 7, C and D, shows the corresponding comparisons between the projector and darkness conditions. Again, the mean correlation coefficient between tuning profiles was not significantly different from zero (t test, p = 0.25) and only 3/22 MT cells had tuning curves that were significantly correlated between the projector and darkness conditions (Fig. 7C, red). In addition, there was no significant correlation between DDI values for the projector and darkness conditions (Fig. 7D, p = 0.60). Only two MT cells (9%) showed significant rotational tuning in darkness (Fig. 7D), and the DDI was rather low for one of these cells (open inverted triangle). The other cell (filled red circle) was an outlier in Figure 7D, and we cannot firmly exclude the possibility that this cell was recorded from area MST near the boundary with MT. Among the remaining neurons, 15/22 cells (68%) showed significant rotation tuning in the projector condition only (Fig. 7D, filled triangles) and 5/22 cells showed no significant tuning in either the projector or darkness conditions. Across the population of MT neurons, the median DDI was significantly greater for the projector condition than either the laser or darkness conditions (Wilcoxon matched pairs test, p < 0.01 for both comparisons). Overall, MT responses in the vestibular rotation condition were rare when retinal slip of the visual background was removed in the laser and darkness conditions.

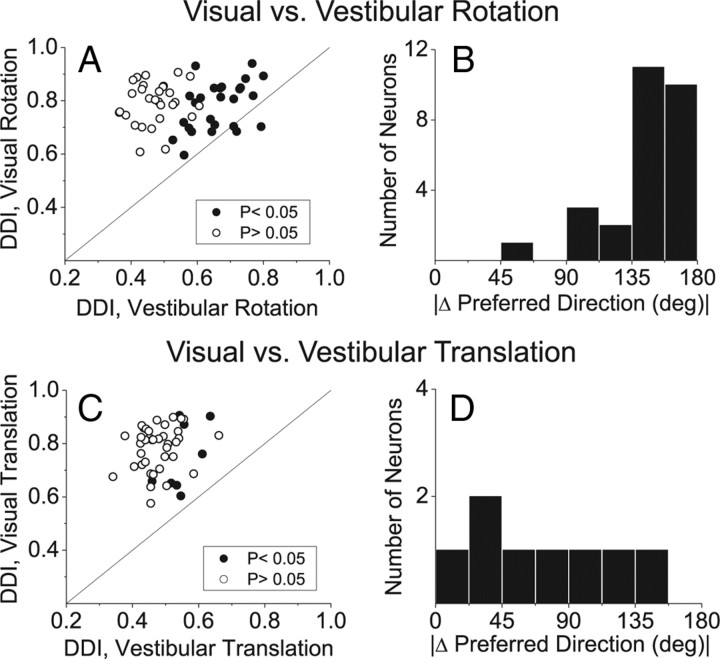

Vestibular rotation tuning in MSTd: projector versus laser and darkness conditions

For comparison with MT, we characterized the vestibular rotation tuning of 19 MSTd neurons under both laser and projector conditions, and 48 MSTd neurons under both projector and darkness conditions (14 cells were tested in all 3 conditions). Unlike MT cells, the majority of MSTd neurons showed clear response modulation and consistent 3D rotational tuning under all stimulus conditions. Responses from an example MSTd neuron are shown in Figure 8. This cell showed clear rotation tuning under both the projector and laser conditions, although response strength was a bit lower in the laser condition (Fig. 8, A vs B; DDI = 0.855 vs 0.731, respectively). Direction preferences were also fairly similar (projector: 89° azimuth and 7° elevation; laser: 123° azimuth and 15° elevation). In addition, response PSTHs largely followed the Gaussian velocity profile of the rotation stimulus under both stimulus conditions (Fig. 8, C vs D).
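The similarity of two 3D direction preferences, such as the pair quoted above, can be expressed as the angle between the corresponding unit vectors. The sketch below assumes a standard spherical parameterization of azimuth and elevation; the resulting angle does not depend on which physical axis azimuth 0° denotes, provided that both preferences use the same convention. The function names are hypothetical.

```python
import math

def unit_vector(azimuth_deg, elevation_deg):
    """Convert azimuth and elevation (degrees) to a 3D unit vector."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az),
            math.cos(el) * math.sin(az),
            math.sin(el))

def angular_difference(az1, el1, az2, el2):
    """Angle in degrees (0 to 180) between two 3D direction preferences."""
    v1 = unit_vector(az1, el1)
    v2 = unit_vector(az2, el2)
    dot = sum(a * b for a, b in zip(v1, v2))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.acos(dot))

# Preferences of the example MSTd neuron in the projector and laser conditions.
delta = angular_difference(89.0, 7.0, 123.0, 15.0)
```

For the example neuron, this calculation gives a separation of roughly 34° between the projector and laser preferences.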

Figure 8.

A–D, 3D rotation tuning profiles for an MSTd neuron tested under the projector (A, C) and laser (B, D) conditions. Color contour maps in A and B show mean firing rate as a function of azimuth and elevation angles. PSTHs in C and D illustrate the corresponding temporal response profiles (format as in Fig. 1).

Across the population of MSTd neurons studied, rotation tuning profiles measured in the projector condition were often significantly correlated (Pearson correlation, p < 0.05) with those measured in the laser condition (Fig. 9A, 10/19 significant) or the darkness condition (Fig. 9D, 33/48 significant). In both cases, the average correlation coefficient was significantly greater than zero (t test, Fig. 9A: p = 0.007; Fig. 9D: p ≪ 0.001), unlike the pattern of results seen in area MT (Fig. 7A,C). Similarly, the strength of vestibular rotation tuning in the projector condition (as measured by DDI) was significantly correlated with that measured in the laser (Fig. 9B: p = 0.0016) and darkness conditions (Fig. 9E: p < 0.001). When comparing laser and projector conditions, most cells were significantly tuned in both conditions (13/19, 68%; Fig. 9B, filled circles) or neither condition (3/19, open circles). Similarly, when comparing projector and darkness conditions, 28 of 48 (58%) MSTd neurons showed significant tuning in both conditions (Fig. 9E, filled circles), whereas only 4 cells lacked significant tuning in both conditions. Among the remaining MSTd neurons, 16 of 48 cells (33%) showed a significant DDI in the projector condition but not in darkness. These neurons, like those in MT, may be responding to weak background motion in the projector condition. Thus, the percentage of rotation-selective neurons in the vestibular condition was likely overestimated to some degree by Takahashi et al. (2007).

Figure 9.

A–F, Comparison of vestibular rotation tuning in area MSTd between the projector and laser (A–C) or darkness (D–F) conditions. A, D, Histograms show the distribution of correlation coefficients between 3D tuning profiles measured in the projector versus laser (A) or projector versus darkness (D) conditions. B, E, Scatter plots compare the DDI between stimulus conditions (format as in Figure 7). C, F, Histograms show the absolute difference in 3D direction preferences (|Δ preferred direction|) between projector and laser conditions (C) or projector and darkness conditions (F). Only cells with significant tuning in both conditions were included in these histograms.

Discussion

Along with optic flow, vestibular information helps us navigate through space. A number of studies have reported the presence of both optic flow and inertial motion tuning in areas MSTd (Duffy, 1998; Bremmer et al., 1999; Page and Duffy, 2003; Gu et al., 2006; Takahashi et al., 2007) and VIP (Schaafsma and Duysens, 1996; Bremmer et al., 2002a). In particular, two recent studies have emphasized a potential role of MSTd in heading perception based on vestibular signals. First, responses of MSTd neurons show significant trial-by-trial correlations with perceptual decisions about heading that rely strongly on vestibular input (Gu et al., 2007). Second, MSTd responses to inertial motion were abolished after bilateral labyrinthectomy, showing that these responses arise from activation of the vestibular system (Takahashi et al., 2007). In addition, a subpopulation of MSTd neurons with congruent visual and vestibular tuning appears to integrate visual and vestibular cues for improved heading sensitivity (Gu et al., 2008).

Despite the importance of visual/vestibular integration for self-motion perception, the location in the brain where visual and vestibular signals first combine remains unknown. More specifically, the source of the vestibular signals observed in area MSTd is unclear. These signals could arrive in MSTd through the same pathways that carry visual signals or through some separate pathway from other vestibular regions of the brain. Because one of the major projections to areas MSTd and VIP arises from visual area MT (Maunsell and van Essen, 1983; Ungerleider and Desimone, 1986), the present experiments were designed to explore whether MT neurons show directionally selective responses to physical translations and rotations of the subject, as seen previously in area MSTd (Gu et al., 2006, 2007; Takahashi et al., 2007) and area VIP (Bremmer et al., 2002a; Schlack et al., 2002; Klam and Graf, 2006; Chen et al., 2007). Area MT is well known for its important roles in the processing of visual motion (Born and Bradley, 2005), and has been studied extensively with regard to its roles in perception of direction (Britten et al., 1992; Pasternak and Merigan, 1994; Salzman and Newsome, 1994; Purushothaman and Bradley, 2005), perception of speed (Pasternak and Merigan, 1994; Orban et al., 1995; Priebe and Lisberger, 2004; Liu and Newsome, 2005), perception of depth (DeAngelis et al., 1998; Uka and DeAngelis, 2003, 2004, 2006; Chowdhury and DeAngelis, 2008), and perception of 3D structure from motion and disparity (Xiao et al., 1997; Bradley et al., 1998; Dodd et al., 2001; Vanduffel et al., 2002; Nguyenkim and DeAngelis, 2003). Responses of MT neurons have generally been considered primarily visual in origin. For example, smooth pursuit eye movements were found to robustly modulate responses of MST neurons but not MT neurons (Newsome et al., 1988). However, eye position has been reported to modulate MT responses (Bremmer et al., 1997). In addition, we have recently shown that extra-retinal signals modulate responses of MT neurons to code depth sign from motion parallax (Nadler et al., 2008). In that study, both head movements and eye movements were possible sources of extra-retinal inputs. That finding, combined with the close anatomical connectivity between MT and MSTd, further motivated the present study to examine vestibular responses in area MT.

In the same experimental protocol that we used previously to study vestibular rotation responses in MSTd (Takahashi et al., 2007) and VIP (Chen et al., 2007), we found that approximately half of MT neurons were significantly tuned for vestibular rotation. In contrast, only 17% of MT neurons were tuned to vestibular translation in the projector condition, compared with ∼60% of MSTd neurons (Gu et al., 2006). As supported by the eye movement data in Figure 3, this difference in strength of vestibular responses to rotation and translation likely arises from differences between the rotational and translational VOR (for review, see Angelaki and Hess, 2005). Whereas the RVOR is robust at low frequencies, the TVOR gain in monkeys is small under the conditions of our experiment: 30 cm viewing distance and a relatively slow motion stimulus (Schwarz and Miles, 1991; Telford et al., 1995, 1997; Angelaki and Hess, 2001; Hess and Angelaki, 2003). Furthermore, because of the unpredictable direction and transient nature of the motion profiles we used, animals could not completely suppress their reflexive eye movements. This incomplete suppression of the VOR results in varying amounts of retinal slip (Fig. 3). Because of the background illumination of the video projector used to generate the head-fixed fixation target (see Materials and Methods), MT neurons would thus be stimulated visually by this retinal slip. This problem would be expected to be larger for rotation, because of the robustness and larger gain of the RVOR under the conditions of our experiment.

To further explore this possibility, we measured vestibular rotation tuning when the head-fixed fixation target was generated by a laser in an otherwise dark room. The results of Figure 7, A and B, show that much of the tuning seen in the projector condition was due to retinal slip of either the fixation target or the faintly textured background illumination of the video projector. We also recorded MT responses during vestibular rotation in complete darkness, with no fixation requirement. The latter condition eliminates all retinal stimulation, but has the caveat that the eyes are continuously moving during stimulus delivery. Only two MT neurons (9%) showed significant rotation tuning in darkness, just above the number expected by chance. Considering all of the data, we conclude that the rotation selectivity of MT neurons in the projector condition was largely due to retinal slip introduced by incomplete RVOR suppression. Note that the laser and darkness control experiments were not repeated for the vestibular translation condition because MT neurons showed little vestibular tuning for translation in the projector condition to begin with, presumably because a weak TVOR generates much less residual retinal slip (Fig. 3).

The virtual elimination of MT responses during vestibular rotation in darkness raises the question of whether retinal slip also drives the vestibular rotation tuning reported previously for MSTd neurons (Takahashi et al., 2007). This is not the case, however, as we found that most (58–68%) MSTd neurons maintained their rotation tuning in both the laser and darkness conditions. This indicates true vestibular responses in MSTd, consistent with the previous finding that these responses are abolished following labyrinthectomy (Takahashi et al., 2007). Nevertheless, approximately one-third of MSTd neurons were significantly tuned in the vestibular rotation condition only when the projector was used and not in the laser and darkness conditions. For these neurons, retinal slip due to incomplete suppression of the RVOR is a likely contributor. Thus, the percentage of MSTd neurons that are genuinely tuned to vestibular rotation is probably lower than reported previously by Takahashi et al. (2007), and is approximately the same as seen in the vestibular translation condition (∼60%) (Gu et al., 2006). This conclusion is consistent with the percentage of rotation-selective MSTd neurons observed during 0.5 Hz oscillations in total darkness (Liu and Angelaki, 2009).

In summary, unlike area MSTd, area MT does not modulate during vestibular stimulation in the absence of retinal motion. Thus, the visual/vestibular convergence observed in MSTd and VIP does not originate through inputs from area MT. It remains to be determined which of the other projections to MSTd and VIP carry vestibular information. Given the recent finding that vestibular signals in area MSTd have resolved the tilt/translation ambiguity inherent in the otolith afferents (Liu and Angelaki, 2009), a plausible source of polysynaptic vestibular input to area MSTd may be the posterior vermis of the cerebellum as neurons in this region, but not earlier stages of subcortical vestibular processing, also disambiguate tilt and translation (Yakusheva et al., 2007). In any case, it seems likely that visual motion signals in MSTd/VIP arrive mainly through projections from MT, whereas vestibular information arrives through distinct, not yet established routes. These findings suggest that visual/vestibular convergence for self-motion perception may first occur along the dorsal visual stream in cortical areas MSTd/VIP. Future studies will address these possibilities.

Footnotes

This work was supported by National Institutes of Health (NIH) Grants EY017866 and EY019087 (to D.E.A.) and NIH Grant EY016178 (to G.C.D.). We thank Amanda Turner and Erin White for excellent monkey care and training, Babatunde Adeyemo for eye movement analyses, and Yong Gu for valuable advice throughout these experiments.

References

- Angelaki DE, Hess BJ. Direction of heading and vestibular control of binocular eye movements. Vision Res. 2001;41:3215–3228. doi: 10.1016/s0042-6989(00)00304-7.

- Angelaki DE, Hess BJ. Self-motion-induced eye movements: effects on visual acuity and navigation. Nat Rev Neurosci. 2005;6:966–976. doi: 10.1038/nrn1804.

- Angelaki DE, Gu Y, DeAngelis GC. Multisensory integration: psychophysics, neurophysiology, and computation. Curr Opin Neurobiol. 2009;19:452–458. doi: 10.1016/j.conb.2009.06.008.

- Born RT, Bradley DC. Structure and function of visual area MT. Annu Rev Neurosci. 2005;28:157–189. doi: 10.1146/annurev.neuro.26.041002.131052.

- Bradley DC, Chang GC, Andersen RA. Encoding of three-dimensional structure-from-motion by primate area MT neurons. Nature. 1998;392:714–717. doi: 10.1038/33688.

- Bremmer F, Ilg UJ, Thiele A, Distler C, Hoffmann KP. Eye position effects in monkey cortex. I. Visual and pursuit-related activity in extrastriate areas MT and MST. J Neurophysiol. 1997;77:944–961. doi: 10.1152/jn.1997.77.2.944.

- Bremmer F, Kubischik M, Pekel M, Lappe M, Hoffmann KP. Linear vestibular self-motion signals in monkey medial superior temporal area. Ann N Y Acad Sci. 1999;871:272–281. doi: 10.1111/j.1749-6632.1999.tb09191.x.

- Bremmer F, Duhamel JR, Ben Hamed S, Graf W. Heading encoding in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002a;16:1554–1568. doi: 10.1046/j.1460-9568.2002.02207.x.

- Bremmer F, Klam F, Duhamel JR, Ben Hamed S, Graf W. Visual-vestibular interactive responses in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002b;16:1569–1586. doi: 10.1046/j.1460-9568.2002.02206.x.

- Britten KH, Shadlen MN, Newsome WT, Movshon JA. The analysis of visual motion: a comparison of neuronal and psychophysical performance. J Neurosci. 1992;12:4745–4765. doi: 10.1523/JNEUROSCI.12-12-04745.1992.

- Chen A, Henry E, DeAngelis GC, Angelaki DA. Comparison of responses to three-dimensional rotation and translation in the ventral intraparietal (VIP) and medial superior temporal (MST) areas of rhesus monkey. Soc Neurosci Abstr. 2007;33 715.19/FF12.

- Chen A, Gu Y, Takahashi K, Angelaki DE, DeAngelis GC. Clustering of self-motion selectivity and visual response properties in macaque area MSTd. J Neurophysiol. 2008;100:2669–2683. doi: 10.1152/jn.90705.2008.

- Chowdhury SA, DeAngelis GC. Fine discrimination training alters the causal contribution of macaque area MT to depth perception. Neuron. 2008;60:367–377. doi: 10.1016/j.neuron.2008.08.023.

- DeAngelis GC, Uka T. Coding of horizontal disparity and velocity by MT neurons in the alert macaque. J Neurophysiol. 2003;89:1094–1111. doi: 10.1152/jn.00717.2002.

- DeAngelis GC, Cumming BG, Newsome WT. Cortical area MT and the perception of stereoscopic depth. Nature. 1998;394:677–680. doi: 10.1038/29299.

- Dodd JV, Krug K, Cumming BG, Parker AJ. Perceptually bistable three-dimensional figures evoke high choice probabilities in cortical area MT. J Neurosci. 2001;21:4809–4821. doi: 10.1523/JNEUROSCI.21-13-04809.2001.

- Duffy CJ. MST neurons respond to optic flow and translational movement. J Neurophysiol. 1998;80:1816–1827. doi: 10.1152/jn.1998.80.4.1816.

- Duffy CJ, Wurtz RH. Sensitivity of MST neurons to optic flow stimuli. II. Mechanisms of response selectivity revealed by small-field stimuli. J Neurophysiol. 1991;65:1346–1359. doi: 10.1152/jn.1991.65.6.1346.

- Duffy CJ, Wurtz RH. Response of monkey MST neurons to optic flow stimuli with shifted centers of motion. J Neurosci. 1995;15:5192–5208. doi: 10.1523/JNEUROSCI.15-07-05192.1995.

- Froehler MT, Duffy CJ. Cortical neurons encoding path and place: where you go is where you are. Science. 2002;295:2462–2465. doi: 10.1126/science.1067426.

- Graziano MS, Andersen RA, Snowden RJ. Tuning of MST neurons to spiral motions. J Neurosci. 1994;14:54–67. doi: 10.1523/JNEUROSCI.14-01-00054.1994.

- Gu Y, Watkins PV, Angelaki DE, DeAngelis GC. Visual and nonvisual contributions to three-dimensional heading selectivity in the medial superior temporal area. J Neurosci. 2006;26:73–85. doi: 10.1523/JNEUROSCI.2356-05.2006.

- Gu Y, DeAngelis GC, Angelaki DE. A functional link between area MSTd and heading perception based on vestibular signals. Nat Neurosci. 2007;10:1038–1047. doi: 10.1038/nn1935.

- Gu Y, Angelaki DE, DeAngelis GC. Neural correlates of multisensory cue integration in macaque MSTd. Nat Neurosci. 2008;11:1201–1210. doi: 10.1038/nn.2191.

- Hess BJ, Angelaki DE. Dynamic modulation of ocular orientation during visually guided saccades and smooth-pursuit eye movements. Ann N Y Acad Sci. 2003;1004:132–141. doi: 10.1196/annals.1303.012.

- Klam F, Graf W. Discrimination between active and passive head movements by macaque ventral and medial intraparietal cortex neurons. J Physiol. 2006;574:367–386. doi: 10.1113/jphysiol.2005.103697.

- Komatsu H, Wurtz RH. Relation of cortical areas MT and MST to pursuit eye movements. I. Localization and visual properties of neurons. J Neurophysiol. 1988;60:580–603. doi: 10.1152/jn.1988.60.2.580.

- Liu J, Newsome WT. Correlation between speed perception and neural activity in the middle temporal visual area. J Neurosci. 2005;25:711–722. doi: 10.1523/JNEUROSCI.4034-04.2005.

- Liu S, Angelaki DE. Vestibular signals in macaque extrastriate visual cortex are functionally appropriate for heading perception. J Neurosci. 2009;29:8936–8945. doi: 10.1523/JNEUROSCI.1607-09.2009.

- Maunsell JH, van Essen DC. The connections of the middle temporal visual area (MT) and their relationship to a cortical hierarchy in the macaque monkey. J Neurosci. 1983;3:2563–2586. doi: 10.1523/JNEUROSCI.03-12-02563.1983.

- Nadler JW, Angelaki DE, DeAngelis GC. A neural representation of depth from motion parallax in macaque visual cortex. Nature. 2008;452:642–645. doi: 10.1038/nature06814.

- Newsome WT, Wurtz RH, Komatsu H. Relation of cortical areas MT and MST to pursuit eye movements. II. Differentiation of retinal from extraretinal inputs. J Neurophysiol. 1988;60:604–620. doi: 10.1152/jn.1988.60.2.604.

- Nguyenkim JD, DeAngelis GC. Disparity-based coding of three-dimensional surface orientation by macaque middle temporal neurons. J Neurosci. 2003;23:7117–7128. doi: 10.1523/JNEUROSCI.23-18-07117.2003.

- Orban GA, Saunders RC, Vandenbussche E. Lesions of the superior temporal cortical motion areas impair speed discrimination in the macaque monkey. Eur J Neurosci. 1995;7:2261–2276. doi: 10.1111/j.1460-9568.1995.tb00647.x.

- Page WK, Duffy CJ. Heading representation in MST: sensory interactions and population encoding. J Neurophysiol. 2003;89:1994–2013. doi: 10.1152/jn.00493.2002.

- Pasternak T, Merigan WH. Motion perception following lesions of the superior temporal sulcus in the monkey. Cereb Cortex. 1994;4:247–259. doi: 10.1093/cercor/4.3.247.

- Priebe NJ, Lisberger SG. Estimating target speed from the population response in visual area MT. J Neurosci. 2004;24:1907–1916. doi: 10.1523/JNEUROSCI.4233-03.2004.

- Prince SJ, Pointon AD, Cumming BG, Parker AJ. Quantitative analysis of the responses of V1 neurons to horizontal disparity in dynamic random-dot stereograms. J Neurophysiol. 2002;87:191–208. doi: 10.1152/jn.00465.2000.

- Purushothaman G, Bradley DC. Neural population code for fine perceptual decisions in area MT. Nat Neurosci. 2005;8:99–106. doi: 10.1038/nn1373.

- Salzman CD, Newsome WT. Neural mechanisms for forming a perceptual decision. Science. 1994;264:231–237. doi: 10.1126/science.8146653.

- Schaafsma SJ, Duysens J. Neurons in the ventral intraparietal area of awake macaque monkey closely resemble neurons in the dorsal part of the medial superior temporal area in their responses to optic flow patterns. J Neurophysiol. 1996;76:4056–4068. doi: 10.1152/jn.1996.76.6.4056.

- Schlack A, Hoffmann KP, Bremmer F. Interaction of linear vestibular and visual stimulation in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002;16:1877–1886. doi: 10.1046/j.1460-9568.2002.02251.x.

- Schwarz U, Miles FA. Ocular responses to translation and their dependence on viewing distance. I. Motion of the observer. J Neurophysiol. 1991;66:851–864. doi: 10.1152/jn.1991.66.3.851.

- Snyder J. Map projections: a working manual. Washington, DC: United States Government Printing Office; 1987. pp. 182–190.

- Takahashi K, Gu Y, May PJ, Newlands SD, DeAngelis GC, Angelaki DE. Multimodal coding of three-dimensional rotation and translation in area MSTd: comparison of visual and vestibular selectivity. J Neurosci. 2007;27:9742–9756. doi: 10.1523/JNEUROSCI.0817-07.2007.

- Tanaka K, Hikosaka K, Saito H, Yukie M, Fukada Y, Iwai E. Analysis of local and wide-field movements in the superior temporal visual areas of the macaque monkey. J Neurosci. 1986;6:134–144. doi: 10.1523/JNEUROSCI.06-01-00134.1986.

- Telford L, Howard IP, Ohmi M. Heading judgments during active and passive self-motion. Exp Brain Res. 1995;104:502–510. doi: 10.1007/BF00231984.

- Telford L, Seidman SH, Paige GD. Dynamics of squirrel monkey linear vestibuloocular reflex and interactions with fixation distance. J Neurophysiol. 1997;78:1775–1790. doi: 10.1152/jn.1997.78.4.1775.

- Uka T, DeAngelis GC. Contribution of middle temporal area to coarse depth discrimination: comparison of neuronal and psychophysical sensitivity. J Neurosci. 2003;23:3515–3530. doi: 10.1523/JNEUROSCI.23-08-03515.2003.

- Uka T, DeAngelis GC. Contribution of area MT to stereoscopic depth perception: choice-related response modulations reflect task strategy. Neuron. 2004;42:297–310. doi: 10.1016/s0896-6273(04)00186-2.

- Uka T, DeAngelis GC. Linking neural representation to function in stereoscopic depth perception: roles of the middle temporal area in coarse versus fine disparity discrimination. J Neurosci. 2006;26:6791–6802. doi: 10.1523/JNEUROSCI.5435-05.2006.

- Ungerleider LG, Desimone R. Cortical connections of visual area MT in the macaque. J Comp Neurol. 1986;248:190–222. doi: 10.1002/cne.902480204.

- Vanduffel W, Fize D, Peuskens H, Denys K, Sunaert S, Todd JT, Orban GA. Extracting 3D from motion: differences in human and monkey intraparietal cortex. Science. 2002;298:413–415. doi: 10.1126/science.1073574.

- Xiao DK, Marcar VL, Raiguel SE, Orban GA. Selectivity of macaque MT/V5 neurons for surface orientation in depth specified by motion. Eur J Neurosci. 1997;9:956–964. doi: 10.1111/j.1460-9568.1997.tb01446.x.

- Yakusheva TA, Shaikh AG, Green AM, Blazquez PM, Dickman JD, Angelaki DE. Purkinje cells in posterior cerebellar vermis encode motion in an inertial reference frame. Neuron. 2007;54:973–985. doi: 10.1016/j.neuron.2007.06.003.