Abstract

Adult observers generally find it difficult to recognize and distinguish faces that belong to categories with which they have limited visual experience. One aspect of this phenomenon is commonly known as the “Other-Race Effect” (ORE), since this behavior is typically most evident in the perception of faces belonging to ethnic or racial groups other than that of the observer. This acquired disadvantage in face recognition likely results from highly specific “tuning” of the underlying representation of facial appearance, which supports efficient processing of commonly seen faces at the expense of poor generalization to other face categories. In the current study we used electrophysiological (event-related potentials, or ERPs) and behavioral measures of performance to characterize face processing in racial categories defined by dissociable shape and pigmentation information. Our goal was to examine the specificity of the representation of facial appearance in more detail by investigating how race-specific face shape and pigmentation separately modulated two neural responses previously implicated in face processing, the N170 and N250 components. We found that both components were modulated by skin color, independent of face shape, but that only the N250 was sensitive to face shape. Moreover, the N250 appears to respond differentially to skin color only for upright faces, showing a lack of color sensitivity for inverted faces.

Keywords: Face Recognition, Other-race Effect, Event-Related Potentials

Introduction

The so-called “other-race” effect describes the phenomenon in which observers typically find faces difficult to recognize and distinguish from one another if they belong to an ethnic or racial group to which the observer has had little exposure (Sporer, 2001). Anecdotally, this is often expressed as a subjective impression that members of an other-race group look alike (Malpass & Kravitz, 1969; Malpass, 1981). Empirically, observers display distinct impairments in face memory for other-race faces (Meissner & Brigham, 2001), in terms of both recognition accuracy and response bias (Slone, Brigham, & Meissner, 2000). In natural scenes, changes to other-race faces are detected more slowly than changes to own-race faces, even though observers attend to both face types equally (Hirose & Hancock, 2007). Beyond these basic differences in accuracy, observers also appear to process other-race faces in qualitatively different ways. For example, there is some evidence that holistic processing is not applied to other-race faces to the same degree as it is to own-race faces (Michel et al., 2006). Whole/Part effects (Tanaka & Farah, 1993) appear to be more evident for own-race faces than for other-race faces (Tanaka, Kiefer, & Bukach, 2004), and there also appears to be an overall own-race advantage for both “component” and “configural” processing (Hayward, Rhodes, & Schwaninger, 2007). In the context of visual search, other-race faces are easier to detect in a field of own-race distracters than vice versa (Levin, 2001; Chiao et al., 2006). This is broadly consistent with other search asymmetry results, insofar as a disparity in the fidelity of target and distracter encoding tends to produce similar effects (Rosenholtz, 2001; Rosenholtz, 2004). Finally, category-contingent aftereffects have been reported for own- and other-race faces (Little et al., 2008), suggesting that distinct neural populations may support the processing of these face types.

One simple way to summarize a great deal of the behavioral literature regarding other-race face perception is to say that other-race faces do not appear to be processed by “expert” or “face-like” mechanisms to the same degree as own-race faces. That is not to say they are not perceived as faces, but rather that the efficient, expertise-based strategies adopted for the processing of own-race faces are applied either less skillfully or with less success to other-race faces. Conceptually, one could say that other-race faces fall outside the “tuning” of facial appearance used by whatever representation supports recognition behavior. An important question, then, concerns the specificity of the neural representation of facial appearance in the context of own- and other-race face perception. We continue by briefly discussing existing results relevant to this issue, concentrating on the literature describing own- and other-race face perception using event-related potentials (ERPs), since this is the methodology we have adopted in the current study.

It is very challenging to summarize previous results concerning the electrophysiological response to own- and other-race faces. To date, multiple studies have compared ERPs elicited by “in-group” and “out-group” faces, but substantial variability in task design, experimental stimuli, and analysis techniques makes it difficult to condense existing results into a coherent picture of how other-race face processing may differ from that of own-race faces. For example, many studies have been conducted with White observers (and thus White “own-race” faces), but in some cases White faces are compared with Black faces (Ito & Urland, 2003; Ito & Urland, 2005), while in others they are compared with East Asian faces (Caldara et al., 2003; Herrmann et al., 2007; Stahl, Wiese, & Schweinberger, 2008). Few studies have employed more than one “other” race (see Willadsen-Jensen & Ito, 2006, for an exception). Although it is tempting to assume that all other-race faces are processed in the same way, as yet there is no clear evidence that this is true. Furthermore, task demands vary even more dramatically across studies. In some cases, observers are asked to categorize faces by race and/or gender (Ito & Urland, 2003), while other experiments require an old/new judgment for previously studied items (Stahl, Wiese, & Schweinberger, 2008). Other tasks have ranged from judgments as simple as a secondary target detection task during recording (Caldara et al., 2003) to decisions as complex as whether or not to shoot at an individual who may be carrying either a cell phone or a firearm (Correll, Urland, & Ito, 2006).
Beyond these substantial differences in task, different studies tend to focus on different ERP components, including the N170 (Caldara et al., 2003), the P2 (Ito & Urland, 2003), or the N250r (Herrmann et al., 2007), or adopt tools such as Principal Components Analysis (Ito, Thompson, & Cacioppo, 2004), making it still more difficult to determine how different results might fit together into a coherent theoretical account. While many of these studies have reported a variety of differences between the ERPs elicited by own-race and other-race faces, we are left with a loose confederacy of results that are not easily related to one another.

Given the lack of agreement regarding the nature of the ORE in electrophysiology, the primary goal of the current study was to ask a fundamental question regarding the “tuning” of face-specific ERP components along dimensions relevant to other-race face perception: to what extent do shape cues and pigmentation cues independently contribute to the modulation of ERP responses to own- and other-race faces? We emphasize that this question addresses how physical differences in the stimulus affect subsequent processing as measured by ERP responses. This is in contrast to studies designed to investigate social or emotional responses to other-race faces (Bernstein et al., 2007), which often attempt to equalize image-level differences between faces belonging to “in-group” and “out-group” categories (Maclin & Malpass, 2001; Chiao et al., 2001). We also have not adopted a complex cognitive task in our design, concentrating instead on how image properties related to racial categorization of the face modulate the neural response in the absence of demanding task requirements. Finally, rather than isolate a particular ERP component for analysis, we present a survey of the P1, N170, P2, and N250 components, each of which has been implicated in various stages of face processing. Multiple studies have reported larger responses to other-race faces at these components (Itier, 2002; Caldara et al., 2004; Ito & Urland, 2003; Scott et al., 2006) without isolating the cues responsible for the differential response. Our study thus represents an attempt to characterize the neural other-race effect in more depth by teasing apart physical stimulus variables that may contribute independently to other-race face perception, concentrating on perceptual factors relevant to the ORE, and simultaneously examining multiple relevant components of the ERP response.

We chose to concentrate on the potentially separate contributions of face pigmentation and face shape to the ORE since previous behavioral work has indicated that these visual cues make independent contributions to face recognition in general (Russell, 2003; Russell & Sinha, 2006; Russell & Sinha, in press) and to the social perception of other-race faces in particular (Dixon & Maddox, 2005). These behavioral results raise the important question of how the neural representation of facial appearance is “tuned” along these dimensions, which is of direct relevance to the study of own- and other-race face perception. Since perceived category membership (deciding that a face is White or Black) is a function of both shape and pigmentation, we suggest that knowing how race-specific visual data of each type modulate behavioral and neural responses is crucial. Thus far, we have little information regarding how shape and pigmentation may separately contribute to the behavioral ORE (Bar-Haim, Seidel, & Yovel, 2008). In many studies, the visual features that define racial categories are confounded, either by using natural faces as stimuli or by adopting “race morphing” techniques to define a continuum of faces that progress from one category to another via global modification of all differences between face types (Walker & Tanaka, 2003). These techniques have made it difficult to understand how face shape and pigmentation may separately contribute to the ultimate perceptual representation of a face as belonging to one racial group or another.

Our contribution to the characterization of the neural response to other-race faces was therefore to examine the response to faces that contain dissociated shape and pigmentation information obtained from distinct racial categories. To accomplish this goal, we employed computer-generated stimuli that permitted independent manipulation of face shape and face pigmentation via a “morphable model” of facial appearance (Blanz & Vetter, 1999). We were thus able to create faces that were, for example, completely matched for 3D shape but had race-specific pigmentation applied to their surfaces (and vice versa). We begin by describing a straightforward behavioral experiment we conducted to ensure that the synthetic faces we created were sufficient to induce a behavioral ORE. We then describe our ERP analysis, which used the same images to examine the neural response to dissociated shape and pigmentation information.

Experiment 1 – A Behavioral ORE for synthetic faces with dissociated shape and pigmentation information

Before examining ERP responses to the synthetic faces we used to dissociate shape and pigmentation information, we first conducted a simple match-to-sample behavioral experiment with these stimuli. This task allowed us to determine whether or not we could obtain a behavioral ORE with computer-generated stimuli, providing an important foundation for the subsequent ERP experiment. We therefore treated this preliminary behavioral assay as a means of determining whether the faces we created had sufficient ecological validity.

An additional question we chose to build into our study was how the ORE (if evident) interacted with the well-known face inversion effect (FIE). Behaviorally, inverting a face makes it harder to recognize (Yin, 1969) and to discriminate, impairs observer efficiency (Sekuler et al., 2004), and may induce a less holistic strategy than upright face recognition (Freire, Lee, & Symons, 2000). These behavioral consequences resemble accounts of the other-race effect discussed above, making the perception of inverted faces a potentially useful “model system” for the perception of other-race faces (Valentine & Bruce, 1986; Valentine, 1991). Inverted faces are also useful as a control for low-level visual differences, since an inverted face contains exactly the same image content as its upright counterpart.

Methods

Subjects

Ten Caucasian volunteers (7 female) participated in the match-to-sample face discrimination task. All participants were between 20 and 35 years of age and reported normal or corrected-to-normal vision. Furthermore, all participants reported greater exposure to White faces than to Black faces (i.e., no extensive exposure to a Black family member or friend over a long period of time and no extended stay in a region with a majority of Black individuals). Informed consent was obtained from all participants in accordance with the ethical standards enacted by the Children’s Hospital Boston IRB.

Stimuli

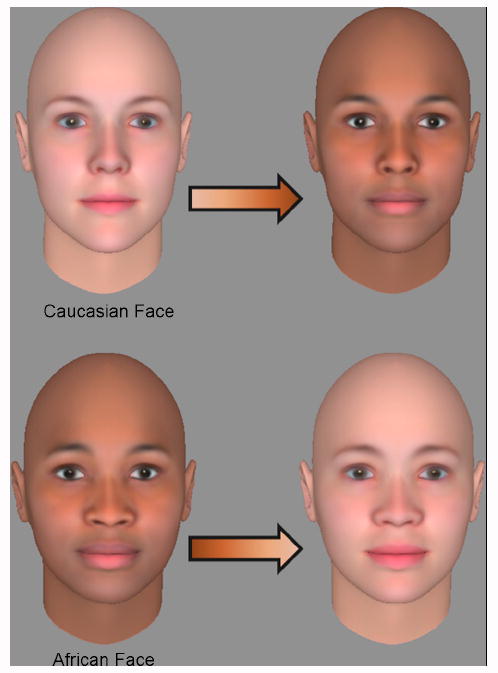

Our image set was composed of 200 upright faces and 200 inverted faces. Each of these sets was further divided into four categories of 50 images each, depicting: 1) individuals with White face shape and White pigmentation, 2) individuals with White face shape and Black pigmentation, 3) individuals with Black face shape and White pigmentation, or 4) individuals with Black face shape and Black pigmentation. These images were created using 3D graphics software (FaceGen, Singular Inversions Inc.) that allowed independent manipulation of face shape and reflectance data. The program’s estimates of facial appearance across racial groups are based on physical measurements of 3D faces obtained from individuals belonging to the multiple groups supported by the application’s interface. Generating a random face involves drawing a sample from the distributions of faces learned from these data. When manipulating shape/pigmentation relationships, we maintained the variability of pigmentation across all conditions by always creating “hybrid” stimuli that combined one individual’s shape information with a different individual’s pigmentation information (Figure 1).
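The hybrid-pairing constraint (shape from one individual, pigmentation from a different individual) can be illustrated with a short sketch. It does not reproduce the FaceGen workflow; it is a hypothetical Python illustration that assumes each face model is identified by a string ID, that shape and pigmentation donor pools are the same size, and that any random pairing without self-matches is acceptable. Within-race hybrids (e.g., White shape/White pigmentation) require that no individual is paired with itself, which the sketch obtains by reshuffling until that condition holds.

```python
import random


def make_hybrids(shape_ids, pigment_ids, seed=0, max_tries=1000):
    """Pair each shape donor with a pigmentation donor who is a different individual.

    shape_ids and pigment_ids are hypothetical face-model identifiers; the same
    identifier in both lists denotes the same individual. Every pigmentation
    donor is used exactly once, so pigmentation variability is preserved
    within each condition.
    """
    if len(shape_ids) != len(pigment_ids):
        raise ValueError("donor pools must be the same size")
    rng = random.Random(seed)
    pigments = list(pigment_ids)
    for _ in range(max_tries):
        rng.shuffle(pigments)
        # Accept the shuffle only if no face keeps its own pigmentation.
        if all(s != p for s, p in zip(shape_ids, pigments)):
            return list(zip(shape_ids, pigments))
    raise RuntimeError("no pairing without self-matches was found")


if __name__ == "__main__":
    white = [f"W{i:02d}" for i in range(50)]  # hypothetical White face models
    black = [f"B{i:02d}" for i in range(50)]  # hypothetical Black face models
    print(make_hybrids(white, white, seed=1)[:3])  # White shape / White pigmentation
    print(make_hybrids(white, black, seed=2)[:3])  # White shape / Black pigmentation
```

Reshuffling is a simple rejection approach: for a same-pool pairing, roughly one shuffle in three has no self-matches, so a valid pairing is found quickly; for cross-race pools every shuffle is accepted.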

Figure 1.

We created four distinct types of faces by combining the 3D shape of White and Black model faces with White and Black skin. The figure depicts how an individual face with White shape and color (top left) could be given Black skin (top right). The bottom row depicts the complementary process by which faces with Black shape and skin color were given White skin color. “Shape” as used in the current study always refers to the real 3D form of the computer-generated head.

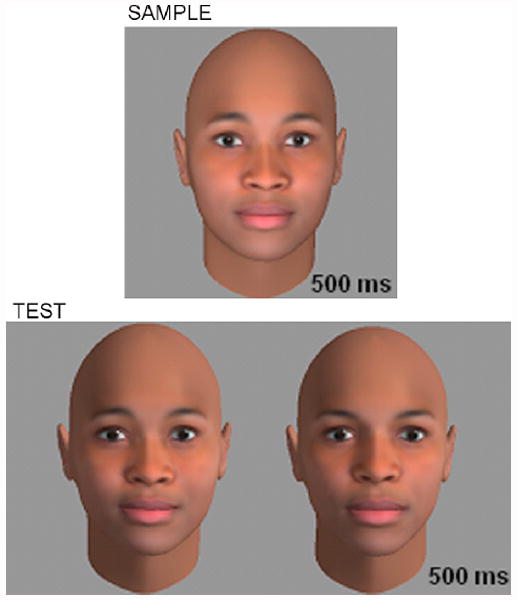

Procedure

The behavioral test consisted of a match-to-sample face discrimination task. All observers saw faces presented on an LCD monitor in a dark room, with faces subtending approximately 6 degrees of visual angle. All faces were presented on a medium-gray background. On each trial, observers were first presented with a sample face (viewed frontally) for 500ms, followed by an 800ms delay, and then two simultaneously presented test faces (also viewed frontally) that were visible for 500ms. One test image depicted the same individual as the sample image, and the other depicted a new individual. Participants were required to select the test face that matched the sample in identity as quickly as possible. To require observers to do more than simple template matching, test images were rendered with a strong side illuminant, while sample images were rendered with diffuse frontal lighting (Figure 2). Observers completed 50 unique trials per condition, for a total of 400 trials. No sample face was repeated in the same orientation during the experiment, but each face appeared once in the upright condition and once in the inverted condition. This repetition is critical, since it ensures that low-level image properties are perfectly matched across the inversion manipulation. Observers’ accuracy in all conditions was recorded.

Figure 2.

Schematic view of our behavioral match-to-sample task. Observers first viewed a sample face for 500ms before viewing two candidate test images, each rendered with a different lighting direction than the sample. The individual depicted in the sample image was always present at test (in this case pictured at left). The illumination change between sample and test was used to discourage simple pattern-matching strategies.

Results

For all observers we computed the percentage of correct responses in each category. A 2×2×2 repeated-measures ANOVA was carried out with face shape (White vs. Black 3D form), face pigmentation (White vs. Black skin color), and orientation (upright vs. inverted images) as within-subject factors. Table 1 displays mean match-to-sample accuracy in all categories.

Table 1.

Average matching accuracy across subjects in our behavioral match-to-sample task. Numbers in parentheses represent the standard error of the mean.

| Condition | Upright | Inverted |
|---|---|---|
| White Shape/White Pigmentation | 75.3% (2.4) | 55.3% (3.1) |
| Black Shape/Black Pigmentation | 76.1% (4.6) | 68.6% (2.8) |
| White Shape/Black Pigmentation | 76.9% (5.0) | 68.0% (5.3) |
| Black Shape/White Pigmentation | 76.1% (3.0) | 66.9% (2.6) |

The ANOVA revealed a main effect of image orientation (F(1,9)=37.8, p < 0.001) and an interaction between face shape and face pigmentation (F(1,9)=5.97, p = 0.037). A marginal interaction between orientation and face shape was also observed (F(1,9)=3.63, p = 0.089). The main effect of orientation was driven by decreased accuracy for inverted faces, while the interaction between shape and pigmentation was driven by significantly poorer accuracy for faces with White shape and White pigmentation (confirmed by post-hoc analysis of the 95% confidence intervals obtained by collapsing over face orientation). Given that performance with all upright faces is roughly equivalent, this last effect suggests that the key difference in performance in this task is mostly due to the particularly poor performance for inverted faces with White shape and pigmentation. Indeed, post-hoc tests confirm that this is the only condition in which observers do not perform significantly better than chance (one-sample t-tests against the 50% chance level of this two-alternative task: p < 0.05 in all other conditions, p = 0.14 for inverted White-shape/White-pigmentation faces).
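Because every factor in this design has two levels, each main effect and interaction in the repeated-measures ANOVA has one numerator degree of freedom, and its F(1, n-1) equals the square of a one-sample t statistic computed on per-subject contrast scores. The Python sketch below illustrates that equivalence, together with a one-sample test against the 50% chance level. The function names and the 0/1 coding of factor levels are illustrative choices, not taken from the analysis software we used, and p-values are left to a statistics package.

```python
import math
from statistics import mean, stdev


def one_sample_t(values, mu=0.0):
    """Return (t, df) for a one-sample t-test of `values` against `mu`."""
    n = len(values)
    se = stdev(values) / math.sqrt(n)
    return (mean(values) - mu) / se, n - 1


def contrast_F(data, effect):
    """F(1, n-1) for one effect in a fully within-subjects 2x2x...x2 design.

    data:   dict mapping a tuple of factor levels (each 0 or 1) to a list of
            per-subject cell means, with subjects in the same order in every cell.
    effect: tuple of factor indices, e.g. (1, 2) for a two-way interaction.
    Each subject's contrast score is the signed sum of their cell means; the
    sign is the product of +1/-1 codes for the factors in `effect`, and factors
    not in `effect` are summed over (coded +1). The overall scaling of the
    contrast cancels, so F is the square of the one-sample t against zero.
    """
    cells = list(data)
    n = len(data[cells[0]])
    scores = []
    for s in range(n):
        total = 0.0
        for cell in cells:
            sign = 1
            for f in effect:
                sign *= 1 if cell[f] == 0 else -1
            total += sign * data[cell][s]
        scores.append(total)
    t, df = one_sample_t(scores)
    return t * t, df
```

With orientation, shape, and pigmentation coded as factor indices 0, 1, and 2, `contrast_F(data, (1, 2))` would give the shape × pigmentation F(1,9) for ten observers. For the chance-level tests, `one_sample_t(accuracies, mu=0.5)` gives t with df = 9, which would be compared against the two-tailed critical value of 2.262.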

Discussion

The results of our behavioral experiment demonstrate that our novel synthetic faces elicit an other-race effect that is consistent with previous literature concerning the nature of visual expertise in face processing and generic object recognition. Observers in this task performed particularly poorly with inverted faces that possess White face shape and White skin color. Put another way, when faces possess one or both attributes of the unfamiliar racial group, the impact of image inversion appears to be lessened. The finding that greater expertise leads to an enhanced inversion effect is consistent with previous studies of visual learning for both complex objects (Gauthier & Tarr, 1997) and generic visual patterns (Husk, Bennett, & Sekuler, 2007). Additionally, the finding that changing only the skin color of a face is sufficient to change behavior is intriguing. Observers are known to be less efficient in using the visual information in inverted faces (Sekuler et al., 2004), but our result demonstrates that, for images rendered from the same 3D model, a change in perceived race induced solely by skin tone is sufficient to alter inverted face processing. Overall, the data from this task indicate that recognition performance is tuned very tightly to the faces dominating observers’ visual experience. Any deviation from a frequently seen face category along either the shape or pigmentation dimension changes behavior, demonstrating that the underlying representation of facial appearance is highly selective.

Experiment 2 – ERP analysis of the ORE

Having established that the synthetic faces we have used to dissociate shape and pigmentation data in other-race faces are sufficient to induce performance differences related to perceived race, we continued by examining the ERP response to these faces at multiple components thought to be associated with face processing in adult observers.

We chose to examine two ERP components, the N170 and the N250. In each case, our goal was to examine how perceived race (as defined by either shape or pigmentation) affected the neural response. These components were selected because it has been suggested that they show some degree of selectivity for face stimuli (Itier & Taylor, 2004). The N170 is thought to reflect structural encoding of the stimulus as a face (Bentin et al., 1996), and the N250 has previously been discussed as a possible index of visual expertise (Scott et al., 2006); both properties are highly relevant to the current study (though, as we discuss later, the limitations of our design prevent us from making strong claims regarding expertise). For each component, we asked whether the race-specific shape or pigmentation properties of the face significantly modulated the magnitude and (where possible) the latency of the response. Further, by including inverted faces in the design, we could ask whether any observed differences were tuned to upright faces or were generic by-products of the physical patterns used. The consequences of face inversion for ERPs (specifically the N170) are well known and widely replicated (Bentin et al., 1996; Rossion et al., 1999, 2000), making it possible for us to compare the effects of shape and pigmentation with these robust differences in the neural response to upright and inverted faces. Moreover, we note again that inverted faces offer an important control for low-level image properties and also sidestep concerns regarding the physical variability of stimuli across categories raised by Thierry et al. (2007).

Subjects

Fourteen right-handed Caucasian volunteers (12 female) participated in the ERP task. All participants were between 20-35 years of age, reported normal or corrected-to-normal vision, and reported greater exposure to White faces than Black faces according to the criteria described above. Informed consent was obtained from all participants in accord with the ethical standards enacted by the Children’s Hospital Boston IRB.

Stimuli

A subset of the stimuli used in the behavioral task was used in this experiment. Specifically, only the faces rendered with frontal lighting were presented in this task. Otherwise, all four categories of faces obtained by crossing Black/White face shape with Black/White pigmentation were shown to observers, including both upright and inverted versions. As in the behavioral task, we included 50 trials of each condition, for a total of 400 trials.

Procedure

During the ERP testing session, brain activity was recorded while participants viewed the computer-generated faces described above. As in our behavioral task, all observers saw faces presented on an LCD monitor in a dark room, with faces subtending approximately 6 degrees of visual angle. All faces were presented on a medium-gray background. Images were randomly selected without replacement for presentation throughout the experiment and were displayed for 500ms. Because our goal was to determine how perceptual factors influence the neural response to faces with varying shape and pigmentation, we used a very simple orientation judgment to ensure that participants were actively attending to the stimuli. Naïve observers can perform this task rapidly and with high accuracy for unfamiliar faces (Balas, Cox, & Conwell, 2007), making it a useful means of ensuring that subjects look at each stimulus without requiring highly demanding perceptual or cognitive processing during the recording period. On each trial, observers indicated the orientation of the face (“upright” or “inverted”) using a standard button box held on their lap. The box was held with both hands, such that the left and right thumbs were used to press the response buttons. Hand assignment was counterbalanced across observers: half of the participants indicated “upright” with the left hand and “inverted” with the right, while the remainder responded in the opposite fashion. We note that each face appeared once in the upright condition and once in the inverted condition, roughly equalizing the low-level visual information presented across orientations. Because we chose to analyze the N250 component (which is known to be sensitive to repetition), we pause to consider the potential impact of this aspect of our design on the analysis of the N250.
Critically, repetition effects at the N250 are most prominent for familiar faces (Pfutze, Sommer, & Schweinberger, 2002) and for immediate repetitions of an identical stimulus (Schweinberger et al., 2002; Begleiter et al., 1995), and are essentially non-existent when repetitions are separated by many trials (Schweinberger, Huddy, & Burton, 2004). Our own design uses unfamiliar faces that are only repeated across a substantial image transformation (inversion) with many intervening trials, so we are confident that the use of repeated stimuli has no substantial consequences here.
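The presentation scheme described in this procedure (200 face images, each shown once upright and once inverted, presented in random order without replacement) can be sketched as follows. The category labels and face identifiers are hypothetical placeholders, and the helper that reports how many trials separate the two presentations of each face is included only to show how the spacing of repetitions could be checked; a plain shuffle does not enforce any minimum lag.

```python
import random
from collections import Counter

# Hypothetical labels for the four shape/pigmentation categories.
CATEGORIES = ("WS/WP", "BS/BP", "WS/BP", "BS/WP")


def build_trials(faces_per_category=50, seed=0):
    """Each face appears once upright and once inverted; order is shuffled."""
    rng = random.Random(seed)
    trials = []
    for category in CATEGORIES:
        for i in range(faces_per_category):
            face_id = f"{category}-{i:02d}"  # hypothetical identifier
            for orientation in ("upright", "inverted"):
                trials.append((face_id, category, orientation))
    # A single shuffle means every trial is drawn exactly once (no replacement).
    rng.shuffle(trials)
    return trials


def condition_counts(trials):
    """Number of trials in each category x orientation cell."""
    return Counter((category, orientation) for _, category, orientation in trials)


def repetition_lags(trials):
    """Number of trial positions between the two presentations of each face."""
    first_seen, lags = {}, {}
    for pos, (face_id, _, _) in enumerate(trials):
        if face_id in first_seen:
            lags[face_id] = pos - first_seen[face_id]
        else:
            first_seen[face_id] = pos
    return lags
```

With the default arguments this yields 400 trials, 50 in each of the eight category × orientation cells.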

Continuous EEG was recorded using a 128-channel Geodesic Sensor Net (Electrical Geodesics, Inc.), referenced online to the vertex (Cz). The signal was amplified with 0.1 to 100Hz band-pass filtering, digitized at a 250 Hz sampling rate, and stored on a computer disk. The data were analyzed offline using NetStation 4.2 analysis software (Electrical Geodesics, Inc.). The continuous EEG signal was segmented into 900ms epochs, starting 100ms prior to stimulus onset. Data were filtered with a 30Hz low-pass elliptical filter and baseline-corrected to the mean of the 100ms period before stimulus onset. NetStation’s automated artifact detection tools were used to examine the data for eye blinks, eye movements, and bad channels. Segments were excluded from further analysis if they contained an eye blink (threshold +/- 70μV…) or eye movement (threshold +/- 50μV…). In the remaining segments, individual channels were marked bad if the difference between the maximum and minimum amplitudes across the entire segment exceeded 80μV. If more than 10% of the 128 channels (more than 13 channels) were marked bad in a segment, we removed the entire segment from our analysis. Otherwise, the bad channels were replaced using spherical spline interpolation. Average waveforms for all stimulus categories were calculated for each participant (using only trials on which a correct orientation response was made) and re-referenced to the average reference.
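The segmentation, baseline correction, channel-rejection, and re-referencing steps can be illustrated with a minimal pure-Python sketch. It is not NetStation’s implementation. It assumes that the continuous recording is stored as a list of per-channel voltage lists in μV, uses the stated parameters (250 Hz sampling, 900ms epochs starting 100ms before onset, an 80μV peak-to-peak limit, and rejection of segments with more than 13 bad channels), and omits the 30Hz low-pass filter, blink and eye-movement detection, and spherical spline interpolation.

```python
FS_HZ = 250             # sampling rate
EPOCH_START_MS = -100   # epoch begins 100 ms before stimulus onset
EPOCH_LEN_MS = 900      # 900 ms epochs
PTP_LIMIT_UV = 80.0     # peak-to-peak limit for marking a channel bad
MAX_BAD_CHANNELS = 13   # segments with more bad channels than this are rejected


def n_samples(ms, fs=FS_HZ):
    """Convert a duration in ms to a (signed) number of samples."""
    return int(round(ms * fs / 1000))


def segment(continuous, onset):
    """Cut one epoch from a continuous recording (list of per-channel lists)."""
    start = onset + n_samples(EPOCH_START_MS)   # 25 samples before onset
    stop = start + n_samples(EPOCH_LEN_MS)      # 225 samples in total
    if start < 0 or stop > len(continuous[0]):
        raise ValueError("epoch extends beyond the recording")
    return [channel[start:stop] for channel in continuous]


def baseline_correct(epoch):
    """Subtract each channel's mean over the pre-stimulus period."""
    nb = n_samples(-EPOCH_START_MS)
    corrected = []
    for channel in epoch:
        baseline = sum(channel[:nb]) / nb
        corrected.append([v - baseline for v in channel])
    return corrected


def bad_channels(epoch, limit=PTP_LIMIT_UV):
    """Indices of channels whose max-min amplitude exceeds the limit."""
    return [i for i, ch in enumerate(epoch) if max(ch) - min(ch) > limit]


def keep_segment(epoch, max_bad=MAX_BAD_CHANNELS):
    """True if the segment has few enough bad channels to be interpolated."""
    return len(bad_channels(epoch)) <= max_bad


def average_reference(epoch):
    """Re-reference every channel to the mean across channels at each sample."""
    n_ch = len(epoch)
    means = [sum(ch[k] for ch in epoch) / n_ch for k in range(len(epoch[0]))]
    return [[v - m for v, m in zip(ch, means)] for ch in epoch]
```

At 250 Hz each sample spans 4ms, so a 900ms epoch contains 225 samples, of which the first 25 form the baseline.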

Results

We begin by noting that the behavioral data obtained during this task revealed no effects of face shape, pigmentation, or orientation on either the accuracy of the orientation judgment or the response time to make a correct judgment. Observer performance was near ceiling in all conditions and responses were made rapidly and accurately. We continued by examining only ERPs obtained after correct responses were made.

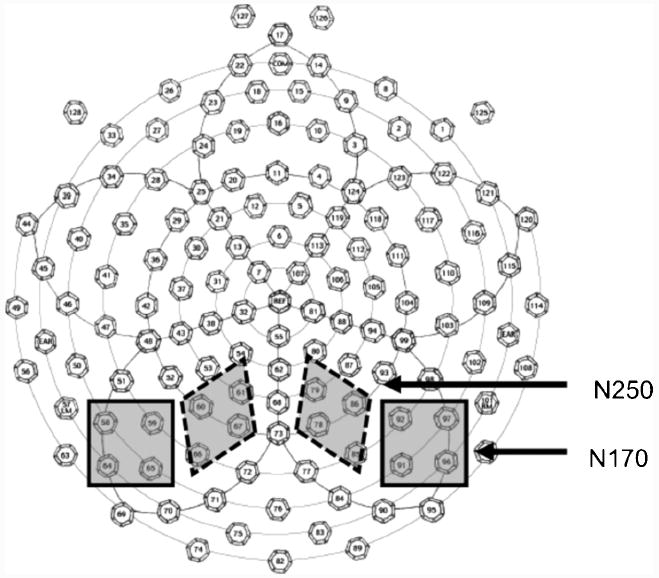

Grand-averaged ERP waveforms were inspected to identify the components of interest. For each component, sensors and time windows of interest were determined by first identifying where the component was maximal and then identifying a time window that reliably captured variation across subjects (Figure 3 outlines the sensor grouping used for each component). An important distinction between the current study and some previous work (Tanaka & Pierce, 2009) is that we selected distinct sensors to analyze the N170 and the N250, rather than using the same grouping to measure both components. We did so because separately evaluating where each component was most prominent led, in this case, to separate electrode sites. Put more simply, not all subjects showed a clear N250 component at the sites identified for the N170 analysis. We could thus either compromise by analyzing both components over sensors that would likely be suboptimal for both, or select non-overlapping groups where each component was clearly evident. We adopted the latter strategy in the interest of maintaining consistent sensor selection criteria throughout our analysis.

Figure 3.

The sensor groups used to analyze the N170 and N250 components. Note that previous research has often used the same sensors to measure the N170 and the N250. We did not do so here because our electrode selection criteria depended upon identifying sensors where the relevant component was maximal across conditions, leading to non-overlapping sensor groupings for the N170 and N250. We discuss this point in more detail in the main text.

We also note that, in the case of the N250, some subjects did not have a well-defined peak, making it necessary to quantify response magnitude using mean amplitude rather than peak amplitude. This is not uncommon in studies of the N250 (Tanaka et al., 2006; Tanaka & Pierce, 2009), and we therefore used mean amplitude for both the N170 and the N250 for the sake of consistency. We were, however, able to measure N170 latency as the time of peak amplitude within the relevant time window, though the peak amplitude itself was not used in any statistical analysis. For each component under consideration, a 4-way 2×2×2×2 repeated-measures ANOVA was carried out with hemisphere, orientation, face shape, and face pigmentation as within-subjects factors. We continue by describing the results for each component separately, specifying the sensors of interest and the time windows used in each analysis. To simplify the statistical report, we have omitted third-order and higher interactions because they are difficult to interpret.

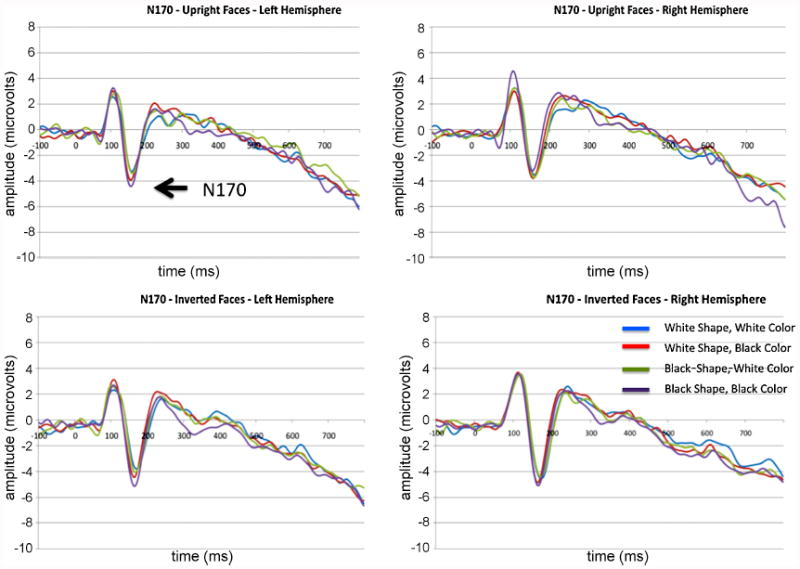

N170

We observed maximal responses of the N170 over sensors 58, 59, 64, & 65 in the left hemisphere and sensors 91, 92, 96, & 97 in the right hemisphere. Having identified these locations where the component was of greatest magnitude, we defined a time window of 120-175ms for determining mean amplitude and extracting latency at peak amplitude.
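Mean amplitude and peak latency within the N170 window can be computed as in the following sketch. It assumes that waveforms are averaged over the four sensors of a hemisphere before measurement (whether measurements were taken per sensor or on the sensor average is an assumption here), that samples are spaced 4ms apart starting at -100ms (as implied by the 250 Hz sampling rate and the epoch definition), and that the N170 peak is the most negative sample in the 120-175ms window. The function names are hypothetical.

```python
FS_HZ = 250
EPOCH_START_MS = -100
LEFT_N170 = (58, 59, 64, 65)    # sensor numbers given in the text
RIGHT_N170 = (91, 92, 96, 97)


def sample_times(n, fs=FS_HZ, start_ms=EPOCH_START_MS):
    """Latency (ms) of each sample; 4 ms spacing at 250 Hz."""
    step = 1000 / fs
    return [start_ms + k * step for k in range(n)]


def window_indices(n, lo_ms, hi_ms):
    """Sample indices whose latency falls inside [lo_ms, hi_ms]."""
    return [k for k, t in enumerate(sample_times(n)) if lo_ms <= t <= hi_ms]


def cluster_waveform(erp, sensors):
    """Average the waveforms (dict: sensor -> list of μV) of a sensor group."""
    n = len(erp[sensors[0]])
    return [sum(erp[s][k] for s in sensors) / len(sensors) for k in range(n)]


def mean_amplitude(wave, lo_ms, hi_ms):
    """Mean voltage over all samples in the time window."""
    idx = window_indices(len(wave), lo_ms, hi_ms)
    return sum(wave[k] for k in idx) / len(idx)


def negative_peak_latency(wave, lo_ms, hi_ms):
    """Latency of the most negative sample in the window (e.g., the N170 peak)."""
    idx = window_indices(len(wave), lo_ms, hi_ms)
    k = min(idx, key=lambda j: wave[j])
    return sample_times(len(wave))[k]
```

For example, `mean_amplitude(cluster_waveform(erp, LEFT_N170), 120, 175)` would give one participant’s left-hemisphere N170 mean amplitude for one condition under these assumptions. The same functions would apply to the N250 window (230-300ms) over its own sensor groups, although peak latency was not analyzed for that component.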

Mean Amplitude

N170 mean amplitude was significantly modulated by the pigmentation of a face (F(1,13)=9.7, p=0.008), while the effect of inversion (larger response to inverted faces) was only marginally significant (F(1,13)=3.87, p = 0.071). No other main effects or interactions reached significance. The main effect of face pigmentation was driven by a larger response to Black faces compared to White, independent of the 3D shape of the face (Figure 4).

Figure 4.

The N170 component observed at left and right hemisphere sensors of interest (numbered in the text). Waveforms for upright and inverted faces are presented in the top and bottom rows, respectively.

Latency

The peak latency of the N170 was significantly modulated by both the orientation of the face (F(1,13)=44.7, p < 0.001) and face pigmentation (F(1,13)=17.7, p = 0.001). The main effect of orientation reflected longer latencies for inverted faces (consistent with previous literature), while the main effect of pigmentation was driven by shorter latencies for Black faces.

N250

We observed maximal N250 responses over sensors 60, 61, 66, and 67 in the left hemisphere and sensors 78, 79, 85, and 86 in the right hemisphere. Having identified the locations where the component was largest, we defined a time window of 230–300 ms for the N250 (consistent with Tanaka et al., 2006). Because we could not identify a robust peak in every subject, peak latency could not be reliably determined, and we have therefore omitted this analysis.

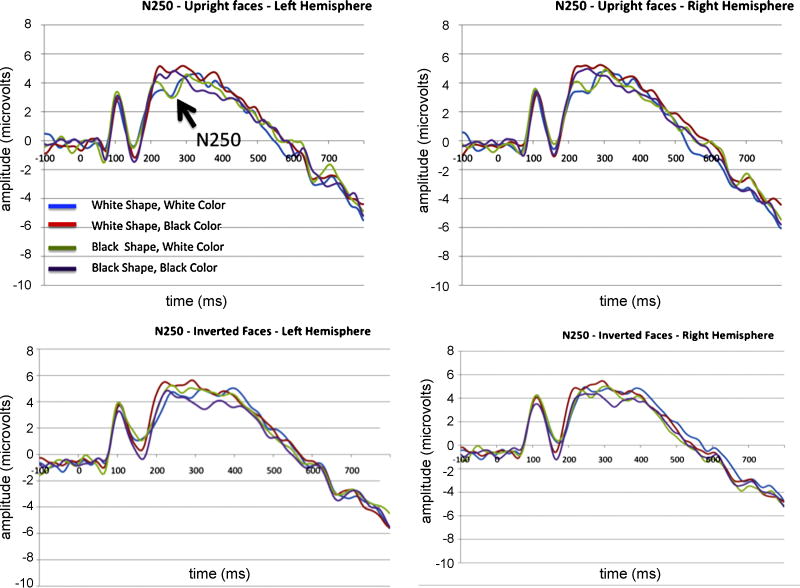

N250 mean amplitude

N250 mean amplitude was significantly modulated by both the shape (F(1,13) = 8.7, p = 0.011) and the pigmentation (F(1,13) = 12.3, p = 0.004) of the face (Figure 5). Faces with White shape elicited less negative N250 amplitudes, whereas faces with White pigmentation elicited more negative amplitudes. We further observed a significant interaction between orientation and pigmentation (F(1,13) = 4.7, p = 0.048): pigmentation differences were more prominent for upright faces than for inverted ones.

Figure 5.

The N250 component observed at left and right hemisphere sensors of interest (numbered in the text). Waveforms for upright and inverted faces are presented in the top and bottom rows, respectively.

Discussion

We begin by discussing our ERP results in relation to previous work characterizing typical responses to faces, and by comparing our data with established effects in the literature. First, we observe an inversion effect on the N170 that is consistent with the existing literature: the N170 is significantly delayed for inverted faces, while the increase in amplitude was only marginally significant, a response profile that is not uncommon for this component. Second, the sensor groupings where these components were maximal and the time windows isolated for analysis are in reasonable agreement with previously reported parameters. However, one important feature of our data is inconsistent with earlier results: the absence of a hemispheric asymmetry favoring the right hemisphere over the left. This asymmetry has been reported in multiple studies (Rossion et al., 2003), and we have no a priori reason to expect it to be absent in the current experiment. Its absence may be related to our use of synthetic faces, to the absence of hair, or to any other difference in design between our study and others. We note that many stimulus factors can influence the laterality of the N170, including presentation time (Mercure et al., 2008). Although our stimulus duration is relatively long (500 ms), other factors intrinsic to our design may still have reduced the laterality of this component. For the moment, we can only acknowledge this difference and proceed to interpret our results regarding shape and pigmentation with attention to both hemispheres.

Turning to our goal of determining how various “face-specific” components are tuned to race-specific shape and pigmentation, our results revealed differential processing of faces depending on both shape and pigmentation information, though the nature of these differences depended on the component under investigation. The N170 displayed a “shape-blind” response in that only the pigmentation of the face modulated it. Black pigmentation speeded the N170 peak, an effect that is inconsistent with a possible analogy between other-race face perception and inverted face perception. In general, the N170 to inverted faces is larger (as we observe for faces with Black pigmentation) but delayed rather than earlier (whereas faces with Black pigmentation peak earlier here). One must be cautious in interpreting the relationship between amplitude and latency-to-peak, but this preliminary result suggests that the analogy between other-race and inverted face perception is invalid. We must also admit the possibility that these differences (as well as the larger N170 to Black pigmentation) reflect nothing more than low-level image differences that are not directly relevant to face processing broadly, or to other-race face processing in particular. Regardless, the “shape-blindness” of this component is intriguing. Despite physical differences in shape that are readily apparent to observers (and that contributed to differential performance in our behavioral task), the N170 does not appear to encode this aspect of race-specific visual information. These results challenge some interpretations of the N170 insofar as our data suggest that it cannot purely encode “2nd-order” or “configural” face structure: this aspect of the face is not altered by our pigmentation manipulation and thus cannot explain our results.

To situate our work relative to earlier studies: some have reported no other-race effect on the N170 (Caldara et al., 2003), while others report one (Ito & Urland, 2005; Stahl, Wiese, & Schweinberger, 2008), though these studies differ as to the direction of the effect. Interestingly, the direction of our other-race amplitude effect agrees with results obtained with Asian faces (Stahl, Wiese, & Schweinberger, 2008) but is opposite to results obtained with African-American faces (Ito & Urland, 2005). We suggest that our use of full-color images may be an important addition to previous work. Previous studies have largely used grayscale images (or have not reported the color content of their stimuli), and our results strongly suggest that face pigmentation may be a critical factor determining the properties of the N170 response.

We now consider our N250 results in light of the existing literature on other-race face processing and expertise at this component. Several features of our data are of interest. We find that the N250 is indeed sensitive to both the shape and the pigmentation of faces with which we expect our observers to be “experts”, consistent with previous results regarding this component (Scott et al., 2006). Critically, at this component we also observe an important interaction between face orientation and pigmentation, such that pigmentation differences are observed only for upright faces. While we have so far had to acknowledge that our data may reflect purely low-level processes percolating up to these components, this interaction between orientation and pigmentation makes a strong case for face-specific processing at the N250 that is sensitive to pigmentation. If only low-level processes were at play, we would expect equal pigmentation differences regardless of orientation, since inverting a face leaves image-level features such as its color and luminance distribution unchanged. This interaction therefore suggests that the pigmentation effect we observed at the N250 may indeed reflect aspects of facial appearance beyond low-level image properties.
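The orientation argument rests on the fact that inverting an image rearranges its pixels without changing their values. A minimal Python/NumPy sketch of that step follows, assuming inversion is a 180° picture-plane rotation (a vertical flip would pass the same check) and using a random array as a stand-in for a color face image. It verifies that the per-channel value distributions, and therefore the mean color, are identical for the upright and inverted versions. The helpers `invert` and `same_channel_distributions` are hypothetical.

```python
import numpy as np

def invert(image: np.ndarray) -> np.ndarray:
    """Hypothetical inversion: rotate an H x W x C image by 180 degrees in the picture plane."""
    return image[::-1, ::-1, :]

def same_channel_distributions(a: np.ndarray, b: np.ndarray) -> bool:
    """True if each color channel of a and b holds the same values with the same counts."""
    sorted_a = np.sort(a.reshape(-1, a.shape[-1]), axis=0)
    sorted_b = np.sort(b.reshape(-1, b.shape[-1]), axis=0)
    return bool(np.array_equal(sorted_a, sorted_b))

# Random stand-in for an RGB face image (not a stimulus from the study).
rng = np.random.default_rng(1)
face = rng.random((64, 48, 3))
inverted = invert(face)

print(same_channel_distributions(face, inverted))                      # True
print(np.allclose(face.mean(axis=(0, 1)), inverted.mean(axis=(0, 1))))  # True
```

The check says nothing about higher-level structure; it only illustrates why an account based purely on pixel statistics predicts no orientation × pigmentation interaction.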

Finally, we note that we do not find a simple relationship between our behavioral data from Experiment 1 and any of the components examined in Experiment 2. To some extent, this is not of great concern. The responses observers produced in our behavioral task likely reflect the integration of multiple processes, so a true neural correlate of that behavior may be difficult to find in any single component closely associated with visual perception. Nonetheless, this remains an intriguing question for future work.

General Discussion

We have found behavioral and electrophysiological evidence that race-specific face shape and pigmentation may make independent contributions to the modulation of face-specific responses. We conclude by discussing important limitations of the current study and suggest avenues for future work.

The most obvious limitation of the current study is that we recruited only Caucasian observers. While this is typical of other published ERP investigations of the other-race effect (Caldara et al., 2003; Ito & Urland, 2003, 2005; Ito, Thompson & Cacioppo, 2004), it also limits the scope of our results. To demonstrate a true other-race effect, one would ideally look for cross-over interactions between recognition performance and the race (or visual experience) of observers, which our data do not allow. We thus cannot conclude that our results are necessarily due to the visual expertise of White observers; they may instead reflect perceptual processing that is independent of experience and subsequent expert-level processing. Still, our data demonstrate that visual features known to be directly relevant to defining racial categories (within which expertise has been demonstrated by previous studies) can independently modulate the neural response to faces. It is still fair to speak of the “tuning” of these responses in the population we studied, since the effects we observed reflect the sensitivity of these components to some visual properties of the face (pigmentation) and their insensitivity to others (shape, in the case of the N170). What we cannot do is conclude that the underlying representation has been tuned by experience with a particular group of faces.

Besides this very substantial question of how the representation characterized here is acquired, many other factors not considered here may also be relevant to the perception of own- and other-race faces, both behaviorally and neurally. First, the stimuli we generated for both tasks were reasonable models of typical, natural White and Black faces (and their cross-pigmented counterparts) in which skin color and luminance were confounded. This reflects natural viewing conditions, but raises an interesting question: is color or luminance the relevant factor? There are intriguing behavioral effects related to the perception of luminance in own- and other-race faces (Corneille et al., 2004; Levin & Banaji, 2006), but to the best of our knowledge there has been no direct assessment of how these two distinct aspects of skin appearance contribute to the other-race effect. Indeed, many previous studies have used only grayscale images, making it impossible to assess any color-related effects. Additional behavioral and electrophysiological work on this issue would further our understanding of the scope and limitations of the “expert” face representation. A second, related issue is the potential for extending this paradigm to assess the ORE across a wider range of racial and ethnic categories. Not all pairs of face categories differ as dramatically in color and luminance as White and Black faces, which may mean that the ORE manifests quite differently when the perception of White faces (by White observers) is compared with the perception of East Asian, Indian, or Middle Eastern faces. By and large, there has been little effort to measure the other-race effect across multiple face categories (though see Kelly et al., 2005, for a developmental investigation of this issue).

We also point out that investigating the other-race effect in prosopagnosic patients would offer a further important perspective on how visual information is recruited to assign faces to various groups. Whether, despite their overall poor abilities with faces, prosopagnosic individuals exhibit “classic” or “hybrid” OREs as defined here would shed light on which aspects of facial appearance are inaccessible to such observers, providing key insight into the neural architecture of face processing.

Acknowledgments

Special thanks to Katherine Hung, Vanessa Vogel-Farley, and Alissa Westerlund for technical assistance during data collection. Thanks also to Margaret Moulson and Jukka Leppanen for their observations and advice regarding data analysis. This research was supported by NIH Grant R01 MH078829-10A1 awarded to CAN.


References

- 1. Balas B, Cox D, Conwell E. The Effect of Real-World Personal Familiarity on the Speed of Face Information Processing. PLoS One. 2007;2(11):e1223. doi: 10.1371/journal.pone.0001223.

- 2. Bar-Haim Y, Seidel T, Yovel G. The role of skin colour in face recognition. Perception. 2009;38:145–148. doi: 10.1068/p6307.

- 3. Begleiter H, Porjesz B, Wang WY. Event-related brain potentials differentiate priming and recognition to familiar and unfamiliar faces. Electroencephalography and Clinical Neurophysiology. 1995;94:41–49. doi: 10.1016/0013-4694(94)00240-l.

- 4. Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8:551–565. doi: 10.1162/jocn.1996.8.6.551.

- 5. Bernstein MJ, Young SG, Hugenberg K. The cross-category effect: Mere social categorization is sufficient to elicit an own-group bias in face recognition. Psychological Science. 2007;18:706–712. doi: 10.1111/j.1467-9280.2007.01964.x.

- 6. Blanz V, Vetter T. A Morphable Model for the Synthesis of 3D Faces. SIGGRAPH ’99 Conference Proceedings. 1999:187–194.

- 7. Caldara R, Rossion B, Bovet P, Hauert CA. Event-related potentials and time course of the ‘other-race’ face classification advantage. NeuroReport. 2004;15:905–910. doi: 10.1097/00001756-200404090-00034.

- 8. Caldara R, Thut G, Servoir P, Michel CM, Bovet PM, Renault B. Face versus non-face object perception and the “other-race” effect: a spatio-temporal event-related potential study. Clinical Neurophysiology. 2003;114:515–528. doi: 10.1016/s1388-2457(02)00407-8.

- 9. Chiao JY, Heck HE, Nakayama K, Ambady N. Priming Race in Biracial Observers Affects Visual Search for Black and White Faces. Psychological Science. 2006;17(5):387–392. doi: 10.1111/j.1467-9280.2006.01717.x.

- 10. Corneille O, Huart J, Becquart E, Bredart S. When Memory Shifts Toward More Typical Category Exemplars: Accentuation Effects in the Recollection of Ethnically Ambiguous Faces. Journal of Personality and Social Psychology. 2004;86:236–250. doi: 10.1037/0022-3514.86.2.236.

- 11. Correll J, Urland GR, Ito TA. Event-related potentials and the decision to shoot: The role of threat perception and cognitive control. Journal of Experimental Social Psychology. 2006;42:120–128.

- 12. Dixon TL, Maddox KB. Skin tone, crime news, and social reality judgments: Priming the schema of the dark and dangerous Black criminal. Journal of Applied Social Psychology. 2005;35:1555–1570.

- 13. Freire A, Lee K, Symons L. The face inversion effect as a deficit in the encoding of configural information: Direct evidence. Perception. 2000;29:159–170. doi: 10.1068/p3012.

- 14. Gauthier I, Tarr MJ. Becoming a “Greeble” expert: Exploring mechanisms for face recognition. Vision Research. 1997;37:1673–1682. doi: 10.1016/s0042-6989(96)00286-6.

- 15. Halit H, de Haan M, Johnson MH. Modulation of event-related potentials by prototypical and atypical faces. Neuroreport. 2000;11:1871–1875. doi: 10.1097/00001756-200006260-00014.

- 16. Hayward WG, Rhodes G, Schwaninger A. An own-race advantage for components as well as configurations in face recognition. Cognition. 2007;106:1017–1027. doi: 10.1016/j.cognition.2007.04.002.

- 17. Herrmann MJ, Schreppel T, Jager D, Koehler S, Ehlis AC, Fallgatter AJ. The other-race effect for face perception: an event-related potential study. Journal of Neural Transmission. 2007;114:951–957. doi: 10.1007/s00702-007-0624-9.

- 18. Hirose Y, Hancock PJB. Equally attending but still not seeing: An eye-tracking study of change detection in own and other race faces. Visual Cognition. 2007;15:647–660.

- 19. Husk JS, Bennett PJ, Sekuler AB. Houses and textures: Investigating the characteristics of inversion effects. Vision Research. 2007;47:3350–3359. doi: 10.1016/j.visres.2007.09.017.

- 20. Itier RJ, Taylor MJ. Inversion and contrast polarity reversal affect both encoding and recognition processes of unfamiliar faces: a repetition study using ERPs. Neuroimage. 2002;15:353–372. doi: 10.1006/nimg.2001.0982.

- 21. Itier RJ, Taylor MJ. N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cerebral Cortex. 2004;14:132–142. doi: 10.1093/cercor/bhg111.

- 22. Ito TA, Thompson E, Cacioppo JT. Tracking the Time Course of Social Perception: The Effects of Racial Cues on Event-Related Brain Potentials. Personality and Social Psychology Bulletin. 2004;30:1267–1280. doi: 10.1177/0146167204264335.

- 23. Ito TA, Urland GR. The influence of processing objectives on the perception of faces: An ERP study of race and gender perception. Cognitive, Affective, & Behavioral Neuroscience. 2005;5:21–36. doi: 10.3758/cabn.5.1.21.

- 24. Ito TA, Urland GR. Race and Gender on the Brain: Electrophysiological Measures of Attention to the Race and Gender of Multiply Categorizable Individuals. Journal of Personality and Social Psychology. 2003;85:616–626. doi: 10.1037/0022-3514.85.4.616.

- 25. Kelly DJ, Quinn PC, Slater AM, Lee K, Gibson A, Smith M, Ge L, Pascalis O. Three-month-olds, but not newborns, prefer own-race faces. Developmental Science. 2005;8:F31–F36. doi: 10.1111/j.1467-7687.2005.0434a.x.

- 26. Levin DT. Race as a visual feature: using visual search and perceptual discrimination tasks to understand face categories and the cross-race recognition deficit. Journal of Experimental Psychology: General. 2000;129(4). doi: 10.1037//0096-3445.129.4.559.

- 27. Levin DT, Banaji MR. Distortions in the Perceived Lightness of Faces: The Role of Race Categories. Journal of Experimental Psychology: General. 2006;135:501–512. doi: 10.1037/0096-3445.135.4.501.

- 28. Little AC, DeBruine LM, Jones BC, Waitt C. Category contingent aftereffects for faces of different races, ages and species. Cognition. 2008;106:1537–1547. doi: 10.1016/j.cognition.2007.06.008.

- 29. MacLin OH, Malpass RS. Racial categorization of faces: The ambiguous-race face effect. Psychology, Public Policy and Law: Special Edition on the Other-Race Effect. 2001;7:98–118.

- 30. Malpass RS, Kravitz J. Recognition of faces of own and other race. Journal of Personality and Social Psychology. 1969;13:330–334. doi: 10.1037/h0028434.

- 31. Malpass RS. Training in face recognition. In: Davies GM, Ellis HD, Shepherd JW, editors. Perceiving and remembering faces. London: Academic Press; 1981. pp. 271–285.

- 32. Meissner CA, Brigham JC. Thirty years of investigating the own-race bias in memory for faces: a meta-analytic review. Psychology, Public Policy, and Law. 2001;7:3–35.

- 33. Mercure E, Dick F, Halit H, Kaufman J, Johnson M. Differential Lateralization for Words and Faces: Category or Psychophysics? Journal of Cognitive Neuroscience. 2008;20(11):2070–2087. doi: 10.1162/jocn.2008.20137.

- 34. Michel C, Rossion B, Han J, Chung C-S, Caldara R. Holistic Processing is Finely Tuned for Faces of One’s Own Race. Psychological Science. 2006;17:608–615. doi: 10.1111/j.1467-9280.2006.01752.x.

- 35. Pfutze EM, Sommer W, Schweinberger SR. Age-related slowing in face and name recognition: Evidence from event-related brain potentials. Psychology and Aging. 2002;17:140–160. doi: 10.1037//0882-7974.17.1.140.

- 36. Rosenholtz R. Search asymmetries? What search asymmetries? Perception & Psychophysics. 2001;63:476–489. doi: 10.3758/bf03194414.

- 37. Rosenholtz R. The effect of background color on asymmetries in color search. Journal of Vision. 2004;4(3):224–240. doi: 10.1167/4.3.9.

- 38. Rossion B, Delvenne JF, Debatisse D, Goffaux V, Bruyer R, Crommelinck M, Guerit JM. Spatio-temporal localization of the face inversion effect: an event-related potentials study. Biological Psychology. 1999;50:173–189. doi: 10.1016/s0301-0511(99)00013-7.

- 39. Rossion B, Gauthier I, Tarr MJ, Despland B, Bruyer R, Linotte S, Crommelinck M. The N170 occipito-temporal component is delayed and enhanced to inverted faces but not to inverted objects: an electrophysiological account of face-specific processes in the human brain. Neuroreport. 2000;11:69–74. doi: 10.1097/00001756-200001170-00014.

- 40. Rossion B, Joyce CA, Cottrell GW, Tarr MJ. Early lateralization and orientation tuning for face, word, and object processing in the visual cortex. NeuroImage. 2003;20:1609–1624. doi: 10.1016/j.neuroimage.2003.07.010.

- 41. Russell R, Sinha P. Real world face recognition: The importance of surface reflectance properties. Perception. In press. doi: 10.1068/p5779.

- 42. Russell R. Sex, beauty, and the relative luminance of facial features. Perception. 2003;32:1093–1107. doi: 10.1068/p5101.

- 43. Russell R, Sinha P, Biederman I, Nederhouser M. Is pigmentation important for face recognition? Evidence from contrast negation. Perception. 2006;35:749–759. doi: 10.1068/p5490.

- 44. Schweinberger SR, Huddy V, Burton AM. N250r: A face-selective brain response to stimulus repetitions. Neuroreport. 2004;15:1501–1505. doi: 10.1097/01.wnr.0000131675.00319.42.

- 45. Schweinberger SR, Pickering EC, Jentzsch I, Burton AM, Kaufmann JM. Event-related brain potential evidence for a response of inferior temporal cortex to familiar face repetitions. Cognitive Brain Research. 2002;14:398–409. doi: 10.1016/s0926-6410(02)00142-8.

- 46. Scott LS, Tanaka JW, Sheinberg DL, Curran T. A reevaluation of the electrophysiological correlates of expert object processing. Journal of Cognitive Neuroscience. 2006;18:1453–1465. doi: 10.1162/jocn.2006.18.9.1453.

- 47. Sekuler AB, Gaspar CM, Gold JM, Bennett PJ. Inversion leads to quantitative, not qualitative, changes in face processing. Current Biology. 2004;14:391–396. doi: 10.1016/j.cub.2004.02.028.

- 48. Slone AE, Brigham JC, Meissner CA. Social and cognitive factors affecting the own-race bias in Whites. Basic & Applied Social Psychology. 2000;22:71–84.

- 49. Sporer SL. Recognizing faces of other ethnic groups: An integration of theories. Psychology, Public Policy & Law. 2001;7:36–97.

- 50. Stahl J, Wiese H, Schweinberger SR. Expertise and own-race bias in face processing: an event-related potential study. Neuroreport. 2008;19:583–587. doi: 10.1097/WNR.0b013e3282f97b4d.

- 51. Tanaka JW, Curran T, Porterfield AL, Collins D. Activation of Preexisting and Acquired Face Representations: The N250 Event-related Potential as an Index of Face Familiarity. Journal of Cognitive Neuroscience. 2006;18:1488–1497. doi: 10.1162/jocn.2006.18.9.1488.

- 52. Tanaka JW, Farah MJ. Parts and wholes in face recognition. Quarterly Journal of Experimental Psychology: Human Experimental Psychology. 1993;46A:225–245. doi: 10.1080/14640749308401045.

- 53. Tanaka JW, Kiefer M, Bukach CM. A holistic account of the own-race effect in face recognition: Evidence from a cross-cultural study. Cognition. 2004;93:B1–B9. doi: 10.1016/j.cognition.2003.09.011.

- 54. Tanaka JW, Pierce LJ. The neural plasticity of other-race face recognition. Cognitive, Affective, & Behavioral Neuroscience. 2009;9:122–131. doi: 10.3758/CABN.9.1.122.

- 55. Thierry G, Martin CD, Downing P, Pegna AJ. Controlling for interstimulus perceptual variance abolishes N170 face selectivity. Nature Neuroscience. 2007;10:505–511. doi: 10.1038/nn1864.

- 56. Valentine T, Bruce V. The effect of race, inversion, and encoding activity upon face recognition. Acta Psychologica. 1986;61:259–273. doi: 10.1016/0001-6918(86)90085-5.

- 57. Valentine T. A unified account of the effects of distinctiveness, inversion, and race in face recognition. The Quarterly Journal of Experimental Psychology. 1991;43:161–204. doi: 10.1080/14640749108400966.

- 58. Walker PM, Tanaka J. An encoding advantage for own-race versus other-race faces. Perception. 2003;32:1117–1125. doi: 10.1068/p5098.

- 59. Willadsen-Jensen EC, Ito TA. Ambiguity and the timecourse of racial perception. Social Cognition. 2006;24:580–606.

- 60. Yin RK. Looking at upside-down faces. Journal of Experimental Psychology. 1969;81:141–145.