Abstract

The neural basis of syntax is a matter of substantial debate. In particular, the inferior frontal gyrus (IFG), or Broca's area, has been prominently linked to syntactic processing, but the anterior temporal lobe has been reported to be activated instead of IFG when the presence of syntactic structure is manipulated. These findings are difficult to reconcile because they rely on different laboratory tasks, which tap into distinct computations and may relate only indirectly to natural sentence processing. Here we assessed neural correlates of syntactic structure building in natural language comprehension, free from artificial task demands. Subjects passively listened to Alice in Wonderland during functional magnetic resonance imaging, and we correlated brain activity with a word-by-word measure of the amount of syntactic structure analyzed. Syntactic structure building correlated with activity in the left anterior temporal lobe, but there was no evidence for a correlation between syntactic structure building and activity in inferior frontal areas. Our results suggest that the anterior temporal lobe computes syntactic structure under natural conditions.

Keywords: Language, Neuroimaging, Syntax, aTL

Introduction

The combination of words into an infinite number of complex phrases is a fundamental property of human language. Yet, despite 130 years of neuroscience research on language, the brain basis of this ability remains hotly debated. Syntactic computation, i.e. the capacity to build sentence structure, has famously been associated with the left inferior frontal gyrus (IFG) (Ben-Shachar, Hendler, Kahn, Ben-Bashat, & Grodzinsky, 2003; Caplan, Chen, & Waters, 2008; Caplan et al., 2002; Dapretto & Bookheimer, 1999; Embick, Marantz, Miyashita, O’Neil, & Sakai, 2000; Grodzinsky, 2001; Just, Carpenter, Keller, Eddy, & Thulborn, 1996; Stromswold, Caplan, Alpert, & Rauch, 1996). However, a growing body of evidence also suggests a role for the left anterior temporal lobe (aTL) in basic syntactic computation (Dronkers, Wilkins, Van Valin, Redfern, & Jaeger, 2004; Friederici, Meyer, & von Cramon, 2000; Humphries, Binder, Medler, & Liebenthal, 2006; Mazoyer et al., 1993; Rogalsky & Hickok, 2008; Stowe et al., 1998; Vandenberghe, Nobre, & Price, 2002). Reconciling these divergent findings is vital for understanding the neurobiological mechanisms underlying language, as well as for developing clinical treatments of language impairment.

Syntactic processing as a whole divides into many sub-computations, including the combinatory operations that build larger phrases from smaller ones, as well as the various computations that serve to establish long-distance dependencies. In this work we focused on the localization of the basic combinatory operation, i.e., the process by which words are combined to form larger phrases, or “Merge” as it is often called in theoretical linguistics (Chomsky, 1995). Combining words into phrases is pervasive during everyday language comprehension, and we sought to measure brain activity associated with this computation while minimizing the influence of artificial experimental factors by having subjects perform a relatively everyday activity: passively listening to a story.

Traditional models of the brain basis of syntax, derived from deficit/lesion studies, have long associated IFG with the processing of certain types of syntactically complex sentences (Zurif, 1995), yet the specific linguistic functions performed by IFG remain a matter of significant debate (see e.g. Caplan et al., 2007; Grodzinsky, 2001 and associated commentaries). A series of neuroimaging studies has built on the deficit/lesion literature by manipulating the syntactic complexity of sentences that have a similar semantic interpretation. For example, Stromswold et al. (1996) used positron emission tomography (PET) to compare the processing of simple right-branching bi-clausal sentences (the child spilled the juice that __ stained the rug) with more complex center-embedded sentences (the juice that the child spilled __ stained the rug) and reported increased activity in Broca's area for complex sentences. Recent research extending this work has found that Broca's area is consistently activated by various kinds of sentences involving long-distance dependencies (Ben-Shachar et al., 2003; Ben-Shachar, Palti, & Grodzinsky, 2004). These manipulations are confounded by differing demands on working memory (Chen, West, Waters, & Caplan, 2006; Stowe et al., 1998), but there is also evidence that when working memory is independently manipulated, parts of Broca's area appear to be sensitive to language-specific, but not non-linguistic, working memory demands (Santi & Grodzinsky, 2006, 2007). These studies suggest an important role for Broca's area in the syntactic processing of complex sentences, but they do not provide straightforward evidence for its involvement in the combinatory process that builds syntactic structure, henceforth “syntactic structure building.”

Studies that manipulate the attention of the participant, whether by varying the experimental task or the stimuli, have also been used to support a link between Broca's area and aspects of syntactic processing. Embick et al. (2000), for example, had subjects read sentences with either grammar or spelling mistakes and asked them to indicate how many errors they observed. In another study, Dapretto & Bookheimer (1999) asked participants to make semantic same/different judgments on sentence pairs which differed in surface characteristics that were either “syntactic” (e.g. active versus passive) or “semantic” (e.g. synonyms such as lawyer and attorney). Both of these studies reported increased activity in Broca's area for the “syntactic” task. However, it is unclear to what extent these meta-linguistic attention manipulations tap into the computations that are engaged during natural syntactic processing, which limits the ability of these results to answer questions about syntactic structure building definitively. In sum, while there is a range of evidence linking the processing of syntactically complex structures with Broca's area, it remains open whether this part of the cortex participates in syntactic structure building.

Several studies suggest that aTL is activated instead of IFG when syntactic structure is manipulated. These studies have primarily employed a sentence versus word list protocol in which subjects were shown either word lists that lacked a syntactic structure or coherent syntactically well-formed sentences constructed from the same or similar words as in the lists (Mazoyer et al., 1993). These conditions are likely to require an equivalent degree of lexical processing, but only the sentences are assumed to engender processing associated with composing words together. In this manipulation, sentences have systematically elicited more aTL activity than word lists, a finding that has been replicated with both visual (Stowe et al., 1998; Vandenberghe et al., 2002) and auditory stimuli (Friederici et al., 2000; Humphries et al., 2006; Rogalsky & Hickok, 2008). These studies provide evidence linking the anterior temporal lobe with sentence processing, leading to the hypothesis that the aTL is involved in syntactic structure building (Friederici & von Cramon, 2001; Grodzinsky & Friederici, 2006). Sentences and word lists, however, differ in many ways that are unrelated to the presence or absence of syntactic structure, making it difficult to conclude definitively from these studies that the aTL is involved in building syntactic structure. Furthermore, reconciling these findings with those that implicate Broca's area is particularly difficult because each literature relies on different experimental tasks.

We sought to investigate the localization of syntactic structure building under relatively naturalistic conditions, without the potential confound of artificial experimental task demands. We examined brain activity while participants listened to a story, adapting and developing a technique used to study visual processing during naturalistic viewing conditions. This approach has previously been applied while subjects watch a popular movie (Bartels & Zeki, 2004; Hasson, Malach, & Heeger, In press; Hasson, Nir, Levy, Fuhrmann, & Malach, 2004; Mukamel et al., 2005; Nir et al., 2007; Wilson, Molnar-Szakacs, & Iacoboni, 2008). While some of these naturalistic studies have investigated what cortical regions are relevant for language processing (Bartels & Zeki, 2004; Wilson et al., 2008), they have not aimed to disentangle the different operations that contribute to comprehension.

To investigate syntactic structure building under naturalistic conditions, we had subjects listen to a story, Alice in Wonderland, while brain activity was recorded using functional magnetic resonance imaging (fMRI). We used a word-by-word measure of syntactic structure to estimate the number of structure building operations computed at each word. This was contrasted with a measure that tracked the difficulty of accessing each word from the mental lexicon. These measures were correlated with hemodynamic activity to assess how structure building and lexical access affect brain activity while listening to a story.

We estimated word-by-word syntactic structure building by counting the number of syntactic nodes used to integrate each word into the phrase structure (Frazier, 1985; Hawkins, 1994; Miller & Chomsky, 1963). This metric was chosen because it transparently relates to the process of syntactic structure building that we were targeting. Our metric resembles Yngve's (1960) hypothesis that associates the depth of syntactic embedding with processing load. Unlike Yngve's hypothesis, however, which focused on the processing demands of holding unfinished constituents in working memory, our focus was on processing associated with structure building. Thus, our metric is designed to track the amount of structure that has to be postulated at each word. Our approach builds on Frazier (1985:157–169), who argues that “the ‘work’ involved in the syntactic processing of unambiguous sentences is to be identified with the postulation of non-terminal nodes” (p. 168).

A number of theorists have linked syntactic node count with syntactic complexity, or the difficulty of determining the syntactic structure for a particular string (Frazier, 1985; Hawkins, 1994; Miller & Chomsky, 1963). Hawkins (1994, ch. 4) reviews a large body of cross-linguistic corpus-based evidence showing a systematic preference for constructions which minimize the number of syntactic nodes per constituent, independent of the number of words. In addition, the number of syntactic nodes correlates with processing difficulty associated with relative clauses built on different types of noun phrases (see Hale, 2006:663–664).

Syntactic node count, however, is only one of many factors that have been implicated in parsing difficulty. Other factors include effort associated with holding and accessing representations in working memory (e.g., Gibson, 1998, 2000; Vasishth & Lewis, 2006) and the relative likelihood of applying a syntactic rule given the context (Hale, 2006; Levy, 2008). There is significant disagreement as to the relative importance of these factors in behavioral measurements of parsing difficulty, and we remain uncommitted as to the identity and weighting of these factors. Our interest is not in assessing brain activity associated with processing difficulty. Rather, this study is focused on localizing the neural basis of a specific computation: syntactic structure building. We do not assume that the amount of syntactic structure in a natural context should necessarily affect processing difficulty as measured by behavioral methods. We do, however, expect that more applications of the structure building computation should be associated with increased neurovascular activity in a region associated with this computation.

Effort associated with lexical access was estimated using word frequency. This variable robustly modulates the ease of lexical access (Balota, Cortese, Sergent-Marshall, Spieler, & Yap, 2004) and has been previously studied with neuroimaging methods (Carreiras, Mechelli, & Price, 2006; Fiebach, Friederici, Muller, & von Cramon, 2002; Fiez, Balota, Raichle, & Petersen, 1999; Kuo et al., 2003; Prabhakaran, Blumstein, Myers, Hutchison, & Britton, 2006).

Methods

Participants

Nine healthy subjects (3 women), ages 22–34, participated in the experiments. All subjects were right-handed fluent English speakers with normal audition, and all provided written informed consent. Procedures complied with the safety guidelines for MRI research and were approved by the University Committee on Activities Involving Human Subjects at New York University.

Materials

Subjects listened to a 30-minute portion of the story Alice’s Adventures in Wonderland by Lewis Carroll (recording of the book on compact disc, Brilliance Audio, Grand Haven, MI, 46417, 1995, starting at track 06, see supplementary materials for full text). The story was read by a male speaker of British English. After the experiment, subjects filled out a multiple-choice questionnaire concerning the details of the story to verify comprehension and attention (see supplemental materials).

Soundwave Power

As a preliminary to our linguistic analysis, we first identified regions involved in processing low-level aspects of the auditory stimulus. We extracted the absolute value of the sound power for an 18-minute audio segment that was not used in our linguistic analysis. We then convolved the sound power with a canonical hemodynamic response function (Boynton, Engel, Glover, & Heeger, 1996) and sub-sampled the result to 0.5 Hz, matching the sampling rate of our fMRI measurements (one volume every 2 s). This created a vector that modeled the hemodynamic response to changes in sound power.

Linguistic Predictors

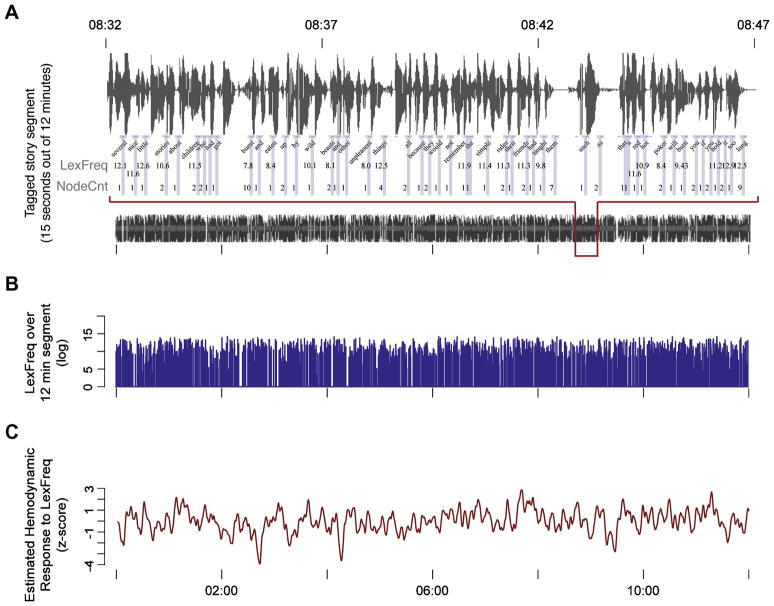

To identify brain activity associated with the linguistic computations of interest, a twelve-minute segment of the auditory story was annotated for two linguistic variables, syntactic node count and lexical frequency (Figures 1 and 2). Word boundaries in the 12-minute auditory segment were manually identified.

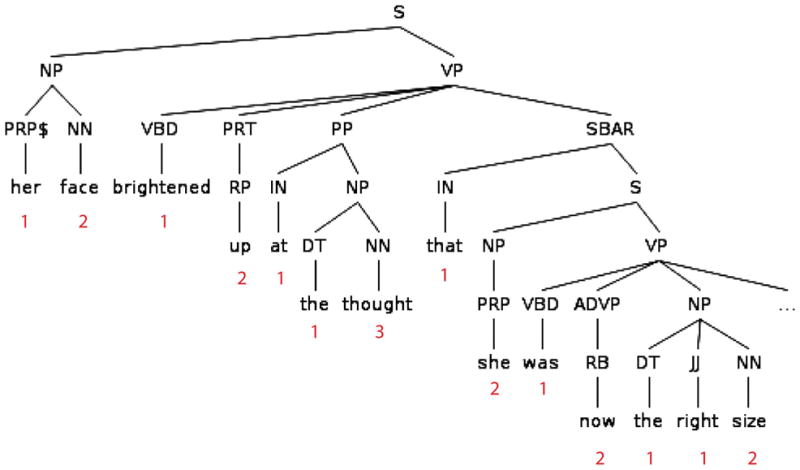

Figure 1.

A sentence fragment from the story. The numbers indicate the NodeCnt measure associated with each word. NodeCnt provided an estimate of the amount of structure built word-by-word (see Methods). Node labels follow the conventions of the Treebank 2 scheme (Marcus et al., 1994).

Figure 2.

Illustration of how predictors were generated. (A) A 15-second epoch of the sound signal illustrating how word boundaries were identified and annotated with lexical frequency and node count information. (B) We assigned a node count and lexical frequency value (shown) to each time point when a word was presented during the time course of the 12-minute story segment. (C) We convolved each predictor (e.g. lexical frequency, shown) with a function describing the estimated hemodynamic response (Boynton et al., 1996) and re-sampled it at 0.5 Hz to match the characteristics of the recorded fMRI data. Note that the lexical frequency time course in panel B includes low-frequency components, evident as fluctuations in panel C. NodeCnt, number of syntactic nodes; LexFreq, lexical frequency.

To identify brain activity associated with word-by-word syntactic structure building, we counted the number of syntactic nodes used to integrate each word into the phrase structure of its sentence. In the terminology of phrase-structure trees, each lexical item forms a terminal node, and every node that dominates one or more lexical items is a non-terminal node. For each non-terminal node in the phrase structures in our story, we identified the right-most terminal dominated by that node. We then counted, for each word, the number of nodes for which it was the right-most terminal. To be more precise, let phrase α be the set of terminals and non-terminals dominated by a node β. Moving incrementally across a string, the phrase α is open if we have encountered the left-most terminal dominated by β but not the right-most terminal. The phrase α is closed once we have encountered the right-most terminal dominated by β. We counted the number of phrases that became closed at each word. Note that this is equivalent to counting the number of right brackets that follow each word in a phrase marker written in bracket notation.
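The right-bracket counting described above can be illustrated with a short sketch. It assumes the parse is available as a Penn-Treebank-style bracketed string, as produced under the Treebank 2 scheme; the function name `node_counts` and the `include_preterminals` switch are hypothetical. By default every closing bracket is credited to the word it follows, including the part-of-speech bracket directly above each word, which is the literal bracket-counting equivalence stated above; whether the NodeCnt values used in the study include these preterminal nodes is an assumption this sketch does not settle.

```python
import re


def node_counts(bracketed, include_preterminals=True):
    """Return (word, phrases closed at that word) pairs for a bracketed parse.

    Hypothetical helper: `bracketed` is a Penn-Treebank-style string such as
    "(S (NP (DT the) (NN child)) (VP ...))". Every ")" closes one
    non-terminal, and that closure is credited to the most recent word.
    """
    tokens = re.findall(r"\(|\)|[^\s()]+", bracketed)
    words, counts = [], []
    after_open = False  # True when the next symbol is a node label
    for tok in tokens:
        if tok == "(":
            after_open = True
        elif tok == ")":
            if counts:
                counts[-1] += 1  # this phrase closes at the latest word
        elif after_open:
            after_open = False  # node label (S, NP, DT, ...), not a word
        else:
            words.append(tok)
            counts.append(0)
    if not include_preterminals:
        # Every word closes exactly one part-of-speech bracket; drop it.
        counts = [c - 1 for c in counts]
    return list(zip(words, counts))


parse = "(S (NP (DT the) (NN child)) (VP (VBD spilled) (NP (DT the) (NN juice))))"
print(node_counts(parse))
```

For this toy parse the counts are 1, 2, 1, 1 and 4, and they sum to the nine non-terminal nodes in the tree, as expected when every bracket is closed by exactly one word.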

Syntactic structure was determined using an automated parser (Bikel, 2002), which had been trained on a corpus of text from the Wall Street Journal for which syntactic phrase-structure information was marked in accordance with the Treebank 2 scheme (Marcus et al., 1994). The syntactic parse for the story segment was manually reviewed for correctness and then each word was annotated with the number of syntactic phrases which were closed at the presentation of that word. An example syntactic tree from the story, along with the node counts, is given in Figure 1.

To assess activity associated with lexical access, the second variable of interest, we determined the log surface frequency of all open-class words. Frequency counts were based on the HAL written-language corpus of approximately 131 million words, made available through the English Lexicon Project (Balota et al., 2007). We excluded closed-class function words from the lexical frequency measurements. Behavioral research has generally found no effect of frequency for the kinds of closed-class items used in this story, a finding typically taken to indicate qualitatively different processing and representation for closed vs. open-class words (Bradley, 1983; Segalowitz & Lane, 2000). Because our current aim was not to address the closed vs. open-class distinction, we only entered the frequencies of the open-class items into the analysis, treating closed-class words as equivalent to silence.

Annotating the 12-minute segment with values for node count (NodeCnt) and log lexical frequency (LexFreq) led to a vector of values for each factor, matched to the temporal presentation of each word in the story at the millisecond level (Fig. 2A, B). A third vector was also created which simply identified the end-point of each word: a value of 1 indicated the end-point of a word, and a value of 0 was assigned to all other time points. This factor tracked the rate at which words were presented in the story (Rate) and provided a global measure of any stimulus-locked hemodynamic activity. Assuming that linguistic processing at all levels of representation is at least partially dependent on the rate of linguistic input, Rate provided a means to capture activity ranging from low-level auditory processes through to semantic integration.
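A minimal sketch of how the three millisecond-resolution vectors could be assembled from word annotations follows. The input format (a list of dicts with hypothetical keys `end`, `nodecnt` and `logfreq`) and the function name `impulse_predictors` are assumptions for illustration. Placing the NodeCnt and LexFreq values at each word's end-point, like Rate, is also an assumption; the text does not pin down exactly which samples within a word carry those values.

```python
import numpy as np


def impulse_predictors(words, duration_s, fs=1000):
    """Build NodeCnt, LexFreq and Rate vectors sampled at `fs` Hz.

    Hypothetical input: `words` is a list of dicts with keys
      'end'      word end-point in seconds
      'nodecnt'  number of phrases closed at this word
      'logfreq'  log surface frequency, or None for closed-class words
    Values are placed at each word's end-point (an assumption).
    """
    n = int(round(duration_s * fs))
    nodecnt = np.zeros(n)
    lexfreq = np.zeros(n)
    rate = np.zeros(n)
    for w in words:
        i = min(int(round(w["end"] * fs)), n - 1)
        rate[i] = 1.0  # 1 marks a word end-point, 0 elsewhere
        nodecnt[i] = w["nodecnt"]
        if w["logfreq"] is not None:
            lexfreq[i] = w["logfreq"]  # closed-class words stay 0, like silence
    return nodecnt, lexfreq, rate


words = [
    {"end": 0.5, "nodecnt": 1, "logfreq": None},  # e.g. a function word
    {"end": 1.2, "nodecnt": 2, "logfreq": 9.3},   # an open-class word
]
nodecnt, lexfreq, rate = impulse_predictors(words, duration_s=2.0)
```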

Each of these vectors was then convolved with a canonical hemodynamic response function (Boynton et al., 1996) and sub-sampled at 0.5 Hz to match the temporal resolution of the fMRI data (Fig. 2C). Convolution introduced a high degree of correlation between Rate and the two linguistic predictors, NodeCnt and LexFreq (r = 0.63 and r = 0.47, respectively). Such correlation must be taken into account when trying to distinguish neural activity associated with individual linguistic operations from other processes tied to the rate of word presentation.
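The convolution and sub-sampling step can be sketched as below. The HRF is a gamma function of the general form used by Boynton et al. (1996), h(t) = (t/τ)^(n−1) e^(−t/τ) / (τ (n−1)!) after a pure delay, but the parameter values (n = 3, τ = 1.25 s, delay = 2.5 s) are illustrative assumptions rather than the values used in the study. The names `gamma_hrf` and `to_bold_regressor` are hypothetical; `tr=2.0` reflects the 2000 ms repetition time, i.e. 0.5 Hz sampling.

```python
from math import factorial

import numpy as np


def gamma_hrf(fs, n=3, tau=1.25, delay=2.5, length_s=30.0):
    """Delayed gamma-function HRF sampled at `fs` Hz.

    Parameter values are illustrative assumptions, not fitted values.
    """
    t = np.arange(0, length_s, 1.0 / fs) - delay
    h = np.zeros_like(t)
    pos = t > 0
    h[pos] = (t[pos] / tau) ** (n - 1) * np.exp(-t[pos] / tau) / (tau * factorial(n - 1))
    return h / h.sum()  # unit area, so predictors keep comparable scales


def to_bold_regressor(impulses, fs, tr=2.0):
    """Convolve an impulse vector with the HRF, then keep one sample per TR."""
    conv = np.convolve(impulses, gamma_hrf(fs))[: len(impulses)]
    step = int(round(tr * fs))
    return conv[::step]


fs = 10  # coarse rate for the demo; the annotations were at 1 kHz
impulses = np.zeros(600)  # 60 s of stimulus
impulses[100] = 1.0       # a single word ending at t = 10 s
regressor = to_bold_regressor(impulses, fs)  # 30 samples, one per 2 s
```

With a millisecond-resolution vector for a 12-minute segment, `np.convolve` is slow; `scipy.signal.fftconvolve` computes the same linear convolution much faster.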

Accordingly, the two linguistic predictors were orthogonalized against the Rate predictor. This orthogonalization step created two predictors which modeled brain activity associated with syntactic structure building and lexical access that could not be explained by the time-course of linguistic input (Rate). Importantly, the two orthogonalized predictors were not only uncorrelated with Rate but they were also only weakly anti-correlated with each other (r = −0.20). In concert with Rate, the orthogonalized NodeCnt and LexFreq predictors provided the tools to disentangle the independent effects of syntactic structure building and lexical access on brain activity that was time-locked to the presentation of the story.
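The text does not specify how the orthogonalization was carried out. A common approach, assumed here, is to regress each linguistic predictor on Rate (plus an intercept) by ordinary least squares and keep the residual, which is by construction uncorrelated with Rate. The helper name `orthogonalize` is hypothetical.

```python
import numpy as np


def orthogonalize(y, x):
    """Return the part of predictor `y` not explained by a linear fit on `x`.

    Assumed method: OLS residualization with an intercept, so the result
    has zero mean and zero correlation with `x`.
    """
    X = np.column_stack([np.ones_like(x), x])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X @ beta


rng = np.random.default_rng(0)
rate_reg = rng.standard_normal(300)  # stand-in for the convolved Rate predictor
nodecnt_reg = 0.6 * rate_reg + rng.standard_normal(300)  # correlated stand-in
nodecnt_orth = orthogonalize(nodecnt_reg, rate_reg)
```

Only the linguistic predictors are residualized; Rate itself stays in the model unchanged, so any variance shared between Rate and NodeCnt is attributed to Rate.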

Experimental Protocol

Subjects listened to the 30-minute story segment once, as well as to a repeated presentation of the first 12 minutes of the same segment (starting at track 06, lasting 11 minutes, 35 seconds), which corresponded to the portion of the story that had been annotated with the linguistic metrics. The story segment was padded with 24 seconds of silence at the beginning and end. Stimuli were presented using QuickTime software (Apple, California) and were delivered to the subjects using commercial, MRI-compatible, high-fidelity headphones (Confon HP-SI01 by MR Confon, Magdeburg, Germany). Volume was set independently for each subject to ensure comfort while allowing for maximum stimulation. Subjects were instructed to listen attentively.

MRI Acquisition

Functional magnetic resonance imaging at 3T (Allegra, Siemens, Erlangen) was used to measure blood-oxygen level dependent (BOLD) changes in cortical activity. During each fMRI scan, a time series of volumes was acquired using a T2*-weighted echo planar imaging (EPI) pulse sequence and a standard head coil (repetition time, 2000 ms; echo time, 30 ms; flip angle, 80°; 32 slices; 3×3×4 mm voxels; field of view, 192 mm). In addition, T1-weighted high-resolution (1×1×1 mm) anatomical images were acquired with a magnetization-prepared rapid acquisition gradient echo (MPRAGE) pulse sequence for each subject to allow accurate co-registration with the functional data as well as segmentation and three-dimensional reconstruction of the cortical surface.

Data Analysis

MRI Pre-processing

The fMRI data were analyzed with the BrainVoyager software package (Brain Innovation, Maastricht, Netherlands) and with additional software written in MATLAB (MathWorks Inc., Natick, MA). Anatomical scans were transformed to Talairach space (Talairach & Tournoux, 1988). Functional scans were subjected to within-session 3D motion correction and linear trend removal, and were registered with each subject's anatomical scan. Low frequencies below 0.006 Hz were also removed by high-pass filtering, and scans were spatially smoothed by a Gaussian filter of 6 mm full width at half maximum (FWHM) to facilitate statistical analysis across subjects. We cropped the first 17 and last 20 time points of each scan, which corresponded to the initial and final silence periods and to the delayed hemodynamic responses to stimulus onset and offset. The data from the 30-minute runs were divided into two segments: a 12-minute portion for which the story had been tagged, and the remaining 18-minute segment. Finally, to improve the signal-to-noise ratio, we averaged the 12-minute portions across the two repeated presentations for each subject.

Correlation to Sound-wave Amplitude

We identified regions associated with processing low-level properties of the auditory signal (Fig. 3, red). This was done by correlating the measured fMRI time series from every voxel in each subject with the sound power predictor from the 18-minute portion of the story which was not used in the subsequent linguistic analysis. We applied the Fisher transformation to the resulting correlation coefficients and performed t-tests on these coefficients to identify which voxels showed a significant correlation with sound power, treating subjects as a random effect. Statistical significance was assessed after adjusting for serial correlation within the residuals (Goebel, 1996).
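The random-effects procedure can be sketched as a voxelwise Pearson correlation per subject, a Fisher transformation (arctanh), and a one-sample t-test across subjects. This is a simplified sketch: unlike the analysis described above, it does not adjust for serial correlation in the residuals (Goebel, 1996), so its t values would be optimistic for autocorrelated fMRI data. The function name `group_correlation_t` and the array layout are assumptions.

```python
import numpy as np


def group_correlation_t(bold, predictor):
    """Random-effects test of voxelwise correlation with a predictor.

    bold: array (n_subjects, n_timepoints, n_voxels); predictor: (n_timepoints,).
    Returns one-sample t values (df = n_subjects - 1) on Fisher-z values.
    No correction for serial correlation is applied (simplification).
    """
    p = (predictor - predictor.mean()) / predictor.std()
    b = (bold - bold.mean(axis=1, keepdims=True)) / bold.std(axis=1, keepdims=True)
    r = np.einsum("stv,t->sv", b, p) / len(p)  # Pearson r per subject and voxel
    z = np.arctanh(np.clip(r, -0.999999, 0.999999))  # Fisher transformation
    n = z.shape[0]
    return z.mean(axis=0) / (z.std(axis=0, ddof=1) / np.sqrt(n))


rng = np.random.default_rng(1)
sound_power = rng.standard_normal(200)      # stand-in for the sound-power predictor
bold = rng.standard_normal((9, 200, 3))     # 9 subjects, 200 volumes, 3 voxels
bold[:, :, 0] += 2 * sound_power            # voxel 0 tracks the predictor
t_values = group_correlation_t(bold, sound_power)
```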

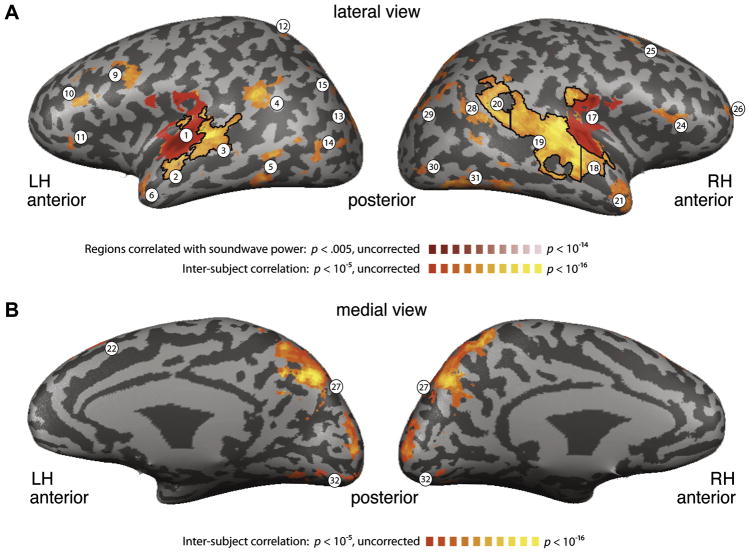

Figure 3.

Regions of interest (ROIs). Regions exhibiting significant correlation between subjects using ISC (orange; r > .21) and regions correlated with the sound power (red). Dark lines indicate anatomically and functionally based sub-divisions of the ROIs (see Methods). Numbers for each ROI correspond to Table 1. (A) Lateral view. Red, sound power was correlated with activity bilaterally in a portion of the STG including Heschl's gyrus. Orange, activity was correlated across subjects in a large portion of the temporal lobe bilaterally, as well as in IFG and MFG. (B) Medial view. Activity was correlated across subjects in a portion of the parietal lobe (precuneus and cuneus) extending into the lingual gyrus.

We corrected for multiple comparisons by establishing a minimum size for clusters of voxels showing a significant effect (Forman et al., 1995). The cluster-size threshold was determined using Monte Carlo simulation by creating 1000 three-dimensional images containing normally distributed random noise. The simulation was implemented in a software plug-in for the BrainVoyager QX analysis package. The simulated data were matched to the dimensions, voxel size, and smoothing parameters (i.e., spatial correlation) of the actual data. The simulated data were used to determine the likelihood of observing, by chance, clusters of contiguous voxels that were significant at a specified individual voxel threshold. With a per-voxel threshold of .005 (uncorrected p-value), clusters larger than 21 significant voxels were observed in less than 5% of the simulated runs, and we set the cluster-size threshold accordingly to achieve a corrected p-value of .05.
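The Monte Carlo logic can be sketched with NumPy and SciPy: generate smoothed Gaussian noise volumes, threshold them at the per-voxel level, record the largest cluster in each run, and take the 95th percentile of those maxima. This is not the BrainVoyager QX plug-in. The one-tailed z cut-off, isotropic smoothing, and face-connected (6-neighbor) clusters are all assumptions, and `cluster_size_threshold` is a hypothetical name.

```python
import numpy as np
from scipy import ndimage, stats


def cluster_size_threshold(shape, fwhm_vox, p_voxel=0.005, n_iter=1000,
                           alpha=0.05, seed=0):
    """Cluster size exceeded by chance in at most `alpha` of noise simulations.

    Assumptions: isotropic Gaussian smoothing, one-tailed voxel threshold,
    face-connected clusters (scipy's default 3-D structuring element).
    """
    rng = np.random.default_rng(seed)
    sigma = fwhm_vox / np.sqrt(8 * np.log(2))  # convert FWHM to Gaussian sigma
    z_cut = stats.norm.isf(p_voxel)            # per-voxel z threshold
    max_sizes = np.zeros(n_iter, dtype=int)
    for i in range(n_iter):
        noise = ndimage.gaussian_filter(rng.standard_normal(shape), sigma)
        noise /= noise.std()                   # restore unit variance after smoothing
        labels, n = ndimage.label(noise > z_cut)
        if n:
            max_sizes[i] = np.bincount(labels.ravel())[1:].max()
    # Clusters larger than this occurred in at most `alpha` of the runs.
    return int(np.ceil(np.quantile(max_sizes, 1 - alpha)))


k = cluster_size_threshold((16, 16, 16), fwhm_vox=2.0, n_iter=20)
```

For the 6 mm smoothing and 3 mm in-plane voxels described above, `fwhm_vox` would be about 2; a realistic run would use the full brain-volume `shape` and `n_iter=1000`.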

The resulting statistical map is shown on an inflated 3D reconstruction of the cortical surface from a single subject.

Inter-Subject Correlation

Inter-subject correlation (ISC; Hasson et al., 2004) was used to identify areas showing reliable response time-courses across subjects, i.e., areas that responded in a similar way across all subjects who listened to the story (Fig. 3, orange). In computing ISC, the time-course of activity recorded from one subject (or group of subjects) is used to predict the time-course of activity from another subject (or another group). Voxels with a high correlation between subjects were presumed to be driven by the external stimulus. This method has been previously used to detect time-locked selective response time courses within a large number of brain regions during natural viewing conditions (e.g., a movie; Hasson et al., 2004).

Note that the ISC does not require any prior knowledge of the expected brain signals in response to a story. Thus, the ISC provides an unbiased method to detect all reliable response patterns across subjects, independently of the linguistic predictors described above. The ISC map was used to define regions of interest (ROIs) which were later analyzed for syntactic and lexical effects (see below).

ISC was computed with data from the 18-minute portion of the story which was not used in the subsequent linguistic analysis. This ensured that the identification and subsequent analysis of the ROIs were statistically independent. We divided the subjects (arbitrarily) into two groups and averaged the data across subjects in each group, creating two group averages. The 18-minute time series of fMRI responses from each group were then divided into 10 epochs, each lasting 1 minute, 48 seconds. A series of correlations were then carried out between groups. For every voxel, the time-course within each epoch n from the first group was correlated with that of the matching epoch n from the second group. The mean of these 10 correlations was then computed per voxel.
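The split-half, epoch-wise ISC can be sketched as follows. At a 2 s repetition time, 18 minutes is 540 volumes, so each of the 10 epochs spans 54 volumes (1 minute, 48 seconds). The function name `epoch_isc` and its `shift` argument are hypothetical; with `shift=1`, epoch n of one group is compared with epoch n+1 of the other (wrapping around), which mirrors the control comparison described in the next paragraph.

```python
import numpy as np


def epoch_isc(group_a, group_b, n_epochs=10, shift=0):
    """Mean epoch-wise correlation between two group-averaged data sets.

    group_a, group_b: arrays (n_timepoints, n_voxels). Epoch n of group_a is
    correlated with epoch (n + shift) % n_epochs of group_b, voxel by voxel,
    and the n_epochs correlations are averaged per voxel.
    """
    ea = np.array_split(group_a, n_epochs, axis=0)
    eb = np.array_split(group_b, n_epochs, axis=0)
    rs = []
    for n in range(n_epochs):
        a, b = ea[n], eb[(n + shift) % n_epochs]
        m = min(len(a), len(b))  # epochs can differ by one sample
        a = a[:m] - a[:m].mean(axis=0)
        b = b[:m] - b[:m].mean(axis=0)
        rs.append((a * b).sum(axis=0) / np.sqrt((a ** 2).sum(axis=0) * (b ** 2).sum(axis=0)))
    return np.mean(rs, axis=0)


rng = np.random.default_rng(2)
shared = rng.standard_normal((540, 2))                    # stimulus-driven signal
group_a = shared + 0.5 * rng.standard_normal((540, 2))
group_b = shared + 0.5 * rng.standard_normal((540, 2))
isc = epoch_isc(group_a, group_b)                         # matched epochs
```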

We selected a statistical threshold and controlled for multiple comparisons by estimating the maximum r values that would be observed if subjects were not exposed to the same stimulus. Specifically, we correlated each fMRI time-series epoch n from one group with epoch n+1 from the second group (and the last epoch in one group with the first epoch in the other group). We identified the minimum r value for which there was no spurious ISC with a cluster-size of 5 functional voxels. The resulting statistical threshold was r = .18 but we used a value of r = .21 to be conservative and to make it easier to delineate the boundaries between some of the ROIs. In particular, for r = .18, the occipitoparietal ROIs were not clearly separated from the right temporal lobe ROIs.
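Given a volume of ISC values from the mismatched-epoch comparison, the threshold search can be sketched as a binary search over candidate r values. It relies on the fact that raising the threshold can only shrink supra-threshold clusters. Reading “no spurious ISC with a cluster-size of 5 functional voxels” as “no cluster of 5 or more voxels” is an interpretation, and so is the use of face-connected clusters; `null_isc_threshold` is a hypothetical name.

```python
import numpy as np
from scipy import ndimage


def null_isc_threshold(null_r, min_cluster=5):
    """Smallest r such that no cluster of >= min_cluster voxels in the
    null ISC volume `null_r` (3-D array) lies above it."""
    vals = np.unique(null_r)  # sorted candidate thresholds

    def no_large_cluster(thr):
        labels, n = ndimage.label(null_r > thr)
        return n == 0 or np.bincount(labels.ravel())[1:].max() < min_cluster

    # no_large_cluster(vals[-1]) is always True: nothing exceeds the maximum.
    lo, hi = 0, len(vals) - 1
    while lo < hi:
        mid = (lo + hi) // 2
        if no_large_cluster(vals[mid]):
            hi = mid
        else:
            lo = mid + 1
    return float(vals[lo])


null_r = np.zeros((10, 10, 10))
null_r[2:4, 2:4, 2:4] = 0.3  # an 8-voxel spurious cluster
null_r[7, 7, 7] = 0.5        # an isolated voxel
threshold = null_isc_threshold(null_r)
```

In this toy volume the 8-voxel block must be excluded, so the search settles on r = 0.3, above which only the isolated voxel survives.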

The ISC was also computed using a complementary procedure, with comparable results (not shown). Correlations were computed, separately for each voxel, between each pair of individual subjects, and the resulting correlation coefficients were averaged across pairs of subjects. Results matched those observed when averaging first across subjects in each of the two groups, albeit with lower correlation values because the individual subject data were noisier than group data.
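The complementary pairwise procedure can be sketched as follows. With nine subjects there are 36 subject pairs. The function name `pairwise_isc` and the array layout are assumptions.

```python
from itertools import combinations

import numpy as np


def pairwise_isc(data):
    """Voxelwise correlation averaged over all pairs of individual subjects.

    data: array (n_subjects, n_timepoints, n_voxels).
    """
    z = (data - data.mean(axis=1, keepdims=True)) / data.std(axis=1, keepdims=True)
    n_t = data.shape[1]
    rs = [(z[i] * z[j]).sum(axis=0) / n_t  # Pearson r for one subject pair
          for i, j in combinations(range(len(data)), 2)]
    return np.mean(rs, axis=0)


rng = np.random.default_rng(3)
data = 0.5 * rng.standard_normal((4, 200, 2))  # 4 subjects, 2 voxels
data[:, :, 0] += rng.standard_normal(200)      # voxel 0 shares a signal
r = pairwise_isc(data)
```

Because single-subject time courses carry more noise than group averages, these pairwise values are expected to be lower than the split-half ISC values, as the text notes.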

Anatomical & Functionally Defined ROIs

Before conducting the linguistic analysis, we subdivided some of the ROIs based on functional and anatomical criteria. Low-level auditory ROIs were identified in each hemisphere as regions correlating with sound-wave power, as described above (Table 1 and Fig. 3). ISC identified a number of ROIs (Table 1 and Fig. 3) including contiguous regions spanning large portions of the superior temporal lobe bilaterally, crossing several anatomical boundaries. These large superior temporal lobe regions were subdivided into 3 sub-regions in each hemisphere, as follows. We defined an anterior portion of the superior temporal gyrus (STG) consisting of all voxels exhibiting high ISC (thresholded as described above), anterior to the (anterior-posterior) midpoint of the low-level auditory ROI in each hemisphere, and also non-overlapping with the low-level auditory ROI. Similarly, we defined a posterior portion of the STG consisting of all voxels exhibiting high ISC, posterior to the midpoint of the low-level auditory ROI in each hemisphere, and non-overlapping with the low-level auditory ROI. Lastly, we defined an angular gyrus ROI in the right hemisphere. We identified the point on the superior edge of the middle temporal gyrus, individually for each subject, where it begins to curve upwards around the superior temporal sulcus, and used the average coordinates of this point across subjects to determine the mean location of the boundary between the middle temporal gyrus and angular gyrus. Voxels contiguous with the large temporal lobe region exhibiting high ISC, but posterior and superior to this junction, were identified as a distinct angular gyrus ROI.
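The anterior/posterior STG split can be sketched with boolean voxel masks. Taking the “midpoint” to be halfway between the most anterior and most posterior voxels of the low-level auditory ROI is an assumption (a centroid would be an alternative), and `split_stg` is a hypothetical name. The angular gyrus boundary, which depended on per-subject anatomical landmarks, is not modeled.

```python
import numpy as np


def split_stg(isc_mask, auditory_mask, y_coords):
    """Split a superior temporal ISC region into anterior and posterior parts.

    isc_mask, auditory_mask: boolean arrays of the same shape (one hemisphere).
    y_coords: array of the same shape giving each voxel's anterior-posterior
    coordinate (larger = more anterior, as in Talairach space).
    """
    y_aud = y_coords[auditory_mask]
    y_mid = (y_aud.min() + y_aud.max()) / 2    # assumed definition of midpoint
    candidates = isc_mask & ~auditory_mask     # exclude the auditory ROI itself
    anterior = candidates & (y_coords > y_mid)
    posterior = candidates & (y_coords < y_mid)
    return anterior, posterior


y = np.arange(10)                  # toy 1-D "volume" along the y axis
auditory = (y >= 4) & (y <= 6)
isc = np.ones(10, dtype=bool)
anterior, posterior = split_stg(isc, auditory, y)
```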

Table 1.

Location and size of regions of interest (ROIs), along with activity evoked by the two linguistic predictors: syntactic node count and lexical frequency. ROI numbers in parentheses mark regions that do not appear on the cortical surface projection in Figure 3. BA, Brodmann's area within which each ROI was found. Coordinates, Talairach coordinates of the centroid of each ROI. Size, number of voxels (3×3×3 mm) included in each ROI. fMRI response (% change in image intensity), mean regression coefficient (beta value) averaged across subjects. SEM, standard error of the mean. FDR, false discovery rate statistic. Grey boxes indicate regions where activity was significantly correlated with a predictor.

| Hemisphere | # | Region | BA | x | y | z | Size (voxels) | Syntactic complexity: fMRI response | Syntactic complexity: SEM | Syntactic complexity: q(FDR) | Lexical frequency: fMRI response | Lexical frequency: SEM | Lexical frequency: q(FDR) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Left | 1 | Superior Temporal Gyrus, Transverse Temporal Gyrus | 41, 22 | −50 | −17 | 9 | 241 | −0.08 | 0.04 | 0.107 | 0.10 | 0.02 | 0.021 |
| Left | 2 | Anterior Superior Temporal Gyrus | 22 | −55 | −13 | −1 | 39 | 0.06 | 0.03 | 0.150 | 0.07 | 0.03 | 0.106 |
| Left | 3 | Posterior Superior Temporal Gyrus | 22, 42 | −55 | −29 | 7 | 182 | 0.05 | 0.03 | 0.151 | 0.08 | 0.03 | 0.071 |
| Left | 4 | Supramarginal Gyrus | 39, 22 | −56 | −55 | 23 | 47 | 0.05 | 0.04 | 0.192 | 0.03 | 0.03 | 0.335 |
| Left | 5 | Fusiform Gyrus, Inferior Temporal Gyrus | 37 | −53 | −55 | −12 | 42 | 0.05 | 0.02 | 0.110 | 0.01 | 0.03 | 0.472 |
| Left | 6 | Anterior Temporal Lobe | 38 | −50 | 11 | −16 | 47 | 0.12 | 0.03 | 0.030 | 0.03 | 0.03 | 0.347 |
| Left | (7) | Superior Frontal Gyrus | 6 | −9 | 14 | 64 | 27 | −0.01 | 0.04 | 0.465 | 0.04 | 0.03 | 0.207 |
| Left | (8) | Superior Frontal Gyrus | 6 | −23 | −7 | 65 | 7 | −0.02 | 0.04 | 0.353 | 0.04 | 0.02 | 0.146 |
| Left | 9 | Precentral Gyrus | 6 | −45 | 2 | 29 | 39 | −0.03 | 0.03 | 0.256 | 0.06 | 0.02 | 0.040 |
| Left | 10 | Middle Frontal Gyrus | 46 | −45 | 24 | 26 | 17 | −0.01 | 0.03 | 0.411 | 0.03 | 0.03 | 0.369 |
| Left | 11 | Inferior Frontal Gyrus | 47, 45 | −50 | 20 | 1 | 9 | 0.02 | 0.03 | 0.338 | 0.02 | 0.04 | 0.430 |
| Left | 12 | Superior Parietal Lobule | 7 | −29 | −66 | 51 | 11 | 0.01 | 0.03 | 0.460 | 0.10 | 0.02 | 0.023 |
| Left | 13 | Middle Occipital Gyrus | 18 | −22 | −86 | 12 | 23 | 0.01 | 0.03 | 0.402 | 0.04 | 0.03 | 0.151 |
| Left | 14 | Middle Occipital Gyrus | 19 | −41 | −75 | 3 | 19 | −0.02 | 0.04 | 0.402 | 0.02 | 0.04 | 0.432 |
| Left | 15 | Cuneus | 19 | −26 | −83 | 29 | 10 | −0.01 | 0.03 | 0.441 | 0.08 | 0.03 | 0.068 |
| Left | (16) | Cerebellum | | −22 | −69 | −20 | 33 | 0.02 | 0.02 | 0.296 | 0.06 | 0.04 | 0.180 |
| Right | 17 | Transverse Temporal Gyrus, Insula | 41, 13 | 49 | −17 | 10 | 143 | −0.12 | 0.02 | 0.019 | 0.09 | 0.02 | 0.030 |
| Right | 18 | Anterior Superior Temporal Gyrus | 22 | 54 | −10 | 0 | 60 | 0.00 | 0.03 | 0.377 | 0.06 | 0.03 | 0.096 |
| Right | 19 | Superior Temporal Gyrus, Middle Temporal Gyrus | 22, 41 | 54 | −29 | 5 | 313 | −0.04 | 0.02 | 0.151 | 0.01 | 0.02 | 0.384 |
| Right | 20 | Angular Gyrus | 22, 39 | 47 | −59 | 20 | 167 | −0.03 | 0.02 | 0.171 | 0.07 | 0.03 | 0.073 |
| Right | 21 | Anterior Temporal Lobe | 38 | 46 | 9 | −21 | 68 | 0.06 | 0.03 | 0.119 | 0.03 | 0.02 | 0.239 |
| Right | 22 | Superior Frontal Gyrus | 8 | 4 | 24 | 52 | 8 | −0.08 | 0.04 | 0.095 | 0.03 | 0.02 | 0.137 |
| Right | (23) | Superior Frontal Gyrus | 8 | −2 | 33 | 47 | 33 | −0.04 | 0.02 | 0.135 | 0.05 | 0.02 | 0.042 |
| Right | 24 | Inferior Frontal Gyrus | 45 | 49 | 16 | 19 | 35 | 0.02 | 0.03 | 0.239 | 0.02 | 0.02 | 0.318 |
| Right | 25 | Middle Frontal Gyrus | 6 | 26 | 6 | 56 | 73 | −0.03 | 0.03 | 0.284 | 0.06 | 0.02 | 0.051 |
| Right | 26 | Superior Frontal Gyrus | 10 | 33 | 55 | 18 | 12 | −0.05 | 0.02 | 0.078 | 0.06 | 0.02 | 0.039 |

| 27 | Precuneus, Cuneus, Lingual Gyrus | 7, 19, 17 | 0 | −73 | 35 | 772 | −0.04 | 0.03 | 0.179 | 0.08 | 0.02 | 0.022 | |

| 28 | Posterior Middle Temporal Gyrus | 39 | 44 | −67 | 19 | 7 | 0.01 | 0.03 | 0.445 | 0.08 | 0.02 | 0.034 | |

| 29 | Middle Occipital Gyrus | 18 | 22 | −88 | 18 | 7 | 0.01 | 0.03 | 0.416 | 0.06 | 0.02 | 0.070 | |

| 30 | Lingual Gyrus | 18, 17 | 12 | −92 | −17 | 12 | 0.00 | 0.03 | 0.474 | 0.04 | 0.02 | 0.152 | |

| 31 | Cerebellum | 37 | −64 | −16 | 169 | −0.02 | 0.02 | 0.314 | 0.05 | 0.04 | 0.222 | ||

| 32 | Cerebellum | 1 | −73 | −10 | 8 | 0.03 | 0.03 | 0.266 | 0.02 | 0.03 | 0.405 | ||

Analysis of Syntactic and Lexical Effects

The effects of lexical access and syntactic computation were assessed separately for each of the pre-defined ROIs (Table 1 and Fig. 3). For each subject, the time-courses of activity for all voxels within an ROI were averaged and fit with a regression model containing the NodeCnt, LexFreq, and Rate predictors (after orthogonalization, see above). We applied the Fisher transformation to the resulting regression coefficients and then evaluated them using t-tests across subjects. Because the ROI analysis involved a large number of statistical comparisons across predictors and regions, we corrected for multiple comparisons using the false discovery rate (FDR) (Benjamini & Hochberg, 1995; Benjamini & Yekutieli, 2001; Genovese, Lazar, & Nichols, 2002). FDR controls the expected proportion of false positives among the observations declared statistically significant. The conservative Bonferroni correction instead controls the probability of any false positive across all comparisons. We report results using an FDR threshold of q = .05.
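The sketch below implements the standard Benjamini-Hochberg step-up procedure on a vector of p-values to make the FDR logic concrete. It is a minimal illustration, not the authors' pipeline. The Methods cite both Benjamini & Hochberg (1995) and the dependency-robust variant of Benjamini & Yekutieli (2001), and this section does not state which one produced the q(FDR) values in Table 1. The function name and the example p-values are hypothetical.

```python
import numpy as np


def benjamini_hochberg(p_values, q=0.05):
    """Benjamini-Hochberg (1995) step-up procedure.

    Returns BH-adjusted q-values and a boolean mask of tests significant at level q.
    """
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)
    # p_(i) * m / i for the sorted p-values (rank i = 1..m)
    adjusted = p[order] * m / np.arange(1, m + 1)
    # Enforce monotonicity: each q-value is the minimum over its own and all larger ranks
    adjusted = np.minimum.accumulate(adjusted[::-1])[::-1]
    adjusted = np.minimum(adjusted, 1.0)
    q_values = np.empty(m)
    q_values[order] = adjusted  # return q-values in the original test order
    return q_values, q_values <= q


q_values, significant = benjamini_hochberg([0.01, 0.04, 0.03, 0.20])
```

With these four made-up p-values, only the smallest (0.01) survives at q = .05. The values 0.03 and 0.04 would each pass an uncorrected .05 threshold, but both have adjusted values of about 0.053.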

Finally, in addition to the ROI analysis, we sought to evaluate whether converging results could be observed without relying on pre-defined ROIs, and whether the ROI analysis had missed any important activation. To this end, we performed a complementary analysis assessing the correlation between our predictors (after orthogonalization) and brain activity across the entire brain volume. We fit a regression model containing all three predictors to the data from each subject. We then performed t-tests on the resulting coefficients separately for each voxel, treating subjects as a random effect. Finally, we identified regions where activity was correlated with NodeCnt or LexFreq, correcting for multiple comparisons with the same procedure as for the soundwave amplitude analysis.
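The two-level logic of the whole-brain analysis can be sketched in a few lines: a per-subject least-squares fit at every voxel, then a one-sample t statistic per voxel across subjects. This is a minimal illustration under stated assumptions, not the authors' implementation. It assumes the design columns (NodeCnt, LexFreq, Rate) have already been convolved with a hemodynamic response and orthogonalized. The function names are hypothetical, and the cluster-level correction used in the paper is not reproduced.

```python
import numpy as np


def subject_betas(bold, design):
    """Fit one GLM per voxel for a single subject.

    bold: (n_timepoints, n_voxels) fMRI time-courses.
    design: (n_timepoints, n_predictors), e.g. NodeCnt, LexFreq, Rate
            (assumed already HRF-convolved and orthogonalized).
    Returns (n_predictors, n_voxels) regression coefficients.
    """
    X = np.column_stack([np.ones(design.shape[0]), design])  # prepend an intercept
    coef, *_ = np.linalg.lstsq(X, bold, rcond=None)
    return coef[1:]  # drop the intercept row


def random_effects_t(betas):
    """One-sample t statistic per voxel across subjects (subjects as a random effect).

    betas: (n_subjects, n_voxels) coefficients for one predictor.
    """
    betas = np.asarray(betas, dtype=float)
    n = betas.shape[0]
    return betas.mean(axis=0) / (betas.std(axis=0, ddof=1) / np.sqrt(n))
```

In use, one would call `subject_betas` for each subject, stack the NodeCnt row across subjects, and pass that (n_subjects, n_voxels) array to `random_effects_t`.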

Results

Comprehension questionnaire

Subjects showed a high degree of accuracy on the multiple-choice comprehension questionnaire administered after the story run (M = 86.4%, SD = 12.2%). This performance can be better understood in comparison to nine control subjects who completed the questionnaire without having participated in the story-listening experiment. Control participants performed poorly on the questionnaire (M = 44.9%, SD = 11.9%), t(16) = −7.28, p < .001. The high accuracy of subjects who listened to the story suggests that they paid attention to it, despite the absence of an explicit task.
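The reported group comparison can be approximately reproduced from the summary statistics with a pooled-variance (Student) independent-samples t-test. This is a sketch under one assumption: the story-listening group also had nine subjects, which is inferred from t(16) with nine controls rather than stated here. The helper name is hypothetical.

```python
import math


def pooled_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Independent-samples Student t (pooled variance) from group means, SDs and sizes."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Controls minus listeners; n = 9 listeners is an assumption inferred from df = 16
t, df = pooled_t_from_summary(44.9, 11.9, 9, 86.4, 12.2, 9)
print(f"t({df}) = {t:.2f}")
```

This prints t(16) = -7.31. The small difference from the reported −7.28 is plausibly due to rounding of the published means and SDs.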

Correlation to soundwave amplitude

Two regions of activation were correlated with the power of the auditory stimulus (Fig. 3, red): one in the left STG around the auditory cortex, and one in a homologous region of the right hemisphere (Table 1). These regions encompass the reported location of primary auditory cortex as defined cytoarchitectonically (Rademacher et al., 2001). These results demonstrate that our method could identify brain regions whose activity correlated with a signal (sound power) containing high frequencies, despite the relatively low sampling rate of fMRI and the temporal sluggishness of the hemodynamic response. In fact, the sound power and the linguistic predictors were all broadband, containing low as well as high frequencies (as is the case, for example, with a white noise signal). The low-frequency fMRI response time-courses were reliably correlated with the low-frequency components of the sound power signal (Mukamel et al., 2005). This analysis also localized the low-level auditory ROIs used in subsequent analyses.
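The following sketch illustrates how a broadband predictor can still be related to slow BOLD data. It builds a regressor the usual way: the finely sampled predictor is convolved with a hemodynamic impulse response, and the result is sampled once per volume. The gamma-function form follows Boynton et al. (1996). However, the parameter values, the 10 Hz predictor sampling rate, and the 2 s repetition time are assumptions for illustration, not values taken from this study. Convolution with the slow response acts as a low-pass filter, so only the low-frequency content of the predictor survives in the regressor.

```python
import math

import numpy as np


def gamma_hrf(t, n=3, tau=1.2, delay=2.5):
    """Gamma-function impulse response (Boynton et al., 1996 form).

    Parameter values are illustrative assumptions; the curve integrates to ~1.
    """
    s = np.maximum(t - delay, 0.0) / tau
    return s ** (n - 1) * np.exp(-s) / (tau * math.factorial(n - 1))


def bold_regressor(predictor, fs, tr):
    """Convolve a predictor sampled at fs (Hz) with the HRF and sample it every tr seconds."""
    t = np.arange(0.0, 30.0, 1.0 / fs)  # 30 s of HRF support
    h = gamma_hrf(t) / fs  # scale by dt so the convolution approximates an integral
    convolved = np.convolve(predictor, h)[: len(predictor)]  # causal part, same length
    step = int(round(tr * fs))
    return convolved[::step]


# Hypothetical example: 2 minutes of white-noise 'sound power' sampled at 10 Hz
rng = np.random.default_rng(0)
power = rng.standard_normal(1200)
regressor = bold_regressor(power, fs=10.0, tr=2.0)  # one value per 2 s volume
```

The regressor has far smaller variance than the raw white-noise input, because the high-frequency components are strongly attenuated by the convolution.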

Inter-subject correlation

Inter-subject correlation (ISC) revealed a large network of brain areas exhibiting reliable response time-courses across subjects. These included an extensive region of the temporal lobe bilaterally, focal regions in the IFG and middle frontal gyrus (MFG), and portions of the precuneus and cuneus in the medial parietal lobe extending into the occipital lobe (Fig. 3, orange, and Table 1). The large clusters of high ISC in the temporal lobe were subdivided into three ROIs in each hemisphere based on anatomical and functional criteria (Fig. 3, dark black lines; see Methods for details). Altogether, these procedures yielded 32 separate ROIs, which we examined with our linguistic predictors (Fig. 3 and Table 1). Regions of particular interest for the aims of this research included:

- bilateral aTL regions (Regions 6 and 21);
- bilateral IFG regions (Regions 11 and 24);
- bilateral low-level auditory regions (Regions 1 and 17);
- the segments of the STG immediately anterior to the low-level auditory regions bilaterally (Regions 2 and 18);
- the segments immediately posterior to the low-level auditory regions (Regions 3 and 19);
- the left supramarginal gyrus (Region 4);
- the right angular gyrus (Region 20).
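The exact ISC computation is described in Methods and is not reproduced in this section. For readers unfamiliar with the measure, the sketch below shows one common leave-one-out variant. Whether it matches the computation used here is an assumption, and the function name is hypothetical.

```python
import numpy as np


def leave_one_out_isc(data):
    """Correlate each subject's time-course with the mean of all other subjects.

    data: (n_subjects, n_timepoints) array for one voxel or ROI.
    Returns one Pearson r per subject; their mean is a common ISC summary.
    """
    data = np.asarray(data, dtype=float)
    r = np.empty(data.shape[0])
    for s in range(data.shape[0]):
        others = np.delete(data, s, axis=0).mean(axis=0)  # average of the remaining subjects
        r[s] = np.corrcoef(data[s], others)[0, 1]
    return r
```

A stimulus-locked response shared across listeners yields high r values. Idiosyncratic fluctuations average out in the mean of the other subjects and therefore yield values near zero.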

Analysis of syntactic and lexical effects

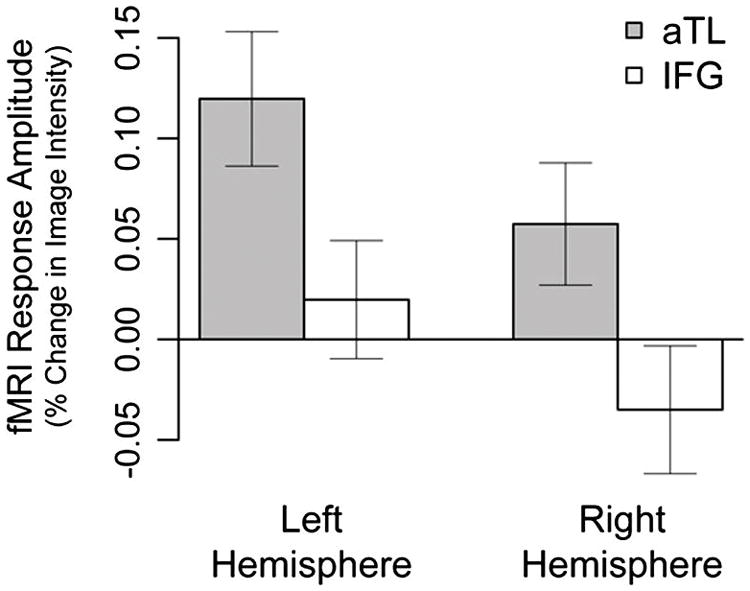

Our results support the hypothesis that the left aTL plays a central role in syntactic structure building. We assessed the neural correlates of syntactic structure building (NodeCnt) and lexical access (LexFreq) by analyzing the fMRI responses in each of the 32 ROIs using multiple regression. NodeCnt was significantly correlated with activity in the left aTL (Region 6) and significantly anti-correlated with activity in the right low-level auditory ROI (Region 17). Moreover, the left aTL effect was highly specific: of our 32 ROIs, the left aTL was the only one showing a significant positive correlation with NodeCnt. Given the long tradition that has focused on the role of the IFG in syntactic computation, we directly compared the NodeCnt effects in IFG and aTL. We conducted a post-hoc 2×2 ANOVA on the regression coefficients for syntactic node count, with region (aTL, IFG) and hemisphere (left, right) as factors (Fig. 4). There was a significant main effect of hemisphere: NodeCnt correlated more strongly with activity in the left hemisphere than in the right, F(1,8) = 7.183, p < .05. Crucially, there was also a significant main effect of region, with a greater NodeCnt effect in aTL than in IFG, F(1,8) = 14.082, p < .01. The interaction was not significant (p > .8). This dissociation between aTL and IFG further confirms that the positive effect of syntactic structure building was specific to the aTL.
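Because each factor in this comparison has only two levels, the 2×2 ANOVA reduces to three per-subject contrasts. When both factors vary within subjects, each F(1, n−1) equals the square of a one-sample t statistic on the corresponding contrast. The sketch assumes that within-subject structure, which is consistent with the reported F(1,8) for nine subjects. It also uses a hypothetical array layout [subject, region, hemisphere]. It is not the authors' analysis code.

```python
import numpy as np


def one_sample_t(x):
    x = np.asarray(x, dtype=float)
    return x.mean() / (x.std(ddof=1) / np.sqrt(x.size))


def anova_2x2_within(betas):
    """F statistics for a 2x2 within-subject design via contrasts (F = t**2).

    betas: (n_subjects, 2, 2) array indexed [subject, region, hemisphere],
    with region 0 = aTL, 1 = IFG and hemisphere 0 = left, 1 = right (hypothetical layout).
    """
    betas = np.asarray(betas, dtype=float)
    contrasts = {
        # averaged over hemispheres: aTL minus IFG
        "region": betas[:, 0, :].mean(axis=1) - betas[:, 1, :].mean(axis=1),
        # averaged over regions: left minus right
        "hemisphere": betas[:, :, 0].mean(axis=1) - betas[:, :, 1].mean(axis=1),
        # difference of the left-right differences between regions
        "interaction": (betas[:, 0, 0] - betas[:, 0, 1]) - (betas[:, 1, 0] - betas[:, 1, 1]),
    }
    df = (1, betas.shape[0] - 1)
    return {name: one_sample_t(c) ** 2 for name, c in contrasts.items()}, df
```

The contrast approach is used here because it makes explicit what each F(1, n−1) tests. A full repeated-measures ANOVA routine would give the same F values for a 2×2 design.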

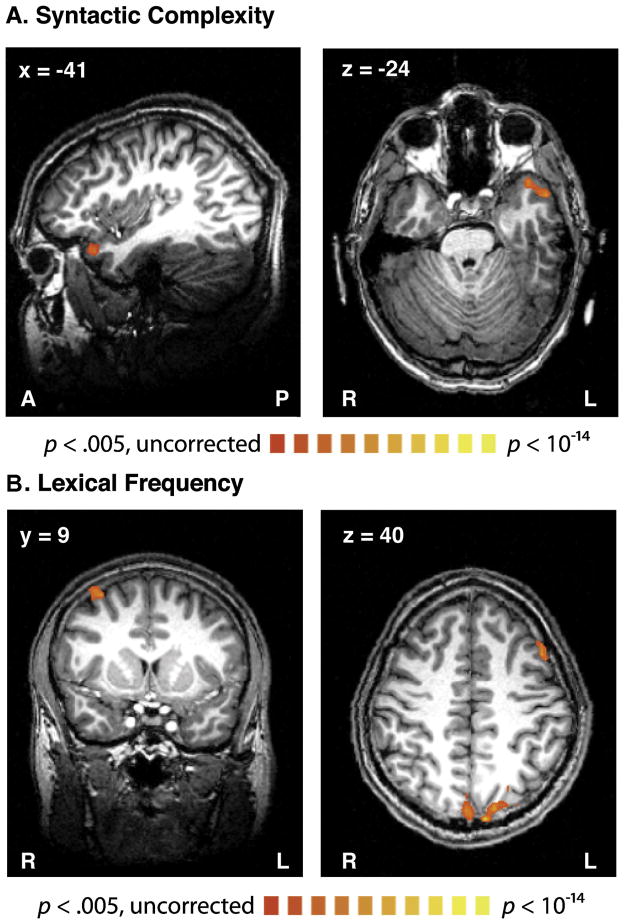

Figure 4.

The estimated responses evoked by syntactic structure building (NodeCnt) in aTL (grey bars) and IFG (white bars) across hemispheres. The correlation between NodeCnt and activity in aTL was significantly greater than that in the IFG; the effect was also significantly greater in the left hemisphere than in the right (see text for details). Error bars indicate standard error of the mean.

In contrast to the results for syntactic node count, lexical frequency was correlated (Table 1) with activity in several regions:

- the low-level auditory ROIs bilaterally (Regions 1 and 17);
- the right superior frontal gyrus (SFG: Regions 23 and 26);
- the middle frontal gyrus (MFG: Region 25);
- the left precentral gyrus (Region 9);
- several regions clustering in the medial parietal lobe, spanning the precuneus, cuneus, and superior parietal lobule and extending into the lingual gyrus (Regions 12, 27, and 28).

These results were corroborated by a subsequent whole-brain analysis, in which a regression model containing all of our predictors was fit to the recorded fMRI data separately for each voxel throughout the brain (Fig. 5 and Table 2). Again, NodeCnt was correlated with activity in a single cluster in the left aTL, which overlapped with the left aTL ROI (Region 6). LexFreq correlated with activity in four regions: the MFG bilaterally and the precuneus bilaterally. The precuneus clusters overlapped with the precuneus ROI (Region 27).

Figure 5.

Regions where activity correlated with (A) syntactic structure building or (B) lexical frequency. Activation is shown on the brain of a single representative subject, thresholded at p < .05 (corrected for multiple comparisons). Structure building correlated with activity in the left aTL. Lexical frequency correlated with activity in the left and right MFG and in the precuneus bilaterally. Correction for multiple comparisons followed the same procedure used in the soundwave power analysis (see Methods).

Table 2.

Location (Brodmann's area and Talairach coordinates) and spatial extent (number of voxels, 3×3×3 mm) of regions in which activity correlated significantly with NodeCnt and LexFreq (p < .05, corrected for multiple comparisons; see Methods). BA, Brodmann's area.

| Predictor | Region | BA | x | y | z | Size |
|---|---|---|---|---|---|---|
| NodeCnt | Left Anterior Superior Temporal Gyrus | 38 | −45 | 19 | −22 | 28 |
| LexFreq | Left Middle Frontal Gyrus | 6 | 32 | 12 | 57 | 35 |
| LexFreq | Left Precuneus | 7, 19 | 1 | −80 | 45 | 67 |
| LexFreq | Right Precuneus | 7, 19 | −21 | −79 | 42 | 47 |
| LexFreq | Right Middle Frontal Gyrus | 8, 9 | −47 | 22 | 38 | 21 |

Discussion

In this study we employed a novel fMRI approach to examine the neural correlates of language processing with naturalistic stimuli. We used this method to address a controversy about the brain basis of basic syntactic computation, aiming to isolate activity associated with word-by-word syntactic structure building.

Syntactic structure building was strongly associated with activity in the left aTL, including portions of the STG and MTG. As reviewed above, previous research which has associated syntactic processing with either the left IFG (Ben-Shachar et al., 2003; Caplan et al., 2008; Dapretto & Bookheimer, 1999; Embick et al., 2000; Grodzinsky, 2001; Just et al., 1996), or the left aTL (Dronkers et al., 2004; Friederici et al., 2000; Humphries et al., 2006; Mazoyer et al., 1993; Rogalsky & Hickok, 2008; Stowe et al., 1998) has been difficult to reconcile, in part because of the variety of laboratory tasks used which may tap into different aspects of syntactic processing. Our study is the first to use naturalistic stimulation to address this controversy and our results strongly support the aTL localization for a specific syntactic computation: syntactic structure building (Friederici & von Cramon, 2001; Grodzinsky & Friederici, 2006).

One difference between our findings and those of previous studies linking syntax with the aTL is that we observed syntax-related activity only in the left aTL, whereas some previous studies have reported bilateral activation. This apparent discrepancy might be resolved by noting that auditory studies contrasting sentences with word lists are confounded by intonational differences between the two (Humphries, Love, Swinney, & Hickok, 2005). When intonation was varied independently of sentence structure (Humphries et al., 2005), a region in the left anterior temporal lobe (specifically, parts of the superior temporal sulcus and the MTG) responded to sentential structure independently of any intonational differences. Our findings are thus consistent with this left-lateralized effect of sentential structure.

We also observed an anti-correlation between syntactic node count and activity in the right STG in the ROI analysis, in a region that included primary auditory cortex. This effect is difficult to interpret because our syntax-related hypotheses did not pertain to this region. We might speculate, however, that the effect in auditory cortex is related to the relative ease of syntactic computation at the ends of sentences, where a rich prior context has heavily constrained the possible syntactic completions (cf. Hale, 2006; Levy, 2008). Such a hypothesis is consistent with the observation that strong syntactic predictions can lead to reduced activation in visual cortex during reading (Dikker, Rabagliati, & Pylkkänen, 2009, in press).
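The speculation about sentence ends can be made concrete with a bottom-up node count, which credits each word with the phrasal nodes completed at that word. The sketch below derives such counts from a Penn Treebank-style bracketed parse. It assumes that NodeCnt counts closing phrasal nodes; the exact definition is given in Methods, not in this section. The parse and helper name are hypothetical. Under this scheme, counts tend to peak at the last word of a sentence, where the largest constituents close.

```python
import re


def bottom_up_node_counts(parse):
    """Count phrasal nodes closed at each word of a bracketed (Penn Treebank-style) parse.

    Assumes every word sits inside a preterminal (POS) node, e.g. (DT the).
    """
    tokens = re.findall(r"\(|\)|[^\s()]+", parse)
    words, closes = [], []
    prev = None
    for tok in tokens:
        if tok == ")":
            if closes:
                closes[-1] += 1  # credit the closing bracket to the most recent word
        elif tok != "(" and prev != "(":
            # a token directly after "(" is a node label; any other token is a word
            words.append(tok)
            closes.append(0)
        prev = tok
    # subtract the preterminal (POS) node that closes immediately after each word
    return words, [c - 1 for c in closes]


words, counts = bottom_up_node_counts("(S (NP (DT the) (NN cat)) (VP (VBD sat)))")
```

For this toy parse, the counts are 0, 1, and 2. No phrase closes at "the", the NP closes at "cat", and both the VP and the S close at "sat".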

In contrast to the left aTL, we found no evidence for syntactic structure building in the left IFG (Broca's area). Both areas exhibited high ISC, consistent with decades of research demonstrating that both play a role in linguistic processing. But only the left aTL, not the left IFG, exhibited both high ISC and a statistically significant correlation with NodeCnt. We interpret this dissociation in the context of a theoretical distinction between two kinds of syntactic operation. Memory-related operations include the computations involved in resolving long-distance dependencies between words in a linguistic representation. A structure-building computation, by contrast, combines words into phrases. Structure building is hypothesized to be computed in anterior temporal regions, whereas memory-related operations engage the left IFG (Grodzinsky & Friederici, 2006). Our results conform to this type of model.

Syntactic structure building may correlate with other computations involved in sentence-level interpretation. In particular, syntactic composition is tightly intertwined with the operations that build the meaning of a sentence. In this study we did not attempt to disentangle syntactic from semantic composition. In fact, dissociating the two would be extremely difficult with this type of story-listening protocol, or with any other protocol using complex, naturalistic speech. However, several recent studies that manipulated semantic composition independently of syntactic composition have found effects of semantics not in the aTL but in ventromedial prefrontal cortex (Brennan & Pylkkänen, 2008; Pylkkänen, Martin, McElree, & Smart, 2008; Pylkkänen & McElree, 2007). These results make it more likely that our aTL effect reflects the syntactic, as opposed to the semantic, aspects of sentence comprehension.

In contrast to the focal aTL effect of our syntactic predictor, our lexical predictor (lexical frequency) was associated with a larger number of regions. Some of these regions, particularly the precuneus and the medial frontal gyrus, have been identified in previous auditory and visual studies as sensitive to this factor (Kuo et al., 2003; Prabhakaran et al., 2006). The ROI analysis also showed an effect in the STG surrounding the auditory cortex bilaterally. This is consistent with models in which this region is involved in the early stages of lexical processing, mapping acoustic onto phonological representations (Hickok & Poeppel, 2007). We also observed several additional regions where activity correlated with lexical frequency, including the left precentral gyrus, the left superior parietal lobule, and the right superior frontal gyrus. In any case, the lexical frequency results serve as a control, demonstrating that aTL activity does not simply track any linguistic predictor time-locked to the story.

We observed only positive correlations between lexical frequency and brain activity. While some studies have reported both positive and negative correlations between brain activity and lexical frequency (Kuo et al., 2003; Prabhakaran et al., 2006), others have only reported activity that is negatively correlated with lexical frequency (Carreiras et al., 2006; Fiebach et al., 2002; Fiez et al., 1999). The absence of regions showing anti-correlations between brain activity and lexical frequency may be unexpected in light of the fact that high lexical frequency is thought to facilitate lexical access. However, lexical access is not straightforwardly associated with decreased brain activity. Specifically, lexical frequency does not lead to a reduction in neuromagnetic measurements of neural activity. Rather, some studies have reported that lexical frequency shifts the latency of activity in the left superior temporal lobe (Embick, Hackl, Schaeffer, Kelepir, & Marantz, 2001; Pylkkänen & Marantz, 2003), while others find more activity in the left STG for higher frequency words (Solomyak & Marantz, 2009). Furthermore, our study is the first to examine brain activity associated with lexical frequency in a spoken narrative context. Sentential and narrative contexts are known to influence the ease of lexical access (Gurjanov, Lukatela, Moskovljevic, Savic, & Turvey, 1985), along with a variety of further lexical properties such as concreteness and animacy (e.g., Binder, Westbury, McKiernan, Possing, & Medler, 2005; Caramazza & Shelton, 1998). Further experimentation is necessary to understand the interaction between these factors.

Conclusion

In summary, we examined the neural basis of natural syntactic and lexical processing with a novel approach which correlates the time course of brain activity with the changing linguistic properties of a naturalistic speech stimulus (a story). This method allowed us to examine the neural basis of sentence processing while minimizing the potential effects of artificial task demands. Our primary finding is that a measure of syntactic structure building is correlated with activity in the left anterior temporal lobe, supporting the view that the left anterior temporal cortex contributes to the processing of syntactic composition. Our results suggest that the naturalistic story-listening method may provide a valuable tool for exploring language processing in a variety of subject populations, including those for which standard experimental tasks are inappropriate.

Supplementary Material

Acknowledgments

This work was supported by the Weizmann-NYU Demonstration Fund in Neuroscience, a Fulbright Doctoral Student Fellowship (Y.N.), National Science Foundation Grant BCS-0545186 (L.P.), and National Institutes of Health Grant R01-MH69880 (D.J.H.). We thank Asaf Bachrach, Alec Marantz and David Poeppel for helpful discussion and feedback, and Gijs Brouwer and Ilan Dinstein for assistance with data collection.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Balota DA, Cortese MJ, Sergent-Marshall SD, Spieler DH, Yap MJ. Visual word recognition of single-syllable words. Journal of Experimental Psychology: General. 2004;133(2):283–316. doi: 10.1037/0096-3445.133.2.283.

- Balota DA, Yap MJ, Cortese MJ, Hutchison KA, Kessler B, Loftis B, et al. The English Lexicon Project. Behavior Research Methods. 2007;39(3):445–459. doi: 10.3758/bf03193014.

- Bartels A, Zeki S. Functional Brain Mapping During Free Viewing of Natural Scenes. Human Brain Mapping. 2004;21:75–85. doi: 10.1002/hbm.10153.

- Ben-Shachar M, Hendler T, Kahn I, Ben-Bashat D, Grodzinsky Y. The Neural Reality of Syntactic Transformations: Evidence from fMRI. Psychological Science. 2003;14(5):433–440. doi: 10.1111/1467-9280.01459.

- Ben-Shachar M, Palti D, Grodzinsky Y. Neural correlates of syntactic movement: converging evidence from two fMRI experiments. NeuroImage. 2004;21(4):1320–1336. doi: 10.1016/j.neuroimage.2003.11.027.

- Benjamini Y, Hochberg Y. Controlling the False Discovery Rate: A Practical and Powerful Approach to Multiple Testing. Journal of the Royal Statistical Society. Series B (Methodological). 1995;57(1):289–300.

- Benjamini Y, Yekutieli D. The control of the false discovery rate in multiple testing under dependency. Ann Statist. 2001;29(4):1165–1188.

- Bikel DM. Design of a multi-lingual, parallel-processing statistical parsing engine. Proceedings of the second international conference on Human Language Technology Research; 2002. pp. 178–182.

- Binder JR, Westbury CF, McKiernan KA, Possing ET, Medler DA. Distinct Brain Systems for Processing Concrete and Abstract Concepts. Journal of Cognitive Neuroscience. 2005;17(6):905–917. doi: 10.1162/0898929054021102.

- Boynton GM, Engel SA, Glover GH, Heeger DJ. Linear Systems Analysis of Functional Magnetic Resonance Imaging in Human V1. Journal of Neuroscience. 1996;16(13):4207–4221. doi: 10.1523/JNEUROSCI.16-13-04207.1996.

- Bradley DC. Computational Distinctions of Vocabulary Type. Indiana University Linguistics Club; 1983.

- Brennan J, Pylkkänen L. Processing events: Behavioral and neuromagnetic correlates of Aspectual Coercion. Brain and Language. 2008;106(2):132–143. doi: 10.1016/j.bandl.2008.04.003.

- Caplan D, Chen E, Waters G. Task-dependent and task-independent neurovascular responses to syntactic processing. Cortex. 2008;44(3):257–275. doi: 10.1016/j.cortex.2006.06.005.

- Caplan D, Vijayan S, Kuperberg G, West C, Waters G, Greve D, et al. Vascular responses to syntactic processing: Event-related fMRI study of relative clauses. Human Brain Mapping. 2002;15(1):26–38. doi: 10.1002/hbm.1059.

- Caplan D, Waters G, Kennedy D, Alpert N, Makris N, Dede G, et al. A study of syntactic processing in aphasia II: neurological aspects. Brain Lang. 2007;101(2):151–177. doi: 10.1016/j.bandl.2006.06.226.

- Caramazza A, Shelton JR. Domain-specific knowledge systems in the brain: the animate-inanimate distinction. J Cogn Neurosci. 1998;10(1):1–34. doi: 10.1162/089892998563752.

- Carreiras M, Mechelli A, Price CJ. Effect of Word and Syllable Frequency on Activation during Lexical Decision and Reading Aloud. Human Brain Mapping. 2006;27(2):963–972. doi: 10.1002/hbm.20236.

- Chen E, West WC, Waters G, Caplan D. Determinants of bold signal correlates of processing object-extracted relative clauses. Cortex. 2006;42(4):591–604. doi: 10.1016/s0010-9452(08)70397-6.

- Chomsky N. The Minimalist Program. Cambridge, MA: MIT Press; 1995.

- Dapretto M, Bookheimer SY. Form and Content: Dissociating Syntax and Semantics in Sentence Comprehension. Neuron. 1999;24(2):427–432. doi: 10.1016/s0896-6273(00)80855-7.

- Dikker S, Rabagliati H, Pylkkänen L. Sensitivity to syntax in visual cortex. Cognition. 2009;110(3):293–321. doi: 10.1016/j.cognition.2008.09.008.

- Dikker S, Rabagliati H, Pylkkänen L. Early occipital sensitivity to syntactic category is based on form typicality. Psychological Science. In press. doi: 10.1177/0956797610367751.

- Dronkers NF, Wilkins DP, Van Valin RD, Redfern BB, Jaeger JJ. Lesion analysis of the brain areas involved in language comprehension; Towards a New Functional Anatomy of Language. Cognition. 2004;92(1–2):145–177. doi: 10.1016/j.cognition.2003.11.002.

- Embick D, Hackl M, Schaeffer J, Kelepir M, Marantz A. A magnetoencephalographic component whose latency reflects lexical frequency. Cognitive Brain Research. 2001;10(3):345–348. doi: 10.1016/s0926-6410(00)00053-7.

- Embick D, Marantz A, Miyashita Y, O’Neil W, Sakai KL. A Syntactic Specialization for Broca’s Area. Proceedings of the National Academy of Sciences. 2000;97(11):6150–6154. doi: 10.1073/pnas.100098897.

- Fiebach CJ, Friederici AD, Muller K, von Cramon DY. fMRI Evidence for Dual Routes to the Mental Lexicon in Visual Word Recognition. Journal of Cognitive Neuroscience. 2002;14(1):11–23. doi: 10.1162/089892902317205285.

- Fiez JA, Balota DA, Raichle ME, Petersen SE. Effects of lexicality, frequency, and spelling-to-sound consistency on the functional anatomy of reading. Neuron. 1999;24(1):205–218. doi: 10.1016/s0896-6273(00)80833-8.

- Forman SD, Cohen JD, Fitzgerald M, Eddy WF, Mintun MA, Noll DC. Improved assessment of significant activation in functional magnetic resonance imaging (fMRI): use of a cluster-size threshold. Magn Reson Med. 1995;33(5):636–647. doi: 10.1002/mrm.1910330508.

- Frazier L. Syntactic complexity. In: Dowty D, Karttunen L, Zwicky AM, editors. Natural language parsing: Psychological, computational, and theoretical perspectives. Cambridge Univ Press; 1985. pp. 129–187.

- Friederici AD, Meyer M, von Cramon DY. Auditory language comprehension: An event-related fMRI study on the processing of syntactic and lexical information. Brain and Language. 2000;75(3):465–477.

- Friederici AD, von Cramon DY. Syntax in the brain: Linguistic versus neuroanatomical specificity. Behavioral and Brain Sciences. 2001;23(1):32–33.

- Genovese CR, Lazar NA, Nichols T. Thresholding of Statistical Maps in Functional Neuroimaging Using the False Discovery Rate. NeuroImage. 2002;15(4):870–878. doi: 10.1006/nimg.2001.1037. [DOI] [PubMed] [Google Scholar]

- Gibson E. Linguistic complexity: Locality of syntactic dependencies. Cognition. 1998;68:1–76. doi: 10.1016/s0010-0277(98)00034-1. [DOI] [PubMed] [Google Scholar]

- Gibson E. The Dependency Locality Theory: A Distance-Based Theory of Linguistic Complexity. In: Marantz A, Miyashita Y, O’Neil W, editors. Image, Language, Brain: Papers from the First Mind Articulation Project Symposium. Cambridge, MA: MIT Press; 2000. pp. 95–126. [Google Scholar]

- Goebel R. Brainvoyager: a program for analyzing and visualizing functional and structural magnetic resonance data sets. Neuroimage. 1996;3:604. [Google Scholar]

- Grodzinsky Y. The neurology of syntax: Language use without Broca’s area. Behavioral and Brain Sciences. 2001;23(01):1–21. doi: 10.1017/s0140525x00002399. [DOI] [PubMed] [Google Scholar]

- Grodzinsky Y, Friederici AD. Neuroimaging of syntax and syntactic processing. Current Opinion in Neurobiology. 2006;16(2):240–246. doi: 10.1016/j.conb.2006.03.007. [DOI] [PubMed] [Google Scholar]

- Gurjanov M, Lukatela G, Moskovljevic J, Savic M, Turvey MT. Grammatical priming of inflected nouns by inflected adjectives. Cognition. 1985;19(1):55–71. doi: 10.1016/0010-0277(85)90031-9. [DOI] [PubMed] [Google Scholar]

- Hale J. Uncertainty about the Rest of the Sentence. Cognitive Science. 2006;30(4) doi: 10.1207/s15516709cog0000_64. [DOI] [PubMed] [Google Scholar]

- Hasson U, Malach R, Heeger DJ. Reliability of cortical activity during natural stimulation. Trends in Cognitive Sciences. 2009 doi: 10.1016/j.tics.2009.10.011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hasson U, Nir Y, Levy I, Fuhrmann G, Malach R. Intersubject Synchronization of Cortical Activity During Natural Vision. Science. 2004;303:1634–1640. doi: 10.1126/science.1089506. [DOI] [PubMed] [Google Scholar]

- Hawkins JA. A Performance Theory of Order and Constituency. Cambridge University Press; 1994. [Google Scholar]

- Hickok G, Poeppel D. The cortical organization of speech processing. Nature Reviews Neuroscience. 2007;8(5):393–402. doi: 10.1038/nrn2113. [DOI] [PubMed] [Google Scholar]

- Humphries C, Binder JR, Medler DA, Liebenthal E. Syntactic and semantic modulation of neural activity during auditory sentence comprehension. Journal of Cognitive Neuroscience. 2006;18(4):665–679. doi: 10.1162/jocn.2006.18.4.665. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Humphries C, Love T, Swinney D, Hickok G. Response of anterior temporal cortex to syntactic and prosodic manipulations during sentence processing. Human Brain Mapping. 2005;26(2):128–138. doi: 10.1002/hbm.20148. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Just MA, Carpenter PA, Keller TA, Eddy WF, Thulborn KR. Brain activation modulated by sentence comprehension. Science. 1996;274(5284):114. doi: 10.1126/science.274.5284.114. [DOI] [PubMed] [Google Scholar]

- Kuo WJ, Yeh TC, Lee CY, Wu Yu-Te, Chou CC, Ho LT, et al. Frequency effects of Chinese character processing in the brain: an event-related fMRI study. NeuroImage. 2003;18(3):720–730. doi: 10.1016/s1053-8119(03)00015-6. [DOI] [PubMed] [Google Scholar]

- Levy R. Expectation-based syntactic comprehension. Cognition. 2008;106(3):1126–1177. doi: 10.1016/j.cognition.2007.05.006. [DOI] [PubMed] [Google Scholar]

- Marcus M, Kim G, Marcinkiewicz MA, MacIntyre R, Bies A, Ferguson M, et al. The Penn Treebank: Annotating predicate argument structure. ARPA Human Language Technology Workshop,; 1994. pp. 114–119. [Google Scholar]

- Mazoyer BM, Tzourio N, Frak V, Syrota A, Murayama N, Levrier O, et al. The cortical representation of speech. Journal of Cognitive Neuroscience. 1993;5(4) doi: 10.1162/jocn.1993.5.4.467. [DOI] [PubMed] [Google Scholar]

- Miller GA, Chomsky N. Finitary Models of Language Users. Handbook of Mathematical Psychology 1963 [Google Scholar]

- Mukamel R, Gelbard H, Arieli A, Hasson U, Fried I, Malach R. Coupling between neuronal firing, field potentials, and FMRI in human auditory cortex. Science. 2005;309(5736):951–954. doi: 10.1126/science.1110913. [DOI] [PubMed] [Google Scholar]

- Nir Y, Fisch L, Mukamel R, Gelbard-Sagiv H, Arieli A, Fried I, et al. Coupling between Neuronal Firing Rate, Gamma LFP, and BOLD fMRI Is Related to Interneuronal Correlations. Current Biology. 2007;17(15):1275–1285. doi: 10.1016/j.cub.2007.06.066.

- Prabhakaran R, Blumstein SE, Myers EB, Hutchison E, Britton B. An event-related fMRI investigation of phonological-lexical competition. Neuropsychologia. 2006;44(12):2209–2221. doi: 10.1016/j.neuropsychologia.2006.05.025.

- Pylkkänen L, Marantz A. Tracking the time course of word recognition in MEG. Trends in Cognitive Sciences. 2003;7(5):187–189. doi: 10.1016/s1364-6613(03)00092-5.

- Pylkkänen L, Martin AE, McElree B, Smart A. The Anterior Midline Field: Coercion or decision making? Brain and Language. 2008;108(3):184–190. doi: 10.1016/j.bandl.2008.06.006.

- Pylkkänen L, McElree B. An MEG study of silent meaning. Journal of Cognitive Neuroscience. 2007;19(11):1905–1921. doi: 10.1162/jocn.2007.19.11.1905.

- Rademacher J, Morosan P, Schormann T, Schleicher A, Werner C, Freund HJ, et al. Probabilistic mapping and volume measurement of human primary auditory cortex. NeuroImage. 2001;13(4):669–683. doi: 10.1006/nimg.2000.0714.

- Rogalsky C, Hickok G. Selective Attention to Semantic and Syntactic Features Modulates Sentence Processing Networks in Anterior Temporal Cortex. Cerebral Cortex. 2008. doi: 10.1093/cercor/bhn126.

- Santi A, Grodzinsky Y. Taxing working memory with syntax: Bihemispheric modulations. Human Brain Mapping. 2006. doi: 10.1002/hbm.20329.

- Santi A, Grodzinsky Y. Working memory and syntax interact in Broca’s area. NeuroImage. 2007;37(1):8–17. doi: 10.1016/j.neuroimage.2007.04.047.

- Segalowitz SJ, Lane KC. Lexical Access of Function versus Content Words. Brain and Language. 2000;75(3):376–389. doi: 10.1006/brln.2000.2361.

- Solomyak O, Marantz A. Evidence for Early Morphological Decomposition in Visual Word Recognition. Journal of Cognitive Neuroscience. 2009. doi: 10.1162/jocn.2009.21296.

- Stowe LA, Broere CA, Paans AM, Wijers AA, Mulder G, Vaalburg W, et al. Localizing components of a complex task: sentence processing and working memory. Neuroreport. 1998;9(13):2995–2999. doi: 10.1097/00001756-199809140-00014.

- Stromswold K, Caplan D, Alpert N, Rauch S. Localization of syntactic comprehension by Positron Emission Tomography. Brain and Language. 1996;52:452–473. doi: 10.1006/brln.1996.0024.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. Thieme Medical Publishers; New York: 1988.

- Vandenberghe R, Nobre AC, Price CJ. The Response of Left Temporal Cortex to Sentences. Journal of Cognitive Neuroscience. 2002;14(4). doi: 10.1162/08989290260045800.

- Vasishth S, Lewis RL. Argument-Head Distance and Processing Complexity: Explaining Both Locality and Antilocality Effects. Language. 2006;82(4):767–794.

- Wilson SM, Molnar-Szakacs I, Iacoboni M. Beyond Superior Temporal Cortex: Intersubject Correlations in Narrative Speech Comprehension. Cerebral Cortex. 2008;18(1). doi: 10.1093/cercor/bhm049.

- Yngve VH. A Model and an Hypothesis for Language Structure. Proceedings of the American Philosophical Society. 1960;104(5):444–466.

- Zurif EB. Brain regions of relevance to syntactic processing. In: An Invitation to Cognitive Science. Vol. 1. 1995. pp. 381–398.
