Abstract

Background

The use of electronic medical records to identify common healthcare-associated infections (HAIs), including pneumonia, surgical site infections, bloodstream infections and urinary tract infections (UTIs), has been proposed as an efficient tool to perform HAI surveillance and to guide infection prevention efforts. Increased attention on HAIs as a safety and quality issue has led to public health reporting requirements and a focus on quality improvement activities around HAIs. Traditional surveillance to detect HAIs and focus prevention efforts is labor intensive, and computer algorithms could be useful to screen electronic data and provide actionable information.

Methods

We compared seven computer-based decision rules to identify UTIs in a sample of 33,834 admissions to an urban academic health center. These decision rules included combinations of laboratory data (culture reports and urinalysis findings), patient clinical data (fever), and administrative data (ICD-9 codes).

Results

Out of 33,834 hospital admissions, 3,870 UTIs were identified by at least one of the decision rules. The use of ICD-9 codes alone identified 2,614 UTIs. Laboratory-based definitions identified 2,773 infections, but when the presence of fever was included, only 1,125 UTIs were identified. The estimated sensitivity of ICD-9 codes was 55.6% (95% CI, 52.7%, 58.5%) when compared with a culture and symptom-based definition. Of the 1,125 UTIs identified by culture plus fever, 167 (14.8%) met both urine culture decision rules.

Discussion

Using the example of UTI identification, we demonstrate how different algorithms may be appropriate depending on the goal of case identification. Desirable characteristics of electronic surveillance methods for mandatory reporting, process improvement, and economic analysis are reviewed.

Infections that develop during the course of hospitalization are considered a major public health problem, costing 20 to 30 billion dollars and associated with 100,000 excess deaths each year.1–3 Many healthcare-associated infections (HAIs) are believed to be preventable through the use of evidence-based practice guidelines, and an increasing number of states use HAI rates as a quality measure and require public reporting of these conditions.4 Proper surveillance of infections is important to identify institution-specific patterns and to facilitate focused prevention efforts.5,6 Prevention activities are increasingly important as recent changes to Medicare and Medicaid policies exclude coverage for certain potentially preventable HAIs.7 It is therefore important that valid and reliable methods be used to identify HAIs.

The traditional gold standard for the identification of HAIs has been chart review by an experienced infection preventionist using standardized criteria. The Centers for Disease Control and Prevention’s National Healthcare Safety Network (NHSN) has developed a widely used set of case definitions for select HAIs.8 In 2009, NHSN revised the surveillance definition for urinary tract infections (UTIs) to include Symptomatic Urinary Tract Infection (SUTI), Asymptomatic Bacteremic Urinary Tract Infection (ABUTI), and Other Urinary Tract Infection (OUTI).9 Depending on the onset and timing of symptoms, UTIs can be classified as community-associated or healthcare-associated. In healthcare settings, UTIs are generally associated with the use of urinary catheters, and a major change in the 2009 NHSN definitions focused on the presence of urinary catheters. As opposed to clinical management guidelines published by other groups,10 surveillance definitions are intended to guide consistent and reliable case finding and reporting by using a combination of signs (e.g., fever, tenderness), symptoms (e.g., urgency, frequency, dysuria), and laboratory findings (e.g., positive urine cultures and pyuria, the presence of white blood cells in a urine sample). Expert chart review has been shown to be a valid and reliable measure to detect HAIs, but it is time consuming and diverts infection control efforts away from prevention and control of infections.11 Use of computerized records has been suggested as a way to increase the efficiency of HAI surveillance.12, 13 In addition to commercially available software systems, in-house computerized algorithms can be developed to scan clinical and administrative data in order to identify HAIs. However, as with any diagnostic test, these algorithms are imperfect: they miss some infections and falsely identify others. Depending on the purpose and goals of case identification, different performance characteristics of computerized algorithms may be more desirable.

As part of a parent study to determine the distribution of costs between providers, payers, and patients associated with UTIs (“Distribution of the costs of antimicrobial resistant infections,” R01 NR010822), we designed a series of methods to screen electronic medical records to identify cases of UTIs. Beginning in late 2008, an interdisciplinary team of clinicians, infection prevention professionals, informatics specialists, epidemiologists, and health economists developed and validated decision rules to identify UTIs among patients at our center. The goal of this paper is to demonstrate the results from different computer decision rules to identify UTIs and to compare the estimated incidence rates using each method. After outlining the criteria we used to identify UTIs, we show how the application of these different computer rules results in different sets of UTIs and in different estimates of infection rates. Finally, we discuss the relevance of these findings in the setting of quality improvement and as an aid in management decision making and patient safety initiatives. These findings help to answer the question: What are the most desirable characteristics of surveillance systems to identify HAIs for different purposes?

Methods

Discharge data were obtained from an urban tertiary hospital located in New York City with approximately 745 beds and part of a large teaching and research academic health center. Following approval from the Columbia University Medical Center Institutional Review Board (Federalwide Assurance #00002636), clinical and administrative data elements were extracted from three sources for all hospital discharges for 2007. Administrative data including admission data and both primary and secondary International Statistical Classification of Diseases and Related Health Problems (ICD-9) codes were extracted from the cost accounting and billing system with codes that were obtained from the medical records by hospital based coders. We used the relevant code for UTI (ICD-9 599.0). We also screened records for coded symptoms of UTI as defined by NHSN: suprapubic tenderness (ICD-9 608.9, 625.9), dysuria (ICD-9 788.1), frequency (ICD-9 788.41) and urgency (ICD-9 788.63). Clinical data included temperature readings from the physician and nursing order entry system of the electronic health record. Consistent with NHSN guidelines, fever was defined as a temperature greater than 100.4° F (38° C). The University’s Clinical Data Warehouse, a repository of clinical, laboratory and administrative data, was mined for urine culture and urinalysis results. A positive culture was identified as ≥100,000 colony-forming units (CFU)/ml. Cultures with 10,000–100,000 CFU/ml were considered positive if a urinalysis within 48 hours also demonstrated pyuria (> 3 white blood cells/high power field).9 All patient information was de-identified and linked to a randomly generated study ID number.

Seven sets of computer decision rules were developed and applied to patient records. These rules were written to extract readily available data to identify UTIs consistent with NHSN criteria, which require the presence of at least one sign or symptom of UTI and a positive culture result. Rule 1 comprised hospital discharges containing the diagnosis code for UTI. Rules 2 and 3 identified patients with positive urine culture results (>100,000 CFU/ml for rule 2 and 10,000–100,000 CFU/ml plus pyuria for rule 3). Rule 4 was the combination of UTIs identified by rules 2 and 3. Rules 5 and 6 added fever to culture-based rules 2 and 3, respectively. Rule 7 was the combination of rules 5 and 6, that is, either culture-based rule plus the presence of fever (see Table 1). For each decision rule, we calculated the number of UTIs and the estimated UTI rate. We also calculated overall, negative, and positive agreement between ICD-9 codes and the final decision rules, and determined kappa coefficients and prevalence-adjusted kappa values.14

Table 1.

Decision rules used to identify UTIs.

| | Decision Rule | Advantages and Disadvantages | Rate estimate per 100 admissions |
|---|---|---|---|
| 1. | ICD-9 Code for UTI++ | Easily obtained from administrative data; relies on accurate coding of infections; may include patients who are treated for UTI presumptively | 7.7 |
| 2. | Positive Urine Culture* (>100,000 CFU/ml) | Readily obtained from laboratory; patients who are treated without culture are missed | 6.1 |
| 3. | Positive Urine Culture* (10,000–100,000 CFU/ml) PLUS pyuria+ | Low organism count may represent colonization, not infection; presence of pyuria may be difficult to identify from lab reports | 3.4 |
| 4. | Combined rules 2 & 3 | | 8.2 |
| 5. | Positive Urine Culture* (>100,000 CFU/ml) PLUS fever** | Cases identified are likely to represent true infection; many patients (especially elderly) may not have fever with UTI | 2.5 |
| 6. | Positive Urine Culture* (10,000–100,000 CFU/ml) PLUS pyuria+ PLUS fever** | Cases with low culture count but no U/A performed are missed; three criteria are likely to increase probability; fever may be difficult to identify; many patients (especially elderly) may not have fever with UTI | 1.3 |
| 7. | Combined rules 5 & 6 | | 3.3 |

*Positive urine culture must contain ≤ 2 organisms

**Fever defined as T>38C within 48 hours of culture

+Pyuria defined as ≥ 3 WBC/high power field within 24 hours of culture

++ICD9 Codes 599.xx
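The seven rules in Table 1 can be sketched as a small classifier. This is a minimal illustration, not the study's actual implementation: the `Culture` and `Admission` record layouts, the field names, and the handling of a count of exactly 100,000 CFU/ml are all assumptions, and the time windows for linking fever and urinalysis to a culture are assumed to have been applied upstream.

```python
from dataclasses import dataclass
from typing import Dict, List

HIGH_CFU = 100_000  # CFU/ml threshold used by rule 2
LOW_CFU = 10_000    # lower bound of the low-count range used by rule 3


@dataclass
class Culture:
    """One urine culture with linked findings (hypothetical record layout)."""
    cfu_per_ml: int
    organisms: int   # number of organisms isolated
    pyuria: bool     # linked urinalysis showed pyuria
    fever: bool      # T > 38 C recorded near the culture


@dataclass
class Admission:
    has_uti_icd9: bool        # ICD-9 UTI code on the discharge record
    cultures: List[Culture]


def apply_rules(adm: Admission) -> Dict[int, bool]:
    """Return {rule number: met?} for the seven decision rules."""
    # Table 1 footnote: a positive culture must contain <= 2 organisms.
    valid = [c for c in adm.cultures if c.organisms <= 2]
    # Assumption: exactly 100,000 CFU/ml falls in the low-count range.
    high = [c for c in valid if c.cfu_per_ml > HIGH_CFU]
    low = [c for c in valid
           if LOW_CFU <= c.cfu_per_ml <= HIGH_CFU and c.pyuria]
    r2, r3 = bool(high), bool(low)
    r5 = any(c.fever for c in high)
    r6 = any(c.fever for c in low)
    return {1: adm.has_uti_icd9, 2: r2, 3: r3, 4: r2 or r3,
            5: r5, 6: r6, 7: r5 or r6}


# Hypothetical admission with two sequential cultures.
example = Admission(True, [Culture(150_000, 1, False, True),
                           Culture(50_000, 1, True, False)])
print(apply_rules(example))
```

Because rules 4 and 7 are logical ORs evaluated per admission, an admission that meets both underlying rules is still counted once.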

Results

A total of 33,834 hospital admissions were included in the analysis. Using at least one criterion, UTIs were identified in 3,870 admissions. Depending on the rule used, rates of UTI ranged from 1.3 to 8.2 UTIs per 100 patient admissions (Table 1).

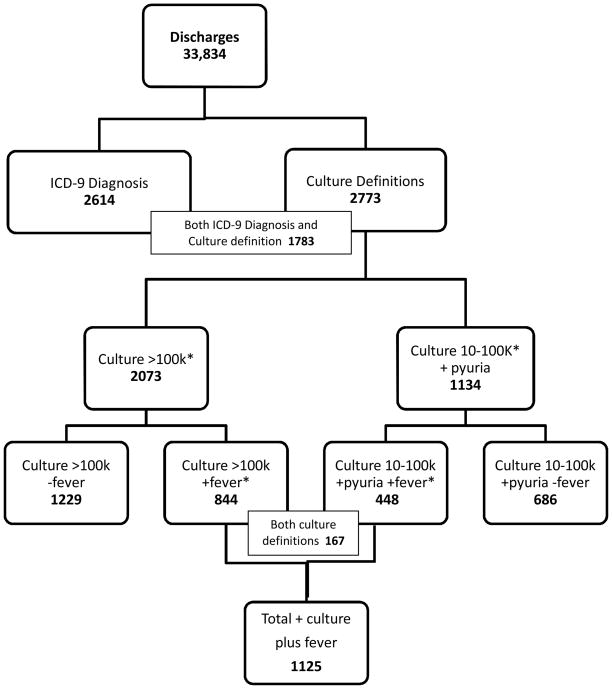

Using the culture definitions (decision rules 2 or 3), 2,773 cases were classified as UTIs. (Figure 1) When fever was added to the culture definitions, the number classified as UTI fell to 1,125 UTIs. Because of repeat cultures within a short period of time or multiple organisms from a single culture, 434 out of 2773 (15.7%) of culture-positive patients met both culture criteria (rule 2 and rule 3) but were only included once in the overall count. Among patients with a fever and positive culture, 167/1125 (14.8%) met both culture criteria.

Figure 1.

Urinary tract infections identified using different criteria. Urine culture reports are reported as 10,000–100,000 colony-forming units (CFU)/ml or over 100,000 CFU/ml and fever is defined as temperature over 38 degrees C. *Some patients met both culture criteria because of sequential cultures or multiple organisms present.

ICD-9 symptom codes were infrequently used in this data set. Suprapubic tenderness (608.9, 625.9) was coded in 14 cases (0.04%), dysuria (788.1) in 27 (0.08%), frequency (788.41) in 37 (0.11%) and urgency (788.63) in 8 (0.02%) of admissions. Out of 86 cases with at least one symptom code, only 3 of these (3.5%) also met the criteria for UTI using another definition.

Out of 2,073 patients with culture >100,000 CFU/ml (decision rule 2), 1,229 (59.3%) did not have a fever and thus were not included in the UTI cases. Similarly, out of 1,134 patients with positive culture between 10,000 and 100,000 CFU/ml and abnormal urinalysis (decision rule 3), 686 (60.5%) did not have a fever and were not included in the UTI count.

Of the 1,125 patients with a positive culture and fever, 625 had an ICD-9 code for UTI (Table 2). ICD-9 UTI codes were present in 1,989/32,709 patients who did not meet the culture/fever definition. In order to adjust for UTI prevalence, we calculated an adjusted kappa between ICD-9 codes and the combined culture/fever definition (rule 7), which was 0.85.14 Compared to rule 7, the sensitivity of ICD-9 codes for UTI was 55.6% (95% CI, 52.7%, 58.5%). ICD-9 codes were present at similar rates for patients with culture-positive UTI by rule 2 (1215/2,073; 58.6%) and rule 3 (624/1,134; 54.8%) and among culture-positive patients with fever, including rule 5 (504/844; 59.7%) and rule 6 (251/448; 56.0%).

Table 2.

Urinary tract infections identified by culture or culture plus fever by presence of ICD-9 code.

| | Culture positive (% of total) | Culture negative (% of total) | Culture plus fever positive (% of total) | Culture plus fever negative (% of total) |
|---|---|---|---|---|
| ICD-9 Positive | 1783 (5.3%) | 831 (2.5%) | 625 (1.8%) | 1989 (5.9%) |
| ICD-9 Negative | 990 (2.9%) | 30230 (89.3%) | 500 (1.5%) | 30720 (90.8%) |
| Kappa (95% CI) | 0.63 (0.616, 0.649) | | 0.29 (0.264, 0.316) | |
| Positive Agreement | 66.2% | | 32.3% | |
| Negative Agreement | 97.1% | | 95.9% | |

Culture: >100k or 10–100k plus pyuria. Culture plus fever: >100k or 10–100k plus pyuria plus T>38°C.
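The agreement measures reported above can be recomputed from the cell counts in Table 2. The sketch below uses textbook formulas (Cohen's kappa, prevalence-adjusted kappa as 2·Po − 1, positive and negative agreement as proportions of specific agreement, and a Wald interval for sensitivity); the paper does not state exactly which estimators or interval methods were used, so these choices are assumptions and the function name is illustrative.

```python
import math


def agreement_stats(a, b, c, d):
    """Agreement between ICD-9 codes and a reference rule from a 2x2 table.

    a: both positive           b: ICD-9 positive, reference negative
    c: ICD-9 negative, reference positive   d: both negative
    """
    n = a + b + c + d
    po = (a + d) / n                                         # observed agreement
    pe = ((a + b) * (a + c) + (c + d) * (b + d)) / n ** 2    # chance agreement
    sens = a / (a + c)                                       # ICD-9 sensitivity vs reference
    half = 1.96 * math.sqrt(sens * (1 - sens) / (a + c))     # Wald half-width (assumed method)
    return {
        "kappa": (po - pe) / (1 - pe),
        "pabak": 2 * po - 1,
        "positive_agreement": 2 * a / (2 * a + b + c),
        "negative_agreement": 2 * d / (2 * d + b + c),
        "sensitivity": sens,
        "sensitivity_ci": (sens - half, sens + half),
    }


# Cell counts taken from Table 2.
culture = agreement_stats(1783, 831, 990, 30230)
culture_fever = agreement_stats(625, 1989, 500, 30720)
print(round(culture["kappa"], 2), round(culture_fever["pabak"], 2))
print(round(100 * culture_fever["sensitivity"], 1))
```

Under these assumptions the counts give a kappa of about 0.63 for the culture definition, and a prevalence-adjusted kappa of about 0.85 and a sensitivity of about 55.6% against the culture plus fever definition.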

Discussion

While the use of electronic data has the potential to increase efficiency of surveillance activities, these results confirm limitations of the use of electronic systems to accurately identify UTI cases and demonstrate several important factors that must be considered when building valid systems to identify UTIs. First, when using potentially overlapping definitions, patients who meet more than one definition should be counted only once. For example, had we failed to exclude the duplicate counts for patients who met both culture definitions, we would have overestimated the number of UTIs.
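Counting each patient once across overlapping definitions amounts to taking a set union of the identifiers flagged by each rule. The following is a minimal sketch with made-up identifiers; the helper name `count_unique_cases` is hypothetical. The final lines check the same inclusion-exclusion arithmetic against the counts reported in the Results.

```python
def count_unique_cases(*rule_hits):
    """Number of distinct admissions flagged by at least one rule."""
    return len(set().union(*rule_hits))


# Toy admission IDs (hypothetical): ID 3 meets both culture rules.
rule2_ids = {1, 2, 3}
rule3_ids = {3, 4}
naive_total = len(rule2_ids) + len(rule3_ids)          # double-counts ID 3
unique_total = count_unique_cases(rule2_ids, rule3_ids)
print(naive_total, unique_total)

# Reported counts: 2,073 (rule 2) + 1,134 (rule 3) - 434 meeting both.
reported_unique = 2073 + 1134 - 434
print(reported_unique)
```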

In our study, we employed several techniques to improve the accuracy of electronic data for UTI surveillance. First, we augmented administrative codes with clinical and microbiologic data.15 For example, a majority of patients with abnormal urine culture results did not meet the NHSN criteria for UTI. Although combining data sources has been reported to improve sensitivity and specificity in detecting other HAIs, to our knowledge this is the first study using both clinical and microbiologic data to augment ICD-9 codes for UTI identification.16–18

In order to improve detection, we attempted to use a range of ICD-9 codes including urinary symptoms codes, but some codes were rarely entered and were therefore of little value in the decision rules.15 As reported by others, the quality of reported clinical data is an important consideration when designing these systems.19, 20 In this current study, symptom codes were of little use in detecting UTIs, possibly because billing and reimbursement are not affected by the presence of one of these codes and therefore such clinical information is incompletely documented in the data elements reviewed. This phenomenon has been reported with other clinically important data which does not impact on reimbursement rates.14

Although we developed a range of estimates for UTI rate using different sources of data, rates of UTI identified by ICD-9 codes and the abnormal laboratory results were similar (7.7 vs. 8.2 per 100 admissions). The ideal metrics for HAIs, including UTIs, have not been determined, but efforts are underway to use available data to identify quality issues.21 Recognizing that all practical measures will have limitations, future research should explore whether currently available measures could serve as useful proxy measurements for “true” rates and to what extent measures need to be adjusted to reflect severity of case mix or population differences.

There are several limitations to this study. First, while the combination of fever and culture results had a high level of face validity and we believe that nearly all of the patients meeting our culture plus fever criteria did have a UTI by NHSN criteria, we did not perform a ‘gold standard’ expert case review of each medical record. Because we did not confirm case status for all patients, we were unable to calculate other measures of test performance including specificity, positive predictive values, or negative predictive values. Quantifying test performance requires a review of a large number of medical records in order to ensure that cases are correctly classified.22 One approach to address this problem would be to review the records of a random sample of non-cases and use these results to reclassify a proportion of non-diseased individuals.18

We did not differentiate community versus healthcare-associated UTI nor did we collect information about catheter-associated UTIs.7, 23 It is possible that UTIs which originate in the community are coded at different rates than hospital onset UTIs, and this would bias our results. NHSN criteria were developed to identify UTIs as part of HAI surveillance and these algorithms were not designed to identify all clinically important UTIs nor have they been validated as diagnostic criteria for community-onset UTIs. The data generated in this study were limited to electronic medical records from one academic health center and it is possible that these patients reflect a more severe spectrum of disease. If more severe UTI cases were coded at higher rates than less severe cases, these results would be an overestimation of the performance of ICD-9 codes.24

Despite these limitations, electronic data can be useful for a variety of purposes as long as decision makers are mindful of the limitations of the data and the limitations of systems used to analyze clinical and administrative data. Clearly, different decision rules are required for different purposes. Decision rules or criteria should be developed to minimize misclassification, since misclassification of either disease or exposure status could influence results. Disease misclassification occurs when an individual is classified incorrectly as having disease when disease is not present or vice versa. Exposure misclassification occurs when individuals are inappropriately identified as having an exposure or risk factor when it is not present.

Depending on the use of the data and anticipated effect of disease or exposure misclassification, different characteristics of decision rules may be required. In the following section, ideal characteristics of decision rules are presented for four different purposes to illustrate the importance of building decision rules consistent with the desired result.

Example 1: Use of electronic systems to screen hospital data in addition to traditional surveillance activities

In this example, computerized systems might be used to scan large volumes of electronic data to identify possible cases for review by an infection preventionist.11, 17 For such a situation, the ideal decision rule should reliably exclude non-cases; that is, it should have high sensitivity and negative predictive value. This ensures that potential cases are not missed by computerized screening. Many studies have demonstrated the low sensitivity of electronic data for HAI identification, so the most appropriate aim of this process would be as a supplement to traditional surveillance.

Example 2: Use of electronic data systems to accurately identify cases of UTI in order to identify features of “extreme contrast” cases

For our project, we attempted to accurately identify cases of UTIs within a large data set in order to study overall costs in UTI versus non-UTI cases. Since we sought to make comparisons based on overall costs, it was essential to have high specificity. UTI cases that were missed by the decision rules would therefore be counted among the control patients and would contribute to the average cost of that group. In effect, we chose to be conservative and expected that any observed difference in cost would be an underestimate. Using relatively stringent clinical criteria validated by expert review, Patkar et al.25 reported 100% specificity of a set of rules to identify a subtype of severe UTIs among patients with rheumatoid arthritis, although the sensitivity of these criteria was 5%. These criteria were “intentionally constructed to favor specificity over sensitivity” (p. 324).
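The direction of this bias can be illustrated with a small calculation. The sketch below is a simplified model under stated assumptions: specificity is perfect, every true case has the same cost, every true non-case has the same cost, and all input numbers are hypothetical rather than study data. The function name is illustrative.

```python
def observed_cost_difference(true_cases, controls, sensitivity,
                             cost_case, cost_control):
    """Mean cost of flagged admissions minus mean cost of unflagged ones.

    Assumes perfect specificity, so every flagged admission is a true case
    and all missed cases are mixed into the comparison group.
    """
    missed = true_cases * (1 - sensitivity)
    mean_unflagged = ((missed * cost_case + controls * cost_control)
                      / (missed + controls))
    return cost_case - mean_unflagged


# Hypothetical inputs, not study data.
full = observed_cost_difference(1000, 9000, 1.0, 20_000, 10_000)
partial = observed_cost_difference(1000, 9000, 0.5, 20_000, 10_000)
print(full, round(partial, 1))
```

In this model, lowering sensitivity shrinks the observed difference but never reverses its sign, which is the sense in which a highly specific rule yields a conservative estimate.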

Example 3: Use of electronic data for mandatory reporting to regulatory bodies, comparison of hospital quality, and payment modification

For the purposes of electronic surveillance and reporting, ideal decision rules should have both high negative predictive value and high positive predictive value. This ensures that true positive cases are not missed and that negative cases are not included in the case count. The data from this study confirm other reports of the poor sensitivity for the use of electronic records to identify UTIs, and that the combination of currently available data and technology to mine these data is inadequate for this purpose.18, 26, 27

Example 4: Use of electronic data to monitor trends in UTIs within a facility

Controversy exists about the comparison of HAI rates as a measure of the quality of care between facilities. Within a single facility, however, determining the impact of quality improvement activities depends primarily on reliable, repeatable measures. For this purpose, the goal should be consistency of data collection, reporting and analysis.

Consistent with other authors, we found that administrative data alone are inadequate as a reliable means to perform surveillance, though variations of decision rules were appropriate for the purposes of this project and may be appropriate in other settings.15 When developing criteria for identifying UTIs, it is important that the potential effect of misclassification be considered and controlled. Since current technology does not allow absolute precision and accuracy in the detection of HAIs, decision rules should attempt to control the most influential misclassification based on the objectives of the analysis. The ideal metrics should be reliable as well as practical and efficient and should provide the end user with data that are appropriate to inform decision making. Further research is required to identify the most appropriate balance of cost and reliability for HAI measures. Specifically, the next step should be the development and standardization of reliable metrics which use available data to guide quality improvement activities and for public reporting. Authors who report use of electronic data for surveillance purposes should clearly state the purpose of the study (e.g., quality improvement activities, public reporting) in order to permit analysis of the appropriateness of the data and methods used. As we have demonstrated, it is appropriate to use different analytic strategies depending on the end use of the analysis.

Acknowledgments

This study was supported by the National Institute of Nursing Research grant, “Distribution of the costs of antimicrobial resistant infections.” (R01 NR010822). Dr. Landers received support from the National Institute of Nursing Research as a fellow at the Center for Interdisciplinary Research to Reduce Antibiotic Resistance (CIRAR), Columbia University School of Nursing (5T90NR010824-02).

Footnotes

The authors have no conflicts of interest to declare.

References

- 1. Stone PW, Braccia D, Larson E. Systematic review of economic analyses of health care-associated infections. Am J Infect Control. 2005 Nov;33:501–9. doi: 10.1016/j.ajic.2005.04.246.

- 2. Klevens RM, et al. Estimating health care-associated infections and deaths in US hospitals, 2002. Public Health Rep. 2007 Mar–Apr;122:160–6. doi: 10.1177/003335490712200205.

- 3. Scott RD. The direct medical costs of healthcare-associated infections in U.S. hospitals and the benefits of prevention. http://www.cdc.gov/ncidod/dhqp/pdf/Scott_CostPaper.pdf (last accessed April 8, 2010).

- 4. Meier BM, Stone PW, Gebbie KM. Public health law for the collection and reporting of health care associated infections. Am J Infect Control. 2008 Oct;36:537–51. doi: 10.1016/j.ajic.2008.01.015.

- 5. Arias KA. Surveillance. In: Carrico R, editor. APIC text of infection control and epidemiology. 3rd ed. Washington, DC: APIC; 2009.

- 6. Yokoe DS, Classen D. Improving patient safety through infection control: A new healthcare imperative. Infect Control Hosp Epidemiol. 2008 Oct;29:3–11. doi: 10.1086/591063.

- 7. Saint S, et al. Catheter-associated urinary tract infection and the Medicare rule changes. Ann Intern Med. 2009;150:877–884. doi: 10.7326/0003-4819-150-12-200906160-00013.

- 8. Horan TC, Andrus M, Dudeck MA. CDC/NHSN surveillance definition of health care-associated infection and criteria for specific types of infections in the acute care setting. Am J Infect Control. 2008;36:309–332. doi: 10.1016/j.ajic.2008.03.002.

- 9. National Healthcare Safety Network: Catheter-Associated Urinary Tract Infection Event. http://www.cdc.gov/nhsn/pdfs/pscManual/7pscCAUTIcurrent.pdf (last accessed April 7, 2010).

- 10. Hooton TM, et al. Diagnosis, prevention, and treatment of catheter-associated urinary tract infection in adults: 2009 international clinical practice guidelines from the Infectious Diseases Society of America. Clin Infect Dis. 2010;50:625–63. doi: 10.1086/650482.

- 11. Brusaferro S, et al. Surveillance of hospital-acquired infections: A model for settings with resource constraints. Am J Infect Control. 2006 Aug;34:362–366. doi: 10.1016/j.ajic.2006.03.002.

- 12. Leal J, Laupland K. Validity of electronic surveillance systems: A systematic review. J Hosp Infect. 2008 Jul;69:220–229. doi: 10.1016/j.jhin.2008.04.030.

- 13. Greene LR, et al. APIC position paper: The importance of surveillance technologies in the prevention of health care-associated infections. Am J Infect Control. 2009 Aug;37:510–513.

- 14. Chen G, et al. Measuring agreement of administrative data with chart data using prevalence unadjusted and adjusted kappa. BMC Med Res Methodol. 2009 Jan;9:5. doi: 10.1186/1471-2288-9-5.

- 15. Jhung MA, Banerjee SN, Weinstein RA. Administrative coding data and health care-associated infections. Clin Infect Dis. 2009 Apr;49:949–955. doi: 10.1086/605086.

- 16. Bellini C, et al. Comparison of automated strategies for surveillance of nosocomial bacteremia. Infect Control Hosp Epidemiol. 2007 Sep;28:1030–1035. doi: 10.1086/519861.

- 17. Leth RA, Moller JK. Surveillance of hospital-acquired infections based on electronic hospital registries. J Hosp Infect. 2006 Jan;62:71–9. doi: 10.1016/j.jhin.2005.04.002.

- 18. Stevenson KB, et al. Administrative coding data, compared with CDC/NHSN criteria, are poor indicators of health care-associated infections. Am J Infect Control. 2008 Apr;36:155. doi: 10.1016/j.ajic.2008.01.004.

- 19. Vawdrey DK, et al. Assessing data quality in manual entry of ventilator settings. Journal of the American Medical Informatics Association. 2007 May–Jun;14:295–303. doi: 10.1197/jamia.M2219.

- 20. Aitchison J, Kay JW, Lauder IJ. Statistical concepts and applications in clinical medicine. Boca Raton, FL: Chapman & Hall/CRC; 2005.

- 21. Cohen AL, et al. Recommendations for metrics for multidrug-resistant organisms in healthcare settings: SHEA/HICPAC position paper. Infect Cont Hosp Epidemiol. 2008 Oct;29:901–913. doi: 10.1086/591741.

- 22. Kelly H, et al. Estimating sensitivity and specificity from positive predictive value, negative predictive value and prevalence: Application to surveillance systems for hospital-acquired infections. J Hosp Infect. 2008 Jun;69:164–168. doi: 10.1016/j.jhin.2008.02.021.

- 23. Brown J, Doloresco F, III, Mylotte JM. “Never events”: Not every hospital-acquired infection is preventable. Clin Infect Dis. 2009 Apr; doi: 10.1086/604719.

- 24. Knotternus JA, Buntinx F, vanWeel C. General introduction: Evaluation of diagnostic procedures. In: Knotternus KA, Buntinx F, editors. The evidence base of clinical diagnosis: Theory and methods of diagnostic research. 2nd ed. West Sussex, UK: Wiley-Blackwell; 2009.

- 25. Patkar NM, et al. Administrative codes combined with medical records based criteria accurately identified bacterial infections among rheumatoid arthritis patients. J Clin Epidemiol. 2009 Mar;62:321–327. doi: 10.1016/j.jclinepi.2008.06.006.

- 26. Zhan C, et al. Identification of hospital-acquired catheter-associated urinary tract infections from Medicare claims: Sensitivity and positive predictive value. Med Care. 2009 Mar;47:364–369. doi: 10.1097/MLR.0b013e31818af83d.

- 27. Sherman ER, et al. Administrative data fail to accurately identify cases of healthcare-associated infection. Infect Control Hosp Epidemiol. 2006 Apr;27:332–337. doi: 10.1086/502684.